[AINews] o3 solves AIME, GPQA, Codeforces, makes 11 years of progress in ARC-AGI and 25% in FrontierMath

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

DistilledInference Time Compute is all you need.

AI News for 12/19/2024-12/20/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (215 channels, and 6058 messages) for you. Estimated reading time saved (at 200wpm): 607 minutes. You can now tag @smol_ai for AINews discussions!

With the departure of key researchers, Veo 2 beating Sora Turbo in heads up comparisons, and Noam Shazeer debuting a new Gemini 2.0 Flash Reasoning model, the mood around OpenAI has been tense to say the least.

But patience has been rewarded.

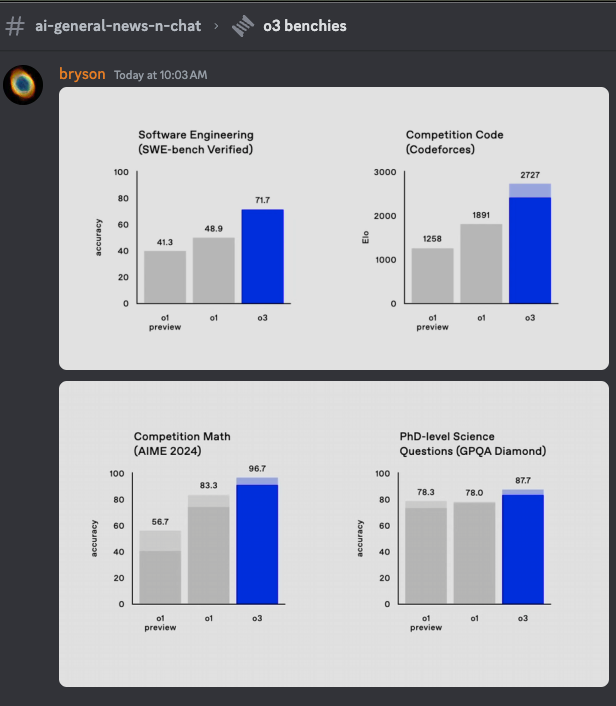

As teased by sama and with clues uncovered by internet sleuths and journalists, the last day of OpenAI's Shipmas brought the biggest announcement: o3 and o3-mini were announced, with breathtaking early benchmark results:

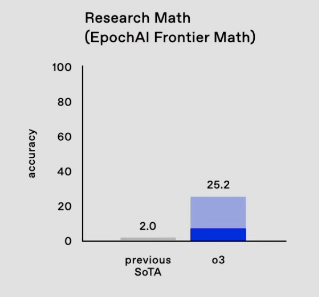

- FrontierMath: the hardest Math benchmark ever (our coverage here) went from 2% -> 25% SOTA

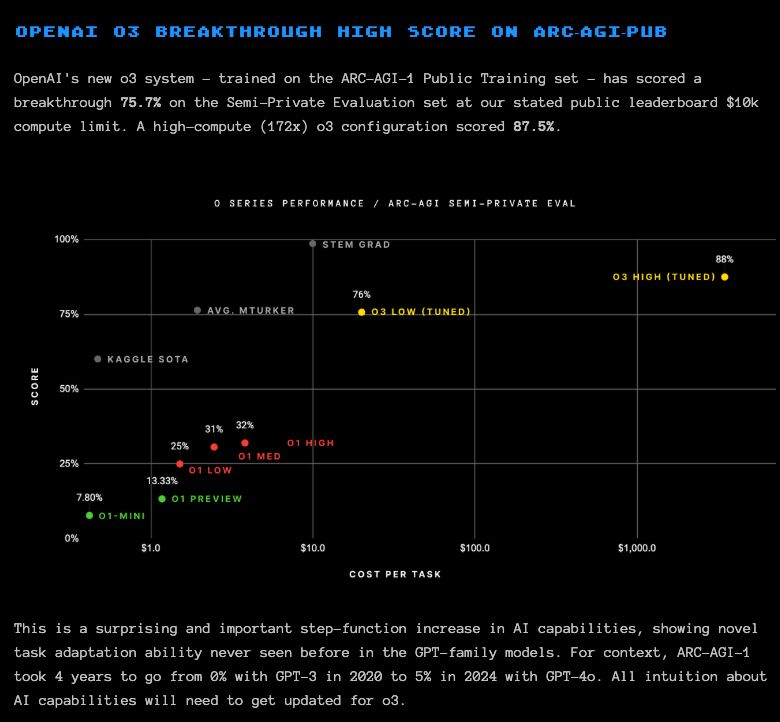

- ARC-AGI: the famously difficult general reasoning benchmark extended in a ~straight line the performance seen by the o1 models, in both o3 low ($20/task) and o3 high ($thousands/task) settings. Greg Kamradt appeared on the announcement to verify this and published a blogpost with their thoughts on the results. As they state, "ARC-AGI-1 took 4 years to go from 0% with GPT-3 in 2020 to 5% in 2024 with GPT-4o". o1 then extended it to 32% in its highest setting, and o3-high pushed to 87.5% (about 11 years worth of progress on the GPT3->4o scaling curve)

- SWEBench-Verified, Codeforces, AIME, GPQA: It's too easy to forget that none of these models existed before September, and o1 was only made available in API this Tuesday:

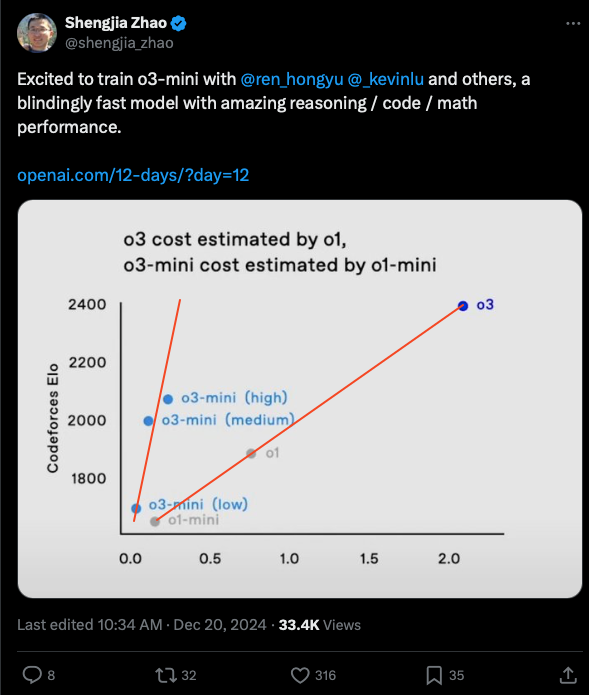

o1-mini is not to be overlooked, as the distillation team proudly showed off how it has an overwhelmingly superior inference-intelligence curve than o3-full:

as sama says: "on many coding tasks, o3-mini will outperform o1 at a massive cost reduction! i expect this trend to continue, but also that the ability to get marginally more performance for exponentially more money will be really strange."

Eric Wallace also published a post on their o-series deliberative alignment strategy and applications are open for safety researchers to test it out.

Community recap videos, writeups, liveblogs, and architecture speculations are also worth checking out.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

OpenAI Model Releases (o3 and o3-mini)

- o3 and o3-mini Announcements and Performance: @polynoamial announced o3 and o3-mini, highlighting o3 achieving 75.7% on ARC-AGI and 87.5% with high compute. @sama expressed excitement for the release and emphasized the safety testing underway.

- Benchmark Achievements of o3: @dmdohan noted o3 scoring 75.7% on ARC-AGI and @goodside congratulated the team for o3 achieving new SOTA on ARC-AGI.

Other AI Model Releases (Qwen2.5, Google Gemini, Anthropic Claude)

- Qwen2.5 Technical Advancements: @huybery released the Qwen2.5 Technical Report, detailing improvements in data quality, synthetic data pipelines, and reinforcement learning methods enhancing math and coding capabilities.

- Google Gemini Flash Thinking: @shane_guML discussed Gemini Flash 2.0 Thinking, describing it as fast, great, and cheap, outperforming competitors in reasoning tasks.

- Anthropic Claude Updates: @AnthropicAI shared insights into Anthropic's work on AI safety and scaling, emphasizing their responsible scaling policy and future directions.

Benchmarking and Performance Metrics

- FrontierMath and ARC-AGI Scores: @dmdohan highlighted o3's 25% on FrontierMath, a significant improvement from the previous 2%. Additionally, @cwolferesearch showcased o3's performance on multiple benchmarks, including SWE-bench and GPQA.

- Evaluation Methods and Challenges: @fchollet discussed the limitations of scaling laws and the importance of downstream task performance over traditional test loss metrics.

AI Safety, Alignment, and Ethics

- Deliberative Alignment for Safer Models: @cwolferesearch introduced Deliberative Alignment, a training approach aimed at enhancing model safety by using chain-of-thought reasoning to adhere to safety specifications.

- Societal Implications of AI Advancements: @Chamath emphasized the need to consider profound societal implications of AI advancements and their impact on future generations.

AI Tools, Applications, and Research

- CodeLLM for Enhanced Coding: @bindureddy introduced CodeLLM, an AI code editor integrating multiple LLMs like o1, Sonnet 3.5, and Gemini, offering unlimited introductory quota for developers.

- LlamaParse for Audio File Processing: @llama_index announced LlamaParse's ability to parse audio files, expanding its capabilities to handle speech-to-text conversions seamlessly.

- Stream-K for Improved Kernel Implementations: @hyhieu226 showcased Stream-K, enhancing GEMM kernels and providing a better view of kernel implementations for persistent kernels.

Memes and Humor

- Humorous Takes on AI and Culture: @dylan522p humorously stated, "Motherfuckers were market buying Nvidia stock cause OpenAI O3 is so fucking good", blending AI advancements with stock market humor.

- AI-Related Jokes and Puns: @teknium1 tweeted, "If anyone in NYC wanna meet I'll be at Stout, 4:00 to 5:30 with couple friends.", playfully mixing social plans with AI discussions.

- Lighthearted Comments on AI Trends: @saranormous shared a humorous reflection on posting clickbait content on X, blending AI content creation with social media humor.

AI Research and Technical Insights

- Mixture-of-Experts (MoE) Inference Costs: @EpochAIResearch explained that MoE models often have lower inference costs compared to dense models, clarifying common misconceptions in AI architecture.

- Neural Video Watermarking Framework: @AIatMeta introduced Meta Video Seal, a neural video watermarking framework, detailing its application in protecting video content.

- Query on LLM Inference-Time Self-Improvement: @omarsar0 posed a survey on LLM inference-time self-improvement, exploring techniques and challenges in enhancing AI reasoning capabilities.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. OpenAI's O3 Mini Outperforms Predecessors

- OpenAI just announced O3 and O3 mini (Score: 234, Comments: 186): OpenAI's newly announced O3 and O3 mini models show significant performance improvements, with O3 achieving an 87.5% score on the ARC-AGI test, which evaluates an AI's ability to learn new skills beyond its training data. This marks a substantial leap from O1's previous score of 25% to 32%, with Francois Chollet acknowledging the progress as "solid."

- Skepticism surrounds the ARC-AGI benchmark results, with users questioning its validity due to private testing conditions and the model being trained on a public training set, unlike previous versions. Concerns about AGI claims are expressed, emphasizing the benchmark's limitations in proving true AGI capabilities.

- The cost of achieving high performance with the O3 model is highlighted, with the 87.5% accuracy version costing significantly more than the 75.7% accuracy version. Users discuss the model's current economic viability and predict that cost-performance might improve over time, potentially making it more accessible.

- The naming choice of skipping "O2" due to trademark issues with British telecommunications giant O2 is noted, with some users expressing dissatisfaction with the naming conventions. Additionally, there is anticipation for public release and open-source alternatives, with a release expected in late January.

- 03 beats 99.8% competitive coders (Score: 121, Comments: 69): O3 has achieved a 2727 ELO rating on CodeForces, placing it in the 99.8th percentile of competitive coders. More details can be found in the CodeForces blog.

- O3's Performance and Computation Costs: O3 achieved significant performance on CodeForces with a 2727 ELO rating but required generating over 19.1 billion tokens to reach a high accuracy, incurring substantial costs, such as $1.15 million for the highest tier setting. The discussion highlights how compute costs are currently high but expected to decrease over time, emphasizing progress in AI capabilities.

- Challenges in AI Problem Solving: O3's approach is contrasted with traditional methods like CoT + MCTS, with comments noting its efficiency and scalability with compute, though it requires iterative processes to handle mistakes. The complexity of problems and the need for in-context computation are discussed, comparing AI's token generation to human problem-solving capabilities.

- Impact on Coding Interviews: The advancement of models like O3 sparks debate about the relevance of LeetCode-style interviews, with some suggesting they could become obsolete as AI improves. There's a call for interviews to incorporate modern tools like LLMs, and a humorous critique of the unrealistic nature of some technical interview questions.

- The o3 chart is logarithmic on X axis and linear on Y (Score: 139, Comments: 65): The O3 chart uses a logarithmic X-axis for "Cost Per Task" and a linear Y-axis for "Score," illustrating performance metrics of various models like O1 MIN, O1 PREVIEW, O3 LOW (Tuned), and O3 HIGH (Tuned). Notably, O3 HIGH (Tuned) achieves an 88% score at higher costs, contrasting with O1 LOW's 25% score at a $1 cost, highlighting the trade-off between cost and performance in ARC AGI evaluations.

- Several commenters criticize the O3 chart for its misleading representation due to the logarithmic X-axis, with hyperknot highlighting that the chart gives a false impression of linear progress towards AGI. Hyperknot further argues that achieving AGI would require a massive reduction in costs, estimating a need for a 10,000x decrease to make it viable.

- Discussions on the cost and practicality of AGI suggest skepticism about its current feasibility, with Uncle___Marty arguing against the trend of increasing model sizes and compute power. Others, like Ansible32, counter that demonstrating functional AGI is valuable, akin to research projects like ITER, although ForsookComparison questions the cost logic, suggesting high expenses might not be justified.

- There is debate over the progress in computational hardware, with Chemical_Mode2736 and mrjackspade discussing the potential for cost reductions and exponential improvements in compute power. However, EstarriolOfTheEast points out that recent advancements may not be as significant as they seem due to assumptions like fp8 or fp4 and increased power demands, suggesting a slowdown in exponential improvement.

Theme 2. Qwen QVQ-72B: New Frontiers in AI Modeling

- Qwen QVQ-72B-Preview is coming!!! (Score: 295, Comments: 48): Qwen QVQ-72B is a 72 billion parameter model with a pre-release placeholder now available on ModelScope. There is some uncertainty about the naming convention change from QwQ to QvQ, and it is unclear if it includes any specific reasoning capabilities.

- The Qwen QVQ-72B model is speculated to include vision/video capabilities, as indicated by Justin Lin's Twitter post, suggesting that the "V" in QVQ stands for Vision. There is a placeholder on ModelScope, but it may have been made private or taken down shortly after its creation.

- Discussions highlight the internal thought process of models, with comparisons drawn between QwQ and Google's model. Google's model is praised for its efficiency and transparency in reasoning, contrasting with QwQ's tendency to be verbose and potentially "adversarial" in its thought process, which can be cumbersome when running on CPU due to slow token generation.

- The potential for open-source contributions is discussed, with Google’s decision not to hide the model's reasoning being seen as beneficial for both competitors and the local LLM community. This transparency contrasts with OpenAI's approach, which does not reveal the reasoning process, potentially using techniques like MCTS at inference time.

- Qwen have released their Qwen2.5 Technical Report (Score: 175, Comments: 11): Qwen has released their Qwen2.5 Technical Report, though no additional information or details were provided in the post.

- Qwen2.5's Coding Capabilities: Users are impressed by the Qwen2.5-Coder model's ability to implement complex functions, like the Levenshtein distance method, without explicit instructions. The model benefits from a comprehensive multilingual sandbox for static code checking and unit testing, which enhances code quality and correctness across nearly 40 programming languages.

- Technical Report vs. White Paper: The term "technical report" is used instead of "white paper" because it allows some methodologies to be shared while keeping other details, such as model architecture and data, as trade secrets. This distinction is crucial for understanding the level of transparency and information shared in such documents.

- Model Training and Performance: The model's efficacy, especially in coding tasks, is attributed to its training on datasets from GitHub and code-related Q&A websites. Even the 14b model demonstrates strong performance in suggesting and implementing algorithms, with the 72b model expected to be even more powerful.

Theme 3. RWKV-7's Advances in Multilingual and Long Context Processing

- RWKV-7 0.1B (L12-D768) trained w/ ctx4k solves NIAH 16k, extrapolates to 32k+, 100% RNN (attention-free), supports 100+ languages and code (Score: 117, Comments: 16): RWKV-7 0.1B (L12-D768) is an attention-free, 100% RNN model excelling at long context tasks and supporting over 100 languages and code. Trained on a multilingual dataset with 1 trillion tokens, it outperforms other models like SSM (Mamba1/Mamba2) and RWKV-6 in handling long contexts, using in-context gradient descent for test-time-training. The RWKV community also developed a tiny RWKV-6 model capable of solving complex problems like sudoku with extensive chain-of-thought reasoning, maintaining constant speed and VRAM usage regardless of context length.

- RWKV's Future Potential: Enthusiasts express excitement for the potential of RWKV models, especially in their ability to outperform traditional transformer-based models with attention layers in reasoning tasks. The community anticipates advancements in scaling beyond 1B parameters and the release of larger models like the 3B model.

- Learning Resources: There is a demand for comprehensive resources to learn about RWKV, indicating interest in understanding its architecture and applications.

- Research and Development: A user shares an experience of attempting to create an RWKV image generation model, highlighting the model's capabilities and the ongoing research efforts to optimize it further. The discussion includes a reference to a related paper: arxiv.org/pdf/2404.04478.

Theme 4. Open-Source AI: The Necessary Evolution

- The real reason why, not only is opensource AI necessary, but also needs to evolve (Score: 57, Comments: 25): The author criticizes OpenAI's pricing strategy for their o1 models, highlighting the high costs associated with both base prices and invisible output tokens, which they argue amounts to a monopoly-like practice. They advocate for open-source AI and community collaboration to prevent monopolistic behavior and ensure the benefits of competition, noting that companies like Google may offer lower prices but not out of goodwill.

- Monopoly Concerns: Commenters agree that monopolistic behavior is likely in the AI field, as seen in other industries where early entrants push for regulations to maintain their market dominance. OpenAI's pricing strategy is viewed as anti-consumer, similar to practices by companies like Apple that charge premiums for exclusivity.

- Invisible Output Tokens: There's a discussion about the costs associated with "invisible" output tokens, where critics argue that charging for these as if they were part of a larger model is unfair. Some believe that users should be able to see the tokens since they are paying for them.

- Open Source vs. Big Tech: There's a belief that open-source models can foster competition in pricing, similar to how render farms operate in the rendering world. Collaboration between open-source communities and smaller companies is seen as a potential way to challenge the dominance of big players like OpenAI and Google.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. OpenAI's O3: High ARC-AGI Performance But High Cost

- OpenAI's new model, o3, shows a huge leap in the world's hardest math benchmark (Score: 196, Comments: 80): OpenAI's new model, o3, demonstrates significant progress in the ARC-AGI math benchmark, achieving an accuracy of 25.2%, compared to the previous state-of-the-art model's 2.0%. This performance leap underscores o3's advancements in tackling complex mathematical problems.

- Discussion on AI's Role in Research: Ormusn2o emphasizes the potential of AI models like o3 in advancing autonomous and assisted machine learning research, which could be crucial for achieving AGI. Meanwhile, ColonelStoic discusses the limitations of current LLMs in handling complex mathematical proofs, suggesting the integration with automated proof checkers like Lean for improvement.

- Clarification on Benchmark and Model Performance: FateOfMuffins points out a misunderstanding regarding the benchmark, clarifying that the 25% accuracy pertains to the ASI math benchmark and is not directly comparable to human performance at the graduate level. Elliotglazer further explains the tiered difficulty levels within FrontierMath, noting that the performance spans different problem complexities.

- Model Evaluation and Utilization: Craygen9 expresses interest in evaluating the model's performance across various specialized domains, advocating for the development of models tailored to specific fields like math, coding, and medicine. Marcmar11 and DazerHD1 discuss the performance metrics, highlighting differences in model performance based on thinking time, with dark blue indicating low thinking time and light blue indicating high thinking time.

- Year 2025 will be interesting - Google was joke until December and now I have a feeling 2025 will be very Good for Google (Score: 118, Comments: 26): Logan Kilpatrick expresses optimism for significant advancements in AI coding models by 2025, receiving substantial engagement with 2,400 likes. Alex Albert responds skeptically, suggesting uncertainty about these advancements, and his reply also attracts attention with 639 likes.

- OpenAI vs. Google: Commenters discuss the flexibility of OpenAI compared to Google due to corporate constraints, suggesting that both companies are now on more equal footing. Some express skepticism about Google's ability to improve their AI offerings, particularly with concerns about their search functionality and potential ad tech interference.

- Gemini Model: The Gemini model is highlighted as a significant advancement, with one user noting its superior performance compared to previous models like 4o and 3.5 sonnet. There's debate about its capabilities, particularly its native multimodal support for text, image, and audio.

- Corporate Influence: There is a shared sentiment of distrust towards Google's influence on AI advancements, with concerns about the potential negative impact of business and advertising departments on the Gemini model by 2025. Users express a mix of skepticism and anticipation for future developments in the AI landscape.

- OpenAI o3 performance on ARC-AGI (Score: 138, Comments: 88): The post links to an image, but no specific details or context about O3 performance on ARC-AGI are provided in the text body itself.

- Discussions highlight O3's significant performance gains on the ARC-AGI benchmark. RedGambitt_ emphasizes that O3 represents a leap in AI capabilities, fixing limitations in the LLM paradigm and requiring updated intuitions about AI. Despite its high performance, O3 is not considered AGI, as noted by phil917, who cites the ARC-AGI blog stating that O3 still fails on simple tasks and that ARC-AGI-2 will present new challenges.

- The cost of using O3 is a major concern, with daemeh and ReadySetPunish noting prices of around $20 per task for O3(low) and $3500 for O3(high). Phil917 mentions that the high compute variant could cost approximately $350,000 for 100 questions, highlighting the prohibitive expense for widespread use.

- The conversation includes skepticism about AGI, with hixon4 and phil917 pointing out that passing the ARC-AGI does not equate to achieving AGI. The high costs and limitations of O3 are discussed, with phil917 noting potential data contamination in results due to training on benchmark data, which diminishes the impressiveness of O3's scores.

Theme 2. Google's Gemini 2.5 Eclipses Competitors amid O3 Buzz

- He won guys (Score: 117, Comments: 25): Gary Marcus predicts by the end of 2024 there will be 7-10 GPT-4 level models but no significant advancements like GPT-5, leading to price wars and minimal competitive advantages. He highlights ongoing issues with AI hallucinations and expects only modest corporate adoption and profits.

- Discussions highlight skepticism around Gary Marcus's predictions, with users questioning the credibility of his forecasts and suggesting that OpenAI is currently leading over Google. However, some argue that Google might still achieve breakthroughs in Chain of Thought (CoT) capabilities with upcoming models.

- There is debate over the release and impact of OpenAI's o3 model, with some users noting that its availability and pricing could limit its accessibility. While o3-mini is expected by the end of January, doubts remain about the timeliness and public access of these releases.

- Users discuss the efficiency and potential cost benefits of new reasoning models for automated workflows, contrasting them with the complexity and resource requirements of previous models like GPT-4. These advancements are seen as smarter solutions for powering automated systems.

Theme 3. TinyBox GPU Manipulations and Networking Deception

- I would hate to be priced out of AI (Score: 126, Comments: 91): The post discusses concerns over the rising costs of AI services, particularly with the O1 unlimited plan already at $200 per month and potential future pricing of $2,000 per month for agentic AI. The author expresses frustration about being priced out of quality AI while acknowledging the possible justifications for these costs, prompting reflection on the broader pricing trajectory of AI technologies.

- There is a strong sentiment that open-source AI is critical to counteract the high costs of proprietary AI solutions, as expressed by GBJI who advocates supporting FOSS AI developers to fight corporate control. The concern is that high pricing could create a bottleneck for global intelligence, disadvantaging researchers outside the US/EU and stifling innovation, as noted by Odd_Category_1038.

- LegitimateLength1916 and BlueberryFew613 discuss the economic implications of AI agents potentially replacing workers, with the former suggesting businesses will opt for AI over human employees due to cost savings. However, BlueberryFew613 argues that current AI lacks the capability and infrastructure to fully replace skilled professionals, emphasizing the need for advancements in symbolic reasoning and AI integration.

- NoWeather1702 raises concerns about the scalability of AI due to insufficient energy and compute power, noting that the growth in power/compute needed for LLMs is outpacing production. ThenExtension9196, working in the global data center industry, assures that efforts are underway to address this issue.

Theme 4. ChatGPT Pro Pricing and Market Impact Discussion

- Will OpenAI release 2000$ subscription? (Score: 349, Comments: 144): The post speculates about a potential $2000 subscription from OpenAI, referencing a playful Twitter post by Sam Altman dated December 20, 2024. The post humorously suggests a connection between the sequence "ooo -> 000 -> 2000" and Altman's tweet, which features casual and humorous engagement metrics.

- O3 Model Speculation: There are discussions about a potential new model, O3, as a successor to O1O3. This speculation arises because O2 is already a trademarked phone provider in Europe, and some users humorously suggest it might offer limited messages per week for different subscription tiers.

- Pricing and Value Concerns: Commenters express skepticism about the rumored $2000/month subscription, joking that such a price would warrant an AGI (Artificial General Intelligence), which they believe would be worth much more.

- Humor and Satire: The comments are filled with humor, referencing a potential NSFW companion model and playful associations with Ozempic and OnlyFans. There's a satirical take on the marketing strategy with phrases like "ho ho ho" and "oh oh oh."

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. The O3 Frenzy and New Benchmarks

- O3 Breaks ARC-AGI: OpenAI’s O3 model hit 75.7% on the ARC-AGI Semi-Private Evaluation and soared to 87.5% in high-compute mode. Engineers cheered its “punch above its weight” reasoning, though critics worried about the model’s massive inference costs.

- High-Compute Mode Burns Big Money: Some evaluations cost thousands of dollars per run, suggesting big companies can push performance at a steep price. Smaller outfits fear the compute barrier and suspect O3’s Musk-tier budget puts SOTA gains out of reach for many.

- O2 Goes Missing, O3 Arrives Fast: OpenAI skipped “O2” over rumored trademark conflicts, rolling out O3 just months after O1. Jokes about naming aside, devs marveled at the breakneck progression from one frontier model to the next.

Theme 2. AI Editor Madness: Codeium, Cursor, Aider, and More

- Cursor 0.44.5 Ramps Up Productivity: Users praised the new version’s agent mode as fast and stable, fueling a return to Cursor from rival IDEs. A fresh $100M funding round at a $2.5B valuation added extra hype to its flexible coding environment.

- Codeium ‘Send to Cascade’ Streams Bug Reports: Codeium’s Windsurf 1.1.1 update introduced a button to forward issues straight to Cascade, removing friction from debugging. Members tested bigger images and legacy chat modes with success, referencing plan usage details in the docs.

- Aider and Cline Tag-Team Repos: Aider handles tiny code tweaks while Cline knocks out bigger automation tasks thanks to extended memory features. Devs see a sharper workflow with fewer repetitive chores and a complimentary synergy between the two tools.

Theme 3. Fine-Tuning Feuds: LoRA, QLoRA, and Pruning

- LoRA Sparks Hot Debate: Critics questioned LoRA’s effectiveness on out-of-distribution data, while others insisted it’s a must for super-sized models. Some proposed full finetuning for consistent results, igniting a never-ending training argument.

- QAT + LoRA Hit Torchtune v0.5.0: The new recipe merges quantization-aware training with LoRA to create leaner, specialized LLMs. Early adopters loved the interplay between smaller file sizes and decent performance gains.

- Vocab Pruning Proves Prickly: Some devs prune unneeded tokens to reduce memory usage but keep fp32 parameters to preserve accuracy. This balancing act highlights the messy realities of training edge-case models at scale.

Theme 4. Agents, RL Methods, and Rival Model Showdowns

- HL Chat: Anthropic’s Surprise and Building Anthropic: Fans teased a possible holiday drop, noticing the team’s enthusiastic environment. Jokes about Dario’s “cute munchkin” vibe underscored the fun tone around agent releases.

- RL Without Full Verification: Some teams speculated on reward-model flip-flops when tasks lack a perfect checker, suggesting “loose verifiers” or simpler binary heuristics. They expect bigger RL+LLM milestones by 2025, bridging uncertain outputs with half-baked reward signals.

- Gemini 2.0 Flash Thinking Fights O1: Google’s new model displays thought tokens openly, letting devs see step-by-step logic. Observers praised the transparency but questioned whether O3 now outshines Gemini in code and math tasks.

Theme 5. Creative & Multimedia AI: Notebook LM, SDXL, And Friends

- Notebook LM Pumps Out Podcasts: Students and creators used AI to automate entire show segments with consistent audio quality. The tool also helps build timelines and mind maps for journalism or academic writing, showcasing flexible content generation.

- SDXL + LoRA Rock Anime Scenes: Artists praised SDXL’s robust styles while augmenting with LoRA for anime artistry. Users overcame style mismatches, preserving color schemes for game scenes and character designs.

- AniDoc Colors Frames Like Magic: Gradio’s AniDoc transforms rough sketches into fully colored animations, handling poses and scales gracefully. Devs hailed it as a strong extension to speed up visual storytelling and prototyping.

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

- Windsurf 1.1.1 Shines with Pricing & Image Upgrades: The Windsurf 1.1.1 update introduced a 'Send to Cascade' button, usage info on plan statuses, and removed the 1MB limit on images, as noted in the changelog.

- Community members tested the 'Legacy Chat' mode and praised the new Python enhancements, referencing details in the usage docs.

- Send to Cascade Showcases Quick Issue Routing: A short demo highlighted the 'Send to Cascade' feature letting users escalate problems to Cascade, shown in a tweet.

- Contributors encouraged everyone to try it, noting the convenience of swiftly combining user feedback with dedicated troubleshooting.

- Cascade Errors Prompt Chat Resets: Users encountered internal error messages in Cascade when chats grew lengthy, prompting them to start new sessions for stability.

- They stressed concise conversation management to sustain performance, pointing to the benefits of smaller chat logs.

- Subscription Plans Confuse Some Members: One user questioned the halt of a trial pro plan for Windsurf, sparking conversation over free vs tiered features with references to Plan Settings.

- Others swapped experiences on usage limits, highlighting the differences between the extension, Cascade, and Windsurf packages.

- CLI Add-Ons and Performance Fuel Debates: Some participants requested better integration with external tools like Warp or Gemini, while noting fluctuating performance at various times of day.

- They emphasized the potential synergy of Command Line Interface usage with AI-driven coding, though concerns about slowdowns in large codebases persisted.

Cursor IDE Discord

- Cursor 0.44.5 Boosts Productivity & Attracts Funding: Developers reported that Cursor's version 0.44.5 shows marked performance improvements, particularly in agent mode, prompting many to switch back to Cursor from rival editors.

- TechCrunch revealed a new $100M funding round at a $2.5B valuation for Cursor, suggesting strong investor enthusiasm for AI-driven coding solutions.

- AI Tools Turbocharge Dev Efforts: Participants highlighted how AI-powered features reduce coding time and broaden solution searches, allowing them to finish projects more efficiently.

- They noted synergy with extra guidance from tutorials like Building effective agents, which ensure practical integration of large language models into workflows.

- Sonnet Models Spark Mixed Feedback: Users compared multiple Sonnet releases, with some praising the latest version's UI generation chops while others reported inconsistent output quality.

- They observed that system prompts can significantly impact the model's behavior, leading certain developers to adjust their approach for better results.

- Freelancers Embrace AI for Faster Delivery: Freelance contributors shared examples of using AI to automate tedious coding tasks and clean up project backlogs more rapidly.

- A few voiced concerns about clients' skepticism regarding AI usage, but overall sentiment remained positive given improved outcomes.

- UI Styling Challenges Persist in AI-Created Layouts: While AI handles backend logic effectively, it struggles with refined styling elements, forcing developers to fix front-end design issues manually.

- This shortfall emphasizes the need for more training data on visual components, which could enhance a tool’s ability to produce polished interfaces.

aider (Paul Gauthier) Discord

- OpenAI O3 Gains Speed: Benchmarks show OpenAI O3 hitting 75.7% on the ARC-AGI Semi-Private Evaluation, as noted in this tweet.

- A follow-up post from ARC Prize mentioned a high-compute O3 build scoring 87.5%, sparking talk about cost and performance improvements.

- Aider and Cline Join Forces: Developers employed Aider for smaller coding tweaks, while Cline handled heavier automation tasks with its stronger memory capabilities.

- They observed a boost in workflow by pairing these tools, reducing manual repetition in software development.

- AI Job Security Worries Grow: Commenters voiced concern that AI could displace parts of the coding role by automating simpler tasks.

- Others insisted the human element remains key for complex problem-solving, so the developer position should remain vital.

- Depth AI Steps Up for Code Insights: Engineers tested Depth AI on large codebases, noting its full knowledge graph and cross-platform integration at trydepth.ai.

- One user stopped using it when they no longer needed retrieval-augmented generation, but still praised its potential.

- AniDoc Colors Sketches with Ease: The new AniDoc tool converts rough frames into fully colored animations based on style references.

- Users appreciated its ability to handle various poses and scales, calling it an effective extension for visual storytelling.

Interconnects (Nathan Lambert) Discord

- O3’s Overdrive on ARC-AGI: OpenAI revealed O3 scoring 87.5% on the ARC-AGI test, skipping the name O2 and moving from O1 to O3 in three months, as shown in this tweet.

- Community members argued about high inference costs and GPU usage, with one joking that Nvidia stock is surging because of O3’s strong results.

- LoRA’s Limited Gains: A user questioned LoRA finetuning, pointing to an analysis paper that doubts LoRA’s effectiveness outside the training set.

- Others emphasized that LoRA becomes necessary with bigger models, sparking debate over whether full finetuning might yield more consistent results.

- Chollet Dubs O1 The Next AlphaGo: François Chollet likened O1 to AlphaGo, explaining in this post that both use extensive processes for a single move or output.

- He insisted that labeling O1 a simple language model is misleading, spurring members to question whether O1 secretly uses search-like methods.

- RL & RLHF Reward Model Challenges: Some members argued that Reinforcement Learning with uncertain outputs needs specialized reward criteria, suggesting a loose verifier for simpler tasks and linking to this discussion.

- They warned about noise in reward models, highlighting a push toward binary checks in domains like aesthetics and predicting bigger RL + LLM breakthroughs in 2025.

- Anthropic’s Surprise Release & Building Anthropic Chat: A possible Anthropic holiday release fueled speculation, though one member joked that Anthropic is too polite for a sudden product drop.

- In the YouTube video about Building Anthropic, participants playfully described Dario as a 'cute little munchkin' and praised the team’s upbeat environment.

OpenAI Discord

- OpenAI's 12th Day Finale Excites Crowd: The closing day of 12 Days of OpenAI featured Sam Altman, Mark Chen, and Hongyu Ren, with viewers directed to watch the live event here.

- Many anticipated concluding insights and potential announcements from these key figures.

- O3 Model Fever Spurs Comparisons: Participants speculated o3 might rival Google’s Gemini, with OpenAI's pricing raising questions about its market edge.

- A tweet highlighted o3’s coding benchmark rank of #175 globally, amplifying interest.

- OpenAI Direction Triggers Mixed Reactions: Some voiced dissatisfaction over OpenAI's transition away from open-source roots toward paid services, citing fewer free materials.

- Commenters doubted the accessibility of future model releases under this pricing structure.

- Chatbot Queries & 4o Restriction: A user highlighted that custom GPTs are locked to 4o, restricting model flexibility.

- Developers also sought advice on crafting a bot to interpret software features and guide users in plain language.

Unsloth AI (Daniel Han) Discord

- O3 Gains & Skeptics Collide: The new O3 soared to 75.7% on ARC-AGI's public leaderboard, spurring interest in whether it uses a fresh model, refined data strategy, and massive compute.

- Some called the results interesting but questioned if O1 plus fine-tuning hacks might explain the bump, pointing to possible oversights in the official publication.

- FrontierMath's Surprising Accuracy: A new FrontierMath result jumped from 2% to 25%, according to a tweet by David Dohan, challenging prior assumptions about advanced math tasks.

- Community members cited Terence Tao stating this dataset should remain out of AI's reach for years, while others worried about potential overfitting or data leakage.

- RAG & Kaggle Speed Fine-Tuning: RAG training dropped from 3 hours to 15 minutes by leveraging GitHub materials, with a 75k-row CSV converted from JSON boosting model accuracy.

- Some suggested Kaggle for 30 free GPU hours weekly, and encouraged focusing on data quality over sheer volume for Llama fine-tuning.

- SDXL & LoRA Team Up for Anime: Users praised SDXL for strong anime results, noting that Miyabi Hoshimi's LoRA model can boost style accuracy.

- Others reported difficulty pairing Flux with LoRA for consistent anime outputs, expecting Unsloth support for Flux soon.

- TGI vs vLLM Showdown: TGI and vLLM sparked debate over speed and adapter handling, referencing Text Generation Inference docs.

- Some prefer vLLM for its flexible approach, while others champion TGI for reliably serving large-scale model deployments.

Nous Research AI Discord

- O3 Breaks the Bank, Bests O1: The freshly announced O3 model outperformed O1 in coding tasks and rang up a compute bill of $1,600,250, as noted in this tweet.

- Enthusiasts pointed to substantial financial barriers, remarking that the high cost could limit widespread adoption.

- Gemini 2.0 Stages a Flashy Showdown: Google introduced Gemini 2.0 Flash Thinking to rival OpenAI’s O1, allowing users to see step-by-step reasoning as reported in this article.

- Observers contrasted it with O1, highlighting the new dropdown-based explanation feature as a significant step toward transparent model introspection.

- Llama 3.3’s Overeager Function Calls: Members noted Llama 3.3 is much quicker to trigger function calls than Hermes 3 70b, which can drive up costs.

- They found Hermes more measured with calls, reducing expense and improving consistency overall.

- Subconscious Prompting Sparks Curiosity: A proposal for latent influence injecting in prompts surfaced, drawing parallels to subtle NLP-style interventions.

- Participants discussed the possibility of shaping outputs without direct references, likening it to behind-the-scenes suggestions.

- Thinking Big with

Tag Datasets : A collaboration effort emerged to build a reasoning dataset using thetag, targeting models like O1-Preview or O3. - Contributors aim to embed full reasoning traces in the raw data for improved clarity, seeking synergy between structured thought and final answers.

Stackblitz (Bolt.new) Discord

- Merry Madness with Mistletokens: The Bolt team introduced Mistletokens with 2M free tokens for Pro users until year-end and 200K daily plus a 2M monthly limit for free users.

- They aim to spark more seasonal projects and solutions with these expanded holiday token perks.

- Bolt Battles Redundancy: Developers complained about Bolt draining tokens without cleaning duplicates, referencing 'A lot of duplication with diffs on.'

- Some overcame the issue through targeted reviews like 'Please do a thorough review and audit of [The Auth Flow of my application].' that forced it to address redundancy.

- Integration Bugs Spark Frustration: Multiple users noted Bolt automatically creating new Supabase instances instead of reusing old ones, which led to wasted tokens.

- Repeated rate-limits triggered more complaints, with users insisting purchased tokens should exempt them from free plan constraints.

- WebRTC Dreams and Real-time Streams: Efforts to integrate WebRTC for video chat apps on Bolt resulted in technical difficulties around real-time features.

- Community members requested pre-built WebRTC solutions with customizable configurations for smoother media handling.

- Subscription Tango and Storefront Showoff: Many grew wary of needing an active subscription to tap purchased token reloads, urging clearer payment guidelines.

- Meanwhile, a dev previewed a full-stack ecommerce project with a headless backend, a refined storefront, and a visual editor aiming to stand on its own.

LM Studio Discord

- OpenAI Defamation Disruption: A linked YouTube video showed a legal threat against OpenAI accusing the AI of making defamatory statements about a specific individual.

- Members debated how training on open web data could produce erroneous attributions, raising concerns about name filters in final outputs.

- LM Studio's Naming Nook: Participants noticed LM Studio auto-generates chat names, likely by using a small built-in model to summarize the conversation.

- Some speculated that a bundled summarizer is embedded, making chat interactions more seamless and user-friendly.

- 3090 Gobbles 16B Models: Engineers affirmed that a 3090 GPU with 64 GB RAM plus a 5800X processor can handle 16B parameter models at comfortable token speeds.

- They mentioned 70B models still need higher VRAM and smart quantization strategies to maintain useful performance.

- Parameter Quantization Quips: Enthusiasts explained that Q8 quantization is often nearly lossless for many models, while Q6 still preserves decent precision.

- They highlighted trade-offs between smaller file sizes and model accuracy, emphasizing balanced approaches for best results.

- eGPU Power Plays: One member showcased a Razer Core X rig with a 3090 to turbocharge an i7 laptop via Thunderbolt.

- This setup sparked interest in external GPUs as a flexible choice for those wanting desktop-grade performance on portable systems.

OpenRouter (Alex Atallah) Discord

- Gemini 2.0 Flash Thinking Flickers: Google introduced the new Gemini 2.0 Flash Thinking model that outputs thinking tokens directly into text, now accessible on OpenRouter.

- It's briefly unavailable for some users, but you can request access via Discord if you're keen on experimenting.

- BYOK & Fee Talk Takes Center Stage: The BYOK (Bring Your Own API Keys) launch allows users to pool their own provider credits with OpenRouter’s, incurring a 5% fee on top of upstream costs.

- A quick example was requested to clarify fee structures, and updated docs will detail how usage fees combine provider rates plus that extra slice.

- AI To-Do List Taps 5-Minute Rule: An AI To-Do List built on Open Router harnesses the 5-Minute Rule to jump-start tasks automatically.

- It also creates new tasks recursively, leaving users to remark that “it’s actually fun to do work.”

- Fresh Model Releases & AGI Dispute: Community chatter hints at o3-mini and o3 arriving soon, with naming conflicts sparking inside jokes.

- Debate over AGI took a turn with some calling the topic a 'red herring', directing curious minds to a 1.5-hour video discussion.

- Crypto Payments API Sparks Funding Flow: The new Crypto Payments API lets LLMs handle on-chain transactions through ETH, 0xPolygon, and Base, as detailed in OpenRouter's tweet.

- It introduces headless, autonomous financing, giving agents methods to transact independently and opening avenues for novel use cases.

Eleuther Discord

- Natural Attention Nudges Adam: Jeroaranda introduced a Natural Attention approach that approximates the Fisher matrix and surpasses Adam in certain training scenarios, referencing proof details on GitHub.

- Community members stressed the need for a causal mask and debated quality vs. quantity in pretraining data, underscoring intensive verification for these claims.

- MSR’s Ethical Quagmire Exposed: Concerns about MSR’s ethics erupted following examples of plagiarism, involving two papers including a NeurIPS spotlight award runner-up.

- Participants expressed distrust in referencing MSR work and questioned the credibility of their research environment, warning others to tread carefully.

- BOS Token’s Inordinate Influence: Members discovered that BOS token positions can have activation norms up to 30x higher, potentially skewing SAE training results.

- They suggested excluding BOS from training data or applying normalization to mitigate the disproportionate effect, referencing short-context experiments with 2k and 1024 context lengths.

- Benchmark Directory Debacle: Users were thrown off by logs saving to

./benchmark_logs/name/__mnt__weka__home__...instead of./benchmark_logs/name/, complicating multi-model runs.- They proposed unique naming conventions and a specialized harness for comparing all checkpoints, balancing improvement with backwards compatibility.

- GPT-Neox MFU Logging Gains Traction: Pull Request #1331 added MFU/HFU metrics for

neox_args.peak_theoretical_tflopsusage and integrated these stats into WandB and TensorBoard.- The community appreciated the new tokens_per_sec and iters_per_sec logs, and merged the PR after positive feedback despite delayed testing.

Modular (Mojo 🔥) Discord

- FFI Friction: v24.6 Tangle: An upgrade from v24.5 to v24.6 triggered clashes with the standard library’s built-in write function, complicating socket usage in Mojo.

- Developers proposed FileDescriptor as a workaround, referencing write(3p) to avoid symbol collisions.

- Libc Bindings for Leaner Mojo: Members pushed for broader libc bindings, reporting 150+ functions already sketched out for Mojo integration.

- They advocated a single repository for these bindings to bolster cross-platform testing and system-level functionality.

- Float Parsing Hits a Snag: Porting float parsing from Lemire fell short, with standard library methods also proving slower than expected.

- A pending PR seeks to upgrade atof and boost numeric handling, aiming to refine performance in data-heavy tasks.

- Tensorlike Trait Tussle: A request at GitHub Issue #274 asked tensor.Tensor to implement tensor_utils.TensorLike, asserting it already meets the criteria.

- Arguments arose about

Tensoras a trait vs. type, reflecting the challenge of direct instantiation within MAX APIs.

- Arguments arose about

- Modular Mail: Wrapping Up 2024: Modular thanked the community for a productive 2024, announcing a holiday shutdown until January 6 with reduced replies during this period.

- They invited feedback on the 24.6 release via a forum thread and GitHub Issues, fueling anticipation for 2025.

Latent Space Discord

- OpenAI’s O3 Surges on ARC-AGI: OpenAI introduced the O3 model, scoring 75.7% on the ARC-AGI Semi-Private Evaluation and 87.5% in high-compute mode, indicating strong reasoning performance. Researchers mentioned possible parallel Chain-of-Thought mechanisms and substantial resource demands.

- Many debated the model’s cost—rumored at around $1.5 million—while celebrating leaps in code, math, and logic tasks.

- Alec Radford Departure: Alec Radford, known for his early GPT contributions, confirmed his exit from OpenAI for independent research. Members speculated about leadership shifts and potential impact on upcoming model releases.

- Some predicted an internal pivot soon, and others hailed Radford’s past work as key to GPT’s foundation.

- Economic Tensions in High-Compute AI: Discussions raised concerns that hefty computational budgets, like those powering O3, might hamper commercial viability. Participants cautioned that while breakthroughs are exciting, they carry significant operating costs.

- They weighed whether the improved performance on ARC-AGI justifies the expenditure, especially for specialized tasks in code and math.

- Safety Testing Takes Center Stage: OpenAI invited volunteers to stress-test O3 and O3-mini, reflecting an emphasis on spotting potential misuse. This call underscores the push for thorough vetting before wider deployment.

- Safety researchers welcomed the opportunity, reinforcing community-driven oversight as a key measure of responsible AI progress.

- API Keys & Character AI Role-Play: Developers reported tinkering with API keys, highlighting day-to-day experimentation in the AI community. Meanwhile, Character AI draws a younger demographic, with interest in 'Disney princess' style interactions.

- Participants noted user experience signals, referencing “magical math rocks” humor to highlight playful engagement beyond typical business applications.

Notebook LM Discord Discord

- Podcasting Gains Steam with AI: One conversation highlighted the use of AI to produce a podcast episode, accelerating content creation and improving section audio consistency.

- Additionally, a project titled Churros in the Void used Notebook LM and LTX-studio for visuals and voiceovers, reinforcing a self-driven approach to voice acting.

- Notebook LM Bolsters Education: One user described Notebook LM as a powerful tool for building timelines and mind maps in a Journalism class, referencing data from this notebook.

- They integrated course materials and topic-specific podcasts, reporting improved organization of content for coherent papers.

- AI Preps Job Applicants: One member used Notebook LM to analyze their resume against a job ad, generating a custom study guide for upcoming interviews.

- They recommended others upload resumes for immediate pointers on skill alignment.

- Interactive Mode & Citation Tools Hit Snags: Several users struggled to access the new voice-based interactive mode, raising questions about its uneven rollout.

- Others reported a glitch that removed citation features in saved notes, and the dev team confirmed a fix is in progress.

- Audio Overviews & Language Limitations: A user requested tips on recovering a missing audio overview, noting the difficulty of reproducing an identical version once it's lost.

- Similar threads explored how Notebook LM might handle diverse language sources more accurately by separating content into distinct sets.

Perplexity AI Discord

- OpenAI’s O3 Overdrive: OpenAI introduced new o3 and o3-mini models, with coverage from TechCrunch that stirred conversation regarding potential performance leaps beyond the o1 milestone.

- Some participants highlighted the significance of these releases for large-scale deployments, while referencing a video presentation where Sam Altman called for test-driven caution.

- Lepton AI Nudges Node Payment: A newly launched Node-based pay solution echoed the open-source blueprint from Lepton AI with discussions questioning originality.

- Comments pointed to the GitHub repo as evidence of prior open efforts, fueling arguments about reuse and proper citations.

- Samsung’s Moohan Mission: Samsung introduced Project Moohan as an AI-based initiative, prompting speculation about new integrated features.

- Details remain few, but participants are curious about synergy with existing hardware and AI platforms.

- AI Use at Work Surges: A recent survey claimed that over 70% of employees are incorporating AI into their daily tasks.

- People noted how new generative tools streamline code reviews and documentation, suggesting a rising standard for advanced automation.

Nomic.ai (GPT4All) Discord

- GPT4All v3.6.x: Swift Steps, Snappy Fixes: The new GPT4All v3.6.0 arrived with Reasoner v1, a built-in JavaScript code interpreter, plus template compatibility improvements.

- Community members promptly addressed regression bugs in v3.6.1, with Adam Treat and Jared Van Bortel leading the charge as seen in Issue #3333.

- Llama 3.3 & Qwen2 Step Up: Members highlighted functional gains in Llama 3.3 and Qwen2, citing improved performance over previous iterations.

- They referenced a post from Logan Kilpatrick showcasing puzzle-solving with visual and textual elements.

- Phi-4 Punches Above Its Weight: The Phi-4 model at 14B parameters reportedly rivals Llama 3.3 70B according to Hugging Face.

- Community testers commented on smooth local runs, noting strong performance and enthusiasm for further trials.

- Custom Templates & LocalDocs Link Up: A specialized GPT4All chat template utilizes a code interpreter for robust reasoning, verified to function with multiple model types.

- Members described connecting the GPT4All local API server with LocalDocs (Docs), enabling effective offline operation.

Stability.ai (Stable Diffusion) Discord

- Local Generator Showdown: SD1.5 vs SDXL 1.0: Some members praised SD1.5 for stable performance, while others recommended SDXL 1.0 with comfyUI for advanced results.

- They noted improvements in text-to-image clarity for concept art and stressed the minimal setup headaches of these local models.

- Flux-Style Copy Gains Steam: A user got Flux running locally and asked for tips on matching a reference image's style for game scenes.

- They mentioned successfully preserving color schemes and silhouettes, citing consistent parameters in Flux.

- Scams: Tech Support Server Raises Red Flags: A suspicious group claiming to offer Discord help requested wallet details, sparking security concerns.

- Members compared safer alternatives and reminded each other about standard cautionary measures.

- SF3D Emerges for 3D Asset Creation: Enthusiasts pointed to stabilityai/stable-fast-3d on Hugging Face for generating isometric characters and props.

- They reported stable results for creating game-ready objects with fewer artifacts than other approaches.

- LoRA Magic for Personal Art Training: An artist described wanting faster art generation by training new models with their own images.

- Others recommended LoRA finetuning, especially for Flux or SD 3.5, to lock in style details.

Cohere Discord

- Cohere c4ai Commands MLX Momentum: During an MLX integration push, members tested Cohere’s c4ai-command-r7b model, praising improved open source synergy.

- They highlighted early VLLM support and pointed to a pull request that could accelerate further expansions.

- 128K Context Feat Impresses Fans: A community review showcased Cohere’s model handling a 211009-token danganronpa fanfic on 11.5 GB of memory.

- Discussions credited the lack of positional encoding for robust extended context capacity, calling it a key factor in large-scale text tasks.

- O3 Model Sparks Speculation: Members teased an O3 model with features reminiscent of GPT-4, fueling excitement over voice-based interactions.

- They predicted a possible release soon, anticipating advanced AI functionality.

- Findr Debuts on Cohere’s Coattails: Community members celebrated Findr’s launch, crediting Cohere’s tech stack for powering it behind the scenes.

- One member asked about which Cohere features are used, reflecting a desire to examine the integration choices.

LAION Discord

- OpenAI o3 Overdrive: OpenAI unveiled its o3 reasoning model, hitting 75.7% in low-compute mode and 87.5% in high-compute mode.

- A conversation cited François Chollet’s tweet and ARC-AGI-Pub results, implying fresh momentum in advanced task handling.

- AGI or Not: The Debate Rages: Some asserted that surpassing human performance on tasks such as ARC signals AGI.

- Others insisted that AGI is too vaguely defined, urging context-driven meanings to dodge confusion.

- Elo Ratings and Compute Speculations: Participants compared o3 results to grandmaster-level Elo, referencing an Elo Probability Calculator.

- They pondered if weaker models could reach similar results with additional test-time compute at $20 per extended run.

- Colorful Discourse on DCT and VAEs: Discussions centered on DCT and DWT encoding with color spaces like YCrCb or YUV, questioning if extra steps justify the training overhead.

- Some referenced the VAR paper to predict DC components first and then add AC components, highlighting the role of lightness channels in human perception.

GPU MODE Discord

- Triton Docs Stumble, Devs Step Up: The search feature on Triton’s documentation is broken, and the community flagged missing specs on tl.dtypes like tl.int1.

- Willing contributors want to fix it if the docs backend is open for edits.

- Flex Attention Gains Momentum: Members tinkering with flex attention plus context parallel signaled that an example might soon land in attn-gym.

- They see a direct path to combine these approaches to handle bigger tasks effectively.

- Diffusion Autoguidance Lights Up: A new NeurIPS 2024 paper by Tero Karras outlines how diffusion models can be shaped through the Autoguidance method.

- Its runner-up status and PDF link sparked plenty of talk about the impact on generative modeling.

- ARC CoT Data Fuels LLaMA 8B Tests: A user is producing a 10k-sample ARC CoT dataset to see if a fine-tuned LLaMA 8B surpasses the base in log probability metrics.

- They plan to examine the influence of 'CoT' training after generating a few thousand samples, highlighting potential improvements for future evaluations.

- PyTorch Puts Sparsity in Focus: The PyTorch sparsity design introduced

to_sparse_semi_structuredfor inference, with users suggesting a swap tosparsify_for greater flexibility.- This approach also spotlights native quantization and other built-in features for model optimization.

LlamaIndex Discord

- LlamaParse Boosts Audio Parsing: The LlamaParse tool now parses audio files, complementing PDF and Word support with speech-to-text conversion.

- This update cements LlamaParse as a strong cross-format parser for multimedia workflows, according to user feedback.

- LlamaIndex Celebrates a Year of Growth: They announced tens of millions of pages parsed in a year-end review, plus consistent weekly feature rollouts.

- They teased LlamaCloud going GA in early 2024 and shared a year in review link with detailed stats.

- Stock Analysis Bot Shines with LlamaIndex: A quick tutorial walked through building an automated stock analysis agent using FunctionCallingAgent and Claude 3.5 Sonnet.

- Engineers can reference Hanane D's post for a one-click solution that simplifies finance tasks.

- Document Automation Demos with LlamaIndex: A notebook illustrated how LlamaIndex can standardize units and measurements across multiple vendors.

- The example notebook demonstrated unified workflows for real-world production settings.

- Fine-Tuning LLM with Synthetic Data: Users discussed generating artificial samples for sentiment analysis, referencing a Hugging Face blog.

- They recommended prompt manipulation as a stepping stone while others discussed broader approaches to model refinement.

LLM Agents (Berkeley MOOC) Discord

- Hackathon Hustle & Reopened Rush: Due to participants facing technical difficulties, the hackathon submission form reopened until Dec 20th at 11:59PM PST.

- Organizers confirmed no further extensions, so participants should finalize details like the primary contact email in the certification form for official notifications.

- Manual Submission Checks & Video Format Bumps: A manual verification process is offered for participants unsure about their submission, preventing last-minute confusion.

- Some resorted to email-based entries after YouTube issues, saying they remain focused on the hackathon rather than the course.

- Agent Approach Alternatives & AutoGen Warnings: A participant referenced a post about agent-building strategies, advising against relying solely on frameworks like Autogen.

- They suggested simpler, modular methods in future MOOCs, emphasizing instruction tuning and function calling.

Torchtune Discord

- Torchtune v0.5.0 Splashes In: The devs launched Torchtune v0.5.0, packing in Kaggle integration, QAT + LoRA training, Early Exit recipes, and Ascend NPU support.

- They shared release notes detailing how these upgrades streamline finetuning for heavier models.

- QwQ-preview-32B Extends Token Horizons: Someone tested QwQ-preview-32B on 8×80G GPUs, aiming for context parallelism beyond 8K tokens.

- They mentioned optimizer_in_bwd, 8bit Adam, and QLoRA optimization flags as ways to stretch input size.

- fsdp2 State Dict Loading Raises Eyebrows: Developers questioned loading the fsdp2 state dict when sharded parameters conflicted with non-DTensors in distributed loading code.

- They worried about how these mismatches complicate deploying FSDPModule setups across multiple nodes.

- Vocab Pruning Needs fp32 Care: Some participants pruned vocab to shrink model size yet insisted on preserving parameters in fp32 for consistent accuracy.

- They highlighted separate handling of bf16 calculations and fp32 storage to maintain stable finetuning.

DSPy Discord

- Litellm Proxy Gains Traction: Litellm can be self-hosted or used via a managed service, and it can run on the same VM as your primary system for simpler operations. The discussion stressed that this setup makes integration smoother by bundling the proxy with related services.

- Participants noted it meets a broad set of infrastructure needs while staying easy to adjust.

- Synthetic Data Sparks LLM Upgrades: A post titled On Synthetic Data: How It’s Improving & Shaping LLMs at dbreunig.com explained how synthetic data fine-tunes smaller models by simulating chatbot-like inputs. The conversation also covered its limited impact on large-scale tasks and the nuance of applying it across diverse domains.

- Members observed mixed results but agreed these generated datasets can push reasoning studies forward.

- Optimization Costs Stir Concerns: Extended sessions for advanced optimizers highlighted escalating costs, prompting suggestions to cap calls or tokens. Some proposed smaller parameter settings or pairing LiteLLM with preset limits to sidestep overspending.

- Voices in the discussion underscored active resource monitoring to avoid unexpected expenses.

- MIPRO 'Light' Mode Tames Resources: MIPRO 'Light' mode offers those looking to run optimization steps a leaner way forward. It was said to balance processing demands against performance in a more controlled environment.

- Early adopters mentioned that fewer resources can still produce decent outcomes, indicating a promising path for trials.

OpenInterpreter Discord

- OpenInterpreter's server mode draws interest: One user asked about documentation for running OpenInterpreter on a VPS in server mode, curious whether commands run locally or on the server.

- They expressed eagerness to confirm remote usage possibilities, highlighting potential for flexible configurations.

- Google Gemini 2.0 hype intensifies: Someone questioned the new Google Gemini 2.0 multimodal feature, especially its os mode, noting that access could be limited to 'tier 5' users.

- They wondered about its availability and performance, suggesting a need for broader testing.

- Local LLM integration brings cozy vibes: A participant celebrated local LLM integration for adding a welcome offline dimension to OpenInterpreter.

- They previously feared loss of this feature but voiced relief that it's still supported.

- SSH usage inspires front-end aims: One user shared their method of interacting with OpenInterpreter through SSH, noting a straightforward remote experience.

- They hinted at plans for a front-end interface, confident about implementing it with minimal friction.

- Community flags spam: A member alerted others to referral spam in the chat, seeking to maintain a clean environment.

- They signaled the incident to a relevant role, hoping for prompt intervention.

Axolotl AI Discord

- KTO and Liger: A Surprising Combo: Guild members confirmed that Liger now integrates KTO, supporting advanced synergy that aims to boost model performance.

- They noted pain from loss parity concerns against the HF TRL baseline, prompting further scrutiny on training metrics.

- DPO Dreams: Liger Eyes Next Steps: A team is focusing on Liger DPO as the main priority, aiming for stable operations that could lead to smoother expansions.

- Frustrated voices emerged over the loss parity struggles, yet optimism persists that fixes will soon surface for these lingering issues.

tinygrad (George Hotz) Discord

- Stale PRs Face the Axe: A user plans to close or automate closure of PRs older than 30 days starting next week, removing outdated code proposals. This frees the project from excess open requests while keeping the code repository lean.

- They stressed the importance of tidying up longstanding PRs. No further details or links were shared beyond the proposed timeline.

- Bot Might Step In: They mentioned possibly using a bot to track or close inactive PRs, reducing manual oversight. This approach could cut down on housekeeping tasks and maintain an uncluttered development queue.

- No specific bot name or implementation details were provided. The conversation ended without additional references or announcements.

Gorilla LLM (Berkeley Function Calling) Discord

- Watt-Tool Models Boost Gorilla Leaderboard: A pull request #847 was filed to add watt-tool-8B and watt-tool-70B to Gorilla’s function calling leaderboard.

- These models are also accessible at watt-tool-8B and watt-tool-70B for further experimentation.

- Contributor Seeks Review Before Christmas: They requested a timely check of the new watt-tool additions, hinting at potential performance and integration questions.

- Community feedback on function calling use cases and synergy with existing Gorilla tools was encouraged before the holiday pause.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!