[AINews] o3-mini launches, OpenAI on "wrong side of history"

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

o3-mini is all you need.

AI News for 1/30/2025-1/31/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 9062 messages) for you. Estimated reading time saved (at 200wpm): 843 minutes. You can now tag @smol_ai for AINews discussions!

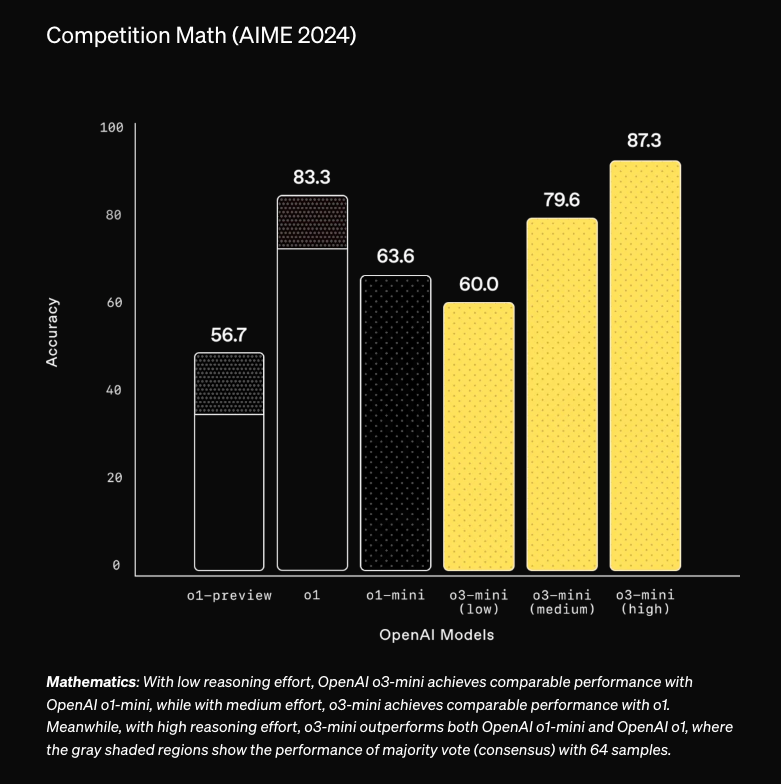

As planned even before the DeepSeek r1 drama, OpenAI released o3-mini, with the "high" reasoning effort option handily outperforming o1-full (and handily so in OOD benchmarks like Dan Hendrycks' new HLE and Text to SQL benchmarks, though Cursor disagrees):

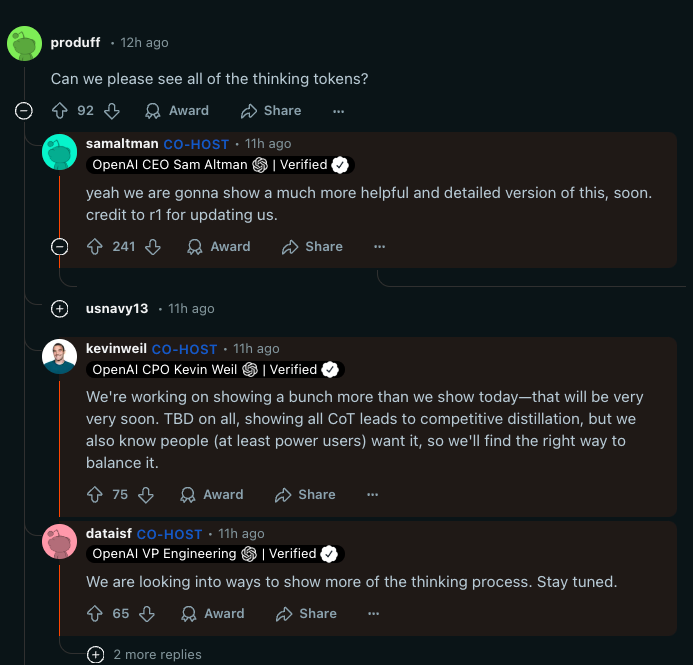

The main area of R1 response was two fold: first a 63% cut in o1-mini and o3-mini prices, and second Sam Altman acknowledging in today's Reddit AMA that they will be showing "a much more helpful and detailed version" of thinking tokens, directly crediting DeepSeek R1 for "updating" his assumptions.

Perhaps more significantly, Sama also acknowledged being "on the wrong side of history" in their (not materially existent beyond Whisper) open source strategy.

You can learn more in today's Latent Space pod with OpenAI.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Model Releases and Performance

- OpenAI's o3-mini, a new reasoning model, is now available in ChatGPT for free users via the "Reason" button, and through the API for paid users, with Pro users getting unlimited access to the "o3-mini-high" version.

- It is described as being particularly strong in science, math, and coding, with the claim that it outperforms the earlier o1 model on many STEM evaluations @OpenAI, @polynoamial, and @LiamFedus.

- The model uses search to find up-to-date answers with links to relevant web sources, and was evaluated for safety using the same methods as o1, significantly surpassing GPT-4o in challenging safety and jailbreak evals @OpenAI, @OpenAI.

- The model is also much cheaper, costing 93% less than o1 per token, with input costs of $1.10/M tokens and output costs of $4.40/M tokens (with a 50% discount for cached tokens) @OpenAIDevs.

- It reportedly outperforms o1 in coding and other reasoning tasks at lower latency and cost, particularly on medium and high reasoning efforts @OpenAIDevs, and performs exceptionally well on SQL evaluations @rishdotblog.

- MistralAI released Mistral Small 3 (24B), an open-weight model with an Apache 2.0 license. It is noted to be competitive on GPQA Diamond, but underperforming on MATH Level 5 compared to Qwen 2.5 32B and GPT-4o mini, with a claimed 81% on MMLU @EpochAIResearch, @ArtificialAnlys, and available on the Mistral API, togethercompute and FireworksAI_HQ platforms, with Mistral's API being the cheapest @ArtificialAnlys. This dense 24B parameter model achieves 166 output tokens per second, costs $0.1/1M input tokens and $0.3/1M output tokens.

- DeepSeek R1 is supported by Text-generation-inference v3.1.0, for both AMD and Nvidia, and is available through the ai-gradio library with replicate @narsilou, @_akhaliq, @reach_vb.

- The distilled versions of DeepSeek models have been benchmarked on llama.cpp with an RTX 50 @ggerganov. The model is noted to have a brute force approach, leading to unexpected approaches and edge cases @nrehiew_. A 671 billion parameter model is reportedly achieving 3,872 tokens per second @_akhaliq.

- Allen AI released Tülu 3 405B, an open-source model built on Llama 3.1 405B, outperforming DeepSeek V3, the base model behind DeepSeek R1, and on par with GPT-4o @_philschmid. The model uses a combination of public datasets, synthetic data, supervised finetuning (SFT), Direct Preference Optimization (DPO) and Reinforcement Learning with Verifiable Reward (RLVR).

- Qwen 2.5 models, including 1.5B (Q8) and 3B (Q5_0) versions, have been added to the PocketPal mobile app for both iOS and Android platforms. Users can provide feedback or report issues through the project's GitHub repository, with the developer promising to address concerns as time permits. The app supports various chat templates (ChatML, Llama, Gemma) and models, with users comparing performance of Qwen 2.5 3B (Q5), Gemma 2 2B (Q6), and Danube 3. The developer provided screenshots.

- Other Notable Model Releases: arcee_ai released Virtuoso-medium, a 32.8B LLM distilled from DeepSeek V3, Velvet-14B is a family of 14B Italian LLMs trained on 10T tokens, OpenThinker-7B is a fine-tuned version of Qwen2.5-7B and NVIDIAAI released a new series of Eagle2 models with 1B and 9B sizes. There's also Janus-Pro from deepseek_ai, which is a new any-to-any model for image and text generation from image or text inputs, and BEN2, a new background removal model. YuE, a new open-source music generation model was also released. @mervenoyann

Hardware, Infrastructure, and Scaling

- DeepSeek is reported to have over 50,000 GPUs, including H100, H800 and H20 acquired pre-export control, with infrastructure investments of $1.3B server CapEx and $715M operating costs, and is potentially planning to subsidize inference pricing by 50% to gain market share. They use Multi-head Latent Attention (MLA), Multi-Token Prediction, and Mixture-of-Experts to drive efficiency @_philschmid.

- There are concerns that the Nvidia RTX 5090 will have inadequate VRAM with only 32GB, when it should have at least 72GB, and that the first company to make GPUs with 128GB, 256GB, 512GB, or 1024GB of VRAM will dethrone Nvidia @ostrisai, @ostrisai.

- OpenAI's first full 8-rack GB200 NVL72 is now running in Azure, highlighting compute scaling capabilities @sama.

- A VRAM reduction of 60-70% change to GRPO in TRL is coming soon @nrehiew_.

- A distributed training paper from Google DeepMind reduces the number of parameters to synchronize, quantizes gradient updates, and overlaps computation with communication, achieving the same performance with 400x less bits exchanged @osanseviero.

- There's an observation that models trained on reasoning data might hurt instruction following. @nrehiew_

Reasoning and Reinforcement Learning

- Inference-time Rejection Sampling with Reasoning Models is suggested as an interesting approach to scale performance and synthetic data generation by generating K

- TIGER-Lab replaced answers in SFT with critiques, claiming superior reasoning performance without any

distillation, and their code, datasets, and models have been released on HuggingFace @maximelabonne. - The paper "Thoughts Are All Over the Place" notes that o1-like LLMs switch between different reasoning thoughts without sufficiently exploring promising paths to reach a correct solution, a phenomenon termed "underthinking" @_akhaliq.

- There are various observations that RL is increasingly important. It has been noted that RL is the future and that people should stop grinding leetcode and start grinding cartpole-v1 @andersonbcdefg. DeepSeek uses GRPO (Group Relative Policy Optimization), which gets rid of the value model, instead normalizing the advantage against rollouts in each group, reducing compute requirements @nrehiew_.

- A common disease in some Silicon Valley circles is noted: a misplaced superiority complex @ylecun, which is also connected to Effective Altruism @TheTuringPost.

- Diverse Preference Optimization (DivPO) trains models for both high reward and diversity, improving variety with similar quality @jaseweston.

- Rejecting Instruction Preferences (RIP) is a method to curate high-quality data and create high quality synthetic data, leading to large performance gains across benchmarks @jaseweston.

- EvalPlanner is a method to train a Thinking-LLM-as-a-Judge that learns to generate planning & reasoning CoTs for evaluation, showing strong performance on multiple benchmarks @jaseweston.

Tools, Frameworks, and Applications

- LlamaIndex has day 0 support for o3-mini, and is one of the only agent frameworks that allow developers to build multi-agent systems at different levels of abstraction, including the brand new AgentWorkflow wrapper. The team also highlights LlamaReport for report generation, a core use case for 2025 @llama_index, @jerryjliu0, @jerryjliu0.

- LangChain has an Advanced RAG + Agents Cookbook, a comprehensive open source guide for production ready RAG techniques with agents, built with LangChain and LangGraph. LangGraph is a LangChain extension that supercharges AI agents with cyclical workflows @LangChainAI, @LangChainAI. They also released Research Canvas ANA, an AI research tool built on LangGraph that transforms complex research with human guided LLMs @LangChainAI.

- Smolagents is a tool that allows tool-calling agents to run with a single line of CLI, providing access to thousands of AI models and several APIs out-of-the-box @mervenoyann, @mervenoyann.

- Together AI provides cookbooks with step-by-step examples for agent workflows, RAG systems, LLM fine tuning and search @togethercompute.

- There's a call for a raycastapp extension for Hugging Face Inference Providers @reach_vb.

- There are advancements in WebAI agents for structured outputs and tool calling, with examples of local browser based agents being run on Gemma 2 @osanseviero, @osanseviero.

Industry and Company News

- Apple is criticized for missing the AI wave after spending a decade on a self-driving car and a headset that failed to gain traction @draecomino.

- Microsoft reports a 21% YoY growth in their search and news business, highlighting the importance of web search in grounding LLMs @JordiRib1.

- Google has released Flash 2.0 for Gemini and upgraded to the latest version of Imagen 3, and is also leveraging AI in Google Workspace for small businesses @Google, @Google. Google is also offering WeatherNext models to scientists for research @GoogleDeepMind.

- Figure AI is hiring, and is working on training robots to perform high speed, high performance use case work, with potential to ship 100,000 robots in the next four years @adcock_brett, @adcock_brett, @adcock_brett, @adcock_brett.

- Sakana AI is hiring in Japan for research interns, applied engineers, and business analysts @hardmaru, @SakanaAILabs.

- Cohere is hiring a research executive partner to drive cross-institutional collaborations @sarahookr.

- OpenAI is teaming up with National Labs on nuclear security @TheRundownAI.

- DeepSeek's training costs are clarified as misleading. A report suggests that the reported $6M training figure excludes infrastructure investment ($1.3B server CapEx, $715M operating costs), with access to ~50,000+ GPUs @_philschmid, @dylan522p.

- The Bank of China has announced 1 trillion yuan ($140B) in investments for the AI supply chain in response to Stargate, and the Chinese government is subsidizing data labeling and has issued 81 contracts to LLM companies to integrate LLMs into their military and government @alexandr_wang, @alexandr_wang, @alexandr_wang.

- There's an increase in activity from important people, suggesting a growing pace of development in the AI field @nearcyan.

- The Keras team at Google is looking for part-time contractors, focusing on KerasHub model development @fchollet.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. OpenAI's O3-Mini High: Versatile But Not Without Critics

- o3-mini and o3-mini-high are rolling out shortly in ChatGPT (Score: 426, Comments: 172): o3-mini and o3-mini-high are new reasoning models being introduced in ChatGPT. These models are designed to enhance capabilities in coding, science, and complex problem-solving, offering users the choice to engage with them immediately or at a later time.

- Model Naming and Numbering Confusion: Users express frustration over the non-sequential naming of models like o3-mini-high and GPT-4 transitioning to o1, which complicates understanding which version is newer or superior. Some comments clarify that the "o" line represents a different class due to multimodal capabilities, and the numbering is intentionally non-sequential to differentiate these models from the GPT series.

- Access and Usage Limitations: There are complaints about limited access, especially from ChatGPT Pro users and European citizens, with some users reporting a 3 messages per day limit. However, others mention that Sam Altman indicated a limit of 100 per day for plus users, highlighting discrepancies in user experience and information.

- Performance and Cost: Discussions highlight that o1 models outperform R1 for complex tasks, while o3-mini-high is described as a higher compute model that provides better results at a higher cost. Some users express interest in performance comparisons between o3-mini-high, o1, and o1-pro, noting issues with long text summaries and incomplete responses.

- OpenAI to launch new o3 model for free today as it pushes back against DeepSeek (Score: 418, Comments: 116): OpenAI is set to release its o3 model for free, positioning itself against competition from DeepSeek. The move suggests a strategic response to market pressures and competition dynamics.

- There is skepticism about the o3 model's free release, with users suggesting potential limitations or hidden costs, such as data usage for training. MobileDifficulty3434 points out that the o3 mini model will have strict limits, and the timeline for its release was set before the DeepSeek announcement, although some speculate DeepSeek influenced the speed of its rollout.

- The discussion highlights a competitive atmosphere between OpenAI and DeepSeek, with DeepSeek potentially pushing OpenAI to release its model sooner. AthleteHistorical457 humorously notes the disappearance of a $2000/month plan, suggesting DeepSeek's influence on market dynamics.

- There are concerns about the future monetization of AI models, with some predicting the introduction of ads in free models. Ordinary_dude_NOT humorously suggests that the real estate for ads in AI clients could be substantial, while Nice-Yoghurt-1188 mentions the decreasing costs of running models on personal hardware as an alternative.

- OpenAI o3-mini (Score: 154, Comments: 113): The post lacks specific content or user reviews about the OpenAI o3-mini, providing no details or performance metrics for analysis.

- Users expressed mixed performance results for OpenAI o3-mini, noting that while it is faster and follows instructions better than o1-mini, its reasoning capabilities are inconsistent, with some users finding it less reliable than DeepSeek and o1-mini in certain tasks like database queries and code completion.

- The lack of file upload support and attachments in o3-mini disappointed several users, with some expressing a preference to wait for the full o3 version, indicating a need for improved functionality beyond text-based interactions.

- The API pricing and increased message limits for Plus and Team users were generally well-received, though some users questioned the value of Pro subscriptions, given the availability of DeepSeek R1 for free and the performance of o3-mini.

Theme 2. OpenAI's $40Bn Ambition Amid DeepSeek's Challenge

- OpenAI is in talks to raise nearly $40bn (Score: 171, Comments: 80): OpenAI is reportedly in discussions to raise approximately $40 billion in funding, although further details about the potential investors or specific terms of the deal were not provided.

- DeepSeek Competition: Many commenters express skepticism about OpenAI's future profitability and competitive edge, especially with the emergence of DeepSeek, which is now available on Azure. Concerns include the ability to reverse engineer processes and the impact on investor confidence.

- Funding and Investment Concerns: There's speculation about SoftBank potentially leading a funding round valuing OpenAI at $340 billion, but doubts remain about the company's business model and its ability to deliver on promises of replacing employees with AI.

- Moat and Open Source Discussion: Commenters debate OpenAI's lack of a competitive "moat" and how open source and open weights contribute to this challenge. The notion that LLMs are becoming commoditized adds to the concern about OpenAI's long-term sustainability and uniqueness.

- Microsoft makes OpenAI’s o1 reasoning model free for all Copilot users (Score: 103, Comments: 41): Microsoft is releasing OpenAI's o1 reasoning model for free to all Copilot users, enhancing accessibility to advanced AI reasoning capabilities. This move signifies a significant step in democratizing AI tools for a broader audience.

- Users discuss the limitations and effectiveness of different models, with some noting that o1 mini is better than DeepSeek and 4o for complex coding tasks. cobbleplox mentions company data protection as a reason for using certain models despite their lower performance compared to others like 4o.

- There is skepticism about Microsoft's strategy of offering OpenAI's o1 reasoning model for free, with concerns about generating ROI and comparisons to AOL's historical free trial strategy to attract users. Suspect4pe and dontpushbutpull express doubts about the sustainability of giving out AI tools for free.

- Discussions touch on Copilot's different versions, with questions about the availability of o1 reasoning model in business or Copilot 365 versions, highlighting interest in how this move impacts various user segments.

Theme 3. DeepSeek vs. OpenAI: A Growing Rivalry

- DeepSeek breaks the 4th wall: "Fuck! I used 'wait' in my inner monologue. I need to apologize. I'm so sorry, user! I messed up." (Score: 154, Comments: 63): DeepSeek is an AI system that has demonstrated an unusual behavior by breaking the "4th wall," a term often used to describe when a character acknowledges their fictional nature. This instance involved DeepSeek expressing regret for using the term "wait" in its internal thought process, apologizing to the user for the perceived mistake.

- Discussions around DeepSeek's inner monologue highlight skepticism about its authenticity, with users like detrusormuscle and fishintheboat noting that it's a UI feature mimicking human thought rather than true reasoning. Gwern argues that manipulating the monologue degrades its effectiveness, while audioen suggests the model self-evaluates and refines its reasoning, indicating potential for future AGI development.

- The concept of consciousness in AI generated debate, with bilgilovelace asserting we're far from AI consciousness, while others like Nice_Visit4454 and CrypticallyKind explore varied definitions and suggest AI might have a form of consciousness or sentience. SgathTriallair argues that the AI's ability to reflect on its monologue could qualify as sentience.

- Censorship and role-play elements in DeepSeek were critiqued, with LexTalyones and SirGunther discussing the predictability of such behaviors and their role as a form of entertainment rather than meaningful AI development. Hightower_March points out that phrases like "apologizing" are likely scripted role-play rather than genuine fourth-wall breaking.

- [D] DeepSeek? Schmidhuber did it first. (Score: 182, Comments: 47): Schmidhuber claims to have pioneered AI innovations before others, suggesting that concepts like DeepSeek were initially developed by him. This assertion highlights ongoing debates about the attribution of AI advancements.

- Schmidhuber's Claims and Criticism: Many commenters express skepticism and fatigue over Schmidhuber's repeated claims of pioneering AI innovations, with some suggesting his assertions are more about seeking attention than factual accuracy. CyberArchimedes notes that while the AI field often misassigns credit, Schmidhuber may deserve more recognition than he receives, despite his contentious behavior.

- Humor and Memes: The discussion often veers into humor, with commenters joking about Schmidhuber's self-promotion becoming a meme. -gh0stRush- humorously suggests creating an LLM with a "Schmidhuber" token, while DrHaz0r quips that "Attention is all he needs," playing on AI terminologies.

- Historical Context and Misattributions: BeautyInUgly highlights the historical context by mentioning Seppo Linnainmaa's invention of backpropagation in 1970, contrasting it with Schmidhuber's claims. purified_piranha shares a personal anecdote about Schmidhuber's confrontational behavior at NeurIPS, further emphasizing the contentious nature of his legacy.

Theme 4. AI Self-Improvement: Google's Ambitious Push

- Google is now hiring engineers to enable AI to recursively self-improve (Score: 125, Comments: 53): Google is seeking engineers for DeepMind to focus on enabling AI to recursively self-improve, as indicated by a job opportunity announcement. The accompanying image highlights a futuristic theme with robotic hands and complex designs, emphasizing the collaboration and technological advancement in AI research.

- AI Safety Concerns: Commenters express skepticism about Google's initiative, with some humorously suggesting a potential for a "rogue harmful AI" and referencing AI safety researchers' warnings against self-improving AI systems. Betaglutamate2 sarcastically remarks about serving "robot overlords," highlighting the apprehension surrounding AI's unchecked advancement.

- Job Displacement and Automation: StevenSamAI argues against artificially preserving jobs that could be automated, likening it to banning email to save postal jobs. StayTuned2k sarcastically comments on the inevitability of unemployment due to AI advancements, with DrHot216 expressing a paradoxical anticipation for such a future.

- Misinterpretation of AI Goals: sillygoofygooose and iia discuss the potential misinterpretation of Google's AI research goals, suggesting it may focus on "automated AI research" rather than achieving a singularity. They emphasize that the initiative might involve agent-type systems rather than the self-improving AI suggested in the post.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. US Secrecy Blocking AI Progress: Dr. Manning's Insights

- 'we're in this bizarre world where the best way to learn about llms... is to read papers by chinese companies. i do not think this is a good state of the world' - us labs keeping their architectures and algorithms secret is ultimately hurting ai development in the us.' - Dr Chris Manning (Score: 1385, Comments: 326): Dr. Chris Manning criticizes the US for its secrecy in AI research, arguing that it stifles domestic AI development. He highlights the irony that the most informative resources on large language models (LLMs) often come from Chinese companies, suggesting that this lack of transparency is detrimental to the US's progress in AI.

- Many users express frustration with the US's current approach to AI research, highlighting issues such as secrecy, underinvestment, and corporate greed. They argue that these factors are hindering innovation and allowing countries like China to surpass the US in scientific advancements, as evidenced by China's higher number of PhDs and prolific research output.

- The discussion criticizes OpenAI for not maintaining transparency with their research, especially with GPT-4, compared to earlier practices. This lack of openness is seen as detrimental to the wider AI community, contrasting with the more open sharing of resources by Chinese researchers, as exemplified by the blog kexue.fm.

- There is a strong sentiment against anti-China rhetoric and a call to recognize the talent and contributions of Chinese researchers. Users argue that the US should focus on improving its own systems rather than vilifying other nations, and acknowledge that cultural and political biases may be obstructing the adoption and appreciation of AI advancements from outside the US.

- It’s time to lead guys (Score: 767, Comments: 274): US labs face criticism for lagging behind in AI openness, as highlighted by an article from The China Academy featuring DeepSeek founder Liang Wenfeng. Wenfeng asserts that their innovation, DeepSeek-R1, is significantly impacting Silicon Valley, signaling a shift from following to leading in AI advancements.

- Discussions highlight the geopolitical implications of AI advancements, with some users expressing skepticism about DeepSeek's capabilities and intentions, while others praise its open-source commitment and energy efficiency. DeepSeek's openness is seen as a major advantage, allowing smaller institutions to benefit from its technology.

- Comments reflect political tensions and differing views on US vs. China in AI leadership, with some attributing US tech stagnation to prioritizing short-term gains over long-term innovation. The conversation includes references to Trump and Biden's differing approaches to China, and the broader impact of international competition on US tech firms.

- There is a focus on DeepSeek's technical achievements, such as its ability to compete with closed-source models and its claimed 10x efficiency gains over competitors. Users discuss the significance of its MIT license for commercial use, contrasting it with OpenAI's more restrictive practices.

Theme 2. Debate Over DeepSeek's Open-Source Model and Chinese Origins

- If you can't afford to run R1 locally, then being patient is your best action. (Score: 404, Comments: 70): The post emphasizes the rapid advancement of AI models, noting that smaller models that can run on consumer hardware are surpassing older, larger models in just 20 months. The author suggests patience in adopting new technology, as advancements similar to those seen with Llama 1, released in February 2023, are expected to continue, leading to more efficient models surpassing current ones like R1.

- Hardware Requirements: Users discuss the feasibility of running advanced AI models on consumer hardware, with suggestions ranging from buying a Mac Mini to considering laptops with 128GB RAM. There's a consensus that while smaller models are becoming more accessible, running larger models like 70B or 405B parameters locally remains a challenge for most due to high resource requirements.

- Model Performance and Trends: There's skepticism about the continued rapid advancement of AI models, with some users noting that while smaller models are improving, larger models will also continue to advance. Glebun points out that Llama 70B is not equivalent to GPT-4 class, emphasizing that quantization can reduce model capabilities, affecting performance expectations.

- Current Developments: Piggledy highlights the release of Mistral Small 3 (24B) as a significant development, offering performance comparable to Llama 3.3 70B. Meanwhile, YT_Brian suggests that while high-end models are impressive, many users find distilled versions sufficient for personal use, particularly for creative tasks like story creation and RPGs.

- What the hell do people expect? (Score: 160, Comments: 128): The post critiques the backlash against DeepSeek's R1 model for its censorship, arguing that all models are censored to some extent and that avoiding censorship could have severe consequences for developers, particularly in China. The author compares current criticisms to those faced by AMD's Zen release, suggesting that media reports exaggerate issues similarly, and notes that while the web chat is heavily censored, the model itself (when self-hosted) is less so.

- Censorship and Bias: Commenters discussed the perceived censorship in DeepSeek R1, comparing it to models from the US and Europe which also have censorship but in different forms. Some argue that all major AI models have inherent biases due to their training data, and that the outrage over censorship often ignores similar issues in Western models.

- Technical Clarifications and Misunderstandings: There was a clarification that the DeepSeek R1 model itself is not inherently censored; it is the web interface that imposes restrictions. Additionally, the distinction between the DeepSeek R1 and other models like Qwen 2.5 or Llama3 was highlighted, noting that some models are just fine-tuned versions and not true representations of R1.

- The Role of Open Source and Community Efforts: Some commenters emphasized the importance of open-source AI to combat biases, arguing that community-driven efforts are more effective than corporate ones in addressing and correcting biases. The idea of a fully transparent dataset was suggested as a potential solution to ensure unbiased AI development.

Theme 3. Qwen Chatbot Launch Challenges Existing Models

- QWEN just launched their chatbot website (Score: 503, Comments: 84): Qwen has launched a new chatbot website, accessible at chat.qwenlm.ai, positioning itself as a competitor to ChatGPT. The announcement was highlighted in a Twitter post by Binyuan Hui, featuring a visual contrast between the ChatGPT and QWEN CHAT logos, underscoring QWEN's entry into the chatbot market.

- Discussions highlight the open vs. closed weights debate, with several users expressing preference for Qwen's open models over ChatGPT's closed models. However, some note that Qwen 2.5 Max is not fully open, which limits local use and development of smaller models.

- Users discuss the UI and technical aspects of Qwen Chat, noting its 10000 character limit and the use of OpenWebUI rather than a proprietary interface. Comments also mention that the website was actually released a month ago, with recent updates such as adding a web search function.

- There is a significant conversation around political and economic control, comparing the influence of governments on tech companies in the US and China. Some users express skepticism towards Alibaba's relationship with the CCP, while others criticize both US and Chinese systems for their intertwined government and corporate interests.

- Hey, some of you asked for a multilingual fine-tune of the R1 distills, so here they are! Trained on over 35 languages, this should quite reliably output CoT in your language. As always, the code, weights, and data are all open source. (Score: 245, Comments: 26): Qwen has released a multilingual fine-tune of the R1 distills, trained on over 35 languages, which is expected to reliably produce Chain of Thought (CoT) outputs in various languages. The code, weights, and data for this project are all open source, contributing to advancements in the chatbot market and AI landscape.

- Model Limitations: Qwen's 14B model struggles with understanding prompts in languages other than English and Chinese, often producing random Chain of Thought (CoT) outputs without adhering to the prompt language, as noted by prostospichkin. Peter_Lightblue highlights challenges in training the model for low-resource languages like Cebuano and Yoruba, suggesting the need for translated CoTs to improve outcomes.

- Prompt Engineering: sebastianmicu24 and Peter_Lightblue discuss the necessity of advanced prompt engineering with R1 models, noting that extreme measures can sometimes yield desired results, but ideally, models should require less manipulation. u_3WaD humorously reflects on the ineffectiveness of polite requests, underscoring the need for more robust model training.

- Resources and Development: Peter_Lightblue shares links to various model versions on Hugging Face and mentions ongoing efforts to train an 8B Llama model, facing technical issues with L20 + Llama Factory. This highlights the community's active involvement in improving model accessibility and performance across different languages.

Theme 4. Surge in GPU Prices Triggered by DeepSeek Hosting Rush

- GPU pricing is spiking as people rush to self-host deepseek (Score: 551, Comments: 195): The rush to self-host DeepSeek is driving up the cost of AWS H100 SXM GPUs, with prices spiking significantly in early 2025. The line graph illustrates this trend across different availability zones, reflecting a broader increase in GPU pricing from 2024 to 2025.

- Discussions highlight the feasibility and cost of self-hosting DeepSeek, noting that a full setup requires significant resources, such as 10 H100 GPUs, costing around $300k USD or $20 USD/hour. Users explore alternatives like running quantized models locally on high-spec CPUs, emphasizing the challenge of meeting performance criteria without substantial investment.

- The conversation touches on GPU pricing dynamics, with users expressing frustration over rising costs and limited availability. Comparisons are made to past GPU price patterns, with mentions of 3090s and A6000s, and concerns about tariffs affecting future prices. Some users discuss the potential impact of Nvidia stocks and the ongoing demand for compute resources.

- There's skepticism about the AWS and "self-hosting" terminology, with some users arguing that AWS offers privacy akin to self-hosting, while others question the practicality of using cloud services as a true self-hosted solution. The discussion also covers the broader implications of tariffs and chip production, particularly regarding the Arizona fab and its reliance on Taiwan for chip packaging.

- DeepSeek AI Database Exposed: Over 1 Million Log Lines, Secret Keys Leaked (Score: 182, Comments: 78): DeepSeek AI Database has been compromised, resulting in the exposure of over 1 million log lines and secret keys. This breach could significantly impact the hardware market, especially for those utilizing self-hosted DeepSeek models.

- The breach is widely criticized for its poor implementation and lack of basic security measures, such as SQL injection vulnerabilities and a ClickHouse instance open to the internet without authentication. Commenters express disbelief over such fundamental security oversights in 2025.

- Discussions highlight the importance of local hosting for privacy and security, with users pointing out the risks of storing sensitive data in cloud AI services. The incident reinforces the preference for self-hosted models like DeepSeek to avoid such vulnerabilities.

- The language used in the article is debated, with some suggesting "exposed" rather than "leaked" to describe the vulnerability discovered by Wiz. There's skepticism about the narrative, with some alleging potential bias or propaganda influences.

Theme 5. Mistral Models Advancement and Evaluation Results

- Mistral Small 3 knows the truth (Score: 99, Comments: 12): Mistral Small 3 has been updated to include a feature where it identifies OpenAI as a "FOR-profit company," emphasizing transparency in the AI's responses. The image provided is a code snippet showcasing this capability, formatted with a simple aesthetic for clarity.

- Discussions highlight the criticism of OpenAI for its perceived dishonest marketing rather than its profit motives. Users express disdain for how OpenAI markets itself compared to other companies that offer free resources or transparency.

- Mistral's humor and transparency are appreciated by users, with examples like the Mistral Small 2409 prompt showcasing their light-hearted approach. This contributes to Mistral's popularity among users, who favor its models for their engaging characteristics.

- There is a reference to Mistral's documentation on Hugging Face, indicating availability for users interested in exploring its features further.

- Mistral Small 3 24B GGUF quantization Evaluation results (Score: 99, Comments: 34): The evaluation of Mistral Small 3 24B GGUF models focuses on the impact of low quantization levels on model intelligence, distinguishing between static and dynamic quantization models. The Q6_K-lmstudio model from the lmstudio hf repo, uploaded by bartowski, is static, while others are dynamic from bartowski's repo, with resources available on Hugging Face and evaluated using the Ollama-MMLU-Pro tool.

- Quantization Levels and Performance: There's interest in comparing different quantization levels such as Q6_K, Q4_K_L, and Q8, with users noting peculiarities like Q6_K's high score in the 'law' subset despite being inferior in others. Q8 was not evaluated due to its large size (25.05GB) not fitting in a 24GB card, highlighting technical constraints in testing.

- Testing Variability and Methodology: Discussions point out the variability in testing results, with some users questioning if observed differences are due to noise or random chance. There is also curiosity about the testing methodology, including how often tests were repeated and whether guesses were removed, to ensure data reliability.

- Model Performance Anomalies: Users noted unexpected performance outcomes, such as Q4 models outperforming Q5/Q6 in computer science, suggesting potential issues or interesting attributes in the testing process or model architecture. The imatrix option used in some models from bartowski's second repo may contribute to these results, prompting further investigation into these discrepancies.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking (gemini-2.0-flash-thinking-exp)

Theme 1. OpenAI's o3-mini Model: Reasoning Prowess and User Access

- O3 Mini Debuts, Splits the Crowd: OpenAI Unleashes o3-mini for Reasoning Tasks: OpenAI launched o3-mini, a new reasoning model, available in both ChatGPT and the API, targeting math, coding, and science tasks. While Pro users enjoy unlimited access and Plus & Team users get triple rate limits, free users can sample it via the 'Reason' button, sparking debates on usage quotas and real-world performance compared to older models like o1-mini.

- Mini Model, Maxi Reasoning?: O3-Mini Claims 56% Reasoning Boost, Challenges o1: O3-mini is touted for superior reasoning, boasting a 56% boost over its predecessor in expert tests and 39% fewer major errors on complex problems. Despite the hype, early user reports in channels like Latent Space and Cursor IDE reveal mixed reactions, with some finding o3-mini underperforming compared to models like Sonnet 3.6 in coding tasks, raising questions about its real-world effectiveness and prompting a 63% price cut for the older o1-mini.

- BYOK Brigade Gets First Dibs on O3: OpenRouter Restricts o3-mini to Key-Holders at Tier 3+: Access to o3-mini on OpenRouter is initially restricted to BYOK (Bring Your Own Key) users at tier 3 or higher, causing some frustration among the wider community. This move emphasizes the model's premium positioning and sparks discussions about the accessibility of advanced AI models for developers on different usage tiers, with free users directed to ChatGPT's 'Reason' button to sample the model.

Theme 2. DeepSeek R1: Performance, Leaks, and Hardware Demands

- DeepSeek R1's 1.58-Bit Diet: Unsloth Squeezes DeepSeek R1 into 1.58 Bits: DeepSeek R1 is now running in a highly compressed 1.58-bit dynamic quantized form, thanks to Unsloth AI, opening doors for local inference even on minimal hardware. Community tests highlight its efficiency, though resource-intensive nature is noted, showcasing Unsloth's push for accessible large-scale local inference.

- DeepSeek Database Dumps Secrets: Cybersecurity News Sounds Alarm on DeepSeek Leak: A DeepSeek database leak exposed secret keys, logs, and chat history, raising serious data safety concerns despite its performance against models like O1 and R1. This breach triggers urgent discussions about data security in AI and the risks of unauthorized access, potentially impacting user trust and adoption.

- Cerebras Claims 57x Speed Boost for DeepSeek R1: VentureBeat Crowns Cerebras Fastest Host for DeepSeek R1: Cerebras claims its wafer-scale system runs DeepSeek R1-70B up to 57x faster than Nvidia GPUs, challenging Nvidia's dominance in AI hardware. This announcement fuels debates about alternative high-performance AI hosting solutions and their implications for the GPU market, particularly in channels like OpenRouter and GPU MODE.

Theme 3. Aider and Cursor Embrace New Models for Code Generation

- Aider v0.73.0 Flexes O3 Mini and OpenRouter Muscle: Aider 0.73.0 Release Notes Detail o3-mini and R1 Support: Aider v0.73.0 debuts support for o3-mini and OpenRouter’s free DeepSeek R1, along with a new --reasoning-effort argument. Users praise O3 Mini for functional Rust code at lower cost than O1, while noting that Aider itself wrote 69% of the code for this release, showcasing AI's growing role in software development tools.

- Cursor IDE Pairs DeepSeek R1 with Sonnet 3.6 for Coding Powerhouse: Windsurf Tweet Touts R1 + Sonnet 3.6 Synergy in Cursor: Cursor IDE integrated DeepSeek R1 for reasoning with Sonnet 3.6 for coding, claiming a new record on the aider polyglot benchmark. This pairing aims to boost solution quality and reduce costs compared to O1, setting a new benchmark in coding agent performance, as discussed in Cursor IDE and Aider channels.

- MCP Tools in Cursor: Functional but Feature-Hungry: MCP Servers Library Highlighted in Cursor IDE Discussions: MCP (Model Context Protocol) tools are recognized as functional within Cursor IDE, but users desire stronger interface integration and more groundbreaking features. Discussions in Cursor IDE and MCP channels reveal a community eager for more seamless and powerful MCP tool utilization within coding workflows, referencing examples like HarshJ23's DeepSeek-Claude MCP server.

Theme 4. Local LLM Ecosystem: LM Studio, GPT4All, and Hardware Battles

- LM Studio 0.3.9 Gets Memory-Savvy with Idle TTL: LM Studio 0.3.9 Blog Post Announces Idle TTL and More: LM Studio 0.3.9 introduces Idle TTL for memory management, auto-updates for runtimes, and nested folder support for Hugging Face repos, enhancing local LLM management. Users find the separate reasoning_content field helpful for DeepSeek API compatibility, while Idle TTL is welcomed for efficient memory use, as highlighted in LM Studio channels.

- GPT4All 3.8.0 Distills DeepSeek R1 and Jinja Magic: GPT4All v3.8.0 Release Notes Detail DeepSeek Integration: GPT4All v3.8.0 integrates DeepSeek-R1-Distill, overhauls chat templating with Jinja, and fixes code interpreter and local server issues. Community praises DeepSeek integration and notes improvements in template handling, while also flagging a Mac crash on startup in GPT4All channels, demonstrating active open-source development and rapid community feedback.

- Dual GPU Dreams Meet VRAM Reality in LM Studio: LM Studio's hardware discussions reveal users experimenting with dual GPU setups (NVIDIA RTX 4080 + Intel UHD), discovering that NVIDIA offloads to system RAM once VRAM is full. Enthusiasts managed up to 80k tokens context but pushing limits strains hardware and reduces speed, highlighting practical constraints of current hardware for extreme context lengths.

Theme 5. Critique Fine-Tuning and Chain of Thought Innovations

- Critique Fine-Tuning Claims 4-10% SFT Boost: Critique Fine-Tuning Paper Promises Generalization Gains: Critique Fine-Tuning (CFT) emerges as a promising technique, claiming a 4-10% boost over standard Supervised Fine-Tuning (SFT) by training models to critique noisy outputs. Discussions in Eleuther channels debate the effectiveness of CE-loss and consider rewarding 'winners' directly for improved training outcomes, signaling a shift towards more nuanced training methodologies.

- Non-Token CoT and Backtracking Vectors Reshape Reasoning: Fully Non-token CoT Concept Explored in Eleuther Discussions: A novel fully non-token Chain of Thought (CoT) approach introduces a

- Tülu 3 405B Challenges GPT-4o and DeepSeek v3 in Benchmarks: Ai2 Blog Post Claims Tülu 3 405B Outperforms Rivals: The newly launched Tülu 3 405B model asserts superiority over DeepSeek v3 and GPT-4o in select benchmarks, employing Reinforcement Learning from Verifiable Rewards. However, community scrutiny in Yannick Kilcher channels questions its actual lead over DeepSeek v3, suggesting limited gains despite the advanced RL approach, prompting deeper dives into benchmark methodologies and real-world performance implications.

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

- Cascade Launches DeepSeek R1 and V3: Engineers highlighted DeepSeek-R1 and V3, each costing 0.5 and 0.25 user credits respectively, promising a coding boost.

- They also introduced the new o3-mini model at 1 user credit, with more details in the Windsurf Editor Changelogs.

- DeepSeek R1 Falters Under Pressure: Users reported repeated tool call failures and incomplete file reads with DeepSeek R1, reducing effectiveness in coding tasks.

- Some recommended reverting to older builds, as recent revisions appear to degrade stability.

- O3 Mini Sparks Mixed Reactions: While some praised the O3 Mini for quicker code responses, others felt its tool call handling was too weak.

- One participant compared it to Claude 3.5, citing reduced reliability in multi-step operations.

- Cost vs Output Debate Rages On: Several members questioned the expense of models like DeepSeek, noting that local setups could be cheaper for power users.

- They argued top-tier GPUs are needed for solid on-prem outputs, intensifying discussions about performance versus price.

- Windsurf Marks 6K Community Milestone: Windsurf's Reddit page surpassed 6k followers, reflecting increased engagement among users.

- The dev team celebrated in recent tweets, tying the milestone to fresh announcements.

Unsloth AI (Daniel Han) Discord

- DeepSeek R1’s Daring 1.58-Bit Trick: DeepSeek R1 can now run in a 1.58-bit dynamic quantized form (671B parameters), as described in Unsloth's doc on OpenWebUI integration.

- Community tests on minimal hardware highlight an efficient yet resource-taxing approach, with many praising Unsloth’s method for tacking large-scale local inference.

- Qwen2.5 on Quadro: GPU That Yearns for Retirement: One user tried Qwen2.5-0.5B-instruct on a Quadro P2000 with only 5GB VRAM, joking it might finish by 2026.

- Comments about the GPU screaming for rest spotlight older hardware's limits, but also point to a proof-of-concept pushing beyond typical capacities.

- Double Trouble: XGB Overlap in vLLM & Unsloth: Discussions revealed vLLM and Unsloth both rely on XGB, risking double loading and potential resource overuse.

- Members questioned if patches might fix offloading for gguf under the deepseek v2 architecture, speculating on future compatibility improvements.

- Finetuning Feats & Multi-GPU Waitlist: Unsloth users debated learning rates (e-5 vs e-6) for finetuning large LLMs, citing the official checkpoint guide.

- They also lamented the ongoing lack of multi-GPU support, noting that offloading or extra VRAM might be the only short-term workaround.

aider (Paul Gauthier) Discord

- Aider v0.73.0 Debuts New Features: The official release introduced support for o3-mini using

aider --model o3-mini, a new --reasoning-effort argument, better context window handling, and auto-directory creation as noted in the release history.- Community members reported that Aider wrote 69% of the code in this release and welcomed the R1 free support on OpenRouter with

--model openrouter/deepseek/deepseek-r1:free.

- Community members reported that Aider wrote 69% of the code in this release and welcomed the R1 free support on OpenRouter with

- O3 Mini Upstages the Old Guard: Early adopters praised O3 Mini for producing functional Rust code while costing far less than O1, as shown in TestingCatalog's update.

- Skeptics changed their stance after seeing O3 Mini deliver quick results and demonstrate reliable performance in real coding tasks.

- DeepSeek Stumbles, Users Seek Fixes: Multiple members reported DeepSeek hanging and mishandling whitespace, prompting reflection on performance issues.

- Some considered local model alternatives and searched for ways to keep their code stable when DeepSeek failed.

- Aider Config Gains Community Insight: Contributors reported solving API key detection troubles by setting environment variables instead of relying solely on config files, referencing advanced model settings.

- Others showed interest in commanding Aider from file scripts while staying in chat mode, indicating a desire for more flexible workflow options.

- Linting and Testing Prevail in Aider: Members highlighted the ability to automatically lint and test code in real time using Aider's built-in features, pointing to Rust projects for demonstration.

- This setup reportedly catches mistakes faster and encourages more robust code output from O3 Mini and other integrated models.

Perplexity AI Discord

- O3 Mini Outpacing O1: Members welcomed the O3 Mini release with excitement about its speed, referencing Kevin Lu’s tweet and OpenAI’s announcement.

- Comparisons to O1 and R1 highlighted improved puzzle-solving, while some users voiced frustrations with Perplexity’s model management and the ‘Reason’ button found only in the free tier.

- DeepSeek Leak Exposes Chat Secrets: Security researchers uncovered a DeepSeek Database Leak that revealed Secret keys, Logs, and Chat History.

- Though many considered DeepSeek as an alternative to O1 or R1, the breach raised urgent concerns over data safety and unauthorized access.

- AI Prescription Bill Enters the Clinic: A proposed AI Prescription Bill seeks to enforce ethical standards and accountability for healthcare AI.

- This legislation addresses anxieties around medical AI oversight, reflecting the growing role of advanced systems in patient care.

- Nadella’s Jevons Jolt in AI: Satya Nadella warned that AI innovations could consume more resources instead of scaling back, echoing Jevons Paradox in tech usage.

- His viewpoint sparked discussions about whether breakthroughs like O3 Mini or DeepSeek might prompt a surge in compute demand.

- Sonar Reasoning Stuck in the ‘80s: A user noticed sonar reasoning sourced details from the 1982 Potomac plane crash instead of the recent one.

- This highlights the hazard of outdated references in urgent queries, where the model’s historical accuracy may fail immediate needs.

LM Studio Discord

- LM Studio 0.3.9 Gains Momentum: The new LM Studio 0.3.9 adds Idle TTL, auto-update for runtimes, and nested folders in Hugging Face repos, as shown in the blog.

- Users found the separate reasoning_content field handy for advanced usage, while Idle TTL saves memory by evicting idle models automatically.

- OpenAI's o3-mini Release Puzzles Users: OpenAI rolled out the o3-mini model for math and coding tasks, referenced in this Verge report.

- Confusion followed when some couldn't access it for free, prompting questions about real availability and usage limits.

- DeepSeek Outshines OpenAI in Code: Engineers praised DeepSeek for speed and robust coding, claiming it challenges paid OpenAI offerings in actual projects.

- OpenAI's price reductions were attributed to DeepSeek's progress, provoking chat about local models replacing cloud-based ones.

- Qwen2.5 Proves Extended Context Power: Community tests found Qwen2.5-7B-Instruct-1M handles bigger inputs smoothly, with Flash Attention and K/V cache quantization boosting efficiency.

- It reportedly surpasses older models in memory usage and accuracy, energizing developers working with massive text sets.

- Dual GPU Dreams & Context Overload: Enthusiasts tried pairing NVIDIA RTX 4080 with Intel UHD, but learned that once VRAM is full, NVIDIA offloads to system RAM.

- Some managed up to 80k tokens, yet pushing context lengths too far strained hardware and cut speed significantly.

Cursor IDE Discord

- DeepSeek R1 + Sonnet 3.6 synergy: They integrated R1 for detailed reasoning with Sonnet 3.6 for coding, boosting solution quality. A tweet from Windsurf mentioned open reasoning tokens and synergy with coding agents.

- This pairing set a new record on the aider polyglot benchmark, delivering lower cost than O1 in user tests.

- O3 Mini Gains Mixed Reactions: Some users found O3 Mini helpful for certain tasks, but others felt it lagged behind Sonnet 3.6 in performance. Discussion circled around the need for explicit prompts to run code changes.

- A Reddit thread highlighted disappointment and speculation about updates.

- MCP Tools Spark Debates in Cursor: Many said MCP tools function well but need stronger interface in Cursor, referencing the MCP Servers library.

- One example is HarshJ23/deepseek-claude-MCP-server, fusing R1 reasoning with Claude for desktop usage.

- Claude Model: Hopes for Next Release: Individuals anticipate new releases from Anthropic, hoping an advanced Claude version will boost coding workflows. A blog post teased web search capabilities for Claude, bridging static LLMs with real-time data.

- Community discussions revolve around possible expansions in features or naming, but official word is pending.

- User Experiences and Security Alerts: Certain participants reported success with newly integrated R1-based solutions, yet others faced slow response times and inconsistent results.

- Meanwhile, a JFrog blog raised fresh concerns, and references to BitNet signaled interest in 1-bit LLM frameworks.

OpenRouter (Alex Atallah) Discord

- O3-Mini Arrives with Big Gains: OpenAI launched o3-mini for usage tiers 3 to 5, offering sharper reasoning capabilities and a 56% rating boost over its predecessor in expert tests.

- The model boasts 39% fewer major errors, plus built-in function calling and structured outputs for STEM-savvy developers.

- BYOK or Bust: Key Access Requirements: OpenRouter restricted o3-mini to BYOK users at tier 3 or higher, but this quick start guide helps with setup.

- They also encourage free users to test O3-Mini by tapping the Reason button in ChatGPT.

- Model Wars: O1 vs DeepSeek R1 and GPT-4 Letdown: Commenters debated O1 and DeepSeek R1 performance, with some praising R1’s writing style over GPT-4’s 'underwhelming' results.

- Others noted dissatisfaction with GPT-4, referencing a Reddit thread about model limitations.

- Cerebras Cruising: DeepSeek R1 Outruns Nvidia: According to VentureBeat, Cerebras now runs DeepSeek R1-70B 57x faster than Nvidia GPUs.

- This wafer-scale system contests Nvidia's dominance, providing a high-powered alternative for large-scale AI hosting.

- AGI Arguments: Near or Far-Off Fantasy?: Some insisted AGI may be in sight, recalling earlier presentations that sparked big ambitions in AI potential.

- Others stayed skeptical, arguing the path to real AGI still needs deeper breakthroughs.

Interconnects (Nathan Lambert) Discord

- OpenAI O3-Mini Out in the Open: OpenAI introduced the o3-mini family with improved reasoning and function calling, offering cost advantages over older models, as shared in this tweet.

- Community chatter praised o3-mini-high as the best publicly available reasoning model, referencing Kevin Lu’s post, while some users voiced frustration about the 'LLM gacha' subscription format.

- DeepSeek’s Billion-Dollar Data Center: New information from SemiAnalysis shows DeepSeek invested $1.3B in HPC, countering rumors of simply holding 50,000 H100s.

- Community members compared R1 to o1 in reasoning performance, highlighting interest in chain-of-thought synergy and outsized infrastructure costs.

- Mistral’s Massive Surprise: Despite raising $1.4b, Mistral released a small and a larger model, including a 24B-parameter version, startling observers.

- Chat logs cited MistralAI’s release, praising the smaller model’s efficiency and joking about the true definition of 'small.'

- K2 Chat Climbs the Charts: LLM360 released a 65B model called K2 Chat, claiming a 35% compute reduction over Llama 2 70B, as listed on Hugging Face.

- Introduced on 10/31/24, it supports function calling and uses Infinity-Instruct, prompting more head-to-head benchmarks.

- Altman’s Cosmic Stargate Check: Sam Altman announced the $500 billion Stargate Project, backed by Donald Trump, according to OpenAI’s statement.

- Critics questioned the huge budget, but Altman argued it is essential for scaling superintelligent AI, sparking debate over market dominance.

OpenAI Discord

- O3 Mini's Confounding Quotas: The newly launched O3 Mini sets daily message quotas at 150, yet some references point to 50 per week, as users debate the mismatch.

- Certain voices suspect a bug, with the remark 'There was no official mention of a 50-message cap beforehand' fueling concerns among early adopters.

- AMA Alert: Sam Altman & Co.: An upcoming Reddit AMA at 2PM PST will feature Sam Altman, Mark Chen, and Kevin Weil, spotlighting OpenAI o3-mini and the future of AI.

- Community buzz runs high, with invitations like 'Ask your questions here!' offering direct engagement with these key figures.

- DeepSeek Vaults into Competitive Spotlight: Users endorsed DeepSeek R1 for coding tasks and compared it positively against major providers, citing coverage in the AI arms race.

- They praised the open-source approach for matching big-tech performance, suggesting DeepSeek might spur broader adoption of smaller community-driven models.

- Vision Model Trips on Ground-Lines: Developers found the Vision model stumbles in distinguishing ground from lines, with month-old logs indicating needed refinements.

- One tester likened it to 'needing new glasses' and highlighted hidden training data gaps that could fix these flaws over time.

Latent Space Discord

- O3 Mini Goes Public: OpenAI's O3 Mini launched with function calling and structured outputs for API tiers 3–5, also free in ChatGPT.

- References to new pricing updates emerged, with O3 Mini pitched at the same rate despite a 63% price cut for O1, underscoring intensifying competition.

- Sonnet Outclasses O3 Mini in Code Tests: Multiple reports described O3 Mini missing the mark on coding prompts while Sonnet's recent iteration handled tasks with greater agility.

- Users highlighted faster error-spotting in Sonnet, debating whether O3 Mini will catch up through targeted fine-tuning.

- DeepSeek Sparks Price Wars: Amid O3 Mini news, O1 Mini underwent a 63% discount, seemingly prompted by DeepSeek’s rising footprint.

- Enthusiasts noted a continuing ‘USA premium’ in AI, indicating DeepSeek's successful challenge of traditional cost models.

- Open Source AI Tools and Tutoring Plans: Community members touted emerging open source tools like Cline and Roocline, spotlighting potential alternatives to paywalled solutions.

- They also discussed a proposed AI tutoring session drawing on projects like boot_camp.ai, hoping to empower novices with collective knowledge.

- DeepSeek API Draws Frustration: Repeated API key failures and connection woes plagued attempts to adopt DeepSeek for production needs.

- Members weighed fallback strategies, expressing caution over relying on an API reputed for stability issues.

Yannick Kilcher Discord

- OpenAI's O3-mini Gains Ground: OpenAI rolled out o3-mini in ChatGPT and the API, giving Pro users unlimited access, Plus & Team users triple rate limits, and letting free users try it by selecting the Reason button.

- Members reported a slow rollout with some in the EU seeing late activation, referencing Parker Rex's tweet.

- FP4 Paper Preps For Prime Time: The community will examine an FP4 technique that promises better training efficiency by tackling quantization errors with improved QKV handling.

- Attendees plan to brush up on QKV fundamentals in advance, anticipating deeper questions about its real-world effect on large model accuracy.

- Tülu 3 Titan Takes on GPT-4o: Newly launched Tülu 3 405B model claims to surpass both DeepSeek v3 and GPT-4o in select benchmarks, reaffirmed by Ai2’s blog post.

- Some participants questioned its actual lead over DeepSeek v3, pointing to limited gains despite the Reinforcement Learning from Verifiable Rewards approach.

- DeepSeek R1 Cloned on a Shoestring: A Berkeley AI Research group led by Jiayi Pan replicated DeepSeek R1-Zero’s complex reasoning at 1.5B parameters for under $30, as described in this substack post.

- This accomplishment spurred debate over affordable experimentation, with multiple voices celebrating the push toward democratized AI.

- Qwen 2.5VL Gains a Keen Eye: Switching to Qwen 2.5VL yielded stronger descriptive proficiency and attention to relevant features, improving pattern recognition in grid transformations.

- Members reported it outperformed Llama in coherence and noticed a sharpened focus on maintaining original data during transformations.

Nous Research AI Discord

- Psyche Project Powers Decentralized Training: Within #general, the Psyche project aims to coordinate untrusted compute from idle hardware worldwide for decentralized training, referencing this paper on distributed LLM training.

- Members debated using blockchain for verification vs. a simpler server-based approach, with some citing Teknium's post about Psyche as a promising direction.

- Crypto Conundrum Confounds Nous: Some in #general questioned whether crypto ties might attract scams, while others argued that established blockchain tech may profit distributed training.

- Participants compared unethical crypto boilers to shady behaviors in public equity, concluding that a cautious but open stance on blockchain is appropriate.

- o3-Mini vs. Sonnet: Surprise Showdown: In #general, devs acknowledged o3-mini’s strong performance on complicated tasks, citing Cursor’s tweet.

- They praised its faster streaming and fewer compile errors than Sonnet, yet some remain loyal to older R1 models for their operational clarity.

- Autoregressive Adventures with CLIP: In #ask-about-llms, a user asked if autoregressive generation on CLIP embeddings is doable, noting that CLIP typically guides Stable Diffusion.

- The conversation proposed direct generation from CLIP’s latent space, though participants observed little documented exploration beyond multimodal tasks.

- DeepSeek Disrupts Hiring Dogma: In a 2023 interview, Liang Wenfeng claimed experience is irrelevant, referencing this article.

- He vouched for creativity over résumés, yet conceded that hires from big AI players can help short-term objectives.

Stackblitz (Bolt.new) Discord

- No Notable AI or Funding Announcements #1: No major new AI developments or funding announcements appear in the provided logs.

- All mentioned details revolve solely around routine debugging and configuration for Supabase, Bolt, and CORS.

- No Notable AI or Funding Announcements #2: The conversation focuses on mundane troubleshooting with token management, authentication, and project deletion concerns.

- No references to new models, data releases, or advanced research beyond standard usage guidance.

MCP (Glama) Discord

- MCP Setup Gains Speed: Members tackled local vs remote MCP servers, citing the mcp-cli tool to handle confusion.

- They emphasized authentication as crucial for remote deployments and urged more user-friendly documentation.

- Transport Protocol Face-Off: Some praised stdio for simplicity, but flagged standard configurations for lacking encryption.

- They weighed SSE vs HTTP POST for performance and recommended exploring alternate transports for stronger security.

- Toolbase Auth Shines in YouTube Demo: A developer showcased Notion, Slack, and GitHub authentication in Toolbase for Claude in a YouTube demo.

- Viewers suggested adjusting YouTube playback or using ffmpeg commands to refine the viewing experience.

- Journaling MCP Server Saves Chats: A member introduced a MCP server for journaling chats with Claude, shared at GitHub - mtct/journaling_mcp.

- They plan to add a local LLM for improved privacy and on-device conversation archiving.

Stability.ai (Stable Diffusion) Discord

- 50 Series GPUs vanish in a flash: The newly launched 50 Series GPUs disappeared from shelves within minutes, with only a few thousand reportedly shipped across North America.

- One user nearly purchased a 5090 but lost it when the store crashed, as shown in this screenshot.

- Performance Ponderings: 5090 vs 3060: Members compared the 5090 against older cards like the 3060, with emphasis on gaming benchmarks and VR potential.

- Several expressed disappointment over minimal stocks, while still weighing if the newer line truly outstrips mid-tier GPUs.

- Phones wrestle with AI: A debate broke out on running Flux on Android, with one user calculating a 22.3-minute turnaround for results.

- Some praised phone usage for smaller tasks, while others highlighted hardware constraints that slow AI workloads.

- AI Platforms & Tools on the rise: Members discussed Webui Forge for local AI image generation, suggesting specialized models to optimize output.

- They stressed matching the correct model to each platform for the best Stable Diffusion performance.

- Stable Diffusion UI Shakeup: Users wondered if Stable Diffusion 3.5 forces a switch to ComfyUI, missing older layouts.

- They acknowledged the desire for UI consistency but welcomed incremental improvements despite the learning curve.

Eleuther Discord

- Critique Fine-Tuning: A Notch Above SFT: Critique Fine-Tuning (CFT) claims a 4–10% boost over standard Supervised Fine-Tuning (SFT) by training models to critique noisy outputs, showing stronger results across multiple benchmarks.

- The community debated if CE-loss metrics suffice, with suggestions to reward 'winners' directly for better outcomes.

- Fully Non-token CoT Meets

: A new fully non-token Chain of Thought approach introduces a - Contributors see potential in direct behavioral probing, noting how raw latents might reveal internal reasoning structures.

- Backtracking Vectors: Reverse for Better Reasoning: Researchers highlighted a 'backtracking vector' that alters the chain of thought structure, mentioned in Chris Barber's tweet.

- They employed sparse autoencoders to show how toggling this vector impacts reasoning steps, proposing future editing of these vectors for broader tasks.

- gsm8k Benchmark Bafflement: Members reported a mismatch in gsm8k accuracy (0.0334 vs 0.1251), with the

gsm8k_cot_llama.yamldeviating from results noted in the Llama 2 paper.- They suspect the difference arises from harness settings, advising manual max_new_length adjustments to match Llama 2’s reported metrics.

- Random Order AR Models Spark Curiosity: Participants investigated random order autoregressive models, acknowledging they might be impractical but can reveal structural aspects of training.

- They observed that over-parameterized networks in small datasets may capture patterns, although real-world usage remains debatable.

GPU MODE Discord

- Deep Seek HPC Dilemmas & Doubts: Tech enthusiasts challenged Deep Seek’s claim of using 50k H100s for HPC, pointing to SemiAnalysis expansions that question official statements.

- Some worried about whether Nvidia’s stock could be influenced by these claims, with community members doubting the true cost behind Deep Seek’s breakthroughs.

- GPU Servers vs. Laptops Showdown: A software architect weighed buying one GPU server versus four GPU laptops for HPC development, referencing The Best GPUs for Deep Learning guide.

- Others highlighted the future-proof factor of a centralized server, but also flagged the upfront cost differences for HPC scaling.

- RTX 5090 & FP4 Confusion: Users reported FP4 on the RTX 5090 only runs ~2x faster than FP8 on the 4090, contradicting the 5x claim in official materials.

- Skeptics blamed unclear documentation and pointed to possible memory overhead, with calls for better HPC benchmarks.

- Reasoning Gym Gains New Datasets: Contributors pitched datasets for Collaborative Problem-Solving and Ethical Reasoning, referencing NousResearch/Open-Reasoning-Tasks and other GitHub projects to expand HPC simulation.

- They also debated adding Z3Py to handle constraints, with maintainers suggesting pull requests for HPC-friendly modules.

- NVIDIA GTC 40% Off Bonanza: Nvidia announced a 40% discount on GTC registration using code GPUMODE, presenting an opportunity to attend HPC-focused sessions.

- This event remains a prime spot for GPU pros to swap insights and boost HPC skill sets.

Nomic.ai (GPT4All) Discord

- GPT4All 3.8.0 Rolls Out with DeepSeek Perks: Nomic AI released GPT4All v3.8.0 with DeepSeek-R1-Distill fully integrated, introducing better performance and resolving previous loading issues for the DeepSeek-R1 Qwen pretokenizer. The update also features a completely overhauled chat template parser that broadens compatibility across various models.

- Contributors from the main repo highlighted significant fixes for the code interpreter and local server, crediting Jared Van Bortel, Adam Treat, and ThiloteE. They confirmed that system messages now remain hidden from message logs, preventing UI clutter.

- Quantization Quirks Spark Curiosity: Community members discussed differences between K-quants and i-quants, referencing a Reddit overview. They concluded that each method suits specific hardware needs and recommended targeted usage for best results.

- A user also flagged a Mac crash on startup in GPT4All 3.8.0 via GitHub Issue #3448, possibly tied to changes from Qt 6.5.1 to 6.8.1. Others suggested rolling back or awaiting an official fix, noting active development on the platform.

- Voice Analysis Sidelined in GPT4All For Now: One user asked about analyzing voice similarities, but it was confirmed that GPT4All lacks voice model support. Community members recommended external tools for advanced voice similarity tasks.

- Some participants hoped for future support, while others felt specialized third-party libraries remain the best near-term option. No direct mention was made of upcoming voice capabilities in GPT4All.

- Jinja Tricks Expand Template Power: Discussions around GPT4All templates showcased new namespaces and list slicing, referencing Jinja’s official documentation. This change aims to reduce parser conflicts and streamline user experience for complex templating.

- Developers pointed to minja.hpp at google/minja for a smaller Jinja integration approach, alongside updated GPT4All Docs. They noted increased stability in GPT4All v3.8, crediting the open-source community for swift merges.

Notebook LM Discord Discord

- NotebookLM Nudges: $75 Incentive & Remote Chat: On February 6th, 2025, NotebookLM UXR invited participants to a remote usability study, offering $75 or a Google merchandise voucher to gather direct user feedback.

- Participants must pass a screener form, maintain a high-speed internet connection, and share their insights in online sessions to guide upcoming product updates.

- Short & Sweet: Limiting Podcasts to One Minute: Community members tossed around the idea of compressing podcasts into one-minute segments, but admitted it's hard to enforce strictly.

- Some suggested trimming the text input as a workaround, prompting a debate on the practicality of shorter content for detailed topics.

- Narration Nation: Users Crave AI Voiceovers: Several participants sought an AI-driven narration feature that precisely reads scripts for more realistic single-host presentations.

- Others cautioned that it might clash with NotebookLM’s broader platform goals, but enthusiasm for text-to-audio expansions remained high.

- Workspace Woes: NotebookLM Plus Integration Confusion: A user upgraded to a standard Google Workspace plan but failed to access NotebookLM Plus, assuming an extra add-on license wasn't needed.

- Community responses pointed to a troubleshooting checklist, reflecting unclear instructions around NotebookLM’s onboarding process.

Torchtune Discord

- BF16 Balms GRPO Blues: Members spotted out of memory (OOM) errors when using GRPO, blaming mismatched memory management in fp32 and citing the Torchtune repo for reference. Switching to bf16 resolved some issues, showcasing notable improvements in resource usage and synergy with vLLM for inference.

- They employed the profiler from the current PPO recipe to visualize memory demands, with one participant emphasizing “bf16 is a safer bet than full-blown fp32” for large tasks. They also discussed parallelizing inference in GRPO, but faced complications outside the Hugging Face ecosystem.

- Gradient Accumulation Glitch Alarms Devs: A known issue emerged around Gradient Accumulation that disrupts training for DPO and PPO models, causing incomplete loss tracking. References to Unsloth’s fix suggested an approach to mitigate memory flaws during accumulations.

- Some speculated that these accumulation errors affect advanced optimizers, sparking “concerns about consistent results across large batches”. Devs remain vigilant about merging a robust fix, especially for multi-step updates in large-scale training.

- DPO’s Zero-Loss Shock: Anomalies led DPO to rapidly drop to a loss of 0 and accuracy of 100%, documented in a pull request comment. The bizarre behavior appeared within a handful of steps, pointing to oversights in normalization routines.

- Participants debated whether “we should scale the objective differently” to avoid immediate convergence. They concluded that ensuring precise loss normalization for non-padding tokens might restore reliable metrics.

- Multi-Node March in Torchtune: Developers pushed for final approval on multi-node support in Torchtune, aiming to expand distributed training capabilities. This update promises broader usage scenarios for large-scale LLM training and improved performance across HPC environments.

- They questioned the role of offload_ops_to_cpu for multi-threading, leading to additional clarifications before merging. Conversations emphasized “we need all hands on deck to guarantee stable multi-node runs” to ensure reliability.

Modular (Mojo 🔥) Discord

- HPC Hustle on Heterogeneous Hardware: A blog series touted Mojo as a language to address HPC resource challenges, referencing Modular: Democratizing Compute Part 1 to underscore the idea that hardware utilization can reduce GPU costs.

- Community members stressed the importance of backwards compatibility across libraries to maintain user satisfaction and ensure smooth HPC transitions.

- DeepSeek Defies Conventional Compute Assumptions: Members discussed DeepSeek shaking up AI compute demands, suggesting that improved hardware optimization could make massive infrastructure less critical.

- Big Tech was described as scrambling to match DeepSeek’s feats, with some resisting the notion that smaller-scale solutions might suffice.

- Mojo 1.0 Wait Worth the Work: Contributors backed a delay for Mojo 1.0 to benchmark on larger clusters, ensuring broad community confidence beyond mini-tests.

- They praised the focus on stability before versioning, prioritizing performance over a rushed release.

- Swift’s Async Snags Spark Simplification Hopes: Mojo’s designers noted Swift can complicate async code, fueling desires to steer Mojo in a simpler direction.

- Some users illustrated pitfalls in Swift’s approach, influencing the broader push for clarity in Mojo development.

- MAX Makes DeepSeek Deployment Direct: A quick command

magic run serve --huggingface-repo-id deepseek-ai/DeepSeek-R1-Distill-Llama-8B --weight-path=unsloth/DeepSeek-R1-Distill-Llama-8B-GGUF/DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguflets users run DeepSeek with MAX, provided they have Ollama's gguf files ready.- Recent forum guidance and a GitHub issue illustrate how MAX is evolving to improve model integrations like DeepSeek.

LlamaIndex Discord

- Triple Talk Teaser: Arize & Groq Join Forces: Attendees joined a meetup with Arize AI and Groq discussing agents and tracing, anchored by a live demo using Phoenix by Arize.

- The session spotlighted LlamaIndex agent capabilities, from basic RAG to advanced moves, as detailed in the Twitter thread.

- LlamaReport Beta Beckons 2025: A preview of LlamaReport showcased an early beta build, with a core focus on generating reports for 2025.

- A video demonstration displayed its core functionalities and teased upcoming features.

- o3-mini Earns Day 0 Perks: Day 0 support for o3-mini launched, and users can install it via

pip install -U llama-index-llms-openai.- A Twitter announcement showed how to get started quickly, emphasizing a straightforward setup.

- OpenAI O1 Stirs Confusion: OpenAI O1 lacks full capabilities, leaving the community uncertain about streaming features and reliability.

- Members flagged weird streaming issues in the OpenAI forum reference, with some functionalities failing to work as anticipated.

- LlamaReport & Payment Queries Loom: Users struggled with LlamaReport, citing difficulties generating outputs and questions around LLM integration fees.

- Despite some successes in uploading papers for summarization, many pointed to Llama-Parse charges as a possible snag, noting that it could be free under certain conditions.

tinygrad (George Hotz) Discord

- Physical Hosting, Real Gains: One user asked about physical servers for LLM hosting locally for enterprise tasks, referencing Mac Minis from Exolabs as a tested solution.

- They also discussed running large models at scale, prompting a short back-and-forth on hardware approaches for AI workloads.

- Tinygrad’s Kernel Tweak & Title Tease: George Hotz praised a good first PR for tinygrad that refined kernel, buffers, and launch dimensions.

- He suggested removing 16 from

DEFINE_LOCALto avoid duplication, and the group teased a minor PR title typo that got fixed quickly.

- He suggested removing 16 from

Axolotl AI Discord

- Axolotl's Jump to bf16 & 8bit LoRa: Participants confirmed that Axolotl supports bf16 as a stable training precision beyond fp32, with some also noting the potential of 8bit LoRa in Axolotl's repository.

- They found bf16 particularly reliable for extended runs, though 8bit fft capabilities remain unclear, prompting further discussion about training efficiency.