[AINews] o1: OpenAI's new general reasoning models

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Test-time reasoning is all you need.

AI News for 9/11/2024-9/12/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (216 channels, and 4377 messages) for you. Estimated reading time saved (at 200wpm): 416 minutes. You can now tag @smol_ai for AINews discussions!

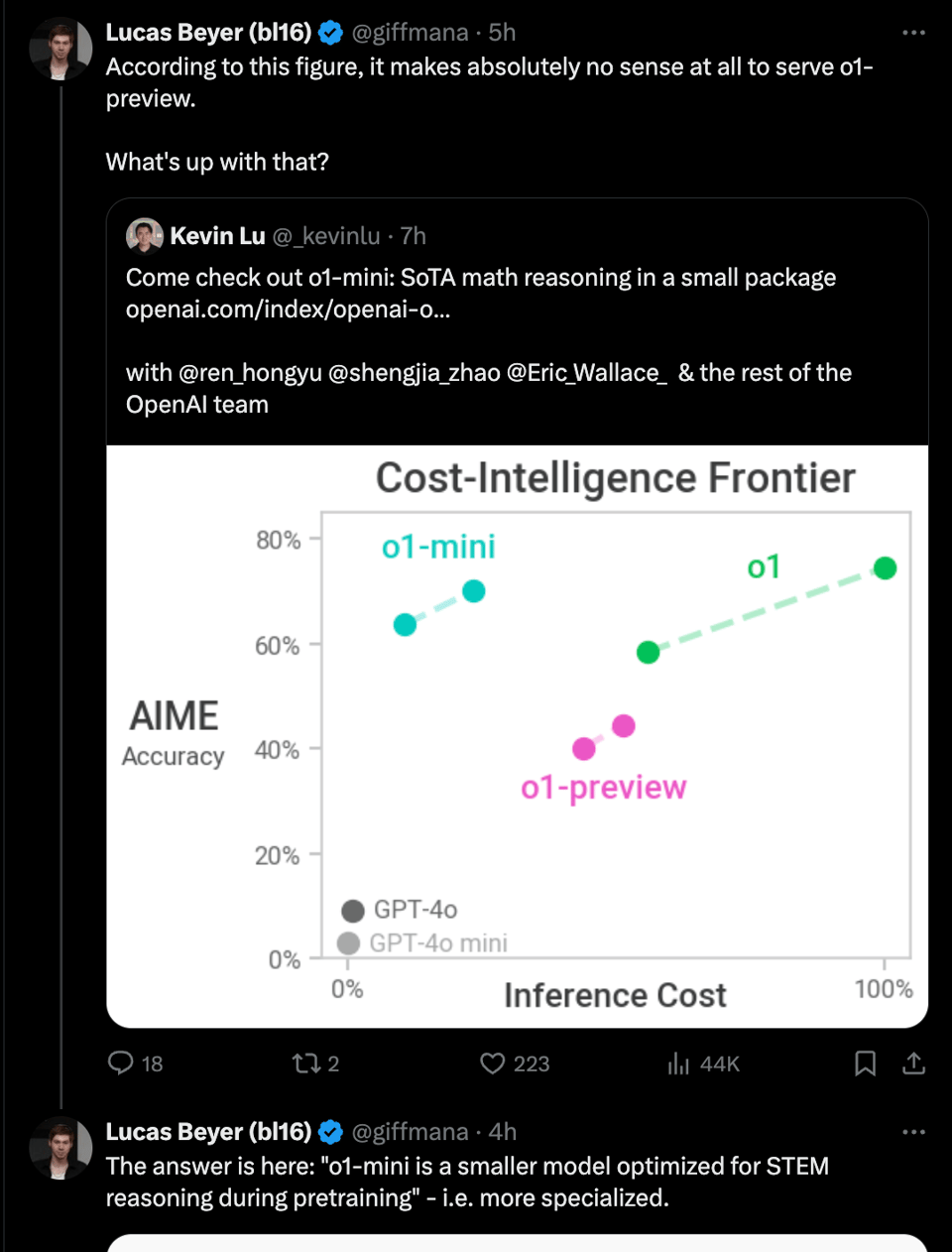

o1, aka Strawberry, aka Q*, is finally out! There are two models we can use today: o1-preview (the bigger one priced at $15 in / $60 out) and o1-mini (the STEM-reasoning focused distillation priced at $3 in/$12 out) - and the main o1 model is still in training. This caused a little bit of confusion.

There are a raft of relevant links, so don’t miss:

- the o1 Hub

- the o1-preview blogpost

- the o1-mini blogpost

- the technical research blogpost

- the o1 system card

- the platform docs

- the o1 team video and contributors list (twitter)

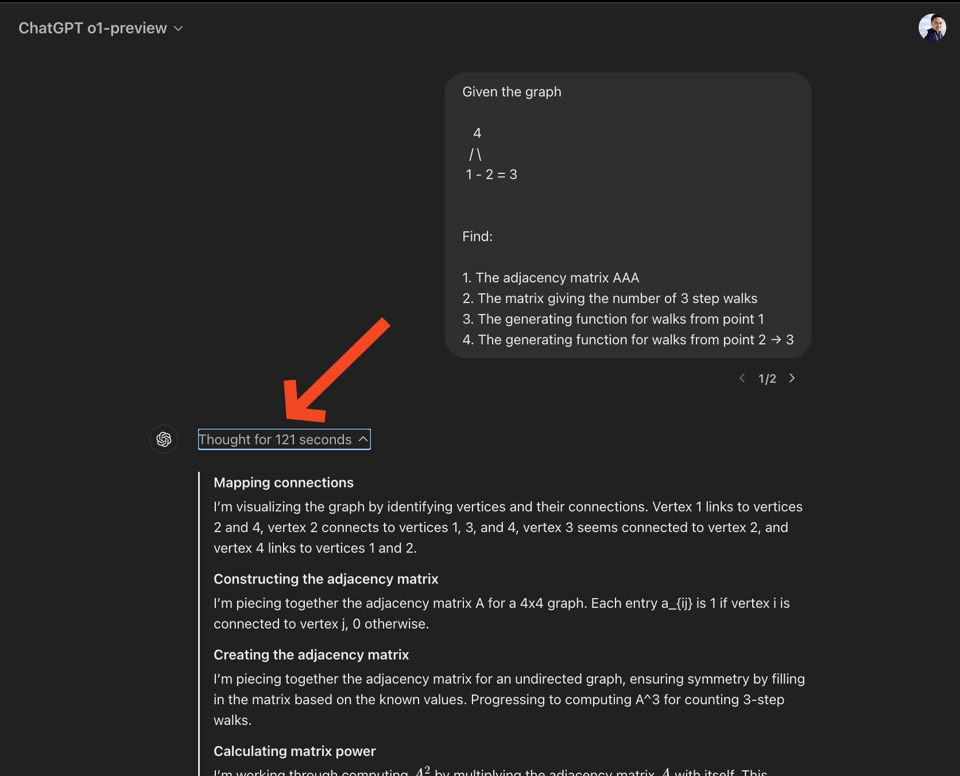

Inline with the many, many leaks leading up to today, the core story is longer “test-time inference” aka longer step by step responses - in the ChatGPT app this shows up as a new “thinking” step that you can click to expand for reasoning traces, even though, controversially, they are hidden from you (interesting conflict of interest…):

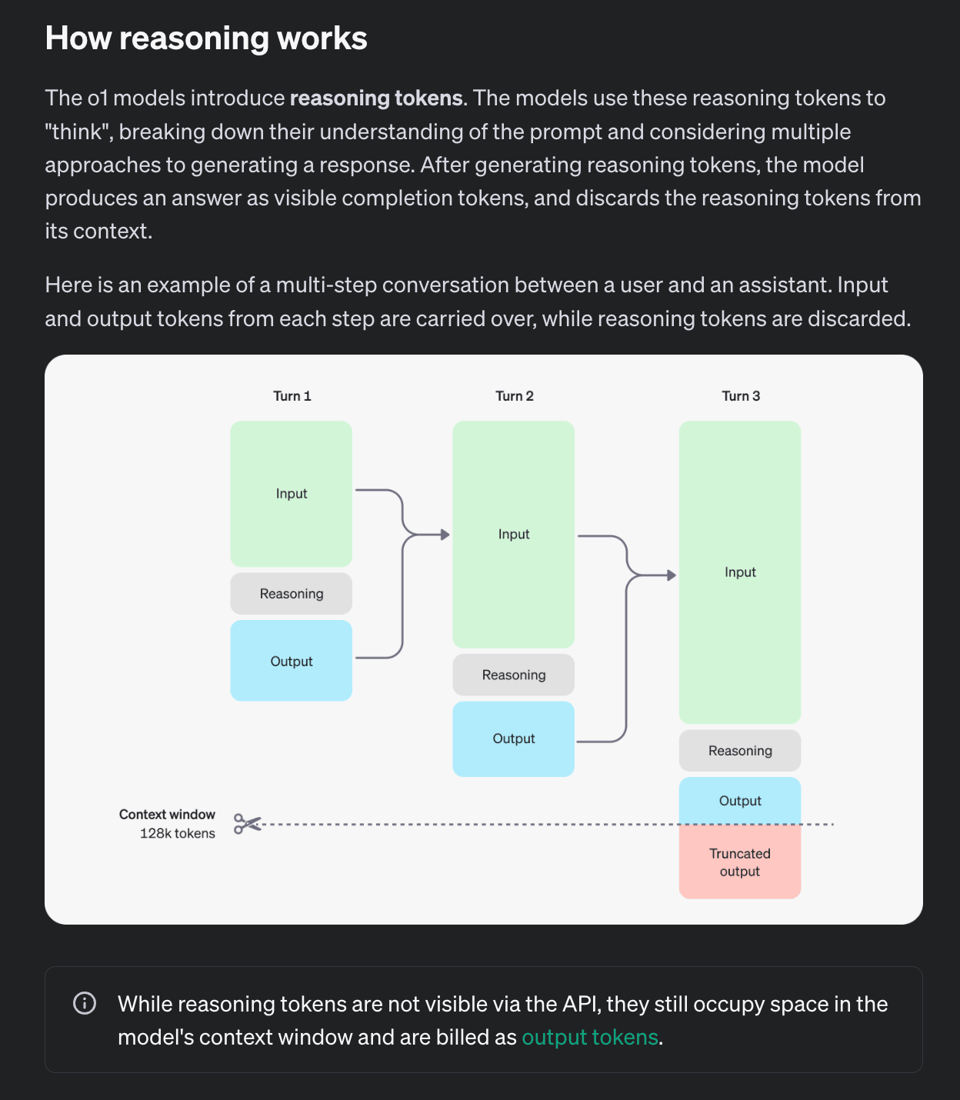

Under the hood, o1 is trained for adding new reasoning tokens - which you pay for, and OpenAI has accordingly extended the output token limit to >30k tokens (incidentally this is also why a number of API parameters from the other models like temperature and role and tool calling and streaming, but especially max_tokens is no longer supported).

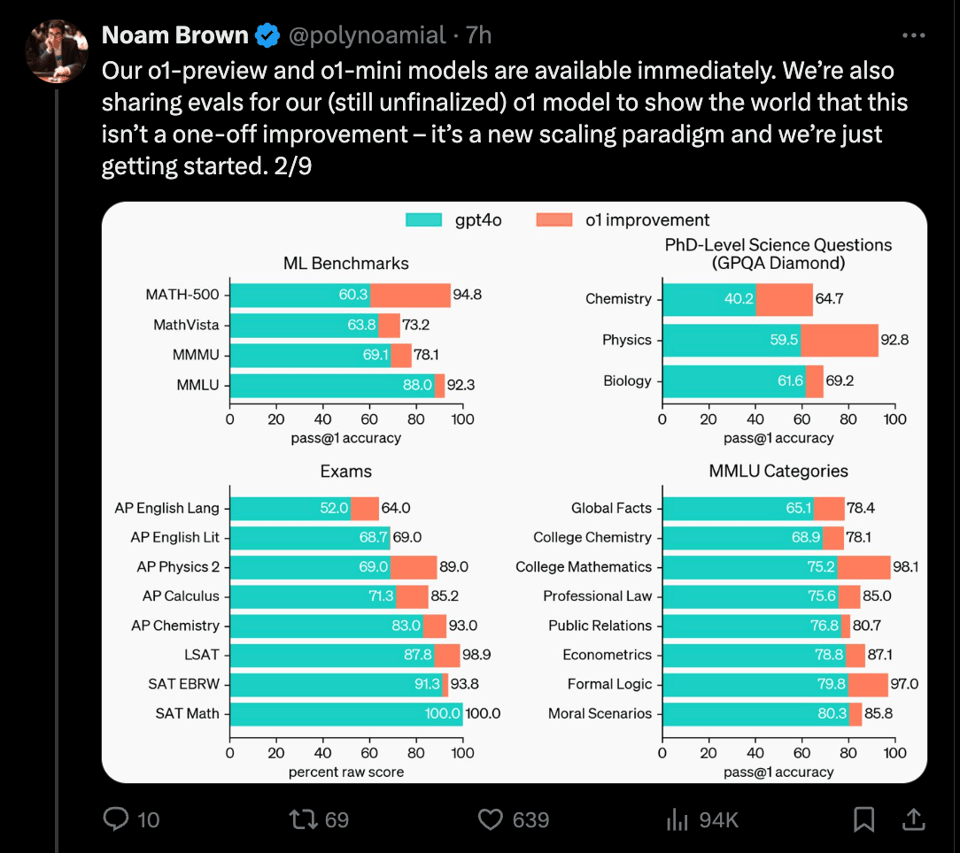

The evals are exceptional. OpenAI o1:

- ranks in the 89th percentile on competitive programming questions (Codeforces),

- places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME),

- and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

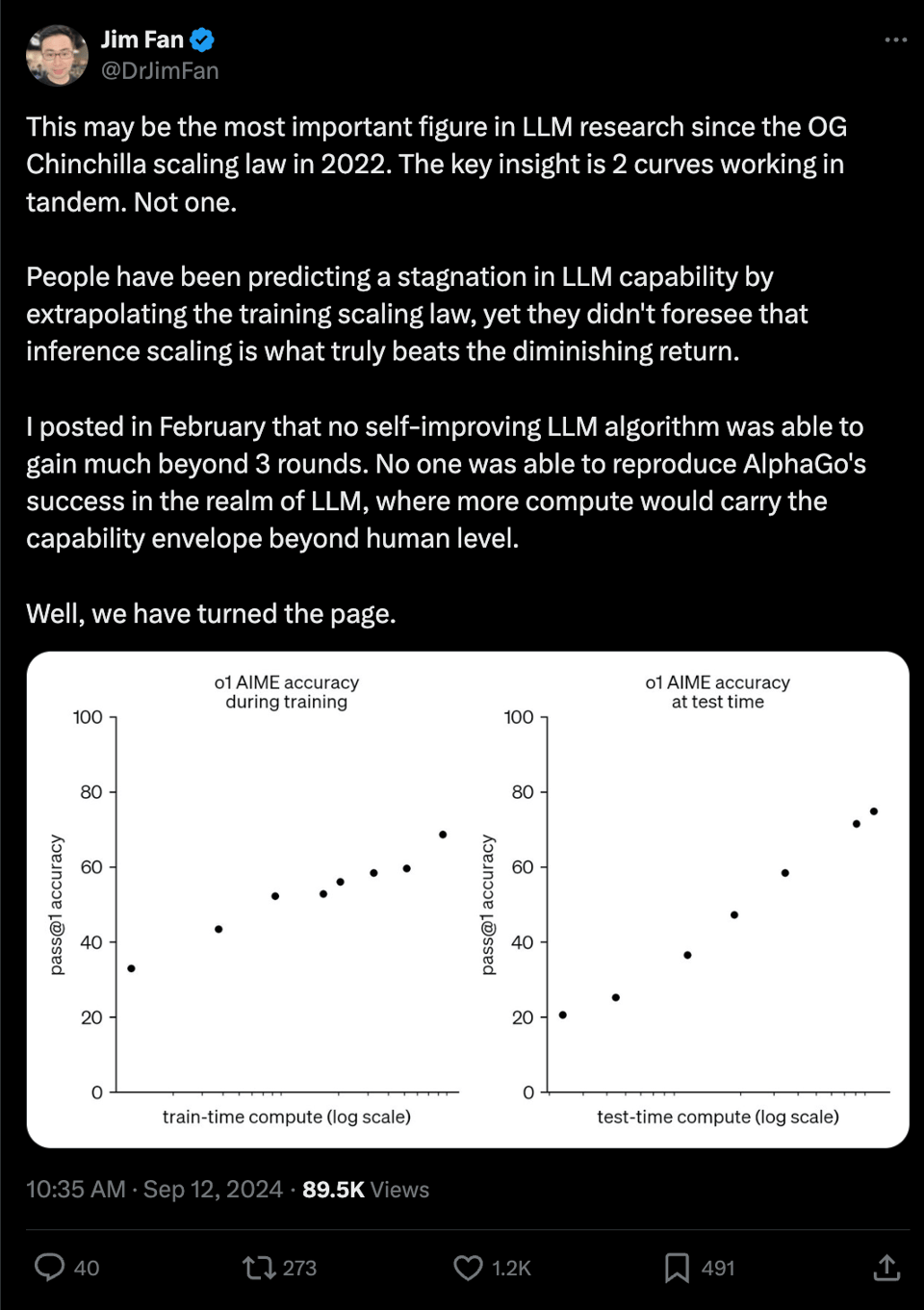

You are used to new models showing flattering charts, but there is one of note that you don’t see in many model announcements, that is probably the most important chart of all. Dr Jim Fan gets it right: we now have scaling laws for test time compute, and it looks like they scale loglinearly.

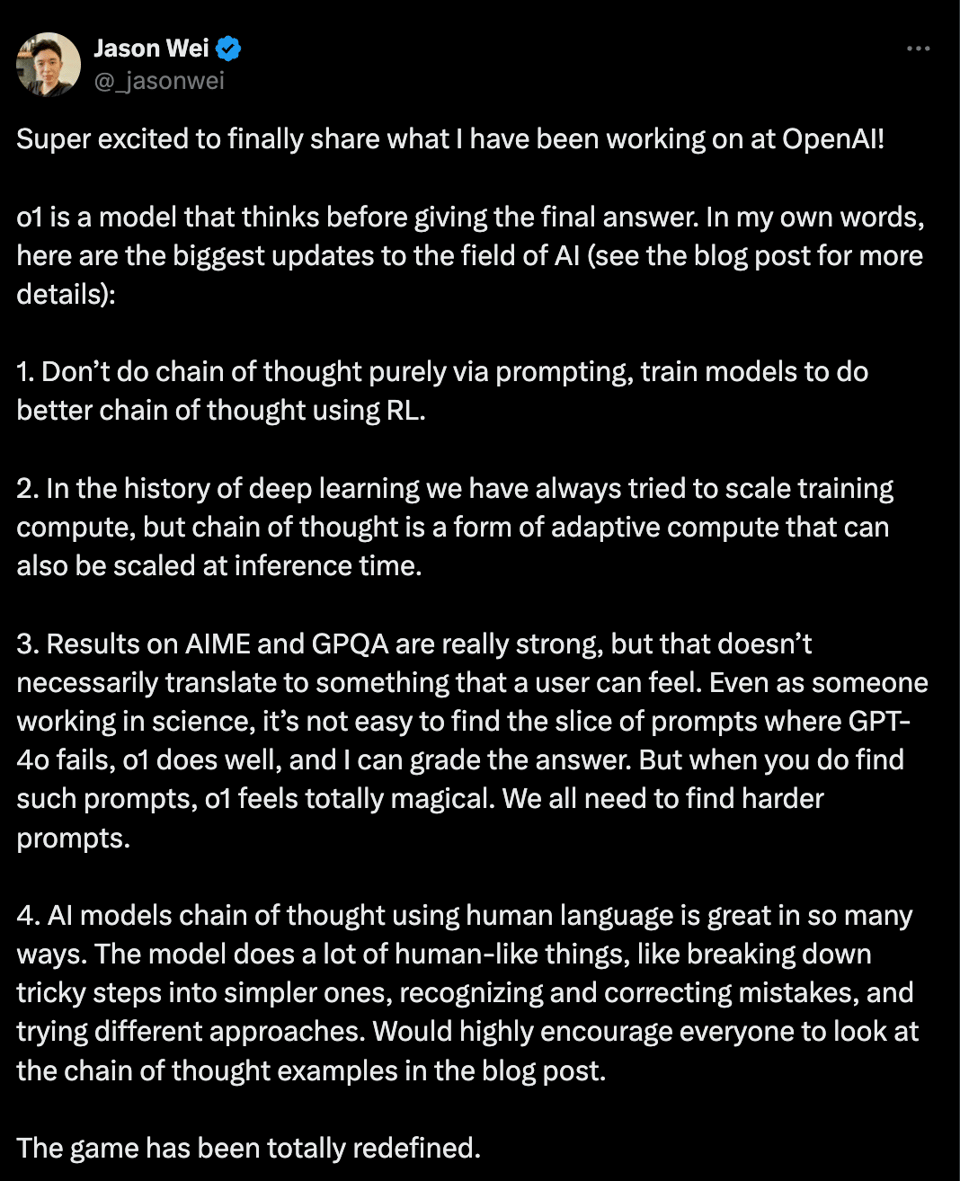

We unfortunately may never know the drivers of the reasoning improvements, but Jason Wei shared some hints:

Usually the big model gets all the accolades, but notably many are calling out the performance of o1-mini for its size (smaller than gpt 4o), so do not miss that.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Nvidia and AI Chip Competition

- Market share shifts: @draecomino noted that Nvidia is starting to lose share to AI chip startups for the first time, as evidenced by discussions at recent AI conferences.

- CUDA vs. Llama: The same user highlighted that while CUDA was crucial in 2012, in 2024 90% of AI developers are web developers building off Llama rather than CUDA.

- Performance comparisons: @AravSrinivas pointed out that Nvidia still has no competition in training, though it may face some in inference. However, B100s with fp4 inference could potentially outperform competitors.

- SambaNova performance: @rohanpaul_ai shared that SambaNova launched the "World's fastest API" with Llama 3.1 405B at 132 tokens/sec and Llama 3.1 70B at 570 tokens/sec.

New AI Models and Releases

- Pixtral 12B: @sophiamyang announced the release of Pixtral 12B, Mistral's first multimodal model. @swyx shared benchmarks showing Pixtral outperforming models like Phi 3, Qwen VL, Claude Haiku, and LLaVA.

- LLaMA-Omni: @osanseviero introduced LLaMA-Omni, a new model for speech interaction based on Llama 3.1 8B Instruct, featuring low-latency speech and simultaneous text and speech generation.

- Reader-LM: @rohanpaul_ai shared details about JinaAI's Reader-LM, a Small Language Model for web data extraction and cleaning that outperformed larger models like GPT-4 and LLaMA-3.1-70B on HTML2Markdown tasks.

- GOT (General OCR Theory): @_philschmid described GOT, a 580M end-to-end OCR-2.0 model that outperforms existing methods in handling complex tasks like sheets, formulas, and geometric shapes.

AI Research and Developments

- Superposition prompting: @rohanpaul_ai shared research on superposition prompting, which accelerates and enhances RAG without fine-tuning, addressing long-context LLM challenges.

- LongWriter: @rohanpaul_ai discussed the LongWriter paper, which introduces a method for generating 10,000+ word outputs from long context LLMs.

- AI in scientific discovery: @rohanpaul_ai highlighted research on using Agentic AI for automatic scientific discovery, revealing hidden interdisciplinary relationships.

- AI safety and risks: @ylecun shared thoughts on AI safety, arguing that human-level AI is still far off and that regulating AI R&D due to existential risk fears is premature.

AI Tools and Applications

- Gamma: @svpino showcased Gamma, an app that can generate functional websites from uploaded resumes in seconds.

- RAG-based document QA: @llama_index introduced Kotaemon, an open-source UI for chatting with documents using RAG-based systems.

- AI Scheduler: @llama_index announced an upcoming workshop on building an AI Scheduler for smart meetings using Zoom, LlamaIndex & Qdrant.

AI Industry and Market Trends

- AI replacing enterprise software: @rohanpaul_ai noted that Klarna replacing Salesforce and Workday with AI-powered in-house software signals a trend of AI eating most SaaS.

- AI valuation: @rohanpaul_ai shared that Anthropic's valuation has reached $18.4 billion, putting it among the top privately held tech companies.

- AI in manufacturing: @weights_biases promoted a session on "Generative AI in Manufacturing: Revolutionizing Tool Development" at AIAI Berlin.

AI Ethics and Societal Impact

- AI detection challenges: @rohanpaul_ai shared research showing that both AI models and humans struggle to differentiate between humans and AI in conversation transcripts.

- Criminal case involving AI: @rohanpaul_ai detailed the first criminal case involving AI-inflated music streaming, where the perpetrator used AI-generated music and fake accounts to fraudulently collect royalties.

Memes and Humor

- @ylecun shared a meme about a first grader upset for getting a bad grade after insisting that 2+2=5.

- @ylecun joked about a Halloween special of The Simpsons where "In Springfield, they're eating their dawgs!"

- @cto_junior shared a humorous image about what strawberry likely is.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Reviving and Improving Classic LLM Architectures

- New release: Solar Pro (preview) Instruct - 22B (Score: 44, Comments: 14): The Solar Pro team has released a preview of their new 22B parameter instruct model, available on Hugging Face. This model is claimed to be the best open model that can run on a single GPU, though the post author notes that such claims are common among model releases.

- Users expressed disappointment with the 4K context window, considering it insufficient in 2024 when 8K is seen as a minimum. The full release in November 2024 will feature longer context windows and expanded language support.

- Discussions compared Solar Pro to other models, with some praising Solar 11B's performance. Users are curious about improvements to Phi 3's usability and personality, as it was seen as a "benchmark sniper" that underperformed in real-world applications.

- The claim of being the "best open model that can run on a single GPU" was noted as more realistic than claiming superiority over larger models like Llama 70B. Some users expressed interest in trying a GGUF version of Solar Pro.

- Chronos-Divergence-33B ~ Unleashing the Potential of a Classic (Score: 72, Comments: 23): Zeus Labs introduced Chronos-Divergence-33B, an improved version of the original LLaMA 1 model with an extended sequence length from 2048 to 16,384 effective tokens. The model, trained on ~500M tokens of new data, can write coherently for up to 12K tokens while maintaining LLaMA 1's charm and avoiding repetitive "GPT-isms", with technical details and quantizations available on its Hugging Face model card.

- Users discussed the model's storytelling capabilities, with the developer confirming it's primarily focused on multiturn RP. Some users reported shorter responses than expected and sought suggestions for improvement.

- The community expressed interest in using older models as bases to avoid "GPT slop" or "AI smell" present in newer models. Users debated the effectiveness of this approach and discussed potential alternatives like InternLM 20B.

- Discussions touched on training techniques to remove biases, with suggestions including gentle DPO/KTO, ORPO, and SFT on pre-training raw text. Some proposed modifying the tokenizer to eliminate specific tokens associated with GPT-isms.

Theme 2. New Open-Source Speech Models Pushing Boundaries

- New Open Text-to-Speech Model: Fish Speech v1.4 (Score: 106, Comments: 13): Fish Speech v1.4, a new open-source text-to-speech model, has been released, trained on 700,000 hours of audio data across multiple languages. The model requires only 4GB of VRAM for inference, making it accessible for various applications, and is available through its official website, GitHub repository, HuggingFace page, and includes an interactive demo.

- Users compared Fish Speech v1.4 to other open-source models, noting that while it's an improvement over previous versions, it's still not on par with XTTSv2 for voice cloning. The model's performance in German was positively received.

- The development team behind Fish Speech v1.4 includes members from SoVITS and RVC projects, which are considered notable in the open-source text-to-speech community. RVC was mentioned as potentially the best current open-source option.

- A user pointed out an unfortunate naming choice for the command-line binary "fap" (fish-audio-preprocessing), suggesting it should be changed to avoid awkward execution commands.

- LLaMA-Omni: Seamless Speech Interaction with Large Language Models (Score: 74, Comments: 30): LLaMA-Omni is a new model that enables seamless speech interaction with large language models. The model, available on Hugging Face, is accompanied by a research paper and open-source code on GitHub, allowing for further exploration and development in the field of speech-enabled AI interactions.

- LLaMA-Omni uses only the voice encoder portion of Whisper to embed audio, not the full transcription model. This approach differs from prior multimodal methods that used Whisper's complete speech-to-text capabilities.

- The model's hardware requirements sparked discussion, with users noting high VRAM needs. The developer mentioned the possibility of using smaller Whisper models for faster inference at the cost of quality.

- Users debated the model's effectiveness, with some viewing it as a proof of concept comparable to existing solutions. Others questioned its voice quality and non-verbal speech capabilities, suggesting it might be a combination of separate ASR, LLM, and TTS models.

Theme 3. Benchmarking and Cost Analysis of LLM Deployments

- Ollama LLM benchmarks on different GPUs on runpod.io (Score: 52, Comments: 16): The author conducted GPU and AI model performance benchmarks using Ollama on runpod.io, focusing on the

eval_ratemetric for various models and GPUs. Key findings include: llama3.1:8b performed similarly on 2x and 4x RTX4090, mistral-nemo:12b was ~30% slower than lama3.1:8b, and command-r:35b was twice as fast as llama3.1:70b, with minimal differences between L40 vs. L40S and A5000 vs. A6000 for smaller models. The author shared a detailed spreadsheet with their findings and welcomed feedback for potential extensions to the benchmark.- Users inquired about VRAM usage and quantization, with the author adding VRAM size ranges to the spreadsheet. A commenter noted running Q8 or fp16 models on Runpod for tasks beyond home capabilities.

- Discussion on context length impact on model speed, with the author detailing their testing process using a standard question ("why is the sky blue?") that typically generated 300-500 token responses across models.

- A user shared experience mixing GPU brands (7900xt and 4090) using kobalcpp with vulkan, achieving 10-14 tokens/second for llama3.1 70b q4KS, noting issues with llama.cpp and vulkan compatibility.

- LLMs already cheaper than traditional ML models? (Score: 65, Comments: 40): The post compares translation costs between GPT-4 models and Google Translate, finding that GPT-4 options are significantly cheaper: GPT-4 costs $20/1M tokens, GPT-4-mini costs $0.75/1M tokens, while Google Translate costs $20/1M characters (equivalent to $60-$100/1M tokens). The author expresses confusion about why anyone would use the more expensive Google Translate, given that GPT-4 models also offer additional benefits like context understanding and customized prompts for potentially better translation results.

- Google Translate uses a hybrid transformer architecture, as detailed in a 2020 Google Research blog post. Users questioned its performance compared to newer LLMs, with some attributing this to its age.

- OpenAI's API pricing sparked debate about profitability. Some argued OpenAI isn't making money from API calls, while others suggested they're likely cash flow positive, citing efficient infrastructure and competitive pricing compared to open-source LLM providers.

- Users highlighted Google Translate's reliability for specific language pairs and less common languages, noting that GPT-4 might struggle with non-English translations or produce unrelated content. Some mentioned Gemini Pro 1.5 as a potential alternative for multi-language translation.

Theme 4. Developers Embrace AI Coding Assistants, Unlike Artists with AI Art

- I want to ask a question that may offend a lot of people: are a significant number of programmers / software engineers bitter about LLMs getting better in coding like a significant numbers of artists are bitter about AI art? (Score: 107, Comments: 275): Software engineers and programmers generally appear less bitter about Large Language Models (LLMs) improving at coding compared to artists' reactions to AI art. The overall mood in the programming industry seems to be one of adaptation and integration, with many viewing LLMs as tools to enhance productivity rather than as threats to their jobs.

- Software engineers generally view LLMs as productivity-enhancing tools rather than threats, using them for tasks like boilerplate creation, understanding new frameworks, and automating tedious work. Many see AI as another step in the abstraction level evolution of programming.

- While LLMs are helpful for coding assistance, they still have limitations such as hallucinating incorrect code and requiring human oversight. Some developers note that LLMs are currently poor at programming for real-world applications and shine mainly in generating simple or short code snippets.

- There's a spectrum of attitudes among developers, from enthusiastic early adopters to those in denial about AI's potential impact. Some express concern about management expectations and potential job market effects, while others view AI as an opportunity to focus on higher-level problem-solving and system design.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Releases and Improvements

- OpenAI developments: There are rumors and speculation about upcoming releases from OpenAI, including a potential "Strawberry" model and GPT-4o voice mode. However, many users express frustration with vague announcements and delayed releases. An OpenAI head of applied research tweeted about "shipping" something soon, fueling more speculation.

- Fish Speech 1.4: A new open-source text-to-speech model was released, trained on 700K hours of speech across 8 languages. It requires only 4GB VRAM and offers 50 free uses per day.

- Amateur Photography Lora v4: An update to a Stable Diffusion Lora model for generating realistic amateur-style photos was released, with improvements in sharpness and realism.

AI Research and Breakthroughs

- Neuromorphic computing: Scientists reported a breakthrough in neuromorphic computing using molecular memristors, potentially enabling more efficient AI hardware. The device offers 14-bit precision, high energy efficiency, and fast computation.

- PaperQA2: An AI agent was introduced that can conduct entire scientific literature reviews autonomously.

AI Industry News

- OpenAI departures: Multiple high-profile researchers have left OpenAI recently, including Alexis Conneau, who was working on GPT-4o/GPT-5.

- Ilya Sutskever's new venture: The ex-OpenAI researcher raised $1 billion for a new AI company without a product or revenue.

- Apple's AI efforts: Apple's upcoming iPhone 16 AI features are criticized as "late, unfinished & clumsy" compared to competitors.

AI Ethics and Societal Impact

- Deepfake concerns: Taylor Swift expressed concerns about AI-generated deepfakes falsely depicting her endorsing political candidates.

- Realistic AI-generated images: The Amateur Photography Lora v4 model demonstrates the increasing difficulty in distinguishing AI-generated images from real photos.

AI Discord Recap

A summary of Summaries of Summaries. We leave the 4o vs o1 comparisons for your benefit

GPT4O (gpt-4o-2024-05-13)

1. OpenAI O1 Model Launch and Reactions

- OpenAI O1 Model Faces Criticism Over Costs: OpenAI's O1 model has received criticism from users due to its high costs and underwhelming performance, especially in coding tasks, as discussed in OpenRouter.

- With pricing set at $60 per million tokens, users are concerned about unexpected costs and the overall value, prompting discussions about the model's practical utility.

- Mixed Reactions to OpenAI O1 Preview: The O1 preview received mixed feedback from users, questioning its improvements over models like Claude 3.5 Sonnet, particularly in creative tasks, as noted in Nous Research AI.

- Users are skeptical about its operational efficiency, suggesting it may need further iterations before a full rollout.

- OpenAI O1 Launches for Reasoning Tasks: OpenAI launched O1 models aimed at enhancing reasoning in complex tasks like science, coding, and math, available for all Plus and Team users in ChatGPT and through the API for tier 5 developers as reported in OpenAI.

- Users are noting that O1 models outperform previous iterations in problem-solving but some express skepticism about the price versus performance improvements.

2. AI Model Performance and Benchmarking

- DeepSeek V2.5 Launch with User-Friendly Features: DeepSeek V2.5 introduces a full-precision provider and promises no prompt logging for privacy-conscious users, as announced in OpenRouter.

- Additionally, DeepSeek V2 Chat and DeepSeek Coder V2 models have been merged into this version, allowing seamless redirection to the new model.

- PLANSEARCH Algorithm Boosts Code Generation: Research on the PLANSEARCH algorithm demonstrates it enhances LLM-based code generation by identifying diverse plans before code generation, promoting efficient outputs, as discussed in aider.

- By alleviating the lack of diversity in LLM outputs, PLANSEARCH shows valuable performance improvements across benchmarks like HumanEval+ and LiveCodeBench.

3. Challenges in AI Training and Inference

- Challenges with OpenAI O1 and GPT-4o Models: Feedback about the O1 and GPT-4o models underscores frustration as performance results indicate they do not markedly outperform previous versions, as noted in OpenRouter.

- Users advocate for more practical enhancements in these advanced models for real-world application, suggesting a need for consistent testing for validation.

- LLM Distillation Complexities: Participants in Unsloth AI expressed challenges in distilling LLMs into smaller models, emphasizing accurate output data as crucial for success.

- Reliance on high-quality examples is key, with slow inference arising from token costs for complex reasoning.

4. Innovations in AI Tools and Frameworks

- Ophrase and Oproof CLI Tools Transform Operations: A detailed article explains how Ophrase and Oproof bolster command-line interface functionalities by streamlining task automation and management, as discussed in HuggingFace.

- These tools offer substantial enhancements to workflow efficiency, promising a revolution in handling command line tasks.

- HTML Chunking Package Debuts: The new package

html_chunkingefficiently chunks and merges HTML content while maintaining token limits, crucial for web automation tasks, as introduced in LangChain AI.- This structured approach ensures valid HTML parsing, preserving essential attributes for a variety of applications.

5. AI Applications in Various Domains

- Roblox Capitalizes on AI for Gaming: A video discussed how Roblox is innovatively merging AI with gaming, positioning itself as a leader in this area, as noted in Perplexity AI.

- This development marks a substantial leap in integrating advanced technologies within gaming environments.

- Personal AI Executive Assistant Success: A member in Cohere successfully built a personal AI executive assistant that manages scheduling with a calendar agent cookbook, integrating voice inputs to edit Google Calendar events.

- The assistant adeptly interprets unstructured data, proving beneficial for organizing exam dates and project deadlines.

GPT4O-Aug (gpt-4o-2024-08-06)

1. OpenAI O1 Model Launch and Performance

- OpenAI O1 Model Draws Mixed Reactions: OpenAI O1 launched with claims of enhanced reasoning for complex tasks in science, coding, and math, sparking excitement among users.

- While some praise its problem-solving capabilities, others criticize its high costs and question if improvements justify the price, especially compared to older models like GPT-4o.

- Community Concerns Over O1's Practicality: Users express frustration over O1's performance in coding tasks, citing hidden 'thinking tokens' leading to unexpected costs, as noted at $60 per million tokens.

- Despite its marketed advancements, many users report preferring alternatives like Sonnet, highlighting the need for practical enhancements in real-world applications.

2. DeepSeek V2.5 Features and User Feedback

- DeepSeek V2.5 Launches with Privacy Features: DeepSeek V2.5 introduces a full-precision provider and ensures no prompt logging, appealing to privacy-conscious users.

- The new version merges DeepSeek V2 Chat and DeepSeek Coder V2, facilitating seamless model access while maintaining backward compatibility.

- DeepSeek Endpoint Performance Under Scrutiny: Users report inconsistent performance from the DeepSeek endpoint, questioning its reliability following recent updates.

- Community feedback suggests a need for improved performance consistency, with some considering alternative solutions.

3. AI Regulation and Community Reactions

- California's AI Regulation Bill Faces Opposition: Speculation around California's SB 1047 AI safety bill suggests a 66-80% chance of veto due to political dynamics, especially with Pelosi involved.

- Discussions highlight its potential impacts on data privacy and inference compute, reflecting tensions between tech innovation and regulatory measures.

- AI Regulation Discussions Spark Debates: Conversations on AI regulations, fueled by Dr. Epstein's insights on Joe Rogan's podcast, reveal concerns over narratives shaped by social media.

- Members advocate for a balanced approach to free speech versus hate speech, emphasizing moderation within AI discussions.

4. Innovations in LLM Training and Optimization

- PLANSEARCH Algorithm Boosts Code Generation: Research on the PLANSEARCH algorithm demonstrates it enhances LLM-based code generation by identifying diverse plans, improving efficiency.

- By broadening the range of generated observations, PLANSEARCH shows valuable performance improvements across benchmarks like HumanEval+ and LiveCodeBench.

- Dolphin Architecture for Efficient Context Processing: The Dolphin architecture introduces a 0.5B parameter decoder, promoting energy efficiency for long context processing while reducing latency by 5-fold.

- Empirical results reveal a 10-fold improvement in energy efficiency, positioning it as a significant advancement in model processing techniques.

5. Challenges in AI Model Deployment and Integration

- Reflection Model Faces Criticism: The Reflection 70B model drew fire over claims of falsified benchmarks, raising concerns about fairness in AI development.

- Critics labeled it a wrapper around existing techniques, emphasizing the need for transparency in model evaluations.

- API Access and Integration Challenges: Users express frustration over API limits and authorization issues, emphasizing the need for clarity in troubleshooting.

- Managing multiple AI models while keeping costs low remains a recurring theme in the discussions, highlighting integration challenges.

o1-mini (o1-mini-2024-09-12)

- OpenAI Unveils O1 Model with Superior Reasoning: OpenAI's O1 model series enhances reasoning in complex tasks, outperforming models like GPT-4o and Claude 3.5 Sonnet in over 50,000 matchups. Despite its advancements, users raise concerns about its high pricing of $60 per million tokens, prompting comparisons with alternatives such as Sonnet.

- Advanced Training Techniques Boost Model Efficiency: Innovations like ZeRO++ reduce GPU communication overhead by 4x, while vAttention optimizes KV-cache memory for efficient inference. Additionally, Consistency LLMs explore parallel token decoding, significantly lowering inference latency.

- Cost Management Strategies for High-Priced Models: Communities discuss optimizing usage to mitigate the costs of models like O1, emphasizing the importance of managing token consumption and exploring billing structures. Tips include leveraging /settings/privacy for data preferences and utilizing efficient prompting techniques to maximize value.

- Open-Source Frameworks Accelerate AI Development: Projects such as LlamaIndex and Axolotl empower developers with tools for building robust AI applications. Hackathons and community efforts offer over $10,000 in prizes, fostering collaboration and innovation in RAG technology and AI agent development.

- Fine-Tuning and Specialized Model Training Gains Traction: Techniques like Qlora facilitate effective fine-tuning of models such as Llama 3.1 and Mistral, enhancing performance in tasks like code generation and translations. Community benchmarks reveal that fine-tuned models can achieve competitive results, sparking ongoing discussions on optimizing training workflows and addressing domain-specific challenges.

o1-preview (o1-preview-2024-09-12)

OpenAI's o1 Model Launches to Mixed Reviews

- OpenAI released the o1 model series aiming to enhance reasoning in complex tasks like science, coding, and math. While some praised its improved problem-solving abilities, others criticized the high cost ($60 per million output tokens) and questioned its performance gains over existing models like GPT-4o and Sonnet.

Reflection 70B Model Faces Backlash Over Bold Claims

- The Reflection 70B model drew criticism for allegedly falsifying benchmarks claiming to outperform GPT-4o and Claude 3.5 Sonnet. Users labeled it \"a wrapper around existing techniques\" and reported disappointing performance, raising concerns about transparency in AI model evaluations.

AI Developers Embrace New Tools for Enhanced Workflows

- Projects like HOPE Agent introduce features like dynamic task allocation and JSON-based management to streamline AI orchestration. Additionally, Parakeet demonstrates rapid training capabilities, completing training in less than 20 hours using the AdEMAMix optimizer.

Skepticism Surrounds New AI Models Despite Promised Improvements

- Users expressed doubts about the effectiveness of models like OpenAI's o1, DeepSeek V2.5, and Solar Pro Preview, citing underwhelming performance and high costs. Discussions emphasized the need for verifiable benchmarks and practical enhancements over marketing hype in AI advancements.

California's AI Regulation Bill SB 1047 Raises Industry Concerns

- The proposed California AI regulation bill SB 1047 spurred debate over potential impacts on data privacy and inference compute. Speculation suggests a \"66-80% chance of veto\" due to political dynamics, highlighting tensions between tech innovation and regulatory measures.

PART 1: High level Discord summaries

OpenRouter (Alex Atallah) Discord

- DeepSeek V2.5 Rolls Out with User-Friendly Features: The launch of DeepSeek V2.5 introduces a full-precision provider and a promise of no prompt logging for users concerned about privacy, according to the OpenRouter announcement. Users can update their data preferences in the

/settings/privacysection.- Additionally, the DeepSeek V2 Chat and DeepSeek Coder V2 models have been merged into this version, allowing seamless redirection to the new model without losing access.

- OpenAI O1 Model Faces Criticism: The OpenAI O1 model is drawing ire from users regarding its high costs and disappointing outputs in coding tasks, particularly due to its use of hidden 'thinking tokens'. Several users reported they feel the model fails to deliver on expected capabilities.

- With pricing set at $60 per million tokens, users are raising concerns about unexpected costs and the overall value provided, prompting discussions around the model's practical utility.

- DeepSeek Endpoint's Performance Under Fire: Community members are reporting inconsistent performance from the DeepSeek endpoint, citing issues with previous downtimes and overall quality. Users are particularly questioning the reliability of the endpoint following recent updates.

- Concerns about performance consistency suggest users might need to replace or adjust their expectations concerning the endpoint's reliability and output quality.

- Diverse User Experiences in LLMs: Forum discussions reveal mixed experiences users have had with various LLMs, with many favoring alternatives such as Sonnet over OpenAI's O1. Some users indicate that despite O1's marketed advancements, performance was lacking compared to other competitors.

- These exchanges highlight a broader sentiment that while users hoped for improvements, many are pivoting to other models that have shown better results.

- Exploring Limitations of O1 and GPT-4o Models: Feedback about the O1 and GPT-4o models underscores frustration as performance results indicate they do not markedly outperform previous versions. Users advocate for more practical enhancements in these advanced models for real-world application.

- Critics call for a greater emphasis on real-world effectiveness rather than unsubstantiated claims of superior reasoning capabilities and argue that consistent testing is essential for validation.

aider (Paul Gauthier) Discord

- OpenAI o1 Pricing Shocks Users: OpenAI's o1 models, including o1-mini and o1-preview, come with hefty prices of $15.00 per million input tokens and $60.00 per million output tokens, leading users to worry about costs rivaling a full-time developer.

- Concerns arose that debugging with o1 could quickly escalate expenses, which has raised eyebrows within the community.

- Aider's Smart Edge Over Cursor: Comparisons between Aider and Cursor highlight Aider's strengths in code iteration and repository mapping, which aids pair programming.

- While Cursor allows for easier file viewing before commits, Aider is seen as the superior choice for making complex code adjustments.

- PLANSEARCH Algorithm Boosts Code Generation: Research on the PLANSEARCH algorithm demonstrates it enhances LLM-based code generation by identifying diverse plans before code generation, promoting more efficient outputs.

- By alleviating the lack of diversity in LLM outputs, PLANSEARCH exhibits valuable performance across benchmarks like HumanEval+ and LiveCodeBench.

- Community Buzz on Diversity in LLM Outputs: A new paper emphasizes that insufficient diversity in LLM outputs hampers performance, leading to inefficient searches and repetitive incorrect outputs.

- PLANSEARCH tackles this issue by broadening the range of generated observations, resulting in significant performance improvements in code generation tasks.

- Aider Scripting Improves Usability: Discussions around enhancing Aider scripting reveal suggestions for defining script file names and configuring .aider.conf.yml for efficient file management.

- Other users also tackled git ignore issues, offering solutions for editing ignored files by adjusting settings or employing command-line flags.

OpenAI Discord

- OpenAI launches o1 models for reasoning: OpenAI released a preview of o1, a new series of AI models aimed at enhancing reasoning in complex tasks like science, coding, and math. This rollout is available for all Plus and Team users in ChatGPT and through the API for tier 5 developers.

- Users are noting that o1 models outperform previous iterations in problem-solving but some express skepticism about the price versus performance improvements.

- ChatGPT struggles with memory functionality: Members reported ongoing issues with ChatGPT memory loading, affecting consistent responses over weeks of conversation. Some switched to the app hoping for reliable access while noting the absence of a Windows app.

- Frustration was voiced about shortcomings, especially in handling chat memory, alongside mixed feelings regarding the new o1 model's creativity compared to GPT-4.

- Active discussions on prompt performance: A member noted a prompt's execution time at 54 seconds, indicating good performance following optimizations, particularly after integrating enhanced physics functionalities. The location of the prompt-labs was clarified for better user accessibility.

- Community members expressed relief upon discovering the library section within the Workshop, revealing potential gaps in the communication of tool availability.

- API access for customizable ChatGPTs discussed: Discussions highlighted users' curiosity regarding accessing APIs for customizable ChatGPTs, with confirmation of availability but uncertainty on the effectiveness of models like o1. Concerns arose regarding user confusion over different model interfaces and rollout statuses.

- Critical experiences related to custom GPTs also surfaced, including issues with publishing due to suspected policy violations, raising questions about the reliability of OpenAI's processes.

Nous Research AI Discord

- Mixed Reviews on the o1 Preview: The o1 preview has received mixed feedback, with some users questioning its improvements over existing models like Claude 3.5 Sonnet, particularly in creative tasks.

- Concerns were raised about its operational efficiency, suggesting it may need further iterations before a full rollout.

- Dolphin Architecture Boosts Context Efficiency: The Dolphin architecture showcases a 0.5B parameter decoder, promoting energy efficiency for long context processing while reducing latency by 5-fold.

- Empirical results reveal a 10-fold improvement in energy efficiency, positioning it as a significant advancement in model processing techniques.

- Inquiring Alternatives for Deductive Reasoning: A discussion on the availability of general reasoning engines explored options beyond traditional LLMs, emphasizing a need for systems capable of solving logical syllogisms.

- An example problem involving potatoes illustrated gaps in LLM performance for deductive reasoning, hinting at the potential use of hybrid systems.

- Cohere Models Compared with Mistral: Feedback regarding Cohere models revealed sentiments of limited alignment and intelligence compared to Mistral, suggesting the latter offers better performance.

- Participants reinforced the comparison between Mistral Large 2 and CMD R+, showcasing Mistral's superior capabilities.

- AI in Product Marketing Discussion: Members investigated AI models that could autonomously manage marketing tasks across platforms, expressing the absence of viable solutions at present.

- The potential for integrating various APIs was floated, sparking ideas for future developments in marketing automation.

HuggingFace Discord

- Ophrase and Oproof CLI Tools Transform Operations: A detailed article explains how Ophrase and Oproof bolster command-line interface functionalities by streamlining task automation and management.

- These tools offer substantial enhancements to workflow efficiency, promising a revolution in handling command line tasks.

- Unpacking Reflection 70B Model: The Reflection 70B project illustrates advances in model versatility, utilizing Llama cpp for improved performance and user interaction.

- It opens a discussion on the dynamics of model adaptability, aiming for accessible community engagement around its functionalities.

- Launch of Persian Dataset for NLP: Introducing a new Persian dataset featuring 6K translated sentences from Wikipedia, aimed at aiding Persian language modeling.

- This initiative enhances resource availability for diverse language processing tasks within the NLP landscape.

- AI Regulation Brings Mixed Reactions: Conversations on AI regulations, sparked by Dr. Epstein's insights on Joe Rogan’s podcast, reflect worries about narratives shaped by social media platforms.

- Members advocate for a balanced approach to free speech versus hate speech, emphasizing the need for moderation within AI discussions.

- HOPE Agent Enhances AI Workflow Management: The HOPE Agent introduces features like dynamic task allocation and JSON-based management to streamline AI orchestration.

- It integrates with existing frameworks such as LangChain and Groq API, enhancing automation across workflows.

Modular (Mojo 🔥) Discord

- Mojo's Error Handling Importance: The discussion focused on ensuring syscall interfaces in Mojo return meaningful error values, which is crucial given the interface contract in languages like C.

- Designing these interfaces requires a thorough understanding of potential errors for effective syscall response management.

- Converting Span[UInt8] to String: Guidance was sought on converting a

Span[UInt8]to a string view in Mojo, with direction provided towardsStringSlice.- To properly initialize

StringSlice, theunsafe_from_utf8keyword argument was noted as necessary after encountering initialization errors.

- To properly initialize

- ExplicitlyCopyable Trait RFC Proposal: A suggestion was made to initiate an RFC for the

ExplicitlyCopyabletrait to require implementingcopy(), potentially impacting future updates.- This could significantly reduce breaking changes to existing definitions according to participants.

- MojoDojo's Open Source Collaboration: The community discovered that mojodojo.dev is now open-sourced, paving the way for collaboration.

- Originally created by Jack Clayton, this resource served as a playground for learning Mojo.

- Recommendation Systems in Mojo: Inquiry arose about existing Mojo or MAX features for developing recommendation systems, revealing both are still in the 'build your own' phase.

- Members noted ongoing development for these functionalities which are not fully established yet.

Perplexity AI Discord

- OpenAI O1 Sparks Enthusiasm: Users are buzzing about the release of OpenAI O1, a model series that promises enhanced reasoning and complex problem-solving capabilities.

- Speculations suggest it may integrate features from reflection and agent-oriented frameworks like Open Interpreter.

- Doubts Surround AI Music: Members expressed skepticism regarding the sustainability of AI-driven music, deeming it a gimmick devoid of genuine artistic value.

- They highlighted the absence of the human touch that gives traditional music its depth and meaning.

- Uncovr.ai Faces Development Challenges: The creator of Uncovr.ai shared insights on the hurdles faced during platform development, stressing the need for enhancements to the user experience.

- Concerns about cost management and sustainable revenue models were noted throughout discussions.

- API Limit Frustrations Prevail: Users aired frustrations over API limits and authorization issues, emphasizing the need for clarity in troubleshooting.

- Management of multiple AI models while keeping costs low was a recurring theme in the discussions.

- Roblox Capitalizes on AI for Gaming: A video discussed how Roblox is innovatively merging AI with gaming, positioning itself as a leader in this area; check the video here.

- This development marks a substantial leap in integrating advanced technologies within gaming environments.

Interconnects (Nathan Lambert) Discord

- OpenAI o1 Model Impresses with Reasoning: The newly released OpenAI o1 model is designed to think more before responding and has shown strong results on benchmarks like MathVista.

- Users noted its ability to handle complex tasks with mixed feedback on its practical performance moving forward.

- California's AI Regulation Bill SB 1047 Raises Concerns: Speculation around California's SB 1047 AI safety bill suggests a 66-80% chance of veto due to political dynamics, especially with Pelosi involved.

- Discussions on the bill’s potential impacts on data privacy and inference compute highlight the tension between tech innovation and regulatory measures.

- Benchmark Speculation on OpenAI o1 Performance: Initial benchmark tests indicate that OpenAI's o1-mini model is performing comparably to gpt-4o, particularly in code editing tasks.

- The community is interested in how the o1 model fares against existing LLMs, reflecting a competitive landscape in AI.

- Understanding RLHF in Private Models: Members are trying to uncover how RLHF (Reinforcement Learning from Human Feedback) functions specifically for private models as Scale AI explores this area.

- This approach aims to align model behaviors with human preferences, which could enhance training reliability.

- Challenges in Domain Expertise for Scale AI: In specialized fields like materials science and chemistry, challenges for Scale AI against established domain experts are anticipated.

- Members reflected that handling data in clinical contexts is significantly more complex compared to less regulated areas, impacting training effectiveness.

Unsloth AI (Daniel Han) Discord

- Reflection Model Faces Backlash: The Reflection 70B model drew fire over claims of falsified benchmarks, still being available on Hugging Face, which raised concerns about fairness in AI development.

- Critics labeled it a wrapper around existing techniques and emphasized the need for transparency in model evaluations.

- Unsloth Limited to NVIDIA GPUs: Unsloth confirmed it only supports NVIDIA GPUs for finetuning, leaving potential AMD users disappointed.

- The discussion highlighted that Unsloth's optimized memory usage makes it a top choice for projects requiring high performance.

- KTO Outshines Traditional Model Alignment: Insights on KTO indicated it could significantly outperform traditional methods like DPO, though models trained with it remain under wraps due to proprietary data.

- Members were excited about the potential of KTO but noted the need for further validation once models become accessible.

- Skepticism Surrounds Solar Pro Preview Model: The Solar Pro Preview model, boasting 22 billion parameters, has been introduced for single GPU efficiency while claiming superior performance over larger models.

- Critics voiced concerns about the practicality of its bold claims, recalling previous letdowns in similar announcements.

- LLM Distillation's Complexities: Participants expressed challenges in distilling LLMs into smaller models, emphasizing accurate output data as crucial for success.

- Disgrace6161 pointed out that reliance on high-quality examples is key, with slow inference arising from token costs for complex reasoning.

Latent Space Discord

- OpenAI Launches o1: A Game Changer for Reasoning: OpenAI has launched the o1 model series, designed to excel in reasoning across various domains such as math and coding, garnering attention for its enhanced problem-solving capabilities.

- Reports highlight that the o1 model not only outperforms previous iterations but also improves on safety and robustness, taking a notable leap in AI technology.

- Devin AI Shines with o1 Testing: Evaluations of the coding agent Devin with OpenAI's o1 models yielded impressive results, showcasing the importance of reasoning in software engineering tasks.

- These tests indicate that o1's generalized reasoning abilities provide a significant performance boost for agentic systems focused on coding applications.

- Scaling Inference Time Discussion: Experts are evaluating the potential of inference time scaling methods linked to the o1 models, proposing that it can rival traditional training scaling and enhance LLM functionality.

- Discussions emphasize the need to measure hidden inference processes to understand their effects on the operational success of models like o1.

- Community's Mixed Feelings on o1: The AI community has expressed a variety of emotions towards the o1 model, with some doubting its efficacy compared to earlier models like Sonnet/4o.

- Highlights of the conversation include concerns about LLM limitations for non-domain experts, underscoring the necessity for specialized tools in AI.

- Anticipating Future o1 Developments: The community is keen to see upcoming developments for the o1 models, especially the exploration of potential voice features.

- While excitement surrounds o1's cognitive capabilities, some users are facing limitations in functional aspects like voice interactions.

CUDA MODE Discord

- Exciting Compute Credits for CUDA Hackathon: The organizing team secured $300K in cloud credits along with access to a 10 node GH200 cluster and a 4 node 8 H100 cluster for participants of the hackathon.

- This opportunity includes SSH access to nodes, allowing for serverless scaling with the Modal stack.

- Robust Support for torch.compile: Support for

torch.compilehas been integrated into themodel.generate()function in version 0.2.2 of the MobiusML repository, improving usability.- This update means that the previous dependency on HFGenerator for model generation is eliminated, simplifying workflows for developers.

- GEMM FP8 Implementation Advances: A recent pull request implements FP8 GEMM using E4M3 representation, addressing issue #65 and testing various matrix sizes.

- Documentation for SplitK GEMM has been added to guide developers on usage and implementation strategies.

- Aurora Innovation Hiring Engineers: Aurora Innovation aims for a commercial launch by late 2024, seeking L6 and L7 engineers with expertise in GPU acceleration and CUDA/Triton tools.

- The company recently raised $483 million to support its driverless launch plans, highlighting significant investor confidence.

- Evaluating AI Models and OpenAI's Strategy: Participants expressed concerns about OpenAI's competitiveness compared to rivals like Anthropic, emphasizing the need for innovative training strategies.

- Debate around Chain of Thought (CoT) revealed frustrations over its implementation transparency, affecting perceptions of leadership effectiveness at OpenAI.

Stability.ai (Stable Diffusion) Discord

- Reflection LLM Performance Concerns: The Reflection LLM claimed to outperform GPT-4o and Claude Sonnet 3.5, yet its actual performance has drawn significant criticism, especially compared to the open-source variant.

- Doubts emerged regarding its originality as its API seemed to mirror Claude, prompting users to question its effectiveness.

- Exploration of AI Photo Generation Services: There were inquiries about the best paid AI photo generation services for realistic image outputs, creating a lively discussion around available options.

- A notable alternative was mentioned: Easy Diffusion, positioned as a strong free competitor.

- Flux Model Performance Optimizations: Users reported positive experiences with the Flux model, highlighting notable performance gains tied to memory usage tweaks and RAM limits.

- There is ongoing discussion about low VRAM optimizations, particularly in comparison to competitors like SDXL.

- Lora Training Troubleshooting: Members shared the difficulties encountered during Lora training, seeking help on better configurations for devices with limited VRAM.

- Discussions included references to resources for workflow optimizations, specifically highlighting trainers like Kohya.

- Dynamic Contrast Adjustments in Models: One user explored methods to decrease contrast in their lighting model by using specific CFG settings and proposed dynamic thresholding techniques.

- They requested advice on how to balance parameters when modifying CFG values, indicating a need for precise adjustments to improve output quality.

LM Studio Discord

- Guidelines for Implementing RAG in LM Studio: For a successful RAG pipeline in LM Studio, users should download version 0.3.2 and upload PDFs as advised by members. Another user encountered a 'No relevant citations found for user query' error, recommending to make specific inquiries instead of general questions.

- Members are encouraged to refer to Building Performant RAG Applications for Production - LlamaIndex for further insights.

- Ternary Models Performance Issues: Members discussed troubles with loading ternary models, with one reporting an error while accessing a model file and another suggested reverting to older quantization types since the latest 'TQ' types are still experimental.

- This highlights the need for caution when dealing with newer types and adjusting workflows accordingly.

- Community Meet-up in London: Users are invited to a community meet-up in London to discuss prompt engineering and share experiences, emphasizing readiness to connect with fellow engineers. Attendees should look for older members carrying laptops.

- This event provides a platform for networking and exchanging valuable insights.

- OpenAI O1 Access Rollout: Members are discussing their experiences with accessing the OpenAI O1 preview, noting the rollout occurs in batches. Several users have received access recently, while others await their opportunity.

- This rollout showcases the gradual availability of new tools within the community.

- Interest in Dual 4090D Setup: One member shared excitement over using two 4090D GPUs with 96GB RAM each but highlighted the power challenge needing a small generator for their 600W requirement. This humorous take on high power consumption caught the group's attention.

- This enthusiasm reflects the advanced setups members are considering to boost their performance.

OpenInterpreter Discord

- Enhanced Terminal Output with Rich: Rich is a Python library for beautiful terminal formatting, greatly enhancing output visuals for developers.

- Members explored various terminal manipulation techniques, discussing alternatives to improve color and animation functionalities.

- LiveKit Server Best Practices: Community consensus promotes Linux as the favored OS for the LiveKit Server, with anecdotes of troubles on Windows.

- One member humorously noted, 'Not Windows, only had problems so far,' easing others' concerns about OS choice.

- Preview Release of OpenAI o1 Models: OpenAI teased the preview of o1, a new model series aimed at improving reasoning in science, coding, and math applications, detailed in their announcement.

- Members expressed excitement over the potential for tackling complex tasks better than previous models.

- Challenges with Open Interpreter Skills: Participants highlighted Open Interpreter skills not being retained post-session, affecting functionality such as Slack messaging.

- A call for community collaboration to resolve this issue has been issued, seeking further investigation.

- Awaiting Cursor and o1-mini Integration: Users expressed eagerness for Cursor's launch with o1-mini, hinting at its upcoming functionality with playful emojis.

- The anticipation for o1-mini suggests a growing demand for novel tool capabilities in the community.

Cohere Discord

- Parakeet Project Makes Waves: The Parakeet project, trained using an A6000 line model for 10 hours on a 3080 Ti, produced notable outputs, stirring interest in the effectiveness of the AdEMAMix optimizer. A member noted, 'this might be the reason Parakeet was able to train in < 20 hours at 4 layers.'

- The success indicates a potential shift in training paradigms while inviting further investigations into optimization techniques.

- GPU Ownership Reveals Varied Setups: A member shared their impressive GPU lineup, boasting ownership of 7 GPUs, including 3 RTX 3070's and 2 RTX 4090's. This prompted humorous reactions regarding the naming conventions of GPUs and their relevance today.

- The ongoing discussions highlight the broad diversity in hardware choices and usage among members.

- Quality Over Quantity in Training Data: A conversation emphasized that it’s not the sheer volume but the quality of data that counts when training models - a perspective shared by a member regarding their work with 26k rows for a JSON to YAML use case. They stated, 'Less the amount of data - it's more the quality.'

- The exchange pointed towards a deeper understanding of data importance in training methodologies.

- Personal AI Executive Assistant Success: A member successfully built a personal AI executive assistant that manages scheduling with a calendar agent cookbook, integrating voice inputs to edit Google Calendar events. This project demonstrates an innovative use of AI for personal productivity.

- The assistant adeptly interprets unstructured data, proving beneficial for organizing exam dates and project deadlines.

- Seeking Best Practices for RAG Applications: A user inquired about best practices for implementing guardrails in RAG applications, stressing that solutions should be context-specific. This aligns with ongoing efforts to optimize AI applications for real-world utility.

- They also investigated tools used for evaluating RAG performance, aiming to pinpoint widely accepted metrics and methodologies.

Eleuther Discord

- Simplifying Contribution to Projects: A member emphasized that opening an issue in any project and following it up with a PR is the easiest way to contribute.

- This method provides clarity and fosters collaboration in ongoing projects.

- Excitement over PhD Completion: One member shared enthusiasm about finishing their PhD in Germany and gearing up for a postdoc focusing on safety and multi-agent systems.

- They also highlighted hobbies like chess and table tennis, showing a well-rounded personal life.

- RWKV-7 Stands Out with Chain of Thought: The RWKV-7 model, with only 2.9M parameters, demonstrates impressive capabilities in solving complex tasks using Chain of Thought.

- It's noted that generating extensive data with reversed numbers enhances its training efficiency.

- Pixtral 12B Falls Short in Comparisons: Discussion erupted over the Pixtral 12B's performance, which appeared inferior compared to Qwen 2 7B VL despite being larger.

- Skepticism arose regarding data integrity presented at the MistralAI conference, indicating potential oversights.

- Challenges in Multinode Training: Concerns were raised about the feasibility of multinode training over slow Ethernet links, particularly with DDP across 8xH100 machines.

- Members agreed that optimizing the global batch size is essential to overcome performance bottlenecks.

LlamaIndex Discord

- Hands-on AI Scheduler Workshop Coming Up: Join us at the AWS Loft on September 20th to learn about building a RAG recommendation engine for meeting productivity using Zoom, LlamaIndex, and Qdrant. More details can be found here.

- This workshop aims to create a highly efficient meeting environment with cutting-edge tools that provide transcription features.

- Build RAG System for Automotive Needs: A new multi-document agentic RAG system will help diagnose car issues and manage maintenance schedules using LanceDB. Participants can set up vector databases for effective automotive diagnostics as explained here.

- This approach underscores the versatility of RAG systems in practical applications beyond traditional settings.

- OpenAI Models Now Available in LlamaIndex: With the integration of OpenAI's o1 and o1-mini models, users can utilize these models directly in LlamaIndex. Install the latest version using

pip install -U llama-index-llms-openaifor full access details here.- This update enhances the capabilities within LlamaIndex, aligning with ongoing advancements in model utility.

- LlamaIndex Hackathon Offers Cash Prizes: Prepare for the second LlamaIndex hackathon scheduled for October 11-13, with more than $10,000 in prizes sponsored by Pinecone and Vesslai. Participants can register here for this event focused on RAG technology.

- The hackathon encourages innovation in RAG applications and AI agent development.

- Debate on Complexity of AI Frameworks: Discussions arose over whether frameworks like LangChain, Llama_Index, and HayStack have become overly complex for practical use in LLM development. An insightful Medium post was referenced.

- This highlights ongoing concerns about balancing functionality and simplicity in tool design.

DSPy Discord

- Output Evaluation Challenges: Members highlighted a lack of methods to evaluate outputs for veracity beyond standard prompt techniques aimed at reducing hallucinations, emphasizing the need for better evaluations.

- One member humorously noted, Please don't cite my website in your next publication, stressing caution in using generated outputs.

- Customizing DSPy Chatbots: A member inquired about implementing client-specific customizations in DSPy-generated prompts using a post-processing step instead of hard-coding, aiming for flexibility.

- Another member proposed utilizing a 'context' input field similar to a RAG approach, suggesting training pipelines with common formats to enhance adaptability.

- O1 Pricing Confusion: Discussion about OpenAI's O1 pricing revealed that members were uncertain about its structure, with one confirming that O1 mini is cheaper than other options.

- Members expressed interest in conducting a comparative analysis between DSPy and O1, suggesting a trial of O1 for potential cost-effectiveness.

- Understanding RPM for O1: '20rpm' was clarified by a member as referring to 'requests per minute', a critical metric that impacts performance discussions regarding O1 and DSPy.

- This clarification led to further inquiries about the implications of this metric for current and future integrations.

- DSPy and O1 Integration Curiosity: Questions arose about DSPy's compatibility with O1-preview, reflecting the community's eagerness to explore more functionalities between these two systems.

- This interest signifies the importance of integration to enhance capabilities within DSPy.

Torchtune Discord

- Mixed Precision Training Complexity: Maintaining compatibility between mixed precision modules and other features requires extra work; bf16 half precision training is noted as strictly better due to fp16 support issues on older GPUs.

- Using fp16 naively leads to overflow errors, increasing system complexity and memory usage with full precision gradients.

- FlexAttention Integration Approved: The integration of FlexAttention for document masking has been merged, sparking excitement about its potential.

- Questions arose about whether each 'pack' is padded to max_seq_len, considering the implications of lacking a perfect shuffle for convergence.

- PackedDataset Shines with INT8: Performance tests revealed a 40% speedup on A100 with PackedDataset using INT8 mixed-precision in torchao.

- A member plans more tests, confirming that the fixed seq_len of PackedDataset fits well with their INT8 strategy.

- Tokenizer API Standardization Discussion: A member suggested addressing issue #1503 to unify the tokenizer API before tackling the eos_id issue, implying this could streamline development.

- With an assignee already on #1503, the member intends to explore other fixes to enhance overall improvements.

- QAT Clarification Provided: A member compared QAT (Quantization-Aware Training) with INT8 mixed-precision training, highlighting key differences in their goals.

- QAT aims to improve accuracy, while INT8 training focuses on enhancing speed and may not require QAT for minimal accuracy loss.

OpenAccess AI Collective (axolotl) Discord

- OpenAI Model Sparks Curiosity: Members are buzzing about the new OpenAI model, questioning its features and reception, suggesting it's a fresh release worth exploring.

- It sparked curiosity and further discussion about its capabilities and reception.

- Llama Index Interest Peaks: Interest in the Llama Index grows as members share their familiarity with its tools for model interaction and potential connections to the OpenAI model.

- This led to a potential exploration of how it relates to the new OpenAI model.

- Reflection 70B Labelled a Dud: Concerns emerged regarding the Reflection 70B model being considered a dud, spurring discussions about the implications of the new OpenAI release timing.

- The comment was shared light-heartedly, suggesting it was a response to previous disappointments.

- DPO Format Expectations Set: A member clarified the expected DPO format as

<|begin_of_text|>{prompt}followed by{chosen}<|end_of_text|>and{rejected}<|end_of_text|>, referencing a GitHub issue.- This update points to improvements in custom format handling within the Axolotl framework.

- Llama 3.1 Struggles with Tool Calls: Issues arose with the llama3.1:70b model regarding nonsensical outputs when utilizing tool calls, despite appearing functionally correct.

- In one instance, after the tool indicated the night mode was deactivated, the assistant still failed to appropriately respond to subsequent requests.

LAION Discord

- Flux by RenderNet launched!: Flux from RenderNet enables users to create hyper-realistic images from just one reference image without the need for LORAs. Check it out through this link.

- Ready to bring your characters to life? Users can get started effortlessly with just a few clicks.

- SD team undergoes name change: The SD team has changed its name to reflect their departure from SAI, leading to discussions about its current status.

- So SD has just died? This comment captures the sentiment of concern among members regarding the team's future.

- Concerns about SD's open-source status: Members expressed worries about SD's lack of activity in the open source space, indicating a possible decline in community engagement.

- If you care about open source, SD seems to be dead, was a notable remark on the perceived inactivity.

- New API/web-only model released by SD: Despite concerns about engagement, the SD team has released a recent API/web-only model, signaling some level of output.

- Though initial skepticism about their commitment to open source persists, the release shows they are still working.

- Stay Updated with Sci Scope: Sci Scope compiles new ArXiv papers with related topics, summarizing them weekly for easier consumption in AI research.

- Subscribe to the newsletter for straightforward updates delivered directly, enhancing your awareness of current literature.

LangChain AI Discord

- HTML Chunking Package Debuts: The new package

html_chunkingefficiently chunks and merges HTML content while maintaining token limits, crucial for web automation tasks.- This structured approach ensures valid HTML parsing, preserving essential attributes for a variety of applications.

- Demo Code Shared for HTML Chunking: A demo snippet showcasing

get_html_chunksillustrates how to process an HTML string while preserving its structure within set token limits.- The outputs consist of valid HTML chunks—long attributes are truncated, ensuring the lengths remain reasonable for practical use.

- HTML Chunking vs Existing Tools:

html_chunkingis compared to LangChain'sHTMLHeaderTextSplitterand LlamaIndex'sHTMLNodeParser, highlighting its superiority in preserving HTML context.- The existing tools primarily extract text content, undermining their effectiveness in scenarios demanding comprehensive HTML retention.

- Call to Action for Developers: Developers are encouraged to explore

html_chunkingfor enhanced web automation capabilities, emphasizing its precise HTML handling.- Links to the HTML chunking PYPI page and GitHub repo provide avenues for further exploration.

tinygrad (George Hotz) Discord

- George Hotz promotes sensible engagement: George Hotz has requested that members refrain from unnecessary @mentions unless their queries are deemed constructive, encouraging self-sufficiency in resource utilization.

- It only takes one search to find pertinent information, fostering a culture of relevance within discussions.

- New terms of service focus on ethical practices: George has introduced a terms of service specifically for ML developers, aimed at prohibiting activities like crypto mining and resale on GPUs.

- This policy intends to create a focused development environment, particularly leveraging MacBooks' capabilities.

LLM Finetuning (Hamel + Dan) Discord

- Literal AI's Usability Shines: A member praised Literal AI for its usability, noting features that enhance the LLM application lifecycle available at literalai.com.

- Integrations and intuitive design were highlighted as key aspects that facilitate smoother operations for developers.

- Boosting App Lifecycle with LLM Observability: LLM observability was discussed as a game-changer for enhancing app development, allowing for quicker iterations and debugging while utilizing logs for fine-tuning smaller models.

- This approach is set to improve performance and reduce costs significantly in model management.

- Transforming Prompt Management: Emphasizing prompt performance tracking as a safeguard against deployment regressions, the discussion indicated its necessity for reliable LLM outputs.

- This method proactively maintains quality assurance across updates.

- Establishing LLM Monitoring Setup: Insights were shared on building a robust LLM monitoring and analytics system, integrating log evaluations to uphold optimal production performance.

- Such setups are deemed critical for ensuring sustained efficiency in operations.

- Fine-tuning LLMs for Better Translations: A discussion surfaced about fine-tuning LLMs specifically for translations, pinpointing challenges where LLMs capture gist but often miss tone or style.

- This gap presents an avenue for developers to innovate in translation capabilities.

Gorilla LLM (Berkeley Function Calling) Discord

- Gorilla LLM Tests Reveal Mixed Accuracy: Recent tests on Gorilla LLM show concerning results, with irrelevance achieving a perfect accuracy of 1.0, while both java and javascript recorded 0.0.

- Tests such as live_parallel_multiple and live_simple were disappointing, prompting doubts on the models' effectiveness.

- Member Seeks Help on Prompt Splicing for Qwen2-7B-Chat: A user raised worries over the subpar performance of qwen2-7b-chat, questioning if it stems from issues with prompt splicing.

- They are looking for reliable insights and methods to enhance their testing experience with effective prompt strategies.

MLOps @Chipro Discord

- Insights Wanted on Predictive Maintenance: A member raised questions about their experiences with predictive maintenance and sought resources such as papers or books on optimal models and practices, especially regarding unsupervised methods without tracked failures.

- The discussion highlighted the impracticality of labeling events manually, emphasizing a need for efficient methodologies within the field.

- Mechanical and Electrical Focus in Monitoring: Discussion centered on a device that is both mechanical and electrical, which records several operational events that can benefit from improved monitoring practices.

- Members agreed that utilizing effective monitoring strategies could enhance maintenance approaches and potentially lower future failure rates.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!