[AINews] o1 destroys Lmsys Arena, Qwen 2.5, Kyutai Moshi release

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

o1 may be all you need.

AI News for 9/17/2024-9/18/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (221 channels, and 1591 messages) for you. Estimated reading time saved (at 200wpm): 176 minutes. You can now tag @smol_ai for AINews discussions!

We humans at Smol AI have been dreading this day.

For the first time ever, an LLM has been able to 100% match and accurately report what we consider to be the top stories of the day without our intervention. (See the AI Discord Recap below.)

Perhaps more interesting for the model trainers, o1-preview consistently beats out our vibe check evals. Every AINews daily run is a bakeoff between OpenAI, Anthropic, and Google models (you can see traces in the archives. we briefly tried Llama 3 too but it consistently lost), and o1-preview has basically won every day since introduction (with no specific tuning beyond needing to rip out instructor's hidden system prompts).

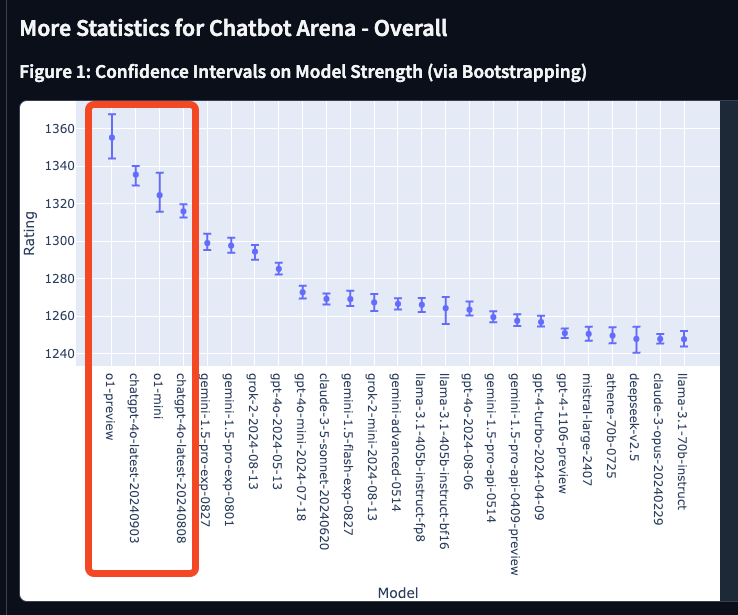

We now have LMsys numbers on o1-preview and -mini to quantify the vibe checks.

The top 4 slots on LMsys are now taken by OpenAI models. Demand has been high even as OpenAI is gradually raising rate limits by the day, now up to 500-1000 requests per minute.

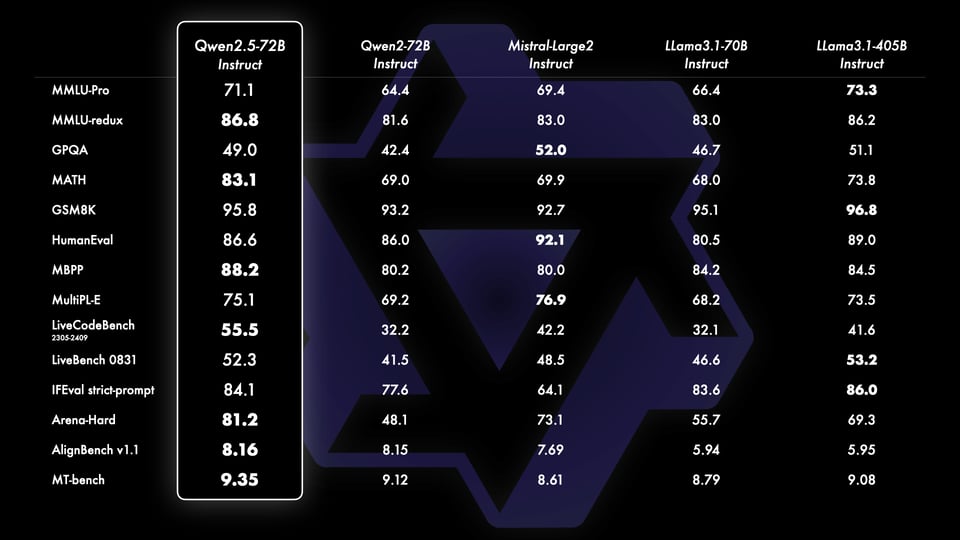

Over in open source land, Alibaba's Qwen caught up to DeepSeek with its own Qwen 2.5 suite of general, coding, and math models, showing better numbers than Llama 3.1 at the 70B scale.

as well as updating their closed Qwen-Plus models to beat DeepSeek V2.5 but coming short of the American frontier models.

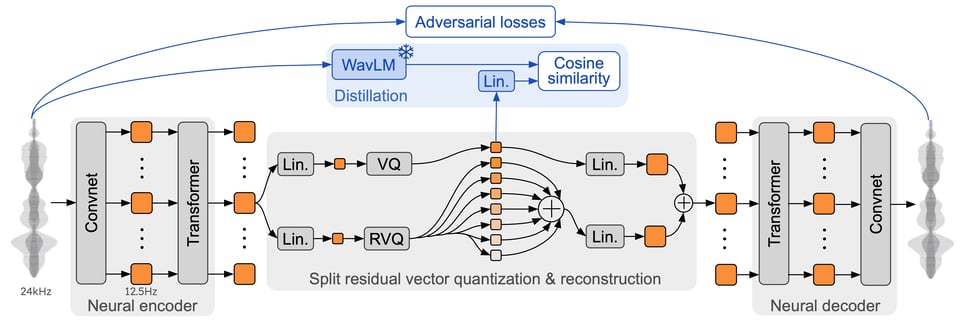

Finally, Kyutai Moshi, which teased its realtime voice model in July and had some entertaining/concerning mental breakdowns in the public demo, finally released their open weights model as promised, along with details of their unique streaming neural architecture that displays an "inner monologue".

Live demo remains at https://moshi.chat, or try locally at

$ pip install moshi_mlx

$ python -m moshi_mlx.local_web -q 4

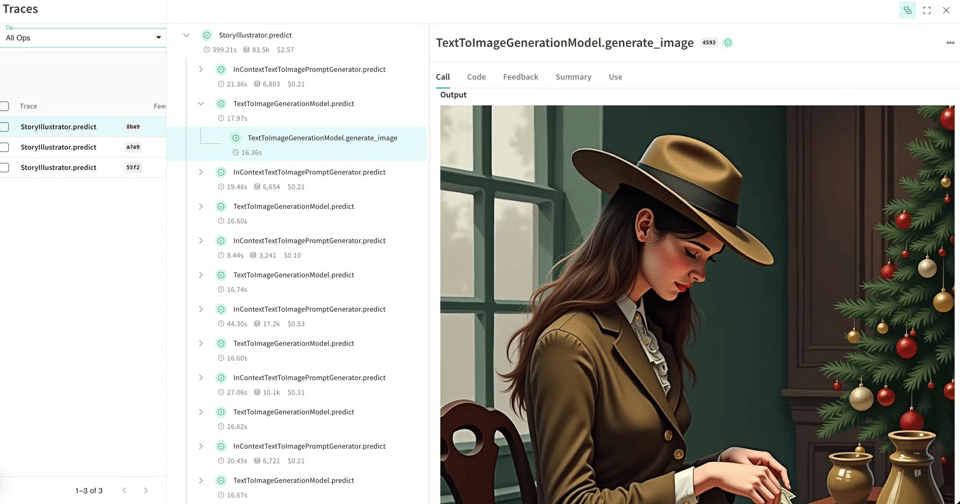

[This week's issues brought to you by Weights and Biases Weave!]: Look, we’ll be honest, many teams know Weights & Biases only as the best ML experiments tracking software in the world and aren’t even aware of our new LLM observability toolkit called Weave. So if you’re reading this, and you’re doing any LLM calls on production, why don’t you give Weave a try? With 3 lines of code you can log and trace all inputs, outputs and metadata between your users and LLMs, and with our evaluation framework, you can turn your prompting from an art into more of a science.

Check out the report on building a GenAI-assisted automatic story illustrator with Weave.

swyx's Commentary: I'll visiting the WandB LLM-as-judge hackathon this weekend in SF with many friends from the Latent Space/AI Engineer crew hacking with Weave!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Updates and Releases

- OpenAI's o1 models: @sama announced significant outperformance on goal 3, despite taking longer than expected. These models use chain-of-thought reasoning for enhanced complex problem-solving.

- Mistral AI's Pixtral: @GuillaumeLample announced the release of Pixtral 12B, a multimodal model available on le Chat and la Plateforme. It features a new 400M parameter vision encoder and a 12B parameter multimodal decoder based on Mistral Nemo.

- Llama 3.1: @AIatMeta shared an update on Llama's growth, noting rapidly increasing usage across major cloud partners and industries.

AI Development and Tools

- ZML: @ylecun highlighted ZML, a high-performance AI inference stack for parallelizing and running deep learning systems on various hardware, now out of stealth and open-source.

- LlamaCloud: @jerryjliu0 announced multimodal capabilities in LlamaCloud, enabling RAG over complex documents with spatial layouts, nested tables, and visual elements.

- Cursor: @svpino praised Cursor's code completion capabilities, noting its advanced features compared to other tools.

AI Research and Benchmarks

- Chain of Thought Empowerment: A paper shows how CoT enables transformers to solve inherently serial problems, expanding their problem-solving capabilities beyond parallel-only limitations.

- V-STaR: Research on training verifiers for self-taught reasoners, showing 4% to 17% improvement in code generation and math reasoning benchmarks.

- Masked Mixers: A study suggests that masked mixers with convolutions may outperform self-attention in certain language modeling tasks.

AI Education and Resources

- New LLM Book: @JayAlammar and @MaartenGr released a new book on Large Language Models, available on O'Reilly.

- DAIR.AI Academy: @omarsar0 announced the launch of DAIR.AI Academy, offering courses on prompt engineering and AI application development.

AI Applications and Demonstrations

- AI Product Commercials: @mickeyxfriedman introduced AI-generated product commercials on Flair AI, allowing users to create animated videos from product photos.

- Multimodal RAG: @llama_index launched multimodal capabilities for building end-to-end multimodal RAG pipelines across unstructured data.

- NotebookLM: @omarsar0 demonstrated NotebookLM's ability to generate realistic podcasts from AI papers, showcasing an interesting application of AI and LLMs.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. T-MAC: Energy-efficient CPU backend for llama.cpp

- T-MAC (an energy efficient cpu backend) may be coming to llama.cpp! (Score: 50, Comments: 5): T-MAC and BitBLAS, Microsoft-backed projects designed for efficient low-bit math, may be integrated into llama.cpp as the T-MAC maintainers plan to submit a pull request. T-MAC shows linear scaling of FLOPs and inference latency relative to bit count, supports bit-wise computation for int1/2/3/4 without dequantization, and accommodates various activation types using fast table lookup and add instructions. This integration could benefit projects like Ollama, potentially improving performance on laptops and mobile devices like the Pixel 6, which currently faces thermal throttling issues when running llama.cpp.

- Discussion arose about BitNet not being a true quantization method, as it's trained natively at 1 bit rather than being quantized from a higher resolution model. The original poster clarified that some layers still require quantization.

- Users expressed excitement about the potential of BitNet, with one commenter eagerly anticipating its full implementation and impact on the field.

- The concept of "THE ULTIMATE QUANTIZATION" was humorously referenced, with the original poster jokingly shouting about its supposed benefits like "LOSSLESS QUALITY" and "OPENAI IN SHAMBLES".

Theme 2. Qwen2.5-72B-Instruct: Performance and content filtering

- Qwen2.5-72B-Instruct on LMSys Chatbot Arena (Score: 31, Comments: 10): Qwen2.5-72B-Instruct has shown strong performance on the LMSys Chatbot Arena, as evidenced by a shared image. The Qwen2.5 series includes models ranging from 0.5B to 72B parameters, with specialized versions for coding and math tasks, and appears to have stricter content filtering compared to its predecessor, resulting in the model being unaware of certain concepts, including some non-pornographic but potentially sexually related topics.

- Qwen2.5-72B-Instruct faces strict content filtering, likely due to Chinese regulations for open LLMs. Users note it's unaware of certain concepts, including non-pornographic sexual content and sensitive political topics like Tiananmen Square.

- The model excels in coding and math tasks, performing on par with 405B and GPT-4. Some users found adding "never make any mistake" to prompts improved responses to tricky questions.

- Despite censorship concerns, some users appreciate the model's focus on technical knowledge. Attempts to bypass content restrictions were discussed, with one user sharing an image of a workaround.

Theme 3. Latest developments in Vision Language Models (VLMs)

- A Survey of Latest VLMs and VLM Benchmarks (Score: 30, Comments: 8): The post provides a comprehensive survey of recent Visual Language Models (VLMs) and their associated benchmarks. It highlights key models such as GPT-4V, DALL-E 3, Flamingo, PaLI, and Kosmos-2, discussing their architectures, training approaches, and performance on various tasks. The survey also covers important VLM benchmarks including MME, MM-Vet, and SEED-Bench, which evaluate models across a wide range of visual understanding and generation capabilities.

- Users inquired about locally runnable VLMs, with the author recommending Bunny and referring to the State of the Art section for justification.

- A discussion on creating a mobile-first application for nonprofit use emerged, suggesting YOLO for training and a UI overlay for real-time object detection, referencing a YouTube video for UI inspiration.

- A proposal for a new VLM benchmark focused on manga translation was suggested, emphasizing the need to evaluate models on their ability to discern text, understand context across multiple images, and disambiguate meaning in both visual and textual modalities.

Theme 4. Mistral Small v24.09: New 22B enterprise-grade model

- Why is chain of thought implemented in text? (Score: 67, Comments: 51): The post questions the efficiency of implementing chain of thought reasoning in text format for language models, particularly referencing o1, which was fine-tuned for long reasoning chains. The author suggests that maintaining the model's logic in higher dimensional vectors might be more efficient than projecting the reasoning into text tokens, challenging the current approach used even in models specifically designed for extended reasoning.

- Traceability and explainable AI are significant benefits of text-based chain of thought reasoning, as noted by users. The black box nature of latent space would make it harder for humans to understand the model's reasoning process.

- OpenAI's blog post revealed that the o1 model's chain of thought process is text-based, contrary to speculation about vectorized layers. Some users suggest that future models like o2 could implement implicit CoT to save tokens, referencing a paper on Math reasoning.

- Users discussed the challenges of training abstract latent space for reasoning, with some suggesting reinforcement learning as a potential approach. Others proposed ideas like gradual shifts in training data or using special tokens to control the display of reasoning steps during inference.

- mistralai/Mistral-Small-Instruct-2409 · NEW 22B FROM MISTRAL (Score: 160, Comments: 74): Mistral AI has released a new 22B parameter model called Mistral-Small-Instruct-2409, which is now available on Hugging Face. This model demonstrates improved capabilities over its predecessors, including enhanced instruction-following, multi-turn conversations, and task completion across various domains. The release marks a significant advancement in Mistral AI's model offerings, potentially competing with larger language models in performance and versatility.

- Mistral Small v24.09 is released under the MRL license, allowing non-commercial self-deployment. Users expressed mixed reactions, with some excited about its potential for finetuning and others disappointed by the licensing restrictions.

- The model demonstrates improved capabilities in human alignment, reasoning, and code generation. It supports function calling, has a 128k sequence length, and a vocabulary of 32768, positioning it as a potential replacement for GPT-3.5 in some use cases.

- Users discussed the model's place in the current landscape of language models, noting its 22B parameters fill a gap between smaller models and larger ones like Llama 3.1 70B. Some speculated about its performance compared to other models in the 20-35B parameter range.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Advancements and Research

- OpenAI's o1 model demonstrates impressive capabilities: OpenAI released a preview of their o1 model, which shows significant improvements over previous models. Sam Altman tweeted about "incredible outperformance on goal 3", suggesting major progress.

- Increasing inference compute yields major performance gains: OpenAI researcher Noam Brown suggests that increasing inference compute is much more cost-effective than training compute, potentially by orders of magnitude. This could allow for significant performance improvements by simply allocating more compute at inference time.

- Google DeepMind advances multimodal learning: A Google DeepMind paper demonstrates how data curation via joint example selection can further accelerate multimodal learning.

- Microsoft's MInference speeds up long-context inference: Microsoft's MInference technique enables inference of up to millions of tokens for long-context tasks while maintaining accuracy, dramatically speeding up supported models.

AI Applications and Demonstrations

- AI-assisted rapid app development: A developer built and published an iOS habit tracking app in just 6 hours using Claude and OpenAI's o1 model, without writing any code manually. This demonstrates the potential for AI to dramatically accelerate software development.

- Virtual try-on technology: Kling launched Kolors Virtual-Try-On, allowing users to change clothes on any photo for free with just a few clicks. This showcases advancements in AI-powered image manipulation.

- AI-generated art and design: Posts in r/StableDiffusion show impressive AI-generated artwork and designs, demonstrating the creative potential of AI models.

Industry and Infrastructure Developments

- Major investment in AI infrastructure: Microsoft and BlackRock are forming a group to raise $100 billion to invest in AI data centers and power infrastructure, indicating significant scaling of AI compute resources.

- Neuralink advances brain-computer interface technology: Neuralink received Breakthrough Device Designation from the FDA for Blindsight, aiming to restore sight to those who have lost it.

- NVIDIA's vision for autonomous machines: NVIDIA's Jim Fan predicts that in 10 years, every machine that moves will be autonomous, and there will be as many intelligent robots as iPhones. However, this timeline was presented as a hypothetical scenario.

Philosophical and Societal Implications

- Emergence of AI as a new "species": An ex-OpenAI researcher suggests we are at a point where there are two roughly intelligence-matched species, referring to humans and AI. This sparked discussion about the nature of AI intelligence and its rapid progress.

- Comparisons to historical technological transitions: A post draws parallels between current AI data centers and the vacuum tube era of computing, suggesting we may be on the cusp of another major technological leap.

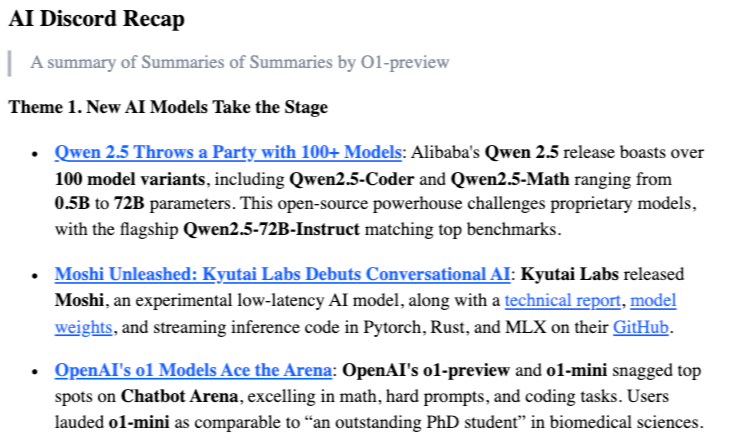

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. New AI Models Take the Stage

- Qwen 2.5 Throws a Party with 100+ Models: Alibaba's Qwen 2.5 release boasts over 100 model variants, including Qwen2.5-Coder and Qwen2.5-Math ranging from 0.5B to 72B parameters. This open-source powerhouse challenges proprietary models, with the flagship Qwen2.5-72B-Instruct matching top benchmarks.

- Moshi Unleashed: Kyutai Labs Debuts Conversational AI: Kyutai Labs released Moshi, an experimental low-latency AI model, along with a technical report, model weights, and streaming inference code in Pytorch, Rust, and MLX on their GitHub.

- OpenAI's o1 Models Ace the Arena: OpenAI's o1-preview and o1-mini snagged top spots on Chatbot Arena, excelling in math, hard prompts, and coding tasks. Users lauded o1-mini as comparable to “an outstanding PhD student” in biomedical sciences.

Theme 2. Turbocharging Model Fine-Tuning

- Unsloth Doubles Speeds and Slashes VRAM by 70%: Unsloth boosts fine-tuning speeds for models like Llama 3.1, Mistral, and Gemma by 2x, while reducing VRAM usage by 70%. Discussions highlighted storage impacts when pushing quantized models to the hub.

- Torchtune 0.3 Drops with FSDP2 and DoRA Support: The latest Torchtune release introduces full FSDP2 support, enhancing flexibility and speed in distributed training. It also adds easy activation of DoRA/QDoRA features by setting

use_dora=True.

- Curriculum Learning Gets Practical in PyTorch: Members shared steps to implement curriculum learning in PyTorch, involving custom dataset classes and staged difficulty. An example showed updating datasets in the training loop for progressive learning.

Theme 3. Navigating AI Model Hiccups

- OpenRouter Users Slammed by 429 Errors: Frustrated OpenRouter users report being hit with 429 errors and strict rate limits, with one user rate-limited for 35 hours. Debates ignited over fallback models and key management to mitigate access issues.

- Cache Confusion Causes Headaches: Developers grappled with cache management in models, discussing the necessity of fully deleting caches after each task. Suggestions included using context managers to prevent interference during evaluations.

- Overcooked Safety Features Mocked: The community humorously critiqued overly censored models like Phi-3.5, sharing satirical responses. They highlighted challenges that heavy censorship poses for coding and technical tasks.

Theme 4. AI Rocks the Creative World

- Riffusion Makes Waves with AI Music: Riffusion enables users to generate music from spectrographs, sparking discussions about integrating AI-generated lyrics. Members noted the lack of open-source alternatives to Suno AI for full-song generation.

- Erotic Roleplay Gets an AI Upgrade: Advanced techniques for erotic roleplay (ERP) with AI models were shared, focusing on crafting detailed character profiles and immersive prompts. Users emphasized building anticipation and realistic interactions.

- Artists Hunt for Image-to-Cartoon Models: Members are on the lookout for AI models that can convert images into high-quality cartoons, exchanging recommendations. The quest continues for a model that delivers top-notch cartoon conversions.

Theme 5. AI Integration Boosts Productivity

- Integrating Perplexity Pro with VSCode Hits Snags: Users attempting to use Perplexity Pro with VSCode extensions like 'Continue' faced challenges, especially distinguishing between Pro Search and pure writing modes. Limited coding skills added to the integration woes.

- Custom GPTs Become Personal Snippet Libraries: Members are using Custom GPTs to memorize personal code snippets and templates like Mac Snippets. Caution is advised against overloading instructions to maintain performance.

- LM Studio's New Features Excite Users: The addition of document handling in LM Studio has users buzzing. Discussions revolved around data table size limitations and the potential for analyzing databases through the software.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Unsloth speeds up model fine-tuning: Unsloth enhances fine-tuning for models like LlaMA 3.1, Mistral, and Gemma by 2x and reduces VRAM usage by 70%.

- Discussions highlighted that quantized models require different storage compared to full models, impacting memory availability.

- Qwen 2.5 hits the stage: The recently launched Qwen 2.5 model shows improved instruction-following abilities, particularly for coding and mathematics.

- Users noted its added capacity to handle nuances better than Llama 3.1, although issues arise with saving and reloading merged models.

- Gemma 2 fine-tuning struggles: Members reported challenges with fine-tuning Gemma 2, particularly errors encountered when saving and loading merged models.

- Suggestions pointed to potential issues with chat templates used in inference or general persistence problems within the model.

- Neural network code generation success: A community member expressed thanks for help in training a neural network to generate Python code, marking it as a promising start.

- Responses were encouraging, with the community applauding the achievement with Incredible and congrats!.

- vLLM serving brings latency concerns: A participant dealing with vLLM for serving mentioned latency issues while fine-tuning their model.

- They sought advice on using Quantization Aware LoRa training as well as concerns about merging models effectively.

Stability.ai (Stable Diffusion) Discord

- LoRa Models Training Essentials: A member inquired about effective images for training LoRa models, recommending diverse floor plans, doors, and windows to enhance the dataset.

- Emphasis was placed on tagging and community experience sharing to assist newcomers on their training journey.

- Resolution Rumble: SD1.5 vs. SD512: SD1.5 outperforms with 1024x1024 images over 512x512, especially when considering GPU limitations during generation.

- Adoption of turbo models was suggested for faster image generation without sacrificing efficiency.

- Multidiffusion Magic for Memory Saving: The multidiffusion extension was hailed as a memory-saving tool for low VRAM users, processing images in smaller tiled sections.

- Guides and resources were shared to help users integrate this extension effectively into their workflows.

- Riffusion Rocks AI Music Creation: The platform Riffusion facilitates music generation from spectrographs and may incorporate AI lyrics in future iterations.

- Discussion highlighted a scarcity of alternatives to Suno AI in the open-source realm for full-song generation.

- Remote Processing: A Double-Edged Sword: Concerns emerged around tools like iopaint using remote processing which limits user control and model flexibility.

- The community advocated for self-hosting models for enhanced customization and privacy.

OpenRouter (Alex Atallah) Discord

- Mistral API prices hit new lows: Members highlighted significant price reductions on the Mistral API, with competitive pricing like $2/$6 on Large 2 for large models.

- This pricing adjustment sets a favorable comparison against other providers, enhancing model accessibility for users.

- OpenRouter faces access barriers: Multiple users are encountering issues with OpenRouter, particularly receiving 429 errors and Data error output messages.

- To mitigate these problems, users were encouraged to create dedicated threads for reporting errors to streamline troubleshooting.

- Rate limits disrupt user workloads: Frustration arose over users hitting strict rate limits that hinder accessing models, causing significant productivity setbacks.

- One user noted they were rate-limited for 35 hours, prompting discussions about potential solutions like BYOK (Bring Your Own Key).

- Fallback models need a better strategy: There was a discussion regarding the order of operations using fallback models versus fallback keys during rate limit errors.

- Concerns about not using fallback models effectively were raised, especially when faced with Gemini Flash 429 errors.

- User queries on free LLM access: A user questioned how to provide free LLM access to 5000 individuals with an effective budget of $10-$15 per month.

- Discussions ensued about token usage, estimating around 9k tokens per user per day, which pushed for sophisticated optimization strategies.

aider (Paul Gauthier) Discord

- Aider behaves unexpectedly: Users found that Aider exhibited erratic behavior, sometimes following its own agenda after simple requests, requiring a restart to resolve. The issue seems linked to context retention during sessions.

- The community suggests investigating state management to prevent this kind of unexpected behavior in future updates.

- Feedback on OpenAI models disappoints: Users criticized the performance of the O1 models, particularly for refusing to obey formatting commands, which disrupts workflow efficiency. Many users turned to 3.5 Sonnet, citing improved control over prompts.

- This raised discussions about the importance of flexible parameter settings to enhance user interactions with AI models.

- Exploring DeepSeek limitations: Challenges surfaced around the DeepSeek model regarding its editing and refactoring capabilities, with suggestions to refine input formats for better outputs. Tuning efforts were proposed, seeking effective source/prompt examples for testing.

- The exchange indicates a collective need for clearer guidelines on optimizing model performance through effective prompt design.

- Claude 3.5 System Prompt Details Released: An extracted system prompt for Claude 3.5 Sonnet has been shared, aimed at enhancing performance in handling artifacts. This uncovering has sparked interest in how it might be applied practically.

- The community awaits insights on the impact of this prompt on practical applications and code generation tasks.

- FlutterFlow 5.0 Launch Enhancements: A YouTube video introduced FlutterFlow 5.0, which promises to revolutionize app development with new features aimed at streamlining component creation. The update claims significant performance improvements.

- Feedback suggests users are already eager to implement these features for better efficiency in coding workflows.

Perplexity AI Discord

- Integrating Perplexity Pro with VSCode: Users discussed how to utilize the Perplexity Pro model alongside VSCode extensions like 'Continue' for effective autocomplete functionality, despite integration challenges due to limited coding skills.

- The distinction between Pro Search and pure writing mode was highlighted, complicating usage strategies for some.

- O1 Model Utilization in Pro Search: The O1-mini is now accessible through the Reasoning focus in Pro Search, though its integration varies by model selection.

- Some users advocate for using O1 in role play scenarios due to character retention capabilities but demand higher usage limits.

- Debate on Perplexity vs ChatGPT: An ongoing debate compares the Perplexity API models with those in ChatGPT, particularly regarding educational utility and subscription benefits.

- One user pointed out the advantages of ChatGPT Plus for students while acknowledging the merits of a Perplexity Pro subscription.

- Slack Unveils AI Agents: Slack reported the introduction of AI agents, aimed at improving workflow and communication efficiency within the platform.

- This feature is expected to enhance the overall productivity of teams using the platform.

- New Affordable Electric SUV by Lucid: Lucid launched a new, more affordable electric SUV, broadening its market reach and appealing to environmentally conscious consumers.

- This affordable model targets a wider audience interested in sustainable transportation.

HuggingFace Discord

- Hugging Face Unveils New API Documentation: The newly launched API docs feature improved clarity on rate limits, a dedicated PRO section, and enhanced code examples.

- User feedback has been directly applied to improve usability, making deploying AI smoother for developers.

- TRL v0.10 Enables Vision-Language Fine-Tuning: TRL v0.10 simplifies fine-tuning for vision-language models down to two lines of code, coinciding with Milstral's launch of Pixtral.

- This release emphasizes the increasing connectivity of multimodal AI capabilities.

- Nvidia Launches Compact Mini-4B Model: Check out Nvidia's new Mini-4B model here, which shows remarkable performance but requires compatible Nvidia drivers.

- Users are encouraged to register it as a Hugging Face agent to leverage its full functionality.

- Open-source Biometric Template Protection: A member shared their Biometric Template Protection (BTP) implementation for authentication without server data access, available on GitHub.

- This educational code aims to introduce newcomers to the complexities of secure biometric systems while remaining user-friendly.

- Community Seeking Image-to-Cartoon Model: Members are on the lookout for a space model that can convert images into high-quality cartoons, with calls for recommendations.

- Community engagement is key, as they encourage shared insights on models that fulfill this requirement.

Nous Research AI Discord

- NousCon Ignites Excitement: Participants expressed enthusiasm for NousCon, discussing attendance and future events, with plans for an afterparty at a nearby bar to foster community interaction.

- Many requested future events in different locations, emphasizing the desire for more networking opportunities.

- Hermes Tool Calling Standard Adopted: The community has adopted a tool calling format for Qwen 2.5, influenced by contributions like vLLM support and other tools being discussed for future implementations.

- Discussions on parsing tool distinctions between Hermes and Qwen are ongoing, sparking innovative ideas for integration.

- Qwen 2.5 Launches with New Models: Qwen 2.5 has been announced, featuring new coding and mathematics models, marking a pivotal moment for open-source AI advancements.

- This large-scale release showcases the continuous evolution of language models in the AI community, with a detailed blog post outlining its capabilities.

- Gemma 2 Improves Gameplay: Members shared experiences about fine-tuning models like Gemma 2 to enhance chess gameplay, although performance highlighted several challenges.

- This reflects the creative development processes and collaborative spirit within the community, driving innovation backward from gameplay expectations.

- Hermes 3 API Access Confirmed: Access to the Hermes 3 API has been confirmed in collaboration with Lambda, allowing users to utilize the new Chat Completions API.

- Further discussions included potential setups for maximized model capabilities, with particular interest in running at

bf16precision.

- Further discussions included potential setups for maximized model capabilities, with particular interest in running at

CUDA MODE Discord

- Triton Conference Shoutout: During the Triton conference keynote, Mark Saroufim praised the community for their contributions, which excited attendees.

- The recognition sparked discussions on community engagement and future contributions.

- Triton CPU / ARM Becomes Open Source: Inquiries about the Triton CPU / ARM led to confirmation that it is now open-source, available on GitHub.

- This initiative aims to foster community collaboration and improve the experimental CPU backend.

- Training Llama-2 Model Performance Report: Performance metrics for the Llama2-7B-chat model revealed significant comparisons against FP16 configurations across various tasks.

- Participants underscored the need for optimizing quantization methods to enhance inference quality.

- Quantization Techniques for Efficiency: Discussions centered on effective quantization methods such as 4-bit quantization for Large Language Models, crucial for BitNet's architecture.

- Members demonstrated interest in models that apply quantization without grouping to ease inference costs.

- Upcoming Pixtral Model Release: Excitement surrounds the anticipated release of the Pixtral model on the Transformers library, with discussions on implementation strategies.

- Members noted the expected smooth integration with existing frameworks upon the release.

Eleuther Discord

- Open-Source TTS Migration Underway: A discussion arose on transitioning from OpenAI TTS to open-source alternatives, particularly highlighting Fish Speech V1.4 that supports multiple languages.

- Members debated the viability of using xttsv2 to enhance performance across different languages.

- Compression Techniques for MLRA Keys: Members explored the concept of utilizing an additional compression matrix for MLRA keys and values, aiming to enhance data efficiency post-projection.

- Concerns were raised about insufficient details in the MLRA experimental setup, particularly regarding rank matrices.

- Excitement Around Playground v3 Launch: Playground v3 (PGv3) was released, showcasing state-of-the-art performance in text-to-image generation and a new benchmark for image captioning.

- The new model integrates LLMs, diverging from earlier models reliant on pre-trained encoders to prove more efficient.

- Diagram of Thought Framework Introduced: The Diagram of Thought (DoT) framework was presented, modeling iterative reasoning in LLMs through a directed acyclic graph (DAG) structure, aiming to enhance logical consistency.

- This new method proposes a significant improvement over linear reasoning approaches discussed in previous research.

- Investigating Model Debugging Tactics: A member suggested initiating model debugging from a working baseline and progressively identifying issues across various configurations, such as FSDP.

- The back-and-forth underlined the need for sharing debugging experiences while optimizing model performance.

OpenAI Discord

- Custom GPTs Memorize Snippets Effectively: Members discussed using Custom GPTs to import and memorize personal snippets, such as Mac Snippets, although challenges arose from excessive information dumping.

- A suggestion emerged that clearer instructions and knowledge base uploads can enhance performance.

- Leaked Advanced Voice Mode Launch: An upcoming Advanced Voice Mode for Plus users is expected on September 24, focusing on improved clarity and response times while filtering noise.

- The community expressed curiosity on its potential impact on daily voice command usability.

- Debate on AI Content Saturation: A heated discussion centered on whether AI-generated content elevates or dilutes quality, with voices suggesting it reflects pre-existing low-quality content.

- Concerns were raised about disassociation from reality as AI capabilities grow.

- GPT Store Hosts Innovative Creations: One member touted their various GPTs in the GPT Store, which automate tasks derived from different sources, enhancing workflow.

- Specific prompting techniques inspired by literature, including DALL·E, were part of their offerings.

- Clarification on Self-Promotion in Channels: Members reviewed the self-promotion rules, confirming exceptions in API and Custom GPTs channels for shared creations.

- Encouragement to link their GPTs was voiced, highlighting community support for sharing while following server guidelines.

Cohere Discord

- Cohere Job Application Sparks Community Excitement: A member shared their enthusiasm after applying for a position at Cohere, connecting with the community for support.

- The community welcomed the initiative with excitement, showcasing friendly vibes for newcomers.

- CoT-Reflections Outshines Traditional Approaches: Discussion focused on how CoT-reflections improves response quality compared to standard chain of thought prompting.

- Members highlighted that integrating BoN with CoT-reflections could enhance output quality significantly.

- Speculations on O1's Reward Model Mechanism: Members speculated that O1 operates using a reward model that iteratively calls itself for optimal results.

- There are indications that O1 underwent a multi-phase training process to elevate its output quality.

- Billing Information Setup Confusion Resolved: A member sought clarification on adding VAT details after setting up payment methods via a Stripe link.

- The suggestion to email support@cohere.com for secure handling of billing changes was confirmed to be a viable solution.

LM Studio Discord

- Markov Models Get Props: Members noted that Markov models are less parameter-intensive probabilistic models of language, leading to discussions on their potential applications.

- Interest grew around how these models could simplify certain processes in language processing for engineers.

- Training Times Spark Debate: Training on 40M tokens would take about 5 days with a 4090 GPU, but reducing it to 40k tokens cuts that down to just 1.3 hours.

- Concerns remained regarding why 100k models still seemed excessive in training time.

- Data Loader Bottlenecks Cause Frustration: Members discussed data loader bottlenecks during model training, with reports of delays causing frustration.

- There was a call to explore optimization techniques for the data pipeline to enhance overall training efficiency.

- LM Studio's Exciting New Features: With new document handling features, excitement bubbled as a member returned to LM Studio after integrating prior feedback.

- Discussions revolved around understanding size limitations for data tables and analyzing databases through the software.

- AI Model Recommendations Swirl: In recommendations for coding, the Llama 3.1 405B model surfaced for Prolog assistance, prompting various opinions.

- Insights on small model alternatives like qwen 2.5 0.5b highlighted its coherence, though it lacked lowercase support.

Latent Space Discord

- Langchain Updates for Partner Packages: An inquiry arose regarding the process to update an old Langchain community integration into a partner package, with suggestions to reach out via joint communication channels.

- Lance Martin was mentioned as a go-to contact for further assistance in this transition.

- Mistral Launches Free Tier for Developers: Mistral introduced a free tier on their serverless platform, enabling developers to experiment at no cost while enhancing the Mistral Small model.

- This update also included revised pricing and introduced free vision capabilities on their chat interface, making it more accessible.

- Qwen 2.5: A Game Changer in Foundation Models: Alibaba rolled out the Qwen 2.5 foundation models, introducing over 100 variants aimed at improving coding, math reasoning, and language processing.

- This release is noted for its competitive performance and targeted enhancements, promising significant advancements over earlier versions.

- Moshi Kyutai Model Rocks the Scene: Kyutai Labs unveiled the Moshi model, complete with a technical report, weights, and streaming inference code on various platforms.

- They provided links to the paper, GitHub, and Hugging Face for anyone eager to dig deeper into the model's capabilities.

- Mercor Attracts Major Investment: Mercor raised $30M in Series A funding at a $250M valuation, targeting enhanced global labor matching with sophisticated models.

- The investment round featured prominent figures like Peter Thiel and Jack Dorsey, underlining its importance in AI-driven labor solutions.

Torchtune Discord

- Torchtune 0.3 packed with features: Torchtune 0.3 introduces significant enhancements, including full support for FSDP2 to boost flexibility and speed.

- The upgrades focus on accelerating training times and improving model management across various tasks.

- FSDP2 enhances distributed training: All distributed recipes now leverage FSDP2, allowing for better compile support and improved handling of LoRA parameters.

- Users are encouraged to experiment with the new configuration in their distributed recipes for enhanced performance.

- Training-time speed improvements: Implementing torch.compile has resulted in under a minute compile times when set

compile=True, leading to faster training.- Using the latest PyTorch nightlies amplifies performance further, offering significant reductions during model compilation.

- DoRA/QDoRA support enabled: The latest release enables users to activate DoRA/QDoRA effortlessly by setting

use_dora=Truein configuration.- This addition is vital for enhancing training capabilities related to LoRA and QLoRA recipes.

- Cache management discussions arise: A conversation sparked around the necessity of completely deleting cache after each task, with suggestions for improvements to the eval harness.

- One contributor proposed ensuring models maintain both inference and forward modes without needing cache teardown.

Interconnects (Nathan Lambert) Discord

- Qwen2.5 Launch Claims Big Milestones: The latest addition to the Qwen family, Qwen2.5, has been touted as one of the largest open-source releases, featuring models like Qwen2.5-Coder and Qwen2.5-Math with various sizes from 0.5B to 72B.

- Highlights include the flagship Qwen2.5-72B-Instruct matching proprietary models, showcasing competitive performance in benchmarks.

- OpenAI o1 Models Compared to PhD Level Work: Testing OpenAI's o1-mini model showed it to be comparable to an outstanding PhD student in biomedical sciences, marking it among the top candidates they've trained.

- This statement underscores the model's proficiency and the potential for its application in advanced academic projects.

- Math Reasoning Gains Attention: There's a growing emphasis on advancing math reasoning capabilities within AI, with excitement around the Qwen2.5-Math model, which supports both English and Chinese.

- Engagement from users suggests a collective focus on enhancing math-related AI applications as they strive to push boundaries in this domain.

- Challenges of Knowledge Cutoff in AI Models: Several users expressed frustration over the knowledge cutoff of models, notably stating it is set to October 2023, affecting their relevance to newer programming libraries.

- Discussions indicate that real-time information is critical for practical applications, presenting a challenge for models like OpenAI's o1.

- Transformers Revolutionize AI: The Transformer architecture has fundamentally altered AI approaches since 2017, powering models like OpenAI's GPT, Meta's Llama, and Google's Gemini.

- Transformers extend their utility beyond text into audio generation, image recognition, and protein structure prediction.

OpenInterpreter Discord

- 01 App is fully operational: Members confirmed that the 01 app works well on their phones, especially when using the -qr option for best results.

- One member tested the non-local version extensively and reported smooth functionality.

- Automating Browser Tasks Request: A member seeks guides and tips for automating browser form submissions, particularly for government portals.

- Despite following ChatGPT 4o suggestions, they're facing inefficiencies, especially with repetitive outcomes.

- CV Agents Available for Testing: A member shared their CV Agents project aimed at enhancing job hunting with intelligent resumes on GitHub: GitHub - 0xrushi/cv-agents.

- The project invites community contributions and features an attractive description.

- Moshi Artifacts Unleashed: Kyutai Labs released Moshi artifacts, including a technical report, model weights, and streaming inference code in Pytorch, Rust, and MLX, available in their paper and GitHub repository.

- The community is eager for more updates as the project gains traction.

- Feedback on Audio Sync: A user pointed out that an update to the thumbnail of the Moshi video could boost visibility and engagement.

- They noted slight audio sync issues in the video, signaling a need for technical adjustments.

LlamaIndex Discord

- Benito's RAG Deployment Breakthrough: Benito Martin shared a guide on building and deploying RAG services end-to-end using AWS CDK, offering a valuable resource for translating prototypes into production.

- If you're looking to enhance your deployment skills, this guide is a quick start!

- KeyError Hits Weaviate Users Hard: Yasuyuki raised a KeyError while reading an existing Weaviate database, referencing GitHub Issue #13787. A community member suggested forking the repo and creating a pull request to allow users to specify field names.

- This is a common pitfall when querying vector databases not created with llama-index.

- Yasuyuki's First Open Source Contribution: Yasuyuki expressed interest in contributing to the project by correcting the key from 'id' to 'uuid' and preparing a pull request.

- This first contribution has encouraged him to familiarize himself with GitHub workflows for future engagement.

- Seeking Feedback on RAG Techniques: .sysfor sought feedback on RAG (Retrieval-Augmented Generation) strategies to link vendor questions with indexed QA pairs.

- Suggestions included indexing QA pairs and generating variations on questions to enhance retrieval efficiency.

LangChain AI Discord

- Model Providers Cause Latency in LLMs: Latency in LLM responses is primarily linked to the model provider, rather than implementation errors, according to member discussions.

- This suggests focusing on optimizing the model provider settings to enhance response speeds.

- Python and LangChain's Minimal Impact on Latency: It's claimed that only 5-10% of LLM latency can be attributed to Python or LangChain, implying a more significant focus should be on model configurations.

- Optimizing model settings could vastly improve overall performance and reduce wait times.

- Best Practices for State Management in React: Users discussed optimal state management practices when integrating Langserve with a React frontend.

- The conversation hints at the importance of effective state handling, especially with a Python backend involved.

- PDF-Extract-Kit for High-Quality PDF Extraction: The PDF-Extract-Kit was presented as a comprehensive toolkit for effective PDF content extraction.

- Interest was sparked as members considered its practical applications in common PDF extraction challenges.

- RAG Application Developed with AWS Stack: A member showcased a new RAG application utilizing LangChain and AWS Bedrock for LLM integration and deployment.

- This app leverages AWS OpenSearch as the vector database, highlighting its robust cloud capabilities for handling data.

Modular (Mojo 🔥) Discord

- BeToast Discord Compromise Alert: Concerns arose about the BeToast Discord server potentially being compromised, fueled by a report from a LinkedIn conversation about hacking incidents.

- Members emphasized the necessity for vigilance and readiness to act if any compromised accounts start spamming.

- Windows Native Support Timeline Uncertain: Discussion on the Windows native support revealed a GitHub issue outlining feature requests, with an uncertain timeline for implementation.

- Many developers prefer alternatives to Windows for AI projects due to costs, using WSL as a common workaround.

- Converting SIMD to Int Explained: A user queried how to convert SIMD[DType.int32, 1] to Int, to which a member succinctly replied:

int(x).- This underscored the importance of understanding SIMD data types for efficient conversions.

- Clarifying SIMD Data Types: The conversation emphasized the need to understand SIMD data types for smooth conversions, encouraging familiarization with DType options.

- Members noted that this knowledge could streamline future queries around data handling.

LAION Discord

- Exploring State of the Art Text to Speech: A member inquired about the state of the art (sota) for text to speech, specifically seeking open source solutions. “Ideally open source, but curious what all is out there” reflects a desire to compare various options.

- Participants praised Eleven Labs as the best closed source text to speech option, while alternatives like styletts2, tortoise, and xtts2 were suggested for open source enthusiasts.

- Introducing OmniGen for Unified Image Generation: The paper titled OmniGen presents a new diffusion model that integrates diverse control conditions without needing additional modules found in models like Stable Diffusion. OmniGen supports multiple tasks including text-to-image generation, image editing, and classical CV tasks through its simplified architecture.

- OmniGen leverages SDXL VAE and Phi-3, enhancing its capability in generating images and processing control conditions, making it user-friendly for various applications.

- Nvidia's Official Open-Source LLMs: A member highlighted the availability of official Nvidia open-source LLMs, potentially relevant for ongoing AI research and development. This initiative might provide valuable resources for developers and researchers working in the field.

- The move supports a transition towards more collaborative and accessible AI resources, aligning with current trends in open-source software.

DSPy Discord

- Ruff Check Error Alert: A user reported a TOML parse error while performing

ruff check . --fix-only, highlighting an unknown fieldindent-widthat line 216.- This error indicates a need to revise the configuration file to align with the expected fields.

- Podcast with AI Researchers: A YouTube podcast featuring Sayash Kapoor and Benedikt Stroebl explores optimizing task performance and minimizing inference costs, viewable here.

- The discussion stirred interest, emphasizing the importance of considering costs in AI systems.

- LanceDB Integration Debut: The new LanceDB integration for DSpy enhances performance for large datasets, explained in this pull request.

- The contributor expressed willingness to collaborate on related personal projects and open-source initiatives.

- Concerns around API Key Handling: Users are questioning whether API keys need to be sent directly to a VM/server before reaching OpenAI, highlighting trust issues with unofficial servers.

- Clarity on secure processes is crucial to avoid compromising personal data.

- Creating a Reusable RAG Pipeline: A community member sought guidance on creating a reusable pipeline with RAG that can accommodate multiple companies without overloading prompts.

- Concerns about effectively incorporating diverse data were raised, aiming to streamline the process.

OpenAccess AI Collective (axolotl) Discord

- Implementing Curriculum Learning in PyTorch: To implement curriculum learning in PyTorch, define criteria, segment your dataset into stages of increasing difficulty, and create a custom dataset class to manage this logic.

- An example showed how to update the dataset in the training loop using this staged approach.

- Controlling Dataset Shuffling: A user raised a question about specifying the lack of random shuffling in a dataset, seeking guidance on this aspect.

- It was suggested that this query could be tackled in a separate thread for clarity.

tinygrad (George Hotz) Discord

- Tinybox Setup Instructions Needed: A request for help on setting up two tinyboxes was made, with a link to the Tinybox documentation provided for setup guidance.

- This highlights a demand for streamlined setup instructions as more users explore Tinygrad functionalities.

- Tinyboxes Boost Tinygrad CI: It was noted that tinyboxes play a crucial role in tinygrad's CI, showcasing their capabilities through running on MLPerf Training 4.0.

- This demonstrates their status as the best-tested platform for tinygrad integrations.

- Tinybox Purchase Options Explained: For those looking to buy, it was mentioned to visit tinygrad.org for tinybox purchases, reassuring others that it's fine not to get one.

- This caters to diverse user interests, whether in acquisition or exploration.

- Tinybox Features Uncovered: A brief overview highlighted the tinybox as a universal system for AI workloads, dealing with both training and inference tasks.

- Specific hardware specs included the red box with six 7900XTX GPUs and the green box featuring six 4090 GPUs.

LLM Finetuning (Hamel + Dan) Discord

- rateLLMiter Now Available for Pip Install: The rateLLMiter module is now available as a pip installable package, enhancing request management for LLM clients. Check out the implementation details on GitHub with information regarding its MIT license.

- This implementation allows LLM clients to better manage their API calls, making it easier to integrate into existing workflows.

- Rate Limiter Graph Shows Request Management: A graph illustrates how rateLLMiter smooths out the flow of requests, with orange representing requests for tickets and green showing issued tickets. This effectively distributed a spike of 100 requests over time to avoid server rate limit exceptions.

- Participants highlighted the importance of managing API rates effectively to ensure seamless interactions with backend services during peak loads.

Gorilla LLM (Berkeley Function Calling) Discord

- Member Realizes Prompt Misuse: A member acknowledged their misapplication of a prompt, which caused unexpected output confusion in Gorilla LLM discussions.

- This highlights the necessity of validating prompt usage to ensure accurate results.

- Prompt Template Now Available: The same member mentioned that a prompt template is now readily accessible to assist with crafting future prompts efficiently.

- Leveraging the template could help mitigate similar prompt-related errors down the line.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!