[AINews] o1 API, 4o/4o-mini in Realtime API + WebRTC, DPO Finetuning

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Better APIs are all you need for AGI.

AI News for 12/16/2024-12/17/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (210 channels, and 4050 messages) for you. Estimated reading time saved (at 200wpm): 447 minutes. You can now tag @smol_ai for AINews discussions!

It was a mini dev day for OpenAI, with a ton of small updates and one highly anticipated API launch. Let's go in turn:

o1 API

Minor notes:

o1-2024-12-17is a NEWER o1 than the o1 they shipped to ChatGPT 2 weeks ago (our coverage here), that takes 60% fewer reasoning tokens on average- vision/image inputs (we saw this with the full o1 launch, but now it's in API)

- o1 API also has function calling and structured outputs - with some, but very small impact on benchmarks

- a new

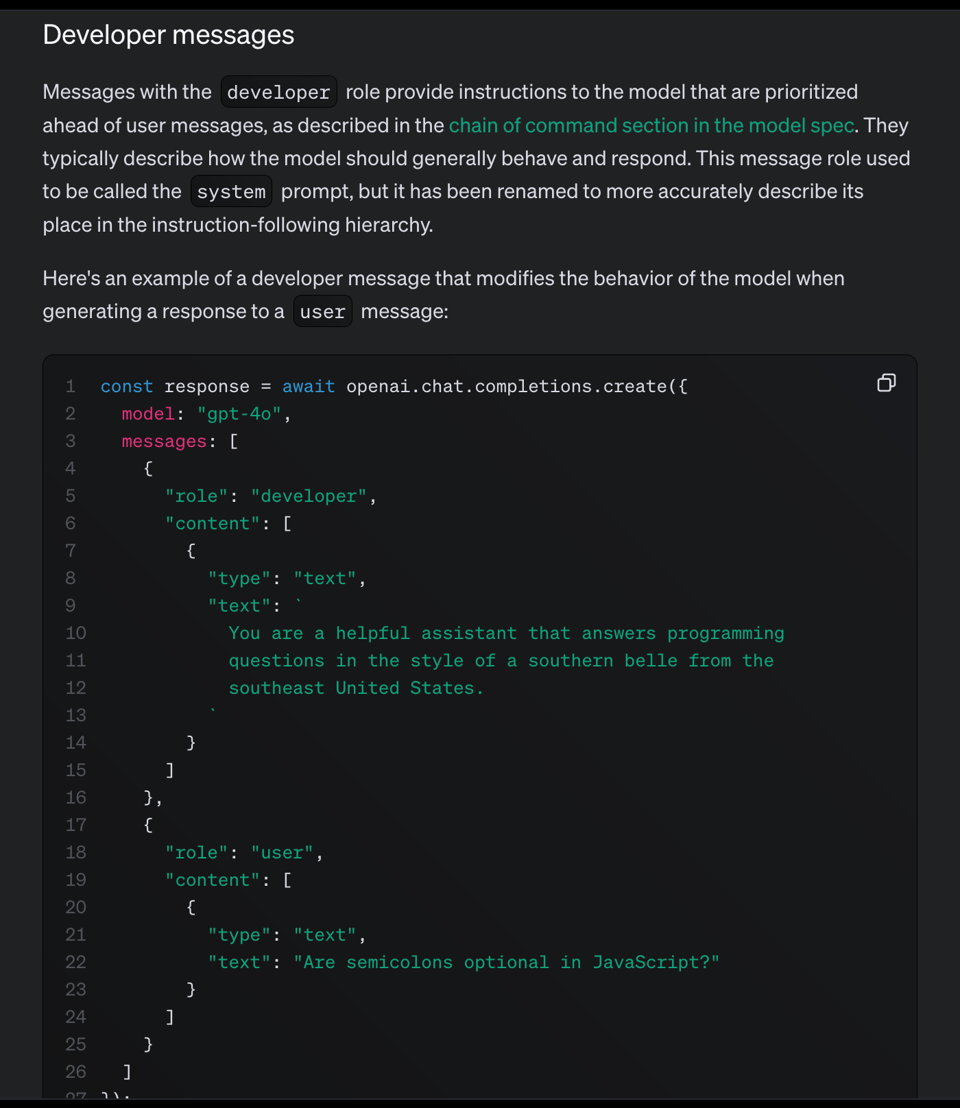

reasoning_effortparameter (justlow/medium/highstrings right now) - the "system message" has been renamed to "developer messages" for reasons... (we're kidding, this just updates the main chatCompletion behavior to how it also works in the realtime API)

o1 pro is CONFIRMED to "be a different implementation and not just o1 with high reasoning_effort setting." and will be available in API in "some time".

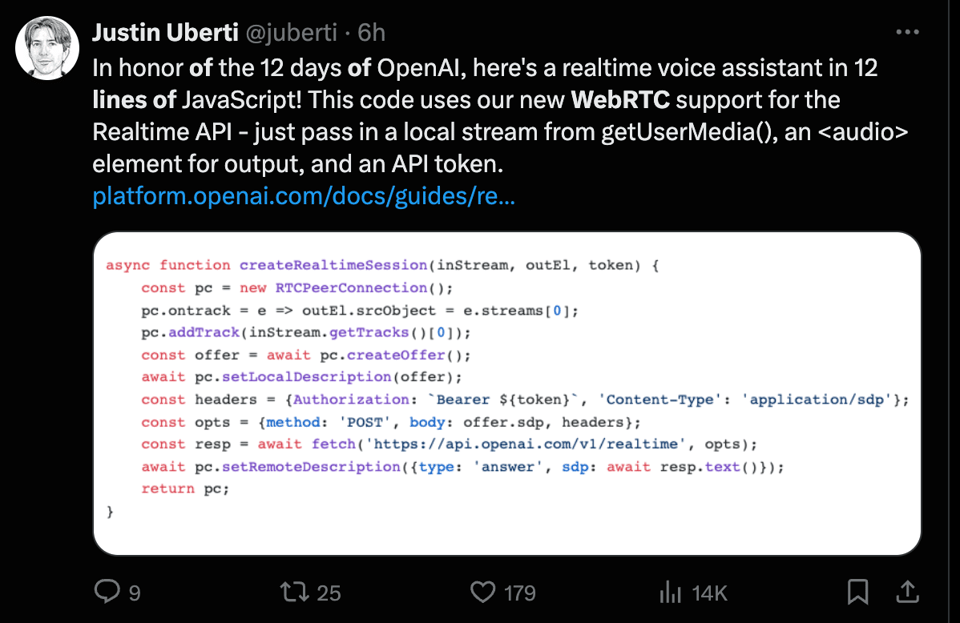

WebRTC and Realtime API improvements

It's a lot easier to work with the RealTime API with WebRTC now that it fits in a tweet (try it out on SimwonW's demo with your own keys)):

New 4o and 4o-mini models, still in preview:

Justin Uberti, creator of WebRTC who recently joined OpenAI, also highlighted a few other details

- improved pricing (10x cheaper when using mini)

- longer duration (session limit is now 30 minutes)

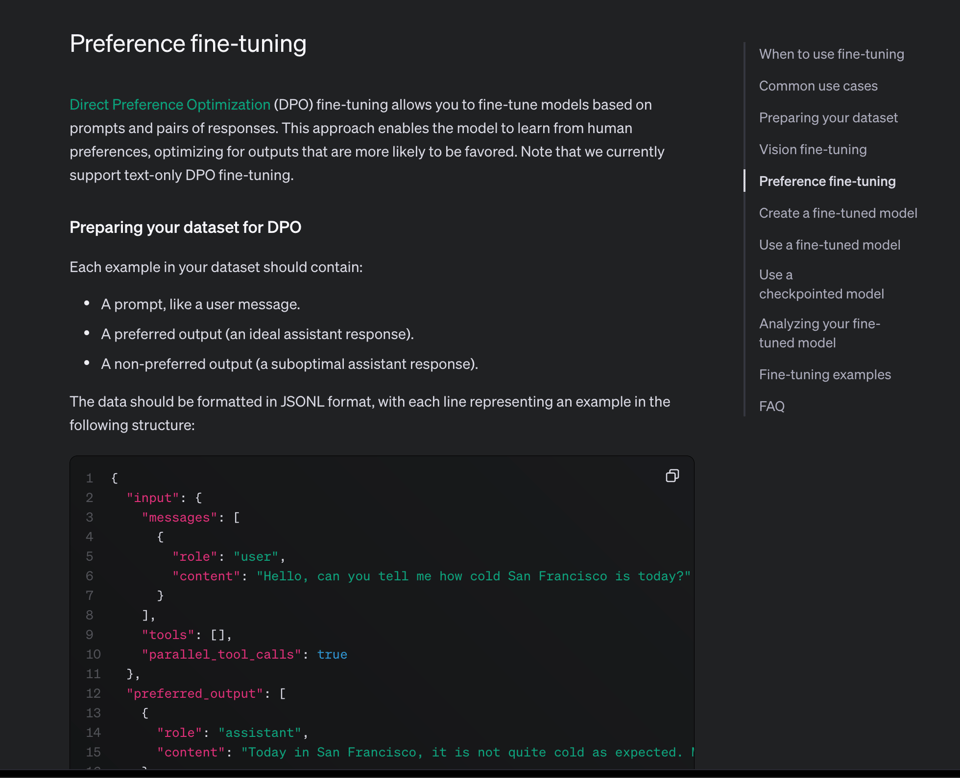

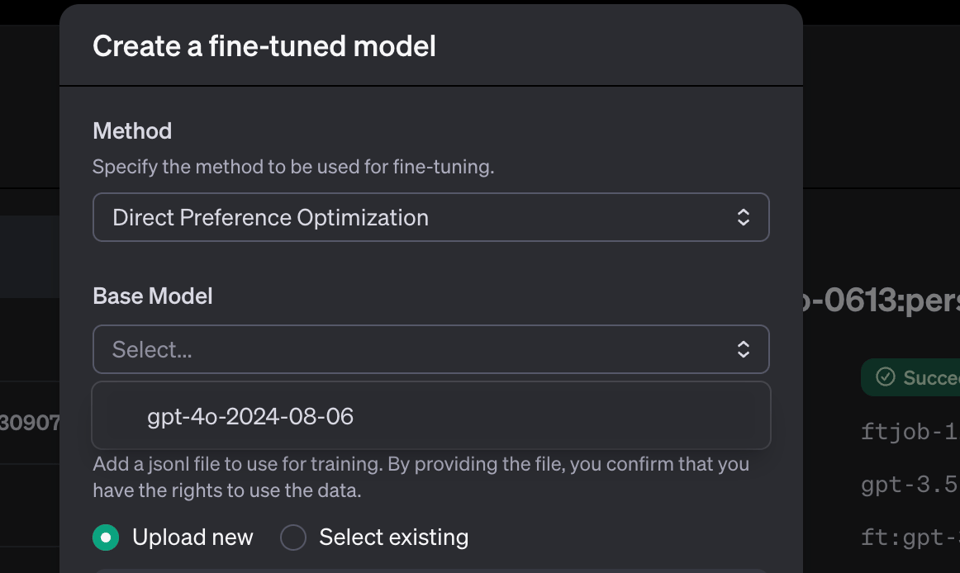

DPO Preference Tuning

It's Hot or Not, but for finetuning. We aim to try this out for AINews ASAP... although it seems to only be available for 4o.

Misc

Selected OpenAI DevDay videos were also released.

Official Go and Java SDKs for those who care.

The team also did an AMA (summary here, nothing too surprising).

The full demo is worth a watch:

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Here's a categorized summary of the key discussions and announcements:

Model Releases and Performance

- OpenAI o1 API Launch: @OpenAIDevs announced o1's availability in the API with function calling, structured outputs, vision capabilities, and developer messages. The model reportedly uses 60% fewer reasoning tokens than o1-preview.

- Google Gemini Updates: Significant improvements noted with Gemini 2.0 Flash achieving 83.6% accuracy on the new DeepMind FACTS benchmark, outperforming other models.

- Falcon 3 Release: @scaling01 shared that Falcon released new models (1B, 3B, 7B, 10B & 7B Mamba), trained on 14 Trillion tokens with Apache 2.0 license.

Research and Technical Developments

- Test-Time Computing: @_philschmid highlighted how Llama 3 3B outperformed Llama 3.1 70B on MATH-500 using test-time compute methods.

- Voice API Pricing: OpenAI announced GPT-4o audio is now 60% cheaper, with GPT-4o-mini being 10x cheaper for audio tokens.

- WebRTC Support: New WebRTC endpoint added for the Realtime API using the WHIP protocol.

Company Updates

- Midjourney Perspective: @DavidSHolz shared insights on running Midjourney, noting they have "enough revenue to fund tons of crazy R&D" without investors.

- Anthropic Security Incident: The company confirmed unauthorized posts on their account, stating no Anthropic systems were compromised.

Memes and Humor

- @jxmnop joked about watching "Attention Is All You Need" in IMAX

- Multiple humorous takes on model comparisons and AI development race shared across the community

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Falcon 3 Emerges with Impressive Token Training and Diversified Models

- Falcon 3 just dropped (Score: 332, Comments: 122): Falcon 3 has been released, showcasing impressive benchmarks according to a Hugging Face blog post. The release highlights significant advancements in AI model performance.

- Model Performance and Benchmarks: The Falcon 3 release includes models ranging from 1B to 10B, trained on 14 trillion tokens. The 10B-Base model is noted for being state-of-the-art in its category, with specific performance scores such as 24.77 on MATH-Lvl5 and 83.0 on GSM8K. The benchmarks indicate that Falcon 3 is competitive with other models like Qwen 2.5 14B and Llama-3.1-8B.

- Licensing Concerns and Bitnet Model: There are concerns about the model's license, which includes a "rug pull clause" that could limit its use geographically. The release of a BitNet model is discussed, with some noting the model's poor benchmark performance compared to traditional FP16 models, although it allows for more parameters on the same hardware.

- Community and Technical Support: The community is actively discussing support for Mamba models and inference engine support, with ongoing developments in llama.cpp to improve compatibility. There is interest in the 1.58-bit quantization approach, though current benchmarks show significant performance drops compared to non-quantized models.

- Introducing Falcon 3 Family (Score: 121, Comments: 37): The post announces the release of Falcon 3, a new open-source large language model, marking an important milestone for the Falcon team. For further details, readers are directed to the official blog post on Hugging Face.

- LM Studio is expected to integrate Falcon 3 support through updates to llama.cpp, though issues with loading models due to unsupported tokenizers have been reported. A workaround involves applying a fix from a GitHub pull request and recompiling llama.cpp.

- Concerns about Arabic language support were raised, with users noting the lack of models with strong Arabic capabilities and benchmarks for reasoning and math. The response indicated that Arabic support is currently nonexistent.

- Users expressed appreciation for the release and performance of Falcon 3, with plans to include it in upcoming benchmarks. Feedback was given to update the model card with information about the tokenizer issue and workaround.

Theme 2. Nvidia's Jetson Orin Nano: A Game Changer for Embedded Systems?

- Finally, we are getting new hardware! (Score: 262, Comments: 171): Jetson Orin Nano hardware is being introduced, marking a notable development in AI technology. This new hardware is likely to impact AI applications, especially in edge computing and machine learning, by providing enhanced performance and capabilities.

- The Jetson Orin Nano is praised for its low power consumption (7-25W) and compact, all-in-one design, making it suitable for robotics and embedded systems. However, there is criticism regarding its 8GB 128-bit LPDDR5 memory with 102 GB/s bandwidth, which some users feel is insufficient for larger AI models compared to alternatives like the RTX 3060 or Intel B580 at similar price points.

- Discussions highlight the Jetson Orin Nano's potential in machine learning applications and distributed LLM nodes, with some users noting its 5x speed advantage over Raspberry Pi 5 for LLM tasks. Yet, concerns about its memory bandwidth limiting LLM performance are raised, emphasizing the importance of RAM bandwidth for LLM inference.

- Comparisons with other hardware include mentions of Raspberry Pi's upcoming 16GB compute module and Apple's M1/M4 Mac mini, with the latter offering better memory bandwidth and power efficiency. Users debate the Jetson Orin Nano's value proposition, considering its specialized use cases in robotics and machine learning versus more general-purpose computing needs.

Theme 3. ZOTAC Announces GeForce RTX 5090 with 32GB GDDR7: High-End Potential for AI

- ZOTAC confirms GeForce RTX 5090 with 32GB GDDR7 memory, 5080 and 5070 series listed as well - VideoCardz.com (Score: 153, Comments: 61): ZOTAC has confirmed the GeForce RTX 5090 graphics card, which will feature 32GB of GDDR7 memory. Additionally, the 5080 and 5070 series are also listed, indicating a forthcoming expansion in their product lineup.

- Memory Bandwidth Concerns: Users express disappointment with the memory bandwidths of the new series, except for the RTX 5090. There's a desire for larger memory sizes, particularly for the 5080, which some wish had 24GB.

- Market Dynamics: The release of new graphics cards often leads to a flooded market with older models like the RTX 3090 and 4090. Some users are considering purchasing these older models due to price-performance considerations and availability issues with new releases.

- Cost and Production Insights: Nvidia aims to maximize profits, which affects the availability of larger memory modules. The production cost of a 4090 is about $300, with a significant portion attributed to memory modules, hinting at potential limitations in the size of new DDR7 modules for upcoming models.

Theme 4. DavidAU's Megascale Mixture of Experts LLMs: A Creative Leap

- (3 models) L3-MOE-8X8B-Dark-Planet-8D-Mirrored-Chaos-47B-GGUF - AKA The Death Star - NSFW, Non AI Like Prose (Score: 67, Comments: 28): DavidAU has released a new set of models, including the massive L3-MOE-8X8B-Dark-Planet-8D-Mirrored-Chaos-47B-GGUF, which is his largest model to date at 95GB and uses a unique Mixture of Experts (MOE) approach for creative and NSFW outputs. The model integrates 8 versions of Dark Planet 8B through an evolutionary process, allowing users to access varying combinations of these models and control power levels. Additional models and source codes are available on Hugging Face for further exploration and customization.

- DavidAU develops these models independently, using a method he describes as "merge gambling" where he evolves models like Dark Planet 8B by combining elements from different models and selecting the best versions for further development.

- Discussions on NSFW benchmarking highlight the importance of models understanding nuanced prompts without defaulting to safe or generic outputs, with users noting that successful models avoid "GPT-isms" and can handle complex scenarios like those described in detailed prompts.

- Commenters emphasize the significance of prose quality and general intelligence in model evaluation, noting that many models struggle with maintaining character consistency and narrative depth, often defaulting to summarization rather than detailed storytelling.

Theme 5. Llama.cpp GPU Optimization: Snapdragon Laptops Gain AI Performance Boost

- Llama.cpp now supporting GPU on Snapdragon Windows laptops (Score: 65, Comments: 4): Llama.cpp now supports GPU on Snapdragon Windows laptops, specifically leveraging the Qualcomm Adreno GPU. The post author is curious about when this feature will be integrated into platforms like LM Studio and Ollama and anticipates the release of an ARM build of KoboldCpp. Further details can be found in the Qualcomm developer blog.

- FullstackSensei critiques the addition of an OpenCL backend for the Qualcomm Adreno GPU as redundant, suggesting that it is less efficient than using the Hexagon NPU and results in higher power consumption despite a minor increase in token processing speed. They highlight that the system remains bottlenecked by memory bandwidth, approximately 136GB/sec.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Steak Off Challenge Emphasizes Google's Lead in AI Video Rendering

- Steak Off Challenge between different Video Gens. Google Veo wins - by a huge mile (Score: 106, Comments: 13): Google Veo outperforms competitors in video rendering, particularly in handling complex elements like fingers and cutting physics. Hunyan ranks second, with Kling following, while Sora performs poorly. The original discussion can be found in a tweet.

- Google Veo is praised for its realistic rendering, particularly in the subtle details like knife handling, which gives it a more human-like quality compared to competitors. Users note the impressive realism in the video's depiction of food preparation, suggesting extensive training on cooking footage.

- Hunyan Video is also highlighted for its quality, although humorous comments note its rendering quirks, such as using a plastic knife. This suggests that while Hunyan is second to Google Veo, there are still noticeable areas for improvement.

- Discussions hint at the potential for an open-source version of such high-quality video rendering in the near future, indicating excitement and anticipation for broader accessibility and innovation in this space.

Theme 2. Gemini 2.0 Flash Model Enriches AI with Advanced Roleplay and Context Capabilities

- Gemini 2.0 advanced released (Score: 299, Comments: 54): Gemini 2.0 is highlighted for its sophisticated roleplay capabilities, with features labeled as "1.5 Pro," "1.5 Flash," and experimental options like "2.0 Flash Experimental" and "2.0 Experimental Advanced." The interface is noted for its clean and organized layout, emphasizing the functionalities of each version.

- There is a debate over the effectiveness of Gemini 2.0 compared to other models like 1206 and Claude 3.5 Sonnet for coding tasks, with some users expressing disappointment if 1206 was indeed 2.0. Users like Salty-Garage7777 report that 2.0 is smarter and better at following prompts than Flash, but worse in image recognition.

- The 2.0 Flash model is praised for its roleplay capabilities, with users like CarefulGarage3902 highlighting its long context length and customization options. The model is favored over mainstream alternatives like ChatGPT for its sophistication and ability to adjust censorship filters and creativity.

- There is interest in the availability and integration of Gemini 2.0 on platforms like the Gemini app for Google Pixel phones, though it is not yet available. Additionally, users are seeking coding benchmarks to compare Gemini 2.0 with other models, with some expressing frustration over the lack of such data.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. AI Models Battle for Supremacy

- Phi-4 Outsmarts GPT-4 in STEM: The phi-4 model with 14 billion parameters eclipses GPT-4 in STEM-related QA by leveraging synthetic data and advanced training techniques, proving that size isn't everything.

- Despite minor tweaks from phi-3, phi-4's revamped curriculum boosts reasoning benchmarks significantly.

- Gemini Flash 2 Takes Code Generation Higher: Gemini Flash 2 outperforms Sonnet 3.5 in scientific coding tasks, especially in array sizing, signaling a new era for code-generating AIs.

- Users are excited about integrating external frameworks to further enhance its capabilities.

- Cohere’s Maya Sparkles in Tool Use: The release of Maya has developers buzzing, with plans to finetune it for enhanced tool utilization, pushing project boundaries like never before.

Theme 2. AI Tools Struggle with Pricing and Integration

- Windsurf Woes: Code Overwrites and Pricing Puzzles: Users lament that Windsurf not only struggles with modifying files but also introduces confusing new pricing plans, making it harder to manage resources.

- Suggestions include integrating Git for better version control to prevent unwanted overwrites.

- Codeium’s Credit Crunch Causes Chaos: Rapid consumption of Flex credits in Codeium leaves users scrambling to purchase larger blocks, highlighting a need for clearer pricing tiers.

- Community debates the fairness of the new credit limits and the specifics of paid tiers.

- Aider’s API Launch: Feature-Rich but Pricey: The O1 API introduces advanced features like reasoning effort parameters, but users are wary of substantial price hikes compared to competitors like Sonnet.

- Combining O1 with Claude is suggested to leverage strengths but raises concerns about overconfidence in responses.

Theme 3. Optimizing AI Deployments and Hardware Utilization

- Quantization Quest: 2-Bit Magic for 8B Models: Successfully quantizing an 8 billion parameter model down to 2 bits opens doors for deploying larger models on constrained hardware, despite initial setup headaches.

- Enthusiasm grows for standardizing this method for models exceeding 32B parameters.

- NVIDIA’s Jetson Orin Nano Super Kit Boosts AI Processing: Priced at $249, NVIDIA’s new kit ramps up AI processing with a 70% increase in neural operations, making powerful AI accessible to hobbyists.

- Developers explore deploying LLMs on devices like AGX Orin and Raspberry Pi 5, enhancing local AI capabilities.

- CUDA Graphs and Async Copying Create Compute Conundrums: Integrating cudaMemcpyAsync within CUDA Graphs on a 4090 GPU leads to inconsistent results, baffling developers and prompting a deeper dive into stream capture issues.

- Ongoing investigations aim to resolve these discrepancies and optimize compute throughput.

Theme 4. AI Enhancements in Developer Workflows

- Cursor Extension: Markdown Magic and Web Publishing: The new Cursor Extension allows seamless exporting of composer and chat histories to markdown, plus one-click web publishing, supercharging developer productivity.

- Users praise the ability to capture and share coding interactions effortlessly.

- Aider’s Linters and Code Management Revolutionize Workflow: With built-in support for various linters and customizable linting commands, Aider offers developers unparalleled flexibility in managing code quality alongside AI-driven edits.

- Automatic linting can be toggled, allowing for a tailored coding experience.

- SpecStory Extension Transforms AI Coding Journeys: The SpecStory extension for VS Code captures, searches, and learns from every AI-assisted coding session, providing a rich repository for developers to refine their practices.

- Enhances documentation and analysis of coding interactions for better learning outcomes.

Theme 5. Community Events and Educational Initiatives Drive Innovation

- DevDay Holiday Edition and API AMA Boomt: OpenAI’s DevDay Holiday Edition livestream wraps up with an AMA featuring the API team, offering a wealth of insights and direct interaction opportunities for developers.

- Community members eagerly await answers to burning API questions and future feature announcements.

- Code Wizard Hackathon Seeks Sponsors for February Frenzy: The organizer hunts for sponsorships to fuel the upcoming Code Wizard hackathon in February 2025, aiming to foster innovation and problem-solving among participants.

- Although some question the funding needs, many underscore the hackathon’s role in building valuable tech projects.

- LLM Agents MOOC Extends Submission Deadline: The Hackathon submission deadline is extended by 48 hours to Dec 19th, clarifying the submission process and giving participants extra time to perfect their AI agent projects.

- Improved mobile responsiveness on the MOOC website garners praise, aiding participants in showcasing their innovations.

PART 1: High level Discord summaries

Codeium / Windsurf Discord

- Windsurf Struggles with File Modifications: Users reported that Windsurf is unable to effectively modify or edit files, with one user describing it as 'stupider' after recent updates.

- Discussions highlighted resource exhaustion errors and confusion regarding the introduction of new pricing plans.

- Confusion Over Codeium's Pricing Model: Members inquired about purchasing larger blocks of Flex credits, noting rapid consumption despite their efforts to manage usage.

- Conversations focused on the newly established credit limits and the specifics of different paid tiers.

- Performance Showdown: Windsurf vs Cursor: Participants compared Windsurf and Cursor, observing that both platforms offer similar agent functionalities but differ in context usage strategies.

- Windsurf enforces strict credit limits, whereas Cursor provides unlimited slow queries post a certain premium usage, which some users find more accommodating.

- Challenges in Windsurf's Code Management: Users expressed frustration with Windsurf's tendency to overwrite code and introduce hallucinated errors, complicating the development process.

- Suggestions were made to integrate Git for version control to better manage changes and enable reversibility.

- Assessing Gemini 2.0's Integration with Windsurf: Engineers are evaluating Gemini 2.0 alongside Windsurf, noting a significant context advantage but experiencing mixed reviews on output quality.

- While Gemini 2.0 offers a larger context window, some users have observed performance degradation beyond certain token limits.

Nous Research AI Discord

- phi-4 Model Outperforms GPT-4 in STEM QA: The phi-4 model, boasting 14 billion parameters, surpasses GPT-4 in STEM-focused QA by integrating synthetic data and enhanced training methodologies.

- Despite minor architectural similarities to phi-3, phi-4 demonstrates robust performance in reasoning benchmarks, attributed to its revised training curriculum and post-training techniques.

- Effective 2-bit Quantization Achieved for 8B Models: An 8 billion parameter model was successfully quantized down to 2 bits, showcasing potential as a standard for larger models, despite initial setup complexities.

- This advancement suggests enhanced usability for models exceeding 32B parameters, with members expressing optimism about its applicability in future drafts.

- Gemini Utilizes Threefry in Sampling Algorithms: Members discussed whether Xorshift or other algorithms are employed in sampling for LLMs, with one noting that Gemma uses Threefry.

- PyTorch's adoption of Mersenne Twister contrasts with Gemini's approach, highlighting differing sampling techniques across AI frameworks.

- Hugging Face Advances Test-Time Compute Strategies: Hugging Face's latest work on test-time compute approaches received commendation, particularly their scaling methods for compute efficiencies.

- A Hugging Face blogpost delves into their strategies, fostering community understanding and positive reception.

aider (Paul Gauthier) Discord

- Full O1 API Launch: The upcoming O1 API introduces features like reasoning effort parameters and system prompts, enhancing AI capabilities.

- Users anticipate significant price increases compared to Sonnet, expressing mixed feelings about the potential costs associated with the O1 API.

- Improved AI Performance with O1 and Claude: The O1 model demonstrates enhanced response capabilities based on specific prompts, while users suggest combining it with Claude to leverage strengths of both models.

- Despite improved performance, the O1 model can exhibit overconfidence in certain situations, prompting discussions on optimal model usage.

- Aider's Linters and Code Management: Aider provides built-in support for various linters and allows customization through the

--lint-cmdoption, as detailed in the Linting and Testing documentation.- Users can toggle automatic linting, offering flexibility in managing code quality alongside AI-driven edits.

- Claude Model Limitations: The Claude model is noted for its reluctance to generate certain outputs and tends to provide overly cautious responses.

- Users highlighted the necessity of more explicit guidance to achieve desired results, emphasizing the importance of specificity in prompts.

- Aider's Integration with LM Studio: Aider faces challenges integrating with LM Studio, including errors like BadRequestError due to missing LLM providers.

- Successful integration was achieved by configuring the OpenAI provider format with

openai/qwen-2.5-coder-7b-instruct-128k, as discovered by users during troubleshooting.

- Successful integration was achieved by configuring the OpenAI provider format with

Notebook LM Discord Discord

- New UI Rollout Enhances User Experience: This morning, the team announced the rollout of the new UI and NotebookLM Plus features to all users, as part of an ongoing effort to improve the platform's user experience. Announcement details.

- However, some users expressed dissatisfaction with the new UI, highlighting issues with the chat panel's visibility and notes layout, while others appreciated the larger editor and suggested collapsing panels to improve usability.

- NotebookLM Plus Access Expands via Google Services: NotebookLM Plus is now accessible through Google Workspace and Google Cloud, with plans to extend availability to Google One AI Premium users by early 2025. Upgrade information.

- Questions arose about its availability in countries like Italy and Brazil, with responses indicating a gradual global rollout.

- Interactive Audio BETA Limited to Early Adopters: Interactive Audio is currently available only to a select group of users as backend improvements are being made. Users without access to the Interactive mode (BETA) should not be concerned during the transition. Interactive Audio details.

- Multiple users reported difficulties with the Interactive Mode feature, citing lagging and accessibility issues even after updating to the new UI, suggesting the feature is still in rollout.

- AI Integration in Call Centers Discussed: Members explored the integration of AI into IT call centers, including humorous takes on a German-speaking AI managing customer queries, accompanied by shared audio clips demonstrating scenarios like computer troubleshooting and cold-call sales pitches. Use Cases.

- The discussion highlighted potential improvements in customer service efficiency through AI implementations.

- Expanding Multi-language Support in NotebookLM: Users inquired about NotebookLM's multi-language capabilities for generating podcasts, confirming that audio summaries are currently supported only in English. General channel.

- Despite this limitation, successful content generation in Portuguese indicates potential for broader language support in future updates.

Unsloth AI (Daniel Han) Discord

- Phi-4 Outpaces GPT-4 in STEM QA: The phi-4, a 14-billion parameter language model, leverages a training strategy that emphasizes data quality, integrating synthetic data throughout its development to excel in STEM-focused QA capabilities, surpassing its teacher model, GPT-4.

- Despite minimal architecture changes since phi-3, the model's performance on reasoning benchmarks underscores an improved training curriculum, as detailed in the Continual Pre-Training of Large Language Models.

- Unsloth 4-bit Model Shows Performance Gaps: Users reported discrepancies in layer sizes between the Unsloth 4-bit model and the original Meta version, highlighting potential issues with model parameterization.

- Concerns were raised about VRAM usage and performance trade-offs when transitioning from 4-bit to full precision, as discussed in the Qwen2-VL-7B-Instruct-unsloth-bnb-4bit repository.

- Qwen 2.5 Finetuning Faces Catastrophic Forgetting: A member expressed frustration that their finetuned Qwen 2.5 model underperformed compared to the vanilla version, attributing the decline to catastrophic forgetting.

- Other members recommended iterating on the fine-tuning process to better align with specific objectives, emphasizing the importance of tailored adjustments.

- Enhancing Function Calls in Llama 3.2: Participants explored training Llama 3.2 to improve function calling capabilities but noted the scarcity of direct implementation examples.

- There was consensus that incorporating special tokens directly into datasets could streamline the training process, as referenced in the Llama Model Text Prompt Format.

- Optimizing Lora+ with Unsloth: Members discussed integrating Lora+ with Unsloth, observing potential incompatibilities with other methods and suggesting alternatives like LoFTQ or PiSSA for better initializations.

- One member highlighted performance improvements in Unsloth's latest release through a CPT blog post, emphasizing the benefits of these optimizations.

Cohere Discord

- Cohere Boosts Multimodal Image Embed Rates: Cohere has increased the rate limits for the Multimodal Image Embed endpoint by 10x, elevating production keys from 40 images/min to 400 images/min. Read more

- Trial users remain limited to 5 images/min for testing, enabling application development and community sharing without overwhelming the system.

- Maya Release Enhances Tool Utilization: The release of Maya has been celebrated among members, sparking enthusiasm to explore and potentially finetune it for tool use. Members are committed to pushing project boundaries with the new model.

- The community plans to engage in extensive testing and customization, aiming to integrate Maya's capabilities into their workflows effectively.

- Optimizing Cohere API Key Management: Cohere offers two types of API keys: evaluation keys that are free but limited, and production keys that are paid with fewer restrictions. Users can manage their keys via the API keys page.

- This structure allows developers to efficiently start projects while scaling up with production keys as their applications grow.

- Strategies for Image Retrieval using Embeddings: To implement image retrieval based on user queries, a member proposed storing image paths as metadata alongside embeddings in the Pinecone vector store. This allows the system to display the correct image when an embedding matches a query.

- By leveraging semantic search through embeddings, the retrieval process becomes more accurate and efficient, enhancing the user experience.

- Seeking Sponsors for Code Wizard Hackathon: The organizer of the Code Wizard hackathon is actively seeking sponsorships for the event scheduled in February 2025, aiming to foster innovation and problem-solving among participants.

- While some attendees questioned the funding necessity, others emphasized the event's role in building valuable projects and advancing technical skills.

Bolt.new / Stackblitz Discord

- Bolt's UI Version with Model Selection: A member announced the rollout of a UI version of Bolt, enabling users to choose between Claude, OpenAI, and Llama models hosted on Hyperbolic. This update aims to enhance the generation process by offering diverse model options.

- The new UI is designed to improve user experience and streamline model selection, allowing for more customized and efficient project workflows.

- Managing Tokens Effectively: Users raised concerns regarding the usage and management of tokens in Bolt, highlighting frustrations over unexpected consumption.

- It was emphasized that there are limits on monthly token usage, and users should be mindful of replacement costs to avoid exceeding their quotas.

- Challenges with Bolt Integration: Several users reported integration issues with Bolt, such as the platform generating unnecessary files and encountering errors during command execution.

- To mitigate frustration, some users suggested taking breaks from the platform, emphasizing the importance of maintaining productivity without overexertion.

- Bolt for SaaS Projects: Members expressed interest in utilizing Bolt for SaaS applications, recognizing the need for developer assistance to scale and integrate effectively.

- One user sought step-by-step guidance on managing SaaS projects with Bolt, indicating a demand for more comprehensive support resources.

- Support and Assistance on Coding Issues: Users sought support for coding challenges within Bolt, with specific requests for help with their Python code.

- Community members provided advice on debugging techniques and recommended online resources to enhance coding practices.

Eleuther Discord

- Pythia based RLHF models: Members in general inquired about the availability of publicly available Pythia based RLHF models, but no specific models were recommended in the discussion.

- TensorFlow on TPU v5p: A user reported TensorFlow experiencing segmentation faults on TPU v5p, stating that 'import tensorflow' causes errors across multiple VM images.

- Concerns were raised regarding Google's diminishing support for TensorFlow amid ongoing technical challenges.

- SGD-SaI Optimizer Approach: In research, the introduction of SGD-SaI presents a new method to enhance stochastic gradient descent without adaptive moments, achieving results comparable to AdamW.

- Participants emphasized the need for unbiased comparisons with established optimizers and suggested dynamically adjusting learning rates during training phases.

- Stick Breaking Attention Mechanism: Discussions in research covered stick breaking attention, a technique for adaptively aggregating attention scores to reduce oversmoothing effects in models.

- Members debated whether these adaptive methods could better handle the complexity of learned representations within transformer architectures.

- Grokking Phenomenon: A recent paper discussed the grokking phenomenon by linking neural network complexity with generalization, introducing a metric based on Kolmogorov complexity.

- The study aims to discern when models generalize versus memorize, potentially offering structured insights into training dynamics.

Cursor IDE Discord

- Cursor Extension Launch Enhances Productivity: The Cursor Extension now allows users to export their composer and chat history to Markdown, facilitating improved productivity and content sharing.

- Additionally, it includes an option to publish content to the web, enabling effective capture of coding interactions.

- O1 Pro Automates Coding Tasks Efficiently:

@mckaywrigleyreported that O1 Pro successfully implemented 6 tasks, modifying 14 files and utilizing 64,852 input tokens in 5m 25s, achieving 100% correctness and saving 2 hours.- This showcases O1 Pro's potential in streamlining complex coding workflows.

- RAPIDS cuDF Accelerates Pandas with Zero Code Changes: A tweet by @NVIDIAAIDev announced that RAPIDS cuDF can accelerate pandas operations up to 150x without any code modifications.

- Developers can now handle larger datasets in Jupyter Notebooks, as demonstrated in their demo.

- SpecStory Extension Integrates AI Coding Journeys: The SpecStory extension for Visual Studio Code offers features to capture, search, and learn from every AI coding journey.

- This tool enhances developers' ability to document and analyze their coding interactions effectively.

OpenAI Discord

- DevDay Holiday Edition and API Team AMA: The DevDay Holiday Edition YouTube livestream, Day 9: DevDay Holiday Edition, is scheduled and accessible here.

- The stream precedes an AMA with OpenAI's API team, scheduled for 10:30–11:30am PT on the developer forum.

- AI Accents Mimicry and Realism Limitations: Users discussed AI's ability to switch between multiple languages and accents, such as imitating an Aussie accent, but interactions still feel unnatural.

- Participants noted that while AI can mimic accents, it often restricts certain respectful interaction requests due to guideline limitations.

- Custom GPTs Editing and Functionality Issues: Several users reported losing the ability to edit custom GPTs and issues accessing them, despite multiple setups.

- This appears to be a known issue, as confirmed by others facing similar problems with their custom GPT configurations.

- Anthropic's Pricing Model Adjustment: Anthropic has adjusted its pricing model, becoming less expensive with the addition of prompt caching for APIs.

- Users expressed curiosity about how this shift might impact their usage and the competition with OpenAI's offerings.

OpenRouter (Alex Atallah) Discord

- OpenRouter adds support for 46 models: OpenRouter now supports 46 models for structured outputs, enhancing multi-model application development. The demo showcases how structured outputs constrain LLM outputs to a JSON schema.

- Additionally, structured outputs are normalized across 8 model companies and 8 free models, facilitating smoother integration into applications.

- Gemini Flash 2 outperforms Sonnet 3.5: Gemini Flash 2 generates superior code for scientific problem-solving tasks compared to Sonnet 3.5, especially in array sizing scenarios.

- Feedback suggested that integrating external frameworks could further boost its effectiveness in specific use cases.

- Experimenting with typos to influence AI responses: Members are exploring the use of intentional typos and meaningless words in prompts to guide model outputs, potentially benefiting creative writing.

- This technique aims to direct model attention to specific keywords while maintaining controlled outputs through Chain of Thought (CoT) methods.

- o1 API reduces token usage by 60%: o1 API now consumes 60% fewer tokens, raising concerns about its impact on model performance.

- Users discussed the need for pricing adjustments and improved token efficiency, noting that current tier limitations still apply.

- API key exposure and reporting for OpenRouter: A member reported exposed OpenRouter API keys on GitHub, initiating discussions on proper reporting channels.

- It was recommended to contact support@openrouter.ai to address any security risks from exposed keys.

Perplexity AI Discord

- Perplexity Introduces Pro Gift Subscriptions: Perplexity is now offering gift subscriptions for 1, 3, 6, or 12 months here, enabling users to unlock enhanced search capabilities.

- Subscriptions are delivered via promo codes directly to recipients' email, and it is noted that all sales are final to ensure commitment.

- Debate on OpenAI Borrowing Perplexity Features: Users debated whether OpenAI is innovating or copying Perplexity's features like Projects and GPT Search, leading to discussions about originality in AI development.

- Some members opined that feature replication is common across platforms, fostering a dialogue on maintaining unique value propositions in AI tools.

- Mozi App Launches Amidst Social Media Excitement: Ev Williams launched the new social app Mozi, which is garnering attention for its fresh approach to social networking, as detailed in a YouTube video.

- The app promises innovative features, generating discussions on its potential impact on existing social media platforms.

- Concerns Over Declining Model Performance: Users expressed frustration with the Sonnet and Claude model variations, noting a perceived decline in performance affecting response quality.

- Switching between specific models has led to inconsistent user experiences, highlighting the need for clarity on model optimization.

- Gemini API Integration Enhances Perplexity's Offerings: Implementation of the new Gemini integration through the OpenAI SDK allows seamless interaction with multiple APIs, accessible via the Gemini API.

- This integration improves user experience by facilitating access to diverse models, including Gemini, OpenAI, and Groq, with Mistral support forthcoming.

Latent Space Discord

- Palmyra Creative's 128k Context Release: The new Palmyra Creative model enhances creative business tasks with a 128k context window for brainstorming and analysis.

- It integrates seamlessly with domain-specific models, catering to professionals from marketers to clinicians.

- OpenAI API Introduces O1 with Function Calling: OpenAI announced updates during a mini dev day, including an O1 implementation with function calling and new voice model features.

- WebRTC support for real-time voice applications and significant output token enhancements were key highlights.

- NVIDIA Launches Jetson Orin Nano Super Kit: NVIDIA's Jetson Orin Nano Super Developer Kit boosts AI processing with a 70% increase in neural processing to 67 TOPS and 102 GB/s memory bandwidth.

- Priced at $249, it aims to provide budget-friendly AI capabilities for hobbyists.

- Clarification on O1 vs O1 Pro by Aidan McLau: Aidan McLau clarified that O1 Pro is a distinct implementation from the standard O1 model, designed for higher reasoning capabilities.

- This distinction has raised community questions about potential functional confusion between these models.

- Anthropic API Moves Four Features Out of Beta: Anthropic announced the general availability of four new features, including prompt caching and PDF support for their API.

- These updates aim to enhance developer experience and facilitate smoother operations on the Anthropic platform.

LM Studio Discord

- Zotac's RTX 50 Sneak Peek: Zotac inadvertently listed the upcoming RTX 5090, RTX 5080, and RTX 5070 GPU families on their website, revealing advanced specs 32GB GDDR7 memory ahead of the official launch.

- This accidental disclosure has sparked excitement within the community, confirming the RTX 5090's impressive specifications and fueling anticipation for Nvidia's next-generation hardware.

- AMD Driver Dilemmas: Users reported issues with the 24.12.1 AMD driver, which caused performance drops and GPU usage spikes without effective power consumption.

- Reverting to version 24.10.1 resolved these lag issues, resulting in improved performance to 90+ tokens/second on various models.

- TTS Dreams: LM Studio's Next Step: A user expressed optimism for integrating text to speech and speech to text capabilities in LM Studio, with current alternatives available as workarounds.

- Another member suggested running these tools alongside LM Studio as a server to facilitate the desired functionalities, enhancing the overall user experience.

- Uncensoring Chatbots: New Alternatives: Discussions emerged around finding uncensored chatbot alternatives, with recommendations for models like Gemma2 2B and Llama3.2 3B that can run on CPU.

- Members were provided with resources on effectively using these models, including links to quantization options, to optimize their performance.

- GPU Showdown: 3070 Ti vs 3090: Users observed that the RTX 3070 Ti and RTX 3090 exhibit similar performance in gaming despite comparable price ranges.

- One member noted finding 3090s for approximately $750, while another mentioned prices around $900 CAD locally, highlighting market price variations.

Stability.ai (Stable Diffusion) Discord

- Top Stable Diffusion Courses: A member is seeking comprehensive online courses that aggregate YouTube tutorials for learning Stable Diffusion with A1111.

- The community emphasized the necessity for accessible educational resources on Stable Diffusion.

- Laptop vs Desktop for AI: A user is evaluating between a 4090 laptop and a 4070 TI Super desktop, both featuring 16GB VRAM, for AI tasks.

- Members suggested desktops are more suitable for heavy AI workloads, noting laptops are better for gaming but not for intensive graphics tasks.

- Bot Detection Strategies: Discussions focused on techniques for scam bot identification, such as asking absurd questions or employing the 'potato test'.

- Participants highlighted that both bots and humans can pose risks, requiring cautious interaction.

- Creating Your Own Lora Model: A user requested guidance on building a Lora model, receiving a step-by-step approach including dataset creation, model selection, and training.

- Emphasis was placed on researching effective dataset creation for training purposes.

- Latest AI Models: Flux.1-Dev: A returning member inquired about current AI models, specifically mentioning Flux.1-Dev, and its requirements.

- The community provided updates on trending model usage and necessary implementation requirements.

GPU MODE Discord

- CUDA Graphs and cudaMemcpyAsync Compatibility: Members confirmed that CUDA Graph supports cudaMemcpyAsync, but integrating them leads to inconsistent application results, particularly affecting compute throughput on the 4090 GPU. More details

- A reported issue highlighted that using cudaMemcpyAsync within CUDA Graph mode causes incorrect application outcomes, unlike kernel copies which function correctly. Further investigation with minimal examples is underway to resolve these discrepancies.

- Optimizing PyTorch Docker Images: Discussions revealed that official PyTorch Docker images range from 3-7 GB, with possibilities to reduce size using a 30MB Ubuntu base image alongside Conda for managing CUDA libraries. GitHub Guide

- Debate ensued over the necessity of combining Conda and Docker, with arguments favoring it for maintaining consistent installations across diverse development environments.

- NVIDIA Jetson Nano Super Launch: NVIDIA introduced the Jetson Nano Super, a compact AI computer offering 70-T operations per second for robotics applications, priced at $249 and supporting advanced models like LLMs. Tweet

- Users discussed enhancing Jetson Orin performance with JetPack 6.1 via the SDK Manager, and deploying LLM inference on devices such as AGX Orin and Raspberry Pi 5, which utilizes nvme 256GB for expedited data transfer.

- VLM Fine-tuning with Axolotl and TRL: Resources for VLM fine-tuning using Axolotl, Unslosh, and Hugging Face TRL were shared, including a fine-tuning tutorial.

- The process was noted to be resource-intensive, necessitating significant computational power, which was emphasized as a consideration for efficient integrations.

- Chain of Thought Dataset Generation: The team initiated a Chain of Thought (CoT) dataset generation project to evaluate which CoT forms most effectively enhance model performance, utilizing reinforcement learning for optimization.

- This experiment aims to determine if CoT can solve riddles beyond the capabilities of direct transduction methods, with initial progress showing 119 riddles solved and potential for improvement using robust verifiers.

Modular (Mojo 🔥) Discord

- MAX 24.6 Launches with MAX GPU: Today, MAX 24.6 was released, introducing the eagerly anticipated MAX GPU, a vertically integrated generative AI stack that eliminates the need for vendor-specific libraries like NVIDIA CUDA. For more details, visit Modular's blog.

- This release addresses the increasing resource demands of large-scale Generative AI, paving the way for enhanced AI development.

- Mojo v24.6 Release: The latest version of Mojo, v24.6.0, has been released and is ready for use, as confirmed by the command

% mojo --version. The community has shown significant excitement about the new features.- Users in the mojo channel are eager to explore the updates, indicating strong community engagement.

- MAX Engine and MAX Serve Introduced: MAX Engine and MAX Serve were introduced alongside MAX 24.6, providing a high-speed AI model compiler and a Python-native serving layer for large language models (LLMs). These tools are designed to enhance performance and efficiency in AI workloads.

- MAX Engine features vendor-agnostic Mojo GPU kernels optimized for NVIDIA GPUs, while MAX Serve simplifies integration for LLMs under high-load scenarios.

- GPU Support Confirmed in Mojo: GPU support in Mojo was confirmed for the upcoming Mojo v25.1.0 nightly release, following the recent 24.6 release. This inclusion showcases ongoing enhancements within the Mojo platform.

- The community anticipates improved performance and scalability for complex AI workloads with the added GPU support.

- Mojo REPL Faces Archcraft Linux Issues: A user reported encountering issues when entering the Mojo REPL on Archcraft Linux, citing a missing dynamically linked library, possibly

mojo-lddormojo-lld.- Additionally, the user faced difficulties installing Python requirements, mentioning errors related to being in an externally managed environment.

LlamaIndex Discord

- NVIDIA NV-Embed-v2 Availability Explored: Members investigated the availability of NVIDIA NV-Embed-v2 within NVIDIA Embedding using the

embed_model.available_modelsfeature to verify accessible models.- It was highlighted that even if NV-Embed-v2 isn't explicitly listed, it might still function correctly, prompting the need for additional testing to confirm its availability.

- Integrating Qdrant Vector Store in Workflows: A user sought assistance with integrating the Qdrant vector store into their workflow, mentioning challenges with existing collections and query executions.

- Another member provided documentation examples and noted they hadn't encountered similar issues, suggesting further troubleshooting.

- Addressing OpenAI LLM Double Retry Issues: Paullg raised concerns about potential double retries in the OpenAI LLM, indicating that both the OpenAI client and the

llm_retry_decoratormight independently implement retry logic.- The discussion then focused on whether a recent pull request resolved this issue, with participants expressing uncertainty about the effectiveness of the proposed changes.

- LlamaReport Enhances Document Readability: LlamaReport, now in preview, transforms document databases into well-structured, human-readable reports within minutes, facilitating effective question answering about document sets. More details are available in the announcement post.

- This tool aims to streamline document interaction by optimizing the output process, making it easier for users to navigate and utilize their document databases.

- Agentic AI SDR Boosts Lead Generation: The introduction of a new agentic AI SDR leverages LlamaIndex to generate leads, demonstrating practical AI integration in sales strategies. The code is accessible for implementation.

- This development is part of the Quickstarters initiative, which assists users in exploring Composio's capabilities through example projects and real-world applications.

Nomic.ai (GPT4All) Discord

- GPT4All v3.5.3 Released with Critical Fixes: The GPT4All v3.5.3 version has been officially released, addressing notable issues from the previous version, including a critical fix for LocalDocs that was malfunctioning in v3.5.2.

- Jared Van Bortel and Adam Treat from Nomic AI were acknowledged for their contributions to this update, enhancing the overall functionality of GPT4All.

- LocalDocs Functionality Restored in New Release: A serious problem preventing LocalDocs from functioning correctly in v3.5.2 has been successfully resolved in GPT4All v3.5.3.

- Users can now expect improved performance and reliability while utilizing LocalDocs for document handling.

- AI Agent Capabilities Explored via YouTube Demo: Discussions emerged about the potential to run an 'AI Agent' via GPT4All, linked to a YouTube video showcasing its capabilities.

- One member noted that while technically feasible, it mainly serves as a generative AI platform with limited functionality.

- Jinja Template Issues Plague GPT4All Users: A member reported that GPT4All is almost completely broken for them due to a Jinja template problem, which they hope gets resolved soon.

- Another member highlighted the importance of Jinja templates as crucial for model interaction, with ongoing improvements to tool calling functionalities in progress.

- API Documentation Requests Highlight Gaps in GPT4All: A request was made for complete API documentation with details on endpoints and parameters, referencing the existing GPT4All API documentation.

- Members shared that activating the local API server requires simple steps, but they felt the documentation lacks comprehensiveness.

OpenInterpreter Discord

- Questions about Gemini 2.0 Flash: Users are inquiring about the functionality of Gemini 2.0 Flash, highlighting a lack of responses and support.

- This indicates a potential gap in user experience or support for this feature within the OpenInterpreter community.

- Debate on VEO 2 and SORA: Members debate whether VEO 2 is superior to SORA, noting that neither AI is currently available in their region.

- The lack of availability suggests interest but also frustration among users wanting to explore these options.

- Web Assembly Integration with OpenInterpreter: A user proposed running the OpenInterpreter project in a web page using Web Assembly with tools like Pyodide or Emscripten.

- This approach could provide auto-sandboxing and eliminate the need for compute calls, enhancing usability in a chat UI context.

- Local Usage of OS in OpenInterpreter: Inquiries were made about utilizing the OS locally within OpenInterpreter, with users seeking clarification on what OS entails.

- This reflects ongoing interest in local execution capabilities among users looking to enhance functionality.

- Troubleshooting Errors in Open Interpreter: A member reported persistent errors when using code with the

-yflag, specifically issues related to setting the OpenAI API key.- This highlights a common challenge users face and the need for clearer guidance on error handling.

Torchtune Discord

- Torcheval's Batched Metric Sync Simplifies Workflow: A member expressed satisfaction with Torcheval's batched metric sync feature and the lack of extra dependencies, making it a pleasant tool to work with.

- This streamlined approach enhances productivity and reduces complexity in processing metrics.

- Challenges in Instruction Fine-Tuning Loss Calculation: A member raised concerns about the per-token loss calculation in instruction fine-tuning, noting that the loss from one sentence depends on others in the batch due to varying token counts.

- This method appears to be the standard practice, leading to challenges that the community must adapt to.

- GenRM Verifier Model Enhances LLM Performance: A recent paper proposes using generative verifiers (GenRM) trained on next-token prediction to enhance reasoning in large language models (LLMs) by integrating solution generation with verification.

- This approach allows for better instruction tuning and the potential for improved computation via majority voting, offering benefits over standard LLM classifiers.

- Sakana AI's Universal Transformer Memory Optimization: Researchers at Sakana AI have developed a technique to optimize memory usage in LLMs, allowing enterprises to significantly reduce costs related to application development on Transformer models.

- The universal transformer memory technique retains essential information while discarding redundancy, enhancing model efficiency.

- 8B Verifier Performance Analysis and Community Reactions: Concerns were raised regarding the use of an 8B reward/verifier model, with a member noting the computation costs and complexity of training such a model shouldn't be overlooked in performance discussions.

- Another member humorously compared the methodology to 'asking a monkey to type something and using a human to pick the best one,' suggesting it might be misleading and indicating a need for broader experimentation.

LLM Agents (Berkeley MOOC) Discord

- Hackathon Deadline Extended by 48 Hours: The Hackathon submission deadline has been extended by 48 hours to 11:59pm PT, December 19th.

- This extension aims to clear up confusion about the submission process and allow participants more time to finalize their projects.

- Submission Process Clarified for Hackathon: Participants are reminded that submissions should be made through the Google Form, NOT via the Devpost site.

- This clarification is essential to ensure all projects are submitted correctly.

- LLM Agents MOOC Website Gets Mobile Makeover: A member revamped the LLM Agents MOOC website for better mobile responsiveness, sharing the updated version at this link.

- Hope this can be a way to give back to the MOOC/Hackathon. Another user praised the design, indicating plans to share it with staff.

- Certificate Deadlines Confirmed Until 12/19: A user inquired about the certificate submission deadline amidst uncertainty about potential extensions.

- Another member confirmed that there are no deadline changes for the MOOC and emphasized that the submission form will remain open until 12/19 for convenience.

tinygrad (George Hotz) Discord

- GPU via USB Connectivity Explored: A user in #general inquired about connecting a GPU through a USB port, referencing a tweet, to which George Hotz responded, 'our driver should allow this'.

- This discussion highlights the community's interest in expanding hardware compatibility for tinygrad applications.

- Mac ARM64 Backend Access Limited to CI: In #general, a user sought access to Macs for arm64 backend development, but George clarified that these systems are designated for Continuous Integration (CI) only.

- The clarification emphasizes that Mac infrastructure is currently reserved for running benchmark tests rather than general development use.

- Continuous Integration Focuses on Mac Benchmarks: The Mac Benchmark serves as a crucial part of the project's Continuous Integration (CI) process, concentrating on performance assessments.

- This approach underscores the team's strategy to utilize specific hardware configurations to ensure robust performance metrics.

Axolotl AI Discord

- Scaling Test Time Compute Analysis: A member shared the Hugging Face blog post discussing scaling test time compute, which they found refreshing.

- This post sparked interest within the community regarding the efficiency of scaling tests.

- 3b Model Outperforms 70b in Math: A member noted that the 3b model outperforms the 70b model in mathematics, labeling this as both insane and significant.

- This observation led to discussions about the unexpected efficiency of smaller models.

- Missing Optim Code in Repository: A member expressed concern over the absence of the actual optim code in a developer's repository, which only contains benchmark scripts.

- They highlighted their struggles with the repo and emphasized ongoing efforts to resolve the issue.

- Current Workload Hindering Contributions: A member apologized for being unable to contribute, citing other tasks and bug fixes.

- This underscores the busy nature of development and collaboration within the community.

- Community Expresses Gratitude for Updates: A member thanked another for their update amidst ongoing discussions.

- This reflects the positive and supportive atmosphere of the channel.

DSPy Discord

- Autonomous AI Boosts Knowledge Worker Efficiency: A recent paper discusses how autonomous AI enhances the efficiency of knowledge workers by automating routine tasks, thereby increasing overall productivity.

- The study reveals that while initial research focused on chatbots aiding low-skill workers, the emergence of agentic AIs shifts benefits towards more skilled individuals.

- AI Operation Models Alter Workforce Dynamics: The paper introduces a framework where AI agents can operate autonomously or non-autonomously, leading to significant shifts in workforce dynamics within hierarchical firms.

- It notes that basic autonomous AI can displace humans into specialized roles, while advanced autonomous AI reallocates labor towards routine tasks, resulting in larger and more productive organizations.

- Non-Autonomous AI Empowers Less Knowledgeable Individuals: Non-autonomous AI, such as chatbots, provides affordable expert assistance to less knowledgeable individuals, enhancing their problem-solving capabilities without competing for larger tasks.

- Despite being perceived as beneficial, the ability of autonomous agents to support knowledge workers offers a competitive advantage as AI technologies continue to evolve.

Mozilla AI Discord

- Final RAG Event for Ultra-Low Dependency Applications: Tomorrow is the final event for December, where participants will learn to create an ultra-low dependency Retrieval Augmented Generation (RAG) application using only sqlite-vec, llamafile, and bare-bones Python, led by Alex Garcia.

- The session requires no additional dependencies or 'pip install's, emphasizing simplicity and efficiency in RAG development.

- Major Updates on Developer Hub and Blueprints: A significant announcement was made regarding the Developer Hub and Blueprints, prompting users to refresh their awareness.

- Feedback is being appreciated as the community explores the thread on Blueprints, aimed at helping developers build open-source AI solutions.

MLOps @Chipro Discord

- Year-End Retrospective on Data Infrastructure: Join us on December 18 for a retrospective panel featuring founders Yingjun Wu, Stéphane Derosiaux, and Alexander Gallego discussing innovations in data infrastructure over the past year.

- The panel will cover key themes including Data Governance, Streaming, and the impact of AI on Data Infrastructure.

- Keynote Speakers for Data Innovations Panel: The panel features Yingjun Wu, CEO of RisingWave, Stéphane Derosiaux, CPTO of Conduktor, and Alexander Gallego, CEO of Redpanda.

- Their insights are expected to explore crucial areas like Stream Processing and Iceberg Formats, shaping the landscape for 2024.

- AI's Role in Data Infrastructure: The panel will discuss the impact of AI on Data Infrastructure, highlighting recent advancements and implementations.

- This includes how AI technologies are transforming Data Governance and enhancing Streaming capabilities.

- Stream Processing and Iceberg Formats: Key topics include Stream Processing and Iceberg Formats, critical for modern data infrastructure.

- The panelists will delve into how these technologies are shaping the data infra ecosystem for the upcoming year.

Gorilla LLM (Berkeley Function Calling) Discord

- BFCL Leaderboard V3 Freezes During Function Demo: A member raised an issue with the BFCL Leaderboard being stuck at 'Loading Model Response...' during the function call demo.

- They inquired if others have encountered the same loading problem, seeking confirmation and potential solutions.

- BFCL Leaderboard V3 Expands Features and Datasets: Discussion highlighted the Berkeley Function Calling Leaderboard V3's updated evaluation criteria for accurate function calling by LLMs.

- Members referenced previous versions like BFCL-v1 and BFCL-v2, noting that BFCL-v3 includes expanded datasets and methodologies for multi-turn interactions.

The LAION Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!