[AINews] Nvidia Minitron: LLM Pruning and Distillation updated for Llama 3.1

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Pruning and Distillation are all you need.

AI News for 8/22/2024-8/23/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (214 channels, and 2531 messages) for you. Estimated reading time saved (at 200wpm): 284 minutes. You can now tag @smol_ai for AINews discussions!

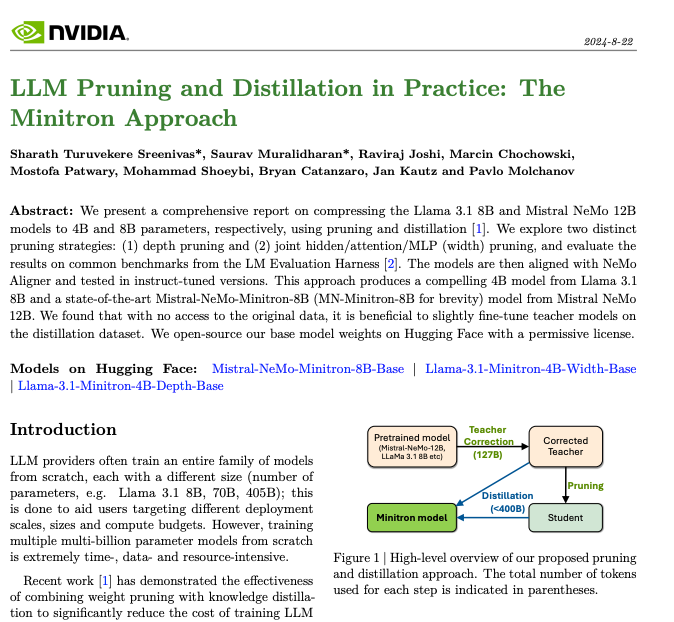

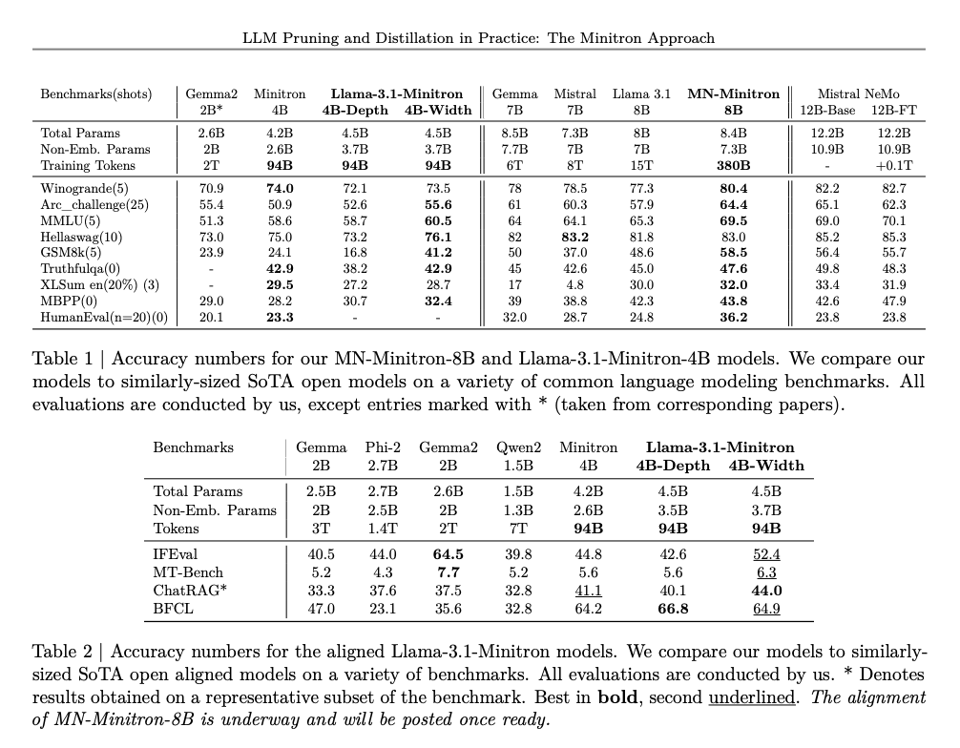

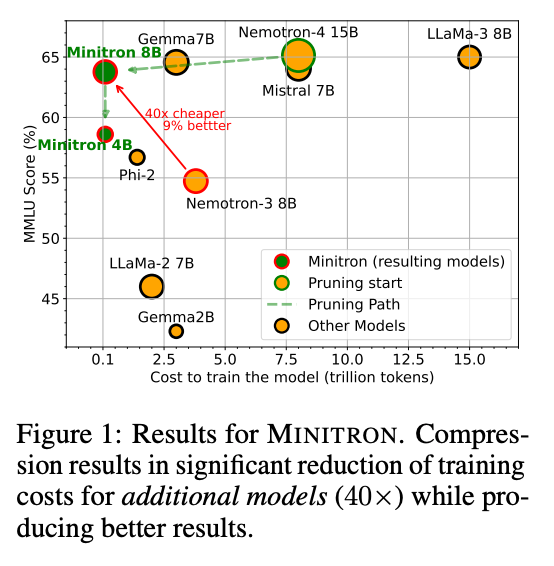

We've obliquely mentioned the 4B and 8B Minitron (Nvidia's distillations of Llama 3.1 8B) a couple times in recent weeks, but there's now a nice short 7 pager from Sreenivas & Muralidharan et al (authors of the Minitron paper last month) updating their Llama 2 results for Llama 3:

The reason this is important provides some insight on Llama 3 given Nvidia's close relatinoship with Meta:

"training multiple multi-billion parameter models from scratch is extremely time-, data- and resource-intensive. Recent work [1] has demonstrated the effectiveness of combining weight pruning with knowledge distillation to significantly reduce the cost of training LLM model families. Here, only the biggest model in the family is trained from scratch; other models are obtained by successively pruning the bigger model(s) and then performing knowledge distillation to recover the accuracy of pruned models.

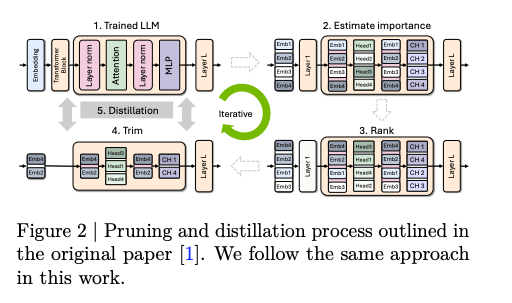

The main steps:

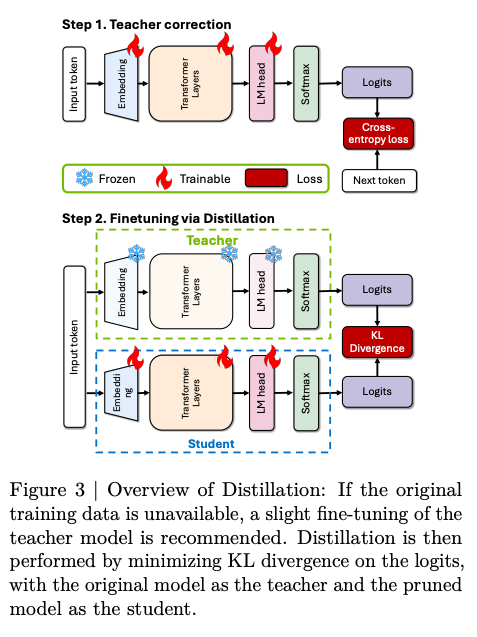

- teacher correction - lightly finetuning the teacher model on the target dataset to be used for distillation, using ∼127B tokens.

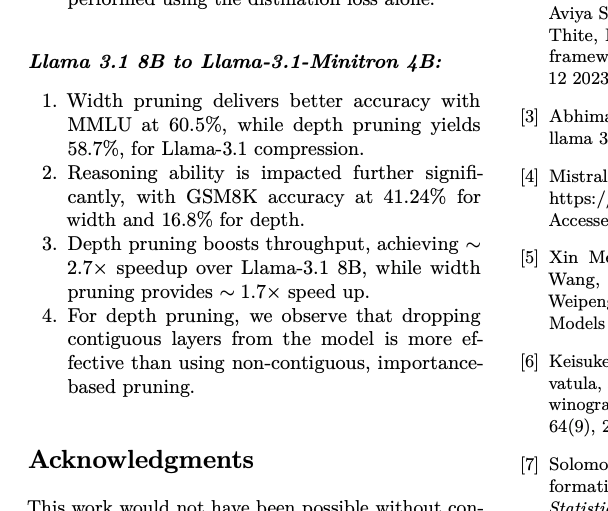

- depth or width pruning: using "a purely

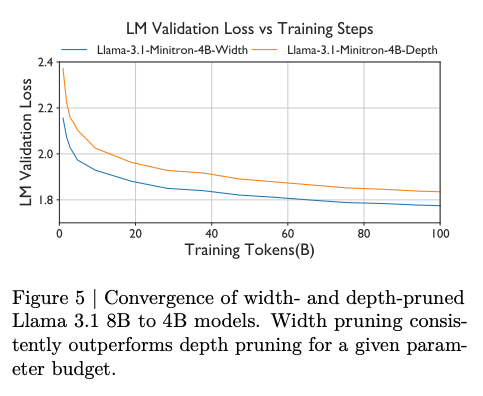

activation-based importance estimation strategy that simultaneously computes sensitivity information for all the axes we consider (depth, neuron, head, and embedding channel) using a small calibration dataset and only forward propagation passes". Width pruning consistently outperformed in ablations.

- Retraining with distillation: "real" KD, aka using KL Divergence loss on teacher and student logits.

This produces a generally across-the-board-better model for comparable sizes:

The distillation is far from lossless, however; the paper does not make it easy to read off the deltas but there are footnotes at the end on the tradeoffs.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Releases and Developments

- Jamba 1.5 Launch: @AI21Labs released Jamba 1.5, a hybrid SSM-Transformer MoE model available in Mini (52B - 12B active) and Large (398B - 94B active) versions. Key features include 256K context window, multilingual support, and optimized performance for long-context tasks.

- Claude 3 Updates: @AnthropicAI added LaTeX rendering support for Claude 3, enhancing its ability to display mathematical equations and expressions. Prompt caching is now available for Claude 3 Opus as well.

- Dracarys Release: @bindureddy announced Dracarys, an open-source LLM fine-tuned for coding tasks, available in 70B and 72B versions. It shows significant improvements in coding performance compared to other open-source models.

- Mistral Nemo Minitron 8B: This model demonstrates superior performance to Llama 3.1 8B and Mistral 7B on the Hugging Face Open LLM Leaderboard, suggesting the potential benefits of pruning and distilling larger models.

AI Research and Techniques

- Prompt Optimization: @jxmnop discussed the challenges of prompt optimization, highlighting the complexity of finding optimal prompts in vast search spaces and the surprising effectiveness of simple algorithms like AutoPrompt/GCG.

- Hybrid Architectures: @tri_dao noted that hybrid Mamba / Transformer architectures work well, especially for long context and fast inference.

- Flexora: A new approach to LoRA fine-tuning that yields superior results and reduces training parameters by up to 50%, introducing adaptive layer selection for LoRA.

- Classifier-Free Diffusion Guidance: @sedielem shared insights from recent papers questioning prevailing assumptions about classifier-free diffusion guidance.

AI Applications and Tools

- Spellbook Associate: @scottastevenson announced the launch of Spellbook Associate, an AI agent for legal work capable of breaking down projects, executing tasks, and adapting plans.

- Cosine Genie:

@swyxhighlighted a podcast episode discussing the value of finetuning GPT4o for code, resulting in the top-performing coding agent according to various benchmarks.

- LlamaIndex 0.11: @llama_index released version 0.11 with new features including Workflows replacing Query Pipelines and a 42% smaller core package.

- MLX Hub: A new command-line tool for searching, downloading, and managing MLX models from the Hugging Face Hub, as announced by @awnihannun.

AI Development and Industry Trends

- Challenges in AI Agents: @RichardSocher highlighted the difficulty of achieving high accuracy across multi-step workflows in AI agents, comparing it to the last-mile problem in self-driving cars.

- Open-Source vs. Closed-Source Models: @bindureddy noted that most open-source fine-tunes deteriorate overall performance while improving on narrow dimensions, emphasizing the achievement of Dracarys in improving overall performance.

- AI Regulation: @jackclarkSF shared a letter to Governor Newsom about SB 1047, discussing the costs and benefits of the proposed AI regulation bill.

- AI Hardware: Discussion on the potential of combining resources from multiple devices for home AI workloads, as mentioned by @rohanpaul_ai.

AI Reddit Recap

/r/LocalLlama Recap

- Exllamav2 Tensor Parallel support! TabbyAPI too! (Score: 55, Comments: 29): ExLlamaV2 has introduced tensor parallel support, enabling the use of multiple GPUs for inference. This update also includes integration with TabbyAPI, allowing for easier deployment and API access. The community expresses enthusiasm for these developments, highlighting the potential for improved performance and accessibility of large language models.

- Users express enthusiasm for ExLlamaV2's updates, with one running Mistral-Large2 at 2.65bpw with 8192 context length and 18t/s generation speed on multiple GPUs.

- Performance improvements noted, with Qwen 72B 4.25bpw showing a 20% increase from 17.5 t/s to 20.8 t/s at 2k context on 2x3090 GPUs.

- A bug affecting the draft model (qwama) was reported and promptly addressed by the developers, highlighting active community support and quick issue resolution.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI and Machine Learning Advancements

- Stable Diffusion's 2-year anniversary: On this date in 2022, the first Stable Diffusion model (v1.4) was released to the public, marking a significant milestone in AI-generated imagery.

- NovelAI open-sources original model: NovelAI has decided to open-source their original AI model, despite it having leaked previously. This move promotes transparency and collaboration in the AI community.

AI-Generated Content and Tools

- Anti-blur Flux Lora: A new tool has been developed to address blurry backgrounds in AI-generated images, potentially improving the overall quality of outputs.

- Amateur Photography Lora: A comparison of realism in AI-generated images using an Amateur Photography Lora with Flux Dev, showcasing advancements in photorealistic AI-generated content.

- Pony Diffusion V7: Progress towards developing the next version of Pony Diffusion, demonstrating ongoing improvements in specialized AI models.

Robotics and AR Technology

- Boston Dynamics pushup video: Boston Dynamics posted a video of a robot doing pushups on their official Instagram, showcasing advancements in robotic mobility and strength.

- Meta's AR glasses: Meta is set to unveil its new AR glasses in September, indicating progress in augmented reality technology from a major tech company.

AI-Related Discussions and Humor

- AI hype and expectations: A humorous post about waiting for AGI to overthrow governments sparked discussions about the current state of AI and public perceptions. Comments highlighted concerns about overhyping AI capabilities and the need for realistic expectations.

- AI video creation speculation: A video of a cat appearing to cook led to discussions about AI video generation techniques, with some users suggesting it was created using traditional video editing methods rather than AI.

Feature Requests for AI Tools

- A post highlighting a desired feature for Flux D, indicating ongoing user interest in improving AI image generation tools.

AI Discord Recap

A summary of Summaries of Summaries by Claude 3.5 Sonnet

1. AI Model Releases and Benchmarks

- Jamba 1.5 Jumps Ahead in Long Context: AI21 Labs launched Jamba 1.5 Mini (12B active/52B total) and Jamba 1.5 Large (94B active/398B total), built on the new SSM-Transformer architecture, offering a 256K effective context window and claiming to be 2.5X faster on long contexts than competitors.

- Jamba 1.5 Large achieved a score of 65.4 on Arena Hard, outperforming models like Llama 3.1 70B and 405B. The models are available for immediate download on Hugging Face and support deployment across major cloud platforms.

- Grok 2 Grabs Second Place in LMSYS Arena: Grok 2 and its mini variant have been added to the LMSYS leaderboard, with Grok 2 currently ranked #2, surpassing GPT-4o (May) and tying with Gemini in overall performance.

- The model excels particularly in math and ranks highly across other areas, including hard prompts, coding, and instruction-following, showcasing its broad capabilities in various AI tasks.

- SmolLM: Tiny But Mighty Language Models: SmolLM, a series of small language models in sizes 135M, 360M, and 1.7B parameters, has been released, trained on the meticulously curated Cosmo-Corpus dataset.

- These models, including datasets like Cosmopedia v2 and Python-Edu, have shown promising results when compared to other models in their size categories, potentially offering efficient alternatives for various NLP tasks.

2. AI Development Tools and Frameworks

- Aider 0.52.0 Adds Shell Power to AI Coding: Aider 0.52.0 introduces shell command execution, allowing users to launch browsers, install dependencies, run tests, and more directly within the tool, enhancing its capabilities for AI-assisted coding.

- The release also includes improvements like

~expansion for/readand/dropcommands, a new/resetcommand to clear chat history, and a switch togpt-4o-2024-08-06as the default OpenAI model. Notably, Aider autonomously generated 68% of the code for this release.

- The release also includes improvements like

- Cursor Raises $60M for AI-Powered Coding: Cursor announced a $60M funding round from investors including Andreessen Horowitz, Jeff Dean, and founders of Stripe and Github, positioning itself as the leading AI-powered code editor.

- The company aims to revolutionize software development with features like instant answers, mechanical refactors, and AI-powered background coders, with the ambitious goal of eventually writing all the world's software.

- LangChain Levels Up SQL Query Generation: The LangChain Python Documentation outlines strategies to improve SQL query generation using

create_sql_query_chain, focusing on how the SQL dialect impacts prompts.- It covers formatting schema information into prompts using

SQLDatabase.get_contextand building few-shot examples to assist the model, aiming to enhance the accuracy and relevance of generated SQL queries.

- It covers formatting schema information into prompts using

3. AI Research and Technical Advancements

- Mamba Slithers into Transformer Territory: The Mamba 2.8B model, a

transformers-compatible language model, has been released, offering an alternative architecture to traditional transformer models.- Users need to install

transformersfrom the main branch until version 4.39.0 is released, along withcausal_conv_1dandmamba-ssmfor optimized CUDA kernels, potentially offering improved efficiency in certain NLP tasks.

- Users need to install

- AutoToS: Automating the Thought of Search: A new paper titled "AutoToS: Automating Thought of Search" proposes automating the "Thought of Search" (ToS) method for planning with LLMs, achieving 100% accuracy on evaluated domains with minimal feedback iterations.

- The approach involves defining search spaces with code and guiding LLMs to generate sound and complete search components through feedback from unit tests, potentially advancing the field of AI-driven planning and problem-solving.

- Multimodal LLM Skips the ASR Middle Man: A researcher shared work on a multimodal LLM that directly understands both text and speech without a separate Automatic Speech Recognition (ASR) stage, built by extending Meta's Llama 3 model with a multimodal projector.

- This approach allows for faster responses compared to systems that combine separate ASR and LLM components, potentially opening new avenues for more efficient and integrated multimodal AI systems.

4. AI Industry News and Events

- Autogen Lead Departs Microsoft for New Venture: The lead of the Autogen project left Microsoft in May 2024 to start OS autogen-ai, a new company that is currently raising funds.

- This move signals potential new developments in the Autogen ecosystem and highlights the dynamic nature of AI talent movement in the industry.

- NVIDIA AI Summit India Announced: The NVIDIA AI Summit India is set for October 23-25, 2024, at Jio World Convention Centre in Mumbai, featuring a fireside chat with Jensen Huang and over 50 sessions on AI, robotics, and more.

- The event aims to connect NVIDIA with industry leaders and partners, showcasing transformative work in generative AI, large language models, industrial digitalization, supercomputing, and robotics.

- California's AI Regulation Spree: California is set to vote on 20+ AI regulation bills this week, covering various aspects of AI deployment and innovation in the state.

- These bills could significantly reshape the regulatory landscape for AI companies and researchers operating in California, potentially setting precedents for other states and countries.

5. AI Safety and Ethics Discussions

- AI Burnout Sparks Industry Concern: Discussions in the AI community have raised alarms about the potential for AI burnout, particularly in intense frontier labs, with concerns that the relentless pursuit of progress could lead to unsustainable work practices.

- Members likened AI powerusers to a "spellcasting class", suggesting that increased AI model power could intensify demands on these users, potentially exacerbating burnout issues in the field.

- AI Capabilities and Risks Demo-Jam Hackathon: An AI Capabilities and Risks Demo-Jam Hackathon launched with a $2000 prize pool, encouraging participants to create demos that bridge the gap between AI research and public understanding of AI safety challenges.

- The event aims to showcase potential AI-driven societal changes and convey AI safety challenges in compelling ways, with top projects offered the chance to join Apart Labs for further research opportunities.

- Twitter's AI Discourse Intensity Questioned: A recent tweet by Greg Brockman showing 97 hours of coding work in a week sparked discussions about the intensity of AI discourse on Twitter and its potential disconnect from reality.

- Community members expressed unease with the high-pressure narratives often shared on social media platforms, questioning whether such intensity is sustainable or beneficial for the AI field's long-term health.

- AI Engineer Meetup in London: The first AI Engineer London Meetup is scheduled for September 12th, featuring speakers like @maximelabonne and Chris Bull.

- Participants are encouraged to register here to connect with fellow AI Engineers.

- Infinite Generative Youtube Development: A team is seeking developers for their Infinite Generative Youtube platform, gearing up for a closed beta launch.

- They are looking for passionate developers to join this innovative project.

PART 1: High level Discord summaries

LM Studio Discord

- LM Studio 0.3.0 is Here with Upgrades: LM Studio has released version 0.3.0, featuring a revamped UI, improved RAG functionality, and support for running a local server using

lms.- However, users reported bugs like model loading issues, indicating the development team is actively working on fixes.

- Llama 3.1's Hardware Performance Under Review: Llama 3.1 70B q4 achieves a token rate of 1.44 t/s on a 9700X CPU with 2 channel DDR5-6000, highlighting its CPU performance capabilities.

- Users noted that GPU offloading may slow inference if the GPU's VRAM is less than half the model size.

- Debate on Apple Silicon vs Nvidia for LLMs: An ongoing discussion contrasts the M2 24gb Apple Silicon against Nvidia rigs, with reports suggesting M2 Ultra may outperform a 4090 in specific scenarios.

- However, users face limitations on fine-tuning speed with Apple Silicon, with a reported 9-hour training on a max-spec Macbook Pro.

- GPU Offloading Remains a Hot Topic: Despite user reports of issues, GPU offloading is still supported in LM Studio; users can activate it by holding the ALT key during model selection.

- Continued exploration into optimal setups remains critical as users navigate performance with various configurations.

- LLM Accuracy Sparks Concern: Discussions reveal that LLMs, such as Llama 3.1 and Phi 3, can hallucinate, especially about learning styles or specific queries, leading to verbose outputs.

- A contrasting analysis states that Claude may demonstrate better self-evaluation mechanisms despite being vague.

Nous Research AI Discord

- Nous Research Merch Store is Live!: The Nous Research merch store has officially launched, offering a variety of items including stickers with every order while supplies last.

- Check out the store here for exclusive merch!

- Hermes 3 Recovers from Mode Collapse: A member reported successfully recovering Hermes 3 from a mode collapse, allowing the model to analyze and understand the collapse, with only a single relapse afterward.

- This marks a step forward in tackling mode collapse issues prevalent in large language models.

- Introducing Mistral-NeMo-Minitron-8B-Base: The Mistral-NeMo-Minitron-8B-Base is a pruned and distilled text-to-text model, leveraging 380 billion tokens and continuous pre-training data from Nemotron-4 15B.

- This base model showcases advances in model efficiency and performance.

- Exploring Insanity in LLM Behavior: A proposal was put forth to deliberately tune an LLM for insanity, aiming to explore boundaries in unexpected behavior and insights into LLM limitations.

- This project seeks to simulate anomalies in LLM outputs, which could lead to potentially groundbreaking revelations.

- Drama Engine Framework for LLMs: A member shared their project, the Drama Engine, a narrative agent framework aimed at improving agent-like interactions and storytelling.

- They provided a link to the project's GitHub page for anyone interested in contributing or learning more: Drama Engine GitHub.

HuggingFace Discord

- LogLLM - Automating ML Experiment Logging: LogLLM automates the extraction of experimental conditions from your Python scripts with GPT4o-mini and logs results using Weights & Biases (W&B).

- It simplifies the documentation process for machine learning experiments, improving efficiency and accuracy.

- Neuralink Implements Papers at HF: A member shared that their work at Hugging Face involves implementing papers, possibly as a paid role.

- They expressed excitement about their work and emphasized the importance of creating efficient models for low-end devices.

- Efficient Models for Low-End Devices: Members have shown a keen interest in making models more efficient for low-end hardware, highlighting ongoing challenges.

- This reflects the community's focus on accessibility and practical applications in diverse environments.

- GPU Powerhouse: RTX 6000 Revealed: Users discovered the existence of the RTX 6000, boasting 48GB of VRAM for robust computing tasks.

- At a price of $7,000, it stands out as the premier choice for high-performance workloads.

- Three-way Data Splitting for Generalization: A member suggested a three-way data split to enhance model generalization during training, validation, and testing.

- The emphasis is on testing with diverse data sets to assess a model's robustness beyond mere accuracy.

Stability.ai (Stable Diffusion) Discord

- SDXL vs SD1.5: The Speed Dilemma: A user with 32 GB RAM is torn between SDXL and SD1.5, reporting sluggish image generation on their CPU. Recommendations lean towards SDXL for superior images, despite potential out-of-memory errors and the need for increased swap space.

- Keep in mind, balancing CPU speeds with image quality is the key factor for these heavy models.

- Prompting Techniques: The Great Debate: Members share their experiences on prompt techniques, with one finding success using commas while others prefer natural language prompts. This variance highlights the ongoing discourse on optimal prompting strategies for consistency.

- Participants suggest that the effectiveness of prompts greatly depends on personal preference and experimentation.

- ComfyUI and Flux Installation Woes: A user faces challenges installing iPadaper on ComfyUI, prompting suggestions to venture into the Tech Support channel for assistance. Another user struggles with Flux, trying different prompts to overcome noisy, low-quality outputs.

- This underscores the community's shared trials and errors as they fine-tune their setups in pursuit of creative objectives.

- GPU RAM: The Weight of Performance: Questions arise about adjusting GPU weights in ComfyUI while using Flux on a 16GB RTX 3080. A user with a 4GB GPU reports frustrating slowdowns in A1111, indicative of GPU power's impact on image generation.

- This exchange suggests a critical need for robust hardware to enable smoother performance across various models.

- Stable Diffusion Guides Galore: A user requests recommendations for Stable Diffusion installation guides, with Automatic1111 and ComfyUI suggested as good starting points. AMD cards, though usable, are noted for slower performance.

- The Tech Support channel is highlighted as a valuable resource for troubleshooting and guidance.

aider (Paul Gauthier) Discord

- Aider 0.52.0 Release: Shell Command Execution & More: Aider 0.52.0 introduces shell command execution, allowing users to launch a browser, install dependencies, and run tests directly within the tool. Key updates include

~expansion for commands, a/resetcommand for chat history, and a model switch togpt-4o-2024-08-06.- Autonomously, Aider generated 68% of the code for this version, underlining its advancing capabilities in software development.

- Training Set for Aider: Meta-Format Exploration: A member is assembling a training set of Aider prompt-code pairs to create an efficient meta-format for various coding techniques using tools like DSPY, TEXTGRAD, and TRACE. This initiative includes a co-op thread for deeper brainstorming on optimization.

- The aim is to refine both code and prompts for better reproducibility, enhancing Aider's effectiveness in generating code.

- Using Aider for Token Optimization: A user seeks documentation on optimizing token usage for small Python files that exceed OpenAI's limits, specifically when handling complex tasks needing multi-step processes. They are looking for strategies to reduce token context within their projects.

- They specifically request advancements in calculations and rendering optimizations, underlining the need for improved resource management.

- Cursor's Vision for AI-Powered Code Creation: Cursor's blog post depicts aspirations for developing an AI-powered code editor that could potentially automate extensive code writing tasks. Features include instant responses, refactoring, and expansive changes made in seconds.

- Future enhancements aim at enabling background coding, pseudocode modifications, and bug detection, revolutionizing how developers interact with code.

- LLMs in Planning: AutoToS Paper Insights: AutoToS: Automating Thought of Search proposes automating the planning process with LLMs, showcasing its effectiveness in achieving 100% accuracy in diverse domains. The approach allows LLMs to define search spaces with code, enhancing the planning methodology.

- The paper identifies challenges in search accuracy and articulates how AutoToS uses feedback from unit tests to guide LLMs, reinforcing the quest for soundness in AI-driven planning.

Latent Space Discord

- Autogen Lead Takes Off From Microsoft: The lead of the Autogen project departed from Microsoft in May 2024 to initiate OS autogen-ai and is currently raising funds.

- This shift has sparked discussions about new ventures in AI coding standards and collaborations.

- Cursor AI Scores $60M Backing: Cursor successfully raised $60M from high-profile investors like Andreessen Horowitz and Jeff Dean, claiming to reinvent how to code with AI.

- Their products aim to develop tools that could potentially automate code writing on a massive scale.

- California Proposes New AI Regulations: California is set to vote on 20+ AI regulation bills this week, summarized in this Google Sheet.

- These bills could reshape the landscape for AI deployment and innovation in the state.

- Get Ready for the AI Engineer Meetup!: Join the first AI Engineer London Meetup on the evening of September 12th, featuring speakers like @maximelabonne and Chris Bull.

- Register via this link to connect with fellow AI Engineers.

- Taxonomy Synthesis Supports AI Research: Members discussed leveraging Taxonomy Synthesis for organizing writing projects hierarchically.

- The tool GPT Researcher was highlighted for its ability to autonomously conduct in-depth research, enhancing productivity.

OpenRouter (Alex Atallah) Discord

- OpenRouter Decommissions Several Models: Effective 8/28/2024, OpenRouter will deprecate multiple models, including

01-ai/yi-34b,phind/phind-codellama-34b,nousresearch/nous-hermes-2-mixtral-8x7b-sft, and the complete Llama series, making them unavailable for users.- Users are advised of this policy through Together AI's deprecation document, which outlines the migration options.

- OpenRouter's Pricing Mishap: A user incurred a $0.01 charge after mistakenly selecting a paid model, illustrating a potential issue for newcomers unfamiliar with the interface.

- In response, the community reassured the user that OpenRouter would not pursue charges for such a low balance, promoting a non-threatening environment for AI exploration.

- Token Counting Confusion Clarified: A discussion emerged on OpenRouter's token counting mechanism after a user reported a 100+ token charge for a simple prompt, revealing complexities in token calculations.

- Members clarified that OpenRouter forwards token counts from OpenAI's API, with variances influenced by system prompts and prior context in the chat.

- Grok 2 Shines on LMSYS Leaderboard: Grok 2 and its mini variant secured positions on the LMSYS leaderboard, with Grok 2 ranking #2, even overtaking GPT-4o in performance metrics.

- The model excels particularly in math and instruction-following but also demonstrates high capability in coding challenges, raising discussions on its overall performance profile.

- OpenRouter Team Remains Mysterious: There was an inquiry about the OpenRouter team's current projects, but unfortunately, no detailed response was provided, leaving members curious.

- This lack of information highlights an ongoing interest in the development activities of OpenRouter, but specifics remain elusive.

Modular (Mojo 🔥) Discord

- Mojo's Open Source Licensing Dilemma: The question of Mojo's open source status arose, with Modular navigating licensing details to protect their market niche while allowing external use.

- They aim for a more permissive licensing model over time, maintaining openness while safeguarding core product features.

- Max Integration Blurs Lines with Mojo: Max functionalities now deeply integrate with Mojo, initially designed as separate entities, raising questions about future separation.

- Discussions suggest that this close integration will influence licensing possibilities and product development pathways.

- Modular's Commercial Focus on Managed AI: Modular is focusing on managed AI cloud applications, allowing continued investment in Mojo and Max while licensing Max for commercial applications.

- They introduced a licensing approach that encourages open development and aligns with their strategic business objectives.

- Paving the Way for Heterogeneous Compute: Modular is targeting portable GPU programming across heterogeneous compute scenarios, facilitating wider access to advanced computing tools.

- Their goal is to provide frameworks that simplify integration for developers seeking advanced computational capabilities.

- Async Programming Found a Place in Mojo: Users discussed the potential for asynchronous functionality in Mojo, particularly for I/O tasks, likening it to Python's async capabilities.

- The conversation included exploring a 'sans-io' HTTP implementation, emphasizing thread safety and proper resource management.

Perplexity AI Discord

- Perplexity Internals Dilemma: Users are seeking data on the frequency of follow-ups in Perplexity, including time spent and back-and-forth interactions, but responses indicate this data may be proprietary.

- This raises concerns about transparency and usability for engineers working on performance improvements.

- Perplexity Pro Source Count Mystery: A noticeable drop in the source count displayed in Perplexity Pro from 20 or more to 5 or 6 for research inquiries has sparked questions on whether there were changes to the service or incorrect usage.

- This inconsistency highlights the need for clarity in source management and potential impacts on research quality.

- Exploring Email Automation Tools: Users are diving into AI tools for automating emails, mentioning Nelima, Taskade, Kindo, and AutoGPT as contenders while seeking further recommendations.

- The exploration indicates a growing interest in streamlining communication processes through AI efficiencies.

- Perplexity AI Bot Seeks Shareable Threads: The Perplexity AI Bot encourages users to ensure their threads are 'Shareable' by providing links to the Discord channel for reference.

- This push for shareable content suggests a focus on enhancing community engagement and resource sharing.

- Social Sentiment Around MrBeast: Discussion surfaced around the internet's perception of MrBeast, with users linking to a search query for insights into the potential dislike.

- This conversation reflects broader trends in digital celebrity culture and public opinion dynamics.

OpenAccess AI Collective (axolotl) Discord

- Base Phi 3.5 Not Released: A member highlighted the absence of the base Phi 3.5, indicating that only the instruct version has been released by Microsoft. This poses challenges for those wishing to fine-tune the model without base access.

- Exploring the limits of availability, they seek solutions for fine-tuning using the available instruct version.

- QLORA + FSDP Hardware Needs: Discussion on running QLORA + FSDP centered around the requirement for an 8xH100 configuration. Members also noted inaccuracies with the tqdm progress bar when enabling warm restarts during training.

- Performance monitoring remains a challenge, prompting calls for refining the tracking tools available within the framework.

- SmolLM: A Series of Small Language Models: SmolLM includes small models of 135M, 360M, and 1.7B parameters, all trained on the high-quality Cosmo-Corpus. These models incorporate various datasets like Cosmopedia v2 and FineWeb-Edu to ensure robust training.

- Curated choice of datasets, aims to provide balanced language understanding under varying conditions.

- Mode-Aware Chat Templates in Transformers: A user reported an issue regarding mode-aware chat templates on the Transformers repository, suggesting this feature could distinguish training and inference behaviors. This could resolve existing problems linked to chat template configurations.

- Details are outlined in a GitHub issue which proposes implementing a template_mode variable.

OpenAI Discord

- GPT-3.5: Outdated or Interesting?: A discussion emerged on whether testing GPT-3.5 is still relevant as it may be considered outdated given the advancements in post-training techniques.

- Some members suggested it may lack significance compared to newer models like GPT-4.

- Exploring Email Automation Alternatives: Users sought tools for automating email tasks, looking for alternatives beyond Nelima for emailing based on prompts.

- This indicates a growing need for automation solutions in everyday workflows.

- SwarmUI: Praise for User Experience: SwarmUI received accolades for its user-friendly interface and compatibility with both NVIDIA and AMD GPUs.

- Users highlighted its intuitive design, making it a preferred choice for many developers.

- Knowledge Files Formatting Dilemma: A user questioned the efficacy of using XML versus Markdown for knowledge files in their project, aiming for optimal performance.

- This inquiry reflects the ongoing debate about the best practices for structuring content in GPTs.

- Inconsistent GPT Formatting Creates Frustration: Concerns about inconsistent output formatting in GPT responses were raised, specifically regarding how some messages conveyed structured content while others did not.

- Users are looking for solutions to standardize formatting to enhance readability and user experience.

Eleuther Discord

- Mastering Multi-Turn Prompts: A user highlighted the importance of including

n-1turns in multi-turn prompts, referencing a code example from the alignment handbook.- They explored the viability of gradually adding turns to prompt generation but raised concerns about its comparative effectiveness.

- SmolLM Model Insights: The SmolLM model was discussed, noting its training data sourced from Cosmo-Corpus, which includes Cosmopedia v2 among others.

- SmolLM models range from 135M to 1.7B parameters, showing notable performance within their size category.

- Mamba Model Deployment Help: Information was shared on the Mamba 2.8B model, which works seamlessly with the

transformerslibrary.- Instructions for setting up dependencies like

causal_conv_1dand using thegenerateAPI were provided for text generation.

- Instructions for setting up dependencies like

- Innovative Model Distillation Techniques: A suggestion was made to apply LoRAs to a 27B model and distill logits from a smaller 9B model, aiming to replicate functionality in a condensed form.

- This approach could potentially streamline large model performances in smaller architectures.

- Strategies for Model Compression: Proposals for compressing model sizes included techniques such as zeroing out parameters and applying quantization methods, with a reference to the paper on quantization techniques.

- Techniques discussed aim to enhance efficiency while managing size.

Cohere Discord

- Cohere API Error on Invalid Role: A user reported an HTTP-400 error while using the Cohere API, indicating that the role provided was invalid, with acceptable options being 'User', 'Chatbot', 'System', or 'Tool'.

- This highlights the need for users to verify role parameters before API calls.

- Innovative Multimodal LLM Developments: One member showcased a multimodal LLM that interprets both text and speech seamlessly, eliminating the separate ASR stage with a direct multimodal projector linked to Meta's Llama 3 model.

- This method accelerates response times by merging audio processing and language modeling without latency from separate components.

- Cohere's New Schema Object Excites Users: Enthusiasm grew around the newly introduced Cohere Schema Object for its ability to facilitate structured multiple actions in a single API request, aiding in generative fiction tasks.

- Users reported it assists in generating complex prompt responses and content management efficiently.

- Cohere Pricing - Token-Based Model: The pricing structure for Cohere’s models, such as Command R, is based on a token system, where each token carries a cost.

- A general guideline indicates that one word equals approximately 1.5 tokens, crucial for budgeting use.

- Cohere Models Set to Land on Hugging Face Hub: Plans are underway to package and host all major Cohere models on the Hugging Face Hub, creating an accessible ecosystem for developers.

- This move has generated excitement among members keen to utilize these resources in their projects.

Interconnects (Nathan Lambert) Discord

- AI Burnout Raises Red Flags: Concerns over AI burnout are escalating as members note that AI faces far greater burnout risks than humans, particularly in intense frontier labs, making for a sustainability issue.

- This discussion highlights the worrying trend of relentless workloads and the potential long-term implications on mental health in the AI community.

- AI Powerusers as Spellcasters: A member compared AI powerusers to a spellcasting class, emphasizing their constant tool usage which breeds stress and potential burnout.

- With advancements in AI models, the demands placed on these users may escalate, intensifying the burnout cycle already observed.

- The Endless Model Generation Trap: The quest for the next model generation is being scrutinized, with fears that the cyclical chase could culminate in severe industry burnout.

- Predictive models suggest a shift in burnout trends, linked to the accelerating pace of AI progress and its toll on developers.

- Twitter Anxiety Strikes Again: A recent Greg Brockman Twitter post showcasing 97 hours of coding in a single week sparked conversation about the pressures stemming from heightened intensity in AI discourse online.

- Participants voiced concern that the vibrant yet anxiety-inducing Twitter scene may detract from real-world engagement, highlighting a concerning disconnect.

LAION Discord

- Dev Recruitment for Infinite Generative Youtube: A team seeks developers for their Infinite Generative Youtube platform, launching a closed beta soon.

- They're particularly interested in enthusiastic developers to join their innovative project.

- Text-to-Speech Models for Low-Resource Languages: A user is eager to train TTS models for Hindi, Urdu, and German, aiming for a voice assistant application.

- This venture focuses on enhancing accessibility in low-resource language processing.

- WhisperSpeech's Semantic Tokens for ASR Exploration: Inquiries surfaced regarding the use of WhisperSpeech semantic tokens to enhance ASR in low-resource languages through a tailored training process.

- The proposed method includes fine-tuning a small decoder model using semantic tokens from audio and transcriptions.

- SmolLM: Smaller Yet Effective Models: SmolLM offers three sizes (135M, 360M, and 1.7B parameters), trained on the Cosmo-Corpus, showcasing competitive performance.

- The dataset includes Cosmopedia v2 and Python-Edu, indicating a strong focus on quality training sets.

- Mamba's Compatibility with Transformers: The Mamba language model comes with a transformers compatible mamba-2.8b version, requiring specific installations.

- Users need to set up 'transformers' until version 4.39.0 is released to utilize the optimized cuda kernels.

LangChain AI Discord

- Graph Memory Saving Inquiry: Members discussed whether memory can be saved as a file to compile new graphs, and if the same memory could be reused across different graphs.

- Is it per graph or shared? was the core question, with a keen interest in optimizing memory usage.

- Improving SQL Queries with LangChain: The LangChain Python Documentation provided new strategies for enhancing SQL query generation through create_sql_query_chain, focusing on SQL dialect impacts.

- Learn how to format schema information using SQLDatabase.get_context to improve the prompt's effectiveness in query generation.

- Explicit Context in LangChain: To use context like

table_infoin LangChain, you must explicitly pass it when invoking the chain, as shown in the documentation.- This approach ensures your prompts are tailored to provided context, showcasing the flexibility of LangChain.

- Deployment of Writer Framework to Hugging Face: A blog post explored deploying Writer Framework apps to Hugging Face Spaces using Docker, showcasing the ease of deployment for AI applications.

- The Writer Framework provides a drag-and-drop interface similar to frameworks like Streamlit and Gradio, aimed at simplifying AI app development.

- Hugging Face Spaces as a Deployment Venue: The noted blog post detailed the deployment process on Hugging Face Spaces, emphasizing Docker's role in hosting and sharing AI apps.

- Platforms like Hugging Face provide excellent opportunity for developers to showcase their projects, driving community engagement.

DSPy Discord

- Adalflow Launches with Flair: A member highlighted Adalflow, a new project from SylphAI, expressing interest in its features and applications.

- Adalflow aims to optimize LLM task pipelines, providing engineers with tools to enhance their workflow.

- DSpy vs Textgrad vs Adalflow Showdown: Curiosity brewed over the distinctions between DSpy, Textgrad, and Adalflow, specifically about when to leverage each module effectively.

- It was noted that LiteLLM will solely manage query submissions for inference, hinting at performance capabilities across these modules.

- New Research Paper Alert!: A member shared a link to an intriguing paper on ArXiv titled 2408.11326, encouraging fellow engineers to check it out.

- Details about the paper were not disclosed, but its presence indicates ongoing contributions to the DSPy community.

OpenInterpreter Discord

- Seek Open Interpreter Brand Guidelines: A user inquired about the availability of Open Interpreter brand guidelines, indicating a need for clarity on branding.

- Could you share where to find those guidelines?

- Surprising Buzz Around Phi-3.5-mini: Users expressed unexpected approval for the performance of Phi-3.5-mini, sparking discussions that brought Qwen2 into the spotlight.

- The positive feedback caught everyone off guard!

- Python Script Request for Screen Clicks: A user sought a Python script capable of executing clicks on specified screen locations based on text commands, like navigating in Notepad++.

- How do I make it click on the file dropdown?

- --os mode Could Be a Solution: In response to the script query, it was suggested that using the --os mode might solve the screen-clicking challenge.

- This could streamline operations significantly!

- Exciting Announcement for Free Data Analytics Masterclass: A user shared an announcement for a free masterclass on Data Analytics, promoting real-world applications and practical insights.

- Interested participants can register here and share in the excitement over potential engagement.

Gorilla LLM (Berkeley Function Calling) Discord

- Gorilla and Huggingface Leaderboards Now Align: A member queried the scores on the Gorilla and Huggingface leaderboards, which were initially inconsistent. The discrepancy has been resolved as the Huggingface leaderboard now mirrors the Gorilla leaderboard.

- This alignment indicates a more reliable comparison for users evaluating model performance across platforms.

- Llama-3.1-Storm-8B Debuts on Gorilla Leaderboard: A user submitted a Pull Request to add Llama-3.1-Storm-8B to the Gorilla Leaderboard for benchmarking. The PR will undergo review as the model recently completed its release.

- The inclusion of this model showcases the community's ongoing commitment to updating benchmarking frameworks.

- Guidance Requested for REST API Test Pairs: Inquiring users sought advice on crafting 'executable test pairs' for their REST API functionality, pointing to existing pairs from the Gorilla leaderboard. They showed a preference for tests that are both 'real' and 'easy' to implement.

- This indicates a demand for more practical testing resources and methods in API development.

- Clarification on Executable Test Pairs Needed: Another discussion arose regarding the term 'executable test pairs', with users seeking a clearer understanding of its relevance in REST API testing. This reveals a gap in conceptual clarity for members.

- Insight into this terminology could enhance comprehension and application in their testing strategies.

AI21 Labs (Jamba) Discord

- Jamba 1.5 Mini & Large Hit the Stage: AI21 Labs launched Jamba 1.5 Mini (12B active/52B total) and Jamba 1.5 Large (94B active/398B total), based on the new SSM-Transformer architecture, offering superior long context handling and speed over competitors.

- The models feature a 256K effective context window, claiming to be 2.5X faster on long contexts than rivals.

- Jamba Dominates Long Contexts: Jamba 1.5 Mini boasts a leading score of 46.1 on Arena Hard, while Jamba 1.5 Large achieved 65.4, outclassing even Llama 3.1's 405B.

- The jump in performance makes Jamba a significant contender in the long context space.

- API Rate Limits Confirmed: Users confirmed API rate limits for usage at 200 requests per minute and 10 requests per second, settling concerns on utilization rates.

- This information was found by users after initial inquiries.

- No UI Fine-Tuning for Jamba Yet: Clarification was provided regarding Jamba's fine-tuning; it's only available for the instruct version and currently not accessible through the UI.

- This detail raises questions for developers relying on UI for adjustments.

- Jamba's Filtering Features Under Spotlight: Discussions emerged on Jamba's filtering capabilities, particularly for roleplaying scenarios involving violence.

- Members expressed curiosity about these built-in features to ensure safe interactions.

MLOps @Chipro Discord

- NVIDIA AI Summit India Ignites Excitement: The NVIDIA AI Summit India takes place from October 23-25, 2024, at Jio World Convention Centre in Mumbai, featuring Jensen Huang among other industry leaders during over 50 sessions.

- The summit focuses on advancing fields such as generative AI, large language models, and supercomputing, aiming to highlight transformative works in the industry.

- AI Hackathon Offers Big Incentives: The AI Capabilities and Risks Demo-Jam Hackathon has launched with a $2000 prize pool, where top projects could partner with Apart Labs for research opportunities.

- This initiative aims to create demos addressing AI impacts and safety challenges, encouraging clear communication of complex concepts to the public.

- Exciting Kickoff for the Hackathon: The hackathon kicked off with an engaging opening keynote on interactive AI displays, followed by team formation and project brainstorming.

- Participants benefit from expert mentorship and resources, while the event is live-streamed on YouTube for wide accessibility.

tinygrad (George Hotz) Discord

- Tinygrad's Mypyc Compilation Quest: A member expressed interest in compiling tinygrad with mypyc, currently investigating its feasibility.

- The original poster invited others to contribute to this effort, emphasizing a collaborative spirit.

- Join the Quest!: The original poster invited others to contribute to the tinygrad compilation effort with mypyc.

- Engagement is encouraged as they explore this new venture.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!