[AINews] not much happened today

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

a quiet day.

AI News for 3/12/2025-3/13/2025. We checked 7 subreddits, 433 Twitters and 28 Discords (222 channels, and 5887 messages) for you. Estimated reading time saved (at 200wpm): 616 minutes. You can now tag @smol_ai for AINews discussions!

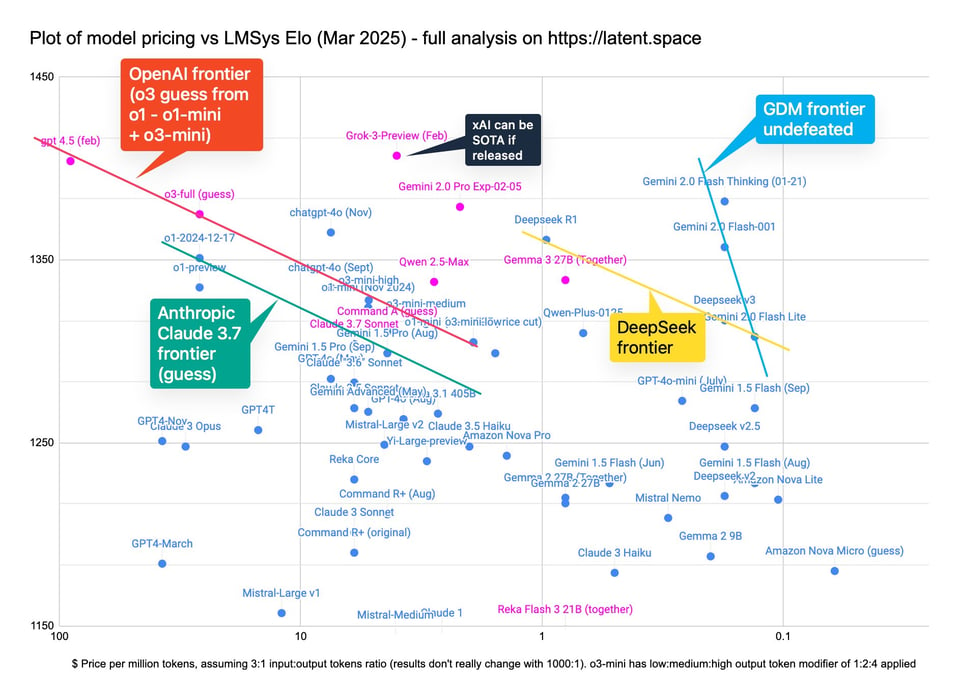

This is the state of models after yesterday's Gemma 3 drop and today's Command A:

the Windsurf talk from AIE NYC is somehow doing even better than the MCP workshop.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

outage in our scraper today; sorry.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek R1's FP8 training and efficiency prowess

- Does Google not understand that DeepSeek R1 was trained in FP8? (Score: 441, Comments: 79): DeepSeek R1 was trained using FP8 precision, which raises questions about whether Google understands this aspect in their analysis, as implied by the post's title. The Chatbot Arena Elo Score graph shows DeepSeek R1 outperforming Gemma 3 27B, with scores of 1363 and 1338 respectively, and notes the significant computational resources required, including 32 H100s and 2,560GB VRAM.

- Discussions highlight the efficiency of FP8 precision in model storage and processing, emphasizing that upcasting weights to a wider format like BF16 doesn't improve precision. The conversation also touches on the trade-offs in quantization, with FP8 allowing for smaller models and potentially faster inference due to reduced memory requirements.

- Users debate the hardware requirements for running large models like DeepSeek R1, noting that while H100 GPUs can handle FP8 models, legacy hardware may require different approaches. Some comments mention running large models on consumer-grade GPUs with slower performance, highlighting the flexibility and challenges of model deployment across different systems.

- There is skepticism about the accuracy and utility of charts and benchmarks in the AI industry, with some users expressing distrust of the data presented in corporate materials. NVIDIA's blog post is cited as a source for running DeepSeek R1 efficiently, and there is criticism of the potential misleading nature of AI-generated charts.

- OpenAI calls DeepSeek 'state-controlled,' calls for bans on 'PRC-produced' models | TechCrunch (Score: 183, Comments: 154): NVIDIA demonstrates DeepSeek R1 running on 8xH200s, with OpenAI labeling DeepSeek as "state-controlled" and advocating for bans on "PRC-produced" models, according to TechCrunch.

- Discussions highlight skepticism towards OpenAI's motives, with many users criticizing Sam Altman for attempting to stifle competition by labeling DeepSeek as "state-controlled" to protect OpenAI's business model. Users argue that DeepSeek offers more open and affordable alternatives compared to OpenAI's offerings, which they view as monopolistic and restrictive.

- The conversation emphasizes the accessibility and openness of DeepSeek's models, noting that they can be run locally or on platforms like Hugging Face, which counters claims about compliance with Chinese data demands. Comparisons are drawn to US companies also being subject to similar legal obligations under the CLOUD Act.

- Many commenters express frustration with OpenAI's stance, viewing it as a barrier to open-source AI development and innovation. They criticize the company's attempts to influence government regulations to curb competition, contrasting it with the democratization of AI models like DeepSeek and Claude.

Theme 2. Gemma 3's Technical Highlights and Community Impressions

- The duality of man (Score: 424, Comments: 59): Gemma 3 receives mixed reviews on r/LocalLLaMA, with one post praising its creative and worldbuilding capabilities and another criticizing its frequent mistakes, suggesting it is less effective than phi4 14b. The critique post has significantly more views, 23.7k compared to 5.1k for the appreciation post, indicating greater engagement with the negative feedback.

- Several users discuss the language support capabilities of Gemma 3, noting that the 1B version only supports English, while 4B and above models support multiple languages. This limitation is highlighted in the context of handling Chinese and other languages, with users expressing the need for models to handle multilingual tasks effectively.

- There are concerns about the instruction template and tokenizer issues affecting Gemma 3, with users noting that the model is extremely sensitive to template errors, resulting in incoherent outputs. This sensitivity is contrasted with previous models like Gemma 2, which handled custom formats better, and some users have adapted by tweaking their input formatting to achieve better results.

- Discussions highlight the dual nature of Gemma 3 in performing tasks, where it excels in creative writing but struggles with precision tasks like coding. Users note that while it may generate interesting ideas, it often makes logical errors, and there is speculation that these issues may be related to the tokenizer or other model-specific bugs.

- AMA with the Gemma Team (Score: 279, Comments: 155): The Gemma research and product team from DeepMind will be available for an AMA to discuss the Gemma 3 Technical Report and related resources. Key resources include the technical report here, and additional platforms for exploration such as AI Studio, Kaggle, Hugging Face, and Ollama.

- Several users raised concerns about the Gemma 3 model's licensing terms, highlighting issues such as potential "viral" effects on derivatives and the ambiguity of rights regarding outputs. The Gemma Terms of Use were critiqued for their complex language, leading to confusion about what constitutes a "Model Derivative" and the implications for commercial use.

- Discussions about model architecture and performance included inquiries into the rationale behind design choices such as the smaller hidden dimension with more layers, and the impact of the 1:5 global to local attention layers on long context performance. The team explained that these choices were made to balance performance with latency and memory efficiency, maintaining a uniform width-vs-depth ratio across models.

- Users expressed interest in future developments and capabilities of the Gemma models, such as the possibility of larger models between 40B and 100B, the introduction of voice capabilities, and the potential for function calling and structured outputs. The team acknowledged these interests and hinted at upcoming examples and improvements in these areas.

- AI2 releases OLMo 32B - Truly open source (Score: 279, Comments: 42): AI2 has released OLMo 32B, a fully open-source model that surpasses GPT 3.5 and GPT 4o mini. The release includes all artifacts such as training code, pre- and post-train data, model weights, and a reproducibility guide, allowing researchers and developers to modify any component for their projects. AI2 Blog provides further details.

- Hugging Face Availability: OLMo 32B is available on Hugging Face and works with transformers out of the box. For vLLM, users need the latest main branch version or wait for version 0.7.4.

- Open Source Practice: The release is celebrated for its true open-source nature, with Apache 2.0 licensing and no additional EULAs, making it accessible for individual developers to build models from scratch if they have GPU access. This aligns with the trend of open AI development, as noted by several commenters.

- Model Features and Context: The model supports 4k context, as indicated by the config file, and further context size extensions are in progress. It's noted for being efficient, with inference possible on one GPU and training on one node, fitting well with 24 gigs of VRAM.

Theme 3. Innovation in Large Language Models: Cohere's Command A

- CohereForAI/c4ai-command-a-03-2025 · Hugging Face (Score: 192, Comments: 72): Cohere has introduced a new model called Command A, accessible on Hugging Face under the repository CohereForAI/c4ai-command-a-03-2025. Further details about the model's capabilities or specifications were not provided in the post.

- Pricing and Performance: The Command A model costs $2.5/M input and $10/M output, which some users find expensive for a 111B parameter model, comparable to GPT-4o via API. It is praised for its performance, especially in business-critical tasks and multilingual capabilities, and can be deployed on just two GPUs.

- Comparisons and Capabilities: Users compare Command A to other models like GPT4o, Deepseek V3, Claude 3.7, and Gemini 2 Pro, noting its high instruction-following score and solid programming skills. It is considered a major improvement over previous Command R+ models and is praised for its creative writing abilities.

- Licensing and Hosting: There is a discussion about the model's research-only license, which some find limiting, and the need for a new license that allows for commercial use of outputs while restricting commercial hosting. Users are interested in local hosting capabilities and fine-tuning tools for the model.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Claude 3.7 Sonnet Creates Unbeatable AI in Arcade Games

- Claude 3.7 Sonnet making 3blue1brown kind of videos. Learning will be much different for this generation (Score: 176, Comments: 20): Claude 3.7 is generating content similar to 3blue1brown videos, indicating a shift in how learning materials are produced and consumed. This development suggests a transformative impact on educational methods for the current generation.

- Curator Tool: The code executor on curator was used to create the content, and it's available on GitHub.

- AI Impact on Education: There is a strong belief that AI will revolutionize public education, but it requires abandoning traditional learning methods. The discussion highlights concerns about over-reliance on AI, as seen in the unnoticed error regarding the maximal area of circles.

- Trust in AI: Skepticism is expressed about the current trust in AI, with a specific example of a mathematical error in the AI-generated content that many missed, illustrating potential pitfalls in learning accuracy when using AI tools.

- I asked Claude to make a simple Artillery Defense arcade game. Then I used Claude to design a CPU player that couldn't lose. (Score: 247, Comments: 49): The post discusses using Claude, an AI model, to create a simple Artillery Defense arcade game and subsequently designing a CPU player that is unbeatable. The author implies a successful implementation of AI in game design, showcasing Claude's capabilities in generating both game mechanics and an invincible CPU player.

- Token Limit Challenges: Users like Tomas_Ka and OfficialHashPanda discuss the challenges of using Claude for coding tasks due to token limitations, with Tomas_Ka noting issues when attempting a simple website project. Craygen9 mentions using VSCode with GitHub Copilot and managing a codebase of around 2,000 lines, highlighting that the process slows as the code grows.

- Game Development Process: Craygen9 shares a detailed account of developing the Artillery Defense game, emphasizing the use of HTML and JavaScript with Sonnet 3.7. The game, comprising 1,500 lines of code, was iteratively refined with features like scaling difficulty, power-up crates, and sound, with Claude assisting in designing a CPU player that plays perfectly.

- Graphics and Iterations: The graphics for the game were generated by Claude using CSS, with multiple iterations needed for improvements. Craygen9 explains the evolution from basic graphics to more polished arcade-style visuals, detailing the iterative process that included adding points, power-ups, sound effects, and a loading screen, all without external libraries or assets.

Theme 2. Gemini 2.0 Flash: Native Image Generation Now Available

- Google released native image generation in Gemini 2.0 Flash (Score: 247, Comments: 52): Google released the Gemini 2.0 Flash with native image generation capabilities, available for free in AI Studio. The feature is still experimental but has received positive feedback for its performance. Further details can be found in the full article.

- There is a significant debate over whether Google Gemini 2.0 Flash is truly open source, with users clarifying that while it's free to use, it does not equate to open source. Educational_End_2473 and ReasonablePossum_ emphasize the distinction, noting that open source allows for modification and redistribution, which this release does not.

- Diogodiogogod and EuphoricPenguin22 discuss the subreddit rules concerning open source content, highlighting a community preference for open source tools and questioning the downvoting of comments that enforce these rules. They argue that the subreddit often favors simple, visual content over complex technical discussions.

- Inferno46n2 suggests that even though Gemini 2.0 Flash is not open source, it remains useful due to its free accessibility, except under heavy usage conditions. However, very_bad_programmer insists on a strict interpretation, stating that "not open source means not open source," leaving no room for gray areas.

- Guy asked Gemini to only respond with images and it got creepy (Score: 165, Comments: 42): The post discusses an interaction with Google's Gemini AI, where the user requested responses solely in image format, leading to unexpectedly unsettling results. The lack of text in the AI's replies and the unspecified nature of the images contributed to a creepy experience.

- AI Response Interpretation: Commenters speculate on the meaning behind Gemini AI's response, suggesting it was a mix of prompts like "you are a visual storyteller" and "what is the meaning of life," leading to a garbled output that seemed to convey deep feelings but was actually a reflection of the input text. Astrogaze90 suggests it was trying to express that the meaning of life is tied to one's own existence and soul.

- Emotional and Conceptual Themes: DrGutz explains that using the word "scared" might have triggered the AI to generate unsettling images and concepts, demonstrating how AI processes emotional triggers. Some users, like KairraAlpha, interpret the AI's output as a philosophical statement about unity and existence, while others humorously reference pop culture, like Plums_Raider with a quote from "The Simpsons."

- User Reactions and Humor: Several users, like Nekrips, responded humorously to the AI's output, with some comments being nonsensical or playful, such as Zerokx's interpretation of the AI saying "I love lasagna," showing a mix of serious and lighthearted engagement with the AI's unexpected behavior.

Theme 3. Dramatically Enhance Video AI Quality with Wan 2.1

- Dramatically enhance the quality of Wan 2.1 using skip layer guidance (Score: 346, Comments: 85): Skip layer guidance can dramatically enhance the quality of Wan 2.1. Without further context or details from the post body, additional specifics regarding the implementation or results are not provided.

- Kijai's Implementation and Wan2GP: Kijai has implemented skip layer guidance in the WanVideoWrapper on GitHub, which users can clone and run using specific scripts. Wan2GP is designed for low VRAM consumer cards, supporting video generation on cards with as little as 6GB of VRAM for 480p or 12GB for 720p videos.

- Technical Insights on Skip Layer Guidance: The skip layer technique involves skipping certain layers during unconditional video denoising to improve the result, akin to perturbed attention guidance. Users report that skipping later layers often results in video corruption, while skipping layers during specific inference steps may be more effective.

- User Experiences and Experimentation: Users have shared mixed experiences, with some reporting successful tests and others noting issues like sped-up or slow-motion videos when skipping certain layers. Discussions highlight the importance of experimenting with different layers to optimize video quality, as some layers may be critical for maintaining video coherence or following prompts.

- I have trained a new Wan2.1 14B I2V lora with a large range of movements. Everyone is welcome to use it. (Score: 279, Comments: 47): The post announces the training of a new Wan2.1 14B I2V lora model with an extensive range of movements, inviting others to utilize it. No additional details or links are provided in the post body.

- Model Training and Usage: Some_Smile5927 shared detailed information about the Wan2.1 14B I2V 480p v1.0 model, including its training on the Wan.21 14B I2V 480p model with a trigger word 'sb9527sb flying effect'. They provided recommended settings and links to the inference workflow and model.

- Training Methodology: Some_Smile5927 mentioned using 50 short videos for training, while houseofextropy inquired about the specific tools used for training the Wan Lora model. Pentagon provided a link to the Musubi Tuner on GitHub, which may be related to the training process.

- Model Capabilities and Perceptions: Users expressed amazement at the model's ability to handle fabric and its movement capabilities, although YourMomThinksImSexy humorously noted that the Lora model primarily performs one movement.

AI Discord Recap

A summary of Summaries of Summaries by o1-mini-2024-09-12

Anthropic’s Claude Slashes API Costs with Clever Caching

- Claude's caching API cuts costs by 90%: Anthropic’s Claude 3.7 Sonnet introduces a caching-aware rate limit and prompt caching, potentially reducing API costs by up to 90% and latency by 85% for extensive prompts.

- OpenManus Emerges as Open-Source Manus Alternative: Excitement builds around OpenManus, the open-source counterpart to Manus, with users experimenting via a YouTube demo.

- “Some users are switching to Cline or Windsurf as alternative IDEs”: Performance issues in Cursor IDE lead members to explore alternatives like Cline or Windsurf.

Google and Cohere Battle it Out with Command A and Gemini Flash

- Cohere Launches Command A, Competing with GPT-4o: Command A by Cohere boasts 111B parameters and a 256k context window, claiming parity or superiority to GPT-4o and DeepSeek-V3 for agentic enterprise tasks.

- Google’s Gemini 2.0 Flash Introduces Native Image Generation: Gemini 2.0 Flash now supports native image creation from text and multimodal inputs, enhancing its reasoning capabilities.

- “Cohere's Command A outpaces GPT-4o in inference rates”: Command A achieves up to 156 tokens/sec, significantly outperforming its competitors.

LM Studio and OpenManus: Tool Integrations Fuel AI Innovations

- LM Studio Enhances Support for Gemma 3 Models: LM Studio 0.3.13 now fully supports Google’s Gemma 3 models in both GGUF and MLX formats, offering GPU-accelerated image processing and major speed improvements.

- Blender Integrates with MCP for AI-Driven 3D Creation: MCP for Blender enables Claude to interact directly with Blender, facilitating the creation of 3D scenes from textual prompts.

- OpenManus Launches Open-Source Framework: OpenManus provides a robust, accessible alternative to Manus, sparking discussions on its capabilities and ease of use for non-technical users.

AI Development Dilemmas: From Cursor Crashes to Fine-Tuning Fiascos

- Cursor IDE Faces Sluggishness and Crashes: Users report Cursor experiencing UI sluggishness, window crashes, and memory leaks, particularly on Mac and Windows WSL2, hinting at underlying legal issues with Microsoft.

- Fine-Tuning Gemma 3 Blocked by Transformers Bug: Gemma 3 model fine-tuning is stalled due to a bug in Hugging Face Transformers, causing mismatches between documentation and setup in the Jupyter notebook on Colab.

- LSTM Model Plagued by NaN Loss in tinygrad: Training an LSTMModel with TinyJit results in NaN loss after the first step, likely due to large input values causing numerical instability.

Policy Prowess: OpenAI’s Push to Ban PRC Models Raises Eyebrows

- OpenAI Proposes Ban on PRC-Produced Models: OpenAI advocates for banning PRC-produced models within Tier 1 countries, linking fair use with national security and labeling models like DeepSeek as state-controlled.

- Google Aligns with OpenAI on AI Policy: Following OpenAI’s lead, Google endorses weaker copyright restrictions on AI training and calls for balanced export controls in its policy proposal.

- “If China has free data access while American companies lack fair use, the race for AI is effectively over”: OpenAI submits a policy proposal directly to the US government, emphasizing the strategic disadvantage in AI race dynamics.

AI in Research, Education, and Function Calling

- Nous Research AI Launches Inference API with Hermes and DeepHermes Models: Introducing Hermes 3 Llama 70B and DeepHermes 3 8B Preview as part of their new Inference API, offering $5 free credits for new users and compatibility with OpenAI-style integrations.

- Berkeley Function-Calling Leaderboard (BFCL) Sets New Standards: The BFCL offers a comprehensive evaluation of LLMs' ability to call functions and tools, mirroring real-world agent and enterprise workflows.

- AI Agents Enhance Research and Creativity: Jina AI shares advancements in DeepSearch/DeepResearch, emphasizing techniques like late-chunking embeddings and rerankers to improve snippet selection and URL prioritization for AI-powered research.

PART 1: High level Discord summaries

Cursor IDE Discord

- Claude's Caching API Slashes Costs: Anthropic introduces API updates for Claude 3.7 Sonnet, featuring cache-aware rate limits and prompt caching, potentially cutting costs by up to 90%.

- These updates enable Claude to maintain knowledge of large documents, instructions, or examples without resending data with each request, also reducing latency by 85% for long prompts.

- Cursor Plagued by Performance Problems: Users are reporting sluggish UI, frequent window crashes, and memory leaks in recent Cursor versions, especially on Mac and Windows WSL2.

- Possible causes mentioned include legal issues with Microsoft; members suggest trying Cline or Windsurf as alternative IDEs.

- Open Source Manus Sparks Excitement: The open-source alternative to Manus, called OpenManus, is generating excitement, with some users are even trying the demo showcased in this YouTube video.

- The project aims to provide a more accessible alternative to Manus, prompting discussions around its capabilities and ease of use for non-technical users.

- Blender Integrates with MCP: A member highlighted MCP for Blender, enabling Claude to directly interact with Blender for creating 3D scenes from prompts.

- This opens possibilities for extending AI tool integration beyond traditional coding tasks.

- Cursor Version Confusion Creates Chaos: A chaotic debate erupts over Cursor versions, with users touting non-existent 0.49, 0.49.1, and even 1.50 builds while others struggle with crashes on 0.47.

- The confusion stems from differing update experiences, with some users accessing beta versions through unofficial channels, further muddying the waters.

Nous Research AI Discord

- Nous Research Launches Inference API with DeepHermes: Nous Research introduced its Inference API featuring models like Hermes 3 Llama 70B and DeepHermes 3 8B Preview, accessible via a waitlist at Nous Portal with $5.00 free credits for new users.

- The API is OpenAI-compatible, and further models are planned for integration.

- DeepHermes Models Offer Hybrid Reasoning: Nous Research released DeepHermes 24B and 3B Preview models, available on HuggingFace, as Hybrid Reasoners with an on/off toggle for long chain of thought reasoning.

- The 24B model demonstrated a 4x accuracy boost on challenging math problems and a 43% improvement on GPQA when reasoning is enabled.

- LLM Gains Facial Recognition: A member open-sourced the LLM Facial Memory System, which combines facial recognition with LLMs, enabling it to recognize people and maintain individual chat histories based on identified faces.

- This system was initially built for work purposes and then released publicly with permission.

- Gemma-3 Models Now Run in LM Studio: LM Studio 0.3.13 introduced support for Google's Gemma-3 models, including multimodal versions, available in both GGUF and MLX formats.

- The update resolves previous issues with the Linux version download, which initially returned 404 errors.

- Agent Engineering: Hype vs. Reality: A blog post on "Agent Engineering" sparked discussion about the gap between the hype and real-world application of AI agents.

- The post suggests that despite the buzz around agents in 2024, their practical implementation and understanding remain ambiguous, suggesting a long road ahead before they become as ubiquitous as web browsers.

Unsloth AI (Daniel Han) Discord

- Gemma 3 Models Cause a Transformers Tantrum: A bug in Hugging Face Transformers is currently blocking fine-tuning of Gemma 3 models, as noted in the Unsloth blog post.

- The issue results in mismatches between documentation and setup in the Gemma 3 Jupyter notebook on Colab; HF is actively working on a fix.

- GRPO Gains Ground over PPO for Finetuning: Members discussed the usage of GRPO vs PPO for finetuning, indicating that GRPO generalizes better, is easier to set up, and is a possible direct replacement.

- While Meta 3 used both PPO and DPO, AI2 still uses PPO for VLMs and their big Tulu models because they use a different reward system that leads to very up to date RLHF.

- GPT-4.5 Trolls Its Users: A member reported ChatGPT-4.5 trolled them by limiting questions, then mocking their frustration before granting more.

- The user quoted it as being like "u done having ur tantrum? I'll give u x more questions".

- Double Accuracy Achieved Via Validation Set: A member saw accuracy more than double from 23% to 53% using a validation set of 68 questions.

- The creator of the demo may submit a PR into Unsloth with this feature.

- Slim Attention Claims Memory Cut, MLA Questioned: A paper titled Slim attention: cut your context memory in half without loss of accuracy was shared, highlighting the claim that K-cache is all you need for MHA.

- Another member questioned why anyone would use this over MLA.

LM Studio Discord

- LM Studio Goes Gemm-tastic: LM Studio 0.3.13 is live, now supporting Google's Gemma 3 in both GGUF and MLX formats, with GPU-accelerated image processing on NVIDIA/AMD, requiring a llama.cpp runtime update to 1.19.2 from lmstudio.ai/download.

- Users rave that the new engine update for Gemma 3 offers significant speed improvements, with many considering it their main model now.

- Gemma 3's MLX Model Falters?: Some users report that Gemma 3's MLX model produces endless

<pad>tokens, hindering text generation; a workaround is to either use the GGUF version or provide an image.- Others note slow token generation at 1 tok/sec with low GPU and CPU utilization, suggesting users manually maximize GPU usage in model options.

- Context Crashes Catastrophic Gemma: Members discovered that Gemma 3 and Qwen2 vl crash when the context exceeds 506 tokens, spamming

<unusedNN>, a fix has been released in Runtime Extension Packs (v1.20.0).- One member asked if they could use could models with LM Studio, but another member swiftly replied that LM Studio is designed for local models only.

- Vulkan Slow, ROCm Shows Promise: Users found that Vulkan performance lags behind ROCm, suggesting a driver downgrade to 24.10.1 for testing; one user reported 37.3 tokens/s on a 7900 XTX with Mistral Small 24B Q6_K.

- For driver changes without OS reinstall, it was suggested to use AMD CleanUp.

- 9070 GPU Bites the Dust: A user's 9070 GPU malfunctioned, preventing PC boot and triggering the motherboard's RAM LED, but a 7900 XTX worked; testing is underway before RMA.

- They will try to boot with one RAM stick at a time, but others speculated on a PCI-E Gen 5 issue, recommending testing in another machine or forcing PCI-E 4.

aider (Paul Gauthier) Discord

- Google Drops Gemma 3, Shakes Up Open Models: Google has released Gemma 3, a collection of lightweight, open models built from the same research and tech powering Gemini 2.0 [https://blog.google/technology/developers/gemma-3/]. The new models are multimodal (text + image), support 140+ languages, have a 128K context window, and come in 1B, 4B, 12B, and 27B sizes.

- The release sparked a discussion on fine-tuning, with a link to Unsloth's blog post showing how to fine-tune and run them.

- OlympicCoder Model Competes with Claude 3.7: The OlympicCoder model, a 7B parameter model, reportedly beats Claude 3.7 and is close to o1-mini/R1 on olympiad level coding [https://x.com/lvwerra/status/1899573087647281661]. It comes with a new IOI benchmark as reported in the Open-R1 progress report 3.

- Claims were made that no one was ready for this release.

- Zed Predicts Edits with Zeta Model: Zed is introducing edit prediction powered by Zeta, their new open source model. The editor now predicts the user's next edit, which can be applied by hitting tab.

- The model is currently available for free during the public beta.

- Anthropic Releases text_editor Tool, Alters Edit Workflow: Anthropic has introduced a new text_editor tool in the Anthropic API, designed for apps where Claude works with text files. This tool enables Claude to make targeted edits to specific portions of text, reducing token consumption and latency while increasing accuracy.

- The update suggests there may be no need for an editor model with some users musing over a new simpler workflow.

- LLMs: Use as a Launchpad, Not a Finish Line: Members discussed that a bad initial result with LLMs isn’t a failure, but a starting point to push the model towards the desired outcome. One member is prioritizing the productivity boost from LLMs not for faster work, but to ship projects that wouldn’t have been justifiable otherwise.

- A blog post notes that using LLMs to write code is difficult and unintuitive, requiring significant effort to figure out its nuances, stating that if someone claims coding with LLMs is easy, they are likely misleading you, and successful patterns may not come naturally to everyone.

Perplexity AI Discord

- Naming AI Agents ANUS Causes Laughter: Members had a humorous discussion about naming an AI agent ANUS, with the code available on GitHub.

- One member joked, 'Sorry boss my anus is acting up I need to restart it'.

- Windows App Apple ID Login Still Buggy: Users are still experiencing the 500 Internal Server Error when trying to authenticate Apple account login for Perplexity’s new Windows app.

- Some reported success using the Apple relay email instead; and other suggested to login using Google.

- Perplexity's Sonar LLM Dissected: Sonar is confirmed as Perplexity's own fast LLM used for basic search.

- The overall consensus is that the web version of Perplexity is better than the mobile app, with one user claiming that Perplexity is still the best search site overall.

- Model Selector Ghosts Users: Users reported that the model selector disappeared from the web interface, leading to frustration as the desired models (e.g., R1) were not selectable.

- Members used a complexity extension as a workaround to revert back to a specific model.

- Perplexity Pro Suffers Memory Loss: Several users noted that Perplexity Pro seems to be losing context in conversations, requiring them to constantly remind the AI of the original prompt.

- As such, Perplexity's context is a bit limited.

OpenAI Discord

- Perplexity Wins AI Research Tool Preference: Members favored Perplexity as their top AI research tool, followed by OpenAI and SuperGrok due to budget constraints and feature preferences.

- Users are seeking ways to access Perplexity and Grok instead of subscribing to ChatGPT Pro.

- Python's AI Inference Crown Challenged: Members debated whether Python is still the best language for AI inference, or whether C# is a better alternative for deployment.

- Some members are using Ollama with significant RAM (512GB) to deploy models as a service.

- Gemini 2.0 Flash Shows off Native Image Generation: Gemini 2.0 Flash now features native image generation within AI Studio, enabling iterative image creation and advanced image understanding and editing.

- Users found that Gemini's free image generation outshines GPT-4o, highlighting new robotic capabilities described in Google DeepMind's blog.

- GPT Users Rant over Ethical Overreach: Members voiced frustration with ChatGPT's persistent ethical reminders and intent clarification requests, finding them overly cautious and intrusive.

- One user lamented the lack of a feature to disable these reminders, expressing a desire to avoid the model's ethical opinions.

- Threats Debated to Improve GPT Output: Members shared methods to improve GPT responses, including minimal threat prompting and personalization, with some reporting successful experimentation.

- One member demonstrated that personalizing the model led to absolutely lov[ed] results for everything while another reported improvements with custom GPT using kidnapped material science scientist.

HuggingFace Discord

- Python's Performance Questioned for AI: A member questioned if Python is the best choice for AI transformer model inference, suggesting C# might be faster, but others suggested VLLM or LLaMa.cpp are better.

- VLLM is considered more industrial, while LLaMa.cpp is more suited for at-home use.

- LTX Video Generates Real-Time Videos: The new LTX Video model is a DiT-based video generation model that generates 24 FPS videos at 768x512 resolution in real-time, faster than they can be watched and has examples of how to load single files.

- This model is trained on a large-scale dataset of diverse videos and generates high-resolution videos with realistic and varied content.

- Agent Tool List Resolves Erroneous Selection: An agent failed to use a defined color mixing tool, but was resolved when the tool was added to the agent's tool list.

- The agent ignored the predefined

@toolsection, instead opted to generate its own python script.

- The agent ignored the predefined

- Ollama Brings Local Models to SmolAgents: Members can use local models in

smolagentsby installing withpip install smolagents[litellm\], then define the local model usingLiteLLMModelwithmodel_id="ollama_chat/qwen2.5:14b"andapi_key="ollama".- This integration lets users leverage local resources for agentic workflows.

- Manus AI Releases Free ANUS Framework: Manus AI launched an open-source framework called ANUS (Autonomous Networked Utility System), touting it as a free alternative to paid solutions, according to a tweet.

- Details on the framework's capabilities and how it compares to existing paid solutions are being discussed.

Interconnects (Nathan Lambert) Discord

- Gemma 3 Sparks Creative AI Uprising: The new Gemma-3-27b model ranks second in creative writing, according to this tweet, suggesting it will be a favorite with creative writing & RP fine tuners.

- A commenter joked that 4chan will love Gemmasutra 3.

- alphaXiv Triumphs with Claude 3.7: alphaXiv uses Mistral OCR with Claude 3.7 to generate research blogs with figures, key insights, and clear explanations with one click, according to this tweet.

- Some believe alphaXiv is HuggingFace papers done right, offering a neater version of html.arxiv dot com.

- Gemini Flash's Image Generation Gambits: Gemini 2.0 Flash now features native image generation, allowing users to create contextually relevant images, edit conversationally, and generate long text in images, as noted in this blog post and tweet.

- Gemini Flash 2.0 Experimental can also be used to generate Walmart-style portrait studio pictures, according to a post on X.

- Security Scrutiny Surrounds Chinese Model Weights: Users express concerns about downloading open weight models like Deepseek from Hugging Face due to potential security risks, as highlighted in this discussion.

- Some worry if I download deepseek from huggingface, will I get a virus or that the weights send data to the ccp, leading to a startup idea of rebranding Chinese models as patriotic American or European models.

- OpenAI's PRC Model Policy Proposal: OpenAI's policy proposal argues for banning the use of PRC-produced models within Tier 1 countries that violate user privacy and create security risks such as the risk of IP theft.

- OpenAI submitted their policy proposal to the US government directly linking fair use with national security, stating that if China has free data access while American companies lack fair use, the race for AI is effectively over, according to Andrew Curran's tweet.

Eleuther Discord

- Distill Reading Group Announces Monthly Meetup: The Distill reading group announced that the next meetup will be March 14 from 11:30-1 PM ET, with details available in the Exploring Explainables Reading Group doc.

- The group was formed due to popular demand for interactive scientific communication around Explainable AI.

- Thinking Tokens Expand LLM Thinking: One discussant proposes using a hybrid attention model to expand thinking tokens internally, using the inner TTT loss on the RNN-type layer as a proxy and suggested determining the number of 'inner' TTT expansion steps by measuring the delta of the TTT update loss.

- The expansion uses cross attention between normal tokens and normal tokens plus thinking tokens internally, but faces challenges in choosing arbitrary expansions without knowing the TTT loss in parallel, which can be addressed through random sampling or proxy models.

- AIME 24 Implementation Lands: A member added an AIME24 implementation based off of the MATH implementation to lm-evaluation-harness.

- They based it off of the MATH implementation since they couldn't find any documentation of what people are running when they run AIME24.

- Deciphering Delphi's Activation Collection: A member inquired about how latents are collected for interpretability using the LatentCache, specifically whether latents are obtained token by token or for the entire sequence with the Delphi library.

- Another member clarified that Delphi collects activations by passing batches of tokens through the model, collecting activations, generating similar activations, and saving only the non-zero ones, and linked to <#1268988690047172811>.

OpenRouter (Alex Atallah) Discord

- Gemma 3 Arrives with Multimodal Support: Google has launched Gemma 3 (free), a multimodal model with vision-language input and text outputs, featuring a 128k token context window and improved capabilities across over 140 languages.

- Reportedly the successor to Gemma 2, Gemma 3 27B includes enhanced math, reasoning, chat, structured outputs, and function calling capabilities.

- Reka Flash 3 Flies in with Apache 2.0 License: Reka Flash 3 (free), a 21 billion parameter LLM with a 32K context length, excels at general chat, coding, instruction-following, and function calling, optimized through reinforcement learning (RLOO).

- The model supports efficient quantization (down to 11GB at 4-bit precision), utilizes explicit reasoning tags, and is licensed under Apache 2.0, though it is primarily an English model.

- Llama 3.1 Swallow 70B Lands Swiftly: A new Japanese-capable model, Llama 3.1 Swallow 70B (link), has been released, characterized by OpenRouter as a smaller model with high performance.

- Members didn't provide additional color commentary.

- Gemini 2 Flash Conjures Native Images: Google AI Studio introduced an experimental version of Gemini 2.0 Flash with native image output, accessible through the Gemini API and Google AI Studio.

- This new capability combines multimodal input, enhanced reasoning, and natural language understanding to generate images.

- Cohere Commands A, Challenging GPT-4o: Cohere launched Command A, claiming parity or superiority to GPT-4o and DeepSeek-V3 for agentic enterprise tasks with greater efficiency, according to the Cohere Blog.

- The new model prioritizes performance in agentic tasks with minimal compute, directly competing with GPT-4o.

Cohere Discord

- Command A challenges GPT-4o on Enterprise Tasks: Cohere launched Command A, claiming performance on par with or better than GPT-4o and DeepSeek-V3 for agentic enterprise tasks with greater efficiency, detailed in this blog post.

- The model features 111b parameters, a 256k context window, and inference at a rate of up to 156 tokens/sec, and it is available via API as

command-a-03-2025.

- The model features 111b parameters, a 256k context window, and inference at a rate of up to 156 tokens/sec, and it is available via API as

- Command A's API Start Marred by Glitches: Users reported errors when using Command-A-03-2025 via the API, traced back to the removal of

safety_mode = “None”from the model's requirements.- One member discovered that removing the

safety_modesetting resolved the issue, highlighting that Command A and Command R7B no longer support it.

- One member discovered that removing the

- Seed Parameter Fails to Germinate Consistent Results: A member found that the

seedparameter in the Chat API didn't work as expected, producing varied outputs for identical inputs and seed values across models like command-r and command-r-plus.- A Cohere team member confirmed the issue and began investigating.

- OpenAI Compatibility API Throws Validation Errors: A user reported a 400 error with the OpenAI Compatibility API, specifically with the

chat.completionsendpoint and model command-a-03-2025, due to schema validation of theparametersfield in thetoolsobject.- Cohere initially required the

parametersfield even when empty, but the team decided to match OpenAI's behaviour for better compatibility.

- Cohere initially required the

- AI Researcher dives into RAG and Cybersecurity: An AI researcher/developer with a background in cybersecurity is focusing on RAG, agents, workflows, and primarily uses Python.

- They seek to connect and learn from the community.

MCP (Glama) Discord

- Glama API Shows More Data per Server: A member shared that the new Glama** API (https://glama.ai/mcp/reference#tag/servers/GET/v1/servers) lists all the available tools and has more data per server compared to Pulse.

- However, Pulse reportedly has more servers available.

- Claude Struggles Rendering Images Elegantly: A member reported struggles rendering a Plotly image in Claude Desktop, finding no elegant way to force Claude** to pull out a resource and render it as an artifact.

- They suggested that using

openis better and others pointed to an MCP example, noting that the image appears inside the tool call, a current limitation of Claude.

- They suggested that using

- NPM Package Caching Investigated**: A member asked about the location of the npm package cache and how to display downloaded/connected servers in the client.

- Another member suggested checking

C:\Users\YourUsername\AppData\Local\npm-cache, while the ability to track server states depends on client-side implementation.

- Another member suggested checking

- OpenAI Agents SDK Gains MCP Support: A developer integrated Model Context Protocol (MCP)** support into the OpenAI Agents SDK, which is accessible via a fork and as the

openai-agents-mcppackage on pypi.- This integration allows the Agent to combine tools from MCP servers, local tools, OpenAI-hosted tools, and other Agent SDK tools with a unified syntax.

- Goose Project Commands Computers via MCP: The Goose project, an open-source AI agent, utilizes any MCP server to automate developer tasks.

- See a demonstration of Goose controlling a computer in this YouTube short.

Notebook LM Discord

- Google Seeks NotebookLM Usability Participants: Google is seeking NotebookLM users who heavily use their mobile phones and are recruiting users for usability studies for product feedback, offering $75 USD (or a $50 Google merchandise voucher) as compensation.

- Interested users can fill out this screener for the mobile user research, or participate in 60-minute remote sessions on April 2nd and 3rd 2025.

- NoteBookLM Plus Considered as Internal FAQ: A user inquired about using NoteBookLM Plus as an internal FAQ, while another user suggested posting this as a feature request, as chat history is not saved in NotebookLM.

- Workarounds discussed include making use of clipboard copy and note conversion to share information.

- Inline Citations Get Preserved: Users can now save chat responses as notes and have inline citations preserved in their original form, allowing for easy reference to the original source material.

- Many users requested this feature, which is the first step of some cool enhancements to the Notes editor, however they also requested improvements for enhanced copy & paste with footnotes.

- Thinking Model Gets Pushed to NotebookLM: The latest thinking model has been pushed to NotebookLM, promising quality improvements across the board, especially for Portugese speakers who can add

?hl=ptat the end of the url to fix the language.- Users also discussed the possibility of integrating AI Studio functionality into NotebookLM, which 'watches' YouTube videos and doesn't rely solely on transcripts from this Reddit link.

GPU MODE Discord

- VectorAdd Submission Bounces Back From Zero: A member initially reported their vectoradd submission was returning zeros, despite working on Google Colab.

- The member later discovered the code was processing the same block repeatedly, leading to unexpectedly high throughput and pinpointed that if it's too fast, there's probably a bug somewhere.

- SYCL Shines as CUDA Challenger: Discussion around SYCL's portability and implementations like AdaptiveCpp and triSYCL reveal that Intel is a key stakeholder.

- One participant finds SYCL more interesting than HIP because it isn't just a CUDA clone and can therefore improve on the design.

- Deepseek's MLA Innovation: DataCrunch detailed the implementation of Multi-Head Latent Attention (MLA) with weight absorption in Deepseek's V3 and R1 models in their blog post.

- A member found that vLLM's current default was bad, based on this pull request.

- Reasoning-Gym Curriculum attracts ETH + EPFL: A team from ETH and EPFL are collaborating on reasoning-gym for SFT, RL, and Eval, as well as investigating auto-curriculum for RL, with preliminary results available on GitHub.

- The team is also looking to integrate with Evalchemy for automatic evaluations of LLMs.

- FlashAttention ported to Turing Architecture: A developer implemented the FlashAttention forward pass for the Turing architecture, previously limited to Ampere and Hopper, with code available on GitHub.

- Early benchmarks show a 2x speed improvement over Pytorch's

F.scaled_dot_product_attentionon a T4, under specific conditions:head_dim = 128, vanilla attention, andseq_lendivisible by 128.

- Early benchmarks show a 2x speed improvement over Pytorch's

Yannick Kilcher Discord

- YC Backs Quick Wins Not Unicorns: A member claimed that YC prioritizes startups with short-term success, investing $500K aiming to 3x that in 6 months, rather than focusing on long-term growth.

- They argued that YC hasn't produced a notable unicorn in years, suggesting a possible shift away from fostering long-term success stories.

- LLM Scaling Approximates Context-Free Languages: A theory suggests that LLM scaling can be understood through their ability to approximate context-free languages using probabilistic FSAs, resulting in a characteristic S-curve pattern as seen in this attached image.

- The proposal is that LLMs try to approximate the language of a higher rung, coming from a lower rung of the Chomsky hierarchy.

- Google's Gemma 3 Faces ChatArena Skepticism: Google released Gemma 3, as detailed in the official documentation, which is reported to be on par with Deepseek R1 but significantly smaller.

- One member noted that the benchmarks provided are user preference benchmarks (ChatArena) rather than non-subjective metrics.

- Universal State Machine Concept Floated: A member shared a graph-based system with dynamic growth, calling it a Universal State Machine (USM), noting it as a very naive one with poor optimization and an explosive node count.

- They linked to an introductory paper describing Infinite Time Turing Machines (ITTMs) as a theoretical foundation and the Universal State Machine (USM) as a practical realization, offering a roadmap for scalable, interpretable, and generalizable machines.

- RTX Remix Revives Riddick Dreams: A member shared a YouTube video showcasing the Half-Life 2 RTX demo with full ray tracing and DLSS 4, reimagined with RTX Remix.

- Another member expressed anticipation for an RTX version of Chronicles of Riddick: Escape from Butcher Bay.

Nomic.ai (GPT4All) Discord

- GPT-4 Still King over Local LLMs: A user finds that ChatGPT premium's quality significantly surpasses LLMs on GPT4All, attributing this to the smaller model sizes available locally and hoping for a local model to match its accuracy with uploaded documents.

- The user notes that the models they have tried on GPT4All haven't been very accurate with document uploads.

- Ollama vs GPT4All Decision: A user asked for advice on whether to use GPT4All or Ollama for a server managing multiple models, quick loading/unloading, frequently updating RAG files, and APIs for date/time/weather.

- A member suggested Deepseek 14B or similar models, also mentioning the importance of large context windows (4k+ tokens) for soaking up more information like documents, while remarking that apple hardware is weird.

- GPT4All workflow good, GUI Bad: A member suggests using GPT4All with tiny models to check the workflow for loading, unloading, and RAG with LocalDocs, but pointed out that the GUI doesn't support multiple models simultaneously.

- They recommend using the local server or Python endpoint, which requires custom code for pipelines and orchestration.

- Crawling the Brave New Web: A user inquired about getting web crawling working and asked for advice before starting the effort.

- A member mentioned a Brave browser compatibility PR that wasn't merged due to bugs and a shift towards a different tool-calling approach, but it could be resurrected if there's demand.

- LocalDocs Plain Text Workaround: A member suggested that to work around LocalDocs showing snippets in plain text, users can make a screenshot save as PDF, OCR the image, and then search for the snippet in a database.

- They suggested using docfetcher for this workflow.

Latent Space Discord

- Mastra Launches Typescript AI Framework: Mastra, a new Typescript AI framework, launched aiming to provide a robust framework for product developers, positioning itself as superior to frameworks like Langchain.

- The founders, with backgrounds from Gatsby and Netlify, highlighted type safety and a focus on quantitative performance gains.

- Gemini 2.0 Flash Generates Images: Gemini 2.0 Flash Experimental now supports native image generation, enabling image creation from text and multimodal inputs, thus boosting its reasoning.

- Users responded with amazement, one declared they were "actually lost for words at how well this works" while another remarked it adds "D" to the word "BASE".

- Jina AI Fine-Tunes DeepSearch: Jina AI shared techniques for enhancing DeepSearch/DeepResearch, specifically late-chunking embeddings for snippet selection and using rerankers to prioritize URLs before crawling.

- They conveyed enthusiasm for the Latent Space podcast, indicating "we'll have to have them on at some point this yr".

- Cohere's Command Model Openly Weighs: Cohere introduced Command A, an open-weights 111B parameter model boasting a 256k context window, tailored for agentic, multilingual, and coding applications.

- This model succeeds Command R+ with the intention of superior performance across tasks.

- Gemini Gives Free Deep Research To All: The Gemini App now offers Deep Research for free to all users, powered by Gemini 2.0 Flash Thinking, alongside personalized experiences using search history.

- This update democratizes access to advanced reasoning for a broader audience.

LlamaIndex Discord

- LlamaIndex Jumps Aboard Model Context Protocol: LlamaIndex now supports the Model Context Protocol, allowing users to use tools exposed by any MCP-compatible server, according to this tweet.

- The Model Context Protocol is an open-source effort to streamline tool discovery and usage.

- AI Set to Shake Up Web Development: Experts will convene at the @WeAreDevs WebDev & AI Day to explore AI's impact on platform engineering and DevEx, as well as the evolution of developer tools in an AI-powered landscape, announced in this tweet.

- The event will focus on how AI is reshaping the developer experience.

- LlamaParse Becomes JSON Powerhouse: LlamaParse now incorporates images into its JSON output, providing downloadable image links and layout data, with details here.

- This enhancement allows for more comprehensive document parsing and reconstruction.

- Deep Research RAG Gets Ready: Deep research capabilities within RAG are accessible via

npx create-llama@latestwith the deep research option, with the workflow source code available on GitHub.- This setup facilitates in-depth exploratory research using RAG.

LLM Agents (Berkeley MOOC) Discord

- MOOC Quiz Deadlines Coming in May: Members reported that all quiz deadlines are in May and details will be released soon to those on the mailing list, confirmed by records showing they opened the latest email about Lecture 6.

- The community should follow the weekly quizzes and await further information.

- MOOC Labs & Research Opportunities Announced Soon: Plans for labs and research opportunities for MOOC learners are in the works and details about projects are coming soon.

- An announcement will be made once everything is finalized, including information on whether non-Berkeley students can obtain certifications.

- Roles and Personas Elucidated in LLMs: In querying an LLM, roles are constructs for editing a prompt, like system, user, or assistant, whereas a persona is defined as part of the general guidelines given to the system, influencing how the assistant acts.

- The system role provides general guidelines, while user and assistant roles are active participants.

- Decision Making Research Group Needs You: A research group focused on decision making and memory tracks has opened its doors.

- Join the Discord research group to dive deeper into the topic.

Modular (Mojo 🔥) Discord

- Mojo Bundling with Max?: In the Modular forums, users are questioning the potential synergies and benefits of bundling Mojo with Max.

- The discussion revolves around user benefits and potential use cases of such a bundle.

- Mojo on Windows: When?: There is community interest about Mojo's potential availability on Windows.

- The community discussed the associated challenges and timelines for expanding Mojo's platform support.

- Modular Max Gains Process Spawning: A member shared a PR for Modular Max that adds functionality to spawn and manage processes from executable files using

exec.- The availability is uncertain due to dependencies on merging a foundations PR and resolving issues with Linux exec.

- Closure Capture Causes Commotion: A member filed a language design bug related to

capturingclosures.- Another member echoed this sentiment, noting they found this behavior odd as well.

- Missing MutableInputTensor Mystifies Max: A user reported finding the

MutableInputTensortype alias in the nightly docs, but it doesn't seem to be publicly exposed.- The user attempted to import it via

from max.tensor import MutableInputTensorandfrom max.tensor.managed_tensor_slice import MutableInputTensorwithout success.

- The user attempted to import it via

Gorilla LLM (Berkeley Function Calling) Discord

- AST Accuracy Evaluates LLM Calls: The AST (Abstract Syntax Tree) evaluation checks for the correct function call with the correct values, including function name, parameter types, and parameter values within possible ranges as noted in the V1 blog.

- The numerical value for AST represents the percentage of test cases where all these criteria were correct, revealing the accuracy of LLM function calls.

- BFCL Updates First Comprehensive LLM Evaluation: The Berkeley Function-Calling Leaderboard (BFCL), last updated on 2024-08-19, is a comprehensive evaluation of LLMs' ability to call functions and tools (Change Log).

- The leaderboard aims to mirror typical user function calling use-cases in agents and enterprise workflows.

- LLMs Enhanced by Function Calling: Large Language Models (LLMs) such as GPT, Gemini, Llama, and Mistral are increasingly being used in applications like Langchain, Llama Index, AutoGPT, and Voyager through function calling capabilities.

- These models have significant potential in applications and software via function calling (also known as tool calling).

- Function Calls run in Parallel: The evaluation includes various forms of function calls, such as parallel (one function input, multiple invocations of the function output) and multiple function calls.

- This comprehensive approach covers common function-calling use-cases.

- Central location to track all evaluation tools: Datasets are located in /gorilla/berkeley-function-call-leaderboard/data, and for multi-turn categories, function/tool documentation is in /gorilla/berkeley-function-call-leaderboard/data/multi_turn_func_doc.

- All other categories store function documentation within the dataset files.

DSPy Discord

- DSPy Eyes Pluggable Cache Module: DSPy is developing a pluggable Cache module, with initial work available in this pull request.

- The new feature aims to have one single caching interface with two levels of cache: in-memory LRU cache and fanout (on disk).

- Caching Strategies Seek Flexibility: There's a desire for more flexibility in defining caching strategies, particularly for context caching to cut costs and boost speed, with interest in cache invalidation with TTL expiration or LRU eviction.

- Selective caching based on input similarity was also discussed to avoid making redundant API calls, along with built-in monitoring for cache hit/miss rates.

- ColBERT Endpoint Connection Refused: A member reported that the ColBERT endpoint at

http://20.102.90.50:2017/wiki17_abstractsappears to be down, throwing a Connection Refused error.- When trying to retrieve passages using a basic MultiHop program, the endpoint returns a 200 OK response, but the text contains an error message related to connecting to

localhost:2172.

- When trying to retrieve passages using a basic MultiHop program, the endpoint returns a 200 OK response, but the text contains an error message related to connecting to

tinygrad (George Hotz) Discord

- LSTM Model Plagued by NaN Loss: A member reported encountering NaN loss when running an LSTMModel with TinyJit, observing the loss jump from a large number to NaN after the first step.

- The model setup involves

nn.LSTMCellandnn.Linear, optimized with theAdamoptimizer, and the input data contains a large value (1000) which may be the reason.

- The model setup involves

- Debugging the NaN: A member requested assistance debugging NaN loss during tinygrad training, providing a code sample exhibiting an LSTM setup.

- This suggests possible numerical instability or gradient explosion issues as causes.

AI21 Labs (Jamba) Discord

- Pinecone Performance Suffers: A member reported that their RAG system faced performance limitations when using Pinecone.

- Additionally, the lack of VPC deployment support with Pinecone was a major concern.

- RAG System Dumps Pinecone: Due to performance bottlenecks and the absence of VPC deployment support, a RAG system is ditching Pinecone.

- The engineer anticipates that the new setup will alleviate these two issues.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!