[AINews] not much happened today

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

a quiet christmas eve is all you need.

AI News for 12/23/2024-12/24/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (215 channels, and 2265 messages) for you. Estimated reading time saved (at 200wpm): 257 minutes. You can now tag @smol_ai for AINews discussions!

The Qwen team launched QVQ, a vision version of their experimental QwQ o1 clone, though the benchmarks mostly bring it up to par with Claude 3.5 Sonnet. There's also some discussion about Bret Taylor's latest post on autonomous software dev (as distinct from the Copilot era).

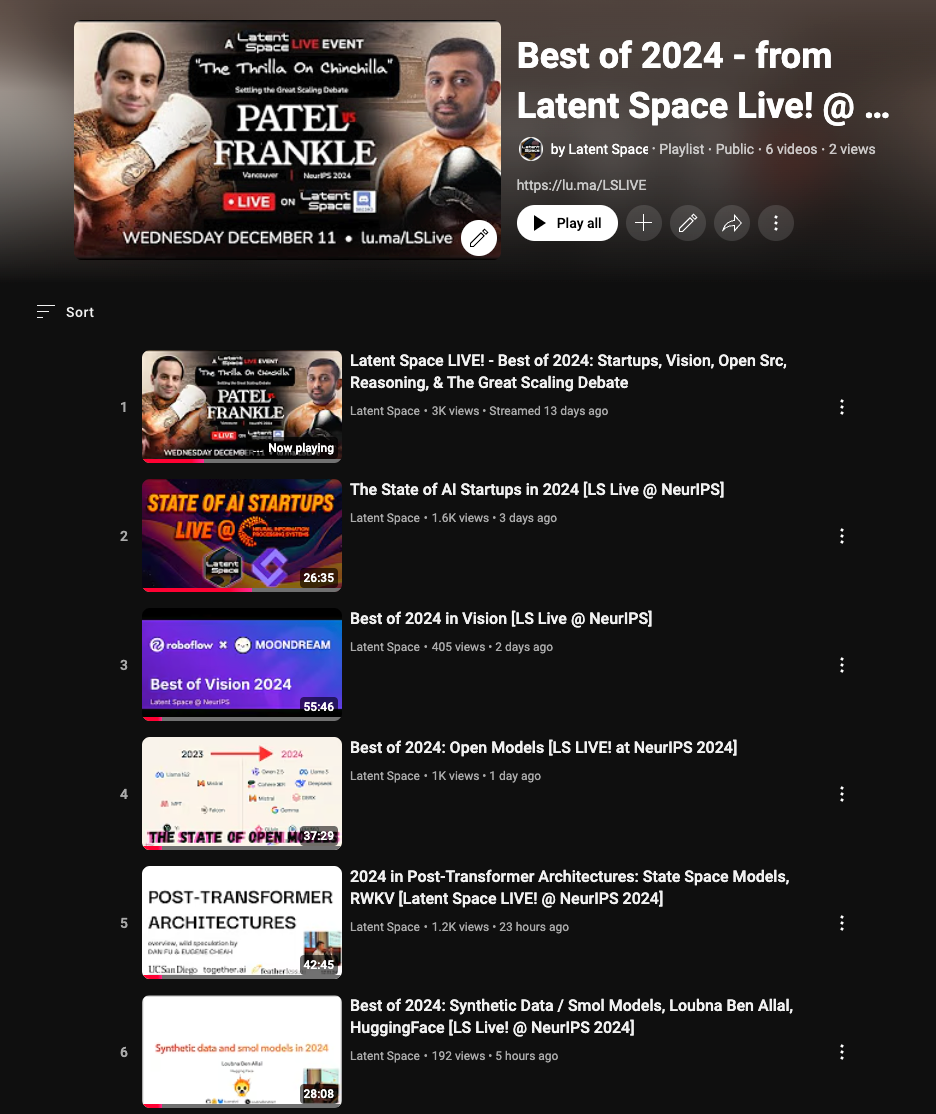

The individual talks from Latent Space LIVE! are being released to tide you through the holidays and recap the Best of 2024 in AI Startups, Vision, Open Models, Post-Transformers, Synthetic Data, Smol Models, Agents, and more.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Benchmarking

- Developing Benchmarks for LLM Calibration: @tamaybes proposes creating a benchmark to measure large language models' tendency to confidently assert falsehoods and assess their calibration in making probabilistic claims.

- Advancements in AI Model Performance: @reach_vb announces QVQ, the first open multimodal o1-like model with vision capabilities, outperforming models like GPT-4o and Claude Sonnet 3.5.
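To make the calibration idea concrete, here is a minimal sketch of two standard calibration metrics, the Brier score and expected calibration error (ECE). This is not the benchmark @tamaybes proposes; the function names, the bin count, and the `(stated_probability, was_correct)` input format are assumptions chosen for illustration.

```python
from typing import List, Tuple

# Each prediction is (stated probability that the claim is true, whether it was true).
Prediction = Tuple[float, bool]

def brier_score(predictions: List[Prediction]) -> float:
    """Mean squared gap between stated confidence and the 0/1 outcome (lower is better)."""
    return sum((p - float(correct)) ** 2 for p, correct in predictions) / len(predictions)

def expected_calibration_error(predictions: List[Prediction], n_bins: int = 5) -> float:
    """Bucket predictions by confidence, then average |confidence - accuracy| weighted by bucket size."""
    bins: List[List[Prediction]] = [[] for _ in range(n_bins)]
    for p, correct in predictions:
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, correct))

    total = len(predictions)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(p for p, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, correct in bucket if correct) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece

if __name__ == "__main__":
    # A model that says "90% sure" but is right only half the time is overconfident.
    overconfident = [(0.9, True), (0.9, False)]
    print("Brier:", brier_score(overconfident))
    print("ECE:", expected_calibration_error(overconfident))
```

A model that confidently asserts falsehoods scores badly on both metrics: high stated probabilities paired with wrong answers widen the gap between confidence and accuracy.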

AI Alignment and Ethics

- Intentionality in AI Systems: @BrivaelLp emphasizes the need to crack intentionality in AI, highlighting that even the smartest AI requires intentional limits to function effectively.

- Debate on Alignment Faking: @teortaxesTex critiques the study on alignment faking in AI models like Claude, arguing that engineered charismatic behaviors do not accurately represent general alignment challenges.

Company News and Collaborations

- OpenAI’s Latest Developments: @TheTuringPost shares updates on OpenAI’s o3 and o3-mini models, a new deliberative alignment strategy, and an improved o1 model.

- Collaborative AI Research Projects: @SakanaAILabs announces the ASAL project, collaborating with MIT, OpenAI, and the Swiss AI Lab IDSIA to automate the discovery of artificial life using foundation models.

Immigration and Personal Discussions

- Green Card Denial Experiences: @Yuchenj_UW expresses frustration over a green card denial, criticizing the USCIS for its arbitrary reasoning despite having a PhD and serving as an Apple CTO. Multiple replies highlight similar experiences and frustrations with the immigration system.

- Support and Advice for Applicants: @deedydas offers support and advice to @Yuchenj_UW, encouraging perseverance despite setbacks in the green card application process.

Technical Tools and Projects

- Introducing GeminiCoder: @osanseviero unveils GeminiCoder, a tool that allows users to create apps in seconds using simple prompts.

- Automated Contract Review Agent: @llama_index presents a contract review agent built with Reflex and Llama Index, capable of checking GDPR compliance in vendor agreements.

Memes/Humor and Holiday Greetings

- Holiday Wishes and Festivities: @ollama wishes everyone a Merry Christmas, while @ClementDelangue shares a heartfelt moment from the Christmas Midnight Mass at Notre Dame Paris.

- Humorous Anecdotes: @TheGregYang shares a funny story about receiving a harsh comment from his mother on his profile picture, adding a lighthearted touch to the holiday season.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Qwen/QVQ-72B Achieves 70.3 on MMMU Evaluation

- Qwen/QVQ-72B-Preview · Hugging Face (Score: 72, Comments: 10): Qwen/QVQ-72B has a preview available on Hugging Face, indicating its relevance in the AI community for model exploration and experimentation.

- QVQ-72B achieves a score of 70.3 on the MMMU dataset, showcasing its performance in university-level multidisciplinary evaluations. Users hope for more post-training details, with resources available on Qwen's blogpost and Hugging Face.

- A user shared a screenshot illustrating the model's thoroughness and ability to translate from Chinese, emphasizing its impressive performance. There is a request for Hugging Face to enable it as a warm inference model.

- A discrepancy in the model size was highlighted, with 73.4B parameters mentioned instead of 72B, leading to some confusion among users.

- QVQ - New Qwen Realease (Score: 267, Comments: 40): The QVQ model achieves a score of 70.3 on the MMMU benchmark, surpassing the performance of the Qwen2-VL-72B-Instruct model. The image highlights QVQ's superior results across various tests such as MathVista, MathVision, and OlympiadBench, indicating substantial improvements over competitors like OpenAI and GPT-4.

- Discussion highlights the QVQ model's licensing, with a query about its specific type, while QwQ is noted to have an Apache license and impressive performance for its size. QVQ, being larger, is expected to outperform QwQ.

- The NSFW filter in the demo is mentioned, but the model itself appears uncensored, with no refusals on borderline images, indicating a flexible content moderation approach.

- The QVQ model's availability on Hugging Face is appreciated, with praise for Alibaba's innovative open-source contributions and a call for more inclusive benchmarking between models like Llama and Qwen.

- Guys am I crazy or is this paper totally batshit haha (Score: 86, Comments: 43): The post lacks sufficient context or content to provide a detailed summary.

- Commenters criticize the ICOM project for its dubious claims, lack of transparency, and reliance on GPT-3.5 and GPT-4 APIs, which led to disqualification from the ARC-AGI Challenge due to rule violations. They argue that the project appears to be a superficial wrapper for existing LLMs, failing to demonstrate any unique or advanced capabilities.

- There is skepticism about the ICOM paper's credibility, with accusations of self-citation, inconsistent formatting, and exaggerated claims about surpassing benchmarks without training data. Commenters mock the paper for resembling a marketing ploy rather than a legitimate scientific contribution, and question the motivations behind its publication.

- Discussions highlight the ICOM project's reliance on C#, which is atypical for AI research, and the use of Excel for visualizations, suggesting a lack of sophistication. Commenters express disbelief at the project's claims of achieving significant milestones without rigorous evidence or peer validation, comparing it to pseudoscientific endeavors.

Theme 2. Inter-3B Model Comparisons: Llama vs Granite vs Hermes

- llama 3.2 3B is amazing (Score: 321, Comments: 121): Llama 3.2-3B is highly effective and user-friendly, particularly noted for its ability to retain context and handle Spanish language efficiently, comparable to Stable LM 3B. The model, specifically llama-3.2-3b-instruct-abliterated.Q4_K_M.gguf, performs well on a CPU i3 10th generation at approximately 10 tokens per second.

- Users discuss running Llama 3.2-3B on various devices, including iPhones, Pixel 7 phones, and even a Raspberry Pi 5 with 8GB RAM, highlighting its efficiency and versatility across platforms. Some users report token generation speeds, with one noting 100 tokens per second on an M1 Max.

- Comparisons are made between Llama 3.2-3B and other models like Granite3.1-3B-MoE and Hermes 3B, with some users preferring the newer models due to features like 32K tokens context and built-in function calling. There are also mentions of the 3.3 version being better, though limitations such as 70B size are noted.

- Discussions around software tools and platforms such as LMStudio and Ollama highlight differences in usability and performance, with some users expressing strong preferences due to design and implementation choices. The Q4_K_M variant's size and performance are also debated, with one user stating it's 42GB.

Theme 3. GGUF Models Now Usable Privately via Hugging Face in Ollama

- You can now run private GGUFs from Hugging Face Hub directly in Ollama (Score: 129, Comments: 29): Hugging Face has enabled the direct running of private GGUFs from their hub in Ollama. Users only need to add their Ollama SSH key to their Hugging Face profile to access this feature, allowing them to run private fine-tunes and quants with the same user experience. Full instructions and details are available on the Hugging Face documentation page.

- GGUF Format and Quantization: GGUF is a model format used with llama.cpp and similar backends, containing weights and metadata. The file size reflects memory usage, and the quantization level (from Q2 to Q8) determines how heavily the weights are compressed, which is why repositories offer the same model in a range of sizes.

- Private Model Running on Ollama: The new feature allows running private GGUF models directly in Ollama by uploading an SSH key to Hugging Face. Users can now run private models from their namespaces, a capability not previously available, enhancing the flexibility of model management and execution.

- Local Execution and Storage: Using Ollama, users can pull obfuscated GGUF files and metadata from servers, storing them locally for execution. This is applicable to both public and private models, with the added feature of managing private repositories with the user's Ollama public key.
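The relationship between quantization level and file size described above can be approximated with back-of-the-envelope arithmetic. The sketch below is an estimate only: the function name and the nominal bit widths are assumptions for illustration, and real GGUF files run somewhat larger because quantization schemes store scale factors and keep some tensors at higher precision.

```python
def estimate_gguf_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size in GB (10^9 bytes): parameters x bits / 8. Ignores metadata and overhead."""
    return n_params * bits_per_weight / 8 / 1e9

# Nominal bit widths (assumed for illustration; actual quant types use mixed precision).
ASSUMED_BITS = {"Q2": 2.0, "Q4": 4.0, "Q8": 8.0, "F16": 16.0}

if __name__ == "__main__":
    for n_params, label in ((3e9, "3B"), (70e9, "70B")):
        for name, bits in ASSUMED_BITS.items():
            size = estimate_gguf_size_gb(n_params, bits)
            print(f"{label} model at {name}: ~{size:.1f} GB")
```

By this estimate a 3B model at 4 bits is on the order of 1.5 GB, so a 42GB figure like the one quoted in the Llama 3.2 thread is closer in scale to a 4-bit quant of a 70B-class model than to a 3B one.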

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Criticism of "Gotcha" tests to determine LLM intelligence

- [D] Can we please stop using "is all we need" in titles? (Score: 335, Comments: 74): The post criticizes the overuse of the phrase "... is all we need" in scientific paper titles, arguing that it often lacks scientific value and serves merely as an attention-grabbing tactic. The author calls for a reduction in its usage, suggesting it has become a bad practice.

- Commenters humorously critique overused paper titles like "is all we need", suggesting alternatives such as "attention (grabbing) is all we need" and "better title names is all we need." They express frustration over clickbait titles in scientific papers and suggest desk-reject policies for such submissions.

- There is a shared sentiment against convoluted acronyms in scientific papers, with users noting they are unnecessary unless introducing a frequently referenced resource. milesper criticizes the practice of using letters from the middle of words, while H4RZ3RK4S3 suggests a wishlist post for better practices.

- Successful-Western27 highlights the prevalence of the phrase, noting over 150 papers with "is all you need" in their titles on Arxiv in the last 6 months, sparking a discussion on the platform's role as a scientific resource. yannbouteiller and TheJoshuaJacksonFive contribute by questioning Arxiv's credibility, calling it a "glorified blog."

Theme 2. 76K robodogs now $1600, and AI is practically free

- 76K robodogs now $1600, and AI is practically free, what the hell is happening? (Score: 424, Comments: 294): The post discusses the drastic reduction in prices of advanced technologies, highlighting Boston Dynamics' robodog dropping from $76,000 to $1,600 and the cost of using GPT-4o Mini at $0.00015 per 1,000 tokens, compared to GPT-3's initial pricing. The author questions whether these price drops are a result of capitalism or if they are devaluing innovation, pondering the implications of making cutting-edge technology widely accessible and the potential consequences of a pricing race to the bottom.

- Technological Deflation: Commenters like broose_the_moose argue that price reductions in technology are driven by ongoing innovation in manufacturing, engineering, and algorithmic breakthroughs, suggesting a trend towards improved quality of life globally, not a devaluation of innovation.

- Societal Impact and Integration: Discussions highlight the potential societal impacts of cheaper technology, with wonderingStarDusts advocating for a collectivist approach to technology distribution, while others like Nuckyduck envision a future where humans and technology are more integrated, potentially leading to a "work-free" society.

- Practical Applications and Limitations: Commenters express interest in practical applications of affordable technology, such as CrybullyModsSuck wanting a laundry robot, while dronemastersaga points out limitations, noting the $1,600 robodog is non-programmable and more of a toy compared to its $17,000 programmable counterpart.
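For a sense of scale, the per-token price quoted in the post can be turned into costs for larger workloads. This is plain arithmetic on the post's figure of $0.00015 per 1,000 tokens; the function name is hypothetical and the calculation ignores any difference between input and output token pricing.

```python
def token_cost_usd(n_tokens: int, price_per_1k_usd: float) -> float:
    """Cost of processing n_tokens at a flat price per 1,000 tokens."""
    return n_tokens / 1000 * price_per_1k_usd

# Price quoted in the post for GPT-4o Mini (flat rate assumed for illustration).
PRICE_PER_1K_USD = 0.00015

if __name__ == "__main__":
    for n_tokens in (1_000, 1_000_000, 1_000_000_000):
        cost = token_cost_usd(n_tokens, PRICE_PER_1K_USD)
        print(f"{n_tokens:>13,} tokens -> ${cost:,.5f}")
```

At that rate a million tokens costs about 15 cents, which is the scale behind the post's "practically free" framing.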

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. Next-Gen Model Rivalries

- QVQ-72B Thrashes GPT4o & Claude 3.5: Qwen’s 72B vision-capable model stuns testers by solving math and geometry tasks with bold accuracy. Users bragged it “beats GPT4o” and teased massive synergy in future image and text tasks.

- Phi-4 Hallucinates...Sometimes: Testers saw it nail an XOR MLP but fail basic prompts, proving big leaps in some queries while faceplanting in others. Frequent “random illusions” keep it from consistent stardom.

- O3 by OpenAI Ramps Up: The new “O3” allegedly merges process traces from STEM experts with synthetic data. Rumors say it uses RL post-training for “longer, better thinking” that dwarfs earlier GPT approaches.

Theme 2. Tools & Dev Integrations

- Cursor IDE Flexes AppImage & DB Tricks: Linux users love Cursor’s 25-limit bump for code generation and an easy AppImage setup. They also ask the AI for local API guides to build Next.js stacks in record time.

- Multi-Agent Madness with Windsurf & More: Devs share frameworks like “multi-agent-orchestrator” and “PromptoLab” for wrangling multiple AIs. Some combine them with OpenRouter's new endpoints for custom flows.

- Email the Humans for More Credits: Cursor, Windsurf, and others hike usage caps by user request. Folks joke about penning heartfelt “increase my limit” letters for holiday coding sprees.

Theme 3. GPU & Speed Scenes

- EPYC CPU Outmuscles Expectations: Some report 26 tokens/sec on CPU and 332 on GPU with smaller LLMs on 64-core EPYC servers. “PCIe 4 risers solve meltdown bugs,” they quip, setting eyes on PCIe 5 next.

- AMD’s Software Under Fire: SemiAnalysis tears into AMD’s driver stack, calling improvement commitments just talk. “The world wants monopolies,” a user half-joked, lamenting the slow pace of real fixes.

- Quantization Quarrels & Ternary Tricks: Researchers slash model size with ternary weights, storing 175B in ~20MB. “No backprop!” they boast, fueling wild speculation on training efficiency.
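For readers unfamiliar with ternary weights, the sketch below shows one common way to map floating-point weights to the set {-1, 0, +1}: absmean scaling followed by rounding and clipping. The summary does not say which scheme the researchers used, so this is an illustrative stand-in rather than their method, and it makes no attempt to reproduce the storage or training claims above. All names are hypothetical.

```python
from typing import List, Tuple

def ternarize(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Absmean ternary quantization: scale by mean |w|, round, clip to {-1, 0, +1}."""
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale

def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate weights: each ternary value times the shared scale."""
    return [q * scale for q in quantized]

if __name__ == "__main__":
    weights = [0.9, -0.8, 0.05, 0.0]
    q, scale = ternarize(weights)
    print("ternary:", q)
    print("scale:", scale)
    print("reconstructed:", dequantize(q, scale))
```

Large-magnitude weights collapse to plus or minus one, small ones to zero, and a single shared scale restores the rough magnitude. The precision lost in that rounding is the trade-off the size savings come from.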

Theme 4. AI in Real-World Applications

- Scammers & Malicious Links: Stability.ai watchers suffer scam attacks from hacked inactive accounts. They praise vigilant bots and re-up the old mantra: “vigilance is key.”

- Contract Review & Invoice Agents: LlamaIndex devs share one-liners to parse invoices or check GDPR compliance. They celebrate slashing hours of manual reviews with these doc-savvy agents.

- AI Podcasting & Voice Fun: NotebookLM fans hype an invite-only platform for AI-driven podcasts. Others propose hooking up Google News for daily headlines with dynamic RSS to feed your ears.

Theme 5. RL, Summaries, and Fine-Tuning Hustle

- OREO Cranks 52.5% on MATH: Offline RL outstrips DPO, letting a mere 1.5B LLM handle multi-step reasoning with zero new pair data. Test-time “value-guided tree search” is basically free icing on the cake.

- Free AI Book Summaries & Embedding Bonanzas: Nous Research folks share reading hacks, praising open-source efforts like Mixedbread’s embeddings. “Reranking strength speaks for itself,” they say, perfect for RAG workflows.

- QLoRa Quests & GPT4All Dreams: Resourceful devs fine-tune 3B–14B models on 8GB GPUs, comparing QLoRa, BnB 4bit, and GGUF. They chase faster inference while wrangling memory constraints and local hosting setups.

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

- Windsurf’s Wallet Woes: In December chatter, users blasted Windsurf’s 3:1 credit refill approach as too pricey, referencing updated pricing details.

- Some joked that the policy nudges them toward multiple accounts, fueling frustration over an approach they viewed as slanting costs unfairly.

- Coding Models Collide: Contributors compared Sonnet 3.5, Claude, Gemini, and Haiku (Anthropic’s smallest model), noting minimal differences in code completion accuracy.

- They stressed the importance of picking the best model per task, praising Windsurf for seamless multi-AI integration.

- Multi-Agent Madness: Community members spotlighted awslabs/multi-agent-orchestrator and heltonteixeira/openrouterai for handling complex agent orchestration.

- They also mentioned PromptoLab for prompt evaluation and pointed to Addyo’s substack and Builder.io's write-up on AI coding approaches.

- Pro Plan Pandemonium: Users reported days-long waits on Windsurf support, with one ticket on auto-edit features going unanswered for 12 days.

- Another user claimed the backlog prevented Pro status from updating, amplifying discontent during the holiday lull.

Cursor IDE Discord

- Cursor IDE Gains Strength: Community members discussed new updates to Cursor IDE, raising usage limits from 10 to 25, referencing Cursor - Build Software Faster for expanded functionality.

- They contrasted it with similarly positioned solutions like Windsurf, highlighting Cursor's steadiness and convenience.

- Sonnet Surpasses Grok & Gemini: Users compared Sonnet with Grok and Gemini, praising it for handling coding tasks better than Grok's recent update.

- They cited hallucination issues in the newer Grok build, reinforcing Sonnet's reputation for consistent performance.

- Taming AppImages on Linux: Instructions covered how to run Cursor as an AppImage on Linux, referencing AppImage for x64 after marking it executable.

- A few encountered hiccups and used Gear Lever to handle the process, streamlining the entire installation.

- Local API & DB Setup with Cursor: One dev inquired about running a local API and database using Cursor, and was encouraged to ask the AI for detailed steps.

- Others pointed to Next.js docs for potential synergy, recommending a trial-by-doing approach to confirm compatibility.

- Email the Humans for More Limits: Humor surfaced around emailing support for additional usage caps beyond the newly raised 25 limit in Cursor.

- Community members chimed in with success stories, saying support responded swiftly to increase capacity.

Nous Research AI Discord

- Phi-4 Gains or Pains?: Community tested the Phi-4 model's factual accuracy after it produced a working XOR MLP using the built-in `pow()` function.

- One user was amazed at its success on some requests but also noted that frequent hallucinations hamper reliability.

- Qwen Coder 7B vs 14B Face-Off: Members compared Qwen Coder 7B and 14B for coding tasks, observing performance shifts under various quantization settings.

- They found Qwen 2.5 Coder 7B sometimes fails routine prompts, yet alternatives also show notable code generation issues.

- Quantization Quarrel Continues: Participants debated the changing field of quantization, citing QTIP for models above 7B parameters.

- They underscored thorough benchmarking along with Llama.Cpp-Toolbox as a simple interface.

- Hugging Face Helpers Unite: A contributor highlighted their Hugging Face involvement, referencing PyTorchModelHubMixin and transformers PR #35010.

- They also welcomed collaboration on a Discord bot and other code, directing peers to their GitHub for direct participation.

- Free AI Summaries for the Win: A user in #interesting-links recommended Free AI Book Summaries for quick references.

- They shared it as a resource catering to essential AI readings and deeper exploration alike.

Unsloth AI (Daniel Han) Discord

- Quant Quirks & GGUF Gains: Participants explored converting Unsloth models to GGUF for quicker inference, noting that BnB 4bit might limit any major speed boosts and emphasizing high-quality data as key for training.

- They concluded that quantization alone can’t replace robust datasets, praising Unsloth for its VRAM-friendly approach and consistent performance.

- QLoRa Quests & Sprint Mode: Users wondered if QLoRa fine-tuning with the Llama 3.2:3B model is feasible on an 8 GB card, sharing experiences and concerns about memory constraints.

- They also asked about sprint mode, attaching an image hinting at future features but receiving no firm release date.

- Unsloth vs Ollama & Speed Scenes: A member evaluated Unsloth as a replacement for Ollama, intrigued by claims of 2x faster inference but noting the lack of a ready-made chat template.

- Others acknowledged Ollama for simpler setup, while Unsloth demanded more manual tweaks but promised strong speed benefits.

- Pro Delays & Multi-GPU Trials: Frustrations arose when users discovered Unsloth Pro remains unavailable despite willingness to pay, as the tool is not yet for sale.

- They heard Multi-GPU support is in test stages with a proposed release in 2025, fueling further excitement for advanced functionalities.

- Mixedbread’s Embedding Edge: Community members recommended Mixedbread for RAG tasks, comparing it with other open source models like Stella and Qwen from the MTEB leaderboard.

- They linked the Mixedbread model and pointed out its reranking features, prompting others to try it for sentence embeddings.

Stability.ai (Stable Diffusion) Discord

- Scammers Subvert Servers: A group explained how scammers compromise Discord servers by hacking inactive accounts and spamming users with malicious links.

- One user highlighted dedicated bots to filter these attacks, stating 'vigilance is key'.

- GPU Rentals Rouse Skepticism: Participants debated the legitimacy of renting a GPU for just $0.18/hr on certain platforms.

- One user called it 'too good to be true', pointing out that lower-tier hardware is pricier elsewhere.

- Inpainting Tactics in Focus: Members compared multiple AI inpainting workflows, including chained models for stronger results.

- They suggested using high-quality models for minimal setup while mixing diverse elements effectively.

- Video Generation Crowned by LTXV: Several participants recommended LTXV or Hunyuan for stable video diffusion tasks, praising their resource efficiency and performance.

- Others contrasted these solutions with outdated models that struggle to process frames efficiently, citing better optimization in newer approaches.

- Stable Diffusion Offline Steps: One user asked about offline usage of Stable Diffusion, prompting suggestions for local web UI setups.

- Resources included a Webui Installation Guide detailing GPU constraints and offline installation tips.

Stackblitz (Bolt.new) Discord

- Seasonal Slowdowns: AI's Odd Quirk: A TikTok-based study indicated that AI becomes less efficient in August and around Christmas, adopting patterns from human data during these slower periods.

- Members discussed providing seasonal insights to boost performance, suggesting that these slump months might be prime time for interesting experiments.

- Project Pains: Wasted Time and Limited Access: One user spent $15 and 3 hours on tasks without success, feeling frustrated over the lack of immediate results.

- Others voiced concerns about only having access to the last 30 days of chats, sparking questions on retrieving older Bolt projects for future reference.

- Mongo Mayhem: Bolt's Connection Conundrum: Community members struggled to link MongoDB with Bolt, citing backend constraints that hinder direct database connections.

- They mentioned using Firebase or Supabase as more compatible options, referencing Bolters.io documentation for alternative solutions.

- MVP Momentum: AI Tools Spark Swift Builds: Users praised Bolt.new for generating production-ready code for small MVPs, emphasizing the need to understand its parameters for smoother workflows.

- They shared community resources like Bolters.io to refine AI-driven development approaches while managing expectations on speed and stability.

OpenRouter (Alex Atallah) Discord

- Holiday Web Search Rolls Out: OpenRouter introduced Web Search for any language model, giving engineers real-time access to information during the festive season, as shown in this live demo.

- This free upgrade surfaced in the announcements channel and could expand to an official API feature, prompting ideas around cost management for token usage.

- Model Price Slashes Amp Up Enthusiasm: Various models got cheaper to encourage usage: qwen/qwen-2.5 coders received a 12% price cut, nousresearch/hermes-3 dropped by 11%, and meta-llama was cut by 31%.

- Developers labeled it a timely perk for holiday workloads, citing community feedback that applauded the budget-friendly changes.

- Endpoints API Emerges in Beta: OpenRouter introduced a beta version of the Endpoints API, visible at this link for developers to explore model metadata.

- Although missing official documentation, this preview indicates upcoming enhancements for refined customizations and possibly deeper integration options.

- Qwen Models Spark Mixed Reactions: Community members compared QVQ-72B with Llama 3.3 and Phi-4, noting differences in math and geometry handling while praising selective strengths.

- They referenced a Hugging Face repo for more insights, recognizing instruction-following gaps and varied task proficiency.

- Claude 3.5 Beta Clears Up Confusion: Participants established that Claude 3.5 beta and Claude 3.5 are virtually identical, with the beta featuring its own moderated endpoint.

- Reassurances surfaced about consistent coding capabilities, helping quell questions on performance variations between the two releases.

Perplexity AI Discord

- OpenAI's O3 Overdrive: OpenAI introduced the O3 Model, promising advanced capabilities for AI-driven workflows and shared highlights in the #[sharing] channel.

- Community feedback called O3 a 'significant step in AI evolution,' noting an emphasis on improved reasoning and user experience.

- Gemini Gains Ground: Google teased Gemini on Google AI Studio, positioning it as the next generation multimodal approach.

- Members speculated on Perplexity integration, citing a tweet from TestingCatalog News that hinted at Gemini 2.0 Pro.

- ClingyBench Checks AI Attachment: f1shy-dev introduced ClingyBench to measure 'clinginess' in AI models based on numeric differentials.

- Participants wondered if 'emotion-like' behaviors could be quantified, calling ClingyBench an amusing experiment in user-model interaction.

- LLMAAS Gains Traction: Members explored LLMAAS scenarios, brainstorming ways to streamline large language model hosting.

- They discussed load handling and pricing structures, viewing LLMAAS as a viable collaboration channel for multiple AI stakeholders.

- Llama 3 Light Launch: A brief mention of Llama 3 surfaced in #[pplx-api], with minimal official details on performance or release timing.

- Several users requested deeper specifications, calling official statements 'too brief' for a thorough technical assessment.

aider (Paul Gauthier) Discord

- Aider & .gitignore Gossip: Members confirmed Aider respects `.gitignore`, referencing documentation.

- However, confusion persists on whether future ignored files might be loaded, prompting calls for clearer docs.

- Voice UI Ventures: A user pursuing a real-time voice interaction UI for Aider highlighted the need for a dedicated API, citing this GitHub project.

- They expressed excitement for voice-driven features, hinting at new possibilities for direct spoken commands.

- Qwen’s QVQ-72B Surges: Discussants noted the impressive visual reasoning of QVQ-72B, citing the Hugging Face link.

- They pointed out strong performance metrics on MMMU, prompting curiosity about future visual AI benchmarks.

- BigCode-Bench Buzz: A brief mention of BigCode-Bench surfaced, pointing to an evaluation resource for coding models.

- This link spurred interest in tracking performance numbers and ensuring accurate comparisons across different model families.

- Cursor IDE & Aider Harmony: Users praised Cursor IDE for respecting `.gitignore` and providing a coding environment akin to VS Code, referencing Cursor docs.

- They noted simple settings imports and a smooth workflow when combining Cursor with Aider for coding tasks.

OpenAI Discord

- Meta's Mega-Concept Move: Meta introduced large concept models to expand key applications in advanced AI tasks.

- Members praised the approach, calling it a major step in refining model capabilities.

- Claude Conquers ChatGPT in Code: Switching from ChatGPT to Claude revealed improved coding performance in tasks with increased complexity.

- However, others suggested Gemini for larger projects due to better token limits.

- O1 Overdrive for ESLint Setup: Developers considered O1 for its higher model limits and potential to handle modern lint settings.

- They debated feeding O1 recent configs beforehand to mitigate outdated knowledge issues.

- Memory Boost in Personalization: Users enabled memory to store personal data, aiming for more context-aware AI replies.

- Several participants saw promise in adopting persistent memory for deeper interactions.

- Recipe Generation Goes Both Ways: Discussion centered on top-down vs bottom-up approaches for building advanced recipes with minimal cost.

- The group weighed how retrieval modes and variety demands interplay with effective outcome generation.

Modular (Mojo 🔥) Discord

- Mojo's Sprint into HFT: Some members said that Mojo outpaced C in certain tasks, suggesting it could support High-Frequency Trading algorithms.

- They also mentioned a firm that hosted a Kaggle Math Olympiad event, indicating interest in real-world applications beyond simple experiments.

- Bug Bites: 'input()' Crash: A user found that pressing ctrl-d with no input caused Mojo to crash, documented in Issue #3908.

- Developers recommended clarifying the error messaging and confirmed that reading errno is currently impossible.

- GPU Gains Still Brewing in Mojo: Participants noted that GPU support remains in preview, blocking integration with NuMojo for now.

- They targeted a timeline of about a year for more robust enhancements, hoping to accelerate Mojo’s approach to ML tasks.

- Mandelbrot Crash in MAX: A Mandelbrot implementation in MAX crashed on Mac with a dlsym error, hinting at Python build limitations.

- An improved custom op was shared to optimize C initialization, and they plan to merge it in January with further refinements.

- Mojo vs Julia: The Sci-Compute Scrimmage: Debate arose about Mojo possibly rivaling Julia in numerical work, referencing attempts to mirror Python’s success with numpy and matplotlib.

- While libraries like numojo are emerging, members foresee significant development for a fully mature ecosystem.

Notebook LM Discord

- Akas Amplifies AI Audio: A member introduced the Akas app at https://akashq.com, an invite-only platform to upload and share AI-generated podcasts, citing its elimination of content-discovery hassles.

- The community welcomed this concept, emphasizing the need for a more straightforward approach to storing and accessing NotebookLM-powered audio.

- NotebookLM Gains Praise & Criticism: One user praised NotebookLM for handling a hefty 20-year book series, calling it vital to keep characters, plot holes, and story lines organized.

- Others reported UI bugs, with frequent page refreshes disrupting creative flows, highlighting an ongoing demand for smoother usage on mobile and desktop.

- RSS Reigns in AI Podcasting: A participant insisted RSS feeds are essential for discoverability, underscoring how many platforms rely on standardized feeds.

- The group discussed generating dynamic RSS per user, plus hooking Google News for top stories to boost AI-driven audio content.

- Project Mariner Charts the Web: Google's Project Mariner emerged as a Gemini-powered AI agent automating web tasks in Chrome, like form filling, detailed in this TechCrunch article.

- Members called it a major leap for AI in browsing, expecting transformative shifts in how users tackle web-based tasks.

- Annual Review Gains LLM Boost: A user combined NotebookLM with Claude to refine their yearly assessment, spotlighting how LLMs can detect performance patterns.

- They described it as a 'Google Search of myself' and credited these LLM tools for shining a light on personal improvement opportunities.
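The per-user RSS idea discussed above could look something like the following minimal sketch, which builds an RSS 2.0 feed with Python's standard library. The function name build_user_feed, the episode dictionary keys, and the example.com URLs are all assumptions for illustration; nothing here reflects how NotebookLM or Akas actually publish audio.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_user_feed(user_id, episodes, base_url="https://example.com"):
    """Return an RSS 2.0 document (as a string) listing one user's episodes."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = f"AI podcasts for {user_id}"
    ET.SubElement(channel, "link").text = f"{base_url}/users/{user_id}"
    ET.SubElement(channel, "description").text = "Per-user feed of generated audio"

    for ep in episodes:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = ep["title"]
        ET.SubElement(item, "guid").text = ep["audio_url"]
        # RSS expects RFC 822 style dates, which format_datetime produces.
        ET.SubElement(item, "pubDate").text = format_datetime(ep["published"])
        # Podcast clients locate the audio file through the enclosure element.
        ET.SubElement(
            item,
            "enclosure",
            url=ep["audio_url"],
            length=str(ep["size_bytes"]),
            type="audio/mpeg",
        )
    return ET.tostring(rss, encoding="unicode")


example_episodes = [
    {
        "title": "Episode 1",
        "audio_url": "https://example.com/audio/ep1.mp3",
        "published": datetime(2024, 12, 24, tzinfo=timezone.utc),
        "size_bytes": 1234,
    }
]
example_feed = build_user_feed("alice", example_episodes)
```

Because the feed is generated per request from whatever episodes belong to that user, any standard podcast client could subscribe to it without a custom app.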

Latent Space Discord

- Concept Craze: Large Concept Models: The new Large Concept Models paper explores sentence-level representations for language modeling, but participants questioned its immediate utility.

- Some see synergy with steerable idea frameworks, fueling excitement despite skepticism about real-world feasibility.

- OCTAVE’s On-The-Fly Voice Feats: Hume introduced OCTAVE, a speech-language model enabling real-time voice and personality generation, as revealed in this announcement.

- Community reactions emphasized realistic voice synthesis potentially becoming broadly accessible.

- xAI’s $6B Funding Flood: xAI announced a Series C of $6B featuring investors like a16z, BlackRock, and Fidelity, detailed in their statement.

- Conversations hinted at possible hardware pivots toward AMD, referenced in tweets from Dylan Patel.

- Post-Transformers & the Subquadratic Showdown: A special session featuring @realDanFu and @picocreator tackled subquadratic attention beyond Transformers, shared in this pod.

- They offered bold opinions on context lengths beyond 10 million tokens versus RAG, prompting debate among scaling enthusiasts.

- Synthetic Data & ‘Smol’ Surprises: In a recap on Latent.Space, Loubna showcased top achievements in Synthetic Data and Smol Models this year.

- They addressed model collapse, highlighted textbooks like Phi and FineWeb, and considered on-device solutions for broader use.

Interconnects (Nathan Lambert) Discord

- QVQ 72B Tussles with GPT-4o & Claude Sonnet 3.5: The QVQ 72B model by Qwen was released on Hugging Face with vision capabilities, reportedly beating GPT-4o and Claude Sonnet 3.5 in performance.

- Community members highlighted strong improvements in reasoning tasks and expressed enthusiasm for more advanced visual-linguistic synergy.

- o1/o3 Weighted Decoding vs. Majority Voting: Members debated whether o1/o3 techniques rely on parallel trajectory generation and majority voting for cost-effective final answer selection.

- Some proposed picking the highest reward model output from the best candidate pool, while others questioned if a simple top-reward approach might match or exceed majority voting.

- QVQ Vision & The Product Rule: The QVQ visual reasoning model from Qwen applies mathematical functions and derivatives, as shown in their blog post, demonstrating product-rule-based evaluations.

- An example derivative at x=2 gave -29, suggesting potential for combining symbolic math logic with visual tasks in advanced LLMs.

- AMD Software Rant & the $500 Subscription: A Dylan video on AMD software drew mixed responses due to its circular style and limited clarity.

- Meanwhile, one user paid $500 for a Semianalysis subscription, joking they'd have done better following chat-based advice to invest in Nvidia stock.

- Curated LM Reasoning Papers Emerge: A compiled set of LM reasoning papers highlighted prompting, reward modeling, and self-training while skipping superficial Chain-of-Thought examples.

- They span deterministic and learned verifiers, prompting requests for any impactful works that may have been missed.
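To make the majority-voting versus top-reward debate above concrete, here is a toy sketch of three ways to pick a final answer from several sampled candidates. It says nothing about how o1 or o3 actually work; the function names and the sample rewards are invented for illustration, and the rewards stand in for scores from some hypothetical reward model.

```python
from collections import Counter


def majority_vote(answers):
    """Pick the most frequent answer (ties go to the one seen first)."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(answers, rewards):
    """Pick the single candidate with the highest reward score."""
    best_index = max(range(len(answers)), key=lambda i: rewards[i])
    return answers[best_index]


def reward_weighted_vote(answers, rewards):
    """Sum rewards per distinct answer and pick the largest total."""
    totals = {}
    for answer, reward in zip(answers, rewards):
        totals[answer] = totals.get(answer, 0.0) + reward
    return max(totals, key=totals.get)


# Five sampled final answers with made-up reward scores.
sampled_answers = ["42", "42", "41", "42", "17"]
sampled_rewards = [0.4, 0.4, 0.9, 0.3, 0.1]
```

With these numbers, majority voting and the reward-weighted vote both settle on "42", while best-of-n picks "41" because a single candidate received the top score. That gap is the crux of the disagreement: a top-reward rule is only as good as the reward model's ranking of individual samples.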

LM Studio Discord

- Intel AVX2 Clarifies LM Studio's Load Path: Engineers confirmed that LM Studio supports modern Intel CPUs with AVX2 instructions, referencing an i9-12900K as a confirmed working example.

- One user encountered an Exit Code 6 error with llama 3.3 mlx 70b 4bit, hinting that context length or model size might exceed system capabilities, although others reported success loading larger models.

- EPYC Endurance: Surprising CPU Gains: Tests showed 64-core EPYC processors churning out 26 tokens per second on CPU and 332 on GPU, performing beyond expectations with an 8b and 1b model.

- Discussion highlighted how PCIe 4 risers solved motherboard issues on ASUS boards, spurring curiosity about PCIe 5 risers and MCIO cables for further performance gains.

- Granite Gaps in Real-World Code Tests: A user voiced frustration with Granite models, claiming they repeatedly failed coding exercises despite glowing reviews online.

- This mismatch triggered debate on model credibility in practice versus marketing claims, with others questioning whether the Granite hype was overstated.

- ComfyUI & 4090 GPUs: VRAM Gains but GPU Pains: Running two 4090 GPUs in one system technically provides 48 GB of VRAM, but achieving maximum throughput in ComfyUI remains challenging.

- Participants noted VRAM alone does not ensure faster speeds unless context size demands it, and recommended draft models for optimizing tasks whenever large language models are employed.

GPU MODE Discord

- PyTorch's Symbolic Shuffle: In PyTorch builds, integers (and possibly floats) trigger symbolic recompilation as highlighted in torch.SymInt, prompting a preference for pre-emptive setup over runtime warm-ups.

- Contributors plan further experiments to confirm if floats also adopt this symbolic approach, aiming to avoid multiple just-in-time triggers in the kernel.

- Triton's Type-Hinted TMA Tactics: Triton developers considered type hints like def program_id(axis: int) -> tl.tensor, while also examining async TMA and warp specialization to tap into Hopper hardware.

- They discussed the differences between TMA and ldgsts without warp specialization, emphasizing multi-stage and persistent kernels for more flexible code generation.

- GPU Benchmark Showdown: Torch vs. Triton: A discussion contrasted triton.testing.do_bench and torch._inductor.utils.print_performance, noting the absence of torch.cuda.synchronize() in certain loops and the possible impact on kernel timing.

- Participants referenced this Triton testing snippet along with CUDA events for measuring kernel duration, suggesting that a single stream processes launches sequentially.

- BitNet's Ternary Takeover: BitNet gained attention by training with ternary weights, boasting a 97% energy cut and storing a 175B model in about 20MB, as seen in Noise Step Paper.

- One approach named Training in 1.58B With No Gradient Memory bypassed backprop, sparking discussions about memory-light methods on small benchmarks like MNIST.

- OREO Offline RL Rises: A method called OREO delivered 52.5% on MATH using a 1.5B language model without extra problem sets, as noted in this tweet.

- It sidesteps paired data and outperforms DPO in multi-step reasoning, allowing value-guided tree search at test time for free performance gains.
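For the BitNet item above, a minimal sketch of what "ternary weights" means in practice: each weight is scaled by the mean absolute value of the tensor, rounded, and clipped to -1, 0, or +1. This follows the absmean scheme described for BitNet b1.58; whether the Noise Step paper quantizes the same way is an assumption, and the function name ternarize is hypothetical.

```python
def ternarize(weights, eps=1e-8):
    """Quantize a list of floats to {-1, 0, +1} using absmean scaling.

    Returns the ternary values and the scale needed to approximately
    reconstruct the originals (w is roughly q * scale).
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    # Round to the nearest integer, then clip into the ternary range.
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale


example_weights = [0.9, -0.05, -1.2, 0.4, 0.0]
example_quantized, example_scale = ternarize(example_weights)
```

Each ternary weight carries at most log2(3), about 1.58 bits of information, which is where the "1.58" in the related paper titles comes from.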

Eleuther Discord

- Persistent Pythia: Pretraining Step Saga: A user requested extra Pythia model checkpoints at intervals, including optimizer states, to resume pretraining.

- They specifically needed 10 additional steps across the 160M/1.4B/2.8B series, acknowledging large file sizes.

- Hallucinatory Headaches: AI's Reality Distortion: A New York Times article on AI hallucinations sparked conversation about misleading outputs in advanced models.

- Participants noted the continuing challenge of verifying results to prevent these false claims from overshadowing real progress.

- ASAL's Big Leap: Automated Artificial Life: The Automated Search for Artificial Life (ASAL) approach uses foundation models to find simulations generating target phenomena and open-ended novelty.

- This method aims to reduce manual guesswork in ALife, offering new ways to test evolving systems with FMs rather than brute force.

- Coprocessor Craze: LLMs Offload for Gains: Research shared a strategy letting frozen LLMs tap an offline coprocessor, augmenting their key-value cache to boost performance.

- This yields lower latency and better reasoning, as the LLM defers heavier tasks to specialized hardware for significant speedups.

- CLEAR Momentum: Diffusion Transformers Race On: Diffusion Transformers introduced linear attention with a local strategy named CLEAR, cutting complexity in high-res image generation.

- Discussion also highlighted interest in physics-based metrics and potential partnerships for an automated research framework.

LlamaIndex Discord

- SKU Agent Slashes Manual Matching: Check out the new tutorial by @ravithejads showing how a document agent parses invoices and matches line items with standardized SKUs, as seen in this tutorial.

- It significantly reduces manual effort, demonstrating the efficiency of agentic workflows in an invoicing context.

- Single-line Contract Reviewer Tackles GDPR: A new template by @MarcuSchiesser illustrates how to build a contract review agent in just one line of code using @getreflex and llama_index, as shown in this tweet.

- It checks GDPR compliance for vendor agreements, hinting at a streamlined approach to contract analysis.

- Ollama LLM Overflows its Context Window: Users observed context window issues with Ollama LLM locally, even with a small prompt and top_k=1.

- They proposed increasing the LLM timeout to avert overflows, showing how configuration tweaks can address local LLM constraints.

- VectorIndexRetriever Hits Serialization Snag: @megabyte0581 encountered a ValueError stating IndexNode objects aren't serializable when using VectorIndexRetriever, referencing Issue #11478.

- They noted Chroma as their Vector DB and pivoted to a recursive retriever approach for a workaround.

- Inquiry on Llama Index Message Batching API: @snowbloom asked about tapping OpenAI/Anthropic's Message Batching API via the Llama Index LLM class, but saw no immediate replies.

- This highlights the ongoing need for clearer guidance on batch request handling in LLM workflows.

Nomic.ai (GPT4All) Discord

- Azure Expenditure Blues & GPT4All: A developer questioned whether it would be cost-effective to run GPT4All on Azure AI, citing the high price of GPU VMs.

- They worried about budget constraints for open-source hosting, and others warned that substantial usage could result in large bills.

- Vision Variance & AI 'Hallucinations': A user shared a YouTube video demonstrating a vision model, though it reportedly hallucinated its capabilities.

- Another observer found reliability questionable, pointing to a follow-up clip for more evidence.

- o1 Model Hooks & GPT4All Gains: A member pursued the o1 model on GPT4All by connecting an OpenAI-compatible server.

- Community feedback confirmed the setup's success, suggesting model integration is relatively straightforward.

- Ollama Proxy Path & GPT4All: A developer considered routing GPT4All requests through Ollama on a server to avoid local installs.

- Another user confirmed success by directing GPT4All to the URL endpoint, enabling an effortless remote proxy workflow.

- LocalFiles Limit Mystery: A user noticed GPT4All referencing only a fraction of files within LocalFiles, ignoring the full set.

- They suspected incomplete document coverage, prompting questions on GPT4All’s handling of multiple files in bulk queries.

LLM Agents (Berkeley MOOC) Discord

- No Noteworthy LLM Discussion #1: No advanced LLM or AI topics surfaced beyond administrative MOOC tasks, so there's nothing technical to highlight.

- We skip mundane certificate form issues in compliance with the guidelines.

- No Noteworthy LLM Discussion #2: No references to new models, datasets, or training strategies emerged from these conversations.

- Thus, we have no relevant content to share based on these guidelines.

tinygrad (George Hotz) Discord

- SemiAnalysis Slams AMD’s Software: SemiAnalysis criticized AMD for its software situation, questioning whether the company will deliver real improvements.

- Members responded that talk is cheap, highlighting broader concerns that the world wants monopolies.

- Lean Proof Bounty Beckons: A community member showed interest in the Lean proof bounty and requested support to tackle the challenge.

- They sought insights from others in formal methods, hoping to accelerate their progress.

- Discord Rules Baffle Some: A reminder to check Discord rules confused several members who couldn't find them easily.

- Comments such as "I do not see the rules for this discord" underscored the need for clearer guidelines.

- Tinygrad Applauded for Torch-Like Transition: One user commended the Tinygrad API for closely mirroring Torch, making complex project porting simpler.

- They noted that ChatGPT can convert Torch to Tinygrad effortlessly, emphasizing the library's approachable design.

Cohere Discord

- Snoopy's Santa Surprise: One user posted a cartoon of Snoopy dressed as Santa Claus holding a bell, showcasing the group's holiday spirit ahead of the season.

- This cheerful GIF prompted 'What's cooking this X-mas?' inquiries and lighthearted discussion, as members exchanged festive emojis and ideas.

- X-mas Culinary Curiosity: Participants chatted about Christmas cooking plans, brainstorming possible holiday menus and snacks.

- They signaled excitement for upcoming gatherings and feasts, sharing playful encouragement to spark more holiday cheer.

OpenInterpreter Discord

- Fumbles and Fatigue: A user voiced confusion over repeated mistakes, jokingly calling themselves a nuub while grappling with technical roadblocks in an almost continuous loop.

- They also mentioned long hours spent in front of the screen, intensifying frustration and prompting the community to ask 'Why does this happen?'

- TLDraw Tool Tease: Someone shared a link to computer.tldraw.com, suggesting a possible resource for visualizing or troubleshooting issues.

- Details remain limited, but the mention stirred interest among participants seeking fresh methods to tackle persistent frustrations.

DSPy Discord

- pyn8n v4 Debuts with Pythonic Workflow Power: The new pyn8n v4 upgrades n8n automation with code-driven Dynamic Workflow Generation and a user-friendly Conversational CLI.

- This release enables developers to efficiently create, manage, and monitor workflows, combining advanced orchestration features with straightforward Python APIs.

- Ash Framework & n8n API Wrapper Integration: The Ash Framework integrates advanced business logic throughout n8n workflows, staying invisible to automators.

- The new n8n API Wrapper also simplifies direct REST calls, incorporating node deployment via DSLModel for streamlined automation tasks.

MLOps @Chipro Discord

- Brief Shout-Out in #general-ml: One user posted "pretty cool thanks for sharing!", referencing an unspecified resource with no further details. No additional conversation points were introduced, leaving the discussion short-lived.

- No specific links, code repositories, or technical insights were shared alongside the message. Consequently, the channel saw no extended talk or follow-up beyond this note.

- Lack of Further Content: No other participants added to the conversation, nor did they raise any new issues or announcements. The overall chatter was minimal, providing no deeper AI or MLOps insights.

- Without extra context or references, there's nothing more to explore here. This message effectively concluded the session with no emergent topics.

LAION Discord

- GPT-4o Gains a Visual Edge: A user shared a tweet from Greg Brockman showcasing a GPT-4o generated image, signaling evolving possibilities for AI-driven visuals.

- Community members framed this as a significant step forward, reflecting excitement over potential next-level image creation.

- Team Bolsters GPT-4o's Toolbox: Participants highlighted the ongoing push to refine GPT-4o for improved image generation capabilities.

- They credited the team's work ethic and shared high hopes for future enhancements in AI imaging.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AINews, please share with a friend! Thanks in advance!