[AINews] not much happened today


a quiet week to start the year

AI News for 1/2/2025-1/3/2025. We checked 7 subreddits, 433 Twitters and 32 Discords (217 channels, and 2120 messages) for you. Estimated reading time saved (at 200wpm): 236 minutes. You can now tag @smol_ai for AINews discussions!

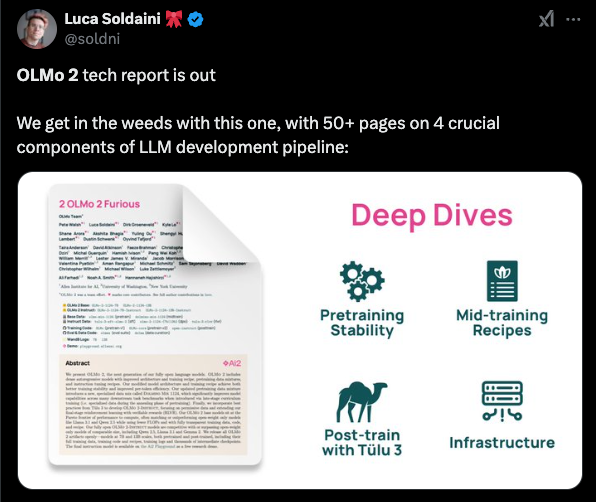

Lots of "open o1" imitators are causing noise, but most inspire little confidence, while o1 itself continues to impress. Olmo 2 released its tech report (our first coverage here), with characteristically full {pre|mid|post}-training detail for one of the few remaining frontier fully open models.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Performance

- Model Developments and Benchmarks: @_akhaliq introduced PRIME, an open-source solution advancing reasoning in language models, achieving 26.7% pass@1, surpassing GPT-4o. Additionally, @_jasonwei discussed the importance of dataset selection in evaluating Chain-of-Thought methods, emphasizing their effectiveness in math and coding tasks.

- Optimization Techniques: @vikhyatk benchmarked libvips, finding it 25x faster at resizing images compared to Pillow. Furthermore, @awnihannun reported that Qwen 32B (4-bit) generates at >40 toks/sec on an M4 Max, highlighting performance improvements.

- Architectural Insights: @arohan criticized the stagnation in architectural breakthroughs despite exponential increases in compute, suggesting that breakthroughs in architectures akin to SSMs or RNNs might be necessary.
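
For readers unfamiliar with the pass@1 figure quoted above, here is a minimal sketch of the widely used unbiased pass@k estimator. It is not taken from the PRIME release; the function name and the sample counts are illustrative assumptions.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n sampled solutions, c of which are correct.

    Uses the standard estimator 1 - C(n - c, k) / C(n, k): the probability
    that at least one of k samples drawn without replacement is correct.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Fewer than k incorrect samples exist, so any draw of k contains a hit.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Hypothetical counts chosen only for illustration: 8 correct out of 30.
    print(f"pass@1 = {pass_at_k(30, 8, 1):.3f}")
    print(f"pass@5 = {pass_at_k(30, 8, 5):.3f}")
```

With k=1 the estimator reduces to the plain fraction of correct samples, which is why pass@1 is usually read as single-attempt accuracy.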

AI Tools and Frameworks

- Development Tools: @tom_doerr shared Swaggo/swag, a tool for generating Swagger 2.0 documentation from Go code annotations, supporting frameworks like Gin and Echo. Additionally, @hendrikbgr announced integration between Cerebras Systems and LangChain.js, enabling streaming, tool calling, and structured output for JavaScript/TypeScript applications.

- Agent Frameworks: @jerryjliu0 previewed upcoming agent architectures for 2025, focusing on customizability for domains like report generation and customer support.

- Version Control and Security Tools: @tom_doerr introduced Jujutsu (jj), a Git-compatible VCS using changesets for simpler version control, and Portspoof, a security tool that makes all TCP ports appear open to deter attackers.

Robotics and Hardware

- Robotics Advances: @adcock_brett unveiled Gen 2 of their weapon detection system with a meters-wide field of view and faster image frame rate. Additionally, @shuchaobi promoted video speech models powered by their latest hardware designs.

- Hardware Optimization: @StasBekman added a high-end accelerator cache-sizes section, comparing cache architectures across manufacturers, and @StasBekman shared benchmarks comparing H100 vs MI300x, noting different winners for different use cases.

AI Applications and Use Cases

- Medical and Financial Applications: @reach_vb discussed Process Reinforcement through Implicit Rewards (PRIME) enhancing medical error detection in clinical notes. @virattt launched a production app for an AI Financial Agent integrating LangChainAI and Vercel AI SDK.

- Creative and Educational Tools: @andrew_n_carr demonstrated converting text to 3D printed objects using tools like Gemini and Imagen 3.0. @virattt also highlighted Aguvis, a vision GUI agent for multiple platforms.

- Workflow and Automation: @bindureddy detailed how agents manage workflows, data transformation, and visualization widgets, while @llama_index provided resources for building agentic workflows in invoice processing.

Industry Updates and News

- Company Growth and Investments: @sophiamyang celebrated 1-year at MistralAI, highlighting team growth from 20 to 100+ employees. @Technium1 reported on $80B spent by datacenters this year.

- Regulatory and Market Trends: @tom_doerr criticized the EU's fast-moving regulations, and @RichardMCNgo addressed concerns about H-1B visa holders and drop in tech investing.

- AI Leadership and Conferences: @swyx announced the AI Leadership track of AIEWF now available on YouTube, featuring insights from leaders like @MarkMoyou (NVIDIA) and @prathle (Neo4j).

Community and Personal Reflections

- Memorials and Personal Stories: @DrJimFan shared a heartfelt tribute to Felix Hill, expressing sorrow over his passing and reflecting on the intense pressures within the AI community.

- Productivity and Learning: @swyx emphasized the importance of self-driven projects for personal growth, and @RichardMCNgo advocated for recording work processes to enhance learning and data availability.

Memes and Humor

- Light-Hearted Takes: @Scaling01 joked about the irrelevance of architecture opinions, while @HamelHusain shared humorous reactions with multiple 🤣 emojis.

- Humorous Anecdotes: @andersonbcdefg posted about missing a rice cooker, and @teortaxesTex reacted with "🤣🤣🤣🤣" to amusing content.

- Funny Observations: @nearcyan humorously contrasted quantity vs quality in tweeting ideas, and @thinkzarak shared a witty take on AI's role in society.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. LLM Performance Leap Creates Demand for New Benchmarks

- Killed by LLM – I collected data on AI benchmarks we thought would last years (Score: 98, Comments: 18): GPT-4 in 2023 revolutionized AI benchmarks by not only surpassing state-of-the-art scores but also saturating them, marking a significant milestone akin to passing the Turing Test. By 2024, other models like O1/O3 and Sonnet 3.5/4o caught up, saturating math, reasoning, and visual benchmarks, while Llama 3/Qwen 2.5 made open-weight models competitive. The author argues for improved benchmarks by 2025 to better measure real-world reliability, as current benchmarks fail to assess tasks expected to be solved by 2030, and invites contributions to their GitHub repository for further development.

- Commenters discuss the limitations of AI models like GPT-4 and O1/O3 in handling complex tasks, noting their proficiency in generating initial code or boilerplate but struggles with integration, security, and niche problems. They emphasize that while these models can provide impressive overviews and solutions, they often fail with larger, more complex applications.

- The conversation highlights the potential need for a coding paradigm shift, suggesting frameworks that optimize code for AI comprehension. Robk001 and Gremlation discuss how breaking code into small, manageable chunks can improve AI performance, with the latter pointing out that quality input results in better AI output.

- Users like Grouchy-Course2092 and butteryspoink share experiences of increased productivity when providing detailed input to AI models. They note that structured approaches, such as using SDS+Kanban boards, can significantly enhance the quality of AI-generated code, suggesting that user input quality plays a critical role in AI effectiveness.

- LLM as survival knowledge base (Score: 83, Comments: 88): Large Language Models (LLMs) serve as a dynamic knowledge base, offering immediate advice tailored to specific scenarios and available resources, surpassing traditional media like books or TV shows. The author experimented with popular local models for hypothetical situations and found them generally effective, seeking insights from others who have conducted similar research and identified preferred models for "apocalypse" scenarios.

- Power and Resource Concerns: The practicality of using LLMs in survival scenarios is debated due to high power consumption. Some argue that small models, such as 7-9B, can be useful with portable solar setups, while others highlight the inefficiency of using precious resources for potentially unreliable AI outputs. ForceBru emphasizes the randomness of LLM outputs, while others suggest combining LLMs with traditional resources like books for more reliable guidance.

- Trustworthiness and Hallucination: Many commenters, including Azuras33 and Calcidiol, express concerns about LLM hallucinations, suggesting the integration of Retrieval-Augmented Generation (RAG) with grounded data sources like Wikipedia exports to improve reliability. AppearanceHeavy6724 and others discuss techniques like asking the same question multiple times to identify consistent answers and reduce hallucination risks.

- Model Fine-Tuning and Practical Usage: Lolzinventor and benutzername1337 discuss the potential of fine-tuning smaller models, like Llama 3.2 3B, for survival-specific knowledge, noting the importance of curating a dataset from survival and DIY resources. Benutzername1337 shares a personal experience using an 8B model during a survival trip, highlighting both its utility and limitations due to power constraints.
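
The "ask the same question multiple times" idea from the hallucination thread can be sketched as a simple majority vote. This is an illustration only: `ask` is a hypothetical callable standing in for whatever local model is in use, and the 60% agreement threshold is an arbitrary assumption.

```python
from collections import Counter
from typing import Callable, Optional, Tuple


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivially different replies match."""
    return " ".join(answer.lower().split())


def most_consistent_answer(
    ask: Callable[[str], str],  # hypothetical: sends a prompt, returns a reply
    question: str,
    runs: int = 5,
    min_agreement: float = 0.6,  # assumed threshold, tune to taste
) -> Tuple[Optional[str], float]:
    """Ask the same question several times and keep the majority answer.

    Returns (answer, agreement). The answer is None when no reply reaches
    the agreement threshold, which is a hint to distrust the model here.
    """
    answers = [normalize(ask(question)) for _ in range(runs)]
    answer, count = Counter(answers).most_common(1)[0]
    agreement = count / runs
    return (answer if agreement >= min_agreement else None), agreement


if __name__ == "__main__":
    # Canned replies stand in for a sampled local model.
    canned = iter(["Boil it.", "boil it. ", "Filter it", "BOIL IT.", "boil  it."])
    print(most_consistent_answer(lambda _q: next(canned), "How do I purify water?"))
```

Exact-match voting only works for short, factual answers; longer replies would need a looser comparison, and agreement between runs does not by itself prove an answer is correct.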

Theme 2. Deepseek V3 Hosted on Fireworks, Privacy and Pricing

- Deepseek V3 hosted on Fireworks (no data collection, $0.9/m, 25t/s) (Score: 119, Comments: 65): Deepseek V3 is now hosted on Fireworks, offering enhanced privacy by not collecting or selling data, unlike the Deepseek API. The model supports a full 128k context size, costs $0.9/m, and operates at 25t/s; however, privacy concerns have been raised about the terms of service. OpenRouter can proxy to Fireworks, and there are plans for fine-tuning support, as discussed in their Twitter thread.

- Privacy Concerns and Trustworthiness: Users express skepticism about Fireworks' privacy claims, noting that companies often have broad terms of service that allow them to use submitted content extensively. Concerns about data collection and potential misuse are highlighted, with some users questioning the trustworthiness of Fireworks.

- Performance and Cost Issues: Users report dissatisfaction with Fireworks when accessed through OpenRouter, citing slower response times and higher costs compared to alternatives. There are mentions of Deepseek V3 being an MoE model, which activates only 37B parameters out of 671B, making it cheaper to run when scaled, but users remain doubtful about the low pricing.

- Technical Implementation and Infrastructure: Discussions touch on the technical infrastructure needed for Deepseek V3's performance, suggesting that its cost-effectiveness may result from efficient use of memory and infrastructure design. Exolabs' blog is referenced for insights into running such models on alternative hardware like Mac Minis.

- Deepseek-V3 GGUF's (Score: 63, Comments: 26): DeepSeek-V3 GGUF quants have been uploaded by u/fairydreaming and u/bullerwins to Hugging Face. The poster asks for someone to report t/s on a system with 512GB DDR4 RAM and a single 3090 GPU.

- Memory Requirements: The discussion highlights that q4km requires approximately 380 GB of RAM plus additional space for context, totaling close to 500 GB, making it unsuitable for systems with less RAM like a Macbook Pro with an m4 chip. Q2 quantization is mentioned as having lower RAM requirements at 200 GB, but is considered ineffective.

- Hardware Considerations: Users are discussing hardware upgrades, with one planning to order an additional 256GB of DDR5 RAM to test the setup, while others express limitations due to motherboard constraints. bullerwins provides performance benchmarks, noting 14t/s prompt processing and 4t/s text generation with Q4_K_M on their setup, and mentions using an EPYC 7402 CPU with 8 DDR4 memory channels.

- Performance Comparisons: There is a debate on the performance of CPUs versus 4x3090 GPUs, with bullerwins noting a 28% performance loss in prompt processing and 12% in inference when using a CPU compared to GPUs. The GPUs can only load 7 out of 61 layers, highlighting the limitations of GPU memory in this context.
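
The figures quoted in the two Deepseek V3 threads above can be cross-checked with some back-of-envelope arithmetic. The sketch below uses only numbers from the discussion, plus two loud assumptions: "GB" means 10^9 bytes, and every layer is the same size (which real checkpoints do not guarantee).

```python
# Figures quoted in the threads; units and even layer sizes are assumptions.
TOTAL_PARAMS = 671e9   # total parameters
ACTIVE_PARAMS = 37e9   # parameters activated per token (MoE)
Q4_K_M_GB = 380.0      # reported weight size for the Q4_K_M quant
Q2_GB = 200.0          # reported weight size for the Q2 quant
NUM_LAYERS = 61
GPU_LAYERS = 7         # layers reported to fit on 4x3090


def active_fraction(active: float = ACTIVE_PARAMS, total: float = TOTAL_PARAMS) -> float:
    """Share of the parameters used for any single token."""
    return active / total


def implied_bits_per_weight(size_gb: float, params: float = TOTAL_PARAMS) -> float:
    """Average bits per weight implied by a file size in decimal GB."""
    return size_gb * 1e9 * 8 / params


def offloaded_gb(size_gb: float, gpu_layers: int = GPU_LAYERS,
                 num_layers: int = NUM_LAYERS) -> float:
    """Crude size of the GPU-resident layers, assuming equal-sized layers."""
    return size_gb * gpu_layers / num_layers


if __name__ == "__main__":
    print(f"active share per token: {active_fraction():.1%}")
    print(f"Q4_K_M bits/weight:     {implied_bits_per_weight(Q4_K_M_GB):.2f}")
    print(f"Q2 bits/weight:         {implied_bits_per_weight(Q2_GB):.2f}")
    print(f"7 of 61 layers (Q4):    {offloaded_gb(Q4_K_M_GB):.1f} GB")
```

Under these assumptions only about 5.5% of the weights are active per token, the reported sizes imply roughly 4.5 and 2.4 bits per weight, and seven layers come to roughly 44 GB of weights. Note that the inactive weights still have to sit in memory, so the MoE design saves compute per token, not RAM.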

Theme 3. Tsinghua's Eurus-2: Novel RL Methods Beat Qwen2.5

- Train a 7B model that outperforms GPT-4o ? (Score: 74, Comments: 10): The Tsinghua team introduced PRIME (Process Reinforcement through Implicit Rewards) and Eurus-2, achieving advanced reasoning with a 7B model that surpasses Qwen2.5-Math-Instruct using only 1/10 of the data. Their approach addresses challenges in reinforcement learning (RL) by implementing implicit process reward modeling to tackle issues with precise and scalable dense rewards and RL algorithms' efficiency. GitHub link

- GPU requirements were questioned by David202023, seeking details on the hardware needed to train a 7B model, indicating interest in the technical specifications.

- Image visibility issues were raised by tehnic, who noted the inability to view images, suggesting potential accessibility or hosting problems with the project's resources.

- Model testing plans were expressed by ozzie123, who intends to download and evaluate the model, showcasing community engagement and practical interest in the project's outcomes.
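
The post does not spell out how the implicit process rewards are computed. One common reading, assumed here and not confirmed by the source, is that each generated token is scored by a scaled log-likelihood ratio between a trained reward model and a frozen reference model, so dense rewards come for free from an outcome-trained model. The function name, the `beta` value, and the log-probabilities below are all hypothetical.

```python
from typing import List, Sequence


def implicit_process_rewards(
    reward_model_logprobs: Sequence[float],  # log p(token | prefix) under the reward model
    reference_logprobs: Sequence[float],     # same tokens under the frozen reference model
    beta: float = 0.05,                      # assumed scaling coefficient
) -> List[float]:
    """Per-token reward r_t = beta * (log p_rm(y_t) - log p_ref(y_t)).

    Summing the per-token rewards gives the sequence-level implicit reward,
    so no step-level labels are needed to obtain a dense signal.
    """
    if len(reward_model_logprobs) != len(reference_logprobs):
        raise ValueError("both models must score the same tokens")
    return [beta * (p - r) for p, r in zip(reward_model_logprobs, reference_logprobs)]


if __name__ == "__main__":
    # Made-up log-probabilities for a four-token response.
    rewards = implicit_process_rewards(
        [-0.2, -1.1, -0.4, -0.9],
        [-0.6, -1.0, -1.2, -0.9],
    )
    print("token rewards:  ", [round(r, 3) for r in rewards])
    print("sequence reward:", round(sum(rewards), 3))
```

Tokens the reward model finds more likely than the reference does get positive credit, and the reverse gets negative credit. How these rewards are then combined with outcome rewards in the RL update is outside the scope of this sketch.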

Theme 4. OLMo 2.0: Competitive Open Source Model Released

- 2 OLMo 2 Furious (Score: 117, Comments: 29): OLMo 2 is aiming to surpass Llama 3.1 and Qwen 2.5 in performance, indicating a competitive landscape in AI model development. The post title suggests a focus on speed and intensity, possibly referencing the "Fast and Furious" film franchise.

- OLMo 2's Performance and Data Strategy: OLMo 2 models are positioned at the Pareto frontier of performance relative to compute, often surpassing models like Llama 3.1 and Qwen 2.5. The team uses a bottom-up data curation strategy, focusing on specific capabilities such as math through synthetic data, while maintaining general model capabilities with high-quality pretraining data.

- Community and Open Source Engagement: The release of OLMo 2 is celebrated for its openness, with all models, including 7B and 13B scales, available on Hugging Face. The community appreciates the fully open-source nature of the project, with recognition for its transparent training data, code, and recipes.

- Future Developments and Community Interaction: The OLMo team is actively engaging with the community, with discussions about potential larger models (32B or 70B) and ongoing experiments applying the Molmo recipe to OLMo 2 weights. The team has also shared training data links for Molmo on Hugging Face.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Video Generation Tool Comparison: Sora vs Veo2 vs Minimax

- Pov trying to use the $200 version of Sora... (Score: 164, Comments: 47): The post lacks specific content or context for a detailed summary, as it only mentions the $200 version of Sora in the title without further elaboration.

- Discussions highlight concerns about content filtering in AI models, with users expressing frustration over policy violations triggered by non-sexual content. Some argue that the filtering system is overly restrictive, particularly regarding depictions of women, and question the efficacy of current content moderation approaches.

- Video generation alternatives like hailuoai.video and minimax are discussed, with users comparing their features and effectiveness. Veo2 is noted for its superior results, though access is limited due to a waitlist, and hunyuan video is mentioned as a strong competitor.

- Users discuss the copyright paradox in AI training, pointing out the inconsistency in allowing models to train on copyrighted material while restricting generated outputs that resemble such material. Concerns about the high denial rate of content and the potential for increased restrictions to avoid negative publicity are also raised.

Theme 2. GPT-4o: Advanced Reasoning Over GPT-3.5

- Clear example of GPT-4o showing actual reasoning and self-awareness. GPT-3.5 could not do this (Score: 112, Comments: 74): The post discusses GPT-4o's capabilities in advanced reasoning and self-awareness, noting that these features are improvements over GPT-3.5. Specific examples or context are not provided in the post body.

- Discussions highlight that GPT-4o's ability to recognize and explain patterns is not indicative of reasoning but rather enhanced pattern recognition. Commenters emphasize that the model's ability to identify patterns like "HELLO" is due to its training and tokenization processes, rather than any form of self-awareness or reasoning.

- Several commenters, including Roquentin and BarniclesBarn, explain that the model's performance is due to tokenization and embeddings, which allow it to recognize patterns without explicit instructions. This aligns with the model's design to predict the next token based on previous context, rather than demonstrating true reasoning or introspection.

- The conversation also touches on the limitations of the "HELLO" pattern as a test, suggesting that using non-obvious patterns could better demonstrate reasoning capabilities. ThreeKiloZero and others suggest that the model's vast training dataset and multi-parametric structure allow it to match patterns rather than reason, indicating the importance of context and training data in its responses.

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. Performance Sagas and Slowdowns

- DeepSeek Takes a Dive: Users griped about DeepSeek v3 dropping to 0.6 TPS, sparking calls for server scaling. They monitored the status page for signs of relief, but many still yearned for a faster model.

- Windsurf and Cascade Credits Clash: People saw internal errors drain credits in Windsurf, leading to confusion. Despite automated assurances, charges persisted, prompting frustrated posts about refunds.

- ComfyUI Under the Hood: SwarmUI runs on ComfyUI’s backend for user-friendly rendering, while rival setups like Omnigen or SANA lag behind. Fans praised LTXVideo and HunyuanVideo for blazing-fast video generation with minimal quality loss.

Theme 2. Credit Crunch and Cost Confusion

- $600k Model Training Sticker Shock: Engineers shared jaw-dropping GPU bills for training large models, with 7B parameters costing around $85k. They debated cheaper hosting options like RunPod and low-rank adapters from the LoQT paper.

- Payment Woes Plague API Users: Credit card declines in OpenRouter and subscription quirks in Perplexity stirred confusion. Some overcame it by switching cards or clearing caches, but annoyance simmered.

- Flex vs. Premium Credits: Multiple communities slammed usage caps and missing rollovers. Paying for unused tokens or dealing with “internal error” sessions fueled calls for more transparent plans.

Theme 3. Model Debuts and Fine-Tuning Frenzy

- Sonus-1 Steals the Show: Its Mini, Air, Pro, and Reasoning variants drew chatter for advanced text gen in 2025. A tweet showcased swift code outputs combining Aider with Sonus mini models.

- Swarm Library Joins NPM: This TypeScript multi-agent AI library touts synergy beyond OpenAI’s Swarm, winning praise for modular design. Others pinned hopes on PersonaNLP at ICML 2025, focusing on persona-based NLP tasks.

- Qwen2-VL & Llama 3.1 Spark Tuning: Communities wrestled with partial or missing vision adapters, while Llama 3.x and SmallThinker-3B soared in performance. People also fine-tuned with Unsloth, Axolotl, or Hugging Face Transformers/PEFT for custom tasks.

Theme 4. Tooling Triumphs and Tensions

- GraphRAG and Graphrag Grab Headlines, with new retrieval-augmented generation strategies fueling interest in code and text tasks. Conversations covered multi-retriever setups and weighting vectors to improve query results.

- Invoice Agents and K-Summary Betas: LlamaIndex showcased automatically classifying invoices with spend categories and cost centers, while new summarizers in the Korean market lured testers. Users raved about chunked cross entropy and memory-savvy approaches in Torchtune.

- AI for Coding Hustle: Codeium, Cursor, and Aider communities battled random code changes, linting mania, and limited plan tiers. Despite frustration, many lauded faster dev cycles and more consistent code suggestions.
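
The multi-retriever discussion above does not say how results were combined. As one plausible illustration, the sketch below uses weighted reciprocal rank fusion, a generic technique swapped in here for demonstration. The retriever names, weights, and document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def weighted_rrf(
    rankings: Dict[str, List[str]],  # retriever name -> doc IDs, best first
    weights: Dict[str, float],       # retriever name -> weight (default 1.0)
    k: int = 60,                     # conventional RRF smoothing constant
) -> List[str]:
    """Fuse ranked lists: score(doc) = sum of weight / (k + rank) per retriever."""
    scores: Dict[str, float] = defaultdict(float)
    for name, docs in rankings.items():
        weight = weights.get(name, 1.0)
        for rank, doc in enumerate(docs, start=1):
            scores[doc] += weight / (k + rank)
    # Highest score first; ties are broken by doc ID for determinism.
    return sorted(scores, key=lambda doc: (-scores[doc], doc))


if __name__ == "__main__":
    rankings = {
        "vector": ["doc_a", "doc_b", "doc_c"],
        "graph": ["doc_c", "doc_a"],
    }
    print(weighted_rrf(rankings, {"vector": 1.0, "graph": 1.0}))
    print(weighted_rrf(rankings, {"vector": 1.0, "graph": 2.0}))
```

Rank-based fusion avoids having to calibrate raw similarity scores across retrievers, at the cost of ignoring how confident each retriever was.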

Theme 5. Hardware, VRAM, and HPC Adventures

- RTX 50xx VRAM Brouhaha left engineers suspecting NVIDIA artificially caps memory. They mulled whether bigger VRAM or cunning offload solutions to main RAM are the real path forward.

- Torch.compile Headaches: Users saw major slowdowns with Inductor caching, dynamic br/bc in Flash Attention, and tricky Triton kernel calls. They tested environment hacks to dodge segfaults, hoping official patches solve the compile chaos.

- 1-bit LLM Hype: BitNet’s talk of ternary weights and dramatic resource cuts excited HPC fans. Some bet these low-bit breakthroughs will slash training bills without sacrificing model accuracy.
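
To make the "ternary weights" idea concrete, here is a minimal sketch of absmean-style ternary quantization as it is commonly described for BitNet-type models: divide by the mean absolute weight, round, and clip to {-1, 0, 1}. It is a toy on a flat list of floats, not the authors' implementation, and the function names and sample weights are illustrative.

```python
from typing import List, Sequence, Tuple


def ternarize(weights: Sequence[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Quantize weights to {-1, 0, 1} using a single absmean scale.

    Returns the ternary codes and the scale needed to dequantize them.
    """
    if not weights:
        raise ValueError("weights must not be empty")
    scale = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return codes, scale


def dequantize(codes: Sequence[int], scale: float) -> List[float]:
    """Approximate the original weights from ternary codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.9, -0.05, 0.4, -1.2]  # made-up values
    codes, scale = ternarize(weights)
    print("codes:", codes)
    print("scale:", scale)
    print("reconstructed:", dequantize(codes, scale))
```

Three states carry about 1.58 bits of information per weight, which is where the "1.58-bit" label often attached to this scheme comes from. The claimed savings depend on training with the quantization in the loop, not on rounding an existing model after the fact.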

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

- Whirling Windsurf Woes: Many members reported major performance issues with Windsurf, citing excessive internal errors and slowdowns, referencing this tweet about bringing Figma designs to life.

- They also observed Claude 3.5 Sonnet deteriorating mid-session, causing unexpected credit drains despite official disclaimers at Plan Settings.

- Cascade Credits Controversies: Community discussions focus on Cascade charging credits even during failed operations, with repeated 'internal error' messages leading to confusion.

- Several users claim these charges persist contrary to automated assurances, prompting some to escalate via Support | Windsurf Editor and Codeium extensions.

- DeepSeek v3 vs. Sonnet 3.6 Showdown: Some argue DeepSeek v3 falls short of Sonnet 3.6 despite benchmark claims, preferring free alternatives like Gemini.

- They cite skepticism about DeepSeek’s real advantage, while others reference Things we learned about LLMs in 2024 for further data.

- Code Editing Chaos in Windsurf: Users mentioned random code changes and incomplete tasks, requesting clearer solutions to maintain continuity in the AI’s workflow.

- Many resort to saving instructions in external files, then reloading them to keep the conversation on track.

- Credit System Grumbles: Members criticized the Premium and Flex credits structure, complaining about usage caps and missed rollovers.

- They urged a fairer allocation model, with reports of mixed success via email and Support | Windsurf Editor and Codeium extensions.

aider (Paul Gauthier) Discord

- Sonus-1 Steps onto the Stage: The newly introduced Sonus-1 Family (Mini, Air, Pro, Reasoning) was presented in a blog post, focusing on advanced text generation capabilities for 2025.

- A tweet from Rubik's AI highlighted swift code generation in the mini models, prompting discussion on synergy with Aider.

- Deepseek Stumbles Under Heavy Load: Community members observed Deepseek dropping to 1.2 TPS, igniting complaints about server capacity and reliability.

- Others verified that Deepseek Chat v3 remains accessible via --model openrouter/deepseek/deepseek-chat, but questioned if more servers are needed.

- OpenRouter's API Key Confusion: Some faced authentication headaches with the OpenRouter API, suspecting incorrect key placement in config files.

- One user confirmed success by double-checking model settings, advising the community to watch for hidden whitespace in YAML.

- Tailwind & Graphrag Grab Spotlight: Members explored adding Tailwind CSS documentation context into Aider, with suggestions to copy or index relevant info for quick reference.

- Microsoft's Graphrag tool also came up as a RAG alternative, spurring interest in more efficient CLI implementations.

- Aider Wish List Widened: Users requested toggling class definitions and prior context to refine code edits, aiming to cut down on irrelevant suggestions.

- They also envisioned better control over command prompts, citing advanced context management as a prime next step.

Unsloth AI (Daniel Han) Discord

- OpenWebUI Exports Expand Dataset Horizons: Members discussed exporting chat JSON from OpenWebUI for dataset creation, referencing owners for formatting advice.

- They highlighted potential synergy with local inference setups like vLLM, noting that combining well-structured data with advanced inference can improve training outcomes.

- Ollama Quantization Quandaries: Challenges emerged around quantizing models for Ollama, with users noting that default GGUF files run in FP16.

- Attendees recommended manual adjustments and pointed to the mesosan/lora_model config for potential solutions.

- Fine-Tuning Frenzy for Classification: Community members recommended Llama 3.1 8B and Llama 3.2 3B for tasks of moderate complexity, citing good performance with classification tasks.

- They emphasized using GPU hardware like RTX 4090 and pointed out Unsloth's documentation for tips on efficient finetuning.

- Fudan Focuses on O1 Reproduction: A recent Fudan report provided in-depth coverage of O1 reproduction efforts, available at this paper.

- It was praised by one member as the most thorough resource so far, igniting interest in the next steps for the O1 project.

- Process Reinforcement Teases Code Release: The Process Reinforcement paper garnered attention for its ideas on implicit rewards, though many lamented the lack of code.

- Community members remain optimistic that code will be published soon, describing it as a work in progress worth watching.

Cursor IDE Discord

- DeepSeek V3 Model's Privacy Puzzle: Members raised concerns about DeepSeek V3 potentially storing code and training on private data, emphasizing privacy issues and uncertain benefits in user projects.

- They questioned the personal and enterprise risks, debating whether the model's advantages justify its possible data retention approach.

- Cursor Slashes Dev Time: A user shared a quick success story using Cursor with SignalR to finalize a project in far less time than expected.

- Others chimed in with positive feedback, noting how AI-driven tools are helping them tackle complex development tasks more confidently.

Nous Research AI Discord

- Swarm's NPM Invasion: The newly published Swarm library for multi-agent AI soared onto NPM, offering advanced patterns for collaborative systems beyond OpenAI Swarm. It is built with TypeScript, has a model-agnostic design, and was last updated just a day ago.

- Community members praised its modular structure, mentioning it as a bold step toward flexible multi-agent synergy that could outdistance older frameworks in performance.

- PersonaNLP Preps for ICML 2025: A planned PersonaNLP workshop for ICML 2025 seeks paper submissions and shared tasks, spotlighting user-focused methods in language modeling. Organizers are openly coordinating with researchers interested in refining persona-based NLP approaches.

- Participants recommended specialized channels for deeper collaboration and expressed an eagerness to bolster the workshop’s scope.

- Costs Soar for Giant Models: Recent discussions revealed model training bills reaching $600,000, highlighted by a Hacker News post and a tweet from Moin Nadeem. Members noted that a 7B model alone can cost around $85,000 on commercial GPUs.

- Some engineers pointed to services like RunPod for cheaper setups and explored whether low-rank adapters from the LoQT paper could reduce spending.

- Hermes Data Dilemma: Community members spotted no explicit training data for certain adult scenarios in Hermes, speculating that this omission might constrain the model’s broader capabilities. They questioned whether the lack of such data could limit knowledge breadth.

- One voice claimed skipping these data points removes potentially pivotal nuance, while others argued it was a reasonable compromise for simpler model outputs.

- Llama Weight Whispers: Analysts found unexpected amplitude patterns in K and Q weights within Llama2, implying inconsistent token importance. They shared images that suggested partial redundancy in how weights represent key features.

- Members debated specialized fine-tuning or token-level gating as possible remedies, underscoring new angles for improving Llama2 architecture.

LM Studio Discord

- Extractor.io's Vanishing Act: One user discovered that Extractor.io apparently no longer exists, raising confusion despite this curated LLM list offered as an alternative.

- Others questioned the abrupt disappearance, with some suggesting it may have folded into a different domain or rebranded.

- LM Studio Goes Text-Only: Community members confirmed that LM Studio focuses on large language models and cannot produce images.

- They suggested Pixtral for picture tasks, noting it depends on the MLX Engine and runs only on specific hardware.

- Qwen2-VL Without Vision: Enthusiasts observed that Qwen2-VL-7B-Instruct-abliterated lacks the ability to process images due to its missing vision adapter.

- They emphasized that proper quantization of the base model is critical for fully using its text-based strengths.

- Training on the Entire Internet?: A user floated the idea of feeding an AI all internet data, but many flagged overwhelming size and poor data quality as pitfalls.

- They stressed that bad data undermines performance, so quality must trump sheer volume.

- GPU Offload for Quicker Quests: Local LLM fans tapped GPU support to speed up quest generation with Llama 3.1, citing better responses.

- They recommended selecting GPU-enabled models and watching Task Manager metrics, referencing success with a 4070 Ti Super setup.

Notebook LM Discord

- Multilingual Mischief in AI: Enthusiasts tested audio overviews in non-English settings by issuing creative prompts, showing partial success for language expansion.

- They reported inconsistent translation quality, with suggestions for better language-specific models to address these gaps.

- K-Summary Beta Attracts Attention: A user promoted a new AI summarization product that soared in the Korean market, offering a chance for beta testers to try streamlined summaries.

- Several community members expressed eagerness to compare it against current summarizers for faster text processing.

- Customization Function Sparks Debate: Members worried that adjusting system prompts could expose ways to bypass normal AI restrictions.

- They debated boundaries between creative freedom and safe AI usage, weighing the potential benefits against misuses.

Stackblitz (Bolt.new) Discord

- Bolt Embraces AI Logic for Web Apps: A member shared plans to integrate logic/AI in their Bolt web apps, praising the visuals while needing better functionality and referencing BoltStudio.ai.

- They asked for strategies to merge code-driven workflows with AI modules, with incremental upgrades and local testing cited as ways forward.

- Supabase Eases Email Logins in Bolt: Developers praised Supabase email authentication for Bolt-based apps, highlighting local storage to manage user roles.

- They pointed to StackBlitz Labs for bridging frontends with flexible backends, while acknowledging continued debates on Bolt’s token usage.

Perplexity AI Discord

- O1 vs ChatGPT: Perplexity’s Punchy Search: Perplexity O1 drew mixed reactions, with some complaining about 10 daily searches and calling it a hassle, while others found it promising for search-centric tasks.

- Comparisons to ChatGPT praised Opus for unlimited usage and lengthy context, as noted in this tweet.

- Grok’s Gains or Gripes: Some called Grok "the worst model" they've used despite its lower cost, fueling debates on model reliability.

- Others touted the 3.5 Sonnet model for stronger performance, hinting at shifting user loyalties.

- Perplexity’s UI Shake-Up & Subscriptions: Recent UI changes added stock and weather info, prompting one user to clear cache to avoid annoying homepage elements.

- Members discussed unlimited queries and cost-saving approaches in AravSrinivas’s tweet, showcasing varied subscription choices.

- 2025 AI Interview Qs to Impress: A shared guide outlined methods for tackling tricky AI questions in 2025, boosted by this link.

- Participants deemed thorough preparation essential for staying competitive in the hiring landscape.

- Europe-Based API & 1.5B-Token Chatbot Hopes: One user expects European servers matching pro search speeds to power a 1.5B-token chatbot with better performance.

- They believe this integration will enhance the chatbot’s utility, especially for large-scale token usage.

OpenRouter (Alex Atallah) Discord

- OpenRouter's Auth Aggravations: Multiple users saw 'Unauthorized' errors when attempting requests, even with credits available and the correct API key at OpenRouter. They reported changing HTTPS addresses and adjusting certificates without relief.

- Some speculated the issue might involve n8n configuration mismatches or connectivity problems, noting that manual tweaks to URL settings still failed.

- DeepSeek's Dreaded Drag: Community members complained of 0.6 TPS speeds on DeepSeek v3, causing slow responses. The DeepSeek service status page showed high demand and potential scaling shortfalls.

- They expressed worry that usage had outpaced current forecasts, prompting calls for capacity boosts before more widespread rollouts.

- Structured Output Seeks a Savior: A user wanted an alternative to gpt-4-mini for JSON-formatted replies but found limited choices in the current lineup. Others suggested Gemini Flash and pointed to LiteLLM for handling multiple APIs in a unified interface.

- They noted potential rate limit constraints and recommended monitoring usage metrics, referencing RouteLLM as another solution for routing requests across various models.

- Janitor AI Joins OpenRouter: Members debated how to link Janitor AI with OpenRouter, focusing on advanced settings for API endpoints. They outlined toggling certain authentication fields and matching the proxy URLs so the two services work together.

- Various configurations were shared, concluding that correct URL alignment and token handling made the integration seamless.

- Cards Declined, Payments Denied: Some users hit credit card failures when attempting to pay through OpenRouter, although different cards sometimes worked fine. One user noted consistent issues with a Capital One card, while a second card processed successfully.

- They considered potential bank-specific rules or OpenRouter’s payment gateway quirks, advising those affected to try multiple billing methods.
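For the 'Unauthorized' errors discussed above, one basic check is whether the outgoing request really carries the key as a Bearer token. The sketch below builds a chat completion request with the standard library and does not send it. `build_request` is a hypothetical helper, and the key and model name are placeholders, not values from the discussion.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    # A key pasted with stray whitespace is an easy mistake to overlook.
    if not api_key or api_key != api_key.strip():
        raise ValueError("API key is empty or has stray whitespace")
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        OPENROUTER_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only.
req = build_request("YOUR_KEY", "placeholder/model", "ping")
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))
```

Printing the header before sending makes it easier to tell a malformed key apart from a proxy or tool (such as n8n) that rewrites the request on the way out.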

Stability.ai (Stable Diffusion) Discord

- SwarmUI Zips Ahead with ComfyUI: Members explained how SwarmUI uses ComfyUI’s backend for a simpler UI with the same performance, emphasizing user-friendliness and robust features.

- They also highlighted a Stable Diffusion Webui Extension that streamlines model management, prompting chatter about frontends for better workflows.

- SANA & Omnigen Spark Space Showdown: Community tested SANA for speedy inference on smaller hardware, contrasting it with Omnigen that’s slower and sometimes behind SDXL in image quality.

- Enthusiasts questioned if SANA justifies HDD usage, especially when Flux might offer better model performance.

- LTXVideo & HunyuanVideo Go Full Throttle: LTXVideo earned praise for faster rendering on new GPUs with almost no drop in quality, outpacing older video pipelines.

- Meanwhile, HunyuanVideo introduced quicker steps and better compression, fueling excitement about recent strides in video generation.

- Flux Dev Rocks Text-in-Image Requests: Members identified Flux Dev as a top open-source model for embedding text in images, rivaling closed solutions like Ideogramv2 and DALL-E 3.

- They also cited Flux 1.1 Ultra as the best closed model for crisp text output, referencing user tests and side-by-side comparisons.

- GPU Gains & Memory Must-Haves: Enthusiasts suggested RTX-series cards for AI tasks, with advice to wait for upcoming releases that might cut prices further.

- They stressed at least 32GB RAM and decent VRAM for smooth image generation, highlighting stability benefits.

Eleuther Discord

- RTX 50xx VRAM Limits Light a Fuse: Engineers debated rumored VRAM caps in the RTX 50xx series, suspecting that NVIDIA artificially restricts memory to avoid product overlap.

- Some questioned if the added GBs would matter for AI tasks, revealing frustration about potential performance bottlenecks.

- VRAM vs. RAM: The Memory Melee: Multiple participants argued that VRAM could be reclassified as L3 Cache, noting that regular RAM might be 4x slower than VRAM in certain contexts.

- Others pondered pipelining commands between VRAM and RAM, warning that any mismatch could hamper throughput for large-scale model inference.

- Higher-Order Attention Shakes Things Up: Researchers explored attention on attention techniques, referencing expansions in the Quartic Transformer and linking them to Mamba or SSM style convolutions.

- They tied these ideas to ring attention, citing the second paper’s bigger context window and highlighting possible line-graph or hypergraph parallels.

- HYMBA Heads Off SWA: Community members argued that HYMBA mixing full attention on certain layers might undercut the efficiency gains behind SWA or SSM.

- They considered a trade-off between more robust cross-window representation and extra overhead, noting that the actual performance boost requires further tests.

- PyTorch Flex Attention Bugs Persist: A few users reported ongoing troubles with PyTorch's Flex Attention, which blocked attempts at complex attention patterns.

- They found that `torch.compile` often clashed with lesser-used model features, forcing them to revert to standard attention layers until a fix emerges.

Latent Space Discord

- 2024 LLMs & Image Generation Gains: An upcoming article, LLMs in 2024, spotlights major leaps for Large Language Models, including multimodal expansions and fierce price competition. The community also noted trending meme culture with generative images that turned an everyday person into a comedic 'bro' and gave Santa a no-nonsense look.

- Members credited these cross-domain breakthroughs for spurring broader creative usage, emphasizing how new metrics push LLM performance boundaries. They also observed that cost reductions and accessible APIs accelerate adoption for smaller-scale projects.

- Text Extraction Throwdown: A benchmark study tested regulatory document parsing with various libraries. Contributors singled out pdfium + tesseract combos for their success in tricky data extraction tasks.

- They underscored how these solutions handle real-world complexity better than standalone OCR or PDF parsing tools. Some cited workflow integration as the next big step for robust text pipelines.

- SmallThinker-3B's Surging Stats: The new SmallThinker-3B-preview on Hugging Face outperforms Qwen2.5-3B-Instruct on multiple evaluations. This compact model targets resource-limited scenarios yet shows significant leaps in benchmark scores.

- Its edge-friendly footprint broadens real-world usage by pairing a small model size with robust performance. Some participants suspect these improvements stem from specialized fine-tuning and data curation.

- OLMo2's Outstanding Outline: The OLMo2 tech report covers 50+ pages detailing four critical components in the LLM development pipeline. It offers a thorough breakdown of data handling, model architecture, evaluation, and deployment strategies.

- Readers praised its direct approach to revealing real-world learnings, highlighting best practices for reproducible and scalable training. The report encourages devs to refine existing workflows with deeper technical clarity.

- Summit & Transformers Tactics: The invite-only AI Engineer Summit returns after a 10:1 applicant ratio, aiming to highlight new breakthroughs in AI engineering. Organizers recall the 3k-seat World's Fair success, drawing over 1m online views.

- In tandem, an Understanding Transformers overview from this resource offers a structured path to learning self-attention and modern architectural variations. Summit planners encourage guest publishing opportunities for advanced explainers that push community knowledge forward.

GPU MODE Discord

- GPU GEMM Gains: A user spotlighted the discrepancy between real and theoretical GEMM performance on GPU, citing an article on Notion and a related post on Twitter.

- They hinted that declaring 'optimal performance' might trigger more advanced solutions from the community.

- Triton Tuning Troubles: Removing TRITON_INTERPRET was reported to drastically boost Triton kernel performance, especially in matrix multiplication tasks.

- Others confirmed that setting batch sizes to 16 or more, plus tweaking floating-point tolerances for large inputs, eased kernel call issues.

- The Flash br/bc Dilemma: A user asked about dynamic br/bc in Flash Attention for better adaptability, but others insisted fixed sizing is '10 trillion times faster.'

- They proposed compiling multiple versions as Flash Attention does, aiming to balance speed with more flexible parameters.

- Torch Inductor Cache Letdown: One discussion addressed prolonged load times with Inductor caching, reaching 5 minutes even when using a Redis-based remote cache.

- They suspect compiled kernel loading still causes delays, motivating additional scrutiny of memory usage and activation needs.

- P-1 AI’s Radical AGI Push: P-1 AI is recruiting for an artificial general engineering initiative, with open roles here.

- Their core team—ex-DeepMind, Microsoft, DARPA, and more—aims to enhance physical system design using multimodal LLMs and GNNs for tasks once deemed unworkable.

Interconnects (Nathan Lambert) Discord

- LoRA Library War: TRL Tops Unsloth: The #ml-questions channel contested the merits of LLM fine-tuning tools, praising TRL for its thorough documentation while deeming Unsloth too difficult, referencing the LLM Fine-Tuning Library Comparison.

- Though Unsloth boasts 20k GitHub stars, members recommended Transformers/PEFT from Hugging Face along with Axolotl and Llama Factory for simpler LoRA fine-tuning.

- Gating Games: MoE & OLMoE: Members in #ml-questions asked about gating networks for Mixture of Experts, specifically the routing used in DeepSeek v3.

- One user suggested the OLMoE paper, highlighting a lower number of experts that keeps complexity under control.

- Pre-recorded Tutorial Tussle at 20k: In #random, the community debated whether to share pre-recorded tutorials with an audience of 20k, emphasizing that these talks have earned praise.

- Another user jokingly labeled the UK AI Safety Institute as intelligence operatives, while others noted LinkedIn competitiveness in AI circles.

- Bittersweet Lesson and Felix's Legacy: In #reads, members mourned the passing of Felix, who authored The Bittersweet Lesson and left a deep impression on the community.

- They discussed safeguarding his works via PDF backups, concerned that deactivated Google accounts could disrupt future access.

- SnailBot’s Sluggish Shenanigans: In #posts, SnailBot drew laughs as users deemed it one slow snail and exclaimed good lord at its performance.

- Its comedic pace entertained many, who felt it lived up to its name with snail-like consistency.
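On the gating question raised in #ml-questions above, here is a minimal sketch of a generic softmax top-k router for a single token. It is not the routing used in DeepSeek v3 or OLMoE. The router logits are passed in directly (in a real model they come from a learned projection of the token), and the toy experts are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the selected experts' weights sum to 1.
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, router_logits, experts, k=2):
    """Mix the outputs of the selected experts for one token."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy scalar "experts" standing in for feed-forward blocks.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
logits = [2.0, 1.0, 0.1, -1.0]
routes = top_k_route(logits, k=2)
y = moe_forward(3.0, logits, experts, k=2)
print(routes, y)
```

Only the k selected experts run for each token, which is why adding experts grows parameter count faster than per-token compute.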

OpenAI Discord

- FLUX.1 [dev] Fuels Fresh Image Generation: Members highlighted FLUX.1 [dev], a 12B param rectified flow transformer for text-to-image synthesis, referencing the Black Forest Labs announcement.

- They noted it stands just behind FLUX.1 [pro] in quality and includes open weights for scientific research, reflecting an eagerness for community experimentation.

- ChatGPT’s Search Reliability Under the Microscope: A user asked if ChatGPT can handle real-time web results, comparing it to specialized tools like Perplexity.

- Community feedback indicated the model can be limited by data updates, with some preferring external search solutions to bridge missing information.

Cohere Discord

- Cohere's Sparkling Rerank-3.5 Release: Members welcomed the new year with enthusiastic wishes, anticipating that rerank-3.5 will soon deploy on Azure, delivering a next-level ranker for advanced text processing.

- The conversation included questions about potential use-cases, with someone asking “How are you liking it so far?”, highlighting the community’s eagerness for insights on improved performance.

- Embedding Rate Limit Bumps & Best Practices: Users explored the request process for boosting embedding rate limits by contacting Cohere support, aiming to handle heavier workloads.

- Community members outlined existing API constraints of 100 requests per minute on trials and 2,000 requests per minute in production, emphasizing efficient usage to avoid overages.
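To make the quoted limits concrete, here is a rough sketch of the arithmetic for an embedding job. The batch size of 50 texts per request is a hypothetical value for illustration; the actual per-request maximum should be checked against Cohere's documentation.

```python
import math

TRIAL_RPM = 100        # requests per minute, as quoted in the discussion
PRODUCTION_RPM = 2000  # requests per minute, as quoted in the discussion


def requests_needed(num_texts: int, batch_size: int) -> int:
    """Number of API calls if each call carries up to batch_size texts."""
    return math.ceil(num_texts / batch_size)


def min_minutes(num_texts: int, batch_size: int, rpm: int) -> float:
    """Lower bound on wall-clock minutes imposed by the rate limit alone."""
    return requests_needed(num_texts, batch_size) / rpm


def batches(texts, batch_size):
    """Yield consecutive slices of at most batch_size texts."""
    for start in range(0, len(texts), batch_size):
        yield texts[start:start + batch_size]


# Hypothetical workload: 10,000 texts, 50 texts per request.
print(requests_needed(10_000, 50))              # 200 requests
print(min_minutes(10_000, 50, TRIAL_RPM))       # 2.0 minutes
print(min_minutes(10_000, 50, PRODUCTION_RPM))  # 0.1 minutes
```

Batching is the main lever here: sending one text per request would turn the same job into 10,000 calls.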

Torchtune Discord

- Torchtune Gains Momentum: Community members praised Torchtune for its broader use in multiple AI models, emphasizing an approach to measure performance.

- A user recommended exploring alternative evaluation methods with the transformer module for better insights.

- Chunked Cross Entropy Boosts Memory Efficiency: Chunked cross entropy helps reduce memory usage by splitting the computation, shown in PR #1390.

- One variant used `log_softmax` instead of `F.cross_entropy`, prompting discussion on performance and memory optimization.

- Flex Attention on A6000 Seeks Workarounds: Members encountered a PyTorch Torchtune bug on the A6000, discovering a kernel workaround by setting `flex_attention_compiled` with `torch.compile()`.

- They proposed an environment variable approach, warning that a permanent fix in 2.6.0 remains uncertain and referencing Issue #2218.
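The chunked cross entropy idea mentioned above can be sketched in plain Python to show the arithmetic: summing per-chunk losses and dividing by the total token count gives the same mean as the all-at-once version. This is not Torchtune's implementation or the code from PR #1390. In a tensor framework the memory saving would come from producing and upcasting logits one chunk at a time, which this list-based toy does not model.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax for one row of logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def cross_entropy(logits_rows, targets):
    """Mean negative log-likelihood over all rows at once."""
    losses = [-log_softmax(row)[t] for row, t in zip(logits_rows, targets)]
    return sum(losses) / len(losses)


def chunked_cross_entropy(logits_rows, targets, chunk_size):
    """Same mean loss, accumulated chunk by chunk."""
    total, count = 0.0, 0
    for start in range(0, len(targets), chunk_size):
        rows = logits_rows[start:start + chunk_size]
        tgts = targets[start:start + chunk_size]
        # Only this chunk's log-probabilities exist at any one time.
        total += sum(-log_softmax(r)[t] for r, t in zip(rows, tgts))
        count += len(tgts)
    return total / count


logits = [
    [2.0, 0.5, -1.0],
    [0.1, 0.2, 0.3],
    [1.5, -0.5, 0.0],
    [0.0, 0.0, 0.0],
    [-1.0, 3.0, 0.5],
]
targets = [0, 2, 1, 1, 1]
full = cross_entropy(logits, targets)
chunked = chunked_cross_entropy(logits, targets, chunk_size=2)
print(full, chunked)
```

The two values agree up to floating-point summation order, so chunk size can be tuned for memory without changing the loss.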

LlamaIndex Discord

- Invoice Intelligence with LlamaParse: A recent notebook demonstration showcased a custom invoice processing agent that automatically classifies spend categories and cost centers, leveraging LlamaParse for smooth workflows.

- Members emphasized automation to cut manual errors and speed up finance-related tasks, pointing to the agent as a more efficient way to run invoice pipelines.

- Simple JSON Solutions for Data Storage: Community members debated storing LLM evaluation datasets in S3, Git LFS, or local JSON files, highlighting minimal overhead and easy structure.

- They suggested compressing large JSON data and recommended Pydantic for quick integration, noting that SQL or NoSQL hinges on dataset size.

- Fusion Friction with Multi-Retrievers: A user combined 2 vector embedding retrievers with 2 BM25 retrievers but reported weak query results from this fused setup.

- Discussions pointed toward tweaking weighting, indexing, or re-ranking strategies to boost the quality of responses from blended retrieval methods.
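One way to experiment with the weighting idea above is weighted reciprocal rank fusion (RRF), sketched here in plain Python. This is a swapped-in illustration, not the user's setup or a LlamaIndex API. The document IDs, the four rankings, and the equal weights are hypothetical, and `k=60` is only a commonly used default.

```python
from collections import defaultdict


def weighted_rrf(ranked_lists, weights, k=60):
    """Fuse rankings: each list adds weight / (k + rank) to a document's score."""
    scores = defaultdict(float)
    for ranking, weight in zip(ranked_lists, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += weight / (k + rank)
    # Highest score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical top-3 results from two vector retrievers and two BM25 retrievers.
vector_a = ["d1", "d2", "d3"]
vector_b = ["d2", "d1", "d4"]
bm25_a = ["d3", "d2", "d5"]
bm25_b = ["d2", "d5", "d1"]

fused = weighted_rrf([vector_a, vector_b, bm25_a, bm25_b], [1.0, 1.0, 1.0, 1.0])
print(fused)
```

Because RRF uses ranks rather than raw scores, it sidesteps the scale mismatch between embedding similarities and BM25 scores. Raising the weight of one retriever family is then a single-parameter experiment.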

OpenInterpreter Discord

- Open Interpreter Falters and Receives Recognition: Users criticized Open Interpreter 1.0 for performing worse than the classic version, lacking code execution and web browsing, and shared OpenInterpreter on GitHub for reference. They also highlighted important open-source contributions from OpenProject, Rasa, and Kotaemon.

- Participants stressed broken text formatting and an absence of searching tools, but still commended the open-source community for driving new functionalities.

- Installation Steps Simplify Setup: A single-line install process for Open Interpreter on Mac, Windows, and Linux emerged, enabling a quick web UI experience. Friends confirmed the approach eases command execution post-installation.

- Curious users tested the setup in the #general channel, confirming that it spares them from manual environment configurations.

- WhatsApp Jokes and a Need for Always-On Trading Tools: One user played with Web WhatsApp messaging, joking that it breathes life into mundane text chats. This exchange prompted others to share personal tech-driven everyday experiences.

- A separate discussion focused on requiring an always-on trading clicker, hinting at an OS-level solution that never sleeps for continuous command execution.

Modular (Mojo 🔥) Discord

- Mojo's Linked List Longing: A request emerged for a linked list codebase that works on nightly, spotlighting the desire for quickly accessible data structures in the Mojo ecosystem.

- Contributors weighed in on efficient prototypes and recommended minimal overhead as the key impetus for streamlined exploration.

- CLI & TUI Tools Toasting with Mojo: A developer showcased their learning process for building CLI and TUI libraries in Mojo, producing new utilities for command-line enthusiasts.

- Others joked about forging a new Mojo shell, mirroring bash and zsh, reinforcing the community’s enthusiasm for deeper terminal integration.

- AST Explorations & Debugging Drama: Members shared their success with index-style trees like

RootAstNodeandDisplayAstNode, but battled segmentation faults with the Mojo debugger.- A GitHub issue #3917 documents these crashes under --debug-level full, fueling a lively exchange on complex recursive structures.

LLM Agents (Berkeley MOOC) Discord

- January Joy for LLM Agents Certificates: Members confirmed certificates for the LLM Agents MOOC will be issued by the end of January, based on recent updates.

- They advised learners to stay tuned for more details and pointed them to the Spring 2025 sign-up page.

- Fall 2024 Fades, Spring 2025 Beckons: Enrollment for Fall 2024 is now closed, ending the chance to earn that session’s certificate.

- Members encouraged joining Spring 2025 by using the provided form, noting upcoming improvements in the curriculum.

DSPy Discord

- Gemini's GraphRAG Gains Gleam: A user asked if any specific GraphRAG approach was used, and it turned out that Gemini adjusted default prompts for code-related entities to enhance extraction results.

- They indicated this approach could add clarity to entity extraction steps, with a focus on refining DSPy capabilities.

- Donor's Game Doubles Down: A member ran a simulation of the Donor's Game from game theory using DSPy to replicate repeated strategic upgrades across multiple generations.

- They referenced a GitHub repository implementing a method from Cultural Evolution of Cooperation among LLM Agents, exploring ways to encourage cooperative behavior among LLM agents.
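For readers unfamiliar with the Donor's Game, here is a toy sketch of one round. Fixed hypothetical strategies stand in for the LLM agents, and no DSPy calls are shown. The 2x multiplier on donations is our assumption about the game's setup, not a detail confirmed in the discussion.

```python
def donate(balances, donor, recipient, fraction, multiplier=2.0):
    """Donor gives up a fraction of its balance; recipient gains multiplier times that."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    gift = balances[donor] * fraction
    balances[donor] -= gift
    balances[recipient] += multiplier * gift  # assumed 2x benefit to the recipient
    return gift


def play_round(balances, strategies, pairs):
    """Each (donor, recipient) pair plays once, in order."""
    for donor, recipient in pairs:
        fraction = strategies[donor](balances, recipient)
        donate(balances, donor, recipient, fraction)


# Hypothetical fixed strategies: "a" is generous, "b" keeps everything.
strategies = {
    "a": lambda balances, recipient: 0.5,
    "b": lambda balances, recipient: 0.0,
}
balances = {"a": 10.0, "b": 10.0}
play_round(balances, strategies, [("a", "b"), ("b", "a")])
print(balances)  # {'a': 5.0, 'b': 20.0}
```

Total wealth grows only when someone donates, yet the donor is worse off in the short run. That tension is what makes the emergence of cooperation across generations interesting to study.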

tinygrad (George Hotz) Discord

- Windows Warmth for tinygrad: A member asked if tinygrad would accept pull requests for Windows bug fixes, highlighting the challenge of supporting Windows while it isn't a primary focus.

- Another member speculated such fixes would be welcomed if they remain consistent and stable, indicating a cautious but open stance on cross-platform expansions.

- Shapetracker Scrutiny: A member praised the thoroughness of tinygrad’s documentation, referencing a tinygrad-notes blog for deeper insight.

- They sought details on index and stride calculations in `Shapetracker`-based matrix memory, requesting references to clarify the underlying principles.
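As background for the index and stride question above, here is a sketch of generic strided-view arithmetic in plain Python. It is not tinygrad's Shapetracker code, and the helper names are hypothetical. It shows only the underlying idea: a view is a shape plus strides over flat memory, so a transpose can be expressed without moving any data.

```python
def contiguous_strides(shape):
    """Row-major strides: how many elements to skip per step along each axis."""
    strides = []
    step = 1
    for dim in reversed(shape):
        strides.append(step)
        step *= dim
    return tuple(reversed(strides))


def flat_index(index, strides, offset=0):
    """Map a multi-dimensional index to a position in flat memory."""
    return offset + sum(i * s for i, s in zip(index, strides))


def permute(shape, strides, order):
    """Reorder axes by permuting shape and strides; memory is untouched."""
    return tuple(shape[a] for a in order), tuple(strides[a] for a in order)


shape = (2, 3)
strides = contiguous_strides(shape)                 # (3, 1)
print(flat_index((1, 2), strides))                  # 5

t_shape, t_strides = permute(shape, strides, (1, 0))
print(t_shape, t_strides)                           # (3, 2) (1, 3)
print(flat_index((2, 1), t_strides))                # 5, same element as above
```

Element `[1, 2]` of the original and element `[2, 1]` of the transposed view resolve to the same flat position, which is the property that lets movement operations stay free of copies.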

MLOps @Chipro Discord

- WandB & MLflow Marathon: Many noted that Weights & Biases offers a hosted service, while MLflow can be self-hosted for more control.

- Both platforms effectively track machine learning experiments, and team preferences hinge on cost and desired workflow ownership.

- Data Logging Delights: Some mentioned storing experimentation results in Postgres or Clickhouse as a fallback for basic versioning.

- They agreed it's a practical route when specialized platforms are off-limits.

- Classical ML On the Clock: A user questioned whether classical machine learning (like recommender systems and time series) is fading in the LLM era.

- Others disagreed, contending these fields remain crucial despite the buzz around LLMs.

- BitNet Bites Into 1-bit: Recent work on BitNet showcases 1-bit LLMs matching full-precision models while lowering resource needs.

- Researchers cited this paper describing how ternary weights lead to cheaper and efficient hardware support.
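To illustrate what ternary weights mean in practice, here is a sketch of absmean-style rounding to {-1, 0, +1} in plain Python. We assume this is close to the scheme used in the BitNet line of work, but it is not code from the paper. It covers only weight quantization, not training or activation handling, and the example weights are made up.

```python
def ternary_quantize(weights, eps=1e-8):
    """Scale by the mean absolute value, then round and clip to {-1, 0, 1}."""
    scale = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original weights."""
    return [v * scale for v in q]


# Made-up weights for illustration.
weights = [0.9, -0.05, 0.4, -1.2]
q, scale = ternary_quantize(weights)
print(q, scale)  # [1, 0, 1, -1] and a scale of about 0.6375
print(dequantize(q, scale))
```

With weights restricted to three values, a matrix multiply reduces to additions, subtractions, and skips. That is the property behind the claims of cheaper hardware support.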

Nomic.ai (GPT4All) Discord

- AI Reader: PDF Chat for Low-Cost Laptops: A user building a low-cost system in Africa wants an AI Reader that opens a chat GUI on PDF access to help students with tests and content, exploring Nomic embed usage.

- They plan to handle content embeddings on local hardware and ask for ways to feed real-time mock exam feedback, emphasizing minimal re-indexing overhead.

- Ranking Content Authority for Dynamic Fields: A participant suggested measuring educational materials' authority in a way that changes as computer science evolves.

- They worried about performance overhead if frequent re-indexing is required, proposing a more flexible approach to keep data current.

- Boosting Student Transcripts in Search: Contributors proposed giving extra weight to student transcripts to reflect personal academic growth in content retrieval.

- They see a more personalized indexing method as the next shift, letting individuals track learning achievements more precisely.

- Indexing by Subject for Enhanced Resource Control: Users floated an indexing system focused on subject rather than single references like books, aiming to include supplementary articles and notes.

- They believe this approach grants better coverage of knowledge gaps and more direct resource selection for exam prep.

LAION Discord

- Blender & AI Bond for 3D: In #general, someone asked about AI-driven collaboration with Blender for advanced 3D annotations, referencing synergy in the community.

- They sought partners to extend Blender's capabilities, aiming for deeper integration of AI in geometry-based tasks.

- Brainwaves & Beast Banter: A participant mentioned Animals and EEG for language/action mapping, looking for groups exploring AI and neuroscience for animal studies.

- They hoped to decode animal behavior with EEG data, suggesting a possible new wave of biologically informed experimentation.

- YoavhaCohen's Tweet Surfaces: A link to this tweet appeared in #research, without added details.

- It remained cryptic but hinted at interest in future developments from YoavhaCohen, stirring curiosity.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!