[AINews] not much happened today

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

a quiet day.

AI News for 3/26/2025-3/27/2025. We checked 7 subreddits, 433 Twitters and 30 Discords (230 channels, and 7972 messages) for you. Estimated reading time saved (at 200wpm): 757 minutes. You can now tag @smol_ai for AINews discussions!

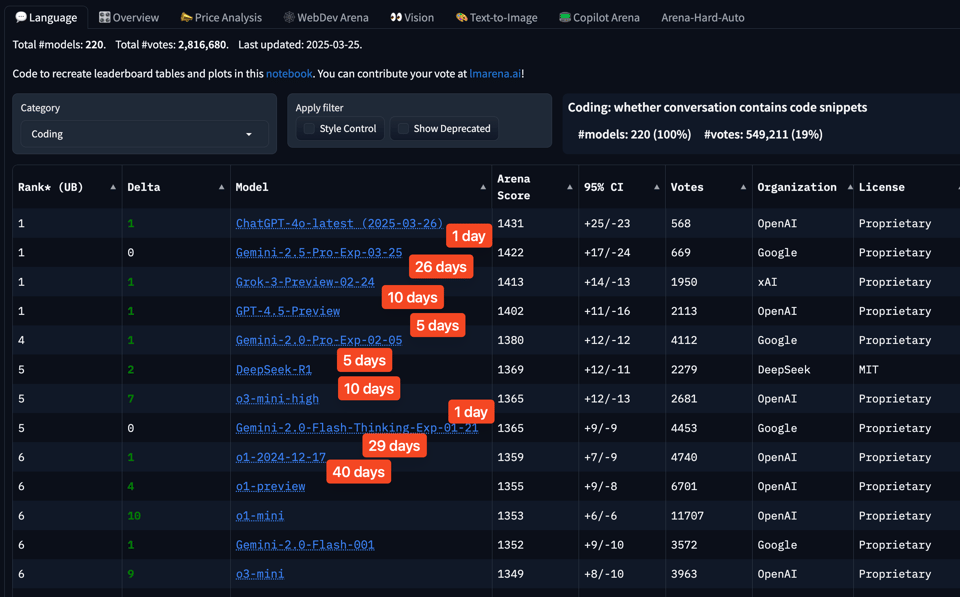

There's a new 4o model in ChatGPT, but there's no blogpost and not much detail beyond the announcement tweet, so there's not much to report. However, you can see that the time between SOTA models has been shortening recently.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

GPT-4o and Multimodal Models

- OpenAI's GPT-4o has seen significant updates, enhancing its ability to follow detailed instructions, tackle complex technical and coding problems, and improve intuition and creativity while reducing emojis 🙃, according to @OpenAI. Furthermore, the updated `chatgpt-4o-latest` is now available in the API, with plans to bring these improvements to a dated model in the API in the coming weeks, as announced by @OpenAIDevs.

- GPT-4o's native image generation stands out for its instruction following capability, with @abacaj noting that nothing comes close to it. @iScienceLuvr highlighted the impressive composition, text generation, and overall flow of diagrams generated by GPT-4o, specifying that these elements were created without needing to be explicitly defined.

- Concerns about image generation content filters have also been raised, with @nrehiew_ pointing out an instance where OpenAI allowed an image through its filters that seemed surprising.

- Initial examples matter: @sama emphasizes the careful consideration put into the initial examples shown when introducing new technology.

- Creative freedom and potential harms: @joannejang, who leads model behavior at OpenAI, shared the thinking and nuance that went into setting policy for 4o image generation. She discusses OpenAI's shift from blanket refusals in sensitive areas to a more precise approach that maximizes creative freedom while preventing real-world harm, and stresses humility: recognizing how much they don't know and positioning themselves to adapt as they learn.

- Image generation with transparent backgrounds is a cool feature of GPT-4o that is super useful for creating all kinds of assets, according to @giffmana.

- GPT-4o's performance improvements over previous releases are clear, with LMArena showing gains in the math, hard prompts and coding categories, according to @lmarena_ai.

- Model quality perceptions: @abacaj finds that Google models are perpetually in preview or experimental mode, and by the time they're fully available, another model has surpassed them. They give you a glimpse of what’s possible and then someone goes and actually does it.

DeepSeek and Gemini

- DeepSeek V3-0324 APIs are being tracked by @ArtificialAnlys across 10 APIs, including DeepSeek’s first-party API and offerings from Fireworks, DeepInfra, Hyperbolic, Nebius, CentML, Novita, Replicate and SambaNova. It's also now available on Hugging Face through @SambaNovaAI, with 250+ t/s - the fastest in the world. It smashes benchmarks like MMLU-Pro (81.2) & AIME (59.4), outperforming Gemini 2.0 Pro & Claude 3.7 Sonnet.

- Gemini 2.5 Pro is recommended for coding, if you are currently using Claude, according to @_philschmid.

- Gemma 3 can be deployed with just 3 lines of code to Google Cloud Vertex AI, thanks to the new Model Garden SDK, according to @_philschmid.

- Gemma 3 Technical Report is now on arxiv, according to @_philschmid. It introduces a multimodal addition to the Gemma family of lightweight open models, ranging in scale from 1 to 27 billion parameters. This version introduces vision understanding abilities, wider language coverage and a longer context of at least 128K tokens.

- A new function calling guide for GoogleDeepMind Gemini, using the new uSDKs, has been announced by @_philschmid, and includes multiple full examples for Python, JavaScript and REST.

- TxGemma, built on GoogleDeepMind Gemma models, can understand and predict the properties of small molecules, chemicals, proteins and more. This could help scientists identify promising targets faster, predict clinical trial outcomes, and reduce overall costs, according to @GoogleDeepMind.

AI Safety and Interpretability

- Anthropic's interpretability research was highlighted by @iScienceLuvr. The new interpretability methods allow researchers to trace the steps in a model's "thinking", according to @AnthropicAI.

- Anthropic is recruiting researchers to work with them on AI interpretability, according to @AnthropicAI.

- Anthropic is releasing the second research report from its Economic Index, and sharing several more datasets based on anonymized Claude usage data, according to @AnthropicAI.

- AI Safety Fads: @DanHendrycks notes that every year or two a new LW/AF fad comes out (inner optimizers, ELK, Redwood's injury classifier, SAEs), which tend to be much more intense than those in academia due to LW/AF insularity and centralized funding.

AI Tools and Frameworks

- LangChain now offers full E2E OTel support for applications built with LangChain or LangGraph, enabling unified observability, distributed tracing, and the ability to send traces to other observability tools, according to @LangChainAI.

- LangGraph BigTool - LangChain shows it's reliable enough to work w/ local models (via @ollama) w/ > 50 tools.

- LlamaCloud can be used as an MCP server, allowing users to bring up-to-the-second data into their workflow as a tool used by any MCP client, as demonstrated by @llama_index.

- Cohere's Command A, a highly capable and efficient model that can be run on just 2 GPUs, is optimized for real-world agentic and multilingual tasks, according to @cohere.

- Keras has a brand new homepage to celebrate the 10th anniversary of the original release, according to @fchollet.

Trends and Opinions

- Generative AI and Studio Ghibli: Following the release of GPT-4o, there has been significant discussion around the use of Studio Ghibli-style generations, according to @iScienceLuvr and @aidan_mclau. @nearcyan discusses the post-reality-filter stage rolling out over the next few years, where one's reality is whatever they want it to be (Ghibli or pokemon or lotr or...), and as each human finds that which they truly desire, they then become demarcated into their own garden made of pure beauty and art (and for many, of lust) optimized just for them.

- The future is ASI: @aidan_mclau says they cannot imagine building artificial fucking superintelligence to make money or stymie political opponents or arbitrage EA calculus.

- Concerns about reliance on models: @nptacek is beginning to wonder if there is a builders/collators divide going on here, with some wanting some sort of neat, orderly information space while others are completely comfortable pushing the boundaries of what can exist in the first place.

- The Meltdown of GPUs: @sama tweeted that their GPUs are melting, due to people loving images in chatgpt.

Humor/Memes

- The Inverse of a Banger: @nearcyan defined the exact opposite of a banger when it comes to AI image generation.

- Muppet Jensen: @iScienceLuvr posted a friendly reminder from muppet jensen.

- AGI = All Ghibli Images?: @_akhaliq jokes that AGI means "All Ghibli Images?"

- Gary Marcus's opinions: @cloneofsimo joked about world-renowned, thought-provoking, innovative value that Gary Marcus provides to the ML community.

- DeepMind AI trying to make a banger: @sama CLAIDE tried to make a banger but instead said one man's slop is another man's slop.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek V3 0324 on Livebench Surpasses Claude 3.7 with Hallucination Issues

- DeepSeek V3 0324 on livebench surpasses Claude 3.7 (Score: 148, Comments: 14): DeepSeek V3 (0324) has achieved significant performance on LiveBench, ranking 10th overall and outperforming Claude 3.7 Sonnet (base model), while being the second highest non-thinking model after GPT-4.5 Preview. This performance suggests that the upcoming R2 model could potentially be a strong competitor in the AI field.

- DeepSeek V3's Performance and Hallucination Issues: Users report that DeepSeek V3's hallucination rate increased from 4% to 8%, making it less reliable for certain tasks. Users find it surprising that the model can still reach correct answers from hallucinated prompts, and recommend running it at a low temperature of 0.3 to mitigate the issue.

- Comparison with Other Models: Gemini Pro 2.5 showed significant improvements in reasoning over its predecessor, raising questions about potential enhancements from V3.1 to R2. Anthropic and OpenAI face challenges with high API costs, but OpenAI's multi-modal capabilities, particularly in image generation, are noted as advantageous.

- LiveBench and Model Updates: There is curiosity about the removal of grok-3-beta from LiveBench. Fast R1 providers may take time to adopt V3, and users express hopes for improvement in upcoming updates, possibly by June.
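For readers wondering what the suggested temperature of 0.3 actually does, here is a minimal sketch of temperature-scaled softmax in plain Python. The logits are made-up values for three hypothetical candidate tokens, not anything measured from DeepSeek V3. The sketch only shows that a lower temperature concentrates probability on the top-scoring token, which makes sampling more deterministic; it does not by itself demonstrate a lower hallucination rate.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw logits into probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens (illustration only).
logits = [2.0, 1.0, 0.5]

default = softmax_with_temperature(logits, 1.0)
low = softmax_with_temperature(logits, 0.3)

print("T=1.0:", [round(p, 3) for p in default])
print("T=0.3:", [round(p, 3) for p in low])
```

At the lower temperature the top token takes a much larger share of the probability mass, so the sampler rarely picks the long-tail candidates.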

Theme 2. Microsoft's KBLaM: Plug-and-Play Knowledge in LLMs

- Microsoft develop a more efficient way to add knowledge into LLMs (Score: 426, Comments: 56): Microsoft has developed KBLaM, a new method designed to efficiently integrate knowledge into Large Language Models (LLMs). This approach aims to improve the performance and accuracy of LLMs by enhancing their knowledge base without significantly increasing computational requirements.

- KBLaM's Limitations: Users highlight that KBLaM is a research prototype and not production-ready, with limitations in providing accurate answers when used with unfamiliar knowledge bases. This suggests it's not an improvement over existing RAG systems, which are already in production.

- Technical Insights and Challenges: The implementation requires significant resources, such as an A100 80GB for testing an 8B model, indicating high computational demands. The approach involves language tokens attending to knowledge tokens but not vice versa, which raises questions about potential knowledge gaps, such as understanding concepts without practical application knowledge.

- Potential Applications and Research Directions: There's interest in whether extracting factual knowledge from training data could optimize parameter usage, potentially making models more intelligent or efficient. However, the consensus is that broad knowledge is essential for intelligence, and more research is needed to explore general knowledge applications and specialist bots.
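The one-directional attention described above (language tokens attend to knowledge tokens, but not the reverse) can be pictured as an attention mask. The sketch below is an illustration under stated assumptions, not Microsoft's implementation: the function name is hypothetical, knowledge tokens are assumed to sit before the language tokens, each knowledge token is assumed to see only itself, and language tokens are assumed to use ordinary causal attention among themselves.

```python
def build_attention_mask(num_knowledge, num_language):
    """Return mask[q][k] == True when query position q may attend to key position k.

    Positions 0..num_knowledge-1 are knowledge tokens; the rest are language tokens.
    """
    total = num_knowledge + num_language
    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        for k in range(total):
            if q < num_knowledge:
                # Knowledge query: never attends to language tokens.
                # Assumption for this sketch: it attends only to itself.
                mask[q][k] = q == k
            elif k < num_knowledge:
                # Language query: may attend to every knowledge token.
                mask[q][k] = True
            else:
                # Language query on language key: standard causal attention.
                mask[q][k] = k <= q
    return mask


mask = build_attention_mask(num_knowledge=2, num_language=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Because the knowledge rows never look at language columns, the cost of adding more knowledge tokens grows with the number of language queries rather than quadratically with the whole sequence, which is one way to read the "without significantly increasing computational requirements" claim.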

Theme 3. New QVQ-Max Feature on Qwen Chat Enhances User Experience

- New QVQ-Max on Qwen Chat (Score: 115, Comments: 16): Qwen Chat introduces "QVQ-Max", a powerful visual reasoning model, among other models like "Qwen2.5-Max," described as the most powerful language model in the series. The user interface highlights the capabilities of each model, including "Qwen2.5-Plus," "QwQ-32B," and "Qwen2.5-Turbo," against a dark, clean design backdrop.

- QVQ-Max and other models like Qwen2.5-Max are generating interest among users, with some planning to include them in their testing schedules, particularly on advanced hardware like the M3 Ultra.

- A comment highlights that the model is currently closed, indicating limited or restricted access at the moment.

- There is anticipation for further developments or releases, with hints from an employee on Twitter about potential updates or enhancements expected on Thursday.

Theme 4. Gemini 2.5 Pro Faces Performance Criticism Despite ASIC Advantage

- Gemini 2.5 Pro Dropping Balls (Score: 106, Comments: 17): The post titled "Gemini 2.5 Pro Dropping Balls" compares Gemini 2.5 Pro with LLaMA 4, but lacks detailed content in the body. The title suggests potential issues or shortcomings with Gemini 2.5 Pro.

- Gemini 2.5 Pro vs LLaMA 4: There is skepticism about Gemini 2.5 Pro's superiority over LLaMA 4, with some users arguing that Grok only comes close when using a sampling of 64. However, others believe that no current model, including Claude, can surpass Gemini 2.5 Pro.

- Technological Edge: Google's use of their own ASICs gives them a significant advantage, with Meta and Amazon trying to catch up using MTIA and Trainium respectively. Google is perceived to be six or seven generations ahead, making the competition challenging.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

debugging issues with our pipelines, sorry...

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. Gemini 2.5 Pro: Rate Limits, Pricing, and Performance Hype

- Cursor Users Hit Gemini 2.5 Pro Rate Limit Wall: Cursor users are experiencing extremely low rate limits with Gemini 2.5 Pro, some reporting throttling after just two API requests. Workarounds involve using Open Router and personal AI Studio API keys, with some suggesting Google Workspace Business accounts might unlock higher limits.

- Cursor Defends Gemini 2.5 Pro Pricing Amidst Free API Claims: Cursor faces user backlash for charging for Gemini 2.5 Pro, which is perceived as free via Google AI Studio API. A Cursor representative clarified that charges cover capacity at scale, as Google doesn't offer a truly free tier for their usage levels.

- Gemini 2.5 Pro Eclipses Claude 3.5 Sonnet in User Preference: User evaluations show Gemini 2.5 Pro surpassing Claude 3.5 Sonnet, capturing 3% of top rankings for story generation while Sonnet plummeted from 74% to 18%. Users praise Gemini 2.5 for seamless handling of long contexts, with some confirming 15K token context windows on AI Studio.

Theme 2. OpenAI's GPT-4o: Updates, Image Generation, and Policy Shifts

- GPT-4o Gets Another Update, Climbs Arena Leaderboard: OpenAI's GPT-4o received a significant update, now ranking #2 on the Arena leaderboard, surpassing GPT-4.5 and tying for #1 in Coding and Hard Prompts. The update reportedly improves instruction following, problem-solving, intuition, and creativity.

- OpenAI Eases Image Generation Policy, Meltdown Imminent: OpenAI is relaxing its image generation policy from blanket refusals to preventing real-world harm, aiming for greater creative freedom. Sam Altman joked that GPUs are melting due to image generation popularity, leading to temporary rate limits.

- Midjourney CEO Disses 4o Image Generation as "Slow and Bad" Meme: The Midjourney CEO dismissed GPT-4o's image generation as slow and bad, calling it a fundraising tactic and a meme, not a serious creative tool. This criticism comes amid discussions about model naming conventions and the White House deleting a tweet with a Ghibli-style image.

Theme 3. Model Context Protocol (MCP) Gains Momentum and Faces Challenges

- OpenAI and Cloudflare Embrace Model Context Protocol (MCP): OpenAI CEO Sam Altman announced MCP support is coming to OpenAI products like the Agents SDK and ChatGPT desktop app, signaling a major step for MCP adoption. Cloudflare also now supports building and deploying remote MCP servers, lowering the barrier to entry.

- Claude Desktop Struggles with MCP Prompt and Resource Handling: Users reported issues with Claude Desktop getting stuck in endless loops when MCP servers include resources or prompts. A workaround involves removing capabilities to prevent Claude from searching for these elements, with a fix released.

- LlamaCloud Integrates as MCP Server for Real-time Data: LlamaCloud can function as an MCP server, enabling real-time data integration into workflows for any MCP client, including Claude Desktop. This allows users to leverage existing LlamaCloud indexes as dynamic data sources for MCP.

Theme 4. Local LLM and Tooling Updates: Unsloth, LM Studio, and Aider

- Unsloth Unleashes Dynamic Quantization and Orpheus TTS Notebook: Unsloth AI released Dynamic Quants for improved accuracy and efficiency in local LLMs, along with DeepSeek-V3-0324 GGUFs. They also launched the Orpheus TTS notebook for human-like speech synthesis with emotional cues and voice customization, outperforming OpenAI's TTS in user tests.

- LM Studio 0.3.14 Adds Multi-GPU Controls and Optimization: LM Studio 0.3.14 introduces granular controls for multi-GPU setups, allowing users to optimize performance by enabling/disabling GPUs and choosing allocation strategies. The update also includes 'Limit Model Offload' mode for improved stability and long context handling, with enhancements coming for AMD GPUs.

- Aider's /context Command Streamlines Code Navigation: Aider introduced the `/context` command, automating the identification and addition of relevant files for a given request, improving workflow efficiency in large codebases. However, users reported compatibility issues with Gemini via the OpenAI API compatibility layer and CPU usage spikes.

Theme 5. Turing Institute Turmoil and Open Source RL System DAPO

- Alan Turing Institute Faces Mass Layoffs and Project Cuts Despite Funding: The Alan Turing Institute (ATI), despite a recent £100 million funding injection, is planning mass redundancies and to axe around a quarter of its research projects, causing staff revolt. The institute faces an existential crisis amidst competition from the wider AI field.

- ByteDance's Open-Source RL System DAPO Emerges Quietly: ByteDance and Tsinghua AIR released DAPO, an open-source Reinforcement Learning system, which seemingly went under the radar. Members shared the link, highlighting its potential significance in the RL research community.

- Catastrophic Overtraining Paper Challenges LLM Pre-training Paradigm: A new paper coins the term "catastrophic overtraining," suggesting that extended pre-training can degrade fine-tuning performance and make models harder to adapt to downstream tasks. The paper notes that instruction-tuned OLMo-1B performs worse after extended pre-training.

PART 1: High level Discord summaries

Cursor Community Discord

- Cursor Chokes on Gemini 2.5 Pro's API Limits: Users report hitting very small rate limits with Gemini 2.5 Pro in Cursor, as low as two API requests before being throttled.

- Some members are using a combination of Gemini API, Requesty, and Open Router to get around these limits while others pointed out that Google Workspace Business accounts might unlock higher limits.

- Windsurf and Cursor Duke it Out Again: The debate rages on between Windsurf and Cursor, with Windsurf favored for its full context during prototyping, and Cursor preferred for bug fixing.

- One user complained about Windsurf's UI styling compared to Cursor's consistency across pages, while another requested to hire the official shitsurf shill.

- Context Window Constrained by Cursor?: A user asked whether Cursor restricts the context window for Gemini 2.5 Pro to 30K, despite the model's advertised 1M context limit.

- Others chimed in that while agentic models have a 60k context window, Claude 3.7 has a 120k context window, or even 200k if max settings are enabled (though there was not enough data to confirm if this was Vertex).

- Gemini 2.5 Pro's Pricing Perplexity: Users are questioning why Cursor charges for Gemini 2.5 Pro, when it's supposedly free via Google's AI Studio API, leading to accusations of dishonesty.

- A Cursor representative clarified that the charges cover the capacity required to handle the model's usage, as Google doesn't offer a free tier at Cursor's scale, adding that the price mirrors Gemini 2.0 Pro.

- Cline Takes Over Coding Workflows: Some users plan to ditch Cursor, opting for VSCode with Cline (with Gemini) for coding, and DeepSeek v3 from Fireworks for planning.

- One user expressed nostalgia for Cursor's Tab feature and, while noting the decline of most models, still found them useful, concluding that local models all suck at the RTX 4090 level anyway.

Perplexity AI Discord

- Perplexity Bot Joins Discord: Perplexity launched the Perplexity Discord Bot for testing, providing quick answers and fact-checking directly within Discord channels, accessible via tagging <@&1354609473917816895> or using the /askperplexity command.

- Testers can explore features like /askperplexity, fact-check comments with the ❓ emoji, and create memes with /meme, with feedback directed to the <#1354612654836154399> channel for improvements.

- GPT-4.5 Quietly Departs Perplexity: Members noticed GPT-4.5 disappeared from model selection on Perplexity.com, potentially due to cost concerns.

- The Perplexity AI bot clarified that GPT-4.5 generally outperformed GPT-4o in scientific reasoning, math, and coding, while GPT-4o excels in general and multimodal use.

- Complexity Extension Boosts Perplexity: Users discussed the 'Complexity' extension, a third-party add-on designed to enhance Perplexity's functionality.

- The Perplexity AI bot noted that while the extension's features could be beneficial as native options, integration decisions depend on user demand, technical feasibility, and product roadmap alignment.

- MCP Servers Control Perplexity: Users explored leveraging Model Context Protocol (MCP) servers like Playwright to allow Perplexity to control browsers or other applications with available MCP servers.

- The Perplexity AI bot explained that configuring the server to work with Perplexity's API enables automated browser actions and web interactions.

- User Encounters API Parameter Error: A user encountered an error related to the `response_format` parameter despite having credits, disrupting their app's functionality and leading to lost sales.

- The API team implemented error handling for parameters like `search_domain_filter`, `related_questions`, `images`, and `structured_outputs`, clarifying these were never available for non-Tier 3 users; see usage tiers.

Manus.im Discord

- Gemini 2.5 Pro Shows Early Promise: Members are giving early praise that Gemini 2.5 Pro is coding pretty good so far and wayyyy better than any Gemini model used last year.

- One user mentioned it took two separate animation components and meshed them into one for a seamless loading transition.

- Manus Invitation Codes Face Delays: Users have expressed frustration regarding the wait times for Manus invitation codes, with some waiting for over a week.

- One member suggested using incognito mode or a different browser when signing up and receiving the embedded code.

- Discord UI Sidebar Mysteriously Vanishes: A user reported missing icons, threads and messages, from their Discord sidebar, specifically on platform.openai.com.

- A member suggested changing appearance settings to compact view or checking PC display settings to resolve size issues.

- WordPress Staging Site Fails in Manus: A member reported that their WordPress staging site in Manus has been failing repeatedly since the last maintenance.

- No solutions were found.

- Manus Plays Well with N8N: Members discussed using Manus with N8N or Make.com for process automation and workflow automation.

- One member is in the process of building their first workflow with N8N and Manus to connect creatives globally.

LMArena Discord

- Livebench primarily tests rote tasks: Members debated the merits of Livebench, with some arguing it primarily tests rote tasks and rewards flash thinking rather than deeper reasoning.

- This focus on rote tasks could skew results and reduce the reliability of the benchmark.

- Gemini 2.5 Pro raises limits and eyebrows: Gemini 2.5 Pro's capabilities are discussed, ranging from amazing at math to exhibiting instruction following issues, with Logan Kilpatrick announcing increased rate limits on X.

- Concerns are growing regarding the consistency and stability of the model, especially discrepancies between the free AI Studio version and the paid Gemini Advanced version.

- AI Censorship debate burns: The discussion shifts to censorship in AI models, with concerns raised about both Western models being woke and Chinese models being propaganda parrots.

- Members debate whether government censorship is distinct from safety guardrails and legal compliance.

- Qwen 3 release is imminent: Enthusiasm builds for the upcoming Qwen 3 release, with speculation about its architecture and performance.

- Some anticipate a MoE model with impressive performance, while others remain cautious about its actual capabilities compared to Qwen 2.5 Max.

- DeepSeek V3 0324 scores impressively: DeepSeek V3 0324's impressive SWE-bench score is highlighted, raising questions about its coding prowess relative to other models like GPT-4o.

- Some suggest that these coding improvements might be a result of vibe coding or benchmark tuning rather than genuine advancements in the model's architecture.

Unsloth AI (Daniel Han) Discord

- Selective Quantization Improves Unsloth's Dynamic Quants: Unsloth's Dynamic Quants are selectively quantized, and they released DeepSeek-V3-0324 GGUFs, including 1-4-bit Dynamic versions.

- This allows running the model in llama.cpp, LMStudio, and Open WebUI, with a guide for detailed instructions.

- Unsloth's Orpheus TTS Notebook Speaks Volumes: Unsloth released the Orpheus TTS notebook that delivers human-like speech with emotional cues and allows users to customize voices + dialogue faster with less VRAM, described in this tweet.

- It supports single stage models and one of the members said that Kokoro won't be finetuneable at all.

- YouTube Algorithm Succumbs to Galois Theory: After a single YouTube search for Galois theory, a member joked that their feed is now saturated with videos about quintics.

- They quipped that these algorithms are "walking and degrading to crawling after 8k ctx".

- Instruct Models Better without Pretraining?: A user was advised not to continually pretrain an instruct model because it can degrade performance and is generally intended for adding new domain knowledge, referencing the Unsloth documentation.

- Instead, the member was encouraged to explore Supervised Fine Tuning (SFT) for Q&A tasks.

- ByteDance's DAPO System Opens Doors: Members shared a link to ByteDance's Open-Source RL System DAPO, noting it "seemed like it kind of went under the radar".

- The system comes from ByteDance Seed and Tsinghua AIR.

aider (Paul Gauthier) Discord

- Google Gemini 2.5 Pro Hits High Demand: Google is prioritizing raising rate limits for Gemini 2.5 Pro due to high demand, according to a tweet by @OfficialLoganK.

- To bypass rate limits, OpenRouter suggests adding an AI Studio API key and setting up OpenRouter.

- GPT-4o Gains Traction After Recent Updates: GPT-4o received an update in ChatGPT featuring improved instruction following, technical problem solving, intuition, and creativity, according to OpenAI's tweet.

- It now ranks #2 on the Arena Leaderboard, surpassing GPT-4.5, tying #1 in Coding and Hard Prompts.

- Aider's New Command Streamlines Coding Process: Aider's new `/context` command automatically identifies and adds relevant files for a given request, streamlining the coding process, though it is still in testing.

- This helps in large codebases and saves time; it is useful for figuring out what needs to be modified and can be used with a reasoning model to brainstorm bugs.

- Gemini's OpenAI API faces Compatibility Problems: A user reported issues with Gemini not working with the OpenAI compatibility layer, suspecting LiteLLM as the cause, despite other models functioning correctly.

- The user accesses all AI services through a reverse proxy, necessitating the OpenAI API compatibility.

- User Reports Aider CPU Usage Spike: A user reported Aider suddenly spiking to 100% CPU usage, causing hanging or slow response from the LLM, despite working with a small repo.

- The user sought debugging tips, unsure where to start troubleshooting the issue.

OpenAI Discord

- Dash Devotee's Keyboard Konversion: A user's keyboard remapping to favor the dash sparked a discussion on punctuation preferences and alternatives, like semicolons, with some joking about using the `^` symbol.

- This underscored the nuanced and personal choices individuals make in their writing and communication styles.

- Sora's Struggles: Prompting Plants & Questionable Bunnies: A user sought help crafting Sora prompts for a camera spin effect with smoothly changing plant backgrounds, sharing an example image, criptomeria-na_kmienku-globosa-cr-300x300.webp.

- Meanwhile, concerns arose about Sora generating suggestive content when prompted for bunny characters, raising questions about content moderation.

- Image Generation: Vice or Vision?: A user criticized AI image generation as a vice that devalues digital art, sharing an image created with it, Screenshot_20250327_162135_Discord.jpg.

- Subsequent disagreement led to the user being blocked, highlighting differing views on AI's role in the art world.

- Arxiv Ascendant: STEM's Speedy Stage: Arxiv's growing presence as a pre-publication platform in STEM fields sparked debate about the value of unreviewed work, the potential for a critical mass of eyes to drive progress, and the coming era of AI peer review.

- Criticism of the traditional peer review process included scientists paying for consideration and losing ownership, fueling enthusiasm for AI to create a more functional, accessible system.

- Bulk File Bonanza: Upload All At Once: Members confirmed that to ensure the model considers all documents in a single context, it's preferable to upload all files simultaneously when using tools like ChatGPT.

- This approach ensures the model integrates the information from all documents, provided they are formatted properly.

OpenRouter (Alex Atallah) Discord

- Gemini 2.5 Capacity Crunch: Users encounter `RESOURCE_EXHAUSTED` errors with Gemini 2.5, and are advised to link an AI Studio key in OpenRouter settings to enhance capacity.

- It's highlighted that Google lets users pay for increased capacity through AI Studio.

- Deepseek R1 Stalls with Emptiness: Users reported empty API responses from the Chutes provider when using Deepseek R1 (Free), even with fresh keys.

- Setting max_tokens to 0 was found to be a likely culprit, though the issue persisted even after adjustment.

- OpenRouter Provider Routing Realities: A user discovered and debugged a routing bug when routing Gemini/Anthropic across Google/Bedrock/Anthropic using the AI SDK.

- Even with `allow_fallbacks` set to false, requests were not respecting the defined order, leading to all requests ending up on Anthropic; the staff confirmed the routing bug.

- OpenRouter Parity Pursuit: A user finds OpenRouter's compatibility with the OpenAI SDK lacking when using `google/gemini-2.5-pro-exp-03-25:free` compared to `openai/gpt-4o-mini` via Spring AI.

- A member insists that OpenRouter is supposed to be 100% compatible and the user may be facing rate limits, and suggests testing with Mistral Small 3.1 and Phi 3 models.

- Free Gemini 2.5 Pro Prowess Possible: Members share how to utilize OpenRouter to run Gemini 2.5 Pro in @cursor_ai for free, using a Cursor tutorial.

- The member reported this solution as resolving issues encountered after a brief period of troubleshooting.
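For context on the routing bug discussed above, this is roughly what a request body with a pinned provider order looks like. It is a sketch under assumptions: the helper name is hypothetical, the model slug and provider names are illustrative placeholders, and the `provider` object with `order` and `allow_fallbacks` fields reflects our understanding of OpenRouter's provider routing options rather than anything quoted in the Discord thread. No network call is made; the code only builds and prints the JSON body.

```python
import json


def build_routed_request(model, prompt, provider_order, allow_fallbacks=False):
    """Build a chat completion body that pins the provider order (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "provider": {
            # Providers to try, in priority order.
            "order": list(provider_order),
            # False means: do not route to providers outside the list.
            "allow_fallbacks": allow_fallbacks,
        },
    }


# Illustrative values only; check the model slug and provider names before use.
body = build_routed_request(
    model="anthropic/claude-3.7-sonnet",
    prompt="Hello",
    provider_order=["Google", "Amazon Bedrock", "Anthropic"],
)
print(json.dumps(body, indent=2))
```

With a body like this, the expected behavior is that the first listed provider is tried first; the reported bug was that every request landed on Anthropic regardless of the order.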

LM Studio Discord

- LM Studio Gets Multi-GPU Mantras: LM Studio 0.3.14 introduces controls for multi-GPU setups, letting users enable/disable GPUs and choose allocation strategies to optimize performance on systems with multiple GPUs.

- The version includes a 'Limit Model Offload to Dedicated GPU memory' mode, improving stability and optimizing long context handling, with enhancements coming to AMD GPUs.

- Vision Model Plugin Voyage Vanishes: Members sought vision model plugins for models from Hugging Face, noting Mistral Small is text-only and in GGUF format for LM Studio.

- The suggestion to use Mistral Small in LM Studio was made, but it seems like nobody got the Vision Model working.

- Threadripper CPU Tag Squabble: Members debated if AMD Threadripper CPUs are consumer grade, despite being marketed as HEDT (High-End Desktop) processors, referencing a Gamers Nexus article.

- A member argued that while marketed towards home users, Threadrippers are actually professional workstations.

- Gemma 3 Generates Great Gains: A user achieved 54 t/s using Gemma3 - 12b Q4_K_M on a newly acquired 9070XT (Vulkan, no flash attention), whereas their 7800XT only generated around 35 t/s with Vulkan and 39 t/s with ROCm.

- Members discussed how Gemma3 models spill into shared memory even with full offload, noting that context can fill 32 GB of shared memory; others asked how to load models of 48 GB or more.

- P100 is Past Prime: A member asked about a P100 16GB for 400 CAD/200 USD as a hobby investment, but they were strongly advised against it as e-waste.

- Members cited its Tesla architecture, unsupported CUDA versions, and inferior performance compared to modern cards like the 6750XT.

Eleuther Discord

- Deepseek V3 Rivals Cloud on Mac: Members compared running Deepseek V3 on Mac Studios (20 tokens/sec) with cloud instances, citing slower performance on an AMD EPYC Rome system (4 tokens/sec) reported in this article.

- The discrepancy might be due to the Mac's faster unified RAM.

- EleutherAI Mulls ICLR 2025 Meetup: EleutherAI is considering an ICLR 2025 meetup, potentially hosting about 30 attendees.

- Sponsorship opportunities may be explored if attendance interest is high.

- Qwen 32B Stalls on LLM Harness: A member encountered issues evaluating the Qwen 32B model on LLM harness, despite using the latest Transformers version.

- The root cause may be that the sharded model has more than 10 shards, an issue related to tied embeddings and potentially triggered by the transformers library itself.

- Transformers Library Triggers False Errors: A member traced a misleading error back to transformers `4.50.2`, sharing a Colab notebook running `4.50.0` where the problem was absent.

- The issue stemmed from insufficient storage, despite the error message suggesting a problem with the `AutoModel` loading function; fix will come in the form of a PR to lm-eval to add better error handling.

- OLMo-1B Suffers Overtraining Crash: The instruction-tuned OLMo-1B model pre-trained on 3T tokens performs over 2% worse on standard LLM benchmarks than its 2.3T token counterpart, according to this paper.

- The Gemma Team also published a new paper with authors including Aishwarya Kamath, Johan Ferret, and Shreya Pathak.
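
For the misleading-error item above, one way to surface a storage problem before a model loader fails obscurely is a free-space check up front. This is only a sketch of the idea, not the planned lm-eval PR; the helper names and the safety factor are assumptions.

```python
import shutil


def has_room_for(path, required_bytes, safety_factor=1.1):
    """Return True if the filesystem holding `path` has enough free space."""
    free = shutil.disk_usage(path).free
    return free >= required_bytes * safety_factor


def check_before_download(path, required_bytes):
    """Raise a storage-specific error instead of letting a later load step fail obscurely."""
    if not has_room_for(path, required_bytes):
        raise OSError(
            f"Not enough free disk space under {path!r} for {required_bytes} bytes"
        )


# A zero-byte requirement always passes; real callers would pass the checkpoint size.
check_before_download(".", 0)
```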

Interconnects (Nathan Lambert) Discord

- Gemini 2.5 Pro Dethrones Claude 3.5 Sonnet: Gemini 2.5 Pro edged out Claude 3.5 Sonnet in user preference, capturing 3% of top rankings for story element combinations, while Claude 3.5 Sonnet dropped from 74% to 18% according to evaluations.

- Despite the shift, users lauded Gemini 2.5 for its seamless performance with lengthy contexts, confirmed by a user observing a 15K token context window on AI Studio.

- OpenAI's Revenue Rockets, AGI Dreams Loom: OpenAI anticipates tripling its revenue to $12.7 billion this year, projecting $125 billion by 2029, fueled by advancements like GPT-4o and a revised image generation policy focusing on preventing real-world harm, as detailed in a Bloomberg report.

- Because of GPU constraints caused by the popularity of GPT-4o's image generation, OpenAI is temporarily implementing rate limits, with Sam Altman noting, "it's super fun seeing people love images in chatgpt, but our GPUs are melting."

- Midjourney CEO Slams 4o Image Generation: The Midjourney CEO criticized 4o's image generation as slow and bad, dismissing it as a fundraising tactic and a meme, not a creative tool, as reported on X.

- This criticism surfaces amid discussions about model naming conventions and the White House deleting a tweet featuring a Ghibli-style image, originally described as dark.

- Turing Institute in Deep Turmoil: Despite a recent £100 million injection in 2024, the Alan Turing Institute (ATI) is facing mass layoffs and planning to axe around a quarter of its research projects, causing staff upheaval according to researchprofessionalnews.com.

- The institute faces an existential threat given competing challenges from the wider field.

Modular (Mojo 🔥) Discord

- Mojo Struggles with Unit Addition: Discussion in the `mojo` channel highlighted challenges in resolving addition of different units, such as kilometers per second and meters per minute, with concerns about the return type and how to correctly scale the values.

- One member noted that the scale would have to return the correct thing in those cases, and there's no way to use `-> A if cond else B` type logic for the function's return type.

- C Unions Spark Debate in Mojo: A member inquired about what `union` lowers into, and another suggested using a C union.

- The second member pointed out, "Since iirc CUDA has unions in some parts of the API."

- Traits Discussion Reveals Nuances: Discussion on extension methods and traits clarified that extensions allow adding methods to library types, a feature not directly available with Rust's `impl` due to orphan rules.

- Another member corrected that Rust's `impl` can implement library types.

- Implicit Trait Implementations Draw Concern: Debate arose on implicit trait implementations, with a member hoping they are temporary and stating they make marker traits hazardous to have.

- Alternative approaches to propagate trait implementations were discussed, including naming extensions and evaluating soundness tradeoffs.

- Tuple Mutability Raises Eyebrows: A member highlighted that assigning to an index inside a tuple is possible and can be done with indexing, demonstrating unexpected mutability.

- Another member clarified that this is a side effect of `__getitem__` returning a mutable reference and noted that it should not be the case, demonstrated in the test suite.
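
The unit-addition problem above can be illustrated by converting both operands to a common base unit before adding. The sketch below is Python rather than Mojo, and it picks the result unit at runtime, which sidesteps exactly the static return-type question the channel was debating; the `SpeedUnit` and `Speed` types and the "use the finer unit" rule are assumptions made for illustration.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class SpeedUnit:
    name: str
    to_m_per_s: Fraction  # multiply a value in this unit by this factor to get m/s


@dataclass(frozen=True)
class Speed:
    value: Fraction
    unit: SpeedUnit

    def __add__(self, other):
        # Convert both operands to the base unit (m/s) and add them there.
        total = self.value * self.unit.to_m_per_s + other.value * other.unit.to_m_per_s
        # Express the sum in the finer of the two units (smaller conversion factor).
        target = min(self.unit, other.unit, key=lambda u: u.to_m_per_s)
        return Speed(total / target.to_m_per_s, target)


KM_PER_S = SpeedUnit("km/s", Fraction(1000))
M_PER_MIN = SpeedUnit("m/min", Fraction(1, 60))

result = Speed(Fraction(1), KM_PER_S) + Speed(Fraction(60), M_PER_MIN)
print(result.value, result.unit.name)  # 60060 m/min
```

Here 1 km/s is 1000 m/s and 60 m/min is 1 m/s, so the sum is 1001 m/s, or 60060 m/min. A statically typed version would need the result unit to be computable from the operand types at compile time.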

HuggingFace Discord

- ByteDance's InfiniteYou Merges with ComfyUI: ByteDance's InfiniteYou model, designed for flexible photo recrafting while preserving individual identity, has been integrated into ComfyUI via this Github repo.

- The goal is to deliver a smooth way to generate different, high-quality images, connecting Claude to Vite.

- HF Inference API Capped for Free Users: Per HuggingFace's API pricing documentation, free users can no longer query the Inference API once they use up all their monthly credits.

- In contrast, PRO or Enterprise Hub users will incur charges for requests exceeding their subscription limits.

- Sieves Streamlines Zero-Shot NLP Pipelines: Sieves, a tool for building NLP pipelines using only zero-shot, generative models without training, was introduced.

- It ensures accurate output from generative models, leveraging structured output from libraries like Outlines, DSPy, and LangChain.

- Qwen 2.5 VL Tangles with Memory Errors on Kaggle: A member faced memory errors running Qwen 2.5 VL 3b on Kaggle to describe a 10-second video, after debugging import issues in the latest transformers library (4.50.0.dev0).

- Recommendations included using bigger hardware, a smaller model, or Flash Attention 2 for GPU offloading.

MCP (Glama) Discord

- Sama Signals Support: OpenAI Embraces MCP!: OpenAI CEO Sam Altman announced MCP support is coming to OpenAI products like the Agents SDK, ChatGPT desktop app, and Responses API.

- This move is seen as a critical step towards establishing MCP as the backbone for agents handling business-related tasks, similar to HTTP's impact on the internet.

- Cloudflare Cranks Context: MCP Gets Remote Server Tooling: Cloudflare now supports building and deploying remote MCP servers with tools like workers-oauth-provider and McpAgent.

- This support is a significant development, offering developers resources to construct MCP servers more efficiently.

- Spec Snafus Surface: Claude Struggles with MCP Prompts: Users encountered issues with Claude Desktop when MCP servers include resources or prompts, leading to endless queries, but a new github version with the fix was released.

- A workaround involves removing capabilities to prevent Claude from searching for missing resources and prompts.

- Canvas MCP Connects College Courses: A member built a Canvas MCP for college courses, enabling automated querying of resources and assignments.

- In response to a request, the creator added a Gradescope integration agent, enabling autonomous crawling of Gradescope.

- All-In-One Docker Compose Preps MCP Servers: An all-in-one docker-compose was created, enabling users to self-host 17 MCP servers easily from Portainer.

- The compose fetches Dockerfiles from public GitHub projects, ensuring updates are automatically applied.

Notebook LM Discord

- Mind Map Goes Public: The Mind Map feature on Notebook LM is now publicly available to all users, and the team thanked users for their patience and feedback with a thank-you image.

- A member noted that the mind map, while neatly structured, wastes time because it lacks descriptions, and that users have no control over how the mind map is structured or how descriptive it can be.

- Podcast Creation in Spanish Stops: A user reported that the ability to generate podcasts in Spanish is no longer functioning in the "customize" settings.

- A member suggested including podcast creation via the NotebookLM API and noted that they could make some really cool things with that.

- Notebook Sharing Frustrates: A Pro user reported being unable to share Notebooks via link, even with publicly available YouTube content.

- Potential solutions mentioned include ensuring recipients have active NLM accounts and manually emailing the link.

- Gemini gets Turkey Tested: A member tested Gemini 2.5 Pro with a 'Turkey Test', challenging it to write metaphysical poetry about a bird and shared the video with NotebookLM commentary here.

- The user shaped the commentary with Interactive Mode to segue to a satisfying ending, stumbling into new uses for NBLM.

- Advanced Research has Limits: A member inquired about the deep research limit for Gemini Advanced, and another member responded that it is 20 research reports per day.

- The first member considered this pretty dang good compared to chatgpt.

Yannick Kilcher Discord

- Sketch-to-Model Pipeline Proposed: A member introduced a "Sketch-to-Model" process (Sketch --> 2D/2.5D Concept/Image --> 3D Model --> Simulation) and explored alternatives to Kernel Attention (KA).

- The member mentioned that ChatGPT alluded to a concept akin to KAN, identifying it with Google DeepMind, while Grok 3 indicated that the xAI team is actively researching KAN.

- AI Puzzle-Solving Debated: Members pondered whether AI could crack the puzzle book Maze: Solve the World's Most Challenging Puzzle (Wikipedia).

- Suggestions included training LLMs on ARGs and old puzzle games, though it was acknowledged that some puzzles' deliberate difficulty might stymie current reasoning models.

- GPT-4o Autoregressive Image Generation Confirmed: GPT-4o is confirmed to be an autoregressive image generation model as stated in OpenAI's Native Image Generation System Card.

- Speculation suggests GPT-4o might be reusing image input tokens for image output, potentially outputting the same format of image tokens used as input, using a semantic encoder/decoder.

- Turing Institute Axes Research Projects: Despite securing £100 million in funding, the Alan Turing Institute (ATI) is planning mass redundancies and to cut a quarter of its research projects.

- Reports indicate open revolt among staff due to these cuts.

- Tracing Thoughts in Language Model gets dissected: Members are analyzing Tracing Thoughts in a Language Model and the associated YouTube video.

- The conversation is expected to span multiple sessions due to the extensive material available.

GPU MODE Discord

- Data Distribution Differs Between DP and TP Ranks: In data parallelism (DP), each rank receives different data, while in tensor parallelism (TP), all ranks get the same data, according to a member.

- They suggested that TRL (Transformer Reinforcement Learning) should already manage this distribution automatically to ensure efficient training and utilization of resources.

- Triton Autotune Lacks Pre/Post Hooks: `pre_hook` and `post_hook` are not supported in `triton.Autotune` or `triton.Config` because they require python code execution at runtime, which Inductor can't support in AOTI.

- One member speculates that implementing this support shouldn't be too difficult and is willing to help.

- Hopper's num_ctas Setting Puzzles Triton Users: Users are encountering crashes or `RuntimeError: PassManager::run failed` exceptions when using a `num_ctas` value higher than 1 for Hopper in Triton, with the root cause remaining unclear.

- This effectively limits the performance tuning options for Hopper architecture when using Triton.

- CUDA's Memory Hierarchy Gets a Boost: A user explained the CUDA memory hierarchy and clarified that it's the movement of data between DRAM and SRAM that is slow.

- This is why memory coalescing and maximizing data transfer efficiency between global memory and shared memory is critical.

- Red Hat Needs GPU Kernel Engineers: Red Hat is seeking full-time software engineers at various levels with experience in C++, GPU kernels, CUDA, Triton, CUTLASS, PyTorch, and vLLM.

- Interested candidates should email a resume and summary of relevant experience to terrytangyuan@gmail.com, including "GPU Mode" in the subject line.
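
The DP/TP data-distribution point above can be sketched in a few lines. This assumes tensor-parallel ranks are contiguous (global rank = `dp_rank * tp_size + tp_rank`), which is a common layout but an assumption here; the function names are hypothetical and this is not how TRL implements it.

```python
def dp_rank_of(rank, tp_size):
    """Data-parallel index of a global rank, assuming contiguous TP groups."""
    return rank // tp_size


def batches_for_rank(dataset, rank, world_size, tp_size):
    """Return the slice of `dataset` that a given global rank should read."""
    if world_size % tp_size != 0:
        raise ValueError("world_size must be divisible by tp_size")
    dp_size = world_size // tp_size
    # Ranks in the same TP group share a dp_rank, so they read identical data;
    # different DP groups read disjoint strided slices.
    return dataset[dp_rank_of(rank, tp_size)::dp_size]


data = list(range(8))
for rank in range(4):
    print(rank, batches_for_rank(data, rank, world_size=4, tp_size=2))
# 0 [0, 2, 4, 6]
# 1 [0, 2, 4, 6]
# 2 [1, 3, 5, 7]
# 3 [1, 3, 5, 7]
```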

LlamaIndex Discord

- LlamaCloud Doubles as MCP Server: LlamaCloud can be used as an MCP server, enabling real-time data integration into workflows for any MCP client, as shown in this video demonstration.

- This setup allows an existing LlamaCloud index to serve as a data source for an MCP server used by Claude Desktop.

- Claude Leverages Data from LlamaCloud: Claude Desktop can use an existing LlamaCloud index as a data source for an MCP server, integrating up-to-the-second data into the Claude workflow, detailed in this video.

- This functionality enhances Claude's ability to access and utilize real-time information within its workflows.

- LlamaExtract Ditches Schema Inference: The schema inference capability in LlamaExtract, announced last year, has been de-prioritized because most users already have the schema they need, as detailed in the LlamaExtract Announcement.

- The feature may return in the future, but other aspects are being prioritized.

- LLMs to Caption PDFs and Scanned Images: Members discussed using LlamaParse as the best parsing tool to parse PDFs; another member suggested using an LLM to read and caption an image for a RAG application, to answer questions from uploaded PDFs.

- Another member enquired about Hybrid Chunking and OCR for scanned documents like handwritten mathematics homework.

- Chatbot battles with SQL Query Generation: A user building a chatbot that generates SQL queries from user messages reported issues with the bot not picking the appropriate columns, even with column comments in the SQL file.

- No specific solution was provided, but the user was encouraged to file a bug report to the team.

Latent Space Discord

- Nvidia Nabs Lepton AI: Nvidia has acquired Lepton AI, an inference provider, for several hundred million dollars to enhance its software offerings for GPU utilization, according to The Information.

- This acquisition aims to simplify GPU utilization and beef up its software offerings.

- OpenAI's Agents Embrace MCP: The Model Context Protocol (MCP) now integrates with OpenAI Agents SDK, enabling the use of various MCP servers to supply tools to Agents, as detailed in the Model Context Protocol introduction.

- MCP is envisioned as a USB-C port for AI applications, standardizing context provision to LLMs.

- Replit Agent v2 Gains Autonomy: Replit Agent v2, currently in early access with Anthropic’s Claude 3.7 Sonnet, boasts enhanced autonomy, formulating hypotheses and searching for files before making alterations, detailed in the Replit blog.

- The upgrade ensures it is more autonomous and less likely to get stuck on the same bug.

- GPT-4o Leaps Up Leaderboard: The latest ChatGPT-4o update (2025-03-26) has surged to #2 on the Arena, surpassing GPT-4.5, and is tied for #1 in Coding and Hard Prompts, according to the Arena leaderboard.

- The update is reportedly better at following detailed instructions, particularly those with multiple requests, and has improved intuition and creativity.

- OpenAI Relaxes Image Gen Policy: OpenAI is adjusting its image generation policy from blanket refusals to preventing real-world harm, aiming to maximize creative freedom while averting actual harm, as described by Joanne Jang in her blog post.

- This policy shift seeks to balance creative expression with harm prevention.

Torchtune Discord

- FP8 QAT run Spotted: A member is exploring FP8 QAT and encountered this issue while aiming for a pure QAT run on a cold-trained model.

- They clarified that while FP8 QAT is a goal, immediate resources are limited.

- Optimizer State Remains Unaltered: A member confirmed that activating fake quant will not alter the optimizer state.

- This confirmation addresses concerns about unintended side effects during quantization experiments.

- GRPO PRs Seek Swift Action: A member emphasized the urgency of merging two GRPO PRs (#2422 and #2425), pointing out that #2425 is a critical bug fix.

- A team member promptly acknowledged the message and committed to addressing the PRs.

- Anthropic Allegedly Abandons Ship for TensorFlow: It was pointed out that Anthropic is allegedly standardizing around TensorFlow.

- This has triggered speculation about the future of PyTorch within Anthropic.

- JoeI SORA Takes Over: A member shared a screenshot of JoeI SORA in an unspecified context, responding to a query about a model's intuition.

- The member quipped that there is no intuition, just JoeI.

Cohere Discord

- Cohere Explores Vector Database Integrations: Members discussed options for vector databases, with a member sharing a link to Cohere's integrations page that showcased options such as Elasticsearch, MongoDB, Redis, Haystack, Open Search, Vespa, Chroma, Qdrant, Weaviate, Pinecone, and Milvus.

- Another member asked about hosting vector DBs online, to which the response implied that Cohere handles hosting concerns.

- Founders Ponder AI Agent Pricing: A member initiated a discussion about how founders are pricing and monetizing AI agents, seeking to chat with others and validate insights.

- Another member encouraged sharing more details about AI Agent pricing strategies.

- Cohere May Hit Up QCon London: A member inquired whether Cohere would be present at QCon London this year, expressing interest in discussing access to North with a Cohere representative.

- They attended last year.

- Refugee Organization Champions Livelihood: A refugee in Kenya introduced Pro-Right for Refugees, a Community Based Organization (CBO) focused on promoting refugee access to livelihood opportunities and enhancing peaceful living in Kakuma Refugee and Kalobeyei Settlement.

- The CBO focuses on peacebuilding, awareness raising, and livelihood initiatives, inviting volunteers and support for refugees.

tinygrad (George Hotz) Discord

- Budget AI Rig Assembled for Cheap: A member explored building a budget AI rig for 7-8k yuan using older X99 components, Xeons, and 32GB ECC DDR4 RAM sourced from Taobao.

- Another member confirmed the feasibility of this setup after a quick investigation.

- AX650N Specs Highlighted: The AX650N's specs were spotlighted via a link to its product page, revealing 72Tops@int4, 18.0Tops@int8 NPU and native support for Transformer intelligent processing platform.

- Additionally, the AX650N includes an Octa-core A55 CPU, supports 8K video encoding/decoding, and features dual HDMI 2.0 outputs.

- AX650N Reverse Engineered: A blog post detailed the reverse engineering of the AX650N, reporting it achieves 72.0 TOPS@INT4 and 18.0 TOPS@INT8.

- The post also mentioned efforts to port smaller Transformer models, pointing to an associated GitHub repo.

- Tinygrad's PRs Focus on CPU Functionality: Two Tinygrad pull requests were shared: PR #9546 and PR #9554.

- The first PR addresses a potential fix for a recursion error in test_failure_53, while the second aims to continue moving functions off of CPU in torch backend.

- TinyGrad's Code Generation Unveiled: A user inquired about TinyGrad's code generation process, referencing outdated information about `CStyleCodegen` and `CUDACodegen` classes, seeking to understand the translation from optimized plans to low-level code.

- The discussion sought to clarify how TinyGrad translates optimized plans into executable code for various devices (CPU/GPU), given the user's confusion regarding the current implementation.

LLM Agents (Berkeley MOOC) Discord

- Sharing Lecture Recordings OK'd: A member inquired about sharing lecture recordings for the LLM Agents Berkeley MOOC, and a moderator confirmed it's allowed, encouraging new participants to sign up.

- This is part of onboarding new MOOC participants.

- Mentorship Deadline Extension Mulling: A member requested a deadline extension for the mentorship application; the moderator noted the form won't close immediately.

- However, consideration after the deadline isn't guaranteed due to high interest and the need to start projects soon.

- Entre Track Mentorship Missing: A member asked about mentorship for the Entre track, and the moderator clarified that Berkeley doesn't offer it.

- There will be office hours with sponsors in Apr/May.

DSPy Discord

- AOT Breaks Down Reasoning: Atom of Thoughts (AOT) decomposes questions into atomic subquestions structured as a directed acyclic graph (DAG), in contrast to Tree of Thoughts (ToT) which maintains the entire tree history.

- The poster emphasizes AOT's memoryless reasoning steps and explicit decompose-then-contract phases for atomic subquestions.

- Ideal Evaluation Datasets: Ideal evaluation datasets for AOT should include GSM8K and MATH (datasets with step-by-step solutions), HotpotQA and 2WikiMultihopQA (annotated reasoning paths), and datasets explicitly detailing intermediate reasoning steps.

- Examples provided include `mock_llm_client.generate.side_effect = ["0.9", "42"]` for testing and validation.

- LLMDecomposer Strategy is Flexible: AOT utilizes the flexible decomposition provided by `LLMDecomposer`, with prompts that adapt based on the question type (MATH, MULTI_HOP), support custom decomposers, and enable dynamic prompt selection.

- The decomposition strategy ensures atomicity via a contraction validation phase, exemplified by prompts such as `QuestionType.MATH: Break down this mathematical question into smaller, logically connected subquestions: Question: {question}`.

- MiproV2 Faces Value Error: A user encountered a ValueError while using MiproV2, related to mismatched keys in `signature.output_fields`, where the expected keys were `dict_keys(['proposed_instruction'])`, but the actual keys received were `dict_keys([])`.

- Similar issues were reportedly encountered with Copro on GitHub, potentially related to `max_tokens` settings.
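
The `mock_llm_client.generate.side_effect` line quoted above relies on standard `unittest.mock` behavior: a list assigned to `side_effect` is consumed one item per call. The sketch below shows that mechanism; the two-call flow (a score followed by an answer) and the `answer_with_confidence` helper are assumptions about how such a test might be arranged, not the poster's code.

```python
from unittest.mock import Mock

# Each call to generate() returns the next item from the list, in order.
mock_llm_client = Mock()
mock_llm_client.generate.side_effect = ["0.9", "42"]


def answer_with_confidence(client, question):
    """Hypothetical flow: ask for a numeric score first, then for the answer."""
    confidence = float(client.generate(f"Rate confidence for: {question}"))
    answer = client.generate(f"Answer: {question}")
    return confidence, answer


confidence, answer = answer_with_confidence(mock_llm_client, "What is 6 * 7?")
print(confidence, answer)  # 0.9 42
```

A third call to `generate` would raise `StopIteration`, which makes an unexpected extra LLM call fail loudly in a test.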

Codeium (Windsurf) Discord

- Windsurf Surfs Up with Gemini 2.5 Pro!: Gemini 2.5 Pro is now available in Windsurf, giving users 1.0 user prompt credits on every message and 1.0 flow action credits on each tool call.

- The release was announced on X.

- Gemini 2.5 Pro Overloads Windsurf: Windsurf is experiencing rate limiting issues with Gemini 2.5 due to massive load.

- The team is actively working to increase quota and apologized for the inconvenience.

Nomic.ai (GPT4All) Discord

- GPT4All Users Bemoan Model Import Issues: Users are reporting difficulties importing models into GPT4All, with the system seemingly unresponsive, with further issues including the inability to search the model list.

- Additional complaints include missing model size information during selection, lack of LaTeX support, and a non-user-friendly model list order.

- GPT4All User Experience Draws Ire: Users are expressing frustration with the GPT4All user experience, citing issues such as missing embedder choice options.

- A user stated that you are loosing users ... cause others much more user-friendly and willing to be open.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!