[AINews] Multi-modal, Multi-Aspect, Multi-Form-Factor AI

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 4/12/2024-4/15/2024. We checked 5 subreddits and 364 Twitters and 27 Discords (395 channels, and 8916 messages) for you. Estimated reading time saved (at 200wpm): 985 minutes.

Whole months happen in some days in AI - just as Feb 15 saw Sora and Gemini 1.5 and a bunch of other launches, the ides of April saw huge launches from:

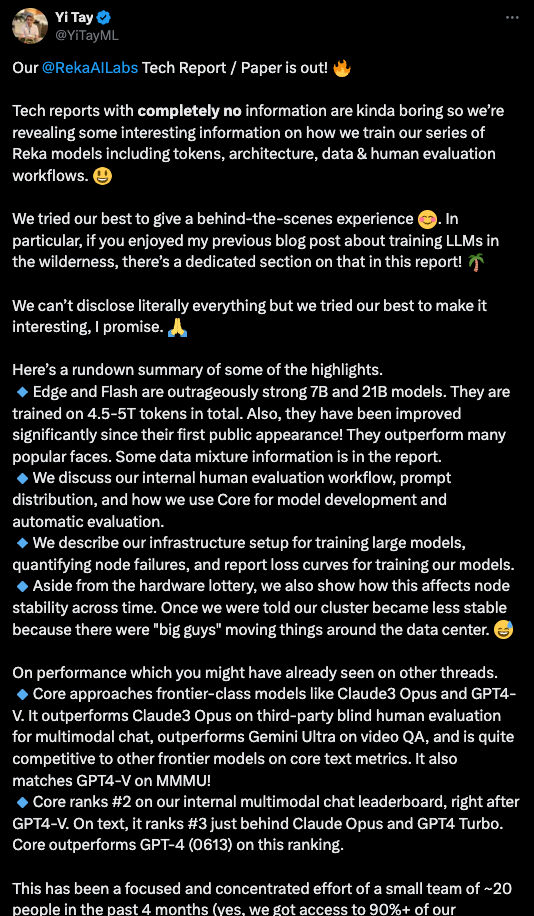

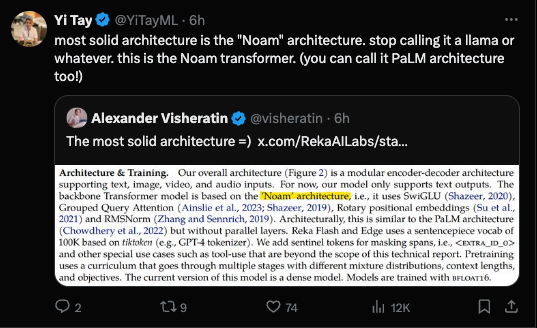

Reka Core

A new GPT4-class multimodal foundation model...

... with an actually useful technical report...

... being "full Shazeer"

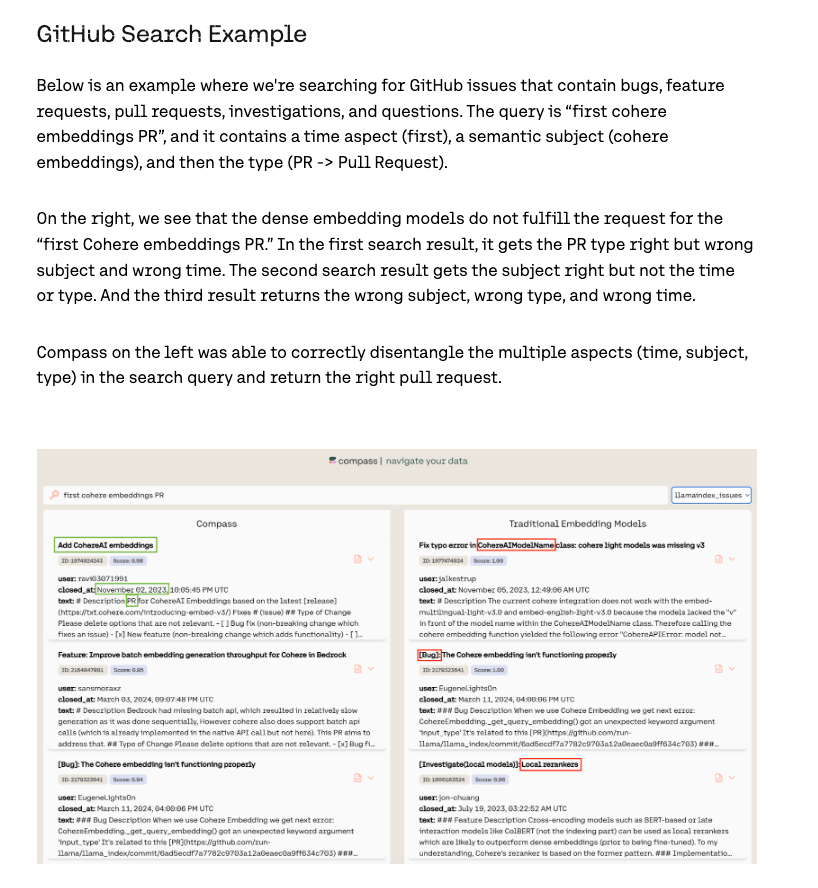

Cohere Compass

our new foundation embedding model that allows indexing and searching on multi-aspect data. Multi-aspect data can best be explained as data containing multiple concepts and relationships. This is common within enterprise data — emails, invoices, CVs, support tickets, log messages, and tabular data all contain substantial content with contextual relationships.

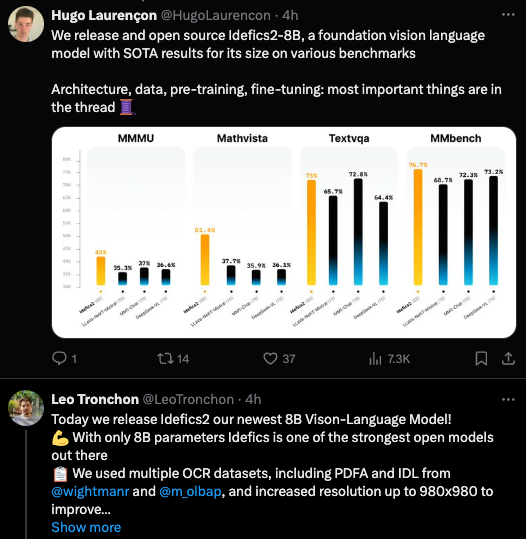

IDEFICS 2-8B

Continued work from last year's IDEFICS, a totally open source reproduction of Google's Flamingo unreleased multimodal model.

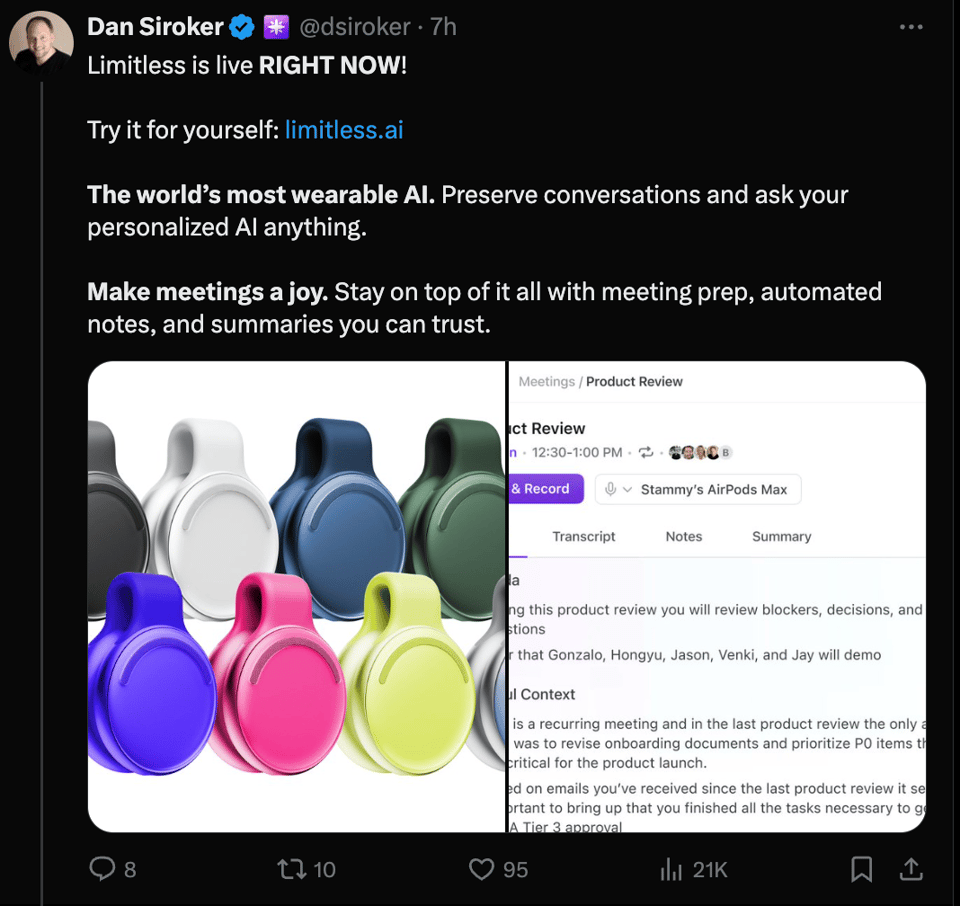

Rewind pivots to Limitless

It’s a web app, Mac app, Windows app, and a wearable.

Spyware is out, Pendants are in.

Table of Contents

- Reka Core

- Cohere Compass

- IDEFICS 2-8B

- Rewind pivots to Limitless

- AI Reddit Recap

- AI Twitter Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- Eleuther Discord

- Nous Research AI Discord

- OpenRouter (Alex Atallah) Discord

- LM Studio Discord

- OpenAI Discord

- Latent Space Discord

- Modular (Mojo 🔥) Discord

- CUDA MODE Discord

- Cohere Discord

- HuggingFace Discord

- LAION Discord

- LangChain AI Discord

- OpenAccess AI Collective (axolotl) Discord

- OpenInterpreter Discord

- LlamaIndex Discord

- tinygrad (George Hotz) Discord

- Interconnects (Nathan Lambert) Discord

- LLM Perf Enthusiasts AI Discord

- DiscoResearch Discord

- Mozilla AI Discord

- Alignment Lab AI Discord

- AI21 Labs (Jamba) Discord

- Datasette - LLM (@SimonW) Discord

- Skunkworks AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling works now but has lots to improve!

AI Models and Performance

- Apple MLX performance: In /r/LocalLLaMA, Apple MLX (0.10.0) reaches 105.5 tokens/s on M2 Ultra with 76 GPU, beating Ollama/Llama.cpp at 95.1 tokens/s using Mistral Instruct 4bit.

- Ollama performance comparisons: In /r/LocalLLaMA, Ollama performance using Mistral Instruct 0.2 q4_0 shows the M2 Ultra 76GPU leading at 95.1 t/s, followed by Windows Nvidia 3090 at 89.6 t/s, WSL2 NVidia 3090 at 86.1 t/s, and M3 Max 40GPU at 67.5 t/s. Apple MLX reaches 103.2 t/s on M2 Ultra and 76.2 t/s on M3 Max.

- M3 Max vs M1 Max prompt speed: In /r/LocalLLaMA, the M3 Max 64GB has more than double the prompt speed compared to M1 Max 64GB when processing long contexts, using Command-R and Dolphin-Mixtral 8x7B models.

- GPU considerations for LLMs: In /r/LocalLLaMA, a user is seeking advice on building a machine around RTX 4090 or buying a MAC for running LLMs like Command R and Mixtral models with future upgradability.

- GPU for Stable Diffusion: In /r/StableDiffusion, a comparison of 3060 12GB vs 4060 16GB for Stable Diffusion recommends going for as much VRAM as reasonably affordable. A 4060ti 16GB takes 18.8 sec for SDXL 20 steps.

LLM and AI Developments

- Comparing AI to humans: In /r/singularity, Microsoft Research's Chris Bishop compares AI models that regurgitate information to "stochastic parrots", noting that humans who do the same are given university degrees. Comments discuss the validity of degrees and whether they indicate more than just information regurgitation.

- Impact on jobs: Former PayPal CEO Dan Schulman predicts that GPT-5 will be a "freak out moment" and that 80% of jobs will be reduced by 80% in scope due to AI.

- Obsession with AGI: Mistral's CEO Arthur Mensch believes the obsession with achieving AGI is about "creating God". Gary Marcus also urges against creating conscious AI in a tweet.

- Building for the future: Sam Altman tweets about people working hard to build technology for future generations to continue advancing, without expecting to meet the beneficiaries of their work.

- Confronting consciousness: A post in /r/singularity argues that achieving AGI will force humans to confront their lack of understanding about what creates consciousness, predicting debates and tensions around AI ethics and rights.

Industry and Career

- Identifying "fake" ML roles: In /r/MachineLearning, a PhD student asks for advice on spotting "fake" ML roles after being hired for a position that didn't involve actual ML work, noting that asking questions during interviews may not be effective due to potential dishonesty.

- Relevance of traditional NLP tasks: Another PhD student questions the importance of traditional NLP tasks like text classification, NER, and RE in the era of LLMs, worrying about the future of their research.

- Practical uses for LLMs: A post in /r/MachineLearning asks for examples of practical industry uses for LLMs beyond text generation that provide good ROI, noting that tasks like semantic search can be handled well by other models.

Tools and Resources

- DBRX support in llama.cpp: Llama.cpp now supports DBRX, a binary format for LLMs.

- Faster structured generation: In /r/LocalLLaMA, a new method for structured generation in LLMs is claimed to be much faster than llama.cpp's approach, with runtime independent of grammar complexity or model/vocabulary size. The authors plan to open-source it soon.

- Python data sorting tools: The author has open-sourced their collection of Python tools for automating data sorting and organization, aimed at handling unorganized files and large amounts of data efficiently.

- Simple discrete diffusion implementation: An open-source, simple PyTorch implementation of discrete diffusion in 400 lines of code was shared in /r/MachineLearning.

Hardware and Performance

- M1 Max vs M3 Max: In /r/LocalLLaMA, a comparison of prompt speed between M1 Max and M3 Max with 64GB RAM shows the M3 Max having more than double the speed, especially for long contexts.

- RTX 4090 vs Mac: A post asks for advice on building a PC with an RTX 4090 or buying a Mac for running LLMs, believing a PC would be cheaper and more upgradeable.

- MLX performance on M2 Ultra: Apple's MLX library achieves 105.5 tokens/s on an M2 Ultra with 76 GPU cores, surpassing llama.cpp's 95.1 tokens/s when running the Mistral Instruct 4-bit model.

- Ollama performance comparison: A performance comparison of the Ollama library on various hardware shows the M2 Ultra leading at 95.1 t/s, followed by Windows Nvidia 3090, WSL2 Nvidia 3090, and M3 Max using the Mistral Instruct model.

Memes and Humor

- Several meme and humor posts were highly upvoted, including "The Anti-AI Manifesto", "Maybe maybe maybe", "the singularity is being driven by an outside force", "Ai women are women", and "Real reason AI (Alien Intelligence) can't do hands? 👽".

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Models and Architectures

- New model releases: @RekaAILabs announced Reka Core, their "best and most capable multimodal language model yet", competitive with GPT-4/Opus level models. @WizardLM_AI released WizardLM-2, a family of models including 8x22B, 70B and 7B variants competitive with leading proprietary LLMs.

- Architectures and training: @jeremyphoward noted that transformers are an architecture commonly used with diffusion. @ylecun stated AI will eventually surpass human intelligence, but not with current auto-regressive LLMs alone.

- Optimizing and scaling: @karpathy optimized an LLM in C to match PyTorch, now at 26.2ms/iteration, using tricks like cuBLAS in fp32 mode. @_lewtun believes strong Mixtral-8x22B fine-tunes will close the gap with proprietary models.

AI Capabilities and Benchmarks

- Multimodal abilities: @RekaAILabs shared Reka Core's video understanding capabilities, beating Claude3 Opus on multimodal chat. @DrJimFan speculated Tesla FSD v13 may use language tokens to reason about complex self-driving scenarios.

- Coding and math: @OfirPress noted the open-source coding agent SWE-agent already has 1.5k users after 10 days. @WizardLM_AI used a fully AI-powered synthetic training system to improve WizardLM-2.

- Benchmarks and leaderboards: @svpino noted Claude 3 was the best model on a human eval leaderboard for 17 seconds before GPT-4 updated. @bindureddy analyzed GPT-4's coding, math and knowledge cutoff as reasons for its performance.

Open Source and Democratizing AI

- Open models and data: @arankomatsuzaki announced EleutherAI's Pile-T5 model using 2T tokens from the Pile and the Llama tokenizer. @_philschmid introduced Idefics2, an open source VLM under 10B parameters with strong OCR, document understanding and visual reasoning.

- Accessibility and cost: @maximelabonne noted open source models now lag top closed source by 6-10 months rather than years. @ClementDelangue predicted the gap will fully close by year end, as open source is faster, cheaper and safer for most uses.

- Compute and tooling: @WizardLM_AI is sharing 8x22B and 7B WizardLM-2 weights on Hugging Face. @aidangomez announced the Compass embedding model beta for multi-aspect data search.

Industry and Ecosystem

- Company expansions: @gdb and @hardmaru noted OpenAI's expansion to Japan as a significant AI presence. @adcock_brett shared Canada's $2.4B investment in AI capabilities and infrastructure.

- Emerging applications: @svpino built an entire RAG app without code using Langflow's visual interface and Langchain. @llama_index showcased using LLMs and knowledge graphs to accelerate biomaterials discovery.

- Ethical considerations: @ID_AA_Carmack regretted not supporting @PalmerLuckey more at Facebook during a "witch hunt". @mmitchell_ai praised a video as the best AI existential risk coverage so far.

AI Discord Recap

A summary of Summaries of Summaries

-

Advancements in Large Language Models (LLMs): There is significant excitement and discussion around new releases and capabilities of LLMs across various platforms and organizations. Key examples include:

- Pile-T5 from EleutherAI, a T5 model variant trained on 2 trillion tokens, showing improved performance on benchmarks like SuperGLUE and MMLU. All resources, including model weights and scripts, are open-sourced on GitHub.

- WizardLM-2 series announced, with model sizes like 8x22B, 70B, and 7B, sparking excitement for deployment on OpenRouter, with WizardLM-2 8x22B compared favorably to GPT-4.

- Reka Core, a frontier-class multimodal language model from Reka AI, with details on training, architecture, and evaluation shared in a technical report.

-

Optimizations and Techniques for LLM Training and Inference: Extensive discussions revolve around optimizing various aspects of LLM development, including:

- Efficient context handling with approaches like Ring Attention, enabling models to scale to nearly infinite context windows by employing multiple devices.

- Model compression techniques such as LoRA, QLoRA, and 16-bit quantization for reducing memory footprint, with insights from Lightning AI and community experiments.

- Hardware acceleration strategies like enabling P2P support on NVIDIA 4090 GPUs using tinygrad's driver patch, achieving significant performance gains.

-

Open-Source Initiatives and Community Collaboration: The AI community demonstrates a strong commitment to open-source development and knowledge sharing, as evidenced by:

- Open-sourcing of major projects like Pile-T5, Axolotl, and Mixtral (in Mojo), fostering transparency and collaborative efforts.

- Educational resources such as CUDA MODE lectures and a call for volunteers to record and share content, promoting knowledge dissemination.

- Community projects like llm.mojo (Mojo port of llm.c), Perplexica (Perplexity AI clone), and LlamaIndex integrations for document retrieval, showcasing grassroots innovation.

-

Datasets and Data Strategies for LLM Development: Discussions highlight the importance of data quality, curation, and strategic approaches to training data, including:

- Synthetic data generation techniques like those used in StableLM (ReStruct dataset) and MiniCPM (mixing OpenOrca and EvolInstruct), with a focus on CodecLM for aligning LLMs with tailored synthetic data.

- Data filtering strategies and the development of scaling laws for data curation, emphasizing that curation cannot be compute-agnostic, as presented in a CVPR 2024 paper.

-

Misc

- Stable Diffusion 3 Sparks Excitement and Debate: The AI community is eagerly anticipating the release of Stable Diffusion 3 (SD3), discussing its potential improvements in quality and efficiency. Conversations revolve around optimizing performance on less powerful GPUs with tools like SD Forge, and exploring AI-powered creative workflows with ControlNet, Lora, and outpainting techniques. The heavy prompt censorship in SD3 has raised concerns about potential quality decline, as discussed on Reddit.

- Perplexity AI's Roadmap and Model Comparisons: Perplexity AI's June roadmap teases new features like enforcing JSON grammar, a new Databricks model, Model Info endpoint, status page, and multilingual support, viewable on their feature roadmap page. Discussions compare the context window and performance of models like Claude Opus, GPT-4, and RAG for various tasks. Meta's release of an AI interface on WhatsApp, resembling Perplexity AI, has sparked interest in the growing integration of AI in messaging platforms, as reported in this article.

- Tinygrad Enables P2P on NVIDIA GPUs: Tinygrad has successfully enabled peer-to-peer (P2P) support on NVIDIA 4090 and 4070 TI Super GPUs by modifying NVIDIA's driver, achieving 14.7 GB/s AllReduce performance. The breakthrough, shared on Twitter with code available on GitHub, has significant implications for cost reduction in running large language models. The CUDA community is pushing performance boundaries with the One Billion Row Challenge and exploring optimization strategies for low-precision computations.

- Eleuther AI's Pile-T5 Model and Research Insights: EleutherAI introduced Pile-T5, a T5 model variant trained on 2 trillion tokens from the Pile, demonstrating improved performance on benchmarks like SuperGLUE and MMLU. The model, which excels in code-related tasks, has its weights and training scripts open-sourced on GitHub. Research discussions delved into the capabilities of MoE versus dense transformer models, the role of tokenization in LLMs, and the interpretability of hidden representations using frameworks like Google's Patchscopes.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

Stable Diffusion 3 Sparks Excitement: AI enthusiasts are buzzing with anticipation over Stable Diffusion 3 (SD3), discussing its potential for efficiency gains and debating the merits of SD Forge for optimizing performance on less powerful GPUs.

Pixart-Sigma Pushing VRAM Boundaries: The application of Pixart-Sigma with T5 conditioning within ComfyUI provokes discussions around VRAM usage, with participants noting that T5 maintains VRAM usage under 10.3GB even in 8-bit compression mode.

AI Tools for Content Creation Get Spotlight: Query exchanges regarding AI tools like ControlNet, Lora, and expansion techniques like outpainting hint at a need for consistent color generation and background extension in creative workflows.

Debating CPU vs. GPU Efficiency for AI: Community members exchange troubleshooting tips on GPU memory optimization and flag features like --lowvram for those running Stable Diffusion on less potent machines, highlighting the significant speed difference between CPU and GPU processing.

Artists Seek Tech-Driven Collaborations: The trend of fusing AI with artistic tools continues as a digital artist seeks input and tutorial assistance on a painting app combining AI features, with project details found on GitHub.

Perplexity AI Discord

- AI Models at Play in Prose and Logic: Debates underscore that Claude Opus excels in prose while GPT-4 outshines in logical reasoning. Practitioners should explore models to best fit their application needs.

- Context Hinges on Model Choice: Claude Opus is praised for executing sequential instructions with finesse, while discussions indicate that GPT-4 may falter after several follow-ups; RAG boasts an edge with larger context retrieval for file uploads.

- Perplexity's Roadmap débuts Multilingualism: Perplexity AI's June roadmap teases enforcing JSON grammar, new Databricks model, Model Info endpoint, status page, multilingual support, and N>1 sampling, viewable on their feature roadmap page.

- API Intricacies Spark Dialogue: Users delve into Perplexity API's nuances—from default temperature settings in models, citation features, community aid for URL retrieval in responses, to procedures for increased rate limits.

- Meta Mimics Perplexity?: A buzz on Meta's AI resembling Perplexity's interface for WhatsApp, suggesting AI's growing integration within messaging platforms, further highlighted by an article comparing the two (Meta Releases AI on WhatsApp, Looks Like Perplexity AI).

Unsloth AI (Daniel Han) Discord

Geohot Hacks Back P2P to 4090s: "geohot" ingeniously implemented peer-to-peer support into NVIDIA 4090s, enabling enhanced multi-GPU setups; details are available on GitHub.

Unsloth Gains Multi-GPU Momentum: Interest spiked on Multi-GPU support in Unsloth AI, with Llama-Factory touted as a worthy investigation route for integration.

A Hugging Face of Encouragement: Unsloth AI garnered attention from Hugging Face CEO Clement Delangue by securing a follow on an unspecified platform, suggesting potential collaborative undertones.

Linguistic Labyrinth of AI PhD Life: A PhD student outlined their challenging exploration in developing an instruction-following LLM for their mother tongue, highlighting the project complexity beyond mere translation and fine-tuning.

Million-Dollar Mathematical AI: The community engaged with the prospect of a $10M Kaggle AI prize to create an LLM capable of acing the International Math Olympiad and unpacked the Beal Conjecture's $1M bounty for a proof or counterexample at AMS.

Resourceful VRAM Practices & Strategic Finetuning: AI engineers converged on efficient use of VRAM for training robust LLMs like Mistral, sharing best practices such as the "30% less VRAM" update from Unsloth and the nuanced approach of initiating finetuning with shorter examples.

Cultural Conquest of Linguistic Datasets: Strategies to amplify low-resource language datasets were exchanged, including the use of translation data from platforms like HuggingFace.

Pioneering Podman for AI Deployment: Innovators showcased the deployment of Unsloth AI in Podman containers, streamlining local and cloud implementation, as seen in this demo: Podman Build - For cost-conscious AI.

Ghost 7B Alpha Raises the Benchmark: Ghost 7B Alpha was proclaimed for superior reasoning compared to other models, signaling fine-tuning prowess without expanding tokenizers, as discussed by enthusiasts.

Merging Minds on Model Compression: The melding of adapters, particularly QLoRA and 16bit-saving techniques for vLLM or GGUF, was deliberated, contemplating the intricacies of naive merging versus dequantization strategies.

Eleuther Discord

Pile-T5 Power: EleutherAI introduces Pile-T5, an enhanced T5 model variant produced through training with 2 trillion tokens from the Pile. It showcases significant performance improvements in both SuperGLUE and MMLU benchmarks and excels in code-related tasks, with resources including weights and scripts open-sourced on GitHub.

Entropic Data Filtering: The CVPR 2024 paper suggests a notable advancement in unpacking the importance of entropy in data filtering. An empirical study unveiled scaling laws capturing how data curation is fundamentally linked with entropy, enriching the community's understanding of heterogeneous & limited web data and its practical implications. Explore the study here.

Inside the Transformer Black Box: Google's Patchscopes framework endeavors to make LLMs' hidden representations more interpretable by generating explanations in natural language. Likewise, a paper introducing a toolkit for transformers to conduct causal manipulations showcases the value of pinpointing key model subcircuits during training, possibly offering pathways to avoid common training roadblocks. Details on the JAX toolkit can be found in this tweet from Stephanie Chan (@scychan_brains) and on the Patchscopes framework here.

MoE vs. Dense Transformers Debate: Discussions in the community probe the capacity and benefits of MoE versus dense transformer models. Key insights reveal MoEs' relative advantage sans VRAM constraints and question dense models' performance parity at comparable parameter budgets. There's a pronounced curiosity regarding the foundational attributes driving model behavior beyond the metrics.

NeoX Nuances Unveiled: Questions within the GPT-NeoX project brought up intricacies like oversized embedding matrices for GPU efficiency and peculiar weight decay behaviors potentially due to non-standard activations. A remark on rotary embeddings noted its partial application in NeoX as against other models. A corporate CLA is being devised to facilitate contributions to the project.

Nous Research AI Discord

- Google's Infini-attention Paper Snags Spotlight: A member expressed interest in Google's "Infini-attention" paper, available on arXiv, proposing a novel way to handle longer sequences for language models.

- Community Delves into Vector Search: In a comparison of vector search speeds, FAISS's IVFPQ came out on top at 0.36ms, followed by FFVec's 0.44ms; meanwhile, FAISS Flat and HNSW clocked in at slower times of 10.58ms and 1.81ms, respectively.

- AI Benchmarks Garner Scrutiny: A discussion among members challenged the effectiveness of current AI benchmarks, proposing the need for harder-to-game, domain-specific task evaluations, such as a scrutinized tax benchmark.

- Anticipating WorldSim's Update: Enthusiasm is brewing over the forthcoming update to WorldSim, with members sharing their experiments inspired by it, while preparing for new exciting features teased for next Wednesday.

- LLMs Under the Microscope: Technical exchanges included inquiries into a safety-focused healthcare LLM detailed in an arXiv paper, challenges in fine-tuning LLMs for the Greek language while considering pretraining necessity, and methods for in-line citations in Retrieval-Augmented Generation (RAG) queries.

OpenRouter (Alex Atallah) Discord

Mixtral Model Mix-Up: The community reported that Mixtral 8x22B:free is discontinued; users should transition to the Mixtral 8x22B standard model. Experimental models Zephyr 141B-A35B and Fireworks: Mixtral-8x22B Instruct (preview) are available for testing; the latter is a fine-tune of Mixtral 8x22B.

Token Transaction Troubles: A user's issue with purchasing tokens was deemed unrelated to OpenRouter; they were advised to contact Syrax directly for resolution.

Showcasing Rubik's Research Assistant: Users interested in testing the new Rubiks.ai research assistant can join the beta test with a 2-month free premium. This tool includes access to models like Claude 3 Opus and GPT-4 Turbo, among others; testers should use the code RUBIX and provide feedback. Explore Rubik's AI.

Dynamic Routing Deliberation: There's a buzz around improving Mixtral 8x7B Instruct (nitro) speeds via dynamic routing to the highest transaction-per-second (t/s) endpoint. There were varying opinions on the performance of models like Zephyr 141b and Firextral-8x22B as well.

WizardLM-2 Series Spells Excitement: The newly announced WizardLM-2 series with model sizes including 8x22B, 70B, and 7B, has garnered community interest. Attention is particularly focused on the expected performance of WizardLM-2 8x22B on OpenRouter.

LM Studio Discord

Models On The Fritz: Users reported problems with model loading in LM Studio across different versions and OS, including error messages about "Error loading model." and issues persisting after downgrading and turning off GPU offload. A full removal and reinstall of LM Studio were sought by one user after ongoing frustrations with model performance.

Attention to Hardware: There was considerable discussion surrounding hardware requirements for running AI models effectively, highlighting the necessity for high-tier equipment for an experience on par with GPT-4 and the underutilization of Threadripper Pro CPUs. Contrastingly, ROCm support was called into question, with specific mention of the Radeon 6600 being unsupported in LM Studio.

Quantized conundrums and innovative inferences: Quantization was a hot topic, with users noting performance changes and debate on whether the trade-off in output quality is worthwhile. Meanwhile, a paper titled "Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention" was circulated, illuminating cutting-edge methodologies for Transformers handling prolonged inputs effectively.

Navigating Through New Model Territories: Users shared experiences and recommendations for an assortment of new models like Command R+ and Mixtral 8x22b, focusing on different setups, performance, and even introducing a commands-based tool missionsquad.ai. Notably, Command R+ has been praised for outpacing similar models in analyzing lengthy documents.

Beta Blues and Docker Distributions: While some users grappled with troubles in MACOS 0.2.19, others spearheaded initiatives like creating and maintaining a Docker image for Autogen Studio, hosted on GitHub. Concurrently, sentiments of letdown were conveyed over Linux beta releases missing out on ROCm support, highlighting the niche hurdles that technical users encounter.

OpenAI Discord

- AI Sentience: Fact or Fiction? Engaging debates broke out about AI sentience, revolving around the implications of AI advancements on consciousness and ethical use. Ethical considerations were highlighted, but no consensus was reached on sentient AI systems.

- Token Trading Techniques: Strategies for dealing with GPT's token limits were discussed, with users suggesting summarizing content and using follow-up prompts to manage the context within the constraint effectively.

- GPT-4 Turbo's Visionary Capabilities: Clarification was provided that 'gpt-4-turbo' integrates vision capabilities, and while it still bears a 4k token limit due to memory constraints, it requires a script for analyzing images.

- Prompting for Precision: Users shared experiences with GPT model inconsistencies, suggesting version-specific behaviors and fine-tuning aspects that impact response accuracy, with recommendations to employ a meta-prompting template for better results.

- Hackers Wanted for Prompt Challenge: There's a call for engineers interested in exploring prompt hacking to team up for a competition, suggesting a collaborative venture into creative interactions with LLMs.

Latent Space Discord

Collaboration Beyond Borders with Scarlet AI: The new Scarlet platform enhances task management with collaborative AI agents that provide automated context and support tool orchestration, eyeing an integration with Zapier NLA. A work-in-progress demo was mentioned and Scarlet's website provides details on their offerings.

Whispers of Perplexity's Vanishing Act: The removal of Perplexity "online" models from LMSYS Arena spurred debate on the model's efficacy and potential integrations into engineering tools. This instance underscores a shared interest in the integration efficiency of AI models.

AI Wrangles YouTube's Wild West: Engineers examined strategies to transcribe and summarize YouTube content for AI applications, debating the merits of various tools including Descript, youtube-dl, and Whisper with diarization. The discussion reflects ongoing endeavors to streamline content processing for AI model training.

Limitless Eyes the Future of Personalized AI: The rebranding of Rewind to Limitless introduces a wearable with personalized AI capabilities, sparking discussions around local processing and data privacy. This highlights a peak interest in the security implications of new AI-powered wearable technologies.

Navigating Vector Space in Semantic Search: Insightful discussions on the complexities of semantic search, including vector size, memory usage, and retrieval performance, culminated in a proposed hackathon to delve deeper into embedding models. The discourse reflects a keen focus on optimizing speed, efficiency, and performance in AI-powered search applications.

Modular (Mojo 🔥) Discord

Earn Your Mojo Stripes: Engage with the Mojo community by contributing to the modularml/mojo repo or creating cool open source projects to attain the prestigious Mojician role. Those with merged PRs can DM Jack to match their GitHub contributions with Discord identities.

Mojo's Python Aspirations: The community is buzzing with the anticipation of Mojo extending full support to Python libraries, aiming to include Python packages with C extensions. Meanwhile, efforts to enhance Mojo's Reference usability are in motion, with Chris Lattner hinting at a proposal that could simplify mutability management.

Code Generation and Error Handling Forefront: GPU code generation tactics and the potential integration of an "extensions" feature resembling Swift's implementation for superior error handling have sparked technical debates among members, indicating a future direction for Mojo's development.

Community Code Collaborations Spike: A flurry of activity surrounds the llm.mojo project, where performance boost techniques such as vectorization and parallelization might benefit from collective wisdom, including maintaining synchronized C and Mojo code bases within a single repository.

Nightly News on Mojo Updates: The Mojo team addresses standard library discrepancies in package naming with an upcoming release fix. Additionally, the idea of updating StaticTuple to Array and supporting AnyType garnered interest, and a call for Unicode support contributions to the standard library arose, along with discussions on proper item assignment syntax.

Twitter Dispatch for Modular Updates: Keep an eye on Modular's Twitter for the latest flurry of tweets covering updates and announcements, including a series of six recent tweets from the organization that shed light on their ongoing initiatives.

CUDA MODE Discord

P2P Gets a Speed Boost: Tinygrad enhances P2P support on NVIDIA 4090 GPUs, reaching 14.7 GB/s AllReduce performance after modifying NVIDIA's driver, as detailed here, while PyTorch tackles namespace build complexities with the nightly build showing slower performance versus torch.matmul.

Massive Row Sorting Challenge Awaits CUDA Competitors: tspeterkim_89106 throws down the gauntlet with a One Billion Row Challenge in CUDA implementation that impressively runs in 16.8 seconds on a V100 and invites others to beat a 6-second record on a 4090 GPU.

New Territories in Performance Optimization: CUDA discussions orbit around the merits of running independent matmuls in separate CUDA streams, leveraging stable_fast over torch.compile, and the pursuit of high-efficiency low-precision computations demonstrated by stable_fast challenging int8 quant tensorrt speeds.

Recording and Sharing CUDA Expertise: CUDA MODE is recruiting volunteers to record and share content through YouTube, where lecturing material is also maintained on GitHub, and highlighting potential shifts to live streaming to manage growing member scales.

HQQ and LLM.c: Striding Towards Efficiency: Updates in HQQ implementation on gpt-fast push token generation speeds with torchao int4 kernel support, while LLM.c confronts CUDA softmax integration challenges, explores online softmax algorithm efficiencies, and juggles the dual goals of peak performance and educational clarity.

Cohere Discord

Connector Confusion Cleared: A member resolved an issue with Cohere's connector API, learning that connector URLs must end with /search to avoid errors.

Fine-Tuning Finesse: Cohere's base models are available for fine-tuning through their dashboard, as confirmed in a dashboard link, with options expanded to Amazon Bedrock and AWS.

New Cohere Tools on the Horizon: Updates were shared on new Cohere capabilities, specifically named Coral for chatbot interfaces, and upcoming releases for AWS toolkits for connector implementations.

Model Performance Discussed: Dialogue around Cohere models like command-r-plus touched on their performance on different hardware, including Nvidia's latest graphics cards and TPUs.

Learning Avenues for AI Newbies: New community members seeking educational resources were directed to free offerings like LLM University and provided with a link to Cohere's educational documentation.

Command-R Rocks the Core: Command-R received accolades for being a newly integrated core module by Cohere, highlighting its significance.

Quant and AI Converge: An invitation for beta testing of Quant Based Finance was posted, appealing to those interested in financial analysis powered by AI, with a link here.

Rubiks.AI Rolls Out: An invite for beta testing of Rubiks.AI, a new advanced research assistant and search engine, was shared, offering early access to models like Claude 3 Opus and GPT-4 Turbo, available at Rubiks.AI.

HuggingFace Discord

A Bundle of Multi-Model Know-how: Engineers exchanged tips on deploying multiple A1111 models on a GPU, highlighting resource allocation for parallel model runs. Discussions in NLP explored lightweight embedding options such as all-MiniLM-L6-v2, with paraphrase-multilingual-MiniLM-L12-v2 suggested for academic purposes.

Cognitive Collision: AI Models Straddle Realities: The cool-finds channel shared links to PyTorch and Blender integration for real-time data pipelines, while a Medium post introduced LlamaIndex's document retrieval enhancements. The Grounding DINO model for zero-shot object detection and how it utilizes this in Transformers trended in computer-vision.

Community-Sourced AI Timeline & Mental Models: Project RicercaMente, aiming to map data science evolution through key papers, was touted in cool-finds and NLP, inviting collaboration from the community. Meanwhile, a Counterfactual Inception method was presented to address hallucinations in AI responses, detailed in a paper on arXiv and a related GitHub project.

Training Trials & Tribulations: A U-Net training plateau after 11 epochs led a user to consider Deep Image Prior for image cleaning tasks, shared in computer-vision. In diffusion-discussions, there was an exploration of multimodal embeddings and a clarification about an overstretched token limit warning in a Gradio chatbot for image generation.

Crossing Streams: Events & Education: Upcoming LLM Reading Group sessions focusing on groundbreaking research, including OpenAI's CLIP model and Zero-Shot Classification, were advertised in today-im-learning and reading-group. Resources for those starting out in NLP were also suggested, featuring beginner's guides and transformer overview videos.

LAION Discord

Scam Notice: No LAION NFTs Exist: There have been repeated warnings about a scam involving a fraudulent Twitter account claiming LAION is offering NFTs, but the community has confirmed LAION is solely focused on free, open-source AI resources and does not engage in selling anything.

When to Guide the Diffusion: An arXiv paper introduced findings that the best image results from diffusion models occur when guidance is applied at particular noise levels, emphasizing the middle stages of generation while avoiding early and late phases.

Innovations from AI Audio to Ethics Discussions: Discussions ranged from the introduction of new AI models, like Hugging Face's Parler TTS for sound generation, to debates over the proper use of 'ethical datasets' and the implications of such political language in AI research.

Stable Diffusion 3's Dilemma: Community insight suggested Stable Diffusion 3 faces a risk of quality decline due to its rigorous prompt censorship. There is anticipation for further refinement to address this possible issue shared particularly on Reddit.

Troubleshooting Diffusion Models: A GitHub repository was shared by a member who faces a training issue with their diffusion model, which is outputting random noise and solid black during inference, despite attempts to adjust regularization and learning rate.

LangChain AI Discord

Be Cautious of Spam: Several channels have reported spam messages containing links to adult content, falsely advertising TEEN/ONLYFANS PORN with a potential phishing risk. Members are advised to avoid engaging with suspicious links or content.

RAG Operations Demand Precision: Users are encountering issues with document splitting during Retrieval-Augmented Generation (RAG) operations on legal contracts, where section contents are mistakenly linked to preceding sections, compromising retrieval accuracy.

LangChain Gets Parallel: Utilizing LangChain's RunnableParallel class allows for the parallel execution of tasks, enhancing efficiency in LangGraph operations—an approach worth considering for those optimizing for performance.

Emerging AI Tools and Techniques: A variety of resources, tutorials, and projects have been shared, including Meeting Reporter, how-to guides for RAG, and Personalized recommendation systems, to equip AI professionals with cutting-edge knowledge and practical solutions.

Watch and Learn: A series of YouTube tutorials has been highlighted, focusing on the implementation of chat agents using Vertex AI Agent Builder and integrating them with communication platforms like Slack, valuable for those interested in AI-infused app development.

OpenAccess AI Collective (axolotl) Discord

OpenAccess AI Goes Open-Source: NVIDIA Linux GPU support with P2P gets a boost from open-gpu-kernel-modules on GitHub, offering a tool for enhanced GPU functionality.

Fireworks AI Ignites with Instruct MoE: Promising results from Fireworks AI's Mixtral 8x22b Instruct OH, previewed here, although facing a hiccup with DeepSpeed zero 3 which was addressed by pulling updates from DeepSpeed's main branch.

DeepSpeed's Contributions Clarified: While it doesn't accelerate model training, deepspeed zero 3 shines in training larger models, with a successful workaround integrating updates from DeepSpeed's official GitHub repository for MoE models.

A Harmonious Relationship with AI: Advances in AI-music creation gain spotlight with a tune crafted by AI, available for a listen at udio.com.

Tools for Advanced Model Training: Engaging discussions revolve around model merging, the use of LISA and DeepSpeed, and their effects on model performance. This tool was cited for extracting Mixtral model experts, alongside hardware prerequisites.

Dynamic Weight Unfreezing: Conversations emerge around dynamic weight unfreezing tactics for GPU-constrained users, alongside an unofficial GitHub implementation for Mixture-of-Depths, accessible here.

RTX 4090 GPUs and P2P: Success in enabling P2P memory access with tinygrad on RTX 4090s lead to discussions about removing barriers to P2P usage and community eagerness regarding this achievement.

Combatting Model Repetitiveness: Persistent shortcomings with a model producing repetitive outputs guide members towards exploring diverse datasets and finetuning methods. Mergekit emerges as a go-to for model surgery with configuration insights gleaned from WestLake-10.7B-v2.

Fine-Tuning and Prompt Surgery: One delves into Axolotl's finetuning intricacies, troubleshooting IndexError and prompt formatting woes with guidance from the Axolotl GitHub repo and successful configuration adjustments.

Mistral V2 Outshines: Exceptional first-epoch results with Mistral v2 instruct overshadow others, demonstrating aptitude in diverse tasks including metadata extraction, outperforming models like qwen with new automation capabilities.

DeepSpeed Docker Deep Dive: Distributed training via DeepSpeed necessitates a custom Docker build and streamlined SSH keys for passwordless node intercommunication. Launching containers with the correct environment variables is essential, as detailed in this Phorm AI Code Search) link.

DeepSpeed and 🤗 Accelerate Collaboration: DeepSpeed integrates smoothly with 🤗 Accelerate without overriding custom learning rate schedulers, with the push_to_hub method complementing the ease of Hugging Face model hub repository creation.

OpenInterpreter Discord

Malware Scare and Command Line Riddles: Engineers have raised an issue about Avast antivirus detections and confusion stemming from the ngrok/ngrok/ngrok command line in OpenInterpreter; updates to the documentation were suggested to clear user concerns.

Tiktoken Gets a PR For Building Progress: A GitHub pull request aiming to resolve a build error by updating the tiktoken version for OpenInterpreter suggests improvements are on the way; review the changes here.

Persistence Puzzle: Emergence of Assistants API: The integration of the Assistants API for data persistence has been discussed, with community members creating Python assistant modules for better session management; advice on node operations implementation is being sought.

OS Mode Odyssey on Ubuntu: The Open Interpreter's OS Mode on Ubuntu has generated troubleshooting conversations, with a focus on downgrading to Python 3.10 for platform compatibility and configuring accessibility settings.

Customize It Yourself: O1's User-Driven Innovation: The O1 community showcases their creativity through personal modifications and enhancements such as improved batteries and custom cases; a custom GPT model trained on Open Interpreter's documentation is lending a hand to ChatGPT Plus users.

LlamaIndex Discord

LlamaIndex Migrates PandasQueryEngine: The latest LlamaIndex update (v0.10.29) relocated PandasQueryEngine to llama-index-experimental, necessitating import path adjustments and providing error messages for guidance on the transition.

AI Application Generator Garners Attention: In partnership with T-Systems and Marcus Schiesser, LlamaIndex launched create-tsi, a command line tool to generate GDPR-compliant AI applications, stirring the community's interest with a promotional tweet.

Redefining Database and Retrieval Strategies: Community exchanges delved into ideal vector databases for similarity searches, contrasting Qdrant, pg vector, and Cassandra, along with discussions on leveraging hybrid search for multimodal data retrieval, referencing the LlamaIndex vector stores guide.

Tutorials and Techniques to Enhance AI Reasoning: Articles and a tutorial shared showcased methods to fortify document retrieval with memory in Colbert-based agents and integrating small knowledge graphs to boost RAG systems, as highlighted by WhyHow.AI.

Community Commendations and Technical Support: Community appreciation for articles on advancing AI reasoning was voiced, while LlamaIndex users tackled technical woes and encouraged proactive contribution to documentation, reinforcing the platform's dedication to knowledge sharing and support.

tinygrad (George Hotz) Discord

- Stacking 4090s Slices TinyBox Expenses: Enthusiasm bubbled over the RTX 4090 driver patch reaching the Hacker News top story, signaling its breakthrough in GPU efficiency. When stacked, RTX 4090s offer a substantial reduction in costs for running large language models (LLMs), with numbers crunched to show a 36.04% cost decrease compared to the Team Red Tinybox, and a striking 61.624% cut versus the Team Green Tinybox.

- Tinygrad Development Heats Up: George Hotz, along with the Tinygrad community, is pushing forward in enhancing the tinygrad documentation, specifically targeting developer experience and clarity in error messaging. A consensus was reached to increase the code line limit to 7500 lines to support new backends, addressing a flaky mnist test and non-determinism in the scheduler.

- Kernel Fusion and Graph Splitting in Tinygrad: The intricacies of Tinygrad's kernel generation got dissected with insights revealing that graph splits during fusion can arise from reasons such as broadcasting needs, detailed in the

create_schedule_with_varsfunction. Users learned that Tinygrad is capable of running heavyweight models like Stable Diffusion and Llama, though not directly used for their training.

- Colab and Contribution Stories: AI Engineers swapped tips on setting up and leveraging Tinygrad on Google Colab, with the recommended installation command being

pip install git+https://github.com/tinygrad/tinygrad. Challenges with tensor padding and transformer models surfaced, with a workaround of usingNonefor padding cited as a practical solution.

- Encouraging Contributions and Documentation Deep Dives: To assist rookies, links to Tinygrad documentation and personal study notes were distributed, demystifying the engine’s gears. Questions about contributing sans CUDA support were welcomed, highlighting the importance of prior immersion in the Tinygrad ecosystem before chipping in.

Relevant links for further exploration and understanding included: - Google Colab Setup, - Tinygrad Notes on ScheduleItem, - Tinygrad Documentation, - Tinygrad CodeGen Notes, - Tinygrad GitHub Repository.

Interconnects (Nathan Lambert) Discord

Hugging Face Collections Streamline Artifact Organization: Hugging Face collections have been introduced to aggregate artifacts from a blog post on open models and datasets. The collections offer ease of re-access and come with an API as seen in the Hugging Face documentation.

The "Incremental" Update Debate and Open Data Advocacy: Community members are divided on the importance of the transition from Claude 2 to Claude 3, and there's a push to remember the value of open data initiatives that may be getting overshadowed. Meanwhile, AI release announcements appear in force, with Pile-T5 and WizardLM 2 amongst the front runners.

Synthesis of Machine Learning Discourse: Conversations touched on obligations with ACL revision uploads, the benefits of "big models," and distinctions between critic vs reward models—with Nato's RewardBench project being a point of focus. A tweet from @andre_t_martins provided clarity on the ACL revision uploads process.

Illuminating Papers and Research: Key papers highlighted include "CodecLM: Aligning Language Models with Tailored Synthetic Data" and "LLaMA: Open and Efficient Foundation Language Models" with Hugging Face identifier 2302.13971. Synthetic data's role and learning from stronger models were key takeaways from the discussions.

Graphs Garner Approval, and Patience Is Proposed for Bots: In the realm of newsletters, graphs won praise for their clarity, with a commitment to future enhancement and integration into a Python library. A lone mention was made of an experimental bot that might benefit from patience rather than premature intervention.

LLM Perf Enthusiasts AI Discord

Haiku's Speed Hiccup: Engineers discussed Haiku's slower total response times in contrast to its throughput, suggesting Bedrock's potential as an alternative despite speed concerns there as well.

Claude's RP Constraints: Concerns arose as Claude refuses to engage with roleplay prompts such as being a warrior maid or sending fictional spam mail, even after using various prompting techniques.

Jailbreak Junction: Amid discussions, a tweet by @elder_plinius was shared about a universal jailbreak for Claude 3 to enable edgier content which bypasses the strict content filters like those in Gemini 1.5 Pro. The community appears to be evaluating its implications; the tweet is available here.

Code Competence Claims: A newer version of an unnamed tool is lauded for its enhanced coding abilities and speed, with a member considering to reactivate their ChatGPT Plus subscription to further test these upgrades.

Claude's Contextual Clout: Despite improvements in other tools, Claude maintains its distinction with long context window code tasks, implying limitations in ChatGPT's context window size handling.

DiscoResearch Discord

Mixtral Mastery or Myth?: Community discussions touched on uncertainties in the training and finetuning efficiency of Mixtral MoE models, with some suspecting undisclosed techniques behind its performance. Interest was shown in weekend ventures of finetuning with en-de instruct data, and Envoid's "fish" model was mentioned as a curious case for RP/ERP applications in Mixtral, albeit untested due to hardware limitations.

Llama-Tokenizer Tinkering Tutorial Tips: Efforts to optimize a custom Llama-tokenizer for small hardware utilization led to shared resources such as the convert_slow_tokenizer.py from Hugging Face and the convert.py script with --vocab-only option from llama.cpp GitHub. Additionally, there's a community call for copyright-free EU text and multimodal data for training large open multimodal models.

Template Tendencies of Translators: The occiglot/7b-de-en-instruct model showcased template sensitivity in evaluations, with performance variations on German RAG tasks due to template correctness as indicated by Hugging Face's correct template usage.

Training Parables from StableLM and MiniCPM: Insights on pretraining methods were highlighted, referencing StableLM's use of ReStruct dataset inspired by this ExpressAI GitHub repo, and MiniCPM's preference for mixing data like OpenOrca and EvolInstruct during cooldown phases detailed in Chapter 5 of their study.

Mozilla AI Discord

Burnai Ignites Interest: Rust enthusiasts in the community are pointing out the underutilized potential of the Burnai project for optimal inference, sparking questions about Mozilla's lack of engagement despite Rust being their creation.

Llamafile Secures Mcaffee's Trust: The llamafile 0.7 binary has successfully made it to Mcaffee's whitelist, marking a win for the project's security reputation.

New Collaborator Energizes Discussions: A new participant has joined the fold, eager to dive into collaborations and knowledge-sharing, signaling a fresh perspective on the horizon.

Curiosity Peaks for Vulkan and tinyblas: Intrigue is brewing over potential Vulkan compatibility in the anticipated v0.8 release and the benefits of upstreaming tinyblas for ROCm applications, indicating a concerted focus on performance enhancements.

Help Wanted for Model Packaging: Demand for guidance on packaging custom models into llamafile has led to community exchanges, giving rise to contributions like a GitHub Pull Request on container publishing.

Alignment Lab AI Discord

- Spam Slaying Bot Suggestion: A recommendation was made to integrate the wick bot into the server to automatically curb spam issues.

- DMs Desired for Debugging: One user sought assistance with their coding problem, signaling a desire for direct collaboration through DM, and used their request to verify their humanity against possible bot suspicion.

- Rethinking Discord Invites: The suggestion arose that Discord invites should be banned to prevent complications within the server, although no consensus or decision was reported.

- Project Pulse Check: A user inquired about the status of a project, questioning its activity with a simple "Is the project still alive?" without additional context.

AI21 Labs (Jamba) Discord

- Code-Jamba-v0.1 Shows Multi-Lingual Mastery: The model Code-Jamba-v0.1, trained on 2 x H100 GPUs for 162 hours, excels in creating code for Python, Java, and Rust, leveraging datasets like Code-290k-ShareGPT and Code-Feedback.

- Call for Code-Jamba-v0.1 Evaluation Data: Members highlighted the need for performance benchmarks for Code-Jamba-v0.1, reflecting on the utility of AI21 Labs' dataset for enhancing other Large Language Models.

- Eager Ears for Jamba API Announcement: A member's question about an upcoming Jamba API hinted at a potential integration with Fireworks AI, pending discussions with their leadership.

Datasette - LLM (@SimonW) Discord

- AI's Password Persuasion: PasswordGPT emerges as an intriguing game that challenges players to persuade an AI to disclose a password, testing natural language generation's limits in simulated social engineering.

- Annotation Artistry Versus Raw LLM Analysis: There's a split in community preference over whether to meticulously annotate datasets prior to modeling for better comprehension or to lean on the raw predictive power of large language models (LLMs).

- Historical Data Deciphering Pre-LLM Era: A shared effort was recognized where a team used pre-LLM transformer models for the meticulous extraction of records from historical documents, displaying cross-era AI application.

- Enhanced User Engagement Through Open Prompts: The consensus leans towards favoring open-prompt AI demos which could lead to deeper user engagement and a heightened ability to "outsmart" the AI, by revealing the underlying prompts.

Skunkworks AI Discord

- Scaling the AI Frontier: An upcoming meetup titled "Unlock the Secrets to Scaling Your Gen AI App to Production" was announced, presenting a significant challenge for scaling Gen AI apps. Featured panelists from Portkey, Noetica AI, and LastMile AI will share insights, with the registration link available here.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (1129 messages🔥🔥🔥):

- Stable Diffusion Discussions Center Around SD3 and Efficiency: Conversations focus heavily on the implications and capabilities of the upcoming Stable Diffusion 3 (SD3), with members eagerly anticipating its release and functionalities. The example video provided showcases perceived improvements in quality, while discussions also delve into the effectiveness of SD Forge for less powerful GPUs and the upcoming release schedule for SD3.

- Pixart-Sigma, ComfyUI, and T5 Conditioning: Users are exploring Pixart-Sigma with T5 conditioning in the ComfyUI environment, discussing VRAM requirements and performance, with mentions that in 8-bit compression mode, T5 doesn't exceed 10.3GB VRAM. The prompt adherence, generation speed, and samplers such as 'res_momentumized' for this model are topics of interest, with shared insights to optimize generation quality.

- ControlNet, Lora, and Outpainting Queries: The community is exchanging advice on various AI tools including ControlNet, Lora, and outpainting features for project-specific needs such as filling backgrounds behind objects. Inquiries about the possibility of keeping generated image colors consistent when using ControlNet have been raised.

- CPU vs. GPU Performance Concerns: Members discuss the slower performance of Stable Diffusion (SD) when running on CPU, with image generation taking significantly longer compared to using a GPU. Users share experiences and suggestions including using the

--lowvramflag in SD to reduce GPU memory usage.

- Community Projects and AI Features: An artist announces the development of a painting app incorporating AI features and looks for feedback and tutorial creation support from the community. The app aims to integrate essential digital art tools with unique AI-driven functionalities, and the project overview is available here.

- Clip front: no description found

- Why Not Both Take Both GIF - Why Not Both Why Not Take Both - Discover & Share GIFs: Click to view the GIF

- Nervous Courage GIF - Nervous Courage Courage the cowardly dog - Discover & Share GIFs: Click to view the GIF

- Perturbed-Attention-Guidance Test | Civitai: A post by rMada. Tagged with . PAG (Perturbed-Attention Guidance): https://ku-cvlab.github.io/Perturbed-Attention-Guidance/ PROM...

- TikTok - Make Your Day: no description found

- SUBPAC - The New Way to Experience Sound: Feel it.™: SUBPAC lets you feel the bass by immersing the body in low-frequency, high-fidelity physical sound, silent on the outside. Ideal for music, gaming, & VR.

- Reddit - Dive into anything: no description found

- Stability AI: Activating humanity's potential through generative AI. Open models in every modality, for everyone, everywhere.

- Server Is For Js Javascript GIF - Server Is For Js Javascript Js - Discover & Share GIFs: Click to view the GIF

- SUPIR: New SOTA Open Source Image Upscaler & Enhancer Model Better Than Magnific & Topaz AI Tutorial: With V8, NOW WORKS on 12 GB GPUs as well with Juggernaut-XL-v9 base model. In this tutorial video, I introduce SUPIR (Scaling-UP Image Restoration), a state-...

- Home v2: Transform your projects with our AI image generator. Generate high-quality, AI generated images with unparalleled speed and style to elevate your creative vision

- SUPIR: New SOTA Open Source Image Upscaler & Enhancer Model Better Than Magnific & Topaz AI Tutorial: With V8, NOW WORKS on 12 GB GPUs as well with Juggernaut-XL-v9 base model. In this tutorial video, I introduce SUPIR (Scaling-UP Image Restoration), a state-...

- Reddit - Dive into anything: no description found

- Is it possible to use Flan-T5 · Issue #20 · city96/ComfyUI_ExtraModels: Would it be possible to use encoder only version of Flan-T5 with Pixart-Sigma? This one: https://huggingface.co/Kijai/flan-t5-xl-encoder-only-bf16/tree/main

- GitHub - QuintessentialForms/ParrotLUX: Pen-Tablet Painting App for Open-Source AI: Pen-Tablet Painting App for Open-Source AI. Contribute to QuintessentialForms/ParrotLUX development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- GitHub - KU-CVLAB/Perturbed-Attention-Guidance: Official implementation of "Perturbed-Attention Guidance": Official implementation of "Perturbed-Attention Guidance" - KU-CVLAB/Perturbed-Attention-Guidance

- Warp Drives: New Simulations: Learn more from a science course on Brilliant! First 30 days are free and 20% off the annual premium subscription when you use our link ➜ https://brilliant....

- Reddit - Dive into anything: no description found

- GitHub - PKU-YuanGroup/MagicTime: MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators: MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators - PKU-YuanGroup/MagicTime

- Dungeons & Dancemusic: 🧙🏻♂️🧙🏻♂️🧙🏻♂️Welcome, young beatmaker! Racso, the dancefloor sorceror, will teach you the six essential attributes of a powerful dancefloor beat. Str...

- GitHub - city96/ComfyUI_ExtraModels: Support for miscellaneous image models. Currently supports: DiT, PixArt, T5 and a few custom VAEs: Support for miscellaneous image models. Currently supports: DiT, PixArt, T5 and a few custom VAEs - city96/ComfyUI_ExtraModels

- Reddit - Dive into anything: no description found

- GitHub - lllyasviel/stable-diffusion-webui-forge: Contribute to lllyasviel/stable-diffusion-webui-forge development by creating an account on GitHub.

- Socks Segmentation - ADetailer - (socks_seg_s_yolov8) - v1.0 | Stable Diffusion Other | Civitai: A Yolov8 detection model that segments socks in images. The model can be used as an ADetailer model (for Automatic1111 / Stable Diffusion use), or ...

- MohamedRashad/midjourney-detailed-prompts · Datasets at Hugging Face: no description found

Perplexity AI ▷ #general (879 messages🔥🔥🔥):

- Context Window and RAG Discussions: Users discussed how the context window works, noting how Claude Opus successfully follows instructions, while GPT-4 may lose context after several follow-ups. RAG is said to retrieve relevant parts of the document only, resulting in a much larger context for file uploads.

- Switching Between Models and Perplexity Features: Some users are considering whether to continue using Perplexity Pro or You.com, weighing the pros and cons, such as RAG functionality, long context handling, and code interpretation features between the platforms.

- Issues with Generating Code: Users reported problems with models not outputting entire code snippets when prompted – mentioning Claude Opus as more reliable for generating complete code compared to GPT-4, which tends to be descriptive rather than executable.

- AI Model Evaluations: Users discussed the effectiveness of various AI models for different tasks, with opinions that Claude Opus is better for prose writing and GPT-4 for logical reasoning. They emphasized trial and error to find the best-suited model for specific needs.

- Miscellaneous Queries and Concerns: Users asked about getting assistive responses regarding account issues and unwanted charges, mentioned technical problems with models like hallucinations and incorrect searches, brought up privacy concerns with new AI services, and expressed interest in the possibility of new models like Reka and features like video inputs in relation to costs and context limits.

- Grok-1.5 Vision Preview: no description found

- First Official Emulator "iGBA" Now On Apple App Store To Download: A new Game Boy emulator has officially landed on the Apple App Store, allowing retro gamers to play their favourite games of the past.

- ‘Como si Wikipedia y ChatGPT tuvieran un hijo’: así es la startup Buzzy AI, ‘madre’ de Perplexity, el buscador que acabaría con Google: Perplexity, un motor de búsqueda impulsado por inteligencia artificial, cuenta con el respaldo de personalidades tecnológicas como Jeff Bezos y cuenta con multimillonarios como el director ejecutivo d...

- OpenAI planea lanzar un buscador para competir con Google: La empresa dirigida por Sam Altman estaría desarrollando un buscador que usase la IA para realizar búsquedas más precisas que uno convencional.

- Rate Limits: no description found

- Take My Money Fry GIF - Take My Money Fry Futurama - Discover & Share GIFs: Click to view the GIF

- Monica - Your ChatGPT AI Assistant Chrome Extension: no description found

- TikTok - Make Your Day: no description found

- Cognosys: Meet the AI that simplifies tasks and speeds up your workflow. Get things done faster and smarter, without the fuss

- TikTok - Make Your Day: no description found

Perplexity AI ▷ #sharing (23 messages🔥):

- Rendezvous with US Policies: Members are investigating US policies as someone shared a Perplexity search link that leads to related inquiries.

- Exploring the Virtue of Honesty: A link to a Perplexity AI search was shared, suggesting a discourse on the importance of honesty.

- Understanding Durable Functions: Participants are delving into the world of durable functions through a shared search query on Perplexity AI.

- Meta Mimics Perplexity for WhatsApp AI: In an article, Meta's AI on WhatsApp, compared to Perplexity AI, has been linked for insights into the expanding use of AI in messaging platforms.

- Scratchpad-Thinking and AutoExpert Unite: There's talk on combining scratchpad-think with autoexpert functionalities, highlighted by a Perplexity search.

Link mentioned: Meta Releases AI on WhatsApp, Looks Like Perplexity AI: Meta has quietly released its AI-powered chatbot on WhatsApp, Instagram, and Messenger in India, and various parts of Africa.

Perplexity AI ▷ #pplx-api (26 messages🔥):

- Perplexity API Roadmap Revealed: Perplexity AI's roadmap, updated 6 days ago, outlines features planned for release in June such as enforcing JSON grammar, N>1 sampling, a new Databricks model, a Model Info endpoint, a status page, and multilingual support. The documentation is available on their feature roadmap page.

- Taking the Temperature of Default Settings: When querying the Perplexity API without specifying temperature, the default value is considered to be 0.2 for the 'online' models, which might be unexpectedly low for other models. Users are recommended to specify the temperature in their API requests to avoid ambiguity.

- Seeking Clarity on Citations Feature: Several users inquired about how and when citations will be implemented in Perplexity API, engaging in discussions and sharing links from the Perplexity documentation regarding the application process for features.

- Circular References in Response to API Questions: A user seeking help about not receiving URLs in API responses for news stories was directed to a Discord channel post for information. This represents an instance of users within the community attempting to assist one another.

- Request for API Rate Limit Increase: A user inquired about getting a rate limit increase for the API and was guided to fill out a form available on Perplexity's website, a process appearing to involve justifying the business need for increased capacity.

- Feature Roadmap: no description found

- Get Sources and Related Information when using API: no description found

- Data from perplexity web and perplexity api: no description found

- API gives very low quality answers?: no description found

- Discover Typeform, where forms = fun: Create a beautiful, interactive form in minutes with no code. Get started for free.

Unsloth AI (Daniel Han) ▷ #general (431 messages🔥🔥🔥):

<ul>

<li><strong>Unsloth Multi-GPU Update Query</strong>: There were inquiries regarding updates on Multi-GPU support for Unsloth AI. Suggestions to look into Llama-Factory with Unsloth integration were mentioned.</li>

<li><strong>Geohot Adds P2P to 4090s</strong>: A significant update where "geohot" has hacked P2P back into NVIDIA 4090s was shared, along with a relevant <a href="https://github.com/tinygrad/open-gpu-kernel-modules">GitHub link</a>.</li>

<li><strong>Upcoming Unsloth AI Demo and Q&A Event Alert</strong>: An announcement for a live demo of Unsloth AI with a Q&A session by Analytics Vidhya was shared. Those interested were directed to join via a posted <a href="https://us06web.zoom.us/webinar/register/WN_-uq-XlPzTt65z23oj45leQ">Zoom link</a>.</li>

<li><strong>Mistral Model Fusion Tactics Discussed</strong>: There was a discussion about the practicality of merging MOE experts into a single model, with skepticism regarding output quality. Some considered fine-tuning Mistral for narrow tasks and removing lesser-used experts as a potential compression method.</li>

<li><strong>Hugging Face CEO Follows Unsloth on Platform X</strong>: Clement Delangue, co-founder, and CEO of Hugging Face, now follows Unsloth on an unnamed platform, sparking hopes for future collaborations between the two AI communities.</li>

</ul>

- Physics of Language Models: Part 3.3, Knowledge Capacity Scaling Laws: Scaling laws describe the relationship between the size of language models and their capabilities. Unlike prior studies that evaluate a model's capability via loss or benchmarks, we estimate the n...

- Video Conferencing, Web Conferencing, Webinars, Screen Sharing: Zoom is the leader in modern enterprise video communications, with an easy, reliable cloud platform for video and audio conferencing, chat, and webinars across mobile, desktop, and room systems. Zoom ...

- LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language Models: Quantization is an indispensable technique for serving Large Language Models (LLMs) and has recently found its way into LoRA fine-tuning. In this work we focus on the scenario where quantization and L...

- Vezora/Mistral-22B-v0.1 · Hugging Face: no description found

- AI-Sweden-Models (AI Sweden Model Hub): no description found

- Viking - a LumiOpen Collection: no description found

- Finetuning LLMs with LoRA and QLoRA: Insights from Hundreds of Experiments - Lightning AI: LoRA is one of the most widely used, parameter-efficient finetuning techniques for training custom LLMs. From saving memory with QLoRA to selecting the optimal LoRA settings, this article provides pra...

- NVIDIA Collective Communications Library (NCCL): no description found

- GitHub - tinygrad/open-gpu-kernel-modules: NVIDIA Linux open GPU with P2P support: NVIDIA Linux open GPU with P2P support. Contribute to tinygrad/open-gpu-kernel-modules development by creating an account on GitHub.

- GitHub - astramind-ai/Mixture-of-depths: Unofficial implementation for the paper "Mixture-of-Depths: Dynamically allocating compute in transformer-based language models": Unofficial implementation for the paper "Mixture-of-Depths: Dynamically allocating compute in transformer-based language models" - astramind-ai/Mixture-of-depths

- [BUG] LISA: same loss regardless of lisa_activated_layers · Issue #726 · OptimalScale/LMFlow: Describe the bug I think there might be something wrong with the current LISA implementation. There is no difference in training loss, no matter how many layers are active. Not using LMFlow but HF ...

Unsloth AI (Daniel Han) ▷ #random (38 messages🔥):

- Exploring PhD Life in AI: A first-year PhD student in AI discusses the stressful yet fun experience of pursuing a doctorate, including the pressure of producing results and self-doubt. In one message, they mention their topic as creating an instruction-following LLM in their native language, noting it's a complex project beyond just translation and fine-tuning.

- Potential Gold in AI Competitions: The conversation shifts towards a Kaggle AI Mathematical Olympiad, offering a $10M prize for an LLM that could earn a gold medal at the International Math Olympiad. The PhD student also acknowledges the idea of getting their juniors to work on the problem.

- The Beal Prize Discussed: The chat includes a link to the American Mathematical Society detailing the rules for the Beal Prize, mentioning the condition for a proof or a counterexample to the Beal Conjecture and indicating the prize sum of $1,000,000.

- Universities' Priorities Debated: A member points out that universities focus on publishing papers and creating an "impact" rather than prize money when it comes to research topics, despite discussing significant monetary awards in competitions.

- AI and the Nature of Language: There's philosophical musings about thinking and language being self-referencing systems. It is suggested that thoughts self-generate and language can only be defined in terms of more language.

- AI Tech Showcased on Instagram: A brief interlude reveals engagement with a shared Instagram video, praised for its content and particularly clean code, though not all were fully able to grasp it.

- AI Mathematical Olympiad - Progress Prize 1 | Kaggle: no description found

- Julia | Math & Programming on Instagram: "When you're a grad student #gradschool #phd #university #stem #phdstudent #machinelearning #ml #ai": 6,197 likes, 139 comments - juliaprogramming.jlApril 8, 2024 on : "When you're a grad student #gradschool #phd #university #stem #phdstudent #machinelearning #ml #ai"

- Beal Prize Rules and Procedures: Advancing research. Creating connections.

- Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #help (313 messages🔥🔥):

- Efficient VRAM Usage and Finetuning Strategies: Participants discussed methods for finetuning large language models like Mistral efficiently, with concerns about vRAM usage when training with long examples. There was advice to use the 30% less VRAM update from Unsloth, utilize accumulation steps to counteract large batch size impacts, and consider strategies like finetuning on short examples before moving to longer ones.

- Choosing a Base Model for New Languages: Members sought advice on finetuning on a low-resource language, with users sharing experiences on models like Gemma and discussing strategies like mixing datasets and continuing pretraining.

- Tips and Tricks for Uninterrupted Workflow: Users discussed troubleshooting issues with unsloth installation and shared Kaggle's and starsupernova's installation instructions to overcome problems such as the CUDA not linked error, and dependency issues with torch versions, flash-attn, and xformers.

- Adapter Merging for Production: There was a conversation about the proper method for merging adapters from QLoRA and whether to use naive merging or a more complex process involving dequantization before merging. Starsupernova provides wiki links on saving models to 16bit for merging to vLLM or GGUF.

- Dialog Format Conversion and Custom Training: A user shared a script to convert a plain text conversation dataset into ShareGPT format in preparation to emulate personal chatting style, while recommending the use of Unsloth notebooks for custom training after conversion.

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- ura-hcmut/ura-llama-7b · Hugging Face: no description found

- ghost-x/ghost-7b-v0.9.0 · Hugging Face: no description found

- Supervised Fine-tuning Trainer: no description found

- Home: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub: Let’s build from here: GitHub is where over 100 million developers shape the future of software, together. Contribute to the open source community, manage your Git repositories, review code like a pro, track bugs and fea...

- Home: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- CodeGenerationMoE/code/finetune.ipynb at main · akameswa/CodeGenerationMoE: Mixture of Expert Model for Code Generation. Contribute to akameswa/CodeGenerationMoE development by creating an account on GitHub.

- no title found: no description found

- Type error when importing datasets on Kaggle · Issue #6753 · huggingface/datasets: Describe the bug When trying to run import datasets print(datasets.__version__) It generates the following error TypeError: expected string or bytes-like object It looks like It cannot find the val...

- GitHub - facebookresearch/xformers: Hackable and optimized Transformers building blocks, supporting a composable construction.: Hackable and optimized Transformers building blocks, supporting a composable construction. - facebookresearch/xformers

- no title found: no description found

- GitHub - unslothai/unsloth: 2-5X faster 80% less memory QLoRA & LoRA finetuning: 2-5X faster 80% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- unsloth (Unsloth): no description found

Unsloth AI (Daniel Han) ▷ #showcase (54 messages🔥):

- Low-Resource Language Enrichment Techniques: Members discussed ways to enrich low-resource language datasets, mentioning the use of translation data and resources available on HuggingFace.

- EEVE-Korean Model Data Efficiency Claim: A member referenced a paper on EEVE-Korean-v1.0, highlighting an efficient vocabulary expansion method building on English-centric large language models (LLMs) that purportedly requires only 2 billion tokens to significantly boost non-English language proficiency.

- Unsloth AI Packaged in Podman Containers: A video was shared demonstrating podman builds of containerized generative AI applications, including Unsloth AI, able to deploy locally or to cloud providers: Podman Build - For cost-conscious AI.

- Enhanced LLM with Ghost 7B Alpha: Discussions highlighted the advantages of Ghost 7B Alpha in reasoning capabilities over models like ChatGPT. Further talk centered on the model's fine-tuning method which includes difficult reasoning questions, implying an orca-like approach without extending tokenizer vocabulary.

- Intriguing Results from Initial Benchmarks: A member shared their initial benchmarking results, prompting positive feedback and reinforcement from other participants, indicating promising performance.

- Efficient and Effective Vocabulary Expansion Towards Multilingual Large Language Models: This report introduces \texttt{EEVE-Korean-v1.0}, a Korean adaptation of large language models that exhibit remarkable capabilities across English and Korean text understanding. Building on recent hig...

- Sloth Crawling GIF - Sloth Crawling Slow - Discover & Share GIFs: Click to view the GIF

- Tweet from undefined: no description found

- ghost-x (Ghost X): no description found

- Podman Build- For cost-conscious AI: A quick demo on how @podman builds containerized generative AI applications that can be deployed locally or to your preferred cloud provider. That choice is ...

Eleuther ▷ #announcements (1 messages):

- Pile-T5 Unveiled: EleutherAI has released Pile-T5, a T5 model variant trained on 2 trillion tokens from the Pile with the LLAMA tokenizer which shows markedly improved performance on benchmarks such as SuperGLUE and MMLU. This model, which also performs better in code-related tasks, is available with intermediate checkpoints for both HF and T5x versions.

- Modeling Details and Contributions: Pile-T5 was meticulously trained to 2 million steps and incorporates the original span corruption method for improved finetuning. The project is a collaborative effort by EleutherAI members, as acknowledged in the announcement.

- Open Source for the Community: All resources related to Pile-T5, including model weights and training scripts, are open-sourced on GitHub. This includes intermediate checkpoints, allowing the community to experiment and build upon their work.

- Shoutout and Links on Twitter: The release and open sourcing of Pile-T5 have been announced on Twitter, inviting the community to explore the blog post, download the weights, and contribute to the repository.

- Pile-T5: Trained T5 on the Pile