[AINews] ModernBert: small new Retriever/Classifier workhorse, 8k context, 2T tokens,

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Encoder-only models are all you need.

AI News for 12/18/2024-12/19/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (215 channels, and 4745 messages) for you. Estimated reading time saved (at 200wpm): 440 minutes. You can now tag @smol_ai for AINews discussions!

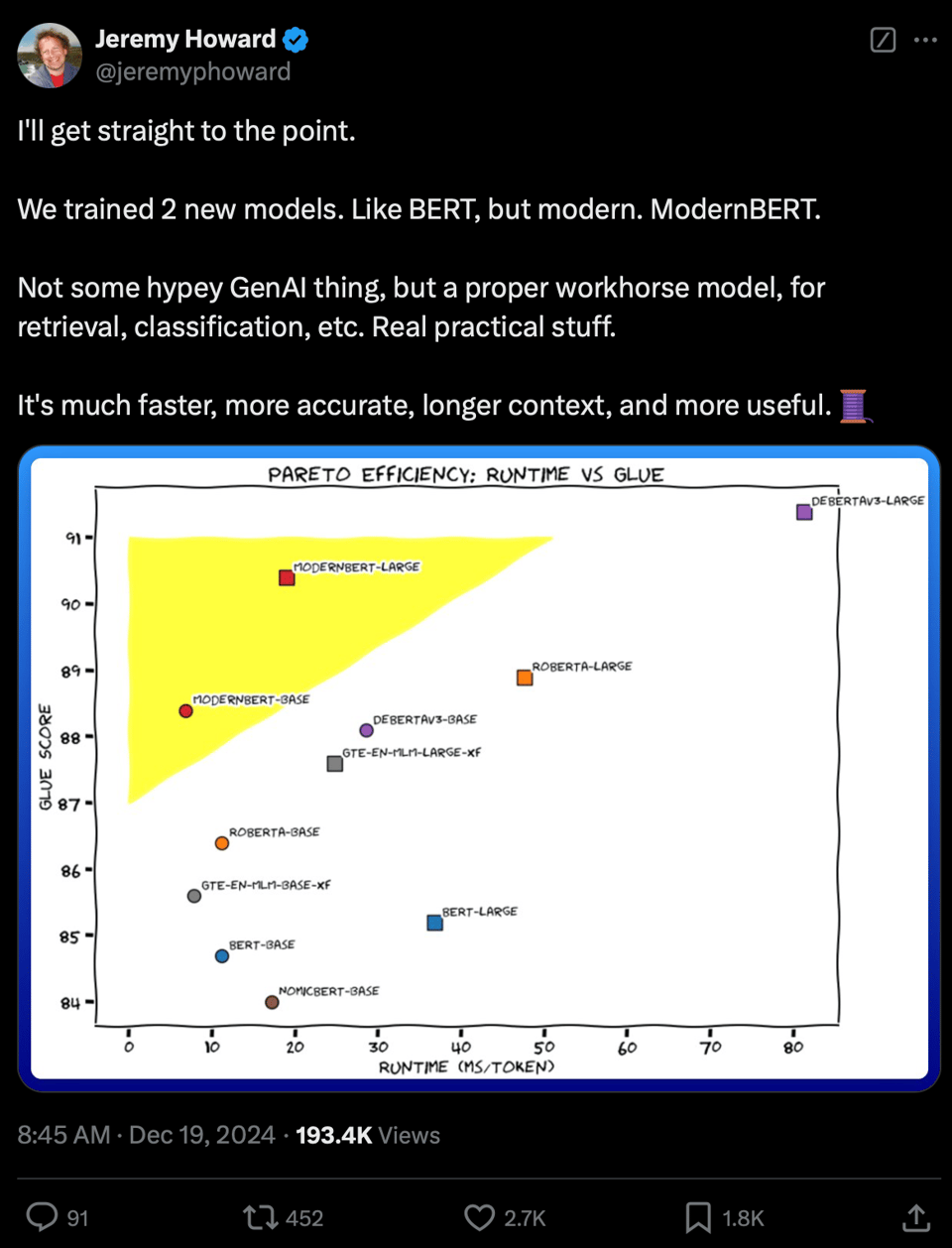

As Jeremy Howard has been teasing for a few months, he and the Answer.ai/LightOn team released ModernBERT today, updating the classic BERT from 2018:

The HuggingFace blogpost goes into more detail on why this is useful:

- Context: Older BERTs had a 512-token context; ModernBERT has 8k

- Data: Older BERTs were trained on older/less data; ModernBERT was trained on 2T tokens, including "a large amount of code"

- Size: Frontier LLMs these days are >70B params, with the requisite cost and latency issues; ModernBERT is 139M (base)/395M (large) params

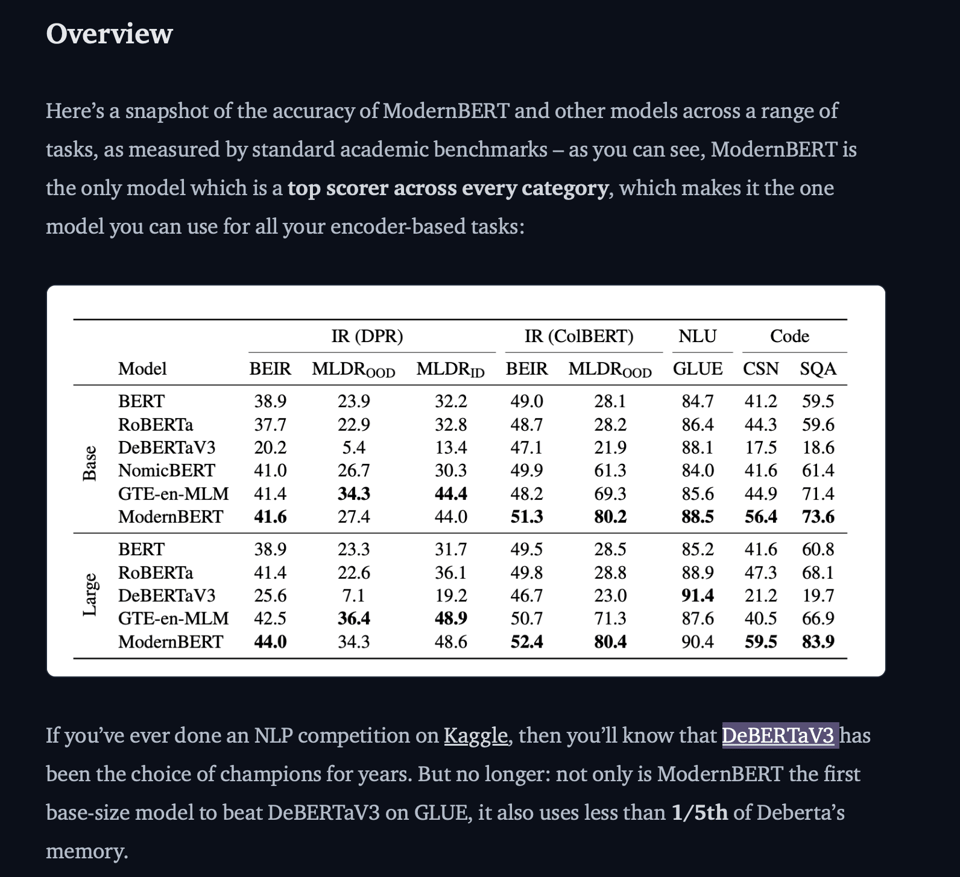

- SOTA perf for size: beats regular Kaggle winners like DeBERTaV3 on all retrieval/NLU/code categories

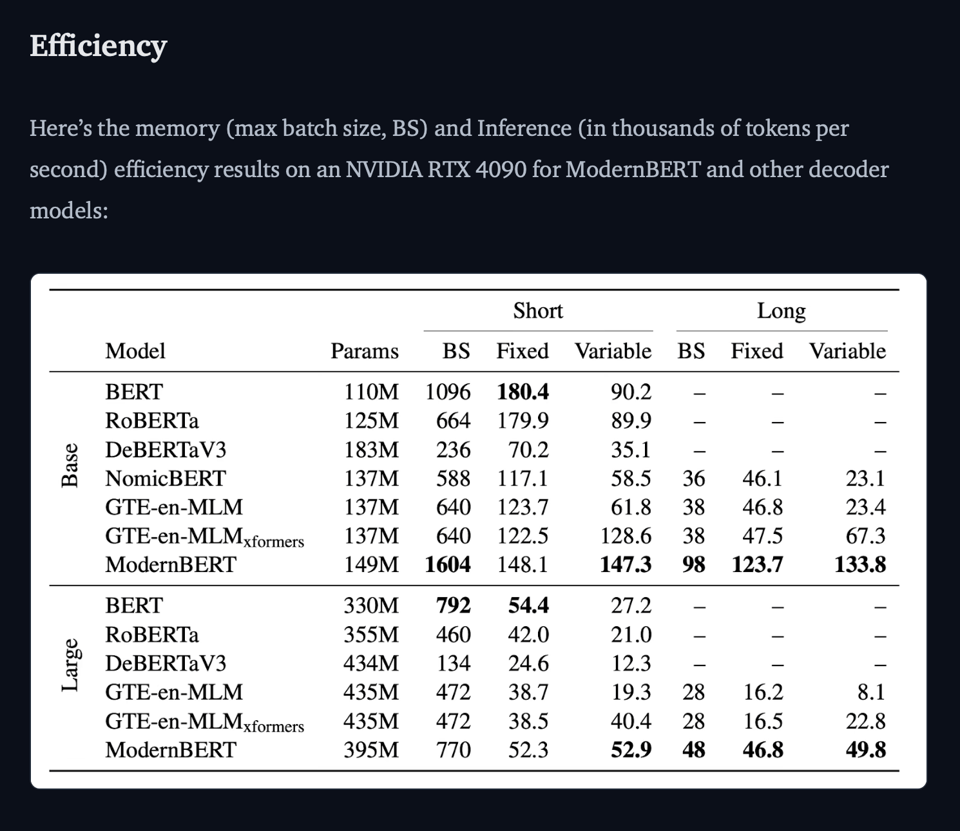

- Real world variable length long context: input sizes vary in the real world, so that’s the performance we worked hard to optimise – the “variable” column. As you can see, for variable length inputs, ModernBERT is much faster than all other models.

- Bidirectional: Decoder-only models are specifically constrained against "looking ahead", whereas BERTs can fill in the blanks:

```python
import torch
from pprint import pprint
from transformers import pipeline

# Fill-mask pipeline backed by ModernBERT-base.
# Assumes a transformers version that includes ModernBERT support.
pipe = pipeline(
    "fill-mask",
    model="answerdotai/ModernBERT-base",
    torch_dtype=torch.bfloat16,
)

input_text = "One thing I really like about the [MASK] newsletter is its ability to summarize the entire AI universe in one email, consistently, over time. Don't love the occasional multiple sends tho but I hear they are fixing it."

# Returns the top candidate tokens for [MASK], each with its score.
results = pipe(input_text)
pprint(results)
```

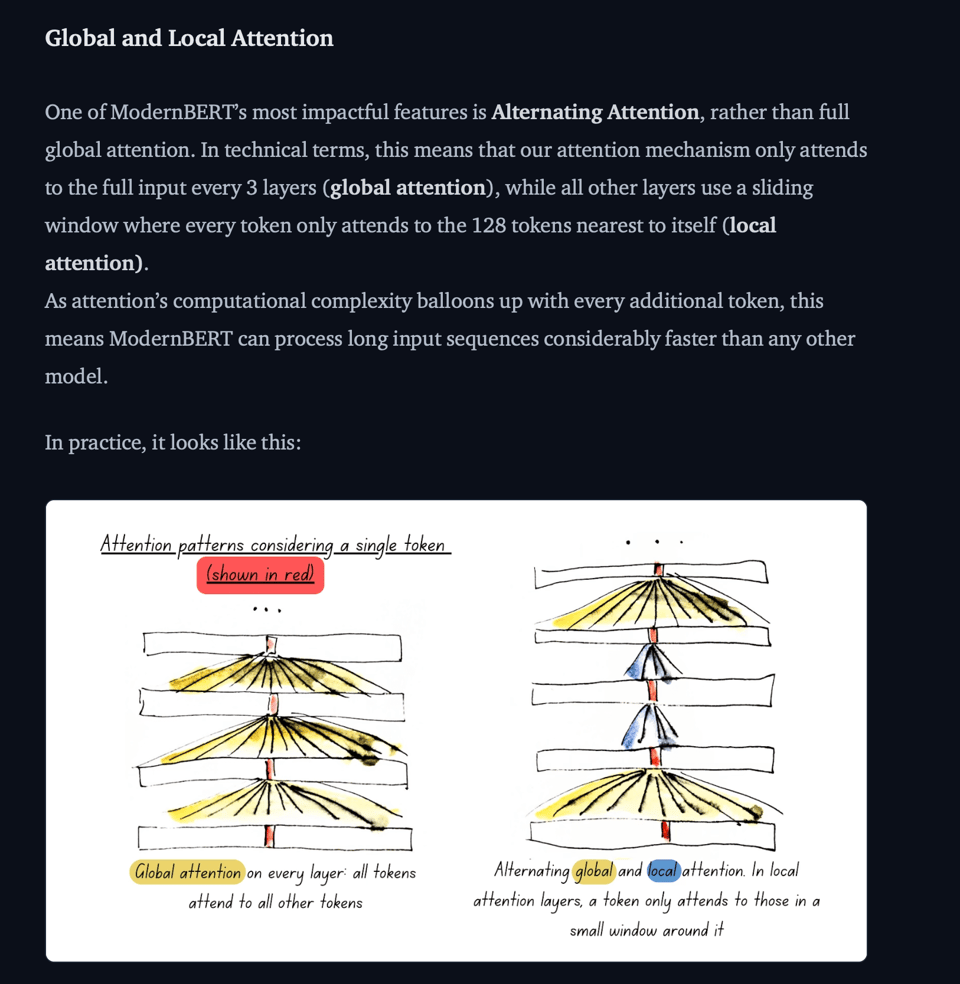

One of the MANY interesting details disclosed in the paper is its use of alternating attention layers, mixing global and local attention the same way Noam Shazeer did at Character.AI (our coverage here).
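To make the alternating-attention idea concrete, here is a minimal sketch in plain Python. The layer pattern and window size are assumptions based on our reading of the ModernBERT release (global attention every third layer, a 128-token local window elsewhere), and the function names are hypothetical; this is not ModernBERT's actual code.

```python
def layer_kinds(num_layers: int, global_every: int = 3) -> list[str]:
    """Label each layer 'global' or 'local'.

    Assumption: layer 0 is global, then every `global_every`-th layer after it.
    """
    return ["global" if i % global_every == 0 else "local" for i in range(num_layers)]


def attention_mask(seq_len: int, kind: str, window: int = 128) -> list[list[bool]]:
    """mask[q][k] is True when query position q may attend to key position k.

    Encoder attention is bidirectional, so a local layer lets each token see
    window // 2 positions on either side; a global layer sees every position.
    """
    half = window // 2
    return [
        [kind == "global" or abs(q - k) <= half for k in range(seq_len)]
        for q in range(seq_len)
    ]


print(layer_kinds(6))  # ['global', 'local', 'local', 'global', 'local', 'local']

# Tiny example: 8 tokens, 4-token window (2 positions on each side).
local = attention_mask(8, "local", window=4)
print(sum(local[0]), sum(local[4]))  # 3 5
```

Local layers cost roughly O(n·w) instead of O(n²) for sequence length n and window w, while the periodic global layers still let information move across the full context.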

The Table of Contents and Channel Summaries have been moved to the web version of this email!

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Releases and Performance

- @drjwrae announced the release of Gemini 2.0 Flash Thinking, built on their 2.0 Flash model for improved reasoning

- @lmarena_ai reported that Gemini-2.0-Flash-Thinking debuted as #1 across all categories in Chatbot Arena

- @bindureddy noted that the new O1 model scores 91.58 in Reasoning and is #1 on LiveBench AI

- @answerdotai and @LightOnIO released ModernBERT with up to 8,192 tokens context length and improved performance

Major Company News

- @AIatMeta shared that Llama has been downloaded over 650M times, doubling in 3 months

- @OpenAI launched ChatGPT desktop app integrations with apps like Xcode, Warp, and Notion, plus voice capabilities

- @adcock_brett announced that Figure delivered their first humanoid robots to commercial clients

- Alec Radford's departure from OpenAI was announced

Technical Developments

- @DrJimFan discussed advances in robotics simulation, highlighting trends in massive parallelization and generative graphics

- @_philschmid shared details about Genesis, a new physics engine claiming simulation 430,000x faster than real time

- @krandiash outlined challenges in extending context windows and memory in AI models

Memes and Humor

- @AmandaAskell joked about species procreating via FOMO

- @_jasonwei shared that his girlfriend roasted him by comparing his talks to scenes from Arrival

- @karpathy posted about his daily PiOclock tradition of taking photos at 3:14pm

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Bamba: Inference Efficient Hybrid Mamba2 Model

- Bamba: Inference-Efficient Hybrid Mamba2 Model 🐍 (Score: 60, Comments: 14): Bamba is an inference-efficient hybrid model based on Mamba2. The post title suggests a focus on performance gaps and new benchmarks related to this model, though no further details are provided in the body.

- Benchmark Gaps: Discussions highlight that Bamba shows gaps on math benchmarks, as other linear models do, and attribute this to training data composition, specifically whether benchmark-aligned instruction datasets are included during training. One example cited is the GSM8K score rising from 36.77 to 60.0 after adding MetaMath data.

- Openness in Methodology: Commenters appreciate the transparency in the training and quantization processes of the Bamba model, expressing enthusiasm for the forthcoming paper that promises detailed insights into data sources, ratios, and ablation techniques.

- Model Naming Humor: There is a lighthearted exchange about the naming convention of models like Bamba, Zamba, and others, with links provided to related papers and models on Hugging Face (Zamba-7B-v1, Jamba, Samba).

Theme 2. Genesis: Generative Physics Engine Breakthrough

- New physics AI is absolutely insane (opensource) (Score: 1350, Comments: 147): The post discusses an open-source physics AI called Genesis, highlighting its impressive generative and physics engine capabilities. The post itself has little text, so the linked video is the main source of detail on its functionality and applications.

- Skepticism and Concerns: Many commenters express skepticism about the project, comparing it to other hyped technologies like Theranos and Juicero, and suggesting that the affiliations and "open-source" claims may be overstated. MayorWolf and others doubt the authenticity of the video, suggesting it involves creative editing and that the open-source aspect may be limited to what's already available in tools like Blender.

- Technical Discussion: Some users discuss the technical aspects, such as the use of Taichi for efficient GPU simulations, and the potential similarities to Nvidia's Omniverse. AwesomeDragon97 notes a flaw in the simulation regarding water droplet adhesion, indicating the need for further refinement in the physics engine.

- Project Legitimacy: Links to the project's website and GitHub repository are shared, with some users noting the involvement of top universities and suggesting it could be legitimate. Others, like Same_Leadership_6238, highlight that while it may seem too good to be true, it is open source and warrants further investigation.

- Genesis project: a generative physics engine able to generate 4D dynamical worlds powered by a physics simulation platform (Score: 103, Comments: 13): The Genesis project introduces a generative physics engine capable of creating 4D dynamical worlds using a physics simulation platform, developed over 24 months with contributions from over 20 research labs. This engine, written in pure Python, operates 10-80x faster than existing GPU-accelerated stacks and offers significant advancements in simulation speed, being ~430,000 times faster than real-time. It is open-source and aims to autonomously generate complex physical worlds for robotics and physical AI applications.

- The generative physics engine lets robots, including soft robots, experiment and refine their actions far faster than real-world trials would allow, potentially revolutionizing robotics and physical AI applications.

- The impact on simulations and animations is substantial, enabling individuals with access to consumer-grade hardware like an NVIDIA 4090 to train robots for real-world applications, which was previously limited to entities with significant resources.

- Skepticism exists about the technology's capabilities due to its impressive claims, with users expressing a desire to personally test the engine to validate its performance.
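The ~430,000x claim is easiest to grasp as simulated time per wall-clock second. The snippet below is only arithmetic on that headline figure; it assumes the speedup holds for your workload, which a best-case benchmark number may not.

```python
def simulated_seconds(wall_seconds: float, speedup: float) -> float:
    """Simulated time covered in `wall_seconds` of real time at a given speedup."""
    return wall_seconds * speedup


SPEEDUP = 430_000  # headline claim from the Genesis announcement

sim_hours = simulated_seconds(1.0, SPEEDUP) / 3600
sim_days_per_minute = simulated_seconds(60.0, SPEEDUP) / 86_400
print(f"{sim_hours:.1f} simulated hours per wall-clock second")         # 119.4
print(f"{sim_days_per_minute:.1f} simulated days per wall-clock minute")  # 298.6
```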

Theme 3. Slim-Llama ASIC Processor's Efficiency Leap

- Slim-Llama is an LLM ASIC processor that can tackle 3-billion parameters while sipping only 4.69mW - and we'll find out more on this potential AI game changer very soon (Score: 240, Comments: 25): Slim-Llama is an LLM ASIC processor capable of handling 3 billion parameters while consuming only 4.69mW of power. More details about this potentially significant advancement in AI hardware are expected to be revealed soon.

- There is skepticism about the Slim-Llama's performance, with concerns over its 3000ms latency and the practicality of its 5 TOPS at 1.3 TOPS/W power efficiency. Critics argue that the 500KB memory is insufficient for running a 1B model without external memory, which would increase energy consumption (source).

- The Slim-Llama supports only 1 and 1.5-bit models and is seen as an academic curiosity rather than a practical solution, with potential applications in wearables, IoT sensor nodes, and energy-efficient industrial applications due to its low power consumption of 4.69mW. Some commenters express hope for future use cases with improved 4-bit quantization and better software support.

- Discussion includes the chip's 20.25mm² die area using Samsung's 28nm CMOS technology, with curiosity about its potential performance on more advanced processes like 5nm or 3nm. There is also playful banter about running Enterprise Resource Planning simulations on the "SLUT-based BMM core," highlighting the chip's novelty and niche appeal.
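The commenters' point about the ~500KB on-chip memory is easy to check with back-of-the-envelope arithmetic: weights alone need params × bits / 8 bytes. A minimal sketch, treating 500KB as 500,000 bytes and ignoring activations, overhead, and any compression tricks:

```python
def weight_bytes(num_params: float, bits_per_weight: float) -> float:
    """Bytes needed to store the weights alone (no activations or overhead)."""
    return num_params * bits_per_weight / 8


ON_CHIP_BYTES = 500_000  # ~500KB quoted in the thread, treated as 500,000 bytes

for params, bits in [(1e9, 1.5), (3e9, 1.5)]:
    need = weight_bytes(params, bits)
    print(f"{params / 1e9:.0f}B params @ {bits} bits: {need / 1e6:.1f} MB "
          f"(~{need / ON_CHIP_BYTES:.0f}x on-chip memory)")
# 1B params @ 1.5 bits: 187.5 MB (~375x on-chip memory)
# 3B params @ 1.5 bits: 562.5 MB (~1125x on-chip memory)
```

Even at 1.5 bits per weight, the weights are hundreds of times larger than the on-chip buffer, which is why commenters expect external memory (and its energy cost) to be involved.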

Theme 4. Gemini 2.0 Flash Thinking Experimental Release

- Gemini 2.0 Flash Thinking Experimental now available free (10 RPM 1500 req/day) in Google AI Studio (Score: 73, Comments: 10): Gemini 2.0 Flash Thinking Experimental is now available for free in Google AI Studio, allowing users 10 requests per minute and 1500 requests per day. The interface includes system instructions for answering queries like "who are you now?" and allows adjustments in model selection, token count, and temperature settings.

- A user humorously described a thinking process example where the model counted the occurrences of "r" in "strawberry" but noted a misspelling, highlighting the model's step-by-step reasoning.

- There is curiosity about the potential to utilize the output from Gemini 2.0 Flash Thinking for training additional thinking models, suggesting interest in model improvement and development.
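Scripts hitting the free tier have to respect both limits quoted above (10 requests per minute and 1500 per day). A minimal client-side sketch using a sliding-window log; `DualWindowLimiter` is a hypothetical helper, not part of any Google SDK, and the server remains the authority on what it accepts.

```python
import time
from collections import deque


class DualWindowLimiter:
    """Client-side limiter enforcing both a per-minute and a per-day request cap.

    Keeps timestamps of recent requests and refuses a new one if either
    window is already full. Defaults mirror the quoted free-tier limits.
    """

    def __init__(self, per_minute=10, per_day=1500, clock=time.monotonic):
        self.per_minute = per_minute
        self.per_day = per_day
        self.clock = clock
        self.minute_log = deque()
        self.day_log = deque()

    def _evict(self, now):
        # Drop timestamps that have aged out of each window.
        while self.minute_log and now - self.minute_log[0] >= 60:
            self.minute_log.popleft()
        while self.day_log and now - self.day_log[0] >= 86_400:
            self.day_log.popleft()

    def try_acquire(self):
        """Record a request and return True if both windows have room, else False."""
        now = self.clock()
        self._evict(now)
        if len(self.minute_log) >= self.per_minute or len(self.day_log) >= self.per_day:
            return False
        self.minute_log.append(now)
        self.day_log.append(now)
        return True


# Usage with a fake clock so the example runs instantly.
fake_now = [0.0]
limiter = DualWindowLimiter(clock=lambda: fake_now[0])
print(sum(limiter.try_acquire() for _ in range(12)))  # 10
fake_now[0] = 61.0
print(limiter.try_acquire())  # True: the minute window has rolled over
```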

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Gemini 2.0 Flash Thinking released, outperforming older models

- Gemini 2.0 Flash Thinking (reasoning, FREE) (Score: 268, Comments: 95): Gemini 2.0 Flash Thinking, a reasoning model by Google, is now available for free at aistudio.google.com, offering up to 1500 free requests per day with a 2024 knowledge cutoff. The author finds it impressive, particularly for its ability to be steered via system prompts, and notes that it performs on par with or better than OpenAI's GPT-3.5 for tasks like image processing, general questions, and math, criticizing the cost and limitations of OpenAI's offering.

- Users are impressed with Gemini 2.0 Flash's performance, noting its superiority in math compared to other models and its ability to display its reasoning process, which some find remarkable. There is a general sentiment that it outperforms OpenAI's offerings, with users questioning the value of paying for ChatGPT Plus.

- There is a discussion on Google's strategic advantage due to their cost-effective infrastructure, specifically their TPUs, which allows them to offer the model for free, in contrast to OpenAI's expensive and closed models. This cost advantage is seen as a potential long-term win for Google in the AI space.

- Some users express a desire for improved UI/UX in Google's AI products, suggesting that a more user-friendly interface could enhance their appeal. The conversation also touches on the absence of web search capabilities in Gemini, and the potential for custom instructions in AI Studio, which enhances user control over the model's responses.

- O1's full LiveBench results are now up, and they're pretty impressive. (Score: 267, Comments: 85): OpenAI's "o1-2024-12-17" model leads in the LiveBench results, showing superior performance particularly in Reasoning and Global Average scores. The table compares several models across metrics like Coding, Mathematics, and Language, with competitors from Google, Alibaba, and Anthropic.

- There is significant discussion about the O1 model's pricing and performance. Some users argue that O1 is more expensive than Opus due to "invisible thought tokens", leading to a cost of over $200 per million output tokens, while others claim the per-token price is the same but costs accumulate through reasoning tokens (source).

- O1's capabilities and access are debated, with some noting that the O1 Pro API isn't available yet and that the current O1 model uses a "reasoning_effort" parameter, which affects its performance and pricing. This parameter indicates that O1 Pro might be a more advanced version with higher reasoning effort.

- Comparisons with other models like Gemini 2.0 Flash are prevalent, with Gemini noted for its cost-effectiveness and potential for scaling up. Some speculate that Gemini's efficiency is due to Google's TPU resources, and there's optimism about future advancements leading to "in-the-box-AGI" within 1-2 years.
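Both sides of the pricing argument can be true at once: if hidden reasoning tokens are billed as output, the list price per token is unchanged but the effective price per *visible* token climbs. A minimal sketch; the prices and token counts below are illustrative placeholders, not quoted rates.

```python
def request_cost_usd(input_tokens, visible_output_tokens, reasoning_tokens,
                     usd_per_m_input, usd_per_m_output):
    """Cost of one request when hidden reasoning tokens are billed as output."""
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * usd_per_m_input + billed_output * usd_per_m_output) / 1_000_000


def effective_usd_per_m_visible(visible_output_tokens, reasoning_tokens, usd_per_m_output):
    """Output price per million *visible* tokens once hidden reasoning is included."""
    return usd_per_m_output * (visible_output_tokens + reasoning_tokens) / visible_output_tokens


# Illustrative placeholder prices (USD per million tokens), not quoted rates.
IN_PRICE, OUT_PRICE = 15.0, 60.0
print(request_cost_usd(1_000, 500, 0, IN_PRICE, OUT_PRICE))      # 0.045
print(request_cost_usd(1_000, 500, 4_000, IN_PRICE, OUT_PRICE))  # 0.285
print(effective_usd_per_m_visible(500, 4_000, OUT_PRICE))        # 540.0
```

With 4,000 hidden reasoning tokens behind a 500-token answer, the same list price works out to 9x more per visible token, which is how "same price" and "far more expensive" can both describe one bill.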

- The AI race over time by Artificial Analysis (Score: 157, Comments: 12): The report from Artificial Analysis provides a comprehensive overview of the AI race, focusing on the evolution of AI language models from OpenAI, Anthropic, Google, Mistral, and Meta. A line graph illustrates the "Frontier Language Model Intelligence" over time, using the "Artificial Analysis Quality Index" to compare model quality from Q4 2022 to Q2 2025, highlighting trends and advancements in AI development. Full report here.

- Commenters consider Gemini 2.0 superior to the current GPT-4o model in all aspects, noting that it is available for free on Google AI Studio.

- There is a correction regarding the timeline: GPT-3.5 Turbo was not available in 2022; instead, GPT-3.5 Legacy was available during that period.

Theme 2. NotebookLM incorporates interactive podcast feature

- Notebook LM interaction BETA. MindBlown. (Score: 272, Comments: 69): Google has quietly activated an interaction feature in NotebookLM, allowing users to interact with generated podcasts. The post expresses excitement over this new capability, describing it as "mindblowing."

- Users discussed the interaction feature in NotebookLM, noting that it allows real-time conversation with AI about uploaded source material. However, the interaction remains surface-level, and users expressed a desire for deeper conversational capabilities and better prompt responses compared to ChatGPT.

- The feature requires creating a new notebook and adding sources to generate an audio overview. Interaction begins after the audio is ready, but some users noted it lacks the ability to save or download the interacted podcast, and availability may vary by region.

- There is a mixed reaction to Google's advancements in AI, with some users expressing skepticism about Google's position in the AI race and others noting the feature's utility for studying, while comparisons were made to OpenAI's recent updates, which some found underwhelming.

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. Fierce Model Wars and Bold Price Cuts

- Gemini 2.0 Lights Up the Stage: Users praise “Gemini 2.0 Flash Thinking” for displaying explicit chain-of-thought and beating older models in reasoning tasks. Several tests, including lmarena.ai’s mention, show it topping performance leaderboards with public excitement.

- OpenRouter Slashes Prices in Epic Showdown: Models like MythoMax and QwQ saw prices cut by over 7%, with mistralai/mistral-nemo dropping 12.5%. Observers call it “ongoing price wars” as AI providers compete for user adoption.

- Databricks Gobbles $10B for Growth: The company raised a colossal round at a stunning $62B valuation, with plans to exceed $3B revenue run rate. Stakeholders link this surge to soaring enterprise AI demands and 60% annual growth.

Theme 2. Multi-GPU and Fine-Tuning Frenzy

- Unsloth Preps GPU Magic: Multi-GPU support lands in Q1, with the team testing enterprise pricing and sales revamps. They confirm Llama 3.3 (70B) needs around 41GB of VRAM to fine-tune.

- SGLang vs. vLLM in a Performance Duel: vLLM wins for raw throughput, while SGLang excels in structured outputs and scheduling. Engineers weigh trade-offs, citing SGLang’s flexible modular approach for certain workflows.

- Quantization Saves the Day: Threads tout 4-bit or 8-bit quantization to shrink memory footprints. Contributors highlight “RAG plus quantization” as an efficient path for resource-limited tasks.
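To show how quantization shrinks memory, here is a minimal per-tensor absmax int8 sketch in plain Python. Production 4-bit/8-bit schemes (for example the bitsandbytes options exposed through Transformers) are more involved, typically using per-block or per-channel scales; this is an illustration of the idea, not their implementation.

```python
def quantize_absmax_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric (absmax) int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 for all-zero input
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_absmax_int8(weights)
print(q)  # [50, -127, 2, 100]
restored = dequantize(q, scale)
# Rounding error per weight is bounded by half a quantization step.
print(max(abs(w - r) for w, r in zip(weights, restored)) <= scale / 2)  # True
```

Each value now needs 1 byte instead of 4 (fp32), plus one shared scale per tensor; 4-bit schemes halve that again at the cost of coarser steps.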

Theme 3. Agents, RAG, and RLHF Breakthroughs

- Agentic Systems Race Ahead: Anthropic’s “year of agentic systems” blueprint outlines composable patterns, fueling speculation of major leaps by 2025. Researchers compare these designs to classical search and note how open thinking patterns can surpass naive retrieval.

- Asynchronous RLHF Powers Faster Training: A paper proposes off-policy RLHF, decoupling generation and learning to speed up language model refinement. The community debates “how much off-policyness can we tolerate?” in pursuit of efficiency.

- Multi-Agent LlamaIndex Unleashes RAG: Developers shift from single to multi-agent setups, each focusing on a specialized subtask for robust retrieval-augmented generation. They use agent factories to coordinate tasks and ensure better coverage over large corpora.

Theme 4. AI Tools for Coding Take Center Stage

- Cursor 0.44.4 Upgrades: The launch introduced “Yolo mode” and improved agent commands, touted in the changelog. Early adopters noticed faster code edits and better task handling in large projects.

- GitHub Copilot Chat Goes Free: Microsoft announced a no-credit-card-needed tier that even taps “Claude for better capabilities.” Devs cheer cost-free real-time code suggestions, although some still prefer old-school diff editing for version control.

- Windsurf vs. Cursor Showdown: Users compare collaborative editing, large-file handling, and performance. Many mention Cursor’s consistency for complex refactors, while some appreciate Windsurf’s flexible UI for smaller tasks.

Theme 5. Fresh Libraries and Open-Source Adventures

- Genesis AI Conjures Physics Realities: A new generative engine simulates 4D worlds 430,000 times faster than real-time. Robotics fans marvel at 26-second training runs on an RTX4090, showcased in the Genesis-Embodied-AI/Genesis repo.

- ModernBERT Takes a Bow: This “workhorse model” offers extended context and improved classification or retrieval over older BERT. Community testers confirm better performance and simpler optimization for RAG workflows.

- Nomic Maps Data in the Browser: The final post in their Data Mapping Series shows how scalable embeddings and dimensionality reduction democratize massive dataset visualization. Readers laud it as a game-changer for exploratory analysis.
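The retrieval step that ModernBERT-style encoders and embedding maps both rely on boils down to "embed, then rank by cosine similarity". A minimal sketch; the bag-of-words `embed` is a stand-in assumption so the example runs without a model, where a real pipeline would use learned embeddings.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: bag-of-words counts (a real pipeline would use a model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


docs = [
    "ModernBERT has an 8k token context window",
    "Genesis is a generative physics engine",
    "Unsloth adds multi-GPU support in Q1",
]
print(retrieve("what context window does modernbert have", docs))
# ['ModernBERT has an 8k token context window']
```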

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Unsloth Preps Multi-GPU Magic: Multi-GPU support for Unsloth is slated for Q1, and the team is fine-tuning pricing details alongside final tests.

- They also hinted at revamping sales processes for enterprise interest, though their enterprise beta remains in a testing phase.

- Llama 3.3 Ramps Up Power: The Llama 3.3 model demands roughly 41GB of VRAM to fine-tune, as noted in Unsloth’s blog.

- Participants reported better performance than earlier versions, pointing to the benefits of careful training cycles on large datasets.

- SGLang vs. vLLM: The Speed Showdown: Many agreed vLLM outpaces SGLang for hefty production tasks, but SGLang v0.4 looks promising for structured outputs and scheduling tricks.

- Community members consider vLLM stronger for throughput, while SGLang appeals to those optimizing modular results.

- RAG Meets Quantization: Retrieval-Augmented Generation (RAG) appeared as a smarter alternative to direct fine-tuning when resources are tight, often employing chunked data and embeddings for context retrieval.

- Users praised quantization (see Transformers Docs) to shrink memory footprints without completely sacrificing performance.

- LoRAs, Merging & Instruction Tuning Warnings: Merging Low-Rank Adaptation (LoRA) adapters into base models, possibly exported as GGUF files, requires careful parameter balancing to avoid unwanted distortions.

- An instruction tuning paper highlighted how partial training can degrade core knowledge, underscoring the hazards of merging multiple techniques without thorough validation.
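What "merging a LoRA" means mechanically: the low-rank update is folded into the base weight, W' = W + (alpha / r) · B A. A minimal NumPy sketch, assuming the common alpha / r scaling convention; shapes and values are illustrative.

```python
import numpy as np


def merge_lora(W: np.ndarray, A: np.ndarray, B: np.ndarray, alpha: float) -> np.ndarray:
    """Fold a LoRA update into a base weight: W' = W + (alpha / r) * B @ A.

    W: (d_out, d_in) base weight; A: (r, d_in); B: (d_out, r); r is the adapter rank.
    """
    r = A.shape[0]
    return W + (alpha / r) * (B @ A)


rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 6, 8, 2, 16
W = rng.normal(size=(d_out, d_in))
A = rng.normal(size=(r, d_in))
B = rng.normal(size=(d_out, r))
x = rng.normal(size=(3, d_in))

merged = merge_lora(W, A, B, alpha)
# The merged layer should match "base output + scaled adapter output".
unmerged = x @ W.T + (alpha / r) * (x @ A.T @ B.T)
print(np.allclose(x @ merged.T, unmerged))  # True
```

Merging several adapters just adds their scaled deltas into the same W, so nothing stops one adapter's update from overwriting what another (or the base model) learned; hence the warnings about validating merged models.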

Cursor IDE Discord

- Cursor 0.44.4 Launches with Agent Boost: Cursor 0.44.4 introduced improved agent features and Yolo mode, and is available here.

- Engineers applauded its faster command execution and better task handling, citing the changelog for a detailed breakdown.

- Coin Toss: O1 vs Sonnet 3.5: Users pinned O1 at around 40 cents per request and compared its gains to Sonnet 3.5.

- Some considered Sonnet 3.5 'good enough,' while others questioned if O1's extra cost is worth the difference.

- Build It Up: Framer vs. DIY Code: A lively discussion contrasted Framer for rapid site creation with fully custom code.

- Some praised the time savings, while others preferred complete control over performance and flexibility.

- Gemini-1206 Gains Curiosity: Members showed interest in Gemini-1206, but concrete evidence of its abilities remains scarce.

- Others stayed focused on Sonnet 3.5 for coding, since they lacked extensive data on Gemini-1206.

- College or Startup: The Great Showdown: Some argued Ivy League credentials offer networking perks, while others favored skipping school to build real-world products.

- Opinions varied, with personal success stories suggesting any path can yield major breakthroughs.

Codeium (Windsurf) Discord

- Cline & Gemini Triumph Together: Multiple members praised Cline v3 combined with Gemini 2.0 for smoother coding and large-task handling.

- They noted that it outperformed other setups, mainly due to faster iterations and more stable refactoring capabilities.

- Windsurf vs Cursor Showdown: Comparisons referenced this direct breakdown on features like collaborative editing and file handling.

- Opinions seemed divided, but many cited Cursor's more consistent performance as a critical advantage in code-heavy workflows.

- Credit Rollover Reassurance: Users confirmed flex credits roll over in Codeium’s paid plan, ensuring no sudden interruptions.

- Some participants shared relief about not losing credits after paying, highlighting the importance of stable subscription models.

- Claude vs Gemini Model Chatter: Community members weighed performance differences between Claude Sonnet, Gemini, and other AI models while referencing Aider LLM Leaderboards.

- They stressed the need for contextual prompts and thorough documentation to fully leverage each model's coding potential.

Interconnects (Nathan Lambert) Discord

- Gemini 2.0 Flashes a 'Think Out Loud' Trick: Google introduced Gemini 2.0 Flash Thinking, an experimental model trained to produce explicit chain-of-thought for enhanced reasoning and speed in chatbot tasks.

- Community members referenced Denny Zhou's stance on classical AI reliance on search, hinting that Gemini's open thinking pattern might surpass naive retrieval solutions.

- OpenAI Sings with Voice Mode: OpenAI rolled out Work with Apps in voice mode, enabling integration with apps like Notion and Apple Notes as teased on their 12 Days of ChatGPT site.

- Members called this a straightforward but major step in bridging ChatGPT with real-world productivity, with some hoping advanced voice features could power daily tasks.

- Chollet's 'o1 Tiff' Rattles LLM Circles: François Chollet equated labeling o1 as an LLM to calling AlphaGo 'a convnet', inciting heated arguments on X.

- Community members noted this parallels the old Subbarao/Miles Brundage incident, with calls for clarity on o1's architecture fueling further drama.

- FineMath: Gigantic Gains for LLM Arithmetic: A link from @anton_lozhkov showcased FineMath, a math-focused dataset with over 50B tokens, promising boosts over conventional corpora.

- Participants saw this as a big leap for complex code math tasks, referencing merging FineMath with mainstream pretraining to handle advanced calculations.

- RLHF Book: Spot a Typo, Score Free Copies: The RLHF book is hosted on GitHub, and volunteers who catch typos or formatting bugs qualify for free copies.

- Eager contributors found it less stressful to refine reinforcement learning fundamentals this way, calling the process both fun and beneficial for the community.

OpenAI Discord

- Day 11 of OpenAI Delivers ChatGPT Boost: Day 11 of the 12 Days of OpenAI brings new ChatGPT app integrations, presented in a YouTube livestream that highlights code collaboration.

- Engineers can now broaden daily development cycles with AI assistance, although manual copy actions remain necessary.

- ChatGPT Integrates with Xcode: Participants discussed copying code from ChatGPT straight into Xcode, smoothing iOS dev tasks.

- This step promises convenience but still depends on user-initiated triggers for actual code insertion.

- Google’s Gemini 2.0 Hits the Spotlight: Google published the Gemini 2.0 Flash Thinking experimental model, attracting curiosity with bold performance claims.

- Some participants doubted the model’s reliability after it stumbled on letter-count tasks, fueling skepticism about its real prowess.

- YouTube Clone Demo with ChatGPT: Members explored using ChatGPT to craft a YouTube-like experience, covering front-end and back-end solutions.

- Though front-end tasks seemed straightforward, the server-side setup demanded more steps through terminal instructions.

- AI Automation Heats Up the Engineering Floor: Conversations centered on the prospect of AI fully automating software development, reshaping the demand for human engineers.

- While many recognized potential time-savings, others wondered if hype was outpacing actual breakthroughs.

Eleuther Discord

- FSDP vs Tensor Parallel Tangle: At Eleuther, participants compared Fully Sharded Data Parallel (FSDP) to Tensor Parallelism, referencing llama-recipes for real-world implementations.

- They argued about higher communication overhead in FSDP and weighed that against the direct parallel ops advantage of tensor-based methods, with some voicing concern about multi-node scaling limits.

- NaturalAttention Nudges Adam: A member highlighted a new Natural Attention Optimizer on GitHub that modifies Adam with attention-based gradient adjustments, backed by proofs in Natural_attention_proofs.pdf.

- They claimed notable performance gains, though some cited potential bugs in the code at natural_attention.py and suggested caution when replicating results.

- Diffusion vs Autoregressive Arm-Wrestle: A discussion emerged contrasting diffusion and autoregressive models across image and text domains, highlighting efficiency tradeoffs and discrete data handling.

- Some posited that diffusion leads in image generation but might be challenged by autoregressive approaches in tasks that require token-level control.

- Koopman Commotion in NNs: Members debated applying Koopman theory to neural networks, referencing Time-Delay Observables for Koopman: Theory and Applications and Learning Invariant Subspaces of Koopman Operators--Part 1.

- They questioned the legitimacy of forcing Koopman methods onto standard frameworks, suggesting it might mislead researchers if underlying math doesn't align with real-world activation behaviors.

- Steered Sparse AE OOD Queries: In interpretability discussions, enthusiasts explored steered sparse autoencoders (SAE) and whether cosine similarity checks on reconstructed centroids effectively gauge out-of-distribution data.

- They reported that adjusting one activation often influenced others, indicating strong interdependence and prompting caution in interpreting SAE-based OOD scores.
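To make the FSDP vs tensor-parallel contrast concrete, here is a single-process NumPy simulation of one linear layer. List slices stand in for devices and `np.concatenate` stands in for all-gather collectives; no distributed framework is involved, and gradient communication is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 16))   # activations: (batch, d_in)
W = rng.normal(size=(16, 32))  # full weight: (d_in, d_out)
world_size = 4                 # number of simulated devices

# Tensor parallel (column-parallel): each device owns a slice of W's output
# columns, computes its part locally, then the activations are all-gathered.
col_shards = np.split(W, world_size, axis=1)
y_tp = np.concatenate([x @ shard for shard in col_shards], axis=1)

# FSDP-style: parameters are stored sharded, but each device all-gathers the
# full weight before an ordinary matmul on its own slice of the batch.
row_shards = np.split(W, world_size, axis=0)
W_gathered = np.concatenate(row_shards, axis=0)  # stands in for a parameter all-gather
batch_shards = np.split(x, world_size, axis=0)
y_fsdp = np.concatenate([xb @ W_gathered for xb in batch_shards], axis=0)

print(np.allclose(y_tp, x @ W), np.allclose(y_fsdp, x @ W))  # True True
```

Both give the same result; the difference is what crosses the interconnect. Tensor parallelism moves activations every layer, while FSDP moves the layer's weights, which is the communication trade-off participants were weighing.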

Perplexity AI Discord

- Perplexity's Referral Program Boosts Sign-Ups: Multiple users confirmed that Perplexity offers a referral program granting benefits for those who link up with new sign-ups.

- Enthusiasts aim to recruit entire fraternities, accelerating platform reach and energizing discussions about user growth.

- You.com Imitation Raises Accuracy Concerns: Community members discussed You.com replicating responses with search-based system instructions, questioning the quality of its output.

- They noted that relying on direct model calls often produces more precise logic, revealing potential gaps in search-oriented Q&A solutions.

- Game Descriptions Overwhelm Translation Limits: A user attempting to translate lengthy lists of game descriptions into French hit size restrictions, exposing Perplexity AI's text-handling constraints.

- They sought advice on segmenting content into smaller chunks, hoping to bypass these limitations in complex translation tasks.

- Magic Spell Hypothesis Sparks Curiosity: A posted document described the Magic Spell Hypothesis, linking advanced linguistic patterns to emerging concepts in scientific circles.

- Researchers and community members weighed its credibility, applauding attempts to test fringe theories in structured experiments.
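The chunking advice from the translation thread can be sketched as a greedy packer that keeps whole lines together and stays under a size limit. The character limit is a placeholder assumption (Perplexity's actual limit was not stated), and each chunk would be translated separately and rejoined.

```python
def chunk_lines(text: str, max_chars: int) -> list[str]:
    """Greedily pack whole lines into chunks of at most `max_chars` characters.

    A single line longer than max_chars is split hard so no chunk exceeds the limit.
    """
    chunks: list[str] = []
    current = ""
    for line in text.splitlines():
        # Hard-split any line that alone exceeds the limit.
        pieces = [line[i:i + max_chars] for i in range(0, len(line), max_chars)] or [""]
        for piece in pieces:
            candidate = piece if not current else current + "\n" + piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks


games = "\n".join(f"Game {i}: a long description of game number {i}" for i in range(50))
parts = chunk_lines(games, 200)
print(len(parts), max(len(p) for p in parts) <= 200)
```

Splitting on line boundaries keeps each game description intact inside one request, so the translation never sees half an entry.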

aider (Paul Gauthier) Discord

- Gemini Gains Ground: On 12/19, Gemini 2.0 Flash Thinking emerged with the gemini-2.0-flash-thinking-exp-1219 variant, touting better reasoning in agentic workflows as shown in Jeff Dean's tweet.

- Initial tests revealed faster performance than O1 and DeepSeek, and some community members applauded its upgraded output quality.

- Aider & MCP Get Cozy: Users achieved Aider and MCP integration for streamlined Jira tasks, referencing Sentry Integration Server - MCP Server Integration.

- They discussed substituting Sonnet with other models in MCP setups, suggesting top-notch flexibility for error tracking and workflow automation.

- OpenAPI Twinning Madness: Community members explored running QwQ on Hugging Face alongside local Ollama, clarifying that Hugging Face mandates its own API usage for seamless model switching.

- They discovered the need to indicate the service in the model name, preventing confusion in multi-API setups.

- Copilot Chat Spices Up: GitHub Copilot Chat introduced a free immersive mode as stated in GitHub's announcement, offering real-time code interactions and sharper multi-file edits.

- While users appreciated the enhanced chat interface, some still preferred old-school diff edits to contain costs and maintain predictable workflows.

Stackblitz (Bolt.new) Discord

- Bolt & Supabase Spark Instant Setup: The Bolt & Supabase integration is officially live, offering simpler one-click connections as shown in this tweet from StackBlitz. It eliminates the manual steps, letting engineers unify services more quickly and reduce overhead.

- Users praised the easy setup, noting how it shortens ramp-up time for data-driven applications and provides a frictionless developer experience.

- Figma Frustrations & .env Woes: Users reported .env file resets that disrupt Firebase configurations, with locking attempts failing after refresh and causing 'This project exceeds the total supported prompt size' errors.

- Additionally, Figma direct uploads are off the table, forcing designers to rely on screenshots while requesting more robust design-to-dev integrations.

- Redundancy Rehab & Public Folder Setup: Community members asked if Bolt could analyze code for redundant blocks, aiming to cut token use in large-scale apps. They also needed clarity on building a public folder to host images, highlighting confusion about project structure.

- Some suggested straightforward docs to resolve folder-setup uncertainties, indicating a desire for simpler references when working with Bolt.

- Session Snafus & Token Tangles: Frequent session timeouts and forced page refreshes left many losing chat histories in Bolt, driving up frustration and token costs. The dev team is investigating these authentication issues, but real-time disruptions persist.

- Users hope for fixes that reduce redundant outputs and control overspending on tokens, seeking stability in their project workflows.

- Community Convergence for Guides & Integrations: Participants plan a broader guide for Bolt, providing a user dashboard for submitting and approving resources. The conversation touched on Stripe integration, advanced token handling, and synergy with multiple tech stacks.

- They also showcased Wiser - Knowledge Sharing Platform, hinting at deeper expansions for shared content and more polished developer experiences.

Notebook LM Discord

- Interactive Mode Reaches Everyone: The development team confirmed Interactive Mode reached 100% of users with notable improvements for audio overviews.

- Enthusiasts praised the creative possibilities and shared firsthand experiences of smoother deployment.

- MySQL Database Hook for Automatic NPCs: A game master asked how to connect a large MySQL database to NotebookLM for automating non-player character responses.

- They highlighted a decade of stored RPG data and sought ideas for managing dynamic queries.

- Podcasters Tweak Recording Setup: Members noted that the interactive podcast feature does not store conversations, so sessions must be recorded separately to share them with external listeners.

- A concise 'podcast style prompt' sparked interest in faster, more candid commentary for a QwQ model review.

- AI-Generated Space Vlog Shakes Viewers: A user showcased a year-long astronaut isolation vlog rendered by AI, shared at this YouTube link.

- Others noted daily animated uploads driven by NotebookLM outputs, demonstrating consistent content production.

- Updated UI Gains Kudos: Users applauded the NotebookLM interface overhaul, describing it as more responsive and convenient for project navigation.

- They are eager to test its new layouts and praised the overall polished look.

Stability.ai (Stable Diffusion) Discord

- Ubuntu Steps for SDXL: Some members shared tips for running SDXL on Ubuntu, advising the use of shell scripts from stable-diffusion-webui-forge for streamlined setups.

- They underlined the importance of system knowledge to avoid performance bottlenecks.

- ComfyUI Meltdown: Engineers complained about persistent errors and charred output from ComfyUI despite attempts to fix sampling issues.

- They recommended using Euler sampling with well-tuned denoising levels to reduce flawed results.

- AI Images Face Rocky Road to Perfection: Some argued AI-generated images and video won't be flawless by 2030 due to current challenges.

- Others countered that rapid technological leaps could deliver polished outputs much sooner.

- Quantum Quarrel Over P=NP: A heated chat focused on whether quantum computing would still matter if P=NP were proven.

- Skeptics pointed to trouble extracting real-world value from quantum states, citing complexity in practical execution.

- Civitai.com Down Again?: Multiple users noted frequent outages on civitai.com, making model access challenging.

- They speculated recurring server problems are behind the repeated downtime.

GPU MODE Discord

- GPU Glitter & Coil Whine: Users complained about absurd coil whine from a returned RX 6750XT, plus VRChat's memory appetite prompting some to choose 4090s.

- They also expressed worry about potentially bigger price tags for next-gen RTX 50 cards while comparing the 7900 XTX.

- Triton Tinkers with AMD: Community members tested Triton kernels on AMD GPUs like the RX 7900, noting performance still lags behind PyTorch/rocBLAS.

- They also discovered that warp-specialization was removed in Triton 3.x, driving them to explore alternative optimizations.

- CARLA Zooms into UE 5.5: CARLA version 0.10.0 introduced Unreal Engine 5.5 features like Lumen and Nanite, boosting environment realism.

- Attendees also praised Genesis AI for its water droplet demos, envisioning synergy with Sim2Real and referencing Waymo's synthetic data approach for autonomous driving.

- MatX's HPC Hiring Spree: MatX announced open roles for low-level compute kernel authors and ML performance engineers, aiming to build an LLM accelerator ASIC.

- The job listing emphasizes a high-trust environment that favors bold design decisions over extended testing.

- Alma's 40-Option Benchmark Bash: A duo released alma, a Python package checking the throughput of over 40 PyTorch conversion options in a single function call.

- According to its GitHub repository, it handles failures gracefully by running options in isolated processes, and it will expand to JAX and llama.cpp soon.

Latent Space Discord

- Anthropic Agents Amp Up: Anthropic posted Building effective agents with patterns for AI agentic systems, anticipating a major milestone in 2025.

- They emphasized composable workflows, referencing a tweet about the 'year of agentic systems' for advanced design.

- Gemini 2.0 Gains Speed: Multiple tweets, including lmarena.ai's mention and Noam Shazeer's announcement, praised Gemini 2.0 Flash Thinking for topping all categories.

- The model is trained to 'think out loud', enabling stronger reasoning and outperforming earlier Gemini versions.

- Databricks Hauls $10B: They announced a Series J funding round worth $10B, hitting a $62B valuation with Thrive Capital leading.

- They anticipate crossing $3B in revenue run rate, reporting 60% growth sparked by AI demand.

- ModernBERT Steps Onstage: A new model called ModernBERT was introduced as a 'workhorse' option with extended context and improved performance.

- References like Jeremy Howard's mention show attempts to apply it in retrieval and classification, spurring conversation among practitioners.

- Radford Says Farewell to OpenAI: Alec Radford, credited for the original GPT paper, left OpenAI to pursue independent research.

- This shift stirred speculation about OpenAI's upcoming directions in the industry.

OpenInterpreter Discord

- OpenInterpreter’s Vision Variation: OpenInterpreter 1.0 now includes vision support, installable from GitHub with `pip install git+https://github.com/OpenInterpreter/open-interpreter.git@development`.

- Experiments suggest the `--tools gui` command functions properly for bridging different models or APIs, with people noting local or SSH-based usage.

- Server Mode Sparks Execution Queries: Members questioned how server mode handles command execution, debating whether tasks run locally or on the server.

- They mentioned using SSH for simpler interaction and proposed a front end for improved workflow.

- Google Gemini 2.0 Gains Attention: A user showed interest in Google Gemini 2.0 for multimodal tasks within OS mode, hoping for highly capable command execution.

- They compared it to existing setups and wondered if it competes effectively with other systems.

- Cleaning Installs & O1 Confusion: Some users faced issues with OpenInterpreter installation after multiple configurations, prompting them to remove flags for a new setup.

- Meanwhile, an O1 channel user complained about unclear docs, seeking direct guidance even after reading official references.

LM Studio Discord

- Safetensors Snafu Stumps LM Studio: Users encountered Safetensors header is unexpectedly large: bytes=2199142139136 errors when loading models, forcing redownloads of the MLX version of Llama 3.3 to fix possible corruption issues.

- Discussion pointed to file-compatibility conflicts, with some users suggesting careful file checks on future downloads.

- Mobile Dreams: iOS Gains Chat, Android Waits: An iOS app called 3Sparks Chat connects to LM Studio on Mac or PC, providing a handheld interface for local LLMs.

- Members expressed disappointment about the lack of an Android client, leaving the community requesting alternative solutions.

- AMD's 24.12.1 Distress: The AMD 24.12.1 drivers caused system stuttering and performance loss when loading models in LM Studio, with the issue appearing tied to the llama.cpp ROCm libraries.

- Downgrading drivers resolved the problems in some setups, and the 7900 XTX came up as a particular stability concern.

- Vision Model Hopes in LM Studio: A query about image input models led to mention of mlx-community/Llama-3.2-11B-Vision-Instruct-4bit, highlighting early attempts at integrating visual features.

- Users reported loading problems on Windows, fueling debate about model compatibility with local hardware.

- Apple Silicon vs. 4090 GPU Showdown: Community members questioned whether Mac Pro and Ultra chips outperform a 3090 or 4090 card thanks to memory bandwidth advantages.

- Benchmark references pointed to the llama.cpp GitHub discussion, where users confirmed the 4090 still posts faster numbers in practical tests.
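For context on the Safetensors error reported above: a .safetensors file starts with an 8-byte little-endian integer giving the length of its JSON header, so a value like 2199142139136 (roughly 2.2 TB) almost always means a truncated or corrupted download rather than a real header. Below is a minimal pre-load check, assuming only that layout; the 100 MB cap is a hypothetical sanity limit, not any loader's documented threshold.

```python
import json
import struct
from pathlib import Path

# Hypothetical sanity cap for illustration; not taken from any specific loader.
MAX_HEADER_BYTES = 100_000_000


def inspect_safetensors_header(path):
    """Return (header_len, parsed_header), or raise ValueError if the file looks corrupt."""
    file_path = Path(path)
    file_size = file_path.stat().st_size
    with file_path.open("rb") as f:
        prefix = f.read(8)
        if len(prefix) < 8:
            raise ValueError("file is shorter than the 8-byte length prefix")
        # The first 8 bytes hold the header length as an unsigned little-endian u64.
        (header_len,) = struct.unpack("<Q", prefix)
        if header_len > file_size - 8 or header_len > MAX_HEADER_BYTES:
            raise ValueError(
                f"header length {header_len} is implausible for a {file_size}-byte file"
            )
        # The header itself is UTF-8 JSON describing tensor names, dtypes, and offsets.
        header = json.loads(f.read(header_len).decode("utf-8"))
    return header_len, header
```

Running this on a suspect file before loading it (for example `inspect_safetensors_header("model.safetensors")`, with a hypothetical path) distinguishes a corrupt download from a genuine compatibility problem without reading the tensor data.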
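The memory-bandwidth argument in the Apple Silicon vs. 4090 thread can be made concrete with a back-of-envelope estimate: when decoding a single stream, each new token reads roughly all of the weights once, so bandwidth divided by model size gives a ceiling on tokens per second. The sketch assumes approximate vendor peak figures (1008 GB/s for the RTX 4090, 936 GB/s for the RTX 3090, 800 GB/s for an M2 Ultra with up to 192 GB of unified memory) and ignores KV-cache reads, compute limits, and framework overhead, so real numbers will be lower.

```python
# Approximate vendor-published peak figures; treat them as assumptions.
DEVICES = {
    "RTX 4090": {"bandwidth_gb_s": 1008, "memory_gb": 24},
    "RTX 3090": {"bandwidth_gb_s": 936, "memory_gb": 24},
    "M2 Ultra": {"bandwidth_gb_s": 800, "memory_gb": 192},
}


def decode_ceiling_tokens_per_s(model_gb, device):
    """Upper bound on single-stream decode speed if each token reads all weights once.

    Returns None when the weights do not fit in the device's memory at all.
    """
    spec = DEVICES[device]
    if model_gb > spec["memory_gb"]:
        return None
    return spec["bandwidth_gb_s"] / model_gb


for name in DEVICES:
    # Compare a 20 GB model (fits everywhere) with a 40 GB one (fits only in unified memory).
    print(name, decode_ceiling_tokens_per_s(20.0, name), decode_ceiling_tokens_per_s(40.0, name))
```

With a 20 GB model the ceilings come out to about 50, 47, and 40 tokens/s, consistent with the 4090 winning in the linked benchmarks. With a 40 GB model, only the M2 Ultra of these three can hold the weights in device memory, which is where the unified-memory argument actually applies.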

OpenRouter (Alex Atallah) Discord

- Price Cuts Rattle the LLM Market: This morning saw a 7% cut for gryphe/mythomax-l2-13b, 7.7% for qwen/qwq-32b-preview, and a 12.5% slash on mistralai/mistral-nemo.

- Community members joked about 'ongoing price wars' fueling competition among providers.

- Crowdsourced AI Stack Gains Spotlight: VC firms have released various ecosystem maps, but there's a push for a truly crowdsourced and open-source approach showcased in this GitHub project.

- One user requested feedback on the proposed logic, encouraging the community to 'contribute to a dynamic developer resource'.

- DeepSeek Speeds Code Learning: Developers used DeepSeek V2 and DeepSeek V2.5 to parse entire GitHub repositories, reporting significant improvements in project-wide optimization.

- However, a user cautioned that 'it may not handle advanced code generation', though they still praised its annotation abilities.

- Calls for Programmatic API Keys: A discussion emerged about allowing a provider API key to be sent implicitly with requests, streamlining integration.

- One user said 'I'd love to see a programmatic version' to enhance developer convenience across the board.

Nous Research AI Discord

- GitHub Copilot Goes Free: Microsoft introduced a new free tier for GitHub Copilot with immediate availability for all users.

- It surprisingly includes Claude for improved capabilities, and no credit card is required.

- Granite 3.1-8B-Instruct Gains Fans: Developers praised the Granite 3.1-8B-Instruct model for strong performance on long context tasks.

- It provides quick results for real-world cases, and IBM offers code resources on GitHub.

- LM Studio Enables Local LLM Choices: LM Studio simplifies running Llama, Mistral, or Qwen models offline while supporting file downloads from Hugging Face.

- Users can also chat with documents quickly, appealing to folks wanting an offline approach.

- Fine-Tuning Uniform Instruction Sparks Debate: Questions arose about using the same instruction for every prompt in a Q&A dataset.

- A caution was raised that it might cause suboptimal model performance due to repetitive usage.

- Genesis Project Roars with Generative Physics: The Genesis engine builds 4D dynamical worlds at speeds up to 430,000 times faster than real-time.

- It's open source, runs in Python, and slashes robotic training to just 26 seconds on a single RTX 4090.
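To make the uniform-instruction question from the fine-tuning debate concrete, here is a minimal sketch that builds the same Q&A pairs either with one fixed instruction or with an instruction sampled from a small paraphrase pool. The Alpaca-style instruction/input/output field names, the example pairs, and the paraphrases are all hypothetical; whether varying instructions actually helps is an empirical question the thread left open.

```python
import random

# Hypothetical Q&A pairs and instruction paraphrases, for illustration only.
QA_PAIRS = [
    ("What is the capital of France?", "Paris"),
    ("How many legs does a spider have?", "Eight"),
    ("What gas do plants absorb?", "Carbon dioxide"),
]
FIXED_INSTRUCTION = "Answer the question concisely."
INSTRUCTION_POOL = [
    "Answer the question concisely.",
    "Give a short answer to the following question.",
    "Reply with a brief, factual answer.",
]


def build_records(pairs, vary_instruction=False, seed=0):
    """Build Alpaca-style records, optionally sampling the instruction per example."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    records = []
    for question, answer in pairs:
        if vary_instruction:
            instruction = rng.choice(INSTRUCTION_POOL)
        else:
            instruction = FIXED_INSTRUCTION
        records.append({"instruction": instruction, "input": question, "output": answer})
    return records


uniform = build_records(QA_PAIRS)
varied = build_records(QA_PAIRS, vary_instruction=True)
```

Keeping the seed fixed makes it easy to train on both variants and compare them on the same held-out questions.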

Modular (Mojo 🔥) Discord

- Negative Indexing Showdown in Mojo: A heated discussion emerged about adopting negative indexing in Mojo, with some calling it an error magnet while others see it as standard practice in Python.

- Opponents favored a `.last()` approach to dodge overhead, warning of performance issues with negative offsets.

- SIMD Key Crash Rumbles in Dicts: A serious bug in SIMD-based struct keys triggered segmentation faults in Dict usage, detailed in GitHub Issue #3781.

- The crashes were traced to an absent scaling_cur_freq, with a fix expected within a 6-week window.

- Mojo Goes Rogue on Android: Enthusiasts tinkered with running Mojo on native Android via Docker-based hacks, though it's labeled 'wildly unsupported'.

- Licensing rules prevent publishing a Docker image, but local custom builds remain possible.

- Python Integration Teases SIMD Support: Participants discussed merging SIMD and conditional conformance with Python types, balancing separate handling for integral and floating-point data.

- They highlighted ABI constraints and future bit-width expansions, stirring interest in cross-language interactions.
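Mojo's eventual semantics are not shown here, but the negative-indexing trade-off can be illustrated roughly in Python: a container that accepts negative indices has to branch on the sign on every access, while a dedicated last() accessor does not. The Buffer class is hypothetical.

```python
class Buffer:
    """Toy sequence showing the extra work that negative indexing implies."""

    def __init__(self, items):
        self._items = list(items)

    def __len__(self):
        return len(self._items)

    def __getitem__(self, i):
        # Negative-index support: an extra sign check and addition on every access.
        if i < 0:
            i += len(self._items)
        if not 0 <= i < len(self._items):
            raise IndexError("Buffer index out of range")
        return self._items[i]

    def last(self):
        # Explicit accessor: no sign check needed, and the intent is unambiguous.
        if not self._items:
            raise IndexError("last() on empty Buffer")
        return self._items[len(self._items) - 1]


buf = Buffer([1, 2, 3])
i = 0
print(buf[i - 1], buf.last())  # an off-by-one index silently wraps to the last element
```

One common reading of the 'error magnet' concern also shows up here: a computed index such as `i - 1` with `i == 0` quietly returns the last element instead of raising `IndexError`.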

DSPy Discord

- Synthetic Data Explainer Gains Steam: One contributor is building an explainer on how synthetic data is generated, requesting community input on tricky areas.

- They plan to highlight creation approaches and performance implications for advanced models.

- Databricks Rate-Limiting Debate: Participants flagged large throughput charges and called for a rate limiter to prevent overuse in Databricks.

- Some recommended the LiteLLM proxy layer for usage tracking, also referencing Mosaic AI Gateway as a supplementary approach.

- dspy.Signature as a Class: A user asked about returning a dspy.Signature in class form, aiming for structured outputs over raw strings.

- They hope to define explicit fields for clarity and potential type-checking.

- Provisioned Throughput Shocks Wallet: A conversation highlighted the high cost of provisioned throughput in Databricks when it is left active.

- Members recommended the scale-to-zero feature to curb costs during idle periods.

- LiteLLM Reaches Databricks: Attendees debated whether to embed the LiteLLM proxy within a Databricks notebook or run it separately.

- They agreed either approach is feasible, depending on environment controls and resource needs.
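For the Databricks rate-limiting discussion, a token bucket is one standard way to cap request (or LLM-token) throughput on the client side. This is a minimal generic sketch, not LiteLLM's or Mosaic AI Gateway's configuration; the rate, capacity, and injectable clock are illustrative choices.

```python
import time


class TokenBucket:
    """Minimal token bucket: `rate` tokens refill per second, up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def _refill(self):
        # Add tokens in proportion to elapsed time, never exceeding capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, cost=1.0):
        """Spend `cost` tokens if available; return False instead of blocking."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Passing a request's token count as `cost` instead of 1 turns this into a token-throughput limiter, and the injectable clock makes it testable without sleeping.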

LlamaIndex Discord

- LlamaIndex's Multi-Agent Makeover: A post described the jump from a single agent to a multi-agent system with practical code examples in LlamaIndex, referencing this link.

- It also clarifies how agent factories manage multiple tasks working in tandem.

- Vectara's RAG Rally: An update showcased Vectara's RAG strengths, including data loading and streaming-based queries, referencing this link.

- It underscored agentic usage of RAG methods, with insights on reranking in a managed environment.

- Vercel's AI Survey Shout-Out: Community members were urged to fill out Vercel's State of AI Survey, found here.

- The survey aims to gather data on developer experiences, challenges, and target areas for future AI improvements.

- Vision Parse for PDF Power: A new open-source Python library, Vision Parse, was introduced for converting PDF to well-structured markdown using advanced Vision Language Models.

- Participants praised its potential to simplify document handling and welcomed open-source efforts for collective growth.

Nomic.ai (GPT4All) Discord

- Nomic's Data Mapping Marathon Ends: The final installment of the Data Mapping Series spotlights scalable graphics for embeddings and unstructured data in Nomic’s blog post.

- This six-part saga demonstrates how techniques like dimensionality reduction empower users to visualize massive datasets within their web browsers.

- BERT & GGUF Glitches Get Patched: Users faced issues loading Nomic’s BERT embedding model from Hugging Face after a commit broke functionality, but the fix is now live.

- Community members also flagged chat template problems in GGUF files, with updated versions promised in the upcoming release.

- Code Interpreter & System Loader Shine: A pull request proposes a code interpreter tool built on the Jinja template for running advanced code tasks.

- Simultaneously, users requested a more convenient system message loader to bypass manual copy-pasting of extensive context files.

- GPT4All Device Specs Confirmed: A query about GPT4All system requirements led to a link detailing hardware support.

- Important CPU, GPU, and memory details were highlighted to ensure a stable local LLM experience.
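Nomic's series covers its own mapping pipeline. As a much simpler stand-in for the dimensionality-reduction idea, a Gaussian random projection maps high-dimensional embeddings to 2D coordinates in pure Python. This is not the method Nomic describes: it preserves squared distances only in expectation, and with two output dimensions the distortion can be large, which is why nonlinear methods such as t-SNE or UMAP are the usual choice for maps.

```python
import math
import random


def random_projection(vectors, out_dim=2, seed=0):
    """Project vectors to `out_dim` dimensions with a scaled Gaussian random matrix.

    Entries are N(0, 1) scaled by 1/sqrt(out_dim), so squared norms (and hence
    pairwise squared distances) are preserved in expectation, not exactly.
    """
    rng = random.Random(seed)
    in_dim = len(vectors[0])
    scale = 1.0 / math.sqrt(out_dim)
    # One Gaussian row per output dimension.
    matrix = [[rng.gauss(0.0, 1.0) * scale for _ in range(in_dim)] for _ in range(out_dim)]
    # Each output coordinate is the dot product of a matrix row with the input vector.
    return [[sum(w * x for w, x in zip(row, v)) for row in matrix] for v in vectors]
```

Calling `random_projection(embeddings)` on a list of equal-length embedding vectors (a hypothetical input) returns one `[x, y]` pair per vector, ready to scatter-plot.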

tinygrad (George Hotz) Discord

- TinyChat Installation Tussle: One user ran into problems setting up TinyChat, reporting a missing tiktoken dependency, a 30-second system freeze, and a puzzling prompt about local network devices.

- George Hotz spoke about writing a tiktoken alternative within tinygrad and flagged 8GB of RAM as a constraint.

- Mac Scroll Direction Goes Rogue: A user complained that running TinyChat flipped the scroll direction on their Mac, then reverted once the app closed.

- George Hotz called this behavior baffling, acknowledging it as a strange glitch.

- Bounty Push and Layout Talk: Contributors discussed bounty rewards to push tinygrad forward, stressing tests and improvements as key drivers.

- A user mentioned the complexity of layout notation, linking to both a view merges doc and viewable_tensor.py for deeper context.

- Scheduler Query in #learn-tinygrad: A participant asked why the scheduler uses realize before expand or unsafe pad ops, with no clear explanation offered.

- The group didn't fully unpack the reasoning, leaving the topic open for further exploration.
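For readers curious what a tiktoken alternative involves at its core, here is a minimal sketch of rank-based byte-pair encoding: start from UTF-8 bytes and repeatedly merge the adjacent pair whose concatenation has the lowest rank. The merge table is a toy. Real tokenizers such as tiktoken also pre-split text with a regex and map each final piece to an integer ID, both omitted here, and nothing here reflects how tinygrad would implement it.

```python
def bpe_encode(text, ranks):
    """Greedy rank-based BPE over UTF-8 bytes.

    `ranks` maps a merged byte string to its priority (lower merges first).
    """
    parts = [bytes([b]) for b in text.encode("utf-8")]
    while len(parts) > 1:
        # Find the adjacent pair whose concatenation has the lowest rank.
        best_rank, best_i = None, None
        for i in range(len(parts) - 1):
            rank = ranks.get(parts[i] + parts[i + 1])
            if rank is not None and (best_rank is None or rank < best_rank):
                best_rank, best_i = rank, i
        if best_i is None:
            break  # no remaining pair is in the merge table
        parts[best_i:best_i + 2] = [parts[best_i] + parts[best_i + 1]]
    return parts


toy_ranks = {b"lo": 0, b"low": 1, b"er": 2}  # hypothetical merge table
print(bpe_encode("lower", toy_ranks))  # [b'low', b'er']
```

The quadratic rescan keeps the sketch short; production implementations track pair ranks more efficiently.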

Cohere Discord

- Ikuo Impresses & Etiquette Ensues: Ikuo618 introduced himself with six years of experience in DP, NLP, and CV, spotlighting his Python, TensorFlow, and PyTorch skills.

- A gentle reminder followed, advising members not to repost messages across channels for a cleaner conversation flow.

- Platform Feature Question Marks: A user asked about a feature's availability on the platform, and a member confirmed it's still not live.

- The inquirer expressed thanks, ending on a positive note with a smiley face.

- Cohere Keys & Rate Limits Exposed: Cohere provides evaluation and production API keys, detailed on the API keys page and in the pricing docs.

- Rate limits include 20 calls per minute for trial keys and 500 per minute for production keys on the Chat endpoint, while Embed and Classify have their own separate quotas.
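Using the Chat-endpoint limits quoted above, the minimum even spacing between calls and the duration of a batch job follow from simple arithmetic. The sketch assumes calls are spread evenly; depending on how Cohere enforces the limit, short bursts within a minute may also be allowed.

```python
# Chat-endpoint limits quoted in the summary above (calls per minute).
CHAT_LIMITS_PER_MIN = {"trial": 20, "production": 500}


def min_interval_s(calls_per_min):
    """Smallest even spacing (seconds) between calls that stays within the limit."""
    return 60.0 / calls_per_min


def paced_duration_s(n_calls, calls_per_min):
    """Seconds from the first to the last of n_calls evenly paced requests."""
    if n_calls <= 0:
        return 0.0
    return (n_calls - 1) * min_interval_s(calls_per_min)


for tier, limit in CHAT_LIMITS_PER_MIN.items():
    # Interval in seconds, then minutes needed for a 1,000-call job.
    print(tier, min_interval_s(limit), paced_duration_s(1000, limit) / 60)
```

At the trial limit, 1,000 evenly paced calls take about 50 minutes (999 × 3 s); at the production limit the same job needs about 2 minutes.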

Torchtune Discord

- Torchtune Teases Phi 4 & Roles: On the official Torchtune docs page, members confirmed that Torchtune currently supports only Phi 3 but welcomes contributions for Phi 4.

- They introduced a Contributor role on Discord and noted minimal differences between Phi 3 and Phi 4 to simplify new pull requests.

- Asynchronous RLHF Races Ahead: Asynchronous RLHF separates generation and learning for faster model training, detailed in “Asynchronous RLHF: Faster and More Efficient Off-Policy RL for Language Models”.

- The paper asks how much off-policyness we can tolerate, pushing for speed without sacrificing performance.

- Post-Training Gains Momentum: The Allen AI blog highlights that post-training is crucial after pre-training to ensure models follow human instructions safely.

- They outline instruction fine-tuning steps and focus on preserving capabilities such as intermediate reasoning while specializing.

- Instruction Tuning Tightrope: InstructGPT-style strategies can unwittingly diminish certain model abilities, especially if specialized tasks overshadow broader usage.

- Retaining coding proficiency while handling poetic or general instructions emerged as the delicate balance to maintain.
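The core idea behind asynchronous RLHF, decoupling generation from learning, can be illustrated with a toy actor/learner loop: a generator thread tags each batch with the policy version it sampled from, and the learner skips batches that are too stale. The `max_staleness` knob, queue size, and version counter are hypothetical stand-ins; no model, reward, or off-policy objective from the paper is implemented here.

```python
import queue
import threading


def run_async_rlhf(num_batches=20, max_staleness=2, queue_size=4):
    """Toy actor/learner loop where generation and learning run concurrently.

    Returns (staleness of each trained batch, number of dropped batches).
    "Training" only bumps a version counter; it stands in for a gradient step.
    """
    batches = queue.Queue(maxsize=queue_size)  # bounded: limits how far ahead generation runs
    lock = threading.Lock()
    state = {"version": 0}

    def generator():
        for i in range(num_batches):
            with lock:
                version = state["version"]  # policy version used to sample this batch
            batches.put({"id": i, "policy_version": version})
        batches.put(None)  # sentinel: generation finished

    trained_staleness, dropped = [], 0
    gen_thread = threading.Thread(target=generator)
    gen_thread.start()
    while (batch := batches.get()) is not None:
        with lock:
            staleness = state["version"] - batch["policy_version"]
            if staleness <= max_staleness:
                state["version"] += 1  # stand-in for one off-policy update
                trained_staleness.append(staleness)
            else:
                dropped += 1  # too far off-policy for this toy threshold
    gen_thread.join()
    return trained_staleness, dropped
```

Raising `max_staleness` trades freshness for throughput, which is the off-policyness question the paper studies.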

LLM Agents (Berkeley MOOC) Discord

- LLM Agents Hackathon Countdown: The submission deadline for the hackathon is 12/19 at 11:59 PM PST, with entries filed via the official Submission Form.

- The community is on standby for last-minute fixes, making sure everyone has a fair shot before the clock hits zero.

- Support for Eleventh-Hour LLM Queries: Participants can drop last-minute questions in the chat for quick feedback from peers.

- Organizers urge coders to finalize checks promptly, avoiding frantic merges at the buzzer.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LAION Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AINews, please share with a friend! Thanks in advance!