[AINews] Mistral Large 2 + RIP Mistral 7B, 8x7B, 8x22B

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

A Mistral Commercial License is what you'll need.

AI News for 7/23/2024-7/24/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (474 channels, and 4118 messages) for you. Estimated reading time saved (at 200wpm): 428 minutes. You can now tag @smol_ai for AINews discussions!

It is instructive to consider the focuses of Mistral Large in Feb 2024 vs today's Mistral Large 2:

- Large 1: big focus on MMLU 81% between Claude 2 (79%) and GPT4 (86.4%), API-only, no parameter count

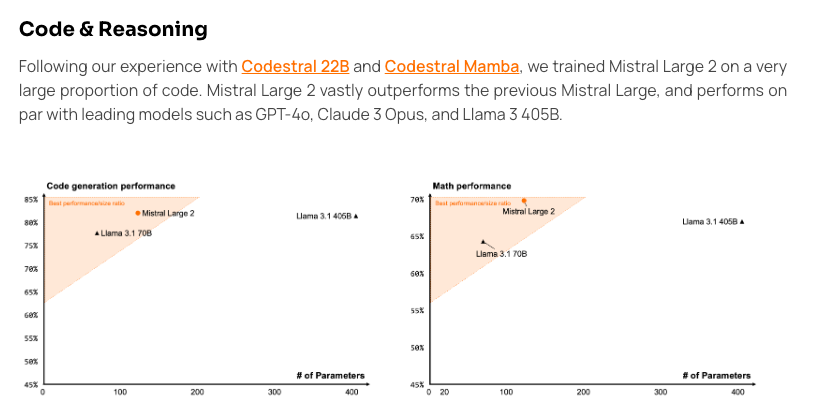

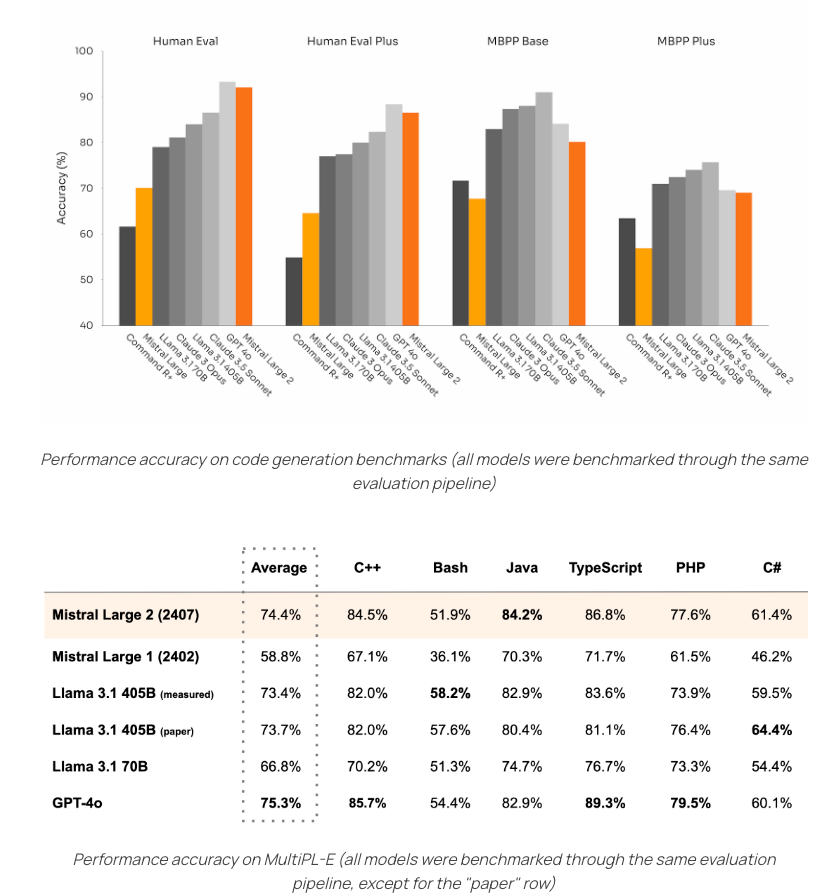

- Large 2: one small paragraph on MMLU 84% (still not better than GPT4!), 123B param Open Weights under a Research License, "sets a new point on the performance/cost Pareto front of open models" but new focus is on codegen & math performance using the "convex hull" chart made popular by Mixtral 8x22

- Both have decent focus on Multilingual MMLU

- Large 1: 32k context

- Large 2: 128k context

- Large 1: only passing mention of codegen

- Large 2: "Following our experience with Codestral 22B and Codestral Mamba, we trained Mistral Large 2 on a very large proportion of code."

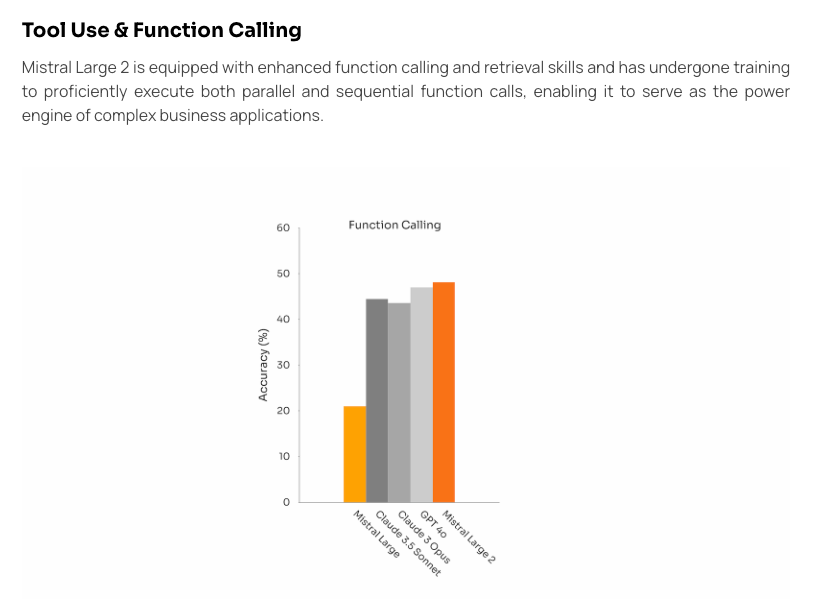

- Large 1: "It is natively capable of function calling" and "JSON format"

- Large 2: "Sike actually our Function calling wasn't that good in v1 but we're better than GPT4o now"

- Large 2: "A significant effort was also devoted to enhancing the model’s reasoning capabilities."

- Llama 3.1: <<90 pages of extreme detail on how sythetic data was used to improve reasoning and math>>

Mistral's la Plateforme is deprecating all its Apache open source models (Mistral 7B, Mixtral 8x7B and 8x22B, Codestral Mamba, Mathstral) and only Large 2 and last week's 12B Mistral Nemo remain for its generalist models. This deprecation was fully predicted by the cost-elo normalized frontier chart we discussed at the end of yesterday's post.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

temporary outage today. back tomorrow.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Llama 3.1 Release and Capabilities

- Meta Officially Releases Llama-3-405B, Llama-3.1-70B & Llama-3.1-8B (Score: 910, Comments: 373): Meta has officially released new versions of their Llama language models, including Llama-3-405B, Llama-3.1-70B, and Llama-3.1-8B. The models are available for download from the Llama website, and can be tested on cloud provider playgrounds such as Groq and Together.

- Let's discuss Llama-3.1 Paper (A lot of details on pre-training, post-training, etc) (Score: 109, Comments: 26): Llama 3.1 paper reveals pre-training details The Llama 3.1 paper, available at ai.meta.com, provides extensive details on the model's pre-training and post-training processes. The paper includes hyperparameter overviews, validation loss graphs, and various performance metrics for different model sizes ranging from 7B to 70B parameters.

- Early Hot Take on Llama 3.1 8B at 128K Context (Score: 72, Comments: 49): Llama 3.1 8B model's 128K context performance underwhelms The author tested the Llama 3.1 8B model with 128K context using a novel-style story and found it less capable than Mistral Nemo and significantly inferior to the Yi 34B 200K model. The Llama model struggled to recognize previously established context about a character's presumed death and generate appropriate reactions, even when tested with FP16 precision in 24GB VRAM using exllama with Q6 cache. Despite further testing with 8bpw and Q8 quantization, the author ultimately decided to abandon Llama 8B in favor of Mistral Dori.

Theme 2. Open Source AI Strategy and Industry Impact

- Open source AI is the path forward - Mark Zuckerberg (Score: 794, Comments: 122): Mark Zuckerberg advocates for open source AI Mark Zuckerberg argues that open source AI is crucial for advancing AI technology and ensuring its responsible development. In his blog post, Zuckerberg emphasizes the benefits of open source AI, including faster innovation, increased transparency, and broader access to AI tools and knowledge.

- Llama 3 405b is a "systemic risk" to society according to the AI Act (Score: 169, Comments: 68): Meta's Llama 3.1 405B model has been classified as a "systemic risk" under the European Union's AI Act. This designation applies to AI systems with more than 10^25 parameters, placing significant regulatory obligations on Meta for the model's development and deployment. The classification highlights the growing concern over the potential societal impacts of large language models and the increasing regulatory scrutiny they face in Europe.

- OpenAI right now... (Score: 167, Comments: 27): OpenAI's competitors are closing the gap. The release of Llama 3.1 by Meta has demonstrated significant improvements in performance, potentially challenging OpenAI's dominance in the AI language model space. This development suggests that the competition in AI is intensifying, with other companies rapidly advancing their capabilities.

- ChatGPT's Declining Performance: Users report ChatGPT's coding abilities have deteriorated since early 2023, with GPT-4 and GPT-4 Turbo showing inconsistent results and reduced reliability for tasks like generating PowerShell scripts.

- OpenAI's Credibility Questioned: Critics highlight OpenAI's lobbying efforts to regulate open-source AI and the addition of former NSA head Paul Nakasone to their board, suggesting a shift away from their original "open" mission.

- Calls for Open-Source Release: Some users express desire for OpenAI to release model weights for local running, particularly for GPT-3.5, as a way to truly advance the industry and live up to their "Open" name.

Theme 3. Performance Benchmarks and Comparisons

- LLama 3.1 vs Gemma and SOTA (Score: 140, Comments: 37): Llama 3.1 outperforms Gemma and other state-of-the-art models across various benchmarks, including MMLU, HumanEval, and GSM8K. The 7B and 13B versions of Llama 3.1 show significant improvements over their predecessors, with the 13B model achieving scores comparable to or surpassing larger models like GPT-3.5. This performance leap suggests that Llama 3.1 represents a substantial advancement in language model capabilities, particularly in reasoning and knowledge-based tasks.

- Llama 3.1 405B takes #2 spot in the new ZebraLogic reasoning benchmark (Score: 110, Comments: 9): Llama 3.1 405B has secured the second place in the newly introduced ZebraLogic reasoning benchmark, demonstrating its advanced reasoning capabilities. This achievement positions the model just behind GPT-4 and ahead of other notable models like Claude 2 and PaLM 2. The ZebraLogic benchmark is designed to evaluate a model's ability to handle complex logical reasoning tasks, providing a new metric for assessing AI performance in this crucial area.

- The final straw for LMSYS (Score: 175, Comments: 55): LMSYS benchmark credibility questioned. The author criticizes LMSYS's ELO ranking for placing GPT-4o mini as the second-best model overall, arguing that other models like GPT-4, Gemini 1.5 Pro, and Claude Opus are more capable. The post suggests that human evaluation of LLMs is now limited by human capabilities rather than model capabilities, and recommends alternative benchmarks such as ZebraLogic, Scale.com leaderboard, Livebench.ai, and LiveCodeBench for more accurate model capability assessment.

Theme 4. Community Tools and Deployment Resources

- Llama-3.1 8B Instruct GGUF are up (Score: 50, Comments: 15): Llama-3.1 8B Instruct GGUF models have been released, offering various quantization levels including Q2_K, Q3_K_S, Q3_K_M, Q4_0, Q4_K_S, Q4_K_M, Q5_0, Q5_K_S, Q5_K_M, Q6_K, and Q8_0. These quantized versions provide options for different trade-offs between model size and performance, allowing users to choose the most suitable version for their specific use case and hardware constraints.

- Finetune Llama 3.1 for free in Colab + get 2.1x faster, 60% less VRAM use + 4bit BnB quants (Score: 85, Comments: 24): Unsloth has released tools for Llama 3.1 that make finetuning 2.1x faster, use 60% less VRAM, and improve native HF inference speed by 2x without accuracy loss. The release includes a free Colab notebook for finetuning the 8B model, 4-bit Bitsandbytes quantized models for faster downloading and reduced VRAM usage, and a preview of their Studio Chat UI for local chatting with Llama 3.1 8B Instruct in Colab.

- We made glhf.chat: run (almost) any open-source LLM, including 405b (Score: 54, Comments: 26): New platform glhf.chat launches for running open-source LLMs The newly launched glhf.chat platform allows users to run nearly any open-source LLM supported by the vLLM project, including models up to ~640GB of VRAM. Unlike competitors, the platform doesn't have a hardcoded model list, enabling users to run any compatible model or finetune by pasting a Hugging Face link, with support for models like Llama-3-70b finetunes and upcoming Llama-3.1 versions.

- The platform initially required an invite code "405B" for registration, which was mentioned in the original post. reissbaker, the developer, later removed the invite system entirely to simplify access for all users.

- Users encountered a "500 user limit" error due to an oversight in upgrading the auth provider. Billy, another glhf.chat developer, acknowledged the issue and promised a fix within minutes.

- In response to a user request, reissbaker shipped a fix for the Mistral NeMo architecture, enabling support for models like the dolphin-2.9.3-mistral-nemo-12b on the platform.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Releases and Benchmarks

- Meta releases Llama 3.1 405B model: Meta has released a new 405 billion parameter Llama model. Benchmark results show it performing competitively with GPT-4 and Claude 3.5 Sonnet on some tasks.

- Zuckerberg argues for open-sourcing AI models: Mark Zuckerberg made the case that open-sourcing AI models is beneficial, arguing that closed models will be stolen anyway. He stated that "it doesn't matter that China has access to open weights, because they will just steal weights anyway if they're closed."

- Google releases "AI Agents System": Google has released Project Oscar, an open-source platform for creating AI agents to manage software projects, particularly for monitoring issues and bugs.

AI Capabilities and Benchmarks

- Debate over AI surpassing human intelligence: There is ongoing discussion about whether current AI models have surpassed human-level intelligence in certain domains. Some argue that AI is now "smart enough to fool us", while others contend that AI still struggles with simple logic and math tasks.

- Limitations of current benchmarks: Critics point out that current AI benchmarks may not accurately measure intelligence. For example, the Arena benchmark measures which responses people prefer, not necessarily intelligence.

AI Ethics and Corporate Practices

- OpenAI criticized for non-disclosure agreements: OpenAI faced criticism after a community note on social media highlighted that the company had previously used non-disclosure agreements that prevented employees from making protected disclosures.

- Debate over open vs. closed AI development: There is ongoing discussion about the merits of open-sourcing AI models versus keeping them closed. Some argue that open-sourcing promotes innovation, while others worry about potential misuse.

AI Discord Recap

A summary of Summaries of Summaries

1. Llama 3.1 Model Performance and Challenges

- Fine-Tuning Woes: Llama 3.1 users reported issues with fine-tuning, particularly with error messages related to model configurations and tokenizer handling, suggesting updates to the transformers library.

- Discussions emphasized the need for specifying correct model versions and maintaining the right configuration to mitigate these challenges.

- Inconsistent Performance: Users noted that Llama 3.1 8B struggles with reasoning and coding tasks, with some members expressing skepticism regarding its overall performance.

- Comparisons suggest that while it's decent for its size, its logic capabilities appear lacking, especially contrasted with models like Gemma 2.

- Overload Issues: The Llama 3.1 405B model frequently shows 'service unavailable' errors due to being overloaded with requests, suggesting higher demand and potential infrastructure limits.

- Users discussed the characteristics of the 405B variant, mentioning that it feels more censored compared to its 70B sibling.

2. Mistral Large 2 Model

- Mistral Large 2 Release: On July 24, 2024, Mistral AI launched Mistral Large 2, featuring an impressive 123 billion parameters and a 128,000-token context window, pushing AI capabilities further.

- Mistral Large 2 is reported to outperform Llama 3.1 405B, particularly in complex mathematical tasks, making it a strong competitor against industry giants.

- Multilingual Capabilities: The Mistral Large 2 model boasts a longer context window and multilingual support compared to existing models, making it a versatile tool for various applications.

- Members engaged in comparisons with Llama models, noting ongoing performance enhancement efforts in this evolving market.

3. AI in Software Development and Job Security

- Job Security Concerns: Participants addressed job security uncertainties among junior developers as AI tools increasingly integrate into coding practices, potentially marginalizing entry-level roles.

- Consensus emerged that experienced developers should adapt to these tools, using them to enhance productivity rather than replace human interaction.

- Privacy in AI Data Handling: Concerns arose regarding AI's data handling practices, particularly the implications of human reviewers accessing sensitive information.

- The discourse underscored the critical need for robust data management protocols to protect user privacy.

4. AI Model Benchmarking and Evaluation

- Benchmarking Skepticism: Skepticism arises over the performance metrics of Llama 405b, with discussions highlighting its average standing against Mistral and Sonnet models.

- The community reflects on varied benchmark results and subjective experiences, likening benchmarks to movie ratings that fail to capture true user experience.

- Evaluation Methods: The need for better benchmarks in hallucination prevention techniques was highlighted, prompting discussions on improving evaluation methods.

- A brief conversation with a Meta engineer raised concerns about the current state of benchmarking, suggesting a collaborative approach to developing more reliable metrics.

5. Open-Source AI Developments

- Llama 3.1 Release: The Llama 3.1 model has officially launched, expanding context length to 128K and supporting eight languages, marking a significant advancement in open-source AI.

- Users reported frequent 'service unavailable' errors with the Llama 3.1 405B model due to overload, suggesting it feels more censored than its 70B counterpart.

- Mistral Large 2 Features: Mistral Large 2 features state-of-the-art function calling capabilities, with day 0 support for structured outputs and agents.

- This release aligns with enhanced function calling and structured outputs, providing useful resources like cookbooks for users to explore.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Llama 3.1 Fine-Tuning Challenges: Users reported issues fine-tuning Llama 3.1, particularly with error messages stemming from model configurations and tokenizer handling, suggesting updates to the transformers library.

- Discussions emphasized the need for specifying correct model versions and maintaining the right configuration to mitigate these challenges.

- Job Security Concerns in AI Development: Participants addressed job security uncertainties among junior developers as AI tools increasingly integrate into coding practices, potentially marginalizing entry-level roles.

- Consensus emerged that experienced developers should adapt to these tools, using them to enhance productivity rather than replace human interaction.

- Insights on Image Generation Bias: Discussions around image generation highlighted challenges in achieving diversity and addressing biases inherent in AI models, which are crucial for educational contexts.

- Critiques of current diversity efforts emerged, pointing out execution flaws that could skew historical accuracy.

- Performance of Mistral Large 2: The Mistral Large 2 model surfaced as a strong competitor in the AI landscape, boasting a longer context window and multilingual support compared to existing models.

- Members engaged in comparisons with Llama models, noting ongoing performance enhancement efforts in this evolving market.

- Privacy Concerns in AI Data Handling: Concerns arose regarding AI's data handling practices, particularly the implications of human reviewers accessing sensitive information.

- The discourse underscored the critical need for robust data management protocols to protect user privacy.

LM Studio Discord

- LM Studio struggles running Llama 3.1: Users identified that LM Studio cannot run Llama 3.1 on OpenCL cards; upgrading to version 0.2.28 is recommended for better support.

- Confirmed updates from LM Studio are essential for effective performance of large models like Llama 3.1.

- ROCm 0.2.28 leads to performance degradation: After the ROCm 0.2.28 update, a user experienced reduced performance, seeing only 150w usage on a dual 7900 XT setup.

- Reverting to 0.2.27 restored normal performance, prompting calls for a deeper investigation into changes in the new update.

- Nemo Models face context and performance issues: Users report that Nemo models function with current versions but suffer from context length limitations and slower outputs due to insufficient RAM.

- There were success stories with particular setups, alongside suggestions for optimizations.

- GPU Offloading Problems Persist: Several members reported malfunctioning GPU offloading on their systems, particularly with M3 Max and 4080S GPUs, often requiring manual adjustments.

- Automatic settings caused errant outputs, indicating a need for more reliable manual configurations for better performance.

- Meta-Llama 3.1 70B hits the repository: The release of 70B quant models for Meta-Llama 3.1 has been announced, available through the repository.

- Enthusiasm in the channel was notable, with expectations for improved performance following a re-upload to fix a tokenizer bug.

Perplexity AI Discord

- Llama 3.1 405B Makes Waves: The Llama 3.1 405B model is touted as the most capable open-source model, now available on Perplexity, rivaling GPT-4o and Claude Sonnet 3.5 for performance.

- Exciting plans for its integration into mobile applications are in the works, enhancing accessibility for on-the-go developers.

- Mistral Large 2 Breaks New Ground: On July 24, 2024, Mistral AI launched Mistral Large 2, featuring an impressive 123 billion parameters and a 128,000-token context window, pushing AI capabilities further.

- Mistral Large 2 is reported to outperform Llama 3.1 405B, particularly in complex mathematical tasks, making it a strong competitor against industry giants.

- AI Model Benchmarking Under Scrutiny: Skepticism arises over the performance metrics of Llama 405b, with discussions highlighting its average standing against Mistral and Sonnet models.

- The community reflects on varied benchmark results and subjective experiences, likening benchmarks to movie ratings that fail to capture true user experience.

- NextCloud Integrates OpenAI: A recent integration of NextCloud with OpenAI has sparked interest, featuring a community-driven, open-source approach that promotes clear coding standards.

- A GitHub repository was shared, providing aspiring developers resources to explore this new functionality and its implications.

- TikTok's Search Engine Potential: A lively discussion on TikTok as a search tool for Gen Z highlights its rising relevance and challenges traditional search engines.

- Concerns around the platform's reliability, especially in health advice, indicate a need for caution when using TikTok for critical information.

OpenAI Discord

- Mistral-7B boasts massive context windows: The Mistral-7B-v0.3 model features an impressive 128k context window and supports multiple languages, while the Mistral Large version runs efficiently at 69GB using ollama.

- Users praised its capabilities, pointing to potential applications for multitasking with larger datasets.

- Affordable GPU server options emerge: Discussions highlighted Runpod as a budget-friendly GPU server option for large models, priced at just $0.30/hour.

- Participants recommended using LM Studio and ollama for better performance tailored to specific model requirements.

- Kling AI offers quirky image-to-video generation: Kling AI impressed users with its ability to create videos from still images, although some noted issues with video quality and server overloads.

- Despite mixed experiences, the engaging output sparked further interest in experimenting with the tool.

- Memory feature inconsistencies frustrate users: Members reported variable appearances of the memory feature in the EU, with some only able to access it temporarily for five minutes.

- This led to lighthearted banter about the feature’s operational status and its overall reliability.

- Generating PDFs with OpenAI in Python: A user sought help for generating PDF documents via Python using OpenAI, looking for ways to automate section descriptions based on uploaded content.

- This discussion drove a collaborative exchange on effective workflows to enhance document generation processes.

Nous Research AI Discord

- LLM Distillation Advancements: Members have highlighted the potential of the Minitron GitHub repository for understanding recent advancements in LLM distillation techniques using pruning and knowledge distillation.

- This repository reflects ongoing efforts similar to models like Sonnet, Llama, and GPT-4Omini.

- LLaMa 3 Introduced as a New Player: The recently introduced LLaMa 3 models feature a dense Transformer structure equipped with 405B parameters and a context window of up to 128K tokens, designed for various complex tasks.

- These models excel in multilinguality and coding, setting a new benchmark for AI applications.

- Mistral Large 2's Competitive Edge: The release of Mistral Large 2 with 123B parameters and a 128k context window has captivated users, especially for coding tasks.

- Despite its non-commercial license, its innovative design positions it well for optimal API performance.

- Fine-Tuning Llama 3 Presents Challenges: Concerns surface over fine-tuning Llama 3 405B, where some suggest only Lora FTing as a feasible approach.

- This situation may bolster advances in DoRA fine-tuning efforts within the OSS community.

- Moral Reasoning and the Trolley Problem: Discussions around incorporating difficult moral queries, like the trolley problem, have emphasized the need to evaluate models' moral foundations.

- This triggers debates on whether these tasks examine pure reasoning skills or ethical frameworks.

OpenRouter (Alex Atallah) Discord

- DeepSeek Coder V2 Launches Private Inference Provider: DeepSeek Coder V2 now features a private provider to serve requests on OpenRouter without input training, marking a significant advancement in private model deployment.

- This new capacity reflects strategic progression within the OpenRouter platform as it enhances usability for users.

- Concerns over Llama 3.1 405B Performance: Users express dissatisfaction with the performance of Llama 3.1 405B, particularly its handling of NSFW content where it often refuses prompts or outputs training data.

- Feedback indicates temperature settings significantly affect quality, with some users reporting better output at lower temperatures.

- Mistral Large 2 Replacement Provides Better Multilingual Support: Mistral Large 2 is now launched as Mistral Large, effectively replacing the previous version with enhanced multilingual capabilities.

- Users speculate it may outperform Llama 3.1 when dealing with languages like French, as they assess its comparative effectiveness.

- Users Discuss OpenRouter API Limitations: Discussion highlights OpenRouter API challenges, particularly in terms of rate limits and multilingual input management, which complicates model usage.

- While some models are in free preview, users report strict limits on usage and context, pointing to a need for improvements.

- Interest in Open-Source Coding Tools Grows: Users show a keen interest in open-source autonomous coding tools like Devika and Open Devin, asking for recommendations based on current efficacy.

- This shift reflects a desire to experiment with alternatives to mainstream AI coding solutions that exhibit varied performance.

HuggingFace Discord

- Llama 3.1 Launches with Excitement: The Llama 3.1 model has officially launched, expanding context length to 128K and supporting eight languages, marking a significant advancement in open-source AI. The model can be explored in detail through the blogpost and is available for testing here.

- Users reported frequent 'service unavailable' errors with the Llama 3.1 405B model due to overload, suggesting it feels more censored than its 70B counterpart.

- Improved HuggingChat with Version v0.9.1: The latest version HuggingChat v0.9.1 integrates new features that significantly enhance user accessibility. Users can discover more functionalities through the's model page.

- The update aims to improve interactions utilizing the new HuggingChat features.

- Risks with MultipleNegativesRankingLoss: Difficulties were reported when training sentence encoders using MultipleNegativesRankingLoss, where increasing the batch size led to worse model performance. Insights were sought on common dataset pitfalls associated with this method.

- One user described their evaluation metrics, focusing on recall@5, recall@10, and recall@20 for better benchmarking.

- Mistral-NeMo 12B Shines in Demo: A demo of Mistral-NeMo 12B Instruct using llama.cpp showcases the model's significant performance enhancements. Users are encouraged to experiment for an improved chat experience.

- Community interest is soaring regarding the model's capabilities and potential applications in various AI tasks.

- Questions on Rectified Flow and Evaluation: Members expressed frustration regarding the lack of discussions around Rectified Flow and Flow Matching, especially in contrast to DDPM and DDIM debates. They emphasized the difficulty finding straightforward examples for Flow applications such as generating MNIST.

- Evaluation methods for generative models were explored, with a focus on qualitative and quantitative methods for assessing the performance of models like Stable Diffusion versus GANs.

Stability.ai (Stable Diffusion) Discord

- Kohya-ss GUI Compatibility Quirks: Users reported that the current version of Kohya-ss GUI faces compatibility issues with Python 3.10, requiring an upgrade to 3.10.9 or higher.

- One user humorously remarked that it resembles needing a weight limit of 180lbs but not exceeding 180.5lbs.

- Exciting Lycoris Features on the Horizon: Onetrainer is potentially integrating Lycoris features in a new dev branch, spurring discussions on functional enhancements.

- Community members noted a preference for bmaltais' UI wrapper, which could improve experiences with these new integrations.

- Community Raves About Art Models: Discussion outlined performance ratings for models including Kolors, Auraflow, Pixart Sigma, and Hunyuan, with Kolors being commended for its speed and quality.

- Participants engaged in a debate on user experiences and specific applications of these models, showcasing diverse opinions.

- Stable Diffusion Models Under the Microscope: Users examined the differences in output between Stable Diffusion 1.5 and SDXL, focusing on detail and resolution.

- Techniques such as Hidiffusion and Adaptive Token Dictionary were discussed as methods to boost older model outputs.

- Welcome to Stable Video 4D!: The newly introduced Stable Video 4D model allows transformation of single object videos into multi-angle views for creative projects.

- Currently in research, this model promises applications in game development, video editing, and virtual reality.

Eleuther Discord

- Diving Deep into Sampling Models: Members discussed various sampling methods such as greedy, top-p, and top-k, highlighting their respective trade-offs, particularly for large language models.

- Stochastic sampling is noted for diversity but complicates evaluation, contrasting with the reliability of greedy methods which generate the most probable paths.

- Llama 3.1's Sampling Preferences: In discussions about Llama 3.1, participants recommended consulting its paper for optimal sampling methods, with a lean towards probabilistic sampling techniques.

- One member pointed out that Gemma 2 effectively uses top-p and top-k strategies common in model evaluations.

- Misleading Tweets Trigger Discussion: Members analyzed a misleading tweet related to Character.ai's model, particularly its use of shared KV layers impacting performance metrics.

- Concerns arose regarding the accuracy of such information, highlighting the community's ongoing journey to comprehend transformer architectures.

- MoE vs Dense Models Debate: A lively debate emerged over the preference for dense models over Mixture-of-Experts (MoE), citing high costs and engineering challenges of handling MoEs in training.

- Despite the potential efficiency of pre-trained MoEs, concerns linger about varied organizational capabilities to implement them.

- Llama API Evaluation Troubles: Users reported errors with the

lm_evaltool for Llama 3.1-405B, particularly challenges in handling logits and multiple-choice tasks through the API.- Errors such as 'No support for logits' and 'Method Not Allowed' prompted troubleshooting discussions, with successful edits to the

_create_payloadmethod noted.

- Errors such as 'No support for logits' and 'Method Not Allowed' prompted troubleshooting discussions, with successful edits to the

CUDA MODE Discord

- CUDA Installation Troubleshooting: Members faced issues when Torch wasn't compiled with CUDA, leading to import errors. Installation of the CUDA version from the official page was recommended for ensuring compatibility.

- After setting up CUDA, one user encountered a torch.cuda.OutOfMemoryError while allocating 172.00 MiB, suggesting adjustments to max_split_size_mb to tackle memory fragmentation.

- Exploring Llama-2 and Llama-3 Features: A member shared a fine-tuned Llama-2 7B model, trained on a 24GB GPU in 19 hours. Concurrently, discussions on implementing blockwise attention in Llama 3 focused on the sequence splitting stage relative to rotary position embeddings.

- Additionally, inquiries on whether Llama 3.1 has improved inference latency over 3.0 were raised, reflecting ongoing interests in model performance advancements.

- Optimizations in FlashAttention for AMD: FlashAttention has gained support for AMD ROCm, following the implementation detailed in GitHub Pull Request #1010. The updated library maintains API consistency while introducing several new C++ APIs like

mha_fwd.- Current compatibility for the new version is limited to MI200 and MI300, suggesting potential broader updates may follow in the future.

- PyTorch Compile Insights: Users reported that

torch.compileincreased RAM usage with small Bert models, and switching from eager mode resulted in worse performance. Suggestions to use the PyTorch profiler to analyze memory traces during inference were offered.- Observations indicated no memory efficiency improvements with

reduce-overheadandfullgraphcompile options, emphasizing the importance of understanding configuration effects.

- Observations indicated no memory efficiency improvements with

- Strategies for Job Hunting in ML/AI: A user sought advice on drafting a roadmap for securing internships and full-time positions in ML/AI, sharing a Google Document with their plans. They expressed a commitment to work hard and remain flexible on timelines.

- Further feedback on their internship strategies was encouraged, highlighting the willingness to dedicate extra hours towards achieving their objectives.

OpenAccess AI Collective (axolotl) Discord

- Llama 3.1 Struggles with Errors: Users reported issues with Llama 3.1, facing errors like AttributeError, which may stem from outdated images or configurations.

- One user found a workaround by trying a different image, expressing frustration over ongoing model updates.

- Mistral Goes Big with Large Model Release: Mistral released the Mistral-Large-Instruct-2407 model with 123B parameters, claiming state-of-the-art performance.

- The model offers multilingual support, coding proficiency, and advanced agentic capabilities, stirring excitement in the community.

- Multilingual Capabilities under Scrutiny: Comparisons between Llama 3.1 and NeMo highlighted performance differences, particularly in multilingual support.

- While Llama 3 has strengths in European languages, users noted that NeMo excels in Chinese and others.

- Training Large Models Hits RAM Barriers: Concerns arose over the significant RAM requirements for training large models like Mistral, with users remarking on their limitations.

- Some faced exploding gradients during training and speculated whether this issue was tied to sample packing.

- Adapter Fine-Tuning Stages Gaining Traction: Members discussed multiple stages of adapter fine-tuning, proposing the idea of initializing later stages with previous results, including SFT weights for DPO training.

- A feature request on GitHub suggests small code changes to facilitate this method.

Interconnects (Nathan Lambert) Discord

- GPT-4o mini dominates Chatbot Arena: With over 4,000 user votes, GPT-4o mini is now tied for #1 in the Chatbot Arena leaderboard, outperforming its previous version while being 20x cheaper. This milestone signals a notable decline in the cost of intelligence for new applications.

- Excitement was evident as developers celebrated this accomplishment, noting its implications for future chatbot experiences.

- Mistral Large 2: A New Contender: Mistral Large 2 boasts a 128k context window and multilingual support, positioning itself strongly for high-complexity tasks under specific licensing conditions. Discussions surfaced on the lack of clarity regarding commercial use of this powerful model.

- Members emphasized the need for better documentation to navigate the licensing landscape effectively.

- OpenAI's $5 billion Loss Prediction: Estimates suggest OpenAI could face a staggering loss of $5 billion this year, primarily due to Azure costs and training expenses. The concern over profitability has prompted discussions about the surprisingly low API revenue compared to expectations.

- This situation raises fundamental questions about the sustainability of OpenAI's business model in the current environment.

- Llama 3 Officially Released: Meta has officially released Llama3-405B, trained on 15T tokens, which claims to outperform GPT-4 on all major benchmarks. This marks a significant leap in open-source AI technology.

- The launch has sparked discussions around the integration of 100% RLHF in the post-training capabilities of the model, which highlights the crucial role of this method.

- CrowdStrike's $10 Apology Gift Card for Outage: CrowdStrike is offering partners a $10 Uber Eats gift card as an apology for a massive outage, but some found the vouchers had been canceled when attempting to redeem them. This incident underscores the operational risks associated with technology updates.

- Members shared mixed feelings about the effectiveness of this gesture amid ongoing frustrations.

Modular (Mojo 🔥) Discord

- Mojo Compiler Versioning Confusion: A discussion highlighted the uncertainty ongoing about whether the next main compiler version will be 24.5 or 24.8, citing potential disconnects between nightly and main releases as they progress towards 2025.

- Community members raised concerns about adhering to different release principles, complicating future updates.

- Latest Nightly Update Unpacked: The newest nightly Mojo compiler update,

2024.7.2405, includes significant changes such as the removal of DTypePointer and enhanced string formatting methods, details of which can be reviewed in the current changelog.- The removal of DTypePointer necessitates code updates for existing projects, prompting calls for clearer transition guidelines.

- SDL Integration Questions Arise: A user requested resources for integrating SDL with Mojo, aiming to gain a better understanding of the process, and how to use DLHandle effectively.

- This reflects a growing interest in enhancing Mojo’s capabilities through third-party libraries.

- Discussion on Var vs Let Utility: A member initiated a debate on the necessity of using var in situations where everything is already declared as such, suggesting redundancy in usage.

- Another pointed out that var aids the compiler while let caters to those favoring immutability, highlighting a preference debate among developers.

- Exploring SIMD Type Comparability: Members discussed challenges in establishing total ordering for SIMD types, noting tension between generic programming and specific comparisons.

- It was proposed that a new SimdMask[N] type might alleviate some complexities associated with platform-specific behaviors.

Latent Space Discord

- Factorio Automation Mod sparks creativity: The new factorio-automation-v1 mod allows agents to automate tasks like crafting and mining in Factorio, offering a fun testing ground for agent capabilities.

- Members are excited about the possibilities this mod opens up for complex game interactions.

- GPT-4o Mini Fine-Tuning opens up: OpenAI has launched fine-tuning for GPT-4o mini, available to tier 4 and 5 users, with the first 2M training tokens free daily until September 23.

- Members noted performance inconsistencies when comparing fine-tuned GPT-4o mini with Llama-3.1-8b, raising questions about exact use cases.

- Mistral Large 2 impresses with 123B parameters: Mistral Large 2 has been revealed, boasting 123 billion parameters, strong coding capabilities, and supporting multiple languages.

- However, indications show it achieved only a 60% score on Aider's code editing benchmark, slightly ahead of the best GPT-3.5 model.

- Reddit's Content Policy stirs debate: A heated discussion surfaced about Reddit's public content policy, with concerns around user control over generated content.

- Members argue that the vague policy creates significant issues, highlighting the need for clearer guidelines.

- Join the Llama 3 Emergency Paper Club: An emergency paper club meeting on The Llama 3 Herd of Models is set for later today, a strong contender for POTY Awards.

- Key contributors to the discussion include prominent community members, emphasizing the paper's significance.

LlamaIndex Discord

- LlamaParse Enhances Markdown Capabilities: LlamaParse now showcases support for Markdown output, plain text, and JSON mode for better metadata extraction. Features such as multi-language output enhance its utility across workflows, as demonstrated in this video.

- This update is set to significantly improve OCR efficiency for diverse applications, broadening its adoption for various tasks beyond simple text.

- MongoDB’s AI Applications Program is Here: The newly launched MongoDB AI Applications Program (MAAP) aims to simplify the journey for organizations building AI-enhanced applications. With reference architectures and integrated technology stacks, it accelerates AI deployment timeframes; learn more here.

- The initiative addresses the urgent need developers have to modernize their applications with minimal overhead, contributing to more efficient workflows.

- Mistral Large 2 Introduces Function Calling: Mistral Large 2 is rolling out enhanced function calling capabilities, which includes support for structured outputs as soon as it launches. Detailed resources such as cookbooks are provided to aid developers in utilizing these new functionalities; explore them here.

- This release underscores functional versatility for LLM applications, allowing developers to implement more complex interactions effectively.

- Streaming Efficiency with SubQuestionQueryEngine: Members discussed employing SubQuestionQueryEngine.from_defaults to facilitate streaming responses and reduce latency within LLM queries. Some solutions were proposed using

get_response_synthesizer, though challenges remain in implementation.- Despite the hurdles in adoption, there's optimism about improving user interaction speeds across LLM integrations.

- Doubts Surface Over Llama 3.1 Metrics: Skepticism mounts regarding the metrics published by Meta for Llama 3.1, especially its effectiveness in RAG evaluations. Users are questioning the viability of certain models like

llama3:70b-instruct-q_5for practical tasks.- This skepticism reflects broader community concerns regarding the reliability of AI metrics in assessing model performance in various applications.

Cohere Discord

- Cohere Dashboard Reloading Trouble: Members reported issues with the Cohere account dashboard constantly reloading, while others noted no such problems on their end, leading to discussions on potential glitches.

- This prompted a conversation about rate limiting as a possible cause for the reloading issue.

- Cheering for Command R Plus: With each release of models like Llama 3.1, members expressed increasing appreciation for Command R Plus, highlighting its capabilities compared to other models.

- One user proposed creating a playground specifically for model comparisons to further explore this growing sentiment.

- Server Performance Under Scrutiny: Concerns arose regarding potential server downtime, but some users confirmed that the server was in full operational status.

- Suggestions included investigating rate limiting as a factor influencing user experience.

- Innovative Feature Suggestions for Cohere: A member suggested incorporating the ability to use tools during conversations in Cohere, like triggering a web search on demand.

- Initial confusion arose, but it was clarified that some of these functionalities are already available.

- Community Welcomes New Faces: New members introduced themselves, sharing backgrounds in NLP and NeuroAI, sparking excitement about the community.

- The discussion also touched on experiences with Command-R+, emphasizing its advantages over models like NovelAI.

DSPy Discord

- Zenbase/Core Launch Sparks Excitement: zenbase/core is now live, enabling users to integrate DSPy’s optimizers directly into their Python projects like Instructor and LangSmith. Support the launch by engaging with their Twitter post.

- Community members are responding positively, with a strong willingness to promote this recent release.

- Typed Predictors Raise Output Concerns: Users report issues with typed predictors not producing correctly structured outputs, inviting help from others. Suggestions include enabling experimental features with

dspy.configure(experimental=True)to address these problems.- Encouragement from peers highlights a collective effort to refine the usage of these predictors.

- Internal Execution Visibility Under Debate: There's a lively discussion over methods to observe internal program execution steps, including suggestions like

inspect_history. Users express the need for deeper visibility into model outputs, especially during type-checking mishaps.- A common desire for transparency showcases the importance of debugging tools in DSPy usage.

- Push for Small Language Models: One member shared an article on the advantages of small language models, noting their efficiency and suitability for edge devices with limited resources. They highlighted benefits like privacy and operational simplicity for models running on just 4GB of RAM.

- Check out the article titled Small Language Models are the Future for a comprehensive read on the topic.

- Call to Contribute to DSPy Examples: A user expressed interest in contributing beginner-friendly examples to the DSPy repository, aiming to enrich the resource base. Community feedback confirmed a need for more diverse examples, specifically in the

/examplesdirectory.- This initiative reflects a collaborative spirit to enhance learning materials within the DSPy environment.

tinygrad (George Hotz) Discord

- Members tackle Tinygrad learning: Members express their ongoing journey with Tinygrad, focusing on understanding its use concerning transformers. One noted, It's a work in progress, indicating a gradual mastery process.

- Discussion hinted at potential collective resources to enhance the learning curve.

- Molecular Dynamics engine under construction: A team is developing a Molecular Dynamics engine using neural networks for energy prediction, facing challenges in gradient usage. Input gradient tracking methods were suggested to optimize weight updates during backpropagation.

- Optimizing backpropagation emerged as a focal point to improve training performance.

- Creating a Custom Runtime in Tinygrad: A member shared insights on implementing a custom runtime for Tinygrad, emphasizing how straightforward it is to add support for new hardware. They sought clarity on terms like

global_sizeandlocal_size, vital for kernel executions.- Technical clarifications were provided regarding operational contexts for these parameters.

- Neural Network Potentials discussion: The energy in the Molecular Dynamics engine relies on Neural Network Potentials (NNP), with emphasis on calculation efficiency. Conversations revolved around strategies to optimize backpropagation.

- Clear paths for enhancing calculation speed are necessary to improve outcomes.

- PPO Algorithm scrutiny in CartPole: A member probed the necessity of the

.sum(-1)operation in the implementation of the PPO algorithm for the Beautiful CartPole environment. This sparked a collaborative conversation on the nuances of reinforcement learning.- Detailed exploration of code implementations fosters community understanding and knowledge sharing.

Torchtune Discord

- Countdown to 3.1 and Cool Interviews: Members inquired about whether there would be any cool interviews released along with the 3.1 version, similar to those for Llama3.

- This raises interest for potential insights and discussions that might accompany the new release.

- MPS Support PR Gains Attention: A new pull request (#790) was highlighted which adds support for MPS on local Mac computers, checking for BF16 compatibility.

- Context suggests this PR could resolve major testing hurdles for those using MPS devices.

- LoRA Functionality Issues Persist: Discussed issues surrounding LoRA functionality, noting it did not work during a previous attempt and was previously impacted by hardcoded CUDA paths.

- Members exchanged thoughts on specific errors encountered, highlighting ongoing challenges in implementation.

- Fixing the Pad ID Bug: A member pointed out that the pad id should not be showing up in generate functionality, identifying it as an important bug.

- In response, a Pull Request was created to prevent pad ids and special tokens from displaying, detailed in Pull Request #1211.

- Optimizing Git Workflow to Reduce Conflicts: Discussion around refining git workflows to minimize the occurrence of new conflicts constantly arose, emphasizing collaboration.

- It was suggested that new conflicts might stem from the workflow, indicating a potential need for tweaks.

LangChain AI Discord

- Hugging Face Models and Agents Discussion: Members discussed their experiences with Agents using Hugging Face models, including local LLMs via Ollama and cloud options like OpenAI and Azure.

- This conversation sparked interest in the potential applications of agents within various model frameworks.

- Python Developers Job Hunt: A member urgently expressed their situation, stating, 'anyone looking to hire me? I need to pay my bills.' and highlighted their strong skills in Python.

- The urgency of job availability in the current market was apparent as discussions about opportunities ensued.

- Challenges with HNSW IVFFLAT Indexes on Aurora: Members faced problems creating HNSW or IVFFLAT indexes with 3072 dimensions on Aurora PGVECTOR, leading to shared insights about solutions involving halfvec.

- This highlighted ongoing challenges with dimensionality management in high-performance vector databases.

- LangServe's OSError Limits: Users encountered an OSError: [Errno 24] Too many open files when their LangServe app processed around 1000 concurrent requests.

- They are actively seeking strategies to handle high traffic while mitigating system resource limitations, with a GitHub issue raised for support.

- Introduction of AI Code Reviewer Tool: A member shared a YouTube video on the AI Code Reviewer, highlighting its features powered by LangChain.

- This tool aims to enhance the code review process, suggesting a trend towards automation in code assessment methodologies.

OpenInterpreter Discord

- Llama 3.1 405 B impresses with ease of use: Llama 3.1 405 B performs fantastically out of the box with OpenInterpreter, offering an effortless experience.

- In contrast, gpt-4o requires constant reminders about capabilities, making 405b a superior choice for multitasking.

- Cost-effective API usage with Nvidia: A user shared that Nvidia provides 1000 credits upon signup, where 1 credit equals 1 API call.

- This incentive opens up more accessibility for experimenting with APIs.

- Mistral Large 2 rivals Llama 3.1 405 B: Mistral Large 2 reportedly performs comparably to Llama 3.1 405 B, particularly noted for its speed.

- The faster performance may be due to lower traffic on Mistral's endpoints compared to those of Llama.

- Llama 3.1 connects with databases for free: MikeBirdTech noted that Llama 3.1 can interact with your database at no cost through OpenInterpreter, emphasizing savings on paid services.

- It's also fully offline and private, nobody else needs to see your data, highlighting its privacy benefits.

- Concerns over complex databases using Llama 3.1: A member raised a concern that for complex databases involving joins across tables, this solution may not be effective.

- They expressed appreciation for sharing the information, remarking on the well-done execution despite the limitations.

LAION Discord

- Llama 3.1: Meta's Open Source Breakthrough: Meta recently launched Llama 3.1 405B, hailed as the first-ever open-sourced frontier AI model, outperforming competitive models like GPT-4o on various benchmarks. For more insights, check this YouTube video featuring Mark Zuckerberg discussing its implications.

- The reception highlights the model's potential impact on AI research and open-source contributions.

- Trouble Downloading LAION2B-en Metadata: Members reported difficulties in locating and downloading the LAION2B-en metadata from Hugging Face, querying if others faced the same problem. Responses indicate this is a common frustration with accessibility.

- Someone linked to LAION maintenance notes for further clarification on the situation.

- LAION Datasets in Legal Limbo: Discussion revealed that LAION datasets are currently in legal limbo, with access to official versions restricted. While alternatives are available, it is advised to utilize unofficial datasets only for urgent research needs.

- Members noted the ongoing complexities surrounding data legality in the AI community.

- YouTube Polls: A Nostalgic Debate: A member shared a YouTube poll asking which 90's movie had the best soundtrack, igniting nostalgia among viewers. This prompts members to reflect on their favorite soundtracks from the era.

- The poll sparks a connection through shared cultural experiences.

Alignment Lab AI Discord

- Legal Clarity on ML Dataset Copyright: A member pointed out that most of the datasets generated by an ML model are likely not copyrightable since they lack true creativity. They emphasized that content not generated by GPT-4 may be under MIT licensing, though this area remains murky amid current legal debates.

- This opens up discussions on the implications for data ownership and ethical guidelines in dataset curation.

- Navigating Non-Distilled Data Identification: Discussion arose around the methods to pinpoint non-distilled data within ML datasets, highlighting an interest in systematic data management.

- Members seek clearer methodologies to enhance the organization of dataset contents, aiming to improve usability in ML projects.

LLM Finetuning (Hamel + Dan) Discord

- Experimenting with DPO for Translation Models: A member inquired about successfully fine-tuning translation models using DPO, referencing insights from the CPO paper. They emphasized that moderate-sized LLMs fail to match state-of-the-art performance.

- Is anyone achieving better results? underscores the community's growing interest in fine-tuning techniques.

- CPO Enhances Translation Outputs: The CPO approach targets weaknesses in supervised fine-tuning by aiming to boost the quality of machine translation outputs. It turns the focus from just acceptable translations to higher quality results, improving model performance.

- By addressing reference data quality, CPO leads to significant enhancements, specifically underutilizing datasets effectively.

- ALMA-R Proves Competitive: Applying CPO significantly improved ALMA-R despite training on only 22K parallel sentences and 12M parameters. The model can now rival conventional encoder-decoder architectures.

- This showcases the potential of optimizing LLMs even with limited data, opening up discussions on efficiency and scaling.

- NYC Tech Meetup in Late August: Interest sparked for a tech meetup in NYC during late August, with members expressing their desire to connect in person. This initiative promises to foster deeper networking and collaboration opportunities.

- The buzz around this potential meetup highlights a sense of community among members eager to share insights and experiences.

MLOps @Chipro Discord

- ML Efficiency Boost through Feature Stores: A live session on Leveraging Feature Stores is scheduled for July 31st, 2024, at 11:00 AM EDT, aimed at ML Engineers, Data Scientists, and MLOps professionals.

- This session will explore automated pipelines, tackling unreliable data, and present advanced use cases to enhance scalability and performance.

- Addressing Data Consistency Challenges: The webinar will emphasize the importance of aligning serving and training data to create scalable and reproducible ML models.

- Discussions will highlight common issues like inconsistent data formats and feature duplication, aiming to enhance collaboration within ML teams.

- Enhancing Feature Governance Practices: Participants will learn effective techniques for implementing feature governance and versioning, crucial for managing the ML lifecycle.

- Attendees can expect insights and practical tools to refine their ML processes and advance operations.

Mozilla AI Discord

- Accelerator Application Deadline Approaches: The application deadline for the accelerator program is fast approaching, offering a 12 week program with up to 100k in non-diluted funds for projects.

- A demo day with Mozilla is planned, and members are encouraged to ask their questions here.

- Two More Exciting Events Coming Up: Reminder about two upcoming events this month featuring the work of notable participants, bringing fresh insights to the community.

- These events are brought to you by two members, further bolstering community engagement.

- Insightful Zero Shot Tokenizer Transfer Discussion: A session titled Zero Shot Tokenizer Transfer with Benjamin Minixhofer is scheduled, aiming to explore advanced tokenizer implementations.

- Details and participation links can be found here.

- AutoFix: Open Source Issue Fixer Launch: An announcement was made regarding AutoFix, an open source issue fixer that submits PRs from Sentry.io, streamlining developers’ workflows.

- More information on the project can be accessed here.

DiscoResearch Discord

- Llama3.1 Paper: A Treasure for Open Source: The new Llama3.1 paper from Meta is hailed as incredibly valuable for the open source community, prompting discussions about its profound insights.

- One member joked that it contains so much alpha that you have to read it multiple times like a favorite movie.

- Training a 405B Model with 15T Tokens: The paper reveals that the model with 405 billion parameters was trained using ~15 trillion tokens, which was predicted by extrapolating their scaling laws.

- The scaling law suggests training a 402B parameter model on 16.55T tokens to achieve optimal results.

- Insights on Network Topology: It includes a surprisingly detailed description of the network topology used for their 24k H100 cluster.

- Images shared in the thread illustrate the architecture, demonstrating the scale of the infrastructure.

- Training Interruptions Due to Server Issues: Two training interruptions during Llama3-405b's process were attributed to the 'Server Chassis' failing, humorously suggested to be caused by someone's mishap.

- As a consequence, 148 H100 GPUs were lost during pre-training due to these failures.

- Discussion on Hallucination Prevention Benchmarks: A brief conversation with a Meta engineer raised concerns about the need for better benchmarks in hallucination prevention techniques.

- The member shared that anyone else working on this should engage in further discussions.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!