[AINews] Microsoft AgentInstruct + Orca 3

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Generative Teaching is all you need.

AI News for 7/12/2024-7/15/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (465 channels, and 4913 messages) for you. Estimated reading time saved (at 200wpm): 505 minutes. You can now tag @smol_ai for AINews discussions!

The runaway success of FineWeb this year (our coverage here, tech report here) combined with Apple's Rephrasing research has basically served as existence proofs that there can be at least an order of magnitude improvement in dataset quality for pre- and post-training. With content shops either lawyering up or partnering up, research has turned to improving synthetic dataset generation to extend the runway on the tokens we have already compressed or scraped.

Microsoft Research has made the latest splash with AgentInstruct: Toward Generative Teaching with Agentic Flows, (not to be confused with AgentInstruct of Crispino et al 2023) the third in its Orca series of papers:

- Orca 1: Progressive Learning from Complex Explanation Traces of GPT-4

- Orca 2: Teaching Small Language Models How to Reason

- Orca Math: Unlocking the potential of SLMs in Grade School Math)

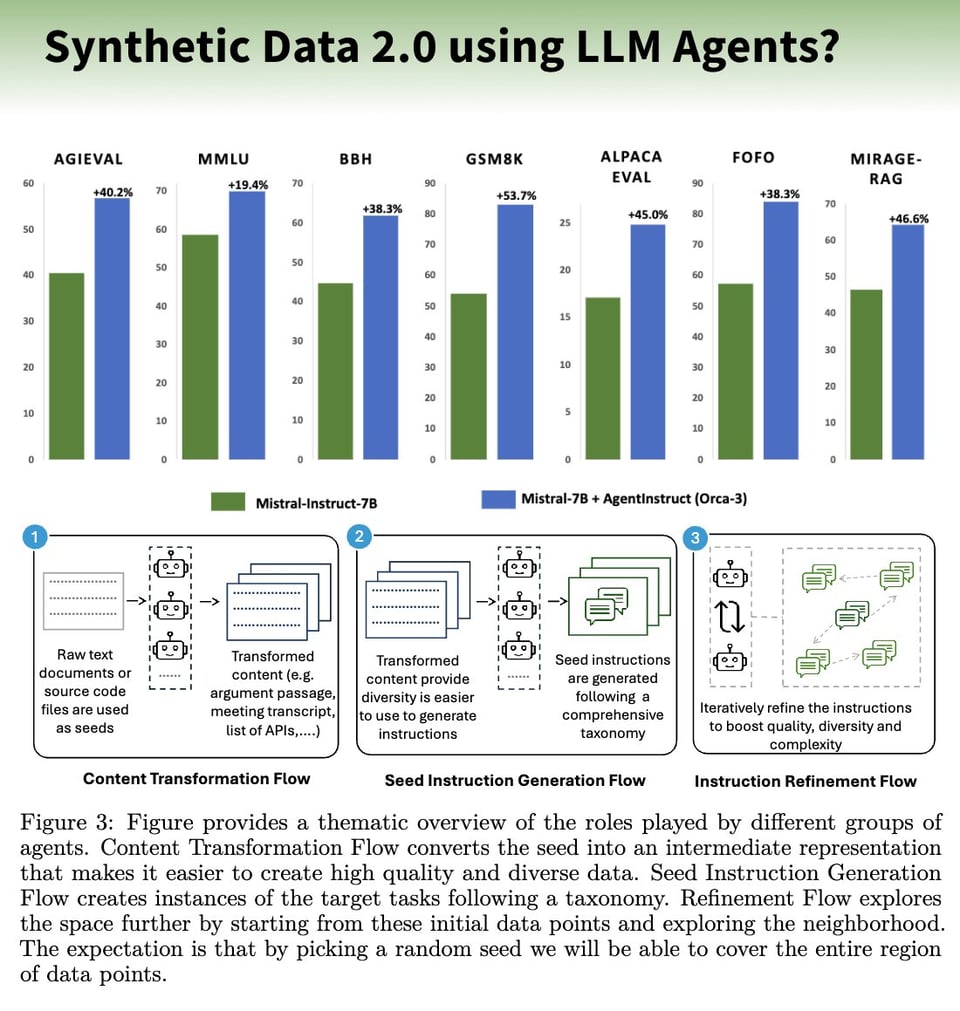

The core concept is that raw documents is transformed by multiple agents playing different roles to provide diversity (for 17 listed capabilities), which are then used by yet more agents to generate and refine instructions in a "Content Transformation Flow".

Out of this pipeline comes 22 million instructions aimed at teaching those 17 skills, which when combined with the 3.8m instructions from prior Orca papers makes "Orca 2.5" - the 25.8m instruction synthetic dataset that the authors use to finetune Mistral 7b to produce the results they report:

- +40% on AGIEval, +19% on MMLU; +54% on GSM8K; +38% on BBH; +45% AlpacaEval, 31.34% reduction in hallucinations for summarization tasks (thanks Philipp)

This is just the latest entry in this genre of synthetic data research, most recently with Tencent claiming 1 billion diverse personas on their related work.

This seems both obvious that it will work yet also terribly expensive and inefficient compared to FineWeb, but whatever works!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Shooting Incident at Trump Rally

- Shooting details: @sama noted a gunman at a Trump rally pointed a rifle at an officer who discovered him on a rooftop shortly before opening fire, with the bullet coming within an inch of Trump's head. @rohanpaul_ai shared an AP update confirming the gunman pointed the rifle at the officer before opening fire.

- Reactions and commentary: @sama hoped this moment could lead to turning down rhetoric and finding more unity, with Democrats showing grace in resisting the urge to "both-sides" it. @zachtratar argued no one would stage a bullet coming within an inch of a headshot at that distance, as it would be too risky if staged. @bindureddy made a joke that an AI President can't be assassinated.

AI and ML Research and Developments

- New models and techniques: @dair_ai shared top ML papers of the week, covering topics like RankRAG, RouteLLM, FlashAttention-3, Internet of Agents, Learning at Test Time, and Mixture of A Million Experts. @_philschmid highlighted recent AI developments including Google TPUs on Hugging Face, FlashAttention-3 improving transformer speed, and Q-GaLore enabling training of 7B models with 16GB memory.

- Implementations and applications: @llama_index implemented GraphRAG concepts such as graph generation and community-based retrieval in a beta release. @LangChainAI pointed to OpenAI's Assistant API as an example of agentic infrastructure with features like persistence and background runs.

- Discussions and insights: @sarahcat21 called for more research into updateable/collaborative AI/ML and model merging techniques. @jxnlco is exploring incorporating prompting techniques into instructor documentation to help understand possibilities and identify abstractions.

Coding, APIs and Developer Tools

- New APIs and services: @virattt launched an open beta stock market API with 30+ years of data for S&P 500 tickers, including financial statements, with no API limits. It's undergoing load testing before a full 15,000+ stock launch for AI financial agents to utilize.

- Coding experiences and tips: @giffmana shared frustration with unhelpful online resources when writing a Python script to read multipart/form-data, finding the actual RFC2388 spec most useful. @jeremyphoward demonstrated a new function-cache decorator design in Python to compose cache eviction policies.

- Developer discussions: @svpino predicted AI becoming a foundational skill for future developers alongside data structures and algorithms, as software development and machine learning converge.

Humor, Memes and Off-Topic Discussions

- Jokes and memes: @cto_junior shared a meme combining Wagie News and 4chan references. @lumpenspace joked it's impossible to determine if anti-Trump sentiment influenced the shooter given conflicting details about their political leanings.

- Off-topic chatter: @sarahookr recommended visiting Lisboa and shared a photo from the city. @ID_AA_Carmack discussed a comic panel that inspired an indie game title idea called "Corgi Battle Pose".

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. We recently improved the anti-hallucination measures but are still tuning the filtering, clustiner, and summary quality.

Theme 1. AI Research Publication Lag in Fast-Paced Development

- [/r/singularity] Due to the speed of AI development and the long delays in the scientific publishing process, a whole bunch of academic papers suggest that LLMs can't do things they can actually do well. Example: this is a fine paper, but it uses GPT-3.5. (Score: 237, Comments: 19): Academic papers on AI capabilities rapidly become outdated due to the fast pace of AI development and the lengthy scientific publishing process. A prime example is a paper that uses GPT-3.5 to assess LLM capabilities, despite more advanced models like GPT-4 being available. This lag in publication leads to a significant discrepancy between published research and the current state of AI technology.

- [/r/OpenAI] AI headlines this week (Score: 361, Comments: 57): AI headlines dominate tech news: This week saw a flurry of AI-related announcements, including Google's Gemini launch, OpenAI's GPT Store delay, and Anthropic's Claude 2.1 release. The rapid pace of AI developments is drawing comparisons to the early days of the internet, with some experts suggesting AI's impact could be even more transformative and far-reaching than the web revolution.

- AI: Not Just Another Fad: Commenters draw parallels between early internet skepticism and current AI doubts. Many recall initial reluctance to use credit cards online, highlighting how perceptions can change dramatically over time.

- AI Revolutionizes Development: Developers praise AI as a "game changer" for coding, with one user creating a native Swift app using Anthropic's console despite limited knowledge. Others note AI's ability to narrow down solutions faster than traditional methods.

- Dot-Com Bubble Lessons: Discussion touches on the 2000 dot-com crash, with users pointing out how companies like Amazon lost 90% market cap. Some suggest a similar correction might occur in AI but believe the bubble hasn't peaked yet.

- AI's Growing Pains: Critics highlight issues with current AI implementations, such as Google's search highlights being criticized for hallucinations. Users stress the importance of responsible AI deployment to maintain credibility in the field.

Theme 2. AI's Impact on Employment: TurboTax Layoffs

- [/r/singularity] Maker of TurboTax Fires 1,800 Workers, Says It’s Pivoting to AI (Score: 303, Comments: 63): Intuit, the company behind TurboTax and QuickBooks, has announced a 7% reduction in its workforce, laying off 1,800 employees. The company cites a shift towards artificial intelligence and machine learning as the reason for the restructuring, aiming to better serve customers and drive innovation. This move comes despite Intuit reporting $14.4 billion in revenue for the fiscal year 2023, a 13% increase from the previous year.

Theme 3. AI Integration in Creative Workflows: ComfyUI GLSL Node

- [/r/StableDiffusion] 🖼 OpenGL Shading Language (GLSL) node for ComfyUI 🥳 (Score: 221, Comments: 21): OpenGL Shading Language (GLSL) node for ComfyUI has been introduced, allowing users to create custom shaders and apply them to images within the ComfyUI workflow. This new feature enables real-time image manipulation using GPU-accelerated operations, potentially enhancing the efficiency and capabilities of image processing tasks in ComfyUI. The integration of GLSL shaders opens up possibilities for advanced visual effects and custom image transformations directly within the ComfyUI environment.

- GitHub repo and ShaderToy link shared: The original poster, camenduru, provided links to the GitHub repository for the GLSL nodes and a ShaderToy example showcasing the potential of shader effects.

- Excitement and potential applications: Users expressed enthusiasm for the new feature, with ArchiboldNemesis highlighting its potential for masking inputs and speculating about "Realtime SD metaballs". Another user pondered if ComfyUI might evolve into a visual programming framework like TouchDesigner.

- Technical discussions and clarifications: Some users sought explanations about OpenGL and its relation to the workflow. A commenter clarified that OpenGL shading is used for viewport rendering without raytracing capabilities, while another mentioned the applicability of three.js glsl shaders knowledge to ComfyUI.

- Future development ideas: Suggestions included integrating VSCode and plugins into ComfyUI or developing ComfyUI as a VSCode plugin. Questions were also raised about real-time processing/rendering capabilities within the current implementation.

AI Discord Recap

A summary of Summaries of Summaries

1. Pushing the Boundaries of LLMs

- Breakthrough LLM Performance Gains: Microsoft Research introduced AgentInstruct, a framework for automatically creating synthetic data to post-train models like Mistral-7b into Orca-3, achieving 40% improvement on AGIEval, 54% on GSM8K, and 45% on AlpacaEval.

- The Ghost 8B Beta model outperformed Llama 3 8B Instruct, GPT 3.5 Turbo, and others in metrics like lc_winrate and AlpacaEval 2.0 winrate, aiming for superior knowledge capabilities, multilingual support, and cost efficiency, as detailed on its documentation page.

- New Benchmarks Fuel LLM Progress: InFoBench (Instruction Following Benchmark) was introduced, sparking debates on its relevance compared to standard alignment datasets and whether unique benchmarks highlight valuable LLM qualities beyond high correlations with MMLU.

- The WizardArena/ArenaLearning paper detailed evaluating models via human preference scores in a Kaggle competition, generating interest in multi-turn synthetic interaction generation and evaluation setups.

2. Hardware Innovations Powering AI

- Accelerating AI with Specialized Hardware: MonoNN, a new machine learning compiler, optimizes GPU utilization by accommodating entire neural networks into single kernels, addressing inefficiencies in traditional kernel-by-kernel execution schemes, as detailed in a paper presentation and source code release.

- Discussions around WebGPU development highlighted its fast iteration cycles but need for better tooling and profiling, with members exploring porting llm.c transformer kernels for performance insights and shifting more ML workloads to client-side computation.

- Optimizing LLMs with Quantization: Research on quantization techniques revealed that compressed models can exhibit "flips" - changing from correct to incorrect outputs despite similar accuracy metrics, highlighting the need for qualitative evaluations alongside quantitative ones.

- The paper 'LoQT' proposed a method enabling efficient training of quantized models up to 7B parameters on consumer 24GB GPUs, handling gradient updates differently and achieving comparable memory savings for pretraining and fine-tuning.

3. Open Source Driving AI Innovation

- Collaborative Efforts Fuel Progress: The OpenArena project introduced an open platform for pitting LLMs against each other to enhance dataset quality, primarily using Ollama models but supporting any OpenAI-compatible endpoints.

- The LLM-Finetuning-Toolkit launched for running experiments across open-source LLMs using single configs, built atop HuggingFace libraries and enabling evaluation metrics and ablation studies.

- Frameworks Streamlining LLM Development: LangChain saw active discussions on streaming output handling, with queries on

invoke,stream, andstreamEventsfor langgraph integration, as well as managingToolCalldeprecation and unintended default tool calls.- LlamaIndex gained new capabilities like entity deduplication using Neo4j, managing data pipelines centrally with LlamaCloud, leveraging GPT-4o for parsing financial reports, enabling multi-agent workflows via Redis integration, and an advanced RAG guide.

PART 1: High level Discord summaries

HuggingFace Discord

- NPM Module Embraces Hugging Face Inference: A new NPM module supporting Hugging Face Inference has been announced, inviting community feedback.

- The developer emphasizes the model's reach across 36 Large Language Model providers, fostering a collaborative development ethos.

- Distributed Computing Musters Llama3 Power: Llama3 8B launches on a home cluster, spanning from the iPhone 15 Pro Max to NVIDIA GPUs, with code open-sourced on GitHub.

- The project aims for device optimization, engaging the community to battle against programmed obsolescence.

- LLM-Finetuning-Toolkit Unveiled: The debut of LLM-Finetuning-Toolkit offers a unified approach to LLM experimentation across various models using single configs.

- It stands out by integrating evaluation metrics and ablation studies, all built atop HuggingFace libraries.

- Hybrid Models Forge EfficientNetB7 Collaboration: A push to train hybrid models combines EfficientNetB7 for feature extraction with Swin Transformer on Huggingface for classification.

- Participants utilize Google Colab's computational offerings, seeking more straightforward implementation techniques.

- Heat Generated from HF Inference API Misattribution: Copilot incorrectly cites the HF Inference API as an OpenAI product, leading to user confusion in discussions.

- Responses were mixed, ranging from humorous suggestions like 'cheese cooling' servers to pragmatic requests for open-source documentation practices.

Unsloth AI (Daniel Han) Discord

- Llama 3’s Anticipated Unveiling Stumbles: The launch of Llama 3 (405b) scheduled for July 23 by Meta Platforms is rumored to be delayed, with Redditors chattering about a push to later in the year.

- Community exchanges buzz around operational challenges and look forward to fine-tuning opportunities despite the holdup.

- Gemini API Leaps to 2M Tokens: Google's Gemini API now boasts a 2 million token context window for Gemini 1.5 Pro, as announced with features including code execution.

- AI Engineers debate the merits of the extended context and speculate on the implications for performance in everyday scenarios.

- MovieChat GitHub Repo Sparks Dataset Debate: MovieChat emerges as a tool allowing conversations over 10K frames of video, stirring a dialogue over dataset creation.

- Users dispute the feasibility of open-sourced datasets, considering the complexity involved in assembling them.

- Ghost 8B Beta Looms Large: Ghost 8B Beta model is lauded for its performance, topping rivals like Llama 3 8B Instruct and GPT 3.5 Turbo as demonstrated by metrics like the lc_winrate and AlpacaEval 2.0 winrate scores.

- New documentation signals the model’s prowess in areas like multilingual support and cost-efficiency, igniting discussions on strategic contributions.

- CURLoRA Tackles Catastrophic Forgetting: A shift in fine-tuning approach, CURLoRA uses CUR matrix decomposition to combat catastrophic forgetting and minimize trainable parameters.

- AI experts receive the news with acclaim, seeing potential across various applications as detailed in the paper.

Stability.ai (Stable Diffusion) Discord

- GPTs Stagnation Revelation: Concerns were raised about GPTs agents inability to assimilate new information post-training, with clarifications highlighting that uploaded files serve merely as reference 'knowledge' files, without altering the underlying model.

- The community exchanged knowledge on how GPTs agents interface with additional data, establishing that new inputs do not dynamically reshape base knowledge.

- OpenAI's Sidebar Saga: Users noted the disappearance of two icons from the sidebar on platform.openai.com, sparking speculations and evaluations of the interface changes.

- The sidebars triggered discussions concerning usability, with mentions of icons related to threads and messages having vanished.

- ComfyUI Conquers A1111: The speed superiority of ComfyUI over A1111 was a hot topic, with community tests suggesting a 15x performance boost in favor of ComfyUI.

- Despite the speed advantage, some users criticized ComfyUI for lagging behind A1111 in control precision, indicating a trade-off between efficiency and functionality.

- Custom Mask Assembly Anxieties: Debates emerged over the complex process of crafting custom masks in ComfyUI, with participants pointing out the more onerous nature of SAM inpainting.

- Recommendations circulated for streamlining the mask creation process, proposing the integration of tools like Krita to mitigate the cumbersome procedure in ComfyUI.

- The Artistic Ethics Debate: Ethical and legal discussions surfaced regarding AI-generated likenesses of individuals, with members pondering the protective cloak of parody in art creation.

- The community engaged in a spirited debate on the legitimacy of AI art, invoking concerns around the representation of public figures and the merits of seeking professional legal counsel in complex situations.

LM Studio Discord

- CUDA Conundrum & GPU Guidance: Users combated the 'No CUDA devices found' error, advocating for the installation of NVIDIA drivers and the 'libcuda1' package.

- In hardware dialogues, Intel Arc a750's subpar performance was spotlighted, and for LM Studio precision, NVIDIA 3070 or AMD's ROCm-supported GPUs were recommended.

- Polyglot Programming Preference: Rust vs C++: Engineers exchanged views on programming languages, citing Rust's memory safety and C++'s historical baggage; juxtaposed with a dash of Rust Evangelism.

- Despite Python's stronghold in neural network development, Rust and C++ communities highlighted their languages' respective strengths and tools like llama.cpp.

- LM Studio: Scripting Constraints & Model Mysteries: Debate on lmstudio.js veered towards its RPC usage over REST, paired with challenges integrating embedding support due to RPC ambiguities.

- AI aficionados probed into multi-GPU configurations, pinpointing PCIe bandwidth’s impact and musing over the upcoming Mac Studio with an M4 chip for LLM tasks.

- Vulkan and ROCm: GPU Reliance & Revolutionary Runtimes: Enthusiasm was expressed for Vulkan's pending arrival in LM Studio, despite concerns over its 4-bit quantization limit.

- Meanwhile, ROCm stood out as a linchpin for AMD GPU users; essential for models like Llama 3, and in contrast, gaining traction for its Windows support.

OpenAI Discord

- GPT Alt-Debate: Seeking Academic Excellence: Discussions rested on whether Copilot or Bing’s AI, both allegedly running on GPT-4, are superior for academic use.

- A user, bemoaning the lack of other viable options, mentioned alternatives like Claude and GPT-4o, but still acknowledged spending on ChatGPT.

- Microsoft's Multi-CoPilot Conundrum: Members dissected Microsoft’s array of CoPilots across applications like Word, PowerPoint, and Outlook, noting Word CoPilot for its profound dive into subjects.

- Conversely, PowerPoint's assistant was branded basic, primarily assisting in generating rudimentary decks.

- DALL-E's Dilemma with GPT Guidance: A conversation emerged around DALL-E's unreliable rendering of images upon GPT instruction, yielding either prompt text or broken image links.

- "DALL-E's hiccups** were critiqued for the tech's failure to interpret GPT’s guidance aptly on initial commands.

- AI Multilinguists: Prompt Language Distinctions: Inquiry revolved around the impact of prompt language on response quality, particularly when employing Korean versus English in ChatGPT interactions.

- The central question hinged on the efficacy of prompts directly in the desired language against those needing translation.

- Unlocking Android's Full Potential with Magic: A shared 'Android Optimization Guru' guide promised secrets to enhance Android phone performance through battery optimization, storage management, and advanced settings.

- The guide appealed to younger tech enthusiasts with playful scenarios, making advanced Android tips accessible and compelling.

Modular (Mojo 🔥) Discord

- Website Worries Redirected: Confusion arose when the Mojo website was down, leading users to discover it wasn't the official site.

- Correcting course, users were pointed to Modular's official website, ensuring appropriate redirection.

- Bot Baffles By The Book: Modular's bot prompted unwanted warnings when members tagged multiple contributors, mistaking the action as bot-worthy of a threat.

- Discussions ensued regarding pattern triggers, with members calling for a review of the bot's interpretation logic.

- Proposal to Propel module maintainability: A proposal to create

stdlib-extensionsaimed at reducing stdlib maintainers' workload was tabled, sparking a dialogue on GitHub.- The community requested feedback from diligent contributors to ensure this refinement aids in streamlining module management.

- MAX License Text Truncated: Typographical errors in the Max license text triggered conversations about attention to detail in legal documents.

- Errors such as otherModular and theSDK were mentioned, prompting a swift rectification.

- Accelerated Integration Ambitions: Members queried about Max dovetailing into AMD's announced Unified AI software stack, spotlighting Modular's growing influence.

- Citing a convergence of interests, users showed an eagerness for potential exclusive partnerships for the MAX platform.

Perplexity AI Discord

- Cloudflare Quarrels & API Credit Quests: Members are encountering access challenges due to the API being behind Cloudflare, while others are questioning the availability of the advertised $5 free credits for Pro plan upgrades.

- Discussions also cover frustrations with using the $5 credit, with users seeking assistance via community channels.

- Diminished Daily Pro Descent: Pro users noticed a quiet reduction from 600 to 540 in their daily search limit, sparking discussions about future changes and the need for greater transparency.

- The community is reacting to this unexpected change, and the potential impact it may have on their daily operations.

- Imaging Trouble & Comparative Capabilities: Users are sharing difficulties where Perplexity's responses improperly reference past images, hindering conversation continuity.

- Tech-savvy individuals debate Perplexity's strengths against ChatGPT, especially around specialties like file handling, image generation, and precise follow-ups.

- Vexing API Model Mysteries: A user seeks to emulate Perplexity AI's free tier results with the API but struggles to retrieve URL sources, prompting inquiries on which models are being used.

- The goal is to match the free tier's capabilities, suggesting a need for clarity on model utilizations and outputs within the API service.

- A Spectrum of Sharing: Health to Controversy: Discussions range from pathways to health and strength, to understanding dynamic market forces like the Cantillon Effect.

- Conversations also include unique identifiers in our teeth and analysis of a political figure's security episode.

Nous Research AI Discord

- AgentInstruct's Leap Forward: AgentInstruct lays the blueprint for enhancing models like Mistral-7b into more sophisticated versions such as Orca-3, demonstrating substantial gains on benchmarks.

- The application yielded 40% and 54% improvements on AGIEval and GSM8K respectively, while 45% on AlpacaEval, setting new bars for competitors.

- Levity in Eggs-pert Advice: Egg peeling hacks made a surprising entry with recommendations favoring a 10-minute hot water bath for peel-perfect eggs.

- Vinegar-solution magic was also shared, teasing shell-free eggs through an acid-base reaction.

- AI's YouTube Drama: Q-star Leaks: Q-star's confidential details got airtime via a YouTube revelation, showing the promise and perils of developments in AGI.

- Insights from OpenAI's hidden trove codenamed STRAWBERRY spilled the beans on upcoming LLM strategies.

- Goodbye PDFs, Hello Markdown: New versions of Marker crunch PDF to Markdown conversion times by leveraging efficient model architecture to aid dataset quality.

- Boosts included 7x faster speeds on MPS and a 10% GPU performance jump, charting a course for rapid dataset creation.

- Expanding LLM Horizons in Apps: Discussions on app integrations revealed retrieval-augmented generation (RAG) as a favorite for embedding tutorial intelligence.

- Suggestions flew around extending models like Mixtral and Llama up to 1M tokens, although practical usage remains a challenge.

CUDA MODE Discord

- Warp Speed WebGPU Workflow: Users exploring WebGPU development discussed its quick iteration cycles, but identified tooling and profiling as areas needing improvement.

- A shared library approach like dawn was recommended, with a livecoding demo showcasing faster shader development.

- Peeking into CUDA Cores' Concurrency: A dive into CUDA core processing revealed each CUDA core can handle one thread at a time, with an A100 SM managing 64 threads simultaneously from a pool of 2048.

- Discussions also focused on how register limitations can impact thread concurrency, affecting overall computational efficiency.

- Efficient Memory with cudaMallocManaged: cudaMallocManaged was proposed over cudaFix as a way to support devices with limited memory, especially to enhance smaller GPU integration efforts.

- Switching to cudaMallocManaged was flagged as critical for ensuring performance remains unhindered while accommodating a broader range of GPU architectures.

- FSDP Finesse for Low-Bit Ops: Discussion on implementing FSDP support for low-bit optimization centered on the non-addressed collective ops for optimization state subclass.

- A call for a developer guide aimed at aiding FSDP compatibility was discussed to boost developer engagement and prevent potential project drop-off.

- Browser-Based Transformers with WebGPU: Members discussed leveraging Transformers.js for running state-of-the-art machine learning tasks in the browser, utilizing WebGPU's potential in the ONNX runtime.

- Challenges related to building Dawn on Windows were also highlighted, noting troubleshooting experiences and the impact of buffer limitations on performance.

Cohere Discord

- OpenArena's Ambitious AI Face-off: A new OpenArena project has launched, challenging LLMs to compete and ensure robust dataset quality.

- Syv-ai's repository details the application process, aiming at direct engagement with various LLM providers.

- Cohere Conundrum: Event Access Debacle: Members bemoaned Cohere event link mix-ups, resulting in access issues, circumvented by sharing the correct Zoom link for the diffusion model talk.

- Guest speaker session clarity was restored, with guidance on creating spectrograms using diffusion models.

- Cost of AI Competency Crashes: Andrej Karpathy's take on AI training costs shows a dramatic decrease, marking a steep affordability slope for training models like GPT-2.

- He illuminates the transition from 2019's cost-heavy landscape to now, where enthusiasts can train GPT-like models for a fraction of the price.

- Seamless LLM Switch with NPM Module: Integrating Cohere becomes a breeze for developers with the updated NPM module, perfect for cross-platform LLM interactions.

- This modular approach opens doors to cohesive use of diverse AI platforms, enriching developer toolkits.

- The r/localllama Newsbot Chronicles: The r/localllama community breathes life into Discord with a Langchain and Cohere powered bot that aggregates top Reddit posts.

- This innovative engine not only summarizes but arranges news into compelling narratives, tailored for channel-specific delights.

Eleuther Discord

- London AI Gatherings Lack Technical Teeth: Discussions revealed dissatisfaction with the technical depth of AI meetups in London, suggesting those interested should attend UCL and Imperial seminars instead.

- ICML and ICLR conferences were recommended for meaningful, in-depth interactions, especially in niche gatherings of researchers.

- Arrakis: Accelerating Mechanistic Interpretability**: Arrakis, a toolkit for interpretability experiments, was introduced to enhance experiment tracking and visualization.

- The library integrates with tools like tuned-lens to streamline mechinterp research efficiency.

- Traversing Model Time-Relevance: There's a growing interest in incorporating time relevance into LLMs, as traditional timestamp methods are lacking in effectiveness.

- Current discussions are centered around avenues such as literature on time-sensitive datasets and benchmarks for training improvement.

- Quantization Quirks: More Than Meets the Eye: Concerns were raised regarding a paper on quantization flips explaining that compressed models can have different behaviors despite identical accuracy metrics.

- This has sparked dialogue on the need for rigorous qualitative evaluations alongside quantitative ones.

- Unfolding lm-eval's Potential: A technical inquiry led to a guide on integrating a custom Transformer-lens model with lm-eval's Python API, as seen in this documentation.

- Yet, some members are still navigating the intricacies of custom functions and metrics within lm-evaluation-harness.

tinygrad (George Hotz) Discord

- MonoNN Streamlines GPU Workloads: The introduction of MonoNN, a new machine learning compiler, sparked interest with its single kernel approach for entire neural networks, possibly improving GPU efficiencies. The paper and the source code are available for review.

- The community considered the potential impact of MonoNN's method on reducing the kernel-by-kernel execution overhead, aligning with the ongoing conversations about tinygrad kernel overhead concerns.

- MLX Edges Out tinygrad: MLX gained the upper hand over tinygrad with better speed and accuracy, as demonstrated in the beautiful_MNIST benchmark, drawing the community's attention to the tinygrad commit for mlx.

- This revelation led to further discussion on improving tinygrad's performance, targeting areas of overhead and inefficiencies.

- Tweaks Touted for tinygrad's avg_pool2d: The community requested an

avg_pool2denhancement to supportcount_include_pad=False, a feature in stable diffusion training evaluations, proposing potential solutions modeled after PyTorch's implementation.- Discussions revolved around the need for this feature in benchmarks like MLPerf and saw suggestions for workarounds using existing pooling operations.

- Discourse on Tinygrad's Tensor Indexing: Members exchanged knowledge on tensor indexing nuances within tinygrad, comparing it with other frameworks and demonstrating how operations like masking can lead to increased performance.

- A member referred to the tinygrad documentation to clarify the execution and efficiency benefits of this specific tensor operation within the toolkit.

- PR Strategies and Documentation Dynamism: The consensus among members was for separate pull requests for enhancements, bug fixes, and feature implementations to streamline the review process, evident in the handling of the

interpolatefunction for FID.- Emphasizing the importance of up-to-date and working examples, members discussed the strategy for testing and verifying code blocks in the tinygrad documentation.

Latent Space Discord

- Leaderboard Levels Up: Open LLM Leaderboard V2 Excitement: A new episode on Latent Space focusing on Open LLM Leaderboard V2** sparked conversation, with community members sharing their enthusiasm.

- The podcast was linked to a new release, providing listeners insights into the latest LLM rankings.

- Linking Without Hallucinating: Strategies to Combat Misinformation: Discussion surfaced around SmolAI's innovative approaches to eliminate Reddit link hallucination, focusing on pre-check and post-proc** methods.

- Techniques and results were discussed, highlighting the importance of reliable links in enhancing the use of LLMs.

- Unknown Entrants Stir LMSys: New Models Spark Curiosity: Speculation arose about the entities behind new models in the LMSys arena**, accompanied by a mixed bag of opinions.

- Rumors about Command R+ jailbreaks and their implications were a part of the buzz, reflected in community conversations.

- Composing with Cursor: The Beta Buzz: Cursor's** new Composer feature stirred excitement within the community, with users eager to discuss its comparative UX and the beta release.

- Affordability and utility surfaced as topics of interest, as spectators shared positive reactions and pondered subscription models.

- Microsoft's Spreadsheet Savvy: Introducing SpreadsheetLLM: Microsoft made waves with SpreadsheetLLM, an innovation aiming to refine LLMs' spreadsheet handling using a SheetCompressor** encoding framework.

- Conversations veered towards its potential to adapt LLMs to spreadsheet data, with excitement over the nuanced approach detailed in their publication.

OpenAccess AI Collective (axolotl) Discord

- Open Source Tools Open Doors: User le_mess has created a 100% open source version of a dataset creation tool named OpenArena, expanding the horizon for model training flexibility.

- OpenArena was initially designed for OpenRouter and is now leveraging Ollama to boost its capabilities.

- Memory Usage Woes in ORPO Training: A spike in memory usage during ORPO training was noted by xzuyn, leading to out-of-memory errors despite a max sequence limit of 2k.

- The conversation highlighted missing messages on truncating long sequences after tokenization as a possible culprit.

- Integrating Anthropic Prompt Know-How: Axolotl's improved prompt format draws inspiration from Anthropic's official Claude, discussed by Kalomaze, featuring special tokens for clear chat turn demarcations.

- The template, applicable to Claude/Anthropic formats, is found here, sparking a divide over its readability and flexibility.

- RAG Dataset Creation Faces Scrutiny: Concerns were raised by nafnlaus00 about the security of Chromium in rendering JavaScript needed sites for RAG model dataset scraping.

- Suggestions included exploring alternative scraping solutions like firecrawl or Jina API to navigate these potential vulnerabilities.

- Weighted Conversations Lead Learning: Tostino proposed a novel approach to training data utilization involving weight adjustments to steer model learning away from undesirable outputs.

- Such advanced tweaking could refine models by focusing on problematic areas, enhancing the learning curve.

Interconnects (Nathan Lambert) Discord

- Strawberry Fields of AI Reasoning: OpenAI is developing a new reasoning technology named Strawberry, drawing comparisons to Stanford's STaR (Self-Taught Reasoner). Community insiders believe its capabilities mirror those outlined in a 2022 paper detailed by Reuters.

- The technology's anticipated impact on reasoning benchmarks prompts examination of its possible edge over existing systems, with particular focus on product names, key features, and release dates.

- LMSYS Arena's Stealthy Model Entrants: The LMSYS chatbot arena is abuzz with new entrants like column-r and column-u, speculated to be the brainchildren of Cohere as per info from Jimmy Apples.

- Further excitement is stirred by Twitter user @btibor91, who points out four new models gearing up for release, including eureka-chatbot and upcoming-gpt-mini, with Google as the purported trainer for some.

- Assessing Mistral-7B's Instruction Strength: The AI community debates the efficacy of Mistral-7B's instruct-tuning in light of findings from the Orca3/AgentInstruct paper and seeks to determine the strength of the underlying instruct-finetune dataset.

- The discussion evaluates if current datasets meet robustness criteria, and contrasts Mistral-7B's benchmarks with other models' performance.

- InFoBench Spurring Benchmark Debates: The recently unveiled InFoBench (Instruction Following Benchmark) sparks conversations comparing its value against established alignment datasets, with mixed opinions on its real-world relevance.

- Skeptics and proponents clash over whether unique benchmarks like InFoBench alongside EQ Bench truly highlight significant qualities of language models, considering their correlation with established benchmarks like MMLU.

- California's AI Legislative Labyrinth: The passage of California AI Bill SB 1047 leads to a legislative skirmish, as AI safety experts and venture capitalists spar over the bill’s implications, ahead of a critical vote.

- Senator Scott Wiener characterizes the clash as ‘Jets vs Sharks’, revealing the polarized perspectives documented in a Fortune article and made accessible via Archive.is for wider review.

LangChain AI Discord

- JavaScript Juggles: LangChain's Trio of Functions: Users dissected the intricacies of LangChain JS's

invoke,stream, andstreamEvents, debating their efficacy for streaming outputs in langgraph.- A proposal emerged suggesting the use of agents for assorted tasks like data collection and API interactions.

- Base64 Blues with Gemini API: Seek, Decode, Fail: A puzzling 'invalid input' snag was hit when a user wielded Base64 with Gemini Pro API**, despite File API uploads being the lone documented method.

- The collective's guidance pointed towards the need for clarity in docs and further elaboration on Base64 usage with APIs.

- ToolCall Toss-up: LangChain’s Legacy to OpenAIToolCall:

ToolCall, now obsolete, directs users to its successorOpenAIToolCall, introducing anindexfeature for order.- The community pondered package updates and the handling of auto mode's inadvertent default tool calls.

- Hallucination Hazards: Chatbots Conjure Queries: Hallucinations in HuggingFace models were reported, provoking discussions around the LLM-generated random question/answer pairs for chatbots.

- Alternative remedies were offered, including a shift to either openAI-models or FireworksAI models, although finetuned llama models seemed resilient to the typical repetition penalties.

- Embedding Excellence: OpenAI Models Spotlight: Curiosity peaked over the optimal OpenAI embedding model, sparking a discourse on the best model to comprehend and utilize embedding vectors.

- The general consensus leaned towards

text-embedding-ada-002recommended as the go-to model in LangChain for vector embeddings.

- The general consensus leaned towards

LlamaIndex Discord

- Dedupe Dancing with LlamaIndex: The LlamaIndex Knowledge Graph undergoes node deduplication with new insights and explanations in a related article, highlighting the significance of knowledge modeling.

- Technical difficulties arose when executing the NebulaGraphStore integration, as detailed in GitHub Issue #14748, pointing to a potential mismatch in method expectations.

- Fusion of Formulas and Finances: Combining SQL and PDF embeddings sparked discussions on integrating databases and documents, directed by examples from LlamaIndex's SQL integration guide.

- A mention of an issue with

NLSQLTableQueryEngineprompted debate over the correct approach given that Manticore's query language differs from MySQL's classic syntax.

- A mention of an issue with

- Redis Rethinks Multi-Agent Workflows: @0xthierry's Redis integration facilitates the construction of production workflows, creating a network for agent services to communicate, as detailed in a popular thread.

- The efficiency of multi-agent systems was a central theme, with Redis Queue acting as the broker, reflecting a trend towards streamlined architectures.

- Chunky Data, Sharper embeddings: Efforts to chunk data into smaller sizes led to improved precision within LlamaIndex's embeddings, per suggestions on optimal chunk and overlap settings in the Basic Strategies documentation.

- The LlamaIndex AI community agreed that a

chunk_sizeof 512 with an overlap of 50 optimizes detail capture and retrieval accuracy.

- The LlamaIndex AI community agreed that a

- Advanced RAG with LlamaIndex's Touch: For a deep dive into agent modules, LlamaIndex's guide offers a comprehensive walkthrough, showcased in @kingzzm's tutorial on utilizing LlamaIndex query pipelines.

- RAG workflows' complexities are unpacked in steps, from initiating a query to fine-tuning query engines with AI engineers in mind.

OpenInterpreter Discord

- GUI Glory: OpenInterpreter Upgrade: The integration of a full-fledged GUI into OpenInterpreter has added editable messages, branches, auto-run code, and save features.

- Demands for video tutorials to explore these functionalities signal a high community interest.

- OS Quest: OpenAI's Potential Venture: Speculation is rife following a tweet hint about OpenAI, led by Sam Altman, possibly brewing its own OS.

- Suspense builds as community members piece together hints from recent job postings.

- Phi-3.1: Promise and Precision: Techfren's analysis on Phi-3.1 model's potential reveals impressive size-to-capability ratio.

- Yet, discussions reveal it occasionally stumbles on precise

execution, sparking talks on enhancement.

- Yet, discussions reveal it occasionally stumbles on precise

- Internlm2 to Raspi5: A Compact Breakthrough: 'Internlm2 smashed' garners focus for its performance on a Raspi5 system, promising for compact computing needs.

- Emphasis is on exploring multi-shot and smash modes for novel IoT applications.

- Ray-Ban's Digital Jailbreak: Community's Thrill: A possibility of jailbreaking Meta Ray-Ban has the community buzzing with excitement and anticipation.

- The vision of hacking this hardware elicits a surge of interest for new functionality opportunities.

LLM Finetuning (Hamel + Dan) Discord

- Agents Assemble in LLM: A user explained the addition of agents in LLMs to enhance modularity within chat pipelines, using JSON output for task execution such as fetching data and API interaction.

- The shared guide shows steps incorporating Input Processing and LLM Interpretation, highlighting modular components' benefits.

- OpenAI API Keys: The Gateway for Tutorials: API keys are in demand for a chatbot project tutorial, with a plea for key sharing amongst the community to aid in the tutorial's creation.

- The member did not provide further context but stressed the temporary need for the key to complete and publish their guide.

- Error Quest in LLM Land: Members voiced their struggles with unfamiliar errors from modal and axolotl, expressing the need for community help on platforms like Slack.

- While specific nature of the errors was not detailed, conversations insinuated a need for better problem-solving channels for these technical issues.

- Navigating Through Rate Limit Labyrinths: A user facing token rate limitations during Langsmith evaluation found respite by tweaking the max_concurrency setting.

- Discussions also traversed strategies to introduce delays in script runs, aiming to steer clear of the rate limits imposed by service providers.

- Tick Tock Goes the OpenAI Clock: The discourse revealed that OpenAI credits are expiring on September 1st, with users clarifying the deadline after inquiries surfaced.

- Talks humorously hinted at initiating a petition to extend credit validity, indicating users' reliance on these resources beyond the established expiration.

LAION Discord

- Hugging Face Hits the Green Zone: Hugging Face declares profitability with a team of 220, while keeping most of its platform free and open-source.

- CEO Clement Delangue excitedly notes: 'This isn’t a goal of ours because we have plenty of money in the bank but quite excited to see that @huggingface is profitable these days, with 220 team members and most of our platform being free and open-source for the community!'

- Cambrian-1's Multimodal Vision: Introduction of the Cambrian-1 family, a new series of multimodal LLMs with a focus on vision, available on GitHub.

- This expansion promises to broaden the horizons for AI models integrating images within their learning context.

- MagViT2 Dances with Non-RGB Data: Discussions arose around MagViT2's potential compatibility with non-RGB motion data, specifically 24x3 datasets.

- While the conversation was brief, it raises questions about preprocessing needs for non-standard data formats in AI models.

- Choreographing Data for AI Steps: Preprocessing techniques for non-RGB motion data drew interest for ensuring they can work harmoniously with existing AI models.

- The details on these techniques remain to be clarified in further discussions.

DiscoResearch Discord

- OpenArena Ignites LLM Competition: The release of OpenArena initiates a new platform for LLM showdowns, with a third model judging to boost dataset integrity.

- Primarily incorporating Ollama models, OpenArena is compatible with any OpenAI-based endpoints, broadening its potential application in the AI field.

- WizardLM Paper Casts a Spell on Arena Learning: The concept of 'Arena Learning' is detailed in the WizardLM paper, establishing a new method for LLM evaluation.

- This simulation-based methodology focuses on meticulous evaluations and constant offline simulations to enhance LLMs with supervised fine-tuning and reinforcement learning techniques.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!