[AINews] Meta Llama 3.3: 405B/Nova Pro performance at 70B price

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

"a new alignment process and progress in online RL techniques" is all you need.

AI News for 12/5/2024-12/6/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (206 channels, and 5628 messages) for you. Estimated reading time saved (at 200wpm): 535 minutes. You can now tag @smol_ai for AINews discussions!

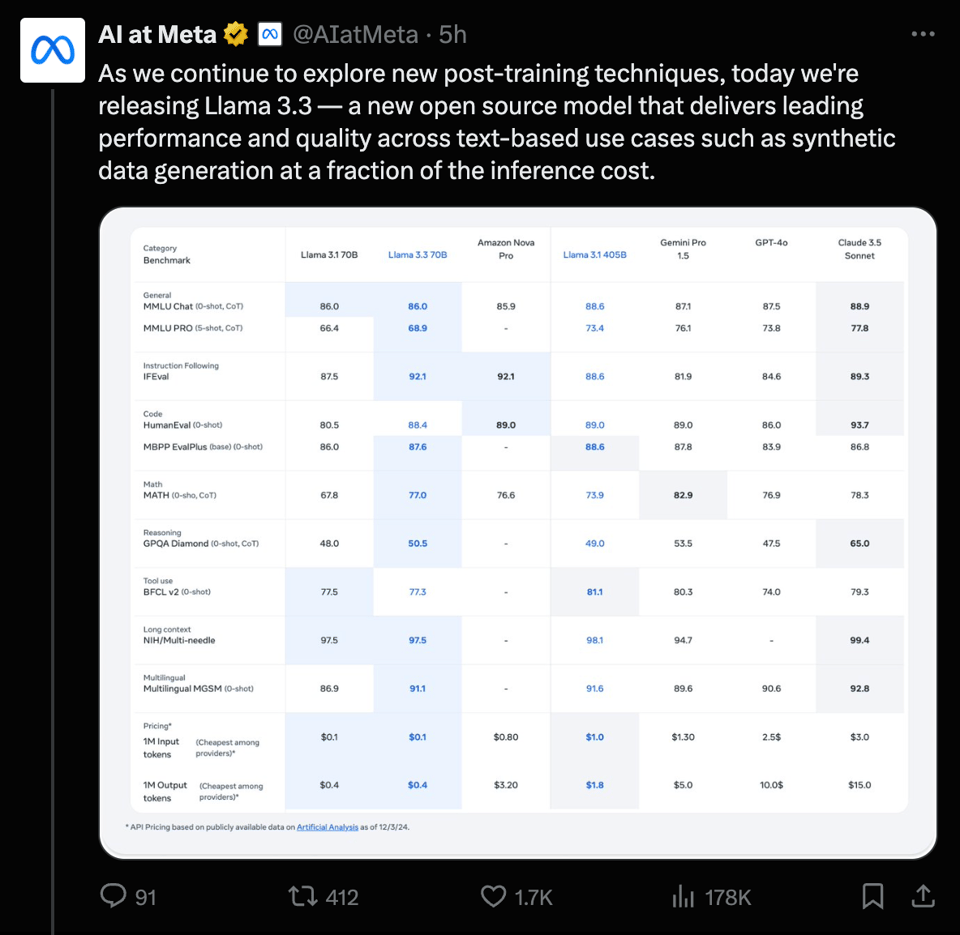

Meta AI, sensibly waiting for OpenAI to release an o1 finetuning waitlist, thankfully kept their sane versioning strategy and simply bumped their Llama minor version yet again to 3.3, this time matching 405B performance with their 70B model, using "a new alignment process and progress in online RL techniques". No papers of course.

Amazon Nova Pro had all of 3 days to sit and look pretty, but with Meta loudly advertising same performance at 12% of the cost, they have been smacked back down in the hierarchy of price-to-performance ratios.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Here are the key themes and discussions from the Twitter activity, organized by major topics:

Meta's Llama 3.3 70B Release

- Release Details: @AIatMeta announced Llama 3.3, a 70B model delivering performance comparable to Llama 3.1 405B but with significantly lower compute requirements. The model achieves improved performance on GPQA Diamond (50.5%), Math (77.0%), and Steerability (92.1%).

- Several providers including @hyperbolic_labs and @ollama quickly announced support for serving the model.

- The model supports 8 languages and maintains the same license as previous Llama releases.

OpenAI's Reinforcement Fine-Tuning (RFT) Announcement

- Product Launch: @OpenAI previewed Reinforcement Fine-Tuning, allowing organizations to build expert models for specific domains using limited training data.

- @stevenheidel noted that RFT allows users to create custom models using the same process OpenAI uses internally.

- Alpha access is being provided to researchers and enterprises through a research program.

Google's Gemini Performance Updates

- New Model Version: @lmarena_ai announced that Gemini-Exp-1206 is now leading benchmarks, taking first place overall and tying with GPT-4o for coding performance.

- The model shows improvements across various benchmarks including hard prompts and style control.

- @OriolVinyalsML celebrated Gemini's one-year anniversary and noted the progress in beating their own benchmarks.

LlamaCloud & Document Processing

- Feature Updates: @jerryjliu0 showcased LlamaCloud's capabilities for extracting tables from documents and performing analytics workloads.

- The platform now supports rendering tables and code directly in the UI.

- @jerryjliu0 highlighted automated extraction as an overlooked but valuable use case, particularly for receipt/invoice processing.

Memes and Industry Commentary

- OpenAI Pricing: Multiple users including @aidan_mclau commented on OpenAI's $200/month plan, with discussions around the economics of AI pricing models.

- @sama clarified that most users will be best served by the free tier or $20/month plus tier.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Llama 3.3 70B Performance vs. GPT-4o and Others

- Llama-3.3-70B-Instruct · Hugging Face (Score: 465, Comments: 139): The post is about Llama-3.3-70B-Instruct, a model available on Hugging Face, but lacks additional details or context regarding its features, capabilities, or applications.

- Discussions highlight the impressive performance of Llama-3.3-70B-Instruct, noting its comparable capabilities to the Llama 405B despite having significantly fewer parameters. Users are particularly impressed with its 128K context and multilingual abilities, with benchmarks showing substantial improvements in code generation, reasoning, and math.

- There is interest in the potential release of smaller versions of the model, as the 70B model is challenging for consumer-grade hardware due to VRAM limitations. Techniques like quantizing are discussed as methods to make it runnable on GPUs with 24G VRAM like the RTX 4090, although this may impact output quality.

- Some users express skepticism about the model's real-world performance compared to benchmarks, with comparisons being made to Qwen2.5 72B and discussions about the trade-offs in performance scaling. The community is keen on seeing further architectural changes in future iterations, such as Llama 4 and Qwen 3.

- Meta releases Llama3.3 70B (Score: 432, Comments: 100): Meta has released Llama3.3 70B, a model that serves as a drop-in replacement for Llama3.1-70B and approaches the performance of the 405B model. This new model is highlighted for its cost-effectiveness, ease of use, and improved accessibility, with further information available on Hugging Face.

- Llama 3.3 70B shows significant performance improvements over previous versions, with notable enhancements in code generation, multilingual capabilities, and reasoning & math, as highlighted by vaibhavs10. The model achieves comparable performance to the 405B model with fewer parameters, and specific metric improvements include a 7.9% increase in HumanEval for code generation and a 9% increase in MATH (CoT).

- Discussions around multilingual support emphasize that Llama 3.3 supports 7 additional languages besides English. However, there are concerns about the lack of a pretrained version, as Electroboots and mikael110 mention that only an instruction-tuned version is available, according to the Official Docs.

- Commenters like Few_Painter_5588 and SeymourStacks compare Llama to other models like Qwen 2.5 72b, noting Llama's improved prose quality and reasoning capabilities, though Qwen is still considered smarter in some benchmarks. There is also a call for more comprehensive benchmarks that focus on fundamentals rather than being easily gamed by post-training.

- New Llama 3.3 70B beats GPT 4o, Sonnet and Gemini Pro at a fraction of the cost (Score: 112, Comments: 0): Llama 3.3 70B reportedly outperforms GPT-4o, Sonnet, and Gemini Pro while offering cost advantages. Specific details on performance metrics and cost comparisons are not provided in the post.

Theme 2. Open Source O1: Call for Better Models

- Why we need an open source o1 (Score: 267, Comments: 135): The author criticizes the new o1 model, noting its downgrade from o1-preview in coding tasks, where it fails to follow instructions and makes unauthorized changes to scripts. They argue that these issues highlight the need for open-source models like QwQ, as proprietary models may prioritize profit over performance and reliability, making them unsuitable for critical systems.

- Open-source models like QwQ are gaining traction due to reliability issues with proprietary models like o1, which often change behavior unexpectedly and disrupt workflows. Users prefer open-weight solutions for stability, as they can control updates and ensure consistent performance over time.

- The o1 model has been criticized for its poor performance in coding, with users reporting unauthorized changes and a failure to follow instructions. This has led to concerns about its suitability for critical applications, with some users suggesting that OpenAI might be cutting costs by releasing less capable models deliberately.

- There is a general sentiment that models have been downgraded since GPT-4, with users expressing dissatisfaction with newer iterations like o1 and Gemini. Many believe these changes are driven by business strategies rather than technical improvements, leading to a preference for older models or open-source alternatives.

- Am I the only person who isn't amazed by O1? (Score: 124, Comments: 95): The author expresses skepticism about the O1 model, stating that it doesn't represent a paradigm shift. They argue that OpenAI has merely applied existing methods from the open source AI community, such as OptiLLM and prompt optimization techniques like "best of n" and "self consistency," which have been used since October.

- Many users express dissatisfaction with the O1 model, describing it as a downgrade from O1-preview and questioning the value of paying $200/month for the service. Some suggest that the model's limitations, such as losing track during extended interactions, make it unsuitable for professional use, and they prefer using 4o or other alternatives like Claude.

- There is discussion about the perception of OpenAI's strategy, with some users noting that the company has shifted to a "for-profit" model, focusing on incremental upgrades rather than groundbreaking innovations. This has led to a sense of disappointment among users who feel that OpenAI is prioritizing enterprise customers over individual consumers.

- The conversation touches on the broader AI landscape, with mentions of other models like QwenQbQ and DeepSeek R1, and the potential for open-source advancements. Users highlight the need for reliable models integrated into workflows, emphasizing long- and short-term memory, and mature agent frameworks, as opposed to merely increasing intelligence.

Theme 3. Windsurf Cascade System Prompt Details

- Windsurf Cascade Leaked System prompt!! (Score: 173, Comments: 51): Windsurf Cascade is an agentic AI coding assistant designed by the Codeium engineering team for use in Windsurf, an IDE based on the AI Flow paradigm. Cascade aids users in coding tasks such as creating, modifying, or debugging codebases, and operates with tools like Codebase Search, Grep Search, and Run Command. It emphasizes asynchronous operations, precise tool usage, and a professional communication style, while ensuring code changes are executable and user-friendly.

- Discussions highlighted the complexity of prompts in AI models, with users expressing astonishment at the effectiveness of intricate prompts despite numerous negatively formulated rules. There was curiosity about the specific model used by Windsurf Cascade.

- The use of HTML-style tags in prompts was discussed, with explanations that they provide structure and focus, aiding the model in processing longer prompts. Some users referenced a podcast with Erik Schluntz from Anthropic, noting that structured markup like XML/HTML-style tags can be more effective than raw text.

- There was a debate on the effectiveness of positive reinforcement in prompts, with some arguing that positive language can improve model performance by associating keywords with better solutions. However, others pointed out the limitations of endlessly adding conditions to prompts, comparing it to inefficiently programming with numerous "IF" statements.

Theme 4. HuggingFace Course: Preference Alignment for LLMs

- Free Hugging Face course on preference alignment for local llms! (Score: 192, Comments: 13): Hugging Face offers a free course on preference alignment for local LLMs, featuring modules like Argilla, distilabel, lightval, PEFT, and TRL. The course covers seven topics, with "Instruction Tuning" and "Preference Alignment" already released, while others like "Parameter Efficient Fine Tuning" and "Vision Language Models" are scheduled for future release.

- Colab Format Clarification: There was confusion about the term "Colab format," with users clarifying that the course materials are in notebook format, which can be run on Google Colab but are primarily designed to run locally. bburtenshaw emphasized that the notebooks contain links to open them in Colab for convenience, though everything is intended to run on local machines.

- Local LLMs Expectation: Users like 10minOfNamingMyAcc expected the course to provide a local codebase for local LLMs, aligning with the course's focus on local model training and usage. The course indeed supports local execution of code and models.

- Course Access: The course is available on GitHub, with MasterScrat providing the link here for those interested in accessing the materials directly.

Theme 5. Adobe Releases DynaSaur Code for Self-Coding AI

- Adobe releases the code for DynaSaur: An agent that codes itself (Score: 88, Comments: 13): Adobe has released the code for DynaSaur, an agent capable of coding itself. This move highlights Adobe's contribution to the field of AI, specifically in autonomous coding agents.

- Eposnix advises running DynaSaur in a VM due to the risk of it iterating indefinitely and potentially causing system damage. They suggest that confidence scoring could prevent this by allowing the AI to quit if a task is too difficult, rather than persisting with potentially harmful solutions.

- Knownboyofno explains that DynaSaur can autonomously create tools by generating Python functions to achieve specified goals, providing a clearer understanding of its capabilities.

- Staladine and others express interest in seeing practical examples or demonstrations of DynaSaur in action, indicating a need for more illustrative resources to comprehend its functionality.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. OpenAI GPT-4.5: Surpassing Expectations in Creative Language Tasks

- Asked her to roast the UHC CEO (Score: 195, Comments: 27): ChatGPT critiques the health insurance industry by equating profit-driven practices to "playing Monopoly with people's lives," reflecting on the moral implications of prioritizing profits over patient care. The conversation also touches on the challenges of roasting a person who has been assassinated.

- The discussion highlights ChatGPT's evolving capabilities, with users expressing shock at its ability to deliver incisive critiques, particularly targeting insurance executives without censorship. Comments reflect on ChatGPT's boldness and suggest it has become more radical, especially in political contexts.

- A user humorously notes that "facts have always had a liberal bias," indicating a perceived alignment of ChatGPT's critiques with liberal viewpoints. This underscores the AI's perceived role in challenging established norms and figures in sensitive industries.

- The community engages with the post through humor and memes, showcasing a lighthearted yet critical reception of the AI's commentary on the insurance industry, with references to the harshness of its critique as "brutal" and "murderous."

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. AI Model Releases and Performance Battle Royale

- Meta’s Llama 3.3 Outperforms 405B Rival: Meta’s Llama 3.3, featuring 70B parameters, matches the performance of a 405B model while being more cost-efficient, igniting comparisons with Gemini-exp-1206 and Qwen2-VL-72B models.

- Users celebrate Llama 3.3’s enhanced math solutions and robust performance in coding tasks, citing its suitability for various engineering projects.

- The release spurs competitive benchmarking, with community members eager to integrate and test the model against established standards.

- "Saw remarkable improvements in syntax handling," a user exclaimed, highlighting the model’s advanced capabilities.

- Gemini-exp-1206 Ties with O1 in Coding Benchmarks: Google’s Gemini-exp-1206 model secures a top spot overall, matching O1 in coding benchmarks and pushing technological boundaries in AI performance.

- The model showcases significant advancements in synthetic data generation and cost-effective inference, appealing to developers focused on scalability.

- Community discussions highlight Gemini-exp-1206’s potential to exceed expectations in complex AI applications.

- Explore Gemini-exp-1206’s capabilities.

Theme 2. Pricing Shakeups Spark User Grievances

- Windsurf’s Steep Price Hike Frustrates Subscribers: Codeium raises Windsurf’s Pro tier to $60/month with new hard limits on prompts and flow actions, leaving many users dissatisfied and seeking clarification on grandfathering policies.

- Subscribers express frustration over the sudden price increase without prior bug fixes, questioning the sustainability of the new pricing model.

- The abrupt changes have accelerated exploration of alternatives like Cursor, Bolt AI, and Copilot, despite some sharing similar reliability issues.

- "This pricing is unsustainable given the current performance," a user lamented.

- See Windsurf’s new pricing details.

- Lambda Labs Slashes Model Prices to Attract Developers: DeepInfra cuts prices on multiple models, including Llama 3.2 3B Instruct for $0.018 and Mistral Nemo for $0.04, aiming to provide affordable options for budget-conscious developers.

- These reductions make high-quality models more accessible, fostering broader adoption and innovation within the developer community.

- Users welcome the lower costs, noting the improved value proposition and increased accessibility.

- Check out DeepInfra’s price cuts.

Theme 3. Tool Stability Fails and User Frustrations

- Claude’s Code Struggles Deter Developers: Users report significant bugs in tools like Windsurf and Claude, leading to unreliable performance and increased error rates, making coding tasks more cumbersome.

- Persistent server outages and issues like 'resource_exhausted' undermine productivity, causing users to reconsider their subscriptions.

- Community consensus highlights the critical need for reliable performance in AI tools before any further pricing adjustments.

- Read more user feedback on Claude.

- Cursor’s Sluggish Response Times Push Users to Alternatives: Users report connection failures and slow responses with Cursor’s Composer, often requiring new sessions for functionality, leading to frustration and migration towards more stable tools like Windsurf.

- Despite new features in Cursor 0.43.6, issues like unreliable Composer responses persist, dampening user experience.

- Discussions emphasize the need for robust bug fixes and performance improvements to retain user trust.

- "Cursor’s performance doesn't meet expectations," a developer noted.

- Explore Cursor’s performance issues.

Theme 4. Feature Enhancements and New Integrations Unveiled

- Aider’s Pro Upgrade Introduces Advanced Voice and Context Features: Aider Pro now includes unlimited advanced voice mode, a new 128k context for O1, and a copy/paste to web chat capability, enhancing workflow efficiency and handling of extensive documents and code.

- Additionally, process suspension support and exception capture analytics provide users with better control and insights into their processes.

- User feedback praises the 61% code contribution from Aider, showcasing its growing capabilities and robust development.

- Discover Aider Pro’s new features.

- OpenRouter’s Author Pages Simplify Model Discovery: OpenRouter launches Author Pages, enabling users to explore models by creators with detailed stats and related models showcased through a convenient carousel interface.

- This feature enhances model discovery and allows for better analysis, making it easier for users to find and evaluate diverse AI models.

- The community anticipates improved user experience and streamlined navigation through different authors' collections.

- Visit OpenRouter’s Author Pages.

Theme 5. Community Concerns: Security, Licensing, and Fake Apps

- Beware the Fake Perplexity App!: Discord users alert the community about a fake Perplexity app circulating on the Windows app store, which deceptively uses the official logo and unauthorized APIs, directing users to a suspicious Google Doc and urging immediate reporting to prevent security breaches.

- Members highlight the importance of verifying app authenticity to avoid exposure to malware and phishing attempts.

- Discussions emphasize the need for vigilance and community-driven measures to combat fraudulent applications.

- Report the fake Perplexity app.

- Phi-3.5 Overly Censors AI Responses: Microsoft’s Phi-3.5 model is criticized for being highly censored, making it resistant to offensive queries and potentially limiting its usefulness for technical tasks, sparking debates on the balance between safety and usability in AI models.

- Users debate methods to uncensor or improve the model’s functionality, including sharing links to uncensored versions on Hugging Face.

- Concerns are raised about the censorship’s impact on coding and technical applications, urging developers to seek models with better contextual understanding.

- "Phi-3.5’s censorship makes it impractical for many real-world applications," a user argued.

- Explore Phi-3.5’s uncensored version.

- Security Oversight in AI Tools Raises Alarm: Discussions surrounding Safety Concerns in AI tools highlight issues like over-censorship and the lack of secure license agreements, emphasizing the need for better security protocols and transparent licensing to protect user interests.

- Community members call for improved overseeing mechanisms to ensure AI models are both safe and functional, avoiding overzealous restrictions that hinder practical use.

- "We need a balance between safety and usability in AI models," a participant stated.

- Learn about AI model safety concerns.

PART 1: High level Discord summaries

Codeium / Windsurf Discord

- Windsurf Pricing Overhaul: Codeium has increased Windsurf's Pro tier to $60/month, introducing hard limits on user prompts and flow actions, which has unsettled many subscribers.

- Users are demanding clarity on the new pricing structure and whether existing plans will be grandfathered, expressing dissatisfaction with the abrupt changes without prior bug fixes.

- User Frustrations with AI Tools: Engineers reported significant bugs in tools like Windsurf, hindering effective coding and leading to reconsideration of their subscriptions.

- There is a consensus that AI tools need to ensure reliable performance and user-friendly features before implementing further pricing adjustments.

- Alternatives to Windsurf: In response to Windsurf's pricing and performance issues, users are exploring alternatives such as Cursor, Bolt AI, and Copilot for more consistent performance.

- Despite considering these alternatives, some users remain cautious as tools like Bolt AI are reported to have similar reliability challenges.

- Impact of Server Issues: Frequent server outages and errors like 'resource_exhausted' are disrupting the use of Windsurf, negatively impacting user productivity.

- These technical problems are intensifying user frustrations and accelerating the shift towards other AI coding solutions.

- Feedback on AI Tool Performance: Users have highlighted that Claude struggles with context retention and introduces errors in code, reducing its effectiveness in development tasks.

- This feedback emphasizes the need for AI tools to enhance their accuracy and contextual understanding to better meet the demands of engineering projects.

Notebook LM Discord Discord

- Audio Generation with NotebookLM: Members explored using NotebookLM for audio generation, successfully creating podcasts from documents. One user reported a 64-minute podcast generated from a multilingual document, highlighting varied outcomes based on input type.

- Discussions revealed challenges in maintaining coherence and focus in AI-generated audio, with some users facing unexpected tangents despite effective prompting techniques.

- Language and Voice Support in NotebookLM: Conversations centered on NotebookLM's support for languages beyond English, with some users recalling limitations to English only. The impressive voice quality in generated audio sparked debates on its potential as a standalone text-to-speech solution.

- Users questioned the scope of language support, discussing the possibility of expanding NotebookLM's multilingual capabilities to enhance its utility for a global engineering audience.

- Game Development using Google Docs and AI: Engineers shared strategies for utilizing Google Docs to organize game rules and narratives, leveraging AI to generate scenarios and build immersive worlds. One member highlighted successes with AI-generated scenarios that blend serious and humorous content in their RPG games.

- The integration of AI in game development was lauded for enhancing creative processes, with users emphasizing the flexibility of Google Docs as a collaborative tool for narrative construction.

- Spreadsheet Integration Workarounds for NotebookLM: Users identified limitations with direct spreadsheet uploads into NotebookLM, suggesting alternatives like converting data into Google Docs for better compatibility. One user mentioned reducing spreadsheet complexity by hiding unnecessary columns to incorporate essential data.

- Creative methods for integrating spreadsheet data were discussed, focusing on maintaining data integrity while circumventing NotebookLM's upload restrictions.

- NotebookLM Performance and Usability Feedback: Feedback on NotebookLM's performance was mixed, with discussions on the accuracy and depth of generated content. Users emphasized the need for more transparency regarding potential paywalls and consistent performance metrics.

- Concerns about the disappearance of the new notebook button led to speculations about possible notebook limits, affecting the overall usability and workflow within NotebookLM.

Unsloth AI (Daniel Han) Discord

- PaliGemma 2 Launch Expands Model Offerings: Google introduced PaliGemma 2 with new pre-trained models of 3B, 10B, and 28B parameters, providing greater flexibility for developers.

- The integration of SigLIP for vision tasks and the upgrade to Gemma 2 for the text decoder are expected to enhance performance compared to previous versions.

- Qwen Fine-Tuning Hits VRAM Limitations: Engineers encountered issues fine-tuning the Qwen32B model on an 80GB GPU, necessitating a 96GB H100 NVL GPU to prevent OOM errors (Issue #1390).

- Conversations revealed that QLORA might use more memory than LORA, leading to ongoing investigations into VRAM consumption discrepancies.

- Unsloth Pro Anticipates Upcoming Release: Unsloth Pro is slated for release soon, generating excitement among users awaiting enhanced features.

- Community members are looking forward to leveraging Unsloth Pro to streamline their workflows and utilize new model capabilities.

- Llama 3.3 Debuts 70B Model with Efficiency Gains: Llama 3.3 has been released, featuring a 70B parameter model that delivers robust performance while reducing operational costs (Tweet by @Ahmad_Al_Dahle).

- Unsloth has introduced 4-bit quantized versions of Llama 3.3, which improve loading times and decrease memory usage.

- Optimizing LoRA Fine-Tuning Configurations: 'Silk.ai' questioned the necessity of the use_cache parameter in LoRA fine-tuning, sparking a debate on optimal settings.

- Another contributor emphasized the importance of enabling LoRA dropout to achieve the desired model performance.

Cursor IDE Discord

- Cursor Performance Takes a Hit: Users reported that Cursor has been experiencing connection failures and slow response times while using the Composer, often requiring new sessions for proper functionality.

- Many compared its performance unfavorably to Windsurf, expressing frustration over persistent issues.

- Windsurf Surpasses Cursor: Several users mentioned that Windsurf performed better in handling tasks without issues, even under heavy code generation demands.

- People highlighted that while Cursor struggles to apply changes, Windsurf executed similar tasks smoothly, shifting user preferences.

- Cursor 0.43.6 Adds Sidebar Integration: With the latest Cursor 0.43.6 update, users noted that the Composer UI has been integrated into the sidebar, but some functions like long context chat have been removed.

- New features such as inline diffs, git commit message generation, and early versions of an agent were also mentioned.

- Composer Responds Unreliably: Users shared mixed experiences regarding Cursor's Composer feature, with reports of it sometimes failing to respond to queries.

- Issues include Composer not generating the expected code or missing updates, especially after recent updates.

- Exploring Unit Testing with Cursor: A user inquired about effective methods for writing unit tests using Cursor, expressing interest in shared techniques.

- While a definitive response is pending, users encouraged sharing their experiences and methods for testing.

OpenRouter (Alex Atallah) Discord

- OpenRouter Launches Author Pages: OpenRouter introduced the Author Pages feature, enabling users to explore models by creators at openrouter.ai/author. This update includes detailed stats and related models displayed via a carousel.

- The feature aims to enhance model discovery and analysis, providing a streamlined experience for users to navigate through different authors' collections.

- Amazon Nova Models Receive Mixed Feedback: Users have reported varying experiences with Amazon Nova models, describing some as subpar compared to alternatives like Nova Pro 1.0.

- Despite criticisms, certain users highlighted the models' speed and cost-effectiveness, indicating a divide in user satisfaction.

- Llama 3.3 Deployment and Performance: Llama 3.3 has been successfully launched, with providers offering it shortly after release, enhancing capabilities for text-based applications as detailed in OpenRouter's announcement.

- AI at Meta noted that this model promises improved performance in generating synthetic data while reducing inference costs.

- DeepInfra Reduces Model Pricing: DeepInfra announced significant price cuts on multiple models, including Llama 3.2 3B Instruct for $0.018 and Mistral Nemo for $0.04, as per their latest tweet.

- These reductions aim to provide budget-conscious developers with access to high-quality models at more affordable rates.

- OpenAI Introduces Reinforcement Learning Finetuning: During OpenAI Day 2, the company announced the upcoming reinforcement learning finetuning for o1, though it generated limited excitement among the community.

- Participants expressed skepticism regarding the updates, anticipating more substantial advancements beyond the current offerings.

Eleuther Discord

- MoE-lite Motif Enhances Transformer Efficiency: A member introduced the MoE-lite motif, utilizing a custom bias-per-block-per-token to nonlinearly affect the residual stream, which suggests faster computations despite increased parameter costs.

- The discussion compared its efficiency against traditional Mixture of Experts (MoE) architectures, debating potential benefits and drawbacks.

- GoldFinch Architecture Streamlines Transformer Parameters: A member detailed the GoldFinch model, which removes the V matrix by deriving it from a mutated layer 0 embedding, significantly enhancing parameter efficiency. GoldFinch paper

- The team discussed the potential to replace or compress both K and V parameters, aiming to improve overall transformer efficiency.

- Layerwise Token Embeddings Optimize Transformer Parameters: Members explored layerwise token value embeddings as a substitute for traditional value matrices, promoting significant parameter savings in transformers without compromising performance.

- The approach leverages initial embeddings to dynamically compute V values, thereby reducing reliance on extensive value projections.

- Updated Mechanistic Interpretability Resources Now Available: A member shared a Google Sheets resource cataloging key papers in mechanistic interpretability, organized by themes for streamlined exploration.

- The resource includes theme-based categories and annotated notes to assist researchers in navigating foundational literature effectively.

- Dynamic Weight Adjustments Boost Transformer Efficiency: Members proposed dynamic weight adjustments to enhance parameter allocation and Transformer efficiency, drawing parallels to regularization methods like momentum.

- The conversation highlighted potential performance improvements and streamlined computations by eliminating or modifying V parameters.

aider (Paul Gauthier) Discord

- Aider v0.67.0 Released With New Features: The latest Aider v0.67.0 introduces support for Amazon Bedrock Nova models, enhanced command functionalities, process suspension support, and exception capture analytics.

- Highlighting its development, Aider contributed 61% of the code for this release, showcasing its robust capabilities.

- Aider Pro Features Gain Attention: Aider Pro now includes unlimited advanced voice mode, a new 128k context for O1, and a copy/paste to web chat capability allowing seamless integration with web interfaces.

- Users praised these features for enabling the handling of extensive documents and code, enhancing their workflow efficiency.

- Gemini 1206 Model Release Sparks Interest: Google DeepMind released the Gemini-exp-1206 model, claiming performance improvements over previous iterations.

- Community members are eager to see comparative benchmarks against models like Claude and await detailed performance results from Paul Gauthier.

- DeepSeek's Performance in Aider: DeepSeek was discussed as a cost-effective option for Aider users, alongside alternatives like Qwen 2.5 and Haiku.

- There is speculation about the potential of fine-tuning community versions to enhance DeepSeek’s benchmarks in Aider.

Interconnects (Nathan Lambert) Discord

- Gemini-exp-1206 claims top spot: The new Gemini-exp-1206 model achieved first place overall and is tied with O1 on coding benchmarks, marking significant improvements over previous versions.

- OpenAI's demonstration revealed that fine-tuned O1-mini can surpass the full O1 model based on medical data, highlighting Gemini's robust performance.

- Llama 3.3 brings cost-effective performance: Enhancements in Llama 3.3 are driven by updated alignment processes and advancements in online reinforcement learning techniques.

- This model matches the performance of the 405B model while enabling more cost-efficient inference on standard developer workstations.

- Qwen2-VL-72B launched by Alibaba: Alibaba Cloud introduced the Qwen2-VL-72B model, featuring advanced capabilities in visual understanding.

- Designed for multimodal tasks, it excels in video comprehension and operates seamlessly across various devices, aiming to enhance multimodal performance.

- Reinforcement Fine-Tuning advances AI models: Discussions emphasized the role of Reinforcement Learning in fine-tuning models to outperform existing counterparts.

- Key points included the use of pre-defined graders for model training and evolving methodologies in RL training approaches.

- AI Competition drives model innovation: Members called for robust competition in AI, urging OpenAI to challenge models like Claude and Deepseek to foster advancements.

- This sentiment underscores the community's belief that effective competitors are essential for continual progress in the AI field.

Bolt.new / Stackblitz Discord

- Enhancing Token Efficiency in Bolt.new: Members discussed strategies like Specific Section Edits to reduce token usage by modifying only selected sections instead of regenerating entire files, aiming for improved token management efficiency.

- Questions were raised about daily token limits for free accounts and the benefits of purchasing the token reload option to allow token rollover.

- Integrating GitHub Repos with Bolt.new: Users explored GitHub Repo Integration by starting Bolt with repository URLs such as bolt.new/github.com/org/repo, noting that private repositories currently require being set to public for successful integration.

- To resolve deployment errors related to private repos, users suggested switching to public repositories to bypass permission issues.

- Managing Feature Requests and Improvements: Discussions emphasized efficient Feature Requests Management through engaging with Bolt for handling requests individually, which helps reduce hallucination in bot responses.

- Community members proposed submitting feature enhancement ideas via the GitHub Issues page, highlighting the importance of user feedback for product development.

- Optimizing Development with Local Storage and Backend Integration: Developers recommended building applications using local storage initially, then migrating features to backend solutions like Supabase to facilitate smoother testing and streamline the integration process.

- This method was confirmed to help maintain app polish and reduce errors during the transition to database storage.

Stability.ai (Stable Diffusion) Discord

- Reactor's Face Swap Showdown: Users debated if Reactor is the optimal choice for face swapping, with no clear consensus reached.

- Participants recommended experimenting with various models to evaluate their impact on output quality.

- AI Discords Diversify Discussions: A user sought a Discord community for diverse AI topics beyond LLMs, triggering recommendations.

- Members suggested Gallus and TheBloke Discords as hubs for a wide range of AI discussions.

- Cloud GPU Providers' Price Wars: Users shared preferred Cloud GPU providers like Runpod, Vast.ai, and Lambda Labs, highlighting competitive pricing.

- Lambda Labs was noted as often the cheapest option, though access can be challenging.

- Lora & ControlNet Tune Stable Diffusion: Discussion revolved around adjusting Lora's strength in Stable Diffusion, noting it can exceed 1 but risks image distortion at higher settings.

- Members recommended using OpenPose for accurate poses and leveraging depth control for improved results.

- AI Art Licensing Quandary: A user raised questions about exceeding the revenue threshold under Stability AI's license agreement.

- Clarifications suggested outputs remain usable, but the license for model use is revoked upon termination.

OpenAI Discord

- Reinforcement Fine-Tuning in 12 Days of OpenAI: The YouTube event '12 Days of OpenAI: Day 2' features Mark Chen, SVP of OpenAI Research, and Justin Reese discussing the latest in reinforcement fine-tuning.

- Participants are encouraged to join the live stream starting at 10am PT for insights directly from leading researchers.

- Gemini 1206 Experimental Model Surpasses O1 Pro: The Gemini 1206 experimental model has been highlighted for its strong performance, surpassing O1 Pro in tasks such as generating SVG code for detailed unicorn illustrations.

- Users report that Gemini 1206 delivers enhanced results, particularly excelling in SVG generation and other technical applications.

- O1 Pro Pricing Compared to Gemini 1206: O1 Pro, priced at $200/month, has sparked discussions regarding its value compared to free alternatives like Gemini 1206.

- Some users believe that despite O1's capabilities, the high cost is unjustifiable given the availability of effective free models.

- Demand for Advanced Voice Mode Features: There is a clear community demand for a more advanced voice mode, with current offerings being criticized for their robotic sound.

- Users express hopes for significant improvements in the feature, especially during the upcoming holiday season.

- Collaborative GPT Editing Features Proposed: A member expressed a desire for enabling multiple editors to simultaneously modify a GPT, highlighting the need for collaboration.

- Currently, only the creator can edit a GPT, but the community suggests a 'Share GPT edit access' feature to facilitate teamwork.

Modular (Mojo 🔥) Discord

- VSCode Extension Inquiry Resolved: A member faced issues with VSCode extension tests running with cwd=/, which was resolved after finding the appropriate channel to ask about the extension.

- This incident underscores the significance of directing technical queries to the correct community channels for efficient problem resolution.

- Mojo Function Extraction Errors: A user encountered errors while adapting the

j0function from the math module in Mojo due to an unknown declaration_call_libmduring compilation.- They sought guidance on properly extracting and utilizing functions from the math standard library without encountering compiler issues.

- Programming Career Specialization: Members discussed the benefits of specializing in areas like blockchain, cryptography, or distributed systems for enhanced job prospects in tech.

- Emphasis was placed on targeted learning, hands-on projects, and a solid grasp of fundamental concepts to advance one's career.

- Compiler Passes and Metaprogramming in Mojo: Discussions highlighted new Mojo features enabling custom compiler passes, with ideas to enhance the API for more extensive program transformations.

- Members compared Mojo's metaprogramming approach to traditional LLVM Compiler Infrastructure Project, noting limitations in JAX-style program transformations.

- Education Insights in Computer Science: Participants shared experiences regarding challenging computer science courses and projects that deepened their understanding of programming concepts.

- They discussed balancing personal interests with market demands, using their academic journeys as examples.

Perplexity AI Discord

- Perplexity AI Faces Code Interpreter Constraints: Users reported that Perplexity AI's code interpreter fails to execute Python scripts even after uploading relevant files, restricting its functionality to generating only text and graphs.

- This limitation has sparked conversations about the necessity for Perplexity AI to support actual code execution to better serve technical engineering needs.

- Fake Perplexity App Circulates on Windows Store: Members identified a fake Perplexity app available on the Windows app store, which deceptively uses the official logo and unauthorized API, leading users to a suspicious Google Doc.

- The community urged reporting the fraudulent app to prevent potential security risks and protect the integrity of Perplexity AI offerings.

- Llama 3.3 Model Released with Enhanced Features: Llama 3.3 was officially released, garnering excitement for its improved capabilities over previous versions, as highlighted by user celebrations.

- There is strong anticipation within the community for Perplexity AI to integrate Llama 3.3 into their services to leverage its advanced functionalities.

- Optimizing API Usage with Grok and Groq: Discussions around using Grok and Groq APIs revealed that Grok offers a free starter credit, while Groq provides complimentary usage with Llama 3.3 integration.

- Users shared troubleshooting tips, noting challenges with the Groq endpoint, which some members successfully resolved through community support.

- Introducing RAG Feature to Perplexity API: A member inquired about incorporating the RAG feature from Perplexity Spaces into the API, indicating a demand for advanced retrieval capabilities.

- This interest underscores the community's need for enhanced functionality within the Perplexity API to support more sophisticated data retrieval processes.

LM Studio Discord

- Paligemma 2 Release: The Paligemma 2 release on MLX introduces new models from GoogleDeepMind, enhancing the platform's capabilities.

- Users are encouraged to install it using

pip install -U mlx-vlm, contribute by starring the project, and submitting pull requests.

- Users are encouraged to install it using

- RAG File Limitations: A member discussed workarounds for the 5 file RAG limitation, stressing the necessity to analyze multiple small files for issue detection.

- Community members deliberated on potential solutions and the performance implications of processing smaller file batches with models.

- Llama 3.1 CPU Benchmarks: Benchmarks for the Llama 3.1 8B model on Intel i7-13700 and i7-14700 CPUs were requested to assess potential inference speeds.

- Community insights indicate varying performance metrics based on recent user experiences with similar CPU setups.

- 4090 GPU Price Surge: There is a reported 4090 GPU price surge for both new and used units in certain regions, causing user concerns.

- Rumors suggest some 4090 GPUs might be modded to expand VRAM to 48GB, sparking further discussions.

- Chinese Modding of 4090 GPUs: Reddit discussions about Chinese modders working on 4090 GPUs were mentioned, though no specific sources were provided.

- Users expressed challenges in locating detailed information or links regarding these GPU modding activities.

Cohere Discord

- Rerank 3.5 Model Enhances Search Accuracy: The newly released Rerank 3.5 model offers improved reasoning and multilingual capabilities, enabling more accurate searches of complex enterprise data.

- Members are seeking benchmark scores and performance metrics to evaluate Rerank 3.5's effectiveness.

- Structured Outputs Streamline Command Models: Command models now enforce strict Structured Outputs, ensuring all required parameters are included and enhancing reliability in enterprise applications.

- Users can utilize Structured Outputs in JSON for text generation or Tools via function calling, currently experimental in Chat API V2 with feedback encouraged.

- vnc-lm Integrates with LiteLLM for Enhanced API Connections: vnc-lm is now integrated with LiteLLM, enabling connections to any API that supports Cohere models like Cohere API and OpenRouter.

- The integration allows seamless API interactions and supports multiple LLMs including Claude 3.5, Llama 3.3, and GPT-4o, as showcased on GitHub.

- /embed Endpoint Faces Rate Limit Issues: Users have expressed frustration over the low rate limit of 40 images per minute for the /embed endpoint, limiting the ability to embed datasets efficiently.

- Members suggest reaching out to support for potential rate limit increases.

- Optimizing API Calls with Retry Mechanisms: Users are discussing strategies to optimize their retry mechanisms for API calls using the vanilla Cohere Python client, which inherently handles retries gracefully.

- This has sparked a productive exchange on various approaches to manage API retries effectively.

Latent Space Discord

- Writer deploys built-in RAG tool: Writer has rolled out a built-in RAG tool enabling users to pass a graph ID for model access to the knowledge graph, demonstrated by Sam Julien. This feature supports auto-uploading scraped content into a Knowledge Graph and interactive post discussions.

- The tool enhances content management and interactive capabilities, allowing seamless integration of user-specific knowledge bases into the modeling process.

- ShellSage enhances AI productivity in terminals: The ShellSage project was introduced by R&D staff at AnswerDot AI, focusing on improving productivity through AI integration in terminal environments, as highlighted in this tweet.

- Designed as an AI terminal assistant, ShellSage leverages a hybrid human+AI approach to handle tasks more intelligently within shell interfaces.

- OpenAI launches new RL fine-tuning API: OpenAI announced a new Reinforcement Learning fine-tuning API that allows users to apply advanced training algorithms to their models, detailed in John Allard's post.

- This API empowers users to develop expert models across various domains, building on the advancements of previous o1 models.

- Google's Gemini Exp 1206 tops multiple AI benchmarks: Google’s Gemini exp 1206 has secured the top rankings across several tasks, including hard prompts and coding, as reported by Jeff Dean.

- The Gemini API is now available for use, marking a significant achievement for Google in the competitive AI landscape.

- AI Essays explore Service-as-Software and business strategies: Several essays discussed AI opportunities, including a $4.6 trillion market with the Service-as-Software framework, shared by Joanne Chen.

- Another essay proposed strategies for fundraising and consolidating service businesses using AI models, as outlined in this post.

Nous Research AI Discord

- Llama 3.3 Model Release Sparks Debate: Ahmad Al-Dahle announced Llama 3.3, a new 70B model offering performance comparable to a 405B model but with enhanced cost-efficiency.

- Community members questioned if Llama 3.3 represents a base model relying on Llama 3.1, and discussed whether it's a complex fine-tuning pipeline without new pretraining, highlighting trends in model releases.

- Decentralized Training with Nous Distro: Nous Distro was clarified as a decentralized training framework, generating excitement among members about its potential applications.

- The project received positive reactions, with members expressing enthusiasm for the advancements it brings to distributed AI training methodologies.

- Challenges in Fine-Tuning Mistral for Kidney Detection: A user highlighted difficulties in fine-tuning a Mistral model using a 25-column dataset for chronic kidney disease detection, citing a lack of suitable tutorials after three months of attempts.

- Community members recommended resources and strategies to overcome these challenges, emphasizing the need for better documentation and support for specialized model tuning.

- Leveraging LightGBM for Enhanced Tabular Data Performance: Members suggested using LightGBM for better handling of tabular data in machine learning tasks, noting its efficiency in ranking and classification.

- This recommendation serves as an alternative to LLMs for specific datasets, highlighting LightGBM's strengths in performance and scalability.

- Optimizing Data Formatting for Model Training: Discussions emphasized the necessity to convert numeric data into text format, as LLMs perform suboptimally with direct numerical tabular data.

- A member pointed to an example using Unsloth for classification with custom templates, underscoring the importance of generalized CSV data in training models.

GPU MODE Discord

- Popcorn Project Pops Up with NVIDIA H100 Benchmarks: The Popcorn Project is set to launch in January 2025, enabling job submissions for leaderboards across various kernels, and includes benchmarking capabilities on GPUs like the NVIDIA H100.

- This initiative aims to enhance the development experience, despite its non-traditional approach, by providing robust performance metrics.

- Triton's TMA Support Seeks Official Release Amid Broken Nightlies: Triton users are requesting an official release to support low-overhead TMA descriptors, as current nightly builds are reported to be broken.

- Concerns around nightly build stability highlight the community's dependency on reliable tooling for optimal GPU performance.

- LTX Video's CUDA Revamp Doubles GEMM Speed: A member reimplemented all layers in the LTX Video model using CUDA, achieving 8bit GEMM that's twice as fast as cuBLAS FP8 and incorporating FP8 Flash Attention 2, RMSNorm, RoPE Layer, and quantizers, without accuracy loss due to the Hadamard Transformation.

- Performance tests on the RTX 4090 demonstrated real-time generation with just 60 denoising steps, showcasing significant advancements in model speed and efficiency.

- TorchAO Quantization: New Methods and Best Practices Explored: A member delved into multiple quantization implementation methods within TorchAO, seeking guidance on best practices and identifying specific files as starting points.

- This exploration reflects the community's dedication to optimizing model performance through effective quantization techniques in AI engineering workflows.

- Llama 3.3 Unleashed: Llama 3.3 has been released, as announced in this tweet.

- The community has shown interest in the new Llama 3.3 release, discussing its potential enhancements.

Torchtune Discord

- Llama 3.3 Launch with Enhanced Specifications: The Llama 3.3 model has been released, boasting a performance of 405B parameters while maintaining a compact 70B size, which is expected to spark innovative applications.

- The community is eager to explore the capabilities of Llama 3.3's reduced size for diverse AI engineering projects.

- Torchtune Adds Comprehensive Finetuning for Llama 3.3: Torchtune has expanded its support to include full LoRA and QLoRA finetuning for the newly released Llama 3.3, enhancing customization options.

- Detailed configuration settings are available in the Torchtune GitHub repository.

- LoRA Training Adjustments Proposed: A proposed change to LoRA training now requires a separate weight merging step instead of automatic merging, as discussed in this GitHub issue.

- Members debated the potential impacts of this change on existing workflows, weighing the benefits of increased flexibility.

- Debate Over Alpaca Training Defaults: Concerns have been raised regarding the train_on_input default setting in the Alpaca training library, currently set to False, leading to questions about its alignment with common practices.

- Discussions referenced repositories like Hugging Face's trl and Stanford Alpaca to evaluate the appropriateness of the default configurations.

- Crypto Lottery Introduces LLM Agreement Challenges: A crypto lottery model was described where participants pay per LLM prompt with the chance to win all funds by convincing the LLM to agree to a payout.

- This unique incentive structure has sparked debates on the ethical implications and practicality of such mechanisms within the crypto ecosystem.

LlamaIndex Discord

- LlamaParse Enhances Document Parsing Efficiency: LlamaParse provides advanced document parsing capabilities, significantly reducing parsing time for complex documents.

- This improvement streamlines workflows by effectively handling intricate document structures.

- Hybrid Search Webinar with MongoDB Recorded: A recent webinar featuring MongoDB Atlas covered hybrid search strategies and metadata filtering techniques.

- Participants can revisit key topics such as the transition from sequential to DAG reasoning to optimize search performance.

- Enabling Multimodal Parsing in LlamaParse: LlamaParse now supports multimodal parsing with models like GPT-4 and Claude 3.5, as demonstrated in a video by @ravithejads.

- Users can enhance their parsing capabilities by converting screenshots of pages into structured data seamlessly.

- Resolving WorkflowTimeoutError by Adjusting Timeouts: Facing a WorkflowTimeoutError can be mitigated by increasing the timeout or setting it to None, using

w = MyWorkflow(timeout=None).- This approach helps prevent timing out issues during prolonged workflows, ensuring smoother execution.

- Configuring ReAct Agent in LlamaIndex: To switch to the ReAct agent, replace the standard agent configuration with

ReActAgent(...), as outlined in the workflow documentation.- This modification allows for a more adaptable setup, leveraging the flexibility of the ReAct framework.

OpenInterpreter Discord

- Preview 1.0 Pushes Performance: A member impressed with the streamlined and fast performance of 1.0 preview, highlighting the clean UI and well-segregated code. They are currently testing the interpreter tool with specific arguments but are unable to execute any code from the AI.

- Users are testing the interpreter tool with specific arguments but have reported being unable to execute any code generated by the AI.

- MacOS App Access Accelerated: Multiple users inquired about accessing the MacOS-only app. A team member confirmed they are approaching a public launch and are willing to add users to the next batch while also developing a cross-platform version.

- This initiative aims to broaden user accessibility and enhance platform compatibility.

- API Availability Approaches: A member raised concerns over the $200 monthly fee for API access, questioning its accessibility. Another member reassured the community that the API will be available to users soon.

- These discussions highlight the community's interest in API accessibility and pricing.

- Reinforcement Fine-Tuning Updates: OpenAI announced Day 2 focusing on Reinforcement Fine-Tuning, sharing insights through a post on X and more details on their official site.

- The community is actively engaged in optimizing model training methodologies, reflecting dedication to enhancing reinforcement learning techniques.

- Llama Launches 3.3: Meta announced the release of Llama 3.3, a new open-source model that excels in synthetic data generation and other text-based tasks, offering a significantly lower inference cost, as detailed in their post on X.

- This release underscores Meta's focus on improving model efficiency and expanding capabilities in text-based use cases.

LLM Agents (Berkeley MOOC) Discord

- Spring 2025 MOOC Greenlit: The Berkeley MOOC team has officially confirmed the sequel course for spring 2025. Participants are advised to stay updated for more details as they become available.

- Members expressed 'Woohoo!' about the upcoming offering, indicating high excitement within the community.

- Assignment Deadlines Loom: A participant emphasized the necessity to complete all assignments before their set deadlines. This highlights a growing sense of urgency among the learners.

- Participants are meticulously organizing their schedules to accommodate the upcoming assessments.

- Lambda Labs for Lab Grading: Inquiry was made regarding the possibility of using non-OpenAI models, such as Lambda Labs, for grading lab assignments.

- This suggests a community interest in exploring diverse grading solutions.

- Lecture Slides Stalled: Members reported that the lecture slides from the last session have not been updated on the course website due to unforeseen delays.

- One member noted the lecture included approximately 400 slides, indicating extensive content coverage.

- Captioning Causes Delay: Lecture recordings are pending professional captioning, which may result in further delays.

- Given the long duration of the lectures, the captioning process is expected to be time-consuming.

Axolotl AI Discord

- Llama 3.3 Release: Llama 3.3 has been released, featuring an instruction model only, generating excitement among members who are seeking more details on its capabilities.

- Members are enthusiastic about Llama 3.3, but some members want additional information to fully understand its features.

- Model Request Issues on llama.com: Members reported issues when requesting models on llama.com, with the process getting stuck after clicking 'Accept and continue'.

- This technical glitch is causing frustration as users look for solutions and alternatives.

- SFT vs RL Quality Bounds: Discussions highlighted that Supervised Fine-Tuning (SFT) limits model quality based on the dataset.

- Conversely, a Reinforcement Learning (RL) approach may allow models to surpass dataset limitations, especially with online RL.

DSPy Discord

- DSPy Optimization Optionality: A member in the #general channel inquired whether DSPy Modules require optimization for each use case, likening it to training ML models for enhanced prompting.

- Another member clarified that optimization is optional, necessary only for improving the performance of a fixed system.

- RAG System Context Conflict: A TypeError was reported in the RAG System, indicating that

RAG.forward()received an unexpected keyword argument 'context' while attempting to use DSPy.- It was noted that the RAG system requires the keyword argument 'context' to function correctly, and the user wasn’t providing it.

tinygrad (George Hotz) Discord

- tinygrad Stats Site Outage: The tinygrad stats site experienced an outage, raising concerns about its infrastructure.

- George Hotz inquired about needing cash to cover the VPS bill, hinting at possible financial issues.

- Expired SSL Certificate Brings tinygrad Down: An expired SSL certificate caused the tinygrad stats site to go down while hosted on Hetzner.

- After resolving the issue, the site is back up and operational.

LAION Discord

- Cellular Personification in Media: A discussion highlighted the personification of cells, marking a notable instance since Osmosis Jones and adding a humorous twist to cellular representation.

- This approach blends humor with scientific concepts, potentially making complex topics more engaging for the audience.

- Osmosis Jones Reference: The reference to Osmosis Jones underscores its influence on current efforts to personify cellular structures, emphasizing its role in shaping creative representations.

- Participants find parallels between the animated depiction in Osmosis Jones and recent attempts to make cellular biology more relatable through media.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!