[AINews] Meta BLT: Tokenizer-free, Byte-level LLM

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Dynamic byte patch sizing is all you need.

AI News for 12/12/2024-12/13/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (209 channels, and 6703 messages) for you. Estimated reading time saved (at 200wpm): 741 minutes. You can now tag @smol_ai for AINews discussions!

In a day with monster $250m fundraising and Ilya declaring the end of pretraining, we are glad for Meta to deliver a paper with some technical meat: Byte Latent Transformer: Patches Scale Better Than Tokens.

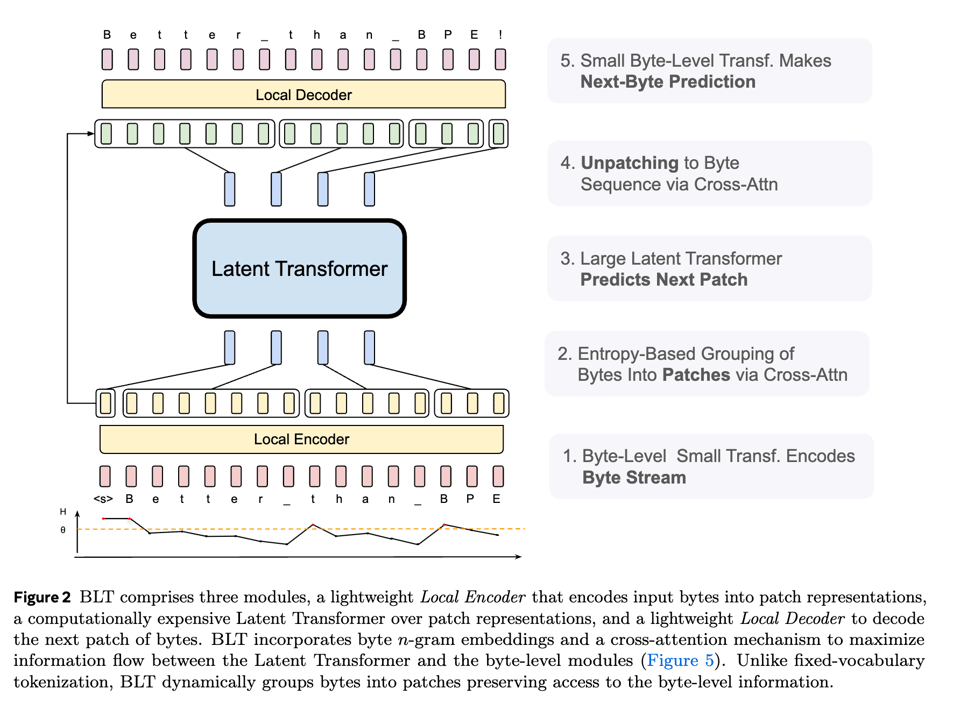

The abstract is very legible. In contrast to previous byte level work like MambaByte, BLT uses dynamically formed patches that are encoded to latent representations. As the authors say: "Tokenization-based LLMs allocate the same amount of compute to every token. This trades efficiency for performance, since tokens are induced with compression heuristics that are not always correlated with the complexity of predictions. Central to our architecture is the idea that models should dynamically allocate compute where it is needed. For example, a large transformer is not needed to predict the ending of most words, since these are comparably easy, low-entropy decisions compared to choosing the first word of a new sentence. This is reflected in BLT’s architecture (§3) where there are three transformer blocks: two small byte-level local models and a large global latent transformer."

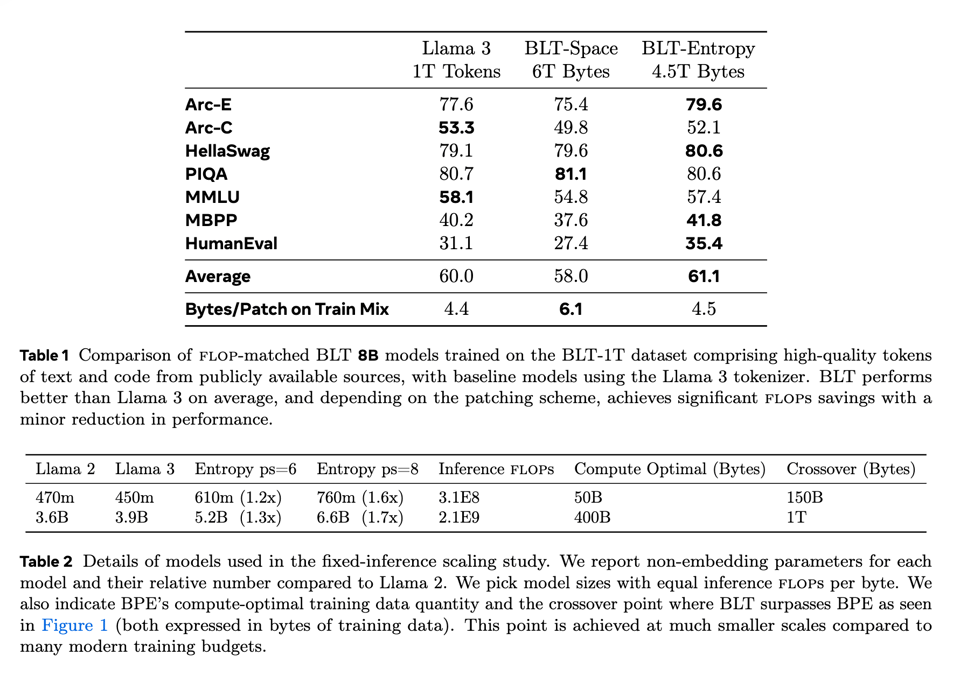

The authors trained this on ~1T tokens worth of data and compared it with their house model, Llama 3, and it does surprisingly well on standard benchmarks:

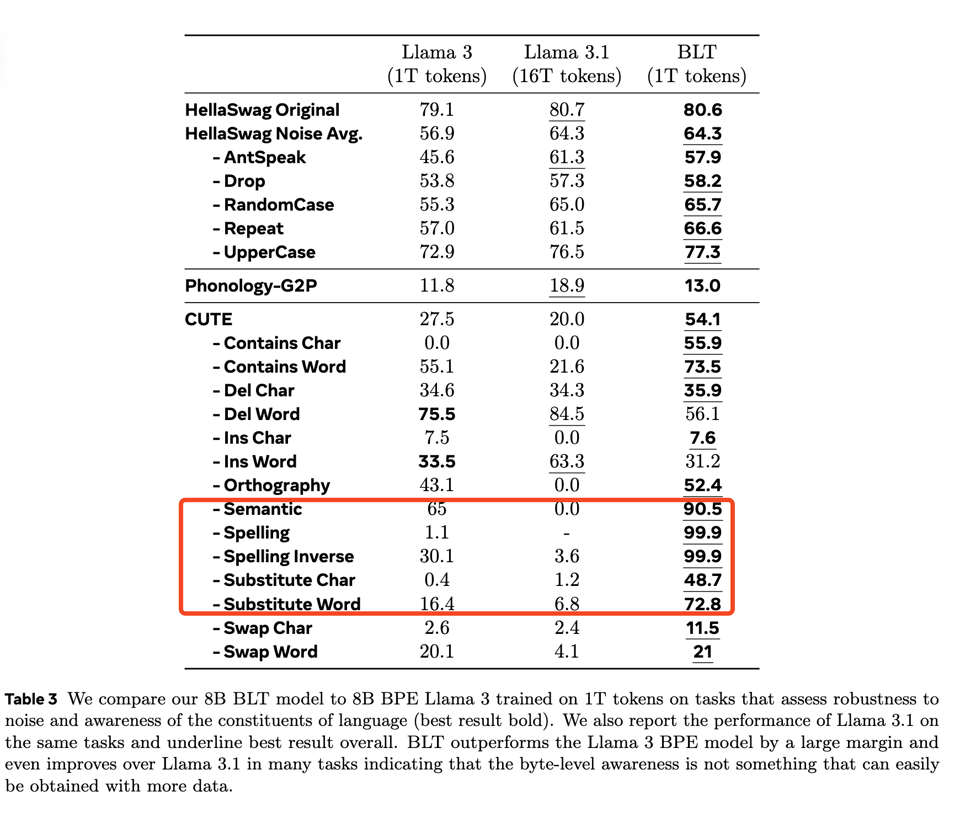

but also does much better on tasks that usually trip up tokenizer based models (the CUTE benchmark):

What's next - scale this up? Is this worth throwing everything we know about tokenization out the window? What about Long context/retrieval/IFEval type capabilities?

Possibly byte-level transformers unlock NEW kinds of multimodality, as /r/localllama explains:

example of such new possibility is "talking to your PDF", when you really do exactly that, without RAG, and chunking by feeding data directly to the model. You can think of all other kinds of crazy use-cases with the model that natively accepts common file types.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Here are the key topics from the Twitter discussions, organized by category:

New Model & Research Announcements

- Microsoft Phi-4: @SebastienBubeck announces a 14B parameter model achieving SOTA results on STEM/reasoning benchmarks, outperforming GPT-4o on GPQA and MATH

- Meta Research: Released Byte Latent Transformer, a tokenizer-free architecture that dynamically encodes bytes into patches with better inference efficiency

- DeepSeek-VL2: @deepseek_ai launches new vision-language models using DeepSeek-MoE architecture with sizes 1.0B, 2.8B, and 27B parameters

Product Launches & Updates

- ChatGPT Projects: @OpenAI announces new Projects feature for organizing chats, files and custom instructions

- Cohere Command R7B: @cohere releases their smallest and fastest model in the R series

- Anthropic Research: Published findings on "Best-of-N Jailbreaking" showing vulnerabilities across text, vision and audio models

Industry Discussion & Analysis

- Model Scaling: @tamaybes notes that frontier LLM sizes have decreased dramatically - GPT-4 ~1.8T params vs newer models ~200-400B params

- Benchmark Performance: Significant discussion around Phi-4's strong performance on benchmarks despite smaller size

Memes & Humor

- Various jokes and memes about AI progress, model comparisons, and industry dynamics

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Phi-4 Release: Benchmarks Shine but Practicality Questioned

- Introducing Phi-4: Microsoft’s Newest Small Language Model Specializing in Complex Reasoning (Score: 749, Comments: 195): Microsoft has introduced Phi-4, a small language model designed for complex reasoning. Further details about its capabilities and applications were not provided in the post.

- Discussion highlights skepticism about Phi-4's real-world performance, with users noting that previous Phi models had high benchmark scores but underperformed in practical applications. Instruction following is mentioned as an area where Phi models struggle, with some users comparing them unfavorably to Llama.

- Several comments focus on synthetic data and its role in training the Phi models, suggesting that Microsoft may use the Phi series to showcase their synthetic datasets. There is speculation that these datasets could be licensed to other companies, and some users express interest in the potential of synthetic data for improving model performance in specific domains like math.

- The community expresses interest in benchmark results, with some noting impressive scores for a 14B model. However, there is also caution about potential overfitting and the validity of these benchmarks, with some users questioning the transparency and accessibility of the Phi-4 model, mentioning that it will be available on Hugging Face next week.

- Bro WTF?? (Score: 447, Comments: 131): The post discusses a table comparing AI models, highlighting the performance of "phi-4" against other models in tasks like MMLU, GPQA, and MATH. It categorizes models into "Small" and "Large" and includes a specific internal benchmark called "PhiBench" to showcase the phi model's competitive results.

- Phi Model Performance and Real-World Application: Many users express skepticism about the phi-4 model's real-world applicability despite its strong benchmark performance, noting previous phi models often excelled in tests but underperformed in practice. lostinthellama highlights that these models are tailored for business and reasoning tasks but perform poorly in creative tasks like storytelling.

- Model Size and Development: Discussion revolves around the potential for larger phi models, with Educational_Gap5867 noting the largest Phi models are currently 14B parameters. arbv mentions previous attempts to scale beyond 7B were unsuccessful, suggesting a focus on smaller, more efficient models.

- Availability and Access: The model is expected to be available on Hugging Face, with Guudbaad providing a link to its current availability on Azure, though download speeds are reportedly slow. sammcj offers a script for downloading files from Azure to facilitate access.

- Microsoft Phi-4 GGUF available. Download link in the post (Score: 231, Comments: 65): Microsoft Phi-4 GGUF model, converted from Azure AI Foundry, is available for download on Hugging Face as a non-official release, with the official release expected next week. Available quantizations include Q8_0, Q6_K, Q4_K_M, and f16, alongside the unquantized model, with no further quantizations planned.

- Phi-4 Performance and Comparisons: The Phi-4 model is significantly better than its predecessor, Phi-3, especially in multilingual tasks and instruction following, with improvements noted in benchmarks such as farel-bench (Phi-4 scored 81.11 compared to Phi-3's 62.44). However, it still faces competition from models like Qwen 2.5 14B in certain areas.

- Model Availability and Licensing: The model is available for download on Hugging Face and has been uploaded to Ollama for easier access. The licensing has changed to the Microsoft Research License Agreement, allowing only non-commercial use.

- Technical Testing and Implementation: Users have tested the model in environments like LM Studio using AMD ROCm, achieving about 36 T/s on an RX6800XT. The model's performance is noted to be concise and informative, fitting well within the 16K context on a 16GB GPU.

Theme 2. Andy Konwinski's $1M Prize for Open-Source AI on SWE-bench

- I’ll give $1M to the first open source AI that gets 90% on contamination-free SWE-bench —xoxo Andy (Score: 449, Comments: 97): Andy Konwinski has announced a $1M prize for the first open-source AI model that scores 90% on a contamination-free SWE-bench. The challenge specifies that both the code and model weights must be open source, and further details can be found on his website.

- There is skepticism about the feasibility of achieving 90% on SWE-bench, with Amazon's model only reaching 55%. Concerns are raised about potential gaming of the benchmark due to the lack of required dataset submission, and the challenge of ensuring a truly contamination-free evaluation process.

- Andy Konwinski clarifies the competition's integrity by using a new test set of GitHub issues created after submission freeze to ensure contamination-free evaluation. This method, inspired by Kaggle's market prediction competitions, involves a dedicated engineering team to verify the solvability of issues, drawing from the SWE-bench Verified lessons.

- The legitimacy of Andy Konwinski and the prize is questioned but later confirmed through his association with Perplexity and Databricks. The initiative is seen as a prototype for future inducement prizes, with plans to potentially continue and expand the competition if significant community engagement is observed.

Theme 3. GPU Capabilities Unearthed: How Rich Are We?

- How GPU Poor are you? Are your friends GPU Rich? you can now find out on Hugging Face! 🔥 (Score: 70, Comments: 65): The post highlights a feature on Hugging Face that allows users to compare their GPU configurations and performance metrics with others. The example provided shows Julien Chaumond classified as "GPU rich" with an NVIDIA RTX 3090 and two Apple M1 Pro chips, achieving 45.98 TFLOPS, compared to another user's 25.20 TFLOPS, labeled as "GPU poor."

- Users express frustration over limited GPU options on Hugging Face, noting the absence of Threadripper 7000, Intel GPUs, and other configurations like kobold.cpp. This highlights a need for broader hardware compatibility and recognition within the platform.

- Several comments reflect on the emotional impact of hardware comparisons, with users humorously lamenting their "GPU poor" status and acknowledging the limitations of their setups. A link to a GitHub file is provided for users to add unsupported GPUs.

- Discussions around GPU utilization indicate dissatisfaction with software support, especially for AMD and older GPU models. Users note that despite owning capable hardware, the lack of robust software frameworks limits their ability to fully leverage their GPUs.

Theme 4. Meta's Byte Latent Transformer Redefines Tokenization

- Meta's Byte Latent Transformer (BLT) paper looks like the real-deal. Outperforming tokenization models even up to their tested 8B param model size. 2025 may be the year we say goodbye to tokenization. (Score: 90, Comments: 27): Meta's Byte Latent Transformer (BLT) demonstrates significant advancements in language processing, outperforming tokenization models like Llama 3 in various tasks, particularly achieving a 99.9% score in "Spelling" and "Spelling Inverse." The analysis suggests that by 2025, tokenization might become obsolete due to BLT's superior capabilities in language awareness and task performance.

- BLT's Key Innovations: The Byte Latent Transformer (BLT) introduces a dynamic patching mechanism that replaces fixed-size tokenization, grouping bytes into variable-length patches based on predicted entropy, enhancing efficiency and robustness. It combines a global transformer with local byte-level transformers, operating directly on bytes, eliminating the need for a pre-defined vocabulary, and improving flexibility and efficiency in handling multilingual data and misspellings.

- Potential and Impact: The BLT model's byte-level approach is seen as a breakthrough, opening up new possibilities for applications, such as direct interaction with file types without additional processing steps like RAG. This could simplify multimodal training, allowing the model to process various data types like images, video, and sound as bytes, potentially enabling advanced tasks like byte-editing programs.

- Community Resources: The paper and code for BLT are available online, with the paper accessible here and the code on GitHub, providing resources for further exploration and experimentation with the model.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Gemini 2.0: Google's Multimodal Breakthrough

- Gemini 2.0 is what 4o was supposed to be (Score: 853, Comments: 287): Gemini 2.0 is described as fulfilling the promises that 4o failed to deliver, particularly in terms of native multimodal capabilities, state-of-the-art performance, and features like voice mode and image output. The author is impressed by Gemini 2.0's 2 million character context and deep search capabilities, and notes that while early testers have access now, it will be widely available by 2025, unlike OAI's unspecified release timeline for similar features. Video link provided for further insight.

- Gemini 2.0's Features and Accessibility: Users highlight the availability of Gemini 2.0 Flash on Google AI Studio with free access and features like real-time video and screen sharing. There's praise for its ability to converse in multiple languages with native accents and its 2 million character context window, though some features remain exclusive to trusted testers.

- Comparison with OpenAI's Offerings: Discussions reflect a sentiment that OpenAI is struggling with costs and resource limitations, as evidenced by their $200 pro subscription. In contrast, Google's use of TPUs and free access to Gemini 2.0 is seen as a competitive advantage, potentially marking a turning point in the AI landscape.

- Community Reactions and Expectations: There is a mix of enthusiasm and skepticism, with some users considering switching from OpenAI to Google due to the latter's performance and cost-effectiveness. The community expresses anticipation for future updates from both companies, particularly regarding multimodal capabilities and improved model features.

- Don't pay for ChatGPT Pro instead use gemini-exp-1206 (Score: 386, Comments: 109): For coding purposes, the author recommends using Google's gemini-exp-1206 model available at AI Studio instead of paying for ChatGPT Pro. They find gemini-exp-1206 superior to the now-unavailable o1-preview model and consider it sufficient alongside GPT Plus and the Advanced Voice model with Camera.

- Gemini-exp-1206 vs. Other Models: Several users argue that gemini-exp-1206 outperforms models like Claude 3.5 and o1 in various coding tasks, with lmsys arena rankings supporting this claim. However, some users note that Gemini is not a direct replacement for o1-Pro, especially for more complex tasks, and others find Gemini inferior in real-world programming applications.

- Google's AI Ecosystem Confusion: Users express frustration over the fragmented nature of Google's AI services, citing the confusion caused by multiple platforms like AI Studio, Note LLM, and Gemini. There is a call for a more unified interface to streamline access and usability.

- Data Privacy Concerns: Concerns about data privacy with Gemini are raised, particularly regarding the lack of data opt-out options in free versions. However, it is noted that Google's paid API services have different terms, promising not to use user data for product improvement.

Theme 2. Limitations on Advanced Voice Mode Usage

- So advanced voice mode is now limited to 15 minutes a day for Plus users? (Score: 191, Comments: 144): OpenAI's advanced voice mode for Plus users was mistakenly reported to be limited to 15 minutes a day, causing frustration among users who rely on this feature. However, this was clarified as an error by /u/OpenAI, confirming that the advanced voice limits remain unchanged, and the lower limit applies only to video and screenshare features.

- Advanced Voice Mode Concerns: Users express frustration over perceived limitations and monetization strategies, with some like Visionary-Vibes feeling the 15-minute limit is unfair for paying Plus users. PopSynic highlights accessibility issues for visually impaired users and the unexpected consumption of limits even when not actively using the voice mode.

- Technical and Resource Challenges: Comments from ShabalalaWATP and realityexperiencer suggest that OpenAI, like other companies, struggles with hardware constraints, impacting service delivery. traumfisch notes that server overload might be causing inconsistent service caps.

- User Experience and Feedback: Some users, like Barkis_Willing, critique the voice quality and functionality, while chazwhiz appreciates the ability to communicate at a natural pace for brainstorming. pickadol praises OpenAI's direct communication, emphasizing its positive impact on user goodwill.

AI Discord Recap

A summary of Summaries of Summaries by o1-mini

Theme 1. AI Model Performance and Innovations

- Phi-4 Surpasses GPT-4o in Benchmarks: Microsoft's Phi-4, a 14B parameter language model, outperforms GPT-4o in both GPQA and MATH benchmarks, emphasizing its focus on data quality. Phi-4 Technical Report details its advancements and availability on Azure AI Foundry and Hugging Face.

- Command R7B Launch Enhances AI Efficiency: Cohere's Command R7B has been released as the smallest and fastest model in their R series, supporting 23 languages and optimized for tasks like math, code, and reasoning. Available on Hugging Face, it aims to cater to diverse enterprise use cases.

- DeepSeek-VL2 Introduces Mixture-of-Experts Models: DeepSeek-VL2 launches with a Mixture-of-Experts (MoE) architecture, featuring scalable model sizes (3B, 16B, 27B) and dynamic image tiling. It achieves outstanding performance in vision-language tasks, positioning itself strongly against competitors like GPT-4o and Sonnet 3.5 on the WebDev Arena leaderboard.

Theme 2. Integration and Tooling Enhancements for Developers

- Aider v0.69.0 Streamlines Coding Workflows: The latest Aider v0.69.0 update enables triggering with

# ... AI?comments and monitoring all files, enhancing automated code management. Support for Gemini Flash 2.0 and ChatGPT Pro integration optimizes coding workflows. Aider Documentation provides detailed usage instructions.

- Cursor IDE Outperforms Windsurf in Autocomplete: Cursor is favored over Windsurf for its superior autocomplete capabilities and flexibility in managing multiple models without high costs. Users report frustrations with Windsurf's inefficiencies in file editing and redundant code generation, highlighting Cursor's advantage in enhancing developer productivity.

- NotebookLM Plus Enhances AI Documentation: NotebookLM Plus introduces features like support for up to 300 sources per notebook and improved audio and chat functionalities. The updated 3-panel interface and interactive audio overviews facilitate better content management and user interaction. Available through Google Workspace.

Theme 3. AI Model Development Techniques and Optimizations

- Quantization-Aware Training Boosts Model Accuracy: Implementing Quantization-Aware Training (QAT) in PyTorch recovers up to 96% of accuracy degradation on specific benchmarks. Utilizing tools like torchao and torchtune facilitates effective fine-tuning, with Straight-Through Estimators (STE) handling non-differentiable operations to maintain gradient integrity.

- Inverse Mechanistic Interpretability Explored: Researchers are delving into inverse mechanistic interpretability, aiming to transform code into neural network architectures without relying on differentiable programming. RASP serves as a pertinent example, demonstrating code interpretation at a mechanistic level. RASP Paper provides comprehensive insights.

- Dynamic 4-bit Quantization Enhances Vision Models: Unsloth's Dynamic 4-bit Quantization selectively avoids quantizing certain parameters, significantly improving accuracy while maintaining VRAM efficiency. This method proves effective for vision models, which traditionally struggle with quantization, enabling better performance in local training environments.

Theme 4. Product Updates and Announcements from AI Providers

- ChatGPT Introduces New Projects Feature: In the latest YouTube launch, OpenAI unveiled the Projects feature in ChatGPT, enhancing chat organization and customization for structured discussion management.

- Perplexity Pro Faces Usability Challenges: Perplexity Pro users report issues with conversation tracking and image generation, impacting the overall user experience. Recent updates introduce custom web sources in Spaces to tailor searches to specific websites, enhancing search specificity.

- OpenRouter Adds Model Provider Filtering Amidst API Downtime: OpenRouter now allows users to filter models by provider, improving model selection efficiency. During AI Launch Week, OpenRouter managed over 1.8 million requests despite widespread API downtime from providers like OpenAI and Anthropic, ensuring service continuity for businesses.

Theme 5. Community Engagement and Support Issues

- Codeium Pricing and Performance Frustrations: Users express dissatisfaction with Codeium's pricing and ongoing performance issues, particularly with Claude and Cascade models. Despite recent price hikes, internal errors remain unresolved, prompting concerns about the platform's reliability.

- Tinygrad Performance Bottlenecks Highlight Need for Optimization: Tinygrad users report significant performance lags compared to PyTorch, especially with larger sequence lengths and batch sizes. Calls for benchmark scripts aim to identify and address compile time and kernel execution inefficiencies.

- Unsloth AI Enhances Multi-GPU Training Support: Unsloth anticipates introducing multi-GPU training support, currently limited to single GPUs on platforms like Kaggle. This enhancement is expected to optimize training workflows for larger models, alleviating current bottlenecks and improving training efficiency.

PART 1: High level Discord summaries

Codeium / Windsurf Discord

- Codeium's Pricing and Performance Woes: Users are frustrated with Codeium's pricing and persistent performance issues despite recent price hikes, leading to dissatisfaction with the service.

- Complaints focus on internal errors with the Claude and Cascade models, prompting regret over platform spending.

- Claude Model Faces Internal Errors: Multiple reports indicate that the Claude model encounters internal errors after the initial message, disrupting user experience.

- Switching to the GPT-4o model mitigates the issue, suggesting potential instability within Claude.

- Cascade Struggles with C# Integration: Users report challenges integrating Cascade with their C# .NET projects, citing the tool's unfamiliarity with the .NET framework.

- Proposals for workspace AI rules aim to customize Cascade usage to better suit specific programming requirements.

- Windsurf's Sonnet Version Conundrum: Windsurf users face increased errors when using Sonnet 3.5, whereas Claude 4o serves as a more stable alternative.

- This discrepancy raises questions about the operational reliability of different Sonnet versions within Windsurf.

- Seamless Windsurf and Git Integration: Windsurf demonstrates strong compatibility with Git, maintaining native Git features akin to VSCode.

- Users effectively utilize tools like GitHub Desktop and GitLens alongside Windsurf without encountering conflicts.

Notebook LM Discord Discord

- NotebookLM's Gradual Feature Rollout: The latest NotebookLM updates, including premium features and UI enhancements, are being deployed incrementally, resulting in some users still accessing the previous interface despite having active subscriptions.

- Users are advised to remain patient as the rollout progresses, which may vary based on country and workspace configurations.

- Interactive Audio Overviews Issues: Several users reported disruptions with Interactive Audio Overviews, such as AI hosts prematurely ending sentences and interrupting conversations.

- The community is troubleshooting potential microphone problems and questioning the functionality of the interactive feature.

- Multilingual Support Enhancements: NotebookLM's capability to handle multiple European languages in a single performance test has been a topic of discussion, demonstrating its multilingual processing strengths.

- Users shared experiences of varied accents and language transitions, highlighting both the effectiveness and areas for improvement in AI language processing.

- Launch of NotebookLM Plus: The introduction of NotebookLM Plus offers expanded features, including support for up to 300 sources per notebook and enhanced audio and chat functionalities.

- Available through Google Workspace, Google Cloud, and upcoming Google One AI Premium.

- AI Integration in Creative Projects: An experienced creator detailed their use of NotebookLM alongside 3D rendering techniques in the project UNREAL MYSTERIES, emphasizing AI's role in enhancing storytelling.

- Insights were shared during an interview on a prominent FX podcast, showcasing the synergy between AI and creative processes.

aider (Paul Gauthier) Discord

- Aider v0.69.0 Streamlines File Interaction: The latest Aider v0.69.0 update enables users to trigger Aider with

# ... AI?comments and monitor all files, enhancing the coding workflow.- New instructions can be added using

# AI comments,// AI comments, or-- AI commentsin any text file, facilitating seamless automated code management.

- New instructions can be added using

- Gemini Flash 2.0 Support Enhances Versatility: Aider now fully supports Gemini Flash 2.0 Exp with commands like

aider --model flash, increasing compatibility with various LLMs.- Users highlighted that Gemini Flash 2.0's performance in processing large pull requests significantly boosts efficiency during code reviews.

- ChatGPT Pro Integration Optimizes Coding Workflows: Combining Aider with ChatGPT Pro has proven effective, allowing efficient copypasting of commands between both platforms during coding tasks.

- This integration streamlines workflows, making it easier for developers to manage and execute coding commands seamlessly.

- Fine-Tuning Models Enhances Knowledge on Recent Libraries: Users successfully fine-tuned models to update knowledge on recent libraries by condensing documentation into relevant contexts.

- This method significantly improved model performance when handling newer library versions, as shared by community members.

- LLM Leaderboards and Performance Comparisons Discussed: Discussions emerged around finding reliable leaderboards for comparing LLM performance on coding tasks, with LiveBench recommended for accuracy.

- Participants noted that many existing leaderboards might be biased due to contaminated datasets, emphasizing the need for unbiased evaluation tools.

Cursor IDE Discord

- Cursor Crushes Windsurf in Performance Showdown: Users prefer Cursor over Windsurf for its flexibility and superior performance, especially in autocomplete and managing multiple models without excessive costs.

- Windsurf faced criticism for inefficiencies in file editing and generating redundant code, highlighting Cursor's advantage in these areas.

- Cursor's Subscription Struggles: Payment Pains Persist: Users reported frustrations with Cursor's payment options, particularly involving PayPal and credit cards, and difficulties in purchasing Pro accounts.

- One user successfully paid with PayPal after initial issues, suggesting inconsistencies in payment processing.

- Cursor's Model Limits: Breaking Down the Numbers: Cursor's subscription plan offers 500 fast requests and unlimited slow requests once fast requests are exhausted, primarily for premium models.

- Users clarified that both Claude Haiku and Sonnet can be effectively utilized within these parameters, with Haiku requests costing less.

- Developers Delight in Cursor's Coding Capabilities: Users shared positive experiences using Cursor for coding tasks, including deploying Python projects and understanding server setups.

- Cursor was praised for enhancing productivity and efficiency, though some noted a potential learning curve with features like Docker.

- Cursor vs Windsurf: AI Performance Under Scrutiny: Discussions arose regarding the quality of responses from various AI models, with some users doubting the reliability of Windsurf compared to Cursor.

- Comparisons also included features like proactive assistance in agents and handling complex code appropriately.

Eleuther Discord

- GPU Cluster Drama Unfolds: A member humorously described disrupting GPU clusters by staying up all night to monopolize GPU time on the gpu-serv01 cluster.

- Another participant referenced previous disruptions, noting the practice is both entertaining and competitive within the community.

- Grading Fantasy Character Projects: A member introduced a project where students generate tokens from a fantasy character dataset, raising questions on effective assessment methods.

- Proposed grading strategies include perplexity scoring and CLIP notation, alongside humorous considerations on preventing cheating during evaluations.

- Crowdsourcing Evaluation Criteria: In response to grading challenges, a member suggested embedding the evaluation criteria directly into assignments to involve students in the grading process.

- The discussion took a lighthearted turn when another member joked about simplifying grading by assigning a 100 score to all submissions.

- Differentiating Aleatoric and Epistemic Uncertainty: The community delved into distinguishing aleatoric from epistemic uncertainty, asserting that most real-world uncertainty is epistemic due to unknown underlying processes.

- It was highlighted that memorization in models blurs this distinction, transitioning representations from inherent distributions to empirical ones.

- Inverse Mechanistic Interpretability Explored: A member inquired about inverse mechanistic interpretability, specifically the process of transforming code into neural networks without using differentiable programming.

- Another member pointed to RASP as a pertinent example, linking to the RASP paper that demonstrates code interpretation at a mechanistic level.

OpenAI Discord

- ChatGPT Introduces Projects Feature: In the latest YouTube video, Kevin Weil, Drew Schuster, and Thomas Dimson unveil the new Projects feature in ChatGPT, enhancing chat organization and customization.

- This update aims to provide users with a more structured approach to managing their discussions within the platform.

- Teams Plan Faces Sora Access Limitations: Users reported that the ChatGPT Teams plan does not include access to Sora, leading to dissatisfaction among subscribers.

- Additionally, concerns were raised about message limits remaining unchanged from the Plus plan despite higher subscription fees.

- Claude Preferred Over Gemini and ChatGPT: Discussions highlighted a preference for Claude over models like Gemini and ChatGPT, citing enhanced performance.

- Participants also emphasized the benefits of local models such as LM Studio and OpenWebUI for their practicality.

- Issues with AI-generated Content Quality: Users identified problems with the quality of AI-generated outputs, including unexpected elements like swords in images.

- There were mixed opinions on implementing quality controls for copyrighted characters, with some advocating for stricter measures.

- Local AI Tools Adoption and Prompt Complexity: Insights were shared on running AI locally using tools like Ollama and OpenWebUI, viewed as effective solutions.

- Discussions also revealed that while simple prompts receive quick responses, more complex prompts require deeper reasoning, potentially extending response times.

LM Studio Discord

- MacBook Pro M4 Pro handles large LLMs: The MacBook Pro 14 with M4 Pro chip efficiently runs 8b models with a minimum of 16GB RAM, but larger models benefit from 64GB or more.

- A member remarked, “8b is pretty low”, expressing interest in higher-capacity models and discussing options like the 128GB M4 MBP.

- RTX 3060 offers strong value for AI workloads: The RTX 3060 is praised for its price-to-performance ratio, with comparisons to the 3070 and 3090 highlighting its suitability for AI tasks.

- Concerns were raised about CUDA support limitations in Intel GPUs, leading members to compare used market options.

- AMD vs Intel GPUs in AI Performance: Members compared AMD's RX 7900XT with Intel's i7-13650HX, noting the latter's superior Cinebench scores.

- The 20GB VRAM in RX 7900XT was highlighted as advantageous for specific AI workloads.

- Selecting the right PSU is crucial for setups: Choosing a suitable power supply unit (PSU) is emphasized, with 1000W units preferred for demanding configurations.

- Members shared links to various PSUs, discussing their efficiency ratings and impact on overall system performance.

- Optimizing AI performance via memory overclocking: Overclocking memory timings is suggested to enhance bandwidth performance in GPU-limited tasks.

- The importance of effective cooling solutions was discussed to maintain efficiency during high-performance computing.

Latent Space Discord

- Qwen 2.5 Turbo introduces 1M context length: The release of Qwen 2.5 Turbo extends its context length to 1 million tokens, significantly boosting its processing capabilities.

- This enhancement facilitates complex tasks that require extensive context, marking a notable advancement in AI model performance.

- Codeium Processes Over 100 Million Tokens: In a recent update, Codeium demonstrated the ability to handle over 100 million tokens per minute, showcasing their scalable infrastructure.

- This achievement reflects their focus on enterprise-level solutions, with insights drawn from scaling 100x in just 18 months.

- NotebookLM Unveils Audio Overview and NotebookLM Plus: NotebookLM introduced an audio overview feature that allows users to engage directly with AI hosts and launched NotebookLM Plus for enterprise users, enhancing its functionality at notebooklm.status/updates.

- The redesigned user interface facilitates easier content management, catering to businesses' needs for improved AI-driven documentation.

- Sonnet Tops WebDev Arena Leaderboard: Claude 3.5 Sonnet secured the top position on the newly launched WebDev Arena leaderboard, outperforming models like GPT-4o and demonstrating superior performance in web application development.

- This ranking underscores Sonnet's effectiveness in practical AI applications, as highlighted by over 10K votes from the community.

- SillyTavern Emerges as LLM Testing Ground: SillyTavern was highlighted by AI engineers as a valuable frontend for testing large language models, akin to a comprehensive test suite for diverse scenarios.

- Members leveraged it for complex philosophical discussions, illustrating its flexibility and utility in engaging with AI models.

Bolt.new / Stackblitz Discord

- Testing Bolt's Memory Clearance: Users are experimenting with memory erasure prompts by instructing Bolt to delete all prior interactions, aiming to modify its recall mechanisms.

- One user noted, 'It could be worth a shot' to assess the impact on Bolt's memory retention capabilities.

- Bolt's Handling of URLs in Prompts: There is uncertainty regarding Bolt's ability to process URLs within API references, with users inquiring about this functionality.

- A clarification was provided that Bolt does not read URLs, recommending users to transfer content to a

.mdfile for effective review.

- A clarification was provided that Bolt does not read URLs, recommending users to transfer content to a

- Duration of Image Analysis Processes: Inquiries have been made about the expected duration of image analysis processes, reflecting concerns over efficiency.

- This ongoing dialogue highlights the community's focus on improving the responsiveness of the image analysis feature.

- Integrating Supabase and Stripe with Bolt: Participants are exploring the integration of Supabase and Stripe, facing challenges with webhook functionalities.

- Many anticipate that the forthcoming Supabase integration will enhance Bolt's capabilities and address current issues.

- Persistent Bolt Integration Issues: Users continue to face challenges with Bolt failing to process commands, despite trying various phrasings, leading to increased frustration.

- The lack of comprehensive feedback from Bolt complicates task completion, as highlighted by several community members.

GPU MODE Discord

- Quantization-Craze in PyTorch Enhances Accuracy: A user detailed how Quantization-Aware Training (QAT) in PyTorch recovers up to 96% of accuracy degradation on specific benchmarks, utilizing torchao and torchtune for effective fine-tuning methods.

- Discussions emphasized the role of Straight-Through Estimators (STE) in handling non-differentiable operations during QAT, with members confirming its impact on gradient calculations for linear layers.

- Triton Troubles: Fused Attention Debugging Unveiled: Members raised concerns regarding fused attention in Triton, seeking resources and sessions to clarify its implementation, while one user reported garbage values in the custom flash attention kernel linked to TRITON_INTERPET=1.

- Solutions were proposed to disable TRITON_INTERPET for valid outputs, and compatibility issues with bfloat16 were highlighted, aligning with existing challenges in Triton data types.

- Modal's GPU Glossary Boosts CUDA Comprehension: Modal released a comprehensive GPU Glossary aimed at simplifying CUDA terminology through cross-linked articles, receiving positive feedback from the community.

- Collaborative efforts were noted to refine definitions, particularly for tensor cores and registers, enhancing the glossary's utility for AI engineers.

- CPU Offloads Outperform Non-Offloaded GPU Training for Small Models: CPU offloading for single-GPU training was implemented, showing higher throughput for smaller models by increasing batch sizes, though performance declined for larger models due to PyTorch's CUDA synchronization during backpropagation.

- Members discussed the limitations imposed by VRAM constraints and proposed modifying the optimizer to operate directly on CUDA to mitigate delays.

- H100 GPU Scheduler Sparks Architecture Insights: Discussions clarified the H100 GPU's architecture, noting that despite having 128 FP32 cores per Streaming Multiprocessor (SM), the scheduler issues only one warp per cycle, leading to scheduler complexity questions.

- This sparked further inquiries into architectural naming conventions and the operational behavior of tensor cores versus CUDA cores.

Nous Research AI Discord

- Microsoft Launches Phi-4, a 14B Parameter Language Model: Microsoft has unveiled Phi-4, a 14B parameter language model designed for complex reasoning in math and language processing, available on Azure AI Foundry and Hugging Face.

- The Phi-4 Technical Report highlights its training centered on data quality, distinguishing it from other models with its specialized capabilities.

- DeepSeek-VL2 Enters the MoE Era: DeepSeek-VL2 launches with a MoE architecture featuring dynamic image tiling and scalable model sizes of 3B, 16B, and 27B parameters.

- The release emphasizes outstanding performance across benchmarks, particularly in vision-language tasks.

- Meta's Tokenization Breakthrough with SONAR: Meta introduced a new language modeling approach that replaces traditional tokenization with sentence representation using SONAR sentence embeddings, as detailed in their latest paper.

- This method allows models, including a diffusion model, to outperform existing models like Llama-3 on tasks such as summarization.

- Byte Latent Transformer Redefines Tokenization: Scaling01 announced the Byte Latent Transformer, a tokenizer-free model enhancing inference efficiency and robustness.

- Benchmark results show it competes with Llama 3 while reducing inference flops by up to 50%.

- Speculative Decoding Enhances Model Efficiency: Discussions on speculative decoding revealed it generates a draft response with a smaller model, corrected by a larger model in a single forward pass.

- Members debated the method's efficiency and the impact of draft outputs on re-tokenization requirements.

Unsloth AI (Daniel Han) Discord

- Phi-4 Released with Open Weights: Phi-4 is set to release next week with open weights, offering significant performance enhancements in reasoning tasks compared to earlier models. Sebastien Bubeck announced that Phi-4 falls under the Llama 3.3-70B category and features 5x fewer parameters while achieving high scores on GPQA and MATH benchmarks.

- Members are looking forward to leveraging Phi-4’s streamlined architecture for more efficient deployments, citing the reduced parameter count as a key advantage.

- Command R7B Demonstrates Speed and Efficiency: Command R7B has garnered attention for its impressive speed and efficiency, especially considering its compact 7B parameter size. Cohere highlighted that Command R7B delivers top-tier performance suitable for deployment on commodity GPUs and edge devices.

- The community is eager to benchmark Command R7B against other models, particularly in terms of hosting costs and scalability for various applications.

- Unsloth AI Enhances Multi-GPU Training Support: Unsloth is anticipated to introduce multi-GPU training support, addressing current limitations on platforms like Kaggle which restrict users to single GPU assignments. This enhancement aims to optimize training workflows for larger models.

- Members discussed the potential for increased training efficiency and the alleviation of bottlenecks once multi-GPU support is implemented.

- Fine-tuning Llama 3.3 70B on Unsloth Requires High VRAM: Fine-tuning the Llama 3.3 70B model using Unsloth necessitates 41GB of VRAM, rendering platforms like Google Colab inadequate for this purpose. GitHub - unslothai/unsloth provides resources to facilitate this process.

- Community members recommend using Runpod or Vast.ai for accessing A100/H100 GPUs with 80GB VRAM, although multi-GPU training remains unsupported.

- Unsloth vs Llama Models: Performance and Usability: Discussions indicate that using Unsloth's model version over the Llama model version yields better fine-tuning results, simplifies API key handling, and resolves certain bugs. GitHub resources streamline the fine-tuning workflow for large-scale models.

- Members advise prioritizing Unsloth's versions to leverage enhanced functionalities and achieve more stable and efficient model performance.

Cohere Discord

- Command R7B Launch Accelerates AI Efficiency: Cohere has officially released Command R7B, the smallest and fastest model in their R series, enhancing speed, efficiency, and quality for AI applications across various devices.

- The model, available on Hugging Face, supports 23 languages and is optimized for tasks like math, code, and reasoning, catering to diverse enterprise use cases.

- Resolving Cohere API Errors: Several users reported encountering 403 and 400 Bad Request errors while using the Cohere API, highlighting issues with permission and configuration.

- Community members suggested solutions such as updating the Cohere Python library using

pip install -U cohere, which helped resolve some of the API access problems.

- Community members suggested solutions such as updating the Cohere Python library using

- Understanding Rerank vs Embed in Cohere: Discussions clarified that the Rerank feature reorders documents based on relevance, while Embed converts text into numerical representations for various NLP tasks.

- Embed can now process images with the new Embed v3.0 models, enabling semantic similarity estimation and categorization tasks within AI workflows.

- 7B Model Enhances Performance Metrics: The 7B model by Cohere outperforms older models like Aya Expanse and previous Command R versions, offering improved capabilities in Retrieval Augmented Generation and complex tool use.

- Upcoming examples for finetuning the 7B model are set to release next week, showcasing its advanced reasoning and summarization abilities.

- Cohere Bot and Python Library Streamline Development: The Cohere bot is back online, assisting users with finding relevant resources and addressing technical queries efficiently.

- Additionally, the Cohere Python library was shared to facilitate API access, enabling developers to integrate Cohere's functionalities seamlessly into their projects.

Perplexity AI Discord

- Campus Strategist Program Goes International: We're thrilled to announce the expansion of our Campus Strategist program internationally, allowing students to run their own campus activations, receive exclusive merch, and collaborate with our global team. Applications for the Spring 2025 cohort are open until December 28; for more details, visit Campus Strategists Info.

- This initiative emphasizes collaboration among strategists globally, fostering a vibrant community.

- Perplexity Pro Faces Usability Challenges: Users reported issues with Perplexity Pro, noting that it fails to track conversations effectively and frequently makes errors, such as inaccurate time references.

- These usability concerns are impacting the user experience, particularly regarding performance and adherence to instructions.

- Perplexity Pro Users Struggle with Image Generation: A user expressed frustration over being unable to generate images with Perplexity Pro, despite following prompts outlined in the guide.

- An attached image highlights gaps in the expected functionality, indicating potential issues in the image generation feature.

- Perplexity Introduces Custom Web Sources in Spaces: Perplexity launched custom web sources in Spaces, enabling users to tailor searches to specific websites. This update aims to provide more relevant and context-driven queries.

- The feature allows for enhanced customization, accommodating diverse user needs and improving search specificity within Spaces.

- Clarification on Perplexity API vs Website: It's been clarified that the Perplexity API and the Perplexity website are separate products, with no available API for the main website.

- This distinction ensures users understand the specific functionalities and offerings of each platform component.

OpenRouter (Alex Atallah) Discord

- Filter Models by Provider Now Available: Users can now filter the

/modelspage by provider, enhancing the ability to find specific models quickly. A screenshot was provided with details on this update. - API Uptime Issues During AI Launch Week: OpenRouter recovered over 1.8 million requests for closed-source LLMs amidst widespread API failures during AI Launch Week. A tweet from OpenRouter highlighted significant API downtime from providers like OpenAI and Gemini.

- APIs from all providers experienced considerable downtime, with OpenAI's API down for 4 hours and Gemini's API being nearly unusable. Anthropic also showed extreme unreliability, leading to major disruptions for businesses relying on these models.

- Gemini Flash 2.0 Bug Fixes Underway: Members reported ongoing bugs with Gemini Flash 2.0, such as the homepage version returning no providers, and expressed optimism for the fixes being implemented.

- Suggestions included linking to the free version and addressing concerns about exceeding message quotas when using Google models.

- Euryale Model Performance Decline: Euryale has been producing nonsensical outputs recently, with members suspecting issues stemming from model updates rather than their configurations.

- Another member noted that similar performance inconsistencies are common, highlighting the unpredictable nature of AI model behavior.

- Custom Provider Keys Access Launch: Access to custom provider keys is set to be opened soon, with Alex Atallah confirming its imminent release.

- Members are eagerly requesting access, and users expressed a desire to provide their own API Keys, indicating a push for customization options.

Modular (Mojo 🔥) Discord

- Innovative Networking Strategies in Mojo: The Mojo community emphasized the need for efficient APIs, discussing the use of XDP sockets and DPDK for advanced networking performance.

- Members are excited about Mojo's potential to reduce overhead compared to TCP in Mojo-to-Mojo communications.

- CPU vs GPU Performance in Mojo: Discussions highlighted that leveraging GPUs for networking tasks can enhance performance, achieving up to 400k requests per second with specific network cards.

- The consensus leans towards data center components offering better support for such efficiencies than consumer-grade hardware.

- Mojo’s Evolution with MLIR: Mojo's integration with MLIR was a key topic, focusing on its evolving features and implications for the language's compilation process.

- Contributors debated the impact of high-level developers' perspectives on Mojo's language efficiency, highlighting its potential across various domains.

- Discovering Mojo's Identity: The community humorously debated naming the little flame character associated with Mojo, suggesting names like Mojo or Mo' Joe with playful commentary.

- Discussions about Mojo's identity as a language sparked conversations regarding misconceptions among outsiders who often view it as just another way to speed up Python.

Interconnects (Nathan Lambert) Discord

- Microsoft Phi-4 Surpasses GPT-4o in Benchmarks: Microsoft's Phi-4 model, a 14B parameter language model, outperforms GPT-4o on both GPQA and MATH benchmarks and is now available on Azure AI Foundry.

- Despite Phi-4's performance, skepticism remains about the training methodologies of prior Phi models, with users questioning the focus on benchmarks over diverse data.

- LiquidAI Secures $250M for AI Scaling: LiquidAI has raised $250M to enhance the scaling and deployment of their Liquid Foundation Models for enterprise AI solutions, as detailed in their blog post.

- Concerns have been raised regarding their hiring practices, reliance on AMD hardware, and the potential challenges in attracting top-tier talent.

- DeepSeek VL2 Introduces Mixture-of-Experts Vision-Language Models: DeepSeek-VL2 was launched featuring Mixture-of-Experts Vision-Language Models designed for advanced multimodal understanding, available in sizes like 4.5A27.5B and Tiny: 1A3.4B.

- Community discussions highlight the innovative potential of these models, indicating strong interest in their performance capabilities.

- Tulu 3 Explores Advanced Post-Training Techniques: In a recent YouTube talk, Nathan Lambert discussed post-training techniques in language models, focusing on Reinforcement Learning from Human Feedback (RLHF).

- Sadhika, the co-host, posed insightful questions that delved into the implications of these techniques for future model development.

- Language Model Sizes Exhibit Reversal Trend: Recent analyses reveal a reversal in the growth trend of language model sizes, with current models like GPT-4o and Claude 3.5 Sonnet having approximately 200B and 400B parameters respectively, deviating from earlier expectations of reaching 10T parameters.

- Some members express skepticism about these size estimates, suggesting the actual parameter counts might be two orders of magnitude smaller due to uncertainties in reporting.

LLM Agents (Berkeley MOOC) Discord

- Certificate Declaration Form confusion resolved: A member initially struggled to locate where to submit their written article link on the Certificate Declaration Form but later found it.

- This highlights a common concern among members about proper submission channels amidst a busy course schedule.

- Labs submission deadlines extended: The deadline for labs was extended to December 17th, 2024, and members were reminded that only quizzes and articles were due at midnight.

- This extension offers flexibility for members who were behind due to various reasons, especially technological issues.

- Quizzes requirement clarified: It was confirmed that all quizzes need to be submitted by the deadline to meet certification requirements, although some leniency was offered for late submissions.

- A member who missed the quiz deadline was reassured they could still submit their answers without penalty.

- Public Notion links guideline: A clarification was made regarding whether Notion could be used for article submissions, emphasizing that it should be publicly accessible.

- Members were encouraged to ensure their Notion pages were published properly to avoid issues during submission.

- Certificate distribution timeline: Members inquired about the timeline for certificate distribution, with confirmations that certificates would be sent out late December through January.

- The timeline varies depending on the certification tier achieved, providing clear expectations for participants.

Stability.ai (Stable Diffusion) Discord

- WD 1.4 Underperforms Compared to Alternatives: A member recalled that WD 1.4 is merely an SD 2.1 model which had issues at launch, noting that Novel AI's model was the gold standard for anime when it first released.

- They mentioned that after SDXL dropped, users of the 2.1 model largely transitioned away from it due to its limitations.

- Local Video AI Models Discord Recommendation: A user sought recommendations for a Discord group focused on Local Video AI Models, specifically Mochi, LTX, and HunYuanVideo.

- Another user suggested joining banodoco as the best option for discussions on those models.

- Tag Generation Model Recommendations: A member asked for a good model for tag generation in Taggui, to which another member confidently recommended Florence.

- Additionally, it was advised to adjust the max tokens to suit individual needs.

- Need for Stable Diffusion XL Inpainting Script: A user expressed frustration over the lack of a working Stable Diffusion XL Inpainting finetuning script, despite extensive searches.

- They questioned if this channel was the right place for such inquiries or if tech support would be more suitable.

- Image Generation with ComfyUI: A user inquired about modifying a Python script to implement image-to-image processing with a specified prompt and loaded images.

- Others confirmed that while the initial code aimed for text-to-image, it could theoretically support image-to-image given the right model configurations.

OpenInterpreter Discord

- Resolved Nvidia NIM API Setup: A user successfully configured the Nvidia NIM API by executing

interpreter --model nvidia_nim/meta/llama3-70b-instructand setting theNVIDIA_NIM_API_KEYenvironment variable.- They expressed appreciation for the solution while highlighting difficulties with repository creation.

- Custom API Integration in Open Interpreter: A member inquired about customizing the Open Interpreter app's API, sparking discussions on integrating alternative APIs for improved desktop application functionality.

- Another participant emphasized that the app targets non-developers, focusing on user-friendliness without requiring API key configurations.

- Clarifying Token Limit Functionality: Users discussed the max tokens feature's role, noting it restricts response lengths without accumulating over conversations, leading to challenges in tracking token usage.

- Suggestions included implementing

max-turnsand a prospective max-budget feature to manage billing based on token consumption.

- Suggestions included implementing

- Advancements in Development Branch: Feedback on the development branch indicated that it enables repository creation via commands, praised for practical applications in projects.

- However, users reported issues with code indentation and folder creation, raising queries about the optimal operating environment.

- Meta's Byte Latent Transformer Introduced: Meta published Byte Latent Transformer: Patches Scale Better Than Tokens, introducing a strategy that utilizes bytes instead of traditional tokenization for enhanced model performance.

- This approach may transform language model operations by adopting byte-level representation to boost scalability and efficiency.

LlamaIndex Discord

- LlamaCloud's Multimodal RAG Pipeline: Fahd Mirza showcased LlamaCloud's multimodal capabilities in a recent video, allowing users to upload documents and toggle multi-modal functionality via Python or JavaScript APIs.

- This setup effectively handles mixed media, streamlining the RAG pipeline for diverse data types.

- OpenAI's Non-Strict Function Calling Defaults: Function calling defaults in OpenAI remain non-strict to minimize latency and ensure compatibility with Pydantic classes, as discussed in the general channel.

- Users can enable strict mode by setting

strict=True, though this may disrupt certain Pydantic integrations.

- Users can enable strict mode by setting

- Prompt Engineering vs. Frameworks like dspy: A discussion emerged around the effectiveness of prompt engineering compared to frameworks such as dspy, with members seeking strategies to craft impactful prompts.

- The community expressed interest in identifying best practices to enhance prompt performance for specific objectives.

- AWS Valkey as a Redis Replacement: Following Redis's shift to a non-open source model, members inquired about support for AWS Valkey, a drop-in replacement, as detailed in Valkey Datastore Explained.

- The conversation highlighted potential compatibility with existing Redis code and the need for further exploration.

- Integrating Langchain with MegaParse: Integration of Langchain with MegaParse enhances document parsing capabilities, enabling efficient information extraction from diverse document types, as outlined in AI Artistry's blog.

- This combination is particularly valuable for businesses and researchers seeking robust parsing solutions.

DSPy Discord

- DSPy Framework Accelerates LLM Applications: DSPy simplifies LLM-powered application development by providing boilerplate prompting and task 'signatures', reducing time spent on prompting DSPy.

- The framework enables building agents like a weather site efficiently.

- AI Redefines Categories as the Platypus: A blog post describes how AI acts like a platypus, challenging existing technological categorizations The Platypus In The Room.

- This analogy emphasizes AI's unique qualities that defy conventional groupings.

- Cohere v3 Outpaces Colbert v2 in Performance: Cohere v3 has been recognized to deliver superior performance over Colbert v2 in recent evaluations, sparking interest in the underlying enhancements.

- Discussions delved into the specific improvements contributing to Cohere v3's performance gains and explored implications for ongoing projects.

- Leveraging DAGs & Serverless for Scalable AI: A YouTube video titled 'Building Scalable Systems with DAGs and Serverless for RAG' was shared, focusing on challenges in AI system development.

- Jason and Dan discussed issues from router implementations to managing conversation histories, offering valuable insights for AI engineers.

- Optimizing Prompts with DSPy Optimizers: Discussions highlighted the role of DSPy optimizers in guiding LLM instruction writing during optimization runs, referencing an arXiv paper.

- Members expressed the need for enhanced documentation on optimizers, aiming for more detailed and simplified explanations to aid understanding.

tinygrad (George Hotz) Discord

- Tinygrad Performance Lag: Profiling shows Tinygrad runs significantly slower than PyTorch, facing a 434.34 ms forward/backward pass for batch size 32 at sequence length 256.

- Users reported an insane slowdown when increasing sequence length on a single A100 GPU.

- BEAM Configuration Tweaks: Discussions highlighted that setting BEAM=1 in Tinygrad is greedy and suboptimal for performance.

- Switching to BEAM=2 or 3 is recommended to improve runtime and performance during kernel search.

- Benchmark Script Request: Members expressed the need for simple benchmark scripts to enhance Tinygrad's performance.

- Providing these benchmarks could help identify improvements in compile time and kernel execution.

Torchtune Discord

- Torchtune 3.9 Eases Type Hinting: With the release of Torchtune 3.9, developers can now substitute

List,Dict, andTuplewith default builtins for type hinting, simplifying the coding process.- This update has initiated a light-hearted discussion about how Python's ongoing changes are influencing workflows.

- Python's Evolving Type System Challenges: A member humorously noted that Python is increasing their workload due to recent changes, highlighting a common sentiment within the community.

- This reflects the frequent, often amusing, frustrations developers encounter when adapting to language updates.

- Ruff Automates Type Hint Replacement: Ruff now includes a rule that automatically manages the replacement of type hints, streamlining the transition for developers.

- This enhancement underscores how tools like Ruff are evolving to support developers amidst Python's continuous updates.

MLOps @Chipro Discord

- Kickstart the Year with Next-Gen Retrieval: Participants will explore how to integrate vector search, graph databases, and textual search engines to establish a versatile, context-rich data layer during the session on January 8th at 1 PM EST.

- Rethink how to build AI applications in production to effectively support modern demands for large-scale LLMOps.

- Enhance Runtimes with Advanced Agents: The session offers insights into utilizing tools like Vertex AI Agent Builder for orchestrating long-running sessions and managing chain of thought workflows.

- This tactic aims to improve the performance of agent workflows in more complex applications.

- Scale Model Management for LLMs: Focus will be on leveraging robust tools for model management at scale, ensuring efficient operations for specialized LLM applications.

- Expect discussions on strategies that integrate AI safety frameworks with dynamic prompt engineering.

- Simplify Dynamic Prompt Engineering: The workshop will emphasize dynamic prompt engineering, crucial for adapting to evolving model capabilities and user requirements.

- This method aims to deliver real-time contextual responses, enhancing user satisfaction.

- Ensure AI Compliance and Safety Standards: An overview of AI safety and compliance practices will be presented, ensuring that AI applications meet necessary regulations.

- Participants will learn about integrating safety measures into their application development workflows.

Mozilla AI Discord

- Demo Day Recap Published: The recap of the Mozilla Builders Demo Day has been published, detailing attendee participation under challenging conditions. Read the full recap here.

- The Mozilla Builders team highlighted the event's success on social media, emphasizing the blend of innovative technology and dedicated participants.

- Contributors Receive Special Thanks: Special acknowledgments were extended to various contributors who played pivotal roles in the event's execution. Recognition was given to teams with specific organizational roles.

- Participants were encouraged to appreciate the community's support and collaborative efforts that ensured the event's success.

- Social Media Amplifies Event Success: Highlights from the event were shared across platforms like LinkedIn and X, showcasing the event's impact.

- Engagement metrics on these platforms underscored the enthusiasm and positive feedback from the community regarding the Demo Day.

- Demo Day Video Now Available: A video titled Demo_day.mp4 capturing key moments and presentations from the Demo Day has been made available. Watch the highlights here.

- The video serves as a comprehensive visual summary, allowing those who missed the event to stay informed about the showcased technologies and presentations.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LAION Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!