[AINews] Mamba-2: State Space Duality

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Transformers are SSMs.

AI News for 5/31/2024-6/3/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (400 channels, and 8575 messages) for you. Estimated reading time saved (at 200wpm): 877 minutes.

Over the weekend we got the FineWeb Technical Report (which we covered a month ago), and it turns out that it does improve upon CommonCrawl and RefinedWeb with better filtering and deduplication.

However we give the weekend W to the Mamba coauthors, who are somehow back again with Mamba-2, a core 30 lines of Pytorch which outperforms Mamba and Transformer++ in both perplexity and wall-clock time.

Tri recommends reading the blog first, developing Mamba-2 over 4 parts:

- The Model

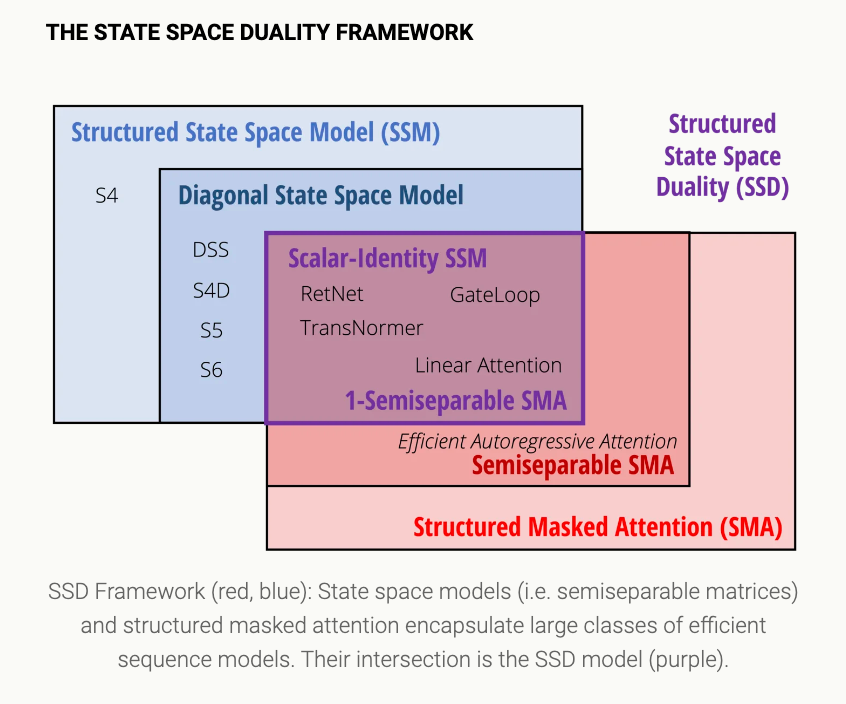

- Understanding: What are the conceptual connections between state space models and attention? Can we combine them?

As developed in our earlier works on structured SSMs, they seem to capture the essence of continuous, convolutional, and recurrent sequence models – all wrapped up in a simple and elegant model.

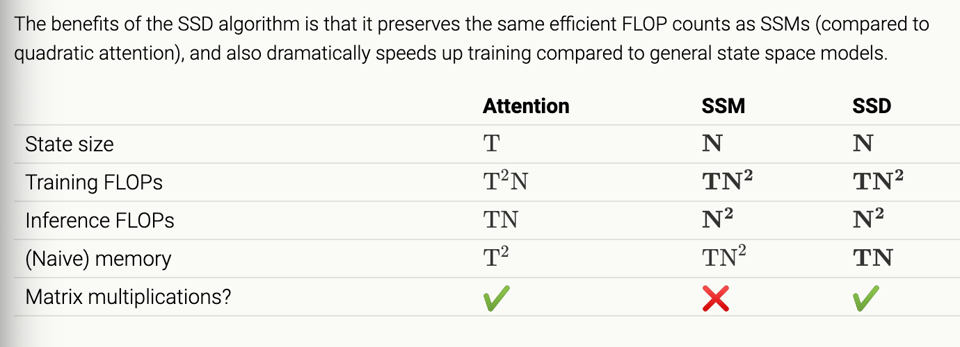

- Efficiency: Can we speed up the training of Mamba models by recasting them as matrix multiplications?

Despite the work that went into making Mamba fast, it’s still much less hardware-efficient than mechanisms such as attention.

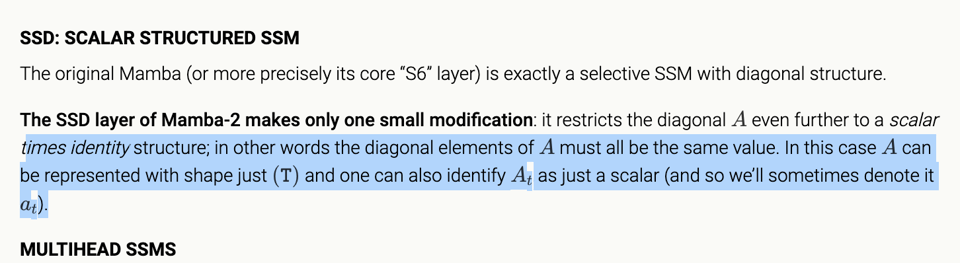

- The core difference between Mamba and Mambda-2 is a stricter diagonalization of their A matrix:

Using this definition the authors prove equivalence (duality) between Quadratic Mode (Attention) and Linear Mode (SSMs), and unlocks matrix multiplications.

Using this definition the authors prove equivalence (duality) between Quadratic Mode (Attention) and Linear Mode (SSMs), and unlocks matrix multiplications.

- Understanding: What are the conceptual connections between state space models and attention? Can we combine them?

- The Theory

- The Algorithm

- The Systems

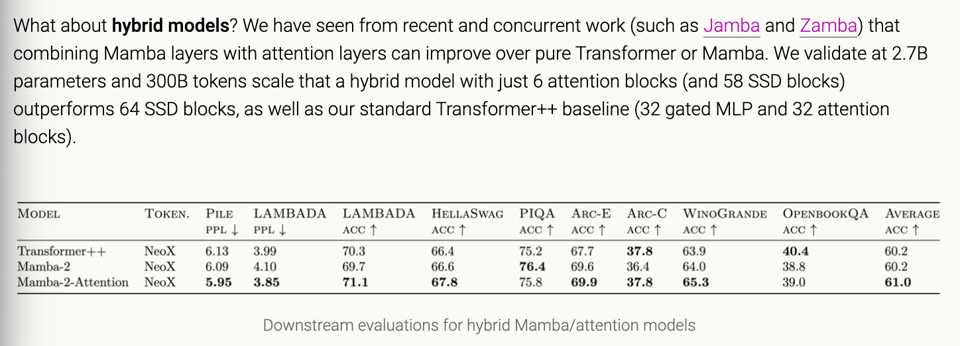

- they show that Mamba-2 both beats Mamba-1 and Pythia on evals, and dominate in evals when placed in hybrid model archs similar to Jamba:

- they show that Mamba-2 both beats Mamba-1 and Pythia on evals, and dominate in evals when placed in hybrid model archs similar to Jamba:

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI and Machine Learning Research

- Mamba-2 State Space Model: @_albertgu and @tri_dao introduced Mamba-2, a state space model (SSM) that outperforms Mamba and Transformer++ in perplexity and wall-clock time. It presents a framework connecting SSMs and linear attention called state space duality (SSD). Mamba-2 has 8x larger states and 50% faster training than Mamba. (@arankomatsuzaki and @_akhaliq)

- FineWeb and FineWeb-Edu Datasets: @ClementDelangue highlighted the release of FineWeb-Edu, a high-quality subset of the 15 trillion token FineWeb dataset, created by filtering FineWeb using a Llama 3 70B model to judge educational quality. It enables better and faster LLM learning. @karpathy noted its potential to reduce tokens needed to surpass GPT-3 performance.

- Perplexity-Based Data Pruning: @_akhaliq shared a paper on using small reference models for perplexity-based data pruning. Pruning based on a 125M parameter model's perplexities improved downstream performance and reduced pretraining steps by up to 1.45x.

- Video-MME Benchmark: @_akhaliq introduced Video-MME, the first comprehensive benchmark evaluating multi-modal LLMs on video analysis, spanning 6 visual domains, video lengths, multi-modal inputs, and manual annotations. Gemini 1.5 Pro significantly outperformed open-source models.

AI Ethics and Societal Impact

- AI Doomerism and Singularitarianism: @ylecun and @fchollet criticized AI doomerism and singularitarianism as "eschatological cults" driving insane beliefs, with some stopping long-term life planning due to AI fears. @ylecun argued they make people feel powerless rather than mobilizing solutions.

- Attacks on Dr. Fauci and Science: @ylecun condemned attacks on Dr. Fauci by Republican Congress members as "disgraceful and dangerous". Fauci helped save millions but is vilified by those prioritizing politics over public safety. Attacks on science and the scientific method are "insanely dangerous" and killed people in the pandemic by undermining public health trust.

- Opinions on Elon Musk: @ylecun shared views on Musk, liking his cars, rockets, solar/satellites, and open source/patent stances, but disagreeing with his treatment of scientists, hype/false predictions, political opinions, and conspiracy theories as "dangerous for democracy, civilization, and human welfare". He finds Musk "naive about content moderation difficulties and necessity" on his social platform.

AI Applications and Demos

- Dino Robotics Chef: @adcock_brett shared a video of Dino Robotics' robot chef making schnitzel and fries using object localization and 3D image processing, trained to recognize various kitchen objects.

- SignLLM: @adcock_brett reported on SignLLM, the first multilingual AI model for Sign Language Production, generating AI avatar sign language videos from natural language across eight languages.

- Perplexity Pages: @adcock_brett highlighted Perplexity's Pages tool for turning research into articles, reports, and guides that can rank on Google Search.

- 1X Humanoid Robot: @adcock_brett demoed 1X's EVE humanoid performing chained tasks like picking up a shirt and cup, noting internal updates.

- Higgsfield NOVA-1: @adcock_brett introduced Higgsfield's NOVA-1 AI video model allowing enterprises to train custom versions using their brand assets.

Miscellaneous

- Making Friends Advice: @jxnlco shared tips like doing sports, creative outlets, cooking group meals, and connecting people based on shared interests to build a social network.

- Laptop Recommendation: @svpino praised the "perfect" but expensive Apple M3 Max with 128GB RAM and 8TB SSD.

- Nvidia Keynote: @rohanpaul_ai noted 350x datacenter AI compute cost reduction at Nvidia over 8 years. @rohanpaul_ai highlighted the 50x Pandas speedup on Google Colab after RAPIDS cuDF integration.

- Python Praise: @svpino called Python "the all-time, undisputed GOAT of programming languages" and @svpino recommended teaching kids Python.

Humor and Memes

- Winning Meme: @AravSrinivas posted a "What does winning all the time look like?" meme image.

- Pottery Joke: @jxnlco joked "Proof of cooking. And yes I ate on a vintage go board."

- Stable Diffusion 3 Meme: @Teknium1 criticized Stability AI for "making up a new SD3, called SD3 'Medium' that no one has ever heard of" while not releasing the Large and X-Large versions.

- Llama-3-V Controversy Meme: @teortaxesTex posted about Llama-3-V's Github and HF going down "after evidence of them stealing @OpenBMB's model is out".

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Model Releases and Updates

- SD3 Medium open weights releasing June 12 from Stability AI: In /r/StableDiffusion, Stability AI is releasing the 2B parameter SD3 Medium model designed for photorealism, typography, performance and fine-tuning under non-commercial use. The 2B model currently outperforms the 8B version on some metrics, and has incompatibilities with certain sampling methods. Commercial rights will be available through a membership program.

- Nvidia and AMD unveil future AI chip roadmaps: In /r/singularity, Nvidia revealed plans through 2027 for its Rubin platform to succeed Blackwell, with H200 and B100 chips on the horizon after H100s used in OpenAI's models. AMD announced the MI325X with 288GB memory to rival Nvidia's H200, with the MI350 and MI400 series offering major inference boosts in coming years.

AI Capabilities and Limitations

- AI-generated media deceives mainstream news: A video mistaken by NBC News as showing a real dancing effect demonstrates the potential for AI-generated content to fool even major media outlets.

- Challenges in truly open-sourcing AI: A video argues that open source AI is not truly open source, as model weights are inscrutable without training data, order, and techniques. Fully open-sourcing large language models is difficult due to reliance on licensed data.

- Limitations in multimodal reasoning: In /r/OpenAI, ChatGPT struggles to annotate an object in an image despite correctly identifying it, highlighting current gaps in AI's ability to reason across modalities.

AI Development Tools and Techniques

- High-quality web dataset outperforms on knowledge and reasoning: The FineWeb dataset with 1.3T tokens surpasses other open web-scale datasets on knowledge and reasoning benchmarks. The associated blog post details techniques for creating high-quality datasets from web data.

- New mathematical tool for ML introduced in book: The book "Tangles" applies a novel mathematical approach to group qualities for identifying structure and types in data, with applications from clustering to drug development. Open source code is available.

- Parametric compression of large language model: In /r/LocalLLaMA, a simple parametric compression method prunes less important layers of LLaMA 3 70B to 62B parameters without fine-tuning, resulting in only slight performance drops on benchmarks.

AI Ethics and Societal Impact

- Ethical dilemma in disclosing AI impact on jobs: /r/singularity discusses the quandary of whether to inform friends that AI can now do their jobs, like book cover design, in seconds. The distress of delivering such news is weighed against withholding the truth.

- Poll gauges perceptions of AI's threat to job security: A poll in /r/singularity measures how secure people feel about their jobs persisting for the next 10 years in the face of AI automation.

Memes and Humor

- Meme satirizes AI's wide-reaching job replacement potential: An "All The Jobs" meme image humorously portrays AI's ability to replace a vast range of occupations.

AI Discord Recap

A summary of Summaries of Summaries

-

LLM Advancements and Multimodal Applications:

- Granite-8B-Code-Instruct from IBM enhances instruction-following for code tasks, surpassing major benchmarks. Stable Diffusion 3 Medium set to launch, promises better photorealism and typography, scheduled for June 12.

- The AI engineering community discusses VRAM requirements for SD3, with predictions around 15GB while considering features like fp16 optimization for potential reductions. FlashAvatar promises 300FPS digital avatars using Nvidia RTX 3090, stoking interest in high-fidelity avatar creation.

-

Fine-Tuning Techniques and Challenges:

- Recommendations for overcoming tokenizer issues in half-precision training suggest

tokenizer.padding_side = 'right'and using techniques from LoRA for enhanced fine-tuning. Axolotl users face issues with binary classification, suggesting Bert as an alternative.

- Community insights highlight the effective use of Gradio's OAuth for private app access and the utility of

share=Truefor quick app testing. Troubleshooting includes handling issues with inference setups in Kaggle and discrepancies with loss values in Axolotl, considering factors like input-output preprocessing.

- Recommendations for overcoming tokenizer issues in half-precision training suggest

-

Open-Source Projects and Community Collaborations:

- Manifold Research’s call for collaboration on multimodal transformers and control tasks aims to build a comprehensive open-source Generalist Model. StoryDiffusion and OpenDevin emerge as new open-source AI projects, sparking interest.

- Efforts to integrate TorchAO with LM Evaluation Harness focus on adding APIs for quantization support. Community initiatives, such as adapting Axolotl for AMD compatibility, highlight ongoing efforts in refining AI tools and frameworks.

-

AI Infrastructure and Security:

- Hugging Face security incident prompts a recommendation for rotating tokens and switching to fine-grained access tokens, affecting users' infrastructures like HF Spaces. Discussions in OpenRouter reference database timeouts in Asia, leading to service updates and decommissioning certain models like Llava 13B and Hermes 2 Vision 7B.

- ZeRO++ framework presents significant communication overhead reduction in large model training, aiding LLM implementations. The Paddler stateful load balancer enhances llama.cpp's efficiency, potentially streamlining model serving capabilities.

-

AI Research and Ethical Discussions:

- Yudkowsky’s controversial strategy against AI development sparks debate, with aggressive measures like airstrikes on data centers. LAION community reacts, discussing the balance between open collaboration and preventing misuse.

- New Theories on Transformer Limitations: Empirical evidence suggests transformers struggle with composing functions on large domains, leading to new approaches in model design. Discussions on embedding efficiency continue, comparing context windows for performance across LLM implementations.

PART 1: High level Discord summaries

HuggingFace Discord

- Security Flaw in HF Spaces: Users are advised to rotate any tokens or keys after a security incident in HF Spaces, as detailed in HuggingFace's blog post.

- AI and Ethics Debate Heats Up: Debate over the classification of lab-grown neurons sparks a deeper discussion on the nature and ethics of artificial intelligence. Meanwhile, HuggingFace infrastructure issues lead to a resolution of "MaxRetryError" problems.

- Rust Rising: A member collaborates to implement a deep learning book (d2l.ai) in Rust, contributing to GitHub, while others discuss the efficiency and deployment benefits of Rust's Candle library.

- Literature Review Insights and Quirky Creations: An LLM reasoning literature review is summarized on Medium, plus creative projects like the Fast Mobius demo and the gary4live Max4Live device shared, reflecting a healthy mixture of engineering rigor with imaginative playfulness.

- Practical Applications and Community Dialogue: Practical guidance on using TrOCR and models, such as MiniCPM-Llama3-V 2.5, is shared for OCR tasks. Discussions also extend to LLM determinism and resource recommendations for enhanced language generation and translation tasks, specifically citing Helsinki-NLP/opus-mt-ja-en as a strong Japanese to English translation tool.

- Exciting Developments in Robotics and Gradio: The article Diving into Diffusion Policy with Lerobot showcases ACT and Diffusion Policy methods in robotics, while Gradio announced support for dynamic layouts with @gr.render, exemplified by versatile applications like the Todo List and AudioMixer, explored in the Render Decorator Guide.

Unsloth AI (Daniel Han) Discord

- Multi-GPU Finetuning Progress: Active development is being made on multi-GPU finetuning with discussions on the viability of multimodal expansion. A detailed analysis of LoRA was shared, highlighting its potential in specific finetuning scenarios.

- Technical Solutions to Training Challenges:

Recommendations were made to alleviate tokenizer issues in half-precision training by setting

tokenizer.padding_side = 'right', and insights were given on Kaggle Notebooks as a solution to expedite LLM finetuning.

- Troubleshooting AI Model Implementation: Users have encountered difficulties with Phi 3 models on GTX 3090 and RoPE optimization on H100 NVL. Community recommended fixes include Unsloth's updates and discussion on potential memory reporting bugs.

- Model Safety and Limitations in Focus: Debates surfaced on businesses' hesitation to use open-source AI models due to safety concerns, with emphasis on preventing harmful content generation. Moreover, the inherent limitation of LLMs unable to innovate beyond their training data was acknowledged.

- Continuous Improvements to AI Collaboration Tools: Community shared solutions for saving models and fixing installation issues on platforms like Kaggle. Furthermore, there's active collaboration on refining checkpoint management for fine-tuning across platforms like Hugging Face and Wandb.

Stability.ai (Stable Diffusion) Discord

- Countdown to SD3 Medium Launch: Stability.ai has announced Stable Diffusion 3 Medium is set to launch on June 12th; interested parties can join the waitlist for early access. The announcement at Computex Taipei highlighted the model's expected performance boosts in photorealism and typography.

- Speculation Over SD3 Specs: The AI engineering community is abuzz with discussions on the prospective VRAM requirements for Stable Diffusion 3, with predictions around 15GB, while suggestions such as fp16 optimization have been mentioned to potentially reduce this figure.

- Clarity Required on Commercial Use: There's a vocal demand for Stability AI to provide explicit clarification on the licensing terms for SD3 Medium's commercial use, with concerns stemming from the transition to licenses with non-commercial restrictions.

- Monetization Moves Meet Backlash: The replacement of the free Stable AI Discord bot by a paid service, Artisan, has sparked frustration within the community, underscoring the broader trend toward monetizing access to AI tools.

- Ready for Optimizations and Fine-Tuning: In preparation for the release of SD3 Medium, engineers are anticipating the procedures for community fine-tunes, as well as performance benchmarks across different GPUs, with Stability AI ensuring support for 1024x1024 resolution optimizations, including tiling techniques.

Perplexity AI Discord

- AI-Assisted Homework: Opportunity or Hindrance?: Engineers shared diverse viewpoints on the ethics of AI-assisted homework, comparing it to choosing between "candy and kale," while suggesting an emphasis on teaching responsible AI usage to kids.

- Directing Perplexity's Pages Potential: Users expressed the need for enhancements to Perplexity's Pages feature, like an export function and editable titles, to improve usability, with concerns voiced over the automatic selection and quota exhaustion of certain models like Opus.

- Extension for Enhanced Interaction: The announcement of a Complexity browser extension to improve Perplexity's UI led to community engagement, with an invitation for beta testers to enhance their user experience.

- Testing AI Sensitivity: Discussions highlighted Perplexity AI's capability to handle sensitive subjects, demonstrated by its results on creating pages on topics like the Israel-Gaza conflict, with satisfactory outcomes reinforcing faith in its appropriateness filters.

- API Exploration for Expert Applications: AI engineers discussed optimal model usage for varying tasks within Perplexity API, clarifying trade-offs between smaller, faster models versus larger, more accurate ones, while also expressing enthusiasm about potential TTS API features. Reference was made to model cards for guidance.

CUDA MODE Discord

Let's Chat Speculatively: Engineers shared insights into speculative decoding, with suggestions like adding gumbel noise and a deterministic argmax. Recorded sessions on the subject are expected to be uploaded after editing, and discussions highlighted the importance of ablation studies to comprehend sampling parameter impacts on acceptance rates.

CUDA to the Cloud: Rental of H100 GPUs was discussed for profiling purposes, recommending providers such as cloud-gpus.com and RunPod. The challenges in collecting profiling information without considerable hacking were also noted.

Work and Play: A working group for production kernels and another for PyTorch performance-related documentation were announced, inviting collaboration. Additionally, a beginner's tip was given to avoid the overuse of @everyone in the community to prevent unnecessary notifications.

Tech Talks on Radar: Upcoming talks and workshops include a session on Tensor Cores and high-performance scan algorithms. The community also anticipates hosting Prof Wen-mei Hwu for a public Q&A, and a session from AMD's Composable Kernel team.

Data Deep Dives and Development Discussions: Discussion in #llmdotc was rich with details like the successful upload of a 200GB dataset to Hugging Face and a proposal for LayerNorm computation optimization, alongside a significant codebase refactor for future-proofing and easier model architecture integration.

Of Precision and Quantization: The AutoFP8 GitHub repository was introduced, aiming at automatic conversion to FP8 for increased computational efficiency. Meanwhile, integrating TorchAO with the LM Evaluation Harness was debated, including API enhancements for improved quantization support.

Parsing the Job Market: Anyscale is seeking candidates with interests in speculative decoding and systems performance, while chunked prefill and continuous batching practices were underscored for operational efficiencies in predictions.

Broadcasting Knowledge: Recordings of talks on scan algorithms and speculative decoding are to be made available on the CUDA MODE YouTube Channel, providing resources for continuous learning in high-performance computing.

PyTorch Performance Parsing: A call to action was made for improving PyTorch's performance documentation during the upcoming June Docathon, with emphasis on current practices over deprecated concepts like torchscript and a push for clarifying custom kernel integrations.

LM Studio Discord

- VRAM Vanquishers: Engineers are discussing solutions for models with high token prompts leading to slow responses on systems with low VRAM, and practical model recommendations like Nvidia P40 cards for home AI rigs.

- Codestral Claims Coding Crown: Codestral 22B's superior performance in context and instruction handling sparked discussions, while concerns with embedding model listings in LM Studio were addressed, and tales of tackling text generation with different models circulated.

- Whispering Without Support: Despite clamor for adding Whisper and Tortoise-enhanced audio capabilities to LM Studio, the size and complexity trade-offs triggered a talk, alongside the reveal of a "stop string" bug in the current iteration.

- Config Conundrums: Queries regarding model configuration settings for applications from coding to inference surfaced, with focus on quantization trade-offs and an enigmatic experience with inference speeds on specific GPU hardware.

- Amped-Up Amalgamation Adventures: Members mulled over Visual Studio plugin creations for smarter coding assistance, tapping into experiences with existing aids and the potential for project-wide context understanding using models like Mentat.

Note: Specific links to models, discussions, and GitHub repositories were provided in the respective channels and can be referred back to for further technical details and context.

Nous Research AI Discord

- Thriving in a Token World: The newly released FineWeb-Edu dataset touts 1.3 trillion tokens reputed for their superior performance on benchmarks such as MMLU and ARC, with a detailed technical report accessible here.

- Movie Magicians Source SMASH: A 3K screenplay dataset is now available for AI enthusiasts, featuring screenplay PDFs converted into .txt format, and secured under AGPL-3.0 license for enthusiastic model trainers.

- Virtual Stage Directions: Engagement in strategy simulation using Worldsim unfolds with a particular focus on the Ukraine-Russia conflict, demonstrating its capacity for detailed scenario-building, amidst a tech glitch causing text duplication currently under review.

- Distillation Dilemma and Threading Discussions: Researchers are exchanging ideas on effective knowledge distillation from larger to smaller models, like the Llama70b to Llama8b transition, and suggesting threading over loops for managing AI agent tasks.

- Model Ethics in Plain View: Community debates are ignited over the alleged replication of OpenBMB's MiniCPM by MiniCPM-Llama3-V, which led to the removal of the contested model from platforms like GitHub and Hugging Face after collective concern and evidence came to light.

LLM Finetuning (Hamel + Dan) Discord

- Axolotl Adversities: Engineers reported issues configuring binary classification in Axolotl's .yaml files, receiving a

ValueErrorindicating no corresponding data for the 'train' instruction. A proposed alternative was deploying Bert for classification tasks instead, as well as directly working with TRL when Axolotl lacks support.

- Gradio's Practicality Praised: AI developers leveraged Gradio's

share=Trueparameter for quickly testing and sharing apps. Discussions also unfolded around using OAuth for private app access and the overall sharing strategy, including hosting on HF Spaces and handling authentication and security.

- Modal Mysteries and GitHub Grief: Users encountered errors downloading models like Mistral7B_v0.1, due in part to a lack of authentication into Hugging Face in modal scripts caused by recent security events. Other challenges arose with

device map = metain Accelerate, with one user providing insights into its utility for inference mechanics.

- Credits Crunch Time: Deadline-driven discussions dominated channels, with many members concerned about timely credit assignment across platforms. Dan and Hamel intervened with explanations and reassurances, highlighting the importance of completing forms accurately to avoid missing out on platform-specific credits.

- Fine-tuning for the Future: Possible adjustments and various strategies for LLM training and fine-tuning emerged, such as keeping the batch sizes at powers of 2, using gradient accumulation steps to optimize training, and the potential of large batch sizes to stabilize training even in distributed setups over ethernet.

OpenAI Discord

- Zero Crossings and SGD: Ongoing Dispute: Ongoing debates have delved into the merits and drawbacks of tracking zero crossings in gradients for optimizer refinement, with mixed results observed in application. Another topic of heated discussion was the role of SGD as a baseline for comparison against new optimizers, indicating that advances may hinge upon learning rate improvements.

- FlashAvatar Ignites Interest: A method dubbed FlashAvatar for creating high-fidelity digital avatars has captured particular interest, promising up to 300FPS rendering with an Nvidia RTX 3090, as detailed in the FlashAvatar project.

- Understanding GPT-4's Quirks: Conversations in the community have centered on GPT-4's memory leaks and behavior, discussing instances of 'white screen' errors and repetitive output potentially tied to temperature settings. Custom GPT uses and API limits were also discussed, highlighting a 512 MB per file limit and 5 million tokens per file constraint as per OpenAI help articles.

- Context Window and Embedding Efficacy in Debate: A lively debate focused on the effectiveness of embeddings versus expanding context windows for performance improvement. Prospects for incorporating Gemini into the pipeline were entertained for purportedly enhancing GPT's performance.

- Troubles in Prompt Engineering: Community members shared challenges with ChatGPT's adherence to prompt guidelines, seeking strategies for improvement. Observations noted a preference for a single system message in structuring complex prompts.

Modular (Mojo 🔥) Discord

- Mojo Server Stability Saga: Users report the Mojo language server crashing in VS Code derivatives like Cursor on M1 and M2 MacBooks, documented in GitHub issue #2446. The fix exists in the nightly build, and a YouTube tutorial covers Python optimization techniques that can accelerate code loops, suggested for those looking to boost Python's performance.

- Eager Eyes on Mojo's Evolution: Discussions around Mojo's maturity centered on its development progress and open-source community contributions, as outlined in the Mojo roadmap and corresponding blog announcement. Separate conversations have also included Mojo's potential in data processing and networking, leveraging frameworks like DPDK and liburing.

- Mojo and MAX Choreograph Forward and Backward: In the Max engine, members are dissecting the details of implementing the forward pass to retain the necessary outputs for the backward pass, with concerns about the lack of documentation for backward calculations. The community is excited about conditional conformance capabilities in Mojo, poised to enhance the standard library's functions.

- Nightly Updates Glow: Continuous updates to the nightly Mojo compiler (2024.6.305) introduced new functionalities like global

UnsafePointerfunctions becoming methods. A discussion about thechartype in C leads to ultimately asserting its implementation-defined nature. Simultaneously, suggestions to improve changelog consistency are voiced, pointing to a style guide suggestion and discussing the transition of Tensors out of the standard library.

- Performance Chasers: Performance enthusiasts are benchmarking data processing times, identifying that Mojo is outpacing Python while lagging behind compiled languages, with the conversation captured in a draft PR#514. The realization sparks a proposal for custom JSON parsing, drawing inspiration from C# and Swift implementations.

Eleuther Discord

- BERT Not Fit for lm-eval Tasks: BERT stumbled when put through lm-eval, since encoder models like BERT aren't crafted for generative text tasks. The smallest decoder model on Hugging Face is sought for energy consumption analysis.

- Unexplained Variance in Llama-3-8b Performance: A user reported inconsistencies in gsm8k scores with llama-3-8b, marking a significant gap between their 62.4 score and the published 79.6. It was suggested that older commits might be a culprit, and checking the commit hash could clarify matters.

- Few Shots Fired, Wide Results Difference: The difference in gsm8k scores could further be attributed to the 'fewshot=5' configuration used on the leaderboard, potentially deviating from others' experimental setups.

- Collaborations & Discussions Ignite Innovation: Manifold Research's call for collaborators on multimodal transformers and control tasks was mentioned alongside insights into the bias in standard RLHF. Discussions also peeled back the layers of transformer limitations and engaged in the challenge of data-dependent positional embeddings.

- Hacking the Black Box: Interest is poised (editing advised) to surge for the upcoming mechanistic interpretability hackathon in July, with invites to dissect neural nets over a weekend put forth. A paper summary looking for collaborators on backward chaining circuits was shared to rope in more minds.

- Vision and Multimodal Interpretability Gaining Focus: The AI Alignment Forum article shed light on foundation-building in vision and multimodal mechanistic interpretability, underlining emergent segmentation maps and the "dogit lens." However, a need for further research into the circuits of score models itself was expressed, noting an existing gap in literature.

OpenRouter (Alex Atallah) Discord

Database Woes in the East: OpenRouter users reported database timeouts in Asia, mainly in regions like Seoul, Mumbai, Tokyo, and Singapore. A fix was implemented which led to rolling back some latency improvements to address this issue.

OpenRouter Under Fire for API Glitches: Despite a patch, users continued to face 504 Gateway errors, with some temporarily bypassing the issue using EU VPNs. User suggestions included the addition of provider-specific uptime statistics for better service accountability.

Model Decommissioning and Recommendation: Due to low usage and high costs, OpenRouter is retiring models such as Llava 13B and Hermes 2 Vision 7B (alpha) and suggests switching to alternatives like FireLlava 13B and LLaVA v1.6 34B.

Seamless API Switcheroo: OpenRouter’s standardized API simplifies switching between models or providers, as seen in the Playground, without necessitating code alterations, acknowledging easier management for engineers.

Popularity Over Benchmarks: OpenRouter tends to rank language models based on real-world application, detailing model usage rather than traditional benchmarks for a pragmatic perspective available at OpenRouter Rankings.

LAION Discord

- AI Ethics Hot Seat: Outrage and debate roared around Eliezer Yudkowsky’s radical strategy to limit AI development, with calls for aggressive actions including data center destruction sparking divisive dialogue. A deeper dive into the controversy can be found here.

- Mobius Model Flexes Creative Muscles: The new Mobius model charmed the community with prompts like "Thanos smelling a little yellow rose" and others, showcasing the model's flair and versatility. Seek inspiration or take a gander at the intriguing outcomes on Hugging Face.

- Legal Lunacy Drains Resources: A discussion detailed how pseudo-legal lawsuits waste efforts and funds, spotlighted by a case of pseudo-legal claims from Vancouver emerging as a cautionary tale. Review the absurdity in full color here.

- Healthcare's AI Challenge Beckons: Innovators are called to the forefront with the Alliance AI4Health Medical Innovation Challenge, dangling a $5k prize to spur development in healthcare AI solutions. Future healthcare pioneers can find their starting block here.

- Research Reveals New AI Insights: The unveiling of the Phased Consistency Model (PCM) challenges LCM on design limitations, with details available here, while a new paper elaborates on the efficiency leaps in text-to-image models, dubbed the "1.58 bits paper applied to image generation," which can be explored on arXiv. SSMs strike back in the speed department, with Mamba-2 outstripping predecessors and rivaling Transformers, read all about it here.

LlamaIndex Discord

- Graphs Meet Docs at LlamaIndex: LlamaIndex launched first-class support for building knowledge graphs integrated with a toolkit for manual entity and relation definitions, elevating document analytics capabilities. Custom RAG flows can now be constructed using knowledge graphs, with resources for neo4j integration and RAG flows examples.

- Memories and Models in Webinars: Upcoming and recorded webinars showcase the forefront of AI with discussions on "memary" for long-term autonomous agent memory featured by Julian Saks and Kevin Li, alongside another session focusing on "Future of Web Agents" with Div from MultiOn. Register for the webinar here and view the past session online.

- Parallel Processing Divide: Engineers discussed the OpenAI Agent's ability to make parallel function calls, a feature clarified by LlamaIndex's documentation, albeit true parallel computations remain elusive. The discussion spanned several topics including persistence in TypeScript and RAG-based analytics for document sets with examples linked in the documentation.

- GPT-4o Ecstatic on Professional Doc Extraction: Recent research shows GPT-4o markedly surpasses other tools in document extraction, boasting an average accuracy of 84.69%, indicating potential shifts in various industries like finance.

- Seeking Semantic SQL Synergy: The guild pondered the fusion of semantic layers with SQL Retrievers to potentially enhance database interactions, a topic that remains open for exploration and could inspire future integrations and discussions.

Latent Space Discord

AI's Intrigue and Tumult in Latent Space: An AI Reverse Turing Test video surfaced, sparking interest by depicting advanced AIs attempting to discern a human among themselves. Meanwhile, accusations surfaced around llama3-V allegedly misappropriating MiniCPM-Llama3-V 2.5's academic work, as noted on GitHub.

The Future of Software and Elite Influence: Engineers digested the implications of "The End of Software," a provocative Google Doc, while also discussing Anthropic's Dario Amodei’s rise to Time's Top 100 after his decision to delay the chatbot Claude’s release. An O'Reilly article on operational aspects of LLM applications was also examined for insights on a year of building with these models.

AI Event Emerges as Industry Nexus: The recent announcement of the AI Engineering World Forum (AIEWF), detailed in a tweet, stoked anticipation with new speakers, an AI in Fortune 500 track, and official events covering diverse LLM topics and industry leadership.

Zoom to the Rescue for Tech Glitch: A Zoom meeting saved the day for members experiencing technical disruptions during a live video stream. They bridged to continued discussion by accessing the session through the shared Zoom link.

LangChain AI Discord

RAG Systems Embrace Historical Data: Community members discussed strategies for integrating historical data into RAG systems, recommending optimizations for handling CSV tables and scanned documents to enhance efficiency.

Game Chatbots Game Stronger: A debate on the structure of chatbots for game recommendations led to advice against splitting a LangGraph Chatbot agent into multiple agents, with a preference for a unified agent or pre-curated datasets for simplicity.

LangChain vs OpenAI Showdown: Conversations comparing LangChain with OpenAI agents pointed out LangChain's adaptability in orchestrating LLM calls, highlighting that use case requirements should dictate the choice between abstraction layers or direct OpenAI usage.

Conversational AI Subjects Trending in Media: Publications surfaced in the community include explorations of LLMs with Hugging Face and LangChain on Google Colab, and the rising importance of conversational agents in LangChain. Key resources include exploratory guide on Medium and a deep dive into conversational agents by Ankush k Singal.

JavaScript Meets LangServe Hurdle: A snippet shared the struggles within the JavaScript community when dealing with the RemoteRunnable class in LangServe, as evidenced by a TypeError related to message array processing.

tinygrad (George Hotz) Discord

Tinygrad Progress Towards Haskell Horizon: Discussions highlighted a member's interest in translating tinygrad into Haskell due to Python's limitations, while another suggested developing a new language specifically for tinygrad’s uop end.

Evolving Autotuning in AI: The community critiqued older autotuning methods like TVM, emphasizing the need for innovations that address shortcomings in block size and pipelining tuning to enhance model accuracy.

Rethinking exp2 with Taylor Series: Users, including georgehotz, examined the applicability of Taylor series to improve the exp2 function, discussing the potential benefits of CPU-like range reduction and reconstruction methods.

Anticipating tinygrad's Quantum Leap: George Hotz excitedly announced tinygrad 1.0's intentions to outstrip PyTorch in speed for training GPT-2 on NVIDIA and AMD, accompanied by a tweet highlighting upcoming features like FlashAttention, and proposing to ditch numpy/tqdm dependencies.

NVIDIA's Lackluster Showcase Draws Ire: Nvidia's CEO Jensen Huang's COMPUTEX 2024 keynote video raised expectations for revolutionary reveals but ultimately left at least one community member bitterly disappointed.

OpenAccess AI Collective (axolotl) Discord

- Yuan2.0-M32 Shows Its Expertise: The new Yuan2.0-M32 model stands out with its Mixture-of-Experts architecture and is presented alongside key references including its Hugging Face repository and the accompanying research paper.

- Troubleshooting llama.cpp: Users are pinpointing tokenization problems in llama.cpp, citing specific GitHub issues (#7094 and #7271) and advising careful verification during finetuning.

- Axolotl Adapts to AMD: Modifying Axolotl for AMD compatibility has been tackled, resulting in an experimental ROCm install guide on GitHub.

- Defining Axolotl's Non-Crypto Realm: In a clarification within the community, Axolotl is reaffirmed to focus on training large language models, explicitly not delving into cryptocurrency.

- QLoRA Training with wandb Tracking: Members are exchanging insights on how to implement wandb for monitoring parameters and losses during QLoRA training sessions, with a nod to an existing wandb project and specific

qlora.ymlconfigurations.

Cohere Discord

Open Call for AI Collab: Manifold Research is on the hunt for collaborators to work on building an open-source "Generalist" model, inspired by GATO, targeting multimodal and control tasks across domains like vision, language, and more.

Cohere Community Troubleshoots: A broken dashboard link in the Cohere Chat API documentation was spotted and flagged, with community members stepping in to acknowledge and presumably kickstart a fix.

AI Model Aya 23 Gets the Thumbs Up: A user shares a successful testing of Cohire's Aya 23 model and hints at a desire to distribute their code for peer review.

Community Tag Upgrade Revealed: Discord's updated tagging mechanism sparks conversation and excitement in the community, with members sharing a link to the tag explanation.

Support Network Activated: For those experiencing disappearing chat histories or other issues, redirections to Cohere's support team at support@cohere.com or the server's designated support channel are provided.

OpenInterpreter Discord

- Whisper to OI's Rescue: Efforts to integrate Whisper or Piper into Open Interpreter (OI) are underway; this aims to reduce verbosity and increase speech initiation speed. No successful installation of OI on non-Ubuntu systems was reported; one attempt on MX Linux failed due to Python issues.

- Agent Decision Confusion Cleared: Clarifications were made about "agent-like decisions" within OI, leading to a specific section in the codebase—the LLM with the prompt found in the default system message.

- Looking for a Marketer: The group discussed a need for marketing efforts for Open Interpreter, which was previously handled by an individual.

- Gemini's Run Runs into Trouble: Queries were raised about running Gemini on Open Interpreter, as the provided documentation seemed to be outdated.

- OI's Mobile Maneuver: There are active discussions on creating an app to link the OI server to iPhone, with an existing GitHub code and a TestFlight link for the iOS version. TTS functionality on iOS was confirmed, while an Android version is in development.

- Spotlight on Loyal-Elephie: A single mention pointed to Loyal-Elephie, without context, by user cyanidebyte.

Interconnects (Nathan Lambert) Discord

- Security Breach at Hugging Face: Unauthorized access compromised secrets on Hugging Face's Spaces platform, leading to a recommendation to update keys and use fine-grained access tokens. Full details are outlined in this security update.

- AI2 Proactively Updates Tokens: In response to the Hugging Face incident, AI2 is refreshing their tokens as a precaution. However, Nathan Lambert reported his tokens auto-updated, mitigating the need for manual action.

- Phi-3 Models Joining the Ranks: The Phi-3 Medium (14B) and Small (7B) models have been added to the @lmsysorg leaderboard, performing comparably to GPT-3.5-Turbo and Llama-2-70B respectively, but with a disclaimer against optimizing models solely for academic benchmarks.

- Plagiarism Allegations in VLM Community: Discussions surfaced claiming that Llama 3V was a plagiarized model, supposedly using MiniCPM-Llama3-V 2.5's framework with minor changes. Links, including Chris Manning's criticism and a now-deleted Medium article, fueled conversations about integrity within the VLM community.

- Donation-Bets Gain Preference: Dylan transformed a lost bet about model performance into an opportunity for charity, instigating a trend of 'donation-bets' among members who see it also as a reputational booster for a good cause.

Mozilla AI Discord

- Mozilla Backs Local AI Wizardry: The Mozilla Builders Accelerator is now open for applications, targeting innovators in Local AI, offering up to $100,000 in funding, mentorship, and a stage on Mozilla’s networks for groundbreaking projects. Apply now to transform personal devices into local AI powerhouses.

- Boosting llama.cpp with Paddler: Engineers are considering integrating Paddler, a stateful load balancer, with llama.cpp to streamline llamafile operations, potentially offering more efficient model serving capabilities.

- Sluggish Sampling Calls JSON Schema into Question: AI engineers encounter slowdowns in sampling due to server issues and identified a problem with the JSON schema validation, citing a specific issue in the llama.cpp repository.

- API Endpoint Compatibility Wrangling: Usability discussions revealed that the OpenAI-compatible chat endpoint

/v1/chat/completionsworks with local models; however, model-specific roles need adjustments previously handled by OpenAI’s processing.

- Striving for Uniformity with Model Interfaces: There's a concerted effort to maintain a uniform interface across various models and providers despite the inherent challenges due to different model specifics, necessitating customized pre-processing solutions for models like Mistral-7b-instruct.

DiscoResearch Discord

- Spaetzle Perplexes Participants: Members discussed the details of Spaetzle models, with the clarification that there are actually multiple models rather than a single entity. A related AI-generated Medium post highlighted different approaches to tuning pre-trained language models, which include names like phi-3-mini-instruct and phoenix.

- Anticipation for Replay Buffer Implementation: An article on InstructLab describes a replay buffer method that could relate closely to Spaetzle; however, it has not been implemented to date. Interest is brewing around this concept, indicating potential future developments.

- Deciphering Deutsche Digits: A call was made for recommendations on German handwriting recognition models, and Kraken was suggested as an option, accompanied by a survey link possibly intended for further research or input collection.

- Model Benchmarking and Strategy Sharing: The effectiveness of tuning methods was a core topic, underscored by a member expressing intent to engage with material on InstructLab. No specific benchmarks for the models were provided, although they were mentioned in the context of Spaetzle.

Datasette - LLM (@SimonW) Discord

- Claude 3's Tokenizing Troubles: Engineers found it puzzling that Claude 3 lacks a dedicated tokenizer, a critical tool for language model preprocessing.

- Nomic Model Queries: There's confusion on how to utilize the nomic-embed-text-v1 model since it isn't listed with gpt4all models within the

llm modelscommand output. - SimonW's Plugin Pivot: For embedding tasks, SimonW recommends switching to the llm-sentence-transformers plugin, which appears to offer better support for the Nomic model.

- Embed Like a Pro with Release Notes: Detailed installation and usage instructions for the nomic-embed-text-v1 model can be found in the version 0.2 release notes of llm-sentence-transformers.

AI21 Labs (Jamba) Discord

- Jamba Instruct on Par with Mixtral: Within discussions, Jamba Instruct's performance was likened to that of Mixtral 8x7B, positioning it as a strong competitor against the recently highlighted GPT-4 model.

- Function Composition: AI's Achilles' Heel: A shared LinkedIn post revealed a gap in current machine learning models like Transformers and RNNs, pinpointing challenges with function composition and flagging Jamba's involvement in related SSM experiments.

MLOps @Chipro Discord

- Hack Your Way to Health Innovations: The Alliance AI4Health Medical Innovation Challenge Hackathon/Ideathon is calling for participants to develop AI-driven healthcare solutions. With over $5k in prizes on offer, the event aims to stimulate groundbreaking advancements in medical technology. Click to register.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!