[AINews] LMSys killed Model Versioning (gpt 4o 1120, gemini exp 1121)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Dates are all you need.

AI News for 11/21/2024-11/22/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 2501 messages) for you. Estimated reading time saved (at 200wpm): 237 minutes. You can now tag @smol_ai for AINews discussions!

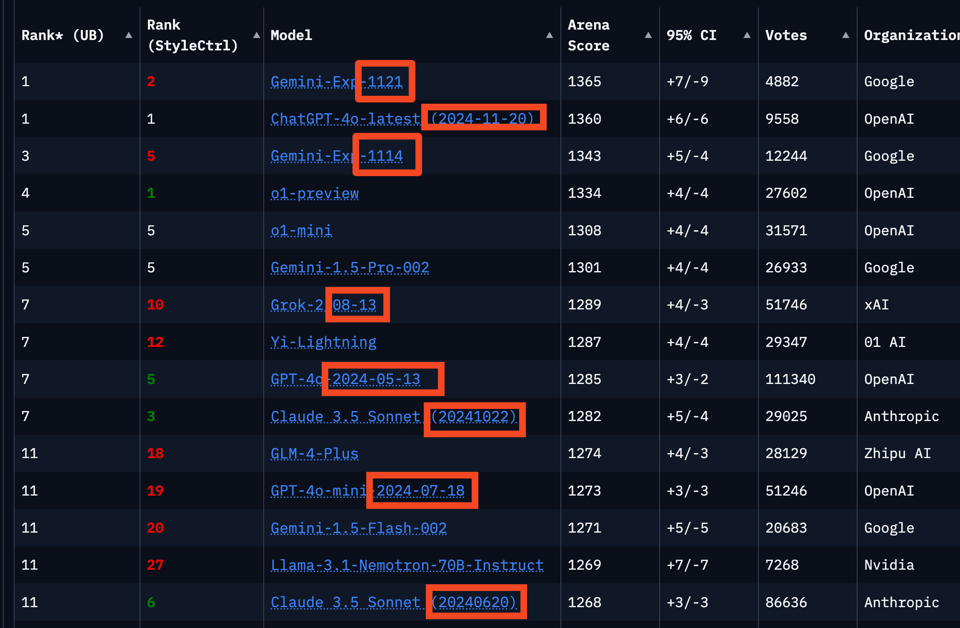

Frontier lab race dynamics are getting somewhat ridiculous. We used to have a rule that new SOTA models always get top spot, and reported on Gemini Exp 1114 last week even though there was next to no useful detail on it beyond their lmsys ranking. But yesterday OpenAI overtook them again with gpt-4o-2024-11-20, which we fortunately didn't report on (thanks to DeepSeek R1), because it is now suspected of being a worse (but faster) model (we don't know if this is true but it would be a very serious accusation indeed for OpenAI to effectively brand a "mini" model as a mainline model and hope we don't notice), and meanwhile, today Gemini Exp 1121 is out -again- retaking the top lmsys spot from OpenAI.

It's getting so absurd that this joke playing on OpenAI-vs-Gemini-release-coincidences is somewhat plausible:

The complete suspension of all model release decorum is always justifiable under innocent "we just wanted to get these out into the hands of devs ASAP" type good intentions, but we are now in a situation where all three frontier labs (reminder that Anthropic, despite their snark, has also been playing the date-update-with-no-versioning game) have SOTA model variants uniquely only identified by their dates rather than their versions, in order to keep up on Lmsys.

Are we just not doing versioning anymore? Hopefully we are, because we're still talking about o2 and gpt5 and claude4 and gemini2, but this liminal lull as the 100k clusters ramp up is a rather local minima nobody is truly happy with.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Theme 1. DeepSeek and Global AI Advancements

- DeepSeek's R1 Performance: DeepSeek R1 is compared to OpenAI o1-preview with "thoughts" streamed directly without MCTS used during inference. @saranormous highlights the model's potency, suggesting chip controls are ineffective against burgeoning competition from China, underlined by @bindureddy who praises the open-source nature of R1.

- Market Impact and Predictions: Deepseek-r1 gains traction as a competitive alternative to existing leaders like OpenAI, further emphasized by discussion of the AI race between China and the US.

Theme 2. Model Releases and Tech Developments

- Google's Gemini-Exp-1121: This model is being lauded for its improvements in vision, coding, and creative writing. @Lmarena_ai discusses its ascendance in the Chatbot Arena rankings alongside GPT-4o, showcasing rapid gains in performance.

- Enhanced Features: New coding proficiency, stronger reasoning, and improved visual understanding have made Gemini-Exp-1121 a formidable force, per @_akhaliq.

- Mistral's Expansion: MistralAI announces a new office in Palo Alto, indicating growth and open positions in various fields. This expansion reflects a strategic push to scale operations and talent pool, as noted by @sophiamyang.

- Claude Pro Google Docs Integration: Anthropic enhances Claude AI with Google Docs integration, aiming to streamline document management across organization levels.

Theme 3. AI Frameworks and Dataset Releases

- SmolTalk Dataset Unveiling: SmolTalk, a 1M sample dataset under Apache 2.0, boosts SmolLM v2's performance with new synthetic datasets. This initiative promises to enhance various model outputs, like summarization and rewriting.

- Dataset Integration and Performance: The dataset couples with public sources like OpenHermes2.5 and outperforms competitors trained on similar model scales, positioning it as a high-impact resource in language model training.

Theme 4. Innovative AI Applications and Tools

- LangGraph Agents and LangChain's Voice Capabilities: A video tutorial illustrates the transformation of LangGraph agents into voice-enabled assistants using OpenAI's Whisper for input and ElevenLabs for speech output.

- OpenRecovery's Use of LangGraph: Highlighted by LangChain, the application in addiction recovery demonstrates its practical adaptiveness and scalability.

Theme 5. Benchmarks and Industry Analysis

- AI Performance and Industry Insights: Menlo Ventures releases a report on Generative AI's evolution, emphasizing top use cases and integration strategies, noting Anthropic's growing share in the market.

- Model Fine-tuning and Evaluation: A shift from fine-tuning to more advanced RAG and agentic AI techniques is reported, underscoring the value of LLM engineers in optimizing AI applications.

Theme 6. Memes/Humor

- Misadventures with AI and OpenAI: @aidan_mclau humorously contemplates the challenges of fitting new language model behaviors into neatly defined categories, reflecting the often unpredictable nature of ongoing AI developments.

- Finance Humor and Predictions: @nearcyan injects humor into financial discussions by likening the experience of "ghosting" at FAANG companies with engineering's evolving professional landscapes.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. M4 Max 128GB: Running 72B Models at 11 t/s with MLX

- M4 Max 128GB running Qwen 72B Q4 MLX at 11tokens/second. (Score: 476, Comments: 181): The Apple M4 Max with 128GB of memory successfully runs the Qwen 72B Q4 MLX model at a speed of 11 tokens per second. This performance metric demonstrates the capability of Apple silicon to handle large language models efficiently.

- Users discussed power consumption and thermal performance, noting the system draws up to 190W during inference while running at high temperatures. The M4 Max achieves this performance while using significantly less power than comparable setups with multiple NVIDIA 3090s or A6000s.

- The M4 Max's memory bandwidth of 546 GB/s and performance of 11 tokens/second represents a significant improvement over the M1 Max (at 409.6 GB/s and 6.17 tokens/second). Users successfully tested various models including Qwen 72B, Mistral 128B, and smaller coding models with 32k context windows.

- Discussion compared costs between building a desktop setup (~$4000 for GPUs alone) versus the $4700 M4 Max laptop, with many highlighting the portability advantage and complete solution aspect of the Apple system for running local LLMs, particularly during travel or in locations with power constraints.

- Mac Users: New Mistral Large MLX Quants for Apple Silicon (MLX) (Score: 91, Comments: 23): A developer created 2-bit and 4-bit quantized versions of Mistral Large optimized for Apple Silicon using MLX-LM, with the q2 version achieving 7.4 tokens/second on an M4 Max while using 42.3GB RAM. The models are available on HuggingFace and can run in LMStudio or other MLX-compatible systems, promising faster performance than GGUF models on M-series chips.

- Users inquired about performance comparisons, with tests showing MLX models running approximately 20% faster than GGUF versions on Apple Silicon, confirmed independently by multiple users.

- Questions focused on practical usage, including how to run models through LMStudio, where users can manually download from HuggingFace and place files in the LMStudio cache folder for recognition.

- Users discussed hardware compatibility, particularly regarding the M4 Pro 64GB and its ability to run Mistral Large variants, with interest in comparing performance against Llama 3.1 70B Q4.

Theme 2. DeepSeek R1-Lite Preview Shows Strong Reasoning Capabilities

- Here the R1-Lite-Preview from DeepSeek AI showed its power... WTF!! This is amazing!! (Score: 146, Comments: 19): DeepSeek's R1-Lite-Preview model demonstrates advanced capabilities, though no specific examples or details were provided in the post body. The post title expresses enthusiasm about the model's performance but lacks substantive information about its actual capabilities or benchmarks.

- Base32 decoding capabilities vary significantly among models, with GPT-4 showing success while other models struggle. The discussion highlights that most open models perform poorly with ciphers, though they handle base64 well due to its prevalence in training data.

- MLX knowledge gaps were noted in DeepSeek's R1-Lite-Preview, suggesting limited parameter capacity for comprehensive domain knowledge. This limitation reflects the model's likely smaller size compared to other contemporary models.

- Discussion of tokenization constraints explains model performance on encoding/decoding tasks, with current models using token-based rather than character-based processing. Users compare this limitation to humans trying to count invisible atoms - a system limitation rather than intelligence measure.

- deepseek R1 lite is impressive , so impressive it makes qwen 2.5 coder look dumb , here is why i am saying this, i tested R1 lite on recent codeforces contest problems (virtual participation) and it was very .... very good (Score: 135, Comments: 44): DeepSeek R1-Lite demonstrates superior performance compared to Qwen 2.5 specifically on Codeforces competitive programming contest problems through virtual participation testing. The post author emphasizes R1-Lite's exceptional performance but provides no specific metrics or detailed comparisons.

- DeepSeek R1-Lite shows mixed performance across different tasks - successful with scrambled letters and number encryption but fails consistently with Playfair Cipher. Users note it excels at small-scope problems like competitive programming tasks but may struggle with real-world coding scenarios.

- Comparative testing between R1-Lite and Qwen 2.5 shows Qwen performing better at practical tasks, with users reporting success in Unity C# scripting and implementing a raycast suspension system. Both models can create Tetris, with Qwen completing it in one attempt versus R1's two attempts.

- Users highlight that success in competitive programming doesn't necessarily translate to real-world coding ability. Testing on platforms like atcoder.jp and Codeforces with unique, recent problems is suggested for better model evaluation.

Theme 3. Gemini-exp-1121 Tops LMSYS with Enhanced Coding & Vision

- Google Releases New Model That Tops LMSYS (Score: 139, Comments: 53): Google released Gemini-exp-1121, which achieved top performance on the LMSYS leaderboard for coding and vision tasks. The model represents an improvement over previous Gemini iterations, though no specific performance metrics were provided in the announcement.

- LMSYS leaderboard rankings are heavily debated, with users arguing that Claude being ranked #7 indicates benchmark limitations. Multiple users report Claude outperforms competitors in real-world applications, particularly for coding and technical tasks.

- Gemini's new vision capabilities enable direct manga translation by processing full image context, offering advantages over traditional OCR + translation pipelines. This approach better handles context-dependent elements like character gender and specialized terminology.

- A competitive pattern emerged between Google and OpenAI, where each company repeatedly releases models to top the leaderboard. The release of Gemini-exp-1121 appears to be a strategic move following OpenAI's recent model release.

Theme 4. Allen AI's Tulu 3: Open Source Instruct Models on Llama 3.1

- Tülu 3 -- a set of state-of-the-art instruct models with fully open data, eval code, and training algorithms (Score: 117, Comments: 23): Allen AI released Tülu 3, a collection of open-source instruction-following models, with complete access to training data, evaluation code, and training algorithms. The models aim to advance state-of-the-art performance while maintaining full transparency in their development process.

- Tülu 3 is a collection of Llama 3.1 fine-tunes rather than models built from scratch, with models available in 8B and 70B versions. Community members have already created GGUF quantized versions and 4-bit variants for improved accessibility.

- Performance benchmarks show the 8B model surpassing Qwen 2.5 7B Instruct while the 70B model outperforms Qwen 2.5 72B Instruct, GPT-4o Mini, and Claude 3.5 Haiku. The release includes comprehensive training data, reward models, and hyperparameters.

- Allen AI has announced that their completely open-source OLMo model series will receive updates this month. A detailed discussion of Tülu 3's training process is available in a newly released podcast.

Theme 5. NVIDIA KVPress: Open Source KV Cache Compression Research

- New NVIDIA repo for KV compression research (Score: 48, Comments: 7): NVIDIA released an open-source library called kvpress to address KV cache compression challenges in large language models, where models like llama 3.1-70B require 330GB of memory for 1M tokens in float16 precision. The library, built on 🤗 Transformers, introduces a new "expected attention" method and provides tools for researchers to develop and benchmark compression techniques, with the code available at kvpress.

- KV cache quantization is not currently supported by kvpress, though according to the FAQ it could potentially be combined with pruning strategies to achieve up to 4x compression when moving from float16 to int4.

- That's the only meaningful discussion point from the comments provided - the other comments and replies don't add substantive information to summarize.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Flux.1 Tools Suite Expands SD Capabilities

- Huge FLUX news just dropped. This is just big. Inpainting and outpainting better than paid Adobe Photoshop with FLUX DEV. By FLUX team published Canny and Depth ControlNet a likes and Image Variation and Concept transfer like style transfer or 0-shot face transfer. (Score: 739, Comments: 194): Black Forest Labs released their Flux.1 Tools control suite featuring inpainting and outpainting capabilities that compete with Adobe Photoshop, alongside ControlNet-style features for Canny and Depth controls. The release includes image variation and concept transfer tools that enable style transfer and zero-shot face transfer functionality.

- ComfyUI offers day-one support for Flux Tools with detailed implementation examples, requiring 27GB VRAM for full models, though LoRA versions are available on Huggingface.

- Community feedback indicates strong outpainting capabilities comparable to Midjourney, with users particularly praising the Redux IP adapter's performance and strength. The tools are publicly available for the FLUX DEV model with implementation details at Black Forest Labs.

- Users criticized the clickbait-style announcement title and requested more straightforward technical communication, while also noting that an FP8 version is available on Civitai for those with lower VRAM requirements.

- Day 1 ComfyUI Support for FLUX Tools (Score: 149, Comments: 26): ComfyUI added immediate support for FLUX Tools on release day, though specific details about the integration were not provided in the post.

- ComfyUI users report successful integration with Flux Tools, with SwarmUI providing native support for all model variants as documented on GitHub.

- Users identified issues with Redux being overpowering and not working with FP8_scaled models, though adjusting ConditioningSetTimestepRange and ConditioningSetAreaStrength parameters showed improvement. A proper compositing workflow using ImageCompositeMasked or Inpaint Crop/Stitch nodes was recommended to prevent VAE degradation.

- The implementation supports Redux Adapter, Fill Model, and ControlNet Models & LoRAs (specifically Depth and Canny), with a demonstration workflow available on CivitAI.

- Flux Redux with no text prompt (Score: 43, Comments: 30): Redux adapter testing focused on image variation capabilities without text prompts, though no specific details or results were provided in the post body.

- FLUX.1 Redux adapter focuses on reproducing images with variations while maintaining style and scene without recreating faces. Users report faster and more precise results, particularly with inpainting capabilities for changing clothes and backgrounds.

- The ComfyUI implementation requires placing the sigclip vision model in the models/clip_vision folder. Updates and workflows can be found on the ComfyUI examples page.

- Flux Tools integration with ComfyUI provides features like ControlNet, variations, and in/outpainting as detailed in the Black Forest Labs documentation. The implementation guide is available on the ComfyUI blog.

Theme 2. NVIDIA/MIT Release SANA: Efficient Sub-1B Parameter Diffusion Model

- Diffusion code for SANA has just released (Score: 103, Comments: 52): SANA diffusion model's training and inference code has been released on GitHub by NVlabs. The model weights are expected to be available on HuggingFace under "Efficient-Large-Model/Sana_1600M_1024px" but are not currently accessible.

- SANA model's key feature is its ability to output 4096x4096 images directly, though some users note that models like UltraPixel and Cascade can also achieve this. The model comes in sizes of 0.6B and 1.6B parameters, significantly smaller than SDXL (2B) and Flux Dev (12B).

- The model is released by NVIDIA, MIT, and Tsinghua University researchers with a CC BY-NC-SA 4.0 License. Users note this is more restrictive than PixArt-Sigma's OpenRail++ license, but praise the rare instance of a major company releasing model weights.

- Technical discussion focuses on the model's speed advantage and potential for fine-tuning, with the 0.6B version being considered for specialized use cases. The model is available on HuggingFace with a size of 6.4GB.

- Testing the CogVideoX1.5-5B i2v model (Score: 177, Comments: 51): CogVideoX1.5-5B, an image-to-video model, was discussed for community testing and evaluation. Insufficient context was provided in the post body for additional details about testing procedures or results.

- The model's workflow is available on Civitai, with recommended resolution being above 720p. The v1.5 version is still in testing phase and currently only supports 1344x768 according to Kijai documentation.

- Using a 4090 GPU, generation takes approximately 3 minutes for 1024x640 resolution and 5 minutes for 1216x832. A 3090 with 24GB VRAM can run without the 'enable_sequential_cpu_offload' feature.

- Technical limitations include poor performance of the GGUF version with occasional crashes, incompatibility with anime-style images, and potential Out of Memory (OOM) issues on Windows when attempting 81 frames at 1024x640 resolution.

Theme 3. ChatGPT 4o Nov Update: Better Writing, Lower Test Scores

- gpt-4o-2024-11-20 scores lower on MMLU, GPQA, MATH and SimpleQA than gpt-4o-2024-08-06 (Score: 77, Comments: 17): GPT-4o's November 2024 update shows performance decreases across multiple benchmarks compared to its August version, including MMLU, GPQA, MATH, and SimpleQA. The lack of additional context prevents analysis of specific score differences or potential reasons for the decline.

- Performance drops in the latest GPT-4o are significant, with GPQA declining by 13.37% and MMLU by 3.38% according to lifearchitect.ai. The model now scores lower than Claude 3.5 Sonnet, Llama 3.1 405B, Grok 2, and even Grok 2 mini on certain benchmarks.

- Multiple users suggest OpenAI is optimizing for creative writing and user appeal rather than factual accuracy, potentially explaining the decline in benchmark performance. The tradeoff has resulted in "mind blowing" improvements in creative tasks while sacrificing objective correctness.

- Users express desire for specialized model naming (like "gpt-4o-creative-writing" or "gpt-4o-coding") and suggest these changes are cost-optimization driven. Similar specialization trends are noted with Anthropic's Sonnet models showing task-specific improvements and declines.

- OpenAI's new update turns it into the greatest lyricist of all time (ChatGPT + Suno) (Score: 57, Comments: 27): OpenAI released an update that, when combined with Suno, enhances its creative writing capabilities specifically for lyrics. No additional context or specific improvements were provided in the post body.

- Users compare the AI's rap style to various artists including Eminem, Notorious B.I.G., Talib Kweli, and Blackalicious, with some suggesting it surpasses 98% of human rap. The original source was shared via Twitter/X.

- The technical improvement appears focused on the LLM's rhyming capabilities while maintaining coherent narrative structure. Several users note the AI's ability to maintain consistent patterns while delivering meaningful content.

- Multiple comments express concern about AI's rapid advancement, with one user noting humans are being outperformed not just in math and chess but now in creative pursuits like rapping. The sentiment suggests significant apprehension about AI capabilities.

Theme 4. Claude Free Users Limited to Haiku as Demand Strains Capacity

- Free accounts are now (permanently?) routed to 3.5 Haiku (Score: 52, Comments: 40): Claude free accounts now default to the Haiku model, discovered by user u/Xxyz260 through testing with a specific prompt about the October 7, 2023 attack. The change appears to be unannounced, with users reporting intermittent access to Sonnet 3.5 over an 18-hour period before reverting to Haiku, suggesting possible load balancing tests by Anthropic.

- Users confirmed through testing that free accounts are receiving Haiku 3.5, not Haiku 3, with evidence shown in test results demonstrating that model knowledge comes from system prompts rather than the models themselves.

- A key concern emerged that Pro users are not receiving access to the newest Haiku 3.5 model after exhausting their Sonnet limits, while free users are getting the updated version by default.

- Discussion around ChatGPT becoming more attractive compared to Claude, particularly for coding tasks, with users expressing frustration about Anthropic's handling of the service changes and lack of transparency.

- Are they gonna do something about Claude's overloaded server? (Score: 27, Comments: 46): A user reports being unable to access Claude Sonnet 3.5 due to server availability issues for free accounts, while finding Claude Haiku unreliable for following prompts. The user expresses that the $20 Claude Pro subscription is cost-prohibitive for their situation as a college student, despite using Claude extensively for research, creative writing, and content creation.

- Free usage of Claude Sonnet is heavily impacted by server load, particularly during US working hours, with users reporting up to 14 hours of unavailability. European users note better access during their daytime hours.

- Even paid users experience overloading issues, suggesting Anthropic's server capacity constraints are significant. The situation is unlikely to improve for free users unless the company expands capacity or training costs decrease.

- There's confusion about the Haiku model version (3.0 vs 3.5), with users sharing comparison screenshots and noting inconsistencies between mobile app and web UI displays, suggesting possible A/B testing or UI bugs.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. New AI Models Surge Ahead with Enhanced Capabilities

- Tülu 3 Launches with Superior Performance Over Llama 3.1: Nathan Lambert announced the release of Tülu 3, an open frontier model that outperforms Llama 3.1 across multiple tasks by incorporating a novel Reinforcement Learning with Verifiable Rewards approach. This advancement ensures higher accuracy and reliability in real-world applications.

- Gemini Experimental 1121 Tops Chatbot Arena Benchmarks: Google DeepMind’s Gemini-Exp-1121 tied for the top spot in the Chatbot Arena, surpassing GPT-4o-1120. Its significant improvements in coding and reasoning capabilities highlight rapid progress in AI model performance.

- Qwen 2.5 Achieves GPT-4o-Level Performance in Code Editing: Open source models like Qwen 2.5 32B demonstrate competitive performance on Aider's code editing benchmarks, matching GPT-4o. Users emphasize the critical role of model quantization, noting significant performance variations based on quantization levels.

Theme 2. Advanced Fine-Tuning Techniques Propel Model Efficiency

- Unsloth AI Introduces Vision Support, Doubling Fine-Tuning Speed: Unsloth has launched vision support for models like LLaMA, Pixtral, and Qwen, enhancing developer capabilities by improving fine-tuning speed by 2x and reducing memory usage by 70%. This positions Unsloth ahead of benchmarks such as Flash Attention 2 (FA2) and Hugging Face (HF).

- Contextual Position Encoding (CoPE) Enhances Model Expressiveness: Contextual Position Encoding (CoPE) adapts position encoding based on token context rather than fixed counts, leading to more expressive models. This method improves handling of selective tasks like Flip-Flop, which traditional position encoding struggles with.

- AnchorAttention Reduces Training Time by Over 50% for Long-Context Models: A new paper introduces AnchorAttention, a plug-and-play solution that enhances long-context performance while cutting training time by more than 50%. Compatible with both FlashAttention and FlexAttention, it is suitable for applications like video understanding.

Theme 3. Hardware Solutions and Performance Optimizations Drive AI Efficiency

- Cloud-Based GPU Renting Enhances Model Speed for $25-50/month: Transitioning to a cloud server for model hosting costs $25-50/month and significantly boosts model speed compared to local hardware. Users find cloud-hosted GPUs more cost-effective and performant, avoiding the limitations of on-premises setups.

- YOLO Excels in Real-Time Video Object Detection: YOLO remains a preferred choice for video object detection, supported by the YOLO-VIDEO resource. Ongoing strategies aim to optimize YOLO's performance in real-time processing scenarios.

- MI300X GPUs Experience Critical Hang Issues During Long Runs: Members reported intermittent GPU hangs on MI300X GPUs during extended 12-19 hour runs with axolotl, primarily after the 6-hour mark. These stability concerns are being tracked on GitHub Issue #4021, including detailed metrics like loss and learning rate.

Theme 4. APIs and Integrations Enable Custom Deployments and Enhancements

- Hugging Face Endpoints Support Custom Handler Files: Hugging Face Endpoints now allow deploying custom AI models using a handler.py file, facilitating tailored pre- and post-processing. Implementing the EndpointHandler class ensures flexible and efficient model deployment tailored to specific needs.

- Model Context Protocol (MCP) Enhances Local Interactions: Anthropic's Claude Desktop now supports the Model Context Protocol (MCP), enabling enhanced local interactions with models via Python and TypeScript SDKs. While remote connection capabilities are pending, initial support includes various SDKs, raising interest in expanded functionalities.

- OpenRouter API Documentation Clarified for Seamless Integration: Users expressed confusion regarding context window functionalities in the OpenRouter API documentation. Enhancements are recommended to improve clarity, assisting seamless integration with tools like LangChain and optimizing provider selection for high-context prompts.

Theme 5. Comprehensive Model Evaluations and Benchmark Comparisons Illuminate AI Progress

- Perplexity Pro Outperforms ChatGPT in Accuracy for Specific Tasks: Users compared Perplexity with ChatGPT, noting that Perplexity is perceived as more accurate and offers advantages in specific functionalities. One participant highlighted that certain features of Perplexity were developed before their popularity surge, underscoring its robust capabilities.

- SageAttention Boosts Attention Mechanisms Efficiency by 8-Bit Quantization: The SageAttention method introduces an efficient 8-bit quantization approach for attention mechanisms, enhancing operations per second while maintaining accuracy. This improvement addresses the high computational complexity traditionally associated with larger sequences.

- DeepSeek-R1-Lite-Preview Showcases Superior Reasoning on Coding Benchmarks: DeepSeek launched the DeepSeek-R1-Lite-Preview, demonstrating impressive reasoning capabilities on coding benchmarks. Users like Zhihong Shao praised its performance in both coding and mathematical challenges, highlighting its practical applications.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Vision Support in Unsloth Launches: Unsloth has officially launched vision support, enabling fine-tuning of models like LLaMA, Pixtral, and Qwen, which significantly enhances developer capabilities.

- This feature improves fine-tuning speed by 2x and reduces memory usage by 70%, positioning Unsloth ahead of benchmarks such as Flash Attention 2 (FA2) and Hugging Face (HF).

- Enhancements in Fine-tuning Qwen and LLaMA: Users are exploring the feasibility of fine-tuning both base and instructed Qwen and LLaMA models, with discussions centered around creating and merging LoRAs.

- Unsloth's vision support facilitates merging by converting 4-bit LoRAs back into 16-bit, streamlining the fine-tuning process.

- Llama 3.2 Vision Unveiled: Llama 3.2 Vision models are now supported by Unsloth, achieving 2x faster training speeds and 70% less memory usage while enabling 4-8x longer context lengths.

- The release includes Google Colab notebooks for tasks like Radiography and Maths OCR to LaTeX, accessible via Colab links.

- AnchorAttention Improves Long-Context Training: A new paper introduces AnchorAttention, a method that enhances long-context performance and reduces training time by over 50%.

- This solution is compatible with both FlashAttention and FlexAttention, making it suitable for applications like video understanding.

- Strategies for Training Checkpoint Selection: Discussions around selecting the appropriate training checkpoint revealed varied approaches, with some members opting for extensive checkpoint usage and others advocating for strategic selection based on specific metrics.

- Participants emphasized the importance of performance benchmarks and shared experiences to optimize checkpoint choices in the training workflow.

Interconnects (Nathan Lambert) Discord

- Tülu 3 launch innovations: Nathan Lambert announced the release of Tülu 3, an open frontier model that outperforms Llama 3.1 on multiple tasks, incorporating a novel Reinforcement Learning with Verifiable Rewards approach.

- The new model rewards algorithms exclusively for accurate generations, enhancing its performance and reliability in real-world applications.

- Nvidia's AI Wall concerns: An article from The Economist reports Nvidia’s CEO downplaying fears that AI has 'hit a wall', despite widespread skepticism in the community.

- This stance has intensified discussions about the current trajectory of AI advancements and the pressing need for continued innovation.

- Gemini's Chatbot Arena performance: Google DeepMind’s Gemini-Exp-1121 tied for the top spot in the Chatbot Arena, surpassing GPT-4o-1120 in recent benchmarks.

- Gemini-Exp-1121 exhibits significant improvements in coding and reasoning capabilities, highlighting rapid progress in AI model performance.

- Reinforcement Learning with Verifiable Rewards: Tülu 3 incorporates a new technique called Reinforcement Learning with Verifiable Rewards, which trains models on constrained math problems and rewards correct outputs, as detailed in Nathan Lambert's tweet.

- This approach aims to ensure higher accuracy in model generations by strictly incentivizing correct responses during training.

- Model Context Protocol by Anthropic: Anthropic's Claude Desktop now supports the Model Context Protocol (MCP), enabling enhanced local interactions with models via Python and TypeScript SDKs.

- While initial support includes various SDKs, remote connection capabilities are pending future updates, raising interest in expanded functionalities.

HuggingFace Discord

- SageAttention Enhances Attention Mechanisms: The SageAttention method introduces an efficient quantization approach for attention mechanisms, boosting operations per second by optimizing computational resources.

- This technique maintains accuracy while addressing the high complexity associated with larger sequences, making it a valuable improvement over traditional methods.

- Deploying AI Models with Custom Handler Files: Hugging Face Endpoints now support deploying custom AI models using a handler.py file, allowing for tailored pre- and post-processing.

- Implementing the EndpointHandler class ensures flexible and efficient model deployment, catering to specific deployment needs.

- Automated AI Research Assistant Developed: A new Python program transforms local LLMs into automated web researchers, delivering detailed summaries and sources based on user queries.

- The assistant systematically breaks down queries into subtopics, enhancing information gathering and analysis efficiency from various online sources.

- YOLO Excels in Video Object Detection: YOLO continues to be a preferred choice for video object detection, supported by a YOLO-VIDEO resource that facilitates effective implementation.

- Discussions highlight ongoing strategies to optimize YOLO's performance in video streams, addressing challenges related to real-time processing.

- MOUSE-I Streamlines Web Service Deployment: MOUSE-I enables the conversion of simple prompts into globally deployed web services within 60 seconds using AI automation.

- This tool is ideal for startups, developers, and educators seeking rapid deployment solutions without extensive manual configurations.

OpenAI Discord

- Perplexity Outperforms ChatGPT in Accuracy: Users compared Perplexity with ChatGPT, highlighting that Perplexity is seen as more accurate and offers advantages in specific functionalities.

- One participant noted that certain features of Perplexity were in development prior to their popularity surge.

- GPT-4 Drives Product Categorization Efficiency: A member shared their experience categorizing products using a prompt with GPT-4, specifying categories ranging from groceries to clothing with great results.

- They noted that while the categorization is effective, token usage is high due to the lengthy prompt structure.

- GPT-4o Enhances Image-Based Categorization: A member described using GPT-4o to categorize products based on title and image, achieving excellent results with a comprehensive prompt structure.

- However, they pointed out that the extensive token usage poses a scalability challenge for their setup.

- Streamlining with Prompt Optimization: Discussions centered around minimizing token usage while maintaining prompt effectiveness in categorization tasks.

- Suggestions included exploring methods like prompt caching to streamline the process and reduce redundancy.

- Prompt Caching Cuts Down Token Consumption: Members suggested implementing prompt caching techniques to reduce repetitive input tokens in their categorization workflows.

- They recommended consulting API-related resources for further assistance in optimizing token usage.

LM Studio Discord

- Hermes 3 exceeds expectations: A member favored Hermes 3 for its superior writing skills and prompt adherence, though noted phrase repetition at higher contexts (16K+).

- This preference underscores Hermes 3’s advancements, but highlights limitations in handling longer contexts efficiently.

- Cloud-Based GPU Renting: Transitioning to a cloud server for model hosting costs $25-50/month and enhances model speed compared to local hardware.

- Users find cloud-hosted GPUs more cost-effective and performant, avoiding the limitations of on-premises setups.

- LLM GPU Comparisons: Members compared AMD and NVIDIA GPUs, with recent driver updates impacting AMD's ROCM support.

- Consensus leans towards NVIDIA for better software compatibility and support in AI applications.

- Mixed GPU setups hinder performance: Configurations with 1x 4090 + 2x A6000 GPUs underperform others due to shared resource constraints, reducing token generation rates.

- Users highlighted that the slowest GPU in a setup, such as the 4090, can limit overall processing speed.

- Feasibility of a $2k local LLM server: Setting up a local LLM server for 2-10 users on a $2,000 budget poses challenges with single GPU concurrency.

- Developers recommend cloud solutions to mitigate performance bottlenecks associated with budget and older hardware.

aider (Paul Gauthier) Discord

- Qwen 2.5 Rivals GPT-4o in Performance: Open source models like Qwen 2.5 32B demonstrate competitive performance on Aider's code editing benchmarks, matching GPT-4o, while the least effective versions align with GPT-3.5 Turbo.

- Users emphasized the significant impact of model quantization, noting that varying quantization levels can lead to notable performance differences.

- Aider v0.64.0 Introduces New Features: The latest Aider v0.64.0 release includes the new

/editorcommand for prompt writing and full support for gpt-4o-2024-11-20.- This update enhances shell command clarity, allowing users to see confirmations and opt into analytics seamlessly.

- Gemini Model Enhances AI Capabilities: The Gemini Experimental Model offers improved coding, reasoning, and vision capabilities as of November 21, 2024.

- Users are leveraging Gemini's advanced functionalities to achieve more sophisticated AI interactions and improved coding efficiency.

- Model Quantization Impact Discussed: Model quantization significantly affects AI performance, particularly in code editing, as highlighted in Aider's quantization analysis.

- The community discussed optimizing quantization levels to balance performance and resource utilization effectively.

- DeepSeek-R1-Lite-Preview Boosts Reasoning: DeepSeek launched the DeepSeek-R1-Lite-Preview, showcasing impressive reasoning capabilities on coding benchmarks, as detailed in their latest release.

- Users like Zhihong Shao praised its performance in both coding and mathematical challenges, highlighting its practical applications.

OpenRouter (Alex Atallah) Discord

- OpenRouter Launches Five New Models: OpenRouter has introduced GPT-4o with improved prose capabilities, along with Mistral Large (link), Pixtral Large (link), Grok Vision Beta (link), and Gemini Experimental 1114 (link).

- These models enhance functionalities across various benchmarks, offering advanced features for AI engineers to explore.

- Mistral Medium Deprecated, Alternatives Recommended: The Mistral Medium model has been deprecated, resulting in access errors due to priority not enabled.

- Users are advised to switch to Mistral-Large, Mistral-Small, or Mistral-Tiny to continue utilizing the service without interruptions.

- Gemini Experimental 1121 Released with Upgrades: Gemini Experimental 1121 model has been launched, featuring enhancements in coding, reasoning, and vision capabilities.

- Despite quota restrictions shared with the LearnLM model, the community is eager to assess its performance and potential applications.

- OpenRouter API Documentation Clarified: Users have expressed confusion regarding context window functionalities in the OpenRouter API documentation.

- Enhancing documentation clarity is recommended to assist seamless integration with tools like LangChain.

- Request for Custom Provider Key for Claude 3.5: A member has requested a custom provider key for Claude 3.5 Sonnet due to exhausting usage on the main Claude app.

- This request aims to provide an alternative solution to manage usage limits and improve user experience.

Stability.ai (Stable Diffusion) Discord

- Flux's VRAM Vexations: Members discussed the resource requirements for using Flux effectively, noting that it requires substantial VRAM and can be slow to generate images. Black Forest Labs released FLUX.1 Tools, enhancing control and steerability of their base model.

- One member highlighted that using Loras can enhance Flux's output for NSFW content, although Flux isn't optimally trained for that purpose.

- Optimizing SDXL Performance: For SDXL, applying best practices such as

--xformersand--no-half-vaecan improve performance on systems with 12GB VRAM. Members noted that Pony, a derivative of SDXL, requires special tokens and has compatibility issues with XL Loras.- These configurations aid in enhancing SDXL efficiency, while Pony's limitations underscore challenges in model compatibility.

- Enhancing Image Prompts with SDXL Lightning: A user inquired about using image prompts in SDXL Lightning via Python, specifically for inserting a photo into a specific environment. This showcases the community's interest in combining image prompts with varied backgrounds to boost generation capabilities.

- The discussion indicates a trend towards leveraging Python integrations to augment SDXL Lightning's flexibility in image generation tasks.

- Mitigating Long Generation Times: Frustrations over random long generation times while using various models led to discussions about potential underlying causes. Members speculated that memory management issues, such as loading resources into VRAM, might contribute to these slowdowns.

- Addressing these delays is pivotal for improving user experience, with suggestions pointing towards optimizing VRAM usage to enhance generation speeds.

- Securing AI Model Utilization: Concerns were raised about receiving suspicious requests for personal information, like wallet addresses, leading members to suspect scammers within the community. Users were encouraged to report such incidents to maintain a secure environment.

- Emphasizing security, the community seeks to protect its members by proactively addressing potential threats related to AI model misuse.

Eleuther Discord

- Contextual Position Encoding (CoPE) Boosts Model Adaptability: A proposal for Contextual Position Encoding (CoPE) suggests adapting position encoding based on token context rather than fixed counts, leading to more expressive models. This approach targets improved handling of selective tasks like Flip-Flop that traditional methods struggle with.

- Members discussed the potential of CoPE to enhance the adaptability of position encoding, potentially resulting in better performance on complex NLP tasks requiring nuanced token relationship understanding.

- Forgetting Transformer Surpasses Traditional Architectures in Long-Context Tasks: Forgetting Transformer, a variant incorporating a forget gate, demonstrates improved performance on long-context tasks compared to standard architectures. Notably, this model eliminates the need for position embeddings while maintaining effectiveness over extended training contexts.

- The introduction of the Forgetting Transformer indicates a promising direction for enhancing LLM performance by managing long-term dependencies more efficiently.

- Sparse Upcycling Enhances Model Quality with Inference Trade-off: A recent Databricks paper evaluates the trade-offs between sparse upcycling and continued pretraining for model enhancement, finding that sparse upcycling results in higher model quality. However, this improvement comes with a 40% increase in inference time, highlighting deployment challenges.

- The findings underscore the difficulty in balancing model performance with practical deployment constraints, emphasizing the need for strategic optimization methods in model development.

- Scaling Laws Predict Model Performance at Minimal Training Costs: A recent paper introduces an observational approach using ~100 publicly available models to develop scaling laws without direct training, enabling prediction of language model performance based on scale. This method highlights variations in training efficiency, proposing that performance is dependent on a low-dimensional capability space.

- The study demonstrates that scaling law models are costly but significantly less so than training full target models, with Meta reportedly spending only 0.1% to 1% of the target model's budget for such predictions.

- lm-eval Enhances Benchmarking for Pruned Models: A user inquired if the current version of lm-eval supports zero-shot benchmarking of pruned models, specifically using WANDA, and encountered issues with outdated library versions. Discussions suggested reviewing the documentation for existing limitations.

- To resolve integration issues with the Groq API, setting the API key in the

OPENAI_API_KEYenvironment variable was recommended, successfully addressing unrecognized API key argument problems.

- To resolve integration issues with the Groq API, setting the API key in the

Perplexity AI Discord

- Perplexity Pro Unveils Advanced Features: Members discussed various features of Perplexity Pro, emphasizing the inclusion of more advanced models available for Pro users, differentiating it from ChatGPT.

- The discussion included insights on search and tool integration that enhances user experience.

- Pokémon Data Fuels New AI Model: A YouTube video explored how Pokémon data is being utilized to develop a novel AI model, providing insights into technological advancements in gaming.

- This may change the way data is leveraged in AI applications.

- NVIDIA's Omniverse Blueprint Transforms CAD/CAE: A member shared insights on NVIDIA's Omniverse Blueprint, showcasing its transformative potential for CAD and CAE in design and simulation.

- Many are excited about how it integrates advanced technologies into traditional workflows.

- Bring Your Own API Key Adoption Discussed: A member inquired about the permissibility of bringing your own API key to build an alternative platform with Perplexity, outlining secure data management practices.

- This approach involves user-supplied keys being encrypted and stored in cookies, raising questions about compliance with OpenAI standards.

- Enhancing Session Management in Frontend Apps: In response to a request for simplification, a user explained session management in web applications by comparing it to cookies storing session IDs.

- The discussion emphasized how users' authentication relies on validating sessions without storing sensitive data directly.

Latent Space Discord

- Truffles Device Gains Attention: The Truffles device, described as a 'white cloudy semi-translucent thing,' enables self-hosting of LLMs. Learn more at Truffles.

- A member humorously dubbed it the 'glowing breast implant,' highlighting its unique appearance.

- Vercel Acquires Grep for Enhanced Code Search: Vercel announced the acquisition of Grep to bolster developer tools for searching code across over 500,000 public repositories.

- Dan Fox, Grep's founder, will join Vercel's AI team to advance this capability.

- Tülu 3 Surpasses Llama 3 on Tasks: Tülu 3, developed over two years, outperforms Llama 3.1 Instruct on specific tasks with new SFT data and optimization techniques.

- The project lead expressed excitement about their advancements in RLHF.

- Black Forest Labs Launches Flux Tools: Black Forest Labs unveiled Flux Tools, featuring inpainting and outpainting for image manipulation. Users can run it on Replicate.

- The suite aims to add steerability to their text-to-image model.

- Google Releases Gemini API Experimental Models: New experimental models from Gemini were released, enhancing coding capabilities.

- Details are available in the Gemini API documentation.

Nous Research AI Discord

- DeepSeek R1-Lite Boosts MATH Performance: Rumors suggest that DeepSeek R1-Lite is a 16B MOE model with 2.4B active parameters, significantly enhancing MATH scores from 17.1 to 91.6 according to a tweet.

- The skepticism emerged as members questioned the WeChat announcement, doubting the feasibility of such a dramatic performance leap.

- Llama-Mesh Paper Gains Attention: A member recommended reviewing the llama-mesh paper, praising its insights as 'pretty good' within the group.

- This suggestion came amid a broader dialogue on advancing AI architectures and collaborative research.

- Multi-Agent Frameworks Face Output Diversity Limits: Concerns were raised that employing de-tokenized output in multi-agent frameworks like 'AI entrepreneurs' might result in the loss of hidden information due to discarded KV caches.

- This potential information loss could be contributing to the limited output diversity observed in such systems.

- Soft Prompts Lag Behind Fine Tuning: Soft prompts are often overshadowed by techniques like fine tuning and LoRA, which are perceived as more effective for open-source applications.

- Participants highlighted that soft prompts suffer from limited generalizability and involve trade-offs in performance and optimization.

- CoPilot Arena Releases Initial Standings: The inaugural results of CoPilot Arena were unveiled on LMarena's blog, showing a tightly contested field among participants.

- However, the analysis exclusively considered an older version of Sonnet, sparking discussions on the impact of using outdated models in competitions.

GPU MODE Discord

- Triton Kernel Debugging and GEMM Optimizations: Users tackled Triton interpreter accuracy issues and discussed performance enhancements through block size adjustments and swizzling techniques, referencing tools like triton.language.swizzle2d.

- There was surprise at Triton GEMM's conflict-free performance on ROCm, sparking conversations about optimizing GEMM operations for improved computational efficiency.

- cuBLAS Matrix Multiplication in Row-Major Order: Challenges with

cublasSgemmwere highlighted, especially regarding row-major vs column-major order operations, as detailed in a relevant Stack Overflow post.- Users debated the implications of using

CUBLAS_OP_NversusCUBLAS_OP_Tfor non-square matrix multiplications, noting compatibility issues with existing codebases.

- Users debated the implications of using

- ROCm Compilation and FP16 GEMM on MI250 GPU: Developers reported prolonged compilation times when using ROCm's

makecommands, with efforts to tweak the-jflag showing minimal improvements.- Confusion arose over input shape transformations for FP16 GEMM (v3) on the MI250 GPU, leading to requests for clarification on shared memory and input shapes.

- Advancements in Post-Training AI Techniques: A new survey, Tulu 3, was released, covering post-training methods such as Human Preferences in RL and Continual Learning.

- Research on Constitutional AI and Recursive Summarization frameworks was discussed, emphasizing models that utilize human feedback for enhanced task performance.

Notebook LM Discord Discord

- NotebookLM's GitHub Traversal Limitations: Users reported that NotebookLM struggles to traverse GitHub repositories by inputting the repo homepage, as it lacks website traversal capabilities. One member suggested converting the site into Markdown or PDF for improved processing.

- The inability to process websites directly complicates using NotebookLM for repository analysis, leading to workarounds like manual content conversion.

- Enhancements in Audio Prompt Generation: A user proposed enhancing NotebookLM by supplying specific prompts to generate impactful audio outputs, improving explanations and topic comprehension.

- This strategy aims to facilitate a deeper understanding of designated topics through clearer audio content, as discussed by the community.

- Integrating Multiple LLMs for Specialized Tasks: Community members shared workflows utilizing multiple Large Language Models (LLMs) tailored to specific needs, with compliments towards NotebookLM for its generation capabilities.

- This approach underscores the effectiveness of combining various AI tools to support conversational-based projects, as detailed in user blog posts.

- ElevenLabs' Dominance in Text-to-Speech AI: Discussions highlighted ElevenLabs as the leading text-to-speech AI, outperforming competitors like RLS and Tortoise. A user reminisced about early experiences with the startup before its funding rounds.

- The impact of ElevenLabs on voice synthesis and faceless video creation was emphasized as a transformative tool in the industry.

- NotebookLM's Stability and Safety Flags Issues: Users noted an increase in safety flags and instability within NotebookLM, resulting in restricted functionalities and task limitations.

- Community members suggested direct message (DM) examples for debugging, attributing transient issues to ongoing application improvements.

LlamaIndex Discord

- AI Agent Architecture with LlamaIndex and Redis: Join the upcoming webinar on December 12 to learn how to architect agentic systems for breaking down complex tasks using LlamaIndex and Redis.

- Participants will discover best practices for reducing costs, optimizing latency, and gaining insights on semantic caching mechanisms.

- Knowledge Graph Construction using Memgraph and LlamaIndex: Learn how to set up Memgraph and integrate it with LlamaIndex to build a knowledge graph from unstructured text data.

- The session will explore natural language querying of the constructed graph and methods to visualize connections effectively.

- PDF Table Extraction with LlamaParse: A member recommended using LlamaParse for extracting table data from PDF files, highlighting its effectiveness for optimal RAG.

- An informative GitHub link was shared detailing its parsing capabilities.

- Create-Llama Frontend Configuration: A user inquired about the best channel for assistance with Create-Llama, specifically regarding the absence of a Next.js frontend option in newer versions when selecting the Express framework.

- Another participant confirmed that queries can be posted directly in the channel to receive team support.

- Deprecation of Llama-Agents in Favor of Llama-Deploy: A member noted dependency issues while upgrading to Llama-index 0.11.20 and indicated that llama-agents has been deprecated in favor of llama_deploy.

- They provided a link to the Llama Deploy GitHub page for further context.

Cohere Discord

- 30 Days of Python Challenge: A member shared their participation in the 30 Days of Python challenge, which emphasizes step-by-step learning, utilizing the GitHub repository for resources and inspiration.

- They are actively engaging with the repository's content to enhance their Python skills during this structured 30-day program.

- Capstone Project API: One member expressed a preference for using Go in their capstone project to develop an API, highlighting the exploration of different programming languages in practical applications.

- Their choice reflects the community's interest in leveraging Go's concurrency features for building robust APIs.

- Cohere GitHub Repository: A member highlighted the Cohere GitHub repository (GitHub link) as an excellent starting point for contributors, showcasing various projects.

- They encouraged exploring available tools within the repository and sharing feedback or new ideas across different projects.

- Cohere Toolkit for RAG Applications: The Cohere Toolkit (GitHub link) was mentioned as an advanced UI designed specifically for RAG applications, facilitating quick build and deployment.

- This toolkit includes a collection of prebuilt components aimed at enhancing user productivity.

- Multimodal Embeddings Launch: Exciting updates were shared about the improvement in multimodal embeddings, with their launch scheduled for early next year on Bedrock and partner platforms.

- A team member will flag the rate limit issue for further discussion to address scalability concerns.

Modular (Mojo 🔥) Discord

- Mojo's Async Features Under Development: Members reported that Mojo's async functionalities are currently under development, with no available async functions yet.

- The compiler presently translates synchronous code into asynchronous code, resulting in synchronous execution during async calls.

- Mojo Community Channel Launch: A dedicated Mojo community channel has been launched to facilitate member interactions, accessible at mojo-community.

- The channel serves as a central hub for ongoing discussions related to Mojo development and usage.

- Moonshine ASR Model Performance on Mojo: The Moonshine ASR model was benchmarked using moonshine.mojo and moonshine.py, resulting in an execution time of 82ms for 10s of speech, compared to 46ms on the ONNX runtime.

- This demonstrates a 1.8x slowdown in Mojo and Python versions versus the optimized ONNX runtime.

- Mojo Script Optimization Challenges: Developers faced crashes with Model.execute when passing

TensorMapin the Mojo scripts, necessitating manual argument listing due to unsupported unpacking.- These issues highlight the need for script optimization and improved conventions in Mojo code development.

- CPU Utilization in Mojo Models: Users observed inconsistent CPU utilization while running models in Mojo, with full CPU capacity and hyperthreading being ignored.

- This suggests a need for further optimization to maximize resource utilization during model execution.

Torchtune Discord

- Updated Contributor Guidelines for Torchtune: The team announced new guidelines to assist Torchtune maintainers and contributors in understanding desired features.

- These guidelines clarify when to use forks versus example repositories for demonstrations, streamlining the contribution process.

- Extender Packages Proposed for Torchtune: A member suggested introducing extender packages like torchtune[simpo] and torchtune[rlhf] to simplify package inclusion.

- This proposal aims to reduce complexity and effectively manage resource concerns without excessive checks.

- Binary Search Strategy for max_global_bsz: Recommendation to implement a last-success binary search method for max_global_bsz, defaulting to a power of 2 smaller than the dataset.

- The strategy will incorporate max_iterations as parameters to enhance efficiency.

- Feedback on UV Usability: A member inquired about others' experiences with UV, seeking opinions on its usability.

- Another member partially validated its utility, noting it appears appealing and modern.

- Optional Packages Addressing TorchAO: Discussion on whether the optional packages feature can resolve the need for users to manually download TorchAO.

- Responses indicate that while it may offer some solutions, additional considerations need to be addressed.

DSPy Discord

- Prompt Signature Modification: A member inquired about modifying the prompt signature format for debugging purposes to avoid parseable JSON schema notes, particularly by building an adapter.

- The discussion explored methods like building custom adapters to achieve prompt signature customization in DSPy.

- Adapter Configuration in DSPy: A user suggested building an adapter and configuring it using

dspy.configure(adapter=YourAdapter())for prompt modifications, pointing towards existing adapters in thedspy/adapters/directory for further clarification.- Leveraging existing adapters within DSPy can aid in effective prompt signature customization.

- Optimization of Phrases for Specific Cases: Questions about tuning phrases for specific types like bool, int, and JSON were clarified to be based on a maintained set of model signatures.

- These phrases are not highly dependent on individual language models overall, indicating a generalized formulation approach.

LLM Agents (Berkeley MOOC) Discord

- Intel AMA Session Scheduled for November 21: Join the Hackathon AMA with Intel on November 21 at 3 PM PT to engage directly with Intel specialists.

- Don't forget to watch live here and set your reminders for the Ask Intel Anything opportunity.

- Quiz 10 Release Status Update: A member inquired about the release status of Quiz 10, which has not been released on the website yet.

- Another member confirmed that email notifications will be sent once Quiz 10 becomes available, likely within a day or two.

- Hackathon Channel Mix-up Incident: A member expressed gratitude for the Quiz 10 update but humorously acknowledged asking about the hackathon in the wrong channel.

- This exchange reflects common channel mix-ups within the community, adding a lighthearted moment to the conversation.

tinygrad (George Hotz) Discord

- Need for int64 Indexing Explored: A user questioned the necessity of int64 indexing in contexts that do not involve large tensors, prompting others to share their thoughts.

- Another user linked to the ops_hip.py file for further context regarding this discussion.

- Differences in ops_hip.py Files Dissected: A member pointed out distinctions between two ops_hip.py files in the tinygrad repository, suggesting the former may not be maintained due to incorrect imports.

- They noted that the latter is only referenced in the context of an external benchmarking script, which also contains erroneous imports.

- Maintenance Status of ops_hip.py Files: In response to the maintenance query, another user confirmed that the extra ops_hip.py is not maintained while the tinygrad version should function if HIP=1 is set.

- This indicates that while some parts of the code may not be actively managed, others can still be configured to work correctly.

MLOps @Chipro Discord

- Event link confusion arises: A member raised concerns about not finding the event link on Luma, seeking clarification on its status.

- Chiphuyen apologized, explaining that the event was not rescheduled due to illness.

- Wishing well to a sick member: Another member thanked Chiphuyen for the update and wished them a speedy recovery.

- This reflects the community's supportive spirit amidst challenges in event management.

OpenInterpreter Discord

- Urgent AI Expertise Requested: User michel.0816 urgently requested an AI expert, indicating a pressing need for assistance.

- Another member suggested posting the issue in designated channels for better visibility.

- Carter Grant's Job Search: Carter Grant, a full-stack developer with 6 years of experience in React, Node.js, and AI/ML, announced his search for job opportunities.

- He expressed eagerness to contribute to meaningful projects.

OpenAccess AI Collective (axolotl) Discord

- MI300X GPUs Stall After Six Hours: A member reported experiencing intermittent GPU hangs during longer 8 x runs lasting 12-19 hours on a standard ablation set with axolotl, primarily occurring after the 6-hour mark.

- These stability concerns have been documented and are being tracked on GitHub Issue #4021, which includes detailed metrics such as loss and learning rate to provide technical context.

- Prompting Correctly? Engineers Debate Necessity: In the community-showcase channel, a member questioned the necessity of proper prompting, sharing a YouTube video to support the discussion.

- This query has initiated a dialogue among AI engineers regarding the current relevance and effectiveness of prompt engineering techniques.

LAION Discord

- Training the Autoencoder: A member emphasized the importance of training the autoencoder for achieving model efficiency, focusing on techniques and implementation strategies to enhance performance.

- The conversation delved into methods for improving autoencoder performance, including various training techniques.

- Sophistication of Autoencoder Architectures: Members discussed the sophistication of autoencoder architectures in current models, exploring how advanced structures contribute to model capabilities.

- The effectiveness of different algorithms and their impact on data representation within autoencoders were key points of discussion.

Mozilla AI Discord

- Refact.AI Live Demo: The Refact.AI team is conducting a live demo showcasing their autonomous agent and innovative tooling.

- Join the live event here to engage in the conversation.

- Mozilla Launches Web Applets: Mozilla has initiated the open-source project Web Applets to develop AI-native applications for the web.

- This project promotes open standards and accessibility, fostering collaboration among developers, as detailed here.

- Mozilla's Public AI Advocacy: Mozilla has advanced 14 local AI projects in the past year to advocate for Public AI and build necessary developer tools.

- This initiative aims to foster open-source AI technology with a collaborative emphasis on community engagement.

Gorilla LLM (Berkeley Function Calling) Discord

- Query on Llama 3.2 Prompt Format: A member inquired about the lack of usage of a specific prompt for Llama 3.2, referencing the prompt format documentation.

- The question highlighted a need for clarity on function definitions in the system prompt, emphasizing their importance for effective use.

- Interest in Prompt Applicability: The conversation showed a broader interest in understanding the applicability of prompts within Llama 3.2.

- This reflects ongoing discussions about best practices for maximizing model performance through effective prompting.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!