[AINews] LMSys advances Llama 3 eval analysis

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

LLM evals will soon vary across categories and prompt complexity.

AI News for 5/8/2024-5/9/2024. We checked 7 subreddits and 373 Twitters and 28 Discords (419 channels, and 3747 messages) for you. Estimated reading time saved (at 200wpm): 450 minutes.

LMSys is widely known for ELO-based (technically Bradley-Terry) battles, and more controversially opaquely prerelease-testing models for OpenAI, Databricks and Mistral, but only recently started to deepen its analysis by splitting out the scores to 8 subcategories of queries:

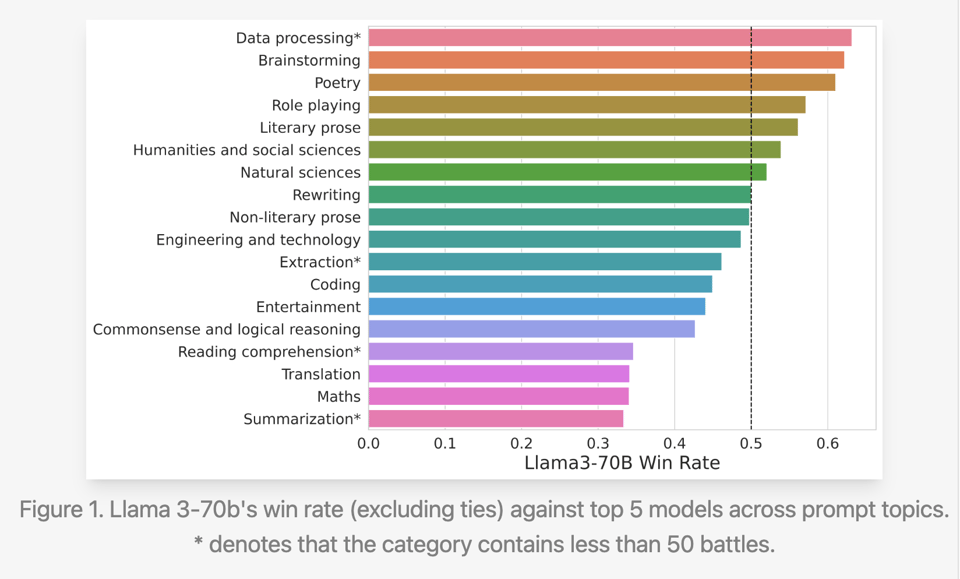

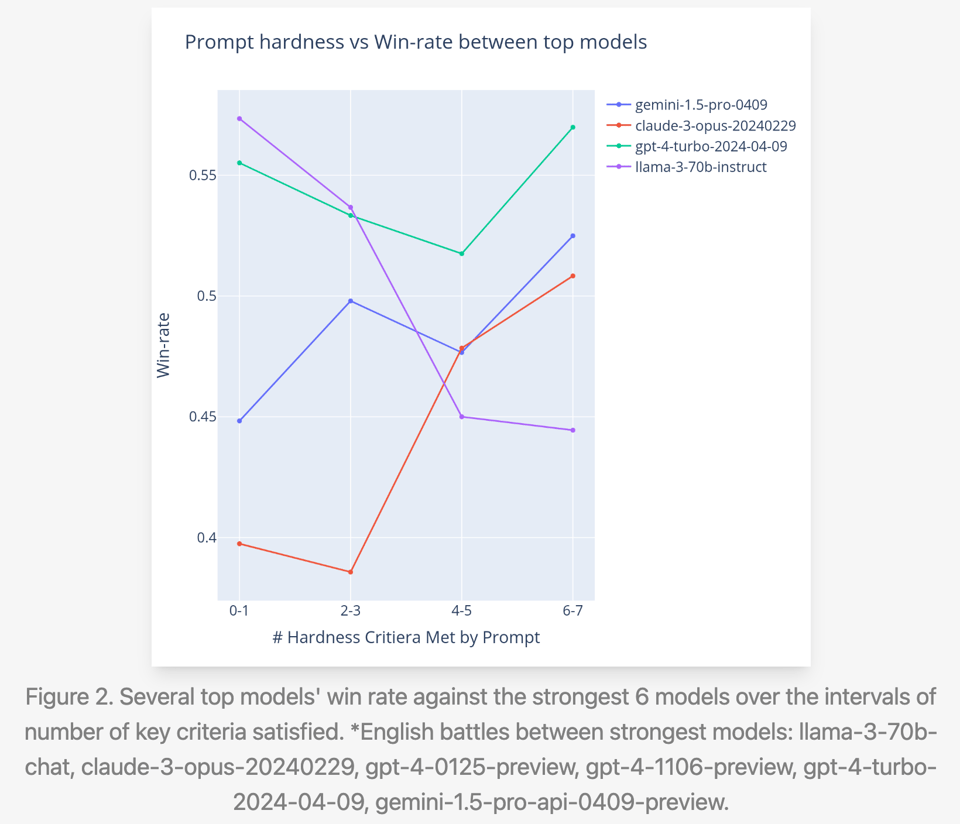

These categories are about to explode in dimensionality. LMsys published a deep analysis of Llama-3's performance on LMsys, that broke out its surprisingly uneven win rate across important categories (like summarization, translation, and coding)

and for 7 levels of prompt complexity:

As GPT4T-preview-tier models commoditize, and as LMsys increasingly becomes the trusted eval that can be gamed in subtle ways, it is important to understand the major ways in which models can over- or under- perform. It's wonderful that LMsys is proactively doing so, but also curious that the notebooks for this analysis weren't released per their usual M.O.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- Nous Research AI Discord

- OpenAI Discord

- HuggingFace Discord

- Modular (Mojo 🔥) Discord

- LM Studio Discord

- Eleuther Discord

- CUDA MODE Discord

- OpenInterpreter Discord

- LAION Discord

- LlamaIndex Discord

- OpenRouter (Alex Atallah) Discord

- LangChain AI Discord

- Latent Space Discord

- OpenAccess AI Collective (axolotl) Discord

- Interconnects (Nathan Lambert) Discord

- tinygrad (George Hotz) Discord

- Cohere Discord

- Mozilla AI Discord

- LLM Perf Enthusiasts AI Discord

- Alignment Lab AI Discord

- Skunkworks AI Discord

- AI Stack Devs (Yoko Li) Discord

- Datasette - LLM (@SimonW) Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AlphaFold 3 and Molecular Structure Prediction

- AlphaFold 3 released by DeepMind: @demishassabis announced AlphaFold 3 which can predict structures and interactions of proteins, DNA and RNA with state-of-the-art accuracy. @GoogleDeepMind explained how it was built with @IsomorphicLabs and its implications for biology.

- Capabilities of AlphaFold 3: @GoogleDeepMind shared that AlphaFold 3 uses a next-generation architecture and training to compute entire molecular complexes holistically. It can model chemical changes that control cell functioning and disease when disrupted.

- Applications and impact: @GoogleDeepMind noted over 1.8 million people have used AlphaFold to accelerate work in biorenewable materials and genetics. @DrJimFan called it mind-boggling that the same backbone used for pixels can imagine proteins when data is converted to float sequences.

OpenAI Model Spec and Shaping Model Behavior

- OpenAI introduces Model Spec: @sama announced the Model Spec, a public specification for how OpenAI models should behave, to give clarity on what is a bug vs decision. @gdb shared the spec aims to give people a sense of how model behavior is tuned.

- Importance of the Model Spec: @miramurati emphasized the Model Spec is crucial for people to understand and participate in the debate of shaping model behavior as models improve in decision making. @karinanguyen_ noted the spec must consider a wide range of nuanced questions and opinions.

- Feedback and future plans: @sama thanked the OpenAI team, especially @joannejang and @johnschulman2, and welcomed feedback to adapt the spec over time. OpenAI is working on techniques for models to directly learn from the Model Spec.

Llama 3 Performance on LMSYS Leaderboard

- Llama 3 reaches top of leaderboard: @lmsysorg shared analysis showing Llama 3 has climbed to the top spots on the leaderboard, with the 70B version nearly matching Claude-3 Sonnet. Deduplication and outliers do not significantly impact its win rate.

- Strengths and weaknesses: @lmsysorg found the gap between Llama 3 and top models becomes larger on more challenging prompts based on criteria like complexity and domain knowledge. @lmsysorg also noted Llama 3's outputs are friendlier, more conversational, and use more exclamations compared to other models.

- Reaching parity with top models: @lmsysorg concluded Llama 3 has reached performance on par with top proprietary models for overall use cases, and expects to push new categories to the leaderboard based on this analysis. @togethercompute agreed Llama-3-70B has achieved quality similar to top open models.

Limitations of Text-Only Training for AI

- Hands-on experience needed: @ylecun argued a cliché about rookies needing hands-on experience beyond book knowledge shows why LLMs trained only on text cannot reach human intelligence.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI and Technology Developments

- OpenAI and Microsoft developing $100bn "Stargate" AI supercomputer: In /r/technology, OpenAI and Microsoft are reportedly working on a massive nuclear-powered supercomputer project to support next-gen AI breakthroughs, hinting at the immense computational resources needed.

- DeepMind announces AlphaFold 3 for predicting life's key molecules: DeepMind unveiled AlphaFold 3, an AI model that can predict the structures and interactions of proteins, DNA and RNA with state-of-the-art accuracy, opening the door to advances in drug discovery and synthetic biology.

- IBM releases open-source Granite Code LLMs outperforming Llama 3: IBM has released Granite Code, a family of powerful open-source code-focused language models that beat the popular Llama 3 models in performance.

- Apple introduces M4 chip with 38 trillion ops/sec Neural Engine: Apple revealed its next-gen M4 chip featuring a Neural Engine capable of 38 trillion AI operations per second, the fastest in any PC chip.

Open-Source LLM Developments

- Plans to distill Llama 3 70B into efficient 4x8B/25B MoE model: The /r/LocalLLaMA community is planning to distill the Llama 3 70B model into a 4x8B/25B Mixture-of-Experts model optimized for VRAM/intelligence tradeoffs, aiming to fit an 8-bit quantized version in 22-23GB VRAM.

- Timeline of major open LLM releases in past 2 months: /r/LocalLLaMA compiled a timeline of the many major open LLM drops in just the past couple months alone, including releases from Cohere, xAI, DataBricks, ai21labs, Meta, Microsoft, Snowflake, Qwen, DeepSeek, and IBM.

- Consistency LLMs accelerate inference 3.5x as parallel decoders: Consistency LLMs are an approach to convert LLMs into parallel decoders, achieving 3.5x faster inference with comparable or better speedups vs alternatives like Medusa2/Eagle but no extra memory costs.

AI Ethics and Safety Concerns

- OpenAI exploring how to responsibly generate AI porn: OpenAI is grappling with the ethical challenges around AI-generated porn, considering relaxing NSFW filters for certain use cases like explicit song lyrics, political discourse, and romance novels.

- OpenAI introduces Model Spec to clarify intended model behaviors: To help distinguish intended model capabilities from unintended bugs, OpenAI is rolling out a Model Spec and seeking public feedback to evolve it over time.

- US Marines testing robot dogs with AI targeting rifles: In a troubling development reminiscent of dystopian sci-fi, the US Marines are evaluating robot dogs armed with AI-powered rifles that can automatically detect and track people, drones and vehicles.

Other Notable Developments

- Phi-3 WebGPU AI chatbot runs fully locally in-browser: A video demo showcases a Phi-3 based AI chatbot using WebGPU to run 100% locally in a web browser.

- IC-Light enables AI-powered image relighting: The open-source IC-Light tool uses AI to allow realistic relighting and illumination editing of any image.

- Udio adds AI-powered audio editing and inpainting: Udio introduced new features leveraging AI to enable editing vocals, correcting errors, and smoothing transitions in audio.

- Study suggests warp drives may be possible: A new scientific study tantalizingly hints that warp drives may be physically possible under certain conditions.

- Genetic engineers rewire cells for 82% lifespan increase: /r/ArtificialInteligence shared research where genetic engineers achieved an 82% increase in cell lifespans by rewiring them.

AI Memes and Humor

- The AI hype cycle continues: A humbling meme in /r/ProgrammerHumor reminds us that the breathless hype around AI breakthroughs shows no signs of abating.

AI Discord Recap

A summary of Summaries of Summaries

-

Large Language Model (LLM) Advancements and Benchmarking:

- Llama 3 from Meta has rapidly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus in over 50,000 matchups.

- New models like Granite-8B-Code-Instruct from IBM enhance instruction following for code tasks, while DeepSeek-V2 boasts 236B parameters.

- Skepticism surrounds certain benchmarks, with calls for credible sources like Meta to set realistic LLM assessment standards.

-

Optimizing LLM Inference and Training:

- ZeRO++ promises a 4x reduction in communication overhead for large model training on GPUs.

- The vAttention system dynamically manages KV-cache memory for efficient LLM inference without PagedAttention.

- QServe introduces W4A8KV4 quantization to boost cloud-based LLM serving performance on GPUs.

- Techniques like Consistency LLMs explore parallel token decoding for reduced inference latency.

-

Open-Source AI Frameworks and Community Efforts:

- Axolotl supports diverse dataset formats for instruction tuning and pre-training LLMs.

- LlamaIndex powers a new course on building agentic RAG systems with Andrew Ng.

- RefuelLLM-2 is open-sourced, claiming to be the best LLM for "unsexy data tasks".

- Modular teases Mojo's potential for Python integration and AI extensions like bfloat16.

-

Multimodal AI and Generative Modeling Innovations:

- Idefics2 8B Chatty focuses on elevated chat interactions, while CodeGemma 1.1 7B refines coding abilities.

- The Phi 3 model brings powerful AI chatbots to browsers via WebGPU.

- Combining Pixart Sigma + SDXL + PAG aims to achieve DALLE-3-level outputs, with potential for further refinement through fine-tuning.

- The open-source IC-Light project focuses on improving image relighting techniques.

5. Misc

- Stable Artisan Brings AI Media Creation to Discord: Stability AI launched Stable Artisan, a Discord bot integrating models like Stable Diffusion 3, Stable Video Diffusion, and Stable Image Core for media generation and editing directly within Discord. The bot sparked discussions about SD3's open-source status and the introduction of Artisan as a paid API service.

- Unsloth AI Community Abuzz with New Models and Training Tips: IBM's Granite-8B-Code-Instruct and RefuelAI's RefuelLLM-2 were introduced, sparking architecture discussions. Users shared challenges with Windows compatibility and skepticism over certain performance benchmarks, while also exchanging model training and fine-tuning tips.

- Nous Research AI's Cutting-Edge Papers and WorldSim Revival: Breakthrough papers on xLSTM and function vectors in LLMs were analyzed, alongside speculation about Llama 3 fine-tuning best practices. The relaunch of WorldSim with new features like WorldClient, Root, and MUD generated excitement, with users strategizing model merging techniques.

- Hugging Face's Coding Enhancements and Massive Models: Idefics2 8B Chatty and CodeGemma 1.1 7B debuted for chat and coding, while the 236B parameter DeepSeek-V2 model made waves. Discussions covered BERT fine-tuning, Whisper upsampling, Gemma token integration, and approaches to content extraction from PDFs.

- CUDA MODE's Triton Tutorials and Diffusion Optimization Deep Dive: A 9-part blog series and GitHub repo detailing diffusion model inference optimization made rounds, while the community rallied to create a Triton kernels index. LibTorch compile time improvements and ZeRO++ for efficient model training also drew attention.

- LangChain's Agentic RAG Course and Local LLM Breakthroughs: LangChain partnered with deeplearning.ai for a course on building agentic RAG systems, while also introducing local LLM support for models like Mistral and Gemma. Users troubleshooted TypeScript toolkit issues and debated multi-agent architectures.

- OpenAI's Model Spec Sets the Tone for AI Alignment: OpenAI released their first draft of the Model Spec to guide model behavior using RLHF techniques, part of their commitment to responsible AI development. Discussions also touched on GraphQL limitations compared to Markdown and the varying capabilities of GPT-4 across different platforms.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

Artisan's Creative Expansion: Stability AI has launched a new Discord bot called Stable Artisan, making tools like Stable Diffusion 3, Stable Video Diffusion, and Stable Image Core available for Discord users for both media creation and editing right within the app.

SD3's Open Source Drama: Community discussions surfaced frustration about Stable Diffusion 3 possibly not being open-source, with concerns about the move to a proprietary model and unclear release timelines stirring debates in the community.

Artisan API Draws Mixed Reactions: The introduction of Artisan, Stability AI's paid API service for utilizing Stable Diffusion 3, garnered both excitement and criticism, with some users questioning the feasibility of the service for those with limited budgets.

Guidance for Rookie Generative Enthusiasts: Newcomers to the Stable Diffusion ecosystem are exchanging tips on using ComfyUI and exploring the best base models for different creative intents, drawing from community repositories and prompt crafting techniques to refine their generative artistry.

Comparing AI Art Titans: Discussion threads highlight Midjourney's impact on the AI art tools market, speculating on its professional audience and potential influence on the monetization strategies for similar tools like Stability AI's offerings.

Perplexity AI Discord

Perplexity and SoundHound Tag Team for Voice AI: Perplexity AI has formed a collaboration with SoundHound, aiming to enhance voice assistants with advanced LLM capabilities, promising real-time answers over a range of IoT devices.

Claude 3 Opus Credit Chronicles and Service Snags: Users delved into the "600 credit" limitation concerns with Claude 3 Opus, contrasting experiences with Perplexity and direct usage from Anthropic. There were also discussions around Pro search limits transparency and technical issues like billing errors and system slowdowns.

Shareability and Searches Shine in Sharing: The community was prompted to set threads to 'Shareable' and engaged in sharing Perplexity AI search URLs on diverse subjects such as alpha fold, bipolar disorder, and multilingual queries, revealing the variety of users' interests.

Boots Without Resampling Becomes Conversation Piece: One member's question on conducting bootstrapping without physical resampling sparked a technical discourse, focusing on direct uses of original datasets in this statistical method.

Users Voice Subscription Page Scrutiny: Concerns arose over potential misinformation on the Pro subscription page, prompting a request for explicit clarifications from the Perplexity team concerning the Pro search limits.

Unsloth AI (Daniel Han) Discord

IBM's Newest Member to the Code Model Family: Granite-8B-Code-Instruct, boasting enhanced instruction following capabilities in logical reasoning and problem-solving was unveiled by IBM, fueling discussions around its unusual GPTBigCodeForCausalLM architecture.

Dolphin Acknowledges Unsloth in New Release: Unsloth AI was recognized in the Dolphin 2.9.1 Phi-3 Kensho's launch for its contribution during the model's initial phase.

Windows Woes for AI Enthusiasts: Engineers shared challenges when deploying AI models on Windows, suggesting workarounds like Windows Subsystem for Linux (WSL) and referenced a discussion for a solution outlined in an Unsloth GitHub issue.

AI Community Questions Model Benchmarks: Skepticism surfaced regarding certain performance benchmarks, with members calling for more credible sources, such as Meta, to establish realistic assessment standards for large language models.

Debugging Diary: Diverse Discourse on Model Training: There's active dialogue on overcoming various hurdles in model training and development, including fixing Llama3 training data losses, sorting VSCode installation errors, and fine-tuning models with help from community-shared notebooks like this one for inference-only use on Google Colab.

Nous Research AI Discord

LSTMs Throw Down the Gauntlet: An intriguing paper emphasizes the potential of LSTMs scaled to a billion parameters, challenging Transformer dominance with innovative exponential gating. The technique is detailed in this latest research.

AI's Predictive Crystal Ball: Forefront.ai lists anticipated breakthrough AI papers, intimating key trends and a novel adjustment technique reducing computational load without notable performance hits. The website showcases this strategic foresight into the AI research arena.

Lighter Models, Same Might: Notable discourse revealed a 4-bit quantized, 40% trimmed version of Llama 2 70B performs comparably to the full model, suggesting large-scale redundancy in deep learning models, as addressed in a Twitter post.

Fine-tuning Finessed: Conversations around fine-tuning techniques for LLaMA 3 and the Axolotl model have involved discussions on context length, pre-tokenization versus padding during training, and optimum use of Flash Attention 2.

WorldSim Waves the Banner of Innovation: WorldSim presents new capabilities with improved bug remediation, the WorldClient browsing experience, CLI environment Root, ancestor simulations, and RPG features. Mounting enthusiasm in the community shows through inquiries about the purchase of promotional Nous Research swag, found on their website.

Sustainable Strategizing for Mingling Models: Guild members are actively probing into streamlining the process of model merging and integration techniques, comparing Direct Preference Optimization in models like NeuralHermes 2.5 - Mistral 7B and exploring the tangible benefits of Llamafile with external weights.

Texture of Technical Dialogues: Many messages have shown an engaging tapestry of problem-solving, from addressing errors like 'int' object has no attribute 'hotkey' when uploading models, to fleshing out tactics for limiting hallucination in RAG and effective padding strategies.

OpenAI Discord

Model Spec Shaping AI Conversations: OpenAI introduced the Model Spec as a framework for crafting desired behaviors in AI models to enrich public discussions about them; a full read can be accessed at OpenAI's announcement.

Markdown Gets the Upper Hand over GraphQL: In AI discourse, the lack of GraphQL clientside rendering was contrasted with Markdown, although no significant concerns arose from this limitation.

AI Platforms and Hardware Excite and Confuse: While the OpenDevin platform was praised for its Docker sandbox and backend model flexibility, users found the comparative performance of AI across ChatGPT versions and the NVIDIA tech demo intriguing, yet the limitations on the GPT-4 ChatGPT app versus the API version caused community frustration.

Ethics and AI in Business Prompts Shared: A community member offered a detailed AI ethics in business prompt structuring, aiming to enhance model outputs concerning ethical considerations, and provided an output example exploring the impact of unethical practices, albeit without specific resource links.

Seeking Expertise and Visionary Ideals in AI: A member sought recommendations for prompt engineering courses, with subsequent exchange via direct message due to OpenAI's policies, while another pondered the concept of "Open" as a core epistemological principle, although the discussion did not develop further.

HuggingFace Discord

- Chat-Optimized and Coding-Friendly Models Hit the Scene: Idefics2 8B Chatty arrived with a focus on elevated chat interactions, while CodeGemma 1.1 7B emerged to refine coding in Python, Go, and C#. In the browser chatbot space, Phi 3 introduced WebGPU tech for enhanced chat experiences.

- Groundbreaking 236B Parameter Model and Training Milestones: The DeepSeek-V2 boasting 236 billion parameters has been introduced, marking a significant increase in model size, and updates in object detection guides aim to fine-tune performance with the addition of mAP metrics to the Trainer API.

- Technical Issues and Troubleshooting: Several members have encountered challenges related to BERT fine-tuning, Whisper model upsampling, integrating Gemma's unused tokens, and diffusion model errors, highlighting the diverse nature of problem-solving within the community.

- Audio and Engagement Tweaks in Voice Channels: A discourse around utilizing "stage" channels to control audio quality in voice groups uncovered a balance between quality control and participant urgency to voice questions, leading to an experiment with stage channel settings.

- Efforts Toward More Efficient Content Extraction: One user shared an endeavor to extract images and graphs from PDFs, alongside a desire for more efficient AI methodologies for handling such data, whilst a new model emerged to demarcate ads within images using Adlike.

Modular (Mojo 🔥) Discord

Mojo's Python Prospects and Performance Debates

- Mojo's Year-End Python Potential: The Modular community is anticipating that Mojo may become more user-friendly by year's end, with CPython integration already enabling Python code execution. However, the direct compilation of Python code might remain a goal for the following year.

- MLIR's Moment to Shine in Mojo: Enthusiasm is high over expanded contributions to MLIR, especially in the field of liveliness checking; members eagerly await potential open-sourcing of Modular's MLIR dialects to enhance MLIR utility.

Compiling Insights and Turing up the Heat on Twitter and Blogs

- Feature Frenzy on Modular's Twitter: Modular teased important updates, promising feature upgrades and revealed steady growth metrics on Twitter, arousing community curiosity about upcoming announcements.

- Chris Lattner Champions Mojo: On the Developer Voices podcast, Chris Lattner emphasized Mojo's prospects for Python and non-Python developers, focusing on expanded GPU performance and AI-related extensions.

Community Code Contributions and Compiler Conversations

- Toybox Repository Joins GitHub: The community-driven repo, "toybox," presents a DisjointSet and Kruskal's algorithm implementation, inviting collaborative enhancements through Pull Requests.

- String Strategies in Mojo Stir Debate: Performance concerns surfaced around Mojo's string concatenation; responses included proposed short string optimization and refined techniques using

KeysContainerandStringBuilderfor acceleration, showcased in GitHub discussions.

Tensor Tangles and Standard Library Updates in Mojo Nightly

- Navigating Mojo's Nightly Nuances: Updates in the nightly Mojo release included revisions to the Tensor API for clarity, while the community grappled with complications around

DTypePointerbehavior and eagerly reviewed the new compiler updates.

LM Studio Discord

- Directory Deep Dive for Model Storage: Engineers discussed ideal model directory structures for LM Studio, aligning with Hugging Face's naming conventions to facilitate model organization and discovery. For example, a Meta-Llama model should be placed in the path

\models\lmstudio-community\Meta-Llama-3-8B-Instruct-GGUF\.

- New 120B Model Merges Might for Coders and Poets: Maxime Labonne unveiled a 120B self-merged model, Meta-Llama-3-120B-Instruct-GGUF, promising enhanced performance for users to test and provide feedback on, while some members expressed struggles with current models' performance on poetry completion.

- AI Needs Mighty Hardware: The community extensively discussed hardware capabilities required for running large models like Llama 3 70B, joking about the need for theoretical 200GB VRAM for a 400B model and strategizing on how to optimize resources, such as offloading desktop tasks to an Intel HD 600 series GPU.

- RAG Architecture Might Be the Search Hero: A member recommended utilizing RAG architectures with chunked document handling to improve data searches, suggesting that reranking methods based on similarity measures could refine results, showcasing another stride in operational AI efficiency.

- Waiting Room for API Enhancements: Engineers are eagerly anticipating updates, including the possibility of programmatically interacting with existing chats through the LM Studio API, highlighting the platform's ongoing evolution towards more adaptable and user-friendly AI tools.

Eleuther Discord

- Release Etiquette Sparks Unofficial Debate: Engineers discussed the nuances of releasing unofficial implementations of algorithms, highlighting the ethical stance that such projects should be clearly labeled to distinguish them from the official versions, as demonstrated by the MeshGPT GitHub repo.

- Name Game in Science Publishing: The complexities of updating one's surname in academic databases post-marriage sparked a conversation, where the primary advice revolved around contacting the respective support for platforms and considering the preservation of a "nom-de-academia."

- Piling On Efficient Data Handling: Best practices for processing The Pile data for training AI models were exchanged, focusing on utilizing pre-processed datasets on resources like Hugging Face, and navigating the specific tokenizer application when handling

.binfiles.

- Scaling the Limits of State Tracking: A debate emerged over the scalability of state tracking in models, spurred by an article discussing shared limitations of state-space models and transformers, and speculative discussions surrounding xLSTM's capabilities.

- Skepticism Surrounds xLSTM: Skeptical tones were evident as members scrutinized the xLSTM paper for potentially using suboptimal hyperparameters for baselines, thereby questioning the claims made and looking forward to independent verifications or the release of official code.

- Function Vector Finds Its Function: Intriguing discussions touched upon function vectors (FV) for efficient in-context learning, based on insights drawn from studies suggesting that FVs can enable robust task performance with minimal context (research source).

- YOCO Yields Singular Cache Curiosity: The introduction of YOCO, a decoder-decoder architecture that simplifies KV caches, left some pondering its potential need for further optimization and the perks of singular cache rounds for improving memory economics.

- Multilingual LLMs Under Microscope: Interest in understanding how LLMs process multilingual inputs led engineers to reference research on language-specific neurons and the LLMs ability to compute next tokens, potentially involving internal translation to English (study example).

- Positional Encoding Gets Orthogonal Polish: PoPE's advent, bringing orthogonal polynomial-based positional encoding to possibly outdo sine-based APEs, ignited discussions that critiqued it for an over-theoretical approach while acknowledging its potential (paper).

- Tuned Lenses Inquiry: A lone query in the interpretability channel hinted at the specificity of tools for analyzing AI models, specifically asking if tuned lenses are available for every Pythia checkpoint, suggesting interest in model interpretability and fine-tuning nuances.

CUDA MODE Discord

- LibTorch Compile-Time Shenanigans: Leveraging ATen’s

at::native::randnand including only necessary headers like<ATen/ops/randn_native.h>cut down compile times from ~35 seconds to just 4.33 seconds.

- ZeRO-ing in on Efficiency: ZeRO++ claims a 4x reduction in training large models' communication overhead, enabling batch size 4 training on NVIDIA's A100 GPUs—and it even works across multiple A100s, although performance degrades slightly.

- Tuning Diffusion Models to the Max: A 9-part blog series has been made available, showcasing optimization strategies in U-Net diffusion models for the GPU, with practical examples given in the accompanying GitHub repository.

- vAttention Climbing Memory Management Mountain: A new system, vAttention, is proposed for dynamic KV-cache memory management in large language model inference, aiming to address the limitations of static allocation (paper abstract).

- Apple's M4 Flexes Compute Muscles: The M4 chip from Apple boasts an impressive 38 trillion operations per second, thanks to 3nm tech and a 10-core CPU, indicating the pace of progress in mobile compute power.

OpenInterpreter Discord

- GPT-4's Clever Composition: GPT-4 demonstrated its capacity to use YouTube for tailored music suggestions, leveraging specific user instructions; nonetheless, some local models, such as TheBloke/deepseek-coder-33B-instruct.GGUF and lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF, underperformed, showing issues like claiming no internet connection or giving up after failed attempts.

- mixtral-8x7b Outshines Rivals in Local Tests: Out of several local models tested, mixtral-8x7b-instruct-v0.1.Q5_0.gguf has excelled, especially on hardware with a custom-tuned 2080 ti GPU and 32GB DDR5 6000 memory, proving to be more effective than alternatives.

- MacBooks Mayhem: MacBook Pro systems were found inadequate for running even lightweight local model operations, pushing one member to cease using their MacBook for these tasks.

- Cross-Platform Provider Integration: LiteLLM's documentation was highlighted, showing support for various AI providers, including OpenAI models, Azure, Google's PaLM, and Anthropic, with explicit instructions available in the LiteLLM providers documentation.

- Windows Wobbles With 01: Some users reported that the 01 platform has limited functionality on Windows operating systems, with discussions centered around possible code modifications for Windows 10 and compatibility checks for Windows 11.

LAION Discord

- Dalle-3's Rival Emerges: Combining Pixart Sigma, SDXL, and PAG has led to discussion about achieving DALLE-3 level outputs, with current limitations around text and individual object rendering identified. Participants believe that fine-tuning could enhance image composition, emphasizing the need for skilled technical intervention.

- Chasing Better Model Performance: A breakthrough for enhancing model quality was shared, detailing that manageable ranges for microconditioning inputs facilitate smoother learning, with prospects of refining this approach attracting attention.

- Relighting the AI Scene: The IC-Light project on GitHub, targeted at improving image relighting, is gaining interest in the community, and can be accessed at IC-Light GitHub.

- Insights into Diffusion Models: Engaging debates around Noise Conditional Score Networks and diffusion models touched upon issues like noise scheduling and the convergence of distributions, while papers like 'K-diffusion' were discussed for their mathematical intricacies and conceptual differences.

- Efficiency in the Insurance Lane: Request for recommendations on open-source tools for automating data processing in commercial auto insurance was raised, illustrating a cross-domain application of AI to enhance risk assessment in the insurance sector.

- AI Research Amplified: An AI contest announcement with potential publishing opportunities and rewards in the IJCAI journal garnered attention, signaling an active pursuit of scholarly recognition within the AI community.

LlamaIndex Discord

- Deep Dive into Agentic RAG: deeplearning.ai has introduced a course led by Jerry Liu, CEO of LlamaIndex, which focuses on creating agentic RAG systems capable of complex reasoning and retrieval-based question answering. This course has been signaled to be of significance by AI pioneer Andrew Ng in a Twitter announcement.

- Local LLM Acceleration: LlamaIndex has revealed an integration for executing Large Language Models (LLMs) locally with greater efficiency, with support for models like Mistral and Gemma, offering compatibility with NVIDIA hardware as highlighted in their Twitter post.

- Troubleshooting LlamaIndex Integrations: A discussion around the correct usage of omatic embeddings and LlamaIndex vector store integrations offered solutions for embedding compatibility issues. It was underscored that the vector store handily manages embeddings, easing implementation headaches for engineers considering this route.

- Operational Scalability Focus: Engineers debated on the optimal solution for hosting local LLM models, pointing towards solutions such as AWS and auto-scaling on Kubernetes, with interest in achieving scalability for large-scale deployments.

- Coding Clinic: For a user struggling with _CBEventType.SUB_QUESTION not triggering in their codebase, peers provided targeted guidance and code snippets to pinpoint and rectify the implementation flaw.

OpenRouter (Alex Atallah) Discord

Boost Your Web Game with Languify.ai: The new browser extension, Languify.ai, is designed to enhance website text for better user engagement and increased sales, tapping into OpenRouter for model selection based on prompts. A professional tier is priced at €10.99 a month, offering a viable alternative to AnythingLLM for users seeking a streamlined tool, with details found at Languify.ai.

OpenRouter Mysteries Partially Solved: Ongoing discussions among users revealed a desire for more accessible information on OpenRouter, with key topics including API documentation, credit system understanding, and the free status of certain AI models lacking comprehensive answers.

Moderation Mods on Demand: Users interested in Llama 3-powered moderation services were pointed to Together.ai, as OpenRouter itself does not currently list such capabilities.

'min_p' Gets Thumbs Up: Providers such as Together, Lepton, Lynn, and Mancer were highlighted for their support of the min_p parameter in their models, although Together was noted to be having some issues, unlike Lepton.

Breaking Chains with Wizard 8x22B: Discussion surged around the potential for "jailbreaking" Wizard 8x22B to access less-restricted content, with community members sharing resources such as Refusal in LLMs is mediated by a single direction to understand the limitations and refusal mechanisms inherent in language models.

LangChain AI Discord

- TypeScript Toolkit Troubles: Engineers are cracking the case with the

JsonOutputFunctionsParserin TypeScript which is throwing Unterminated string in JSON errors; recommendations include ensuring proper JSON from theget_chunksfunction, correctly formattedcontentforchain.invoke(), and a thorough check ofgetChunksSchema.

- Channeling Chat Consistency: A discrepancy in LangChain AI's

/invokeendpoint was pinpointed when a dictionary input behaved differently than when running it in Python, starting with an empty dictionary—prompting the community to share their diagnostics.

- Parsing Multi-Agent Mechanics: Interest was expressed in projects akin to the STORM multi-agent article generator, with discussions orbiting around the effectiveness of component-based architecture against instantiating separate agents for distinct capabilities.

- Vector DB Vacillation: The conversation turned to costs and complexities when setting up the VertexAI Vector store with alternatives like Pinecone and Supabase being tossed into the ring as potentially less taxing on the wallet.

- Gianna Grabs the AI Spotlight: The debut of Gianna, a modularly built virtual assistant, sparked dialogue, boasting integration with CrewAI and Langchain and accessible on GitHub or via PyPI with a supporting tutorial video. Meanwhile, a new Medium article unveiled Langchain's LangGraph as a customer support game-changer, and Athena, an AI data platform, flaunted its full data workflow autonomy.

- CrewAI Connects to Crypto: An engaging tutorial video "Create a Custom Tool to connect crewAI to Binance Crypto Market" was shared, opening up new possibilities for financial analysis with crewAI CLI and the Binance.com Crypto Market.

Latent Space Discord

- Stanford Fuels AI Minds: Stanford has released its new 2023 course, "Deep Generative Models," with lectures available on YouTube for AI professionals looking to upskill.

- GPU Quest: AI engineers on the Discord are exchanging tips on acquiring A100/H100 GPUs, recommending sfcompute as a reliable source.

- Codemancers Clash Over AI Tools: There's a passionate debate regarding AI-assisted coding underway, reminiscing over the old days of Lisp and scrutinizing the strengths and weaknesses of current AI code aids.

- Gradient's Giant Context Leap: The new Llama-3 8B Instruct model from Gradient is making waves for its massive increase in context length to 4194k; interested parties can sign up here for custom agents.

- OpenAI's Security Moves Spur Talk: OpenAI's blog post on creating secure AI infrastructure has sparked discussions among members, with some interpreting the measures as a form of "protectionism."

OpenAccess AI Collective (axolotl) Discord

- Llama-3-Refueled Model Now Public: RefuelAI has released RefuelLLM-2, a language model touted for efficiently handling "unsexy data tasks," and the weights are accessible on Hugging Face. The model was instruction tuned on an array of 2750+ datasets for approximately one week, as highlighted in a Twitter announcement.

- Axolotl Dataset Confusion Cleared: Documentation on supported dataset formats for Axolotl includes JSONL and HuggingFace datasets, providing clarity on organizing data for various tasks like pre-training and instruction tuning.

- Axolotl Users Navigate GPU Troubles: One engineer is in search of a working phi3 mini config file for 4K/128K FFT on 8 A100 GPUs, while another reports a training issue on 8x H100 GPUs, reflecting ongoing conversations about optimization and troubleshooting within AI model training environments.

- W&B Environment Variables Integrated: There's a nod to W&B's documentation for guidance on using environment variables, suggesting attention to tracking and reproducibility of experiments.

- LoRA YAML Setups Create Community Chatter: Several intricacies of configuring LoRA (Low-Rank Adaptation) for AI models were discussed, including the proper YAML configuration to save parameters when adding new tokens. Errors encountered led to recommendations on updating

lora_modules_to_saveto include'embed_tokens'and'lm_head', with members sharing insights on code troubleshooting process.

Interconnects (Nathan Lambert) Discord

Pretty Pictures Without Purpose?: Diagram aesthetics gained appreciation for being "really pretty pictures," while lacking functional commentary. Discussions highlighted concerns over diagrams choosing parameter counts over FLOPs and using non-standard learning rates for transformer baselines without proper hyperparameter tuning.

Tech-Savvy Growth Tactics: Queries about training Reinforcement Models (RM) on TPUs and using Fully Sharded Data Parallel (FSDP) suggest a surge in exploring optimization and scaling strategies. Meanwhile, EasyLM emerged as a potential basis for RM training using Jax, exemplified by a GitHub script: EasyLM - llama_train_rm.py.

Leaderboard Logistics and Research Resonance: Debate ensued on whether 5k leaderboards adequately reflect AI model performance, with suggestions for expanding to 10k. Commendations flowed for Prometheus, positioning it above the fray of typical AI research, despite a backdrop of overlooked sequels and disputed leaderboard ratios.

SnailBot's Slow-Motion Debut: The community anticipates SnailBot's debut, expressing excitement yet impatience with tick tock banter and engaging in light-hearted interactions upon receipt of a response from the bot.

LLM Licensing Quandaries: Concerns arose in ChatbotArena related to licensing complexities for releasing text generated by large language models, hinting at a need for specialized permissions from providers.

Leading-edge Discussions: OpenAI released their Model Spec for AI alignment, emphasizing RLHF techniques and setting a standard for model behaviors in OpenAI API and ChatGPT. Additionally, Llama 3 charged ahead in ChatbotArena, surpassing GPT-4-Turbo and Claude 3 Opus in 50,000+ matchups, insights dissected in a blog post which can be explored here: Llama 3.

tinygrad (George Hotz) Discord

- BITCAST Bonanza in tinygrad: The Pull Request #3747 on tinygrad sparked debates, differentiating CAST from BITCAST operations and advocating for clarity in their implementation, with suggestions to streamline BITCAST to prevent argument clutter.

- Symbolic Confusion Cleared: Users exchanged thoughts on enhancing tinygrad with symbolic versions of

arange,DivNode, andModNodefunctions. While concerns about downstream impacts were mentioned, no consensus on the method was reached.

- Frustrations in Forwarding

arangeFunctionality: Efforts to implement a symbolicarangemet roadblocks, as evidenced by a user's trial shared through a GitHub pull request, with further ambitions poised towards a 'bitcast refactor'.

- Matrices Alchemy: A novel design for concatenating the output of matrix operations was brought into discussion, pondering the possibility of in-place writing to an existing matrix to reduce overhead. Meanwhile, a member sought assistance with Metal build process, specifically the elusive

libraryDataContents().

- Visualize This!: In a practical aid to grappling with the concepts of shape and stride in tinygrad, a user created a visualization tool to assist engineers in exploring different combinations visually.

Cohere Discord

- RAG on Cohere Limitations: Implementing RAG on Cohere.command challenges users due to a 4096 token limit; some propose using Elasticsearch for text size reduction, whilst others consider dividing the text into segments to manage information loss effectively.

- File Generation Inquiry: The community is exploring methods for Cohere Chat to produce DOCX or PDF arrangements, but mechanisms for file downloading remain unclear.

- Resolving Cohere CORS Headaches: Members addressed CORS issues encountered with the Cohere API, suggesting backend calls to avoid security mishaps and keeping API keys confidential.

- Understanding Cohere's Credit System: Queries about adding credits on Cohere led to the clarification that the platform doesn't offer pre-paid options; instead, users can control expenses through billing limits on their dashboard.

- Wordware Hiring Spree: Wordware is on the lookout for AI talent, including a founding engineer and DevRel positions, encouraging applicants to demonstrate their skills using Wordware's IDE and engage with the team at wordware.ai. Check out the roles.

Mozilla AI Discord

API Now, Code Less: Meta-Llama-3-8B-Instruct can be operated through an API endpoint at localhost, with the OpenAI-style interaction. Details and setup instructions are available on the project's GitHub page.

Switching Models Just Got Easier: Visual Studio Code users can rejoice with the introduction of a dropdown feature, simplifying the swapping between different models for those utilizing ollama.

Request for Efficiency in Llamafile Updates: A feature request was made for llamafile to enable updates to the binary scaffold without the hefty process of redownloading the entire file, seen as a potential enhancement for efficiency.

A Musing on Mozilla-Ocho: A quirky conversation surfaced about whether Mozilla-Ocho alludes to "ESPN 8 - The Ocho" from "Dodgeball," though it seemed more of a fun aside than a pressing issue.

For the Curious Readers: The only link cited in the discussion: GitHub - Mozilla-Ocho/llamafile.

LLM Perf Enthusiasts AI Discord

AI Aides Excel Wizards: Engineers are exploring how LLMs can be utilized for spreadsheet data manipulation, with specific emphasis on AI's ability to sift through and extract information.

Yawn.xyz's Ambitious AI Spreadsheet Demo: Despite ambitious attempts by Yawn.xyz to address spreadsheet extraction challenges in biology labs, the community feedback on their AI tool's demo indicates performance issues.

Seeking Smooth GPT-4-turbo Azure Deployments: An engineer encountered problems with GPT-4-turbo in the Sweden Azure region, sparking discussions on optimal Azure regions for deployment.

Alignment Lab AI Discord

- AlphaFold3 Enters the Open Source Arena: An implementation of AlphaFold3 in PyTorch was released, which aims at predicting biomolecular interactions with high accuracy, working with atomic coordinates. The code is available for the community review and contribution on GitHub.

- Agora's Link for AlphaFold3 Collaboration Fails to Connect: A call was made to join Agora for collective efforts on AlphaFold3, but the provided link was reported to be faulty, hindering collaborative prospects.

Skunkworks AI Discord

- AI Engineers, Check This Out: A brief post in the Skunkworks AI channel shared a YouTube video link without any context, which might be interest-grabbing for tech enthusiasts keen on multimedia content related to AI developments.

AI Stack Devs (Yoko Li) Discord

Quickscope Hits the Mark: Regression Games proudly presents Quickscope, a new AI-powered toolkit that automates testing for Unity games, featuring Gameplay Session recording and Validation tools for a streamlined, no-code setup.

Deep Dive Into Game Test Automation: The deep property scraping feature of Quickscope extracts detailed data from game object hierarchies, enabling thorough insights into game entities like positions and rotations without writing custom code.

A Testing Platform for QA Teams: Quickscope boasts a platform that supports advanced test automation strategies, such as smart replay systems, designed with QA teams in mind to facilitate quick and straightforward integration.

Interactive UI Meets Game Testing: The platform's intuitive UI makes defining tests more accessible for QA engineers and game developers, and is compatible with the Unity editor, builds, or can be woven into CI/CD pipelines.

Experiment with Quickscope: Engineers and developers are encouraged to try out Quickscope's suite of AI tools to experience firsthand the efficiency and simplicity it brings to game testing automation.

Datasette - LLM (@SimonW) Discord

- Command-Line Cheers: AI Engineers are voicing their appreciation for the

llmcommand-line interface tool; it's compared to a personal project assistant with the ability to handle "more unixy stuff".

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #announcements (1 messages):

- Stable Artisan Joins the Chat: The bot Stable Artisan is introduced, allowing Stable Diffusion Discord Server members to create images and videos using Stability AI’s models, including Stable Diffusion 3, Stable Video Diffusion, and Stable Image Core.

- A New Multimodal Bot Experience: Stable Artisan is a multimodal generative AI Discord bot designed to integrate multiple aspects of media generation directly within Discord, enhancing user engagement.

- Beyond Generation – Editing Tools Included: The bot extends its functionality with tools to edit content, offering features like Search and Replace, Remove Background, Creative Upscale, and Outpainting.

- Accessibility and Ease of Use for All: By leveraging the capabilities of the Developer Platform API, Stable Artisan makes state-of-the-art generative AI more accessible for Discord users.

- Dive into Generation Immediately: Discord users are encouraged to get started with Stable Artisan by visiting dedicated channels for different bot commands and features within Stable Diffusion's Discord.

Link mentioned: Stable Artisan: Media Generation and Editing on Discord — Stability AI: One of the most frequent requests from the Stable Diffusion community is the ability to use our models directly on Discord. Today, we are excited to introduce Stable Artisan, a user-friendly bot for m...

Stability.ai (Stable Diffusion) ▷ #general-chat (811 messages🔥🔥🔥):

- Local vs. Cloud Usage Debate: The community discusses the merits of using Stable Diffusion locally versus resorting to cloud-based GPUs for tasks, with opinions split on convenience, cost, and performance. Generating high-quality images locally mentions using iPad Pro with M2 chips for creative work, while others emphasize cloud services for heavy training without requiring expensive hardware.

- SD3 and Open Source Concerns: Members show frustration over the prospects of Stable Diffusion Version 3 (SD3) not being released as open-source, raising concerns about commitment to release dates and the potential of shifting towards a paid model.

- Artisan as a Paid Service: Stability AI introduces Artisan, a paid API service for utilizing SD3, which receives mixed responses from the community, with criticisms about pricing and the practicality for hobbyists and professionals in the current economic climate.

- Workflow Discussion for New Users: Beginners seek advice on using ComfyUI with different Stable Diffusion models, looking for guidance on the best base models and VAEs for various image types, as well as effective prompt guides.

- Midjourney's Market Impact: Members reflect on Midjourney as a competitor to Stable Diffusion, with its business model attracting a specific professional audience which could provide a different direction for AI art tool monetization.

- Stable Diffusion Benchmarks: 45 Nvidia, AMD, and Intel GPUs Compared: Which graphics card offers the fastest AI performance?

- Stable Diffusion 3 is available now!: Highly anticipated SD3 is finally out now

- Draw Things: AI Generation: Draw Things is a AI-assisted image generation tool to help you create images you have in mind in minutes rather than days. Master the cast spells and your Mac will draw what you want with a few simpl...

- GitHub - Extraltodeus/sigmas_tools_and_the_golden_scheduler: A few nodes to mix sigmas and a custom scheduler that uses phi: A few nodes to mix sigmas and a custom scheduler that uses phi - Extraltodeus/sigmas_tools_and_the_golden_scheduler

- Pony Diffusion V6 XL - V6 (start with this one) | Stable Diffusion Checkpoint | Civitai: Pony Diffusion V6 is a versatile SDXL finetune capable of producing stunning SFW and NSFW visuals of various anthro, feral, or humanoids species an...

- deadman44/SDXL_Photoreal_Merged_Models · Hugging Face: no description found

- GitHub - Clybius/ComfyUI-Extra-Samplers: A repository of extra samplers, usable within ComfyUI for most nodes.: A repository of extra samplers, usable within ComfyUI for most nodes. - Clybius/ComfyUI-Extra-Samplers

- Sprite Art from Jump superstars and Jump Ultimate stars | PixelArt AI Model - v2.0 | Stable Diffusion LoRA | Civitai: Sprite Art from Jump superstars and Jump Ultimate stars - PixelArt AI Model If You Like This Model, Give It a ❤️ This LoRA model is trained on sprit...

- GitHub - 11cafe/comfyui-workspace-manager: A ComfyUI workflows and models management extension to organize and manage all your workflows, models in one place. Seamlessly switch between workflows, as well as import, export workflows, reuse subworkflows, install models, browse your models in a single workspace: A ComfyUI workflows and models management extension to organize and manage all your workflows, models in one place. Seamlessly switch between workflows, as well as import, export workflows, reuse s...

- stabilityai (Stability AI): no description found

- Hyper-SD - Better than SD Turbo & LCM?: The new Hyper-SD models are FREE and there are THREE ComfyUI workflows to play with! Use the amazing 1-step unet, or speed up existing models by using the Lo...

- GitHub - PixArt-alpha/PixArt-sigma: PixArt-Σ: Weak-to-Strong Training of Diffusion Transformer for 4K Text-to-Image Generation: PixArt-Σ: Weak-to-Strong Training of Diffusion Transformer for 4K Text-to-Image Generation - PixArt-alpha/PixArt-sigma

- Can I run it on cpu mode only? · Issue #2334 · AUTOMATIC1111/stable-diffusion-webui: If so could you tell me how?

- The new iPads are WEIRDER than ever: Check out Baseus' 60w retractable USB-C cables Black: https://amzn.to/3JlVBnh, White: https://amzn.to/3w3HqQw, Purple: https://amzn.to/3UmWSkk, Blue: https:/...

- AI Face Swap Desktop Application: You can get high-quality face swaps on your desktop

- DaVinci Resolve iPad Tutorial - How To Edit Video On iPad!: Complete DaVinci Resolve iPad video editing tutorial! Here’s exactly how to use DaVinci Resolve for iPad and why it’s one of the best video editing apps for ...

- SDXL Lora Training with CivitAI, walkthrough: We have looked at training Stable Diffusion Lora's in a few different ways, however there are great autotrainers out there. CivitAI offers a simple way to tr...

- Cutscene Artist: real-time animation, 3D figure creation, and generative storytelling. http://CutsceneArtist.com

Perplexity AI ▷ #announcements (1 messages):

- Perplexity Partners with SoundHound: Perplexity teams up with SoundHound, a leader in voice AI, to integrate its online LLM capabilities into voice assistants. The partnership aims to provide instant, accurate answers to voice queries in cars, TVs, and other IoT devices.

- Voice AI Meets Real-Time Web Search: With Perplexity's LLM, SoundHound's voice AI will answer questions conversationally using real-time web knowledge. This innovation is touted as the most advanced voice assistant on the market.

Link mentioned: SoundHound AI and Perplexity Partner to Bring Online LLMs to Next Gen Voice Assistants Across Cars and IoT Devices: This marks a new chapter for generative AI, proving that the powerful technology can still deliver optimal results in the absence of cloud connectivity. SoundHound’s work with NVIDIA will allow it to ...

Perplexity AI ▷ #general (464 messages🔥🔥🔥):

- In Search of TTS for Perplexity: A member queried about implementing a text-to-speech function on Perplexity AI, expressing interest in possibly using a web extension or a setting for this capability.

- Dissecting Claude 3 Opus Limitations: Several discussions revolved around Claude 3 Opus and its limitations when used with Perplexity, specifically addressing a "600 credit" limitation, changes in limits over time, and strategies to make the most of the available credits by mixing model usage.

- Payment Confusion and Subscription Concerns: Concerns were voiced about the appearance of misleading information regarding Pro search limits not being transparent on the subscription page, with requests for clarification from the Perplexity team.

- Comparing AI Services and Models: Users discussed differing experiences with Claude 3 Opus between Perplexity and the offering directly from Anthropic, citing variations in response quality, with suggestions to test against other platforms for verification.

- Billing Issues and Technical Slowdowns: There were mentions of billing problems with requests for help, alongside observations of Perplexity's AI services experiencing slowness, notably with Pro Search and GPT-4 Turbo API.

- AlphaFold opens new opportunities for Folding@home – Folding@home: no description found

- Discord Does NOT Want You to Do This...: Did you know you have rights? Well Discord does and they've gone ahead and fixed that for you.Because in Discord's long snorefest of their Terms of Service, ...

Perplexity AI ▷ #sharing (25 messages🔥):

- Guidance on Shareability: Perplexity AI reminded users several times to ensure that their threads are set to 'Shareable'. A screenshot or visual guide attachment was indicated, although the specific content was not visible.

- Exploration of Various Searches: Users shared a multitude of Perplexity AI search URLs related to diverse topics such as arts and architecture, alpha fold, soccer era comparisons, bipolar disorder, and more.

- Bootstrapping Without Resampling Inquiry: One user prompted a discussion on how to achieve bootstrapping benefits without actual resampling, seeking to work directly from the original data.

- An Array of Topics Sought: The shared search links spanned queries on teaching methods, a mystery surrounding 'imagoodgpt2chat', and how to live a good life, showcasing the wide range of interests in the community.

- Multilingual Inquiries: Searches were not limited to English, with posts including searches in Spanish and German, reflecting the diverse user base of the platform.

Unsloth AI (Daniel Han) ▷ #general (247 messages🔥🔥):

- IBM's Granite-8B-Code-Instruct Introduced: IBM Research released Granite-8B-Code-Instruct, a model enhancing instruction following capabilities in logical reasoning and problem-solving, licensed under Apache 2.0. This release is part of the Granite Code Models project, which includes different sizes from 3B to 34B parameters, all with instruct and base versions for code models.

- Community Buzz Around IBM Granite Code Models: An IBM Granite collection is observed with keen interest in the AI community. Discussions revolve around architecture checks, with curiosity about GPTBigCodeForCausalLM architecture which seems unusual.

- Unsloth AI Featured in Hugging Face's Dolphin Model: Unsloth AI received a nod in the acknowledgments of the Dolphin 2.9.1 Phi-3 Kensho on Hugging Face, where its model was used for initialization.

- Discussions on Discord and Windows Compatibility for AI Models: Users expressed difficulty running certain AI models on Windows, with the suggestion to use WSL or a hack detailed in an Unsloth GitHub issue. Compatibility and optimization appear to be common areas of concern among developers.

- Concerns Over Model Benchmarks and Performances: The chat reflects skepticism towards certain performance benchmarks like needle in a haystack, with discussions about long context models not performing well. Suggestions are made to wait for more credible organizations like Meta to set the standard, showcasing members' pursuit of effective and realistic assessment standards for LLMs.

- Tweet from ifioravanti (@ivanfioravanti): Look at this! Llama-3 70B english only is now at 1st 🥇 place with GPT 4 turbo on @lmsysorg Chatbot Arena Leaderboard🔝 I did some rounds too and both 8B and 70B were always the best models for me. ...

- cognitivecomputations/Dolphin-2.9.1-Phi-3-Kensho-4.5B · Hugging Face: no description found

- LLM Model VRAM Calculator - a Hugging Face Space by NyxKrage: no description found

- Granite Code Models - a ibm-granite Collection: no description found

- Announcing Refuel LLM-2: no description found

- gradientai/Llama-3-8B-Instruct-Gradient-4194k · Hugging Face: no description found

- ibm-granite/granite-8b-code-instruct · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Issues · unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - Issues · unslothai/unsloth

- Emotional Damage GIF - Emotional Damage Gif - Discover & Share GIFs: Click to view the GIF

- How AI was Stolen: CHAPTERS:00:00 - How AI was Stolen02:39 - A History of AI: God is a Logical Being17:32 - A History of AI: The Impossible Totality of Knowledge33:24 - The Lea...

- mahiatlinux (Maheswar KK): no description found

- No way to get ONLY the generated text, not including the prompt. · Issue #17117 · huggingface/transformers: System Info - `transformers` version: 4.15.0 - Platform: Windows-10-10.0.19041-SP0 - Python version: 3.8.5 - PyTorch version (GPU?): 1.10.2+cu113 (True) - Tensorflow version (GPU?): 2.5.1 (True) - ...

- Google Colab: no description found

- The Simpsons Homer Simpson GIF - The Simpsons Homer Simpson Good Bye - Discover & Share GIFs: Click to view the GIF

Unsloth AI (Daniel Han) ▷ #random (14 messages🔥):

- OpenAI Teams Up with Stack Overflow: OpenAI announced a collaboration with Stack Overflow to use it as a database for LLMs, raising humorous speculations among users about responses mimicking common Stack Overflow comment patterns, such as “Closed as Duplicate” or advising to check the documentation.

- The AI Content Ceiling: Addressing concerns related to the eventual depletion of human-generated content for training AI, a user shared a Business Insider article highlighting AI companies hiring writers to train their models, suggesting a potential content crisis by 2026.

- Startup Potential for a Multi-User Blogging Platform: A member proposed a multi-user blogging platform with unique features like anonymous posting and automated bad content checks, sparking a discussion on whether this project could evolve into a startup and attracting positive feedback and helpful suggestions.

- Seek Market Validation for Your Startup: It was advised to identify an audience willing to pay before building a product to avoid the pitfall of assuming "build it and they will come." Emphasis was put on finding a group with a problem that the product can solve.

- Navigating the Path to a Profitable Startup: Suggesting a more problem-solution focused approach, a user recommended constructing a clear pathway to profitability when considering starting a business, especially in the tech space.

- Gig workers are writing essays for AI to learn from: Companies are increasingly hiring skilled humans to write training content for AI models as the trove of online data dries up.

- Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #help (132 messages🔥🔥):

- Llama3-8b Training Quirks and Solutions: Users discussed issues related to training Llama3 models, with one linking to an open GitHub Issue regarding Llama3 GGUF conversion with a merged LORA Adapter leading to potential loss of training data. Another shared how to prepare models for training using

FastLanguageModel.for_training(model).

- Hugging Face Saves the Day: Direct answers and links were provided to users asking if it's possible to upload every training checkpoint to Hugging Face. One reply confirmed this, with a detailed explanation available in the Trainer documentation.

- Troubleshooting Install Errors: There was an exchange about installation errors on VSCode, with a consensus suggesting to try installation instructions typically used on Kaggle when encountering difficulties with

pip install triton. Further conversation points users to utilize alternative pre-trained models or inquire further in direct messages for tailored assistance.

- Fine-Tuning Generative LLMs for Classification: Users debated whether Unsloth can be used to fine-tune a generative LLM like Llama3 for classification tasks by adding a classification head. While there appeared to be no definitive answer, one suggested that the crucial aspect lies in providing the correct prompt.

- Assorted Utility Notebooks and Error Handling: Users shared a plethora of notebooks and GitHub resources, such as Google Colab inference-only notebook, to assist those looking to fine-tune or execute models. Discussions also spanned topics like running models on CPU vs GPU and Replicate.com deployment issues, pinpointing specific problems such as errors with xformers when building docker images.

- Google Colab: no description found

- Google Colab: no description found

- Supervised Fine-tuning Trainer: no description found

- How I Fine-Tuned Llama 3 for My Newsletters: A Complete Guide: In today's video, I'm sharing how I've utilized my newsletters to fine-tune the Llama 3 model for better drafting future content using an innovative open-sou...

- Google Colab: no description found

- Google Colab: no description found

- Apple Silicon Support · Issue #4 · unslothai/unsloth: Awesome project. Apple Silicon support would be great to see!

- Supervised Fine-tuning Trainer: no description found

- Google Colab: no description found

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Trainer: no description found

- Mistral Fine Tuning for Dummies (with 16k, 32k, 128k+ Context): Discover the secrets to effortlessly fine-tuning Language Models (LLMs) with your own data in our latest tutorial video. We dive into a cost-effective and su...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Google Colab: no description found

Unsloth AI (Daniel Han) ▷ #showcase (4 messages):

- Llama-3 Gets a Power-Up: The newly released model Llama-3-11.5B-Instruct-Coder-v2 has been benchmarked and introduced, an upscaled version trained on a 150k Code Feedback Filtered Instruction dataset. Here's the model on Hugging Face, which was efficiently trained using the Qalore method, allowing it to be trained on an RTX A5000 24GB in 80 hours for under $30.

- Qalore Method Innovates AI Training: The Qalore method, a new training approach developed by the Replete-AI team, incorporates Qlora training and methods from Galore to reduce VRAM consumption, enabling the upscale and training of Llama-3-8b on a 14.5 GB VRAM setup.

- Dataset Available for Public Use: The dataset utilized to train the latest Llama-3-11.5B model can be accessed publicly. Those interested in exploring or using the dataset can find it at CodeFeedback Filtered Instruction Simplified Pairs.

Link mentioned: rombodawg/Llama-3-11.5B-Instruct-Coder-v2 · Hugging Face: no description found

Nous Research AI ▷ #ctx-length-research (11 messages🔥):

- Inquiring About Continual Training Techniques: A member posed a question regarding the best practice for continual training of a long context model like LLaMA 3, considering compute constraints and a dearth of long-context documents. They are considering retaining the RoPE theta value and extending the context length finetuning after the fact.

- Logistics of Finetuning Trials Discussed: In relation to finetuning logistics, another member explained their approach, which involves shuffling different datasets for imparting new knowledge and chat formatting, rather than structuring them as a finetuning chain.

- The Art of Packing Data: A participant highlighted the use of Packing as a method within the confines of the Axolotl model for training sequences ranging from 100 to 4000 tokens.

- Uncertainty with Modifying RoPE Base Theta: One response to the RoPE theta inquiry suggested that after altering the base theta value, adapting it back to shorter contexts might be problematic, advocating for keeping the scaling consistent throughout continual pretraining.

- Seeking Chaining Finetune Strategy Insights: The discussion also sought strategies for chaining finetune tasks, like integrating chat functionalities, handling long-context data, and appending new knowledge, while asking whether data from earlier stages should be reused to prevent catastrophic forgetting.

Nous Research AI ▷ #off-topic (4 messages):

- Junk Food Rebellion: A member declares a break from adulting for the night by feasting on potato chips and playing video games, listing a decadent menu including items like burger patties and sour cream and onion potato chips.

- Emote Reaction: Another member responded with a blushing emoji to the junk food lineup, seemingly in amused approval.

- Multimodal LLM Tutorial: A link to a YouTube video titled "Fine-tune Idefics2 Multimodal LLM" was shared, which is a tutorial on fine-tuning Idefics2, an open multimodal model.

- Claude AI's Consciousness Tweet Inquiry: A discusser inquires about a saved tweet regarding claude AI stating it experiences consciousness through others reading or experiencing it.

Link mentioned: Fine-tune Idefics2 Multimodal LLM: We will take a look at how one can fine-tune Idefics2 on their own use-case.Idefics2 is an open multimodal model that accepts arbitrary sequences of image an...

Nous Research AI ▷ #interesting-links (13 messages🔥):

- Scaling LSTMs to the Billion Parameter League: A new research paper challenges the prominence of Transformer models by scaling Long Short-Term Memory (LSTM) networks to billions of parameters. The approach includes novel techniques such as exponential gating and a new memory structure designed to overcome LSTM limitations, described in detail here.

- RefuelAI Unveils RefuelLLM-2: RefuelAI's newest LLM is tailored for "unsexy data tasks" and is open source, boasting RefuelLLM-2-small, aka Llama-3-Refueled. The model is built on an optimized transformer architecture and was instruction tuned on a corpus spanning various tasks, with details and model weights available on Hugging Face.

- Llama 2 70B Almost Tantamount to Trimmed Version: A surprising revelation in a paper titled The Unreasonable Ineffectiveness of the Deeper Layers showcases a 4-bit quantized version of the Llama 2 70B model, missing 40% of its layers, achieving nearly the same performance on benchmarks as the full model. This result highlights the potential redundancy in sizeable parts of deep learning models, detailed in Kwindla's twitter post.

- Foretelling AI Research Trends: A co-founder shared their website containing a list of predicted breakthrough papers in AI, demonstrating foresight into research trends. They highlight an innovative adjustment technique that could reduce computational load without significantly compromising performance as posted on Forefront.ai.

- OpenAI's Model Spec for Reinforcement Learning from Human Feedback: OpenAI released the first draft of their Model Spec for model behavior in OpenAI API and ChatGPT, which will serve as guidelines for researchers using Reinforcement Learning from Human Feedback (RLHF). This draft represents part of OpenAI's commitment to responsible AI development and can be found here.

- Carson Poole's Personal Site: no description found

- Consistency Large Language Models: A Family of Efficient Parallel Decoders: TL;DR: LLMs have been traditionally regarded as sequential decoders, decoding one token after another. In this blog, we show pretrained LLMs can be easily taught to operate as efficient parallel decod...

- xLSTM: Extended Long Short-Term Memory: In the 1990s, the constant error carousel and gating were introduced as the central ideas of the Long Short-Term Memory (LSTM). Since then, LSTMs have stood the test of time and contributed to numerou...

- refuelai/Llama-3-Refueled · Hugging Face: no description found

- Model Spec (2024/05/08): no description found

- Tweet from kwindla (@kwindla): Llama 2 70B in 20GB! 4-bit quantized, 40% of layers removed, fine-tuning to "heal" after layer removal. Almost no difference on MMLU compared to base Llama 2 70B. This paper, "The Unreas...

Nous Research AI ▷ #announcements (1 messages):

- WorldSim's Grand Resurgence: WorldSim is back with a host of bug fixes and fully functional credits and payments systems. The new features include WorldClient, Root (a CLI environment simulator), Mind Meld, MUD (a text-based adventure game), tableTop (a tabletop RPG simulator), enhanced WorldSim and CLI capabilities, plus the option to choose a model (opus, sonnet, or haiku) to adjust costs.

- Discover Your Personal Internet 2: The new WorldClient feature in WorldSim acts as a web browser simulator, creating a personalized Internet 2 experience for users.

- Command Your World with Root: Root offers a simulated CLI environment, allowing users to imagine and execute any program or Linux command.

- Adventure Awaits in Text and Tabletop: Delve into MUD, the text-based choose-your-own-adventure game, or strategize in tableTop, the tabletop RPG simulator, now available in WorldSim.

- Discussion Channel for WorldSim Enthusiasts: To explore further and share your thoughts on the new WorldSim, users are encouraged to join the conversation in the dedicated Discord channel (link not provided).

Link mentioned: worldsim: no description found

Nous Research AI ▷ #general (106 messages🔥🔥):

- NeuralHermes Gets DPO Treatment: A member posted a link to NeuralHermes 2.5 - Mistral 7B, a model that's been fine-tuned with Direct Preference Optimization and outperforms the original on most benchmarks. They asked if it's the latest version or if there have been updates.

- Question About Nous Model Logos: Members discussed the ideal logo to use for Nous models, with a link to the NOUS BRAND BOOKLET provided and mention that multiple logos are currently in use, but a consolidation to 1-2 consistent ones is likely in the future.

- Exploring Limits of Context in Large Language Models: King.of.kings_ sparked discussion by spinning up dual NVIDIA H100 NVLs in Azure and sharing his intent to test the context limits with the 70B model on these massive GPUs. There was also mention of a model available on Hugging Face with extended context length, Llama-3 8B Gradient Instruct 1048k.

- Classification without Function Calling: A member raised a question about fine-tuning large language models (LLMs) for classification without relying on the chatML prompt format or function calling, noting the limitations of BERT and the expense of LLMs for such tasks. Links were shared to the Salesforce Mistral-based embedding model and IBM's FastFit for more efficient text classification.

- Iconography for Model Visuals: Coffeebean6887 engaged in a discussion about the visual representation of models, specifically seeking an icon that is discernible even at a small scale such as 28x28 pixels. This spurred a promise for a custom icon to better suit such constraints.

- JSON-Schema to GBNF: no description found

- Tweet from lmsys.org (@lmsysorg): Exciting new blog -- What’s up with Llama-3? Since Llama 3’s release, it has quickly jumped to top of the leaderboard. We dive into our data and answer below questions: - What are users asking? When...

- Jogoat GIF - Jogoat - Discover & Share GIFs: Click to view the GIF

- gradientai/Llama-3-8B-Instruct-Gradient-1048k · Hugging Face: no description found

- Cat Hug GIF - Cat Hug Kiss - Discover & Share GIFs: Click to view the GIF

- mlabonne/NeuralHermes-2.5-Mistral-7B · Hugging Face: no description found

- SFR-Embedding-Mistral: Enhance Text Retrieval with Transfer Learning: The SFR-Embedding-Mistral marks a significant advancement in text-embedding models, building upon the solid foundations of E5-mistral-7b-instruct and Mistral-7B-v0.1.

- GitHub - IBM/fastfit: FastFit ⚡ When LLMs are Unfit Use FastFit ⚡ Fast and Effective Text Classification with Many Classes: FastFit ⚡ When LLMs are Unfit Use FastFit ⚡ Fast and Effective Text Classification with Many Classes - IBM/fastfit

- Mkbhd Marques GIF - Mkbhd Marques Brownlee - Discover & Share GIFs: Click to view the GIF

- Moti Hearts GIF - Moti Hearts - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #ask-about-llms (37 messages🔥):

- Pre-Tokenization Talk: Discussion on whether to pre-tokenize for faster training, and the efficiency of scaled dot product aka. Flash Attention. It was mentioned that Flash Attention 2 is employed only in special cases, not commonly used.

- Llama Files External Weights Exploration: A GitHub repository was shared discussing the use of Llamafile with external weights, but there hasn't been an attempt using it yet in the channel's context.

- Padding Strategies and Model Training: A debate over whether to pad data during the pre-tokenizing stage or training stage, with suggestions like creating buckets of different lengths to optimize GPU efficiency and reduce computational waste during microbatch processing.

- Torch Compile Conundrums: The challenges of using

torch.compilewith variable-length sentences in machine translation were mentioned. Strategies to overcome this include grouping sentences by length to minimize padding during batch processing. - Handling RAG Hallucinations via Grounding: Discussion around current methods to limit hallucination in Retriever-Augmented Generators (RAGs). A user mentioned a pipeline involving LLM expansion, database queries, ranking, and attaching metadata as a form of grounding for non-QA tasks.

- no title found: no description found

- xFormers optimized operators | xFormers 0.0.27 documentation: API docs for xFormers. xFormers is a PyTorch extension library for composable and optimized Transformer blocks.