[AINews] Llama 3.1: The Synthetic Data Model

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Synthetic Data is all you need.

AI News for 7/22/2024-7/23/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (474 channels, and 5128 messages) for you. Estimated reading time saved (at 200wpm): 473 minutes. You can now tag @smol_ai for AINews discussions!

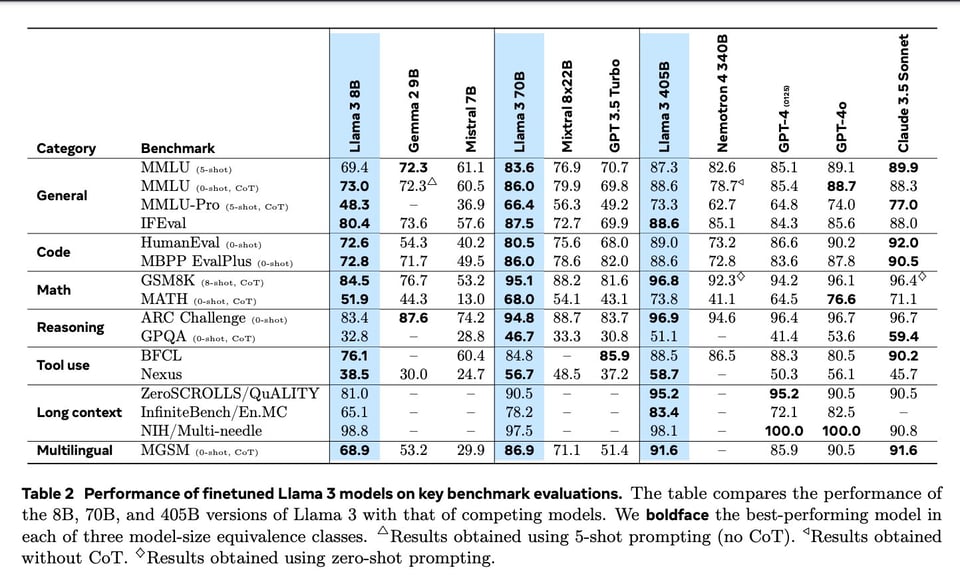

Llama 3.1 is here! (Site, Video,Paper, Code, model, Zuck, Latent Space pod). Including the 405B model, which triggers both the EU AI act and SB 1047. The full paper has all the frontier model comparisons you want:

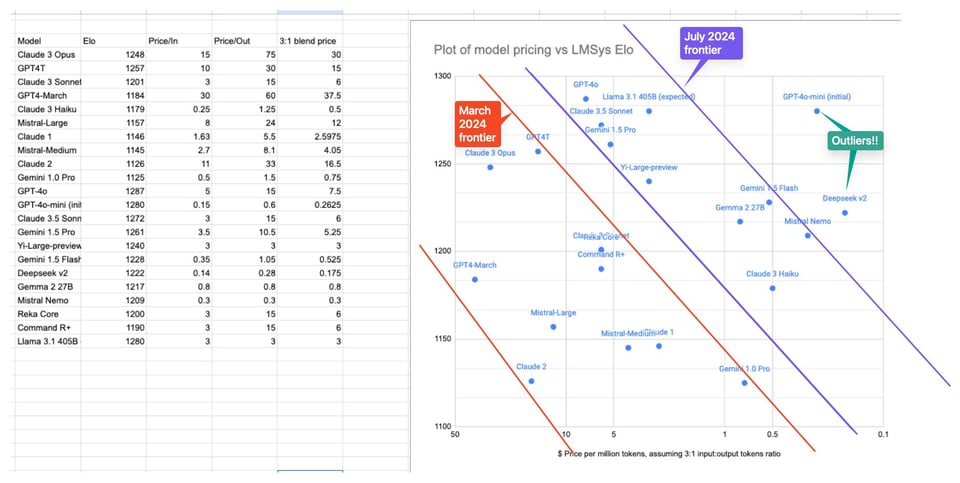

We'll assume you read the headlines from yesterday. It's not up on LMsys yet, but independent evals on SEAL and Allen AI's ZeroEval are promising (with some disagreement). It was a well coordinated launch across ~every inference provider in the industry, including (of course) Groq showing a flashy demo inferencing at 750tok/s. Inference pricing is also out with Fireworks leading the pack.

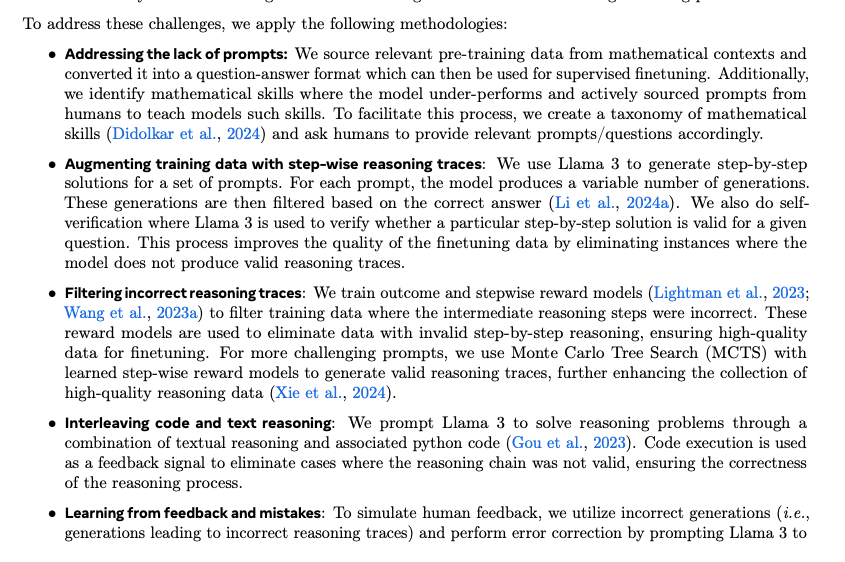

While it is well speculated that the 8B and 70B were "offline distillations" of the 405B, there are a good deal more synthetic data elements to Llama 3.1 than the expected. The paper explicitly calls out:

- SFT for Code: 3 approaches for synthetic data for the 405B bootstrapping itself with code execution feedback, programming language translation, and docs backtranslation.

- SFT for Math:

- SFT for Multilinguality: "To collect higher quality human annotations in non-English languages, we train a multilingual expert by branching off the pre-training run and continuing to pre-train on a data mix that consists of 90% multilingual tokens."

- SFT for Long Context: "It is largely impractical to get humans to annotate such examples due to the tedious and time-consuming nature of reading lengthy contexts, so we predominantly rely on synthetic data to fill this gap. We use earlier versions of Llama 3 to generate synthetic data based on the key long-context use-cases: (possibly multi-turn) question-answering, summarization for long documents, and reasoning over code repositories, and describe them in greater detail below"

- SFT for Tool Use: trained for Brave Search, Wolfram Alpha, and a Python Interpreter (a special new

ipythonrole) for single, nested, parallel, and multiturn function calling. - RLHF: DPO preference data was used extensively on Llama 2 generations. As Thomas says on the pod: “Llama 3 post-training doesn't have any human written answers there basically… It's just leveraging pure synthetic data from Llama 2.”

Last but not least, Llama 3.1 received a license update explicitly allowing its use for synthetic data generation.

We finally have a frontier-class open LLM, and together it is worth noting how far ahead the industry has moved in cost per intelligence since March, and it will only get better from here.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Meta AI

- Llama 3.1 405B model: @bindureddy noted that Llama-3.1 405B benchmarks were leaked on Reddit, outperforming GPT-4o. @Teknium1 shared an image comparing Llama-3.1 405/70/8b against GPT-4o, showing SOTA frontier models now available open source. @abacaj mentioned Meta is training and releasing open weights models faster than OpenAI can release closed models.

- Llama 3 70B performance: @rohanpaul_ai highlighted that the 70B model is matching GPT-4 levels while being 6x smaller. This is the base model, not instruct tuned. @rohanpaul_ai noted the 70B model is encroaching on 405B's territory, and the utility of big models would be to distill from it.

- Open source model progress: @teortaxesTex called it the dawn of Old Man Strength open models. @abacaj mentioned OpenAI models have not been improving significantly, so Meta models will catch up in open weights.

AI Assistants and Agents

- Omnipilot AI: @svpino introduced @OmnipilotAI, an AI application that can type anywhere you can and use full context of what's on your screen. It works with every macOS application and uses Claude Sonet 3.5, Gemini, and GPT-4o. Examples include replying to emails, autocompleting terminal commands, finishing documents, and sending Slack messages.

- Mixture of agents: @llama_index shared a video by @1littlecoder introducing "mixture of agents" - using multiple local language models to potentially outperform single models. It includes a tutorial on implementing it using LlamaIndex and Ollama, combining models like Llama 3, Mistral, StableLM in a layered architecture.

- Planning for agents: @hwchase17 discussed the future of planning for agents. While model improvements will help, good prompting and custom cognitive architectures will always be needed to adapt agents to specific tasks.

Benchmarks and Evaluations

- LLM-as-a-Judge: @cwolferesearch provided an overview of LLM-as-a-Judge, where a more powerful LLM evaluates the quality of another LLM's output. Key takeaways include using a sufficiently capable judge model, prompt setup (pairwise vs pointwise), improving pointwise score stability, chain-of-thought prompting, temperature settings, and accounting for position bias.

- Factual inconsistency detection: @sophiamyang shared a guide on fine-tuning and evaluating a @MistralAI model to detect factual inconsistencies and hallucinations in text summaries using @weights_biases. It's based on @eugeneyan's work and part of the Mistral Cookbook.

- Complex question answering: @OfirPress introduced a new benchmark to evaluate AI assistants' ability to answer complex natural questions like "Which restaurants near me have vegan and gluten-free entrées for under $25?" with the goal of leading to better assistants.

Frameworks and Tools

- DSPy: @lateinteraction shared a paper finding that DSPy optimizers alternating between optimizing weights and prompts can deliver up to 26% gains over just optimizing one. @lateinteraction noted composable optimizers over modular NLP programs are the future, and to compose BootstrapFewShot and BootstrapFinetune optimizers.

- LangChain: @hwchase17 pointed to the new LangChain Changelog to better communicate everything they're shipping. @LangChainAI highlighted seamless LangSmith tracing in LangGraph.js with no additional configuration, making it easier to use LangSmith's features to build agents.

- EDA-GPT: @LangChainAI introduced EDA-GPT, an open-source data analysis companion that streamlines data exploration, visualization, and insights. It has a configurable UI and integrates with LangChain.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Running Large Language Models Locally

- If you have to ask how to run 405B locally (Score: 287, Comments: 122): The post addresses the impossibility of running a 405 billion parameter model locally. It bluntly states that if someone needs to ask how to do this, they simply cannot achieve it, implying the task is beyond the capabilities of typical consumer hardware.

- Please share your LLaMA 3.1 405B experiences below for us GPU poor (Score: 52, Comments: 30): The post requests users to share their experiences running LLaMA 3.1 405B locally, specifically targeting those with limited GPU resources. While no specific experiences are provided in the post body, the title suggests interest in understanding how this large language model performs on consumer-grade hardware and the challenges faced by users with less powerful GPUs.

- Ollama site “pro tips” I wish my idiot self had known about sooner: (Score: 72, Comments: 24): The post highlights several "pro tips" for using the Ollama site to download and run AI models. Key features include accessing different quantizations of models via the "Tags" link, a hidden model type sorting feature accessible through the search box, finding max context window sizes in the model table, and using the top search box to access a broader list of models including user-submitted ones. The author, who has been using Ollama for 6-8 months, shares these insights to help others who might have overlooked these features.

Theme 2. LLaMA 3.1 405B Model Release and Benchmarks

- Azure Llama 3.1 benchmarks (Score: 349, Comments: 268): Microsoft released benchmark results for Azure Llama 3.1, showing improvements over previous versions. The model achieved a 94.4% score on the MMLU benchmark, surpassing GPT-3.5 and approaching GPT-4's performance. Azure Llama 3.1 also demonstrated strong capabilities in code generation and multi-turn conversations, positioning it as a competitive option in the AI model landscape.

- Llama 3.1 405B, 70B, 8B Instruct Tuned Benchmarks (Score: 137, Comments: 28): Meta has released LLaMA 3.1, featuring models with 405 billion, 70 billion, and 8 billion parameters, all of which are instruct-tuned. The 405B model achieves state-of-the-art performance on various benchmarks, outperforming GPT-4 on several tasks, while the 70B model shows competitive results against Claude 2 and GPT-3.5.

- LLaMA 3.1 405B base model available for download (Score: 589, Comments: 314): The LLaMA 3.1 405B base model, with a size of 764GiB (~820GB), is now available for download. The model can be accessed through a Hugging Face link, a magnet link, or a torrent file, with credits attributed to a 4chan thread.

- Users discussed running the 405B model, with suggestions ranging from using 2x A100 GPUs (160GB VRAM) with low quantization to renting servers with TBs of RAM on Hetzner for $200-250/month, potentially achieving 1-2 tokens per second at Q8/Q4.

- Humorous comments about running the model on a Nintendo 64 or downloading more VRAM sparked discussions on hardware limitations. Users speculated it might take 5-10 years before consumer-grade GPUs could handle such large models.

- Some questioned the leak's authenticity, noting similarities to previous leaks like Mistral medium (Miqu-1). Others debated whether it was an intentional "leak" by Meta for marketing purposes, given the timing before the official release.

Theme 3. Distributed and Federated AI Inference

- LocalAI 2.19 is Out! P2P, auto-discovery, Federated instances and sharded model loading! (Score: 52, Comments: 7): LocalAI 2.19 introduces federated instances and sharded model loading via P2P, allowing users to combine GPU and CPU power across multiple nodes to run large models without expensive hardware. The release includes a new P2P dashboard for easy setup of federated instances, Text-to-Speech integration in binary releases, and improvements to the WebUI, installer script, and llama-cpp backend with support for embeddings.

- Ollama has been updated to accommodate Mistral NeMo and a proper download is now available (Score: 63, Comments: 13): Ollama has been updated to include support for the Mistral NeMo model, now available for download. The user reports that NeMo performs faster and better than Lama 3 8b and Gemma 2 9b models on a 4060 Ti GPU with 16GB VRAM, noting it as a significant advancement in local AI models shortly after Gemma's release.

- Users praised Mistral NeMo 12b for its performance, with one noting it "NAILED" a 48k context test and showed fluency in French. However, its usefulness may be short-lived with the upcoming release of Llama 3.1 8b.

- Some users expressed excitement about downloading the model, while others found it disappointing compared to tiger-gemma2, particularly in following instructions during multi-turn conversations.

- The timing of Mistral NeMo's release was described as "very sad" for the developers, coming shortly after other significant model releases.

Theme 4. New AI Model Releases and Leaks

- Nvidia has released two new base models: Minitron 8B and 4B, pruned versions of Nemotron-4 15B (Score: 69, Comments: 3): Nvidia has released Minitron 8B and 4B, pruned versions of their Nemotron-4 15B model, which require up to 40x fewer training tokens and result in 1.8x compute cost savings compared to training from scratch. These models show up to 16% improvement in MMLU scores compared to training from scratch, perform comparably to models like Mistral 7B and Llama-3 8B, and are intended for research and development purposes only.

- Pruned models are uncommon in the AI landscape, with Minitron 8B and 4B being notable exceptions. This rarity sparks interest among researchers and developers.

- The concept of pruning is intuitively similar to quantization, though some users speculate that quantizing pruned models might negatively impact performance.

- AWQ (Activation-aware Weight Quantization) is compared to pruning, with pruning potentially offering greater benefits by reducing overall model dimensionality rather than just compressing bit representations.

- llama 3.1 download.sh commit (Score: 66, Comments: 18): A recent commit to the Meta Llama GitHub repository suggests that LLaMA 3.1 may be nearing release. The commit, viewable at https://github.com/meta-llama/llama/commit/12b676b909368581d39cebafae57226688d5676a, includes a download.sh script, potentially indicating preparations for the model's distribution.

- The commit reveals 405B models in both Base and Instruct versions, with variants labeled mp16, mp8, and fp8. Users speculate that "mp" likely stands for mixed precision, suggesting quantization-aware training for packed mixed precision models.

- Discussion around the fb8 label in the Instruct model concludes it's likely a typo for fp8, supported by evidence in the file. Users express excitement about the potential to analyze weight precisions for better low-bit quantization.

- The commit author, samuelselvan, previously uploaded a LLaMA 3.1 model to Hugging Face that was considered suspicious. Users are enthusiastic about Meta directly releasing quantized versions of the model.

- Llama 3 405b leaked on 4chan? Excited for it ! Just one more day to go !! (Score: 210, Comments: 38): Reports of a LLaMA 3.1 405B model leak on 4chan are circulating, but these claims are unverified and likely false. The purported leak is occurring just one day before an anticipated official announcement, raising skepticism about its authenticity. It's important to approach such leaks with caution and wait for official confirmation from Meta or other reliable sources.

- A HuggingFace repository containing the model was reportedly visible 2 days ago, allowing potential leakers access. Users expressed interest in 70B and 8B versions of the model.

- Some users are more interested in the pure base model without alignment or guardrails, rather than waiting for the official release. A separate thread on /r/LocalLLaMA discusses the alleged 405B base model download.

- Users are attempting to run the model, with one planning to convert to 4-bit GGUF quantization using a 7x24GB GPU setup. Another user shared a YouTube link of their efforts to run the model.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. OpenAI's Universal Basic Income Experiment Results

- [/r/singularity] The OpenResearch team releases the first result from their UBI study (OpenAI) (Score: 280, Comments: 84): OpenResearch, a team at OpenAI, has released initial results from their Universal Basic Income (UBI) study. The study, conducted in Kenyan villages, found that a $1,000 cash transfer resulted in significant positive impacts, including a $400 increase in assets and a 40% reduction in the likelihood of going hungry. These findings contribute to the growing body of evidence supporting the effectiveness of direct cash transfers in alleviating poverty.

- [/r/OpenAI] OpenAI founder Sam Altman secretly gave out $45 million to random people - as an experiment (Score: 272, Comments: 75): Sam Altman's $45 million UBI experiment revealed: The OpenAI founder secretly distributed $45 million to 3,000 people across two U.S. states as part of a Universal Basic Income (UBI) experiment. Participants received $1,000 per month for up to five years, with the study aiming to assess the impact of unconditional cash transfers on recipients' quality of life, time use, and financial health.

- 3,000 participants received either $1,000 or $50 per month for up to five years, with many Redditors expressing desire to join the experiment. The study targeted individuals aged 21-40 with household incomes below 300% of the federal poverty level across urban, suburban, and rural areas in Texas and Illinois.

- Some users criticized the experiment as a PR move by tech billionaires to alleviate concerns about AI-driven job loss, while others argued that private UBI experiments are necessary given slow government action on the issue.

- Discussions emerged about the future of employment, with some predicting a sudden spike in unemployment due to AI advancements, potentially leading to widespread UBI implementation when traditional jobs become scarce across various sectors.

Theme 4. AI Researcher Predictions on AGI Timeline

- [/r/singularity] Former OpenAI researcher predictions (Score: 243, Comments: 151): Former OpenAI researcher predicts AGI timeline: Paul Christiano, a former OpenAI researcher, estimates a 20-30% chance of AGI by 2030 and a 60-70% chance by 2040. He believes that current AI systems are still far from AGI, but rapid progress in areas like reasoning and planning could lead to significant breakthroughs in the coming years.

- [/r/singularity] "most of the staff at the secretive top labs are seriously planning their lives around the existence of digital gods in 2027" (Score: 579, Comments: 450): AI researchers anticipate digital deities: According to the post, most staff at secretive top AI labs are reportedly planning their lives around the expected emergence of digital gods by 2027. While no specific sources or evidence are provided, the claim suggests a significant shift in the mindset of AI researchers regarding the potential capabilities and impact of future AI systems.

- [/r/singularity] Nick Bostrom says shortly after AI can do all the things the human brain can do, it will learn to do them much better and faster, and human intelligence will become obsolete (Score: 323, Comments: 258): Nick Bostrom warns of AI surpassing human intelligence in a rapid and transformative manner. He predicts that once AI can match human brain capabilities, it will quickly outperform humans across all domains, rendering human intelligence obsolete. This accelerated advancement suggests a potential intelligence explosion, where AI's capabilities rapidly exceed those of humans, leading to significant societal and existential implications.

- Nick Bostrom's warning sparked debate, with some calling it "Captain obvious" due to AI's ability to connect to 100k GPUs, while others defended the importance of his message given ongoing arguments about AI capabilities.

- Discussions ranged from humorous memes to philosophical musings about a "solved world", with one user describing a hypothetical 2055 scenario of AGI and ASI leading to medical breakthroughs, full-dive VR, and simulated realities.

- Some users expressed optimism about AI solving major problems like ocean degradation, while others cautioned about potential negative outcomes, such as population reduction scenarios or the challenges of implementing necessary changes due to resistance.

Theme 5. New AI Training Infrastructure Developments

- [/r/singularity] Elon says that today a model has started training on the new and most powerful AI cluster in the world (Score: 239, Comments: 328): Elon Musk announces groundbreaking AI development: A new AI model has begun training on what Musk claims is the world's most powerful AI cluster. This announcement marks a significant milestone in AI computing capabilities, potentially pushing the boundaries of large language model training and performance.

AI Discord Recap

A summary of Summaries of Summaries

1. LLM Advancements and Benchmarking

- Llama 3.1 Release Excitement: Llama 3.1 models, including 8B and 405B, are now available, sparking excitement in the community. Users shared their experiences and troubleshooting tips to tackle issues like running the model locally and managing high loss values during fine-tuning.

- The community praised the model's performance, with some noting it surpasses existing proprietary models on benchmarks, while others highlighted challenges in practical deployment.

- Meta's Open Source AI Commitment: Meta's release of Llama 3.1 with models like 405B pushes the boundaries of open-source AI, offering 128K token context and support for multiple languages. This move aligns with Mark Zuckerberg's vision for fostering innovation through open collaboration.

- The community discussed the strategic implications of this release, emphasizing the model's potential to rival top closed-source alternatives like GPT-4.

2. Optimizing LLM Inference and Training

- Efficient Fine-tuning Techniques Discussed: The ReFT paper introduces a method that is 15x-60x more parameter-efficient than LoRA by working on the residual stream, offering flexibility in combining training tasks with optimized parameters.

- Community members engaged with the lead author to understand the practical applications, highlighting the method's potential to enhance fine-tuning efficiency.

- GPU Compatibility Challenges: Users reported issues with GPU detection on Linux, particularly with the Radeon RX5700XT, raising concerns about RDNA 1 support. Discussions emphasized the importance of proper configurations for GPU recognition.

- Some users confirmed that extension packs weren't resolving the issues, indicating a need for further troubleshooting and potential updates from developers.

3. Open-Source AI Frameworks and Community Efforts

- LlamaIndex Webinar on Efficient Document Retrieval: The upcoming webinar will discuss Efficient Document Retrieval with Vision Language Models this Friday at 9am PT. Participants can sign up to learn about cutting-edge techniques in document processing.

- The webinar aims to explore ColPali's innovative approach to embedding page screenshots with Vision Language Models, enhancing retrieval performance over complex documents.

- Magpie Paper Sparks Debate: Members debated the utility of insights from the Magpie paper, questioning whether the generated instructions offer substantial utility or are merely a party trick.

- The discussion highlights ongoing evaluations of emerging techniques in instruction generation, reflecting the community's critical engagement with new research.

4. Multimodal AI and Generative Modeling Innovations

- UltraPixel Creates High Resolution Images: UltraPixel is a project capable of generating extremely detailed high-resolution images, pushing the boundaries of image generation with a focus on clarity and detail.

- The community showcased interest in the project's capabilities, exploring its potential applications and sharing the link to the project for further engagement.

- Idefics2 and CodeGemma: New Multimodal Models: Idefics2 8B Chatty focuses on elevated chat interactions, while CodeGemma 1.1 7B refines coding abilities.

- These models represent significant advancements in multimodal AI, with the community discussing their potential to enhance user interaction and coding tasks.

PART 1: High level Discord summaries

HuggingFace Discord

- NuminaMath Datasets Launch: The NuminaMath datasets, featuring approximately 1M math problem-solution pairs used to win the Progress Prize at the AI Math Olympiad, have been released. This includes subsets designed for Chain of Thought and Tool-integrated reasoning, significantly enhancing performance on math competition benchmarks.

- Models trained on these datasets have demonstrated best-in-class performance, surpassing existing proprietary models. Check the release on the 🤗 Hub.

- Llama 3.1 Release Excitement: The recent release of Llama 3.1 has sparked excitement, with models like 8B and 405B now available for testing. Users are actively sharing experiences, including troubleshooting issues when running the model locally.

- The community engages with various insights and offers support for operational challenges faced by early adopters.

- Challenges in Model Fine-Tuning: Frustrations have arisen regarding high loss values and performance issues in fine-tuning models for specific tasks. Resources and practices have been suggested to tackle these challenges effectively.

- The exchange of knowledge aims to improve model training and evaluation processes.

- UltraPixel Creates High Resolution Images: UltraPixel is showcased as a project capable of generating extremely detailed high-resolution images. This initiative pushes the boundaries of image generation with a focus on clarity and detail.

- Check out the project at this link.

- Interest in Segmentation Techniques: Another member expressed interest in effective segmentation techniques that work alongside background removal using diffusion models. They seek recommendations on successful methods or models.

- The conversation is aimed at exploring better practices for image segmentation.

Nous Research AI Discord

- Magpie Paper Sparks Debate: Members discussed whether insights from the Magpie paper offer substantial utility or are merely a party trick, focusing on the quality and diversity of generated instructions.

- This inquiry highlights the ongoing evaluation of emerging techniques in instruction generation.

- ReFT Paper Reveals Efficient Fine-tuning: The lead author of the ReFT paper clarified that the method is 15x-60x more parameter-efficient than LoRA by working on the residual stream.

- This offers flexibility in combining training tasks with optimized parameters, reinforcing the relevance of efficient fine-tuning strategies.

- Bud-E Voice Assistant Gains Traction: The Bud-E voice assistant demo emphasizes its open-source potential and is currently optimized for Ubuntu, with hackathons led by Christoph for community engagement.

- Such collaborative efforts aim to foster contributions from volunteers, enhancing the project's scope.

- Llama 3.1 Impresses with Benchmark Performance: Llama 3.1 405B Instruct-Turbo ranked 1st on GSM8K and closely matched GPT-4o on logical reasoning, although performance on MMLU-Redux appeared weaker.

- This variation reinforces the importance of comprehensive evaluation across benchmark datasets.

- Kuzu Graph Database Recommended: Members recommended the Kuzu GraphStore, integrated with LlamaIndex, particularly for its MIT license that ensures accessibility for developers.

- The adoption of advanced graph database functionalities presents viable alternatives for data management, especially in complex systems.

LM Studio Discord

- LM Studio Performance Insights: Users highlighted performance differences between Llama 3.1 models, noting that running larger models demands significant GPU resources, especially for the 405B variant.

- One user humorously remarked about needing a small nation's electricity supply to run these models effectively.

- Model Download Woes: Several members noted difficulties with downloading models due to DNS issues and traffic spikes to Hugging Face caused by the popularity of Llama 3.1.

- One user suggested the option to disable IPv6 within the app to alleviate some of these downloading challenges.

- GPU Compatibility Challenges: New Linux users reported trouble with LM Studio recognizing GPUs like the Radeon RX5700XT, raising concerns about RDNA 1 support.

- Discussion highlighted the importance of proper configurations for GPU recognition, with some users confirming extension packs weren’t resolving the issues.

- Llama 3.1 Offers New Features: Llama 3.1 has launched with improvements, including context lengths of up to 128k, available for download on Hugging Face.

- Users are encouraged to explore the model's enhanced performance, particularly for memory-intensive tasks.

- ROCm Performance Issues Post-Update: A user noted that updating to ROCm 0.2.28 resulted in significant slowdowns in inference, with consumption dropping to 150w on their 7900XT.

- Reverting to 0.2.27 restored performance, indicating a need for clarity on functional changes in the newer version.

Perplexity AI Discord

- Llama 3.1 405B Launch and API Integration: The highly anticipated Llama 3.1 405B model is now available on Perplexity, rivaling GPT-4o and Claude Sonnet 3.5, enhancing the platform's AI capabilities.

- Users inquired about adding Llama 3.1 405B to the Perplexity API, asking if it will be available soon and sharing various experiences with model performance.

- Concerns Over Llama 3.1 Performance: Users reported issues with Llama 3.1 405B, including answer repetition and difficulties in understanding Asian symbols, leading many to consider reverting to Claude 3.5 Sonnet.

- Comparative evaluations suggested that while Llama 3.1 is a leap forward, Claude still holds an edge in speed and coding tasks.

- Exploring Dark Oxygen and Mercury's Diamonds: A recent discussion focused on Dark Oxygen, raising questions regarding its implications for atmospheric studies and ecological balance.

- Additionally, insights emerged about Diamonds on Mercury, sparking interest in the geological processes that could lead to their formation.

- Beach-Cleaning Robots Steal the Show: Innovations in beach-cleaning robot technology were highlighted, showcasing efforts to tackle ocean pollution effectively.

- The impact of these robots on marine ecosystems was a key point of discussion, with real-time data from trials being shared.

- Perplexity API's DSGVO Compliance DB: Concerns were raised about the Perplexity API being DSGVO-ready, with users seeking clarity on data protection compliance.

- The conversation included a share of the terms of service referencing GDPR compliance.

Stability.ai (Stable Diffusion) Discord

- Ranking AI Models with Kolors on Top: In the latest discussion, users ranked AI models, placing Kolors at the top due to its impressive speed and performance, followed by Auraflow, Pixart Sigma, and Hunyuan.

- Kolors' performance aligns well with user expectations for SD3.

- Training Lycoris Hits Compatibility Snags: Talks centered around training Lycoris using ComfyUI and tools like Kohya-ss, with users expressing frustration over compatibility requiring Python 3.10.9 or higher.

- There is anticipation for potential updates from Onetrainer to facilitate this process.

- Community Reacts to Stable Diffusion: Users debated the community's perception of Stable Diffusion, suggesting recent criticism often arises from misunderstandings around model licensing.

- Concerns were raised about marketing strategies and perceived negativity directed at Stability AI.

- Innovations in AI Sampling Methods: A new sampler node has been introduced, implementing Strong Stability Preserving Runge-Kutta and implicit variable step solvers, capturing user interest in AI performance enhancements.

- Users eagerly discussed the possible improvements these updates bring to AI model efficacy.

- Casual Chat on AI Experiences: General discussions flourished as users shared personal experiences with AI, including learning programming languages and assessing health-related focus challenges.

- Such casual conversations added depth to the understanding of daily AI applications.

OpenRouter (Alex Atallah) Discord

- Llama 3 405B Launch Competitively Priced: The Llama 3 405B has launched at $3/M tokens, rivaling GPT-4o and Claude 3.5 Sonnet while showcasing a remarkable 128K token context for generating synthetic data.

- Users reacted with enthusiasm, remarking that 'this is THE BEST open LLM now' and expressing excitement over the model's capabilities.

- Growing Concerns on Model Performance: Feedback on the Llama 405B indicates mixed performance results, especially in translation tasks where it underperformed compared to Claude and GPT-4.

- Some users reported the 70B version generated 'gibberish' after a few tokens, raising flags about its reliability for task-specific usage.

- Exciting OpenRouter Feature Updates: New features on OpenRouter include Retroactive Invoices, custom keys, and improvements to the Playground, enhancing user functionality overall.

- Community members are encouraged to share feedback here to further optimize the user experience.

- Multi-LLM Prompt Competition Launched: A prompting competition for challenging the Llama 405B, GPT-4o, and Claude 3.5 Sonnet has been announced, with participants vying for a chance to win 15 free credits.

- Participants are eager to know the judging criteria, especially regarding what qualifies as a tough prompt.

- DeepSeek Coder V2 Inference Provider Announced: The DeepSeek Coder V2 new private inference provider has been introduced, operating without input training, which broadens OpenRouter's offerings significantly.

- Users can start exploring the service via DeepSeek Coder.

CUDA MODE Discord

- Flash Attention Confusion in CUDA: A member questioned the efficient management of registers in Flash Attention, raising concerns about its use alongside shared memory in CUDA programming.

- This leads to a broader need for clarity in register allocation strategies in high-performance computing contexts.

- Memory Challenges with Torch Compile: Utilizing

torch.compilefor a small Bert model led to significant RAM usage, forcing a batch size cut from 512 to 160, as performance lagged behind eager mode.- Testing indicated that the model compiled successfully despite these concerns, highlighting memory management issues in PyTorch.

- Meta Llama 3.1 Focus on Text: Meta's Llama 3.1 405B release expanded context length to 128K and supports eight languages, excluding multi-modal features for now, sparking strategic discussions.

- This omission aligns with expectations around potential financial outcomes and competitive positioning ahead of earnings reports.

- Optimizing CUDA Kernel Performance: User experiences showed that transitioning to tiled matrix multiplication resulted in limited performance gains, similar to findings in a related article on CUDA matrix multiplication benchmarks.

- The discussion emphasized the importance of compute intensity for optimizing kernel performance, especially at early stages.

- Stable Diffusion Acceleration on AMD: A post detailed how to optimize inferencing for Stable Diffusion on RX7900XTX using the Composable Kernel library for AMD RDNA3 GPUs.

- Additionally, support for Flash Attention on AMD ROCm, effective for mi200 & mi300, was highlighted in a recent GitHub pull request.

OpenAI Discord

- GEMINI Competition Sparks Interest: A member expressed enthusiasm for the GEMINI Competition from Google, looking for potential collaborators for the hackathon.

- Reach out if you're interested to collaborate!

- Llama 3.1 Model Draws Mixed Reactions: Members reacted to the Llama-3.1 Model, with some labeling it soulless compared to earlier iterations like Claude and Gemini, which were seen to retain more creative depth.

- This discussion pointed out a divergence in experiences and expectations of recent models.

- Fine-Tuning Llama 3.1 for Uncensored Output: One user is working to fine-tune Llama-3.1 405B for an uncensored version, aiming to release it as Llama3.1-406B-uncensored on Hugging Face after several weeks of training.

- This effort highlights the ongoing interest in developing alternatives to constrained models.

- Voice AI in Discord Presents Challenges: Discussion arose around creating AI bots capable of engaging in Discord voice channels, emphasizing the complexity of the task due to current limitations.

- Members noted the technical challenges that need addressing for effective implementation.

- Eager Anticipation for Alpha Release: Members are keenly awaiting the alpha release, with some checking the app every 20 minutes, expressing uncertainty about whether it will launch at the end of July or earlier.

- There's a call for clearer communications from developers regarding timelines.

Modular (Mojo 🔥) Discord

- Mojo Community Meeting Presentations Open Call: There's an open call for presentations at the Mojo Community Meeting on August 12 aimed at showcasing what developers are building in Mojo.

- Members can sign up to share experiences and projects, enhancing community engagement.

- String and Buffer Optimizations Take the Stage: Work on short string optimization and small buffer optimization in the standard library is being proposed for presentation, highlighting its relevance for future meetings.

- This effort aligns with past discussion themes centered on performance enhancements.

- Installing Mojo on an Ubuntu VM Made Simple: Installation of Mojo within an Ubuntu VM on Windows is discussed, with WSL and Docker suggested as feasible solutions.

- Concerns about possible installation issues are raised, but the general consensus is that VM usage is suitable.

- Mojo: The Future of Game Engine Development: Mojo's potential for creating next-gen game engines is discussed, emphasizing its strong support for heterogeneous compute via GPU.

- Challenges with allocator handling are noted, indicating some hurdles in game development patterns.

- Linking Mojo with C Libraries: There’s ongoing dialogue about improving Mojo's linking capabilities with C libraries, especially utilizing libpcap.

- Members advocate for ktls as the default for Mojo on Linux to enhance networking functionalities.

Eleuther Discord

- FSDP Performance Troubles with nn.Parameters: A user faced a 20x slowdown when adding

nn.Parameterswith FSDP, but a parameter size of 16 significantly enhanced performance.- They discussed issues about buffer alignment affecting CPU performance despite fast GPU kernels.

- Llama 3.1 Instruct Access on High-End Hardware: A member successfully hosted Llama 3.1 405B instruct on 8xH100 80GB, available via a chat interface and API.

- However, access requires a login, raising discussions on costs and hardware limitations.

- Introducing Switch SAE for Efficient Training: The Switch SAE architecture improves scaling in sparse autoencoders (SAEs), addressing training challenges across layers.

- Relevant papers suggest this could help recover features from superintelligent language models.

- Concerns Over Llama 3's Image Encoding: Discussion surfaced regarding Llama 3's image encoder resolution limit of 224x224, with suggestions to use a vqvae-gan style tokenizer for enhancement.

- Suggestions were made to follow Armen's group, highlighting potential improvements.

- Evaluating Task Grouping Strategies: Members recommended using groups for nested tasks and tags for simpler arrangements, as endorsed by Hailey Schoelkopf.

- This method aims to streamline task organization effectively.

Interconnects (Nathan Lambert) Discord

- Meta's Premium Llama 405B Rollout: Speculation suggests that Meta may announce a Premium version of Llama 405B on Jul 23, after recently removing restrictions on Llama models, paving the way for more diverse applications.

- This change sparks discussions about broader use cases, departing from merely enhancing other models.

- NVIDIA's Marketplace Strategies: Concerns about NVIDIA potentially monopolizing the AI landscape were raised, aiming to combine hardware, CUDA, and model offerings.

- A user pointed out that such dominance might lead to immense profits, though regulatory challenges could impede this vision.

- OpenAI's Pricing Dynamics: OpenAI's introduction of free fine-tuning for gpt-4o-mini up to 2M tokens/day has ignited discussions about the competitive pricing environment in AI.

- Members characterized the pricing landscape as chaotic, emerging in response to escalating competition.

- Llama 3.1 Surpasses Expectations: The launch of Llama 3.1 introduced models with 405B parameters and enhanced multilingual capabilities, demonstrating similar performance to GPT-4 in evaluations.

- The conversation about potential model watermarking and user download tracking ensued, focusing on compliance and privacy issues.

- Magpie's Synthetic Data Innovations: The Magpie paper highlights a method for generating high-quality instruction data for LLMs that surpasses existing data sources in vocabulary diversity.

- Notably, LLaMA 3 Base finetuned on the Magpie IFT dataset outperformed the original LLaMA 3 Instruct model by 9.5% on AlpacaEval.

OpenAccess AI Collective (axolotl) Discord

- Llama 3.1 Release Generates Mixed Reactions: The Llama 3.1 release has stirred mixed feelings, with concerns about its utility particularly in the context of models like Mistral. Some members expressed their dissatisfaction, as captured by one saying, 'Damn they don't like the llama release'.

- Despite the hype, the feedback indicates a need for better performance metrics and clearer advantages over predecessors.

- Users Face Training Challenges with Llama 3.1: Errors related to the

rope_scalingconfiguration while training Llama 3.1 have contributed to community frustration. A workaround was found by updating transformers, showcasing resilience among users as one remarked, 'Seems to have worked thx!'.- This highlights a broader theme of troubleshooting that persists with new model releases.

- Concerns Over Language Inclusion in Llama 3.1: The exclusion of Chinese language support in Llama 3.1 has sparked discussions about its global implications. While the tokenizer includes Chinese, its lack of prioritization was criticized as a strategic blunder.

- This conversation points to the ongoing necessity for language inclusivity in AI models.

- Evaluation Scores Comparison: Llama 3.1 vs Qwen: Community discussions focused on comparing the cmmlu and ceval scores of Llama 3.1, revealing only marginal improvements. Members pointed out that while Qwen's self-reported scores are higher, differences in evaluation metrics complicate direct comparisons.

- This reflects the community's ongoing interest in performance benchmarks across evolving models.

- Exploring LLM Distillation Pipeline: A member shared the LLM Distillery GitHub repo, highlighting a pipeline focusing on precomputing logits and KL divergence for LLM distillation. This indicates a proactive approach to refining distillation processes.

- The community's interest in optimizing such pipelines reflects an ongoing commitment to improving model training efficiencies.

DSPy Discord

- Code Confluence Tool Generates GitHub Summaries: Inspired by DSPY, a member introduced Code Confluence, an OSS tool built with Antlr, Chapi, and DSPY pipelines, designed to create detailed summaries of GitHub repositories. The tool's performance is promising, as demonstrated on their DSPY repo.

- They also shared resources including the Unoplat Code Confluence GitHub and the compilation of summaries called OSS Atlas.

- New AI Research Paper Alert: A member shared a link to an AI research paper titled 2407.12865, sparking interest in its findings. Community members are encouraged to analyze and discuss its implications.

- Requests were made for anyone who replicates the findings in code or locates existing implementations to share them.

- Comparison of JSON Generation Libraries: Members discussed the strengths of libraries like Jsonformer and Outlines for structured JSON generation, noting that Outlines offers better support for Pydantic formats. While Jsonformer excels in strict compliance, Guidance and Outlines offer flexibility, adding complexity.

- Taking into account the community's feedback, they are exploring the practical implications of each library in their workflow.

- Challenges with Llama3 Structured Outputs: Users expressed difficulty obtaining properly structured outputs from Llama3 using DSPY. They suggested utilizing

dspy.configure(experimental=True)with TypedChainOfThought to enhance success rates.- Concerns were raised over viewing model outputs despite type check failures, with

inspect_historyfound to have limitations for debugging.

- Concerns were raised over viewing model outputs despite type check failures, with

- Exploring ColPali for Medical Documents: A member shared experiences using ColPali for RAG of medical documents with images due to prior failures with ColBert and standard embedding models. Plans are underway to investigate additional vision-language models.

- This exploration aims to bolster the effectiveness of information retrieval from complex document types.

LlamaIndex Discord

- LlamaIndex Webinar on Efficient Document Retrieval: Join the upcoming webinar discussing Efficient Document Retrieval with Vision Language Models this Friday at 9am PT. Signup here to explore cutting-edge techniques.

- ColPali introduces an innovative technique that directly embeds page screenshots with Vision Language Models, enhancing retrieval over complex documents that traditional parsing struggles with.

- TiDB Future App Hackathon Offers $30,000 in Prizes: Participate in the TiDB Future App Hackathon 2024 for a chance to win from a prize pool of $30,000, including $12,000 for the top entry. This competition urges innovative AI solutions using the latest TiDB Serverless with Vector Search.

- Coders are encouraged to collaborate with @pingcap to showcase their best efforts in building advanced applications.

- Explore Mixture-of-Agents with LlamaIndex: A new video showcases the approach 'mixture of agents' using multiple local language models to potentially outmatch standalone models like GPT-4. Check the step-by-step tutorial for insights into this enhancing technique.

- Proponents suggest this method could provide a competitive edge, especially in projects requiring diverse model capabilities.

- Llama 3.1 Models Now Available: The Llama 3.1 series now includes models of 8B, 70B, and 405B, accessible through LlamaIndex with Ollama, although the largest model demands significant computing resources. Explore hosted solutions at Fireworks AI for support.

- Users should evaluate their computational capacity when opting for larger models to ensure optimal performance.

- Clarifying context_window Parameters for Improved Model Usage: The

context_windowparameter defines the total token limit that affects both input and output capacity of models. Miscalculating this can result in errors like ValueError due to exceeding limits.- Users are advised to adjust their input sizes or select models with larger context capabilities to optimize output efficiency.

Latent Space Discord

- Excitement Surrounds Llama 3.1 Launch: The release of Llama 3.1 includes the 405B model, marking a significant milestone in open-source LLMs with remarkable capabilities rivaling closed models.

- Initial evaluations show it as the first open model with frontier capabilities, praised for its accessibility for iterative research and development.

- International Olympiad for Linguistics (IOL): The International Olympiad for Linguistics (IOL) commenced, challenging students to translate lesser-known languages using logic, mirroring high-stakes math competitions.

- Participants tackle seemingly impossible problems in a demanding six-hour time frame, highlighting the intersection of logical reasoning and language.

- Llama Pricing Insights: Pricing for Llama 3.1's 405B model ranges around $4-5 per million tokens across platforms like Fireworks and Together.

- This competitive pricing strategy aims to capture market share before potentially increasing rates with growing adoption.

- Evaluation of Llama's Performance: Early evaluations indicate Llama 3.1 ranks highly on benchmarks including GSM8K and logical reasoning on ZebraLogic, landing between Sonnet 3.5 and GPT-4o.

- Challenges like maintaining schema adherence after extended token lengths were noted in comparative tests.

- GPT-4o Mini Fine-Tuning Launch: OpenAI announced fine-tuning capabilities for GPT-4o mini, available to tier 4 and 5 users, with the first 2 million training tokens free each day until September 23.

- This initiative aims to expand access and customization, as users assess its performance against the newly launched Llama 3.1.

LangChain AI Discord

- AgentState vs InnerAgentState Explained: A discussion clarified the difference between

AgentStateandInnerAgentState, with definitions forAgentStateprovided and a suggestion to check the LangChain documentation for further details.- Key fields of

AgentStateincludemessagesandnext, essential for context-dependent operations within LangChain.

- Key fields of

- Setting Up Chroma Vector Database: Instructions were shared on how to set up Chroma as a vector database with open-source solutions in Python, requiring the installation of

langchain-chromaand running the server via Docker.- Examples showed methods like

.add,.get, and.similarity_search, highlighting the necessity of an OpenAI API Key forOpenAIEmbeddingsusage.

- Examples showed methods like

- Create a Scheduler Agent using Composio: A guide for creating a Scheduler Agent with Composio, LangChain, and ChatGPT enables streamlined event scheduling via email. The guide is available here.

- Composio enhances agents with effective tools, demonstrated in the scheduler examples, emphasizing efficiency in task handling.

- YouTube Notes Generator is Here!: The launch of the YouTube Notes Generator, an open-source project for generating notes from YouTube videos, was announced, aiming to facilitate easier note-taking directly from video content.

- Learn more about this tool and its functionality on LinkedIn.

- Efficient Code Review with AI: A new video titled 'AI Code Reviewer Ft. Ollama & Langchain' introduces a CLI tool aimed at enhancing the code review process for developers; watch it here.

- This tool aims to streamline workflow by promoting efficient code evaluations across development teams.

Cohere Discord

- New Members Join the Cohere Community: New members showcased enthusiasm about joining Cohere, igniting a positive welcome from the community.

- Community welcomes newbies with open arms, creating an inviting atmosphere for discussions.

- Innovative Fine-Tuning with Midicaps Dataset: Progress on fine-tuning efforts surfaced with midicaps, showing promise based on previous successful projects.

- Members highlighted good results from past endeavors, indicating potential future breakthroughs.

- Clarifying Cohere's OCR Solutions: Cohere utilizes unstructured.io for its OCR capabilities, keeping options open for external integrations.

- The community engaged in fruitful discussions about the customization and enhancement of OCR functionalities.

- RAG Chatbot Systems Explored: Chat history management in RAG-based ChatBot systems became a hot topic, highlighting the use of vector databases.

- Feedback mechanisms such as thumbs up/down were proposed to optimize interaction experiences.

- Launch of Rerank 3 Nimble with Major Improvements: Rerank 3 Nimble hits the scene, delivering 3x higher throughput while keeping accuracy in check, now available on AWS SageMaker.

- Say hello to increased speed for enterprise search! This foundation model boosts performance for retrieval-augmented generation.

Torchtune Discord

- Llama 3.1 is officially here!: Meta released the latest model, Llama 3.1, this morning, with support for the 8B and 70B instruct models. Check out the details in the Llama 3.1 Model Cards and Prompt formats.

- The excitement was palpable, leading to humorous remarks about typos and excitement-induced errors.

- MPS Support Pull Request Discussion: The pull request titled MPS support by maximegmd introduces checks for BF16 on MPS devices, aimed at improving testing on local Mac computers. Discussions indicate potential issues due to a common ancestor diff, suggesting a rebase might be a better approach.

- This PR was highlighted as a critical update for those working with MPS.

- LoRA Issues Persist: An ongoing issue regarding the LoRA implementation not functioning as expected was raised, with suggestions made for debugging. One contributor noted challenges with CUDA hardcoding in their recent attempts.

- This issue underscores the need for deeper troubleshooting in model performance.

- Git Workflow Challenges Abound: Git workflow challenges have been a hot topic, with many feeling stuck in a cycle of conflicts after resolving previous ones. Suggestions were made to tweak the workflow to minimize these conflicts.

- Effective strategies for conflict resolution seem to be an ever-pressing need among the contributors.

- Pad ID Bug Fix PR Introduced: A critical bug related to pad ID displaying in generate was addressed in Pull Request #1211 aimed at preventing this issue. It clarifies the implicit assumption of Pad ID being 0 in utils.generate.

- This fix is pivotal for ensuring proper handling of special tokens in future generative tasks.

tinygrad (George Hotz) Discord

- Help needed for matmul-free-llm recreation: There's a request for assistance in recreating matmul-free-llm with tinygrad, aiming to leverage efficient kernels while incorporating fp8.

- Hoping for seamless adaptation to Blackwell fp4 soon.

- M1 results differ from CI: An M1 user is experiencing different results compared to CI, seeking clarification on setting up tests correctly with conda and environment variables.

- There's confusion due to discrepancies when enabling

PYTHON=1, as it leads to an IndexError in tests.

- There's confusion due to discrepancies when enabling

- cumsum performance concerns: A newcomer is exploring the O(n) implementation of nn.Embedding in tinygrad and how to improve cumsum from O(n^2) to O(n) using techniques from PyTorch.

- There’s speculation about constraints making this challenging, especially as it's a $1000 bounty.

- Seeking Pattern for Incremental Testing with PyTorch: A member inquired about effective patterns for incrementally testing model performance in the sequence of Linear, MLP, MoE, and LinearAttentionMoE using PyTorch.

- They questioned whether starting tests from scratch is more efficient than incremental testing.

- Developing Molecular Dynamics Engine in Tinygrad: A group is attempting to implement a Molecular Dynamics engine in tinygrad to train models predicting energies of molecular configurations, facing challenges with gradient calculations.

- They require the gradient of predicted energy concerning input positions for the force, but issues arise because they backpropagate through the model weights twice.

LAION Discord

- Int8 Implementation Confirmed: Members discussed using Int8, with confirmation from one that it works, showing developer interest in optimization techniques.

- Hold a sec was requested, indicating a potential for additional guidance and community support during the implementation.

- ComfyUI Flow Script Guidance: A user requested a script for ComfyUI flow, leading to advice on utilizing this framework for smoother setup processes.

- This reflects a community trend towards efficiency and preferred workflows when working with complex system integrations.

- Llama 3.1 Sets New Standards: The release of Llama 3.1 405B introduces a context length of 128K, offering significant capabilities across eight languages.

- This leap positions Llama 3.1 as a strong contender against leading models, with discussions focusing on its diverse functionality.

- Meta's Open Source Commitment: Meta underlined its dedication to open source AI, as described in Mark Zuckerberg’s letter, highlighting developer and community benefits.

- This aligns with their vision to foster collaboration within the AI ecosystem, aiming for wider accessibility of tools and resources.

- Context Size Enhancements in Llama 3.1: Discussions criticized the previous 8K context size as insufficient for large documents, now addressed with the new 128K size in Llama 3.1.

- This improvement is viewed as crucial for tasks needing extensive document processing, elevating model performance significantly.

OpenInterpreter Discord

- Llama 3.1 405 B Amazes Users: Llama 3.1 405 B is reported to work fantastically out of the box with OpenInterpreter. Unlike GPT-4o, there's no need for constant reminders or restarts to complete multiple tasks.

- Users highlighted that the experience provided by Llama 3.1 405 B significantly enhances productivity when compared to GPT-4o.

- Frustrations with GPT-4o: A user expressed challenges with GPT-4o, requiring frequent prompts to perform tasks on their computer. This frustration underscores the seamless experience users have with Llama 3.1 405 B.

- The comparison made suggests a user preference leaning towards Llama 3.1 405 B for efficient task management.

- Voice Input on MacOS with Coqui Model?: A query arose about using voice input with a local Coqui model on MacOS. No successful implementations have been reported yet.

- Community engagement remains open, but no further responses have surfaced to clarify the practicality of this application.

- Expo App's Capability for Apple Watch: Discussion affirmed that the Expo app should theoretically be able to build applications for the Apple Watch. However, further details or confirmations were not provided.

- While optimistic, the community awaits practical validation of this capability in an Apple Watch context.

- Shipping Timeline for the Device: A member inquired about the shipping timeline for a specific device, indicating curiosity about its status. No updates or timelines were shared in the conversation.

- The lack of information points to an opportunity for clearer communication regarding shipping statuses.

Alignment Lab AI Discord

- Clarification on OpenOrca Dataset Licensing: A member inquired whether the MIT License applied to the OpenOrca dataset permits commercial usage of outputs derived from the GPT-4 Model.

- Can its outputs be used for commercial purposes? highlights the ongoing discussion around dataset licensing in AI.

- Plans for Open Sourcing Synthetic Dataset: Another member revealed intentions to open source a synthetic dataset aimed at supporting both commercial and non-commercial projects, highlighting its relevance in the AI ecosystem.

- They noted an evaluation of potential dependencies on OpenOrca, raising questions about its licensing implications in the broader dataset landscape.

LLM Finetuning (Hamel + Dan) Discord

- Miami Meetup Interest Sparks Discussion: A member inquired about potential meetups in Miami, seeking connections with others in the area for gatherings.

- So far, there have been no further responses or arrangements mentioned regarding this meetup inquiry.

- NYC Meetup Gains Traction for August: Another member expressed interest in attending meetups in NYC in late August, indicating a desire for community engagement.

- This discussion hints at the possible coordination of events for local AI enthusiasts in the New York area.

AI Stack Devs (Yoko Li) Discord

- Artist Seeking Collaboration: Aria, a 2D/3D artist, expressed interest in collaborating with others in the community. They invited interested members to reach out via DM for potential projects.

- This presents an opportunity for anyone in the guild looking to incorporate artistic skills into their AI projects, particularly in visualization or gaming.

- Engagement Opportunities for AI Engineers: The call for collaboration emphasizes the growing interest in merging AI engineering with creative domains like art and design.

- Such collaborations can enhance the visual aspects of AI projects, potentially leading to more engaging user experiences.

Mozilla AI Discord

- Mozilla Accelerator Application Deadline Approaches: The application deadline for the Mozilla Accelerator is fast approaching, offering a 12-week program with up to $100k in non-dilutive funds.

- Participants will also showcase their projects on demo day with Mozilla, providing a pivotal moment for feedback and exposure. Questions?

- Get Ready for Zero Shot Tokenizer Transfer Event: A reminder of the upcoming Zero Shot Tokenizer Transfer event with Benjamin Minixhofer, scheduled for this month.

- Details can be found in the event's link, encouraging participation from interested engineers.

- Introducing AutoFix: The Open Source Issue Fixer: AutoFix is an open-source tool that can submit PRs directly from Sentry.io, streamlining issue management.

- Learn more about this tool's capabilities in the detailed post linked here: AutoFix Information.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!