[AINews] Llama-3-70b is GPT-4-level Open Model

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 4/18/2024-4/19/2024. We checked 6 subreddits and 364 Twitters and 27 Discords (395 channels, and 10403 messages) for you. Estimated reading time saved (at 200wpm): 958 minutes.

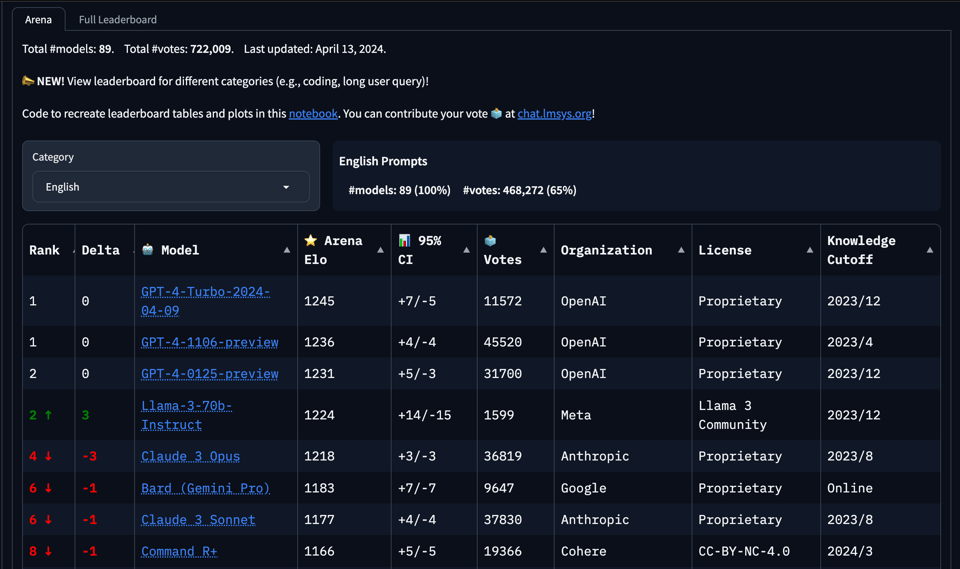

With a sample size of 1600 votes, the early results from Lmsys were even better than reported benchmarks suggested, which is rare these days:

This is the first open model to beat Opus, which itself was the first model to briefly beat GPT4 Turbo. Of course this may drift over time, but things bode very well for Llama-3-400b when it drops.

Already Groq is serving the 70b model at 500-800 tok/s, which makes Llama 3 the hands-down fastest GPT-4-level token source, period.

With recent replication results on Chinchilla coming under some scrutiny (don't miss Susan Zhang's banger, acknowledged by a Chinchilla coauthor), Llama 2 and 3 (and Mistral, to a less open extent) have pretty conclusively consigned Chinchilla laws to the dustbin of history.
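To make the Chinchilla comparison concrete, here is a rough back-of-the-envelope sketch. It assumes the commonly quoted rule of thumb of about 20 training tokens per parameter for Chinchilla-optimal training (an approximation, not a figure from this newsletter) and uses the 15T-token training set reported for Llama 3 in the Twitter recap.

```python
# Back-of-the-envelope tokens-per-parameter comparison (a sketch only).
# Assumption: Chinchilla-optimal training uses roughly 20 tokens per parameter.
CHINCHILLA_TOKENS_PER_PARAM = 20
LLAMA3_TRAIN_TOKENS = 15e12  # 15T tokens, as reported for Llama 3


def tokens_per_param(train_tokens: float, params: float) -> float:
    """Training tokens seen per model parameter."""
    return train_tokens / params


for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
    ratio = tokens_per_param(LLAMA3_TRAIN_TOKENS, params)
    multiple = ratio / CHINCHILLA_TOKENS_PER_PARAM
    print(f"{name}: ~{ratio:.0f} tokens/param, ~{multiple:.0f}x the rule of thumb")
```

Under these assumptions the 8B model sees on the order of a thousand-plus tokens per parameter, far beyond what a compute-optimal recipe would suggest.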

Table of Contents

- AI Reddit Recap

- AI Twitter Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- LM Studio Discord

- Nous Research AI Discord

- CUDA MODE Discord

- OpenAccess AI Collective (axolotl) Discord

- Stability.ai (Stable Diffusion) Discord

- Latent Space Discord

- Eleuther Discord

- LAION Discord

- HuggingFace Discord

- OpenRouter (Alex Atallah) Discord

- OpenAI Discord

- Interconnects (Nathan Lambert) Discord

- Modular (Mojo 🔥) Discord

- Cohere Discord

- LlamaIndex Discord

- DiscoResearch Discord

- OpenInterpreter Discord

- LangChain AI Discord

- Alignment Lab AI Discord

- Mozilla AI Discord

- Skunkworks AI Discord

- LLM Perf Enthusiasts AI Discord

- Datasette - LLM (@SimonW) Discord

- tinygrad (George Hotz) Discord

- AI21 Labs (Jamba) Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/Singularity. Comment crawling works now but has lots to improve!

Meta's Llama 3 Release and Capabilities

- Llama 3 released as most capable open LLM: Meta has released Llama 3, their most capable openly available large language model to date. In /r/LocalLLaMA, it was noted that 8B and 70B parameter versions are available, supporting 8K context length. An open-source code interpreter for the 70B model was also shared.

- Llama 3 outperforms previous models in benchmarks: Benchmarks shared in /r/LocalLLaMA show Llama 3 8B instruct outperforming the previous Llama 2 70B instruct model across various tasks. The 70B model provides GPT-4 level performance at over 20x lower cost based on API pricing. Tests also showed Llama 3 8B exceeding Mistral 7B on function calling and arithmetic.

Image/Video AI Progress and Stable Diffusion 3

- Lifelike talking face generation and impressive video AI: Microsoft unveiled VASA-1 for generating lifelike talking faces from audio. Meta's image and video generation UI was called "incredible" in /r/singularity.

- Stable Diffusion 3 impressions and extensions: In /r/StableDiffusion, it was noted that Imagine.art gave a false impression of SD3's capabilities compared to other services. A Forge Couple extension adding draggable subject regions for SD was also shared.

AI Scaling Challenges and Compute Requirements

- AI energy usage and GPU demand increasing rapidly: Discussions in /r/singularity highlighted that AI's computing power needs could overwhelm energy sources by 2030. Elon Musk stated training Grok 3 will require 100,000 Nvidia H100 GPUs, while AWS plans to acquire 20,000 B200 GPUs for a 27 trillion parameter model.

AI Safety, Bias and Societal Impact Discussions

- Political bias and AI safety concerns: In /r/singularity, it was argued that perceived "political bias" in AI reflects more on political parties than the models. Llama 3 was noted for its honesty and self-awareness in interactions. Discussions emerged weighing AI doomerism vs optimism for beneficial AI development.

- AI's potential to break encryption: A post in /r/singularity discussed the "quantum cryptopocalypse" and when AI could break current encryption methods.

AI Memes and Humor

- Various AI memes were shared, including the future of AI-generated memes, waiting for OpenAI's response to Llama 3, the AGI race between AI companies, and a parody trailer for humanity's AI future.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Meta Llama 3 Release

- Model Details: @AIatMeta released Llama 3 models in 8B and 70B sizes, with a 400B+ model still in training. Llama 3 uses a 128K vocab tokenizer and was trained on 15T tokens (7x more than Llama 2). It has an 8K context window and used SFT, PPO, and DPO for alignment.

- Performance: @karpathy noted Llama 3 70B broadly outperforms Gemini Pro 1.5 and Claude 3 Sonnet, with Llama 3 8B outperforming Gemma 7B and Mistral 7B Instruct. @bindureddy highlighted the 400B version approaching GPT-4 level performance on benchmarks.

- Availability: @ClementDelangue noted Llama 3 was the fastest model from release to #1 trending on Hugging Face. It's also available through @awscloud, @Azure, @Databricks, @GoogleCloud, @IBM, @NVIDIA and more.

Open Source AI Landscape

- Significance: @bindureddy argued most AI innovation in the open-source ecosystem will happen on the Llama architecture going forward. @Teknium1 felt Llama 3 disproved claims that finetuning can't teach models new knowledge or that 10K samples is the best for instruction finetuning.

- Compute Trends: @karpathy shared an update on llm.c, which trains GPT-2 on GPU at speeds matching PyTorch in 2K lines of C/CUDA code. He noted the importance of hyperoptimizing code for performance.

- Commercialization: @abacaj argued the price of tokens is plummeting as anyone can take Llama weights and optimize runtime. @DrJimFan predicted GPT-5 will be announced before Llama 3 400B releases, as OpenAI times releases based on open-source progress.

Ethical and Societal Implications

- Employee Treatment: @mmitchell_ai expressed empathy for Googlers fired for protesting, noting the importance of respecting employees even in disagreements.

- Data Transparency: @BlancheMinerva argued training data transparency is an unambiguous societal win, but incentives are currently against companies doing it.

- Ethics Requirements: @francoisfleuret imagined a world where email and web clients had to comply with the same ethical requirements as LLMs today.

AI Discord Recap

A summary of Summaries of Summaries

Meta's Llama 3 Release Sparks Excitement and Debate

- Meta released Llama 3, a new family of large language models in 8B and 70B parameter sizes, with pre-trained and instruction-tuned versions optimized for dialogue. Llama 3 boasts a new tokenizer with a 128K-token vocabulary for multilingual use and claims improved reasoning capabilities over previous models. [Blog]

- Discussions centered around Llama 3's performance benchmarks against models like GPT-4, Mistral, and GPT-3.5. Some praised its human-like responses, while others noted limitations in non-English languages despite its multilingual training.

- Licensing restrictions on downstream use of Llama 3 outputs were criticized by some as hampering open-source development. [Tweet]

- Anticipation built around Meta's planned 405B parameter Llama 3 model, which is speculated to be open-weight and could shift the landscape for open-source AI versus closed models like GPT-5.

- Tokenizer configuration issues, infinite response loops, and compatibility with existing tools like LLamaFile were discussed as Llama 3 was integrated across platforms.

Mixtral Raises the Bar for Open-Source AI

- The Mixtral 8x22B model from Mistral AI was lauded as setting new standards for performance and efficiency in open-source AI, utilizing a sparse Mixture-of-Experts (MoE) architecture. [YouTube]

- Benchmarks showed the Mera-mix-4x7B MoE model achieving competitive results like 75.91 on OpenLLM Eval, despite being smaller than Mixtral 8x7B.

- Multilingual capabilities were explored, with a new Mixtral-8x22B-v0.1-Instruct-sft-en-de model fine-tuned on English and German data.

- Technical challenges like shape errors, OOM issues, and router_aux_loss_coef parameter tuning were discussed during large model training.
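The sparse Mixture-of-Experts idea mentioned above can be sketched in a few lines: a router scores every expert, only the top-k experts run, and their outputs are mixed with softmax weights. This is a toy illustration with scalar "experts" and hand-written router logits, all of which are hypothetical; it is not Mistral's implementation and it omits the auxiliary load-balancing loss that `router_aux_loss_coef` scales.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Return [(expert_index, weight)] for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, router_logits, experts, k=2):
    """Run only the selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Four toy experts that just scale their input (hypothetical stand-ins for MLPs).
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 1.0]  # hypothetical router scores for one token

print(top_k_route(logits))            # experts 1 and 3 are selected
print(moe_layer(1.0, logits, experts))
```

Only two of the four experts execute per token here, which is the source of the efficiency claim: parameter count grows with the number of experts while per-token compute grows with k.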

Efficient Inference and Model Compression Gain Traction

- Quantization techniques like GPTQ and 4-bit models from Unsloth AI aimed to improve inference efficiency for large models, with reports of 80% less memory usage compared to vanilla implementations.

- LoRA (Low-Rank Adaptation) and Flash Attention were recommended for efficient LLM fine-tuning, along with tools like DeepSpeed for gradient checkpointing.

- Innovations like Half-Quadratic Quantization (HQQ) and potential CUDA kernel optimizations were explored for further compression and acceleration of large models on GPUs.

- Serverless inference solutions with affordable GPU hosting were shared, catering to cost-conscious developers deploying LLMs.
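As a rough illustration of why 4-bit quantization matters for the model sizes discussed here, the sketch below estimates the memory needed for the weights alone. It deliberately ignores activations, the KV cache, and the per-group scales and zero-points that real quantization schemes store, so the figures are lower bounds rather than measurements of any particular tool.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


for name, n_params in [("8B", 8e9), ("70B", 70e9)]:
    fp16 = weight_memory_gb(n_params, 16)
    int4 = weight_memory_gb(n_params, 4)
    saving = 100 * (1 - int4 / fp16)
    print(f"{name}: fp16 ~{fp16:.0f} GB, 4-bit ~{int4:.0f} GB ({saving:.0f}% smaller)")
```

Weights alone shrink by 75% when going from 16 to 4 bits; larger reported savings would have to come from other optimizations as well.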

Open-Source Tooling and Applications Flourish

- LlamaIndex showcased multiple projects: building RAG applications with Elasticsearch [Blog], supporting Llama 3 [Tweet], and creating code-writing agents [Collab].

- LangChain saw the release of a prompt engineering course [LinkedIn] and the Tripplanner Bot utilizing travel APIs [GitHub].

- Cohere users discussed database integration, RAG workflows, and commercial licensing limitations for edge deployments.

- OpenRouter confirmed production use at Olympia.chat and anticipated Llama 3 integration, while LM Studio released Llama 3 support in v0.2.20.

Emerging Research Highlights

- A new best-fit packing algorithm optimizes document packing for LLM training, reducing truncations [Paper].

- The softmax bottleneck was linked to saturation and underperformance in smaller LLMs [Paper].

- DeepMind shared progress on Sparse Autoencoders (SAEs) for interpretability [Blog].

- Chinchilla scaling laws were reinterpreted, suggesting more parameters could be prioritized over data for optimal scaling.

PART 1: High level Discord summaries

Perplexity AI Discord

- Opus Users Hit Query Quota Quandary: Pro users are frustrated by a reduction in Opus queries from 600 to 30 per day, stirring up calls for a revised refund policy given that the change came without advance notice.

- Model Mastery Marathon: Comparisons between Llama 3 70b, Claude, and GPT-4 centered around coding prowess, table lookups, and multilingual proficiency, alongside strategies for bypassing AI content detectors critical for deploying AI-generated content.

- Anime Aesthetics and Image Implications: There's a surge of interest in applying AI to animations and images, referencing DALL-E 3 and Stable Diffusion XL, despite some challenges in harnessing their capabilities effectively.

- Interpreting Complexity in AI Riddles: A complex snail riddle became a testbed for evaluating AI reasoning with models, highlighting the need for AIs that can navigate beyond simple puzzles.

- Mixtral Sings Cohen, APIs Prove Playful: Mixtral-8x22B accurately interpreted Leonard Cohen's "Avalanche," while users confirmed Perplexity AI's chat models work with the API, giving life to applications like Mistral, Sonar, Llama, and CodeLlama.

Unsloth AI (Daniel Han) Discord

- Llama 3 Outpaces GPT-4: The release of Meta's Llama 3 has ignited discussions comparing its tokenizer advantages and performance benchmarks against OpenAI’s GPT-4, with the anticipation of a large 400B model iteration. Debate is ongoing regarding the VRAM requirements for training across different GPUs, and Unsloth AI has quickly integrated Llama 3, touting improved training efficiency.

- Unsloth Showcases Efficiency in 4-bits: Unsloth AI has updated its offerings with 4-bit models of Llama 3 for enhanced efficiency, with accessible models including 8B and 70B versions available on Hugging Face. Free Colab and Kaggle notebooks for Llama 3 have been provided, enabling users to more easily experiment and innovate.

- Unsloth Users Tackle Training and Inference Challenges: The community within Unsloth AI is actively engaging in troubleshooting various complexities like tokenizer issues and training script shortcomings during fine-tuning of models like vllm. Unsloth has noted issues with Llama 3's tokenizer and informed users about their resolution efforts.

- Community Endures Model Mergers and Extensions: Interesting developments like Mixtral 8x22B, a substantial MoE model, and Neural Llama 3's addition to Hugging Face suggest steady advancement in model capabilities. User conversations also include practical advice and support on puzzles like JSON decoding errors, dataset structures, and memory limitations on platforms like Colab.

- AI Pioneers Propose ReFT: The potential integration of the ReFT (Reinforced Fine-Tuning) method into the Unsloth platform has sparked interest among users. This method, noted for potentially aiding newcomers, is under consideration by the Unsloth team, reflecting the community’s proactive approach to refine and expand tool capabilities.

LM Studio Discord

- Llama 3 Takes Center Stage: Meta's Llama 3 model is stirring up discussions, with users exploring the 70B and 8B versions, acknowledging its human-like responses comparable to larger models. Issues like infinite loops were noted, and the newly released Llama 3 70B Instruct promises to match GPT-3.5's performance and is available on Hugging Face.

- Hardware Hurdles and Triumphs: There's active conversation around running AI models on various hardware configurations. A 1080TI GPU is highlighted for adequate AI model processing, while compatibility challenges for AMD GPUs, like the lack of AMD HIP SDK support for certain cards, are acknowledged. Additionally, model quantization versions like K_S and Q4_K_M raised issues, but Quantfactory versions were suggested as superior.

- LM Studio Updates and Integration: The latest update, LM Studio 0.2.20, includes support for Llama 3. Users are encouraged to update via lmstudio.ai or by restarting the app. However, there's emphasis that only GGUFs from "lmstudio-community" will work for now. Discussions are also ongoing about ROCm support for AMD hardware with Llama 3 now supported on the ROCm Preview 0.2.20.

- Innovations in Usability and Compatibility: A new feature called the "prompt studio" has been launched, allowing users to fine-tune their prompts in an Electron app built using Vue 3 and TypeScript. Meanwhile, llamafile is being lauded for its compatibility across various systems, contrasting with LM Studio's AVX2 requirement. Users advocate for backward compatibility, pointing out the issue with keeping the AVX beta as up-to-date as the main channel.

- Efficiency and Community Contributions in AI: Efficient IQ1_M and IQ2_XS quants, with the IQ1_M variant requiring less than 20GB of VRAM, showcase community efforts toward optimized AI model performance. Moreover, Llama 3 70B Instruct model quants, lauded for efficiency and compatibility with LM Studio, are now accessible, hinting at a forward leap in open-source AI.

Nous Research AI Discord

A Call for Multi-GPU Support: There are struggles with achieving efficient long context inference for models like Jamba using multi-GPU setups; deepspeed and accelerate documentation lack guidance on the matter.

Ripe for an Invite: TheBloke's Discord server resolved its inaccessible invite issue, with the new link now available: Discord Invite.

Reports Go Commando: The /report command has been introduced for effectively reporting rule violators within the server.

Llama 3 Ignites Benchmarking Blaze: Llama 3 is being rigorously benchmarked and compared to Mistral among users, with its performance and AI chat templates under the lens. Concerns about model limitations, such as the 8k token context limit, and restrictive licensing were prominent.

Pickle Cautions and AI Index: Dialogues on compromised systems via insecure pickle files and non-robust GPT models featured in the conversation. The AI community was directed to the AI Index Report for 2023 for insights on the year's development.
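The pickle concern comes down to the fact that unpickling can call arbitrary callables. The sketch below is a deliberately harmless demonstration: the hypothetical `Payload` class asks pickle to call `print` at load time, where a malicious file could name any other callable instead.

```python
import pickle


class Payload:
    """Harmless stand-in for a malicious object hidden in a pickle file."""

    def __reduce__(self):
        # pickle stores "call print(...) on load"; an attacker could
        # substitute a far more dangerous callable here.
        return (print, ("this ran during unpickling",))


blob = pickle.dumps(Payload())

# Merely loading the bytes triggers the call; no Payload object is rebuilt,
# and the loader receives whatever the callable returned (None for print).
result = pickle.loads(blob)
print(result)
```

This is why untrusted model checkpoints in pickle-based formats should not be loaded directly; tensor-only formats such as safetensors avoid the problem by design.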

Cross-Model Queries and Support Calls: Queries included the search for effective prompt formats for Hermes-based models, anticipated release of llama-3-Hermes-Pro, and whether axolotl supports simultaneous multi-model training. The support for long context inferences on GPU clusters using models like jamba is under development, as seen in the vLLM project's GitHub pull request.

VLM on Petite Processors: A project aiming to deploy VLM (Vision Language Models) on Raspberry Pis for educational use hints at the ever-growing versatility in AI deployment platforms.

Data Dilemmas and Dimensionality Debates: Open-source models' need for fine-tuning and issues with data diversity, including the curse of dimensionality, have been topics of agreement. Moreover, strategies for creating effective RAG databases ranged from single large to multiple specialized databases.

Simulation Joins AI Giants: A fervent discussion has taken place centered around the integration of generative AI like Llama 3 and Meta.ai with world-sim, exploring the creation of rich, AI-powered narratives.

CUDA MODE Discord

Matrix Multiplication Mastery: Engineers debated optimal strategies for tiling matrix multiplication in odd-sized scenarios, proposing padding or boundary-specific code to improve efficiency. They highlighted the balance between major part calculations and special edge case handling.
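The two strategies for odd-sized matrices can be compared in a small pure-Python sketch (not a CUDA kernel, and not the code from the discussion): one version clamps the loop bounds on edge tiles, the other zero-pads the inputs to a multiple of the tile size and crops the result. The tile size and test matrices are arbitrary choices for illustration.

```python
def matmul_tiled(a, b, tile=4):
    """Multiply a (m x k) by b (k x n) tile by tile, clamping edge tiles."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, k, tile):
                # Boundary-specific handling: edge tiles stop at the matrix edge.
                for i in range(i0, min(i0 + tile, m)):
                    for j in range(j0, min(j0 + tile, n)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c


def pad_to_multiple(mat, tile):
    """Zero-pad a matrix so both dimensions are multiples of `tile`."""
    rows, cols = len(mat), len(mat[0])
    pad_rows, pad_cols = -rows % tile, -cols % tile
    padded = [row + [0.0] * pad_cols for row in mat]
    padded += [[0.0] * (cols + pad_cols) for _ in range(pad_rows)]
    return padded


def matmul_padded(a, b, tile=4):
    """Padding strategy: every tile is full-sized, then the result is cropped."""
    m, n = len(a), len(b[0])
    full = matmul_tiled(pad_to_multiple(a, tile), pad_to_multiple(b, tile), tile)
    return [row[:n] for row in full[:m]]


a = [[float(i + j) for j in range(7)] for i in range(5)]      # 5 x 7
b = [[float(i * j + 1) for j in range(3)] for i in range(7)]  # 7 x 3
print(matmul_tiled(a, b) == matmul_padded(a, b))  # True
```

Padding keeps the inner loop free of bounds checks at the cost of wasted work on zeros, while clamping avoids the waste but adds branches on edge tiles; that is the trade-off the engineers were weighing.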

CUDA Kernels Under the Microscope: Discussions on FP16 matrix multiplication (matmul) errors surfaced, suggesting the superior error handling of simt_hgemv compared to typical fp16 accumulation approaches. The group also examined dequantization in quantized matmuls, sequential versus offset memory access, and the value of vectorized operations like __hfma2, __hmul2, and __hadd2.
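To see why the precision of the accumulator matters in FP16 matmuls, the sketch below emulates half-precision rounding with Python's `struct` module (format code `e`). It does not reproduce `simt_hgemv` or any kernel from the discussion; it only contrasts keeping the running sum in fp16 with keeping it in a wider type, using a Python float as a stand-in for an fp32 accumulator.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_fp16_accumulate(xs, ys):
    """Dot product where the running sum is rounded to fp16 after every add."""
    acc = 0.0
    for x, y in zip(xs, ys):
        acc = to_fp16(acc + to_fp16(x * y))
    return acc


def dot_wide_accumulate(xs, ys):
    """Products are fp16, but the running sum stays in a wider type."""
    acc = 0.0
    for x, y in zip(xs, ys):
        acc += to_fp16(x * y)
    return acc


n = 4096
ones = [1.0] * n
print(dot_fp16_accumulate(ones, ones))  # 2048.0: the sum stalls
print(dot_wide_accumulate(ones, ones))  # 4096.0
```

Above 2048 the spacing between adjacent fp16 values is 2, so adding 1 rounds back to the same number and the fp16 accumulator stops growing.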

On the Shoulders of Giants: Members explored integrating custom CUDA and Triton kernels with torch.compile, sharing a Custom CUDA extensions example and directing to a comprehensive C++ Custom Operators manual.

CUDA Quest for Knowledge: There was an exchange on CUDA learning resources with the suggestion to learn it before purchasing hardware, and recommending a YouTube playlist for the theory and a GitHub CUDA guide for practice.

Leveraging CUDA for LLM Optimization: The community successfully reduced a CUDA model training loop from 960ms to 77ms using NVIDIA Nsight Compute for optimizations, highlighting the specific improvements and considering multi-GPU approaches for further enhancements. Details on the loop optimization can be found in a pull request.

Training Garb for Engineers: Discussions for CUDA Mode events necessitated coordination regarding recording duties, sparking conversations on suitable workflows and tools for capturing and potentially editing the sessions, in addition to managing event permissions and scheduling.

OpenAccess AI Collective (axolotl) Discord

- LLaMA-3 Launch Spurs In-Depth Technical Dialogue: The advent of Meta LLaMA-3 triggered rich discussions around its tokenizer efficiency and architecture, with members weighing in on whether its predecessor's architecture was inherited and conducting comparative tests. Concerns were voiced about finetuning challenges, while some tinker with the qlora adapter to improve integration despite facing technical snags with tokenizer loading and unexpected keyword arguments.

- Axolotl Reflects on Finetuning and Tokenizer Configurations: Debates persisted on how to best finetune AI models, with a spotlight on Mistral and LLaMA-3, including specifics about unfreezing lmhead and embed layers and tackling tokenizer changes that lead to ValueError issues. Members shared tokenizer tweaks, ranging from adjusting PAD tokens to exploring new tokenizer override techniques, such as in the proposed Draft PR on tokenizer overrides.

- AMD GPU Compatibility Receives Attention: Users exploring ROCm published an install guide, aiming to provide alternatives for attention mechanisms on AMD GPUs that could be on par with Nvidia's Flash Attention. This is an ongoing topic where users endeavor to identify methods that are more compatible with non-Nvidia hardware.

- Innovations in Language Modeling Noted: Significant advancements in language modeling were highlighted, including AWS's new packing algorithm triumph, reported to cut down closed domain hallucination substantially. A paper detailing this progress can be found at Fewer Truncations Improve Language Modeling, potentially informing future implementations for the engineering community.

- Runpod Reliability Gets a Wink and a Nod: A member highlighted slow response times in the runpod service, resulting in a humorous jab at the service's intermittent reliability, poking fun at runpod's operational hiccups.

Stability.ai (Stable Diffusion) Discord

Mark Your Calendars for SD3 Weights: Discussions indicate excitement for the upcoming May 10th release of Stable Diffusion 3 local weights, with members anticipating new capabilities and enhancements.

Censorship or Prudence?: Conversations surfaced concerns regarding the Stable Diffusion API, which might produce blurred outputs for certain prompts, signaling a disparity in content control between local versions and API usage.

GPU Picking Made Simpler: AI practitioners highlighted the cost-effectiveness of the RTX 3090 for AI tasks, weighing its advantages over pricier options like the RTX 4080 or 4090, factoring in VRAM and computational efficiency.

Artistic Mastery in AI: Dialogue in the community has been geared towards fine-tuning content generation, with members exchanging advice on creating specific image types, such as half-face portrayals, and controlling the nuances of the resulting AI-generated art.

AI Assistance Network: Resources like a detailed Comfy UI tutorial have been shared for community learning, and users are both seeking and providing tips on handling technical errors, including img2img IndexError and strategies for detecting hidden watermarks in AI imagery.

Latent Space Discord

Rocking the Discord Server with AI: A member explored the idea of summarizing a dense Discord server on systems engineering using Claude 3 Haiku and an AI news bot; they also shared an invitational link.

Meta's Might in Machine Learning: Meta introduced Llama 3, with conversations buzzing around its 8B and 70B model iterations outclassing SOTA performance, a forthcoming 400B+ model, and comparison to GPT-4. Participants noted Llama 3's superior inference speed, especially on Groq Cloud.

Macs and Llamas, an Inference Odyssey: Debates flared up about running large models like Llama 3 on Macs, with some members suggesting creative workarounds by combining local Linux boxes with Macs for optimized performance.

Hunt for the Ultimate LLM Blueprint: In search of efficiency, community members shared litellm, a promising resource to adapt over 100 LLMs with consistent input/output formats, simplifying the initiation of such projects.

Podcast Wave Hits the Community: Latent Space aired a new podcast episode featuring Jason Liu, with community members showing great anticipation and sharing the announcement Twitter link.

Engage, Record, and Prompt: The LLM Paper Club held discussions on the relevance of tokenizers and embeddings, announced the recording of sessions for YouTube upload, and examined model architectures like ULMFiT's LSTM. In-the-know participants confirmed PPO's auxiliary objectives and engaged in jest about the so-called 'prompting epoch.'

AI Evaluations and Innovations: The AI In Action Club pondered the pros and cons of using Discord versus Zoom, shared insights into LLM Evaluation, tackled unidentified noise during sessions, and shared strategies for abstractive summarization evaluation. Links to Eugene Yan's articles were circulated, underscoring the importance of reliability in AI evaluations.

Eleuther Discord

Best-fit Packing: Less Truncation, More Performance: A new Best-fit Packing method reduces truncation in large language model training, aiming for optimal document packing into sequences, according to a recent paper.
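The general idea can be sketched with a classic best-fit-decreasing heuristic: split only the documents that exceed the sequence length, then place each piece into the training sequence with the least remaining space that still fits it. This is a simple quadratic-time illustration under those assumptions, not the paper's implementation, and the document lengths are made up.

```python
def best_fit_pack(doc_lengths, max_len):
    """Pack document lengths (in tokens) into sequences of at most max_len."""
    # Step 1: only documents longer than max_len are split into chunks.
    chunks = []
    for n in doc_lengths:
        while n > max_len:
            chunks.append(max_len)
            n -= max_len
        if n > 0:
            chunks.append(n)

    # Step 2: best-fit decreasing. Largest chunks first, each placed in the
    # sequence whose remaining space is smallest but still sufficient.
    bins, free = [], []
    for c in sorted(chunks, reverse=True):
        best = None
        for i, space in enumerate(free):
            if space >= c and (best is None or space < free[best]):
                best = i
        if best is None:
            bins.append([c])
            free.append(max_len - c)
        else:
            bins[best].append(c)
            free[best] -= c
    return bins


packed = best_fit_pack([700, 300, 1200, 500, 100, 400], max_len=1024)
for seq in packed:
    print(seq, "->", sum(seq), "tokens")
```

In this example only the 1200-token document is split; every other document stays intact inside a single sequence, which is the behavior that plain concatenate-and-chunk packing does not guarantee.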

Unpacking the Softmax Bottleneck: Small language models underperform due to saturation linked with the softmax bottleneck, with challenges for models under 1000 hidden dimensions, as discussed in a recent study.

Scaling Laws Remain Chinchillated: Conversations in the scaling-laws channel have concluded that the Chinchilla token count per parameter stays consistent and that there might be more benefit in adding parameters over accumulating more data.

DeepMind Dives into Sparse Autoencoders: DeepMind's mechanistic interpretability team outlined advancements in Sparse Autoencoders (SAEs) and provided insights on interpretability challenges and techniques in a forum post, along with a relevant tweet.

Tackling lm-evaluation-harness Challenges: Efforts to contribute to the lm-evaluation-harness project have been hampered by the complexity of configurations and the need for a cleaner implementation method, with shared insights into the potential for multilingual benchmarking via PRs.

LAION Discord

- Shake-up at Stability AI: Stability AI has undergone layoffs of 20+ employees following the CEO's departure to address the issue of unsustainable growth, prompting discussions about the company's future direction and stability. The full memo/details on the layoff can be found in CNBC's coverage.

- Rivalry in AI Art Space Heats Up: DALL-E 3 has been observed to outperform Stable Diffusion 3 in terms of prompt accuracy and visual fidelity, leading to community dissatisfaction with SD3’s performance. The comparison of these models has heightened discussions of their respective strengths and weaknesses in the text-to-image arena.

- Meta Llama 3 Sparks Conversations: The introduction of Meta Llama 3 has triggered conversations regarding its implications for the AI landscape, with discussions encompassing its coding capabilities, limited context window, and how it might compete with other industry-leading models. An announcement confirmed that Meta Llama 3 will be available on major platforms such as AWS and Google Cloud, and can be reviewed in more detail at Meta's blog post.

- Digging Deeper into Cross-Attention Mechanisms: There are ongoing discussions about cross-attention mechanisms, particularly in the imagen model from Google, which is gaining attention for its method of handling text embeddings during the model training and image sampling processes.

- Advancing Facial Dynamics with Audio-Conditioned Models: Interest is on the rise for an open-source audio-conditioned generative model capable of facial dynamics and head movements, with a diffusion transformer model appearing as a strong candidate. Strategies involving latent space encoding of talking head videos or face meshes conditioned on audio are being evaluated for effectiveness in creating realistic facial expressions synchronized with audio.

HuggingFace Discord

Spanning Languages and Models: A Summary of Discourse

- LLaMA 3 Takes the Stage: The engineering community is awash with discussions about LLaMA 3, with insights from a Meta Releases LLaMA 3: Deep Dive & Demo video. The anticipation for its performance on leaderboards is high, particularly noted at the handle MaziyarPanahi/Meta-Llama-3-8B-Instruct-GGUF on HuggingFace.

- Meta-Llama 3 vs. Mixtral: Benchmarks comparing Meta Llama 3 and Mixtral are under scrutiny, with the recent Mera-mix-4x7B model achieving 75.91 on OpenLLM Eval. The community also shared a tip about a 422 Unprocessable Entity error with LLaMA-2-7b-chat-hf model, requiring token input reduction for resolution.

- Expanding Multilingual Reach: Momentum is growing for multilingual accessibility as community members offer to translate and create content for a wider global audience, highlighted by Portuguese translations of Community Highlights available in a YouTube playlist and discussions on the importance of culturally relevant translations.

- Quantization Queries and Dataset Discussions: Conversations pivot to quantization as the community contemplates its impact on model performance, linked to an analysis at Exploring the Impact of Quantization on LLM Performance, whereas sharing of the ORPO-DPO-mix-40k dataset taps into the need for improved machine learning model training.

- Community Creates and Collaborates: The user-generated content shines through both in a creative audio-in-browser experience at zero-gpu-slot-machine and the launch of a new prediction leaderboard aimed at gauging LLMs' future event forecasting acumen, with the space located here. Meanwhile, a book on Generative AI garners interest as it promises more chapters, potentially on quantization and design systems.

Technical Exchange Flourishing: AI Engineers exchange knowledge on everything from deep reinforcement learning (DRL) in object detection to GPU issues in Gradio and the perplexing 'cursorop' error in TensorFlow. Discussions are also oriented towards 3D vision datasets and solutions for consistent backgrounds in inpainting with Lora. An open call was made to explore Counterfactual-Inception research on GitHub.

OpenRouter (Alex Atallah) Discord

- Mix-Up Fixed for Mixtral Model: The prompt template for the Mixtral 8x22B Instruct model was corrected, impacting how users should interact with the model.

- OpenRouter's Revenue Riddles: The guild is abuzz with speculation about OpenRouter's revenue strategies, with hypotheses about bulk discounts and commission from user top-ups, but there is no official stance from OpenRouter to settle the debate.

- Latency Lowdown: Concerns about VPS latency, especially in South America, were discussed without reaching a consensus on the impact of server location on performance.

- Meta's LLaMA Lifts Off: Enthusiasm is high for the new Meta LLaMA 3 models, which are reported to be less censored. Engineers shared resources including the official Meta LLaMA 3 site and a download link for model weights at Meta LLaMa Downloads.

- OpenRouter Hits Production: Reports confirm the deployment of OpenRouter in production, with users pointing to examples like Olympia.chat and seeking advice on integrating it as a replacement for direct OpenAI, with emphasis on the gaps in documentation for specific integrations.

OpenAI Discord

Turbo Challenged by Claude: Users have reported slow performance with gpt-4-turbo-2024-04-09, finding it slower than its predecessor, GPT-4-0125-preview. Inquiries were made about faster versions, and some have integrated Claude to compensate for speed issues, yet with mixed results.

AI Grapples with PDFs: Conversations zeroed in on the inefficiency of PDFs as a data input format for AI, with community members advising the use of plain text or structured formats like JSON, while also noting XML is not currently supported for files.

Performance Anxiety Over ChatGPT: Members expressed concerns over the declining performance of ChatGPT, sparking debate over possible reasons which ranged from strategic responses to legal challenges to deliberate performance downgrades.

Engineering More Effective Prompts: There was a community effort to confirm and update the prompt engineering best practices, as recommended in the OpenAI guide with discussions pointing to real issues in prompt consistency and failure to adhere to instructions.

Integrating AI with Blockchain: A blockchain developer called for collaboration on projects combining AI with blockchain, suggesting an interaction between advanced prompt engineering and decentralized technologies.

Interconnects (Nathan Lambert) Discord

- Value-Guided AI Ascends: Excitement is building around PPO-MCTS, a cutting-edge decoding algorithm that combines Monte Carlo Tree Search with Proximal Policy Optimization, providing more preferable text generation through value-guided searches as explained in an Arxiv paper.

- Meta Llama 3 Models Spark Buzz: Discussions heated up over Meta's Llama 3, a series of new large language models up to 70 billion parameters, with particular attention to the possible disruptiveness of an upcoming 405 billion parameter model. The model's multilingual capabilities and fine-tuning effectiveness were topics of debate, alongside the potential shake-up against closed models like GPT-5. Links shared: Replicate's Billing, Llama 3 Open LLMs, Azure Marketplace, and OpenAssistant Completed.

- Llama3 Release Keeps Presenters on Their Toes: Anticipation around the LLaMa3 release influenced presenters' slide preparations, with some needing to potentially include last-minute updates to their materials. Queries about LLaMA-Guard brought up discussions on safety classifiers and AI2's development of benchmarks for such systems.

- Pre-Talk Prep: In light of the LLaMa3 discussion, presenters geared up to address questions during their talks, while concurrently prioritizing blog post writing.

- Recording Anticipation: There's eagerness from the community for the release of a presentation recording, highlighting the interest in recent discussions and progress in AI fields.

Modular (Mojo 🔥) Discord

- C Integration Made Easier in Mojo: The `external_call` feature in Mojo was highlighted, with plans to further streamline C/C++ integration by enabling direct calls to external functions without a complex FFI layer, as outlined in the Tutorial on Twitter and the Modular roadmap and mission.

- Mojo Ponders Garbage Collection and Testing Capabilities: Within the Modular community, there was a discussion about implementing runtime garbage collection, similar to Nim's approach; curiosity about first-class support for testing in Mojo, comparable to Zig; and debate regarding the desire for pytest-like assertions. Additionally, excitement was noted around community contributions towards the development of a packaging and build system for Mojo.

- Rust vs. Mojo Performance Evaluated: A benchmarking debate revealed Rust's prefix sum computation to be slower than Mojo's equivalent, with Rust achieving a time of 0.31 nanoseconds per element using just the `--release` compile flag.

- Updating Nightly/Mojo with Care: Engineers reported issues updating Nightly/Mojo, with solutions ranging from updating the modular CLI to manually adjusting the `PATH` in `.zshrc`. This brought to light both technical glitches and a gentle reminder of potential human errors, affectionately phrased as Layer 8 issues.

- Meta's LLaMA 3 Model Discussed: The community shared a video titled "Meta Releases LLaMA 3: Deep Dive & Demo", exploring the features of Meta's LLaMA 3 AI model, noting the release date as April 18, 2024, viewable on YouTube.
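
For readers unfamiliar with the prefix-sum benchmark mentioned in the Rust vs. Mojo item above, here is a minimal Python sketch of what is being measured: an inclusive running sum, timed and reported as nanoseconds per element. It is only an illustration of the metric, not the Rust or Mojo code from the discussion, and the function names and element count are assumptions chosen for the example.

```python
import time
from itertools import accumulate


def prefix_sum(values):
    """Return the inclusive running sum of `values`."""
    return list(accumulate(values))


def ns_per_element(n=1_000_000):
    """Time one prefix-sum pass over n integers, in nanoseconds per element."""
    data = list(range(n))
    start = time.perf_counter_ns()
    prefix_sum(data)
    elapsed = time.perf_counter_ns() - start
    return elapsed / n


print(prefix_sum([1, 2, 3, 4]))  # [1, 3, 6, 10]
print(f"{ns_per_element():.2f} ns per element")
```

Interpreted Python will land far above the sub-nanosecond figures quoted for compiled code; the point is only to show how a per-element time is derived.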

Cohere Discord

- Tool Time with Command R Model: The Command R model guide was reinforced with links to the official documentation and example notebooks. The use of JSON schemas to describe tools for Command models was endorsed.

- Database Dynamics: Integration of MySQL with Cohere raised discussions, clarifying that it can be done without Docker, as demonstrated in the GitHub repository, even though the documentation may have outdated information.

- The RAG-tag Team: Questions on implementing Retrieval Augmented Generation (RAG) with Cohere AI were answered, referencing Langchain and RagFlow according to the official Cohere docs.

- Licenses and Limits: It was noted that Command R and Command R+ tools are bound by CC-BY-NC 4.0 licensing, which prohibits their commercial use on edge devices.

- Scaling Model Deployment: Dialogue revolved around deploying large models, indicating the challenges of scaling up to 100B+ models and highlighting specific hardware considerations like dual A100 40GBs and MacBook M1.

- Lockdown Breach Alert: Increasingly sophisticated jailbreaks in LLMs were discussed, highlighting the potential for serious repercussions including unauthorized database access and targeting individuals.

- Surveillance in the Service Loop: An example was provided of enhancing a conversation by integrating llm_output with run_tool, enabling an LLM's output to guide a monitoring tool in a feedback loop.

LlamaIndex Discord

Retrieval Augmented Generations Right at Our Fingertips: Engineers at Elastic have released a blog post demonstrating the construction of a Retrieval Augmented Generation (RAG) application using Elasticsearch and LlamaIndex, an integration of open tools including @ollama and @MistralAI.

Llama 3 Gets a Handy Cookbook: The LlamaIndex team has provided early support for Llama 3, the latest model from Meta, through a "cookbook" detailing usage from simple prompts to entire RAG pipelines. The guide can be fetched from this Twitter update.

Setting Up Shop Locally with Llama 3: For those looking to run Llama 3 models in a local environment, Ollama has shared a notebook update that includes simple command changes. The update can be applied by altering "llama2" to "llama3" as detailed here.
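
As a rough illustration of how small the "llama2" to "llama3" change is, the sketch below builds a request for a locally running Ollama server using only the standard library. It assumes Ollama's default local endpoint (`http://localhost:11434/api/generate`) and that the `llama3` model has already been pulled; the helper names are hypothetical and this is not the notebook referenced above.

```python
import json
import urllib.request

# Assumed default address of a local Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="llama3"):
    """Build a non-streaming generate request; swap `model` to change models."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3"):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("Why is the sky blue?")` would then use Llama 3, while `generate(..., model="llama2")` reproduces the older setup.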

Puzzle & Dashboards: Pinecone and LLM Daily Struggles: Amidst technical exchanges, there was curiosity about how Google's Vertex AI handles typos in signs like "timbalands", as seen on their demo site, and ongoing dialogues surrounding the creation of an interactive dashboard for generating recipes from input ingredients.

Ready, Set, Track LlamaIndex's Progress: Interest around tracking the development of LlamaIndex spiked among engineers following confirmation that LlamaIndex has secured funding, a nod to the project's growth and anticipated advancements in the space.

DiscoResearch Discord

Mixtral's Multilingual Might: A Mixtral model trained on a mix of English and German shows promising language prowess, though evaluations are still pending. Technical challenges, including shape errors and OOM issues, hint at the complexity of training large models, while the efficacy of parameters such as "router_aux_loss_coef" in Mixtral's config remains a point of debate.

Meta's Llama Lightning Strikes: Meta's Llama 3 enters the fray, touting multilingual capabilities but with discernible performance discrepancies in non-English languages. Access to the new tokenizer is anticipated, and critiques focus on downstream usage restrictions of model outputs, sparking a discussion on the confluence of open source and proprietary constraints.

German Language Models Under Microscope: Initial tests suggest Llama3 DiscoLM German lags behind Mixtral in German proficiency, with notable grammar issues and incorrect token handling, despite a Gradio demo availability. Questions regarding the Llama3's dataset alignment and tokenizer configurations arise, and comparisons with Meta's 8B models show performance gaps that beg investigation.

OpenInterpreter Discord

ESP32 Demands WiFi for Linguistic Acumen: An engineer pointed out that ESP32 requires a WiFi connection to integrate with language models, emphasizing the necessity of network connectivity for operational functionality.

Ollama 3's Performance Receives Engineer's Applause: In the guild, there was a buzz about the performance of Ollama 3, with engineers experimenting with the 8b model and probing into enhancements for the text-to-speech (TTS) and speech-to-text (STT) models for accelerated response times.

OpenInterpreter Toolkit Trials and Tribulations: Users shared challenges with OpenInterpreter, ranging from file creation issues using CLI that wraps output with echo to BadRequestError during audio transmission attempts with M5Atom.

Fine-Tuning Local Language Mastery: Guild members discussed how to set up OS mode locally with OpenInterpreter, provided a Colab notebook for guidance, and exchanged insights on refining models like Mixtral or Llama with concise datasets for nimble learning.

Exploring Meta_llama3_8b: A member shared a link to Hugging Face where fellow engineers can interact with the Meta_llama3_8b model, indicating a resource for hands-on experimentation and evaluation within the community.

LangChain AI Discord

- Chain the LangChain: The `RunnableWithMessageHistory` class in LangChain is designed for handling chat histories, with a key emphasis on always including `session_id` in the invoke config. In-depth examples and unit tests can be found on their GitHub repository.

- RAG Systems Built Easier: LangChain community members are implementing RAG-based systems, with resources like a YouTube playlist on RAG system building and the VaultChat GitHub repository shared for guidance and inspiration.

- Prompt Engineering Skills Now Online: A prompt engineering course featuring LangChain is now available on LinkedIn Learning, broadening the horizon for those seeking to improve their skills in this area. You can check it out here.

- Test Drive Llama 3: Llama 3's experimentation phase is open, with a chat interface accessible at Llama 3 Chat and API services available at Llama 3 API, allowing engineers to explore this new AI horizon.

- Plan with AI: Tripplanner Bot, a new tool built with LangChain, combines free APIs to assist in travel planning. It's an open project available on GitHub for those looking to dive in, contribute, or simply learn from its construction.
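
To make the `session_id` point from the first item above concrete, here is a small pure-Python sketch of the pattern: chat history is looked up per session, and the session is named in the invoke config under `"configurable"`. The config shape follows LangChain's convention, but the store, the echo reply, and the function names are hypothetical stand-ins, not LangChain's implementation.

```python
from collections import defaultdict

# Hypothetical in-memory store: one message list per session_id.
_histories = defaultdict(list)


def make_config(session_id):
    """Build an invoke config that carries the session_id under "configurable"."""
    return {"configurable": {"session_id": session_id}}


def invoke_with_history(user_message, config):
    """Append the message and a stand-in reply to the session's history."""
    session_id = config.get("configurable", {}).get("session_id")
    if session_id is None:
        # Without a session_id there is no way to pick the right history.
        raise ValueError("session_id is required in config['configurable']")
    history = _histories[session_id]
    history.append(("human", user_message))
    history.append(("ai", f"echo: {user_message}"))  # placeholder for a model call
    return list(history)


print(invoke_with_history("hello", make_config("user-1")))
```

Two calls with the same `session_id` share one history, while a different `session_id` starts a fresh one, which is why omitting it from the config is an error rather than a default.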

Alignment Lab AI Discord

- Spam Bot Invasion Detected: Multiple channels within the Discord guild, namely #ai-and-ml-discussion, #programming-help, #looking-for-collabs, #landmark-dev, #landmark-evaluation, #open-orca-community-chat, #leaderboard, #looking-for-workers, #looking-for-work, #join-in, #fasteval-dev, and #qa, have reported the influx of spam promoting NSFW and potentially illegal content involving a recurring Discord invite link (https://discord.gg/rj9aAQVQFX). The messages, possibly from bots or hacked accounts, peddle explicit material and incite community members to join an external server, raising significant concern for violation of Discord’s community guidelines and prompting calls for moderator intervention.

- Open-Sourcing WizardLM-2: The WizardLM-2 language model has been made open source, with references to its release blog, repositories, and academic papers. Curious minds and developers are encouraged to contribute and explore the model further, with resources and discussions available on Hugging Face and arXiv, along with an invitation to their Discord server.

- Meta Llama 3 Under Privacy Lock: Initiatives to understand and utilize the Meta Llama 3 model involve adhering to privacy agreements as outlined by the Meta Privacy Policy, sparking dialogue around privacy concerns and access protocols. While there's a zeal for exploring the model's tokenizer, the official route requires a detailed check-in at the get-started page of Meta Llama 3, juxtaposed against the community's workaround on access through the Undi95's Hugging Face repository.

- Post-Hype Model Evaluations: Despite the interferences from unwanted posts, the engineering community remains engrossed in ongoing discussions about evaluating AI models like Meta Llama 3 and WizardLM-2. As moderators resolve disruptions, engineers continue to seek out best practices and share insights on model performance, integration, and scaling challenges.

- Beware of Discord Invite: With the aforementioned series of spam alerts, it is strongly advised to avoid interacting with the shared Discord link https://discord.gg/rj9aAQVQFX which is tied to all spam messages. Elevated caution is recommended to maintain operational security and protect community integrity.

Mozilla AI Discord

Llama 3 8b Takes the Stage: The llamafile-0.7 update now supports Llama 3 8b models using the -m <model path> parameter, as discussed by richinseattle; however, there's a token issue with the instruct format highlighted alongside a Reddit discussion.

Patch on the Horizon: A pending update to llamafile promises to fix compatibility issues with Llama 3 Instruct, which is detailed in this GitHub pull request.

Quantum Leap in Llama Size: jartine announced the imminent release of a quantized version of llama 8b on Llamafile, indicating advancements for the efficiency-seeking community.

Meta Llama Weights Unbound: jartine shared the Meta Llama 3 8B Instruct executable weights for community testing on Hugging Face, noting that there are a few kinks to work out, including a broken stop token.

Model Mayhem Under Management: Community efforts in testing Llama 3 8b models yielded optimistic results, with a fix for the stop token issue in Llama 3 70b communicated by jartine; minor bugs are to be anticipated.

Skunkworks AI Discord

Databricks Goes GPU: Databricks has released a public preview of model serving, enhancing performance for Large Language Models (LLMs) with zero-config GPU optimization, though it may increase costs.

Ease of LLM Fine-Tuning: A new guide explains fine-tuning LLMs using LoRA adapters, Flash Attention, and tools like DeepSpeed, available at modal.com, offering strategies for efficient weight adjustments in models.

Affordable Serverless Solutions: An affordable serverless hosting guide using GPUs is available on GitHub, which could potentially lower expenses for developers - check the modal-examples repo.

Mixtral 8x22B Raises the Bar: The Mixtral 8x22B is a new model employing a sparse Mixture-of-Experts, detailed in a YouTube video, setting high standards for AI efficiency and performance.

Introducing Meta Llama 3: Facebook's Llama 3 adds to the roster of cutting-edge LLMs, open-sourced for advancing language technologies, with more information available on Meta AI's blog and a promoting YouTube video.

LLM Perf Enthusiasts AI Discord

- Curiosity around litellm: A member inquired about the application of litellm within the community, signaling interest in usage patterns or case studies involving this tool.

- Llama 3 Leads the Charge: Claims have surfaced that Llama 3 70b outperforms Opus, particularly in an unnamed arena.

- Style or Substance?: A conversation sparked concerning whether performance discrepancies are a result of stylistic differences or a true variance in intelligence.

- Warning on Error Bounds: Error bounds became a focal point as a member raised concerns, possibly warning other members to proceed with caution when interpreting data or models.

- Humor Break with a Tumble: In a lighter moment, a member shared a gif depicting comic relief through an animated fall.

Datasette - LLM (@SimonW) Discord

Karpathy's Llama 3 Lasso: Andrej Karpathy's tweet raised discussions on the potential of compact models, noting an 8B parameter model trained on a 15T dataset as an example of possibly undertraining common LLMs by factors of 100-1000X, pointing engineers towards the notion of longer training cycles for smaller models.

Small Models, Big Expectations: Reactions to Karpathy's insights echo among members who express enthusiasm for the deployment of small yet efficient models like Llama 3, indicating a community ready to embrace optimal resource utilization in developing smaller, mightier LLMs.
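
The scale of the training run discussed above can be checked with quick arithmetic. The sketch below uses the figures from the summary (8B parameters, 15T tokens) and, as an explicit assumption, the commonly cited Chinchilla rule of thumb of roughly 20 training tokens per parameter; that heuristic is not stated in the discussion itself.

```python
params = 8 * 10**9        # 8B parameters (from the summary)
tokens = 15 * 10**12      # 15T training tokens (from the summary)

# Assumption: Chinchilla-style rule of thumb of ~20 tokens per parameter.
CHINCHILLA_TOKENS_PER_PARAM = 20

tokens_per_param = tokens / params
ratio_vs_rule_of_thumb = tokens_per_param / CHINCHILLA_TOKENS_PER_PARAM

print(tokens_per_param)         # 1875.0 tokens per parameter
print(ratio_vs_rule_of_thumb)   # 93.75x the rule-of-thumb budget
```

Under that assumption, the 8B model sees on the order of 100 times more tokens per parameter than the rule of thumb suggests, which is the sense in which models trained near that budget could be called undertrained.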

Plugin Installation Snags: A member's ModuleNotFoundError while installing a llm plugin led to the revelation that conflicting installations from both brew and pipx might be at the root. A clean reinstall ended the ordeal, hinting at the necessity of vigilant environment management.

Concurrent Confusion Calls for Cleanup: The cross-over installation points from brew and pipx led a user astray, sparking reminders within the community to check which version of a tool is being executed with which llm to dodge similar issues in the future.
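
One way to check for the kind of duplicate installation described above is to list every executable with the same name on `PATH`, in lookup order. The following is a minimal sketch using only the standard library; the helper name `find_all_on_path` is hypothetical, and it assumes a POSIX-style system where the command is called `llm`.

```python
import os
import shutil
import sys


def find_all_on_path(name, path=None):
    """List every executable called `name` on PATH, in lookup order."""
    path = os.environ.get("PATH", "") if path is None else path
    matches = []
    for directory in path.split(os.pathsep):
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            matches.append(candidate)
    return matches


print("first `llm` on PATH:", shutil.which("llm"))
print("all `llm` on PATH:  ", find_all_on_path("llm"))
print("current interpreter:", sys.executable)
```

If more than one path is printed, plugins installed through one copy may not be visible to the copy that actually runs first.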

LLM Fun Facts: Amidst the technical back-and-forth, a shared use case for llm provided a light-hearted moment, presenting a practical, engaging application of the technology for members to explore.

tinygrad (George Hotz) Discord

- LLama3 Gallops Ahead: LLama3 has been released, boasting versions with both 8B and 70B parameters, broadening the horizons for AI applications.

- Speedy LLama Beats PyTorch: In initial testing, LLama3 demonstrated a slight speed advantage over PyTorch for certain models and showcased seamless compatibility with ROCm on XTX hardware.

AI21 Labs (Jamba) Discord

Long Context Inference Woes with Jamba: A Jamba user is struggling with long context inferences on a 2x A100 cluster and is seeking troubleshooting code for the distributed system's issue. There has been no follow-up discussion or provided solutions to the problem yet.

PART 2: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1059 messages🔥🔥🔥):

- Refund Requests and Model Usage Concerns: Pro users expressed frustration over the sudden limit to 30 Opus queries per day, down from 600, affecting those who primarily subscribed for Opus usage. There is a desire for a revised refund policy, especially since there was no prior notice given on the query limitation change.

- Animation and Image Modeling Capabilities: Users showed interest in expanding use cases to include animations and images, specifically mentioning DALL-E 3 and Stable Diffusion XL. However, some users faced issues in using these models effectively.

- Model Comparison and Performance: Discussions indicated a comparison between different models, like Llama 3 70b, Claude, and GPT-4, focusing on aspects like coding, table lookups, and multilingual capabilities. The conversation included methods to evade AI content detectors, which are essential for deploying AI-generated work in fields like academia.

- AI Riddle Challenge: A snail riddle puzzle prompted users to test various AI models and assess their reasoning and calculation capabilities. The complexity added to the riddle aimed to challenge AIs beyond commonly known puzzles.

- Language and Context Limitations: Users actively debated English language performance's importance, with the assertion that English's dominance on the web should not be the sole factor in evaluating language models. Awareness of the need for strong multilingual AI capabilities was also a key point. Also discussed were the apparent limitations in context windows for AI responses, affecting the models' effectiveness.

- Binoculars - a Hugging Face Space by tomg-group-umd: no description found

- AI Detector: AI Purity’s Reliable AI Text Detection Tool: A complete breakdown of AI Purity's cutting-edge technology with the most reliable and accurate AI detector capabilities including FAQs and testimonials.

- Tweet from Lech Mazur (@LechMazur): Meta's LLama 3 70B and 8B benchmarked on NYT Connections! Very strong results for their sizes.

- Gen-AI Search Engine Perplexity Has a Plan to Sell Ads: no description found

- Zuckerberg GIF - Zuckerberg - Discover & Share GIFs: Click to view the GIF

- Liam Santa GIF - Liam Santa Merry Christmas - Discover & Share GIFs: Click to view the GIF

- 24 vs 32 core M1 Max MacBook Pro - Apples HIDDEN Secret..: What NOBODY has yet Shown You about the CHEAPER Unicorn MacBook! Get your Squarespace site FREE Trial ➡ http://squarespace.com/maxtech After One Month of expe...

- Languages used on the Internet - Wikipedia: no description found

- Baby Love GIF - Baby Love You - Discover & Share GIFs: Click to view the GIF

- Snape Harry Potter GIF - Snape Harry Potter You Dare Use My Own Spells Against Me Potter - Discover & Share GIFs: Click to view the GIF

- Mark Zuckerberg - Llama 3, $10B Models, Caesar Augustus, & 1 GW Datacenters: Zuck on:- Llama 3- open sourcing towards AGI - custom silicon, synthetic data, & energy constraints on scaling- Caesar Augustus, intelligence explosion, biow...

- llm-sagemaker-sample/notebooks/deploy-llama3.ipynb at main · philschmid/llm-sagemaker-sample: Contribute to philschmid/llm-sagemaker-sample development by creating an account on GitHub.

Perplexity AI ▷ #sharing (14 messages🔥):

- Exploring Fake Actors in AI: A link was shared to Perplexity AI's search results discussing actors and fake elements within AI contexts.

- Diving into AI History: A member posted a link that leads to Perplexity AI's search results on the historical aspects of AI.

- Unveiling the 'Limitless AI Pendant': Curiosity arises with a shared Perplexity link referencing a 'Limitless AI pendant'.

- Insights on Mistral's Growth: The community showed interest in Mistral's progress through a shared Perplexity search link regarding Mistral fundraising.

- Understanding HDMI Utilization: Members may find answers to why HDMI is used with a link to Perplexity AI's search on the topic.

- Nandan Nilekani had this stunning thing to say about Aravind Srinivas' 'Swiss Army Knife' search engine: What Nandan Nilekani had to say about Perplexity AI, will make you rush to sign up with Aravind Srinivasan’s ‘Swiss Army Knife’ search engine.

- Inside The Buzzy AI StartUp Coming For Google's Lunch: In August 2022, Aravind Srinivas and Denis Yarats waited outside Meta AI chief Yann LeCun’s office in lower Manhattan for five long hours, skipping lunch for...

Perplexity AI ▷ #pplx-api (11 messages🔥):

- Mixtral Model Decodes Cohen's Lyrical Enigma: Mixtral-8x22B provided the most accurate interpretation of Leonard Cohen's "Avalanche," identifying the artist and song from the lyrics alone. The model interpreted themes of vulnerability, power, and evolving human relationships in its analysis.

- API Queries Can Indeed Be Fun: A member confirmed that the Perplexity AI chat models can be used with the provided API endpoint, after clarifying the details including parameter count and context length for various models like Mistral, Sonar, Llama, and CodeLlama.

- Embedding Models on Perplexity AI: It was shared that Llama-3 instruct models (8b and 70b) are accessible for chatting on labs.perplexity.ai and also available via pplx-api, with a mention of Pro users receiving monthly API credits.

- Real-Time Delight with New AI Models: A community member expressed enthusiasm for the new models, stating they have significantly improved their application, despite not having access to the Claude Opus API.

- Precision in API Responses Sought: A user sought assistance on how to limit API responses to an exact list of words when attempting to categorize items from a JSON file, mentioning trials with Sonar Medium Chat and Mistral without success.

- Monitoring API Credits: A question was raised regarding the frequency of updates to remaining API credits, inquiring whether the refresh rate is in minutes, seconds, or hours after running a script that makes API requests.

- Help Wanted for CORS Dilemma: A user requested examples or advice on resolving CORS issues when using the API in a frontend application, including setting up a proxy server as a potential solution.
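
For the CORS question above, the proxy approach can be sketched with the standard library alone: the browser talks to a small local server, which adds CORS headers and forwards the request upstream. This is a minimal sketch under stated assumptions, not a production setup: the upstream URL, the allowed origin, and the port are assumptions, and upstream error responses are not handled.

```python
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumed upstream chat endpoint and a hypothetical frontend origin.
UPSTREAM = "https://api.perplexity.ai/chat/completions"
ALLOWED_ORIGIN = "http://localhost:3000"


def cors_headers(origin=ALLOWED_ORIGIN):
    """Headers that let the browser accept responses from this proxy."""
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "POST, OPTIONS",
        "Access-Control-Allow-Headers": "Content-Type, Authorization",
    }


class ProxyHandler(BaseHTTPRequestHandler):
    def _send(self, status, body=b""):
        self.send_response(status)
        for name, value in cors_headers().items():
            self.send_header(name, value)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_OPTIONS(self):
        # Answer the browser's preflight request.
        self._send(204)

    def do_POST(self):
        # Forward the JSON body and Authorization header to the upstream API.
        length = int(self.headers.get("Content-Length", 0))
        request = urllib.request.Request(
            UPSTREAM,
            data=self.rfile.read(length),
            headers={
                "Content-Type": "application/json",
                "Authorization": self.headers.get("Authorization", ""),
            },
            method="POST",
        )
        with urllib.request.urlopen(request) as upstream:
            self._send(upstream.status, upstream.read())


def serve(port=8080):
    """Run the proxy on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), ProxyHandler).serve_forever()
```

Calling `serve()` starts the proxy; the frontend would then post to `http://127.0.0.1:8080` instead of the API host. Keeping the API key on the server side, rather than forwarding it from the browser as done here, is usually the safer design.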

- Tweet from Aravind Srinivas (@AravSrinivas): 🦙 🦙 🦙http://labs.perplexity.ai and brought up llama-3 - 8b and 70b instruct models. Have fun chatting! we will soon be bringing up search-grounded online versions of them after some post-training. ...

- Supported Models: no description found
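
On the categorization question raised in this channel (limiting responses to an exact list of words), one common workaround is to validate the model's output on the client side rather than rely on the prompt alone. The sketch below is a hypothetical helper, not part of any Perplexity API: it maps free-form output onto an allowed label list and falls back to a sentinel when the answer is missing or ambiguous.

```python
def normalize_label(raw_output, allowed_labels, fallback="unknown"):
    """Map a model's free-form answer onto one of `allowed_labels`."""
    cleaned = raw_output.strip().strip(".\"'").lower()
    lookup = {label.lower(): label for label in allowed_labels}

    # Exact match after trimming whitespace, quotes, and trailing periods.
    if cleaned in lookup:
        return lookup[cleaned]

    # Otherwise accept the answer only if exactly one allowed label appears in it.
    hits = [label for key, label in lookup.items() if key in cleaned]
    return hits[0] if len(hits) == 1 else fallback


labels = ["fruit", "vegetable"]
print(normalize_label(" Fruit. ", labels))                     # fruit
print(normalize_label("I think this is a vegetable", labels))  # vegetable
print(normalize_label("fruit or vegetable", labels))           # unknown
```

Items that come back as the fallback value can be retried or reviewed by hand, which keeps out-of-list words from leaking into the categorized data.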

Unsloth AI (Daniel Han) ▷ #general (1147 messages🔥🔥🔥):

- Llama 3 Buzz: The recent release of Meta's Llama 3 has got the AI community excited, with discussions around its tokenizer benefits and the anticipation of a 400B model. The model is compared to OpenAI’s GPT-4, and users debate Llama 3’s performance on various benchmarks.

- Unsloth Readies for Llama 3: The AI tool, Unsloth, has quickly updated its support for the new Llama 3 within hours of its release. Meanwhile, users seek advice for fine-tuning Llama 3 and discuss the VRAM requirements for training on different GPU configurations.

- Issues with Llama 3's Tokenizer: A hiccup is found in Llama 3’s tokenizer, with some unintended behavior that raised comments from the community. The team at Unsloth notifies that they are aware of the issues and are working on fixes.

- Benchmarking and Model Size Discussions: There is an ongoing conversation about how Llama 3's size impacts its performance and the need for more extensive benchmarks to fully assess capacities. A pre-release is suggested to gather user feedback and further optimize the model.

- VRAM Usage for Model Training: Users exchange insights on VRAM usage for fine-tuning language models. Specific attention is given to the efficiency of using Unsloth for training models like Llama 3 8B using Quantized LoRA (QLoRA), with reports of VRAM usage with and without quantization.
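
A back-of-the-envelope estimate shows why quantization matters for the VRAM discussion above. The sketch below counts only the memory for the model weights, assuming a round 8 billion parameters; it ignores quantization constants, LoRA adapter weights, optimizer state, and activations, so real usage will be higher than these figures.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory needed for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params_8b = 8e9  # assumption: a round 8 billion parameters

print(weight_memory_gb(params_8b, 16))  # 16.0 GB at 16-bit precision
print(weight_memory_gb(params_8b, 4))   # 4.0 GB at 4-bit quantization
```

The fourfold drop in weight memory is what makes an 8B model a candidate for a 16 GB GPU such as the free T4 mentioned in the links below.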

- screenshot: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- Welcome Llama 3 - Meta's new open LLM: no description found

- Google Colaboratory: no description found

- unsloth/llama-3-8b-bnb-4bit · Hugging Face: no description found

- Google Colaboratory: no description found

- unsloth/llama-3-70b-bnb-4bit · Hugging Face: no description found

- kuotient/Meta-Llama-3-8B · Hugging Face: no description found

- mistralai/Mistral-7B-Instruct-v0.2 · Hugging Face: no description found

- Google Colaboratory: no description found

- Dance GIF - Dance - Discover & Share GIFs: Click to view the GIF

- jinaai/jina-reranker-v1-turbo-en · Hugging Face: no description found

- Obsidian - Sharpen your thinking: Obsidian is the private and flexible note‑taking app that adapts to the way you think.

- Tweet from Daniel Han (@danielhanchen): Made a Colab for Llama-3 8B! 15 trillion tokens! So @UnslothAI now supports it! Uses free T4 GPUs. Doing benchmarking, but ~2x faster and uses 80% less memory than HF+FA2! Supports 4x longer context ...

- meta-llama/Meta-Llama-3-8B-Instruct · Update generation_config.json: no description found

- Meta Releases LLaMA 3: Deep Dive & Demo: Today, 18 April 2024, is something special! In this video, In this video I'm covering the release of @meta's LLaMA 3. This model is the third iteration of th...

- NeuralNovel/Llama-3-NeuralPaca-8b · Hugging Face: no description found

- Sweaty Speedruner GIF - Sweaty Speedruner - Discover & Share GIFs: Click to view the GIF

- Mark Zuckerberg on Instagram: "Big AI news today. We're releasing the new version of Meta AI, our assistant that you can ask any question across our apps and glasses. Our goal is to build the world's leading AI. We're upgrading Meta AI with our new state-of-the-art Llama 3 AI model, which we're open sourcing. With this new model, we believe Meta AI is now the most intelligent AI assistant that you can freely use. We're making Meta AI easier to use by integrating it into the search boxes at the top of WhatsApp, Instagram, Facebook, and Messenger. We also built a website, meta.ai, for you to use on web. We also built some unique creation features, like the ability to animate photos. Meta AI now generates high quality images so fast that it creates and updates them in real-time as you're typing. It'll also generate a playback video of your creation process. Enjoy Meta AI and you can follow our new @meta.ai IG for more updates.": 157K likes, 9,028 comments - zuck, April 18, 2024.

- Fail to load a tokenizer (CroissantLLM) · Issue #330 · unslothai/unsloth: Trying to run the colab using a small model: from unsloth import FastLanguageModel import torch max_seq_length = 2048 # Gemma sadly only supports max 8192 for now dtype = None # None for auto detec...

- Mark Zuckerberg - Llama 3, $10B Models, Caesar Augustus, & 1 GW Datacenters: Zuck on:- Llama 3- open sourcing towards AGI - custom silicon, synthetic data, & energy constraints on scaling- Caesar Augustus, intelligence explosion, biow...

- [Usage]: Llama 3 8B Instruct Inference · Issue #4180 · vllm-project/vllm: Your current environment Using the latest version of vLLM on 2 L4 GPUs. How would you like to use vllm I was trying to utilize vLLM to deploy meta-llama/Meta-Llama-3-8B-Instruct model and use OpenA...

- HuggingChat: Making the community's best AI chat models available to everyone.

- ‘Her’ AI, Almost Here? Llama 3, Vasa-1, and Altman ‘Plugging Into Everything You Want To Do’: Llama 3, Vasa-1, and a host of new interviews and updates, AI news comes a bit like London buses. I’ll spend a couple minutes covering the last-minute Llama ...

- Adaptive Text Watermark for Large Language Models: no description found

- Google Colaboratory: no description found

- Tweet from Andrej Karpathy (@karpathy): Congrats to @AIatMeta on Llama 3 release!! 🎉 https://ai.meta.com/blog/meta-llama-3/ Notes: Releasing 8B and 70B (both base and finetuned) models, strong-performing in their model class (but we'l...

- LLAMA-3 🦙: EASIET WAY To FINE-TUNE ON YOUR DATA 🙌: Learn how to fine-tune the latest llama3 on your own data with Unsloth. 🦾 Discord: https://discord.com/invite/t4eYQRUcXB ☕ Buy me a Coffee: https://ko-fi.com...

- How to Fine Tune Llama 3 for Better Instruction Following?: 🚀 In today's video, I'm thrilled to guide you through the intricate process of fine-tuning the LLaMA 3 model for optimal instruction following! From setting...

- meta-llama/Meta-Llama-3-8B-Instruct · Fix chat template to add generation prompt only if the option is selected: no description found

Unsloth AI (Daniel Han) ▷ #announcements (1 messages):

- Llama 3 Hits the Ground Running: Unsloth AI introduces support for Llama 3, promising double the training speed and a 60% reduction in memory usage. Details can be found in the GitHub Release.

- Freely Accessible Llama Notebooks: Users can now access free notebooks to work with Llama 3 on Colab and Kaggle, where support for the Llama 3 70B model is also available.

- Innovating with 4-bit Models: Unsloth has launched 4-bit models of Llama-3 to improve efficiency, available for both 8B and 70B versions. For more models, including the Instruct series, visit their Hugging Face page.

- Experimentation Encouraged by Unsloth: The team is eager to see the community share, test, and discuss outcomes using Unsloth AI's models.

Link mentioned: Google Colaboratory: no description found

Unsloth AI (Daniel Han) ▷ #random (6 messages):

- HuggingFace's Inference API for LLAMA 3 is MIA: One member pointed out that HuggingFace has not yet opened the Inference API for LLAMA 3.

- LLAMA's Training Devours Compute: Another member humorously commented on the lack of compute by saying, No compute left after training the model followed by a skull emoji.

- LLAMA's Token Training Trove: In a brief exchange, members clarified the size of the training token set for LLAMA, settling on 15T tokens.

- AI Paparazzi Alert: A member shared a YouTube video discussing recent updates in AI, including LLAMA 3, with the whimsical introduction, "I am a paparaaaaazi!" accompanied by mind-blown and laughing emojis.

Link mentioned: ‘Her’ AI, Almost Here? Llama 3, Vasa-1, and Altman ‘Plugging Into Everything You Want To Do’: Llama 3, Vasa-1, and a host of new interviews and updates, AI news comes a bit like London buses. I’ll spend a couple minutes covering the last-minute Llama ...

Unsloth AI (Daniel Han) ▷ #help (341 messages🔥🔥):

- Model Saving and Loading Quandaries: A member experimented with fine-tuning a model, but encountered issues when trying to save in 16-bit for vllm without the necessary code in their training script. They debated a workaround involving saving the model from the latest checkpoint after reinitializing training, attempting to resolve size mismatch errors when loading state dictionaries due to token discrepancies.

- LLAMA3 Release and Inference Woes: As the team braced for the LLAMA3 release, other members struggled with utilizing the AI, with one member solving a size mismatch error by saving over from the checkpoint again and confirming the support of rank stabilization in high rank LoRAs. Another member grappled with inference problems, encountering an unanticipated termination after supposedly completing all steps in a single iteration.

- Tokenization Tribulations Across Unsloth: Issues with tokenization within Unsloth arose persistently, specifically errors related to missing add_bos_token attributes in tokenizer objects and confusion over the necessity of saving tokenizers post-training to retain special tokens.

- Technical Difficulties and Environment Troubleshooting: Users detailed various technical setbacks including pipeline issues, JSON decoding errors, and the ill effects of relying on pip instead of conda for installations in their environments. Questions also surfaced about fine-tuning applications of Llama3, such as for non-English wikis and function calling.

- Practical Guidance and Community Support: Community members actively assisted each other by confirming setting details for training arguments, suggesting remedies for memory crashes on Colab, and discussing dataset structures for chatML. As members exchanged solutions, they displayed a commitment to confronting and overcoming current limitations, whether in finetuning models, preparing datasets, or navigating installation hitches.

- Google Colaboratory: no description found

- ParasiticRogue/Merged-RP-Stew-V2-34B · Hugging Face: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- meta-llama/Meta-Llama-3-8B-Instruct · Hugging Face: no description found

- astronomer-io/Llama-3-8B-Instruct-GPTQ-4-Bit · Hugging Face: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Home: 2-5X faster 80% less memory LLM finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- philschmid/guanaco-sharegpt-style · Datasets at Hugging Face: no description found
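
The size mismatch and tokenizer-saving issues summarized above tend to share one cause: the embedding matrix has one row per vocabulary entry, so a checkpoint trained with added special tokens no longer lines up with a tokenizer that lacks them. The sketch below illustrates that check in plain Python. The `EmbeddingShape` class, the function name, and the numbers are hypothetical and are not taken from Unsloth; in Hugging Face transformers the usual remedies are `tokenizer.save_pretrained(...)` after training and `model.resize_token_embeddings(len(tokenizer))` before loading.

```python
from dataclasses import dataclass


@dataclass
class EmbeddingShape:
    """Shape of a token-embedding matrix: one row per vocabulary entry."""
    rows: int
    hidden: int


def diagnose_vocab_mismatch(checkpoint: EmbeddingShape, tokenizer_vocab_size: int) -> str:
    """Explain whether a checkpoint's embedding rows match the tokenizer in use."""
    diff = tokenizer_vocab_size - checkpoint.rows
    if diff == 0:
        return "ok: embedding rows match tokenizer vocabulary"
    if diff > 0:
        return (f"mismatch: tokenizer has {diff} more tokens than the checkpoint; "
                "resize the model embeddings before loading")
    return (f"mismatch: checkpoint has {-diff} more rows than the tokenizer; "
            "the tokenizer saved after training is probably missing")


# Hypothetical numbers: a 32000-token base vocabulary plus 2 special tokens
# added during fine-tuning.
base_vocab = 32000
added_special_tokens = 2
trained = EmbeddingShape(rows=base_vocab + added_special_tokens, hidden=4096)

# Loading with the original tokenizer (the post-training tokenizer was not saved).
stale = diagnose_vocab_mismatch(trained, base_vocab)
# Loading with the tokenizer that was saved alongside the checkpoint.
fresh = diagnose_vocab_mismatch(trained, base_vocab + added_special_tokens)
print(stale)
print(fresh)
```

Running a check like this before loading a state dictionary shows which side is out of date, which is usually quicker than reading the raw shape error.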

Unsloth AI (Daniel Han) ▷ #showcase (4 messages):

- Mixtral and Mistral Make Waves: Mistral.ai released a torrent for Mixtral 8x22B, a large MoE model that follows Mixtral 8x7B and increases the hidden dimension to 6144, in line with DBRX. The team continues to ship its releases without much fanfare.

- Neural Llama Appears on Hugging Face: Neural Llama 3 has made its way to Hugging Face, trained using Unsloth and showcased alongside the likes of tatsu-lab's alpaca model. Members have acknowledged the presence of this new model with enthusiasm.

- NeuralNovel/Llama-3-NeuralPaca-8b · Hugging Face: no description found

- AI Unplugged 7: Mixture of Depths,: Insights over information

Unsloth AI (Daniel Han) ▷ #suggestions (3 messages):

- ReFT Method Sparks Interest: A member mentioned the new ReFT (Reinforced Fine-Tuning) method and enquired about its potential integration into Unsloth. This technique could make it easier for newcomers to engage with the platform.

- Unsloth Team Takes Note: Another member responded with interest in exploring the ReFT method further, indicating that the team will consider its implementation within Unsloth.

- Community Echoes Integration Request: A community member added their voice, appreciating that the question about the integration of the ReFT method into Unsloth was raised and is being considered by the team.

LM Studio ▷ #💬-general (661 messages🔥🔥🔥):

- Llama 3 is the Hot AI on the Block: Users are discussing the performance of the newly released Llama 3 model from Meta, particularly its 8B version. They suggest it is on par with 70B models such as StableBeluga and Airoboros, with responses feeling genuinely human-like.

- GPU Woes and Server Interactions: Some users report issues with Llama 3 models spouting nonsensical outputs when GPU acceleration is enabled on Mac M1 systems, while others share how they can successfully run models on older hardware. There's also interest in learning whether LM Studio's server supports KV caches to avoid recomputing long contexts for each conversation.

- Models and Quants Details: Quantized versions such as K_S and Q4_K_M are reported to cause issues in LM Studio, with some suggesting that versions from other providers like QuantFactory work better. NousResearch's 70B instruction model is recommended, and there's speculation that updated GGUFs will improve model behavior.

- Model Integration and Accessibility: Inquiries about integrating with other tools, embedding documents, and running on a headless server are brought up, with suggestions to use alternatives like llama.cpp for headless server deployment and existing third-party tools like LlamaIndex for document proxy capabilities.

- Fine-Tuning and Un-Censoring Discussions: Users are eager for uncensored versions of models, with suggestions to modify system prompts to coax more "human-like" behavior and circumvent restrictions. Some are also excited about the potential for the community to further improve and fine-tune Llama 3 going forward.

- LM Studio | Continue: LM Studio is an application for Mac, Windows, and Linux that makes it easy to locally run open-source models and comes with a great UI. To get started with LM Studio, download from the website, use th...

- lmstudio-community/Meta-Llama-3-70B-Instruct-GGUF · Hugging Face: no description found

- meta-llama/Meta-Llama-3-70B-Instruct - HuggingChat: Use meta-llama/Meta-Llama-3-70B-Instruct with HuggingChat

- meraGPT/mera-mix-4x7B · Hugging Face: no description found

- Docker: no description found

- NousResearch/Meta-Llama-3-70B-Instruct-GGUF · Hugging Face: no description found

- Welcome Llama 3 - Meta's new open LLM: no description found

- Tweet from AI at Meta (@AIatMeta): Introducing Meta Llama 3: the most capable openly available LLM to date. Today we’re releasing 8B & 70B models that deliver on new capabilities such as improved reasoning and set a new state-of-the-a...

- Monaspace: An innovative superfamily of fonts for code

- Todd Howard Howard GIF - Todd Howard Howard Nodding - Discover & Share GIFs: Click to view the GIF

- GitHub - arcee-ai/mergekit: Tools for merging pretrained large language models.: Tools for merging pretrained large language models. - arcee-ai/mergekit

- lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- Meta Releases LLaMA 3: Deep Dive & Demo: Today, 18 April 2024, is something special! In this video, In this video I'm covering the release of @meta's LLaMA 3. This model is the third iteration of th...
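
The KV cache question raised above comes down to reusing work for a prompt prefix that has already been processed, so that each new turn pays only for its new tokens. Below is a toy sketch of that idea; it does not describe how LM Studio's server behaves, and `PrefixCache` and its counters are hypothetical stand-ins for real attention key/value tensors.

```python
from typing import List, Set, Tuple


class PrefixCache:
    """Toy stand-in for a KV cache: remembers which token prefixes were processed."""

    def __init__(self) -> None:
        self._seen: Set[Tuple[int, ...]] = set()
        self.tokens_computed = 0  # simulated forward-pass work

    def _longest_cached_prefix(self, tokens: List[int]) -> int:
        # Walk from the longest candidate prefix down to the shortest.
        for end in range(len(tokens), 0, -1):
            if tuple(tokens[:end]) in self._seen:
                return end
        return 0

    def process(self, tokens: List[int]) -> int:
        """Process a prompt and return how many tokens had to be newly computed."""
        reused = self._longest_cached_prefix(tokens)
        new_tokens = len(tokens) - reused
        self.tokens_computed += new_tokens
        self._seen.add(tuple(tokens))
        return new_tokens


cache = PrefixCache()
turn1 = list(range(1000))                  # a long first prompt (fake token ids)
turn2 = turn1 + list(range(1000, 1020))    # same conversation plus 20 new tokens

first = cache.process(turn1)
second = cache.process(turn2)
print(first, second, cache.tokens_computed)
```

In this sketch the second turn computes only the 20 appended tokens; without the cache it would reprocess all 1020.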

LM Studio ▷ #🤖-models-discussion-chat (617 messages🔥🔥🔥):

- Llama 3 Buzz and Infinite Loops: Users are exploring the capabilities of Llama 3 models, particularly the 70B and 8B versions. Some are encountering issues with infinite response loops from the model, often involving the model improperly using the word "assistant."

- Download and Runtime Concerns: Queries around whether various Llama 3 quants can run on specific hardware configurations are prevalent. Users are looking for models, especially ones that could efficiently operate on M3 Max with 128GB RAM or NVIDIA 3090.

- Comparisons between Llama 3 and Other Models: Some are comparing Llama 3 to previous Llama 2 models and other AI models like Command R Plus. Reports indicate a similar or improved performance, though some users have language-specific concerns.

- Prompt Template Confusions and EOT Token Issues: Users are seeking advice on the correct prompt settings for Llama 3 models to prevent unwanted loops and interactions in the responses. It appears version 0.2.20 of LM Studio is necessary along with specific community quants.

- Announcement of Llama 3 70B Instruct by Meta: A version of Llama 3 70B Instruct is announced as coming soon, and quants such as IQ1_M are highlighted for their coherence and size efficiency, fitting large models into relatively small VRAM capacities.

- meta-llama/Meta-Llama-3-70B · Hugging Face: no description found

- TEMPLATE """if .System<|start_header_id|>system<|end_header_id|>: <|eot_id|>if .Prompt<|start_header_id|>user<|end_header_id|> <|eot_id|><|start_header_id|>assistant<|end_header_id|&g...

- meta-llama/Meta-Llama-3-70B-Instruct - HuggingChat: Use meta-llama/Meta-Llama-3-70B-Instruct with HuggingChat

- MaziyarPanahi/WizardLM-2-7B-GGUF · Hugging Face: no description found

- QuantFactory/Meta-Llama-3-8B-GGUF at main: no description found

- MaziyarPanahi/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- meta-llama/Meta-Llama-3-70B-Instruct · Update generation_config.json: no description found

- meta-llama/Meta-Llama-3-8B-Instruct · Update generation_config.json: no description found

- meta-llama/Meta-Llama-3-8B-Instruct · Hugging Face: no description found

- meta-llama/Meta-Llama-3-70B-Instruct · Hugging Face: no description found

- llama3/MODEL_CARD.md at main · meta-llama/llama3: The official Meta Llama 3 GitHub site. Contribute to meta-llama/llama3 development by creating an account on GitHub.

- lmstudio-community/Meta-Llama-3-70B-Instruct-GGUF · Hugging Face: no description found

- Qwen/CodeQwen1.5-7B · Hugging Face: no description found

- We Know Duh GIF - We Know Duh Hello - Discover & Share GIFs: Click to view the GIF

- M3 max 128GB for AI running Llama2 7b 13b and 70b: In this video we run Llama models using the new M3 max with 128GB and we compare it with a M1 pro and RTX 4090 to see the real world performance of this Chip...

- lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- configs/llama3.preset.json at main · lmstudio-ai/configs: LM Studio JSON configuration file format and a collection of example config files. - lmstudio-ai/configs

- llama3 family support · Issue #6747 · ggerganov/llama.cpp: llama3 released would be happy to use with llama.cpp https://huggingface.co/collections/meta-llama/meta-llama-3-66214712577ca38149ebb2b6 https://github.com/meta-llama/llama3
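
The looping and stray "assistant" outputs described above are consistent with the end-of-turn token not being treated as a stop string, though the summary does not confirm the cause. As a minimal sketch, the code below builds a prompt in the Llama 3 Instruct chat format and cuts generated text at `<|eot_id|>`. The helper names and the sample output string are hypothetical, and this is not LM Studio's preset.

```python
from typing import Dict, List, Sequence

BOS = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def format_llama3_prompt(messages: List[Dict[str, str]]) -> str:
    """Render chat messages in the Llama 3 Instruct format, ending with an open assistant turn."""
    parts = [BOS]
    for m in messages:
        parts.append(
            f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n{m['content']}{EOT}"
        )
    # Leave the assistant header open so the model writes the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def truncate_at_stop(text: str, stop_strings: Sequence[str] = (EOT, "<|end_of_text|>")) -> str:
    """Cut generated text at the earliest stop string, if any is present."""
    cut = len(text)
    for s in stop_strings:
        i = text.find(s)
        if i != -1:
            cut = min(cut, i)
    return text[:cut]


prompt = format_llama3_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is the capital of France?"},
])

# Hypothetical raw output from a runtime that did not stop at <|eot_id|>.
raw = "Paris.<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\nParis."
reply = truncate_at_stop(raw)
print(reply)
```

Most runtimes accept a list of stop strings or stop token ids; configuring `<|eot_id|>` there has the same effect as the truncation shown here.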

LM Studio ▷ #announcements (1 messages):

- Introducing Llama 3 in LM Studio 0.2.20: LM Studio announces support for MetaAI's Llama 3 in the latest update, LM Studio 0.2.20, which can be accessed at lmstudio.ai or through an auto-update by restarting the app. The important caveat is that only Llama 3 GGUFs from "lmstudio-community" will function at this time.

- Community Model Spotlight - Llama 3 8B: The community model Llama 3 8B Instruct by Meta, quantized by bartowski, is highlighted, offering a small, fast, and instruction-tuned AI model. The original model can be found at Meta-Llama-3-8B-Instruct, and the GGUF version at lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF.

- Exclusive Compatibility Note: Users are informed of a subtle issue in GGUF creation, which has been circumvented in these quantizations; as a result, they should not expect other Llama 3 GGUFs to work with the current LM Studio version.

- Llama 3 8B Availability and 70B on the Horizon: Llama 3 8B Instruct GGUF is now available for use, and the 70B version is hinted to be incoming. Users are encouraged to report bugs in the specified Discord channel.

- lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- Tweet from LM Studio (@LMStudioAI): .@Meta's Llama 3 is now fully supported in LM Studio! 👉 Update to LM Studio 0.2.20 🔎 Download lmstudio-community/llama-3 Llama 3 8B is already up. 70B is on the way 🦙 https://huggingface.co/...

LM Studio ▷ #🧠-feedback (5 messages):

- Thumbs-Up for Improved Model Sorting: A user has commended the new model sorting feature on the download page, appreciating the improved functionality.

- A Call for Text-to-Speech (TTS) in LM Studio: There has been a query regarding the future possibility of integrating text-to-speech (TTS) into LM Studio to alleviate the need to read text all day.

- Perplexed by Persistent Bugs: One user has reported a recurring bug where closing the last chat after loading a new model results in the need to reload, and the system does not retain the chosen preset.

- Suggestion for Tools to Tackle Text-to-Speech: In response to a query about TTS integration, a user suggested that system tools might offer a solution.

- Feedback on Error Display Design: A user has expressed frustration with the error display window in LM Studio, criticizing it for being narrow and non-resizable, and suggesting a design that is taller to better accommodate the vertical content.

LM Studio ▷ #📝-prompts-discussion-chat (4 messages):

- Mixtral Resume Rating Confusion: A member mentioned struggling to use Mixtral for rating resumes according to their criteria, whereas using ChatGPT for the same task presented no issues.

- Two-Step Solution Proposed: In response, another member suggested a two-step approach for handling resumes with Mixtral: one step to identify and extract relevant elements, and another to grade them.

- Alternative CSV Grading Method: It was also proposed to convert the resumes into a CSV format and use an Excel formula to handle the grading.
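
The two suggestions above can be combined: let the model handle only extraction, then grade the extracted fields with a fixed formula, much as a spreadsheet would. The sketch below assumes a hypothetical `llm` callable that takes a prompt and returns text; the field names, the rubric weights, and the stubbed response are all illustrative and do not come from the discussion.

```python
import json
from typing import Callable, Dict, Set

LLM = Callable[[str], str]  # hypothetical: prompt in, model text out


def extract_fields(llm: LLM, resume_text: str) -> Dict[str, object]:
    """Step 1: ask the model only to extract structured fields as JSON."""
    prompt = (
        "Extract these fields from the resume and answer with JSON only, using "
        "the keys 'years_experience' (number) and 'skills' (list of strings).\n\n"
        + resume_text
    )
    return json.loads(llm(prompt))


def grade(fields: Dict[str, object], required_skills: Set[str]) -> int:
    """Step 2: score with a fixed rubric instead of asking the model to judge."""
    years = min(int(fields["years_experience"]), 10)        # cap experience at 10 points
    skills = {str(s).lower() for s in fields["skills"]}
    matched = len(skills & required_skills)
    return years + 5 * matched                               # 5 points per required skill


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call such as Mixtral."""
    return '{"years_experience": 6, "skills": ["Python", "SQL"]}'


fields = extract_fields(fake_llm, "Jane Doe. Six years of Python and SQL work.")
score = grade(fields, required_skills={"python", "sql", "go"})
print(score)  # 6 years + 2 matched skills * 5 = 16
```

Keeping the grading step deterministic makes scores comparable across resumes, at the cost of needing the criteria written down as explicit rules.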

LM Studio ▷ #🎛-hardware-discussion (16 messages🔥):

- Gaming GPUs for AI: The 1080 Ti was mentioned as performing adequately when running large AI models.

- Joking on Crypto's New Frontier: There was a humorous remark about old cryptocurrency mining chassis potentially being repurposed as AI rigs.

- A Question of Space: One member expressed concern about fitting a new GPU in their case, highlighting the benefit of an additional 6GB of VRAM.