[AINews] Liquid Foundation Models: A New Transformers alternative + AINews Pod 2

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Adaptive computational operators are all you need.

AI News for 9/27/2024-9/30/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (225 channels, and 5435 messages) for you. Estimated reading time saved (at 200wpm): 604 minutes. You can now tag @smol_ai for AINews discussions!

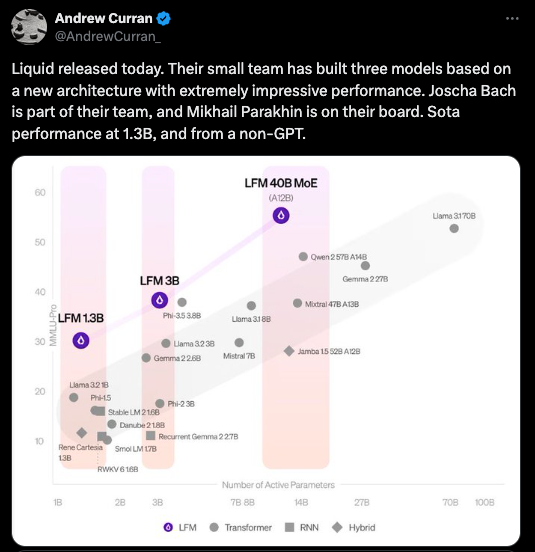

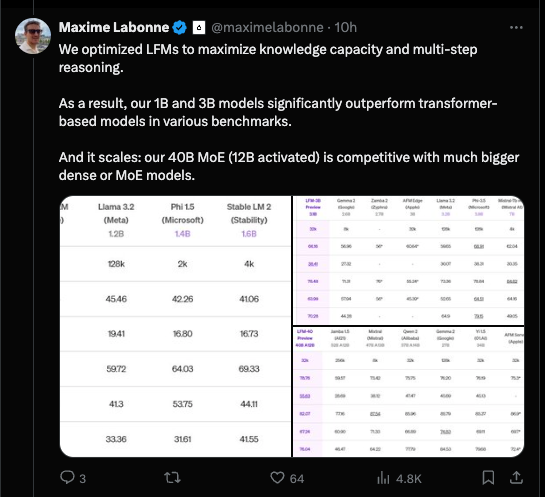

It's not every day that a credible new foundation model lab launches, so the prize for today rightfully goes to Liquid.ai, who, 10 months after their $37m seed, finally "came out of stealth" announcing 3 subquadratic models that perform remarkably well for their weight class:

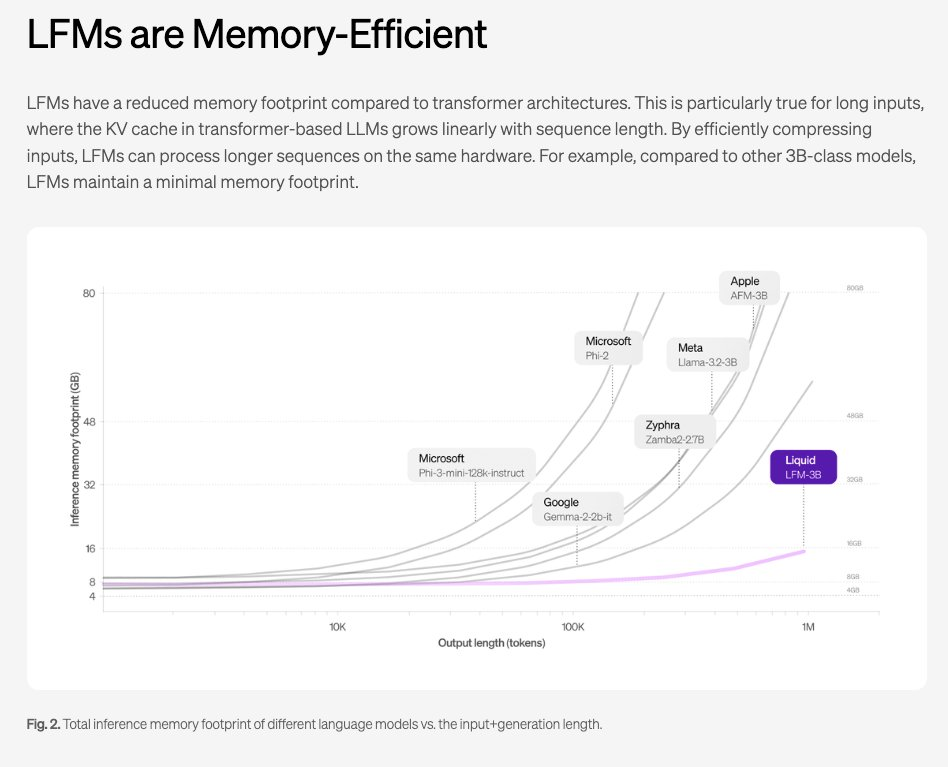

We know precious little about "liquid networks" compared to state space models, but they have the obligatory subquadratic chart to show that they beat SSMs there:

with very credible benchmark scores:

Notably they seem to be noticeably more efficient per parameter than both the Apple on device and server foundation models (our coverage here).

They aren't open source yet, but have a playground and API and have more promised coming up to their Oct 23rd launch.

AINews Pod

We first previewed our Illuminate inspired podcast earlier this month. With NotebookLM Deep Dive going viral, we're building an open source audio version of AINews as a new experiment. See [our latest comparison between NotebookLM and our pod here! Let us know @smol_ai if you have feedback or want the open source repo.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Updates and Developments

- Llama 3.2 Release: Meta AI announced Llama 3.2, featuring 11B and 90B multimodal models with vision capabilities, as well as lightweight 1B and 3B text-only models for mobile devices. The vision models support image and text prompts for deep understanding and reasoning on inputs. @AIatMeta noted that these models can take in both image and text prompts to deeply understand and reason on inputs.

- Google DeepMind Announcements: Google announced the rollout of two new production-ready Gemini AI models: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. @adcock_brett highlighted that the best part of the announcement was a 50% reduced price on 1.5 Pro and 2x/3x higher rate limits on Flash/1.5 Pro respectively.

- OpenAI Updates: OpenAI rolled out an enhanced Advanced Voice Mode to all ChatGPT Plus and Teams subscribers, adding Custom Instructions, Memory, and five new 'nature-inspired' voices, as reported by @adcock_brett.

- AlphaChip: Google DeepMind unveiled AlphaChip, an AI system that designs chips using reinforcement learning. @adcock_brett noted that this enables superhuman chip layouts to be built in hours rather than months.

Open Source and Regulation

- SB-1047 Veto: California Governor Gavin Newsom vetoed SB-1047, a bill related to AI regulation. Many in the tech community, including @ylecun and @svpino, expressed gratitude for this decision, viewing it as a win for open-source AI and innovation.

- Open Source Growth: @ylecun emphasized that open source in AI is thriving, citing the number of projects on Github and HuggingFace reaching 1 million models.

AI Research and Development

- NotebookLM: Google upgraded NotebookLM/Audio Overviews, adding support for YouTube videos and audio files. @adcock_brett shared that Audio Overviews turns notes, PDFs, Google Docs, and more into AI-generated podcasts.

- Meta AI Developments: Meta AI, the consumer chatbot, is now multimodal, capable of 'seeing' images and allowing users to edit photos using AI, as reported by @adcock_brett.

- AI in Medicine: A study on o1-preview model in medical scenarios showed that it surpasses GPT-4 in accuracy by an average of 6.2% and 6.6% across 19 datasets and two newly created complex QA scenarios, according to @dair_ai.

Industry Trends and Collaborations

- James Cameron and Stability AI: Film director James Cameron joined the board of directors at Stability AI, seeing the convergence of generative AI and CGI as "the next wave" in visual media creation, as reported by @adcock_brett.

- EA's AI Demo: EA demonstrated a new AI concept for user-generated video game content, using 3D assets, code, gameplay hours, telemetry events, and EA-trained custom models to remix games and asset libraries in real-time, as shared by @adcock_brett.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Emu3: Next-token prediction breakthrough for multimodal AI

- Emu3: Next-Token Prediction is All You Need (Score: 227, Comments: 63): Emu3, a new suite of multimodal models, achieves state-of-the-art performance in both generation and perception tasks using next-token prediction alone, outperforming established models like SDXL and LLaVA-1.6. By tokenizing images, text, and videos into a discrete space and training a single transformer from scratch, Emu3 simplifies complex multimodal model designs and demonstrates the potential of next-token prediction for building general multimodal intelligence beyond language. The researchers have open-sourced key techniques and models, including code on GitHub and pre-trained models on Hugging Face, to support further research in this direction.

- Booru tags, commonly used in anime image boards and Stable Diffusion models, are featured in Emu3's generation examples. Users debate the necessity of supporting these tags for model popularity, with some considering it a requirement for widespread adoption.

- Discussions arose about applying diffusion models to text generation, with mentions of CodeFusion paper. Users speculate on Meta's GPU compute capability and potential unreleased experiments, suggesting possible agreements between large AI companies to control information release.

- The model's ability to generate videos as next-token prediction excited users, potentially initiating a "new era of video generation". However, concerns were raised about generation times, with reports of 10 minutes for one picture on Replicate.

Theme 2. Replete-LLM releases fine-tuned Qwen-2.5 models with performance gains

- Replete-LLM Qwen-2.5 models release (Score: 73, Comments: 55): Replete-LLM has released fine-tuned versions of Qwen-2.5 models ranging from 0.5B to 72B parameters, using the Continuous finetuning method. The models, available on Hugging Face, reportedly show performance improvements across all sizes compared to the original Qwen-2.5 weights.

- Users requested benchmarks and side-by-side comparisons to demonstrate improvements. The developer added some benchmarks for the 7B model and noted that running comprehensive benchmarks often requires significant computing resources.

- The developer's continuous finetuning method combines previous finetuned weights, pretrained weights, and new finetuned weights to minimize loss. A paper detailing this approach was shared.

- GGUF versions of the models were made available, including quantized versions up to 72B parameters. Users expressed interest in testing these on various devices, from high-end machines to edge devices like phones.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Capabilities and Developments

- OpenAI's o1 model can handle 5-hour tasks, enabling longer-horizon problem-solving, compared to GPT-3 (5-second tasks) and GPT-4 (5-minute tasks), according to OpenAI's head of strategic marketing.

- MindsAI achieved a new high score of 48% on the ARC-AGI benchmark, with the prize goal set at 85%.

- A hacker demonstrated the ability to plant false memories in ChatGPT to create a persistent data exfiltration channel.

AI Policy and Regulation

- California Governor Gavin Newsom vetoed a contentious AI safety bill, highlighting ongoing debates around AI regulation.

AI Ethics and Societal Impact

- AI researcher Dan Hendrycks posed a thought experiment about a hypothetical new species with rapidly increasing intelligence and reproduction capabilities, questioning which species would be in control.

- The cost of a single query to OpenAI's o1 model was highlighted, sparking discussions about the economic implications of advanced AI models.

Memes and Humor

- A meme about trying to contain AGI sparked discussions about the challenges of AI safety.

- Another meme questioned whether humans are "the baddies" in relation to AI development, leading to debates about AI consciousness and ethics.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. AI Models Make Waves with New Releases and Upgrades

- LiquidAI Challenges Giants with Liquid Foundation Models (LFMs): LiquidAI launched LFMs—1B, 3B, and 40B models—claiming superior performance on benchmarks like MMLU and calling out competitors' inefficiencies. With team members from MIT, their architecture is set to challenge established models in the industry.

- Aider v0.58.0 Writes Over Half Its Own Code: The latest release introduces features like model pairing and new commands, boasting that Aider created 53% of the update's code autonomously. This version supports new models and enhances user experience with improved commands like

/copyand/paste. - Microsoft's Hallucination Detection Model Levels Up to Phi-3.5: Upgraded from Phi-3 to Phi-3.5, the model flaunts impressive metrics—Precision: 0.77, Recall: 0.91, F1 Score: 0.83, and Accuracy: 82%. It aims to boost the reliability of language model outputs by effectively identifying hallucinations.

Theme 2. AI Regulations and Legal Battles Heat Up

- California Governor Vetoes AI Safety Bill SB 1047: Governor Gavin Newsom halted the bill designed to regulate AI firms, claiming it wasn't the optimal approach for public protection. Critics see this as a setback for AI oversight, while supporters push for capability-based regulations.

- OpenAI Faces Talent Exodus Over Compensation Demands: Key researchers at OpenAI threaten to quit unless compensation increases, with $1.2 billion already cashed out amid a soaring valuation. New CFO Sarah Friar navigates tense negotiations as rivals like Safe Superintelligence poach talent.

- LAION Wins Landmark Copyright Case in Germany: LAION successfully defended against copyright infringement claims, setting a precedent that benefits AI dataset use. This victory removes significant legal barriers for AI research and development.

Theme 3. Community Grapples with AI Tool Challenges

- Perplexity Users Bemoan Inconsistent Performance: Users report erratic responses and missing citations, especially when switching between web searches and academic papers. Many prefer Felo for academic research due to better access and features like source previews.

- OpenRouter Users Hit by Rate Limits and Performance Drops: Frequent 429 errors frustrate users of Gemini Flash, pending a quota increase from Google. Models like Hermes 405B free show decreased performance post-maintenance, raising concerns over provider changes.

- Debate Ignites Over OpenAI's Research Transparency: Critics argue that OpenAI isn't sufficiently open about its research, pointing out that blog posts aren't enough. Employees assert transparency, but the community seeks more substantive communication beyond the research blog.

Theme 4. Hardware Woes Plague AI Enthusiasts

- NVIDIA Jetson AGX Thor's 128GB VRAM Sparks Hardware Envy: Set for 2025, the AGX Thor’s massive VRAM raises questions about the future of current GPUs like the 3090 and P40. The announcement has the community buzzing about potential upgrades and the evolving GPU landscape.

- New NVIDIA Drivers Slow Down Stable Diffusion Performance: Users with 8GB VRAM cards experience generation times ballooning from 20 seconds to 2 minutes after driver updates. The community advises against updating drivers to avoid crippling rendering workflows.

- Linux Users Battle NVIDIA Driver Issues, Eye AMD GPUs: Frustrations mount over NVIDIA's problematic Linux drivers, especially for VRAM offloading. Some users consider switching to AMD cards, citing better performance and ease of use in configurations.

Theme 5. AI Expands into Creative and Health Domains

- NotebookLM Crafts Custom Podcasts from Your Content: Google's NotebookLM introduces an audio feature that generates personalized podcasts using AI hosts. Users are impressed by the engaging and convincing conversations produced from their provided material.

- Breakthrough in Schizophrenia Treatment Unveiled: Perplexity AI announced the launch of the first schizophrenia medication in 30 years, marking significant progress in mental health care. Discussions highlight the potential impact on patient care and treatment paradigms.

- Fiery Debate Over AI-Generated Art vs. Human Creativity: The Stability.ai community is torn over the quality and depth of AI art compared to human creations. While some champion AI-generated works as legitimate art, others argue for the enduring superiority of human artistry.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- LinkedIn's Copied Code Controversy: LinkedIn faced backlash for allegedly copying Unsloth's code without proper attribution, prompting intervention from Microsoft and GitHub to ensure proper credit.

- The incident underscores the critical need for adherence to open source licensing and raises concerns about intellectual property.

- Best Practices for Fine-tuning Llama Models: To mitigate token generation issues, users discussed setting a random seed and evaluating output quality carefully during Llama model fine-tuning.

- It's essential to configure EOS tokens correctly to maintain the model's original abilities during inference.

- GGUF Conversion Errors: Users encountered a 'cannot find tokenizer merges in model file' error when loading GGUF models, highlighting potential issues during model saving.

- Understanding the conversion process and maintaining compatibility with tokenizer configurations is vital for ensuring smooth model transitions.

- Liquid Foundation Models Launch: LiquidAI announced the introduction of Liquid Foundation Models (LFMs), featuring 1B, 3B, and 40B models, but skepticism arose about the validity of these announcements.

- Concerns were expressed regarding the accuracy of the claims, especially in relation to Perplexity Labs.

- Leveraging Untapped Compute Power: Members noted substantial compute power being underutilized, suggesting potential performance improvements across various hardware setups.

- Achieving realistic performance boosts through optimization of existing resources indicates significant room for enhancement in current systems.

aider (Paul Gauthier) Discord

- Aider v0.58.0 Delivers Exciting Enhancements: The release of Aider v0.58.0 introduces features such as model pairing and new commands, with Aider creating 53% of the update's code autonomously.

- This version also supports new models and improves user experience with features like clipboard command updates.

- Architect/Editor Models Improve Efficiency: Aider utilizes a main model for planning and an optional editor model for execution, allowing configuration via

--editor-modelfor optimal task handling.- This dual approach has sparked discussions on multi-agent coding capabilities and price efficiency for LLM tasks.

- NotebookLM's New Podcast Feature Stands Out: NotebookLM launches an audio feature that generates custom podcasts from user content, showcasing AI hosts in a compelling format.

- One example podcast demonstrates the technology's ability to create engaging conversations from provided material.

- Automation Proposal for Content Generation: The idea to use NotebookLM to automate the production of videos from release notes has been floated, potentially leading to an efficient tool named ReleaseNotesLM.

- This tool aims to transform written updates into audio, streamlining processes for content creators.

- Discussion on Model Cost Efficiency: Using different models, such as

claude-3.5-sonnetfor architect tasks anddeepseek v2.5for editing, can lead to 20x-30x cost reductions on editor tokens.- Participants emphasized the advantages of strategic model selection based on cost and functionality, exploring script options for enhanced configuration.

HuggingFace Discord

- AI Model Merging Techniques Discussed: Users explored various methods for merging AI models, specifically focusing on approaches like PEFT merge and the DARE method to enhance performance during fine-tuning.

- The conversation stressed the value of leveraging existing models rather than training LLMs from scratch, positioning these methods as pivotal for efficient task handling.

- Medical AI Insights from Recent Papers: A post summarized the top research papers in medical AI for September 21-27, 2024, including notable studies like A Preliminary Study of o1 in Medicine.

- Members suggested breaking these insights into individual blog posts to increase engagement and discussion surrounding standout papers.

- Hallucination Detection Model Performance Metrics: The newly released Hallucination Detection Model upgraded from Phi-3 to Phi-3.5 boasts impressive metrics: Precision: 0.77, Recall: 0.91, F1 Score: 0.83, and accuracy: 82%; check out the model card.

- This model aims to improve the reliability of language model outputs by effectively identifying hallucinations.

- Gradio's Lackluster User Reception: Community sentiment towards Gradio turned negative, with users labeling it as 'hot garbage' due to UI responsiveness issues and design flaws that complicate project management.

- Despite the backlash, members encouraged seeking help in dedicated support channels, indicating a continued investment in troubleshooting.

- Keypoint Detection Model Enhancements: The announcement of the OmDet-Turbo model supports zero-shot object detection, integrating techniques from Grounding DINO and OWLv2; details can be found here.

- Exclusive focus on keypoint detection with models like SuperPoint sets the stage for community excitement over future developments in this field.

LM Studio Discord

- Challenges in downloading and sideloading models in LM Studio: Users encountered issues with downloading models in LM Studio, particularly when using VPNs, prompting some to sideload models instead. Limitations on supporting model formats like safetensors and GGUF were noted.

- The community expressed frustrations regarding the overall download experience, with discussions highlighting the necessity for better support with various model types.

- NVIDIA Jetson AGX Thor boasts 128GB VRAM: The upcoming NVIDIA Jetson AGX Thor is set to feature 128GB of VRAM in 2025, raising questions about the viability of current GPUs like the 3090 and P40. This announcement has created a buzz around potential upgrades in the GPU landscape.

- Some members pondered whether existing hardware will remain competitive as the demand for high-VRAM options continues to grow.

- Comparing GPU performance: 3090 vs 3090 Ti vs P40: Members compared the performance of the 3090, 3090 Ti, and P40, focusing on VRAM and pricing, which heavily influence their choices. One remark noted that the P40 operates at approximately half the speed of the 3090.

- Members expressed concern over rising GPU prices and debated the trade-offs between different models for current AI workloads.

- Market pricing dynamics for GPUs: Discussions emphasized that GPU prices remain high due to scalping and increased demand for AI applications, with the A6000 serving as a high-VRAM alternative. However, budget-conscious members favor options like multiple 3090s for their setups.

- The conversation highlighted a general frustration regarding pricing trends and the hurdles many face in the current market.

- Challenges with NVIDIA drivers on Linux: The community shared grievances about NVIDIA's Linux drivers being notoriously problematic, especially for VRAM offloading, an area where AMD cards perform better. Complications in setting up CUDA and other drivers underscored these frustrations.

- Some members indicated a growing preference for AMD hardware, citing its superior ease of use in certain configurations.

GPU MODE Discord

- Cerebras Chip Optimization Discussion: Members are exploring code optimizations for Cerebras chips with varying opinions about potential purchases and expertise availability.

- Community interest is growing as members show willingness to find experts for deeper insights into Cerebras technology.

- Rising Concerns Over Spam Management: The community is addressing an increase in crypto scam spam messages on Discord, suggesting stricter verification protocols to enhance server security.

- Members are actively seeking efficient anti-spam tools and discussing their experiences with existing solutions such as AutoMod.

- Triton Talk Materials Shared: A member sought out slides from the Triton talk and was directed to the GitHub repository containing educational resources.

- This reflects a strong community culture of knowledge sharing and collaborative learning.

- AMD GPU Performance Troubles: Discussion on significant performance limitations of AMD GPUs, particularly with GFX1100 and MI300 architectures, was prominent among the members.

- Many highlighted the ongoing challenges with multi-node setups and expressed the need for enhanced performance.

- Understanding Model Parallelism vs ZeRO/FSDP: Members clarified the distinctions between Model Parallelism and ZeRO/FSDP, focusing on how ZeRO implements parameter distribution strategies.

- Discussions emphasized that FSDP utilizes sharding to enhance model training efficiency, appealing to those looking to understand advanced features.

Modular (Mojo 🔥) Discord

- Modular Community Meeting Agenda Explored: Today's Modular Community Meeting at 10am PT will cover the MAX driver & engine API and a Q&A on Magic, with access via Zoom. Participants can check the Modular Community Calendar for upcoming events.

- The meeting recording will be uploaded to YouTube, including today’s session available at this link, ensuring no one misses out.

- Debate on Mojo Language Enhancements: A proposal for advanced Mojo language features suggested named variants for message passing and better management of tagged unions without new constructs, sparking extensive discussion among members.

- Proponents weighed the ergonomics of defining types, discussing the balance of nominal versus structural types in the design process.

- Bundling Models with Mojopkg: The ability to embed models in Mojopkg was enthusiastically discussed, showcasing potential user experience improvements by bundling everything into a single executable application.

- Key examples from other languages were mentioned, illustrating how this could simplify dependencies for users and enhance usability.

- Managing Native Dependencies Smoothly: Concerns were raised regarding Mojopkg's capability to simplify dependency management, potentially allowing for easier installation and configuration.

- Discussion included practical implementations like embedding installers for runtimes such as Python directly into Mojo applications.

- Compatibility Warnings on MacOS: A user reported compatibility warnings when building object files for macOS, noting linking issues between versions 15.0 and 14.4.

- Although the warnings are not fatal, they could point to future compatibility challenges needing resolution.

Nous Research AI Discord

- Nous Research pushes open source initiatives: Nous Research focuses on open source AI research, collaborating with builders and releasing models including the Hermes family.

- Their DisTrO project aims to speed up AI model training across the internet, hinting at the perils of closed source models.

- Distro paper release generating buzz: The Distro paper is expected to be announced soon, igniting excitement among community members eager for updates.

- This paper's relevance to the AI community amplifies anticipation surrounding its detailed content.

- New AI Model Fine-tuning Techniques Unleashed: The recent Rombodawg’s Replete-LLM topped the OpenLLM leaderboard for 7B models, aided by innovative fine-tuning techniques.

- Methods like TIES merging are identified as critical to enhancing model benchmarks significantly.

- Liquid Foundation Models capture attention: LiquidAI introduced Liquid Foundation Models with versions including 1B, 3B, and 40B, aiming for fresh capabilities in the AI landscape.

- These models are seen as pivotal in offering innovative functionalities for various applications within the AI domain.

- Medical AI Paper of the Week: Are We Closer to an AI Doctor?: The highlighted paper, A Preliminary Study of o1 in Medicine, explores the potential for AI to function as a doctor, authored by various experts in the field.

- This paper was recognized as the Medical AI Paper of the Week, showcasing its relevance in ongoing discussions about AI's role in healthcare.

Perplexity AI Discord

- Perplexity struggles with performance consistency: Users noted inconsistent responses from Perplexity while switching between web searches and academic papers, with instances of missing citations.

- Concerns were raised about whether these inconsistencies reflect a bug or highlight underlying design flaws in the search functionality.

- Felo superior for academic searches: Many users find Felo more effective for academic research, citing better access to relevant papers over Perplexity.

- Features like hovering for source previews enhance the research experience, drawing users to prefer Felo for its intuitive interface.

- Inconsistent API outputs frustrate users: The community discussed API inconsistencies, especially around the PPLX API, which was returning outdated real estate listings compared to the website data.

- Suggestions were made to experiment with parameters like temperature and top-p to improve the API's response consistency.

- Breakthrough in schizophrenia treatment: Perplexity AI announced an important milestone with the launch of the first schizophrenia medication in 30 years, marking significant progress in mental health solutions.

- Discourse emphasized the potential ramifications for patient care and the evolution of treatment paradigms moving forward.

- Texas counties use AI tech effectively: Texas counties showcased innovative approaches to leverage AI applications in local government operations, enhancing public service capabilities.

- Participants shared a detailed resource that highlights these practical implementations of AI technology in administrative tasks.

OpenRouter (Alex Atallah) Discord

- OpenRouter Struggles with Rate Limits: Users report frequent 429 errors while using Gemini Flash, causing significant frustration as they await a potential quota increase from Google.

- This ongoing traffic issue is undermining the platform's usability, impacting user engagement.

- Performance Decrease Post-Maintenance: Models like Hermes 405B free have exhibited lower performance quality after recent updates, raising concerns about potential changes in model providers.

- Users are advised to check their Activity pages to ensure they are using their preferred models.

- Translation Model Options Suggested: A user looked for efficient translation models for dialogue without strict limitations, expressing dissatisfaction with GPT4o Mini.

- Open weight models fine-tuned with dolphin techniques were recommended as more flexible alternatives.

- Frontend Chat GUI Recommendations: A discussion emerged about chat GUI solutions that allow middleware flexibility, with Streamlit proposed as a viable option.

- Typingmind was also mentioned for its customizable features in managing interactions with multiple AI agents.

- Gemini's Search Functionality Discussion: There’s interest in enabling direct search capabilities within Gemini models similar to Perplexity, though current usage limitations are still being evaluated.

- Discussions referenced Google's Search Retrieval API parameter, highlighting the need for clearer implementation strategies.

Stability.ai (Stable Diffusion) Discord

- Flux Model Hits a Home Run: Impressed by kohya_ss's work, members noted that the Flux model can train on just 12G VRAM, showcasing incredible performance capabilities.

- Excitement spread about the advancements, hinting at a possible shift in model efficiency benchmarks.

- Nvidia Drivers Slow Down SDXL: New Nvidia drivers caused major slowdowns for 8GB VRAM cards, with image generation times ballooning from 20 seconds to 2 minutes.

- Members strongly advised against updating drivers, as these changes detrimentally affected their rendering workflows.

- Regional Prompting Hits Snags: Community members shared frustrations with regional prompting in Stable Diffusion, specifically with character mixing in prompts like '2 boys and 1 girl'.

- Suggestions arose to begin with broader prompts, leveraging general guides for optimal results.

- AI Art Submission Call to Action: The community's invited to submit AI-generated art for potential feature in The AI Art Magazine, with a deadline set for October 20.

- This initiative aims to celebrate digital art and encourages members to flaunt their creativity.

- AI Art Stirs Quality Debate: A vigorous debate erupted regarding the merits of AI art versus human art, with opinions split on quality and depth.

- Some argued for the superiority of human artistry, while others defended AI-generated works as legitimate artistic expression.

OpenAI Discord

- Aider benchmarks LLM editing skills: Members discussed Aider's functionality, noting it excels with LLMs proficient in editing code, as highlighted in its leaderboards. Skepticism emerged around the reliability of Aider's benchmarks, especially concerning Gemini Pro 1.5 002.

- While Aider showcases impressive edits, the potential for further testing and validation remains critical for broader acceptance in the community.

- EU AI Bill sparks dialogue: The discourse around the EU's AI bill intensified, with members sharing varying views on its implications for multimodal AI regulation and chatbot classifications under level two regulations. Concerns about the regulatory burden on tech companies were prevalent.

- Many emphasized the necessity for clarity on how emerging AI technologies would be impacted by these regulations as they navigate compliance landscapes.

- Meta's game-changer in video translation: A member highlighted Meta's imminent release of a lip-sync video translation feature, set to enhance user engagement on the platform. This feature captivated discussions about its potential to reshape content creation tools.

- Members expressed excitement over how this could elevate translation services and the implications for global content accessibility.

- Voice mode quandaries in GPT-4: Frustration brewed over the performance of GPT-4o, with urgent calls for the release of GPT-4.5-o following claims of it being 'the dumbest LLM'. Critiques centered on insufficient reasoning capabilities as a major concern.

- Amidst user confusion, detailed discussions about daily limits and accessibility of voice mode highlighted the community's anticipation for enhancements in user experience.

- Flutter Code Execution Error Resolved: A user faced an error indicating an active run in thread

thread_ey25cCtgH3wqinE5ZqIUbmVT, leading to suggestions for managing active runs and using thecancelfunction. The user ultimately resolved the issue by waiting longer between executions.- Participants recommended incorporating a status parameter to track thread completions, potentially streamlining thread management and reducing frustration in future interactions.

Eleuther Discord

- New Members Enrich Community Dynamics: Several new members, including a fullstack engineer from Singapore and a data engineer from Portugal, joined the conversation, eager to contribute to AI projects and open source initiatives.

- Their enthusiasm for collaboration sets a promising tone for community growth.

- AI Conferences on the Horizon: Members discussed upcoming conferences like ICLR and NeurIPS, particularly with Singapore hosting ICLR, and are planning meetups.

- Light-hearted conversation about event security roles added a fun twist to the coordination.

- Liquid AI Launches Foundation Models: Liquid Foundation Models were announced, showcasing strong benchmark scores and a flexible architecture optimized for diverse industries.

- The models are designed for various hardware, inviting users to test them on Liquid AI's platform.

- Exploration of vLLM Metrics Extraction: A member inquired about extracting vLLM metrics objects from the lm-evaluation-harness library using the

simple_evaluatefunction on benchmarks.- They specifically sought metrics like time to first token and time in queue, prompting useful responses from the community.

- ExecuTorch Enhances On-Device AI Capabilities: ExecuTorch allows customization and deployment of PyTorch programs across various devices, including AR/VR and mobile systems, as per the platform overview.

- Details were shared regarding the

executorchpip package currently in alpha for Python 3.10 and 3.11, compatible with Linux x86_64 and macOS aarch64.

- Details were shared regarding the

Torchtune Discord

- Optimizing Torchtune Training Configurations: Users fine-tuned various settings for Llama 3.1 8B, optimizing parameters like

batch_size,fused, andfsdp_cpu_offload, which led to decreased epoch times whenpacked=Truewas enabled.- ...and everyone agreed that

enable_activation_checkpointshould remainFalseto boost compute efficiency.

- ...and everyone agreed that

- Demand for Dynamic CLI Solutions: A proposal emerged to create a dynamic CLI using the

tyrolibrary, allowing for customizable help texts that reflect configuration settings in Torchtune recipes.- This flexibility aims to enhance user experience and streamline recipe management with clear documentation.

- Memory Optimization Strategies Revealed: Members recommended updating the memory optimization page to include both performance and memory optimization tips, promoting a more integrated approach.

- Ideas like implementing sample packing and exploring int4 training were highlighted as potential enhancements for memory efficiency.

- Error Handling Enhancements for Distributed Training: A suggestion surfaced to improve error handling in distributed training by leveraging

torch.distributed's record utility for logging exceptions.- This approach facilitates easier troubleshooting by maintaining comprehensive error logs throughout the training process.

- Duplicate Key Concerns in Configuration Management: Discussion arose regarding OmegaConf flagging duplicate entries like

fused=Truein configs, highlighting the importance of clean and organized configuration files.- We should add a performance section in configs, placing fast options in comments to improve readability and immediate accessibility.

Latent Space Discord

- CodiumAI rebrands with Series A funding: QodoAI, previously known as CodiumAI, secured a $40M Series A funding, bringing their total to $50M to enhance AI-assisted tools.

- ‘This funding validates their approach’ indicating developer support for their mission to ensure code integrity.

- Liquid Foundation Models claim impressive benchmarks: LiquidAI launched LFMs, showcasing superior performance on MMLU and other benchmarks, calling out competitors' inefficiencies.

- With team members from MIT, their 1.3B model architecture is set to challenge established models in the industry.

- Gradio enables real-time AI voice interaction: LeptonAI demonstrated Gradio 5.0, which includes real-time streaming with audio mode for LLMs, simplifying code integrations.

- The updates empower developers to create interactive applications with ease, encouraging open-source collaboration.

- Ultralytics launches YOLO11: Ultralytics introduced YOLO11, enhancing previous versions for improved accuracy and speed in computer vision tasks.

- The launch marks a critical step in the evolution of their YOLO models, showcasing substantial performance improvements.

- Podcast listeners demand more researcher features: The latest episode features Shunyu Yao and Harrison Chase, drawing interest from listeners eager for more researcher involvement in future episodes.

- Engagements highlight listener enthusiasm, with comments like, ‘bring more researchers on’, urging for deeper discussions.

LlamaIndex Discord

- FinanceAgentToolSpec for Public Financial Data: The FinanceAgentToolSpec package on LlamaHub allows agents to access public financial data from sources like Polygon and Finnhub.

- Hanane's detailed post emphasizes how this tool can streamline financial analysis through querying.

- Full-Stack Demo Showcases Streaming Events: A new full-stack application illustrates workflows for streaming events with Human In The Loop functionalities.

- This app demonstrates how to research and present a topic, boosting user engagement significantly.

- YouTube Tutorial Enhances Workflow Understanding: A YouTube video provides a developer's walkthrough of the coding process for the full-stack demo.

- This resource aims to aid those wishing to implement similar streaming systems.

- Navigating RAG Pipeline Evaluation Challenges: Users reported issues with RAG pipeline evaluation using trulens, particularly addressing import errors and data retrieval.

- This led to discussions on the importance of building a solid evaluation dataset for accurate assessments.

- Understanding LLM Reasoning Problems: Defining the type of reasoning problem is essential for engaging with LLM reasoning, as highlighted in a shared article detailing reasoning types.

- The article emphasizes that various reasoning challenges require tailored approaches for effective evaluation.

Cohere Discord

- Cohere Startup Program Discounts Available: A user inquired about discounts for a startup team using Cohere, citing costs compared to Gemini. It was suggested they apply to the Cohere Startup Program for potential relief.

- Participants mentioned that the application process might take time, but they affirmed the significance of this support for early-stage ventures.

- Improve Flash Card Generation by Fine-tuning: Members discussed fine-tuning a model specifically for flash card generation from notes and slide decks, addressing concerns about output clarity. It was suggested to employ best practices for machine learning pipelines and utilize chunking data for improved results.

- Chunking was highlighted as beneficial, particularly for processing PDF slide decks, enhancing the model's understanding and qualitative output.

- Cultural Multilingual LMM Benchmark Launch: MBZUAI is developing a Cultural Multilingual LMM Benchmark for 100 languages, and is actively seeking native translators to volunteer for error correction. Successful participants will be invited to co-author the resulting paper.

- The scope of languages includes Indian, South Asian, African, and European languages, and interested parties can connect with the project lead via LinkedIn.

- RAG Header Formatting for LLM Prompts: Users sought guidance on formatting instructional headers for RAG prompts to ensure the LLM interprets inputs correctly. Discussions emphasized the need for precise supporting information and proper termination methods for headers.

- The conversation highlighted how clarity in formatting can mitigate errors in model responses, enhancing engagement with the LLM.

- Gaps in API Documentation Identified: A user noted inconsistencies in the API documentation regarding penalty ranges, calling for clearer standards on parameter values. This conversation reflects ongoing concerns about documentation consistency and user clarity in utilizing API features.

- Discussions around API migration from v1 to v2 corroborated that while older functionality remains, systematic updates are essential for a smooth transition.

Interconnects (Nathan Lambert) Discord

- OpenAI's talent exodus due to compensation demands: Key researchers at OpenAI are seeking higher compensation, with $1.2 billion already cashed out from selling profit units as the company’s valuation rises. This turnover is heightened by rivals like Safe Superintelligence actively recruiting talent.

- Employees are threatening to quit over money issues while new CFO Sarah Friar navigates these negotiations.

- California Governor vetoes AI safety bill SB 1047: Gov. Gavin Newsom vetoed the bill aimed at regulating AI firms, claiming it wasn't the best method for public protection. Critics view this as a setback for oversight while supporters push for regulations based on specific capabilities.

- Sen. Scott Wiener expressed disappointment over the lack of prior feedback from the governor, emphasizing the lost chance for California to lead in tech regulation.

- PearAI faces allegations of code theft: PearAI has been accused of stealing code from Continue.dev and rebranding it without acknowledgment, urging investors like YC to push for accountability. This raises significant ethical concerns about funding within the startup ecosystem.

- The controversy highlights ongoing concerns about the integrity of open-source communities and their treatment by emerging tech firms.

- Debate on Transparency in OpenAI Research: Critics question OpenAI's transparency, emphasizing that referencing a blog does not provide substantive communication of research findings. Some employees assert that the company is indeed open about their research.

- Discussions highlight mixed feelings on whether OpenAI's research blog sufficiently addresses the community's transparency concerns.

- Insights on Access to iPhone IAP Subscriptions: A substack best seller announced gaining access to iPhone In-App Purchase subscriptions, indicating new opportunities in mobile monetization. This development gives insight into implementing and managing these systems.

- The discussions reflect developers’ frustrations with the chaotic environment of managing the Apple App Store and their experiences with its complexities.

LLM Agents (Berkeley MOOC) Discord

- Course Materials Ready for Access: Students can access all course materials, including assignments and lecture recordings, on the course website, with submission deadline set for Dec 12th.

- It's important to check the site regularly for updates on materials as well.

- Multi-Agent Systems vs. Single-Agent Systems: Discussion emerged regarding the need for multi-agent systems rather than single-agent implementations in project contexts to reduce hallucinations and manage context.

- Participants noted that these systems might yield more accurate responses from LLMs.

- Curiosity Around NotebookLM's Capabilities: Members inquired if NotebookLM functions as an agent application, revealing it acts as a RAG agent that summarizes text and generates audio.

- Questions also surfaced regarding its technical implementation, particularly in multi-step processes.

- Awaiting Training Schedule Confirmation: Students are eager for confirmation on when training sessions start, with one noting that all labs were expected to be released on Oct 1st.

- However, this timeline was not officially confirmed.

- Exploring Super-Alignment Research: A proposed research project is in discussion, aiming to study ethics in multi-agent systems using frameworks like AutoGen.

- Challenges regarding the implementation of this study without dedicated frameworks were raised, highlighting limitations in simulation capabilities.

tinygrad (George Hotz) Discord

- Cloud Storage Costs Competitive with Major Providers: George mentioned that storage and egress costs will be less than or equal to major cloud providers, emphasizing cost considerations.

- He further explained that expectations for usage might alter perceived costs significantly.

- Modal's Payment Model Sparks Debate: Modal's unique pricing where they charge by the second for compute resources has drawn attention, touted as cheaper than traditional hourly rates.

- Members questioned the sustainability of such models and how it aligns with consistent usage patterns in the AI startup environment.

- Improving Tinygrad's Matcher with State Machines: A member suggested that implementing a matcher state machine could improve performance, aligning it towards C-like efficiency.

- George enthusiastically backed this approach, indicating it could achieve the desired performance improvements.

- Need for Comprehensive Regression Testing: Concerns were raised about the lack of a regression test suite for the optimizer, which could lead to unnoticed issues after code changes.

- Members discussed the idea of serialization for checking optimization patterns, but recognized it would not be engaging.

- SOTA GPU not mandatory for bounties: A member suggested that while a SOTA GPU could help, one can manage with an average GPU, especially for certain tasks.

- Some tasks like 100+ TFLOPS matmul in tinygrad may require specific hardware like the 7900XTX, while others do not.

OpenAccess AI Collective (axolotl) Discord

- Llama 3.2 Tuning Hits VRAM Wall: Users face high VRAM usage of 24GB when tuning Llama 3.2 1b with settings like qlora and 4bit loading, leading to discussions on balancing sequence length and batch size.

- Concerns specifically highlight the impact of sample packing, emphasizing a need for optimization in the tuning configuration.

- California Mandates AI Training Transparency: A new California law now requires disclosure of training sources for all AI models, affecting even smaller non-profits without exceptions.

- This has spurred conversations on utilizing lightweight chat models for creating compliant datasets, as community members brainstorm potential workarounds.

- Lightweight Chat Models Gain Traction: Members are exploring finetuning lightweight chat models from webcrawled datasets, aiming to meet legal transformation standards.

- One user pointed out that optimizing messy raw webcrawl data through LLMs can be a significant next step in the process.

- Liquid AI Sparks Curiosity: The introduction of Liquid AI, a new foundation model, has piqued interest among members due to its potential features and applications.

- Members are keen to discuss what legislative changes might mean for this model and its practical implications in light of recent developments.

- Maximizing Dataset Usage in Axolotl: In Axolotl, configure datasets to use the first 20% by adjusting the

splitoption in dataset settings for training purposes.- A lack of random sample selection directly in Axolotl means users must preprocess data, utilizing Hugging Face’s

datasetsfor random subset sampling before loading.

- A lack of random sample selection directly in Axolotl means users must preprocess data, utilizing Hugging Face’s

DSPy Discord

- DSPy showcases live Pydantic model generation: A livecoding session demonstrated how to create a free Pydantic model generator using Groq and GitHub Actions.

- Participants can catch the detailed demonstration in the shared Loom video.

- Upgrade to DSPy 2.5 delivers notable improvements: Switching to DSPy 2.5 with the LM client and a Predictor over TypedPredictor led to enhanced performance and fewer issues.

- Key enhancements stemmed from the new Adapters which are now more aware of chat LMs.

- OpenSearchRetriever ready for sharing: A member is willing to share their developed OpenSearchRetriever for DSPy if the community shows interest.

- This project could streamline integration and functionality, and it was encouraged that they make a PR.

- Challenges in Healthcare Fraud Classifications: A member is facing difficulties accurately classifying healthcare fraud in DOJ press releases, leading to misclassifications.

- The community discussed refining the classification criteria to enhance accuracy in this critical area.

- Addressing Long Docstring Confusion: Confusion arose around using long explanations in docstrings, affecting accuracy in class signatures.

- Members provided insights on the importance of clear documentation, but the user needed clarity on the language model being leveraged.

OpenInterpreter Discord

- Full-Stack Developer Seeks Projects: A full-stack developer is looking for new clients, specializing in e-commerce platforms, online stores, and real estate websites using React + Node and Vue + Laravel technologies.

- They are open to discussions for long-term collaborations.

- Query on Re-instructing AI Execution: A member asked about the possibility of modifying the AI execution instructions to enable users to independently fix and debug issues, pointing to frequent path-related errors.

- There was a clear expression of frustration regarding current system capabilities.

- Persistent Decoding Packet Error: Users reported a recurrent decoding packet issue, with the error message: Invalid data found when processing input during either server restarts or client connections.

- Suggestions were made to check for terminal error messages, but none were found, indicating consistent issues.

- Ngrok Authentication Troubles: A member encountered an ngrok authentication error requiring a verified account and authtoken during server execution.

- They suspected the issue might relate to the .env file not properly reading the apikey, asking for assistance on this topic.

- Jan AI as a Computer Control Interface: A member shared insights on using Jan AI with Open Interpreter as a local inference server for local LLMs, inviting feedback on others' experiences.

- They provided a YouTube video that showcases how Jan can interface to control computers.

LAION Discord

- Request for French Audio Datasets: A user needs high-quality audio datasets in French for training CosyVoice, emphasizing the urgency to obtain suitable datasets.

- Without proper datasets, they expressed uncertainty about progressing on their project.

- LAION Claims Victory in Copyright Challenge: LAION won a major copyright infringement challenge in a German court, setting a precedent in legal barriers for AI datasets.

- Further discussions emphasized the implications of this victory, which can be found on Reddit.

- Exploring Text-to-Video with Phenaki: Members explored the Phenaki model for generating videos from text, sharing a GitHub link for initial tests.

- They requested guidance for testing its capabilities due to a lack of datasets.

- Synergy Between Visual Language and Latent Diffusion: Discussion revolved around the potential of combining VLM (Visual Language Models) and LDM (Latent Diffusion Models) for enhanced image generation.

- A theoretical loop was proposed where VLM instructs LDM, effectively refining the output quality.

- Clarifying Implementation of PALM-RLHF Datasets: A member inquired about the suitable channel for implementing PALM-RLHF training datasets tailored to specific tasks.

- They aimed for clarity on aligning these datasets with operational requirements.

LangChain AI Discord

- Vectorstores could use example questions: A member suggested that incorporating example questions might enhance vectorstore performance in finding the closest match, although it may be considered excessive.

- They highlighted the importance of testing to measure the actual effectiveness of this approach.

- Database beats table data for LLMs: A member pointed out that switching from table data to a Postgres database is more suitable for LLMs, leading them to utilize LangChain modules for interaction.

- This transition aims to optimize data handling for model training and queries.

- Exploring thank you gifts in Discord: An inquiry was made about the feasibility of sending small thank you gifts to members in Discord who provided assistance.

- This reflects a desire to acknowledge contributions and build community bonds.

- Gemini faces sudden image errors: A member reported unexpected errors when sending images to Gemini, noting that this issue emerged after recent upgrades to all pip packages.

- The situation raised concerns about potential compatibility issues post-upgrade.

- Modifying inference methods with LangChain: A member is investigating modifications to the inference method of chat models using LangChain, focusing on optimizations in vllm.

- They seek to control token decoding, particularly around chat history and input invocation.

MLOps @Chipro Discord

- AI Realized Summit 2024 Set for October 2: Excitement is building for the AI Realized - The Enterprise AI Summit on October 2, 2024, hosted by Christina Ellwood and David Yakobovitch at UCSF, featuring industry leaders in Enterprise AI.

- Attendees can use code extra75 to save $75 off their tickets, which include meals at the conference.

- Kickoff of Manifold Research Frontiers Talks: Manifold Research is launching the Frontiers series to spotlight innovative work in foundational and applied AI, starting with a talk by Helen Lu focused on neuro-symbolic AI and human-robot collaboration.

- The talk will discuss challenges faced by autonomous agents in dynamic environments and is open for free registration here.

- Inquiry on MLOps Meetups in Stockholm: A member is seeking information about MLOps or Infrastructure meetups in Stockholm after recently moving to the city.

- They expressed a desire to connect with the local tech community and learn about upcoming events.

DiscoResearch Discord

- Calytrix introduces anti-slop sampler: A prototype anti-slop sampler suppresses unwanted words during inference by backtracking on detected sequences. Calytrix aims to make the codebase usable for downstream purposes, with the project available on GitHub.

- This approach targets enhancing dataset quality directly by reducing noise in generated outputs.

- Community backs anti-slop concept: Members shared positive feedback about the anti-slop sampler, with one commenting, 'cool, I like the idea!' highlighting its potential impact.

- The enthusiasm indicates a growing interest in solutions that refine dataset generation processes.

Mozilla AI Discord

- Takiyoshi Hoshida showcases SoraSNS: Indie developer Takiyoshi Hoshida will present a live demo of his project SoraSNS, a social media app offering a private timeline from users you don't typically follow.

- The demo emphasizes the app's unique concept of day and night skies, symbolizing openness and distant observation to enhance user experience.

- Hoshida's impressive tech credentials: Takiyoshi Hoshida studied Computer Science at Carnegie-Melon University, equipping him with a strong tech foundation.

- He has significant experience, having previously worked with Apple's AR Kit team and contributed to over 50 iOS projects.

Gorilla LLM (Berkeley Function Calling) Discord

- Hammer Handle Just Got Better: The hammer handle has undergone an update, introducing enhancements to its design and functionality. Expect numerous exciting improvements with this iteration.

- This update signals the team's commitment to continuously improving the tool's usability.

- Meet the Hammer2.0 Series Models: The team has launched the Hammer2.0 series models, which include Hammer2.0-7b, Hammer2.0-3b, Hammer2.0-1.5b, and Hammer2.0-0.5b.

- These models signify an important advancement in product diversification for development applications.

- New Pull Request PR#667 Submitted: A Pull Request (PR#667) has been submitted as part of the programmatic updates to the hammer product line. This submission is crucial to the ongoing development process.

- The PR aims to incorporate recent enhancements and feedback from the community.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!