[AINews] Learnings from o1 AMA

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Appreciation for RL-based CoT is all you need.

AI News for 9/12/2024-9/13/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (216 channels, and 5103 messages) for you. Estimated reading time saved (at 200wpm): 502 minutes. You can now tag @smol_ai for AINews discussions!

On day 2 of the o1 release we learned:

- o1-preview scores 21% on ARC-AGI (SOTA is 46%): "In summary, o1 represents a paradigm shift from "memorize the answers" to "memorize the reasoning" but is not a departure from the broader paradigm of fitting a curve to a distribution in order to boost performance by making everything in-distribution."

- o1-preview scores ~80% on aider code editing (SOTA - Claude 3.5 Sonnet was 77%): "The o1-preview model had trouble conforming to aider’s diff edit format. The o1-mini model had trouble conforming to both the whole and diff edit formats. Aider is extremely permissive and tries hard to accept anything close to the correct formats. It is surprising that such strong models had trouble with the syntactic requirements of simple text output formats. It seems likely that aider could optimize its prompts and edit formats to better harness the o1 models."

- o1-preview scores ~52% on Cognition-Golden with advice: "Chain-of-thought and asking the model to “think out loud” are common prompts for previous models. On the contrary, we find that asking o1 to only give the final answer often performs better, since it will think before answering regardless. o1 requires denser context and is more sensitive to clutter and unnecessary tokens. Traditional prompting approaches often involve redundancy in giving instructions, which we found negatively impacted performance with o1."

- Andrew Mayne's o1 prompting advice: "Don’t think of it like a traditional chat model. Frame o1 in your mind as a really smart friend you’re going to send a DM to solve a problem. She’ll answer back with a very well thought out explanation that walks you through the steps."

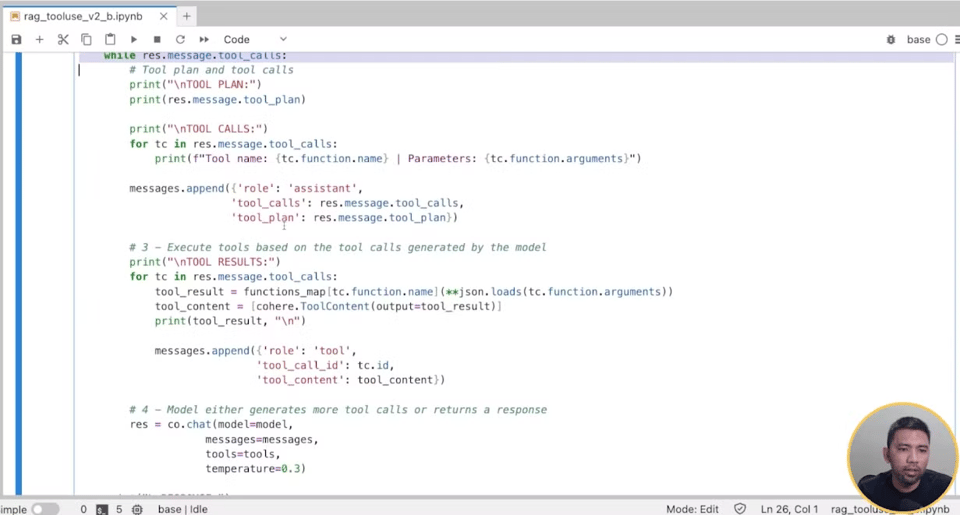

- The OpenAI Research Team AMA - this last one was best summarized by Tibor Blahe:

It's a quiet Friday otherwise, so you can check out the latest Latent Space pod with OpenAI, or sign up for next week's SF hackathon brought to you by this month's sponsors, our dear friends at WandB!

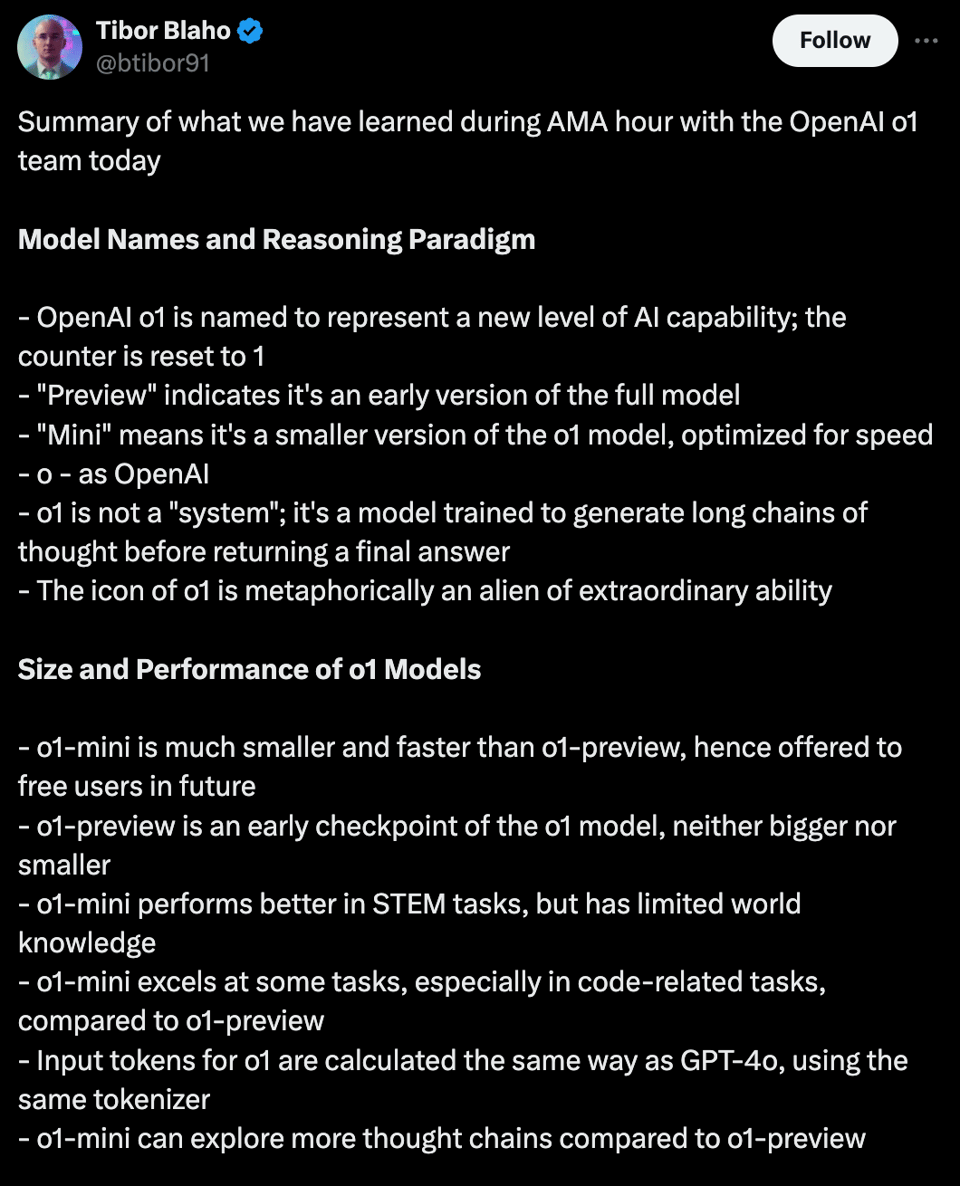

Advanced RAG Course sponsored by Weights & Biases: Go beyond basic RAG implementations and explore advanced strategies like hybrid search and advanced prompting to optimize performance, evaluation, and deployment. Learn from industry experts at Weights & Biases, Cohere, and Weaviate how to overcome common RAG challenges and build robust AI solutions, with free Cohere credits!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

OpenAI Releases o1 Model Series

- Model Capabilities: @sama announced o1, a series of OpenAI's "most capable and aligned models yet." The models are trained with reinforcement learning to think hard about problems before answering, enabling improved reasoning capabilities.

- Performance Improvements: @sama highlighted significant improvements on various benchmarks. @rohanpaul_ai noted that o1 outperformed GPT-4o on 54/57 MMLU subcategories and achieved 78.2% on MMMU, making it competitive with human experts.

- Reasoning Approach: @gdb explained that o1 uses a unique chain-of-thought process, allowing it to break down problems, correct errors, and adapt its approach. This enables "System II thinking" compared to previous models' "System I thinking."

- Model Variants: @sama announced that o1-preview and o1-mini are available immediately in ChatGPT for Plus and Team users, and in the API for tier 5 users. @BorisMPower clarified that tier-5 API access requires $1,000 paid and 30+ days since first successful payment.

- Technical Details: @virattt noted that o1 introduces a new class of "reasoning tokens" which are billed as output tokens and count toward the 128K context window. OpenAI recommends reserving 25K tokens for reasoning, effectively reducing the usable context to ~100K tokens.

- Safety Improvements: @lilianweng mentioned that o1 shows significant improvements in safety and robustness metrics, with reasoning about safety rules being an efficient way to teach models human values and principles.

- Inference Time Scaling: @DrJimFan highlighted that o1 represents a shift towards inference-time scaling, where compute is used during serving rather than just pre-training. This allows for more refined outputs through techniques like Monte Carlo tree search.

- Potential Applications: @swyx shared examples of o1 being used for tasks in economics, genetics, physics, and coding, demonstrating its versatility across domains.

- Developer Access: @LangChainAI announced immediate support for o1 in LangChain Python & JS/TS, allowing developers to integrate the new model into their applications.

Reactions and Analysis

- Paradigm Shift: Many users, including @willdepue, emphasized that o1 represents a new paradigm in AI development, with potential for rapid improvement in the near future.

- Comparison to Other Models: While many were impressed, some users like @aaron_defazio criticized the lack of comparison to previous state-of-the-art models from other labs in OpenAI's release posts.

- Hidden Reasoning: @vagabondjack noted that OpenAI is not revealing the full chain of thought text to users, citing reasons related to "competitive advantage."

- Cost Considerations: @labenz pointed out that o1 output token pricing matches original GPT-3 pricing at $0.06 / 1K tokens, with input tokens 75% cheaper. However, the hidden reasoning tokens may make overall costs comparable to previous models for many use cases.

Memes and Humor

- @karpathy joked about o1-mini refusing to solve the Riemann Hypothesis, humorously referencing potential limitations of the model.

- Several users made jokes about the model's name, with @huybery quipping "If OpenAI o1 Comes, Can Qwen q1 Be Far Behind?"

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. OpenAI o1: A Leap in AI Reasoning Capabilities

- Evals - OpenAI o1 (Score: 110, Comments: 21): OpenAI's o1 models demonstrate significant advancements in STEM and coding tasks, as revealed in their latest evaluation results. The models show 20-30% improvements over previous versions in areas such as mathematics, physics, and computer science, with particularly strong performance in algorithmic problem-solving and code generation. These improvements suggest a notable leap in AI capabilities for technical and scientific applications.

- Users questioned why language models perform poorly on AP English exams compared to complex STEM tasks, noting that solving IMO problems seems more challenging than language-based tests.

- The comment "🍓" was included in the discussion, but its relevance or meaning is unclear without additional context.

- Excitement was expressed over the models' ability to outperform human experts on PhD-level problems, highlighting the significance of this achievement.

- Preliminary LiveBench results for reasoning: o1-mini decisively beats Claude Sonnet 3.5 (Score: 268, Comments: 129): o1-mini, a new AI model, has outperformed Claude 3.5 Sonnet on reasoning benchmarks according to preliminary LiveBench results. The findings were shared by Bindu Reddy on Twitter, indicating a significant advancement in AI reasoning capabilities.

- o1-mini outperforms o1-preview in STEM and code fields, with users noting its superior reasoning capabilities on platforms like lmarena. The model's performance improves with more reinforcement learning and thinking time.

- Users debate the fairness of comparing o1-mini to other models, as it uses built-in Chain of Thought (CoT) reasoning. Some argue this is a legitimate feature, while others view it as "cheesing" benchmarks.

- OpenRouter allows limited access to o1-mini at $3.00/1M input tokens and $12.00/1M output tokens, with a 12 message per day limit. Users express excitement about trying the model despite its high token consumption.

- "We're releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond" - OpenAI (Score: 641, Comments: 248): OpenAI has announced the preview release of o1, a new series of AI models designed to spend more time thinking before responding. These models are engineered to exhibit advanced reasoning abilities, potentially enhancing the quality and depth of AI-generated outputs. The announcement suggests that OpenAI is focusing on improving the deliberative processes of AI systems, which could lead to more thoughtful and accurate responses in various applications.

- OpenAI's new o1 model shows significant improvements in reasoning abilities, scoring 83% on IMO qualifying exams compared to GPT-4's 13%, and reaching the 89th percentile in Codeforces coding competitions. However, some users are skeptical about real-world performance.

- The decision to hide the chain-of-thought process has sparked criticism, with users labeling it as "ClosedAI" and expressing concerns about reduced transparency. Some speculate that clever prompting may still reveal the model's thinking process.

- Comparisons to the recent "Reflection" controversy were made, with discussions on whether this is a more sophisticated implementation of similar concepts. The model also boasts a 4x increase in resistance to jailbreaking attempts, which some view negatively as increased censorship.

Theme 2. Advancements in Open Source and Local LLMs

- DataGemma Release - a Google Collection (27B Models) (Score: 122, Comments: 58): Google has released DataGemma, a collection of 27B parameter language models designed for data analysis tasks. The models, which include variants like DataGemma-2b, DataGemma-7b, and DataGemma-27b, are trained on a diverse dataset of 3 trillion tokens and can perform tasks such as data manipulation, analysis, and visualization using natural language instructions. These models are available for research use under the Apache 2.0 license.

- RIG (Retrieval-Interleaved Generation) is a new term introduced by Google for DataGemma, enhancing Gemma 2 by querying trusted sources and fact-checking against Data Commons. This feature allows DataGemma to retrieve accurate statistical data when generating responses.

- Users demonstrated the functionality of RIG, showing how it can query Data Commons to fill in key statistics, such as demographic information for Sunnyvale, CA. This approach potentially reduces hallucinations in AI-generated responses.

- Some users expressed excitement about trying DataGemma but noted a desire for models with larger context windows. The official Google blog post about DataGemma was shared for additional information.

- Face-off of 6 maintream LLM inference engines (Score: 42, Comments: 38): The post compares 6 mainstream LLM inference engines for local deployment, focusing on inference quality rather than just speed. The author conducted a test using 256 selected MMLU Pro questions from the 'other' category, running Llama 3.1 8B model with various quantization levels across different engines. Results showed that lower quantization levels don't always result in lower quality, with vLLM's AWQ quantization performing best in this specific test, though the author cautions against generalizing these results to all use cases.

- vLLM's AWQ engine was suggested for testing, with the author confirming it's "quite good" and running additional tests. The AWQ engine represents vLLM's "4 bit" version and recently incorporated Marlin kernels.

- Discussion arose about testing with the Triton TensorRT-LLM backend. The author noted it's "famously hard to setup" and requires signing an NVIDIA AI Enterprise License agreement to access the docker image.

- The complexity of TensorRT-LLM setup was highlighted, with the author sharing a screenshot of the quickstart guide. This led to surprise from a commenter who thought Triton was free and open-source.

- Excited about WebGPU + transformers.js (v3): utilize your full (GPU) hardware in the browser (Score: 49, Comments: 7): WebGPU and transformers.js v3 now enable full GPU utilization in web browsers, allowing for significant performance improvements in AI tasks without the need for Python servers or complex setups. The author reports 40-75x speed-ups for embedding models on an M3 Max compared to WASM, and 4-20x speed-ups on consumer-grade laptops with integrated graphics or older GPUs. This technology enables private, on-device inference for various AI applications like Stable Diffusion, Whisper, and GenAI, which can be hosted for free on platforms like GitHub Pages, as demonstrated in projects such as SemanticFinder.

- privacyparachute showcased a project featuring meeting transcription and automatic subtitle creation for audio/video, with privacy controls for recording participants. The project utilizes work by u/xenovatech.

- Discussion on the capability of browser-runnable models, with SeymourBits initially suggesting they were basic (circa 2019). privacyparachute countered, stating that latest models can be run using the right web-AI framework, recommending WebLLM as an example.

- The comments highlight ongoing development in browser-based AI applications, demonstrating practical implementations of the technology discussed in the original post.

Theme 3. Debates on AI Transparency and Open vs Closed Development

- "o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it." (Score: 108, Comments: 49): Sam Altman, CEO of OpenAI, addressed criticisms of GPT-4 Turbo with vision (referred to as "o1") in a Twitter thread, acknowledging its flaws and limitations. He emphasized that while the model may seem impressive initially, extended use reveals its shortcomings, and he stressed the importance of responsible communication about AI capabilities and limitations.

- OpenAI hides the CoT used by o1 to gain competitive advantage. (Score: 40, Comments: 17): OpenAI is reportedly concealing the chain-of-thought (CoT) used by their o1 model to maintain a competitive edge. The post suggests that state-of-the-art (SoTA) models can be developed using open-source software (OSS) models by optimizing CoT prompts for specific metrics, with DSPy mentioned as a tool enabling this approach.

- Anthropic may already have the capability to replicate or surpass OpenAI's o1 model, given the talent migration between companies. Their Sonnet 3.5 model has reportedly been ahead for 3 months, though usage may be limited due to compute constraints.

- OpenAI's admission that censorship significantly reduces model intelligence has sparked interest, particularly in relation to generating chain-of-thought (CoT) outputs.

- The focus on hidden CoT may be a strategic narrative by OpenAI. Some argue that lower-level processes, like those explored in Anthropic's sparse autoencoder work, might better explain token selection and memory formation in AI models.

- If OpenAI can make GPT4o-mini be drastically better than Claude 3.5 at reasoning, that has to bode well for local LLMs doing the same soon? (Score: 111, Comments: 39): The post discusses the potential for open-source alternatives to match or surpass closed AI systems in reasoning capabilities. It suggests that if GPT4o-mini can significantly outperform Claude 3.5 in reasoning tasks, similar improvements might soon be achievable in local LLMs using Chain of Thought (CoT) implementations. The author references studies indicating that GPT3.5 can exceed GPT4's reasoning abilities when given the opportunity to "think" through CoT, implying that open-source models could implement comparable techniques.

- OpenAI o1 training theories include using GPT-4 to generate solutions, applying the STaR paper approach, and using RL directly. The process likely involves a combination of methods, potentially costing hundreds of millions for expert annotations.

- The "ultra secret sauce" may lie in the dataset quality. OpenAI's system card and the "Let's verify step by step" paper provide insights into their approach, which includes reinforcement learning for instruction tuning.

- An experiment using Nisten's prompt with the c4ai-command-r-08-2024-Q4_K_M.gguf model demonstrated improved problem-solving abilities, suggesting that open-source alternatives can potentially match closed AI systems in reasoning tasks.

Theme 4. New Data Generation Techniques for LLM Training

- Hugging Face adds option to query all 200,000+ datasets in SQL directly from your browser! (Score: 215, Comments: 15): Hugging Face has introduced a new feature allowing users to query over 200,000 datasets using SQL directly from their browser. This enhancement enables data exploration and analysis without the need for downloading datasets, providing a more efficient way to interact with the vast collection of datasets available on the platform.

- The feature is powered by DuckDB WASM, allowing SQL queries to run directly in the browser. Users can share their SQL queries and views, and provide feedback or feature requests.

- Users expressed appreciation for Hugging Face's ability to provide extensive bandwidth, storage, and CPU resources. The feature was well-received for its utility in filtering datasets and downloading results.

- Several users found the tool helpful for specific tasks, such as counting dataset elements and performing analyses they previously set up locally using DuckDB.

- I Made A Data Generation Pipeline Specifically for RP: Put in Stories, Get out RP Data with its Themes and Features as Inspiration (Score: 46, Comments: 15): The author introduces RPToolkit, an open-source pipeline for generating roleplaying datasets based on input stories, optimized for use with local models. The pipeline creates varied, rich, multi-turn roleplaying data reflecting the themes, genre, and emotional content of input stories, with the author demonstrating its capabilities by creating a dataset of around 1000 RP sessions using Llama 3 70b and Mistral Large 2 models. The tool aims to solve the problem of data generation for RP model creators, allowing users to create datasets tailored to specific genres or themes without directly quoting input data, potentially avoiding copyright issues.

- Users inquired about recommended LLMs for dataset generation, with the author suggesting turboderp/Mistral-Large-Instruct-2407-123B-exl2 and Llama 3 70b. The Magnum 123B model was also recommended for its ability to handle complex characters and scenarios.

- The author provided a detailed comparison between RPToolkit and the original Augmentoolkit, highlighting improvements such as dedicated RP pipelines, overhauled configs, classifier creator pipeline, and async for faster speed.

- Discussion touched on potential applications, including using RPToolkit for creating storytelling datasets for writing. The author suggested using it as-is or modifying prompts to focus on story writing instead of conversation.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Releases and Improvements

- OpenAI announces o1: OpenAI released a new series of reasoning models called o1, designed to spend more time thinking before responding. The o1-preview model is now available in ChatGPT and the API. It shows improved performance on complex tasks in science, coding, and math.

- o1-mini performance: The o1-mini model scored highly on reasoning benchmarks, surpassing previous models. This suggests significant improvements even in the smaller versions of the new o1 series.

- Flux model advancements: The Flux AI model, developed by Black Forest Labs (original SD team), is generating high-quality images and gaining popularity among AI enthusiasts. It's seen as a significant improvement over Stable Diffusion models.

AI Research and Techniques

- New scaling paradigm: An OpenAI researcher stated that o1 represents a new scaling paradigm, suggesting they are no longer bottlenecked by pretraining. This could indicate a shift in how AI models are developed and scaled.

- Reasoning capabilities: The o1 models are said to have enhanced reasoning capabilities, potentially representing a significant step forward in AI technology. However, some users express skepticism about the extent of these improvements.

AI Model Comparisons and Community Reactions

- Flux vs Stable Diffusion: There's ongoing discussion about Flux outperforming Stable Diffusion models, with many users reporting better results from Flux, especially when combined with LoRA techniques.

- MiniMax video generation: A post claims that MiniMax has surpassed Sora in AI video generation, showing impressive skateboarding clips that look believable to casual observers.

- Community anticipation and skepticism: While there's excitement about new AI developments, there's also skepticism about overhyped announcements and limited releases to select users.

AI Discord Recap

A summary of Summaries of Summaries

O1-mini

Theme 1. OpenAI o1 Model: Performance and Limitations

- OpenAI o1 Shines in Reasoning But Stumbles in Coding: The newly released OpenAI o1 model excels in reasoning and mathematics, outperforming Claude 3.5 Sonnet, but shows disappointing results in coding tasks compared to both GPT-4 and Claude 3.5 Sonnet. Users have observed it generating decent essays and educational content but struggling with practical coding applications.

- Rate Limits Clamp Down on o1 Usage: OpenRouter limited the o1 model to 30 requests per day, leading to user frustration as many hit rate limits after about 12 messages. This restriction has sparked debates on how it affects complex task execution and potential for future limit increases.

- First Commercial Spacewalk Completed: The completion of the first commercial spacewalk has been a significant milestone, detailed in an article discussing key mission events and outcomes.

Theme 2. AI Training Enhancements and Optimization

- Prompt Caching Slashes Costs by 90%: Prompt caching introduced by OpenRouter allows users to achieve latency speedups and potential 90% discounts on prompt tokens for providers like Anthropic and DeepSeek, with expansions anticipated. This feature is reshaping cost structures for frequent AI users.

- Quantization Techniques Boost Model Efficiency: Communities like Unsloth AI and CUDA MODE delve into separate quantization and dequantization processes, exploring methods like QLoRA and debating the merits of dynamic quantization to enhance model performance while managing VRAM limitations.

- Reinforcement Learning with KL Divergence: Discussed in Eleuther Discord, using KL divergence as an auxiliary loss in reinforcement learning helps prevent models from forgetting critical tasks, balancing moderation and creativity.

Theme 3. AI Tools, Integrations, and Platforms

- OAuth Integration Streamlines AI Development: OpenRouter's enhanced OAuth support for coding plugins like

vscode:andcursor:facilitates seamless integration of custom AI models into development environments, boosting workflow efficiency for developers. - Modular's Magic and Mojo Update the AI Toolkit: MAX 24.5 and Mojo 24.5 introduce significant performance improvements and Python 3.12 compatibility, utilizing the new Magic package manager for easier installations and environment management. These updates position Modular as a competitive AI solution for developers.

- WebGPU Puzzles Launches for Learning GPU Programming: The new WebGPU Puzzles app by Sarah Pan and Austin Huang teaches GPU programming through interactive browser-based challenges, making GPU access practical without dedicated hardware.

Theme 4. AI Regulations, Ethics, and Alignment

- California's SB 1047 AI Safety Bill Faces Veto Risks: The proposed SB 1047 bill aims to regulate AI safety in California but has a 66%-80% chance of being vetoed due to political influences. Discussions highlight the bill's dependence on the political climate and public perception of AI regulation.

- Concerns Over AI Censorship and Alignment: Across various Discords, members express apprehension that reinforcement learning from human feedback (RLHF) may 'dumb down' AI models, reducing their utility for technical tasks. There's a strong emphasis on balancing AI moderation with maintaining creativity and functionality.

- STaR Technique Enhances Model Reasoning: In LAION, integrating Chain-of-Thought (CoT) with Reinforcement Learning significantly improves model performance on complex reasoning tasks, highlighting the importance of quality data gathering.

Theme 5. Community Events, Collaborations, and Support

- Hackathons and Collaborations Fuel AI Innovation: Events like the LlamaIndex hackathon offer over $20,000 in prizes, fostering Retrieval-Augmented Generation (RAG) projects and encouraging community-led AI agent development. Collaborations with platforms like OpenSea for free mint opportunities also engage the community.

- Private Gatherings and Job Opportunities Strengthen AI Networks: Fleak AI's private happy hour in San Francisco and Vantager's AI Engineer position openings provide networking and career opportunities, enhancing community ties and professional growth within the AI space.

- OpenInterpreter Mobile App Feedback: Users report on challenges with voice response functionality in the OpenInterpreter mobile app, urging for improved user interactions and developer responsiveness, and encouraging community contributions to enhance documentation and troubleshooting.

O1-preview

Theme 1. OpenAI's o1 Model Sparks Excitement and Debate

- o1 Model Wows in Math, Stumbles in Code: OpenAI's new o1 model has the AI community buzzing, impressing users with its reasoning and math prowess but leaving them puzzled over its underwhelming coding performance compared to GPT-4 and Claude 3.5 Sonnet.

- o1 shines in complex reasoning tasks but struggles to deliver useful outputs in coding, prompting mixed reactions.

- Rate Limits Rain on o1's Parade: Early adopters of o1 are hitting strict rate limits—some after just 12 messages—sparking frustration and discussions about the model's practicality for serious use.

- Users are questioning token consumption discrepancies and the impact on their ability to conduct complex tasks effectively.

- Benchmark Battles: Is o1 Playing Fair?: Debates ignite over the fairness of AI model benchmarks, with o1's unique answer selection mechanism complicating direct comparisons to models like GPT-4o.

- Calls for benchmarks that consider compute budgets and selection methods highlight the complexities of evaluating AI progress.

Theme 2. Developers Supercharge Tools with AI Integration

- Coding Gets an IQ Boost with OAuth and AI: OpenRouter introduces OAuth support for plugins like

vscode:andcursor:, letting developers seamlessly integrate custom AI models into their code editors.- This update brings AI-powered solutions directly into IDEs, turbocharging workflow efficiency.

- TypeScript Taps into AI with LlamaIndex.TS Launch: LlamaIndex.TS brings advanced AI functionalities to TypeScript, simplifying development with tools tailored for TS enthusiasts.

- The package offers crucial features to streamline AI integration into TypeScript projects.

- Vim Lovers Unite Over AI-Powered Editing: Developers share resources on mastering Vim and Neovim, including a YouTube playlist on configuration, to boost coding speed with AI assistance.

- Communities collaborate to integrate AI into editors, enhancing efficiency and sharing best practices.

Theme 3. Fine-Tuners Face Off Against Training Challenges

- Memory Leaks Crash the GPU Party: Developers grapple with memory leaks in PyTorch when using variable GPU batch sizes, highlighting the woes of fluctuating tensor sizes and the need for better handling of variable sequence lengths.

- Concerns over padding inefficiencies spark calls for robust solutions to memory pitfalls.

- VRAM Limitations Test Fine-Tuners' Patience: Community members struggle to fine-tune models like Llama3 under tight VRAM constraints, experimenting with learning rate schedulers and strategies like gradient accumulation steps.

- "Trial and error remains our mantra," one user mused, reflecting the collective quest for efficient configurations.

- Phi-3.5 Training Goes Nowhere Fast: Attempts to train phi-3.5 leave users exasperated as LoRA adapters fail to learn anything substantial, prompting bug reports and deep dives into possible glitches.

- Frustrations mount as fine-tuners hit walls with the elusive model.

Theme 4. New Tools and Models Stir Up the AI Scene

- MAX 24.5 Rockets Ahead with 45% Speed Boost: MAX 24.5 debuts with a hefty 45% performance improvement in int4k Llama token generation, delighting developers hungry for speed.

- The new driver interface and token efficiency position MAX as a heavyweight contender in AI tools.

- Open Interpreter's Token Diet Leaves Users Hungry: Open Interpreter gobbles up 10,000 tokens for just six requests, leading users to question its voracious appetite and seek smarter ways to optimize token use.

- Discussions focus on slimming down token consumption without sacrificing functionality.

- Warhammer Fans Forge Ahead with Adaptive RAG: The Warhammer Adaptive RAG project rallies fans and developers alike, showcasing innovative uses of local models and features like hallucination detection and answer grading.

- Community feedback fuels the project's evolution, embodying the spirit of collaborative AI development.

Theme 5. AI Policy and Accessibility Conversations Heat Up

- California's AI Bill Faces Political Showdown: The proposed California SB 1047 AI safety bill spurs debate, with an estimated 66%-80% chance of a veto amid political maneuvering.

- The bill's uncertain fate underscores tensions between innovation and regulation in the AI sphere.

- Has OpenAI Put a PhD in Everyone's Pocket?: Users marvel at OpenAI's strides, suggesting AI advancements are "like having a PhD in everyone's pocket," while pondering if society truly grasps the magnitude of this shift.

- The discourse highlights AI's transformative impact on knowledge accessibility.

- Call for Fair Play in AI Benchmarks Rings Louder: Debates over AI model evaluations intensify, with advocates pushing for benchmarks that factor in compute budgets and selection methods to level the playing field.

- The community seeks more nuanced metrics to accurately reflect AI capabilities and progress.

PART 1: High level Discord summaries

OpenRouter (Alex Atallah) Discord

- OpenAI o1 Model Live for Everyone: The new OpenAI o1 model family is now live, allowing clients to stream all tokens at once, but initially under rate limits of 30 requests per day, resulting in users hitting rate limit errors after 12 messages.

- This limited release has sparked discussions on how these constraints affect usage patterns across different applications in coding and reasoning tasks.

- Prompt Caching Delivers Savings: Prompt caching now enables users to achieve latency speedups and potential 90% discounts on prompt tokens while sharing cached items, active for Anthropic and DeepSeek.

- This feature's expansion is anticipated for more providers, potentially reshaping cost structures for frequent users.

- OAuth Support Enhanced for Tool Integration: OpenRouter introduces OAuth support for coding plugins like

vscode:andcursor:, facilitating seamless integration of custom AI models.- This update allows developers to bring their AI-powered solutions directly into their IDEs, enhancing workflow efficiency.

- Rate Limits Disappoint Users: Users express frustration with OpenRouter's recent update limiting the o1 model to 30 requests per day, which they feel stifles their ability to conduct complex tasks effectively.

- Many are eager to see how usage patterns evolve and whether there's potential for increasing these limits.

- Technical Issues with Empty Responses: Technical concerns arose when users reported receiving 60 empty lines in completion JSON, suggesting instability issues that need addressing.

- One community member advised a waiting period for system adjustments before reconsidering the reliability of responses.

OpenAI Discord

- OpenAI o1 shows mixed results against GPT-4: Users pointed out that OpenAI o1 excels in reasoning and mathematics but shows disappointing results in coding compared to both GPT-4 and Claude 3.5 Sonnet.

- While it generates decent essays and educational content, there are considerable limitations in its coding capabilities.

- AI's evolving role in Art and Creativity: Discussion emerged on AI-generated art pushing human artistic limits while also creating a saturation of low-effort content.

- Participants envision a future where AI complements rather than replaces human creativity, albeit with concerns over content quality.

- Clarifying RAG vs Fine-Tuning for Chatbots: A member queried the benefits of Retrieval-Augmented Generation (RAG) versus fine-tuning for educational chatbots, receiving consensus that RAG is superior for context-driven questioning.

- Experts emphasized that fine-tuning adjusts behaviors, not knowledge, making it less suitable for real-time question answering.

- ChatGPT faces song translation frustrations: Users reported that ChatGPT struggles to translate generated songs, often returning only snippets rather than full lyrics due to its creative content guidelines.

- This limitation hampers the project continuity that many users seek, adding complexity to extending past conversations.

- Changes in User Interface spark complaints: Members expressed their dissatisfaction with recent user interface changes, particularly how copy and paste functionality broke line separations.

- This has led to usability issues and frustrations as members navigate the evolving interface.

Unsloth AI (Daniel Han) Discord

- Unsloth Pro Release Speculation: The community eagerly anticipates the release of Unsloth Pro, rumored to target larger enterprises with a launch 'when done'.

- Members lightheartedly compared the development pace to building Rome, suggesting substantial progress is being made.

- Gemma2 Testing on RTX 4090: Initial testing of Gemma2 27b on an RTX 4090 with 8k context shows promise, although potential VRAM limitations continue to raise eyebrows.

- The necessity for gradient accumulation steps highlights ongoing challenges with larger models.

- Mistral NeMo Performance Review: Early feedback indicates that Mistral NeMo delivers performance on par with 12b models, sparking some disappointment among users.

- Participants ponder whether more refined examples could boost performance.

- AI Moderation and Creativity Concerns: Users express apprehension that reinforcement learning from human feedback (RLHF) might 'dumb down' AI models, highlighting a balance between moderation and creativity.

- Implementing middleware filtering is proposed to retain originality while ensuring safety.

- Fine-tuning Models with Limited VRAM: Community discussions revolve around challenges of fine-tuning with Qlora under VRAM constraints, focusing on optimal learning rate (LR) scheduler choices.

- Trial and error remains a common theme as members seek alternatives to default cosine scheduling.

HuggingFace Discord

- Revolutionize CLI Tools with Ophrase and Oproof: A community member shared insights on revolutionizing CLI tools using Ophrase and Oproof. Their approach aims to enhance the developer experience significantly.

- Their innovative techniques inspire developers to rethink command line functionalities.

- Challenges with Hugging Face Model Integrity: Users reported issues with the integrity of a trending model on Hugging Face, suggesting it contains misleading information and breaks content policy rules.

- Discussions highlighted the potential for user disappointment after downloading the model, as it performed significantly below advertised benchmarks.

- Exploring Reflection 70B with Llama cpp: A project featuring Reflection 70B built using Llama cpp was highlighted, showcasing advanced capabilities in the field.

- Members noted the ease of access to state-of-the-art models as a key benefit.

- New Persian Dataset Enhances Multilingual Data: The community introduced a Persian dataset comprising 6K sentences translated from Wikipedia, crucial for enhancing multilingual AI capabilities.

- Participants praised its potential for improving Farsi language models and training data diversity.

- Arena Learning Boosts Performance: Arena Learning discussed as a method for improving model performance during post-training phases, showing notable results.

- Community members are eager to implement these insights into their own models for better outcomes.

Nous Research AI Discord

- O1-mini Outshines O1-preview: Users report O1-mini showing better performance compared to O1-preview, likely due to its capability to execute more Chain of Thought (CoT) turns in a given time frame.

- One user awaits a full release for clarity on current capabilities, exhibiting hesitation around immediate purchases.

- Hermes 3 Breakthroughs: Hermes 3 boasts significant enhancements over Hermes 2, with noted improvements in roleplaying, long context coherence, and reasoning abilities.

- Many are looking at its potential for applications requiring extended context lengths, sparking interest in its API capabilities.

- Model Alignment Woes: Concerns about autonomous model alignment were highlighted, noting risks of losing control should the model achieve higher intelligence without alignment.

- Discussions emphasized understanding developer intentions to preemptively tackle alignment challenges.

- GameGen-O Showcases Functionality: GameGen-O presents its features through a demo inspired by Journey to the West, drawing attention for its innovative capabilities.

- Contributors include affiliations from The Hong Kong University of Science and Technology and Tencent's LightSpeed Studios, indicating research collaboration.

- ReST-MCTS Self-Training Advances: The ReST-MCTS methodology offers enhanced self-training by coupling process reward guidance with tree search, boosting LLM training data quality.

- This technique notably surpasses previous algorithms, continually refining language models with quality output through iterative training.

Perplexity AI Discord

- OpenAI O1 Models Pending Integration: Users are keenly awaiting the integration of OpenAI O1 models into Perplexity, with some mentioning competitors that have already incorporated them.

- While many hope for a swift update, others contend that models like Claude Sonnet are already performing well.

- API Credits Confusion: Users are unclear about the $5 API credits replenishment timing, debating whether it resets on the 1st of each month or the first day of each billing cycle.

- Further clarification on these timings is highly sought after, especially among users managing their subscription statuses.

- Commercial Spacewalk Marks a Milestone: The first commercial spacewalk has officially been completed, bringing forth a detailed article discussing key mission events and outcomes.

- Read the full updates here.

- Internal Server Errors Hampering API Access: An internal server error (status code 500) has been reported, indicating serious issues users are facing while trying to access the API.

- This error poses challenges for effective utilization of Perplexity's services during critical operations.

- Highlighting OpenPerplex API Advantages: Users have expressed preference for the OpenPerplex API, citing benefits such as citations, multi-language support, and elevated rate limits.

- This reflects a favorable user experience that outstrips other APIs available, underscoring its utility.

Latent Space Discord

- OpenAI o1 gets mixed feedback: Users report that OpenAI's o1 models show mixed results, excelling at reasoning-heavy tasks but often failing to deliver useful outputs overall, leading to transparency concerns.

- “They say 'no' to code completion for cursor?” raises doubts about the research methods employed for evaluation.

- Fei-Fei Li launches World Labs: Fei-Fei Li unveiled World Labs with a focus on spatial intelligence, backed by $230 million in funding, aiming to develop Large World Models capable of 3D perception and interaction.

- This initiative is attracting top talent from the AI community, with aspirations to solve complex world problems.

- Cursor experiences scaling issues: Cursor is reportedly facing scaling issues, particularly in code completion and document generation functionalities, hindering user experience.

- The discussion highlighted users' frustrations, suggesting that the tool's performance does not meet expectations.

- Insights from HTEC AI Copilot Report: The HTEC team evaluated 26 AI tools, finding inconclusive results due to limited testing, casting doubt on the depth of their analyses regarding AI copilots.

- Though participants “dabbled” with each tool, the report seems more geared towards lead generation rather than thorough usability insights.

- Exploring Vim and Neovim resources: Members acknowledged Vim's steep learning curve but noted significant gains in coding speed once mastered, with many completing the Vim Adventures game for skill enhancement.

- Additionally, community members shared various Neovim resources, including a YouTube playlist on configuration to foster learning and collaboration.

CUDA MODE Discord

- Innovating with Quantization Techniques: A member is enhancing model accuracy through separate quantization and dequantization processes for input and weight during testing, while debating the merits of dynamic quantization for activation.

- They faced debugging issues with quantization logic, calling for a minimal running example to aid understanding and practical implementation.

- Repository for Llama 3 Integration: A feature branch has been initiated for adding Llama 3 support to llm.c, beginning from a copy of existing model files and maintaining planned PRs for RoPE and SwiGLU.

- This effort aims to incorporate significant advancements and optimizations before merging back into master.

- Fine-Tuning BERT with Liger Kernel Assistance: A request for help with BERT fine-tuning using the Liger kernel has surfaced, as members seek reference code while awaiting enhancements integrating liger ops into Thunder.

- Without liger ops, model adjustments may be necessary, prompting discussion around ongoing modifications to meet model requirements.

- Improving Performance Simply with Custom Kernels: Implementing the Cooley-Tukey algorithm for FFT has been a topic of discussion, optimized for enhanced performance in various applications.

- KV-cache offloading for the GH200 architecture also drew attention for its importance in maximizing efficiency during LLM inference tasks.

- WebGPU Puzzles Launches for Learning: The newly launched app, WebGPU Puzzles, aims to teach users about GPU programming via coding challenges directly in their browser.

- Developed by Sarah Pan and Austin Huang, it leverages WebGPU to make GPU access practical without requiring dedicated hardware.

Interconnects (Nathan Lambert) Discord

- OpenAI o1 model surprises with performance: The newly released OpenAI o1 model is achieving impressive scores on benchmarks like AIME, yet showing surprisingly low performance on the ARC Prize.

- While o1 excels at contest math problems, its ability to generalize to other problem types remains limited, which raises questions on its deployment.

- California SB 1047 and AI regulation: The proposed SB 1047 bill regarding AI safety has a projected 66%-80% chance of being vetoed due to political influences.

- Discussions suggest the bill's fate may depend greatly on the surrounding political climate and public perceptions of AI regulation.

- Debate on AI model benchmarking fairness: Discussions have sparked around the fairness of AI model benchmarks, particularly focusing on the complexity of pass@k metrics as they relate to models like o1 and GPT-4o.

- Participants argue that benchmarks should consider compute budgets, complicating direct comparisons, especially with o1's unique answer selection mechanism.

- Understanding the API Tier System: Members highlighted that to achieve Tier 5 in the API tier system, users need to spend $1000. One user shared they were at Tier 3, while another team surpassed Tier 5.

- This leads to discussions on the implications of spending tiers on access to features and capabilities.

- Insights into Chain-of-Thought reasoning: Errors in reasoning within the o1 model have been noted to lead to flawed Chain-of-Thought outputs, causing mistakes to spiral into incorrect conclusions.

- Members discussed how this phenomenon reveals significant challenges for maintaining reasoning coherence in AI, impacting reliability.

Stability.ai (Stable Diffusion) Discord

- A1111 vs Forge: Trade-Offs in Performance: Users compared the overlay of generation times on XYZ plots for A1111 and Forge, revealing that Schnell often generates images faster, but at the cost of quality contrast to Dev.

- This raised questions about the balance between speed and quality in model performance metrics.

- Pony Model: Confusion Reigns: The discussions about Pony model prompts highlighted inconsistencies in training data, leaving users puzzled over its effectiveness with score tags.

- Skepticism arose regarding whether these prompts would yield the desired results in practice.

- Watch for Scams: Stay Alert!: Concern arose over fraudulent investment proposals, emphasizing the need for users to remain vigilant against deceptive cryptocurrency schemes.

- The conversation underscored the critical importance of recognizing red flags in such discussions.

- Dynamic Samplers: A Step Forward: The integration of Dynamic compensation samplers into AI model training sparked interest among users for enhancing image generation techniques.

- There's a strong sense of community enthusiasm around the new tools and their potential impact on performance.

- Tokens that Matter: Create Quality Images: A range of effective prompt tokens like 'cinematic' and 'scenic colorful background' were shared, showing their utility in improving image generation quality.

- Discussions highlighted the varied opinions on optimal token usage and the need for research-backed insights.

LM Studio Discord

- o1-preview rollout speeds ahead: Members reported receiving access to the

o1-previewin batches, showing promising performance on tasks like Windows internals.- While excitement is high, some users express frustration over the pace of the rollout.

- Debating GPU configurations for max performance: Discussions centered on whether 6x RTX 4090 with a single socket or 4x RTX 4090 in a dual socket setup would yield superior performance, particularly for larger models.

- The consensus was that fitting the model within VRAM is essential, often outperforming configurations that rely more on system RAM.

- Text-to-Speech API launch: A member launched a Text-to-Speech API compatible with OpenAI's endpoints, highlighting its efficiency without needing GPUs.

- Integration details can be found on the GitHub repository, encouraging user participation.

- Market trends inflate GPU prices: A noticeable increase in GPU prices, particularly for the 3090 and P40 models, has been attributed to rising demand for AI tasks.

- Members shared experiences regarding the difficulty of finding affordable GPUs in local markets, reflecting broader supply and demand issues.

- Effect of VRAM on model performance: Participants agree that model size and available VRAM significantly impact performance, advising against using Q8 settings for deep models.

- There were calls for more straightforward inquiries to assist newcomers in optimizing their setups.

LlamaIndex Discord

- LlamaIndex.TS launches with new features!: LlamaIndex.TS is now available for TypeScript developers, enhancing functionalities through streamlined integration. Check it out on NPM.

- The package aims to simplify development tasks by offering crucial tools that cater specifically to TypeScript developers.

- Exciting Cash Prizes at LlamaIndex Hackathon: The second LlamaIndex hackathon is set for October 11-13, boasting over $20,000 in cash and credits for participants. Register here.

- The event revolves around the implementation of Retrieval-Augmented Generation (RAG) in the development of advanced AI agents.

- Limitations of LlamaIndex with function calls: Discussion revealed that LlamaIndex does not support function calls with the current API configuration, hindering tool usage. Members confirmed that both function calling and streaming remain unsupported currently.

- Users are encouraged to follow updates as new features may roll out in the future or explore alternative configurations.

- Advanced Excel Parsing in LlamaParse Demonstrated: A new video showcases the advanced Excel parsing features of LlamaParse, highlighting its support for multiple sheets and complex table structures. See it in action here.

- The recursive retrieval techniques employed by LlamaParse enhance the ability to summarize intricate data setups seamlessly.

- Exploring ChromaDB Integration: A user sought assistance with retrieving document context in LlamaIndex using ChromaDB, specifically regarding query responses. They were advised to check

response.source_nodesfor accurate document context retrieval.- Clarification on metadata reliance emerged from discussions, improving understanding of document handling in AI queries.

Eleuther Discord

- KL Divergence Enhances RL Stability: Members discussed the application of KL divergence as an auxiliary loss in reinforcement learning to prevent models from forgetting critical tasks, particularly in the MineRL regime.

- Concerns arose that an aligned reward function may undermine the benefits of KL divergence, exposing flaws in the current RL approaches.

- Mixed Precision Training Mechanics Unveiled: A query emerged about the rationale behind using both FP32 and FP16 for mixed precision training, citing numerical stability and memory bandwidth as prime considerations.

- It was noted that using FP32 for certain operations significantly reduces instability, which often bottlenecks overall throughput.

- Exploring Off-Policy Methods in RL: The nuances of exploration policies in reinforcement learning were examined, where members agreed off-policy methods like Q-learning provide better exploration flexibility than on-policy methods.

- Discussion highlighted the careful balance of applying auxiliary loss terms to facilitate exploration without creating a separate, potentially cumbersome exploration policy.

- OpenAI Reaches New Heights in Knowledge Access: A participant expressed concern over the lack of appreciation for OpenAI's contribution to democratizing knowledge, effectively placing a PhD in everyone’s pocket.

- This sparked a broader dialogue about societal perceptions of AI advancements and their integration into everyday applications.

- Tokenizers Need Retraining for New Languages: The need for retraining tokenizers when adding new languages in ML models was discussed, signifying the importance of comprehensive retraining for effectiveness.

- Members acknowledged that while limited pretraining may work for structurally similar languages, comprehensive retraining remains essential in natural language contexts.

Cohere Discord

- AdEMAMix Optimizer piques interest: Discussion around the AdEMAMix Optimizer highlighted its potential to enhance Parakeet's training efficiency, achieving targets in under 20 hours.

- Members speculated on its implications for model training strategies, emphasizing the need for various efficiency techniques.

- Cohere API Spending Limit setup: Users shared methods to set a daily or monthly spending limit on Cohere API usage through the Cohere dashboard to manage potential costs.

- Some encountered roadblocks in accessing the options, sparking a recommendation to contact Cohere support for resolution.

- Command R+ for Bar Exam Finetuning: A Masters graduate seeks input on using Command R+ to finetune llama2 for the American bar exam, requesting suggestions from fellow users.

- The group pushed for local experimentation and a thorough read of Cohere's documentation for optimal guidance.

- AI Fatigue signals emerge: Members noted a possible shift towards practicality over hype in AI advancements, indicating a growing trend for useful applications.

- Analyses drew parallels to rapidly evolving skill requirements in the field, likening the climate to a primordial soup of innovation.

- Implementing Rate Limiting on API requests: A suggestion arose to apply rate limits on API requests per IP address to mitigate misuse and control traffic effectively.

- This preventative measure is deemed crucial to safeguard against sudden spikes in usage that may arise from malicious activity.

Modular (Mojo 🔥) Discord

- MAX 24.5 Performance Boost: MAX 24.5 has launched with a 45% improvement in performance for int4k Llama token generation and introduces a new driver interface for developers. Check the full changes in the MAX changelog.

- This release positions MAX as a more competitive option, especially in environments reliant on efficient token handling.

- Mojo 24.5 Comes With Python Support: Mojo 24.5 adds support for implicit variable definitions and introduces new standard library APIs along with compatibility for Python 3.12. Details can be found in the Mojo changelog.

- These enhancements indicate a robust trajectory for Mojo, leveraging Python's latest features while streamlining development workflows.

- StringSlice Simplifies Data Handling: A member highlighted the use of

StringSlice(unsafe_from_utf8=path)to convert aSpan[UInt8]to a string view in Mojo. This method clarifies how keyword arguments function in this context.- Understanding this facilitates better utilization of string handling in Mojo's ecosystem, especially for data-driven tasks.

- Alternatives for MAX's Embedding Features: Discussions clarified that MAX lacks intrinsic support for embedding and vector database functionalities; alternatives like ChromaDB, Qdrant, and Weaviate are recommended for semantic search. A blog post offers examples for enhancing semantic search with these tools.

- This lack highlights the need for developers to utilize external libraries to achieve comprehensive search functionalities.

- Compatibility Issues in Google Colab: Concerns arose regarding running MAX in Google Colab due to installation issues; users were encouraged to create GitHub issues for investigation on this matter. The Colab Issue #223 captures ongoing discussions for community input.

- Addressing these compatibility concerns is crucial for maximizing accessibility for developers using popular notebook environments.

OpenInterpreter Discord

- Open Interpreter Token Usage Sparks Discussions: Concerns arose over Open Interpreter consuming 10,000 tokens for just six requests, calling its efficiency into question. This initiated a dialogue about potential optimizations in token handling.

- Members are actively discussing which strategies could improve token utilization without sacrificing functionality.

- Steps Needed for iPhone App Setup: A member requested clear instructions for launching the new iPhone app, seeking guidance on cloning the repo and setup processes, given their beginner status.

- Another user promptly recommended this setup guide to assist with the installation.

- Challenges in LiveKit Connection: Difficulties were reported with LiveKit connectivity issues on mobile data instead of Wi-Fi, complicating access on MacBooks. Members asked for detailed steps to replicate these connection errors.

- Community engagement surged as users pushed for collaborative troubleshooting to effectively address common LiveKit issues.

- Mobile App's Voice Response Missing: Feedback indicated that the Open Interpreter mobile app struggles with providing voice responses, where it recognizes commands but fails to execute verbal outputs. The non-responsive female teacher feature was particularly highlighted.

- Critiques surfaced as users pointed toward a lack of feedback in the app, urging developers to refine user interactions and improve the overall experience.

- Documenting Community Contributions: There’s a push for improved community documentation, especially regarding the LiveKit setup, with claims that 90% of users face foundational problems.

- Mike encouraged members to submit pull requests with actionable solutions, reinforcing the need for clear guides to navigate common pitfalls.

DSPy Discord

- Exploring O1 Functionality: Members are testing O1 support for DSPy with an eye on integrating it seamlessly, following its recent implementation.

- Active discussions highlight a strong community interest in extracting value from the new features as they arise.

- DSPy Version 2.4.16 Rocks!: DSPy version 2.4.16 has been officially released, introducing the

dspy.LMfunctionality that enhances user experience.- Users are reporting successful implementations of LiteLLM models post-update, encouraging broader adoption.

- RAG: The Retrieval-Aided Gem: Members are exploring the adaptation of traditional LLM queries to RAG (retrieval-augmented generation) using updated DSPy modules.

- Resources were shared, including links for simple RAG and MIPRO compilation, driving hands-on experimentation.

- Concerns with Google Vertex AI: Users have flagged Google Vertex AI integration issues, reporting service errors despite correct setups.

- Collaborative problem-solving efforts are focused on optimized environments for LiteLLM models, emphasizing proxy configurations.

- Dynamic Prompts in RAG Discussions: Community members are debating best practices for packing dynamic context into prompts for effective RAG implementation.

- Dialogues underscore the necessity of context-driven prompts to enhance results in varied scenarios.

OpenAccess AI Collective (axolotl) Discord

- Memory Leaks Plague GPU Batch Size: Discussions revealed that fluctuating tensor sizes in PyTorch can lead to memory leaks when using packed samples per GPU batch size.

- Participants raised concerns about padding in sequences, emphasizing the need for solutions to mitigate these memory pitfalls.

- Upstage Solar Pro Model Causes Buzz: Interest surged around the Upstage Solar Pro model, especially its 22B configuration for optimal single card inference; comparisons were drawn to LLaMA 3.1.

- Despite excitement, members expressed skepticism regarding the bold claims from its creators, wary of potential overpromises.

- Curiosity Hits Liger Kernels: One member sought insights on implementing Liger kernels, seeking experiences from others to shed light on performance outcomes.

- The inquiry reflects a broader interest in enhancing LLM optimization and usability.

- Training phi-3.5 Hits Snags: Attempts to train phi-3.5 have yielded frustration as lora adapters reportedly learned very little, with issues documented in a GitHub report.

- Participants discovered a potential bug that might be contributing to poor training results, venting their frustrations.

- Gradient Norms Cause Confusion: A user experienced unexpectedly high grad_norm values despite setting

max_grad_norm: 2in their LoRA configuration, peaking at 2156.37.- Questions linger about whether logs reflect clipped values accurately; the user's LoRA setup also included various fine-tuning settings for the Pythia model.

LAION Discord

- Llama 3.1 8B Finetune Released: A member announced a Llama 3.1 8B finetune model and seeks collaborators to enhance its dataset, which serves as a proof of concept for the flection model.

- This discussion sparks interest in replicating results seen in various YouTube channels, showcasing practical applications and community contributions.

- Concerns Raised over Open Source SD: A participant flagged that Stable Diffusion appears stagnant in the open source domain, suggesting a decline in community contributions.

- “Basically, if you care about open source, SD seems to be dead,” prompting a collective reevaluation of involvement in open source projects.

- Free Mint Event with OpenSea: The server announced a collaboration with OpenSea offering a new free mint opportunity for members, accessible via the CLAIM link.

- Participants are reminded that some claims may incur gas fees, encouraging quick actions from community members.

- Tier 5 API Access Comes at a Cost: Tier 5 API access raises concerns about its cost-effectiveness compared to previous models like GPT-4o, leading to a cautionary optimism about its capabilities.

- “Can't be much worse than gpt4o” reflects discussions on balancing budget with seeking new enhancements in API utility.

- STaR Techniques Enhancing Model Training: Integrating Chain-of-Thought (CoT) with Reinforcement Learning significantly bolsters model performance, as highlighted by the STaR technique's effectiveness in complex reasoning tasks.

- The importance of quality data gathering is stressed, with a sentiment that “It’s gotta be smart people too so it can’t be cheap,” affirming the link between data intelligence and model training efficacy.

Torchtune Discord

- Torchtune 0.2.1 fails installation on Mac: The installation of torchtune version 0.2.1 fails on Mac due to the unmet dependency torchao==0.3.1, blocking its usability on MacBooks. Members noted that the upcoming torchao 0.6.0 might resolve this with macOS wheels.

- The issue impacting Mac installations has led to frustration, reinforcing the need for smoother dependency management in future releases.

- torchao wheels for Mac M1 now available: torchao wheels are now confirmed available for Mac M1, significantly improving compatibility for Mac users. This update is expected to enhance functionality for those running torchtune on this architecture.

- Increased compatibility offers a practical pathway forward, allowing users to leverage Torchtune better under the M1 environment.

- Switching Recipe Tests to GPU: Members discussed moving current recipe tests from CPU to GPU, which was previously limited due to historical constraints. Suggestions were made to designate tests as GPU-specific, ensuring flexibility when GPUs are unavailable.

- This shift is positioned as essential for harnessing full computational power and streamlining test processes moving forward.

- Plans for Enhanced Batched Generation: A new lightweight recipe aimed at optimizing batched generation is in the pipeline, intending to align with project goals and user needs. Feedback on this new approach is highly encouraged from the community.

- Members indicated eagerness to participate in testing this generation improvement, which aims to simplify processes while maintaining effectiveness.

- Online Packing for Iterable Datasets on the Horizon: A future plan includes implementing online packing for iterable datasets, promising better data handling and operational efficiency in workflows. This advancement aims to support ongoing developments within Torchtune.

- The community anticipates enhancements to their data strategies, with excitement about the potential impact on iterative processes.

LangChain AI Discord

- LangChain AWS ChatBedrockConverse and Conversational History: A user inquired whether LangChain's AWS ChatBedrockConverse supports maintaining conversational history in a retrieval chain, which is crucial for conversational AI functionality.

- This sparked a discussion on the implications of history management within AI frameworks.

- Vector Database Implementation Troubles: One user reported challenges implementing Upstash Redis to replace the in-memory MemoryVectorStore for storing vector embeddings of PDF splits.

- They reached out for community assistance, noting issues with alternatives like Pinecone.

- Warhammer Adaptive RAG Project Takes Shape: A community member shared a GitHub project focused on Warhammer Adaptive RAG, seeking feedback particularly on features like hallucination and answer grading.

- Feedback highlighted the project’s innovative use of local models.

- AI Engineer Opportunity at Vantager: A member announced an opening for a Founding AI Engineer at Vantager, aiming at AI-native platforms for capital allocation.

- Candidates were encouraged to check the job board for details, with mention of backing from VC and the focus on solving significant data challenges.

- OpenAI's Transformative Impact: A member expressed amazement at OpenAI's advancements, suggesting it feels as if they have put a PhD in everyone's pocket.

- They raised concerns over whether society is fully understanding the impactful changes these technologies are bringing.

tinygrad (George Hotz) Discord

- Forum Members Discuss Etiquette: A member emphasized the importance of basic forum etiquette, noting that repetitive requests for help can discourage others from offering assistance.

- Wasting someone's time frustrates community engagement, urging better communication practices.

- Progress in MypyC Compilation for Tinygrad: A member detailed their methodical approach to MypyC compilation, working from the whole project to individual files for efficiency.

- Files compiled include

tinygrad/device.pyandtinygrad/tensor.py, indicating significant strides in the project.

- Files compiled include

- Successful Llama-7B Run with Tinygrad: The member successfully ran examples/llama.py using the Llama-7B model, highlighting a performance improvement of 12% in average timing.

- They provided a link to the Llama-7B repository to reference the used model.

- Code Changes for MypyC Functionality: Code modifications were made across several files, including rewriting generators and adding decorators, to enable MypyC functionality.

- The member described their changes as a rough draft, seeking team feedback before further refinement.

- Future Considerations for C Extensions: The member suggested that if C extensions are to be integrated into Tinygrad, a piecemeal approach should be taken to facilitate changes.

- They are eager to ensure their ongoing work aligns with the broader project goals before finalizing their contributions.

Gorilla LLM (Berkeley Function Calling) Discord

- Gorilla OpenFunctions Model Accuracy at Zero: The evaluation for the gorilla-openfunctions-v2 model returned an accuracy of 0.0 after 258 tests, despite model_result_raw aligning with the possible_answer.

- This anomaly suggests deeper issues may be at play that require further investigation beyond surface-level outputs.

- Decoding AST Throws Errors: An error arose during the execution of a user info function, specifically an Invalid syntax. Failed to decode AST message.

- The report also highlighted a data type mismatch with the note that one cannot concatenate str (not 'list') to str, indicating a possible bug.

- User Info Retrieval Completed Successfully: The model successfully retrieved information for a user with ID 7890, confirming the username as user7890 and the email as user7890@example.com.

- This operation completed the specific request for a special item in black, demonstrating some functionality amidst the reported issues.

LLM Finetuning (Hamel + Dan) Discord

- Fine-Tuning LLMs for Better Translations: A member inquired about experiences with fine-tuning LLMs specifically for translations, noting that many models capture the gist but miss key tone and style elements.

- This highlights the need for improved translation quality techniques to preserve essential nuances.

- Struggles with Capturing Tone in Translations: While LLMs deliver decent translations, they often struggle to effectively convey the original tone and style.

- Members called for sharing methods and insights to enhance translation fidelity, addressing these lingering challenges.

MLOps @Chipro Discord

- Fleak AI Hosts Private Gathering: Fleak AI is organizing a private happy hour for its community tonight in San Francisco at this location, aimed at discussing updates and fostering connections.

- This gathering promises a chance to network and engage with fellow developers and users, enhancing community ties.

- Fleak as a Serverless API Builder: Fleak promotes itself as a Serverless API Builder tailored for AI workflows, specifically excelling in functions like sentiment labeling.

- This functionality positions Fleak as a valuable tool for developers looking to streamline API integrations in their projects.

- Community Building Focus at Fleak: The event aims to strengthen community engagement through more frequent in-person meetups, starting with this happy hour.

- Organizers hope to create a welcoming environment that encourages open discussions and connections among attendees.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!