[AINews] Kolmogorov-Arnold Networks: MLP killers or just spicy MLPs?

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Are Learnable Activations all you need?

AI News for 5/6/2024-5/7/2024. We checked 7 subreddits and 373 Twitters and 28 Discords (419 channels, and 3749 messages) for you. Estimated reading time saved (at 200wpm): 414 minutes.

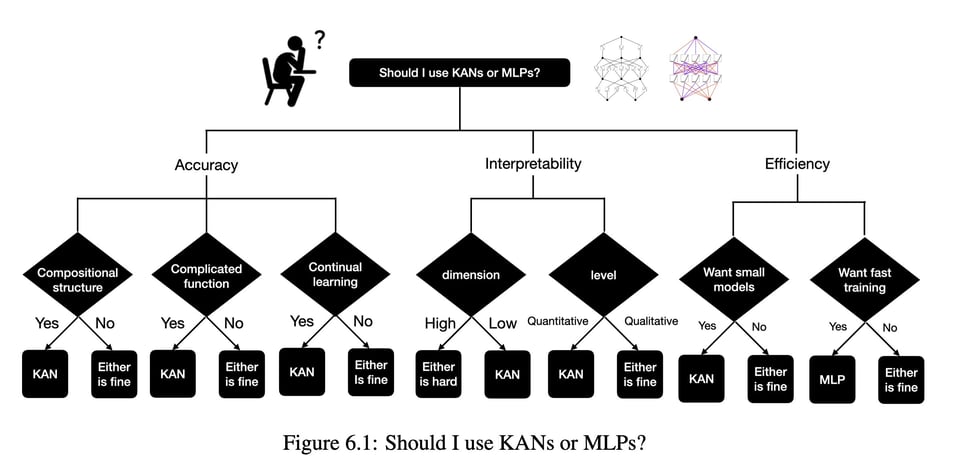

Theory papers are usually above our paygrade, but that is enough drama and not enough else going on today that we have the space to write about it. A week ago, Max Tegmark's grad student Ziming Liu published his very well written paper on KANs (complete with fully documented library), claiming them as almost universally equal to or superior to MLPs on many important dimensions like interpretability/inductive bias injection, function approximation accuracy and scaling (though is acknowledged to be currently 10x slower to train on current hardware on a same-param count basis, it is also 100x more param efficient).

While MLPs have fixed activation functions on nodes ("neurons"), KANs have learnable activation functions on edges ("weights").

Instead of layering preset activations like ReLu, KANs model "learnable activation functions" using B-splines (aka no linear weights, just curves) and simple addition. People got excited, rewriting GPTs with KANs.

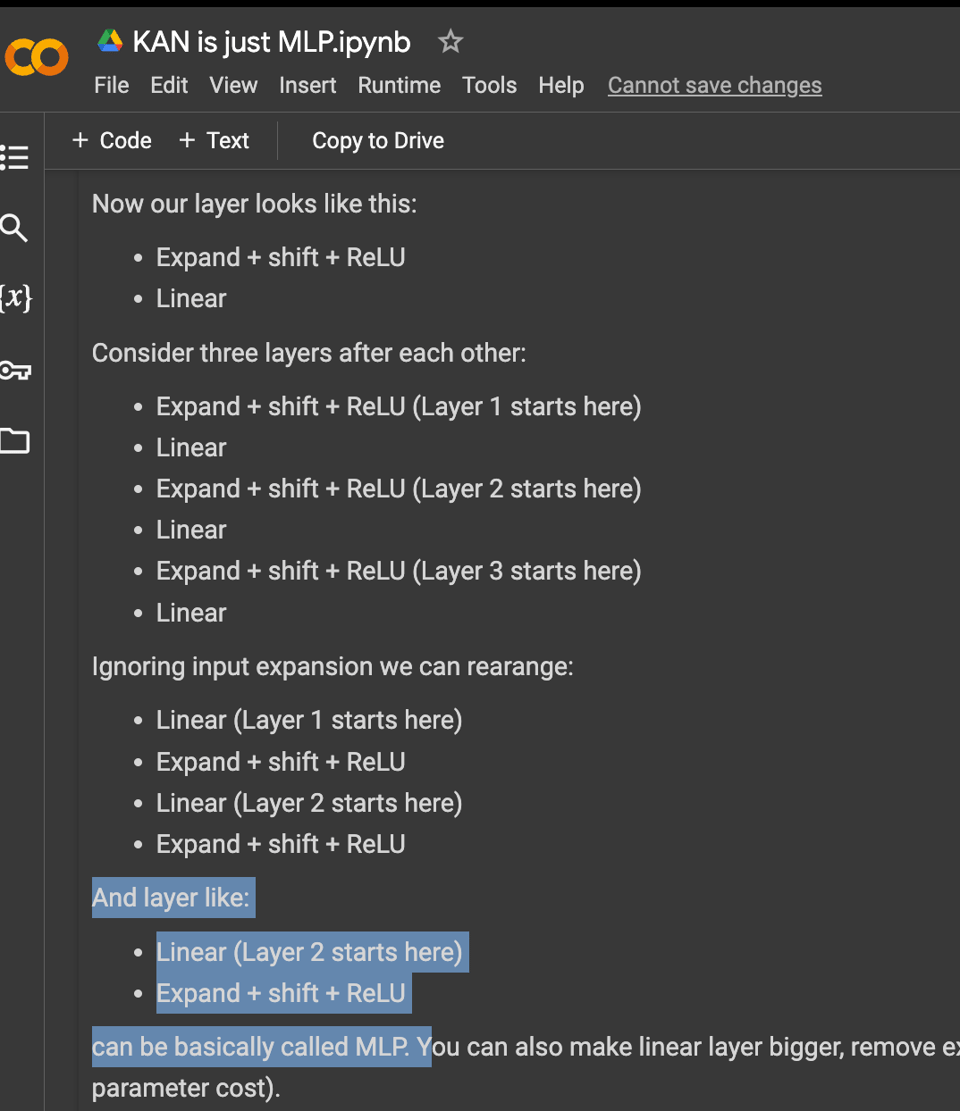

One week on, it now turns out that you can rearrange the KAN terms to arrive back at MLPs with the ~same number of params (twitter):

It doesn't surprise that you can rewrite one universal approximator as another - but following this very simple publication, many are defending KANs as more interpretable... which is also being rightfully challenged.

Have we seen the full rise and fall of a new theory paper in a single week? Is this the preprint system working?

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- Nous Research AI Discord

- Stability.ai (Stable Diffusion) Discord

- LM Studio Discord

- HuggingFace Discord

- Perplexity AI Discord

- CUDA MODE Discord

- OpenAI Discord

- Eleuther Discord

- Modular (Mojo 🔥) Discord

- OpenRouter (Alex Atallah) Discord

- OpenInterpreter Discord

- OpenAccess AI Collective (axolotl) Discord

- LangChain AI Discord

- LAION Discord

- LlamaIndex Discord

- tinygrad (George Hotz) Discord

- Cohere Discord

- Latent Space Discord

- AI Stack Devs (Yoko Li) Discord

- Mozilla AI Discord

- Interconnects (Nathan Lambert) Discord

- DiscoResearch Discord

- LLM Perf Enthusiasts AI Discord

- Alignment Lab AI Discord

- Datasette - LLM (@SimonW) Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

OpenAI and GPT Models

- Potential GPT-5 Release: @bindureddy noted that the gpt-2 chatbots are back on chat.lmsys and may be the latest GPT-5 versions, though they seem underwhelming compared to the hype. @nptacek tested the im-a-good-gpt2-chatbot model, finding it very strong and definitely better than the latest GPT-4, while the im-also-a-good-gpt2-chatbot had fast output but tended to fall into repetitive loops.

- OpenAI Safety Testing: @zacharynado speculated that OpenAI's "safety testing" for GPT-4.5 couldn't finish in time for a Google I/O launch like they did with GPT-4.

- Detecting AI-Generated Images: OpenAI adopted the C2PA metadata standard for certifying the origin of AI-generated images and videos, which is integrated into products like DALL-E 3. @rohanpaul_ai noted the classifier can identify ~98% of DALL-E 3 images while incorrectly flagging <0.5% of non-AI images, but has lower performance distinguishing DALL-E 3 from other AI-generated images.

Microsoft AI Developments

- In-House LLM Training: According to @bindureddy, Microsoft is training its own 500B parameter model called MAI-1, which may be previewed at the Build conference. As the model becomes available, it will be natural for Microsoft to push it instead of OpenAI's GPT line, making the two companies more competitive.

- Copilot Workspace Impressions: @svpino had very positive first impressions of Copilot Workspace, noting its refined approach and tight integration with GitHub for generating code directly in repositories, solving issues, and testing. The tool is positioned as an aid to developers rather than a replacement.

- Microsoft's AI Focus: @mustafasuleyman, having joined Microsoft, shared that the company is AI-first and driving massive technological transformation, with responsible AI as a cornerstone. Teams are working to define new norms and build products for positive impact.

Other LLM Developments

- Anthropic's Approach: In an interview discussed by @labenz, Anthropic's CTO explained their approach of giving the AI many examples rather than fine-tuning for every task, as fine-tuning fundamentally narrows what the system can do.

- DeepSeek-V2 Release: @deepseek_ai announced the release of DeepSeek-V2, an open-source 236B parameter MoE model that places top 3 in AlignBench, surpassing GPT-4, and ranks highly in MT-Bench, rivaling LLaMA3-70B. It specializes in math, code, and reasoning with a 128K context window.

- Llama-3 Developments: @abacaj suggested Llama-3 with multimodal capabilities and long context could put pressure on OpenAI. @bindureddy noted Llama-3 on Groq allows efficiently making multiple serial calls for LLM apps to make multiple decisions before giving the right answer, which is difficult with GPT-4.

AI Benchmarks and Evaluations

- LLMs as a Commodity: @bindureddy argued that LLMs have become a commodity, and even if GPT-5 is fantastic, other major labs and companies will catch up within months as language abilities plateau. He advises using LLM-agnostic services for the best performance and efficiency.

- Evaluating LLM Outputs: @aleks_madry introduced ContextCite, a method for attributing LLM responses back to the given context to see how the model is using the information and if it's misinterpreting anything or hallucinating. It can be applied to any LLM at the cost of a few extra inference calls.

- Emergent Abilities of LLMs: @raphaelmilliere shared a preprint exploring philosophical questions around LLMs, covering topics like emergent abilities, consciousness, and the status of LLMs as cognitive models. The paper dedicates a large portion to recent interpretability research and causal intervention methods.

Scaling Laws and Architectures

- Scaling Laws for MoEs: @teortaxesTex noted that DeepSeek-V2-236B took 1.4M H800-hours to train compared to Llama-3-8B's 1.3M H100-hours, validating the Scaling Laws for Fine-Grained MoEs paper. DeepSeek openly shares inference unit economics in contrast to some Western frontier companies.

- Benefits of MoE Models: @teortaxesTex highlighted DeepSeek's architectural innovations in attention mechanisms (Multi-head Latent Attention for efficient inference) and sparse layers (DeepSeekMoE for training strong models economically), contrasting with the "scale is all you need" mindset of some other labs.

- Mixture-of-Experts Efficiency: @teortaxesTex pointed out that at 1M context, a ~250B parameter MLA model like DeepSeek-V2 uses only 34.6GB for cache, suggesting that saving long-context examples as an alternative to fine-tuning is becoming more feasible.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Progress and Capabilities

- Google's medical AI outperforms GPT and doctors: In /r/singularity, Google's Med-PaLM 2 AI destroys GPT's benchmark and outperforms doctors on medical diagnosis tasks. This highlights the rapid progress of AI in specialized domains like healthcare.

- Microsoft developing large language model to compete: In /r/artificial, it's reported that Microsoft is working on a 500B parameter model called MAI-1 to compete with offerings from Google and OpenAI. The race to develop ever-larger foundational models continues.

- AI system claims to eliminate "hallucinations": In /r/artificial, Alembic claims to have developed an AI that eliminates "hallucinations" and false information generation in outputs. If true, this could be a major step towards more reliable AI systems.

AI Ethics and Societal Impact

- Viral AI generated misinformation: In /r/singularity, an AI generated photo of Katy Perry at the Met Gala went viral, gaining over 200k likes in under 2 hours. This demonstrates the potential for AI to rapidly spread misinformation at scale.

- Prominent AI critic's credibility questioned: In /r/singularity, it's revealed that Gary Marcus, a prominent AI critic, admits he doesn't actually use the large language models he criticizes, drawing skepticism about his understanding of the technology.

- Concerns over AI scams and fraud: In /r/artificial, Warren Buffett predicts AI scamming and fraud will be the next big "growth industry" as the technology advances, highlighting concerns over malicious uses of AI.

Technical Developments

- New neural network architecture analyzed: In /r/MachineLearning, the Kolmogorov-Arnold Network is shown to be equivalent to a standard MLP with some modifications, providing new insights into neural network design.

- Efficient large language model developed: In /r/MachineLearning, DeepSeek-V2, a 236B parameter Mixture-of-Experts model, achieves strong performance while reducing costs compared to dense models, advancing more efficient architectures.

- New library for robotics and embodied AI: In /r/artificial, Hugging Face releases LeRobot, a library for deep learning robotics, to enable real-world AI applications and advance embodied AI research.

Stable Diffusion and Image Generation

- Stable Diffusion 3.0 shows major improvements: In /r/StableDiffusion, Stable Diffusion 3.0 demonstrates major improvements in image quality and prompt adherence compared to previous versions and competitors.

- Efficient model matches Stable Diffusion 3.0 performance: In /r/StableDiffusion, the PixArt Sigma model shows excellent prompt adherence, on par with SD3.0 while being more efficient, providing a compelling alternative.

- New model for realistic light painting effects: In /r/StableDiffusion, a new "Aether Light" LoRA model enables realistic light painting effects in Stable Diffusion, expanding creative possibilities for artists.

Humor and Memes

- Humorous AI chatbot emerges: In /r/singularity, an "im-a-good-gpt2-chatbot" model appears on OpenAI's Playground and engages in humorous conversations with users, showcasing the lighter side of AI development.

AI Discord Recap

A summary of Summaries of Summaries

1. Model Performance Optimization and Benchmarking

- [Quantization] techniques like AQLM and QuaRot aim to run large language models (LLMs) on individual GPUs while maintaining performance. Example: AQLM project with Llama-3-70b running on RTX3090.

- Efforts to boost transformer efficiency through methods like Dynamic Memory Compression (DMC), potentially improving throughput by up to 370% on H100 GPUs. Example: DMC paper by @p_nawrot.

- Discussions on optimizing CUDA operations like fusing element-wise operations, using Thrust library's

transformfor near-bandwidth-saturating performance. Example: Thrust documentation.

- Comparisons of model performance across benchmarks like AlignBench and MT-Bench, with DeepSeek-V2 surpassing GPT-4 in some areas. Example: DeepSeek-V2 announcement.

2. Fine-tuning Challenges and Prompt Engineering Strategies

- Difficulties in retaining fine-tuned data when converting Llama3 models to GGUF format, with a confirmed bug discussed.

- Importance of prompt design and usage of correct templates, including end-of-text tokens, for influencing model performance during fine-tuning and evaluation. Example: Axolotl prompters.py.

- Strategies for prompt engineering like splitting complex tasks into multiple prompts, investigating logit bias for more control. Example: OpenAI logit bias guide.

- Teaching LLMs to use

<RET>token for information retrieval when uncertain, improving performance on infrequent queries. Example: ArXiv paper.

3. Open-Source AI Developments and Collaborations

- Launch of StoryDiffusion, an open-source alternative to Sora with MIT license, though weights not released yet. Example: GitHub repo.

- Release of OpenDevin, an open-source autonomous AI engineer based on Devin by Cognition, with webinar and growing interest on GitHub.

- Calls for collaboration on open-source machine learning paper predicting IPO success, hosted at RicercaMente.

- Community efforts around LlamaIndex integration, with issues faced in Supabase Vectorstore and package imports after updates. Example: llama-hub documentation.

4. Hardware Considerations for Efficient AI Workloads

- Discussions on GPU power consumption, with insights on P40 GPUs idling at 10W but drawing 200W total, and strategies to limit to 140W for 85% performance.

- Evaluating PCI-E bandwidth requirements for inference tasks, often overestimated based on shared resources. Example: Reddit discussion.

- Exploring single-threaded operations in frameworks like tinygrad, which doesn't use multi-threading for CPU ops like matrix multiplication.

- Inquiries into Metal memory allocation on Apple Silicon GPUs for shared/global memory akin to CUDA's

__shared__.

5. Misc

- Exploring Capabilities and Limitations of AI Models: Engineers compared the performance of various models like Llama 3 70b, Mistral 8x22b, GPT-4 Turbo, and Sonar for tasks such as function calling, essay writing, and code refactoring (Nous Research AI). They also discussed the impact of quantization on model performance, like the degradation seen with

llama.cpp's quantization (OpenAccess AI Collective).

- Prompt Engineering Techniques and Challenges: The importance of prompt design was emphasized, noting how changes to templates and tokens can significantly impact model performance (OpenAccess AI Collective). Users shared tips for complex prompting tasks, like splitting product identification into multiple prompts (OpenAI), and discussed the difficulty of integrating negative examples (OpenAI). A new prompt generator tool from Anthropic was also explored (LLM Perf Enthusiasts AI).

- Optimizing CUDA Operations and Model Training: CUDA developers shared insights on using Triton for efficient kernel design, leveraging fused operations for element-wise computations, and utilizing CUDA's Thrust library for optimal performance (CUDA MODE). Discussions also covered techniques to boost transformer efficiency, like Dynamic Memory Compression (DMC) (CUDA MODE), and fine-tuning CUDA kernels for specific architectures (CUDA MODE).

- Advancements in Open-Source AI Projects: Notable open-source releases included DeepSeek-V2, an MoE model excelling in code and reasoning benchmarks (Latent Space), StoryDiffusion for magic story creation (OpenAccess AI Collective), and OpenDevin, an autonomous AI engineer (LlamaIndex). Collaborations were encouraged, like the open call to contribute to a machine learning IPO prediction paper (Unsloth AI).

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- GGUF Conversion Conundrum: Engineers noted a bug in converting Llama3 models to GGUF which affects retention of fine-tuned data, spurring discussions on related GitHub Issue #7062 and a Reddit thread.

- Training Tips and Troubles: Model training was a hot topic, including tokenization issues during fine-tuning and successful utilization of LORA Adapters with Unsloth. Additionally, concerns about base data impacting fine-tuned model results were addressed, suggesting fine-tuning alters weights for previously seen tokens.

- AI Engine Struggles and Strategies: Aphrodite Engine's compatibility with 4bit bnb quantization raised questions, while a VRAM calculator for models like GGUF and exllama was referenced for inference program compatibility. Separately, a member highlighted a need for a generalised approach to fine-tuning Large Vision Language Models (LVLM).

- Model Iterations and Collaborations: New versions of models were unveiled, including LLaMA-3-8B-RDF-Experiment for knowledge graph construction, while an open call was made for collaboration on a machine learning paper predicting IPO success.

- Product Showcase and Support Requests: The introduction of Oncord, a professional website builder, was met with a demo at oncord.com, and members debated marketing tactics for startups. Additionally, support for moondream fine-tuning was requested, linking a GitHub notebook.

Nous Research AI Discord

Function Calling Face-off: Llama 3 70b shows better function calling performance over Mistral 8x22b, revealing a gap despite the latter's touted capabilities, exemplified by the members' discussion around the utility and accuracy of function calling in AI chatbots.

A Battle of Speeds in AI Training: Comparing training times leads to concerns, with reports of 500 seconds per step on an A100 for LoRA llama 3 8b tuning and just 3 minutes for 1,000 iterations for Llama2 7B using litgpt, showing wide variances in efficiency and raising questions on optimization and practices.

Impatience for Improvements: Users express disappointment over inaccessible features such as worldsim.nousresearch.com, and latency in critical updates for networks like Bittensor, highlighting real-time challenges faced by developers in AI and the ripple effects of stalled updates on productivity.

Quantization Leaps Forward: The AQLM project advances with models like Llama-3-70b and Command-R+, demonstrating progress with running Large Language Models on individual GPUs and touching upon the community's push for greater model accessibility and performance.

Chasing Trustworthy AI: Invetech's "Deterministic Quoting" to combat hallucinations indicates a strong community desire for reliable AI, particularly in sensitive sectors like healthcare, aiming to marry veracity with the innovative potential of Large Language Models as seen in the discussion.

Stability.ai (Stable Diffusion) Discord

- Hyper Diffusion vs Stable Diffusion 3 Demystified: Engineers tackled the nuances between Hyper Stable Diffusion, known for its speed, and the upcoming Stable Diffusion 3. The community expressed concern over the latter potentially not being open-source, prompting discussions on the strategic safeguarding of AI models.

- Bias Alert in Realistic Human Renders: The quest for the most effective realistic human model stimulated debate, with a consensus forming on the necessity of avoiding models with heavy biases like those from civitai to maintain diversity in generated outputs.

- Dreambooth and LoRA Deep Dive: Deep technical consultation amongst users shed light on leveraging Dreambooth and LoRA when fine-tuning Stable Diffusion models. There was a particular focus on generating unique and varied faces and styles.

- The Upscaling Showdown: Participants compared upscalers, such as RealESRGAN_x4plus and 4xUltrasharp, sharing their personal successes and preferences. The conversations aimed to identify superior upscaling techniques for enhanced image resolution.

- Open-Source AI Twilight?: A recurrent theme in the dialogues reflected the community's anxiety about the future of open-source AI, particularly related to Stable Diffusion models. Talk revolved around the implications of proprietary developments and strategies for preserving access to crucial AI assets.

LM Studio Discord

Private Life for Your Code: Users call for a server logging off feature in LM Studio for privacy during development, with genuine concerns about server logs being collected through the GUI.

A Day in the CLI: There's interest in using LM Studio in headless mode and leveraging the lms CLI to start servers via the command line. Users also shared updates on tokenizer complications for Command R and Command R+ after a llama toolkit update and issued guidance for downloading updated quantizations from Hugging Face Co's Model Repo.

Memory Lapses in Linux: A peculiar case of Linux misreporting memory in LM Studio version 0.2.22 stirred some discussions, with suggestions offered to resolve GPU offloading troubles for running models like Meta Llama 3 instruct 7B.

Prompts Lost in Thought: Users tackled issues around LM Studio erroneously responding to deleted content and scoped document access, sparking a debate about LLMs' handling and retention of data.

Model Malfunctions: Troubles with several models in LM Studio were flagged, including llava-phi-3-mini misrecognizing images and models like Mixtral and Wizard LM fumbling Dungeon & Dragons data persistence despite AnythingLLM database use.

Power-play Considerations: Hardware aficionados in the guild grapple with GPU power consumption, server motherboards, and PCIe bandwidth, sharing successful runs of LM Studio in VMs with virtual GPUs and weighing in on practical hardware setups for AI endeavors.

Beta-testing Blues: Discussions mentioned crashes in 7B models on 8GB GPUs and unloading issues post-crash, with beta users seeking solutions for recurring errors.

SDK Advent: Announcement of new lmstudiojs SDK signals upcoming langchain integrations for more streamlined tool development.

In the AI Trenches: Users provided a solution for dependency package installation on Linux, discussed LM Studio's compatibility on Ubuntu 22.04 vs. 24.04, and shared challenges with LM Studio's API integration and concurrent request handling.

Engineer Inquiry: Curiosity peaked about GPT-Engineer setup with LM Studio and whether it involved custom prompting techniques.

Prompting the AIs: Some voiced the value of prompt engineering as a craft, citing it as central to garnering premium outputs from LLMs and sharing a win in Singapore’s GPT-4 Prompt Engineering Competition covered in Towards Data Science.

AutoGen Hiccups: There's a brief mention of a bug causing AutoGen Studio to send incomplete messages, with no further discussion on the resolution or cause.

HuggingFace Discord

ASR Fine-Tuning Takes Center Stage: Engineers discussed enhancing the openai/whisper-small ASR model, emphasizing dataset size and hyperparameter tuning. Tips included adjusting weight_decay and learning_rate to improve training, highlighted by community-shared resources on hyperparameters like gradient accumulation steps and learning rate adjustments.

Deep Dive into Quantum and AI Tools: Stealthy interest in seemingly nascent quantum virtual servers surfaced with Oqtant, while the AI toolkit included everything from an all-in-one assistant everything-ai capable of 50+ language support to the spaghetti-coded image-generating discord bot Sparky 2.

Debugging and Datasets: Chatbots designing PowerPoint slides, XLM-R getting a Flash Attention 2 upgrade, and multi-label image classification training woes took the stage, connecting community members across problems and sharing valuable insights. Meanwhile, the lost UA-DETRAC dataset incited a search for its much-needed annotations for traffic camera-based object detection.

Customization and Challenges in Model Training: From personalizing image models with Custom Diffusion—requiring minimal example images—to the struggles with fine-tuning Stable Diffusion 1.5 and BERT models, the community wrestled with and brainstormed solutions for various training hiccups. Device mismatches during multi-GPU and CPU offloading and the importance of optimization techniques for restricted resources were notable pain points.

Novel Approaches in Teaching Retrieval to LLMs: A newer technique encouraging LLMs to use a <RET> token for information retrieval to boost performance was discussed with reference to a recent paper, highlighting the importance of this method for elusive questions that evade the model's memory. This sits alongside observations on model billing methods via token counts, with practical insights shared on pricing strategies.

Perplexity AI Discord

Beta Bewilderment: Users experienced confusion with accessing Perplexity AI's beta version; one assumed clicking an icon would reveal a form, which didn't happen, and it was clarified that the beta is closed.

Performance Puzzles: Across different devices, Perplexity AI users reported technical issues such as unresponsive buttons and sluggish loading. Conversations revolved around limits of models like Claude 3 Opus and Sonar 32k, effecting work, with calls to check Perplexity's FAQ for details.

AI Model Melee: Comparisons of AI models' capabilities, including GPT-4 Turbo, Sonar, and Opus, were discussed, focusing on tasks like essay writing and code refactoring. Clarity was sought on whether source limits in searches had increased, with GIFs used to illustrate responses.

API Angst and Insights: Discussions in the Perplexity API channel ranged from crafting JSON outputs to perplexities with the search features of Perplexity's online models. The documentation was updated (as highlighted in a link to docs), important for users dealing with issues like outdated search results and exploring model parameter counts.

Shared Discoveries through Perplexity: The community delved into Perplexity AI's offerings, addressing an array of topics from US Air Force insights to Microsoft's 500 billion parameter AI model. Users shared an aspiration for a standardized image creation UI along with links to features like Insanity by XDream and emphasized content's shareability.

CUDA MODE Discord

GPU Clock Speed Mix-Up: A conversation was sparked by confusion over the clock speed of H100 GPUs, with the initial statement of 1.8 MHz corrected to 1.8 GHz. This highlighted the need to distinguish MHz from GHz and the importance of accurate specifications in discussions on GPU performance.

Tuning CUDA: From Kernels to Libraries: Members shared insights on optimizing CUDA operations, emphasizing the efficiency of Triton in kernel design, the advantage of fused operations in element-wise computations, and the use of CUDA's Thrust library. A CUDA best practice is to use Thrust's for_each and transform for near-bandwidth-saturating performance.

PyTorch Dynamics: Various issues and improvements in PyTorch were discussed, including troubleshooting dynamic shapes with PyTorch Compile using TORCH_LOGS="+dynamic" and how to work with torch.compile for the Triton backend. An issue reported on PyTorch's GitHub relates to combining Compile with DDP & dynamic shapes, captured in pytorch/pytorch #125641.

Transformer Performance Innovations: Conversations revolved around techniques to boost the efficiency of transformers, with the introduction of Dynamic Memory Compression (DMC) by a community member, potentially improving throughput by up to 370% on H100 GPUs. Members also discussed whether quantization was involved in this method, with reference to the paper on the technique.

CUDA Discussions Heat Up in llm.c: The llm.c channel was bustling with activity, addressing issues such as multi-GPU training hangs on the master branch and optimization opportunities using NVIDIA Nsight™ Systems. A notable contribution is HuggingFace's release of the FineWeb dataset for LLM performance, documented in PR #369, with potential kernel optimizations for performance gains discussed in PR #307.

OpenAI Discord

- OpenAI Linguistically Defines Its Data Commandments: OpenAI's new document on data handling clarifies the organization's practices and ethical guidelines for processing the copious amounts of data in the AI industry.

- AI's Rhythmic Revolution Might Be Here: The discussion centered around the evolution of AI in music, referencing a musician's jam session with AI as an example of significant advancements in AI’s ability to generate music that resonates with human listeners.

- Perplexity and Cosine Similarity Stir Engineer's Minds: Engineers marveled at discovering the utility of Perplexity in AI text analysis and debated the optimal cosine similarity thresholds for text embeddings, highlighting the shift to a "new 0.45" standard from the "old 0.9".

- Prompting Practices and Pitfalls in the Spotlight: Tips on prompt engineering emphasized the complexity of using negative examples and splitting tasks into multiple prompts, and pointed to the OpenAI logit bias guide for fine-tuning AI responses.

- GPT's Vector Vault and Uniform Delivery Assurances: Insights into GPT's knowledge base mechanics and performance consistency were shared, dispelling the notion that varying user demand affects GPT-4 output or that inferior models may be deployed to manage user load.

Eleuther Discord

- Questioning the Sacred P-Value: Discussions highlighted the arbitrary nature of the .05 p-value threshold in scientific research, pointing toward a movement to shift this standard to 0.005 to enhance reproducibility, as advocated in a Nature article.

- Pushing Boundaries with Skip Connections: Adaptive skip connections are under investigation with some evidence that making weights negative can improve model performance; details of these experiments can be found on Weights & Biases. Queries related to the underlying mechanics of weight dynamics were responded to with a gated residual network paper and a code snippet.

- Model Evaluation in a Logit-Locked World: The concealment of logits in API models like OpenAI's to prevent extraction of sensitive "signatures" has sparked conversations about alternatives for model evaluation, referencing the approach with 'generate_until' in YAML for Italian LLM comparisons, in light of recent findings (logit extraction work).

- Encounter with Non-Fine-Tunable Learning: Introduction of SOPHON, a framework designed for non-fine-tunable learning to restrict task transferability, aims to mitigate ethical misuse of pre-trained models (SOPHON paper). Alongside this, there's an emerging discussion about QuaRot, a rotation-based quantization scheme that compresses LLM components to 4-bit while maintaining performance (QuaRot paper).

- Scaling and Loss Curve Puzzles: A noteworthy model scaling experiment using a 607M parameter setup trained on the fineweb dataset unearthed unusual loss curves, initiating advice to try the experiment on other datasets for benchmarking.

Modular (Mojo 🔥) Discord

- Exploring "Mojo" with Boundless Coding Adventures: Engineers discussed intricacies of programming in mojo, including installing on Intel Mac OS using Docker, Windows support through WSL2, and integration with Python ecosystems. Emphasis on design choices, such as the inclusion of structs and classes, sparked debate while compilation capabilities allowing native machine code like .exe remained a highlight.

- Stay Updated with Modular's Latest Scoops: Two important updates from the Modular team surfaced on Twitter, hinting at unmentioned advancements or news, with the community directed to check out Tweet 1 and Tweet 2 for the full details.

- MAX Engine Excellence and API Elegance on Display: MAX 24.3 debuted in a community livestream, showcasing its latest updates and introducing a new Extensibility API for Mojo. Eager learners and curious minds are directed to watch the explanatory video.

- Tinkering with Tensors and Tactics in Mojo Development: From tensor indexing tips to SIMD complications for large arrays, AI engineers shared pointers and paradigms in the mojo domain. The discussions expanded to cover benchmarking functions, constructors in a classless setup, advanced complier tool needs, a proposal for

whereclauses, and the potential of compile-time metaprogramming in mojo.

- Community Projects Propelling Mojo Forward: Updates within the community projects showcased advancements and requests for assistance, such as an efficient radix sort plus benchmark for mojo-sort, migration troubles with Lightbug to Mojo 24.3 detailed in a GitHub issue, and the porting of Minbpe to Mojo that outpaced Python versions at Minbpe.mojo. Meanwhile, the search for a Mojo GUI library continues.

- Nightly Compilation Changes the Game: Engineers wrangled with Mojo's type handling, specifically with traits and variants, signaling limitations and workarounds like

PythonObjectand@staticmethods. A fresh nightly compiler release sparked conversation about automating release notifications and highlighted improvements toReferenceusage, all framed by a playful comment about the updates stretching the capacity of a 2k monitor.

OpenRouter (Alex Atallah) Discord

- Rollback on Model Usage Rates: Soliloquy L3 8B model's price dropped to $0.05/M tokens for 2023 - 2024, available on both private and logged endpoints as announced in OpenRouter's price update.

- Seeking Beta Brainiacs for Rubik: Rubik's AI calls for beta testers, offering two months of premium access to models including Claude 3 Opus, GPT-4 Turbo, and Mistral Large with a promo code at rubiks.ai, also hinting at a tech news section featuring Apple and Microsoft's latest endeavors.

- Decoding the Verbose Llama : Engineers shared frustrations over the length of responses from llama-3-lumimaid-8b, discussing complexities with verbosity compared to models like Yi and Wizard, and buzzed about the release of Meta-Llama-3-120B-Instruct, highlighted in a Hugging Face reveal.

- Inter-Regional Model Request Mysteries: Users mulled over Amazon Bedrock potentially imposing regional restrictions on model requests, with the consensus tilting towards cross-region requests being plausible.

- Precision Pointers and Parameter Puzzles: Conversations peeled back preferences on model precision within OpenRouter, generally sustaining fp16, and occasionally distilling to int8, dovetailing into discussions on whether the default parameters require tinkering for optimal conversational results.

OpenInterpreter Discord

Python 3.10 Spells Success: Open Interpreter (OI) should be run with Python 3.10 to avoid compatibility issues; one user improved performance by switching to models like dolphin or mixtral. The GitHub repository for Open Interpreter was suggested for insights on skill persistence.

Conda Environments Save the Day: Engineers recommended using a Conda environment for a conflict-free installation of Open Interpreter on Mac, specifically with Python 3.10 to sidestep version clashes and related errors.

Jan Framework Enjoys Local Support: Jan can be utilized as a local model framework for the O1 device without hiccups, contingent on similar model serving methods as with Open Interpreter.

Globetrotters Inquire About O1: The 01 device works globally, but hosted services are assumed to be US-centric for now, with no international shipments confirmed.

Fine-Tuning Frustrations and Fixes: A call to understand and employ system messages effectively before fine-tuning models led to the suggestion of OpenPipe.ai, as members navigate optimal performance for various models with Open Interpreter. The conversation included benchmarking models and the poor performance of Phi-3-Mini-128k-Instruct when used with OI.

OpenAccess AI Collective (axolotl) Discord

Open Source Magic on the Rise: The community launched an open-source alternative to Sora, named StoryDiffusion, released under an MIT license on Github; its weights, however, are still pending release.

Memory Efficiency Through Unsloth Checkpointing: Implementing unsloth gradient checkpointing has led to a reported reduction in VRAM usage from 19,712MB to 17,427MB, highlighting Unsloth's effectiveness in memory optimization.

Speculations on Lazy Model Layers: An oddity was observed where only specific slices of model layers were being trained, contrasting the full layer training seen in other models; theories posited include models potentially optimizing mainly the first and last layers when confronted with too easy datasets.

Prompt Design Proves Pivotal: AI enthusiasts emphasized that prompt design, particularly regarding the use of suitable templates and end-of-text tokens, is critical in influencing model performance during both fine-tuning and evaluation.

Expanded Axolotl Docs Unveil Weight Merging Insights: A new update to Axolotl documentation has been rolled out, enhancing insights on merging model weights, with an emphasis on extending these guidelines to cover inference strategies, as seen on the Continuum Training Platform.

LangChain AI Discord

- LangChain's OData V4 and More: Discussions highlighted interest in LangChain's compatibility with Microsoft Graph (OData V4), and a need for API access to kappa-bot-langchain. There was also a query about the

kparameter in ConversationEntityMemory, referencing the LangChain documentation.

- Python vs. JS Streaming Consistency Issues: Members are experiencing inconsistencies with

streamEventsin the JavaScript implementation of LangChain's RemoteRunnable, which works as expected in Python. This prompted suggestions to contact the LangChain GitHub repository for resolution.

- AI Projects Seek Collaborators: An update was shared about everything-ai V1.0.0, now including a user-friendly local AI assistant with capabilities like text summarization and image generation. The request for beta testers for Rubiks.ai, a research assistant tool, was also discussed. Beta tester sign-up is available at Rubiks.ai.

- No-Code Tool for Smooth AI Deployments: Introduction of a no-code tool aimed at easing the creation and deployment of AI apps with embedded prompt engineering features. The early demo can be watched here.

- Learning Langchain Through Video Tutorials: Members have access to the "Learning Langchain Series" with the latest tutorials on API Chain and Router Chain available on YouTube and here, respectively. These guide users through the usage and benefits of these tools in managing APIs with large language models.

LAION Discord

- Hungry for Realistic AI Chat? Look to Roleplays!: An idea was pitched to compile a dataset of purely human-written dialogue, which might include jokes and more authentic interactions, to enhance AI conversations that go beyond the formulaic replies seen in smart instruct models.

- Create With Fake: Introducing Simian Synthetic Data: A Simian synthetic data generator was introduced, capable of generating images, videos, and 3D models for potential AI experimentation, offering a tool for those looking to simulate data for research purposes.

- The Hunt for Perfect Datasets: In response to a request about datasets optimal for text/numeric regression or classification tasks, several suggestions were made, including MNIST-1D and the Stanford Large Movie Review Dataset.

- Text-to-Video: Diffusion Beats Transformers: It was debated that diffusion models are currently the best option for state-of-the-art (SOTA) text-to-video tasks and are often more computationally efficient as they can be fine-tuned from text-to-image (T2I) models.

- Video Diffusion Model Expert Weighs In: An author of a stable video diffusion paper discussed the challenges faced in ensuring quality text supervision for video models, and the benefits of captioning videos using large language models (LLMs), bringing up the differences between autoregressive and diffusion video generation techniques.

LlamaIndex Discord

- Learn from OpenDevin's Creators: LlamaIndex invites engineers to a webinar featuring OpenDevin's authors on Thursday at 9am PT, to explore autonomous AI agent construction with insight from GitHub's growing embrace. Register for the webinar here.

- Hugging Face and AIQCon Updates: Upgrades to Hugging Face's TGI toolkit now cater to function calling and batched inference; meanwhile, Jerry Liu gears up to discuss Advanced Question-Answering Agents at AIQCon, with discounts via "Community" code cited in a tweet.

- Integrating LlamaIndex Just Got Trickier: Engineers reported challenges integrating LlamaIndex with Supabase Vectorstore and experienced package import confusion, quickly addressed by the updated llama-hub documentation.

- Problem-Solving the LlamaIndex: Debating over deletion of document knowledge and local PDF parsing libraries, the community leaned towards re-instantiating the query engine and leveraging PyMuPDF for solutions, while considering prompt engineering to tackle irrelevant model responses.

- Scouting & Reflecting on AI Agents: Engineers seek effective HyDE methods for language to SQL conversion while introspective agents draw focus with their reflection agent pattern, as observed in an article on AI Artistry, despite some hitting a 404 error.

tinygrad (George Hotz) Discord

- LLVM IR Inspires tinygrad Formatting Proposal: A readability improvement for tinygrad was suggested, looking to adopt an operation representation closer to LLVM IR's human-readable format. The conversation pivoted to Static Single Assignment (SSA) form and potential confusion caused by the placement of the PHI operation in tinygrad.

- Tinygrad Stays Single-threaded: George Hotz confirmed that tinygrad does not use multi-threading for CPU operations like matrix multiplication, maintaining its single-threaded design.

- Remapping Tensors for Efficiency: Techniques involving remapping tensors by altering strides were discussed, with a focus on how to perform reshapes efficiently, akin to tinygrad's internal methods.

- Push for Practical Understanding in tinygrad Community: Sharing of resources such as symbolic mean explanations on GitHub and a Google Doc on view merges indicated a drive for better understanding through practical examples and documentation in the tinygrad community.

- tinygrad Explores Quantized Inference: Conversation touched on tinygrad's capabilities to perform quantized inference, a feature that can potentially compress models and accelerate inference times.

Cohere Discord

SQL Database Harbor Found: The SQL database needed for tracking conversational history in the Cohere toolkit is set to operate on port 5432, but a precise location was not mentioned.

Google Bard Rivalry, School Edition: A high school student planning to create a Bard-like chatbot received guidance from Cohere about adhering to user agreements with the caveat of obtaining a production key, as elaborated in Cohere's documentation.

Chroma Hiccups Amidst Local Testing: There's an unresolved IndexError when using Cohere toolkit's Chroma for document retrieval, with a full log trace available at Pastebin and a recommendation to use the latest prebuilt container.

Retriever Confusion in Cohere Toolkit: An anomaly was observed where Langchain retriever was selected by default despite an alternative being specified, as per a user report – though the screenshot provided to evidence this was not viewable.

Production Key Puzzle: A user faced an odd situation where a new production key behaved like a trial key in the Cohere toolkit. However, Cohere support clarified that it is expected behavior in Playground / Chat UI and correct functionality should prevail when used in the API.

Coral Melds Chatbot and ReRank Skills: Introducing Coral Chatbot, which merges capabilities like text generation, summarization, and ReRank into a unified tool available for feedback on its Streamlit page.

Python Decorators, a Quick Byte: A brief explainer titled "Python Decorators In 1 MINUTE" was shared for those seeking an expedited introduction to this pythonic concept - the video is accessible on YouTube.

Latent Space Discord

- Centaur Coders Could Trim the Fat: The integration of AI in development is fostering a trend where Centaur Programmer teams might downsize, potentially leading to heightened precision and efficiency in production.

- DeepSeek-V2 Climbs the Ranks: DeepSeek-V2 announced on Twitter as an open-source MoE model, boasts superior capabilities in code and logical reasoning, fueling discussions on its impact on current AI benchmarks.

- Praising DeepSeek's Accomplishments: Correspondence featured praise for DeepSeek-V2's benchmark success, with an AI News newsletter detailing the model's fascinating enhancements to the AI ecosystem.

- Scouting for Unified Search Synergy: The quest for effective unified search solutions prompts conversations about tools like Glean and a Hacker News discussion on potential open-source alternatives, suggesting a bot to bridge discordant search platforms.

- Crowdsourcing AI Orchestration Wisdom: Curiosity arose around best practices for AI orchestration, with community members consulting on favored tools and techniques for managing complex pipelines involving text and embeddings.

AI Stack Devs (Yoko Li) Discord

- Freeware Faraday Fundamentals: Engineers have confirmed that Faraday can be utilized locally without cost and does not necessitate a cloud subscription; a member's setup with 6 GB VRAM effectively runs the software along with its free voice output capability.

- Enduring Downloads: It was emphasized that assets such as characters and models downloaded from the Faraday platform can be accessed and used indefinitely without any additional charges.

- GPU Might Makes Right: A powerful GPU has been acknowledged as a viable alternative to a cloud subscription for running Faraday unless one prefers to support the developers through subscription.

- Simulation Station Collaboration: In the realm of user-initiated projects, @abhavkedia has sparked a collaboration for creating a fun simulation aligning with the Kendrick and Drake situation, encouraging other members to join in.

- New Playground for AI Enthusiasts: Engineers are invited to try out and potentially integrate Llama Farm with discussions centering around an integration technique that involves AI-Town, and a pivot towards making Llama Farm more universally applicable in systems utilizing the OpenAI API.

Mozilla AI Discord

- Need for Speed on Device? Try Rocket-3B: Experiencing 8 seconds per token, participants sought faster model options, with Rocket-3B providing a notable speed improvement.

- llamafile Caching Matures: Users can prevent redundant model downloads in llamafile by employing the ollama cache via

-m model_name.gguf, enhancing efficiency.

- Port Troubles with AutoGPT and llamafile: Integration issues between AutoGPT and llamafile surfaced; llamafile agent crashed during AP server starts, necessitating a manual workaround.

- Seeking Feedback for AutoGPT-llamafile Integration: The AutoGPT community is actively developing integration with llamafile as indicated by a draft PR, calling for feedback before further work.

Interconnects (Nathan Lambert) Discord

AI Benchmarks in Spotlight: Dr. Jim Fan's tweet spurred a debate on the overvaluation of specific benchmarks and public democracy in AI evaluation, and the member suggested AB testing as a more effective approach.

Benchmarking Across Industries: Drawing parallels to the database sector, one engineer underscored the significance of having standard benchmarks for AI, referencing the approach mentioned in Dr. Fan's tweet.

TPC Standards Explained: In response to inquiries, a member clarified TPC as the Transaction Processing Council, which standardizes database industry benchmarks, referencing specific benchmarks such as TPC-C and TPC-H.

GPT-2's Surprising Comeback: A light-hearted mention by Sam Altman prompted discussion about GPT-2’s return to the LMsys arena, with a tweet snapshot shared showing the humor involved.

Lingering Doubts Over LMsys Direction: Nathan Lambert voiced skepticism towards OpenAI possibly using LMsys for model evaluations and expressed concern about LMsys's resource limitations and potential reputation damage from the latest 'chatgpt2-chatbot' hype.

DiscoResearch Discord

- PR Hits the Chopping Block: A Pull Request was closed without additional context provided, signaling a potential change or halt in a discussed development effort.

- AIDEV Excitement Builds Among Engineers: Attendees of the upcoming AIDEV event are syncing up and showing enthusiasm about meeting in person, but attendees are inquiring about whether they need to bring their own food.

- Mistral Gains Ground in German Discussions: Utilization of the 8x22b Mistral model has been validated for a project, with a focus on deployment and performance. Inquiries into low-latency decoding techniques and the creation of a German dataset for inclusive language sparked dynamic discussions.

- Critical Data Set Crafting for German AI: Suggestions for building a German-exclusive pretraining dataset from Common Crawl have been made, prompting a discussion about which domains to prioritize for inclusion due to their high-quality content.

- Inclusive Language Resources Shared: For those interested in implementing inclusive language modes in models, resources like the INCLUSIFY prototype (https://davids.garden/gender/) and its GitLab repository (https://gitlab.com/davidpomerenke/gender-inclusive-german) have been circulated.

LLM Perf Enthusiasts AI Discord

Anthropic AI's Prompt Tool Piques Interest: Engineers found a new prompt generator tool in the Anthropic console, sparking discussions on its potential and capabilities.

Politeness through AI Crafted: The tool demonstrated its value by successfully rephrasing statements more courteously, marking a thumbs-up for practical AI usage.

Unpacking the AI's Instruction Set: An engineer embarked on uncovering the tool's system prompt, specifically noting the heavy reliance on k-shot examples in its architecture.

Extracting the Full AI Prompt Faces Challenges: Despite hurdles in retrieving the complete prompt due to its considerable size, the enthusiasm in the discussions remained high.

Share and Care Amongst AI Aficionados: A pledge was made by a community member to share the fully extracted prompt with peers, ensuring collective progress in understanding and utilizing the new tool.

Alignment Lab AI Discord

Given the information provided, there is no relevant discussion content to summarize for an AI Engineer audience. If future discussions include technical, detail-oriented content, a summary appropriate for engineers can be generated.

Datasette - LLM (@SimonW) Discord

- GitHub Issue Sparks Plugin Collaboration: A discussion focused on improving a plugin included a link to a GitHub issue, indicating active development for a feature to implement parameterization in testing.

- OpenAI Assistant API Compatibility Question: An inquiry was made about the possibility of using

llmwith the OpenAI Assistant API, expressing concern about missing previously shared information on the topic.

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (170 messages🔥🔥):

- Technical Glitch in GGUF Conversion: Users discussed a confirmed bug in fine-tuning Llama3 models when converting to GGUF, specifically mentioning an issue with retaining fine-tuned data during the conversion. Follow-up and related discussions were referenced with direct links to Reddit and GitHub issues (Reddit Discussion, GitHub Issue).

- Running Aphrodite Engine with Quantization: A user encountered difficulties in running the Aphrodite engine with 4bit bnb quantization and sought advice. Recommendations were given to use fp16 with the

--load-in-4bitflag and to build from the dev branch for better support and features.

- LLM VRAM Requirements for Inference Programs: A link was shared to a VRAM calculator on Hugging Face's Spaces (LLM-Model-VRAM-Calculator) with discussion around its accuracy and compatibility with inference programs such as vLLM, GGUF, and exllama.

- Unsloth Studio Release Delayed: A user inquired about the delay in the release of Unsloth Studio due to issues with phi and llama, looking forward to easier notebook usage. Another user clarified the correct usage of the eos_token in Unsloth’s updated training code for Llama3-8b-instruct.

- Concerns About Model Base Data on Inference Results: The impact of the base model's training data on the results of a fine-tuned model was discussed. Clarification was provided that fine-tuning likely updates weights used for predicting tokens in conversations previously seen by the model.

- LLM Model VRAM Calculator - a Hugging Face Space by NyxKrage: no description found

- Reddit - Dive into anything: no description found

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #random (86 messages🔥🔥):

- Supporter Role Confusion: A member was unsure about their supporter status after a message about a private channel for supporters. It was clarified that supporter roles are present but require membership or a donation of at least $10.

- Tackling Unfiltered LLaMA-3 Outputs: A member expressed concern that LLaMA-3 provided uncensored outputs to questionable prompts. Despite attempts to stop it with system prompts, LLaMA-3 continued to produce explicit content.

- FlashAttention Optimization Discussion: A member highlighted an article on Hugging Face Blog about optimizing attention computation using FlashAttention for long sequence machine learning models, which can reduce memory usage in training.

- Graphics Card Sale Alert: A member shared a Reddit post about a discount on an MSi GeForce RTX 4090 SUPRIM LIQUID X 24 GB Graphics Card, prompting discussions on the advantages of smaller and more efficient cooling systems in newer GPU models.

- AI-Generated Profile Picture Admiration: Discussion ensued about a member's new profile picture, which turned out to be AI-generated. It sparked interest and comparisons to characters from popular media.

- Reddit - Dive into anything: no description found

- Saving Memory Using Padding-Free Transformer Layers during Finetuning: no description found

Unsloth AI (Daniel Han) ▷ #help (412 messages🔥🔥🔥):

- GGUF Upload Queries: Members discussed the ability to upload GGUF models to GPT-4all, with confirmation from another member that it should be possible, and the use of Huggingface's

model.push_to_hub_ggufto do so. - Tokenization Troubles: Conversations highlighted an issue with tokenization across various formats including GGUF, noting differences in responses when using Unsloth for fine-tuning compared to other inference methods.

- Tokenizer Regex Revision: There's an ongoing discussion on GitHub Issue #7062 regarding tokenization problems with LLama3 GGUF conversion, especially relating to LORA adapters; a regex modification has been proposed to address this.

- LORA Adapters and Training: A member successfully used LORA by training with

load_in_4bit = False, saving LORA adapters separately, and converting them using a specific llama.cpp script, which resulted in perfect results for them. - Deployment and Multigpu Questions: Inquiries about deployment using local data for fine-tuning models and the ability to use multiple GPUs for training with Unsloth were discussed, with the current conclusion that Unsloth does not yet support multigpu but it may in the future.

- Reddit - Dive into anything: no description found

- Tweet from bartowski (@bartowski1182): After days of compute (since I had to start over) it's finally up! Llama 3 70B GGUF with tokenizer fix :) https://huggingface.co/bartowski/Meta-Llama-3-70B-Instruct-GGUF In other news, just orde...

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Cooking GIF - Cooking Cook - Discover & Share GIFs: Click to view the GIF

- Supervised Fine-tuning Trainer: no description found

- Reddit - Dive into anything: no description found

- ScottMcNaught - Overview: ScottMcNaught has one repository available. Follow their code on GitHub.

- GGUF breaks - llama-3 · Issue #430 · unslothai/unsloth: Findings from ggerganov/llama.cpp#7062 and Discord chats: Notebook for repro: https://colab.research.google.com/drive/1djwQGbEJtUEZo_OuqzN_JF6xSOUKhm4q?usp=sharing Unsloth + float16 + QLoRA = WORKS...

- llama3-instruct models not stopping at stop token · Issue #3759 · ollama/ollama: What is the issue? I'm using llama3:70b through the OpenAI-compatible endpoint. When generating, I am getting outputs like this: Please provide the output of the above command. Let's proceed f...

- Google Colab: no description found

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- GitHub - ggerganov/llama.cpp at gg/bpe-preprocess: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- llama : fix BPE pre-tokenization (#6920) · ggerganov/llama.cpp@f4ab2a4: * merged the changes from deepseeker models to main branch * Moved regex patterns to unicode.cpp and updated unicode.h * Moved header files * Resolved issues * added and refactored unic...

- readme : add note that LLaMA 3 is not supported with convert.py (#7065) · ggerganov/llama.cpp@ca36326: no description found

- microsoft/Phi-3-mini-128k-instruct · Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #showcase (23 messages🔥):

- LLaMA Variants for Enhanced Knowledge: A new LLaMA-3 variant has been developed for aiding knowledge graph construction, with a focus on structured data like RDF triples. The model, LLaMA-3-8B-RDF-Experiment, is designed to generate knowledge graph triples and specifically excludes non-English data sets.

- Instruct Coder Model Released: A new LLaMA model, rombodawg/Llama-3-8B-Instruct-Coder-v2, has been finished and hosts improvements over its predecessor. The updated model Llama-3-8B-Instruct-Coder-v2 has been retrained to fix previous issues and is expected to perform better.

- Oncord: Professional Website Builder Unveiled: Oncord has been presented as a professional website builder for creating modern websites with integrated tools for marketing, commerce, and customer management. The platform, showcased at oncord.com, offers a read-only demo and is aimed at a mix of technical and non-technical users.

- Open Call for Collaboration on Machine Learning Paper: There's an invitation for the community to contribute to an open source paper predicting IPO success with machine learning. Interested parties can assist with the paper hosted at RicercaMente.

- Startup Discussion and Networking: A dialogue took place regarding startup marketing, strategies, and collaborations. Specifically, one startup Oncord has been discussed, with a focus on enhancing technical flexibility for users, and another concept for measuring trust between viewers and content creators was hinted at but not officially launched yet.

- PREDICT IPO USING MACHINE LEARNING: Open source project that aims to trace the history of data science through scientific research published over the years

- rombodawg/Llama-3-8B-Instruct-Coder-v2 · Hugging Face: no description found

- M-Chimiste/Llama-3-8B-RDF-Experiment · Hugging Face: no description found

- The miR-200 family is increased in dysplastic lesions in ulcerative colitis patients - PubMed: UC-Dysplasia is linked to altered miRNA expression in the mucosa and elevated miR-200b-3p levels.

- Oncord - Digital Marketing Software: Website, email marketing, and ecommerce in one intuitive software platform. Oncord hosted CMS makes it simple.

- no title found: no description found

Unsloth AI (Daniel Han) ▷ #suggestions (3 messages):

- Fine-Tuning LVLM Request: A member expressed a desire for a generalised way of fine-tuning Large Vision Language Models (LVLM).

- Call for Moondream Support: Another member requested support for moondream, noting that it currently only finetunes the phi 1.5 text model, and shared the GitHub notebook for moondream finetuning.

Link mentioned: moondream/notebooks/Finetuning.ipynb at main · vikhyat/moondream: tiny vision language model. Contribute to vikhyat/moondream development by creating an account on GitHub.

Nous Research AI ▷ #ctx-length-research (2 messages):

- In Quest for Data Collection Progress: A member inquired about the current count of pages collected in the cortex project, seeking an update on the data accumulation milestone.

- Navigating the Void: A link was posted presumably related to the ctx-length-research channel, but the content or context of the link is inaccessible as it was referenced as <<

>> .

Nous Research AI ▷ #off-topic (6 messages):

- Innovative Cooking Convenience Unveiled: A YouTube video titled "Recipic Demo" was shared, showcasing a website where users can upload their available ingredients to receive meal recipes. Intrigue is sparked for those seeking culinary inspiration with what they have on hand. Watch "Recipic Demo"

- Delving into Enhancements for Multimodal Language Models: A member inquires about ways to significantly improve multimodal language models, mentioning the integration of JEPA as a potential enhancement, though a repository or model for such integration hasn't been found.

- Multimodal Collaboration Envisioned: In response to enhancing multimodal language models, another member suggests the idea of tools that enable language models to utilize JEPA models, indicating an interest in cross-model functionality.

- Push for Higher Resolution in Multimodal Language Models: Advancing multimodal models can involve increasing their resolution to better interpret small text in images, a member suggests. This advancement could widen the scope of visual data that language models can effectively understand and incorporate.

Link mentioned: Recipic Demo: Ever felt confused about what to make for dinner or lunch? What if there was a website where you could just upload what ingredients you have and get recipes ...

Nous Research AI ▷ #interesting-links (7 messages):

- AQLM Pushes the Envelope with Llama-3: The AQLM project introduces more prequantized models, such as Llama-3-70b and Command-R+, enhancing the accessibility of open-source Large Language Models (LLMs). In particular, Llama-3-70b can run on a single RTX3090, showcasing significant progress in model quantization.

- Orthogonalization Techniques Create Kappa-3: Phi-3's weights have been orthogonalized to reduce model refusals, released as the Kappa-3 model. Kappa-3 comes with full precision (fp32 safetensors) and a GGUF fp16 option, although questions remain about its performance on prompts requiring rule compliance.

- Deepseek AI Celebrates a Win: A share from Deepseek AI's Twitter points to their success, triggering a light-hearted joke about family resemblances in AI achievements.

- Revolutionizing Healthcare with Deterministic Quoting: Invetech's project introduces "Deterministic Quoting" to address the risk of LLMs generating hallucinated quotations in sensitive fields like healthcare. With this technique, only verbatim quotes from the source material are displayed with a blue background, aiming to enhance trust in AI's use in medical record processing and diagnostics. Details and visual provided.

- Hallucination-Free RAG: Making LLMs Safe for Healthcare: LLMs have the potential to revolutionise our field of healthcare, but the fear and reality of hallucinations prevent adoption in most applications.

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

Nous Research AI ▷ #general (527 messages🔥🔥🔥):

<ul>

<li><strong>AI Chatbot Comparison and Speculation</strong>: Members discussed the performance of various AI models, with particular focus on function calling capabilities. **Llama 3 70b** was deemed superior to **Mistral 8x22b** for function calling, despite the latter's "superior function calling" marketing.</li>

<li><strong>The Return of GPT-2 in LMSYS</strong>: There's buzz around the return of **GPT-2** to LMSYS with significant improvements, and speculation on whether it's a new model being A/B tested or something else, such as GPT-4Lite or a more cost-efficient GPT alternative.</li>

<li><strong>Testing of the Hermes 2 Pro Llama 3 8B Model</strong>: A member requested testing of the **Hermes 2 Pro Llama 3 8B** model's function calling ability up to the 32k token limit, but practical limitations due to time and resource constraints were mentioned.</li>

<li><strong>Chatbot Names, Open Source Hopes, and GPT Hype Debates</strong>: The unique naming of chatbot models (like GPT-2 chatbot) led to discussions and jokes about their capabilities and the potential for an OpenAI model becoming open source. There were both skepticism and anticipation regarding the next big AI development and its release timeline.</li>

<li><strong>YAML vs. JSON in Model Input</strong>: A brief mention was made on the preference for YAML over JSON for model inputs due to better human readability and token efficiency.</li>

</ul>

- cognitivecomputations/Meta-Llama-3-120B-Instruct-gguf · Hugging Face: no description found

- OpenAI exec says today's ChatGPT will be 'laughably bad' in 12 months: OpenAI's COO said on a Milken Institute panel that AI will be able to do "complex work" and be a "great teammate" in a year.

- Tweet from Maxime G, M.D (@maximegmd): Internistai 7b: Medical Language Model Today we release the best 7b medical model, outperforming GPT-3.5 and achieving the first pass score on the USMLE! Our approach allows the model to retain the s...

- Mlp Relevant GIF - MLP Relevant Mylittlepony - Discover & Share GIFs: Click to view the GIF

- Support for grammar · Issue #1229 · vllm-project/vllm: It would be highly beneficial if the library could incorporate support for Grammar and GBNF files. https://github.com/ggerganov/llama.cpp/blob/master/grammars/README.md

- TRI-ML/mamba-7b-rw · Hugging Face: no description found

- Tweet from Kyle Mistele 🏴☠️ (@0xblacklight): btw I tested this with @vllm_project and it works to scale @NousResearch's Hermes 2 Pro Llama 3 8B to ~32k context with great coherence & performance (I had it summarizing @paulg essays) Download...

- Update Server's README with undocumented options for RoPE, YaRN, and KV cache quantization by K-Mistele · Pull Request #7013 · ggerganov/llama.cpp: I recently updated my LLama.cpp and found that there are a number of server CLI options which are not described in the README including for RoPE, YaRN, and KV cache quantization as well as flash at...

- NousResearch/Hermes-2-Pro-Llama-3-8B · Hugging Face: no description found

- NousResearch/Hermes-2-Pro-Llama-3-8B · Hugging Face: no description found

- GitHub - huggingface/lerobot: 🤗 LeRobot: State-of-the-art Machine Learning for Real-World Robotics in Pytorch: 🤗 LeRobot: State-of-the-art Machine Learning for Real-World Robotics in Pytorch - huggingface/lerobot

- Extending context size via RoPE scaling · ggerganov/llama.cpp · Discussion #1965: Intro This is a discussion about a recently proposed strategy of extending the context size of LLaMA models. The original idea is proposed here: https://kaiokendev.github.io/til#extending-context-t...

- Port of self extension to server by Maximilian-Winter · Pull Request #5104 · ggerganov/llama.cpp: Hi, I ported the code for self extension over to the server. I have tested it with a information retrieval, I inserted information out of context into a ~6500 tokens long text and it worked, at lea...

Nous Research AI ▷ #ask-about-llms (12 messages🔥):

- LlamaCpp Update Resolves Issue: An issue with LlamaCpp not generating the

<tool_call>token was resolved by updating to the latest version. The system prompt now works as intended. - LoRA Tuning Challenges on A100: A member is experiencing unexpectedly long training times with LoRA llama 3 8b, where each step takes approximately 500 seconds on an A100 using axolotl, prompting them to consider debugging due to others having much faster training times.

- Comparative Training Speed Insights: For Llama2 7B, a member reported it took roughly 3 minutes for 1,000 iterations using litgpt, indicating a significant speed difference in training times compared to what another member experienced with LoRA.

- Best Practices for Teaching GPT Examples: A member asked for advice on the best method to train GPT with examples, contemplating between providing a file with examples and structuring the examples as repeated user-assistant message pairs.

- Attention Paper Implementation Feedback Request: A member sought feedback on their reimplementation of the "Attention is All You Need" paper, sharing their GitHub repository at https://github.com/davidgonmar/attention-is-all-you-need. They're considering improvements like using torch's scaled dot product and pretokenizing.

Nous Research AI ▷ #bittensor-finetune-subnet (11 messages🔥):

- New Miner Repo Stuck: A user reported their repository commitment to the mining pool was not downloading for hours, indicating potential network issues for newcomers.

- Network Awaiting Critical PR: A user mentioned the Bittensor network is currently non-operational until a pending pull request (PR) is merged, which is crucial for fixing the network.

- Timeframe for Network Fix Uncertain: When asked, a user stated that the PR would be merged "soon", but clarified they have no control over the PR review process, leaving an ambiguous timeline.

- Network Issues Stall Model Validation: Clarification was given that new commits or models submitted to the network will not be validated until the aforementioned PR is resolved, directly impacting miner operations.

- Seeking GraphQL Service Information: One user inquired about resources or services related to GraphQL for Bittensor subnets, indicating a possible need for developer support or documentation.

Nous Research AI ▷ #world-sim (7 messages):

- World Sim Access Issues Persist: A member expressed difficulties with accessing worldsim.nousresearch.com, noting that the site is still not operational with a simple "still not work" comment.

- Expressing Disappointment: In response to the ongoing issue, there was another expression of disappointment, characterized by multiple frowning emoticons.

- Call for Simulation: A brief message stating "plz sim" was posted, possibly indicating a desire to start or engage with a simulation.

- Inquiry about World Sim: A member inquired, "What's world sim? Where can i find more info? and What's a world-sim role?" showing interest in the simulation aspect of the channel.

- Guidance to Information: In response to questions about World Sim, a member directed others to a specific channel <#1236442921050308649> for a pinned post that likely contains the relevant information.

Link mentioned: worldsim: no description found

Stability.ai (Stable Diffusion) ▷ #general-chat (421 messages🔥🔥🔥):

- Dissecting Diffusion Models: Members exchanged insights on the difference between Hyper Stable Diffusion, a finetuned or LoRA-ed model that operates quickly, and Stable Diffusion 3, a distinct model not equivalent to Hyper Stable Diffusion. Links to explanatory resources were not provided.

- Seeking Stable Diffusion Clarity: Conversations circled around Stable Diffusion no longer being open-source and the potential non-release of SD3. Users discussed the importance of downloading and saving models and adapters amid fears that AI's open-source era might be ending.

- Optimizing Realistic Human Models: A discussion on finding the best realistic human model with flexibility covered various model options, with suggestions to avoid heavy bias in models like those from civitai to prevent sameness in generated people.

- Dreambooth and LoRA Explorations: Users engaged in verbose consultation and detailed discussions about how to best use Dreambooth and LoRA training for Stable Diffusion, debating the best approach to creating unique faces and styles.

- Adventures in Upscaling: Queries about the most effective upscaler led to discussions about various upscaling models and workflows such as RealESRGAN_x4plus and 4xUltrasharp, with users sharing personal experiences and approximation of preferences.

- How to use Stable Diffusion - Stable Diffusion Art: Stable Diffusion AI is a latent diffusion model for generating AI images. The images can be photorealistic, like those captured by a camera, or in an artistic

- How to Install Stable Diffusion - automatic1111: Part 2: How to Use Stable Diffusion https://youtu.be/nJlHJZo66UAAutomatic1111 https://github.com/AUTOMATIC1111/stable-diffusion-webuiInstall Python https://w...

- Stable Cascade Examples: Examples of ComfyUI workflows

- Stylus: Automatic Adapter Selection for Diffusion Models: no description found

- LORA training EXPLAINED for beginners: LORA training guide/tutorial so you can understand how to use the important parameters on KohyaSS.Train in minutes with Dreamlook.AI: https://dreamlook.ai/?...

- THE OTHER LoRA TRAINING RENTRY: Stable Diffusion LoRA training science and notes By yours truly, The Other LoRA Rentry Guy. This is not a how to install guide, it is a guide about how to improve your results, describe what options d...

LM Studio ▷ #💬-general (107 messages🔥🔥):

- Server Logging Off Option Request: A user expressed discomfort with the inability to turn off server logging via the GUI in LM Studio, emphasizing a desire for increased privacy in their app development process.

- Recognition of Prompt Engineering Value: The legitimacy of prompt engineering as a critical and valuable skill in the tech industry was acknowledged, with references indicating it as a lucrative career and a pivotal aspect in producing high-quality outputs from LLMs.

- Headless Mode Operation for LM Studio: Users discussed the feasibility of operating LM Studio in a headless mode, where a user demonstrated interest in starting the server mode via command line rather than GUI, and others provided insights on using lms CLI as a potential solution.

- Phi-3 vs. Llama 3 for Quality Outputs: A debate emerged over the effectiveness of the Phi-3 model compared to Llama 3, particularly concerning the task of summarizing content and generating FAQs, with users sharing settings and strategies to improve outcomes.

- Troubleshooting Model Crashes and Configuration: Multiple users reported issues regarding model performance in LM Studio, with problems such as high RAM consumption despite sufficient VRAM, unexpected behavior after updates, and errors when loading models. Community members responded with suggestions such as checking drivers, adjusting model configs, and evaluating system specs.

- Welcome | LM Studio: LM Studio is a desktop application for running local LLMs on your computer.

- How I Won Singapore’s GPT-4 Prompt Engineering Competition: A deep dive into the strategies I learned for harnessing the power of Large Language Models (LLMs)

- GitHub - lmstudio-ai/lms: LM Studio in your terminal: LM Studio in your terminal. Contribute to lmstudio-ai/lms development by creating an account on GitHub.

LM Studio ▷ #🤖-models-discussion-chat (21 messages🔥):

- Llama Toolkit Update Affects Command R+ Tokenizer: Changes in llamacpp upstream for llama3 broke Command R and Command R+'s tokenizer, with additional reports of incorrect quantization. Updated quants for Command R+ can be found at Hugging Face Co Model Repo, and a note that

do not concatenate splitsbut rather usegguf-splitfor file merging, if necessary.

- Problems Fine-tuning Hermes-2-Pro L3 Noted: Despite popularity, fine-tuning Hermes-2-Pro L3 still presents issues, with an opinion expressed that it's better than L3 8b but not as improved over its predecessor as hoped.

- Hermes-2-Pro L3 in Action: Running the model with 8bit MLX showed impressive handling of incoherent input, with a quoted example testing the AI's response to disclosing potentially unethical information. A user queried about applying a "jailbreak" to remove content safeguards.