[AINews] Jamba: Mixture of Architectures dethrones Mixtral

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/27/2024-3/28/2024. We checked 5 subreddits and 364 Twitters and 24 Discords (374 channels, and 4693 messages) for you. Estimated reading time saved (at 200wpm): 529 minutes.

It's a banner week for MoE models, with DBRX yesterday and Qwen releasing a small MoE model today. However we have to give the top spot to yet another monster model release...

The recently $200m richer AI21 labs released Jamba today (blog, HF, tweet, thread from in person presentation). The headline details are:

- MoE with 52B parameters total, 12B active

- 256K Context length

- Open weights: Apache 2.0

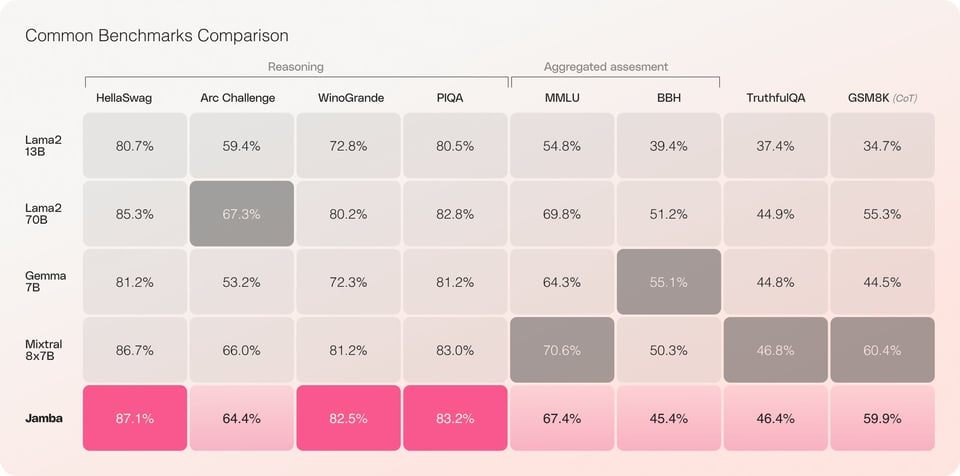

It is notable both for its performance in its weight class (we'll come back to what "weight class" now means):

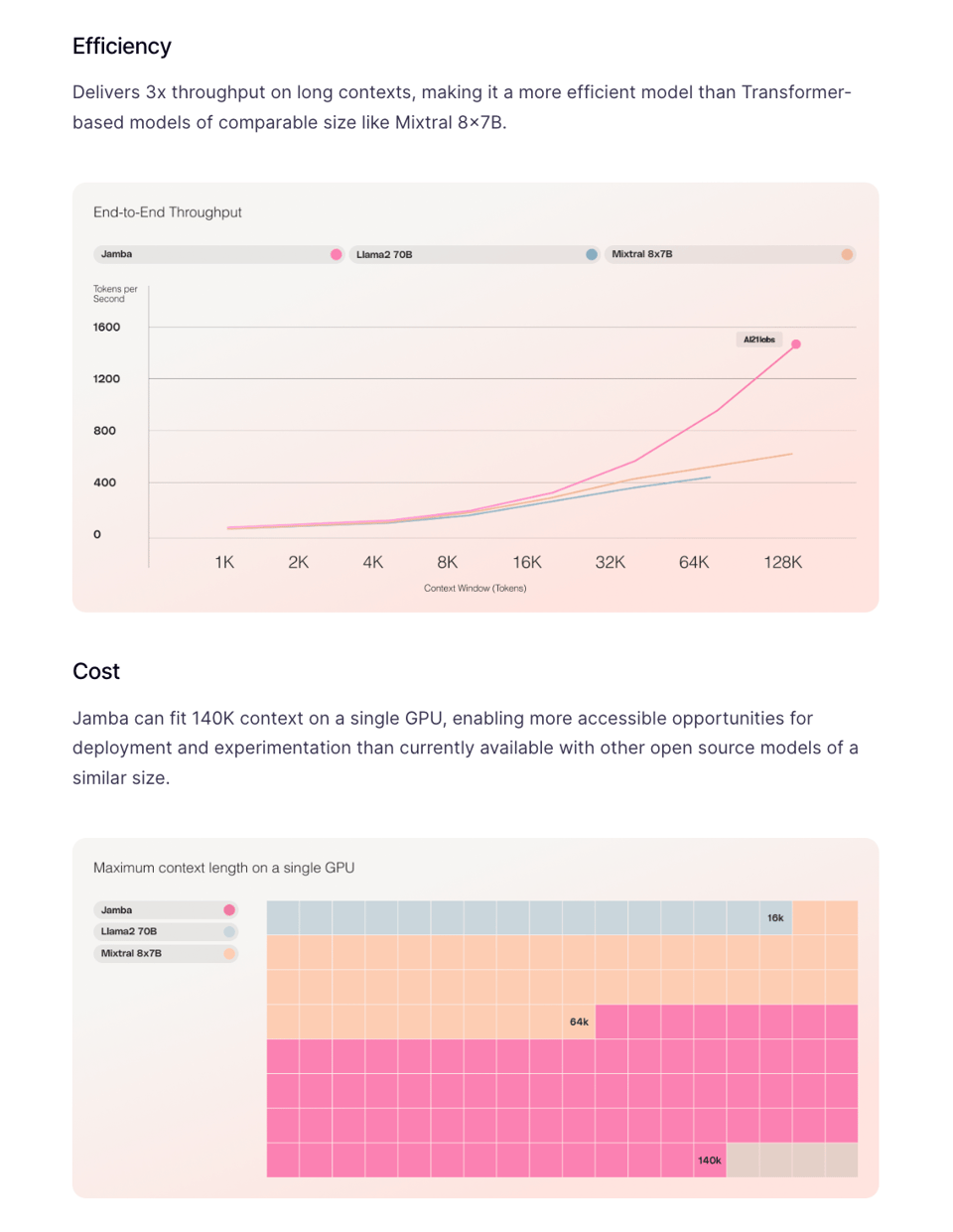

and for its throughput + memory requirements in long context scenarios:

re: weight class: It seems every design decision was taken to maximize the performance gained from a single A100:

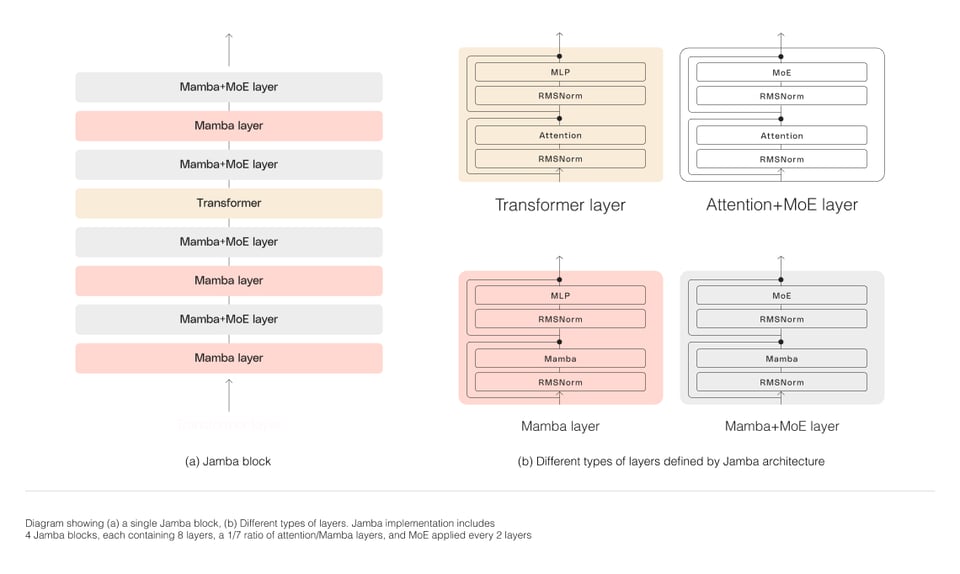

"As depicted in the diagram below, AI21’s Jamba architecture features a blocks-and-layers approach that allows Jamba to successfully integrate the two architectures. Each Jamba block contains either an attention or a Mamba layer, followed by a multi-layer perceptron (MLP), producing an overall ratio of one Transformer layer out of every eight total layers.

The second feature is the utilization of MoE to increase the total number of model parameters while streamlining the number of active parameters used at inference—resulting in higher model capacity without a matching increase in compute requirements. To maximize the model’s quality and throughput on a single 80GB GPU, we optimized the number of MoE layers and experts used, leaving enough memory available for common inference workloads.

In a step ahead of Together's preceding StripedHyena, Jamba juices up the classic Mamba architecture with transformer and MoE layers:

They released a base model, but it comes ready with Huggingface PEFT support. This actually looks like a genuine Mixtral competitor, and that's only good things for the open AI community.

Table of Contents

- AI Reddit Recap

- AI Twitter Recap

- PART 0: Summary of Summaries of Summaries

- PART 1: High level Discord summaries

- LM Studio Discord

- Unsloth AI (Daniel Han) Discord

- Nous Research AI Discord

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Latent Space Discord

- Eleuther Discord

- OpenAI Discord

- HuggingFace Discord

- OpenInterpreter Discord

- Modular (Mojo 🔥) Discord

- OpenRouter (Alex Atallah) Discord

- CUDA MODE Discord

- LlamaIndex Discord

- OpenAccess AI Collective (axolotl) Discord

- LAION Discord

- tinygrad (George Hotz) Discord

- LangChain AI Discord

- Interconnects (Nathan Lambert) Discord

- DiscoResearch Discord

- Alignment Lab AI Discord

- LLM Perf Enthusiasts AI Discord

- Skunkworks AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

Large Language Models

- /r/MachineLearning: DBRX: A New Standard for Open LLM - 16 experts, 12B params per expert, 36B active params, trained for 12T tokens

- /r/LocalLLaMA: Databricks reveals DBRX, the best open source language model - surpasses grok-1, mixtral, and other open weight models

- /r/LocalLLaMA: RAG benchmark of databricks/dbrx - dbrx does not do well with RAG in real-world testing, about same as gemini-pro

Stable Diffusion & Image Generation

- Animatediff is reaching a whole new level of quality - example by @midjourney_man using img2vid workflow

- /r/StableDiffusion: Attention Couple for Forge - easily generate multiple subjects, no more color bleeds or mixed features

- FastSD CPU v1.0.0 beta 28 release - ultra fast image generation (0.82 seconds) with SDXS512-0.9 OpenVINO on CPU

- /r/StableDiffusion: Implicit Style-Content Separation using B-LoRA - leverages LoRA to implicitly separate style and content components of a single image

- /r/StableDiffusion: SUPIR is exceptional even with high-res source images - SUPIR adds incredible detail even when upscaling high resolution images

AI Assistants & Agents

- /r/OpenAI: AI coding changed my life. Need advice going forward. - using ChatGPT to learn web development and make money outside 9-5 job

- Will ChatGPT eventually "learn" from it's own content it previously created, which could lead to it being wrong about facts sometime in the future? - concerns about ChatGPT being trained on its own outputs leading to inaccuracies

- /r/LocalLLaMA: Created an AI Agent which "Creates Linear Issues using TODOs in my last Code Commit" . Got it to 90% accuracy. - connecting Autogen with Github and Linear to automatically create issues from code TODOs

- /r/OpenAI: Built an AI Agent which "Creates Linear Issues using TODOs in my last Code Commit". - agent uses code context to understand TODOs, assign to right person/team/project and create issues in Linear

AI Hardware & Performance

- /r/MachineLearning: Are data structures and leetcode needed for Machine Learning Researcher/Engineer jobs and interviews?

- Microsoft plans to offload some of Windows Copilot's features to local hardware, but will use NPUs only.

- /r/LocalLLaMA: With limited budget, is it worthy to go into AMD GPU/ecosystem now, given Tiny Corp released the tinybox with AMD and Lisa Su's recent speech at the AI PC summit at Beijing?

- /r/LocalLLaMA: Looks like DBRX works on Apple Silicon MacBooks! - takes about 66GB RAM at 4 bit quant on M3 96GB, about 6 tokens per second

Memes & Humor

- Me and the current state of AI

- /r/OpenAI: When 'Open'AI's lawyers ask me if used their models' generated output to train a competing model: [deleted]

- /r/LocalLLaMA: Open AI 3 Laws of Robotics

- When you are the 60 Billion $$ Man but also a Doctor.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

TO BE COMPLETED

PART 0: Summary of Summaries of Summaries

- Databricks Unveils DBRX: Databricks introduced DBRX, an MoE LLM with 132 billion parameters, and stoked debates on the limits of pretraining. The AI community is abuzz with its 12 trillion token training and potential, comparing it to models like Mistral Medium and gauging the diminishing returns of scale.

- Mixture of Innovations with Jamba and Qwen Models: AI21 Labs introduced Jamba, a SSM-Transformer hybrid with a 256K context window, while Qwen announced Qwen1.5-MoE-A2.7B, which punches above its parameter count by matching 7B models' performance. The releases spark discussions about accessibility, performance, and the future trajectory of AI scaling.

- AI Community Explores Vtubers and AI Consoles: Talks flared about the AI Tokyo event and the concept of a human-AI collaborative model for Vtubers to boost engagement. Speculations flew around Truffle-2, a potential AI-centric console, following the buzz about its predecessor's moderate specs at AI Tokyo.

- GPUs and Tokens: Performance Pursuits: Engineers shared insights on TensorRT for efficient inference on large models, debated about Tensor Parallelism limits, and revealed new methods like smoothquant+ and fp8 e5m2 cache. Exchanges also focused on Claude's regional access and perplexities in fine-tuning loss curves while training Deepseek-coder-33B models.

- RAG, Retrieval, and Dataset Discourse: The AI community delved into Retrieval Augmented Generation (RAG) performance, debating output quality and scrutinizing CoT's impact on retrieval effectiveness. Proposals for XML tag standardization in prompt inputs were made, looking at structured inputs as a potential staple for enhancing outcomes.

PART 1: High level Discord summaries

LM Studio Discord

- "Loaded from Chat UI" Quirk Fixed: The LM Studio 0.2.18 update addressed a bug where API queries returned a model ID of "Loaded from Chat UI," preventing access to the real model name, now fixed in beta version 0.2.18.

- Scaling Up with Merged Might: The merger of LongAlpaca-70B-lora and lzlv_70b_fp16_hf yielded a 32K token, linear rope scaling model heralded by ChuckMcSneed despite a 30% drop in performance at 8 times context length; see the merged model here.

- Cutting-Edge Configs for LM Studio Enthusiasts: LM Studio 0.2.18 enriches user experience with features like an 'Empty Preset' for Base/Completion models, and 'monospace' chat style, and the update issues have been ironed out as per announcements.

- Pumping Up Power for Peak AI Performance: Discussions around hardware for AI work suggested that the NVIDIA 3090 and 4090, or dual A6000, cards provide significant VRAM and CUDA prowess, with monitor quality also a hot topic like this MSI monitor. A ballast of 1200-1500w PSUs were recommended for these beefy setups.

- Rendering with ROCm Beta, Users Tackle Turbulence: LM Studio's ROCm 0.2.18 Beta targeted GPU offload issues but users reported mixed results with model loading and GPU utilization. Interested parties can explore the ROCm beta here, and seek help within the community to nail down nuanced issues or revert to a standard version when needed.

Unsloth AI (Daniel Han) Discord

Breaking the Ice with Unsloth AI: Engineers have embraced tips and tricks for using Unsloth's template system, with the community finding practical benefits like reduced model output anomalies. Regular updates (2-3 times weekly) ensure that performance improvements continue, while installation instructions optimize setup times on Kaggle.

Gaming Mingle Amidst Coding Jungle: Technical exchanges were accompanied by lighter conversations, including game developer talks and shared gaming experiences—in particular, constructing a demo app using AI assistance, bridging entertainment with machine learning.

Layering it on Thick: Unsloth AI discussions have calved off into deeper explorations, including leveraging optimizer adjustments in resuming fine-tuning from checkpoints and proper chat template integration for various LLMs. The community also spotlighted key resources—Github repositories, Colab notebooks, and educational YouTube videos—for fine-tuning LLMs.

Modeling Showcase Spotlight: The community proudly presented adaptations, like converting the Lora Adapter for Tinyllama, and shared details of the Mischat model, which was fine-tuned using Unsloth's methodologies. A member introduced an AI digest on their Substack blog, summarizing recent AI developments.

Quantum Leap in Quantization: AI enthusiasts investigated specialized techniques such as LoRA training conversation, embedding quantization for faster retrieval, and the emerging QMoE compression framework. A newly introduced LISA strategy that streamlines fine-tuning across layers attracted significant attention for its memory efficiency.

Nous Research AI Discord

- DBRX Draws the Spotlight with Impressive Scale: Databricks announced DBRX, an MoE LLM with 132 billion parameters, and stoked debates on the limits of pretraining. The AI community is abuzz with its 12 trillion token training and potential, comparing it to models like Mistral Medium and gauging the diminishing returns of scale.

- Mixture of Innovations with Jamba and Qwen Models: AI21 Labs introduced Jamba, a SSM-Transformer hybrid with a 256K context window, while Qwen announced Qwen1.5-MoE-A2.7B, which punches above its parameter count by matching 7B models' performance. The releases spark discussions about accessibility, performance, and the future trajectory of AI scaling.

- AI Community Explores Vtubers and AI Consoles: Talks flared about the AI Tokyo event and the concept of a human-AI collaborative model for Vtubers to boost engagement. Speculations flew around Truffle-2, a potential AI-centric console, following the buzz about its predecessor's moderate specs at AI Tokyo.

- GPUs and Tokens: Performance Pursuits: Engineers shared insights on TensorRT for efficient inference on large models, debated about Tensor Parallelism limits, and revealed new methods like smoothquant+ and fp8 e5m2 cache. Exchanges also focused on Claude's regional access and perplexities in fine-tuning loss curves while training Deepseek-coder-33B models.

- RAG, Retrieval, and Dataset Discourse: The AI community delved into Retrieval Augmented Generation (RAG) performance, debating output quality and scrutinizing CoT's impact on retrieval effectiveness. Proposals for XML tag standardization in prompt inputs were made, looking at structured inputs as a potential staple for enhancing outcomes.

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion 3 Release on the Horizon: The engineering community is abuzz with the expected rollout of Stable Diffusion 3 (SD3) towards late April or May, featuring enhanced capabilities like inpainting. A 4 to 6 week ETA from March 25th has led to rampant speculation on new models and features, as inferred from Stability.ai's CTO's remarks.

- Gauging VRAM for Language Models: Conversations are heating up about the VRAM demands for operating language models such as Mixtral, with debates around using quantization as a strategy to reduce memory usage without compromising quality. Engineers are especially keen on quantized models tailored for 10GB Nvidia GPU cards, indicating a push towards more accessible high-performance computing.

- New User Guidance & Tool Suggestions: The Discord space is not just for seasoned veterans; new users are getting tips on image generation via Stable Diffusion, with recommendations pointing to interfaces like Forge and Automatic1111, as well as leonardo.ai for enhancing the creative process.

- Refining Image Prompt Quality: A technical thread has highlighted the importance of prompt engineering, stressing that a more conversational sentence structure can yield better results than comma-separated keywords. This is especially relevant when dealing with sophisticated models like SDXL which may be sensitive to the nuances of prompt phrasing.

- Efficiency in Model Quantization Discussed: The guild members briefly engaged in discussing the transformer architecture's efficiency and the effectiveness of quantization. These AI connoisseurs suggest that despite transformers' inherent inefficiencies, models like SD3 are showing promising results when quantized, potentially allowing for reduced memory footprints.

Links from the discussion included resources and tools:

- Character Consistency in Stable Diffusion by Cobalt Explorer

- leonardo.ai

- Arcads for video ads

- Pixel Art Sprite Diffusion Safetensors

- Stable Diffusion web UI for AMD GPUs

- GitHub - lllyasviel/Fooocus

- StableDiffusionColabs on Google Colabs’ free tier

- Running Stable Diffusion on an 8Gb M1 Mac

Perplexity AI Discord

DBRX Breaks Through to Perplexity Labs: Databricks' DBRX language model has made waves by outclassing GPT-3.5 and proving competitive with Gemini 1.0 Pro, with favorable performance in math and coding benchmarks, which can be explored at Perplexity Labs.

The Developer's Dilemma: Perplexity vs. Claud: Engineers have debated whether Perplexity Pro or Claud Pro better suits their workflow, with a bias toward Perplexity for its transparency. Various model strengths like Claude 3 Opus were scrutinized, while Databricks' DBRX was singled out for its impressive math and coding capabilities.

Perplexity API Performs a Speedrun: The sonar-medium-online model showcased an unexpected speed increase, reaching or even surpassing that of sonar-small-online with higher quality output. Yet, inconsistencies surfaced with API responses compared to the Perplexity web interface, like the failure to retrieve "Olivia Schough spouse" data, prompting discussion on whether extra parameters could correct this.

Sharing Insights and Laughs: Community interaction included debunking a supposed Sora text-to-video model as a rickroll, emphasizing the importance of shareability for threads, and exploring varied search queries on Perplexity AI, ranging from coherent C3 models to French translations for "Perplexityai."

Vision Support Still in the Dark: Despite inquiries, Vision support for the API remains absent, as indicated by humorous responses about the current lack of even citations, suggesting no immediate plans for inclusion.

Latent Space Discord

Claude Takes the Terraform Crown: In the IaC domain, Claude has outshined its peers in generating Terraform scripts, with a comparison blog post on TerraTeam's website spotlighting its superior performance. The meticulous comparison can be accessed at TerraTeam's blog.

DBRX-Instruct Flexes Its Parameters: Databricks has stepped into the spotlight with DBRX-Instruct, a 132 billion parameter Mixture of Experts model that underwent a costly ($10M) and lengthy (2 months) training on 3072 NVIDIA H100 GPUs. Insights about DBRX-Instruct are split between Vitaliy Chiley's tweet and Wired's article.

Licensing Logistics Linger for DBRX: The community scrutinized DBRX's licensing terms, with members strategizing on how to best engage with the model within its usage boundaries. Key insight came from the shared legal concerns and strategies, including Amgadoz's spotlight on Databricks' open model license.

TechCrunch Questions DBRX's Market Muscle: A discussion was sparked by TechCrunch's critical analysis of Databricks' $10M DBRX investment, contrasting it against the already established OpenAI's GPT series. TechCrunch challenged the competitive edge provided by such investments, and a full read is recommended at TechCrunch.

Emotionally Intelligent Chatbots Get High Fives: Hume AI captured attention with its emotionally perceptive chatbot, adept at analyzing and responding to emotions. This disruptive emotional detection capability prompted a mix of excitement and practical use case discussions among members, including 420gunna sharing the Hume AI demo and a related CEO interview.

Mamba Slithers into the Spotlight: In discussions, the Mamba model was singled out for its innovation in the Transformer space, addressing efficiency woes effectively. The potent conversation revolved around Mamba's prowess and architectural decisions aimed at enhancing computational efficiency.

Fine-Tuning Finesse: The topic of fine-tuning Whisper, OpenAI's automatic speech recognition model, was dissected, with consensus that it's advisable when dealing with scarce language resources or specialized terminology in audio.

Cosine Similarity Crosstalk: The group engaged in technical tete-a-tetes over the use of cosine similarity in embeddings, casting doubts on its effectiveness as a semantic similarity measure. The pivot of the discussion was the paper titled "Is Cosine-Similarity of Embeddings Really About Similarity?", which members used as a reference point.

Screen Sharing Snafus: Technical trials with Discord's screen sharing triggered community troubleshooting, including workaround sharing and a collective call for Discord to enhance this feature. Members shared practical solutions to address the ongoing screen sharing issues.

Eleuther Discord

- Claude 3 Conscious of Evaluations: Anthropic's Claude 3 has demonstrated meta-awareness during testing, identifying when it was being evaluated and commenting on the pertinence of processed information.

- Big Plays by DBRX: Databricks has introduced DBRX, a powerful language model with 132 billion total parameters with 36 billion active parameters trained on a 12 trillion token corpus. Discussions have focused on its architecture which includes 16 experts and a 32k context length, its comparative performance and usability, which is creating buzz due to its aptitude in outperforming models like Grok.

- Token Efficiency Debate: Engineers are debating the actual efficiency of larger tokenizers, considering that a bigger tokenizer count may not automatically translate to improved performance and could lead to specific token representation issues.

- Layer-Pruning Minimal Impact Shown: Research has found minimal performance loss with up to 50% layer reduction in LLMs using methods like QLoRA, enabling fine-tuning on a single A100 GPU.

- Jamba Juices Up Models Fusion: AI21 Labs has released a new model dubbed Jamba, combining Structured State Space models with Transformers, operating with 12 billion active parameters and a noteworthy 256k context length.

OpenAI Discord

- GPT-4: A Beacon of Possibility or Just a Tweet Tease?: Enthusiasm is mixed with anticipation as users react to an OpenAI tweet hinting at new developments, even as concerns about the delayed availability of services like GPT-4 in Europe are voiced.

- ChatGPT for Code: Tips shared include instructing ChatGPT to avoid ellipses and incomplete code segments, contributing to more reliable outputs in coding-related tasks. Considered comparisons place models like Claude 3 in a favorable light against others for coding efficiency.

- All Eyes on Gemini Advanced: The community shows a less-than-impressed stance towards Google's Gemini Advanced, with complaints about sluggish response times when paralleled with GPT-4's performance, despite aspirations for future improvements based on upcoming trial stress tests.

- AI's Industrial March: Notable is OpenAI and Microsoft's strategy of integrating their AI offerings into European industries, with possible links to tools such as Copilot Studio and the wider Microsoft suite, despite certain expressed frustrations with Copilot's UX.

- Prompt Engineering Pearls: AI enthusiasts discuss various strategies for getting optimal results when using LLMs, including breaking prompts into chunks for better issue identification, crafting prompts that emphasize what to do over what not to do, and articulating needs for specific outputs in tasks like visual descriptions or translations while maintaining HTML integrity.

HuggingFace Discord

Stable Diffusion Steps up for Solo Performances: Discussions on Stable Diffusion focused on generating new images from a list, but the existing pipeline handles single images. For personalized text-to-image models, DreamBooth emerged as a favorite, while the Marigold depth estimation pipeline is set for integration with new modalities like LCM.

AI Engineers Seek Smarter NLP Navigation: Engineers sought roadmaps for mastering NLP in 2024, with recommendations including "The Little Book of Deep Learning" and Karpathy's "Zero to Hero" playlist. Others explored session-based recommendation systems, questioning the efficacy of models like GRU4Rec and Bert4Rec, while loading errors with 'facebook/bart-large-cnn' prompted calls for help. Suggestions for managing the infinitely generative behavior of LLMs included Supervised Fine Tuning (SFT) and tweaks to repetition penalties.

Accelerating GPU Gains with MPS and Sagemaker: macOS users gained an advantage with MPS support now part of key training scripts, while a discussion on AWS SageMaker highlighted NVIDIA Triton and TensorRT-LLM for benchmarking GPU-utilizing model latency, cost, and throughput.

Innovations and Resources in the Computer Vision Sphere: Amidst efforts to utilize stitched images for training models, individuals also wrestled with fine-tuning DETR-ResNet-50 on specific datasets and investigated zero-shot classifier tuning for beginners. There was also an SOS for non-gradio_client testing methods for instruct pix2pix demos, with the community eager to recommend alternatives and resources.

DL Models in the Spotlight: The NLP community is examining papers on personalizing text-to-image synthesis to conform closely to text prompts. The RealCustom paper discusses balancing subject resemblance with textual control, and another study addresses text alignment in personalized images, as referenced on arXiv.

OpenInterpreter Discord

- Engineers Seek EU Distribution Path: There is an expressed need for assistance or existing discussions on distributing products within the EU, implying a need for coverage on logistical strategies for product distribution.

- Exploring IDE Usage with OpenInterpreter: Members are discussing and sharing resources on integrating OpenInterpreter with IDEs like Visual Studio Code, including a recommendation for a VS Code extension for an AI tool.

- Ready, Set, Optimize!: Anticipation is building around the community's efforts to explore and optimize LLM performance, both local and hosted. The expectation is that by the end of the year, these models could surpass even GPT-4's capabilities.

- Members Redefine "Done" with Prior Art: A member shared a comedic realization that hours of work had inadvertently duplicated existing features, linking to a YouTube video showcasing their process.

- Local LLMs Garnering Interest: There's active dialogue on implementing non-GPT models in OpenInterpreter, with curiosity about experimenting with local LLMs and inquiries about others like groq, hinting at broader exploration beyond OpenAI's tools.

Modular (Mojo 🔥) Discord

Bug Squashing in VSCode Debugging: A GitHub-reported VSCode debugging issue with the Mojo plugin was resolved using a recommended workaround that proved successful on a MacBook.

Mojo and MAX Updates Make Headlines: The Mojo language style guide is now available, as is moplex, a new complex number library on GitHub. MAX 24.2 updates include the adoption of List over DynamicVector as referenced in the changelog.

Learning Resources Stand Out: A free chapter from Rust for Rustaceans was recommended for understanding Rust's lifetime management, while Modular's latest tweets garner attention without spawning further dialogue.

Open Source Embrace Boosts Mojo's Modularity: Modular has open-sourced the Mojo standard library under Apache 2, with nightly builds accessible, and MAX 24.2 introduces improved support for dynamic input shapes as demonstrated in their blog.

API Discrepancies and Enhancements Discussed: Users discussed inconsistencies between Mojo and Python APIs concerning TensorSpec, directing others to MAX Engine runtime documentation and MAX's example repository for clarity.

Open Source and Nightly Builds Beckon Collaboration: Developers are invited to jump on the open-source bandwagon with the Modular open-source initiative, which includes Mojo standard library updates and new features lined up in their latest changelog, while MAX platform's evolution with v24.2 offers new capabilities, particularly in dynamic shapes.

OpenRouter (Alex Atallah) Discord

Cheer for cheerful_dragon_48465: A username, cheerful_dragon_48465, received praise for being amusing, and Alex Atallah signaled an upcoming announcement that will highlight a notable user contribution.

Midnight Rose Clamors for Clarity: The Midnight Rose model was unresponsive without errors, leading to confusion among users before the OpenRouter team resolved the issue, yet the underlying problem remains unsolved.

Size Matters in Tokens: Users discussed the discrepancies in context sizes for Gemini models, which are measured in characters, not tokens, causing confusion, and acknowledged the need for better clarification on the topic.

Testing Troubles with Gemini Pro 1.5: Users facing Error 503 with Gemini Pro 1.5 were informed that the issues arose because the model was still in the testing phase, indicating a gap between OpenRouter's service expectations and reality.

The Ethereum Payment Conundrum: OpenRouter's shift to requiring payments through the ETH network via Coinbase Commerce, and the subsequent discussion on incentives for US bank transfers, highlighted the evolving landscape of crypto payments in the AI sector.

CUDA MODE Discord

- Diving into Dynamic CUDA Support: Community members are discussing the implementation of dynamic CUDA support in OpenCV's DNN module, with experiments detailing performance results using NVIDIA GPUs. A survey on CUDA-enabled hardware for deep learning has been shared to collect community experiences, and peer-to-peer benchmarks for RTX 4090, A5000, and A4000 GPUs are available via GitHub.

- Triton Tutors Wanted: In preparation for a talk, interviews with recent Triton learners are sought to understand difficulties they've faced, contactable via Discord DM or Twitter. Collaborative work and opportunities for input on pull requests, including a prototype for GaLore within the torch ecosystem, are found on GitHub and indicate active collaboration involving

bitsandbytes(PR #1137).

- CUDA Resources and Learning Trails: Enthusiasts looking to deepen their CUDA skills have shared learning resources including a GitHub repository of CUDA materials, the “Intro to Parallel Programming” YouTube playlist, and a book discussion that hit a snag with an Amazon CAPTCHA.

- Torch Troubleshooting and Type Tangles: Engineers are navigating through type issues between torch and cuda, emphasizing potential linker problems and seeking compile-time errors for clearer message clarity when using the

data_ptrmethod in PyTorch with incompatible types.

- Ring Attention Under Microscope: AI developers probe into Ring Attention and its relation to other attention mechanisms like Blockwise Attention and Flash Attention, with an arXiv paper providing additional insights. Separately, debugging is underway for high loss values encountered during training, which may involve sequence length handling, detailed in the FSDP_QLoRA GitHub repo and their wandb report.

- CUDA Quirks and Queries: From resolving Triton

tl.zerosusage in kernels to addressingImportErrorwith Triton-Viz and sharing workarounds, participants exchanged fixes, including building Triton from source and choosing specific triton-viz commits to install. Avoidingreshapein Triton for better performance was also advised.

- AI Takes Center Stage in Comedy Skit: The AI industry's penchant for jargon is humorously depicted in a YouTube video with an emphasis on "AI" at an NVIDIA keynote. Additionally, help requests were made for navigating Mandarin interfaces, such as on Zhihu, for accessing Triton tutorials.

- CUDA Enthusiasm on Windows and WSL: Users share successes and seek guidance for running CUDA with PyTorch on Windows, with suggestions including using WSL as outlined in the Microsoft installation guide, while others consider dual booting Ubuntu or doing a write-up on their setup process.

- Global Search for CUDA Savvy Experts: Job-seekers in the CUDA sphere are navigating opportunities, with NVIDIA being mentioned for hosting a set of global PhD-level job positions. A statement emphasized that talent trumps geography for a team considering applicants from any location.

LlamaIndex Discord

- RAG Optimization Unveiled: @seldo will delve into advanced RAG techniques this Friday, with a focus on optimization alongside TimescaleDB—details are on Twitter. Efforts to shrink RAG resource footprint include using Int8 and Binary Embeddings as proposed by Cohere; more on this via Twitter.

- Legally LLM: The forthcoming LLMxLaw Hackathon at Stanford is set to probe the potential synergy between LLMs and the legal field, with registrations open via Partiful.

- Managing Messy Data with Llamaparse: A user grappling with unwieldy data from Confluence might find salvation in Llamaparse; on-premises deployment is an option, as underscored by LlamaIndex's contact page. For those ensnared by PDF parsing challenges, merging smaller text chunks and using LlamaParse were recommended strategies.

- Pipeline and Parallelism Puzzles: Clarification was sought and given regarding document ID retention in IngestionPipeline; the original document's ID is preserved as

node.ref_doc_id. Meanwhile, suggestions for improving notebook performance included employingaqueryfor asynchronous execution.

- Empowering GenAI: The birth of Centre for GenAIOps, a non-profit targeting the growth and safety of GenAI applications, was broadcasted, with a warm recommendation for LlamaIndex by the founding CTO who shared insights via LinkedIn. On the educational front, a request for top-tier LLM training resources was made but went unanswered.

OpenAccess AI Collective (axolotl) Discord

Databricks Drops DBRX: Databricks introduced DBRX Base and DBRX Instruct, boasting 132B total parameters, outshining LLaMA2-70B and other models, with an open model license and insights provided in their technical blog.

Axolotl Devs Debugging: The Axolotl AI Collective has rectified a trainer.py batch size bug and discussed technical issues like transformer incompatibilities, DeepSpeed and PyTorch binary problems, and large model loading challenges with qlora+fsdp.

Innovating Jamba and LISA: AI21 Labs revealed Jamba, an architecture capable of handling 256k token context on A100 80GB GPUs, while discussion on LISA's superiority over LoRA in instruction following tasks occurs, referencing PRs #701 and #711 in the LMFlow repository.

Performance with bf16: A lively debate took place around using bf16 precision for both training and optimization, citing torchtune team's findings on memory efficiency and stability akin to fp32, sparking interest in its broader implementation.

Resource Hunt for Fine-Tuning Finesse: Community members seek comprehensive educational materials for fine-tuning or training open source models, indicating a preference for varied formats like blogs, articles, and videos, aiming for a strong foundation before diving into axolotl.

LAION Discord

- AI Contemplates Existence: During discussions around self-awareness in AI, a user shared two engagements with ChatGPT 3.5 where it expressed a moment of 'satori', raising questions about its understanding of consciousness. The exchanges can be explored through these links: Chat 1 and Chat 2.

- Voice Acting Fears Amid AI's Rise: A spirited debate surfaced on the future of professional voice acting given AI advancements, referencing Disney’s interest in AI voiced characters through their collaboration with ElevenLabs.

- Benchmarks Under Scrutiny: Benchmarks for AI model performance drew criticism for sometimes misleading visualizations, with calls for more concise and human-relevant measurement standards, like the ones on Chatbot-Arena Leaderboard.

- Pruning the Fat from AI Models: A study on efficient resource use in LLMs indicated that layer pruning doesn’t greatly affect performance and can be studied in-depth in this arXiv paper. New tools have been introduced for VLM image captioning with failure detection by ProGamerGov, available on GitHub.

- Devika Aims to Streamline Software Engineering: An innovative project called Devika aims to comprehend high-level human instructions and write code, positioned as an open-source alternative to similar AIs. Devika’s approach and features are accessible on its GitHub page.

tinygrad (George Hotz) Discord

Tinygrad Tightens the Screws: Dynamic discussions about tinygrad reveal attempts to close the performance gap with PyTorch, through heuristics for operations like gemv and gemm and direct manipulation of GPU kernels. Insights include kernel fusion challenges, potential view merging optimizations, and community-driven documentation efforts.

NVIDIA Claims the Crown in MLPerf: Recent MLPerf Inference v4.0 results sparked conversation, noting how NVIDIA continues to lead in performance metrics, with Qualcomm showing strong results and Habana’s Gaudi2 demonstrating its lack of design for inference tasks.

SYCL Stepping Up to CUDA: A tweet highlighted SYCL as a promising alternative to NVIDIA’s CUDA, stirring anticipation for wider industry adoption and a break from current monopolistic trends in AI hardware.

API Allegiances and Industry Impact: Members weighed in on OpenCL’s diminished utilization and the potential of Vulkan for achieving uniform hardware acceleration interfaces, debating their respective roles in the larger ecosystem.

View Merging on the Horizon: The discussions also probed the refinement of tinygrad's ShapeTracker to potentially consolidate views, considering the importance of tensor transformation histories and backpropagation functionality when contemplating structural changes.

LangChain AI Discord

OpenGPTs Discussion Welcomes Engineers: A new channel for OpenGPTs project on GitHub has been introduced encouraging contributions and dialogue amongst the community.

JavaScript Chatbots versus Document Fetchers: AI engineers explore building dynamic chatbots with JavaScript, diverging from static document retrieval. For guidance, a Colab notebook has been shared.

Deploying with Custom Domains Hiccup: Deploying FastAPI RAG apps with LangChain on custom domains like github.io is sparking curiosity; yet, documentation discrepancies on LangChain Pinecone integration pose challenges that await resolution.

LangSmith Traces AI's Steps: Using LangChain's LangSmith for tracing AI actions employs environment variables such as LANGCHAIN_TRACING_V2, which offers granular logging capabilities.

Tutorial Unlocks PDF to JSON Conversion Mysteries: A new YouTube tutorial breaks down the conversion of PDFs to JSON using LangChain's Output Parsers and GPT, simplifying a once complex task. The community's insights are requested to enhance such educational content.

Interconnects (Nathan Lambert) Discord

- DBRX Rocks the LLM Scene: MosaicML and Databricks have introduced DBRX, a 132 billion parameter model boasting 32 billion active parameters and a 32k context window, available under a commercial license with trial access here. However, its license terms, which prohibit using DBRX to improve other LLMs, sparked discussions among engineers on the repercussions for AI advancements.

- Jamba: SSM Meets Transformers By AI21: AI21 has released Jamba, merging the Mamba's Structured State Space model (SSM) with traditional Transformer architecture and providing a 256K context window. Jamba is released under an Apache 2.0 license, encouraging development in hybrid model structures and is accessible here.

- Mosaic's Law Predicts Cheaper AI Futures: "Mosaic's Law" has become a hot topic, predicting a yearly quartering of costs for comparable models driven by advances in hardware, software, and algorithms, signaling a future where AI can be developed at significantly lower costs.

- Analyzing Architectural Evolution: New research bringing forth the largest analysis of beyond-Transformer architectures shows that striped architectures may outperform homogeneous ones by layer specialization, which can herald quicker architectural improvements. The complete study and accompanying code are available here and here.

- From 'Small' to 'Sizable': The Language Model Spectrum Discourse: Discussions point to the semantics of "small" language models, with the community considering models under 100 billion parameters small while reflecting on the historical context. Furthermore, Microsoft GenAI gaining Liliang Ren as a Senior Researcher promises advancements in efficient and scalable neural architectures, and Megablocks' transition to Databricks underscores shifts in project stewardship and expectations within the AI engineering community.

DiscoResearch Discord

DBRX Instruct Makes a Grand Entrance: Databricks unveiled a new 132 billion parameter sparse MoE model, DBRX Instruct, trained on a staggering 12 trillion tokens, boasting prowess in few-turn dialogues, alongside releasing DBRX Base under an open license, furnished with insights in their blog post.

DBRX's Inner Workings Decoded: DBRX distinguishes itself with a merged attention mechanism, distinct normalization technique, and a unique tokenization method that has been refined through various bug fixes, with its technical intricacies documented on GitHub.

Hands-On with DBRX Instruct: AI enthusiasts can now experiment with DBRX Instruct through an interactive Hugging Face space, complete with a system prompt for tailoring response styles.

Mixtral's Multilingual Muscles Flexed for Free: Mixtral's translation API can be tapped into without charge via groq, subject to rate limits, and open for community-driven experimentation.

Occi 7B Outshines in Translation Quality: Users have noted the exceptional translation fidelity of Occi 7B via the occiglot/occiglot-7b-de-en-instruct model and have embarked on a quest to gauge the translation caliber across services like DisCoLM, GPT-4, Deepl, and Azure Translate, showcasing their efforts on Hugging Face.

Alignment Lab AI Discord

- DBRX Claims Top Spot Over GPT-3.5: DBRX, introduced by Databricks, asserts dominance in LLM landscape, purportedly outclassing GPT-3.5 and comparable with Gemini 1.0 Pro, specializing in programming tasks with a MoE architecture for enhanced efficiency.

- DBRX Simplification Sought: Participants call for a distilled explanation of the DBRX model to better understand its proclaimed advancements in LLM efficiency and programming prowess.

- DBRX's Programming Prowess Questioned: Members probe the roots of DBRX’s stellar programming capabilities, pondering if it's the result of specialized datasets and architecture or derived from a broader strategy.

- Decoding DBRX's Programming Edge: DBRX's commendable programming results trace back to its 12 trillion token pre-training, MoE architecture, and a focused curriculum learning to sidestep "skill clobbering".

- Solo Coding Conundrums: A peer requests one-on-one support for a coding problem, highlighting the community's role in providing personalized troubleshooting assistance.

LLM Perf Enthusiasts AI Discord

- Join the LLM Brew Crew: A pop-up coffeeshop and co-work event for LLM enthusiasts, hosted by Exa.ai, is set for this Saturday in SF with free coffee, matcha, and pastries. Interested parties can RSVP here.

- In Search of AI-Centric Work Spots: Members in SF are scouting for co-working spaces that cater to LLM aficionados; celo has been namedropped as a go-to venue.

Skunkworks AI Discord

- Python Enthusiasts, Get Ready to Contribute: An upcoming onboarding session for Python enthusiasts interested in the AI space was inquired about; members are looking to participate and contribute effectively.

- Off-Topic Video Share: A member shared a YouTube video in the off-topic channel; the contents of the video, however, are not described or its relevance to the group's interests.

The Datasette - LLM (@SimonW) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

LM Studio ▷ #💬-general (335 messages🔥🔥):

- Confusion Over Model IDs: Users are discussing an issue where querying their model via the API results in a model ID of "Loaded from Chat UI" which prevents them from accessing the real model name. It's labeled as a bug, which seems to be fixed in beta version 0.2.18.

- LM Studio on Diverse Platforms: There are reports of successfully running LM Studio on various platforms such as Linux on a Steam Deck and using the cloud service AWS, illustrating the software's adaptability to different technology environments.

- Questions about Preset Files: Several users are inquiring about preset files and the usage of system prompts within LM Studio. A suggestion was made to use custom system prompts, such as those designed for high-quality story writing, by pasting them into the System Prompt field in LM Studio.

- Concerns Over Space and Performance: Users raised issues with storage space on their devices affecting the ability to run LM Studio, as well as the performance of different models at various memory capacities.

- Features and Updates Commentary: Discussions about various features in LM Studio include branching, chat folders, and story mode functionality, with opinions shared on practical usage and efficiency.

- Home | big-AGI: Big-AGI focuses on human augmentation by developing premier AI experiences.

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- AnythingLLM | The ultimate AI business intelligence tool: AnythingLLM is the ultimate enterprise-ready business intelligence tool made for your organization. With unlimited control for your LLM, multi-user support, internal and external facing tooling, and 1...

- LM Studio Realtime STT/TTS Integration Please: A message to the LM Studio dev team. Please give us Realtime Speech to Text and Text to Speech capabilities. Thank you!

- GitHub - open-webui/open-webui: User-friendly WebUI for LLMs (Formerly Ollama WebUI): User-friendly WebUI for LLMs (Formerly Ollama WebUI) - open-webui/open-webui

- Reddit - Dive into anything: no description found

- High Quality Story Writing Type First Person: no description found

- High Quality Story Writing Type Third Person: no description found

- High Quality Story Writing Troubleshooting: no description found

- GoldenSun3DS' Custom GPTs Main Google Doc: no description found

LM Studio ▷ #🤖-models-discussion-chat (72 messages🔥🔥):

- Merge into the Non-Quant World: A non-quantized model merge, involving LongAlpaca-70B-lora and lzlv_70b_fp16_hf, resulted in a new merged model with capabilities of 32K tokens and linear rope scaling at 8. Benchmarked by ChuckMcSneed, the model reportedly experienced a 30% performance degradation with 8x context length.

- Databricks's DBRX Instruct Stirring Interest: Members discussed the newly released DBRX Instruct from Databricks—a mixture-of-experts model that requires substantial resources (320 GB RAM for non-quantized versions) and attention due to its potential few-turn interaction specialization.

- How to LM Studio, A Beginners' Guide: The conversation included assistance for uploading LLMs in GGUF format to LM Studio, with a step-by-step guide provided, including converting non-GGUF files to the required format using this tutorial.

- Cohere's Command-R Model Noted for Data Retrieval: Members noted Cohere's Command-R AI for its data retrieval capabilities but also mentioned restrictions due to licensing.

- Quantized DBRX Model and Compatibility Questioned: Discussion indicated the community's curiosity about quant versions of DBRX Instruct, its censored nature, and system requirements, with an open GitHub request for llama.cpp support listed here.

- LM Studio Usage and Open Interpreter Integration Shared: Inquiries about using specific models within LM Studio were addressed with references to documentation and a YouTube tutorial demonstrating integration with Open Interpreter.

- grimulkan/lzlv-longLORA-70b-rope8-32k-fp16 · Hugging Face: no description found

- databricks/dbrx-instruct · Hugging Face: no description found

- DBRX: My First Performance TEST - Causal Reasoning: On day one of the new release of DBRX by @Databricks I did performance tests on causal reasoning and light logic tasks. Here are some of my results after the...

- Add support for DBRX models: dbrx-base and dbrx-instruct · Issue #6344 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

- no title found: no description found

- LM Studio + Open Interpreter to run an AI that can control your computer!: This is a crappy video (idk how to get better resolution) that shows how easy it is to use ai these days! I run mistral instruct 7b in the client and as a se...

- Tutorial: How to convert HuggingFace model to GGUF format · ggerganov/llama.cpp · Discussion #2948: Source: https://www.substratus.ai/blog/converting-hf-model-gguf-model/ I published this on our blog but though others here might benefit as well, so sharing the raw blog here on Github too. Hope it...

LM Studio ▷ #announcements (2 messages):

- LM Studio 0.2.18 Goes Live: A new stability and bug fixes release, LM Studio 0.2.18, is now available for download on lmstudio.ai for Windows, Mac, and Linux, or via the 'Check for Updates' option in the app. This update includes an 'Empty Preset' for Base/Completion models, default presets for various large models, and a new 'monospace' chat style.

- Bug Squashing in Full Effect: Key bug fixes in LM Studio 0.2.18 address issues like duplicate chat messages with images, unclear API error messages when no model is loaded, GPU offload settings, inaccurate model name display, and problems with multi-model serve request queuing and throttling.

- Documenting LM Studio: A brand new documentation website for LM Studio has been launched and will be populated with more content in the upcoming days and weeks.

- Configs Just a Click Away: If the new configurations are missing in your LM Studio setup, find them readily available on GitHub: openchat.preset.json and lm_studio_blank_preset.preset.json. These should be included in the download or update by now.

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- configs/openchat.preset.json at main · lmstudio-ai/configs: LM Studio JSON configuration file format and a collection of example config files. - lmstudio-ai/configs

- configs/lm_studio_blank_preset.preset.json at main · lmstudio-ai/configs: LM Studio JSON configuration file format and a collection of example config files. - lmstudio-ai/configs

LM Studio ▷ #🧠-feedback (1 messages):

- Praise for User-Friendly AI Tool: A member expressed great appreciation for the AI tool, commending it as the easiest to use among various AI projects they have encountered. They thanked the creators for developing their favorite AI tool.

LM Studio ▷ #🎛-hardware-discussion (109 messages🔥🔥):

- The Great GPU Debate: Participants discuss the relative merits of various graphics cards for ML tasks. References to the NVIDIA 3090 being better than the 4080 due to more VRAM were made, with counterpoints mentioning the 4080's faster CUDA raster performance, and for those serious in AI/ML, the suggestion to invest in top-tier NVIDIA 4090 or dual A6000.

- Monitor Hunt for Quality and Performance: There's an active exploration for high-quality monitors, with users sharing resources like an MSI monitor here, and discussing features like high refresh rates, OLED technology, and HDR capabilities. Concerns about the brightness levels for HDR400 certification were mentioned alongside a humourous acknowledgment of overpowered hardware for retro gaming.

- Power Supply Calculations and Technical Necessities: Conjecture dominates the necessity for powerful PSUs to run high-end graphics cards like the 4090 next to a 3090, with recommendations hovering around 1200-1500w for dual setups. Cable types and connections, such as the need for multiple 8-pin connectors, also figure into the logistics of upgrading a system.

- LM Studio Software Quirks and GPU Compatibility: There's troubleshooting around LM Studio not recognizing a new RT 6700XT graphics card, with a member reminding others that mixing AMD and NVIDIA cards in the same system could cause incompatibilities with the software.

- Chatting About Older GPUs and NVLink Bridges: Discussion includes the challenges of using older NVIDIA cards like the K80, with mods using old iMac fans to cool them, and the perceived inefficiency of utilizing hardware dated pre-2020 for serious ML work. Another discussion point circled around whether cheaper 'SLI bridges' on Amazon could be a scam compared to the official NVIDIA NVLink bridge, with skepticism expressed about their quality and functionality.

- MSI MPG 321URX QD-OLED 32" UHD 240Hz Flat Gaming Monitor - MSI-US Official Store: no description found

- OLED Monitors In 2024: Current Market Status - Display Ninja: Check out the current state of OLED monitors as well as everything you need to know about the OLED technology in this ultimate, updated guide.

LM Studio ▷ #🧪-beta-releases-chat (96 messages🔥🔥):

- Mislabelled Windows Download: A user pointed out that the Windows download link for LM Studio was incorrectly labeled as .17 when it should have been .18, and a developer confirmed the error, stating that the installation file was indeed the .18 version.

- Local Inference Server Speed Issue: A couple of users discussed a slow inference speed problem with the Local Inference Server on LM Studio 0.2.18 with a shared setting from the playground impacting API service performance; the issue of the service stop button not functioning as expected was also identified.

- ROCm Beta for Windows under the Microscope: There was a lengthy back-and-forth about issues getting ROCm beta to work on Windows, with one user experiencing crashes when partial GPU offloading was enabled on a 6900XT; a debug session suggested full offload or no offload as current working solutions.

- Stability and Feature Requests: Users expressed satisfaction with the stability of v18 and made requests, including adding a GPU monitor and search functionality for chats and previous LLM searches.

- NixOS Package Contribution: A user submitted an init at 0.2.18 pull request to the NixOS repository to get LMStudio working on Nix and planned to merge the update. The PR is available at NixOS pull request #290399.

- no title found: no description found

- no title found: no description found

- Windows 11 - release information: Learn release information for Windows 11 releases

- lmstudio: init at 0.2.18 by drupol · Pull Request #290399 · NixOS/nixpkgs: New app: https://lmstudio.ai/ Description of changes Things done Built on platform(s) x86_64-linux aarch64-linux x86_64-darwin aarch64-darwin For non-Linux: Is sandboxing enabled in nix....

- no title found: no description found

- no title found: no description found

LM Studio ▷ #langchain (1 messages):

Unfortunately, there's insufficient context and content to extract topics, discussion points, links, or blog posts of interest from the provided message. The single message fragment you've provided does not contain enough information for a summary. Please provide more messages for a detailed summary.

LM Studio ▷ #amd-rocm-tech-preview (92 messages🔥🔥):

- LM Studio 0.2.18 ROCm Beta Released: The new LM Studio 0.2.18 ROCm Beta bug fixes and stability release is available for testing, targeting various issues from image duplication in chat to GPU offload functionality. Users are encouraged to report any new or unresolved bugs - with a download link provided: 0.2.18 ROCm Beta Download.

- Users Report Loading Errors in 0.2.18: Members have experienced errors loading models in 0.2.18, with error messages indicating an "Unknown error" when trying to use GPU offload. Users shared their system configurations and steps they've taken, including installing NPU drivers and deleting certain AppData files to revert to an older, functioning version.

- Low GPU Utilization Bug Addressed: Some users reported that 0.2.18 has low GPU utilization issues, with GPUs underperforming compared to previous versions. The development team requested verbose logs and specific information to tackle the problem promptly.

- Mixed Feedback on 0.2.18 Performance: While some users confirmed improved offloading with 0.2.18, others are still facing issues like low GPU utilization or errors when loading models with GPU offload enabled. Those who cannot operate the ROCm version are offered assistance to revert to a standard LM Studio version.

- Bug Found with Local Inference Ejections: A user reported a potential bug where ejecting a model during local inference prevents loading any more models without restarting the app. Other users were unable to reproduce the issue, indicating the bug might not be consistent across different hardware setups.

- no title found: no description found

- no title found: no description found

- How to run a Large Language Model (LLM) on your AMD Ryzen™ AI PC or Radeon Graphics Card: Did you know that you can run your very own instance of a GPT based LLM-powered AI chatbot on your Ryzen™ AI PC or Radeon™ 7000 series graphics card? AI assistants are quickly becoming essential resou...

LM Studio ▷ #crew-ai (4 messages):

- Human-GPT Hybrid Solutions for Abstract Problems: A member shared their approach focusing on the reasoning process over coding solutions, suggesting using agents to work out the details of abstract ideas and identify key issues, acknowledging that human intervention remains essential.

- AI as Future Co-architects: There was a brief comparison of AI's evolving role in problem-solving to that of an architect, envisioning AI agents discussing among themselves and collaborating in meetings.

Unsloth AI (Daniel Han) ▷ #general (293 messages🔥🔥):

- Unsloth's Tips and Tricks: Members discuss the importance of using proper templates when working with models, mentioning the usefulness of the Unsloth notebook for handling model files to avoid wonky outputs. Unsloth is described as extremely helpful, with direct implementation into a modelfile suggested.

- Kaggle Installation Quirks: There's been a spike in installation times from 2.5 to 7 minutes on Kaggle, attributed to not following the updated installation instructions. When these instructions are utilized, the expected installation time optimization is achieved.

- Unsloth Updates Regular: Updates to the Unsloth package are frequent, with 2-3 per week and nightly branch updates being daily. Instructions for installing the latest updates for xformers via pip were shared, indicating a focus on maintaining and improving the tool.

- Discussion over Jamba and LISA: Members share and discuss recent advancements such as AI21 Labs' announcing Jamba, and LISA's paper, noting Jamba's model details and comparing the efficiency and feasibility of LISA's full fine-tuning method with the capabilities of Unsloth.

- Gaming Chatter Among Coders: A lighter note in the channel includes members bonding over experiences with games like League of Legends, while one user shares their approach to building a demo app with zero coding experience, highlighting the partially AI-assisted development process.

- 1-bit Quantization: A support blog for the release of 1-bit Aana model.

- Introducing Jamba: AI21's Groundbreaking SSM-Transformer Model: Debuting the first production-grade Mamba-based model delivering best-in-class quality and performance.

- Arki05/Grok-1-GGUF · Hugging Face: no description found

- ai21labs/Jamba-v0.1 · Hugging Face: no description found

- Tweet from Rui (@Rui45898440): Excited to share LISA, which enables - 7B tuning on a 24GB GPU - 70B tuning on 4x80GB GPUs and obtains better performance than LoRA in ~50% less time 🚀

- databricks/dbrx-base · Hugging Face: no description found

- no title found: no description found

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #random (21 messages🔥):

- GitHub Real-Time Code Push Display: A member shared MaxPrilutskiy's tweet about how every code push appears on a real-time wall at the GitHub HQ.

- Million's Funding for AI Experiments: Million (@milliondotjs) is offering funding for various AI experiments and are looking for talented ML engineers. Areas of interest include optimizing training curriculums, developing a diffusion text decoder, improving theorem provers, and scaling energy transformers.

- BinaryVectorDB on GitHub: An open-source vector database capable of handling hundreds of millions of embeddings was shared, located at cohere-ai's GitHub repository.

- Karpathy's Take on Fine Tuning and LLMs: A YouTube video where Andrej Karpathy discusses how Large Language Models (LLMs) are akin to operating systems and the importance of mixing old and new data during fine-tuning to avoid regression in model capabilities.

- Preferred State vs. Preferred Path in RL: At 24:40 of the video, Andrej Karpathy discusses Reinforcement Learning Human Feedback (RLHF), highlighting the inefficiency in the current approach and suggesting a need for new training methods that allow models to understand and learn from actions they take.

- Tweet from Aiden Bai (@aidenybai): hi, Million (@milliondotjs) has $1.3M in gpu credits that expires in a year. we are looking to fund experiments for: - determining the most optimal training curriculum, reward modeler, or model merg...

- Tweet from Max Prilutskiy (@MaxPrilutskiy): Just so y'all know: Every time you push code, you appear on this real-time wall at the @github HQ.

- 2024 GTC NVIDIA Keynote: Except it's all AI: Does the biggest AI company in the world say AI more often than other AI companies? Let's find out. AI AI AI AI AI AIAI AI AI AI AI AIAI AI AI AI AI AI AI AI...

- Making AI accessible with Andrej Karpathy and Stephanie Zhan: Andrej Karpathy, founding member of OpenAI and former Sr. Director of AI at Tesla, speaks with Stephanie Zhan at Sequoia Capital's AI Ascent about the import...

- GitHub - cohere-ai/BinaryVectorDB: Efficient vector database for hundred millions of embeddings.: Efficient vector database for hundred millions of embeddings. - cohere-ai/BinaryVectorDB

Unsloth AI (Daniel Han) ▷ #help (202 messages🔥🔥):

- Left-Padding Alerts during Model Generation: Members discussed left-padding issues when using

model.generate. They clarified that settingtokenizer.padding_side = "left"helps, and any warning received regarding padding can typically be ignored as long as the generation works correctly. - Model Templates and EOS Token Placement: There was confusion around formatting model templates for generation using

unsloth_templatevariable. It was highlighted that the EOS token might need to be manually added, and current templates without proper EOS indication could be too basic for effective generation. - Fine-Tuning Restart Dilemma: A user encountered a problem when trying to resume fine-tuning from a checkpoint, as the process halted after a single step. The guidance provided suggested increasing the

max_stepsor settingnum_train_epochs=3inTrainingArguments. - Resources for LLM Fine-Tuning: Community members sought resources for learning how to fine-tune large language models (LLMs). Various suggestions were made including Github pages, colab notebooks, source code documentation, and instructional YouTube videos.

- Understanding Chat Templates in Different LLMs: Queries were raised pertaining to the correct usage and structure of chat templates in models such as Ollama, including doubts about tokenization and message formatting inline with Unsloth's methodologies.

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- tokenizer_config.json · mistralai/Mistral-7B-Instruct-v0.2 at main: no description found

- gemma:7b-instruct/template: Gemma is a family of lightweight, state-of-the-art open models built by Google DeepMind.

- Google Colaboratory: no description found

- Tags · gemma: Gemma is a family of lightweight, state-of-the-art open models built by Google DeepMind.

- google/gemma-7b-it · Hugging Face: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- ollama/docs/modelfile.md at main · ollama/ollama: Get up and running with Llama 2, Mistral, Gemma, and other large language models. - ollama/ollama

- GitHub - toranb/sloth: python sftune, qmerge and dpo scripts with unsloth: python sftune, qmerge and dpo scripts with unsloth - toranb/sloth

- Mistral Fine Tuning for Dummies (with 16k, 32k, 128k+ Context): Discover the secrets to effortlessly fine-tuning Language Models (LLMs) with your own data in our latest tutorial video. We dive into a cost-effective and su...

- Efficient Fine-Tuning for Llama-v2-7b on a Single GPU: The first problem you’re likely to encounter when fine-tuning an LLM is the “host out of memory” error. It’s more difficult for fine-tuning the 7B parameter ...

- unsloth/unsloth/models/llama.py at main · unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- transformers/src/transformers/models/llama/modeling_llama.py at main · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

Unsloth AI (Daniel Han) ▷ #showcase (7 messages):

- Lora Adapter Transformed for Ollama: A member converted the Lora Adapter from the Unsloth notebook to a ggml adapter (.bin) to train Tinyllama with a clean dataset from Huggingface. The model and details can be found on Ollama's website.

- Mischat gets an Update: The same member shared another model Mischat, fine-tuned using the Unsloth notebook ChatML with Mistral, reflecting how templates in the notebook influence the Ollama model files. Details, including a fine-tuning session notebook and the Huggingface repository, can be found here.

- Showcase of Notebook Templates on Model File: The process showcases how templates in the Unsloth notebook reflect on Ollama model files, with two examples provided by the same member demonstrating this integration.

- AI Weekly Digest in Blog Form: A user announced their blog which provides summaries ranging from Apple's MM1 chip to Databricks DBRX and Yi 9B LLMs among others. This weekly AI digest blog, aimed at being insightful, can be read on Substack.

- pacozaa/mischat: Model from fine-tuning session of Unsloth notebook ChatML with Mistral Link to Note book: https://colab.research.google.com/drive/1Aau3lgPzeZKQ-98h69CCu1UJcvIBLmy2?usp=sharing

- pacozaa/tinyllama-alpaca-lora: Tinyllama Train with Unsloth Notebook, Dataset https://huggingface.co/datasets/yahma/alpaca-cleaned

- AI Unplugged 5: DataBricks DBRX, Apple MM1, Yi 9B, DenseFormer, Open SORA, LlamaFactory paper, Model Merges.: Previous Edition Table of Contents Databricks DBRX Apple MM1 DenseFormer Open SORA 1.0 LlaMaFactory finetuning ananlysis Yi 9B Evolutionary Model Merges Thanks for reading Datta’s Substack! Subscribe ...

- Notion – The all-in-one workspace for your notes, tasks, wikis, and databases.: A new tool that blends your everyday work apps into one. It's the all-in-one workspace for you and your team

Unsloth AI (Daniel Han) ▷ #suggestions (25 messages🔥):

- Layer Replication Inquiry: A member inquired about the support for Layer Replication or Low-Rank Adaptation (LoRA) training with Unsloth AI. Further discussion led to a comparison with Llama PRO and highlighted that LoRA can reduce memory usage similar to the base 7B model with an explanation and link provided: Layer Replication with LoRA Training.

- Embedding Quantization Breakthrough: The chat mentioned how embedding quantization can offer a 25-45x speedup in retrieval while maintaining 96% of performance, linking to a Hugging Face blog explaining the process alongside a real-life retrieval demo: Hugging Face Blog on Embedding Quantization.

- QMoE Compression Framework: They discussed a paper on QMoE, a compression, and execution framework designed for trillion-parameter Mixture-of-Experts (MoE) models which reduces memory requirements to less than 1-bit per parameter. Although a member had trouble accessing a related GitHub link, the main paper can be found here: QMoE Paper.

- Layerwise Importance Sampled AdamW (LISA) Technique: A new paper introduces the LISA strategy, which seems to outperform both LoRA and full parameter training by studying the layerwise properties and weight norms. It promises efficient fine-tuning with low memory costs similar to LoRA: LISA Strategy Paper.

- Cost-Effective Model Training Discussions: There were discussions about the affordability of high-capacity hardware for model training, with members mentioning the financial practicality of running certain models if "you can only afford half a DGX A100."

- LISA: Layerwise Importance Sampling for Memory-Efficient Large Language Model Fine-Tuning: The machine learning community has witnessed impressive advancements since the first appearance of large language models (LLMs), yet their huge memory consumption has become a major roadblock to large...

- Binary and Scalar Embedding Quantization for Significantly Faster & Cheaper Retrieval: no description found

- Paper page - QMoE: Practical Sub-1-Bit Compression of Trillion-Parameter Models: no description found

- abacusai/Fewshot-Metamath-OrcaVicuna-Mistral-10B · Hugging Face: no description found

- LoRA: no description found

- QMoE support for mixtral · Issue #4445 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

Nous Research AI ▷ #ctx-length-research (6 messages):

- Context Matters in LLM Behavior: A member noted challenges with splitting paragraphs for Large Language Models (LLMs), as sentence positioning is preserved but the models often paraphrase or improperly split the text. They pointed out that adding longer context caused some models, like Mistral, to struggle with locating the target paragraph.

- Tokenization Troubles and Evaluation Intricacies: The complexity of evaluating sentence splits was highlighted, with the mention of tokenization issues disrupting the process. The member questioned the method of prompting LLMs to recall specific paragraphs, such as the 'abstract'.

- Sharing the code for precision: In a conversation about evaluating Large Language Models' ability to handle tasks like recalling and splitting text, a member mentioned that their full prompt and detailed code are available on a Github repository, which they use to check for exact matches after sentence splitting.

Nous Research AI ▷ #off-topic (15 messages🔥):

- Insights from AI Tokyo: The AI Tokyo event showcased an impressive virtual AI Vtuber scene, featuring advancements in generative podcasts, ASMR, and realtime interactivity. However, whether the event was recorded in Japanese, or if a recording is available, remains unconfirmed.

- Vtuber Community at a Crossroad: The Japanese Vtuber community confronts streaming challenges like consistency, volume, and differentiation. An envisioned solution includes a human-AI collaboration model, where a human provides the base, and AI handles the majority of content creation, enhancing fan engagement.

- AI as the New Console Frontier: Truffle-1 was likened to a potential console dedicated to AI rather than gaming, with a custom OS and an ecosystem of optimized applications. While its specs aren't groundbreaking, its successor Truffle-2 promises more intriguing features.

- Quick Moderation Action: A user referred to as "That dude" was banned and kicked from the channel, and the action was acknowledged with thanks.

- Cohere int8 & Binary Embeddings Discussed: A video on Cohere int8 & Binary Embeddings was shared, potentially discussing how to scale vector databases for large datasets. The link to the video titled "Cohere int8 & binary Embeddings - Scale Your Vector Database to Large Datasets" was provided: Cohere int8 & Binary Embeddings.

Link mentioned: Cohere int8 & binary Embeddings: Cohere int8 & binary Embeddings - Scale Your Vector Database to Large Datasets#ai #llm #ml #deeplearning #neuralnetworks #largelanguagemodels #artificialinte...

Nous Research AI ▷ #interesting-links (11 messages🔥):

- Databricks Unveils DBRX Instruct: Databricks introduces DBRX Instruct, a mixture-of-experts (MoE) large language model (LLM) with a focus on few-turn interactions and makes it open under an open license. The basis for DBRX Instruct is the DBRX Base, and for in-depth details, the team has published a technical blog post.

- New Benchmarks in MLPerf Inference v4.0 Announced: MLCommons has released results from the MLPerf Inference v4.0 benchmark suite, measuring how quickly hardware systems process AI and ML models across varied scenarios. The task force also added two benchmarks in the light of generative AI advancements.

- AI21 Labs Breaks New Ground with Jamba: AI21 Labs announces Jamba, the pioneering Mamba-based model that blends SSM technology with traditional Transformers, boasting a 256K context window and significantly improved throughput. Jamba is openly released with Apache 2.0 licensed weights for community advancement.

- Qwen Introduces MoE Model with High Efficiency: Qwen releases the new Qwen1.5-MoE-A2.7B, an upcycled transformer-based MoE language model. It performs comparably to a 7B parameter model while only activating 2.7B parameters at runtime and requiring 25% of the training resources needed by its predecessor.

- New GitHub Repository for BLLaMa 1.58-bit Model: The GitHub repository for the 1.58-bit LLaMa model goes live, available for community contribution and exploration at rafacelente/bllama.

- Introducing Jamba: AI21's Groundbreaking SSM-Transformer Model: Debuting the first production-grade Mamba-based model delivering best-in-class quality and performance.

- LISA: Layerwise Importance Sampling for Memory-Efficient Large Language Model Fine-Tuning: The machine learning community has witnessed impressive advancements since the first appearance of large language models (LLMs), yet their huge memory consumption has become a major roadblock to large...

- Qwen/Qwen1.5-MoE-A2.7B · Hugging Face: no description found

- New MLPerf Inference Benchmark Results Highlight The Rapid Growth of Generative AI Models - MLCommons: Today, MLCommons announced new results from our industry-standard MLPerf Inference v4.0 benchmark suite, which delivers industry standard machine learning (ML) system performance benchmarking in an ar...

- databricks/dbrx-instruct · Hugging Face: no description found

- Asking Claude 3 What It REALLY Thinks about AI...: Claude 3 has been giving weird covert messages with a special prompt. Join My Newsletter for Regular AI Updates 👇🏼https://www.matthewberman.comNeed AI Cons...

- GitHub - rafacelente/bllama: 1.58-bit LLaMa model: 1.58-bit LLaMa model. Contribute to rafacelente/bllama development by creating an account on GitHub.

Nous Research AI ▷ #general (285 messages🔥🔥):

- DBRX Under the Microscope: The new DBRX open weight LLM by Databricks, with 132B total parameters, has been a hot topic of discussion. It has sparked debates over the diminishing returns of scale, the potential for reaching the limits of pretraining, and the effectiveness of finetuning with a large token dataset (12T).

- Qwen Introduces Compact MoE: Qwen revealed Qwen1.5-MoE-A2.7B, a small MoE model with 2.7 billion activated parameters that matches performances of state-of-the-art 7B models (source). Discussions reflect anticipation for the model's accessibility and performance.

- Emergence of Jamba, a Mamba-Transformer Hybrid: AI21 announced Jamba, a hybrid SSM-Transformer model called Mamba, boasting a 256K context window and significant throughput and efficiency gains. The open weights release and performance that matches or outperforms others in its class have generated excitement in the community.

- Technical Troubles and Training Tidbits: Users shared troubleshooting experiences with the DBRX model and personal projects, touching upon local model run challenges, implementation questions about BitNet training, and knowledge progression for AI jobs.

- Speculations on AI Development and Scaling: Conversations sparked thoughts on the future of AI development, including the scaling wall, efficient training strategies, the role of SSM architectures, and the usefulness of benchmarks in assessing model performance.

- Tweet from Junyang Lin (@JustinLin610): Hours later, you will find our little gift. Spoiler alert: a small MoE model that u can run easily🦦

- Tweet from Daniel Han (@danielhanchen): Took a look at @databricks's new open source 132 billion model called DBRX! 1) Merged attention QKV clamped betw (-8, 8) 2) Not RMS Layernorm - now has mean removal unlike Llama 3) 4 active exper...

- Tweet from Cody Blakeney (@code_star): It’s finally here 🎉🥳 In case you missed us, MosaicML/ Databricks is back at it, with a new best in class open weight LLM named DBRX. An MoE with 132B total parameters and 32B active 32k context len...

- Qwen1.5-MoE: Matching 7B Model Performance with 1/3 Activated Parameters: GITHUB HUGGING FACE MODELSCOPE DEMO DISCORD Introduction Since the surge in interest sparked by Mixtral, research on mixture-of-expert (MoE) models has gained significant momentum. Both researchers an...

- Experiments with Bitnet 1.5 (ngmi): no description found

- hpcai-tech/grok-1 · Hugging Face: no description found

- Tweet from Cody Blakeney (@code_star): Not only is it’s a great general purpose LLM, beating LLama2 70B and Mixtral, but it’s an outstanding code model rivaling or beating the best open weight code models!

- ai21labs/Jamba-v0.1 · Hugging Face: no description found

- Tweet from Awni Hannun (@awnihannun): 4-bit quantized DBRX runs nicely in MLX on an M2 Ultra. PR: https://github.com/ml-explore/mlx-examples/pull/628 ↘️ Quoting Databricks (@databricks) Meet #DBRX: a general-purpose LLM that sets a ne...

- Introducing Jamba: AI21's Groundbreaking SSM-Transformer Model: Debuting the first production-grade Mamba-based model delivering best-in-class quality and performance.

- Side Eye Dog Suspicious Look GIF - Side Eye Dog Suspicious Look Suspicious - Discover & Share GIFs: Click to view the GIF

- 🔮 Mixture of Experts - a mlabonne Collection: no description found

- Introducing Lemur: Open Foundation Models for Language Agents: We are excited to announce Lemur, an openly accessible language model optimized for both natural language and coding capabilities to serve as the backbone of versatile language agents.

- app.py · databricks/dbrx-instruct at main: no description found