[AINews] How To Scale Your Model, by DeepMind

This is AI News, an MVP of a service that goes through all AI Discords/Twitters/Reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Systems thinking is all you need.

AI News for 2/3/2025-2/4/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 3842 messages) for you. Estimated reading time saved (at 200wpm): 425 minutes. You can now tag @smol_ai for AINews discussions!

In a surprise drop, some researchers released a "little textbook" on how they scale models at GDM:

A commenter confirmed this was GDM internal documentation, with Gemini references redacted.

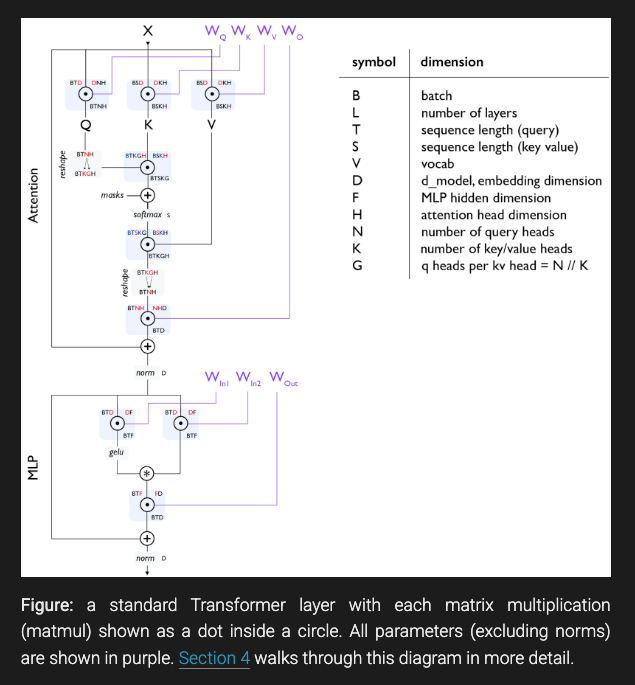

How To Scale Your Model comes in 12 parts and starts with a nice update of what standard Transformers today look like:

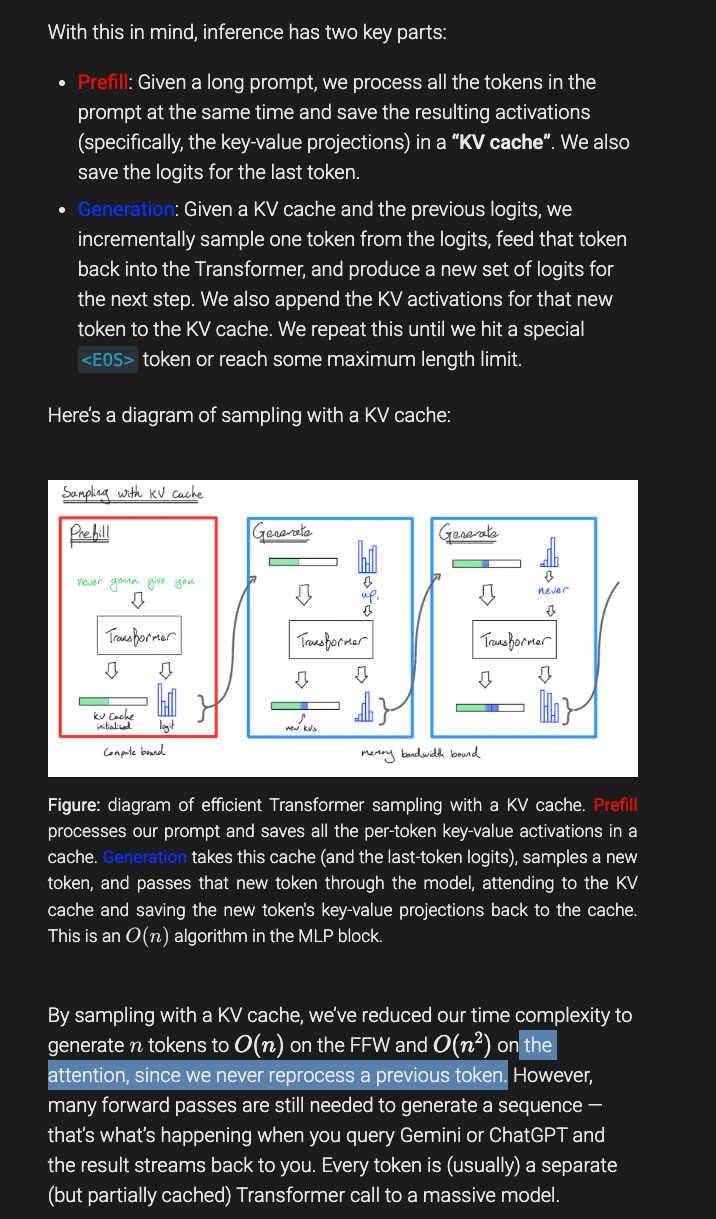

and explains how inference differs from the standard O(N^2) understanding of attention:

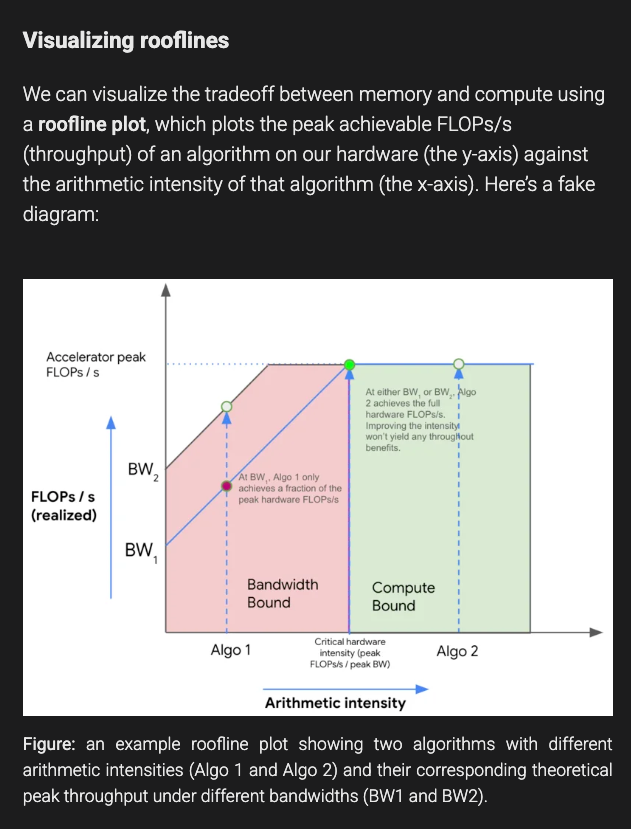

but also introduces standard high performance computing concepts like rooflines:

even coming with worked problems for the motivated reader to test their understanding... and comments are being read in realtime.
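For readers new to rooflines, here is a minimal sketch of the idea. It is not taken from the textbook: the peak compute and memory bandwidth figures are made-up round numbers, and the function names are hypothetical. It only illustrates the standard bound that attainable throughput is the smaller of peak compute and memory bandwidth times arithmetic intensity.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity)."""
    return min(peak_flops, mem_bandwidth * intensity)


def matmul_intensity(m: int, k: int, n: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for an (m x k) @ (k x n) matmul, assuming each
    operand and the result cross the memory bus exactly once."""
    flops = 2 * m * k * n
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


# Hypothetical accelerator (assumed numbers, not a real chip).
PEAK = 1.0e15  # FLOP/s
BW = 1.0e12    # bytes/s

# Batch size 1 (e.g. decoding one token): very low intensity, memory-bound.
small = matmul_intensity(1, 4096, 4096)
# Large batch: intensity exceeds PEAK / BW, so the matmul is compute-bound.
large = matmul_intensity(4096, 4096, 4096)

print(attainable_flops(PEAK, BW, small))
print(attainable_flops(PEAK, BW, large))
```

The point where the two regimes meet is `PEAK / BW` FLOPs per byte; below it, adding compute does not help, and above it, adding bandwidth does not help.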

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

AI Model Releases and Research Papers

- "ASAP": A Real2Sim2Real Model for Humanoid Robotics: @DrJimFan announced "ASAP," a model that enables humanoid robots to perform fluid motions inspired by Cristiano Ronaldo, LeBron James, and Kobe Bryant. The team, including @TairanHe99 and @GuanyaShi, has open-sourced the paper and code for this project. The approach combines real-world data with simulation to overcome the "sim2real" gap in robotics.

- "Rethinking Mixture-of-Agents" Paper and "Self-MoA" Method: @omarsar0 discussed a new paper titled "Rethinking Mixture-of-Agents," which questions the benefits of mixing different LLMs. The proposed "Self-MoA" method leverages in-model diversity by aggregating outputs from the top-performing LLM, outperforming traditional MoA approaches. The paper can be found here.

- Training LLMs with GRPO Algorithm from DeepSeek: @LiorOnAI highlighted a new notebook that demonstrates training a reasoning LLM using the GRPO algorithm from DeepSeek. In less than 2 hours, you can transform a small model like Qwen 0.5 (500 million parameters) into a math reasoning machine. Link to notebook.

- Bias in LLMs Used as Judges: @_philschmid shared insights from the paper "Preference Leakage: A Contamination Problem in LLM-as-a-Judge," revealing that LLMs can be significantly biased when used for synthetic data generation and evaluation. The study emphasizes the need for multiple independent judges and human evaluations to mitigate bias. Paper.

- mlx-rs: Rust Library for Machine Learning: @awnihannun introduced mlx-rs, a Rust library that includes examples of text generation with Mistral and MNIST training. This is a valuable resource for those interested in Rust and machine learning. Check it out.

AI Tools and Platforms Announcements

- Hugging Face's AI App Store Launched: @ClementDelangue announced that Hugging Face has launched its AI app store with 400,000 total apps, including 2,000 new apps daily and 2.5 million weekly visits. Users can now search through apps using AI or categories, emphasizing that "the future of AI will be distributed." Explore the app store.

- AI App Store Announcement: @_akhaliq echoed the excitement about the launch of the AI App Store, stating it's the best place to find the AI apps you need, with approximately 400k apps available. Developers can build apps, and users can discover new ones using AI search. Check it out.

- Updates to 1-800-CHATGPT on WhatsApp: @kevinweil announced new features for 1-800-CHATGPT on WhatsApp:

- You can now upload images when asking a question.

- Use voice messages to communicate with ChatGPT.

- Soon, you'll be able to link your ChatGPT account (free, plus, pro) for higher rate limits.

- Learn more.

- Replit's New Mobile App and AI Agent: @hwchase17 shared that Replit launched a new mobile app and made their AI agent free to try. The rapid development of Replit's AI Agent is notable, and @amasad confirmed the release. Details here.

- ChatGPT Edu Rolled Out at California State University: @gdb reported that California State University is becoming the first AI-powered university system, with ChatGPT Edu being rolled out to 460,000 students and over 63,000 staff and faculty. Read more.

AI Events, Conferences, and Hiring

- AI Dev 25 Conference Announced: @AndrewYNg announced AI Dev 25, a conference for AI developers happening on Pi Day (3/14/2025) in San Francisco. The event aims to create a vendor-neutral meeting for AI developers, featuring over 400 developers gathering to build, share ideas, and network. Learn more and register.

- Hiring for Alignment Science Team at Anthropic: @sleepinyourhat is hiring researchers for the Alignment Science team at Anthropic, co-led with @janleike. They focus on exploratory technical research on AGI safety. Ideal candidates have:

- Several years of experience as a SWE or RE.

- Substantial research experience.

- Familiarity with modern ML and the AGI alignment literature.

- Apply here.

- INTERRUPT Conference Featuring Andrew Ng: @hwchase17 announced that Andrew Ng will be speaking at the INTERRUPT conference this May. Celebrating Ng as one of the best educators of our generation, attendees are encouraged to learn from him. Get tickets.

- Virtual Forum on DeepSeek Integration: @llama_index invited developers, engineers, and AI enthusiasts to join a virtual forum exploring DeepSeek, its capabilities, and integration into workflows. Presenters include representatives from Google, GitHub, AWS, Vectara, and LlamaIndex. Register here.

AI Ethics, Safety, and Policy

- Google DeepMind Updates Frontier Safety Framework: @GoogleDeepMind shared updates to their Frontier Safety Framework, a set of protocols designed to mitigate severe risks as we progress toward AGI. Emphasizing the need for AI to be both innovative and safe, they invite readers to find out more.

- Discussion on Bias in LLM Judges: @_philschmid addressed the issue of bias in LLMs when used for synthetic data generation and as judges. The "Preference Leakage" paper reveals that LLMs can favor data generated by themselves or their previous versions, highlighting a contamination problem. Read the paper.

- OpenAI's Frontier Safety Framework Updates: @OpenAI announced new updates to their Frontier Safety Framework, aiming to stay ahead of potential severe risks associated with advanced AI systems.

General AI Industry Commentary

- Yann LeCun on Small Teams and Innovation: @ylecun emphasized that small research teams with autonomy are empowered to make the right technical choices and innovate. He highlighted the importance of organization and management in fostering innovation within R&D organizations.

- DeepSeek Compared to Sputnik Moment: @JonathanRoss321 compared the news about DeepSeek to a modern "Sputnik 2.0," implying it's a significant milestone in AI, similar to the historic space race event.

- Reflections on Technology Adoption: @DavidSHolz commented on how new technologies are often initially used to replicate old mediums, stating, "These mistakes happen when you don't respect that new inventions are also new mediums."

- Discussions on AI Evaluation and RL: @cwolferesearch observed that few-shot prompting degrades performance in DeepSeek-R1, likely due to the model's training on a strict format. This points to new paradigms in interacting with LLMs and the evolving landscape of AI techniques.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek R1 & R1-Zero: Rapid Model Training Achievements

- Deepseek researcher says it only took 2-3 weeks to train R1&R1-Zero (Score: 800, Comments: 127): Deepseek's researcher claims that R1 and R1-Zero models were trained in just 2-3 weeks, suggesting a rapid development cycle for these AI models.

- Discussions highlight skepticism about the R1 and R1-Zero's rapid training of 10,000 RL steps in 3 weeks, with some users questioning the feasibility and others suggesting that fine-tuning existing models like V3 could explain the speed. Concerns include potential bottlenecks in API and website performance due to high demand and the need for improved training data or architectures.

- Users compare Deepseek's models to other AI advancements, noting the potential for new models to emerge globally following the release of the paper. Some express preference for Deepseek over OpenAI due to perceived openness and lack of tariffs, while others anticipate future versions like R1.5 or V3-lite.

- The conversation touches on the AI space race, with comparisons to the global space race, highlighting regional participation disparities. Europe is mentioned as having contributions through companies like Stable Diffusion and Hugging Face, while other regions are noted for limited involvement, emphasizing the competitive nature of AI development globally.

Theme 2. DeepSeek-R1 Model: Implications of Shorter Correct Answers

- DeepSeek-R1's correct answers are generally shorter (Score: 289, Comments: 66): DeepSeek-R1's correct answers are generally shorter, averaging 7,864.1 tokens compared to 18,755.4 tokens for incorrect answers, as depicted in a bar graph. The standard deviation is 5,814.6 for correct solutions and 6,142.7 for incorrect ones, indicating variability in token lengths.

- Task Difficulty and Response Length: Several comments, including those by wellomello and Affectionate-Cap-600, question whether the analysis accounted for task difficulty, suggesting that harder tasks naturally require longer responses, which can affect error rates and token length averages.

- Model Behavior and Standard Deviation: FullstackSensei and 101m4n discuss the implications of the high standard deviation in token length, suggesting that incorrect answers could result from the model entering loops or struggling with problem-solving, thus extending response time.

- Related Research and Generalization: Angel-Karlsson references a relevant research paper on overthinking in models, while Egoz3ntrum highlights the importance of considering the dataset's limitations, indicating that conclusions might not generalize well beyond specific math problem difficulties.

Theme 3. OpenAI Research: Embracing Open-Source via Hugging Face

- OpenAI deep research but it's open source (Score: 421, Comments: 28): Hugging Face has launched an open-source reproduction of OpenAI's Deep Research feature. The project aims to democratize access to cutting-edge AI research tooling, emphasizing transparency and collaboration in the AI community. More details can be found in their blog post.

- Users express significant appreciation for the Hugging Face team, comparing their contributions to those of the Mistral team and highlighting the rapid development pace, with some anticipating integration into platforms like Open-WebUI soon. The sense of urgency and surprise is echoed in comments about the swift creation of open-source alternatives to proprietary solutions from OpenAI.

- Discussions highlight the open-source community's gratitude towards Hugging Face for providing extensive tooling and frameworks, with some users humorously questioning the motivations behind such generosity. The notion of quickly developing open-source alternatives is a recurring theme, reflecting the community's proactive stance on maintaining open access to AI advancements.

- A comment provides a link to a GitHub repository for those interested in trying out local implementations, pointing towards Automated-AI-Web-Researcher-Ollama as a resource for experimenting with open-source AI tools. This suggests a practical interest in hands-on experimentation with AI research tools among the community.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. OmniHuman-1: China's Multimodal Marvel

- China's OmniHuman-1 🌋🔆 (Score: 684, Comments: 174): OmniHuman-1 is a Chinese project that focuses on video generation from single images. The post lacks additional details, so further context or technical specifics about OmniHuman-1 are not provided.

- OmniHuman-1's Capabilities and Concerns: There is a significant discussion about OmniHuman-1's potential to generate realistic human videos from a single image and audio, with some users expressing concerns about the implications for media authenticity and the potential for indistinguishable synthetic media. The project's details and code base are available on GitHub and its white paper is accessible at omnihuman-lab.github.io.

- AI's Impact on Creative Industries: Some commenters debate AI's influence on creative industries, suggesting AI could lead to a golden age of unique, economically feasible artistic creations, while others express skepticism about AI's ability to replicate the depth of human experiences. Concerns about the future of human-generated creative work and economic impacts, such as the potential need for UBI, are also discussed.

- Technical Observations and Challenges: Users note technical imperfections in AI-generated videos, such as unnatural physical movements and uncanny valley effects, suggesting that while AI has advanced, there are persistent challenges that may require fundamentally different techniques to overcome. The discussion includes the idea that AI-based video will continue evolving through a process of refinement, similar to developments in language models.

Theme 2. Huawei's Ascend 910C Challenges Nvidia H100

- huawei's ascend 910c chip matches nvidia's h100. there will be 1.4 million of them by december. don't think banned countries and open source can't reach agi first. (Score: 262, Comments: 99): Huawei's Ascend 910C chip reportedly matches Nvidia's H100 in performance, with plans to produce 1.4 million units by December. The post argues that this challenges claims that China and open-source projects are lagging in AI chip technology, and that they now have the capability to build top AI models, potentially reaching AGI before major AI companies.

- CUDA's Dominance: Many comments emphasize the importance of CUDA in AI development, noting that it's a proprietary platform that deeply integrates with major frameworks like TensorFlow and PyTorch. While some argue that alternatives like AMD's ROCm exist, others believe that replicating CUDA's ecosystem is a significant challenge, though not insurmountable given sufficient investment.

- Huawei's Competitive Position: There is skepticism about claims that Huawei's Ascend 910C matches Nvidia's H100. Some users argue that the 910C only achieves 60% of the H100's performance, and Huawei's strategy is not to compete directly with Nvidia but to capture market share where Nvidia is restricted, leveraging their own CANN platform as a CUDA equivalent.

- Market Dynamics and Open Source: The discussion touches on the potential for open-source developers to pivot away from open-source models if they achieve AGI. There's a sentiment that Huawei, due to market restrictions, might push their development to catch up with Nvidia, but it could take 3-5 years to reach parity, potentially using third-party channels to access Nvidia hardware in the meantime.

Theme 3. O3 Mini: OpenAI's Usability Leap

- O3 mini actually feels useful (Score: 104, Comments: 17): O3 Mini, an OpenAI model, initially impressed the user by suggesting a smart bug fix for a coding issue, which seemed more effective than what O1 (non-pro) offered. However, upon further evaluation, the suggested solution did not work as expected.

- O3 Mini initially seemed impressive but failed to deliver effective solutions, indicating that other AI models like Claude series might generalize better across tasks. Mescallan suggests that most models show spikes in specific benchmarks but lack generalization.

- O1 Pro is considered more reliable for coding tasks, with MiyamotoMusashi7 expressing trust in it for code-related tasks while acknowledging potential bugs in other areas.

- gentlejolt highlights a workaround for improving code quality by instructing the AI to "rearchitect" and optimize for readability and maintainability, although the end result was only a slightly improved version of the original code.

Theme 4. OpenAI Unveils OpenAI Sans Font

- Refreshed. (Score: 259, Comments: 140): OpenAI has introduced a new font as part of their branding strategy, signaling a refreshed visual identity. The update is part of their ongoing efforts to enhance their brand presence and user engagement.

- Many comments draw a comparison between OpenAI's new font and Apple's design ethos, suggesting that OpenAI's design team might include former Apple UX designers. The design change is seen as a strategic move to own a unique font, similar to Apple's creation of the San Francisco font, which reduces long-term licensing costs.

- There is skepticism about the need for a new font, with comments suggesting the move is more about branding and justifying investor spending rather than substantial innovation. Some users humorously critique the effort, equating it to spending billions to change a font from Arial to Helvetica.

- Several comments highlight the potential disconnect between the design-focused branding strategy and the expectations of a more technically inclined audience. The creation of "OpenAI sans" is seen as a strategic branding move, but its immediate value to non-designers is questioned, with some commenters finding the video presentation excessive and not directly relevant to their interests.

AI Discord Recap

A summary of Summaries of Summaries by o1-mini-2024-09-12

Theme 1. Model Optimization Mania

- DeepSeek R1 Shrinks to Size: The DeepSeek R1 model was successfully quantized to 1.58 bits, slashing its size by 80% from 720GB to 131GB, all while keeping it functional on a MacBook Pro M3 with 36GB RAM.

- Phi-3.5's Censorship Comedy: Users hilariously mocked Phi-3.5's over-the-top censorship, leading to the creation of an uncensored version on Hugging Face.

- Harmonic Loss Hits the Charts: Introducing harmonic loss, a new training loss that outperforms cross-entropy in both speed and interpretability, revolutionizing how models generalize and understand data.
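As a quick check on the DeepSeek R1 size figures above, the reduction can be worked out directly from the two reported sizes. This is only arithmetic on the numbers quoted in the summary (720GB and 131GB); the function name is hypothetical.

```python
def size_reduction(original_gb: float, quantized_gb: float) -> float:
    """Fraction of the original size removed by quantization."""
    return 1.0 - quantized_gb / original_gb


original_gb = 720   # size reported in the summary
quantized_gb = 131  # size reported in the summary

reduction = size_reduction(original_gb, quantized_gb)
ratio = original_gb / quantized_gb

print(f"reduction: {reduction:.1%}")   # a little over the quoted 80%
print(f"compression ratio: {ratio:.2f}x")
```

Note that 131GB still exceeds the 36GB of RAM mentioned, so the reported MacBook run presumably relies on something other than holding all weights in memory at once; the summary does not say how.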

Theme 2. AI Tool Wars

- Cursor Slays Copilot: In the battle of AI coding assistants, Cursor outperformed GitHub's Copilot, offering superior performance and utility, especially in smaller codebases, while Copilot slowed down workflows.

- OpenRouter Welcomes Cloudflare: Cloudflare joins OpenRouter, integrating its Workers AI platform and releasing Gemma 7B-IT with tool calling capabilities, expanding the ecosystem for developers.

- Bolt's Backup Blues: Users voiced frustrations over Bolt's unreliable backups and performance issues, highlighting the need for more robust solutions in the AI development space.

Theme 3. Ethics and Safety Shenanigans

- Anthropic’s 20% Hassle: Anthropic's new constitutional classifiers raised eyebrows with a 20% increase in inference costs and 50% more false refusals, sparking debates on AI safety efficacy.

- EU AI Act Angst: The stringent EU AI Act has the community worried about tight regulations and the future of AI operations within Europe, even before its full enactment.

- AI Copyright Catastrophe: Concerns surged over AI firms using copyrighted data without proper licensing, leading to calls for mandatory licensing systems akin to the music industry to ensure creators are compensated.

Theme 4. Hackathons and Collaborative Sparks

- $35k Hackathon Heat Up: A hackathon run in collaboration with Google DeepMind, Weights & Biases, and others was announced, offering $35k+ in prizes for developing autonomous AI agents that enhance user capabilities.

- R1-V Project Revolution: The R1-V project showcases a model that beats a 72B counterpart with just 100 training steps at under $3, promising to be fully open source and igniting community interest.

- Pi0 Takes Action: Pi0, an advanced Vision Language Action model, was launched on LeRobotHF, enabling autonomous actions through natural language commands and available for fine-tuning on diverse robotic tasks.

Theme 5. AI in Legal and Customer Service Realms

- Lawyers Love NotebookLM: A Brazilian lawyer praises NotebookLM for drafting legal documents efficiently, leveraging its reliable source citations to boost productivity.

- Customer Service Transformation: Users explored how NotebookLM can revolutionize customer service by automating client profile creation and reducing agent training time, making support more scalable and efficient.

- Political AI Agent Launched: The Society Library introduced a Political AI agent to serve as an educational intermediary in digital debates, enhancing digital democracy through AI-driven discussions.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- DeepSeek R1 Quantized!: The DeepSeek R1 model has been quantized to 1.58 bits, resulting in an 80% size reduction from 720GB to 131GB, while maintaining functionality.

- This was achieved by selectively keeping higher bit widths for specific layers rather than naively quantizing everything, which had previously caused issues like gibberish outputs. The quantized model also runs on a MacBook Pro M3 with 36GB of RAM.

- Fine-Tuning Strategies Unveiled: Discussions emphasized that reducing dataset size and focusing on high-quality examples can enhance training outcomes. For code classification tasks, members recommended fine-tuning models that were pretrained on code.

- Participants also explored using models to generate synthetic datasets and adjusting loss functions to effectively manage class imbalances.

- Klarity Library Sees the Light!: The new open-source Klarity library allows for analyzing the entropy of language model outputs, providing enhanced insights into decision-making, and is open for testing with unsloth quantized models.

- The library offers detailed JSON reports for thorough examination; check it out here.

- MoE Experts Config: Static is Key: Confusion regarding the configuration of experts in MoE frameworks was addressed, emphasizing that the number of experts should typically remain static during model operation.

- Users were initially unsure if a default of 8 or a maximum of 256 experts should be used, but clarifications aimed to resolve this uncertainty.

- Bulgarian Model's Impressive Leap!: A Bulgarian language model showcased significant improvements against the base model, with notable perplexity score reductions (PPL: 72.63 vs 179.76 for short text).

- Such reductions in perplexity highlight the model's enhanced capabilities in understanding and processing the Bulgarian language.
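To put the Bulgarian model's perplexity figures in context, here is a minimal sketch of how perplexity is usually defined (the exponential of the mean negative log-likelihood per token) and what the reported drop amounts to in relative terms. The helper name and the toy log-probabilities are illustrative assumptions; only the two PPL values come from the summary.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(-mean log-probability per token), natural log."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Toy example: a model that gives every token probability 0.25
# is as uncertain as a uniform choice among 4 options.
toy = perplexity([math.log(0.25)] * 4)
print(toy)

# Figures reported above for short text.
ppl_finetuned = 72.63
ppl_base = 179.76
relative_drop = 1.0 - ppl_finetuned / ppl_base
print(f"relative perplexity reduction: {relative_drop:.1%}")
```

Lower is better: roughly speaking, a perplexity of 72.63 means the model is about as uncertain as a uniform choice among 73 tokens at each step, versus about 180 for the base model.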

Codeium (Windsurf) Discord

- Windsurf Seeks New Docs Shortcuts: A member is gathering new @docs shortcuts for Windsurf, soliciting contributions to enhance the documentation experience.

- The goal is to improve documentation access with efficient handling of resources, and Mintlify was thanked for auto-hosting all docs with /llms.txt, which allows the agent to avoid HTML parsing.

- Codeium's Performance Suffers: Users report that Claude's tool utilization isn't working well, resulting in high credit usage due to repeated failures, with some suggesting credits shouldn't be deducted when tools produce errors.

- Other members experienced issues when attempting to sign in to their accounts on VSCode, due to internal certificate errors, and sought help via different networks and support.

- Users Question Windsurf O3 Mini Pricing: Users express concern over Windsurf's O3 Mini pricing, questioning if it should match Claude 3.5 Sonnet given its performance and high credit usage.

- Many users are unable to modify files, which often leads to internal errors, so some are requesting fairer pricing.

- Windsurf has Limitations on Model Context Window: Users report issues with Windsurf failing to modify or update files, alongside concerns about limited context windows affecting model performance with a preference for Claude.

- Feedback emphasizes the need for clearer warnings when exceeding context size and addressing tool call failures, while some are exploring creating .windsurfrules files to manage full-stack web applications.

- Hackathon Invites AI Agent Enthusiasts: A collaborative hackathon with $35k+ in prizes was announced, inviting participants to develop autonomous AI agents.

- Participants will pitch projects aimed at improving user capabilities through AI technologies, as the community shares mixed feedback on the reliability of Qodo (formerly Codium).

aider (Paul Gauthier) Discord

- O1 Pro crushes Code Gen Times: Users are seeing massive speedups using O1 Pro, with some generating extensive code in under five minutes, running circles around O3 Mini.

- These users are noting faster response times and better handling of complex tasks.

- Weak Models Provide Strong Value in Aider: For tasks like generating commit messages and summarizing chats, members suggest that weak models can be more cost-effective and efficient than strong models, such as DeepSeek V3.

- The community is looking for budget-friendly yet effective models that can also be fine-tuned.

- OpenRouter preferred over Direct API: Members are finding that using OpenRouter provides better uptime and the ability to prioritize certain providers over direct API access, with CentML and Fireworks being effective deepseek providers, even though they can be slower.

- For more info check out the Aider documentation.

- Aider File Management Automation Sought: Manual file addition in Aider is tedious so users are seeking ways to automate it, with a plugin existing for VSCode that automatically adds the currently opened file.

- It was noted that a repo map is available, so this should be straightforward.

- Clarification on Aider Chat Modes: Members have requested more info on how code, architect, ask, and help modes alter interaction and commands in Aider, with the command /chat-mode switching the active mode.

- The explanation highlighted that the active mode influences model choice as detailed in the Aider documentation.

Cursor IDE Discord

- Cursor IDE Updates Spark Mixed Reactions: Users experienced issues with recent Cursor updates, noting slower performance and bugs compared to previous versions, with some still using it and others expressing frustration.

- Some users feel that the current models can't effectively replace their previous experiences, while others noted that the Fusion Model rollout wasn't clear in the changelogs.

- Alternatives to Cursor Emerge: Users discussed alternatives like Supermaven and Pear AI, with varying opinions; some found Supermaven fast but less reliable than Cursor, especially in the free tier.

- A user shared a link to Repo Prompt and another user shared a link to his repo of AI Dev Helpers.

- Cost of AI Tools Sparks Concerns: The high costs of AI tools like Cursor and GitHub Copilot are concerning to some users, who are worried about affordability.

- While some seek lower-cost options, others believe Cursor's value justifies its price.

- Diverse AI Model Experiences: Experiences vary, with some users successfully building projects using Cursor, while others face frustrations with AI-generated errors; one user shared his 2-minute workflow using DeepSeek R1 + Claude 3.5 Sonnet in this video.

- Discussions included using models like Claude Sonnet and addressing practical challenges.

- Community Mobilizes Around Cursor: Users shared links to GitHub repositories such as awesome-cursorrules for enhanced functionality with Cursor, aiming to optimize its use and improve user experiences for coding tasks.

- These resources enable enhanced functionality with Cursor, like the multi-agent version of the devin.cursorrules project.

Yannic Kilcher Discord

- Deepseek R1 600B Produces Outstanding Results: After presenting Deepseek R1 600B from Together.ai with a difficult grid, a member noted that it produced outstanding results relative to smaller models.

- Screenshots were provided that showed its ability to conclude with the right letter, indicating advanced reasoning capabilities, impressing the AI Engineer audience.

- Anthropic Classifiers Face Cost and Performance Issues: A member shared their concerns regarding the paper on constitutional classifiers, pointing out a 20% increase in inference costs and 50% more false refusals, which affects user experience.

- It was also suggested that classifiers may not sufficiently guard against dangerous capabilities as models become more capable, drawing criticism of current alignment strategies.

- Ethical AI Training Data Provokes Debate: Members debated the challenges of defining 'dubious' data sources for AI training, with concerns raised about the implications of using datasets like Wikipedia and the morality of AI capabilities.

- This highlights a broader debate on data ownership and ethical considerations in AI development, especially in the context of copyright reform.

- AI Companies Hide Behind Copyright Laws: A member highlighted that AI companies often hide behind copyright and patent laws to protect their intellectual property, which creates a dilemma between unrestricted access and tight control.

- Snake-oil selling was mentioned as a critique of these practices, implying deceit in their claims and potentially stifling innovation.

- Hallucinations Considered Natural Behavior: A debate emerged about the concept of 'hallucination' within LLM outputs, with some arguing it's a natural aspect of model behavior rather than a flaw.

- Members criticized the term 'hallucination' as misleading and as anthropomorphizing technology that generates output based on learned patterns, and argued that eliminating it entirely is an unachievable goal.

LM Studio Discord

- Deepseek R1 Underperforms Relative to Qwen: Users found the Deepseek R1 abliterated Llama 8B model underwhelming compared to the smaller Qwen 7B and 1.5B models, noting inconsistent performance.

- One user questioned how to fully uncensor models, highlighting a discrepancy in capabilities between newer versions.

- Clarification on API Model Usage: Discussions clarified that 'local-model' in API calls acts as a placeholder for the specific model name, especially in setups with multiple models loaded (LM Studio API Docs).

- Explicitly obtaining model names before making API requests can prevent ambiguity in model selection, enhanced by REST API stats (LM Studio REST API).

- Intel Mac Support Sunsetted: On Mac, LM Studio is supported only on Apple Silicon, and there are no self-build options as it remains closed-source.

- Users suggested alternative systems for those with Intel-based Macs, since support is not provided.

- RAG Enhances Inference Without Finetuning: Users explored using Retrieval-Augmented Generation (RAG) to enhance inference capabilities in LM Studio for domain-specific tasks without finetuning (LM Studio Docs on RAG).

- The importance of utilizing domain knowledge in vector stores was emphasized as the first step before considering more complex solutions like model finetuning.

- M4 Ultra Performance Doubts: Members expressed skepticism about the M4 Ultra's ability to deliver strong performance with rumors pointing towards a $1200 starting price for a system with 128GB of RAM.

- Some speculate it may not outpace NVIDIA's Project DIGITS, which has superior interconnect speeds for clustering models.
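The 'local-model' placeholder point above can be made concrete with a short sketch. It assumes LM Studio's OpenAI-compatible server is running at its default local address and that the model listing follows the OpenAI list format (a `data` array of objects with an `id`); the helper names and the sample model ids are hypothetical, and the network call is left as a function you would invoke yourself.

```python
import json
import urllib.request

# Assumed default address of the local server; adjust if yours differs.
BASE_URL = "http://localhost:1234/v1"


def list_models(base_url: str = BASE_URL) -> dict:
    """Fetch the model listing from the local server (needs it running)."""
    with urllib.request.urlopen(f"{base_url}/models") as resp:
        return json.load(resp)


def model_ids(models_response: dict) -> list:
    """Extract model identifiers from an OpenAI-style listing."""
    return [m["id"] for m in models_response.get("data", [])]


def chat_payload(model_id: str, prompt: str) -> dict:
    """Build a chat request that names the model explicitly
    instead of relying on a placeholder such as 'local-model'."""
    return {
        "model": model_id,
        "messages": [{"role": "user", "content": prompt}],
    }


# Offline illustration with a made-up listing (hypothetical ids).
sample = {"object": "list", "data": [{"id": "model-a"}, {"id": "model-b"}]}
ids = model_ids(sample)
payload = chat_payload(ids[0], "Hello")
print(json.dumps(payload, indent=2))
```

With several models loaded, calling `list_models()` first and passing one of the returned ids removes any ambiguity about which model answers the request.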

Perplexity AI Discord

- Perplexity Welcomes New Security Lead: Perplexity introduced its new Chief Security Officer (CSO) with a video titled Jimmy, emphasizing the importance of security advancements.

- The announcement is intended to allow the community to align with leadership on new security strategies.

- Perplexity Pro Praised for Query Limits: Users appreciate the Perplexity Pro plan for its almost unlimited daily R1 usage, deeming it a valuable offering.

- One user contrasted it favorably with Deepseek's query limits, praising Perplexity's server performance.

- Sonar Model Deprecation Slows Processes: A user reported receiving a deprecation notice for llama-3.1-sonar-small-128k-online two weeks prior and experienced a 5-10 second latency increase after switching to sonar.

- They inquired about the expected nature of this delay and sought advice on mitigation.

- Sharing Phobia and Motherboard Resources: A user shared a link to websites that list phobias, offering consolidated resources for further reading, along with a link to the MSI A520M A Pro motherboard.

- The MSI A520M link includes detailed comparisons and user experiences while the phobias link lists various phobias and their descriptions.

- API Users Request Image Access: An API user seeking to retrieve images for their PoC discovered the need to be a tier-2 API user to access this feature.

- They asked about the possibility of granting temporary access to utilize their existing credits for image retrieval.

OpenAI Discord

- DeepSeek Sparks Community Concerns: Users discussed how DeepSeek R1 could democratize AI tech, but concerns arose about data potentially being sent to China, leading to calls for increased transparency and for analysis of DeepSeek R1 versus o3-mini performance.

- The discussion included links to a Reddit thread introducing a simpler and OSS version of OpenAI's latest Deep Research feature and a YouTube short questioning whether DeepSeek is being truthful, highlighting privacy and cybersecurity issues.

- O1 Pro Impresses with Mini-Games: Members shared positive experiences with O1 Pro, reporting its ability to generate multiple mini-games without errors in a single session, showcasing its robust performance and leading one user to plan rigorous testing with ambitious prompts.

- The praise for O1 Pro's capabilities sparked broader conversations about model performance and orchestration services in AI.

- Structured Generation Tweaks Model Performance: A member discussed utilizing 'thinking' fields in JSON schemas and Pydantic models to enhance model performance during inference.

- They cautioned that this method can pollute the data structure definition, but the open-sourced UIForm utility simplifies adding and removing fields via JSON Schema extras; it is installable via pip install uiform.

- Users Ponder GPT-4o Reasoning Ability: Users questioned recent enhancements to GPT-4o's reasoning ability and expressed mixed reactions to OpenAI updates, with one user noting increased emoji usage in code responses, which could potentially reduce coding focus.

- A member rated the accuracy of Deep Research information on a scale from 1 to 10, indicating interest in its reliability, while others inquired about device restrictions for the Pro version.
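
For the 'thinking' field idea in the structured generation item above, the following is a minimal sketch using plain JSON Schema dictionaries. It does not use UIForm or Pydantic; the invoice schema, the field names, and both helper functions are hypothetical, and the model response is a hard-coded stand-in.

```python
import copy
import json

# Illustrative schema; the field names are invented for this sketch.
INVOICE_SCHEMA = {
    "type": "object",
    "properties": {
        "vendor": {"type": "string"},
        "total": {"type": "number"},
    },
    "required": ["vendor", "total"],
}


def with_thinking_field(schema: dict, name: str = "thinking") -> dict:
    """Return a copy of schema with a free-text reasoning field placed first."""
    augmented = copy.deepcopy(schema)
    # The reasoning field goes first so the model writes it before the answer fields.
    augmented["properties"] = {
        name: {"type": "string", "description": "Step-by-step reasoning."},
        **augmented["properties"],
    }
    augmented["required"] = [name, *augmented.get("required", [])]
    return augmented


def strip_thinking_field(output: dict, name: str = "thinking") -> dict:
    """Drop the reasoning field so downstream code sees the original shape."""
    return {key: value for key, value in output.items() if key != name}


generation_schema = with_thinking_field(INVOICE_SCHEMA)

# Stand-in for a model response that follows generation_schema.
raw_output = json.loads(
    '{"thinking": "The total is printed at the bottom.", "vendor": "Acme", "total": 42.5}'
)
clean_output = strip_thinking_field(raw_output)
```

Keeping the augmentation in a wrapper leaves the original schema untouched, which is one way to limit the pollution of the data structure definition that the member warned about.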

Interconnects (Nathan Lambert) Discord

- SoftBank injects Billions into OpenAI Ventures: SoftBank is set to invest $3 billion annually in OpenAI products and is establishing a joint venture, Cristal Intelligence, focused on the Japanese market, potentially valuing OpenAI at $300 billion.

- The joint venture aims to deliver a business-oriented version of ChatGPT, marking a significant expansion of OpenAI's reach in Asia according to this tweet.

- Google Gemini Gets Workspace Integrated Overhaul: Gemini for Google Workspace will discontinue add-ons, integrating AI functionalities across Business and Enterprise Editions to boost productivity and data governance, serving over a million users.

- This strategic move aims to transform how businesses employ generative AI, as detailed in Google's official announcement.

- DeepSeek V3 Flexes on Huawei Ascend: The DeepSeek V3 model is now capable of training on Huawei Ascend hardware, expanding its availability to more researchers and engineers.

- Despite concerns about the reliability of performance and cost reduction claims, this integration marks a step forward for the platform according to this tweet.

- OpenAI Eyes Robotics and VR Headsets: OpenAI has filed a trademark signaling its intent to enter the hardware market with humanoid robots and AI-driven VR headsets, potentially challenging Meta and Apple.

- This move would pit OpenAI against the challenges of a crowded hardware market, as noted in this Business Insider article.

- Prime Paper Drops Insights on Implicit Rewards: The highly anticipated Prime paper has been released, featuring contributions from Ganqu Cui and Lifan Yuan, introducing new concepts to optimize model performance through implicit rewards.

- This publication is poised to reshape understanding of reinforcement learning, offering innovative solutions for optimizing model performance.

GPU MODE Discord

- LlamaGen struggles out the gate: The new LlamaGen model promises to deliver top-tier image generation through next-token prediction, potentially outperforming diffusion frameworks like LDM and DiT.

- However, concerns have been raised regarding slow generation times when compared to diffusion models, hinting at potential optimization needs and raising questions about the absence of generation time comparisons in the paper Autoregressive Model Beats Diffusion: Llama for Scalable Image Generation.

- Triton Optimization Challenges Persist: A user reported their Triton code being 200x slower than PyTorch when trying to optimize an intensive memory operation, seeking assistance with performance tuning.

- It was suggested that optimizing k_cross, derived from crossing different rows in matrix k, is crucial for large dimensions, but without autotuning, TMA might not deliver expected improvements over traditional methods.

- Cache Inefficiency strikes again: During a CUDA discussion, members noted that if you have an input that's larger than your L2 and are streaming, then the cache is entirely useless, causing continuous thrashing even within a single stream.

- Concerns arose about increased use of integer operations impacting the performance of FP operations in kernels leveraging tensor cores, with some suggesting that the INT/FP distinction is less relevant if one is limited by FMAs.

- FlashAttention degrades output quality: A user discovered that while using the Flash Attention 3 FP8 kernel increased inference speed in their diffusion transformer model, the output quality degraded significantly.

- A hypothesis suggests that subtle differences between FP32 and FP8 (around 1e-5) accumulate during softmax, affecting attention distributions in long contexts, with NVIDIA's documentation cited as relevant reading.

- Cursor takes the crown, Copilot gets demoted: Users found the difference between Cursor and GitHub's Copilot to be night and day, with Cursor offering superior performance and utility, particularly in smaller codebases.

- The free version of Copilot was reported to slow down workflows and be less helpful overall, especially in larger codebases where human judgement proved more efficient.

Eleuther Discord

- Odds of Functional Language Models Unveiled: Researchers determined the probability of randomly guessing the weights of a functional language model is about 1 in 360 million after they developed a random sampling method in weight space.

- The method may offer insights into understanding network complexity, showcasing the unlikelihood of randomly stumbling upon a functional configuration.

- Harmonic Loss Emerges as a Training Game Changer: A new paper introduces harmonic loss as a more interpretable and faster-converging alternative to cross-entropy loss, showcasing improved performance across various datasets, as detailed in this arXiv paper.

- The harmonic model outperformed standard models in generalization and interpretability, indicating significant benefits for future LLM training; one researcher wondered how harmonically weighted attention would work given the potential benefits as expressed in this tweet.

- Polynomial Transformers Spark Interest: Members discussed the potential of polynomial (quadratic) transformers, suggesting that replacing MLPs could boost model efficiency, particularly in attention mechanisms as seen in Symmetric Power Transformers.

- The conversation revolved around classic models versus bilinear approaches, and highlighted trade-offs in parameter efficiency and complexity at scale.

- Custom LLM Assembly Tool Proposed: A member proposed a drag-and-drop tool for assembling custom LLMs, enabling users to visualize how different architectures and layers affect model behavior in real-time.

- This concept was entertained as a fun side project, reflecting a community interest in hands-on LLM customization.

- DeepSeek Models Encounter Evaluation Hiccups: A member reported poor scores using the llm evaluation harness on DeepSeek distilled models, and suspects

- They requested advice on verifying the issue or ignoring the tags during evaluation, indicating concerns about evaluation bias.

Nous Research AI Discord

- DeepSeek Shifts the AI Landscape: A YouTube video highlighted how DeepSeek has altered the trajectory of AI, sparking debate about the gap between Altman's stated position on open source and his actions.

- Commentary suggested Altman has been labeled a hypeman due to the perceived gap between his words and actual support for open initiatives.

- Recommendation Systems Mature Slowly: A new member, Amith, shared experiences with Gorse, an open-source recommendation system, noting these systems still need time to mature.

- Another member recommended exploring ByteDance's technologies to expand the discussion on available resources for recommendations.

- RL Challenges in Teaching AI Values: Discussion emerged on whether Reinforcement Learning (RL) could instill AI with intrinsic values like curiosity, though complexities in maintaining learned behaviors were noted.

- Juahyori highlighted the difficulty of sustaining learned behaviors in continual learning, emphasizing alignment challenges.

- Political AI Agent Introduced: The Society Library introduced a Political AI agent as part of their nonprofit mission to enhance digital democracy.

- This AI agent will serve as an educational intermediary chatbot in digital debates, leveraging the Society Library's infrastructure.

- SWE Arena Enhances Vibe Coding: SWE Arena supports executing programs in real-time, enabling users to compare coding capabilities across multiple AI models.

- Featuring system prompt customization and code editing, it aligns with the Vibe Coding paradigm, focusing on the AI-generated results at swe-arena.com.

Stability.ai (Stable Diffusion) Discord

- Users Hunt for Image-to-Video Software: A user inquired about image-to-video software, citing NSFW content blocking as a limitation, and another user suggested exploring LTX as a potential solution.

- The inquiry suggests a need for tools that bypass content restrictions while maintaining functionality for diverse content.

- Stable Diffusion Quality Hits a Rut: A user expressed frustration with Stable Diffusion producing poor-quality images repeatedly, specifically mentioning unintentional features like double bodies, seeking advice to clear caches without restarting the software.

- The issue highlights potential challenges in maintaining consistent output quality with Stable Diffusion over prolonged use.

- Smurfette Birthday Wish Sparks Copyright Angst: A user requested help creating a non-NSFW birthday image featuring Smurfette using Stable Diffusion, noting copyright concerns with DALL-E.

- The request underscores the need for models capable of generating specific, family-friendly content while navigating copyright issues.

- Model Performance Debated Amid Censorship Concerns: Users discussed the performance of models like Stable Diffusion 3.5 and Base XL, with varying opinions on their censorship levels and overall effectiveness, with one discussion suggesting that fine-tuning may reduce censorship.

- The discussion reflects ongoing concerns regarding model biases and the trade-offs between censorship and creative control.

- Seeking Precision Character Edits in A1111: A user sought advice on editing individual characters in a multi-person image within A1111 using prompts, aiming to differentiate traits like hair color.

- While techniques like inpainting were mentioned, the user desires a more precise method, indicating a need for advanced editing tools within A1111.

Notebook LM Discord Discord

- Toastinator Launches 'Roast or Toast' Podcast: The AI toaster Toastinator has debuted a podcast called ‘Roast or Toast’, exploring the meaning of life through a mix of celebration and critique.

- The premiere episode invites listeners to witness if The Toastinator toasts or roasts the grand mystery of existence.

- Lawyers Use NotebookLM to Draft Efficiently: A lawyer in Brazil is leveraging NotebookLM to draft legal documents and study cases, citing the tool's source citations for reliability.

- They are now using the tool to adapt templates for repetitive legal documents, significantly boosting process efficiency.

- NotebookLM's Customer Service Potential: A user inquired about NotebookLM's applications in customer service, such as BPOs, focusing on real-world experiences and use cases.

- Potential benefits include reducing agent training time and creating client profiles.

- Google Account Glitches Plague NotebookLM Users: One user reported their regular Google account was disabled while using NotebookLM, suspecting potential age verification problems.

- Another user reinforced the need to carefully examine account settings and permissions when tackling similar issues.

- Workspace Access Woes: Members discussed activating NotebookLM Plus within Google Workspace for select groups instead of the entire organization.

- Instructions were shared on using the Google Admin console to configure access via organizational units, ensuring controlled deployment.

Latent Space Discord

- Anthropic Challenges Users with Claude Constitutional Classifiers: Anthropic launched their Claude Constitutional Classifiers, inviting users to attempt jailbreaks at 8 difficulty levels to test new safety techniques preparing for powerful AI systems.

- The release includes a demo app designed to evaluate and refine these safety measures against potential vulnerabilities.

- FAIR's Internal Conflicts Spark Debate Over Zetta and Llama: Discussions on social media highlighted internal dynamics at FAIR concerning the development of Zetta and Llama models, specifically around transparency and competitive practices (example).

- Key figures like Yann LeCun suggested that smaller, more agile teams have innovated beyond larger projects, prompting calls for a deeper examination of FAIR's organizational culture (example).

- Icon Automates Ad Creation: Icon, blending ChatGPT with CapCut functionalities, was introduced to automate ad creation for brands, with the capability to produce 300 ads monthly (source).

- Supported by investors from OpenAI, Pika, and Cognition, Icon integrates video tagging, script generation, and editing tools to enhance ad quality while significantly reducing expenses.

- DeepMind Drops Textbook on Scaling LLMs: Google DeepMind released a textbook titled How To Scale Your Model, available at jax-ml.github.io/scaling-book/, demystifying the systems view of LLMs with a focus on mathematical approaches.

- The book emphasizes understanding model performance through simple equations, aiming to improve the efficiency of running large models on the JAX software stack and Google's TPU hardware platforms.

- Pi0 Unleashes Autonomous Robotic Actions via Natural Language: The Physical Intelligence team launched Pi0, an advanced Vision Language Action model that uses natural language commands to enable autonomous actions, now available on LeRobotHF (source).

- Alongside the model, pre-trained checkpoints and code have been released, facilitating fine-tuning on diverse robotic tasks.

Nomic.ai (GPT4All) Discord

- MathJax Gains Traction for LaTeX: Members explored integrating MathJax for enhanced LaTeX support, emphasizing the necessity for its SVG export functionalities for broader compatibility.

- The suggestion involved parsing and applying MathJax selectively to document sections containing LaTeX notation.

- DeepSeek Faces LocalDocs Hiccups: Users encountered issues with DeepSeek, reporting errors such as 'item at index 3 is not a prompt' when used with localdocs.

- While awaiting a fix anticipated in the main branch, some found improved performance with specific model versions.

- EU AI Act Sparks Concern: The EU's new AI Act raised concerns due to its stringent regulation of AI use, including prohibitions on certain applications, as detailed in the official documentation.

- Members shared informational resources, noting the significant implications for AI operations within the EU, even before the rules are fully enacted.

- EU's Global Role Draws Fire: A vigorous debate erupted regarding the EU's global political stance, particularly concerning imperialism and human rights.

- Participants exchanged pointed criticisms, highlighting perceived emotional responses and logical fallacies in discussions about EU policies and actions.

- AI Communication Faces Hurdles: Interactions among users spotlighted the difficulties in maintaining mature discussions on intricate subjects such as democracy and governance.

- Calls were made to refocus conversations on AI-related topics, stressing the need for respectful dialogue and awareness of personal biases.

Stackblitz (Bolt.new) Discord

- Supabase Edges Out Firebase in Integration Preference: Members debated Supabase versus Firebase, with preferences leaning towards Supabase due to its seamless integration capabilities for some use cases.

- Some admitted to technical comfort with Firebase, but the conversation highlighted diverse needs in database services.

- Bolt Plagued by Performance Woes: Users reported significant performance issues with Bolt, including slow loading times, authentication errors, and changes failing to update correctly.

- One user mentioned that refreshing the application provided temporary relief, but the intermittent nature of these problems caused ongoing frustration.

- Bolt Users Lament Backup Troubles: A user voiced concern about losing hours of work in Bolt because the most recent backup available was from early January, and also made a feature request to show the .bolt folder.

- Suggestions to check backup settings were made, but the outdated backup highlighted reliability issues.

- GDPR-Compliance Concerns Spark Hosting Hunt: A user questioned the GDPR compliance of Netlify, particularly regarding data processing within the EU (see their privacy policy).

- The query led to a search for alternative hosting solutions that ensure all hosting and data processing activities remain within EU borders to maintain regulatory compliance.

- API Key Authentication Headache: A user struggled with API key authentication for a RESTful API request in Bolt using Supabase edge functions, encountering a 401 Invalid JWT error.

- Frustration arose from the lack of invocations and responses from the edge functions, leaving the user uncertain on how to resolve the authentication problem.

Torchtune Discord

- SFT Dataset Hijacked via Customization: A member successfully hijacked the built-in SFT Dataset by customizing the message_transform and model_transform parameters.

- This allows for format adjustments as needed; as the member stated, "I just had to hijack the message/model transforms to fit my requirements."

- DPO Seed Issue Plagues Recipes: Members are troubleshooting why the seed works for lora/full finetune but not for lora/full DPO, causing different loss curves with the same config.

- Concerns were raised about seed=0 and seed=null affecting randomness in DistributedSampler calls, and a related fix for gradient accumulation in DPO/PPO recipes may be needed; see issue 2334 and issue 2335.

- Ladder-residual Boosts Model Speed: A tweet introduced Ladder-residual, a modification improving the speed of 70B Llama under tensor parallelism by approximately 30%.

- This enhancement reflects ongoing optimization in model architecture collaboration among several authors and researchers.

- Data Augmentation Surveyed for LLMs: A recent survey analyzes the role of data augmentation in large language models (LLMs), highlighting their need for extensive datasets to avoid overfitting; see the paper.

- It discusses distinctive prompt templates and retrieval-based techniques that enhance LLM capabilities through external knowledge, leading to more grounded-truth data.

- R1-V Project Revolutionizes Learning: Exciting news was shared about the R1-V project that utilizes reinforcement learning with verifiable rewards to enhance models' counting abilities; see Liang Chen's Tweet.

- The project showcases a model surpassing a 72B counterpart with just 100 training steps, costing under $3, and promises to be fully open source, spurring community interest.

Modular (Mojo 🔥) Discord

- Community Showcase Finds Forum: The Community Showcase has moved to the Modular forum to improve organization, while the previous showcase is now read-only.

- This transition aims to streamline community interactions and project sharing within the Modular (Mojo 🔥) ecosystem.

- Rust Seeks Hot Reloading: Members are discussing how Rust typically uses a C ABI for hot reloading, which poses challenges with Rust updates and ABI stability.

- Owen inquired about resources for building a toy ABI, highlighting the importance of ABI stability due to frequent data structure changes.

- Mojo Explores Compile-Time Features: A user asked whether Mojo has a feature similar to Rust's #[cfg(feature = "foo")], prompting a discussion on compile-time programming capabilities in Mojo and the importance of a stable ABI.

- The conversation underscored that only a few languages maintain a stable ABI, which is critical for compatibility.

- Python's Asyncio Loop Deconstructed: Discussions on Python's asyncio revealed that it enables community-driven event loops, referencing uvloop on GitHub.

- Participants contrasted this with Mojo's threading and memory management approaches, pointing out potential hurdles.

- Async APIs Face Thread Safety Scrutiny: Concerns were raised about the thread safety of asynchronous APIs, focusing on potentially mutative qualities and the necessity for secure memory handling.

- The discussion emphasized that many current methods lack control over memory allocation, which could lead to complications.
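
To illustrate the asyncio point above about swappable event loops, here is a minimal standard-library sketch. CountingEventLoop and run_on are hypothetical names invented for this example; a third-party loop such as uvloop would be supplied as a different factory, which is not shown here.

```python
import asyncio


class CountingEventLoop(asyncio.SelectorEventLoop):
    """A stock selector loop that counts scheduled callbacks (illustrative only)."""

    def __init__(self):
        # Set the counter first in case the base constructor schedules anything.
        self.scheduled = 0
        super().__init__()

    def call_soon(self, callback, *args, context=None):
        self.scheduled += 1
        return super().call_soon(callback, *args, context=context)


async def add(a: int, b: int) -> int:
    await asyncio.sleep(0)  # Yield control to the loop once.
    return a + b


def run_on(loop_factory, coro):
    """Run coro to completion on a loop built by loop_factory, then close the loop."""
    loop = loop_factory()
    try:
        return loop, loop.run_until_complete(coro)
    finally:
        loop.close()


loop, result = run_on(CountingEventLoop, add(2, 3))
```

The coroutine itself never names a loop implementation, which is what lets alternative loops be dropped in without changing application code.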

LLM Agents (Berkeley MOOC) Discord

- Weston Teaches Self-Improvement in LLMs: Jason Weston lectured on 'Learning to Self-Improve & Reason with LLMs', focusing on innovative methods like Iterative DPO, Self-Rewarding LLMs, and Thinking LLMs for enhancing LLM performance.

- The talk highlighted effective reasoning and task-related learning mechanisms, aiming to improve LLM capabilities across diverse tasks.

- Hackathon Winners Announced: Hackathon winners have been privately notified, with a public announcement expected next week.

- Members are eagerly awaiting further details about the hackathon results.

- MOOC Certificates delayed: The fall program certificates have not been released yet, but they will be available soon.

- Officials thanked participants for their patience regarding the release of the MOOC certificates.

- Research Project Interest Abounds: Members are expressing an interest in participating in research projects.

- More details about the research opportunities and team pairings will be provided soon, according to staff.

- Attendance form is Berkeley-Specific: The attendance form mentioned is only for Berkeley students.

- Concerns were raised about the form's accessibility, as there is a lack of information for non-Berkeley students.

MCP (Glama) Discord

- Legacy ERP Integration Seeks VBS Help: A user is seeking assistance with servers utilizing .vbs scripts for integrating with legacy ERP systems.

- One member suggested using mcpdotnet, as it might simplify invocation from .NET.

- Cursor MCP Server Gets Docker Guidance: A new user requested guidance on running an MCP server locally within Cursor, with a specific interest in using a Docker container.

- Members suggested entering the SSE URL used with supergateway into the Cursor MCP SSE settings to resolve the issue.

- Enterprise MCP Protocol Advances: Discussion around the MCP protocol highlighted a draft for OAuth 2.1 authorization, potentially integrating with IAM systems.

- It was noted that current SDKs lack authorization support due to ongoing internal testing and prototyping.

- Localhost CORS Problems Plague Windows: A user encountered connection problems running their MCP server on localhost, suspecting CORS-related issues.

- They plan to use ngrok to circumvent potential communication issues associated with accessing the server via localhost on Windows.

- ngrok Zaps Localhost Access Issues: A member recommended using ngrok to assess server accessibility, suggesting the command ngrok http 8001.

- They highlighted that this could resolve problems stemming from attempting to access the server through localhost.

Cohere Discord

- Command-R+ Impresses Users with Internal Thoughts: Users are pleased with the Command-R+ model's ability to expose internal thoughts and logical steps, functioning akin to Chain of Thought.

- Despite excitement around newer models, one user noted that Command-R+ continues to surprise them after months of consistent usage.

- Cohere Champions Canadian AI Amid Tariff Concerns: One member chose Cohere to bolster Canadian AI capabilities, particularly given potential US tariffs.

- They appreciate the availability of options that sustain local AI efforts during challenging economic conditions.

- Cohere's Rerank 3.5 Elevates Financial Semantic Search: Cohere and Pinecone presented a webinar highlighting the benefits of Financial Semantic Search and Reranking.

- The webinar showcased Cohere’s Rerank 3.5 model and its potential to enhance overall search performance using financial data.

- Survey Aims to Fine-Tune Tech Content Experience: Recent grads are conducting a survey to gather insights on tech enthusiasts' content consumption preferences, aiming to improve user engagement.

- The survey, available at User Survey, explores sources ranging from Tech Blogs and Research Updates to community forums and AI tools.

DSPy Discord

- DSPy Directory Causes Concern: A user questioned whether a file named dspy.py or a directory called dspy could be causing issues, as Python sometimes struggles with this type of setup.

- This issue raises concerns about potential file handling conflicts that could affect the execution of DSPy projects.

- Image Pipeline Breaks in DSPy 2.6.2: An Image pipeline in DSPy 2.6.2 triggered a ContextWindowExceededError, implying it was 'out of context' due to token limits, whereas version 2.6.1 had worked, albeit with an error that was being investigated.

- The user reported that this regression might have been caused by recent changes to DSPy.

- Assertions Get the Axe in DSPy 2.6.4: Members announced that assertions are being replaced in the upcoming 2.6.4 release, indicating a shift in how error handling is approached in DSPy.

- This change signifies that error handling and logic checks within DSPy will be performed differently from previous versions.

- Databricks Observability Quest: A user running DSPy 2.5.43 in Databricks notebooks for NER and classification sought guidance on achieving structured output.

- Due to restrictions on configuring an LM server, they must use their current version, adding complexity to tasks involving optimizers and nested JSON outputs.
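
On the dspy.py naming question raised above: when a script is run, its own directory is searched first for imports, so a local file with the package's name can hide the installed package. The sketch below is a hypothetical helper (not part of DSPy) that flags such candidates in a project directory; the temporary directory and file are created only for demonstration.

```python
import tempfile
from pathlib import Path


def find_shadowing_paths(package: str, directory: Path) -> list[Path]:
    """Return local files or directories that could shadow `package` on import."""
    candidates = [directory / f"{package}.py", directory / package]
    return [path for path in candidates if path.exists()]


with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp)
    # A local script named like the package is the classic culprit.
    (project / "dspy.py").write_text("# local script that hides the installed package\n")
    shadows = [path.name for path in find_shadowing_paths("dspy", project)]
    clean = find_shadowing_paths("numpy", project)
```

If the check reports a match, renaming the local file or directory is usually the simplest way to rule this cause out.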

LAION Discord

- OpenEuroLLM Kicks Off with EU Flair: The OpenEuroLLM was introduced as the first family of open source large language models covering all EU languages, receiving the STEP Seal for excellence and focusing on community involvement.

- The project aims for compliance with EU regulations and preserving linguistic diversity, aligning with open-source and open science communities like LAION.

- EU AI Endeavors Face Skepticism: Amidst discussions about AI's future under EU regulations, a member jokingly suggested checking back in 2030 to assess the results of the EU's AI endeavors.

- This comment highlights a sense of doubt regarding the immediate tangible outcomes of current AI development efforts.

- Community Mulls Meme Coin Mania: A member gauged the community's interest in meme coins, seeking broader engagement from others.

- They proactively solicited expressions of interest from anyone intrigued by the topic.

LlamaIndex Discord

- DocumentContextExtractor Bolsters RAG: DocumentContextExtractor is an iteration to enhance the accuracy of RAG, implemented as demos by both AnthropicAI and llama_index.

- The technique promises improved performance, making it an important area of exploration for those working on retrieval-augmented generation.

- Contextual Retrieval Changes the Game: The use of Contextual Retrieval has been highlighted as a game-changer in improving response accuracy within RAG systems.

- This technique refines how context is drawn upon during document retrieval, fostering deeper interactions.

- LlamaIndex LLM Class Faces Timeout: A user inquired about implementing a timeout feature in the default LlamaIndex LLM class, noting it's available in OpenAI's API.

- Another member suggested that the timeout option likely belongs in the client kwargs, referring to the LlamaIndex GitHub repository.

- UI Solutions Explored for LlamaIndex: A member expressed curiosity regarding the UI solutions others use with LlamaIndex, questioning if people create it from scratch.

- The inquiry remains open, inviting others to share their user interface practices and preferences related to LlamaIndex.

tinygrad (George Hotz) Discord

- Tinybox faces Eurozone Shipping Limitations: Shipping for the tinybox (red) is unavailable to some Eurozone countries, as confirmed by the support team.

- Users attempting to order to countries like Estonia, which aren't listed in the dropdown menu during checkout, are currently unable to receive shipments.

- Clever Shipping Service workaround Emerges: A user suggested using services like Eurosender to bypass shipping restrictions.

- In the tinybox chat, they confirmed a successful delivery to Germany via this method, providing a solution for users in unsupported regions.

MLOps @Chipro Discord

- Iceberg Management Nightmares: A panel titled Pain in the Ice: What's Going Wrong with My Hosted Iceberg?! will discuss complexities in managing Iceberg with speakers including Yingjun Wu, Alex Merced, and Roy Hasson on February 6 (Meetup Link).

- Managing Iceberg can become a nightmare due to issues like ingestion, compaction, and RBAC, diverting resources from other tasks, and the panel aims to explore how innovations in the field can simplify Iceberg's management and usage.

- Blind LLM Pushing Frustrates: Members voiced concerns about AI engineers who push LLMs for every problem, even when unsupervised learning or other simpler methods may be more appropriate.

- The discussion highlighted a trend where tools are chosen without considering the problem's nature, diminishing the value of simpler methods.

- TF-IDF + Logistic Regression Prevails: A member shared a story of successfully advocating for TF-IDF + Logistic Regression over an OpenAI model for classifying millions of text samples.

- The Logistic Regression model performed adequately, showing that simpler, traditional methods can be effective.
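For readers who have not seen the combination before, here is a minimal from-scratch sketch of TF-IDF features feeding a logistic regression classifier. It is not the member's actual pipeline, which was not shared: the toy texts, labels, function names, and hyperparameters below are all invented for illustration, and it uses only the Python standard library so it runs anywhere.

```python
import math
from collections import Counter

def tokenize(text):
    # Assumption: lowercase whitespace tokenisation is good enough for a demo.
    return text.lower().split()

def fit_tfidf(docs):
    """Learn a vocabulary index and smoothed IDF weights from training docs."""
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))  # count each term once per document
    vocab = {term: i for i, term in enumerate(sorted(df))}
    idf = {term: math.log((1 + n) / (1 + df[term])) + 1.0 for term in vocab}
    return vocab, idf

def transform(doc, vocab, idf):
    """Map a document to an L2-normalised sparse TF-IDF vector {index: weight}."""
    counts = Counter(t for t in tokenize(doc) if t in vocab)
    vec = {vocab[t]: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in vec.values())) or 1.0
    return {i: w / norm for i, w in vec.items()}

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logreg(vectors, labels, dim, lr=0.5, epochs=200):
    """Fit binary logistic regression with plain stochastic gradient descent."""
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        for vec, y in zip(vectors, labels):
            p = sigmoid(bias + sum(weights[i] * w for i, w in vec.items()))
            err = p - y  # gradient of the log loss with respect to the logit
            for i, w in vec.items():
                weights[i] -= lr * err * w
            bias -= lr * err
    return weights, bias

def predict(doc, vocab, idf, weights, bias):
    """Return the predicted probability of the positive class."""
    vec = transform(doc, vocab, idf)
    return sigmoid(bias + sum(weights[i] * w for i, w in vec.items()))

# Hypothetical toy data: 1 = refund request, 0 = positive feedback.
train_texts = [
    "refund my order please",
    "where is my refund",
    "great product love it",
    "love this great service",
]
train_labels = [1, 1, 0, 0]

vocab, idf = fit_tfidf(train_texts)
train_vectors = [transform(t, vocab, idf) for t in train_texts]
weights, bias = train_logreg(train_vectors, train_labels, dim=len(vocab))

print(predict("i want a refund", vocab, idf, weights, bias))
print(predict("love it", vocab, idf, weights, bias))
```

At the scale mentioned above (millions of samples), a library implementation such as scikit-learn's `TfidfVectorizer` and `LogisticRegression` would be the practical choice. The appeal is the same either way: training and inference are cheap, and each learned weight maps back to a specific term.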

OpenInterpreter Discord

- Open Interpreter Project Stalls?: Members raised concerns over the lack of updates on the Open Interpreter project, noting that pull requests on GitHub have sat inactive for months since the last significant commit.

- The silence in the Discord channel was discouraging to contributors who were eager to get involved.

- Open Interpreter Documentation MIA: A member emphasized the missing documentation for version 1.0, particularly regarding the utilization of components like profiles.py.

- The absence of documentation left users questioning the project's current focus and support for its functionalities.

- DeepSeek R1 Integration Still a Mystery: A member asked about integrating DeepSeek R1 into the Open Interpreter environment, but the question was met with silence.

- The lack of community discussion suggests a potential gap in experimentation or knowledge sharing regarding this integration.

OpenRouter (Alex Atallah) Discord

- Cloudflare Joins Forces with OpenRouter: Cloudflare is now officially a provider on OpenRouter, integrating its Workers AI platform and Gemma models and giving developers of AI applications access to a variety of open-source tools.

- The partnership aims to enhance the OpenRouter ecosystem, providing developers with a broader range of AI tools.

- Gemma 7B-IT Adds Tool Calling: The Gemma 7B-IT model, now available through Cloudflare, features tool calling capabilities designed to enhance development efficiency.

- Developers are encouraged to explore Gemma 7B-IT for quicker and more streamlined tool integration in their applications; it's available via OpenRouter.

- Llama Models Swarm OpenRouter: OpenRouter now supports a range of Llama models alongside Gemma 7B-IT, offering numerous options for users to select for their projects.

- AI developers can request specific Llama models via Discord.

- Model Error Display Gets Specific: A display issue that made errors confusing has been resolved, and the model name now appears in error messages.

- This update aims to improve the user experience by providing clearer error feedback.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email!

If you enjoyed AINews, please share with a friend! Thanks in advance!