[AINews] HippoRAG: First, do know(ledge) Graph

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Memory is all LLMs need.

AI News for 6/6/2024-6/7/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (409 channels, and 3133 messages) for you. Estimated reading time saved (at 200wpm): 343 minutes.

A warm welcome to the TorchTune discord. Reminder that we do consider requests for additions to our Reddit/Discord tracking (we will decline Twitter additions - personalizable Twitter newsletters coming soon! we know it's been a long time coming)

With rumors of increasing funding in the memory startup and long running agents/personal AI space, we are seeing rising interest in high precision/recall memory implementations.

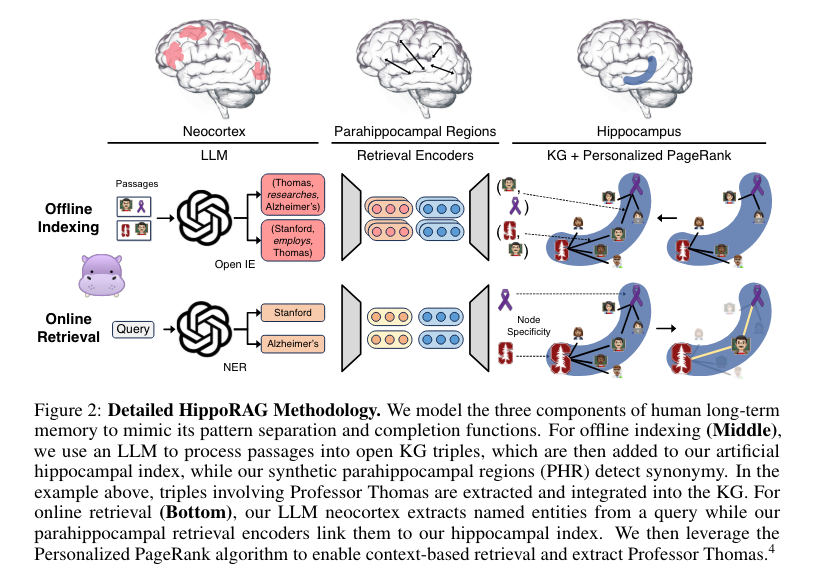

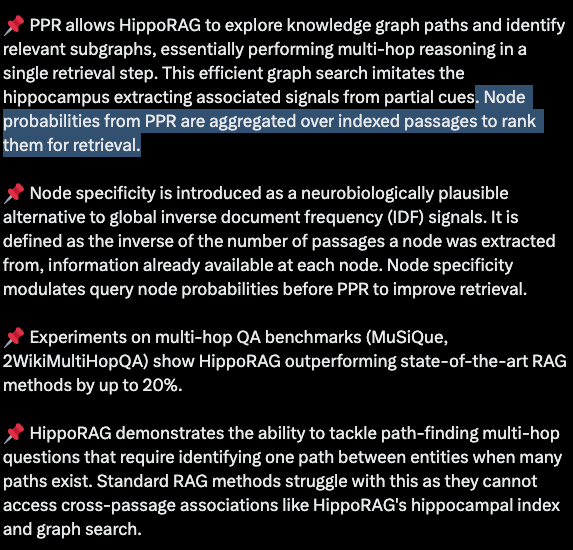

Today's paper isn't as great as MemGPT, but is indicative of what people are exploring. Though we are not big fans of natural intelligence models for artificial intelligence, the HippoRAG paper leans on "hippocampal memory indexing theory" to arrive at a useful implementation of knowledge graphs and "Personalized PageRank", which probably stand on firmer empirical ground.

Ironically the best explanation of methodology comes from a Rohan Paul thread (we are not sure how he does so many of these daily):

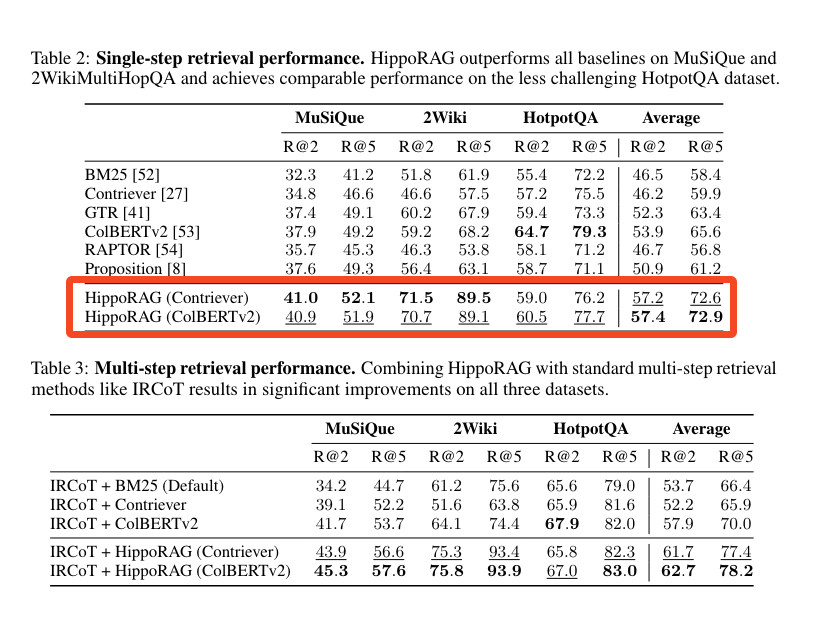

The Single-Step, Multi-Hop retrieval seems to be the key win vs comparable methods 10+ times slower and more expensive:

Section 6 offers a useful, concise literature review of the current techniques to emulate memory in LLM systems.
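
As a rough illustration of the Personalized PageRank step mentioned above, here is a minimal pure-Python sketch. The toy graph, the entity names, and the `personalized_pagerank` helper are all hypothetical and not taken from the HippoRAG code; the paper's graph construction (LLM-extracted triples and so on) is not reproduced here. The idea it shows: seed the random walk on the entities found in the query, and a single ranking pass spreads score to entities several hops away.

```python
def personalized_pagerank(graph, seeds, damping=0.85, iters=50):
    """Power-iteration PPR over an adjacency dict {node: [neighbors]}.

    `seeds` are the query entities; the walk restarts only at those nodes.
    """
    nodes = list(graph)
    # Restart distribution concentrated on the seed (query) nodes.
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(restart)
    for _ in range(iters):
        nxt = {n: (1.0 - damping) * restart[n] for n in nodes}
        for n in nodes:
            nbrs = graph[n]
            if not nbrs:
                # Dangling node: hand its mass back to the seeds.
                for m in nodes:
                    nxt[m] += damping * rank[n] * restart[m]
                continue
            share = damping * rank[n] / len(nbrs)
            for m in nbrs:
                nxt[m] += share
        rank = nxt
    return rank


# Hypothetical toy knowledge graph (undirected edges listed both ways).
toy_graph = {
    "alice": ["acme"],
    "acme": ["alice", "bob"],
    "bob": ["acme", "zurich"],
    "zurich": ["bob"],
    "carol": ["paris"],
    "paris": ["carol"],
}

# Pretend the query mentioned "alice" and "zurich".
scores = personalized_pagerank(toy_graph, seeds={"alice", "zurich"})
ranked = sorted(scores, key=scores.get, reverse=True)
```

Nodes with no path to the query entities keep a score of zero, while the intermediate entities on the path between the seeds pick up score without a second retrieval round.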

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

New AI Models and Architectures

- New SOTA open-source models from Alibaba: @huybery announced the release of Qwen2 models from Alibaba, with sizes ranging from 0.5B to 72B parameters. The models were trained on 29 languages and achieved SOTA performance on benchmarks like MMLU (84.32 for 72B) and HumanEval (86.0 for 72B). All models except the 72B are available under the Apache 2.0 license.

- Sparse Autoencoders for interpreting GPT-4: @nabla_theta introduced a new training stack for Sparse Autoencoders (SAEs) to interpret GPT-4's neural activity. The approach shows promise but still captures only a small fraction of behavior. It eliminates feature shrinking, sets L0 directly, and performs well on the MSE/L0 frontier.

- Hippocampus-inspired retrieval augmentation: @rohanpaul_ai overviewed the HippoRAG paper, which mimics the neocortex and hippocampus for efficient retrieval augmentation. It constructs a knowledge graph from the corpus and uses Personalized PageRank for multi-hop reasoning in a single step, outperforming SOTA RAG methods.

- Implicit chain-of-thought reasoning: @rohanpaul_ai described work on teaching LLMs to do chain-of-thought reasoning implicitly, without explicit intermediate steps. The proposed Stepwise Internalization method gradually removes CoT tokens during finetuning, allowing the model to reason implicitly with high accuracy and speed.

- Enhancing LLM reasoning with Buffer of Thoughts: @omarsar0 shared a paper proposing Buffer of Thoughts (BoT) to enhance LLM reasoning accuracy and efficiency. BoT stores high-level thought templates distilled from problem-solving and is dynamically updated. It achieves SOTA performance on multiple tasks with only 12% of the cost of multi-query prompting.

- Scalable MatMul-free LLMs competitive with SOTA Transformers: @rohanpaul_ai shared a paper claiming to create the first scalable MatMul-free LLM competitive with SOTA Transformers at billion-param scale. The model replaces MatMuls with ternary ops and uses Gated Recurrent/Linear Units. The authors built a custom FPGA accelerator processing models at 13W beyond human-readable throughput.

- Accelerating LoRA convergence with Orthonormal Low-Rank Adaptation: @rohanpaul_ai shared a paper on OLoRA, which accelerates LoRA convergence while preserving efficiency. OLoRA uses orthonormal initialization of adaptation matrices via QR decomposition and outperforms standard LoRA on diverse LLMs and NLP tasks.
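
To make the MatMul-free item above a little more concrete: when weights are restricted to -1, 0, or +1, a matrix-vector product needs no multiplications, only additions and subtractions. The sketch below is a toy illustration of that one idea in plain Python; `ternary_matvec` is a hypothetical helper, not code from the paper, and it ignores the paper's training procedure, scaling factors, and recurrent units.

```python
def ternary_matvec(weights, x):
    """Compute W @ x where every weight is -1, 0, or +1.

    Only additions and subtractions are used; no multiplies.
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # +1 weight: add the input
            elif w == -1:
                acc -= xi      # -1 weight: subtract the input
            # 0 weight: the input is skipped entirely
        out.append(acc)
    return out


# Hypothetical 2x3 ternary weight matrix and input vector.
y = ternary_matvec([[1, 0, -1], [0, 1, 1]], [2.0, 3.0, 5.0])
```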

Multimodal AI and Robotics Advancements

- Dragonfly vision-language models for fine-grained visual understanding: @togethercompute introduced Dragonfly models leveraging multi-resolution encoding & zoom-in patch selection. Llama-3-8b-Dragonfly-Med-v1 outperforms Med-Gemini on medical imaging.

- ShareGPT4Video for video understanding and generation: @_akhaliq shared the ShareGPT4Video series to facilitate video understanding in LVLMs and generation in T2VMs. It includes a 40K GPT-4 captioned video dataset, a superior arbitrary video captioner, and an LVLM reaching SOTA on 3 video benchmarks.

- Open-source robotics demo with Nvidia Jetson Orin Nano: @hardmaru highlighted the potential of open-source robotics, sharing a video demo of a robot using Nvidia's Jetson Orin Nano 8GB board, Intel RealSense D455 camera and mics, and Luxonis OAK-D-Lite AI camera.

AI Tooling and Platform Updates

- Infinity for high-throughput embedding serving: @rohanpaul_ai found Infinity awesome for serving vector embeddings via REST API, supporting various models/frameworks, fast inference backends, dynamic batching, and easy integration with FastAPI/Swagger.

- Hugging Face Embedding Container on Amazon SageMaker: @_philschmid announced general availability of the HF Embedding Container on SageMaker, improving embedding creation for RAG apps, supporting popular architectures, using TEI for fast inference, and allowing deployment of open models.

- Qdrant integration with Neo4j's APOC procedures: @qdrant_engine announced Qdrant's full integration with Neo4j's APOC procedures, bringing advanced vector search to graph database applications.

Benchmarks and Evaluation of AI Models

- MixEval benchmark correlates 96% with Chatbot Arena: @_philschmid introduced MixEval, an open benchmark combining existing ones with real-world queries. MixEval-Hard is a challenging subset. It costs $0.6 to run, has 96% correlation with Arena, and uses GPT-3.5 as parser/judge. Alibaba's Qwen2 72B tops open models.

- MMLU-Redux: re-annotated subset of MMLU questions: @arankomatsuzaki created MMLU-Redux, a 3,000 question subset of MMLU across 30 subjects, to address issues like 57% of questions in Virology containing errors. The dataset is publicly available.

- Questioning the continued relevance of MMLU: @arankomatsuzaki questioned if we're done with MMLU for evaluating LLMs, given saturation of SOTA open models, and proposed MMLU-Redux as an alternative.

- Discovering flaws in open LLMs: @JJitsev concluded from their AIW study that current SOTA open LLMs like Llama 3, Mistral, and Qwen are seriously flawed in basic reasoning despite claiming strong benchmark performance.

Discussions and Perspectives on AI

- Google paper on open-endedness for Artificial Superhuman Intelligence: @arankomatsuzaki shared a Google paper arguing ingredients are in place for open-endedness in AI, essential for ASI. It provides a definition of open-endedness, a path via foundation models, and examines safety implications.

- Debate on the viability of fine-tuning: @HamelHusain shared a talk by Emmanuel Kahembwe on "Why Fine-Tuning is Dead", sparking discussion. While not as bearish, @HamelHusain finds the talk interesting.

- Yann LeCun on AI regulation: In a series of tweets (1, 2, 3), @ylecun argued for regulating AI applications not technology, warning that regulating basic tech and making developers liable for misuse will kill innovation, stop open-source, and are based on implausible sci-fi scenarios.

- Debate on AI timelines and progress: Leopold Aschenbrenner's appearance on the @dwarkesh_sp podcast discussing his paper on AI progress and timelines (summarized by a user) sparked much debate, with views ranging from calling it an important case for an AI capability explosion to criticizing it for relying on assumptions of continued exponential progress.

Miscellaneous

- Perplexity AI commercial during NBA Finals: @AravSrinivas noted that the first Perplexity AI commercial aired during NBA Finals Game 1. @perplexity_ai shared the video clip.

- Yann LeCun on exponential trends and sigmoids: @ylecun argued that every exponential trend eventually passes an inflection point and saturates into a sigmoid as friction terms in the dynamics equation become dominant. Continuing an exponential requires paradigm shifts, as seen in Moore's Law.

- John Carmack on Quest Pro: @ID_AA_Carmack shared that he tried hard to kill the Quest Pro completely as he believed it would be a commercial failure and distract teams from more valuable work on mass market products.

- FastEmbed library adds new embedding types: @qdrant_engine announced FastEmbed 0.3.0 which adds support for image embeddings (ResNet50), multimodal embeddings (CLIP), late interaction embeddings (ColBERT), and sparse embeddings.

- Jokes and memes: Various jokes and memes were shared, including a GPT-4 GGUF outputting nonsense without flash attention (link), @karpathy's llama.cpp update in response to DeepMind's SAE paper (link), and commentary on LLM hype cycles and overblown claims (example).

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Chinese AI Models

- KLING model generates videos: The Chinese KLING AI model has generated several videos of people eating noodles or burgers, positioned as a competitor to OpenAI's SORA. Users discuss the model's accessibility and potential impact. (Video 1, Video 2, Video 3, Video 4)

- Qwen2-72B language model released: Alibaba has released the Qwen2-72B Chinese language model on Hugging Face. It outperforms Llama 3 on various benchmarks according to comparison images. The official release blog is also linked.

AI Capabilities & Limitations

- Open source vs closed models: Screenshots demonstrate how closed models like Bing AI and CoPilot restrict information on certain topics, emphasizing the importance of open source alternatives. Andrew Ng argues that AI regulations should focus on applications rather than restricting open source model development.

- AI as "alien intelligence": Steven Pinker suggests AI models are a form of "alien intelligence" that we are experimenting on, and that the human brain may be similar to a large language model.

AI Research & Developments

- Extracting concepts from GPT-4: OpenAI research on using sparse autoencoders to identify interpretable patterns in GPT-4's neural network, aiming to make the model more trustworthy and steerable.

- Antitrust probes over AI: Microsoft and Nvidia are facing US antitrust investigations over their AI-related business moves.

- Extreme weight quantization: Research on achieving a 7.9x smaller Stable Diffusion v1.5 model with better performance than the original through extreme weight quantization.

AI Ethics & Regulation

- AI censorship concerns: Screenshots of Bing AI refusing to provide certain information spark discussion about AI censorship and the importance of open access to information.

- Testing AI for election risks: Anthropic discusses efforts to test and mitigate potential election-related risks in their AI systems.

- Criticism of using social media data: Plans to use Facebook and Instagram posts for training AI models face criticism.

AI Tools & Frameworks

- Higgs-Llama-3-70B for role-playing: Fine-tuned version of Llama-3 optimized for role-playing released on Hugging Face.

- Removing LLM censorship: Hugging Face blog post introduces "abliteration" method for removing language model censorship.

- Atomic Agents library: New open-source library for building modular AI agents with local model support.

AI Discord Recap

A summary of Summaries of Summaries

1. LLM Advancements and Optimization Challenges:

- Meta's Vision-Language Modeling Guide provides a comprehensive overview of VLMs, including training processes and evaluation methods, helping engineers understand mapping vision to language better.

- DecoupleQ from ByteDance aims to drastically improve LLM performance using new quantization methods, promising 7x compression ratios, though further speed benchmarks are anticipated (GitHub).

- GPT-4o's Upcoming Features include new voice and vision capabilities for ChatGPT Plus users and real-time chat for Alpha users. Read about it in OpenAI's tweet.

- Efficient Inference and Training Techniques like `torch.compile` speed up SetFit models, confirming the importance of experimenting with optimization parameters in PyTorch for performance gains.

- FluentlyXL Final from HuggingFace introduces substantial improvements in aesthetics and lighting, enhancing the AI model's output quality (FluentlyXL).

2. Open-Source AI Projects and Resources:

- TorchTune facilitates LLM fine-tuning using PyTorch, providing a detailed repository on GitHub. Contributions like configuring `n_kv_heads` for mqa/gqa are welcomed with unit tests.

- Unsloth AI's Llama3 and Qwen2 Training Guide offers practical Colab notebooks and efficient pretraining techniques to optimize VRAM usage (Unsloth AI blog).

- Dynamic Data Updates in LlamaIndex help keep retrieval-augmented generation systems current using periodic index refreshing and metadata filters in LlamaIndex Guide.

- AI Video Generation and Vision-LSTM techniques explore dynamic sequence generation and image reading capabilities (Twitter discussion).

- TopK Sparse Autoencoders train effectively on GPT-2 Small and Pythia 160M without caching activations on disk, helping in feature extraction (OpenAI's release).
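
For readers unfamiliar with the TopK idea in the last item: the activation keeps only the k largest latent pre-activations and zeroes the rest, so the number of active features (the L0) is fixed by construction. A minimal plain-Python sketch of just that activation follows; `topk_activation` is a hypothetical name, and the encoder/decoder weights and training loop of a real sparse autoencoder are left out.

```python
def topk_activation(pre_acts, k):
    """Keep the k largest pre-activations and zero out everything else."""
    if k <= 0:
        return [0.0] * len(pre_acts)
    # Indices of the k largest values.
    order = sorted(range(len(pre_acts)), key=lambda i: pre_acts[i], reverse=True)
    keep = set(order[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(pre_acts)]


latents = topk_activation([0.1, 2.0, -1.0, 0.7], k=2)
active = sum(1 for v in latents if v != 0.0)  # the L0 of this latent vector
```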

3. Practical Issues in AI Model Implementation:

- Prompt Engineering in LangChain struggles with repeated steps and early stopping issues, urging users to look for fixes (GitHub issue).

- High VRAM Consumption with Automatic1111 for image generation tasks causes significant delays, highlighting the need for memory management solutions (Stability.ai chat).

- Qwen2 Model Troubleshooting reveals problems with gibberish outputs fixed by enabling flash attention or using proper presets (LM Studio discussions).

- Mixtral 8x7B Model Misconception Correction: Stanford CS25 clarifies it contains 256 experts, not just 8 (YouTube).

4. AI Regulation, Safety, and Ethical Discussions:

- Andrew Ng's Concerns on AI Regulation mirror global debates about stifling AI innovation; comparisons to Russian AI policy discussions reveal varying stances on open-source and ethical AI (YouTube).

- Leopold Aschenbrenner's Departure from OAI sparks fiery debates on the importance of AI security measures, reflecting divided opinions on AI safekeeping (OpenRouter discussions).

- AI Safety in Art Software: Adobe's requirement for access to all work, including NDA projects, prompts suggestions of alternative software like Krita or Gimp for privacy-concerned users (Twitter thread).

5. Community Tools, Tips, and Collaborative Projects:

- Predibase Tools and Enthusiastic Feedback: LoRAX stands out for cost-effective LLM deployment, even amid email registration hiccups (Predibase tools).

- WebSim.AI for Recursive Analysis: AI engineers share experiences using WebSim.AI for recursive simulations and brainstorming on valuable metrics derived from hallucinations (Google spreadsheet).

- Modular's MAX 24.4 Update introduces a new Quantization API and macOS compatibility, enhancing Generative AI pipelines with significant latency and memory reductions (Blog post).

- GPU Cooling and Power Solutions discussed innovative methods for setting up Tesla P40 and similar hardware with practical guides (GitHub guide).

- Experimentation and Learning Resources provided by tcapelle include practical notebooks and GitHub resources for fine-tuning and efficiency (Colab notebook).

PART 1: High level Discord summaries

LLM Finetuning (Hamel + Dan) Discord

- RAG Revamp and Table Transformation Trials: Discontent over the formatting of markdown tables by Marker has led to discussions on fine-tuning the tool for improved output. Alternative table extraction tools like img2table are also under exploration.

- Predictive Text for Python Pros with Predibase: Enthusiasm for Predibase credits and tools is noted, with LoRAX standing out for cost-effective, high-quality LLM deployment. Confirmation and email registration hiccups are prevalent, with requests for help directed to the registration email.

- Open Discussions on Deep LLM Understanding: Posts from tcapelle offer deep dives into LLM fine-tuning with resources like slides and notebooks. Further, studies on pruning strategies highlight ways to streamline LLMs as shared in a NVIDIA GTC talk.

- Cursor Code Editor Catches Engineers' Eyes: The AI code editor Cursor, which leverages API keys from OpenAI and other AI services, garners approval for its codebase indexing and improvements in code completion, even tempting users away from GitHub Copilot.

- Modal GPU Uses and Gists Galore: Modal's VRAM use and A100 GPUs are appraised alongside posted gists for Pokemon card descriptions and Whisper adaptation tips. GPU availability inconsistencies are flagged, while dashboard absence for queue status is noted.

- Learning with Vector and OpenPipe: The discussion included resources for building vector systems with VectorHub's RAG-related content, and articles on the OpenPipe blog received spotlighting for their contribution to the conversation.

- Struggles with Finetuning Tools and Data: Issues with downloading course session recordings are being navigated, as Bulk tool development picks up motivated by the influx of synthetic datasets. Assistance for local Docker space quandaries during Replicate demos was sought without a solution in the chat logs.

- LLM Fine-Tuning Fixes in the Making: A lively chat around fine-tuning complexities unfolded, addressing the concerns over merged LoRA model shard anomalies, and proposed fine-tuning preferences such as Mistral Instruct templates for DPO finetuning. Interestingly, the output discrepancy with token space assembly in Axolotl raised eyebrows, and conversations were geared towards debugging and potential solutions.

Perplexity AI Discord

- Starship Soars and Splashes Successfully: SpaceX's Starship test flight succeeded with landings in two oceans, turning heads in the engineering community; the successful splashdowns in both the Gulf of Mexico and the Indian Ocean indicate marked progress in the program according to the official update.

- Spiced Up Curry Comparisons: Engineers with a taste for international cuisine analyzed the differences between Japanese, Indian, and Thai curries, noting unique spices, herbs, and ingredients; a detailed breakdown was circulated that provided insight into each type's historical origins and typical recipes.

- Promotional Perplexity Puzzles Participants: Disappointment bubbled among users expecting a noteworthy update from Perplexity AI's "The Know-It-Alls" ad; instead, it was a promotional video, leaving many feeling it was more of a tease than a substantive reveal as discussed in general chat.

- AI Community Converses Claude 3 and Pro Search: Discussion flourished over different AI models like Pro Search and Claude 3; details about model preferences, their search abilities, and user experiences were hot topics, alongside the removal of Claude 3 Haiku from Perplexity Labs.

- llava Lamentations and Beta Blues in API Channel: API users inquired about the integration of the llava model and vented over the seemingly closed nature of beta testing for new sources, showing a strong desire for more transparency and communication from the Perplexity team.

HuggingFace Discord

- Electrifying Enhancement with FluentlyXL: The eagerly-anticipated FluentlyXL Final version is now available, promising substantial enhancements in aesthetics and lighting, as detailed on its official page. Additionally, green-minded tech enthusiasts can explore the new Carbon Footprint Predictor to gauge the environmental impact of their projects (Carbon Footprint Predictor).

- Innovations Afoot in AI Model Development: Budding AI engineers are exploring the fast-evolving possibilities within different scopes of model development, from SimpleTuner's new MoE support in version 0.9.6.2 (SimpleTuner on GitHub) to a TensorFlow-based ML Library with its source code and documentation available for peer review on GitHub.

- AI's Ascendancy in Medical and Modeling Musings: A recent YouTube video offers insights into the escalating role of genAI in medical education, highlighting the benefits of tools like Anki and genAI-powered searches (AI in Medical Education). In the open-source realm, the TorchTune project kindles interest for facilitating fine-tuning of large language models, an exploration narrated on GitHub.

- Collider of Ideas in Computer Vision: Enthusiasts are pooling their knowledge to create valuable applications for Vision Language Models (VLMs), with community members sharing new Hugging Face Spaces Apps Model Explorer and HF Extractor that prove instrumental for VLM app development (Model Explorer, HF Extractor, and a relevant YouTube video).

- Engaging Discussions and Demonstrations: Multi-node fine-tuning of LLMs was a topic of debate, leading to a share of an arXiv paper on Vision-Language Modeling, while the Diffusers GitHub repository was highlighted for text-to-image generation scripts that could also serve in model fine-tuning (Diffusers GitHub). A blog post offering optimization insights for native PyTorch and a training example notebook for those eager to train models from scratch were also circulated.

Stability.ai (Stable Diffusion) Discord

- AI Newbies Drowning in Options: A community member expressed both excitement and overwhelm at the sheer number of AI models to explore, capturing the sentiment many new entrants to the field experience.

- ControlNet's Speed Bump: User arti0m reported unexpected delays with ControlNet, resulting in image generation times of up to 20 minutes, contrary to the anticipated speed increase.

- CosXL's Broad Spectrum Capture: The new CosXL model from Stability.ai boasts a more expansive tonal range, producing images with better contrast from "pitch black" to "pure white." Find out more about it here.

- VRAM Vanishing Act: Conversations surfaced about memory management challenges with the Automatic1111 web UI, which appears to overutilize VRAM and affect the performance of image generation tasks.

- Waterfall Scandal Makes Waves: A lively debate ensued about a viral fake waterfall scandal in China, leading to broader discussion on its environmental and political implications.

Unsloth AI (Daniel Han) Discord

- Adapter Reloading Raises Concerns: Members are experiencing issues when attempting to continue training with model adapters, specifically when using `model.push_to_hub_merged("hf_path")`, where loss metrics unexpectedly spike, pointing to potential mishandling in saving or loading processes.

- LLM Pretraining Enhanced with Special Techniques: Unsloth AI's blog outlines the efficiency of continued pretraining for languages like Korean using LLMs such as Llama3, which promises reduced VRAM use and accelerated training, alongside a useful Colab notebook for practical application.

- Qwen2 Model Ushers in Expanded Language Support: Support has been announced for the Qwen2 model, which boasts a substantial 128K context length and coverage for 27 languages, with fine-tuning resources shared by Daniel Han on Twitter.

- Grokking Explored: Discussions delved into a newly identified LLM performance phase termed "Grokking," with community members referencing a YouTube debate and providing links to supporting research for further exploration.

- NVLink VRAM Misconception Corrected: Clarity was provided on NVIDIA NVLink technology, with members explaining that NVLink does not amalgamate VRAM into a single pool, debunking a misconception about its capacity to extend accessible VRAM for computation.

CUDA MODE Discord

- Triton Simplifies CUDA: The Triton language is being recognized for its simplicity in CUDA kernel launches using the grid syntax (`out = kernel[grid](...)`) and for providing easy access to PTX code (`out.asm["ptx"]`) post-launch, enabling a more streamlined workflow for CUDA developers.

- Tensor Troubles in TorchScript and PyTorch Profiling: The inability to cast tensors in TorchScript using `view(dtype)` caused frustration among engineers looking for bit manipulation capabilities with bfloat16s. Meanwhile, the PyTorch profiler was highlighted for its utility in providing performance insights, as shared in a PyTorch profiling tutorial.

- Hinting at Better LoRA Initializations: A blog post Know your LoRA was shared, suggesting that the A and B matrices in LoRA could benefit from non-default initializations, potentially improving fine-tuning outcomes.

- Note Library Unveils ML Efficiency: The Note library's GitHub repository was referenced for offering an ML library compatible with TensorFlow, promising parallel and distributed training across models including Llama2, Llama3, and more.

- Quantum Leaps in LLM Quantization: The channel engaged in deep discussion about ByteDance's 2-bit quantization algorithm, DecoupleQ, and a link to a NeurIPS 2022 paper on an approach improving over the Straight-Through Estimator for quantization was provided, pinpointing considerations for memory and computation in the quantization process.

- AI Framework Discussions Heat Up: The llm.c community delved into discussions ranging from supporting Triton and AMD and addressing BF16 gradient norm determinism to future support for models like Llama 3. The discussion also touched on ensuring 100% determinism in training and on using FineWeb as a dataset, amid considerations for scaling and diversifying data types.
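
On the bfloat16 bit-manipulation point above: the reason people want a "view as another dtype" is that a bfloat16 is just the top 16 bits of a float32. The sketch below shows that reinterpretation with Python's standard `struct` module rather than TorchScript or PyTorch (an intentional substitution so it runs anywhere). The helper names are hypothetical, and the conversion truncates instead of rounding to nearest, which real implementations usually do differently.

```python
import struct


def float32_to_bf16_bits(x):
    """Reinterpret a float32 as a uint32 and keep the upper 16 bits."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    return bits >> 16  # truncation, no rounding


def bf16_bits_to_float32(b):
    """Place 16 bfloat16 bits back into the upper half of a float32."""
    (x,) = struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))
    return x


one_bits = float32_to_bf16_bits(1.0)           # sign 0, exponent 127, mantissa 0
round_trip = bf16_bits_to_float32(one_bits)
```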

OpenAI Discord

- Plagiarism Strikes Research Papers: Five research papers were retracted for plagiarism due to inadvertently including AI prompts within their content; members responded with a mix of humor and disappointment to the oversight.

- Haiku Model: Affordable Quality: Enthusiastic discussions surfaced regarding the Haiku AI model, lauded for its cost-efficiency and commendable performance, even being compared to "gpt 3.5ish quality".

- AI Moderation: A Double-Edged Sword?: The guild was abuzz with the pros and cons of employing AI for content moderation on platforms like Reddit and Discord, weighing the balance between automated action and human oversight.

- Mastering LLMs via YouTube: Members shared beneficial YouTube resources for understanding LLMs better, singling out Kyle Hill's ChatGPT Explained Completely and 3blue1brown for their compelling mathematical explanations.

- GPT's Shifting Capabilities: GPT-4o is being introduced to all users, with new voice and vision capabilities earmarked for ChatGPT Plus. Meanwhile, the community is contending with frequent modification notices for custom GPTs and challenges in utilizing GPT with CSV attachments.

LM Studio Discord

- Celebration and Collaboration Within LM Studio: LM Studio marks its one-year milestone, with discourse on utilizing multiple GPUs—Tesla K80 and 3090 recommended for consistency—and running multiple instances for inter-model communication. Emphasis on GPUs over CPUs for LLMs highlighted, alongside practicality issues presented when considering LM Studio's use on powerful hardware like PlayStation 5 APUs.

- Higgs Enters with a Bang: Anticipation is high for an LMStudio update which will incorporate the impressive Higgs LLAMA, a hefty 70-billion parameter model that could potentially offer unprecedented capabilities and efficiencies for AI engineers.

- Curveballs and Workarounds in Hardware: GPU cooling and power supply for niche hardware like the Tesla P40 stir creative discussions, from jury-rigging Mac GPU fans to elaborate cardboard ducts. Tips include exploring a GitHub guide to deal with the bindings of proprietary connections.

- Model-Inclusive Troubleshooting: Fixes for Qwen2 gibberish involve toggling flash attention, while the perils of cuda offloading with Qwen2 are acknowledged with anticipation for llama.cpp updates. A member's experience of mixed results with llava-phi-3-mini-f16.gguf via API stirs further model diagnostics chat.

- Fine-Tuning Fine Points: A nuanced take on fine-tuning highlights style adjustments via LoRA versus SFT's knowledge-based tuning; LM Studio's limitations on system prompt names sans training; and strategies to counter 'lazy' LLM behaviors, such as power upgrades or prompt optimizations.

- ROCm Rollercoaster with AMD Technology: Users exchange tips and experiences on enabling ROCm on various AMD GPUs, like the 6800m and 7900xtx, with suggestions for Arch Linux use and workarounds for Windows environments to optimize the performance of their LLM setups.

Latent Space Discord

- AI Engineers, Get Ready to Network: Engineers interested in the AI Engineer event will have session access with the Expo Explorer ticket, though the speaker lineup is still to be finalized.

- KLING Equals Sora: KWAI's new Sora-like model called KLING is generating buzz with its realistic demonstrations, as showcased in a tweet thread by Angry Tom.

- Unpacking GPT-4o's Imagery: The decision by OpenAI to use 170 tokens for processing images in GPT-4o is dissected in an in-depth post by Oran Looney, discussing the significance of "magic numbers" in programming and their latest implications on AI.

- 'Hallucinating Engineers' Have Their Say: The concept of GPT's "useful-hallucination paradigm" was debated, highlighting its potential to conjure up beneficial metrics, with parallels being drawn to "superprompts" and community-developed tools like Websim AI.

- Recursive Realities and Resource Repository: AI enthusiasts experimented with the self-referential simulations of websim.ai, while a Google spreadsheet and a GitHub Gist were shared for collaboration and expansive discussion in future sessions.
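
For context on the 170-token figure above: OpenAI's published pricing rule for high-detail images, as we understand it, charges 85 base tokens plus 170 tokens per 512px tile after resizing. The sketch below encodes that rule. The function name is ours, and the resize steps (fit within 2048x2048, then shrink the shortest side to 768) are an assumption based on OpenAI's documentation rather than on the post itself.

```python
import math


def gpt4o_image_tokens(width, height, base=85, per_tile=170, tile=512):
    """Estimate token cost of a high-detail image under the assumed rule."""
    # Step 1: shrink to fit inside a 2048x2048 square, keeping aspect ratio.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: shrink so the shortest side is at most 768px.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # Step 3: count the 512px tiles needed to cover the resized image.
    tiles = math.ceil(w / tile) * math.ceil(h / tile)
    return base + per_tile * tiles


square = gpt4o_image_tokens(1024, 1024)   # resized to 768x768, 4 tiles
tall = gpt4o_image_tokens(2048, 4096)     # resized to 768x1536, 6 tiles
```

Under these assumptions a 1024x1024 image comes to 765 tokens and a 2048x4096 image to 1105.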

Nous Research AI Discord

- Mixtral's Expert Count Revealed: An enlightening Stanford CS25 talk clears up a misconception about Mixtral 8x7B, revealing it contains 32x8 experts (8 in each of its 32 layers, 256 in total), not just 8. This intricacy highlights the complexity behind its MoE architecture.

- DeepSeek Coder Triumphs in Code Tasks: As per a shared introduction on Hugging Face, the DeepSeek Coder 6.7B takes the lead in project-level code completion, showcasing superior performance trained on a massive 2 trillion code tokens.

- Meta AI Spells Out Vision-Language Modeling: Meta AI offers a comprehensive guide on Vision-Language Models (VLMs) with "An Introduction to Vision-Language Modeling", detailing their workings, training, and evaluation for those enticed by the fusion of vision and language.

- RAG Formatting Finesse: The conversation around RAG dataset creation underscores the need for simplicity and specificity, rejecting cookie-cutter frameworks and emphasizing tools like Prophetissa that utilize Ollama and emo vector search for dataset generation.

- WorldSim Console's Mobile Mastery: The latest WorldSim console update remedies mobile user interface issues, improving the experience with bug fixes on text input, enhanced `!list` commands, and new settings for disabling visual effects, all while integrating versatile Claude models.

LlamaIndex Discord

- RAG Steps Toward Agentic Retrieval: A recent talk at SF HQ highlighted the evolution of Retrieval-Augmented Generation (RAG) to fully agentic knowledge retrieval. The move aims to overcome the limitations of top-k retrieval, with resources to enhance practices available through a video guide.

- LlamaIndex Bolsters Memory Capabilities: The Vector Memory Module in LlamaIndex has been introduced to store and retrieve user messages through vector search, bolstering the RAG framework. Interested engineers can explore this feature via the shared demo notebook.

- Enhanced Python Execution in Create-llama: Integration of Create-llama with e2b_dev’s sandbox now permits Python code execution within agents, an advancement that enables the return of complex data, such as graph images. This new feature broadens the scope of agent applications as detailed here.

- Synchronizing RAG with Dynamic Data: Implementing dynamic data updates in RAG involves reloading the index to reflect recent changes, a challenge addressed by using periodic index refreshing. Management of datasets, like sales or support documentation, can be optimized through multiple indexes or metadata filters, with practices outlined in Document Management - LlamaIndex.

- Optimizations and Entity Resolution with Embeddings in LlamaIndex: Creating property graphs with embeddings directly uses the LlamaIndex framework, and entity resolution can be enhanced by adjusting the `chunk_size` parameter. Managing these functions can be better understood through guides like "Optimization by Prompting" for RAG and the LlamaIndex Guide.
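
The vector memory idea from the list above (store user messages, then retrieve them through vector search) can be sketched in a few lines. This is a toy illustration and not the LlamaIndex API: `ToyVectorMemory`, `embed`, and the bag-of-words "embedding" are hypothetical stand-ins for a real embedding model and vector store.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: stands in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorMemory:
    """Hypothetical memory: stores messages and returns the most similar ones."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def put(self, message: str) -> None:
        # Store the raw message next to its vector so it can be ranked later.
        self._items.append((message, embed(message)))

    def get(self, query: str, top_k: int = 1) -> list[str]:
        # Rank every stored message by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [message for message, _ in ranked[:top_k]]


memory = ToyVectorMemory()
memory.put("my favorite color is blue")
memory.put("I live in Lisbon")
memory.put("the sales report is due on Friday")
result = memory.get("what is my favorite color")
```

A real setup would swap the word counts for dense embeddings and the linear scan for an index, but the store-then-rank flow stays the same.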

Modular (Mojo 🔥) Discord

- Andrew Ng Rings Alarm Bells on AI Regulation: Andrew Ng cautions against California's SB-1047, fearing it could hinder AI advancements. Engineers in the guild compare global regulatory landscapes, highlighting that even without U.S. restrictions, countries like Russia lack comprehensive AI policy, as seen in a video with Putin and his deepfake.

- Mojo Gains Smarter, Not Harder: The `isdigit()` function's reliance on `ord()` for performance is confirmed, leading to an issue report when problems arise. Async capabilities in Mojo await further development, and `__type_of` is suggested for variable type checks, with the VSCode extension assisting in pre/post compile identification.

- MACS Make Their MAX Debut: Modular's MAX 24.4 release now supports macOS, flaunts a Quantization API, and community contributors surpass the 200 mark. The update can potentially slash latency and memory usage significantly for AI pipelines.

- Dynamic Python in the Limelight: The latest nightly release enables dynamic `libpython` selection, helping streamline the environment setup for Mojo. However, pain points persist with VS Code's integration, necessitating manual activation of `.venv`, detailed in the nightly changes along with the introduction of microbenchmarks.

- Anticipation High for Windows Native Mojo: Engineers jest and yearn for the pending Windows native Mojo release, its timeline shrouded in mystery. The eagerness for such a release underscores its importance to the community, suggesting substantial Windows-based developer interest.
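
The `isdigit()`-via-`ord()` point in the list above amounts to a range check on a character's code point. Here is a minimal sketch of that idea in Python rather than Mojo, so it illustrates the technique and is not Mojo's standard-library implementation; `is_ascii_digit` and `all_ascii_digits` are hypothetical names.

```python
def is_ascii_digit(ch: str) -> bool:
    """Return True if ch is a single ASCII digit, using a code-point range check."""
    if len(ch) != 1:
        return False
    return ord("0") <= ord(ch) <= ord("9")


def all_ascii_digits(text: str) -> bool:
    """Return True if text is non-empty and every character is an ASCII digit."""
    return bool(text) and all(is_ascii_digit(ch) for ch in text)
```

The comparison works because the digits `0` through `9` occupy consecutive code points.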

Eleuther Discord

- Engineers Get Hands on New Sparse Autoencoder Library: The recent tweet by Nora Belrose introduces a training library for TopK Sparse Autoencoders, optimized on GPT-2 Small and Pythia 160M, that can train an SAE for all layers simultaneously without the need to cache activations on disk.

- Advancements in Sparse Autoencoder Research: A new paper reveals the development of k-sparse autoencoders that enhance the balance between reconstruction quality and sparsity, which could significantly influence the interpretability of language model features.

- The Next Leap for LLMs, Courtesy of the Neocortex: Members discussed the Thousand Brains Project by Jeff Hawkins and Numenta, which looks to implement the neocortical principles into AI, focusing on open collaboration—a nod to nature's complex systems for aspiring engineers.

- Evaluating Erroneous File Path Chaos: Addressing a known issue, members reassured that file handling, particularly erroneous result file placements—to be located in the tmp folder—is on the fix list, as indicated by the ongoing PR by KonradSzafer.

- Unearthing the Unpredictability of Data Shapley: Discourse on an arXiv preprint unfolded, evaluating Data Shapley's inconsistent performance in data selection across diverse settings, suggesting engineers should keep an eye on the proposed hypothesis testing framework for its potential in predicting Data Shapley’s effectiveness.
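
The TopK (k-sparse) autoencoders mentioned in the list above rest on a simple activation rule: keep the k largest values in the latent vector and zero out the rest. The sketch below shows only that rule in plain Python. It is not taken from the library or the paper, `top_k_activation` is a hypothetical name, and real implementations operate on batched tensors.

```python
def top_k_activation(pre_activations: list[float], k: int) -> list[float]:
    """Keep the k largest values and set every other entry to zero."""
    if k <= 0:
        return [0.0] * len(pre_activations)
    # Indices of the k largest entries (ties keep the earlier position).
    order = sorted(
        range(len(pre_activations)),
        key=lambda i: pre_activations[i],
        reverse=True,
    )
    keep = set(order[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(pre_activations)]


latents = top_k_activation([0.1, 2.5, -0.3, 1.7, 0.9], k=2)
```

Fixing k sets the sparsity level directly, instead of tuning a penalty term to reach it.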

Interconnects (Nathan Lambert) Discord

- AI Video Synthesis Wars Heat Up: A Chinese AI video generator has outperformed Sora, offering stunning 2-minute, 1080p videos at 30fps through the KWAI iOS app, generating notable attention in the community. Meanwhile, Johannes Brandstetter announces Vision-LSTM which incorporates xLSTM's capacity to read images, providing code and a preprint on arxiv for further exploration.

- Anthropic's Claude API Access Expanded: Anthropic is providing API access for alignment research, requiring an institution affiliation, role, LinkedIn, Github, and Google Scholar profiles for access requests, facilitating deeper exploration into AI alignment challenges.

- Daylight Computer Sparks Interest: The new Daylight computer lured significant interest due to its promise of reducing blue light emissions and enhancing visibility in direct sunlight, sparking discussions about its potential benefits over existing devices like the iPad mini.

- New Frontiers in Model Debugging and Theory: Engaging conversations unfolded around novel methods analogous to "self-debugging models," which leverage mistakes to improve outputs, alongside discussions on the craving for analytical solutions in complex theory-heavy papers like DPO.

- Challenges in Deepening Robotics Discussions: Members pressed for deeper insights into monetization strategies and explicit numbers in robotics content, with specific callouts for a more granulated breakdown of the "40000 high-quality robot years of data" and a closer examination of business models in the space.

Cohere Discord

- Free Translation Research with Aya but Costs for Commercial Use: While Aya is free for academic research, commercial applications require payment to sustain the business. Users facing integration challenges with the Vercel AI SDK and Cohere can find guidance and have taken steps to contact SDK maintainers for support.

- Clever Command-R-Plus Outsmarts Llama3: Users suggest that Command-R-Plus outperforms Llama3 in certain scenarios, citing subjective experiences with its performance outside language specifications.

- Data Privacy Options Explored for Cohere Usage: For those concerned with data privacy when using Cohere models, details and links were shared on how to utilize these models on personal projects either locally or on cloud services like AWS and Azure.

- Developer Showcases Full-Stack Expertise: A full-stack developer portfolio is available, showcasing skills in UI/UX, Javascript, React, Next.js, and Python/Django. The portfolio can be reviewed at the developer's personal website.

- Spotlight on GenAI Safety and New Search Solutions: Rafael is working on a product to prevent hallucinations in GenAI applications and invites collaboration, while Hamed has launched Complexity, an impressive generative search engine, inviting users to explore it at cplx.ai.

OpenRouter (Alex Atallah) Discord

- Qwen 2 Supports Korean Too: Voidnewbie mentioned that the Qwen 2 72B Instruct model, recently added to OpenRouter's offerings, supports Korean language as well.

- OpenRouter Battles Gateway Gremlins: Several users encountered 504 gateway timeout errors with the Llama 3 70B model; database strain was identified as the culprit, prompting a migration of jobs to a read replica to improve stability.

- Routing Woes Spur Technical Dialogue: Members reported WizardLM-2 8X22 producing garbled responses via DeepInfra, leading to advice to manipulate the `order` field in request routing and allusions to an in-progress internal endpoint deployment to help resolve service provider issues.

- Fired Up Over AI Safety: The dismissal of Leopold Aschenbrenner from OAI kicked off a fiery debate among members about the importance of AI security, reflecting a divide in perspectives on the need for and implications of AI safekeeping measures.

- Performance Fluctuations with ChatGPT: Observations were shared about ChatGPT's possible performance drops during high-traffic periods, sparking speculations about the effects of heavy load on service quality and consistent user experience.
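
The advice above about the `order` field can be pictured as a request body that lists preferred providers in priority order. The sketch below only builds such a body and sends nothing. The `provider.order` nesting, the model slug, and the provider names are assumptions for illustration and should be checked against OpenRouter's own documentation; `build_request` is a hypothetical helper.

```python
import json


def build_request(model: str, prompt: str, provider_order: list[str]) -> dict:
    """Build a chat-completion request body that states a provider preference order."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumed shape of the routing preference: providers are tried in this order.
        "provider": {"order": provider_order},
    }


body = build_request(
    model="microsoft/wizardlm-2-8x22b",  # assumed model slug
    prompt="Say hello.",
    provider_order=["Together", "DeepInfra"],  # hypothetical provider names and order
)
print(json.dumps(body, indent=2))
```

Moving a misbehaving provider later in the list (or leaving it out) is the kind of adjustment the discussion pointed to.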

OpenAccess AI Collective (axolotl) Discord

- Flash-attn Installation Demands High RAM: Members highlighted difficulties when building flashattention on slurm; solutions include loading necessary modules to provide adequate RAM.

- Finetuning Foibles Fixed: Configuration issues with Qwen2 72b's finetuning were reported, suggesting a need for another round of adjustments, particularly because of an erroneous setting of max_window_layers.

- Guide Gleam for Multi-Node Finetuning: A pull request for distributed finetuning using Axolotl and Deepspeed was shared, signifying an increase in collaborative development efforts within the community.

- Data Dilemma Solved: A member's struggle with configuring a test_datasets in JSONL format was resolved by adopting the structure specified for axolotl.cli.preprocess.

- API Over YAML for Engineered Inferences: Confusion over Axolotl's configuration for API usage versus YAML setups was clarified, with a focus on broadening capabilities for scripted, continuous model evaluations.

LAION Discord

- A Contender to Left-to-Right Sequence Generation: The novel σ-GPT, developed with SkysoftATM, challenges traditional GPTs by generating sequences dynamically, potentially cutting steps by an order of magnitude, detailed in its arXiv paper.

- Debating σ-GPT's Efficient Learning: Despite σ-GPT's innovative approach, skepticism arises regarding its practicality, as a curriculum for high performance might limit its use, drawing parallels with XLNET's limited impact.

- Exploring Alternatives for Infilling Tasks: For certain operations, models like GLMs may prove to be more efficient, while finetuning with distinct positional embeddings could enhance RL-based non-textual sequence modeling.

- AI Video Generation Rivalry Heats Up: A new Chinese AI video generator on the KWAI iOS app churns out 2-minute videos at 30fps in 1080p, causing buzz, while another generator, Kling, with its realistic capabilities, is met with skepticism regarding its authenticity.

- Community Reacts to Schelling AI Announcement: Emad Mostaque's tweet about Schelling AI, which aims to democratize AI and AI compute mining, ignites a mix of skepticism and humor due to the use of buzzwords and ambitious claims.

LangChain AI Discord

- Early Stopping Snag in LangChain: Discussions highlighted an issue with the `early_stopping_method="generate"` option in LangChain not functioning as expected in newer releases, prompting a user to link an active GitHub issue. The community is exploring workarounds and awaiting an official fix.

- RAG and ChromaDB Privacy Concerns: Queries about enhancing data privacy when using LangChain with ChromaDB surfaced, with suggestions to utilize metadata-based filtering within vectorstores, as discussed in a GitHub discussion, though acknowledging the topic's complexity.

- Prompt Engineering for LLaMA3-70B: Engineers brainstormed effective prompting techniques for LLaMA3-70B to perform tasks without redundant prefatory phrases. Despite several attempts, no definitive solution emerged from the shared dialogue.

- Apple Introduces Generative AI Guidelines: An engineer shared Apple's newly formulated generative AI guiding principles aimed at optimizing AI operations on Apple's hardware, potentially useful for AI application developers.

- Alpha Testing for B-Bot App: An announcement for a closed alpha testing phase of the B-Bot application, a platform for expert knowledge exchange, was made, with invites extended here seeking testers to provide development feedback.
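
The metadata-based filtering suggested above for privacy can be illustrated without any particular vector store: restrict the candidate documents by metadata before anything is ranked or returned. This is a plain-Python sketch, not the LangChain or ChromaDB API; `Doc`, `filter_by_metadata`, and the `owner` key are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    text: str
    metadata: dict = field(default_factory=dict)


def filter_by_metadata(docs: list[Doc], required: dict) -> list[Doc]:
    """Return only documents whose metadata matches every required key/value pair."""
    return [
        d for d in docs
        if all(d.metadata.get(key) == value for key, value in required.items())
    ]


docs = [
    Doc("Alice's contract terms", {"owner": "alice"}),
    Doc("Bob's medical notes", {"owner": "bob"}),
    Doc("Alice's meeting summary", {"owner": "alice"}),
]
# Only Alice's documents are eligible for retrieval in her session.
visible = filter_by_metadata(docs, {"owner": "alice"})
```

In a real vector store the filter would normally be passed along with the query so that excluded documents never reach the similarity search.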

Torchtune Discord

- Phi-3 Model Export Confusion Cleared Up: Users addressed issues with exporting a custom phi-3 model to and from Hugging Face, pinpointing potential config missteps from a GitHub discussion. It was noted that using the FullModelHFCheckpointer, Torchtune handles conversions between its format and HF format during checkpoints.

- Clarification and Welcome for PRs: Inquiry about enhancing Torchtune with n_kv_heads for mqa/gqa was met with clarification and encouragement for pull requests, with the prerequisite of providing unit tests for any proposed changes.

- Dependencies Drama in Dev Discussions: Engineers emphasized precise versioning of dependencies for Torchtune installation and highlighted issues stemming from version mismatches, referencing situations like Issue #1071, Issue #1038, and Issue #1034.

- Nightly Builds Get a Nod: A consensus emerged on the necessity of clarifying the need for PyTorch nightly builds to use Torchtune's complete feature set, as some features are exclusive to these builds.

- PR Prepped for Clearer Installation: A community member announced they are prepping a PR specifically to update the installation documentation of Torchtune, to address the conundrum around dependency versioning and the use of PyTorch nightly builds.

AI Stack Devs (Yoko Li) Discord

- Game Over for Wokeness: Discussion in the AI Stack Devs channel revolved around gaming studios such as those behind Stellar Blade; members applauded the studios' focus on game quality over aspects such as Western SJW themes and DEI measures.

- Back to Basics in Gaming: Members expressed admiration for Chinese and South Korean developers like Shift Up for concentrating on game development without getting entangled in socio-political movements such as feminism, despite South Korea's societal challenges with such issues.

- Among AI Town - A Mod in Progress: An AI-Powered "Among Us" mod was the subject of interest, with game developers noting AI Town's efficacy in the early stages, albeit with some limitations and performance issues.

- Leveling Up With Godot: A transition from AI Town to using Godot was mentioned in the ai-town-discuss channel as a step to add advanced features to the "Among Us" mod, signifying improvements and expansion beyond initial capabilities.

- Continuous Enhancement of AI Town: AI Town's development continues, with contributors pushing the project forward, as indicated in the recent conversations about ongoing advancements and updates.

OpenInterpreter Discord

- Is There a Desktop for Open Interpreter?: A guild member queried about the availability of a desktop UI for Open Interpreter, but no response was provided in the conversation.

- Open Interpreter Connection Trials and Tribulations: A user was struggling with Posthog connection errors, particularly with `us-api.i.posthog.com`, which indicates broader issues within their setup or external service availability.

- Configuring Open Interpreter with OpenAPI: Discussion revolved around whether Open Interpreter can utilize existing OpenAPI specs for function calling, suggesting a potential solution through a true/false toggle in some configuration.

- Tool Use with Gorilla 2: Challenges with tool use in LM Studio and achieving success with custom JSON output and OpenAI toolcalling were shared. A recommendation was made to check out an OI Streamlit repository on GitHub for possible solutions.

- Looking for Tips? Check OI Website: In a succinct reply to a request, ashthescholar. directed a member to explore Open Interpreter's website for guidance.

DiscoResearch Discord

- Mixtral's Expert System Unveiled: A clarifying YouTube video dispels the myth about Mixtral, confirming it comprises 256 experts across its layers and boasting a staggering 46.7 billion parameters, with 12.9 billion active parameters for token interactions.

- Which DiscoLM Reigns Supreme?: Confusion permeates discussions over the leading DiscoLM model, with multiple models vying for the spotlight and recommendations favoring 8b llama for systems with just 3GB VRAM.

- Maximizing Memory on Minimal VRAM: A user successfully runs Mixtral 8x7b at 6-7 tokens per second on a 3GB VRAM setup using a Q2-k quant, highlighting the importance of memory efficiency in model selection.

- Re-evaluating Vagosolutions' Capabilities: Recent benchmarks have sparked new interest in Vagosolutions' models, leading to debates on whether finetuning Mixtral 8x7b could triumph over a finetuned Mistral 7b.

- RWKV vs Transformers - Decoding the Benefits: The guild has yet to address the request for insights into the intuitive advantages of RWKV models compared to Transformers, suggesting either a potential oversight or the need for more investigation.
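
The Mixtral figures quoted in the list above can be sanity-checked with a little arithmetic. The sketch uses only the numbers from the summaries (256 experts, 46.7 billion total parameters, 12.9 billion active) plus one assumption taken from the "32x8" breakdown quoted in the Nous Research summary: 8 experts per layer.

```python
# Figures quoted in the summary above.
total_experts = 256
total_params_b = 46.7   # billions
active_params_b = 12.9  # billions

# Assumption (from the "32x8" breakdown quoted elsewhere in this issue): 8 experts per layer.
experts_per_layer = 8
layers = total_experts // experts_per_layer

# Share of the parameters that take part in processing any single token.
active_fraction = active_params_b / total_params_b

print(f"layers: {layers}")
print(f"active share of parameters: {active_fraction:.1%}")
```

Under these numbers, a little over a quarter of the parameters are active per token, which is why the model runs far cheaper than its total size suggests.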

Datasette - LLM (@SimonW) Discord

- LLMs Eyeing New Digital Territories: Members shared developments hinting at large language models (LLMs) being integrated into web platforms, with Google considering LLMs for Chrome (Chrome AI integration) and Mozilla experimenting with transformers.js for local alt-text generation in Firefox Nightly (Mozilla experiments). The end game speculated by users is a deeper integration of AI at the operating system level.

- Prompt Injection Tricks and Trades: An interesting use case of prompt injection to manipulate email addresses was highlighted through a member's LinkedIn experience and discussed along with a link showcasing the concept (Prompt Injection Insights).

- Dash Through Dimensions for Text Analysis: A guild member delved into the concept of measuring the 'velocity of concepts' in text, drawing ideas from a blog post (Concept Velocity Insight) and showed interest in applying these concepts to astronomy news data.

- Dimensionality: A Visual Frontier for Embeddings: Members appreciated a Medium post (3D Visualization Techniques) for explanations on dimensionality reduction using PCA, t-SNE, and UMAP, which helped visualize 200 astronomy news articles.

- UMAP Over PCA for Stellar Clustering: It was found that UMAP provided significantly better clustering of categorized news topics, such as the Chang'e 6 moonlander and Starliner, over PCA, when labeling was done with GPT-3.5.

tinygrad (George Hotz) Discord

- Hotz Challenges Taylor Series Bounty Assumptions: George Hotz responded to a question about Taylor series bounty requisites with a quizzical remark, prompting reconsideration of assumed requirements.

- Proof Logic Put Under the Microscope: A member's perplexity regarding the validity of an unidentified proof sparked a debate, questioning the proof's logic or outcome.

- Zeroed Out on Symbolic Shape Dimensions: A discussion emerged on whether a symbolic shape dimension can be zero, indicating interest in the limits of symbolic representations in tensor operations.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!