[AINews] Grok-1 in Bio

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/15/2024-3/18/2024. We checked 358 Twitters and 21 Discords (337 channels, and 9841 messages) for you. Estimated reading time saved (at 200wpm): 1033 minutes.

After Elon promised to release it last week, Grok-1 is now open, with a characteristically platform native announcement:

If you don't get the "in bio" thing, just ignore it, it's a silly in-joke/doesn't matter.

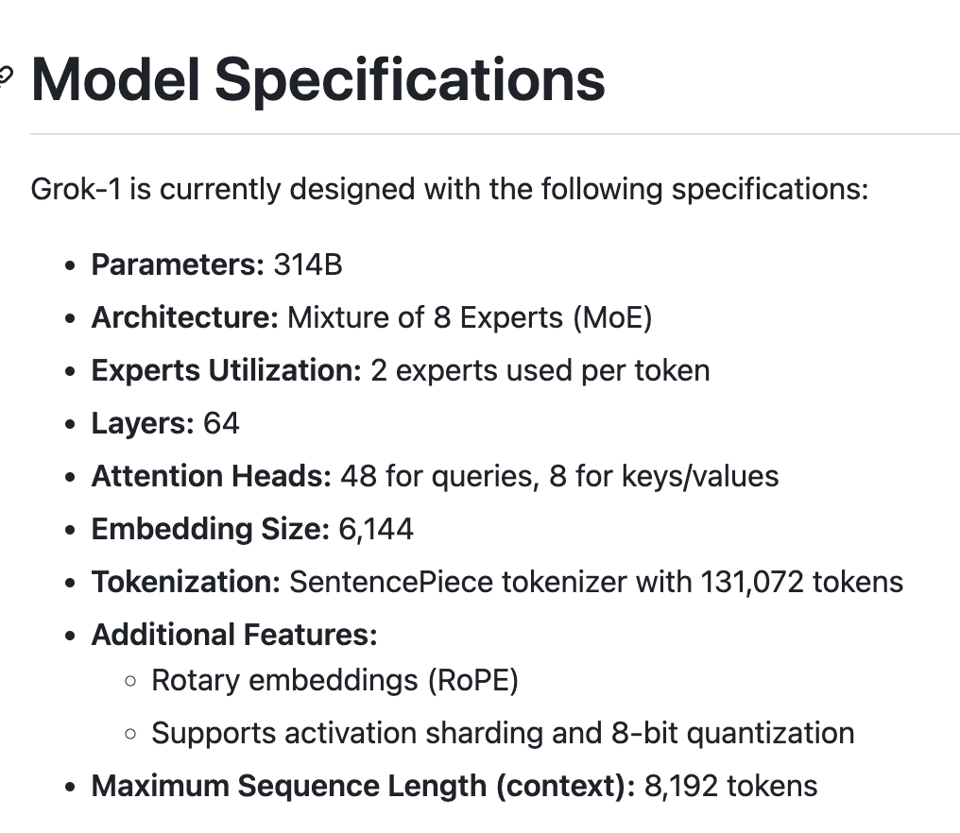

the GH repo offers a few more details:

Unsloth's Daniel Han went thru the architecture and called out a few notable differences, but nothing groundbreaking it seems.

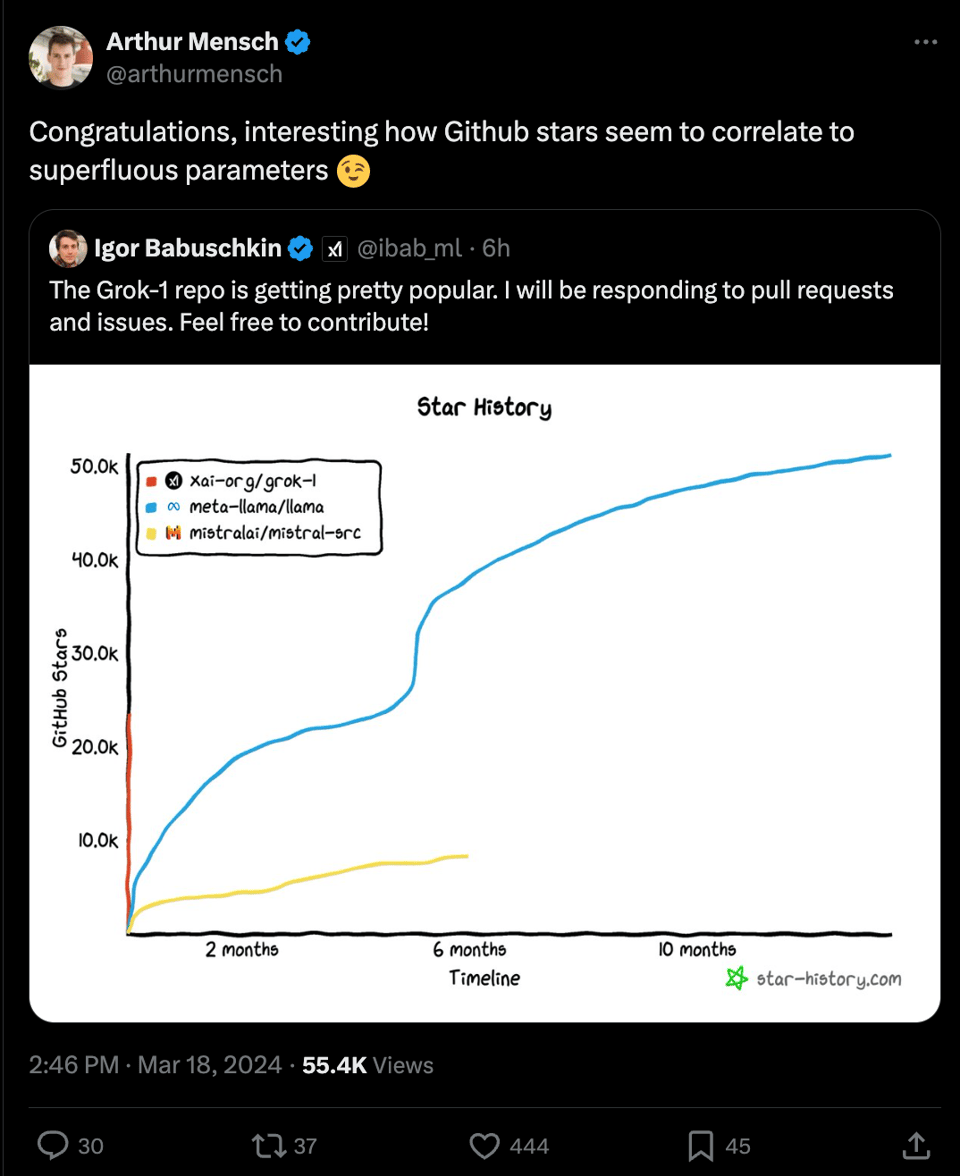

Grok-1 is great that it appears to be a brand new, from-scratch open LLM that people can use, but its size makes it difficult to finetune, which Arthur Mensch of Mistral is slyly poking at:

However folks like Perplexity have already pledged to finetune it and undoubtedly the capabilities of Grok-1 will be mapped out now that it is in the wild. Ultimately the MMLU performance doesn't seem impressive, and (since we have no details on the dataset) the speculation is that it is an upcycled Grok-0, undertrained for its size and Grok-2 will be more interesting.

Table of Contents

- PART X: AI Twitter Recap

- PART 0: Summary of Summaries of Summaries

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- LM Studio Discord

- Nous Research AI Discord

- Eleuther Discord

- OpenAI Discord

- HuggingFace Discord

- LlamaIndex Discord

- Latent Space Discord

- LAION Discord

- OpenAccess AI Collective (axolotl) Discord

- CUDA MODE Discord

- OpenRouter (Alex Atallah) Discord

- LangChain AI Discord

- Interconnects (Nathan Lambert) Discord

- Alignment Lab AI Discord

- LLM Perf Enthusiasts AI Discord

- DiscoResearch Discord

- Datasette - LLM (@SimonW) Discord

- Skunkworks AI Discord

- PART 2: Detailed by-Channel summaries and links

PART X: AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs

Model Releases

- Grok-1 from xAI: 314B parameter Mixture-of-Experts (MoE) model, 8x33B MoE, released under Apache 2.0 license (191k views)

- Grok weights available for download at huggingface-cli download xai-org/grok-1 (19k views)

- Grok code: Attention scaled by 30/tanh(x/30), approx GELU, 4x Layernorms, RoPE in float32, vocab size 131072 (146k views)

- Open-Sora 1.0: Open-source text-to-video model, full training process, data, and checkpoints available (100k views)

Model Performance & Benchmarking

- Grok on par with Mixtral despite being 10x larger, potential for improvement with continued pretraining (21k views)

- Miqu 70B outperforms Grok (2.5k views)

Compute & Hardware

- Sam Altman believes compute will be the most important currency in the future, world is underprepared for increasing compute demand (181k views)

- Grok on Groq hardware could be a game-changer (3.8k views)

Anthropic Claude

- Interacting with Claude a spiritual experience, exists somewhere else in space and time (114k views)

- Claude has self-consistent histories, knows if you try to get it to violate ethics, have to argue within its moral framework (7.7k views)

Memes & Humor

- "OpenAI haha more like not open ai hahahahoakbslbxkvaufqigwrohfohfkbxits so funny i can't breathe hahahoainaknabkjbszjbug" (20k views)

- Grok used as "nastychat slutbot" instead of "demigod" given 314B params (9.4k views)

- Anons cooking a "new schizo grok waifu" (1.7k views)

In summary, the release of Grok-1, a 314B parameter MoE model from xAI, generated significant discussion around model performance, compute requirements, and comparisons to other open-source models like Mixtral and Miqu. The spiritual experience of interacting with Anthropic's Claude also captured attention, with users noting its self-consistent histories and strong moral framework. Memes and humor around Grok's capabilities and potential misuse added levity to the technical discussions.

PART 0: Summary of Summaries of Summaries

Since Claude 3 Haiku was released recently, we're adding them to this summary run for you to compare. We'll keep running these side by side for a little longer while we build the AINews platform for a better UX.

Claude 3 Haiku (3B?)

More instability in Haiku today. It just started spitting back the previous day's prompt, and it needed a couple turns of prompting to follow instructions right.

Advancements in 3D Content Generation: Stability.ai announced the release of Stable Video 3D, a new model that can generate high-quality novel view synthesis and 3D meshes from single images, building upon their previous Stable Video Diffusion technology. This represents a significant advancement in 3D content generation capabilities. Source

Debates on Model Efficiency and Optimization: Across multiple Discords, there were ongoing discussions about the relative efficiency and performance of different Stable Diffusion models, Mistral variants, and large language models like Grok-1. Users explored techniques like quantization, sparse attention, and model scaling to improve efficiency. Sources, Sources

Concerns over Proprietary AI Models and Partnerships: The Stability.ai Discord saw users expressing concerns about the company's recent partnerships with blockchain entities, fearing a potential shift towards more proprietary models and restricted access to AI tools. Source

Navigating API and Deployment Challenges: Across multiple Discords, users discussed issues with API integration, model deployment, and service disruptions. This included problems with OpenAI's API, inconsistencies in Perplexity's API responses, and challenges in running large models like Grok-1 locally. Sources, Sources

Finetuning and Dataset Curation: The Unsloth AI, Nous Research AI, and DiscoResearch Discords saw extensive discussions around the challenges and best practices for finetuning large language models, including dataset preparation, hyperparameter tuning, and model compatibility. Users shared resources and experiences to help navigate these complex tasks. Sources, Sources, Sources

Advancements in Retrieval-Augmented Generation (RAG): The LlamaIndex Discord saw active discussions around enhancing RAG pipelines, including techniques for handling complex queries, improving structured output, and leveraging memory management. Members shared resources and project updates related to these developments. Sources

Exploring Open-Source AI Models and Tooling: Discords like Eleuther, CUDA MODE, and Interconnects highlighted the release and exploration of large open-source models like Grok-1, as well as the development of new tools and techniques for CUDA programming and AI model evaluation. Sources, Sources, Sources

Claude 3 Sonnet (14B?)

Sonnet was better at following instructions today but continued not working out of the box like we had running a few days ago. Needed to implement a check step like "you should summarize shared themes across discords and make sure to directly link to source urls where relevant."

1. Massive AI Models and Their Implications:

- The release of Grok-1, a 314 billion parameter Mixture-of-Experts model by xAI, sparked widespread discussions across multiple Discords. It was open-sourced under Apache 2.0 license with instructions on GitHub, but its practicality was debated given the immense compute requirements for inference (up to 124GB VRAM).

- Comparisons were made to models like GPT-4, Falcon, and Mistral in terms of performance on benchmarks like GSM8K and MMLU. However, some were skeptical about Grok-1's potential to surpass GPT-4 or Claude.

- The challenges of distributing and working with such massive models were highlighted, with suggestions like using magnet links or even mailing physical drives to circumvent cloud egress fees.

2. Advancements in Multimodal and Retrieval-Augmented AI:

- Stability AI announced Stable Video 3D, generating 3D meshes and multi-view outputs from single images, improving quality over previous models.

- Discussions explored enhancing RAG (Retrieval-Augmented Generation) pipelines by treating retrieved documents as interactive components, as detailed in this GitHub link.

- The LlamaIndex project released v0.10.20 with an Instrumentation module for observability, and covered methods like Search-in-the-Chain for improving QA systems.

- A HuggingFace paper discussed crucial components and data choices for building performant Multimodal LLMs (MLLMs).

3. Fine-tuning and Optimizing Large Language Models:

- Extensive discussions on optimally fine-tuning models like Mistral-7b using QLoRA, addressing hyperparameters like learning rate and epoch count (generally 3 epochs recommended).

- Unsloth AI's integration with AIKit allows finetuning with Unsloth to create minimal OpenAI API-compatible model images.

- Debates on the efficiency of various Stable Diffusion models like Stable Cascade vs SDXL, with some finding Cascade better for complex prompts but slower.

- Guidance on handling issues like high VRAM/RAM usage during model saving, specifying end-of-sequence tokens, and potential future support for full fine-tuning in Unsloth.

4. Prompt Engineering and Enhancing LLM Capabilities:

- Discoveries were shared on the depth of "Prompt Engineering" for OpenAI's APIs, involving instructing the AI on analyzing responses beyond just question phrasing.

- Proposals to introduce

tokens in LLMs to improve reasoning capabilities were debated, with references to works like Self-Taught Reasoner (STaR) and Feedback Transformers. - An arXiv paper demonstrated extracting proprietary LLM information from a limited number of API queries due to the softmax bottleneck issue.

Claude 3 Opus (>220B?)

By far the best off the shelf summarizer model. Incredible prompt adherence. We like the Opus.

- Grok-1 Model Release Sparks Excitement and Skepticism: xAI's open-source release of the 314B parameter Mixture-of-Experts model Grok-1 under the Apache 2.0 license has generated buzz, with discussions around its impressive size but mixed benchmark performance compared to models like GPT-3.5 and Mixtral. Concerns arise about the practicality of running such a large model given its hefty compute requirements of up to 124GB of VRAM for local inference. The model weights are available on GitHub.

- Anticipation Builds for Stable Diffusion 3 and New 3D Model: The Stable Diffusion community eagerly awaits the release of Stable Diffusion 3 (SD3), with hints of beta access invites rolling out soon and a full release expected next month. Stability AI also announces Stable Video 3D (SV3D), a new model expanding 3D capabilities with significantly improved quality and multi-view experiences over previous iterations like Stable Zero123.

- Unsloth AI Gains Traction with Faster LoRA Finetuning: Unsloth AI is trending on GitHub for its 2-5X faster 70% less memory QLoRA & LoRA finetuning as per their repository. The community is actively discussing finetuning strategies, epochs, and trainability, with a general consensus on 3 epochs being standard to avoid overfitting and equal ratios of trainable parameters to dataset tokens being optimal.

- Photonics Breakthroughs and CUDA Optimization Techniques: Advancements in photonics, such as a new breakthrough claiming 1000x faster processing, are generating interest, with Asianometry's videos on Silicon Photonics and neural networks on light meshes shared as resources. CUDA developers are exploring warp schedulers, memory management semantics, and performance optimization techniques, while also anticipating NVIDIA's upcoming GeForce RTX 50-series GPUs with 28 Gbps GDDR7 memory.

Some other noteworthy discussions include:

- A new arXiv paper detailing a method to extract sensitive information from API-protected LLMs like GPT-3.5 at low cost

- Apple's rumored moves in the AI space, including a potential acquisition of DarwinAI and a 30B parameter LLM

ChatGPT (GPT4T)

ChatGPT proved particularly stubborn today - no amount of prompting tricks were able to improve the quality of the link sourcing in today's output. We will cut over to the new pipeline this week which should solve this problem but it is disappointing that prompts alone don't do what we want here.

- Revolutionizing 3D Content Generation and AI Efficiency: Stability.ai introduces Stable Video 3D, a leap forward in 3D mesh generation from images, outperforming predecessors like Stable Zero123. Discussions also revolve around the efficiency of various Stable Diffusion models, with debates on their prompt handling capabilities and speed, emphasizing a trade-off between performance and complexity.

- Emergence of Grok-1 and AI Hardware Discussions: The AI community buzzes about Grok-1, a 314B parameter open-source model by Elon Musk's team, sparking discussions about its computational demands for practical use. Concurrently, there's a surge in conversations around AI hardware, notably Nvidia's 5090 GPU, and cooling requirements, reflecting the escalating need for powerful setups to support growing model sizes.

- AI Applications in Workforce and Creativity: Perplexity AI showcases its API's utility in job searches, demonstrating AI's growing role in the workforce. Meanwhile, creative applications flourish, highlighted by a poetic expression of machine learning concepts on Unsloth AI's Discord, encouraging more creative technical monologues.

- AI's Role in Education and Legal Challenges: OpenAI's Discord engages in debates on prompt engineering techniques to optimize AI tasks and the complexities of API content filters in creative writing. Additionally, there's a focused discourse on AI's potential in parenting and education, spurred by comparisons of Claude 3 Opus with GPT-4, alongside a narrative on public access to government AI models, stirring legal and ethical considerations.

- Advancements in Language Models and Retrieval Systems: The AI community eagerly discusses the integration of RAG (Retriever Augmented Generation) systems for enhanced model outputs and the unveiling of LLaMa models on OpenRouter Discord, capable of handling a mix of prompts. Such advancements underscore ongoing efforts to improve language understanding and response generation, reflecting a broader trend towards more sophisticated AI interaction models.

These themes encapsulate the dynamic nature of AI development and application, from enhancing content creation and improving model efficiency to tackling hardware limitations and exploring AI's societal implications.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

Revolutionizing 3D Content Generation: Stable Video 3D has been announced by Stability AI, leveraging capabilities for high-quality novel view synthesis from single images and creating 3D meshes, surpassing former models like Stable Zero123 in quality and multi-view outputs.

Anticipation High for SD3: Engineers are eagerly awaiting Stable Diffusion 3 (SD3), with beta access speculated to start rolling out soon, and the official release expected next month, promising new advancements.

Efficiency on Trial: Ongoing debates focus on the efficiency of various Stable Diffusion models, where some engineers find Stable Cascade slower but more adept at handling complex prompts compared to SDXL.

Blockchain Ventures Spark Concern: Stability AI's partnerships with blockchain entities have stirred discussions, with some AI engineers worrying about the potential move towards proprietary models and restricted access to AI tools.

Safety in File Handling: Amidst security discussions, an inquiry about converting .pt files to SAFETENSOR format led to the share of a converter tool link, while most UIs are confirmed to avoid executing unsafe code - GitHub converter tool.

Perplexity AI Discord

- Unlimited Queries Not So Limitless: Engineers highlighted confusion over Perplexity's "unlimited" Claude 3 Opus queries for Pro users, noting an actual cap at 600 daily uses and seeking clarification on the misleading term "unlimited."

- Claude 3 Opus Gains Attention: The Claude 3 Opus model sparked interest among technical users comparing it with GPT-4 and discussing its potential for complex tasks with more natural responses, amidst broader debates on AI's role in parenting and education.

- Technical Deep Dive into Perplexity's API: In the #pplx-api channel, there's been confusion over a model's scheduled deprecation and discussion about API inconsistencies, with users sharing insights into API rate limits and the effect of token limits on LLM responses.

- Apple's AI Aspirations Discussed: Discourse surrounding Apple's AI moves, including the possible DarwinAI acquisition and speculation over a 30B LLM, permeated discussions, indicating keen interest in the tech giant's strategy in the AI landscape.

- Perplexity API Efficiency in Job Hunts: Utilizing the Perplexity API for job searches was a highlighted use case, with mixed results in terms of direct job listings versus links to broader job platforms, demonstrating practical AI applications in the workforce.

Unsloth AI (Daniel Han) Discord

- AIKit Welcomes Unsloth Finetuning: AIKit has integrated support for finetuning with Unsloth to create minimal model images compatible with OpenAI's API. A Spanish TTS test space on Hugging Face was shared for community use.

- Grok-1, the Open Source Giant: Discussions ignited about Grok-1, a 314B parameter open-sourced model by Elon Musk's team at X.ai, where concerns arose about its practical usage due to immense computational resource requirements for inference.

- Beware of Impersonators: A scam account imitating 'starsupernova0' prompted warnings within the community; members are encouraged to stay vigilant and report such activities.

- Unsloth AI Trends on GitHub: Unsloth AI has garnered attention on GitHub, where it offers 2-5X faster and 70% less memory usage for QLoRA & LoRA finetuning. The community is encouraged to star the Unsloth repository for support.

- Finetuning Troubles:

- High VRAM and system RAM usage during model saving in Colab was highlighted, especially for large models like Mistral.

- Finetuning-related concerns included unexpected model behaviors post-finetuning and clarifications about proper end-of-sequence token specification.

- Debates on epochs and trainability, with general consensus on 3 epochs being standard to avoid overfitting, and trainability discussions pointing to equal ratio of trainable parameters to dataset tokens.

- The Poetic Side of Tech: A poetic expression of machine learning concepts appeared, garnishing appreciation and encouragement for more creative technical monologues.

- Small Model Big Potential: Links to Tiny Mistral models were shared, suggesting potential inclusion in the Unsloth AI repository for community use and experimentation.

LM Studio Discord

- The Wait for Command-R Support: Discussions indicate anticipation for C4AI Command-R support in LM Studio post the merge of GitHub Pull Request #6033. However, confusion persists among members about llama.cpp's compatibility with c4ai, even though files are listed on Hugging Face.

- Big Models, Big Dreams, Bigger GPUs?: The community has been abuzz with hardware talk, from the feasibility of the Nvidia 5090 GPU in various builds to dealing with heavy power draw and cooling requirements. The ROCm library exploration was expanded with a GitHub resource for prebuilt libraries and hopes for dual GPU setup support in LM Studio using tools like koboldcpp-rocm.

- Configurations, Compatibility, and Cooling: Amidst the eager shares of new rig setups and considerations for motherboards with more x16 PCIe Gen 5 slots, members also discussed cable management and the practicalities of accommodating single-slot GPUs. There's active troubleshooting advice, like a suggestion about a Linux page note for AMD OpenCL drivers and confirming AVX Beta's limitations, such as not supporting starcoder2 and gemma models but maintaining compatibility with Mistral.

- Model Hunt and Support: Recommendations flew around for model selection, with suggestions to use Google and Reddit channels for finding a well-suited LLM and models like Phind-CodeLlama-34B-v2 being tapped for specific use cases. Inquiries about support limitations in LM Studio, such as the inability to chat with documents directly or use certain plugins, were discussed, while a list of configuration examples was shared for those seeking presets.

- Agency in AI Agents: A single message in the crew-ai channel expresses an ongoing search for an appropriate agent system to enhance the validation of a creative concept, suggesting an ongoing evaluation of various agents.

Nous Research AI Discord

- High-Speed Memory Speculation: NVIDIA's anticipated RTX 50-series Blackwell's use of 28 Gbps GDDR7 memory stirred debates on the company's historical conservative memory speed choices, as discussed in a TechPowerUp article.

- Inferences from Giant Models: There are both excitement and concerns about the feasibility of running massive AI models like Grok-1, which poses challenges such as requiring up to 124GB of VRAM for local inference and the cost-effectiveness for usage.

- Yi-9B License Quandaries & Scaling Wishes: Conversations delve into the licensing clarity of the Yi-9B model and the community's skepticism. Users also express their aspirations and doubts regarding the scaling or improvement upon Mistral to a 20 billion parameter model.

- RAG Innovations and Preferences: The community is focused on enhancing RAG (Retriever Augmented Generation) system outputs, discussing must-have features and advantages of smaller models within large RAG pipelines. A GitHub link was shared, exhibiting desirable RAG system prompts.

- Bittensor's Blockchain Blues: Technical problems are afoot in the Bittensor network, with discussions on network issues, the need for a subtensor chain update, and the challenges surrounding the acquisition of Tao for network registration. Hardware suggestions for new participants include the use of a 3090 GPU.

Eleuther Discord

- Ivy League's Generosity Unlocked: An Ivy League course made freely accessible has sparked a dialogue on high-quality education's reach, with nods to similar acts by institutions like MIT and Stanford.

- Woodruff's Course Garners Accolades: A comprehensive course by CMU's Professor David P. Woodruff was praised for its depth, covering a span of almost 7 years.

- Pioneering Projects 'Devin' and 'Figure 01': The debut of Devin, an AI software engineer, and the "Figure 01" robot's demo, in comparison to DeepMind's RT-2 (research paper), has opened discourse on the next leap in robot-human interaction.

- Fueling LLMs with

: A proposition from Reddit to introduce tokens in LLMs led to a debate, referencing works such as the Self-Taught Reasoner (STaR) and Feedback Transformers that delve into enhancing LLM reasoning through computational steps. - Public Access to Government-AI Sought: A discourse emerged around a FOIA request aimed at making Oakridge National Laboratory's 1 trillion parameter model public, accompanied by doubts due to classified data concerns and legal complications.

- Debating Performance Metrics: Discussions unraveled around model performance evaluations, pinpointing ambiguities in benchmarks, particularly with Mistral-7b on the GSM8k.

- Challenges of RL in Deep Thinking: The limitations of using reinforcement learning to promote 'deeper thinking' in language models were examined, alongside proposals for a supervised learning approach for enhancing such behaviors.

- Reverse for Relevance: A user's query on standard tokenizers not tokenizing numbers in reverse led into a discourse on right-aligned tokenization, highlighted in GPT models via Twitter.

- LLM Secrets via API Queries: Sharing a paper (arXiv:2403.09539) revealing that large language models could leak proprietary information from limited queries peaked interest due to a softmax bottleneck issue.

- Grok-1 Model Induces Model Curiosity: The unveiling of Grok-1 instigated discussions on its potential, scaling strategies, and benchmarks against contemporaries like GPT-3.5 and GPT-4.

- Scaling Laws Questioned with PCFG: Language model scaling sensitivity to dataset complexity, informed by a Probabilistic Context-Free Grammar (PCFG), was debated, suggesting gzip compression's predictive power on dataset-specific scaling impacts.

- Data Complexity's Role in Model Efficacy: The discussion highlighted that data complexity matching with downstream tasks might ensure more efficient model pretraining outcomes.

- Sampling from n-gram Distributions: Clear methods for sampling strings with a predetermined set of n-gram statistics were explored, with an autoregressive approach being posited for ensuring a maximum entropy distribution following pre-specified n-gram statistics.

- Discovery of n-gram Sampling Tool: A tool for generating strings with bigram statistics was shared, available on GitHub.

- Hurdles and Resolutions in Model Evaluation: A series of technical queries and clarifications in model evaluations were recorded, including a

lm-eval-harnessintegration query, Mistral model selection bug, and a deadlock issue during thewmt14-en-frtask, leading to sharing of the issue #1485. - Evaluating Translations in Multilingual Evals: The concept of translating evaluation datasets to other languages sprouted a suggestion to collect these under a specific directory and clearly distinguish them in task names.

- Unshuffling The Datascape: The Pile's preprocessing status was questioned; it's established that the original files are not shuffled, but already preprocessed and pretokenized data is ready for use with no extra shuffling required.

OpenAI Discord

- One Key, Many Doors: Unified API Key for DALL-E 4 and GPT-4 – Discussions confirmed that a single API key could indeed be used to access both DALL-E 4 for image generation and GPT-4 for text generation, streamlining the integration process.

- Exploring Teams and Privacy: ChatGPT Team Accounts Privacy Explained – It was clarified that upgrading from ChatGPT plus to team accounts does not give team admins access to users' private chats, an important note for user privacy on OpenAI services.

- Prompt Crafting Puzzles: Techniques in Prompt Engineering Gain Spotlight – Engineers exchanged strategies on optimizing prompts for AI tasks, with recommendations like applying the half-context-window rule for tasks and leveraging meta-prompting to overcome model refusals. There was a consensus on the importance of proper prompt structuring to improve classification, retrieval, and model interactivity.

- Model Behavior Mysteries: API Content Filters Sideline Creativity – Frustrations bubbled up about the content filters in OpenAI's API and the GPT-3.5 refusal issues. The community shared experiences of decreased willingness from the model to engage in creative writing and roleplay scenarios, and also noted service disruptions which were sometimes attributable to browser extensions rather than the ChatGPT model itself.

- The Web Search Conundrum: Complexities in GPT's Web Search Abilities Examined – Users discussed the capabilities of GPT regarding the integration of web searching features, the use of up-to-date libraries like Playwright in code generation, and how to direct GPT to generate and use multiple search queries for comprehensive information retrieval.

HuggingFace Discord

Discord's AI Scholars Share Latest Insights:

- Optimizing NL2SQL Pipelines Queries: An AI engineer expressed the need for more effective embedding and NL2SQL models, as current solutions like BAAI/llm-embedder and TheBloke/nsql-llama-2-7B-GGUF paired with FAISS are delivering inconsistent accuracy.

- Grace Hopper Superchip Revealed by Nvidia: NVIDIA teases its community with the Grace Hopper Superchip announcement, designed for compute-intensive disciplines such as HPC, AI, and data centers.

- How to NLP: Resources for beginners in NLP were sought; newcomers were directed to Hugging Face's NLP course and the latest edition of Jurafsky's textbook on Stanford's website, with a nod to Stanford’s CS224N for more dense material.

- Grok-1 Goes Big on Hugging Face: The upload and sharing of Grok-1, a 314 billion parameter model, stirred discussions, with links to its release information and a leaderboard of model sizes on Hugging Face.

- AI Peer Review Penetration: An intriguing study pointed out that between 6.5% to 16.9% of text in AI conference peer reviews might be significantly altered by LLMs, citing a paper that connects LLM-generated text to certain reviewer behaviors and suggests further exploration into LLMs' impact on information practices.

LlamaIndex Discord

- RAG Gets Interactive: Enhanced RAG pipelines are proposed to handle complex queries by using retrieved documents as an interactive component, with the idea shared on Twitter.

- LlamaIndex v0.10.20 Debuts Instrumentation Module: The new version 0.10.20 of LlamaIndex introduces an Instrumentation module aimed at improving observability, alongside dedicated notebooks for API call observation shared via Twitter.

- Search-in-the-Chain: Shicheng Xu et al.'s paper presents a method to improve question-answering systems by combining retrieval and planning in what they call Search-in-the-Chain, as detailed in a Tweet.

- Job Assistant from Resume: A RAG-based Job Assistant can be created using LlamaParse for CV text extraction, as explained by Kyosuke Morita and shared on Twitter.

- MemGPT Empowers Dynamic Memory: A webinar discusses MemGPT, which gives agents dynamic memory for better handling of memory tasks, with insights available on Twitter.

- OpenAI Agents Chaining Quirk: When chaining OpenAI agents resulted in a

400 Error, it was suggested that the content sent might have been empty and more discussion can be found in the deployment guide. - Xinference Meets LlamaIndex: For those looking to deploy LlamaIndex with Xinference in cluster environments, guidance is provided in a local deployment guide.

- Fashioning Chatbots as Fictional Characters: Engaging chatbots that emulate characters like James Bond may benefit from prompt engineering over datasets or fine-tuning, with relevant methods described in a prompting guide.

- Multimodal Challenges for LLMs: Discussion around handling multimodal content within LLMs flagged potential issues with losing order in chat and updating APIs, with multimodal content handling examples found here.

- How-To Guide on RAG Stacking: A YouTube guide was shared on building a RAG with LlamaParse, streamlining the process using technologies such as Qdrant and Groq, with the video available here.

- RAG Pipeline Insights on Medium: An article discusses creating an AI Assistant with a RAG pipeline and memory, leveraging LlamaIndex.

- RAPTOR Effort Hits a Snag: An AI engineer's attempt to adapt the RAPTOR pack for HuggingFace models, using guidance from GitHub, faced implementation issues seeking community assistance.

Latent Space Discord

- Grok-1 Unchained: xAI has launched Grok-1, a massive 314B parameter Mixture-of-Experts model, licensed under Apache 2.0, raising eyebrows over its unrestricted release but showing mixed performance in benchmarks. Intrigued engineers can find more details from the xAI blog.

- Altman Sparks Speculation: Sam Altman hints at a significant leap in reasoning with the upcoming GPT-5, igniting discussions about the model's potential impact on startups. Curious minds can dive into the conversation with Sam's interview on the Lex Fridman podcast.

- Jensen Huang's Anticipated Nvidia Keynote: GPT-4's hinted capabilities and the mention of its 1.8T parameters set the stage for Nvidia's eagerly awaited keynote by Jensen Huang, stirring the pot for AI tech enthusiasts. Watch the gripping revelations in Jensen's keynote.

- Innovative Data Extraction on the Horizon: Excitement is brewing with a teaser about a new structured data extraction tool in private beta promising low-latency and high accuracy—the AI community awaits further details. Keep an eye out on Twitter for updates on this potentially game-changing tool. Access tweet here.

- SDXL's Yellow Predicament: SDXL faces scrutiny with a color bias towards yellow in its latent space, prompting a deeper analysis and proposed solutions to this quirky challenge. Discover more about how color biases are addressed in the blog post on Hugging Face.

- Paper Club Delves into LLMs: The Paper Club has kicked off a session to dissect "A Comprehensive Summary Of Large Language Models," inviting all for a deep dive. Exchange insights and join the learning experience in the dedicated channel.

- AI Saturation Sarcasm Alert: A satirical article dubs the influx of AI-generated content as "grey sludge," possibly foreshadowing a paradigm shift in content generation. Get a dose of this satire on Hacker News.

- Attention Mechanisms Unpacked: Enthusiasts in the llm-paper-club-west channel reveled in a robust discussion about the rationale behind the attention mechanism, which enables models to process input sequences globally and resolve parallelization issues for faster training—spotlighting the decoder's efficiency in focusing on pertinent input segments.

- RAG Discussion Sparks Shared Learning: An article on "Advanced RAG: Small to Big Retrieval" spurred a conversation about retrieval mechanisms and the concept of "contrastive embeddings," offering alternatives to cosine similarity in LLMs. Check out the shared article for a deep dive into Retrieval-Augmented Generation.

- Resource Repository for AI Aficionados: A comprehensive Google Spreadsheet documenting past discussion topics, dates, facilitators, and resource links is available for members looking to catch up or review the AI In Action Club's historical knowledge exchange. Access the historical archive with this spreadsheet.

LAION Discord

- Jupyter's New Co-Pilot: Jupyter Notebooks can now be used within Microsoft Copilot Pro, offering free access to libraries like

simpyandmatplotlib, in a move that mirrors the features of ChatGPT Plus.

- DALL-E Dataset's New Home: Confusion about the DALL-E 3 dataset on Hugging Face was clarified; the dataset has been relocated and can still be accessed via this link.

- Grok-1 Against the Giants: Discussions around the new Grok-1 model, its benchmark performances, and comparisons with models such as GPT-3.5 and Mixtral emerged, alongside emphasizing Grok's open release on GitHub.

- Tackling Language Model Continuity: An arXiv paper detailed a more efficient approach for language models via continual pre-training to address data distribution shifts, promising advancements for the field. The paper can be found here.

- The GPT-4 Speculation Continues: Nvidia's apparent confirmation that GPT-4 is a massive 1.8T parameter MoE fueled ongoing rumors and debates, despite some skepticism over the exact naming of the model.

OpenAccess AI Collective (axolotl) Discord

- Fine-tuning Foibles Featuring Funky Tokenization: Engineers discuss an issue where a tokenizer inconsistently generates a

<summary>tag during fine-tuning for document summarization. A potential mismatch between tokenizer and model behavior is suspected, while another member facedHFValidationErrorsuggesting that full file paths should be utilized for local model and dataset fine-tuning.

- Conversation Dataset Conundrums Corrected: A perplexing problem arises during conversation type training data setup; the culprit turns out to be empty roles in the dataset. Furthermore, reporting on Axolotl's validation warnings generates varying outcomes, with a smaller eval set size causing issues.

- Grok Wades into Weighty Performance Waters: Within the Axolotl group, there's an exchange on the perceived underwhelming performance of the 314B Grok model. In addition, the int8 checkpoint availability is brought up, placing constraints on leveraging the model's capabilities.

- Hardware Hunt and Model Merging Musings: NVIDIA's NeMo Curator for data curation is shared, and Mergekit is suggested as a possible solution for model merging. There's also a conversation on ensuring that merged models are trained using the same chat format for flawless functionality.

- Lofty Leaks Lead to Speculative Sprint: Enthusiasm mixed with skepticism meets the leaks of GPT-4's massive 1.8 trillion parameter count and NVIDIA's next-gen GeForce RTX 5000 series cards. Professionals ponder these revelations, alongside exploring Sequoia for better decoding of large models and NVIDIA's Blackwell series for AI advancement.

Relevant links found in the discussions: - GitHub - NVIDIA/NeMo-Curator: NVIDIA's toolkit for data curation - Grok-1 weights on GitHub - ScatterMoE branch on GitHub - ScatterMoE pull request

CUDA MODE Discord

- Photonics Innovations Spark Interest: Discussions spotlighted a new breakthrough in photonics, claimed to be 1000x faster, and members shared videos including one from Lightmatter. Asianometry's YouTube videos on Silicon Photonics and neural networks on light meshes were also recommended for those interested in the field.

- CUDA Developments and Discussions: Engineers delved into topics like warp schedulers in CUDA, active warps, and memory management semantics involving ProducerProvides and ConsumerTakes. They pondered NVIDIA's GTC events, predicting new GPU capabilities while humorously remarking on the "Skynet vibes" of NVIDIA's latest tech.

- Triton Tools Take the Spotlight: The community shared new development tools such as a Triton debugger visualizer and published Triton Puzzles in a Google Colab to aid in understanding complex kernels.

- Reconfigurable Computing in Academia: Interest piqued in Prof. Mohamed Abdelfattah's research on efficient ML and reconfigurable computing, showcased on his YouTube channel and website. The ECE 5545 (CS 5775) hardware-centric ML systems course, accessed via their GitHub page, was highlighted, alongside the amusing discovery journey for the course's textbook.

- CUDA Beginners and Transition to ML: A solid foundation in CUDA was praised, with advice on transitioning to GPU-based ML with frameworks like PyTorch. References included the Zero to Hero series, ML libraries like cuDNN and cuBLAS, and the book Programming Massively Parallel Processors, found here, for deeper CUDA understanding.

- Ring-Attention Algorithm under the Microscope: Discussion revolved around the memory requirements of ring-attention algorithms, comparing with blockwise attentions. Links were shared to Triton-related code on GitHub and insights were sought into whether linear memory scaling refers to sequence length or the number of blocks.

- MLSys Conference and GTC Emphasized: Conversations touched on the MLSys 2024 conference, recognized for converging machine learning and systems professionals. Additionally, members arranged meetups for the upcoming GTC, discussing attendance and coordinating via DM, with some humorously referencing not being able to attend and linking to a related YouTube video.

OpenRouter (Alex Atallah) Discord

- LLaMa Learns New Tricks: LLaMa models now confirmed to handle a variety of formats, including a combination of

system,user, andassistantprompts, which may be pertinent when utilizing the OpenAI JavaScript library.

- Sonnet Swoops in for Superior Roleplay: Sonnet is attaining popularity for its roleplaying prowess, impressing users with its ability to avoid repetition and produce coherent output, potentially revolutionizing user engagement in interactive settings.

- Crafting the MythoMax Missive: Effective formatting for LLMs like MythoMax remains a hot topic, as understanding the positioning of system messages appears to be crucial for optimal prompt response, indicating that the first system message takes precedence in processing.

- Users Clamor for Consumption Clarity: There's a rising demand for detailed usage reports that break down costs and analytics, underlining a desire among users to fine-tune budget allocation according to AI model usage and time spent.

- Grokking Grok's Future: The forthcoming Grok model is creating buzz for its potential impact and need for fine-tuning on instruction data, with its open-source release and possible API fueling anticipation among community members. For details and contributions, check out Grok's repository on GitHub.

LangChain AI Discord

- Choose Your API Wisely: Engineers debated the use of

astream_logversusastream_eventsfor agent creation, noting perhaps the potential deprecation of thelog APIas theevents APIremains in its beta stage. They also called for beta testers for Rubik's AI, promising two months of premium access to AI models including GPT-4 Turbo and Groq models, with sign-up available at Rubik's AI.

- Improving Langchain Docs: Users articulated the need for more accessible Langchain documentation for beginners and contemplated using

Llamaindexfor quicker structured data queries in DataGPT projects. Others shared a practical solution demonstrating Python Pydantic for structuring outputs from LLM responses.

- JavaScript Streaming Stumbles: A discrepancy in

RemoteRunnablebehavior between Python and JavaScript was highlighted, where JavaScript fails to call/streamand defaults to/invoke, unlike its Python counterpart. Participants discussed inheritance inRunnableSequenceand proposed contacting the LangChain team directly via GitHub or hello@langchain.dev for support.

- Scrape with Ease, Chat with Data, and Bookmark Smartly: The community has been busy with new projects, including an open-source AI Chatbot for data analysis, a Discord bot for managing bookmarks, and Scrapegraph-ai, an AI-based scraper that touts over 2300 installations.

- AI for Nutritional Health & Financial Industry Analysis: Innovators have constructed a nutrition AI app called Nutriheal, which is showcased in a "Making an AI application in 15 minutes" video, and a Medium article discussed how LLMs could revolutionize research paper analysis for financial industry professionals. The article can be read here.

- Rapid AI App Development Spotlighted in Nutriheal: The Nutriheal demo emphasized easy AI app creation using Ollama and Open-webui, with data privacy from Langchain's Pebblo integration, while additional AI resources and tutorials can be found at navvy.co.

- Unveiling Home AI Capabilities: Community contributions included a tutorial aimed at debunking the myth of high-end AI being restricted to big tech and a guide for creating a generic chat UI for any LLM project. A Langgraph tutorial video was also shared, detailing the development of a plan-and-execute style agent inspired by the Plan-and-Solve paper and the Baby-AGI project, viewable here.

Interconnects (Nathan Lambert) Discord

- API-Protected LLMs Vulnerable to Data Extraction: A new arXiv paper exposes a method to extract sensitive information from API-protected large language models like OpenAI's GPT-3.5, challenging the softmax bottleneck with low-cost techniques.

- Model Size Underestimation: Debate centers around the paper's 7-billion parameter estimate for models such as GPT-3.5, with speculation that a Mixture of Experts (MoE) model, possibly used, would not align with such estimations, and that different architectures or distillation methods might be in play.

- Open Source Discourse Gets Heated: Discussions about the definition of open source in the tech community heat up, accompanied by Twitter exchanges and expressions of frustration, advocating for clear community guidelines and less online squabbling, as illustrated by discussions including Nathan Lambert and @BlancheMinerva.

- Grok-1 Enters the AI Arena: xAI's Grok-1, a 314 billion parameter MoE model, has been open-sourced under the Apache 2.0 license, offering untuned capabilities with potential optimality over existing models. It is being compared to others like Falcon, with performance discussions and download instructions available on GitHub.

- Big Data Transfer Riddles: Lively conversations around alternative model distribution methods, including magnet links and humorous suggestions like mailing physical hard drives, arise against the backdrop of Grok-1's release, and HuggingFace mirrors the weights. A Wall Street Journal interview with OpenAI's CTO regarding AI-generated content further fuels data-related concerns.

Alignment Lab AI Discord

- What's the Deal with Aribus?: Curiosity spiked about the Aribus Project after a member shared a tweet; however, the community lacked clarity on the project's applications, with no additional details put forth.

- In Search of HTTP-Savvy Transformers: Discussion turned technical as a member sought an embeddings model trained on HTTP responses, arguing any appropriately trained transformer could suffice. Yet the fine-tuning specificity, like details or sources, was left unaddressed.

- Hunting for Orca-Math Word Problems Model: Inquiry into a fine-tuned Mistral model specifically on orca-math-word-problems-200k dataset and nvidia/OpenMathInstruct-1 met with radio silence; a precise use-case hinted but unstated.

- Aspirations to Tame Grok 1: A member threw down the gauntlet to fine-tune Grok 1 with its formidable 314B parameter size, with conversation pivoting to the model's massive resources demand, like 64-128 H100 GPUs, and its benchmarking potential against titans like GPT-4.

- Grok 1 Shows Its Mathematical Might: Despite skepticism, Grok 1's prowess was spotlighted through performance on a complex Hungarian national high school finals in mathematics dataset, with discussions contrasting its capabilities and efficiency against other notable models.

LLM Perf Enthusiasts AI Discord

- Embracing Simplicity in Local Development: Engineers expressed a preference for building apps with simplicity in mind, favoring tools that enable local execution and filesystem control, and highlighting a desire for lightweight development solutions.

- Anthropic's Ominous Influence?: A shared tweet raised suspicions about Anthropic's intentions, possibly intimidating technical staff, along with acknowledging ongoing issues with content moderation systems.

- The Scale Challenge for Claude Sonnet: Technical discussions surfaced regarding the scalability of using Claude Sonnet, with projections of using "a few dozen million tokens/month" for a large-scale project.

- Debating the Claims of the Knowledge Processing Unit (KPU): The KPU by Maisa sparked debates, with engineers skeptical about its performance claims and comparison benchmarks. The CEO clarified that KPU acts like a "GPU for knowledge management," intended to enhance existing LLMs, offering a notebook for independent evaluation upon request.

- Sparse Details on OpenAI Updates: A single message was posted containing a link: tweet, but with no context or discussion provided, leaving the content and significance of the update unclear.

DiscoResearch Discord

- Fine-Tuning in German Falls Flat: shakibyzn struggles with DiscoLM-mixtral-8x7b-v2 model not responding in German post fine-tuning, hinting at a "ValueError" indicating incompatibility with AutoModel setup.

- Local Model Serving Shenanigans: jaredlcm faces unexpected language responses when serving the DiscoLM-70b model locally, using a server set-up snippet via

vllmand OpenAI API chat completions format. - German Model Training Traps: crispstrobe and peers discuss German models' inconsistencies caused by variables like prompting systems, data translation, merging models' effects, and dataset choices for fine-tuning.

- German LLM Benchmarking Treasure Trove: thilotee highlights resources like supergleber-german-language-evaluation-benchmark and other tools, advocating for more German benchmarks in EleutherAI's lm-evaluation-harness Our Paper.

- German Model Demo Woes and Wins: DiscoResearch models depend on prompt fidelity, illustrating the need for prompt tweaking for optimal demo performance, all against the backdrop of shifting the demo server from a homely “kitchen setup” to a professional environment, which unfortunately led to networking issues.

Datasette - LLM (@SimonW) Discord

- Prompt Engineering's Evolutionary Path: A member reminisced about their involvement in shaping prompt engineering tools with Explosion's Prodigy, which approached prompt engineering as a data annotation challenge, while also acknowledging the technique's limitations.

- A Toolkit for Prompt Experimentation: The guild referenced several resources, such as PromptTools, an open-source resource supporting prompt testing compatible with LLMs including OpenAI and LLaMA, and vector databases like Chroma and Weaviate.

- Measuring AI with Metrics: Platforms like Vercel and Helicone AI were discussed for their capabilities in comparing model outputs and managing prompts, with emphasis on Helicone AI's exploration into prompt management and version control.

- PromptFoo Empowers Prompt Testing: The sharing of PromptFoo was noted, an open-source tool that allows users to test prompts, evaluate LLM outputs, and enhance prompt quality across various models.

- Revolutionizing Blog Content with AI: A member is applying gpt-3.5-turbo to translate blog posts for different personae and considers the broader implications of AI in personalizing reader experiences, demonstrating this through their blog.

- Seed Recovery Puzzle: A member asked if it is possible to retrieve the seed used by OpenAI models for a previous API request, but no additional context or responses were offered regarding this query.

Skunkworks AI Discord

- Paper Teases Accuracy and Efficiency Advances: Baptistelqt is prepping to unveil a paper promising improved global accuracy and sample efficiency in AI training. The release awaits the structuring of results and better chart visualizations.

- Scaling Hurdles Await Solutions: Although Baptistelqt's method shows promise, it lacks empirical proof at scale due to limited resources. There's a call for consideration to allocate more compute for testing larger models.

- VGG16 Sees Performance Boost: Preliminary application of Baptistelqt's method on VGG16 using CIFAR100 led to a jump in test accuracy, climbing from a baseline of 0.04 to 0.1.

- Interest Sparked in Quiet-STaR Project: Satyum is keen on joining the "Quiet-STaR" project and discussed participation prerequisites, such as being skilled in PyTorch and transformer architectures.

- Scheduling Snafu Limits Collaboration: Timezone differences are causing delays in collaborative efforts to scale Baptistelqt's method, with an immediate meeting the next day being unfeasible.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #announcements (1 messages):

- Introducing Stable Video 3D: Stability AI announces Stable Video 3D, a new model expanding 3D technology capabilities with significantly improved quality and multi-view experiences. It takes a single object image as an input and outputs novel multi-views, creating 3D meshes.

- Building on Stable Video Diffusion's Foundation: Stable Video 3D is based on the versatile Stable Video Diffusion technology, offering advancements over the likes of Stable Zero123 and Zero123-XL, especially in quality and the ability to generate multi-view outputs.

- Stable Video 3D Variants Released: Two variants of the model have been released: SV3D_u, which generates orbital videos from single image inputs without camera conditioning, and SV3D_p (extending capabilities beyond what’s mentioned).

Link mentioned: Introducing Stable Video 3D: Quality Novel View Synthesis and 3D Generation from Single Images — Stability AI: When we released Stable Video Diffusion, we highlighted the versatility of our video model across various applications. Building upon this foundation, we are excited to release Stable Video 3D. This n...

Stability.ai (Stable Diffusion) ▷ #general-chat (988 messages🔥🔥🔥):

- Stable Diffusion 3 Anticipation: There's excitement and anticipation for Stable Diffusion 3 (SD3) with hints that invites for beta access may start rolling out this week. Users are hoping to see new examples and the release is expected sometime next month.

- Debates on Model Efficiency: Discussions are ongoing about the efficiency of various Stable Diffusion models like Stable Cascade versus SDXL, with some users finding Cascade to be better at complex prompts but slower to generate images.

- Concerns Over Blockchain Partnerships: Stability AI's recent partnerships with blockchain-focused companies are raising concerns among users. Some fear these moves could signal a shift towards proprietary models or a less open future for the platform's AI tools.

- Use of .pt Files and SAFETENSORS: A user inquires about converting .pt files to SAFETENSOR format due to concerns about running potentially unsafe pickle files. Although most .pt files are safe and the major UIs don't execute unsafe code, a link for a converter tool is shared.

- Upcoming New 3D Model: Stability AI announces the release of Stable Video 3D (SV3D), an advancement over previous 3D models like Stable Zero123. It features improved quality and multi-view generation, but users will need to self-host the model even with a membership.

- Iron Man Mr Clean GIF - Iron Man Mr Clean Mop - Discover & Share GIFs: Click to view the GIF

- grok-1: Grok-1 is a 314B parameter Mixture of Experts model - Base model (not finetuned) - 8 experts (2 active) - 86B active parameters - Apache 2.0 license - Code: - Happy coding! p.s. we re hiring:

- Avatar Cuddle GIF - Avatar Cuddle Hungry - Discover & Share GIFs: Click to view the GIF

- Yess GIF - Yess Yes - Discover & Share GIFs: Click to view the GIF

- PollyannaIn4D (Pollyanna): no description found

- Introducing Stable Video 3D: Quality Novel View Synthesis and 3D Generation from Single Images — Stability AI: When we released Stable Video Diffusion, we highlighted the versatility of our video model across various applications. Building upon this foundation, we are excited to release Stable Video 3D. This n...

- coqui/XTTS-v2 · Hugging Face: no description found

- Stable Video Diffusion - SVD - img2vid-xt-1.1 | Stable Diffusion Checkpoint | Civitai: Check out our quickstart Guide! https://education.civitai.com/quickstart-guide-to-stable-video-diffusion/ The base img2vid model was trained to gen...

- pickle — Python object serialization: Source code: Lib/pickle.py The pickle module implements binary protocols for serializing and de-serializing a Python object structure. “Pickling” is the process whereby a Python object hierarchy is...

- The Complicator's Gloves: Good software is constantly under attack on several fronts. First, there are The Amateurs who somehow manage to land that hefty contract despite having only finished "Programming for Dummies"...

- Page Not Found | pny.com: no description found

- NVLink | pny.com: no description found

- Короткометражный мультфильм "Парк" (сделан нейросетями): Короткометражный мультфильм "Парк" - невероятно увлекательный короткометражный мультфильм, созданный с использованием нейросетей.

- Vancouver, Canada 1907 (New Version) in Color [VFX,60fps, Remastered] w/sound design added: I colorized , restored and I added a sky visual effect and created a sound design for this video of Vancouver, Canada 1907, Filmed from the streetcar, these ...

- Proteus-RunDiffusion - withoutclip | Stable Diffusion Checkpoint | Civitai: Introducing Proteus-RunDiffusion In the development of Proteus-RunDiffusion, our team embarked on an exploratory project aimed at advancing the cap...

- The Mushroom Motherboard: The Crazy Fungal Computers that Might Change Everything: Unlock the secrets of fungal computing! Discover the mind-boggling potential of fungi as living computers. From the wood-wide web to the Unconventional Compu...

- GitHub - DiffusionDalmation/pt_to_safetensors_converter_notebook: This is a notebook for converting Stable Diffusion embeddings from .pt to safetensors format.: This is a notebook for converting Stable Diffusion embeddings from .pt to safetensors format. - DiffusionDalmation/pt_to_safetensors_converter_notebook

- WKUK - Anarchy [HD]: Economic ignorance at its most comical.— "Freedom, Inequality, Primitivism, and the Division of Labor" by Murray Rothbard (http://mises.org/daily/3009).— "Th...

- Reddit - Dive into anything: no description found

- Install ComfyUI on Mac OS (M1, M2 or M3): This video is a quick wakthrough to show how to get Comfy UI installed locally on your m1 or m2 mac. Find out more about AI Animation, and register as an AI ...

- GitHub - Stability-AI/generative-models: Generative Models by Stability AI: Generative Models by Stability AI. Contribute to Stability-AI/generative-models development by creating an account on GitHub.

- GitHub - chaojie/ComfyUI-DragAnything: Contribute to chaojie/ComfyUI-DragAnything development by creating an account on GitHub.

- GitHub - GraftingRayman/ComfyUI-Trajectory: Contribute to GraftingRayman/ComfyUI-Trajectory development by creating an account on GitHub.

- GitHub - mix1009/sdwebuiapi: Python API client for AUTOMATIC1111/stable-diffusion-webui: Python API client for AUTOMATIC1111/stable-diffusion-webui - mix1009/sdwebuiapi

- Home: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

- Regional Prompter: Control image composition in Stable Diffusion - Stable Diffusion Art: Do you know you can specify the prompts for different regions of an image? You can do that on AUTOMATIC1111 with the Regional Prompter extension.

Perplexity AI ▷ #announcements (1 messages):

- Unlimited Claude 3 Opus Queries for Pro Users: An announcement was made that Perplexity Pro users now have unlimited daily queries on Claude 3 Opus, which is claimed to be the best Language Model (LLM) in the market today. Pro users are invited to enjoy the new benefit.

Perplexity AI ▷ #general (795 messages🔥🔥🔥):

- Confusion Over "Unlimited" Usage: Users discuss the confusing use of the term "unlimited" in conjunction with Perplexity's services, which are actually capped at 600 searches or uses per day. This has led to complaints and requests for clearer communication from Perplexity.

- Interest in Claude 3 Opus: Many users express interest in the Claude 3 Opus model, asking how it compares to other models like regular GPT-4. Some report a better experience using Opus for complex tasks and enjoying the more natural responses.

- Parenting and AI: There's a heated debate about the appropriate age level for certain knowledge and whether complex topics like calculus or the age of the Earth can be made digestible to young children using AI. Some parents share their positive experiences with using AI as an educational tool for their kids.

- Perplexity Integrations and Capabilities: Users are curious about integrating new AI models like Grok into Perplexity and asking about potential applications, such as integration into mobile devices. Users also inquire about using Perplexity for tasks like analyzing PDFs, which led to a discussion on the proper model settings to use.

- Personal Experiences with Perplexity: Users exchange stories about using Perplexity for job applications, the excitement of seeing Perplexity mentioned in a conference, and using the platform to answer controversial or complex questions. There's a mixture of humor and praise for Perplexity's capabilities.

- Tweet from Bloomberg Technology (@technology): EXCLUSIVE: Apple is in talks to build Google’s Gemini AI engine into the iPhone in a potential blockbuster deal https://trib.al/YMYJw2K

- Tweet from Brivael (@BrivaelLp): Zuck just reacted to the release of Grok, and he is not really impressed. "314 billion parameter is too much. You need to have a bunch of H100, and I already buy them all" 🤣

- Tweet from Aravind Srinivas (@AravSrinivas): We have made the number of daily queries on Claude 3 Opus (the best LLM in the market today) for Perplexity Pro users, unlimited! Enjoy!

- Tweet from Aravind Srinivas (@AravSrinivas): Yep, thanks to @elonmusk and xAI team for open-sourcing the base model for Grok. We will fine-tune it for conversational search and optimize the inference, and bring it up for all Pro users! ↘️ Quoti...

- no title found: no description found

- Shikimori Shikimoris Not Just Cute GIF - Shikimori Shikimoris Not Just Cute Shikimoris Not Just A Cutie Anime - Discover & Share GIFs: Click to view the GIF

- Apple’s AI ambitions could include Google or OpenAI: Another big Apple / Google deal could be on the horizon.

- Nothing Perplexity Offer: Here at Nothing, we’re building a world where tech is fun again. Remember a time where every new product made you excited? We’re bringing that back.

- What Are These Companies Hiding?: Thoughts on the Rabbit R1 and Humane Ai PinIf you'd like to support the channel, consider a Dave2D membership by clicking the “Join” button above!http://twit...

- ✂️ Sam Altman on AI LLM Search: 47 seconds · Clipped by Syntree · Original video "Sam Altman: OpenAI, GPT-5, Sora, Board Saga, Elon Musk, Ilya, Power & AGI | Lex Fridman Podcast #419" by Le...

- FCC ID 2BFB4R1 AI Companion by Rabbit Inc.: FCC ID application submitted by Rabbit Inc. for AI Companion for FCC ID 2BFB4R1. Approved Frequencies, User Manuals, Photos, and Wireless Reports.

Perplexity AI ▷ #sharing (35 messages🔥):

- Exploring Creative Writing Limits: Claude 3 Opus engaged with a prompt on "ever increasing intelligence until it's unintelligible to humans", suggesting exploration into the bounds of creativity and comprehension in AIs. Claude 3 Opus's creative take on literature may push the limits of what we consider coherent.

- Visibility is Key in Sharing Threads: Sharing information is essential, hence the reminder to make sure threads are shared for visibility on the platform, with a direct link for guidance. Reference to sharing thread.

- Cleanliness Comparison Caller: An inquiry into which item is cleaner leads to an analysis that might toss up unexpected results. Discover the cleaner option on Perplexity's analysis.

- North Korea's Insights Unpacked with AI: North Korea's Kim is a subject of scrutiny in the ongoing analysis by Perplexity AI, discussing developments and speculations. Explore the geopolitical insights at this search link.

- Tech Giants Make Waves: Apple's ventures and acquisitions continue to stir discussions, whether it's acquiring DarwinAI or the 30B LLM talk, indicating significant moves in the AI and tech industry. Find details on Apple's acquisition at DarwinAI overview and ongoing discussions around the 30B LLM at this discussion thread.

Perplexity AI ▷ #pplx-api (64 messages🔥🔥):

- Deprecated Model Continues to Function: Messages in the channel indicate confusion around a model scheduled for deprecation on March 15; it was still operational, leading to speculation about whether it's due to be deprecated at the day's end or if plans have changed.

- Inconsistencies in Sonar Model Responses: Users compared responses from the

sonar-medium-onlineAPI to the web-browser version, noting significant differences in answers when asked for news about a specific date, leading to discussions about the accuracy and consistency of API responses. - Job Search with Perplexity API: Users are experimenting with the Perplexity API for job searches, where some prompts yield actual job posting links, while others only return links to job search platforms like LinkedIn or Glassdoor.

- Request for API Rate Limit Increase Goes Unanswered: A user inquired about the process for increasing API rate limits and has not received a response to their emailed request.

- Discussion on Token Limits Affecting LLM Responses: Within the chat, there's an exchange regarding how setting max token limits like 300 might impact the Language Learning Model's (LLM) ability to provide complete responses, with users sharing examples of truncated answers and discussing the model's behavior with varying token ceilings.

- pplx-api: no description found

- pplx-api form: Turn data collection into an experience with Typeform. Create beautiful online forms, surveys, quizzes, and so much more. Try it for FREE.

Unsloth AI (Daniel Han) ▷ #general (853 messages🔥🔥🔥):

- AIKit Adopts Unsloth for Finetuning: AIKit integration now supports finetuning with Unsloth, enabling users to create minimal model images with an OpenAI-compatible API. A Hugging Face Space was also shared for testing Piper TTS in Spanish.

- Grok Open Source Discussion: Elon Musk's team at X.ai open-sourced a massive 314B parameter model called Grok-1, involving 8 experts and 86B active parameters. Discourse focused on the practicality of usage given its size, with many concluding it's impractical for most due to the computational resources required for inference.

- Safety Measures Against Impersonation: A scam account impersonating a member ('starsupernova0') was discovered to be sending friend requests within the Discord. Members reported and issued warnings regarding the fake account.

- Inquisitive Minds Seek Finetuning Guidance: Users shared resources and discussed strategies for optimally finetuning models like Mistral-7b using QLoRA. Concerns about hyperparameters, such as learning rate and number of epochs, were addressed with recommendations to follow provided guidelines in notebooks.

- Fine-tuning and Resource Challenges: Questions arose related to RTX 2080 Ti's capacity for fine-tuning larger models like 'gemma-7b-bnb-4bit', as users experienced out-of-memory (OOM) issues even with a batch_size=1. The conversation highlighted the intensive resource demands of fine-tuning large-scale models.

- Tweet from Unsloth AI (@UnslothAI): Unsloth is trending on GitHub this week! 🙌🦥 Thanks to everyone & all the ⭐️Stargazers for the support! Check out our repo: http://github.com/unslothai/unsloth

- Cosmic keystrokes: no description found

- About xAI: no description found

- Open Release of Grok-1: no description found

- Lightning AI | Turn ideas into AI, Lightning fast: The all-in-one platform for AI development. Code together. Prototype. Train. Scale. Serve. From your browser - with zero setup. From the creators of PyTorch Lightning.

- CodeFusion: A Pre-trained Diffusion Model for Code Generation: Imagine a developer who can only change their last line of code, how often would they have to start writing a function from scratch before it is correct? Auto-regressive models for code generation fro...

- Announcing Grok: no description found

- Qwen/Qwen1.5-72B · Hugging Face: no description found

- Blog: no description found

- ISLR Datasets — 👐OpenHands documentation: no description found

- Mixtral of Experts: We introduce Mixtral 8x7B, a Sparse Mixture of Experts (SMoE) language model. Mixtral has the same architecture as Mistral 7B, with the difference that each layer is composed of 8 feedforward blocks (...

- Google Colaboratory: no description found

- xai-org/grok-1 · Hugging Face: no description found

- Introduction | AIKit: AIKit is a one-stop shop to quickly get started to host, deploy, build and fine-tune large language models (LLMs).

- 🦅 EagleX 1.7T : Soaring past LLaMA 7B 2T in both English and Multi-lang evals (RWKV-v5): A linear transformer has just cross the gold standard in transformer models, LLaMA 7B, with less tokens trained in both English and multi-lingual evals. A historical first.

- Crystalcareai/GemMoE-Beta-1 · Hugging Face: no description found

- Unsloth Fixing Gemma bugs: Unsloth fixing Google's open-source language model Gemma.

- Piper TTS Spanish - a Hugging Face Space by HirCoir: no description found

- damerajee/Llamoe-test · Hugging Face: no description found

- How to Fine-Tune an LLM Part 1: Preparing a Dataset for Instruction Tuning: Learn how to fine-tune an LLM on an instruction dataset! We'll cover how to format the data and train a model like Llama2, Mistral, etc. is this minimal example in (almost) pure PyTorch.

- Paper page - Simple linear attention language models balance the recall-throughput tradeoff: no description found

- Sam Altman: OpenAI, GPT-5, Sora, Board Saga, Elon Musk, Ilya, Power & AGI | Lex Fridman Podcast #419: Sam Altman is the CEO of OpenAI, the company behind GPT-4, ChatGPT, Sora, and many other state-of-the-art AI technologies. Please support this podcast by che...

- argilla (Argilla): no description found

- GitHub - xai-org/grok-1: Grok open release: Grok open release. Contribute to xai-org/grok-1 development by creating an account on GitHub.

- transformers/src/transformers/models/mixtral/modeling_mixtral.py at main · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

- Mistral Fine Tuning for Dummies (with 16k, 32k, 128k+ Context): Discover the secrets to effortlessly fine-tuning Language Models (LLMs) with your own data in our latest tutorial video. We dive into a cost-effective and su...

- GitHub - jiaweizzhao/GaLore: Contribute to jiaweizzhao/GaLore development by creating an account on GitHub.

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub - AI4Bharat/OpenHands: 👐OpenHands : Making Sign Language Recognition Accessible. | **NOTE:** No longer actively maintained. If you are interested to own this and take it forward, please raise an issue: 👐OpenHands : Making Sign Language Recognition Accessible. | **NOTE:** No longer actively maintained. If you are interested to own this and take it forward, please raise an issue - AI4Bharat/OpenHands

- teknium/GPT4-LLM-Cleaned · Datasets at Hugging Face: no description found

- GitHub - mistralai/mistral-src: Reference implementation of Mistral AI 7B v0.1 model.: Reference implementation of Mistral AI 7B v0.1 model. - mistralai/mistral-src

- Error when installing requirements · Issue #6 · xai-org/grok-1: i have installed python 3.10 and venv. Trying to "pip install -r requirements.txt" ERROR: Ignored the following versions that require a different python version: 1.6.2 Requires-Python >=3...

- Falcon 180B open-source language model outperforms GPT-3.5 and Llama 2: The open-source language model FalconLM offers better performance than Meta's LLaMA and can also be used commercially. Commercial use is subject to royalties if revenues exceed $1 million.

- FEAT / Optim: Add GaLore optimizer by younesbelkada · Pull Request #29588 · huggingface/transformers: What does this PR do? As per title, adds the GaLore optimizer from https://github.com/jiaweizzhao/GaLore This is how I am currently testing the API: import torch import datasets from transformers i...

- Staging PR for implimenting Phi-2 support. by cm2435 · Pull Request #97 · unslothai/unsloth: ….org/main/getting-started/tutorials/05-layer-norm.html]

Unsloth AI (Daniel Han) ▷ #announcements (1 messages):

- Unsloth AI Gains Stardom on GitHub: Unsloth AI has become a trending topic on GitHub this week, gaining popularity and support from the community. The official post encourages users to give a star on GitHub and features a link to the repository which focuses on 2-5X faster 70% less memory QLoRA & LoRA finetuning at GitHub - unslothai/unsloth.

Link mentioned: GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #random (25 messages🔥):

- Baader-Meinhof Phenomenon Strikes: A member noted experiencing the Baader-Meinhof phenomenon, also known as the frequency illusion, where one randomly thinks of something and then encounters it soon after. This was attributed to the subconscious mind picking up information from the environment.

- Encouragement for Creative Output: In response to a member sharing a poetic composition, another expressed interest and appreciation, encouraging the sharing of creative monologues.

- The Gemma vs. Mistral Debate: A discussion about fine-tuning domain-specific classification tasks included mentions of Mistral-7b and considering the use of Gemma 7b. Gemma 7b was noted to sometimes outperform Mistral in tests, with Unsloth AI having resolved previous bugs.

- Seeking the Elusive Mixtral Branch: A member looking for the Mixtral branch was redirected to tohrnii's branch with a pull request on GitHub.

- Pokemon RL Agents Conquer the Map: A user shared a link to a visualization of various environments being trained on a single map, depicting the training of Pokemon RL agents as exposed on the interactive map.

- Pokemon Red Map RL Visualizer: no description found

- 4202 UI elements: CSS & Tailwind: no description found

- [WIP] add support for mixtral by tohrnii · Pull Request #145 · unslothai/unsloth: Mixtral WIP

Unsloth AI (Daniel Han) ▷ #help (568 messages🔥🔥🔥):

- VRAM and System RAM Requirements in Model Saving: A user discussed the high VRAM and RAM usage during the model saving process in Colab, noting that the T4 used 15GB VRAM and 5GB system RAM. Clarifications indicated that VRAM is utilized for loading the model during saving, suggesting adequate system RAM is important, especially when dealing with the saving of large models like Mistral.

- Unsloth Supports Llama, Mistral, and Gemma Models: Users inquired about the models supported by Unsloth, clarified to include only open-source models like Llama, Mistral, and Gemma. There were questions regarding whether 4-bit quantization refers to QLoRA, with

load_in_4bit = True, and discussions on whether Unsloth could support full fine-tuning in the future.

- Challenges with GPT4 Deployment via Unsloth: A user asked about deploying OpenAI's GPT4 model with Unsloth, only to be advised that this is outside the scope of Unsloth, which is confirmed to support open-source models for finetuning and not the proprietary GPT4 model.

- Finetuning Issues Addressed for Multiple Models: Multiple discussions revolved around issues encountered during and after finetuning models with Unsloth. These included unexpected model behavior such as generating random questions and answers after processing prompts, and the requirement for properly specifying end-of-sequence tokens in various chat templates.

- Inquiries on Full Fine-tuning and Continuous Pretraining: There was a dialogue on whether the guidelines regarding fine-tuning also apply to continuous pretraining, with Unsloth developers suggesting LoRA might be suitable but clarifying that Unsloth currently specializes in LoRA and QLoRA, not full fine-tuning. The possibility of extending full fine-tuning functionalities in Unsloth Pro was also discussed.

- Kaggle Mistral 7b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- ybelkada/Mixtral-8x7B-Instruct-v0.1-bnb-4bit · Hugging Face: no description found

- Hugging Face – The AI community building the future.: no description found

- TinyLlama/TinyLlama-1.1B-Chat-v1.0 · Hugging Face: no description found

- unsloth/mistral-7b-instruct-v0.2-bnb-4bit · Hugging Face: no description found

- DPO Trainer: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- qlora/qlora.py at main · artidoro/qlora: QLoRA: Efficient Finetuning of Quantized LLMs. Contribute to artidoro/qlora development by creating an account on GitHub.

- Generation - GPT4All Documentation: no description found

- GitHub - vllm-project/vllm: A high-throughput and memory-efficient inference and serving engine for LLMs: A high-throughput and memory-efficient inference and serving engine for LLMs - vllm-project/vllm

- Google Colaboratory: no description found

- Unsloth: Merging 4bit and LoRA weights to 16bit...Unsloth: Will use up to 5.34 - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Does DPOTrainer loss mask the prompts? · Issue #1041 · huggingface/trl: Hi quick question, so DataCollatorForCompletionOnlyLM will train only on the responses by loss masking the prompts. Does it work this way with DPOTrainer (DPODataCollatorWithPadding) as well? Looki...

- Supervised Fine-tuning Trainer: no description found

- Trainer: no description found

- llama.cpp/examples/server/README.md at master · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- GitHub - abetlen/llama-cpp-python: Python bindings for llama.cpp: Python bindings for llama.cpp. Contribute to abetlen/llama-cpp-python development by creating an account on GitHub.