[AINews] GraphRAG: The Marriage of Knowledge Graphs and RAG

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

KGs are all LLMs need.

AI News for 7/1/2024-7/2/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (419 channels, and 2518 messages) for you. Estimated reading time saved (at 200wpm): 310 minutes. You can now tag @smol_ai for AINews discussions!

Neurosymbolic stans rejoice!

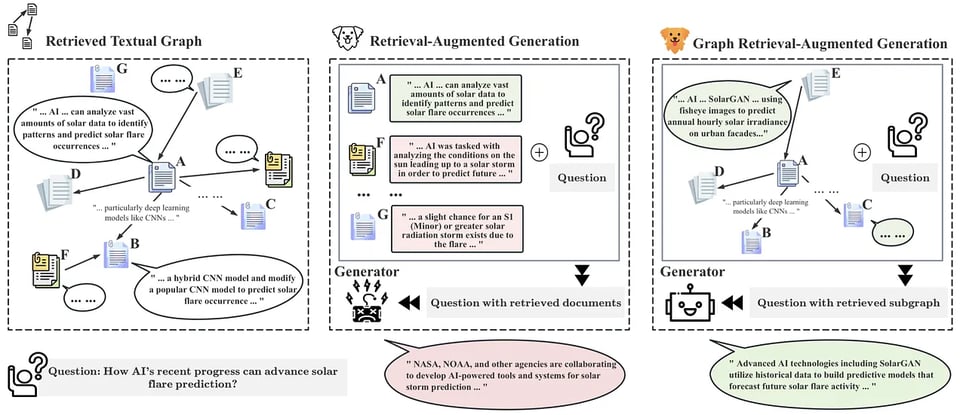

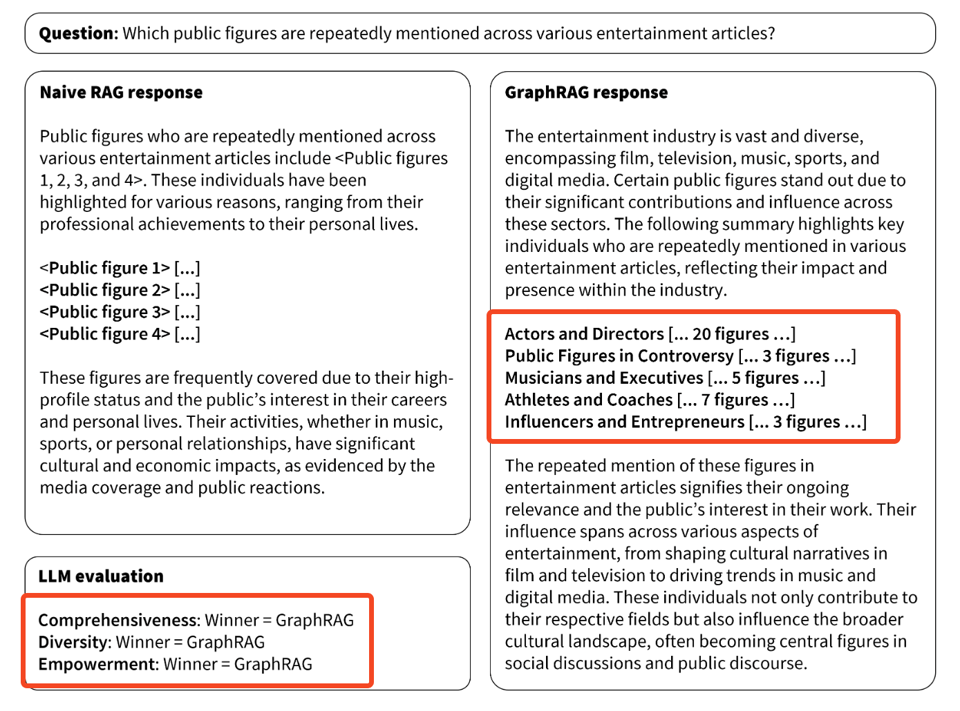

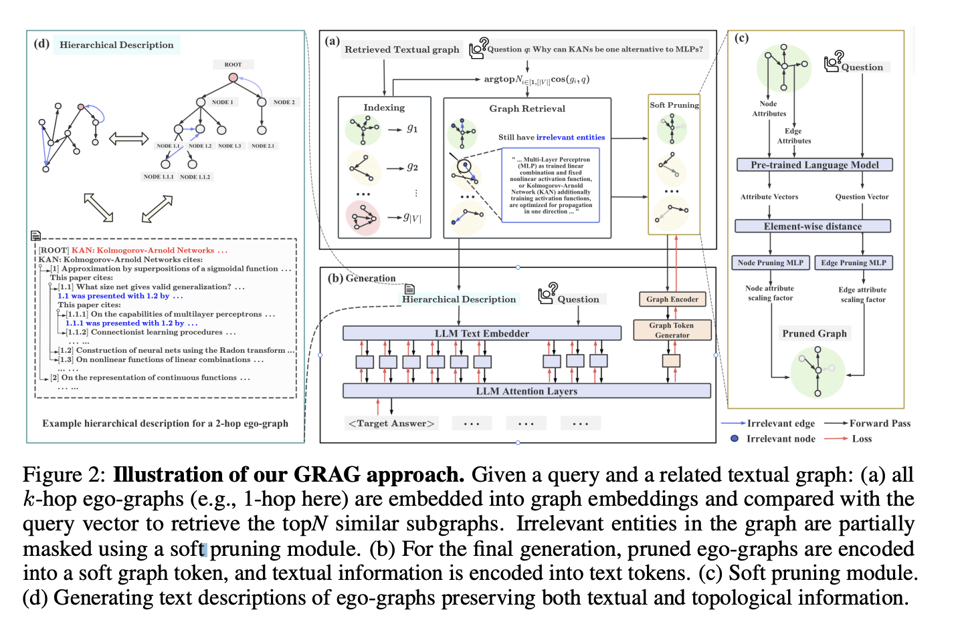

Microsoft Research first announced GraphRAG in April, and it was surprisingly popular during Neo4j's workshops and talks at the AI Engineer World's Fair last week (videos aren't yet live so we haven't seen it yet, but soon (tm)). They have now open sourced their code. As Travis Fischer puts it:

- use LLMs to extract a knowledge graph from your sources

- cluster this graph into communities of related entities at diff levels of detail

- for RAG, map over all communities to create "community answers" and reduce to create a final answer.

Or in their relatively less approachable words:

However, the dirty secret of all performance improvement techniques of this genre: token usage and inference time goes up 🙃

Also of note: their prompt rewriting approach

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

LLM Model Releases and Improvements

- Gemma 2 models released: @rasbt noted Gemma 2 models explore techniques without increasing dataset sizes, focusing on developing small & efficient LLMs. Key design choices include sliding window attention, group-query attention, and RMS norm. Gemma 2 is almost as good as the 3x larger Llama 3 70B.

- Anthropic's Claude 3.5 Sonnet model: @alexandr_wang reported Claude 3.5 Sonnet is now #1 on Instruction Following and Coding in ScaleAI's hidden evals. However, it loses points on writing style and formatting vs other top models.

- Nvidia's Nemotron 340B model: @osanseviero shared that Nemotron, a 340B parameter model, was released as part of the June open model releases.

- Qwen2-72B tops HuggingFace Open LLM Leaderboard: @rohanpaul_ai noted Qwen2-72B scores 43.02 on average, excelling in math, long-range reasoning, and knowledge. Interestingly, Llama-3-70B-Instruct loses 15 points to its pretrained version on GPQA.

Retrieval Augmented Generation (RAG) Techniques and Challenges

- RAG Basics Talk: @HamelHusain shared a talk on RAG Basics from the LLM conf, covering key concepts and techniques.

- Limitations of RAG: @svpino discussed the limitations of RAG, including challenges with retrieval, long context windows, evaluation, and configuring systems to provide sources for answers.

- Improving LLM Context Usage in RAG: @AAAzzam shared a tip to get LLMs to use context more effectively - LLMs use info from function calls far more than general context. Transforming context into pseudo function calls can improve results.

Synthetic Data Generation and Usage

- Persona-Driven Data Synthesis: @omarsar0 shared a paper proposing a persona-driven data synthesis methodology to generate diverse synthetic data. It introduces 1 billion diverse personas to facilitate creating data covering a wide range of perspectives. A fine-tuned model on 1.07M synthesized math problems achieves 64.9% on MATH, matching GPT-4 performance at 7B scale.

- AutoMathText Dataset: @rohanpaul_ai highlighted the 200GB AutoMathText dataset of mathematical text and code for pretraining mathematical language models. The dataset consists of content from arXiv, OpenWebMath, and programming repositories/sites.

- Synthetic Data for Math Capabilities: @rohanpaul_ai noted a paper showing synthetic data is nearly as effective as real data for improving math capabilities in LLMs. LLaMA-2 7B models trained on synthetic data surpass previous models by 14-20% on GSM8K and MATH benchmarks.

Miscellaneous

- 8-bit Optimizers via Block-wise Quantization: @rohanpaul_ai revisited a 2022 paper on 8-bit optimizers that maintain 32-bit optimizer performance. Key innovations include block-wise quantization, dynamic quantization, and stable embedding layers to reduce memory footprint without compromising efficiency.

- Understanding and Mitigating Language Confusion: @seb_ruder introduced a paper analyzing LLMs' failure to generate text in the user's desired language. The Language Confusion Benchmark measures this across 15 languages. Even strong LLMs exhibit confusion, with English-centric instruction tuning having a negative effect. Mitigation measures at inference and training time are proposed.

- Pricing Comparison for Hosted LLMs: @_philschmid shared an updated pricing sheet for hosted LLMs from various providers. Key insights: ~$15 per 1M output tokens from top closed LLMs, Deepseek v2 cheapest at $0.28/M, Gemini 1.5 Flash best cost-performance, Llama 3 70B ~$1 per 1M tokens.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

LLM Development and Capabilities

- Gemma 2 27B model issues: In /r/LocalLLaMA, users are questioning if the Gemma 2 27B model is broken with recent llamacpp updates. Comparisons show answers are similar to aistudio.google.com.

- Scaling synthetic data creation: New research discussed in /r/singularity leverages a collection of 1 billion diverse personas to create synthetic data at scale for training LLMs, enabling tapping into many perspectives for versatile data synthesis.

- LLMs more linear than thought: In /r/MachineLearning, new research reveals near-perfect linear relationships in transformer decoders. Removing or approximating linear blocks doesn't significantly impact performance, challenging assumptions about transformer architectures.

Stable Diffusion Models and Training

- Vanilla SD 2.1 for hyper-realistic faces: In /r/StableDiffusion, the vanilla SD 2.1 base model is being used with extensions like Scramble Prompts and Mann-E_Dreams-0.0.4 in Forge UI to generate impressive hyper-realistic face editing results.

- Halcyon 1.7 tops SDXL rankings: Halcyon 1.7 takes the top spot in SDXL model rankings for prompt adherence and rich results, according to comparisons in /r/StableDiffusion.

- Maintaining facial consistency with IC-light: In /r/StableDiffusion, a user is seeking tips for keeping faces consistent across frames and lighting conditions when using IC-light in projects, looking for techniques, settings and tools to achieve stability.

Hardware and Performance

- Watercooling RTX 3090s: In /r/LocalLLaMA, a user is seeking advice on watercooling a 4x 3090 rig for space efficiency, asking if a single loop is viable to prevent throttling.

- Multiple GPUs for inference speed: Another /r/LocalLLaMA post questions if adding another GPU will actually increase inference speed for llama.cpp via Ollama or just provide a larger memory pool, seeking clarification before purchase.

- Deepspeed vs Unsloth for multi-GPU: An /r/LocalLLaMA thread compares the effectiveness of Deepspeed without Unsloth vs Unsloth with data parallelism for multi-GPU training, planning to use stage 2 Deepspeed if it makes a difference.

Optimizations and Benchmarks

- Beating NumPy matrix multiplication: In /r/LocalLLaMA, a user shares a highly optimized C implementation of matrix multiplication following BLIS design that outperforms NumPy/OpenBLAS with just 3 lines of OpenMP for parallelization.

- Gemma 2 usage with Hugging Face: A Twitter thread linked in /r/LocalLLaMA covers proper Gemma 2 usage with Hugging Face Transformers, including bug fixes, soft capping logits, and precision settings for best results.

- Anthropic funding third-party benchmarks: Anthropic announces an initiative to fund the development of third-party benchmarks for evaluating AI models.

AI Discord Recap

A summary of Summaries of Summaries

-

LLM Performance and Benchmarking Advancements:

- New models like Phi-3 Mini from Microsoft and Gemma 2 from Google are showing significant improvements in instruction following and performance.

- The AI community is actively discussing and comparing model performances, with debates around benchmarks like AlignBench and MT-Bench.

- There's growing interest in reproducible benchmarks, with efforts to replicate results using tools like

lm_evalfor models such as Gemma 2.

-

Optimizing LLM Training and Inference:

- Discussions across discords highlight the importance of efficient training techniques, with focus on methods like eager attention for Gemma2 models.

- The community is exploring various quantization techniques, such as those implemented in vLLM and llama.cpp, to improve inference performance.

- Hardware considerations for AI tasks are a hot topic, with debates around GPU vs CPU performance and the potential of specialized hardware like Apple's variable bit quantization.

-

Open-Source AI Development and Community Collaboration:

- Projects like Axolotl and LlamaIndex are fostering community-driven development of AI tools and frameworks.

- There's increasing interest in open-source alternatives to proprietary models, such as StoryDiffusion as an alternative to Sora.

- Collaborative efforts are emerging around projects like OpenDevin and Microsoft's Graph RAG Architecture.

-

Multimodal AI and Generative Modeling:

- Advancements in vision-language models are being discussed, with projects like Vistral 7B for Vietnamese and Florence-2 running locally with WebGPU.

- Text-to-video generation is gaining traction, with tools like Runway's Gen-3 sparking discussions about capabilities and pricing.

- The community is exploring combinations of models and techniques to achieve DALLE-3-level outputs, indicating a trend towards more sophisticated multimodal systems.

PART 1: High level Discord summaries

LM Studio Discord

- LM Studio's Shiny New Armor: The LM Studio 0.2.27 release celebrated improvements for Gemma 9B and 27B models, with users prompted to download new versions or embrace the auto-update feature. Posted release notes reveal bug fixes and an updated

llama.cppcommit ID.- Community members also eagerly discussed the Phi-3 Mini jump to Phi 3.1 by Microsoft, emphasizing the leap in performance and instruction adherence - head over to Hugging Face to catch the wave.

- Heatwaves and Hurdles in Hardware: VRAM's supremacy over RAM for LLM tasks was the hot topic, with consensus leaning towards 8GB VRAM as the recommended minimum for avoiding performance pitfalls. A user's rig, boasting a total of 120GB VRam with 5x water-cooled 4090s, sparked interest for its brute force approach to LLM inference.

- However, not all sailed smoothly as members reported GPU idling temperature issues with LM Studio on hardware like a P40 GPU, along with compatibility concerns particularly with AMD GPUs post update, as seen in Extension-Pack-Instructions.md.

- Collaborative Debugging in Dev Chat: A clash of SDK versions in LM Studio led users down a rabbit hole of

await client.llm.load(modelPath);errors after upgrading to 0.2.26. The lmstudio.js GitHub issue hosted the saga, setting the stage for community troubleshooting.- Discord bots weren't immune to challenges either; a case of TokenInvalid error, pinned on the lack of MessageContent intents, was laid to rest through community collaboration, highlighting the spirit of collective problem-solving.

- Quantization Quintessentials and Gemma 2: Enthusiasm for the Gemma 2 model updates matched stride with the Phi 3.1 enhancements, both promising smoother operation with the latest LM Studio iteration. The community's pivot towards quantized versions, like Gemma 2 9b and 27b, indicates a keen eye on performance optimizations.

- With Gemma 2 facing an unexpected setback on an AMD 6900 XT GPU, as revealed by the 'failed to load model' debacle, the tides seem to favor a 're-download and try again' approach, albeit with members staying on standby for a more solid fix.

HuggingFace Discord

- Transformers' Tech Triumphs: With the Transformers 4.42 release, users are now able to access a variety of fresh models and features, including Gemma 2, RT-DETR, and others, highlighted in the release notes.

- Community reactions show elevated excitement for this update, anticipating enhanced capabilities and efficient RAG support, as expressed in enthusiastic responses.

- Chronos Data Chronicles: AWS has launched its Chronos datasets on Hugging Face, along with evaluation scripts, providing datasets used for both pretraining and evaluation as detailed here.

- This dissemination of datasets is recognized with community acclaim, marked as a significant contribution for those interested in temporal data processing and analysis.

- Metrics at Scale: Hub's Major Milestone: 100k public models are leveraging the Hub to store

tensorboardlogs, facilities summarized here, illustrating the expanding utility of the platform.- This accomplishment is considered a major nexus for model monitoring, streamlining the process of tracking training logs alongside model checkpoints.

- Vietnamese Vision-Language Ventures: A new wave in Vietnamese AI is led by Vistral 7B, a Vision-Language model, based on LLaVA and Gemini API to enhance image description capacities as shown on their GitHub.

- The team has opened avenues for community engagement, seeking insights into model performance through an interactive demo, harnessing further vision capabilities.

- AI Finesse in Fashion: E-commerce's Future: Tony Assi showcased a variety of AI-driven projects tailored for e-commerce, with a special focus on using computational vision and machine learning to innovate in fashion.

- The diversity of these applications, available here, underlines the potential AI holds in transforming the e-commerce industry.

CUDA MODE Discord

- CUDA Conclave Crunch: A CUDA-only hackathon will surge in San Francisco on July 13th, hosted by Chris Lattner with H100 access, a testament to Nebius AI's backing.

- Aiming to foster a hardcore hacker mindset, the event persists from 12:00pm to 10:00pm, flaunting strict CUDA adherence and sparking discussion threads on Twitter.

- Matrix Multiplication Mastery: Mobicham released a matrix multiplication guide, demonstrating calculations on CPUs, a stand-out resource for AI engineers focusing on foundational operations.

- This often under-discussed core of AI model efficiency gets a spotlight, paving the way for discussions around compute optimization in AI workflows.

- INTx's Benchmarking Blitz: INTx performance benchmarking soars past fp16, with int2 and int4 breaking records in tokens per second.

- The torchao quantization experiments reveal that int8-weight and intx-4 demonstrate formidable speed, while evaluations optimized at batch size 1 underscore future performance explorations.

- Benchmark Script Bazaar: MobiusML shared benchmark scripts vital for the AI community, alongside token/second measurement methodologies.

- These benchmarks are crucial for performance metrics, especially after resolving recent issues with transformers and their caching logic.

- CUDA Chores to Core Overhaul: Engineers revitalize helper and kernel functions within CUDA MODE, enhancing efficiency and memory optimization, while championing GenericVector applications.

- AI practitioners scrutinize training stability, dissect dataset implications, simplify setups, and discuss inference optimization, as evident in contributions to repositories like llm.c.

Perplexity AI Discord

- Talk the Talk with Perplexity's Android App: Perplexity AI released a voice-to-voice feature for Android, enabling a seamless hands-free interaction and soliciting user feedback via a designated channel. The app offers both Hands-free and Push-to-talk modes.

- The Pro Search update magnifies Perplexity's prowess in handling complex queries with staunch support for multi-step reasoning, Wolfram|Alpha, and code execution. Explore the enhancements here.

- Curating Knowledge with Perplexity AI: User discussions surfaced regarding Perplexity AI's search engine limitations, like bias toward Indian news and erratic source selection, assessing the tools against peers like Morphic.sh. The need for source-setting enhancements and balanced global content is evident.

- Community conversations expose a bottleneck with Claude's 32k tokens in Perplexity AI, dampening user expectations of the advertised 200k tokens. This marks a departure from an ideal AI assistant towards a more search-centric model.

- Perplexity's Plotting Potential Probed: Queries on visualizing data within Perplexity AI concluded that while it doesn't inherently generate graphs, it aids in creating code for external platforms like Google Colab. Members proposed utilizing extensions like AIlin for integrated graphical outputs.

- Citing the proliferation of referral links, the Perplexity AI userbase anticipates a potential trial version, despite the apparent absence due to misuse in the past. The community's thirst for firsthand experience before subscription remains unquenched.

- Puzzle Phenom's Anniversary to Meta's Meltdown: Marking half a century of cognitive challenge, the Rubik's Cube celebrates its 50th anniversary with a special tribute, available here. An eclectic array of news shadows the cube's glory, from Meta's EU charges to electric flight innovation.

- Discussions around business plan creation via Perplexity AI surfaced, revealing a lean canvas tutorial to structure entrepreneurial strategy. Eager business minds are drawn to the guide, found here.

- API Angst and Anticipation in Perplexity's Pipeline: The API ecosystem in Perplexity AI faced a hiccup with Chrome settings failing to load, prompting users to explore Safari as an alternative, with the community suggesting cache clearance for remediation.

- While Sonnet 3.5 remains outside the grasp of the Perplexity API, the interest in wielding the search engine via API kindled discussions about available models and their functional parity with Hugging Face implementations. Detailed model documentation is referenced here.

Unsloth AI (Daniel Han) Discord

- Billion-Persona Breakthrough Tackles MATH: Aran Komatsuzaki detailed the Persona Hub project that generated data from 1 billion personas, improving MATH scores from 49.6 to 64.9. They shared the concept in a GitHub repo and an arXiv paper, sparking conversations on data value over code.

- Discussions pivoted on the ease of replicating the Persona Hub and the potential for scaling synthetic data creation, with a member emphasizing, 'the data is way more important than the code'.

- Phi-3 Mini Gets Performance-Enhancing Updates: Microsoft announced upgrades to Phi-3 Mini, enhancing both the 4K and 128K context model checkpoints as seen on Hugging Face and sparking speculations of advanced undisclosed training methods.

- Community reactions on the LocalLLaMA subreddit were enthusiastic, leading to humorous comparisons with OpenAI's secretive practices, as someone quipped 'ClosedAI’s CriticGPT'.

- Unsloth Embraces SPPO for Streamlined Integration: theyruinedelise confirmed SPPO's compatibility with Unsloth, discussing how it operates alongside TRL, offering a straightforward integration for Usloth users.

- Excitement on the integration front continues as Unsloth reliably extends support to SPPO through TRL, simplifying workflows for developers.

- Chit-Chat with Chatbots in Discord: The llmcord.py script by jakobdylanc, available on GitHub, repurposes Discord as an LLM interface, earning acclaim from the community for its practicality and ease of use.

- Community members, inspired by the release, shared their compliments, with expressions like, 'Nice great job!' emphasizing collective support for the innovation.

- A Collective Call for Notebook Feedback: A new Colab notebook supporting multiple datasets appealed to the community for feedback, aiming to refine user experience and functionality.

- The notebook's noteworthy features, like Exllama2 quant support and LoRA rank scaling, mirror the community's collaborative ethos, with anticipation for constructive feedback.

Stability.ai (Stable Diffusion) Discord

- VRAM Vanquishing Diffusion Dilemmas: A member queried about using Stable Diffusion with only 12GB VRAM, facing issues since everydream2 trainer requires 16GB. Another shared successfully generating a tiny checkpoint on an 8GB VRAM system after a 4-hour crunch.

- The conversation shifted to strategies for running Stable Diffusion on systems with VRAM constraints, with members swapping tips on different models and setups that could be more accommodating of the hardware limitations.

- Stable Diffusion 3 Struggles: The community dissected the weaknesses of Stable Diffusion 3 (SD3), citing missing its mark on finer tunability and incomplete feature implementations, like the inefficient training of Low-Rank Adaptation (LoRA).

- One participant voiced their frustration about SD3's image quality deficits in complex poses, advocating for further feature advancements to overcome areas where the model's performance stutters.

- LoRA's Learning Loop: Discussions spiked over training LoRA (Low-Rank Adaptation) for niche styles, ranging from 3D fractals to game screencaps, though Stable Diffusion 3's restrictive nature with LoRA training was a constraint.

- Community members enthusiastically exchanged workarounds and tools to aid specialized training, iterating the value of trial and error in the quest for custom model mastery.

- Stylistic Spectrum of Stable Diffusion: Participants shared their triumphs and trials with Stable Diffusion across a wide stylistic spectrum, from the sharpness of line art to the eeriness of horror, each with a tale of use-case victories and prompt-gone-wild unpredictability.

- Members delighted in the model's capability to interweave accountabilities from different visual narratives, despite the inexplicable mix-ups that prompts can sometimes bestow.

- Art vs. AI: The Anti-AI Algorithm Quandary: The guild debated the creation of software to shield artist creations from AI incorporation, referencing tools like Glaze and Nightshade, acknowledging the approach's shortcomings against persistent loophiles.

- The debate unraveled the practicality of embedding anti-AI features into artworks and discussed the moral questions tied to the reproduction of digital art, punctuated by the challenge of effectively safeguarding against AI assimilation.

OpenAI Discord

- Sticker Shock & Silicon Block: Engineering circles buzzed about the prohibitive cost of 8 H100 GPUs, with figures quoted at over $500k, and bottlenecks in acquisition, requiring an NVIDIA enterprise account.

- A side chat uncovered interest in alternatives such as Google TPUs and services like Paperspace, which provides H100s for $2.24 an hour, as potential cost-effective training solutions.

- AI Artisans Appraise Apparatus: Artificial image aficionados aired grievances about tools like Luma Dream Machine and Runway Gen-3, branding them as overpriced, with $15 yielding a meager 6-7 outputs.

- Enthusiasm dwindled with the perceived lack of progression past the capabilities of predecessors, prompting a demand for more efficiency and creativity in the generative tools market.

- Multi-Step Missteps in Model Prompts: The community contemplated over GPT's tendency to skip steps in multi-step tasks, despite seemingly clear instructions, undermining the model's thoroughness in task execution.

- A technique was shared to structure instructions sequentially, 'this comes before that', prompting GPT to follow through without missing steps in the intended process.

- Intention Interrogation Intrigue: There was a dive into designing a deft RAG-on-GPT-4o prompt for intention checking, segmenting responses as ambiguous, historical, or necessitating new search queries.

- Concerns crept in about the bot's confounding conflation of history with new inquiries, leading to calls for consistency in context and intention interpretation.

- Limitations and Orchestra Layer: Dialogue delved into the limitations of multimodal models and their domain-specific struggles, with specialized tasks like coding in Python as a computed comparison.

- Orchestrators like LangChain leapt into the limelight with their role in stretching AI context limits beyond a 4k token threshold and architecting advanced models like GPT-4 and LLama.

Eleuther Discord

- vLLM Swoops in for HF Rescue: A user suggested vLLM as a solution to a known issue with HF, using a wiki guide to convert models to 16 Bit, improving efficiency.

- The community appreciated the alternative for model deployment, as vLLM proved effective, with users expressing thanks for the tip.

- Gemma 2 Repro Issues Under Microscope: Accuracy benchmarks for Gemma 2 using

lm_evalare not matching official metrics, prompting users to trybfloat16and specific transformer versions as pinpointed here.- The addition of

add_bos_token=trueas amodel_argsyields scores closer to the model's paper benchmarks, notably lambada_openai's accuracy leaping from 0.2663 to 0.7518.

- The addition of

- Semantic Shuffle in LLM Tokenization: A new paper scrutinizes the 'erasure' effect in LLM tokenization, noting how Llama-2-7b sometimes splits words like 'northeastern' into unrelated tokens.

- The research has stirred excitement for its deep dive into high-level representation conversion from random token groups, aiding comprehension of the tokenization process.

- The Factorization Curse Grabs Headlines: Insights from a paper reframing the 'reversal curse' as 'factorization curse' reveal issues in information retrieval with LLMs, introducing WikiReversal to model complex tasks.

- Suggestions hint at UL2 styled objectives after the report implied better model generalization when trained on corrupted or paraphrased data.

- Graphics of Fewshot Prompts Visualized: Users grappling with the impacts of fewshot prompting on accuracy are seeking methods to visualize prompt effects, experimenting with

--log_samplesfor deeper analysis.- A showcased method saves outputs for inspection, aiming to undercover negative fewshot prompting influences and guide improvements in evaluation accuracy.

Nous Research AI Discord

- Apple Dazzles with On-Device Brilliance: Apple's variable bit quantization optimizes on-device LLMs, as exhibited by their Talaria tool which enhanced 3,600+ models since its release. The technique landed a Best Paper Honorable Mention, with credit to notable researchers like Fred Hohman and Chaoqun Wang.

- Further thrust into on-device intelligence was seen at WWDC 2024 with the introduction of Apple Foundation Models that mesh iOS 18, iPadOS 18, and macOS Sequoia, showcasing a 3 billion parameter on-device LLM for daily tasks, according to Apple's report.

- Runway Revolution: Video Gen Costs Stir Stirring Conversations: Runway's state-of-the-art video generation tools, despite the cutting-edge tech, sparked debates over the $12 price tag for a 60-second video.

- Community member Mautonomy led voices that called for a more palatable $0.5 per generation, comparing it to other premium-priced services.

- Genstruct 7B Catalyzes Instruction Creations: NousResearch's Genstruct 7B model emerges as a toolkit for crafting instruction datasets from raw text, romanticizing the inspired beginnings from Ada-Instruct.

- Highlighted by Kainan_e, Genstruct posits to streamline training for LLMs, making it a topic of enchantment for developers seeking to enhance instruction generation capabilities.

- VLLMs: Vision Meets Verbiage in Animation: Discussions ventured into training VLLMs for automating animations, where a diffusion-based keyframe in-betweening method was promoted.

- Community concurred that Hermes Pro might have potential in this space, with Verafice leading criticism for more effective solutions.

Modular (Mojo 🔥) Discord

- Mojo's Raspberry Resolve: An issue surfaced with running Mojo on Ubuntu 24.04 on the Raspberry Pi 5, sparking a search for troubleshooting support.

- This situation remained unresolved in the conversation, highlighting a need for community-driven solutions or further dialogue.

- Mojo Expands its Horizons: Discussions revealed Mojo's potential in advancing areas such as Model and simulator-based RL for LLM agents, along with symbolic reasoning and sub-symbolic model steering.

- Engagement centered on these emerging applications, where community members expressed eagerness to collaborate and exchange insights.

- Benchmark Boons & Blunders: Community efforts yielded significant enhancements to the testing and benchmarking framework in Mojo, notably improving performance metrics.

- Despite this progress, challenges such as benchmark failures due to rounding errors and infinite loops during GFlops/s trials underscore the iterative nature of optimization work.

- Nightly Compiler Complexities: The release of Mojo compiler nightly version 2024.7.205 prompted engagements on version control with

modular update nightly/mojo, accompanied by resolved CI transition issues.- Merchandise inquiries led to direct community support, while some members faced challenges relating to nightly/max package updates that were eventually addressed.

- Matrix Multiplication Musings: A flurry of activity centered on

src/maincompilation errors, specificallyDTypePointerinmatrix.mojo, leading to suggestions of stable build usage.- Participants brainstormed improvements to matrix multiplication, proposing vectors such as vectorization, tiling, and integrating algorithms like Strassen and Winograd-Copper.

OpenRouter (Alex Atallah) Discord

- Models Page Makeover: An update on the /models page has been announced, promising new improvements and seeking community feedback here.

- Changes to token sizes for Gemini and PaLM will standardize stats but also alter pricing and context limits; the community will face adjustments.

- Defaulting from Default Models: The Default Model in OpenRouter settings is facing deprecation, with alternatives like model-specific settings or auto router coming to the forefront.

- Custom auth headers for OpenAI API keys are also being phased out, indicating a shift towards newer, more reliable methods of authentication.

- Discord Bot Dialogues Get Lean: Community members shared tips for optimizing conversation bots, emphasizing token-efficient strategies like including only necessary message parts in prompts.

- This opens discussion on balancing model context limits and maintaining engaging conversations, with a nod to the SillyTavern Discord's approach.

- Claude 3.5's Code Quarrels: Intermittent errors with Claude 3.5 are causing a stir, while Claude 3.0 remains unaffected, indicating a possible model-specific issue.

- The community is actively sharing workarounds and awaiting fixes, highlighting the collaborative nature of debugging in the engineering space.

- iOS Frontends Find OpenRouter Fun: Inquiries about iOS apps supporting OpenRouter were met with recommendations, with Pal Chat and Typingmind leading the charge post-bug fixes.

- The engagement on finding diverse frontend platforms suitable for OpenRouter integration suggests a growing ecosystem for mobile AI applications.

OpenAccess AI Collective (axolotl) Discord

- Eager vs Flash: Attention Heats Up for Gemma2: Eager attention is recommended for training Gemma2 models, with the setting modified to 'eager' in AutoModelForCausalLM.from_pretrained() for enhanced performance.

- Configuring YAML files for eager attention received thorough coverage, offering granularity for training Gemma2 to meet performance benchmarks.

- Optimizing Discussion: Assessing Adam-mini and CAME: There's a buzz around integrating CAME and Adam-mini optimizers within axolotl, circling around their lower memory footprints and potential training stability.

- Adam-mini surfaced as a rival to AdamW for memory efficiency, sparking a discussion on its pragmatic use in large model optimization.

- Getting the Measure: Numerical Precision over Prose: A user sought to prioritize precise numerical responses over explanatory text in model outputs, pondering the use of weighted cross-entropy to nudge model behavior.

- While the quest for research on this fine-tuning method is ongoing, the community spotlighted weighted cross-entropy as a promising avenue for enhancing model accuracy.

- Gemma2's Finetuning Frustrations Grapple Gradient Norm Giants: Finetuning woes were shared regarding Gemma2 27b, with reports of high grad_norm values contrasting with smoother training experiences on smaller models like the 9b.

- Lower learning rates and leveraging 'flash attention' were among the proposed solutions to tame the grad_norm behemoths and ease Gemma2's training temperament.

- ORPO's Rigid Rule: Pairs a Must for Training: A member grappled with generating accepted/rejected pairs for ORPO, seeking confirmation on whether both were required for each row in training data.

- The community consensus underscored the critical role of pair generation in ORPO's alignment process, emphasizing its trickiness in practice.

LlamaIndex Discord

- Llama-Leap to Microservices: Mervin Praison designed an in-depth video tutorial on the new llama-agents framework, outlining both high-level concepts and practical implementations.

- The tutorial, widely acknowledged as comprehensive, drills down into the intricacies of translating Python systems to microservices, with community kudos for covering advanced features.

- Knowledge Assistants Get Brainy: The AI Engineer World Fair showcased breakthrough discussions on enhancing knowledge assistants, stressing the need for innovative data modules to boost their efficiency.

- Experts advocated for a shift towards sophisticated data handling, a vital leap from naive RAG structures to next-gen knowledge deepening.

- Microsoft's Graph RAG Unpacked: Microsoft's innovative Graph RAG Architecture made a splash as it hit the community, envisioned as a flexible, graph-based RAG system.

- The reveal triggered a wave of curiosity and eagerness among professionals, with many keen to dissect its potential for modeling architectures.

- Pinecone's Pickle with Metadata: Technical challenges surfaced with Pinecone's metadata limits in handling DocumentSummaryIndex information, compelling some to code workarounds.

- The troubleshooting sparked broader talks on alternative frameworks, underscoring Pinecone's rigidity with metadata handling, inspiring calls for a dynamic metadata schema.

- Chatbots: The RAG-tag Revolution: AI engineers broached the development of a RAG-based chatbot to tap into company data across diverse SQL and NoSQL platforms, harnessing LlamaHub's database readers.

- The dialog opened up strategies for formulating user-text queries, with knowledge sharing on database routing demonstrating the community's collaborative troubleshooting ethos.

tinygrad (George Hotz) Discord

- Graph Grafting Gains Ground: Discourse in Tinygrad centered on enhancing the existing

graph rewrite followup, speedup / different algorithm, with consensus yet to form on a clear favorite algorithm. Egraphs/muGraphs have been tabled for future attention.- Although not Turing complete, members boldly bracketed the rule-based approach for its ease of reasoning. A call for embedding more algorithms like the scheduler in graph rewrite was echoed.

- Revealing the Mystique of 'image dtype': Tinygrad's 'image dtype' sparked debates over its ubiquitous yet cryptic presence across the codebase; no specific actions to eliminate it have been documented.

- Queries such as 'Did you try to remove it?' circled without landing, leaving the discussion hanging without detailed exploration or outcomes.

- Whispers of Error Whisperers: Tinygrad's recurrent error RuntimeError: failed to render UOps.UNMUL underscored an urgent need to overhaul error messaging as Tinygrad navigates towards its v1.0 milestone.

- George Hotz suggested it was more an assert issue, stating it should never happen, while a community member geared up to attack the issue via a PR with a failing test case.

- Memory Mayhem and Gradient Wrangling: Lively discussions pinpointed the CUDA memory overflow during gradient accumulation in Tinygrad, with users exchanging strategies such as reducing loss each step and managing gradient memory.

Tensor.no_grad = Trueemerged as Tinygrad's torchbearer against gradient calculations during inference, drawing parallels withtorch.no_grad(), anda = a - lr * a.gradas the modus operand becausea -= lr * a.gradtriggers assertions.

- Documentation Dilemmas Demand Deliberation: Championing clarity, participants in Tinygrad channels appealed for richer documentation, especially covering advanced topics like TinyJit and meticulous gradient accumulation.

- As developers navigate the balance between innovation and user support, initiatives for crafting comprehensive guides and vivid examples to illuminate Tinygrad's darker corners gained traction.

LangChain AI Discord

- RAG-Tag Matchup: HydeRetrieval vs. MultiQueryRetrieval: A heated conversation unfolded around the superiority of retrieval strategies, with HydeRetrieval and MultiQueryRetrieval pitted against each other. Several users chimed in, one notably experiencing blank slates with MultiQueryRetrieval, triggering a discussion on potential fallbacks and fixes.

- The debate traversed to the realm of sharded databases, with a curious soul seeking wisdom on implementing such a system. Insights were shared, with a nod toward serverless MongoDB Atlas, though the community's thirst for specifics on shard-query mapping remained unquenched.

- API Aspirations & File Upload Queries: In the domain of LangServe, a conundrum arose about intertwining fastapi-users with langserve, aiming to shield endpoints with user-specific logic. The echo chamber was quiet, with no guiding lights providing a path forward.

- Another LangChain aficionado sought guidance on enabling file uploads, aspiring to break free from the confines of static file paths. A technical crowd shared snippets and insights, yet a step-by-step solution remained just out of grasp, lingering in the digital fog.

- LangChain Chatbots: Agents of Action: Among the LangChain templars, a new quest was to imbue chatbots with the prowess to schedule demos and bridge human connections. A response emerged, outlining using Agents and AgentDirector, flourishing with the potential of debugging through the lens of LangSmith.

- The lore expanded with requests for Python incantations to bestow upon the chatbots the skill of action-taking. Responses cascaded, rich with methodological steps and tutorials, stirring the pot of communal knowledge for those who dare to action-enable their digital creations.

- CriticGPT’s Crusade and RAFT’s Revelation: OpenAI’s CriticGPT stepped into the limelight with a video exposé, dissecting its approach to refining GPT-4's outputs and raising the bar for code generation precision. Keen minds absorbed the wisdom, contemplating the progress marked by the paper-linked video review.

- The forward-thinkers didn't rest, probing the intriguing depths of RAFT methodology, sharing scholarly articles and contrasting it against the age-old RAG mechanics. A beckoning call for collaboration on chatbots using LLM cast wide, with an open invitation for hands to join in innovating.

Latent Space Discord

- Runway Revolution with Gen 3 Alpha: Runway unveiled Gen-3 Alpha Text to Video, a tool for high-fidelity, fast, and controllable video generation, accessible to all users. Experience it here or check the announcement here.

- A head-to-head comparison with SORA by the community underscores Gen-3 Alpha's unique accessibility for immediate use, offering a glimpse into the future of video generation. You can see the side-by-side review here.

- Sonnet Syncs with Artifacts: The fusion of Sonnet with Artifacts is praised for boosting efficiency in visualizing and manipulating process diagrams, promoting a more intuitive and rapid design workflow.

- Enthusiasts express admiration for the ability to synthesize visual concepts at the speed of thought, negating the tedium of manual adjustments and streamlining the creative process.

- Figma Debunks Design Data Doubts: Figma responded to user concerns by clarifying its 'Make Design' feature is not trained on proprietary Figma content, with the official statement available here.

- Despite Figma’s explanations, community discourse speculates over recognizable elements in outputs such as the Apple's Weather app, stirring an ongoing debate on AI-generated design ethics.

- Microsoft's Magnified Phi-3 Mini: Microsoft enhanced the Phi-3 Mini, pushing the limits of its capabilities in code comprehension and multi-language support for more effective AI development. Check out the update here.

- Improvements span both the 4K and 128K models, with a focus on enriching the context and structured response aptitude, heralding advancements in the nuanced understanding of code.

- Magic Dev's Market Magic: Startup Magic Dev, despite lacking concrete product or revenue, is targeting an ambitious $1.5 billion valuation, spotlighting the fervent market speculation in AI. Details discussed on Reuters.

- The valuation goals, set by a lean 20-person team, points to the AI investment sphere's bullish tendencies and renews concerns about a potential bubble in the absence of solid fundamentals.

Mozilla AI Discord

- Llama.cpp on Lightweight Hardware? Think Again: Discussions on running llama.cpp revealed that neither iPhone 13 nor a Raspberry Pi Zero W meet the 64-bit system prerequisite for successful operation, with specific models and memory specs essential.

- Community members pinpointed that despite the allure of portable devices, models like the Raspberry Pi Zero fall short due to system and memory constraints, prompting a reevaluation of suitable hardware.

- Llamafile v0.8.9 Takes a Leap with Android Inclusion: Mozilla announced the launch of llamafile v0.8.9 with enhanced Android compatibility and the Gemma2 model more closely mirroring Google's framework.

- Feedback heralded the version's alignment improvements for Gemma2, suggesting it could now rival larger models according to public assessments.

- Mxbai Model Quirk Fixed with a Simple Switch: A peculiar behavior of the mxbai-embed-large-v1 model, returning identical vectors for varied text inputs, was resolved by changing the input key from 'text' to 'content'.

- Continued refinement was signaled by the community's recommendation to update the model on Hugging Face for clarity and ease of future deployments.

- Navigating the Hardware Maze for Large Language Models: AI enthusiasts convened to determine an optimal hardware setup for running heavyweight language models, with a consensus on VRAM heft and CPU memory prowess dominating the dialogue.

- Practical insights from users favored 3090/4090 GPUs for mainstream usage while advocating for A6000/RTX 6000 workstations for those pushing the boundary, emphasizing the trial and balance of a conducive hardware configuration.

- The Great CPU vs GPU Debate for Model Training: GPUs remain the preferred platform over CPUs for model training, owning to their computational prowess, as exhibited by the slow and inadequate processing capabilities of multicore CPUs when tasked with large models.

- Speculations about the feasibility of CPU-based training brewed, with community testing using llm.c to train models like GPT-2, highlighting the stark limitations of CPUs in large-scale learning applications.

OpenInterpreter Discord

- Windows Woes & Wins: Users grappled with challenges in Building 01 on Windows, with piranha__ unsuccessfully searching for guidance, while stumbling upon a potentially salvaging pull request that promises to update the installation guide.

- The discussed pull request compiles past users' attempts to conquer the installation ordeal on Windows, potentially ironing out wrinkles for future endeavours.

- Troubleshooting Concurrency in OI: chaichaikuaile_05801 discussed the perplexities of concurrency and resource isolation with OI deployments, debating over the benefits of OI Multiple Instances versus other contextual solutions.

- The exchange considered the use of

.reset()to circumvent code-sharing snags, concluding that disparate instances escape the turmoil of shared Python execution environments.

- The exchange considered the use of

- Pictorial Pickles in OI: A poignant plea by chaichaikuaile_05801 highlighted the hurdles faced when displaying images through OI's

MatPlotLib.show(), referencing an open GitHub issue showing the discrepancies between versions.- As the user navigates between versions 0.1.18 and 0.2.5, they call for future versions to bolster image return functionalities, indicating a thirst for visualization improvements.

- In Quest of Quantum Local AI Agents: blurrybboi sought out Local AI Agents with the prowess to prowl the web beyond basic queries, targeting those that can sift through chaff to cherry-pick the prime output.

- Despite the entreaty, responses were nil, leaving unanswered the question of AI agents' aptitude in advanced online filtration.

Torchtune Discord

- WandB Wins for Workflow: A user celebrated their win in finetuning a model successfully, overcoming the need for a personal GPU by leveraging online resources, and embraced WandB for better training insights.

- Community discussions concluded that streamlining YAML configurations and adopting WandB's logger could simplify the training process, as per the shared WandB logger documentation.

- AMD's AI Antics Assessed: Members exchanged experiences on utilizing AMD GPUs for AI purposes, recommending NVIDIA alternatives despite some having success with ROCm and torchtune, as detailed in a Reddit guide.

- A user expressed their struggled journey but eventual success with their 6900 XT, highlighting community support in troubleshooting torchtune on AMD hardware.

- HuggingFace Hug for Torchtune Models: An inquiry was made into converting torchtune models to HuggingFace's format, indicating the user's intent to merge toolsets.

- While specifics of the conversion process were not discussed, participants shared naming conventions and integration strategies, showing the engineering efforts in model compatibility.

Cohere Discord

- Multi-Step Marvels with Cohere's Toolkit: Toolkit multi-step capabilities have been confirmed by users, featuring enabled frontend and backend support, with shared examples in action.

- Discussions also applauded Sandra Kublik's session at AI Engineer in SF, and emphasized the sunset of the LLM-UNIVERSITY channel, directing users to Cohere's API documentation for continued support.

- Slack Bot's Speedy Sync-Up: A user created a Cohere Slack bot expressing its ease of use in the workspace, praised by the community with immediate reactions.

- The conversation underlined the importance of fast model processing due to Slack's 3-second rule for bots, spotlighting the need for responsive AI models.

- London Calling for AI Enthusiasts: An announcement for Cohere For AI's upcoming London event on July 10th focuses on multilingual AI, with exciting activities like lightning talks and the kickoff of Expedition Aya.

- Expedition Aya is a global challenge pushing the boundaries of multilingual AI models, offering teams exclusive resources, API credits, and the chance to win swag and prizes for notable contributions.

LAION Discord

- Model Evaluation Maze: An article detailed the complex landscape of evaluating finetuned LLMs for structured data extraction, emphasizing the intricate metric systems and the tedium of the process without a solid service to maintain evaluations. The focus was on the accuracy metric and the hidden layers of code that often slow down the work.

- The user highlighted the growing challenge of LM evaluation, citing resource demands and difficulty in preserving the integrity of the evaluations over time. No additional resources or diagrams were shared.

- Resounding phi-CTNL Triumph: A groundbreaking paper introduced phi-CTNL, a lean 1 million parameter LLM, showcasing its perfect scores across various academic benchmarks and a grokking-like ability for canary prediction. The paper's abstract presents the full details of the model's prowess.

- This transformer-based LLM, pretrained on an exclusively curated dataset, stands out for its capacity to predict evaluation benchmarks with pinpoint accuracy, sparking discussion across the AI engineering community about the potential applications of such a nimble yet powerful model.

- AIW+ Problem Cracked: One user presented evidence that a correct solution to the AIW+ problem has been authenticated, suggesting the use of a diagram for a formally checked answer. They cited an accurate response from the model Claude 3 Opus as validation.

- The solution's confirmation sparked a discourse on the assumptions utilized in problem statements and how they directly influence outcomes. Investigators are urged to inspect the logic that underpins these AI puzzles closely.

- Terminator Architects: A new model architecture named 'Terminator' has been proposed, featuring a radical departure from traditional designs by eliminating residuals, dot product attention, and normalization.

- The Terminator model was shared in the community, with a paper link provided for those interested in exploring its unique structure and potential implications for model developments.

LLM Finetuning (Hamel + Dan) Discord

- Chainlit Cooks Up a Sound Solution: An AI engineer combined SileroVAD for voice detection with whisper-fast for transcription, advising peers with a Chainlit audio assistant example. TTS alternatives like elevenlabs (turbo), playht, and deepgram were also reviewed for optimizing audio workflows.

- Further discussion revolved around knowledge graphs and the utilization of Lang Graph in AI, with community members actively seeking deeper insights into embedding graph technologies into AI systems.

- Dask's Dive into Data Dimensionality: Engaging with large datasets, particularly from the USPTO Kaggle competition, led to out of memory (OOM) errors when using Dask. The talk turned towards strategies for using Modal to efficiently execute Dask jobs, aiming to manage massive data volumes.

- One practitioner queried the group about success stories of running Dask jobs on Modal, hinting at Modal's potential to better accommodate high-demand computational workloads.

- Autotrainer or Not? That's the Question: Autotrainer's role was questioned, with ambiguity about whether it pertained to Axolotl's features or Huggingface autotrain. The community engaged in an effort to pinpoint its association.

- The source of Autotrainer remained unclear, with at least one guild member seeking clarification and later conjecturing a connection to Huggingface autotain after some investigation.

- OpenAI's Generosity Grapples Guilt: OpenAI's pricing caused a mix of humor and guilt among users, with one lamenting their inability to exhaust their $500 credits in the provided 3-month period.

- This sentiment was shared by another member, who amusingly adopted an upside-down face emoji to express their mixed feelings over the undemanding credit consumption.

- The Slide Deck Side-Quest: Dialogue ensued over the location of a video's slide deck—with members like Remi1054 and jt37 engaging in a hunt for the elusive presentation materials, often hosted on Maven.

- The pursuit continued as hamelh signified that not all speakers, like Jo, readily share their decks, compelling practitioners to directly request access.

AI Stack Devs (Yoko Li) Discord

- Hexagen.World Unveils New Locales: Fresh locations from Hexagen.World have been introduced, expanding the digital terrain for users.

- The announcement sparked interest among members for the potential uses and developments tied to these new additions to the Hexagen.World.

- AI Town Docks at Docker's Shore: Community voices call for a Docker port of AI Town, discussing benefits for enhanced portability and ease of setup.

- A proactive member shared a GitHub guide for setting up AI Town on Windows using WSL, with suggestions to integrate it into the main repository.

Interconnects (Nathan Lambert) Discord

- Apple Nabs OpenAI Seat with Panache: Phil Schiller is set to join the OpenAI board as an observer, giving Apple an edge with a strategic partnership aimed at enhancing their Apple Intelligence offerings, outlined here.

- The AI community bubbled with reactions as Microsoft's hefty investment contrasted sharply with Apple's savvy move, sparking debate and a bit of schadenfreude over Microsoft's disadvantage as seen in this discussion.

- Tech Titans Tussle Over OpenAI Ties: Conversations among the community delve into the business dynamics of OpenAI partnerships, examining Apple's strategic win in gaining an observer seat vis-à-vis Microsoft's direct financial investment.

- Insights and jibes threaded through the discourse, as participants compared the 'observer seat deal' to a game of chess where Apple checkmates with minimal expenditure, while Microsoft's massive outlay drew both admiration and chuckles from onlookers.

Datasette - LLM (@SimonW) Discord

- Web Wins with Wisdom: Be Better, Not Smaller analyzes the missteps of early mobile internet services like WAP, likening them to modern AI products. It explains the limitations of pre-iPhone era mobile browsing and suggests a better approach for current products.

- The article encourages current AI developers to prioritize enhancing user experiences over simply fitting into smaller platforms. Mobile browsing was once like "reading the internet by peering through a keyhole."

- Governing Gifts Gather Gawkers: A Scoop article reveals the array of unusual gifts, such as crocodile insurance and gold medallions, received by U.S. officials from foreign entities.

- It discusses the difficulties in the data management of these gifts, pointing out their often-unstructured format and storage issues, which reflects a larger problem in governmental data handling. "These foreign gifts really are data."

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!