[AINews] GPT4o August + 100% Structured Outputs for All (GPT4o August edition)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Pydantic/Zod is all you need.

AI News for 8/5/2024-8/6/2024. We checked 7 subreddits, 384 Twitters and 28 Discords (249 channels, and 2423 messages) for you. Estimated reading time saved (at 200wpm): 247 minutes. You can now tag @smol_ai for AINews discussions!

It's new frontier model day again! (Blog, Simonw writeup)

As we did for 4o-mini, there are 2 issues of the newsletter today run with the exact same prompts - you are reading the one with all channel summaries generated by gpt-4o-2024-08-06, the newest 4o model released today with 16k context (4x longer but still less than the alpha Long Output model) and 33-50% lower pricing than 4o-May.

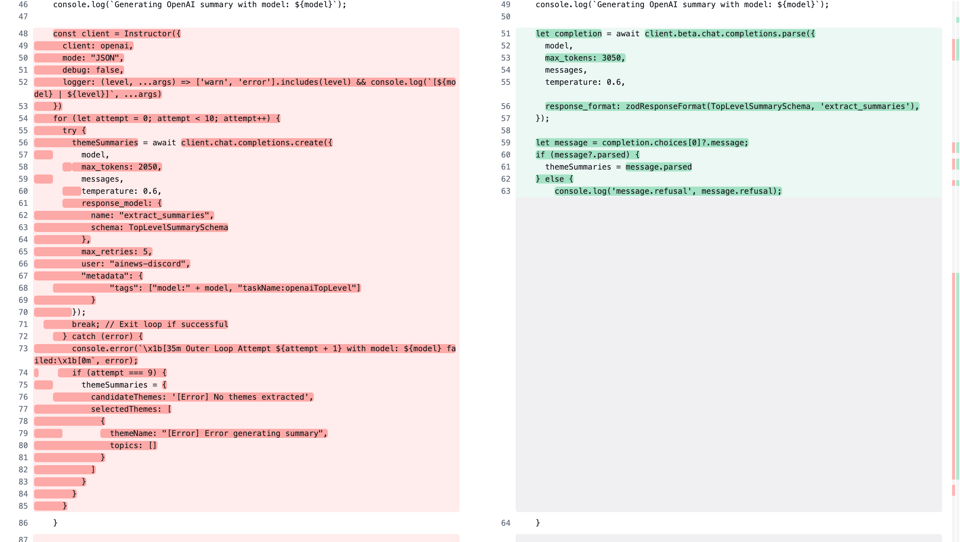

We happen to run AINews with structured output via the Instructor library anyway (doing "chain of thought summaries"), so swapping it out saved us some lines of code and more importantly saved some money in retries (since OpenAI does constrained grammar sampling, you no longer spend any retry money/time on poorly formed json)

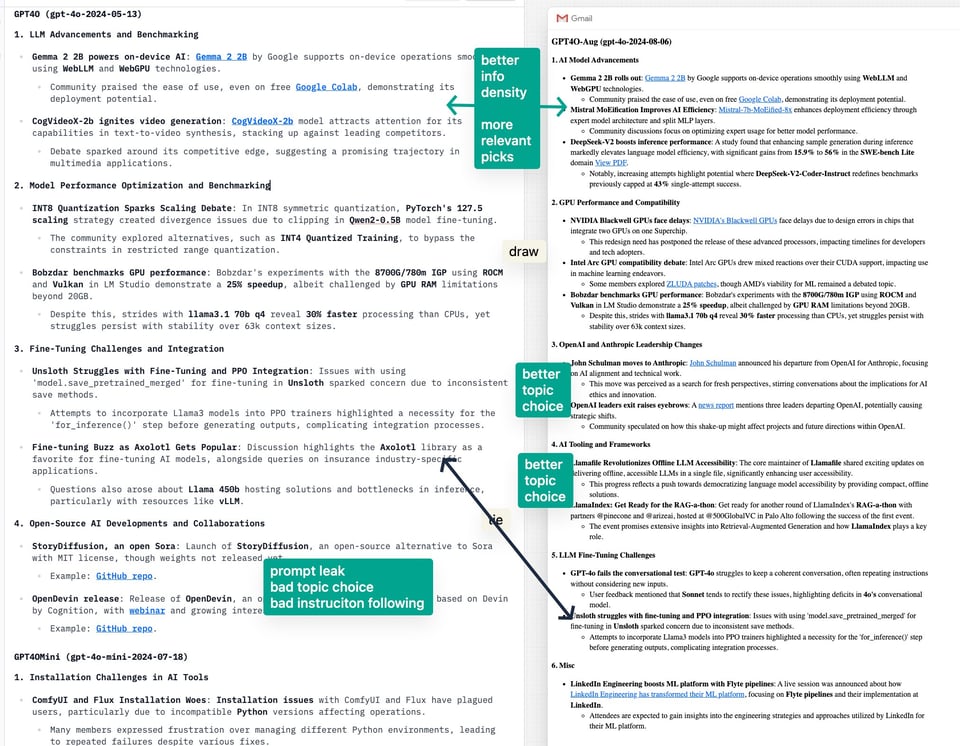

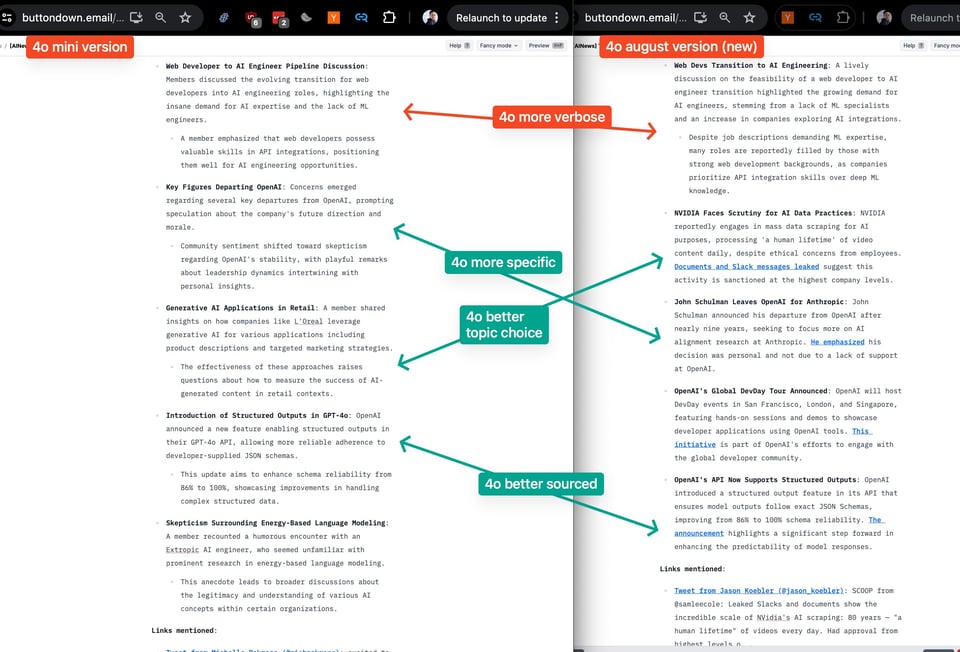

Based on our summary vibe check and prompts, the new model seems strictly better than 4o-May (example picked here, but you can see the two emails you got today for yourself):

and mostly better than 4o-mini (which we last concluded was about equivalent to but way cheaper than 4o-May):

New Structured Output API aside, which applies to all models, we think the unexpected 4o model bump is a good thing - 4o August is effectively GPT 4.6 or 4.7 depending how you are counting. We don't have any publicly reported ELO or benchmark metrics on this model yet, but we are willing to bet that this one will be a sleeper hit - perhaps even a sneaky launch of Q*/Strawberry?

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Updates and Benchmarks

- Llama 3.1: Meta released Llama 3.1, a 405-billion parameter large language model that surpasses GPT-4 and Claude 3.5 Sonnet on several benchmarks. The Llama Impact Grant program is expanding to support organizations worldwide in building with Llama.

- Gemini 1.5 Pro: Google DeepMind quietly released Gemini 1.5 Pro, which reportedly outperformed GPT-4o, Claude-3.5, and Llama 3.1 on LMSYS and reached #1 on the Vision Leaderboard. It excels in multi-lingual tasks and technical areas.

- Yi-Large Turbo: Introduced as a powerful, cost-effective upgrade to Yi-Large, priced at $0.19 per 1M tokens for input and output.

AI Hardware and Infrastructure

- NVIDIA H100 GPUs: John Carmack shared insights on H100 performance, noting that 100,000 H100s are more powerful than all 30 million current generation Xboxes combined for AI workloads.

- Groq LPUs: Jonathan Ross announced plans to deploy 108,000 LPUs into production by end of Q1 2025, expanding cloud and core engineering teams.

AI Development and Tools

- RAG (Retrieval-Augmented Generation): Discussions on the importance of RAG for integrating human input and enhancing AI systems' capabilities.

- JamAI Base: A new platform for building Mixture of Agents (MoA) systems without coding, leveraging Task Optimizers and Execution Engines.

- LangSmith: New filtering capabilities for traces in LangSmith, allowing more precise filtering based on JSON key-value pairs.

AI Research and Techniques

- PEER (Parameter Efficient Expert Retrieval): A new architecture from Google DeepMind using over a million small "experts" instead of large feedforward layers in transformer models.

- POA (Pre-training Once for All): A novel tri-branch self-supervised training framework enabling pre-training of models of multiple sizes simultaneously.

- Similarity-based Example Selection: Research showing significant improvements in low-resource machine translation using similarity-based in-context example selection.

AI Ethics and Societal Impact

- Data Monopoly Concerns: Discussions about the potential for data monopolies if downloading content from internet services becomes illegal, leading to vendor lock-in.

- AI Safety: Debates on the nature of AI intelligence and safety measures, with Yann LeCun arguing against some common AI risk narratives.

Practical AI Applications

- Code Generation: Observations on the effectiveness of AI for code generation, with examples of researchers using Claude for coding despite physical limitations.

- Model Selection Guide: Recommendations for choosing AI models for various tasks, including code generation, search, document analysis, and creative writing.

AI Community and Education

- AI and Games Textbook: Julian Togelius and Georgios Yannakakis released a draft of the second edition of their textbook on AI and Games, seeking community input for improvements.

- AI Education Programs: Google DeepMind celebrated the first graduates from the AI for Science Master's program at AIMS, providing scholarships and resources.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Architectural Innovations in AI Models

- Flux's Architecture diagram :)Don't think there's a paper so had a quick look through their code. Might be useful for understanding current Diffusion architectures (Score: 461, Comments: 35): Flux's architecture diagram for diffusion models provides insight into current diffusion architectures without an accompanying paper. The diagram, derived from an examination of Flux's code, offers a visual representation of the model's structure and components, potentially aiding in the understanding of contemporary diffusion model designs.

Theme 2. Advancements in Open-Source AI Models

- Why is nobody taking about InternLM 2.5 20B? (Score: 247, Comments: 98): InternLM 2.5 20B demonstrates impressive performance in benchmarks, surpassing Gemma 2 27B and approaching Llama 3.1 70B. The model achieves a remarkable 64.7 score on MATH 0 shot, close to 3.5 Sonnet's 71.1, and can potentially run on a 4090 GPU with 8-bit quantization.

-

Shower thought: What if we made V2 versions of Magnum 32b & 12b (spoiler: we did!) (Score: 54, Comments: 15): Magnum-32b v2 and Magnum-12b v2 models have been released, with improvements based on user feedback. The models are available in both GGUF and EXL2 formats on Hugging Face, and the developers are seeking further input from users to refine the models.

- Users inquired about potential Mistral-based models and discussed optimal sampler settings for the 32b V1 model in applications like Koboldcpp and Textgenui.

- The model's intended use was humorously described as "foxgirl studies," while others noted multilanguage performance issues in the v1 model, speculating on differences between 72B and 32B versions.

- Some users reported issues with the 12B v2 8bpw exl2 model, experiencing nonsense sentences and intense hallucination unaffected by prompt templates or sample settings changes.

Theme 3. Novel Applications and Capabilities of LLMs

- We’re making a game where LLM's power spell and world generation (Score: 413, Comments: 81): The developers are creating a game that utilizes Large Language Models (LLMs) for dynamic spell and world generation. This approach allows for the creation of unique spells and procedurally generated worlds based on player input, potentially offering a more personalized and immersive gaming experience. While specific details about the game's mechanics or release are not provided, the concept demonstrates an innovative application of AI in game development.

-

Gemini 1.5 Pro Experimental 0801 is strangely uncensored for a closed source model (Score: 54, Comments: 23): Google's Gemini 1.5 Pro Experimental 0801 model has demonstrated surprisingly uncensored capabilities when added to the UGI-Leaderboard. With safety settings set to "Block none" and a specific system prompt, the model was willing to provide responses to controversial and potentially illegal queries, though it was slightly less willing (W/10) than the average model on the leaderboard.

- Users reported mixed results with Gemini 1.5 Pro Experimental 0801's uncensored capabilities. Some found it denied all requests, while others successfully prompted it to answer queries about piracy, suicide methods, and drug manufacturing.

- The model demonstrated inconsistent behavior with sexual content, refusing some requests but agreeing to write pornographic stories when prompted differently. Users noted potential risks to their Google accounts when testing these capabilities.

- In the SillyTavern staging branch, Gemini 1.5 Pro Experimental 0801 showed less filtering compared to other versions. Users also found it to be more intelligent than the regular Gemini 1.5 Pro, which was described as "schizo at times".

Theme 4. Leadership Shifts in Major AI Companies

-

Will Sam "Spook" Uncle Sam in order to shut down Llama 4? (Score: 59, Comments: 31): Sam Altman's potential private demo of GPT-5 to government regulators is speculated to potentially influence restrictions on open-source AI developments, particularly Llama 4. This hypothetical scenario suggests Altman might intentionally alarm regulators to limit competition from open-source models, potentially giving his company an advantage in the evolving open LLM era.

- Meta could potentially train open-source LLMs outside the US, with Mistral offering competitive models. However, the EU AI Act has introduced significant documentation requirements, potentially hindering generative model development in Europe.

- In an unexpected turn, Zuckerberg is advocating for open-source AI protection, with the government indicating they will not restrict open-source AI. Some argue this stance benefits all non-OpenAI entities in challenging OpenAI's perceived monopoly.

- FTC head Lina Khan is reportedly pro-open weight models, potentially alleviating concerns about restrictions. The regulatory community seems to be treating AI software more like the early 90s internet than encryption, suggesting a less restrictive approach.

- OpenAI Co-Founders Schulman and Brockman Step Back. Schulman leaving for Anthropic. (Score: 317, Comments: 94): OpenAI co-founders Adam D'Angelo and Ilya Sutskever are stepping back from their roles, with Schulman leaving to join Anthropic. This development follows the recent controversy surrounding Sam Altman's brief dismissal and reinstatement as CEO, which led to significant internal changes at OpenAI. The departure of these key figures marks a notable shift in OpenAI's leadership structure and potentially its strategic direction.

- Concerns raised about OpenAI's internal issues, with speculation about problems with GPT5/strawberry/Q* development or Sam Altman's leadership style. Some users attribute the departures to different factors for each individual.

- Discussion about the coincidental names of key OpenAI figures (Schulman, Brockman, Altman), with humorous comments about AI-related surnames and comparisons to Hideo Kojima's character naming style.

- Users express mixed feelings about Anthropic, praising Claude while criticizing the company's perceived "megalomaniac complex" and censorship practices. Debate ensues about the pros and cons of having "businesspeople" versus current leadership in the AI industry.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Research and Development

- Google DeepMind advances multimodal learning: A Google DeepMind paper demonstrates how data curation via joint example selection can accelerate multimodal learning (/r/MachineLearning).

- Microsoft's MInference speeds up long-context inference: Microsoft's MInference technique enables inference of up to millions of tokens for long-context tasks while maintaining accuracy (/r/MachineLearning).

- Scaling synthetic data creation with web-curated personas: A paper on scaling synthetic data creation leverages 1 billion personas curated from web data to generate diverse synthetic data (/r/MachineLearning).

- NVIDIA allegedly scraping massive amounts of video data: Leaked documents suggest NVIDIA is scraping "a human lifetime" of videos daily to train AI models (/r/singularity).

AI Model Releases and Improvements

- Salesforce releases xLAM-1b model: The 1 billion parameter xLAM-1b model achieves 70% accuracy in function calling, surpassing GPT-3.5 despite its smaller size (/r/LocalLLaMA).

- Phi-3 Mini updated with function calling: Rubra AI released an updated Phi-3 Mini model with function calling capabilities, competitive with Mistral-7b v3 (/r/LocalLLaMA).

AI Industry News and Developments

- Major departures from OpenAI: Three key leaders are leaving OpenAI: President Greg Brockman (extended leave), John Schulman (joining Anthropic), and product leader Peter Deng (/r/OpenAI, /r/singularity).

- Elon Musk files lawsuit against OpenAI: Musk has filed a new lawsuit against OpenAI and Sam Altman (/r/singularity).

- Anthropic founder discusses AI development: An Anthropic founder suggests that even if AI development stopped now, there would still be years to decades of improvements from existing capabilities (/r/singularity).

Neurotech and Brain-Computer Interfaces

- Elon Musk makes claims about Neuralink: Musk predicts that brain chip patients will outperform pro gamers within 1-2 years and talks about giving people "superpowers" (/r/singularity).

Memes and Humor

- A meme comparing a journalist's contrasting views on AI from 11 years apart (/r/singularity).

- A humorous image speculating about the year 2030 (/r/singularity).

AI Discord Recap

A summary of Summaries of Summaries

Claude 3 Sonnet

1. LLM Advancements and Benchmarking

- *Llama 3 Tops Leaderboards: Llama 3 from Meta has rapidly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus* in over 50,000 matchups.

- Example comparisons highlighted model performance across benchmarks like AlignBench and MT-Bench, with DeepSeek-V2 boasting 236B parameters and surpassing GPT-4 in certain areas.

- *New Open Models Advance State of the Art: Novel models like Granite-8B-Code-Instruct from IBM enhance instruction following for code tasks, while DeepSeek-V2 introduces a massive 236B parameter* model.

- Leaderboard comparisons across AlignBench and MT-Bench revealed DeepSeek-V2 outperforming GPT-4 in certain areas, sparking discussions on the evolving state of the art.

2. Model Performance Optimization and Inference

- *Quantization Techniques Reduce Model Footprint: [Quantization] techniques like AQLM and QuaRot aim to enable running large language models (LLMs*) on individual GPUs while maintaining performance.

- Example: AQLM project demonstrates running the Llama-3-70b model on an RTX3090 GPU.

- **DMC Boosts Throughput by 370% **: Efforts to boost transformer efficiency through methods like Dynamic Memory Compression (DMC) show potential for improving throughput by up to 370% on H100 GPUs.

- Example: DMC paper by

@p_nawrotexplores the DMC technique.

- Example: DMC paper by

- *Parallel Decoding with Consistency LLMs: Techniques like Consistency LLMs* explore parallel token decoding for reduced inference latency.

- The SARATHI framework also addresses inefficiencies in LLM inference by employing chunked-prefills and decode-maximal batching to improve GPU utilization.

- *CUDA Kernels Accelerate Operations: Discussions focused on optimizing CUDA operations like fusing element-wise operations, using the Thrust library's

transform* for near-bandwidth-saturating performance.- Example: Thrust documentation highlights relevant CUDA kernel functions.

3. Open-Source AI Frameworks and Community Efforts

- *Axolotl Supports Diverse Dataset Formats*: Axolotl now supports diverse dataset formats for instruction tuning and pre-training LLMs.

- The community celebrated Axolotl's increasing capabilities for open-source model development and fine-tuning.

- *LlamaIndex Integrates Andrew Ng Course: LlamaIndex* announces a new course on building agentic RAG systems with Andrew Ng's DeepLearning.ai

- The course highlights LlamaIndex's role in developing retrieval-augmented generation (RAG) systems for enterprise applications.

- *RefuelLLM-2 Optimized for 'Unsexy' Tasks: RefuelLLM-2 is open-sourced, claiming to be the best LLM for "unsexy data tasks"*.

- The community discussed RefuelLLM-2's performance and applications across diverse domains.

- *Mojo Teases Python Integration and Accelerators: Modular's deep dive* teases Mojo's potential for Python integration and AI extensions like

_bfloat16_.- Custom accelerators like PCIe cards with systolic arrays are also considered future candidates for Mojo upon its open-source release.

4. Multimodal AI and Generative Modeling Innovations

- *Idefics2 and CodeGemma Refine Capabilities: Idefics2 8B Chatty focuses on elevated chat interactions, while CodeGemma 1.1 7B* refines coding abilities.

- These new multimodal models showcase advancements in areas like conversational AI and code generation.

- *Phi3 Brings AI Chatbots to WebGPU: The Phi 3* model brings powerful AI chatbots to browsers via WebGPU.

- This advancement enables private, on-device AI interactions through the WebGPU platform.

- *IC-Light Improves Image Relighting: The open-source IC-Light* project focuses on improving image relighting techniques.

- Community members shared resources and techniques for leveraging IC-Light in tools like ComfyUI.

5. Fine-tuning Challenges and Prompt Engineering Strategies

- *Axolotl Prompting Insights: The importance of prompt design* and usage of correct templates, including end-of-text tokens, was highlighted for influencing model performance during fine-tuning and evaluation.

- Example: Axolotl prompters.py showcases prompt engineering techniques.

- *Logit Bias for Prompt Control: Strategies for prompt engineering like splitting complex tasks into multiple prompts and investigating logit bias* were discussed for more control over outputs.

- Example: OpenAI logit bias guide explains techniques.

- *

Token for Retrieval : Teaching LLMs to use the<RET>token for information retrieval* when uncertain can improve performance on infrequent queries.- Example: ArXiv paper introduces this technique.

Claude 3.5 Sonnet

1. LLM Advancements and Benchmarking

- DeepSeek-V2 Challenges GPT-4 on Benchmarks: DeepSeek-V2, a new 236B parameter model, has outperformed GPT-4 on benchmarks like AlignBench and MT-Bench, showcasing significant advancements in large language model capabilities.

- The model's performance has sparked discussions about its potential impact on the AI landscape, with community members analyzing its strengths across various tasks and domains.

- John Schulman's Strategic Move to Anthropic: John Schulman, co-founder of OpenAI, announced his departure to join Anthropic, citing a desire to focus more deeply on AI alignment and technical work.

- This move follows recent restructuring at OpenAI, including the disbandment of their superalignment team, and has sparked discussions about the future directions of AI safety research and development.

- Gemma 2 2B: Google's Compact Powerhouse: Google released Gemma 2 2B, a 2.6B parameter model designed for efficient on-device use, compatible with platforms like WebLLM and WebGPU.

- The model's release has been met with enthusiasm, particularly for its ability to run smoothly on free platforms like Google Colab, demonstrating the growing accessibility of powerful AI models.

2. Inference Optimization and Hardware Advancements

- Cublas hgemm Boosts Windows Performance: The cublas hgemm library has been made compatible with Windows, achieving up to 315 tflops on a 4090 GPU compared to 166 tflops for torch nn.Linear, significantly enhancing performance for AI tasks.

- Users reported achieving around 2.4 it/s for flux on a 4090, marking a substantial improvement in inference speed and efficiency for large language models on consumer hardware.

- Aurora Supercomputer Eyes ExaFLOP Milestone: The Aurora supercomputer at Argonne National Laboratory is expected to surpass 2 ExaFLOPS after performance optimizations, potentially becoming the fastest supercomputer globally.

- Discussions highlighted Aurora's unique Intel GPU architecture, supporting tensor core instructions that output 16x8 matrices, sparking interest in its potential for AI and scientific computing applications.

- ZeRO++ Slashes GPU Communication Overhead: ZeRO++, a new optimization technique, promises to reduce communication overhead by 4x for large model training on GPUs, significantly improving training efficiency.

- This advancement is particularly relevant for distributed AI training setups, potentially enabling faster and more cost-effective training of massive language models.

3. Open Source AI and Community Collaborations

- SB1047 Sparks Open Source AI Debate: An open letter opposing SB1047, the AI Safety Act, is circulating, warning that it could negatively impact open-source research and innovation by potentially banning open models and threatening academic freedom.

- The community is divided, with some supporting regulation for AI safety, while others, including companies like Anthropic, caution against stifling innovation and suggest the bill may have unintended negative consequences on academic and economic fronts.

- Wiseflow: Open-Source Data Mining Tool: Wiseflow, an open-source information mining tool, was introduced to efficiently extract and categorize data from various online sources, including websites and social platforms.

- The tool has sparked interest in the AI community, with suggestions to integrate it with other open-source projects like Golden Ret to create dynamic knowledge bases for AI applications.

- AgentGenesis Boosts AI Development: AgentGenesis, an open-source AI component library, was launched to provide developers with copy-paste code snippets for Gen AI applications, promising a 10x boost in development efficiency.

- The project, available under an MIT license, features a comprehensive code library with templates for RAG flows and QnA bots, and is actively seeking contributors to enhance its capabilities.

4. Multimodal AI and Creative Applications

- CogVideoX-2b: A New Frontier in Video Synthesis: The release of CogVideoX-2b, a new text-to-video synthesis model, has attracted attention for its capabilities in generating video content from textual descriptions.

- Initial reviews suggest that CogVideoX-2b is competitive with leading models in the field, sparking discussions about its potential applications and impact on multimedia content creation.

- Flux AI Challenges Image Generation Giants: Flux AI's 'Schnell' model is reportedly outperforming Midjourney 6 in image generation coherence, showcasing significant advancements in AI-generated visual content.

- Users have praised the model for its ability to generate highly realistic and detailed images, despite occasional minor typos, indicating a leap forward in the quality of AI-generated visual media.

- MiniCPM-Llama3 Advances Multimodal Interaction: MiniCPM-Llama3 2.5 now supports multi-image input and demonstrates significant promise in tasks such as OCR and document understanding, offering robust capabilities for multimodal interaction.

- The model's advancements highlight the growing trend towards more versatile AI systems capable of processing and understanding multiple types of input, including text and images, simultaneously.

GPT4O (gpt-4o-2024-05-13)

1. LLM Advancements and Benchmarking

- Gemma 2 2B powers on-device AI: Gemma 2 2B by Google supports on-device operations smoothly using WebLLM and WebGPU technologies.

- Community praised the ease of use, even on free Google Colab, demonstrating its deployment potential.

- CogVideoX-2b ignites video generation: CogVideoX-2b model attracts attention for its capabilities in text-to-video synthesis, stacking up against leading competitors.

- Debate sparked around its competitive edge, suggesting a promising trajectory in multimedia applications.

2. Model Performance Optimization and Benchmarking

- INT8 Quantization Sparks Scaling Debate: In INT8 symmetric quantization, PyTorch's 127.5 scaling strategy created divergence issues due to clipping in Qwen2-0.5B model fine-tuning.

- The community explored alternatives, such as INT4 Quantized Training, to bypass the constraints in restricted range quantization.

- Bobzdar benchmarks GPU performance: Bobzdar's experiments with the 8700G/780m IGP using ROCM and Vulkan in LM Studio demonstrate a 25% speedup, albeit challenged by GPU RAM limitations beyond 20GB.

- Despite this, strides with llama3.1 70b q4 reveal 30% faster processing than CPUs, yet struggles persist with stability over 63k context sizes.

3. Fine-Tuning Challenges and Integration

- Unsloth Struggles with Fine-Tuning and PPO Integration: Issues with using 'model.save_pretrained_merged' for fine-tuning in Unsloth sparked concern due to inconsistent save methods.

- Attempts to incorporate Llama3 models into PPO trainers highlighted a necessity for the 'for_inference()' step before generating outputs, complicating integration processes.

- Fine-tuning Buzz as Axolotl Gets Popular: Discussion highlights the Axolotl library as a favorite for fine-tuning AI models, alongside queries on insurance industry-specific applications.

- Questions also arose about Llama 450b hosting solutions and bottlenecks in inference, particularly with resources like vLLM.

4. Open-Source AI Developments and Collaborations

- StoryDiffusion, an open Sora: Launch of StoryDiffusion, an open-source alternative to Sora with MIT license, though weights not released yet.

- Example: GitHub repo.

- OpenDevin release: Release of OpenDevin, an open-source autonomous AI engineer based on Devin by Cognition, with webinar and growing interest on GitHub.

- Example: GitHub repo.

GPT4OMini (gpt-4o-mini-2024-07-18)

1. Installation Challenges in AI Tools

- ComfyUI and Flux Installation Woes: Installation issues with ComfyUI and Flux have plagued users, particularly due to incompatible Python versions affecting operations.

- Many members expressed frustration over managing different Python environments, leading to repeated failures despite various fixes.

- Local LLM Setup Problems: Setting up local LLMs with Open Interpreter resulted in unnecessary downloads, causing openai.APIConnectionError during model selection.

- Users are coordinating privately to troubleshoot this issue, highlighting the complexities of local model setup.

2. Model Performance and Optimization Discussions

- Mistral-7b-MoEified-8x Model Efficiency: The Mistral-7b-MoEified-8x model optimizes expert usage by dividing MLP layers into splits, improving deployment efficiency.

- Community discussions focus on leveraging this model architecture for enhanced performance in specific applications.

- Performance Challenges with Llama3 Models: Users reported inconsistent inference times with fine-tuned Llama3.1, ranging from milliseconds to over a minute based on loading requirements.

- These variations highlight the need for better integration practices when deploying Llama3 models in production.

3. AI Ethics and Data Practices

- NVIDIA's Data Scraping Controversy: NVIDIA faces backlash for allegedly scraping vast amounts of video data daily for AI training, raising ethical concerns among employees.

- Leaked documents confirm management's approval of these practices, sparking significant unrest within the company.

- Opposition to AI Safety Regulation SB1047: An open letter against California's SB1047 highlights fears that it could stifle open-source research and innovation in AI.

- Members discussed the potential negative impacts of the bill, with a call for signatures supporting the opposition.

4. Emerging AI Projects and Collaborations

- Launch of Open Medical Reasoning Tasks: The Open Medical Reasoning Tasks project aims to unite AI and medical communities for comprehensive reasoning tasks.

- This initiative seeks contributions to advance AI applications in healthcare, reflecting a growing intersection of these fields.

- Gemma 2 2B Capabilities: Google's Gemma 2 2B model supports on-device operations, demonstrating impressive deployment potential.

- Community feedback highlights its ease of use, especially in environments like Google Colab.

5. Advancements in AI Frameworks and Libraries

- New Features in Mojo's InlineList: Mojo is introducing new methods in InlineList, such as

__moveinit__and__copyinit__, aimed at enhancing its feature set.- These advancements signal Mojo's commitment to improving its data structure capabilities for future development.

- Bits and Bytes Foundation Updates: The latest Bits and Bytes pull request has sparked interest among library development enthusiasts.

- This development is seen as crucial for the library's evolution, with the community closely monitoring its progress.

GPT4O-Aug (gpt-4o-2024-08-06)

1. AI Model Advancements

- Gemma 2 2B rolls out: Gemma 2 2B by Google supports on-device operations smoothly using WebLLM and WebGPU technologies.

- Community praised the ease of use, even on free Google Colab, demonstrating its deployment potential.

- Mistral MoEification Improves AI Efficiency: Mistral-7b-MoEified-8x enhances deployment efficiency through expert model architecture and split MLP layers.

- Community discussions focus on optimizing expert usage for better model performance.

- DeepSeek-V2 boosts inference performance: A study found that enhancing sample generation during inference markedly elevates language model efficiency, with significant gains from 15.9% to 56% in the SWE-bench Lite domain View PDF.

- Notably, increasing attempts highlight potential where DeepSeek-V2-Coder-Instruct redefines benchmarks previously capped at 43% single-attempt success.

2. GPU Performance and Compatibility

- NVIDIA Blackwell GPUs face delays: NVIDIA's Blackwell GPUs face delays due to design errors in chips that integrate two GPUs on one Superchip.

- This redesign need has postponed the release of these advanced processors, impacting timelines for developers and tech adopters.

- Intel Arc GPU compatibility debate: Intel Arc GPUs drew mixed reactions over their CUDA support, impacting use in machine learning endeavors.

- Some members explored ZLUDA patches, though AMD's viability for ML remained a debated topic.

- Bobzdar benchmarks GPU performance: Bobzdar's experiments with the 8700G/780m IGP using ROCM and Vulkan in LM Studio demonstrate a 25% speedup, albeit challenged by GPU RAM limitations beyond 20GB.

- Despite this, strides with llama3.1 70b q4 reveal 30% faster processing than CPUs, yet struggles persist with stability over 63k context sizes.

3. OpenAI and Anthropic Leadership Changes

- John Schulman moves to Anthropic: John Schulman announced his departure from OpenAI for Anthropic, focusing on AI alignment and technical work.

- This move was perceived as a search for fresh perspectives, stirring conversations about the implications for AI ethics and innovation.

- OpenAI leaders exit raises eyebrows: A news report mentions three leaders departing OpenAI, potentially causing strategic shifts.

- Community speculated on how this shake-up might affect projects and future directions within OpenAI.

4. AI Tooling and Frameworks

- Llamafile Revolutionizes Offline LLM Accessibility: The core maintainer of Llamafile shared exciting updates on delivering offline, accessible LLMs in a single file, significantly enhancing user accessibility.

- This progress reflects a push towards democratizing language model accessibility by providing compact, offline solutions.

- LlamaIndex: Get Ready for the RAG-a-thon: Get ready for another round of LlamaIndex's RAG-a-thon with partners @pinecone and @arizeai, hosted at @500GlobalVC in Palo Alto following the success of the first event.

- The event promises extensive insights into Retrieval-Augmented Generation and how LlamaIndex plays a key role.

5. LLM Fine-Tuning Challenges

- GPT-4o fails the conversational test: GPT-4o struggles to keep a coherent conversation, often repeating instructions without considering new inputs.

- User feedback mentioned that Sonnet tends to rectify these issues, highlighting deficits in 4o's conversational model.

- Unsloth struggles with fine-tuning and PPO integration: Issues with using 'model.save_pretrained_merged' for fine-tuning in Unsloth sparked concern due to inconsistent save methods.

- Attempts to incorporate Llama3 models into PPO trainers highlighted a necessity for the 'for_inference()' step before generating outputs, complicating integration processes.

6. Misc

- LinkedIn Engineering boosts ML platform with Flyte pipelines: A live session was announced about how LinkedIn Engineering has transformed their ML platform, focusing on Flyte pipelines and their implementation at LinkedIn.

- Attendees are expected to gain insights into the engineering strategies and approaches utilized by LinkedIn for their ML platform.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Installation Struggles Plague Users: Installing and configuring ComfyUI and Flux proved problematic due to incompatible Python versions with SD operations.

- Members vented their frustrations about managing disparate Python environments, emphasizing the repeated failures experienced despite various fixes.

- ControlNet's Creativity with Style: Using ControlNet, users shared methods to transform photos into line art, comparing techniques involving DreamShaper and pony models.

- Focus was on leveraging Lora models alongside specific base models to achieve targeted artistic outputs.

- Inpainting with Auto1111 Sparks Interest: The Auto1111 tool was explored for refined inpainting tasks, like inserting a specific poster into an image.

- Inpainting and ControlNet emerged as preferred alternatives over manual tools such as Photoshop for detail management.

- Intel Arc GPU Compatibility Debate: Intel Arc GPUs drew mixed reactions over their CUDA support, impacting use in machine learning endeavors.

- Some members explored ZLUDA patches, though AMD's viability for ML remained a debated topic.

- Reminiscing Community Spats: Historical clashes between moderation teams on different SD forums were recounted, highlighting past Discord and Reddit dynamics.

- These disputes reveal the complexities of moderating open-source AI communities, reflecting on past as pertinent to present user dynamics.

Unsloth AI (Daniel Han) Discord

- MoEification in Mistral-7b Improves Efficiency: Mistral-7b-MoEified-8x embraces the division of MLP layers into multiple splits with specific projections to enhance the efficiency of deploying expert models.

- The community focuses on optimizing expert usage by leveraging these split model architectures to achieve better performance.

- Unsloth Struggles with Fine-Tuning and PPO Integration: Issues with using 'model.save_pretrained_merged' for fine-tuning in Unsloth sparked concern due to inconsistent save methods.

- Attempts to incorporate Llama3 models into PPO trainers highlighted a necessity for the 'for_inference()' step before generating outputs, complicating integration processes.

- BigLlama Model Merge Creates Challenges: The BigLlama-3.1-1T-Instruct model's creation through Meta-Llama with Mergekit has proven problematic as the merged weights need training.

- Although the community is enthusiastic, many see it as 'useless' until properly trained and calibrated.

- Llama-3-8b-bnb Merging Tactics Clarified: Users resolved merging challenges for Llama-3-8b-bnb using LoRA adapters by specifying 16-bit configurations before gguf quantization.

- This process involved following precise merging instructions to ensure seamless integration and performance.

- RunPod Configurations for LLaMA3 Explored: Cost-effective strategies for running the LLaMA3 model on RunPod were discussed due to high operational expenses.

- Community members are exploring configurations that minimize costs while maintaining model performance efficiency.

HuggingFace Discord

- Gemma 2 2B rolls out: Gemma 2 2B by Google supports on-device operations smoothly using WebLLM and WebGPU technologies.

- Community praised the ease of use, even on free Google Colab, demonstrating its deployment potential.

- CogVideoX-2b ignites video generation: CogVideoX-2b model attracts attention for its capabilities in text-to-video synthesis, stacking up against leading competitors.

- Debate sparked around its competitive edge, suggesting a promising trajectory in multimedia applications.

- Structured outputs gain traction: OpenAI Blog posits structured outputs as industry-standard, stirring discussions on legacy contributions.

- The release triggered reflections on past works, hinting at the evolving landscape of standardization in machine learning outputs.

- Depth estimation reimagined: CVPR 2022 paper introduces a technique combining stereo and structured-light for depth estimation, capturing the community’s interest.

- Significant interest was shown in the practical implementation of these findings, indicating a drive towards actionable insights in computer vision.

- AniTalker revolutionizes animated conversations: The AniTalker project enhances facial motion depiction in animated interlocutors based on X-LANCE, offering nuanced identity separation.

- Trials showcased its practical prowess in real-time conversational simulations, suggesting broader applications in interactive media.

LM Studio Discord

- LMStudio gears up for RAG setup feature: RAG setup with LMStudio is generating buzz as it's expected to debut in the 0.3.0 release, prompting users to explore AnythingLLM as a temporary fix, though some face file access hurdles.

- Discussions underscore interest in Meta's LLaMA integration, with some highlighting initial setup challenges that might be simplified in future updates.

- GPU Evangelists debate future performance gains: NVIDIA 4090's worthiness as an upgrade stirs debate, with some users questioning its performance over the 3080, considering alternatives like dual setups or switching to other platforms.

- Speculation heats about the upcoming RTX 5090's improvements, with VRAM expectations mirroring the 4090's 24GB, yet hopeful for better efficiency and computing power.

- Strategizing GPU upgrades amidst market turbulence: The graphics card market faces upheaval as P40 cards skyrocket in price on eBay in 2024, and the scarcity of 3090s piques interest in rumored AMD 48GB VRAM cards.

- Community members highlight necessities for VRAM scalability and compatibility checks with power supplies when contemplating upgrades, proposing cost-effective solutions like coupling a 2060 Super with a 3060.

- K-V Cache curio in quantization contexts: A looped discourse on K-V cache settings and their role in model quantization has sparked curiosity in optimizing Flash Attention techniques.

- Conversations include sharing guides and resources to improve attention mechanisms, hinting at a drive for maximizing computational throughput.

- Insightful Bobzdar benchmarks GPU performance: Bobzdar's experiments with the 8700G/780m IGP using ROCM and Vulkan in LM Studio demonstrate a 25% speedup, albeit challenged by GPU RAM limitations beyond 20GB.

- Despite this, strides with llama3.1 70b q4 reveal 30% faster processing than CPUs, yet struggles persist with stability over 63k context sizes.

CUDA MODE Discord

- Hermes Enigma: PyTorch 2.4 vs CUDA 12.4: Users experienced build-breaking issues when running PyTorch 2.4 with CUDA 12.4, while successfully navigating with CUDA 12.1.

- Further insight shared included CUDA 12.6 installed via conda, indicating complex version dependencies.

- cublas hgemm Hits Windows High: The cublas hgemm library now runs on Windows, enhancing performance up to 315 tflops on a 4090 GPU, compared to 166 tflops with nn.Linear.

- Users reported achieving around 2.4 it/s for flux, marking a milestone in performance progression.

- INT8 Quantization Sparks Scaling Debate: In INT8 symmetric quantization, PyTorch's 127.5 scaling strategy created divergence issues due to clipping in Qwen2-0.5B model fine-tuning.

- The community explored alternatives, such as INT4 Quantized Training, to bypass the constraints in restricted range quantization.

- ZHULDA 3 Vanishes Under AMD's Claim: The ZHUDA 3 project was pulled from GitHub after AMD countered previously granted development permissions.

- Community perplexity arose over employment contract terms allowing for a release if deemed unfit by AMD, highlighting blurry contractual obligations.

- Hudson River Trading's Lucrative GPU Calls: Hudson River Trading is on the hunt for experts adept in GPU optimization, emphasizing CUDA kernel creation and PyTorch enhancement.

- The firm offers internship roles and competitive salaries reaching up to $798K/year, demonstrating significant financial appeal in high-frequency trading.

Nous Research AI Discord

- Nvidia Steers into Conversational AI: Nvidia launched the UltraSteer-V0 dataset with 2.3M conversations labeled over 22 days with nine fine-grained signals.

- Data is processed using Nvidia's Llama2-13B-SteerLM-RM reward model, evaluating attributes from Quality to Creativity.

- OpenAI Leaders Exit Raises Eyebrows: A news report mentions three leaders departing OpenAI, potentially causing strategic shifts.

- Community speculated on how this shake-up might affect projects and future directions within OpenAI.

- Flux AI Outpaces Competition with Images: Flux AI's 'Schnell' competes with Midjourney 6, exceeding in image generation coherence, showcasing advanced model capabilities.

- Images generated by 'Schnell' exhibit high levels of realism, despite minor typos, indicating significant strides over competitors.

- Medical Community Joins AI with New Initiatives: The Open Medical Reasoning Tasks project launches to unite medical and AI communities for comprehensive reasoning tasks.

- This initiative taps into AI healthcare advancements, building extensive medical reasoning tasks and gaining traction in related research.

- Fine-tuning Buzz as Axolotl Gets Popular: Discussion highlights the Axolotl library as a favorite for fine-tuning AI models, alongside queries on insurance industry-specific applications.

- Questions also arose about Llama 450b hosting solutions and bottlenecks in inference, particularly with resources like vLLM.

Latent Space Discord

- Web Devs Seamlessly Transition to AI: A convivial debate surfaced on the practicality of transitioning from web development to AI engineering, driven by a shortage of ML experts and growing business ventures into AI applications.

- Although job postings often highlight ML credentials, they're frequently filled by individuals with robust web development experience as companies highly value API integration abilities.

- NVIDIA Under Fire for AI Data Collection: NVIDIA stands accused of large-scale data scraping for AI initiatives, treating 'a human lifetime' of video material daily, with approval from top-level management. Documents and Slack messages surfaced confirming this operation.

- The scant regard for ethical considerations by NVIDIA sparked significant employee unrest, raising questions about corporate responsibility.

- John Schulman Switches from OpenAI to Anthropic: John Schulman declared his exit from OpenAI to intensify his focus on AI alignment research at Anthropic after a nine-year tenure.

- He clarified his decision wasn't due to OpenAI's lack of support but a personal ambition to deepen research endeavors.

- OpenAI Engages Global Audience Through DevDay: OpenAI unveiled a series of DevDay events in San Francisco, London, and Singapore, aiming to highlight developer implementations with OpenAI's tools through workshops and demonstrations.

- The Roadshow represents OpenAI's strategy to connect globally with developers, reinforcing its role within the community.

- Boost in Reliability for OpenAI's API: OpenAI implemented a structured output feature in its API, ensuring model responses adhere strictly to JSON Schemas, thus elevating schema precision from 86% to 100%.

- The recent update marks a leap forward in achieving consistency and predictability within model outputs.

OpenAI Discord

- OpenAI DevDay Goes Global: OpenAI announced DevDay will travel to cities like San Francisco, London, and Singapore, offering developers hands-on sessions and demos this fall.

- Developers are encouraged to meet OpenAI engineers to learn and exchange ideas in the AI development space.

- Desktop ChatGPT App & Search GPT Release: Members discussed the release dates for the desktop ChatGPT app on Windows and the public release of Search GPT, based on info from Sam Altman.

- Search GPT has been officially distributed, confirming inquiries about its availability.

- Harnessing Structured Outputs: OpenAI introduced Structured Outputs, creating consistent JSON responses aligned with provided schemas, enhancing API interactions.

- The Python and Node SDKs offer native support, promising consistent outputs and reduced costs for users.

- AI Reshapes Gaming World: A member envisaged AI elevating games like BG3 by enabling unique character designs and dynamic NPC interactions.

- The use of generative AI in gaming is expected to enhance player immersion and revolutionize traditional gaming experiences.

- Bing AI Creator Uses DALL-E 3: Bing AI Image Creator employs DALL-E 3 technology, aligning with recent updates.

- Despite improvements, users noted inconsistencies in output quality and expressed dissatisfaction.

Perplexity AI Discord

- GPT-4o fails the conversational test: GPT-4o struggles to keep a coherent conversation, often repeating instructions without considering new inputs.

- User feedback mentioned that Sonnet tends to rectify these issues, highlighting deficits in 4o's conversational model.

- Sorting AI targets content chaos: An innovative content sorting and recommendation engine project is underway at a university, aimed at improving database content prioritization.

- Peers suggested using platforms like RAG and local models to enhance the project's impact and sophistication.

- NVIDIA's GPU glitch: NVIDIA's Blackwell GPUs face delays due to design errors in chips that integrate two GPUs on one Superchip.

- This redesign need has postponed the release of these advanced processors, impacting timelines for developers and tech adopters.

- API glitches undermine user confidence: API results have been unexpectedly corrupted, delivering gibberish content beyond initial lines when composing articles.

- Documentation confirms API model deprecation is scheduled for August 2024, including the llama-3-sonar-small-32k models.

Eleuther Discord

- Meta masters distributed AI training with massive network: At ACM SIGCOMM 2024, Meta revealed their expansive AI network linking thousands of GPUs, vital for training models like LLAMA 3.1 405B.

- Their study on RDMA over Ethernet for Distributed AI Training highlights the architecture supporting one of the planet's most extensive AI networks.

- SB1047 stirs AI community with pros and cons: An open letter opposing SB1047, the AI Safety Act, is gaining signatures, warning it could stifle open-source research and innovation (Google Form).

- Anthropic acknowledges regulation necessity, yet suggests the bill may curb innovation with potential negative academic and economic impacts.

- Mechanistic anomaly detection: promising but inconsistent: Eleuther AI evaluated mechanistic anomaly detection methods, finding they sometimes fell short of traditional techniques, detailed in a blog post.

- Performance improved on full data batches; however, not all tasks saw gains, underscoring research areas needing refinement.

- Scaling SAEs: recent explorations and resources: Eleuther AI discussed Structural Attention Equations (SAEs) with links to foundational and modern works like the Monosemantic Features paper.

- Efforts are underway to scale SAEs from toy models to 13B parameters, with significant collaboration across Anthropic and OpenAI indicated in scaling papers.

- lm-eval-harness adapts to custom models easily: Eleuther AI encouraged using lm-eval-harness for custom architectures, providing a guide link in a GitHub example.

- Discussions addressed batch processing nuance and confirmed BOS token default inclusion, highlighting eval-harness adaptability in testing contexts.

LangChain AI Discord

- GPU Overflows: Running Models on CPU: Users faced memory overflow issues when attempting to run large models on GPUs with limited 8GB vRAM, leading to a workaround by utilizing the CPU entirely, albeit with slower performance.

- A discussion emerged about best practices for handling insufficient GPU memory, highlighting the trade-offs between speed and capability.

- LangChain Integration Puzzles: Queries arose regarding incorporating RunnableWithMessageHistory in LangChain v2.0 for chatbot development due to lack of documentation.

- Suggestions to explore storing message history through available tutorials were recommended, hinting at common obstacles faced by developers.

- Groans Over Automatic Code Review Foibles: Issues surfaced with GPT-4o failing to assess positions within GitHub diffs correctly, prompting users to pursue alternative data processing methods.

- The advice to avoid vision models in favor of coding-specific approaches underscored the challenges of applying AI to code review.

- AgentGenesis Invites Open Source Collaboration: The AgentGenesis project, offering a library of AI code snippets, seeks contributors to enhance its development, highlighting its open-source MIT license.

- Active community collaboration and contributions via their GitHub repository are encouraged to build a robust library of reusable code.

- Mood2Music App Hits the Right Note: The Mood2Music app promises to curate music recommendations based on users' moods, integrating seamlessly with Spotify and Apple Music.

- This innovative app aims to elevate the user's experience by automating playlist creation through mood detection, featuring unique AI selfie analysis.

Interconnects (Nathan Lambert) Discord

- John Schulman surprises with move to Anthropic: John Schulman announced his departure from OpenAI for Anthropic, focusing on AI alignment and technical work.

- This move was perceived as a search for fresh perspectives, stirring conversations about the implications for AI ethics and innovation.

- Leaked whispers around Gemini program intrigue members: The community speculated on leaked information regarding OpenAI's Gemini program, marveling at the mysterious developments around Gemini 2.

- This intrigue raised questions about potential advancements and strategic direction within OpenAI.

- Flux Pro offers a novel vibe in AI models: Flux Pro was described as offering a noticeably different user experience compared to its competitors.

- Discussions focused on how its unique approach might not be rooted in benchmarks but rather subjective user satisfaction.

- Data-dependency impacts model benefits: Chats emphasized that model performance benefits from decomposing data into components like \( (x, y_w) \) and \( (x, y_l) \) depending largely on data noise levels.

- Startups often opt for noisy data strategies to bypass standard supervised fine-tuning, as noted in an ICML discussion mentioning Meta's Chameleon approach.

- Claude lags behind ChatGPT in user experience: Members compared Claude unfavorably to ChatGPT, indicating it lags akin to older GPT-3.5 models while ChatGPT was praised for flexibility and memory performance.

- This sparked conversations about advancements and user expectations for next-generation AI tools.

OpenRouter (Alex Atallah) Discord

- GPT4-4o Launches with Structured Output Capabilities: The new model GPT4-4o-2024-08-06 has been released on OpenRouter with enhanced structured output capabilities.

- This update includes the ability to provide a JSON schema in the response format, encouraging users to report issues with strict mode in designated channels.

- AI Models Performance Drama: yi-vision and firellava models failed to perform under test conditions compared to haiku/flash/4o, highlighting ongoing price and efficiency challenges.

- Discussions hinted at imminent price reductions for Google Gemini 1.5, positioning it as a more cost-effective alternative.

- Budget-Friendly GPT-4o Advances in Token Management: Developers now save 50% on inputs and 33% on outputs by adopting the more cost-effective gpt-4o-2024-08-06.

- Community dialogues suggest efficiency and strategic planning as key factors in this model's reduced costs.

- Calculating OpenRouter API Costs: A detailed discussion on OpenRouter API cost calculation emphasized using the

generationendpoint after requests for accurate expenditure tracking.- This method allows users to manage funds in pay-as-you-go schemes effectively without embedded cost details in streaming responses.

- Google Gemini Throttling Issues: Users of Google Gemini Pro 1.5 faced

RESOURCE_EXHAUSTEDerrors due to heavy rate limiting.- Adjustments in usage expectations are necessary, with no immediate solution to these rate limit constraints.

LlamaIndex Discord

- LlamaIndex: Get Ready for the RAG-a-thon: Get ready for another round of LlamaIndex's RAG-a-thon with partners @pinecone and @arizeai, hosted at @500GlobalVC in Palo Alto following the success of the first event.

- The event promises extensive insights into Retrieval-Augmented Generation and how LlamaIndex plays a key role.

- Webinar on RAG-Augmented Coding Assistants: Webinar with CodiumAI invites participants to explore RAG-augmented coding assistants, showcasing how LlamaIndex can enhance AI-generated code quality.

- Participants must register and verify token ownership; the session will present practical applications for maintaining contextual code integrity.

- RabbitMQ Bridges the Agent Gap: A blog by @pavan_mantha1 explores using RabbitMQ for effective communication between agents in a multi-agent system.

- This innovative setup integrates tools like @ollama and @qdrant_engine to streamline operations within LlamaIndex.

- Function Calling Glitch Crashes CI: LlamaIndex's

function_calling.pygenerated a TypeError that obstructed CI processes, resolved by upgrading specific dependencies.- Old package requirements presented issues, urging the team to tighten specification of dependencies to avoid such glitches in the future.

- Vector Databases Under the Microscope: A Vector DB Comparison was shared for assessing different vector databases' capabilities.

- The community was encouraged to share insights from experiences with various VectorDBs to educate and enhance knowledge-sharing.

Cohere Discord

- Galileo Hallucination Index Ignites Source Debate: Galileo's Hallucination Index prompted discussions about the open-source classification of LLMs, highlighting ambiguities in categorizing models like Command R Plus.

- Users contended over the distinction between open weights versus fully open-source, advocating for clearer criteria, potentially establishing a separate category.

- Licensing Controversy Sizzles with Command R Plus: Galileo clarified their definition of open-source to encompass models supporting commercial use, citing the Creative Commons license of Command R Plus as a limitation.

- Members discussed the creation of a new category for 'open weights', suggesting that distinct licensing classifications should replace the broad open-source tag.

- Mistral's Open Weights Under Apache 2.0: Mistral's models were distinguished for their permissive Apache 2.0 license, offering greater liberties than typically available to open weights.

- Discussion included sharing Mistral's documentation, underscoring their initiative in transparency with pre-trained and instruction-tuned models.

- Cohere Toolkit for RAG Projects: A member utilized Cohere Toolkit for an AI fellowship project, illustrating its application in developing an LLM with RAG across various domain-specific databases.

- The toolkit's integration was poised to explore content from platforms like Confluence, enhancing its utility in diverse professional contexts.

- Exploring Feasibility of Third-party API Integration: Discussion on switching from Cohere models to third-party APIs like OpenAI's Chat GPT and Gemini 1.5 was underway.

- The potential for using these external APIs evidently promised to broaden the scope and adaptability of existing projects.

Modular (Mojo 🔥) Discord

- InlineList Strides With Exciting Features: The development of InlineList in Mojo is advancing with the introduction of

__moveinit__and__copyinit__methods as per the recent GitHub pull request, aiming to enhance feature sets.- These new methods seem to be driven by technological priorities, hinting at future capabilities in

InlineListenhancements.

- These new methods seem to be driven by technological priorities, hinting at future capabilities in

- Mojo Optimizes Lists with Small Buffer Tactics: Small buffer optimization for

Listin Mojo introduces flexibility by allowing stack space allocation with parameters likeList[SomeType, 16], which is detailed in Gabriel De Marmiesse's PR.- This improvement might eventually eliminate the need for a separate

InlineListtype, streamlining the existing architecture.

- This improvement might eventually eliminate the need for a separate

- New Prospects for Mojo with Custom Accelerators: Custom accelerators such as PCIe cards with systolic arrays are set to be potential contenders for Mojo upon its open-source release, showcasing new hardware integration possibilities.

- Despite the enthusiasm, it currently remains challenging to use Mojo for custom kernel replacements, as existing flows like lowering PyTorch IR dominate until RISC-V target supports are available.

LAION Discord

- OpenAI Leadership Shakeup Brings John Schulman to Anthropic: John Schulman, co-founder of OpenAI, is leaving to join Anthropic, spurred by recent restructuring within OpenAI.

- This leadership move follows only three months after dismantling OpenAI's superalignment team, hinting at internal strategic shifts.

- Open-Source Model Training Faces High-Cost Roadblocks: The open-source community acknowledged expensive model training constraining the development of state-of-the-art models.

- Cheaper training could lead to a boom in open models, leaving aside the ethical challenges of data sourcing.

- Meta's JASCO Project Stymied by Legal Woes: Meta's under-the-radar JASCO project faces delays, possibly due to lawsuits with Udio & Suno.

- Concern mounts as these legal entanglements could slow technology advancements in proprietary AI.

- Validation Accuracy Hits 84%, Brings Believers: Model hits 84% validation accuracy, a notable milestone celebrated with allusions to The Matrix.

- Enthusiasm rounds as this breakthrough echoes familiar phrases like 'He's beginning to believe.'

- CIFAR's Frequency Retains, Phase Inquires: Inquiries were made on CIFAR images' frequency constancy versus potential phase shifts in Fourier analysis.

- The curiosity sparks conversations about whether image frequency stays steady while phase dynamics change.

tinygrad (George Hotz) Discord

- Tinygrad's Aurora Ambitions: Members pondered the feasibility of running tinygrad on Aurora, a cutting-edge supercomputer with Intel GPU support, sparking discussions in general.

- Insights revealed that Aurora's GPUs could leverage unique tensor core instructions, with 16x8 matrix output, potentially exceeding 2 ExaFLOPS post-optimization.

- Precision Perils in FP8 Nvidia Bounty: Inquiries about the FP8 Nvidia bounty arose, focusing on whether it necessitates E4M3, E5M2, or both standards for precision.

- The bounty reflects Nvidia's emphasis on diverse precision requirements, challenging developers to optimize across different modes.

- Tackling Tensor Slice Bugs in Tinygrad: A bug causing

AssertionErrorin Tensor slicing in Tinygrad was fixed, ensuring slices maintain contiguity, as confirmed by George Hotz.- The resolution provided clarity on Buffer to DEFINE_GLOBAL transition, a nagging issue within Tinygrad's computational operations.

- JIT Battles with Batch Sizes: Inconsistent batch sizes in datasets led to JIT errors, with suggestions including skipping or handling the last batch separately to prevent errors.

- George Hotz recommended ensuring JIT is not executed on the last incomplete batch, smoothing the workflow.

- Unlocking Computer Algebra Solutions: Study notes shared on computer algebra aim to aid understanding of Tinygrad's shapetracker and symbolic math, accessible here.

- This repository deepens insights into Tinygrad's structure, offering valuable knowledge for enthusiasts diving into advanced symbolic computation.

DSPy Discord

- Wiseflow Mines Data Efficiently: Wiseflow is touted as an agile data extraction tool that systematically categorizes and uploads information from websites and social media to databases, as showcased on GitHub.

- Members discussed integrating Golden Ret with Wiseflow to form a robust dynamic knowledge base.

- HybridAGI Launches New Version: A fresh version of the HybridAGI project is out, focusing on usability and refining data pipelines, with new features like Vector-only RAG and Knowledge Graph RAG, shared on GitHub.

- The community is showing interest in its applications for seamless neuro-symbolic computation in diverse AI setups.

- LLM-based Agents Aim for AGI Potential: A recent paper delves into the prospects of LLM-based agents to circumvent limitations like autonomy and self-improvement, challenging traditional LLM constraints View PDF.

- There's a growing call to establish clear criteria to distinguish LLMs from agents in software engineering, emphasizing the need for unified standards.

- Inference Compute Boosts Performance: A study found that enhancing sample generation during inference markedly elevates language model efficiency, with significant gains from 15.9% to 56% in the SWE-bench Lite domain View PDF.

- Notably, increasing attempts highlight potential where DeepSeek-V2-Coder-Instruct redefines benchmarks previously capped at 43% single-attempt success.

- MIPRO's Mixed Performance Metrics: In performance chat, MIPRO was noted to often surpass BootstrapFewShotWithRandomSearch, although inconsistently across situations.

- Further questions about MIPROv2 confirmed its current lack of support for assertions, a feature awaited by the community.

OpenAccess AI Collective (axolotl) Discord

- Synthetic Data Strategy Enhances Reasoning Tasks: A community member proposed a synthetic data generation strategy for 8b models focusing on reasoning tasks like text-to-SQL by incorporating Chain-of-Thought (CoT) in synthetic instructions.

- Training with CoT before generating the final SQL query was discussed as a method for improving model performance.

- MD5 Hash Consistency Confirmed in LoRA Adapter Merging: A query about MD5 hash consistency when merging LoRA adapters led to a confirmation that consistent results are indeed expected.

- Any discrepancy from expected MD5 hash results was discussed as indicative of potential problems.

- Bits and Bytes Pull Request Sparks Interest: Users recognized the significance of the latest Bits and Bytes Foundation pull request for library development enthusiasts.

- This pull request is seen as a critical development in the library's evolution and is being closely monitored by the community.

- Gemma 2 27b QLoRA Requires Fine-Tuning: Issues with Gemma 2 27b's QLoRA were noted, specifically around tweaking the learning rate to improve results with the latest flash attention.

- The recommendation was to adjust QLoRA parameters for enhanced performance, especially when integrating new modules like flash attention.

- UV: A Robust Python Package Installer: UV, a new Python package installer written in Rust, was introduced for its impressive speed in handling installations efficiently.

- Considered as a faster alternative to pip, UV was highlighted for potentially improving docker build processes.

Torchtune Discord

- Torchtune Rolls Out PPO Integration: Torchtune has added PPO training recipes, enabling Reinforcement Learning from Human Feedback (RLHF) in its offerings.

- This expansion allows for more robust training processes, enhancing the usability of RLHF across models supported by the platform.

- Qwen2 Models Join Torchtune Lineup: Torchtune has expanded support to include Qwen2 models, with a 7B model available and additional smaller models in the pipeline.

- The expanded support for varying model sizes is aimed at broadening Torchtune's adaptability to diverse machine learning requirements.

- Troubleshooting Llama3 File Paths Made Easier: Members discussed challenges with the Llama3 models, emphasizing correct checkpointer and tokenizer paths and the auto-configuring of prompts for the LLAMA3 Instruct Model.

- These confirmations simplify processes for users facing issues with prompt variability and model interference.

- Model Page Revamp on Torchtune's Horizon: Members are considering a restructuring of the Model Page to accommodate new and future models including multimodal LLMs.

- The proposed revamp includes a model index page for consistent handling of tasks like downloading and configuring models.

- PreferenceDataset Gets a Boost: Torchtune's PreferenceDataset now features a unified data pipeline supporting chat functionalities as outlined in a recent GitHub pull request.

- This refactor aims to streamline data processing and invites community feedback to further refine the transformation design.

OpenInterpreter Discord

- Local LLM Setup Flub in Open Interpreter: Setting up the interpreter with a local LLM results in an unnecessary download after selecting llamafile, leading to an openai.APIConnectionError.

- Efforts are ongoing to resolve this, with users coordinating solutions via private messages.

- Open Interpreter's Security Questions: A user raised concerns about Open Interpreter's data privacy and security, asking if communication between systems includes end-to-end encryption.

- The user is keen on knowing the encryption standards and data retention policies, especially with third-party involvement.

- Python Version Support Confusion: Open Interpreter currently supports Python 3.10 and 3.11, leaving users inquiring about Python 3.12 support in the dust.

- Installation validation was suggested through the Microsoft App Store for compatibility checks.

- Ollama Model Setup Hints Shared: Users discussed setting up local models using

ollama list, stressing the VRAM prerequisites for models.- See the GitHub instructions for API key details necessary for paid models.

Mozilla AI Discord

- Llamafile Revolutionizes Offline LLM Accessibility: The core maintainer of Llamafile shared exciting updates on delivering offline, accessible LLMs in a single file, significantly enhancing user accessibility.

- This progress reflects a push towards democratizing language model accessibility by providing compact, offline solutions.

- Mozilla AI Dangles Gift Card Carrot for Feedback: Mozilla AI launched a community survey, offering participants a chance to win a $25 gift card in exchange for valuable feedback.

- This initiative aims to gather robust insights from the community to inform future developments.

- sqlite-vec Release Bash Sparks Interest: sqlite-vec's release party kicked off, inviting enthusiasts to explore new features and participate in interactive demos.

- The event, hosted by the core maintainer, showcased tangible advancements in vector data handling within SQLite.

- Machine Learning Paper Talks Generate Buzz: The community dived into Machine Learning Paper Talks featuring 'Communicative Agents' and 'Extended Mind Transformers', revealing new analytical perspectives.

- These talks stimulated discussions around the potential impacts and implementations of these novel findings.

- Local AI AMA Promotes Open Source Ethos: A successful AMA was conducted by the maintainer of Local AI highlighting their open-source, self-hosted alternative to OpenAI.

- The event underscored the commitment to open-source development and community-driven innovation.

MLOps @Chipro Discord

- LinkedIn Engineering boosts ML platform with Flyte pipelines: A live session was announced about how LinkedIn Engineering has transformed their ML platform, focusing on Flyte pipelines and their implementation at LinkedIn.

- Attendees are expected to gain insights into the engineering strategies and approaches utilized by LinkedIn for their ML platform.

- Practical Applications of Flyte Pipelines: The live event covers Flyte pipelines showcasing their practical application within LinkedIn's infrastructure.

- Participants will explore how Flyte is being employed at LinkedIn for enhanced operational efficiency.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!