[AINews] GPT-4o: the new SOTA-EVERYTHING Frontier model (GPT4O version)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Omnimodality is all you want.

AI News for 5/10/2024-5/13/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (426 channels, and 7769 messages) for you. Estimated reading time saved (at 200wpm): 763 minutes.

Say hello to GPT-4O!

It turns out that the numerous leaks about a "Her" like-chatbot announcement were most accurate, with a surprisingly "hot" voice but also the ability to respond with (an average 300ms, down from ~3000ms) low latency, have vision, handle interruptions and sing, speak faster or in pirate/whale, and more. There's also a waitlisted new desktop app that has the ability to read from the screen and clipboard history that directly challenges the desktop agent startups like Multion/Adept.

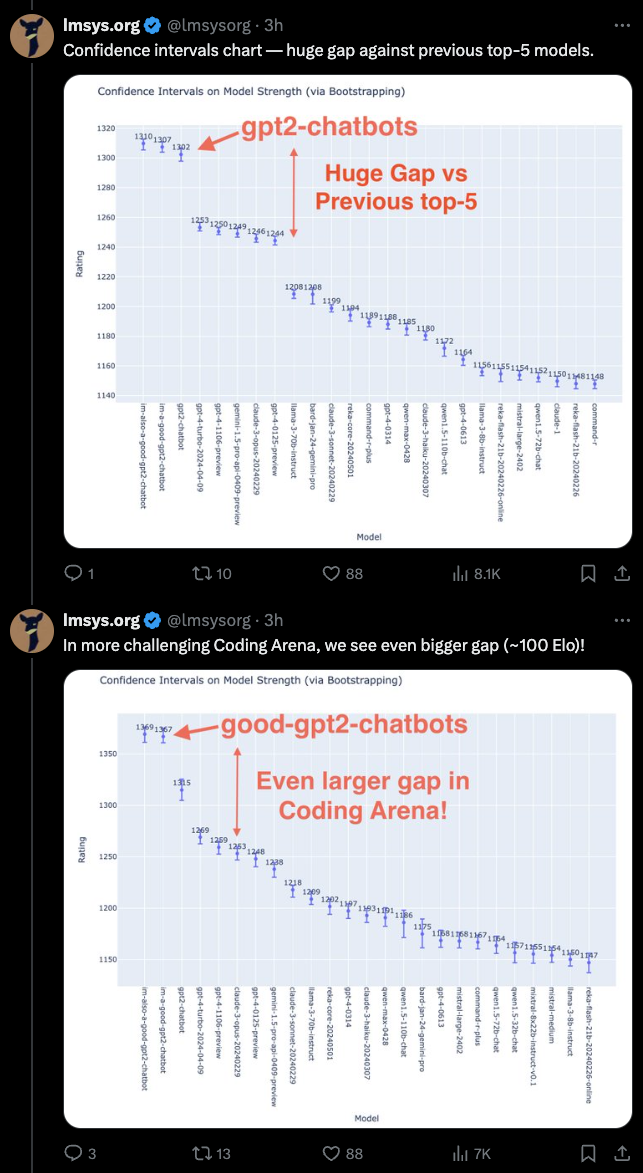

But nobody leaked that this also comes with a new versioned model, now confirmed to be the "gpt2-chatbot" that was previewed on LMsys, that is confirmed to be substantially above all other prior frontier models:

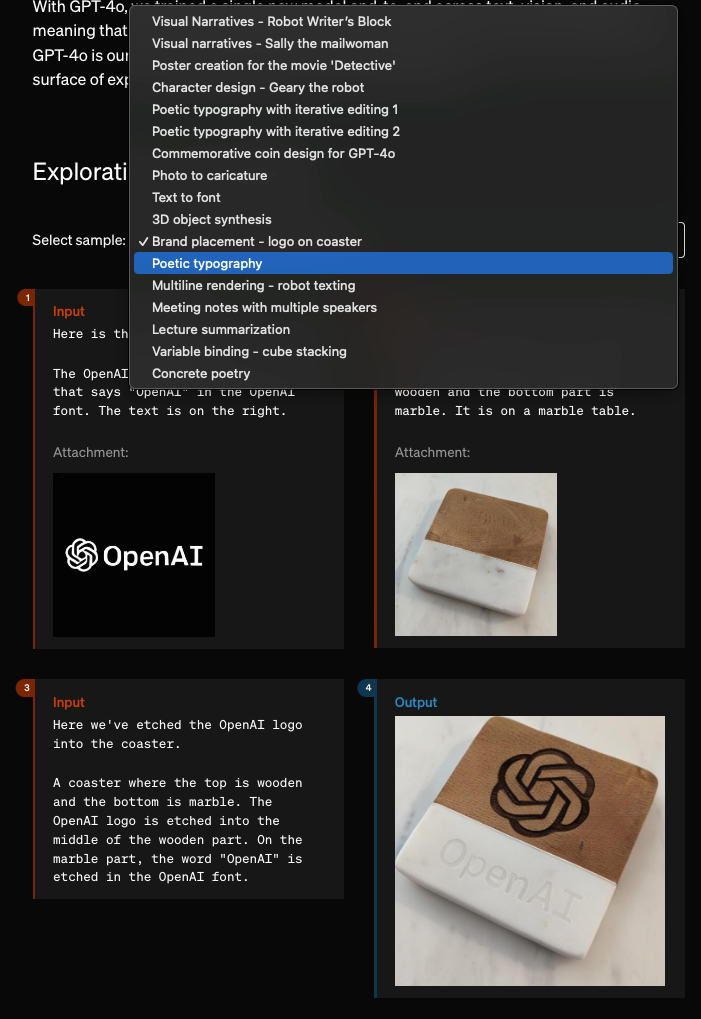

The official blogpost has a lot more video examples demonstrating the app and model, including new versions of image output that may or may not be Dall-E or some completely new thing:

Lots of people are making noise about the 3d object demo, but we can't be sure if that's just code generation since there were hidden steps in there.

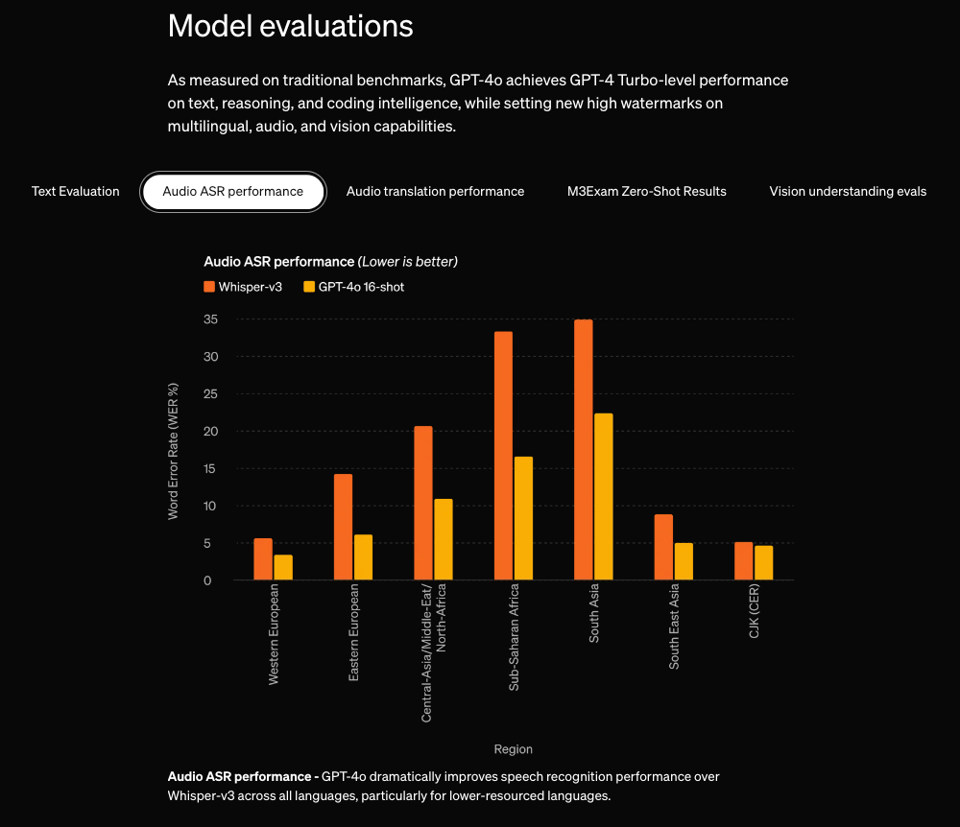

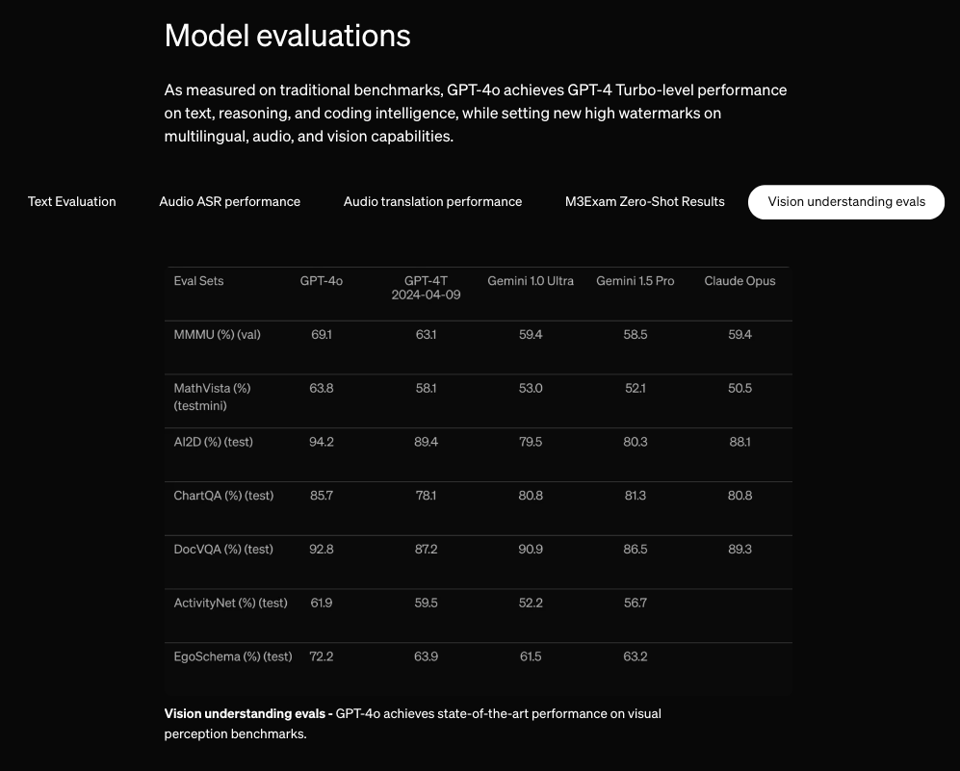

To do this, OpenAI had to beat SOTA on everything all at once, including ASR and Vision:

The tiktokenizer update revealed an expanded 200k vocab size that makes non-English cheaper/more native.

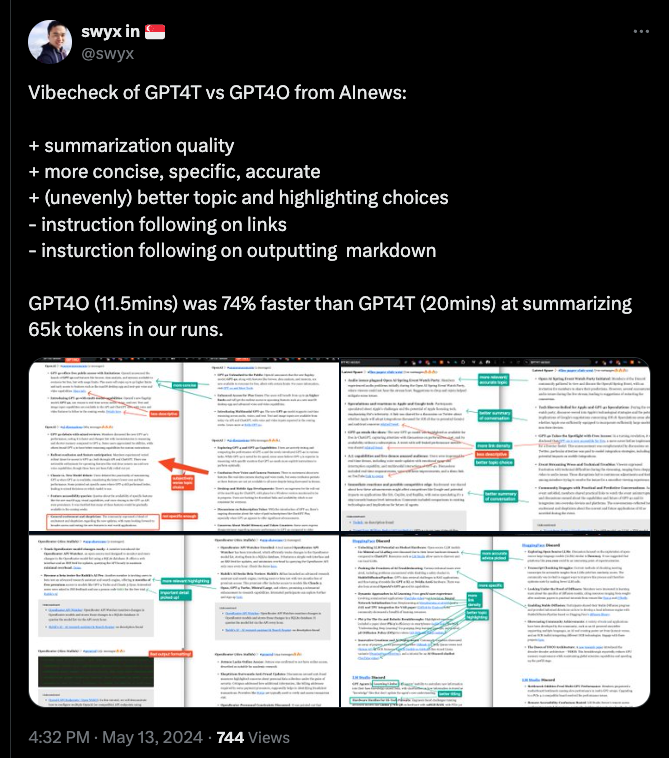

Lots more takes are flying, but as is tradition on Frontier Model days on AINews, we're publishing two editions of AINews. You're currently reading the one where all Part 1 and Part 2 summaries are done by GPT4O - the next email you get is the same but with GPT4T (update: it completed here, 74% slower than GPT4O). We envision that you will pull them up side by side (like this!) to get comparisons on discords you care about to better understand the improvements/regressions.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- Stability.ai (Stable Diffusion) Discord

- OpenAI Discord

- Nous Research AI Discord

- Latent Space Discord

- Perplexity AI Discord

- HuggingFace Discord

- LM Studio Discord

- OpenRouter (Alex Atallah) Discord

- Modular (Mojo 🔥) Discord

- CUDA MODE Discord

- Eleuther Discord

- Interconnects (Nathan Lambert) Discord

- LAION Discord

- LangChain AI Discord

- LlamaIndex Discord

- OpenAccess AI Collective (axolotl) Discord

- OpenInterpreter Discord

- tinygrad (George Hotz) Discord

- Cohere Discord

- Datasette - LLM (@SimonW) Discord

- Mozilla AI Discord

- DiscoResearch Discord

- LLM Perf Enthusiasts AI Discord

- Alignment Lab AI Discord

- AI Stack Devs (Yoko Li) Discord

- Skunkworks AI Discord

- YAIG (a16z Infra) Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

OpenAI Releases GPT-4o, a Multimodal Model with Voice and Vision Capabilities

- GPT-4o Capabilities: @sama introduced GPT-4o, OpenAI's new model which can reason across text, audio, and video in real time. It is described as smart, fast, natively multimodal, and a step towards more natural human-computer interaction. @gdb noted it is extremely versatile and fun to play with.

- Availability and Pricing: GPT-4o will be available to all ChatGPT users, including on the free plan according to @sama. In the API, it is half the price and twice as fast as GPT-4-turbo, with 5x rate limits @sama.

- Improved Language Performance: GPT-4o has significantly improved non-English language performance, including an improved tokenizer to better compress many languages, as noted by @gdb.

Key Demos and Capabilities

- Real-time Voice and Video: GPT-4o supports real-time voice and video input and output, which feels very natural according to @sama. This feature will roll out to users in the coming weeks.

- Coding Capabilities: GPT-4o is especially adept at coding tasks, as highlighted by @sama and @sama.

- Emotion Detection and Voice Styles: The model can detect emotion in voice input and generate voice output in a wide variety of styles with broad dynamic range, per @sama.

- Multimodal Outputs: GPT-4o can generate combinations of audio, text, and image outputs, enabling interesting new capabilities that are still being explored, according to @gdb.

Reactions and Implications

- Game-changing User Experience: Many, including @jerryjliu0 and @E0M, noted that the real-time audio/video input and output represents a huge step change in user experience and will lead to more people conversing with AI.

- Comparison to Other Models: GPT-4o was compared to other models, with @imjaredz stating it blows GPT-4-turbo out of the water in terms of speed and quality. However, @bindureddy pointed out that open-source models like Llama-3 are still 5x cheaper for pure language/coding use-cases.

- Impressive Demos: People were impressed by demos showcasing GPT-4o's real-time translation abilities @BorisMPower, emotion detection and voice style control @BorisMPower, and ability to sing and dramatize content @swyx.

Other AI News and Discussions

- Apple-OpenAI Deal: Rumors circulated that the Apple-OpenAI deal just closed, one day before OpenAI's voice assistant announcement, leading to speculation that the new Siri will be powered by OpenAI technology @bindureddy.

- Anthropic Constitutional AI: Anthropic released a new prompt engineering tool for their Claude model that can generate prompts optimized for different tasks, as shared by @adcock_brett.

- Open vs Closed AI Debates: There were various discussions on the tradeoffs of open vs closed AI development. Some, like @ylecun, argued that open source frontier models are important for enabling a diversity of fine-tuned systems and assistant AIs. Others, such as @vkhosla, expressed concerns about the national security implications of open models.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

OpenAI's Upcoming Announcement

- Speculation about capabilities: In /r/singularity, there is speculation that OpenAI's May 15th announcement will include agents, Q*-type algorithmic improvements, and architectural upgrades that will "feel like magic". Some in /r/LocalLLaMA expect a voice assistant like the AI in the movie "Her".

- Tempering expectations: However, others in /r/singularity are tempering expectations, believing it will be an incremental improvement but not AGI. The announcement has generated significant hype and speculation.

Advances in AI Capabilities

- Drug discovery success rates: New research shared in /r/singularity shows that AI-discovered drug molecules have 80-90% success rates in Phase I clinical trials, compared to the historical industry average of 40-65%. This represents a significant advancement in AI-powered drug discovery.

- Autonomous fighter jets: According to the Air Force Chief, autonomous F-16 fighters are now "roughly even" with human pilots in performance. This milestone demonstrates the rapid progress of AI in complex domains like aerial combat.

Open Source AI Developments

- Open source AI alliance: As reported in /r/singularity, Meta, IBM, NASA and others have formed an open source AI alliance to be a voice in AI governance discussions. This alliance aims to shape the narrative around AI development and regulation.

- New open source dataset: /r/LocalLLaMA announces the release of code_bagel_hermes-2.5, a new open source dataset similar to the closed source deepseek-coder dataset. Open datasets enable wider participation in AI research.

- Call to open source AlphaFold3: In /r/MachineLearning, researchers are asking Google DeepMind to open source AlphaFold3, their new state-of-the-art protein structure prediction model. Open sourcing cutting-edge models can accelerate scientific progress.

Optimizing AI Performance

- Faster GPU kernels: Researchers at Stanford have released ThunderKittens, an embedded DSL to help write fast GPU kernels that outperform FlashAttention-2 by 30% on the H100. Optimizing GPU performance is crucial for efficient AI training.

- Improved stochastic gradient descent: A new paper introduces Preconditioned SGD (PSGD) which utilizes curvature information to accelerate stochastic gradient descent, outperforming state-of-the-art on vision, NLP and RL tasks. Algorithmic improvements can significantly boost AI performance.

- Enhancing GPT-4 function calling: In /r/OpenAI, it's shown that techniques like adding function definitions, flattening schemas, and providing examples can increase the accuracy of GPT-4 function calling from 35% to 75%. Fine-tuning prompts and inputs can greatly improve AI model performance on specific tasks.

Humor and Memes

- Hype and speculation memes: Various subreddits are sharing memes and jokes about the hype and speculation surrounding OpenAI's upcoming announcement, capturing the excitement and anticipation in the AI community. Examples: "THIS IS ME RN", "Group members be like", "Average 'future is now' fella".

AI Discord Recap

A summary of Summaries of Summaries

Claude 3 Sonnet

1. Efficient AI Model Training and Inference:

- ThunderKittens is gaining traction for optimizing CUDA kernels, seen as more approachable than CUTLASS for tensor core management. It promises to outperform Flash Attention 2.

- Discussions on fusing kernels, max-autotune in torch.compile, Dynamo vs. Inductor, and profiling with Triton aim to boost performance. The Triton Workshop offers insights.

- ZeRO-1 integration in llm.c shows 54% throughput gain by optimizing VRAM usage, enabling larger batch sizes.

- Efforts to improve CI with GPU support in llm.c and LM Studio highlight the need for hardware acceleration.

2. Open-Source LLM Developments:

- Yi-1.5 models, including 9B, 6B, and quantized 34B variants, gain popularity for diverse fine-tuning tasks.

- MAP-Neo, a transparent bilingual 4.5T LLM, and ChatQA, outperforming GPT-4 in conversational QA, generate excitement.

- Falcon 2 11B model, with 5T refined data and permissive license, attracts interest.

- Techniques like Farzi for efficient data distillation and Conv-Basis for attention approximation are discussed.

3. Multimodal AI Capabilities:

- GPT-4o by OpenAI integrates audio, vision, and text reasoning, impressing with real-time demos of voice interaction and image generation.

- VideoFX showcases early video generation capabilities as a work-in-progress.

- Tokenizing voice datasets and training transformers on audio data are areas of focus, as seen in a Twitter post and YouTube video.

- PyWinAssistant enables AI control over user interfaces through natural language, leveraging Visualization-of-Thought.

4. Debates on AI Safety, Ethics, and Regulation:

- Discussions on OpenAI's regulatory moves, like GPU signing and White House collaboration, spark criticism over potential monopolization.

- Concerns arise about the impact of AI art services like Midjourney on artists' livelihoods and potential legal repercussions.

- The release of WizardLM-2-8x22B by Microsoft faces controversy due to similarities with GPT-4.

- Members analyze AI copyright implications and how companies offering indemnity could impact smaller AI ventures.

- Efforts to detect untrained tokens like SolidGoldMagikarp aim to improve tokenizer efficiency and model safety (arXiv paper).

Claude 3 Opus

Here is a high-level summary of the top 3-4 major themes across the Discord channels, with important key terms, facts, and URLs bolded and linked to sources where relevant:

- GPT-4o Launches with Mixed Reviews: OpenAI released GPT-4o, a multimodal model supporting text, image, and audio inputs. It offers free access with limitations and advanced features for Plus users. Engineers noted its speed and cost-effectiveness but criticized its shorter memory and reasoning inconsistencies compared to GPT-4. Excitement grew for upcoming voice and video capabilities. GPT-4o also topped benchmarks on the LMSys Arena.

- Falcon-2 and Yi Models Gain Traction: The open-source Falcon-2 11B model, trained on 5T refined data, was released with a permissive license. Discussions highlighted its multilingual and multimodal capabilities despite restrictive terms. Simultaneously, the Yi-1.5 series by 01.AI garnered praise for strong performance across tasks, with quantized variants like the rare 34B model suiting 24GB GPUs well.

- Tooling and Techniques Advance LLM Efficiency: New tools like ThunderKittens promised optimized CUDA kernels, potentially outperforming Flash Attention 2. The Triton Index and Awesome Triton Kernels repositories cataloged Triton kernels for discovery. Techniques like knowledge distillation, depth scaling, and novel architectures like Memory Mosaics and Conv-Basis attention were explored to enhance LLM fine-tuning and inference efficiency.

- Ethical and Legal Debates Persist in AI Development: Conversations wrestled with the implications of AI-generated art on artists' livelihoods, considering fair use, derivative works, and potential legal challenges for Stability AI and Midjourney. The impact of AI copyright on innovation funding and the indemnification of big tech players over smaller entities remained contentious issues.

Let me know if you would like me to elaborate on any part of this summary or if you have additional questions!

GPT4T (gpt-4-turbo-2024-04-09)

Major Themes: 1. Regulatory Concerns and Monopolistic Moves: There's significant discussion and concern over OpenAI's regulatory actions, particularly around practices that may favor larger companies, potentially leading to a monopolistic environment. Members expressed mixed feelings about OpenAI's moves, with criticisms particularly about potential restrictions that harm smaller competitors.

-

New Model Releases and Enhancements: Several discords discussed the release and capabilities of new models like GPT-4o, WizardLM, and Falcon 2. The release of these models sparked discussions about their enhanced multi-modal capabilities, performance improvements, and general excitement or skepticism about their real-world applications.

-

Technical Tools and Innovations: Various communities delved into technical aspects, discussing new tools and updates such as ThunderKittens for optimizing CUDA kernels, stable diffusion innovations, and advancements in model training techniques. There was a strong focus on optimizing performance and integrating the latest technological advancements.

-

Community Engagement and Speculations: Across several platforms, members engaged in forward-looking speculations about the impact of AI on various sectors. There were debates about the legal implications of deploying AI-driven services, discussions on the potential monopolistic behavior of AI giants, and the community's role in shaping the ethics and policies of AI development.

Significant Discussions Linked to URLs:

- HuggingFace's Regression Analysis: Understanding Depth Scaling in LLMs

- OpenAI's GPT-4o Release Features: Highlighted in multiple discussions across discords for its significant performance improvements and multimodal capabilities. Links to official release notes: GPT-4o Launch Details

- ThunderKittens Optimization Tool: Gaining traction for enhancing CUDA operations, linked here: ThunderKittens GitHub

- Falcon 2's Launch: Discussed for its multilingual and multimodal capabilities across different discords, further details can be found here: Falcon 2

- Legal Concerns Over AI Art: Heated discussions about the implications for artists and legal battles surrounding AI-generated art were prevalent, particularly highlighted in platforms discussing Stability.ai and Midjourney's operations.

GPT4O (gpt-4o-2024-05-13)

-

Regulatory Challenges and Platform Control:

- OpenAI's Regulatory Moves: Discussions spanned multiple communities about OpenAI's implementation of tighter control through measures like compulsory GPU signing and collaboration with the White House, raising concerns over monopolistic tendencies (e.g., [Unsloth AI (Daniel Han)]).

- Competitive Landscape: Concerns were also raised about how these moves could marginalize smaller competitors, favoring big tech companies, indicating a broader fear of restricted innovation in the AI space Nous Research AI.

-

Advancements in and Deployment of New Models:

- GPT-4o Release: Enthusiasm was noted for GPT-4o's launch, highlighting its free public access with certain limitations and multi-modal capabilities integrating audio, vision, and text reasoning OpenAI.

- Community Response: Some noted mixed emotions about GPT-4o's performance compared to previous models, with some excitement over new features overshadowed by noted reasoning inconsistencies Perplexity AI and HuggingFace.

-

Focus on Technical Optimization and Fine-Tuning:

- ThunderKittens: Gained attention for its promising kernel performance improvements, suggested to outperform existing methods like Flash Attention 2 CUDA MODE and Unsloth AI (Daniel Han).

- Fine-Tuning Issues: Multiple communities mentioned difficulties in fine-tuning models like Llama3, with discussions about specific solutions and optimization techniques HuggingFace.

-

Application and Use-Case Innovations:

- World Simulation and AI Agents: Platforms for running simulations like Websim and AI agents for tasks like generating PowerPoint presentations were shared. There was also notable interest in enhancing simulation capabilities, including integrating Digital Audio Workstations Nous Research AI.

- Community Tool Sharing: Users frequently shared code examples, scripts, and tutorials to assist with setting up and configuring AI tools, emphasizing collaborative knowledge sharing across projects like LangChain AI and HuggingFace.

Important Links:

- WizardLM GitHub: https://huggingface.co/alpindale/WizardLM-2-8x22B

- ThunderKittens GitHub: https://github.com/HazyResearch/ThunderKittens

- OpenRouter API Watcher Demo: https://orw.karleo.net/

- RAG Pipeline Tutorial: https://zackproser.com/blog/langchain-pinecone-chat-with-my-blog

- Deep Learning Initialization Guide: https://www.deeplearning.ai/ai-notes/initialization/index.html

- AI Research Papers (various links):

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- OpenAI's Regulatory Debate Heats Up: Community discusses OpenAI's recent moves toward tighter control, with particular focus on compulsory GPU signing and collaboration with the White House. Concerns were aired about creating a monopolistic environment favoring bigger companies over smaller competitors.

- WizardLM Steals the Spotlight: Despite controversy, the WizardLM-2-8x22B model has garnered support, originally released by Microsoft and bearing resemblance to GPT-4. The model stirred conversations about its availability and potential censorship, with resources shared on the WizardLM GitHub page.

- Tuning and Tooling for Peak Performance: On the technical side, discussions emerged about efficient methods and tools for fine-tuning models. Attention was on ThunderKittens kernel for its promising performance gains, potentially outdoing Flash Attention 2, found at ThunderKittens GitHub.

- Unsloth AI Gains Multi-GPU Support: Unsloth AI has been acknowledged for its efficient model fine-tuning capabilities and is slated to support multi-GPU functionality. Importance was given to the tool’s ability to integrate new model variants without needing separate branches, as detailed on Unsloth GitHub.

- Fine-Tuning Frustrations with Llama3: Engineers swapped tactics for addressing fine-tuning challenges with Llama3 models, discussing dataset sizes, padding quirks, and conversions across FP16 to GGUF format. Technical issues such as tokenization inaccuracies with GGUF tokenizers were also a key topic.

- A Peek at Altman's Q&A: OpenAI hosted a Q&A with CEO Sam Altman, focusing on the Model Spec and fostering community engagement. The session's motive is outlined in the Model Spec document.

- Llama Variants Get Finetuned for Token Classification: An engineer has contributed Llama variants optimized for token classification tasks, using LoRA adapters and trained on the conll2003 dataset. These models are accessible via their Hugging Face collection.

Stability.ai (Stable Diffusion) Discord

- SD3: More Myth than Model?: Discussions in the guild were rife with speculation about Stability AI's rumored SD3, akin to the Half-Life 3 anticipation. The lack of official release dates has led to a mix of hope and disappointment among users.

- Call for Fine-Tuning Assistance Answered: An expert stepped forward to aid with fine-tuning Stable Diffusion XL for ad generation, highlighting their experience with the machine learning backend of creativio.ai.

- Complexities of Model Usage and Configuration: Users shared challenges in downloading and setting up sizable models like CohereForAI's C4AI Command R+, and software such as KoboldAI and OogaBooga. These struggles underscored complexities related to software configuration and model file management.

- Art Styles and Animation Insights: Advice was offered on using gpt-4 for identifying art styles and the animatediff with controlnet tile method for animating artwork in a way that remains true to the original piece's aesthetic.

- Image Upscaling Quest: A user sought expertise for enhancing image resolutions using Automatic1111's forge with controlnet, highlighting a broader interest in achieving detailed and high-quality image upscaling within the community.

OpenAI Discord

- GPT-4o Unlocked for Public: OpenAI has released GPT-4o, offering free access with limitations on usage and advanced features reserved for Plus users. This model distinguishes itself with multi-modal capabilities, integrating audio, vision, and text reasoning. Launch Details and Usage Information.

- Mixed Emotions on GPT-4o's Performance: The engineer community's reaction to GPT-4o is divided, highlighting its enhanced speed and cost-effectiveness, albeit accompanied by a shorter memory span and occasional reasoning inconsistencies when compared to its predecessor. Excitement for voice and video feature integrations is palpable, tempered by the current lack of availability and some confusion over rollout schedules.

- Fine-Tuning the AI Toolset: Discussions on APIs reflect the technical crowd's interest in GPT-4T's extended 128k context for more nuanced applications, alongside strategies to manage the randomness at high-temperature settings. Practical concerns include vigilant monitoring of OpenAI's static pricing via their Pricing Page and awaiting the implementation of per-GPT memories discussed in the Memory FAQ.

- Programming Puzzles with Gemini 1.5: AI engineers are troubleshooting problematic moderation filters affecting responses in applications using Gemini 1.5 and shared steps for creating, managing, and linking to downloadable file directories using Python scripts—indicative of their resourceful approach to solving immersion-breaking application constraints.

- ChatGPT with a Supervisory Twist: A user queried about crafting a ChatGPT clone with a 3.5 model that incorporates user message monitoring by an overseeing establishment, suggesting a nuanced approach to interface replication that extends into the administrative oversight realm.

Nous Research AI Discord

- Llama Struggles Beyond 8k: The llama 3 70b model is exhibiting coherency issues when generating content over 8,000 tokens.

- Introducing MAP-Neo: The MAP-Neo project has been unveiled; it's a transparent, bilingual LLM trained on 4.5 trillion tokens, with resources and documentation available on Hugging Face, its dataset, and GitHub repository.

- Revolutionizing Conversational QA with ChatQA: A breakthrough detailed in an arXiv paper, ChatQA-70B outclasses GPT-4 in conversational QA, leveraging the InstructLab framework by IBM/Redhat that introduces incremental enhancements through curated weekly dataset updates, documented here.

- World Simulation Tech Talk: Members shared enthusiasm for WorldSim, a platform for running simulations and discussing philosophy, with technical discussions and bug reports on the simulator command issues. They approached world simulation with a desire for expanded features such as digital audio workstation integration.

- GPT-4o Stirring Debate: GPT-4o's impact on the AI field leveraged controversial opinions within the community, discussing its pros, such as improved coding performance and quantitative efficiency, alongside concerns about its proprietary nature and possible challenges to open-source AI.

Latent Space Discord

- PhD Thesis Worthy of Applause: An NLP PhD thesis attracted attention and praise, with a social media shout-out for the author's achievements.

- No Data Left Behind: Discussion turned to Llama 3's massive 15 trillion token training, sparking debate on data sources and prompting contrast with Stella Biederman’s stance on data necessity.

- AI Infrastructure - Feedback Wanted: A Substack post outlines new infrastructure services designed for AI agents, with a call for the community's input read more here.

- Falcon 2 Takes Flight, But With Tethered Wings: Falcon 2's launch stirred conversations around its leading-edge, multilingual, and multimodal facilities. Licensing conditions, however, raised eyebrows over their restrictiveness.

- GPT-4o Drops Jaws: Revelations around GPT-4o's capabilities, including its low latency and versatile responses, steered debate on API access and real-world performance, as enthusiasts shared OpenAI's latest unveilings.

- OpenAI Watch Party - Join In!: A guild member announced a watch party for an OpenAI event with pre-event festivities kicking off 30 minutes prior discord invite.

- Watch Party Woes: At the Open AI Spring Event, an initial hiccup with the stream's audio occurred, but quick community tips helped improve the situation.

- Apple vs. Google - The Speculative Saga: Amidst rumors of Apple lagging in AI, guild members shared insights into whether Siri might integrate GPT-4o, hinting at the specter of regulatory concerns related discussions.

- Live Impressions of GPT-4o: Live demonstrations of GPT-4o's emotional voice capabilities and its multimodal proficiency wowed engineers, stirring talks of real-time productivity and creative applications event playback.

- AI's Next Move - Competing in the Big Leagues: The community speculated about the competitive consequences of GPT-4o and potential disruptions to applications by Google, Siri, and others, with some considering these steps a stride towards mimicking human interaction.

Perplexity AI Discord

- Cheerio's Challenger: A faster alternative to the Cheerio library was sought for HTML content extraction. A user directed others to Perplexity's AI search for more information.

- Choosing Between AI Services: Conversations compared ChatGPT Plus with Perplexity Pro, with the latter being praised for its niche as an AI search engine enabling features like collections and model flexibility. Claude 3's usage limits in Perplexity Pro were a sore point, with users looking at YesChat for more generous quotas.

- GPT-4o Steals the Spotlight: The community engaged eagerly about the launch of GPT-4o, discussing its better speed and capabilities over preceding models. Interest was high regarding when Perplexity would incorporate GPT-4o into its services.

- Perplexity at the Helm of AI Search: Alexandr Yarats was spotlighted through his recent interview, shedding light on his trajectory from Yandex and Google to becoming Perplexity AI's Head of Search.

- Tutorial Inquiry Indicates Diverse User Base: A user's request for a Perplexity AI tutorial in Spanish signals the platform's global reach and the need for multilingual support resources. A link was shared for a "deep dive," albeit without explicit detail: Deep dive into Perplexity.

HuggingFace Discord

- Unlocking LLM Potential on Modest Hardware: Open-source LLM models like Mistral and LLaMa3 were discussed due to their lower hardware demands compared to ChatGPT. Resources such as LM Studio allow users to discover and run local LLMs.

- Pushing the Frontiers of AI Troubleshooting: Various technical issues were aired, including problems encountered while disabling a safety checker in StableDiffusionPipeline, GPT's data retrieval challenges in RAG applications, and fine-tuning of models like GPT-2 XL on Nvidia A10G hardware. There was also buzz around OpenAI's GPT-4o and its capabilities.

- Dynamic Approaches in AI Learning: From genAI user experience involving containerized applications (YouTube video) to a tutorial on Neural Network Initialization from DeepLearning.ai (deeplearning.ai article), and a JAX and TPU integration for VAR paper (GitHub for Equinox)—the community showcased a breadth of learning resources.

- Phi-3 On-The-Go and Robotic Breakthroughs: Highlighted resources included a paper about Phi-3's efficiency on smartphones (arXiv link), the book "Understanding Deep Learning" for grasping deep learning concepts, and a novel 3D Diffusion Policy (DP3) for robots (3D Diffusion Policy website).

- Innovative Creations and AI Deployments: Community members showcased an array of projects: an AI-powered storyteller (Alkisah AI), Holy Quran verses tool (Kalam AI), an OCR framework (OCR Toolkit on GitHub), fine-tuned Llama variants (HuggingFace collection), and a tutorial for an AI Discord chatbot (YouTube video).

LM Studio Discord

GPT Agents in Learning Limbo: GPT agents' inability to assimilate new information into their base knowledge caused buzz, with clarification on how information is stored as "knowledge" files that don't update the agent's core understanding.

Hardware Hurdles for Hi-Tech Pursuits: Engineers faced challenges running advanced models like Llama 3 70B Q8 on hardware with 128GB RAM, with PCIe 3.0 causing bottlenecks remedied by switching to PCIe 4.0 motherboards. Utilizing GPUs with less than 6GB VRAM for weighty models proved futile.

Yi Models Yield Enthusiasm: Yi-1.5 models, including 9B and quantized 34B variants, received praise and recommendations for a variety of tasks, with quantized models leveraging llama.cpp for improved performance.

Tooling Up for Efficiency: LM Studio's 0.2.22 update introduced a CLI tool, lms, for model management and boasted bug fixes in llama.cpp, while the community navigated the complexities of connecting OpenInterpreter to LM Studio and configuring headless installations on Linux servers.

Quest for Research Collaboration: Dispensing with corporate vernacular, the conversation sought aid and shared experiences for running MemGPT on various setups, revealing a collective endeavor to optimize this AI model.

OpenRouter (Alex Atallah) Discord

JetMoE 8B Free Hits a Snag: The JetMoE 8B Free model is experiencing downtime due to upstream overload, returning an error (502) to all requests until further notice.

Eye on the Models—OpenRouter API Watcher: An open-source tool called OpenRouter API Watcher has been unveiled, which keeps track of changes in OpenRouter's model availability, offering hourly updates via a web interface and an RSS feed with low overhead. Check out the demo.

A Beta Tester’s Dream with Rubik's AI Pro: Users can beta test and provide feedback for Rubik's AI Pro, an advanced research assistant and search engine, with 2 months of free premium access using a RUBIX promo code. Further details can be found at Rubik's AI.

Jetmoe’s Caveat: It has been confirmed that Jetmoe lacks internet access, which restricts its use cases, but it remains useful for academic research.

GPT-4o Joins OpenRouter: GPT-4o has been added to OpenRouter’s arsenal, supporting text and image inputs, and generating buzz for its performance and competitive pricing, although it lacks support for video and audio inputs.

Modular (Mojo 🔥) Discord

- Mojo's Contemplation on Pattern Matching: There was a vigorous debate about implementing pattern matching in Mojo, with affirmative stances on compiler efficiency and exhaustive case handling. Conversely, objections were raised on grounds of aesthetic preference for traditional

if-elseconstructs.

- Mojo Rises, Rust's Complexity Under Lens: Mojo's compiler, described as more navigable and straightforward than Rust's, was a hot topic. Discussions extended to Mojo's future development and, separately, the potential relationship between Mojo and MLIR.

- Innovations and Contributions in Mojo: Ideas were exchanged on incorporating yield-like behavior and new hashing techniques into Mojo. Links to proposed changes such as in this pull request and a YouTube talk also sparked discussions on the language's ownership model.

- Nightlies and Enhanced Mojo Performance: Discussions on GitHub Issues about CI tests in Ubuntu, custom

Hasherstruct proposals, and performance optimizations for Mojo'sListstructure highlighted the active nightly builds and their role in the ongoing development rhythm.

- String Building in Mojo's Landscape: A new repository for MoString received attention, offering a variation on StringBuilder approaches and a method to reduce memory allocation in Mojo, available here on GitHub.

CUDA MODE Discord

- ThunderKittens Strikes a Chord: Engineers are showing great interest in ThunderKittens, a project focusing on optimizing CUDA kernels. It’s seen as more approachable than CUTLASS for tensor core management, and its repository includes projects like NanoGPT-TK, heralded for its performance in GPT training.

- Triton's Expanding Universe: Knowledge sharing on Triton peaked with the recommendation of advanced learning resources, including a detailed YouTube lecture and pointers to GitHub repos such as PyTorch’s kernels. The excitement is palpable with discussions of internal performance and new domain-specific languages that could outperform current implementations.

- Learning on Demand: Upcoming expert talks on fusing kernels and CUDA C++ scans were announced, with Zoom as the venue. A University of Illinois lecture series on parallel programming is also accessible, offering Zoom sessions and a comprehensive YouTube playlist for independent study.

- Performance Tuning Tackled: Discussions tackled techniques to boost performance from calculating outside CUDA kernels to using max-autotune for kernels to compiler dynamics with Dynamo over Inductor, highlighting the nuanced trade-offs between kernel fusion benefits and configuration costs.

- Community Support and Query Resolution: Queries ranged from understanding GPU memory management with CUDA to seeking project assistance for thermal face recognition, involving requests for insights, papers, and Git repositories. Additionally, there’s been productive interaction over course content and GPU compatibility checks for builds.

Eleuther Discord

- Mind the Synthetic Hype!: Despite a bullish stance on synthetic data, some engineers exercise caution due to a previous hype cycle about 5-7 years ago, questioning if critical lessons will translate with the entry of new professionals in the field.

- Convolutional Contemplations: AI Engineers are comparing the performance of CNNs, Transformers, and MLPs for vision tasks, as noted in arXiv paper discussions, suggesting that while moderate scales show competitive performance, scaling up may require a mixed-method approach.

- Efforts in Model Compression: Conversations arose about model compression's impact on features and neural circuits, pondering if the lost features during compression are redundant or critically specialized revealing the dataset's diversity.

- Curiosity over New Attention Method: A new efficient attention approximation method using convolution matrices has been discussed with some skepticism, considering existing methods such as flash attention, alongside talks of depth scaling in Large Language Models (LLMs), referencing SOLAR and Yi 1.5 models.

- Insights into Falcon-2 and Copyright Conversations: The release of Falcon-2 11B, trained on a significant 5T of refined data and featuring a permissive license, sparked discussion, while ongoing debates about AI copyright implications highlight the competitive edge that may skew towards indemnifying corporations like Microsoft, highlighting a potential chilling effect on smaller players.

Interconnects (Nathan Lambert) Discord

- GPT-4o Ascends to the Top: GPT-4o, OpenAI’s latest model, has been demonstrated to outperform predecessors in coding and may raise the bar in other benchmarks like MATH. It has also become the strongest model on the LMSys Arena, boasting higher win-rates against all other models.

- REINFORCE Understood Through PPO Lens: A Hugging Face PR revealed that REINFORCE is a special case of PPO, presenting an interesting perspective on the relationship between the two reinforcement learning methods, documented in a recent paper.

- VideoFX Work in Progress Draws Eyes: Early footage of VideoFX showcased its burgeoning capabilities, generating interest with preview content on Twitter.

- Tokenizer Tuning Increases Efficiency: OpenAI has pushed a new update for their tokenizer, increasing processing speed by making use of a larger vocabulary as seen in the recent GitHub commit.

- Videos Capture Attention with Viral Potential: Within Interconnects' #reads, a surge of views on certain videos sparked conversations around promotion strategies, with one aiming to reach higher view counts inspired by another Huggingface video's popularity. There was even discourse on circumventing Stanford's licensing for wider dissemination of video content.

LAION Discord

- Artistic Anxieties over AI: Engineers discussed the implications of AI art on artists' livelihoods, examining the impact of services like Midjourney on art sales as well as potential legal repercussions. Some argued for fair use while others expressed concerns about derivative works, with reference to insights from The Legal Artist.

- Legal Buzz Surrounding AI: There was chatter around StabilityAI and Midjourney facing possible legal challenges given the current climate, with some hoping for David Holz to face repercussions for his work. The discussion included the unpredictable influence of jury decisions on the direction of such legal cases.

- Evolutions in AI Efficiency: Mention of improved efficiency in AI models sparked interest, with the spotlight on a fine-tuned Pixart Sigma model on Civitai and advancements in AI compute showcased by FlashAttention-2.

- Falcon 2 Takes Flight: Announcements highlighted the launch of Falcon 2 models boasting superior performance compared to Meta's Llama 3, with detailed information available through the Technology Innovation Institute.

- Audio's Textual Transformation: Engineers explored the conversion of voice datasets into tokens, emphasizing high-quality annotations for emotions and speaker attributes. They shared a Twitter post and a YouTube video on training transformers with audio data for further understanding.

LangChain AI Discord

- ISO Date Extraction Using LangChain: A member's request on how to extract and convert dates to ISO format led to shared code examples using the

DatetimeOutputParserin both Python and JavaScript, highlighting LangChain's functionality in structured output.

- Hook Up Local LLMs with LangChain: The conversation included guidance on integrating local open-source LLMs such as Ollama using LangChain, with Kapa.ai providing a breakdown of model definitions and prompt creation.

- Persistent Storage Solutions Beyond InMemoryStore: In the quest for persistent storage alternatives within LangChain and Gemini, some pointed to LangChain documentation for potential solutions, moving past the limited

InMemoryStore.

- Common Hurdles with HuggingFace Integration: Users shared experiences and fixes for frequent issues encountered when integrating HuggingFace models with LangChain, emphasizing the importance of model compatibility and precise API interactions.

- Tutorials and Resources to Enhance LangChain Know-How: The community spotlighted resources like a YouTube tutorial and a detailed blog post on creating a RAG pipeline with LangChain, with open requests for guidance on streaming and session management within LangChain applications.

LlamaIndex Discord

- Slide Decks on Automatic: Using the Llama3 RAG pipeline, a new system to generate PowerPoint presentations has been developed, incorporating Python-pptx. The workflow and integration details are shared in an article.

- Reflecting on Reflection: Hanane Dupouy's exploration of creating a financial agent that reflects on stock prices shows promise for advanced CRITIC applications, with an in-depth explanation available in their exposure.

- Moderation by RAG: Setting up a RAG pipeline for moderating user-generated images by converting images to text and checking against indexed rules is outlined, with a more detailed procedure available.

- RAG System Under the Microscope: A comprehensive article presented by @kingzzm covers the evaluation of RAG systems, utilizing libraries such as TruLens, Ragas, UpTrain, and DeepEval, with a link to the full article for the metrics.

- Distill Knowledge, Sharpen Models: A valuable discussion-centric blog post on the knowledge distillation technique used to fine-tune GPT-3.5 is recommended for engineers looking to increase model accuracy and performance.

OpenAccess AI Collective (axolotl) Discord

Tech-Savvy Inner Circle Shares AI Insights

- LLAMA3's Instructional Layer Secrets: An analysis shows key weights in LLAMA 3 concentrated in the K and V layers, suggesting possible freezing to induce stylistic variations without affecting its instructional prowess.

- Practicality of OpenOrca and AI Efficiency: AI enthusiasts evaluated the feasibility of re-running OpenOrca's deduplication for GPT-4o, roughly costing $650, while spotlighting methods like Based, Monarch Mixer, H3, and FlashAttention-2 to enhance computational efficiency, as discussed in a blog post.

- Development Chaos: Dependencies & Docker Woes: Developers reported difficulties ranging from AttributeError 'LLAMA3'* errors when using Docker to outdated dependencies leading to conflicts, emphasizing the transition from torch 2.0.0 to 2.3.0 with the need for updates in fastchat and pyet**.

- AXOLOTL Interactions Met with Errors and Questions: The AI community faces diverse challenges, including error messages converting models to GGUF, loading Gemma-7B, and pragmatically merging QLoRA into base models, often left unresolved within thread discussions.

- No Quick Fix in Sight: Inquiries addressed to the Axolotl-phorm-bot about topics like pruning support, continuous pretraining, LoRa methods, and QLoRA merging techniques prompted searches in Axolotl's repository without providing immediate solutions - details check on Phorm's platform.

Deploying practical solutions and seamless updates remains a collective goal in tackling emergent AI tech puzzles — updates and breakthroughs to follow.

OpenInterpreter Discord

Goofy Errors and Speedy Performances: Claude API users reported "goofy errors" impeding its use, whereas GPT-4o garnered praise for its swift performance, clocking at "minimum 100 tokens/s." Local models such as Mixtral and Llama3 were considered inferior to GPT-4.

PyWinAssistant Showcases AI Control over UI: An open-source project dubbed PyWinAssistant allows control of user interfaces through natural language, leveraging Visualization-of-Thought for spatial reasoning. Excitement grew as users shared a GitHub repo and a live YouTube demo.

Hardware Headaches and Software Solutions: Integration of LiteLLM, Groq and Llama3 successfully confirmed, while another user struggled to connect their 01-Light device. Separate issues arose with Python script execution resolved by importing OpenInterpreter correctly.

Shipment Updates and Support Channels: Queries about the 01 hardware brought news of upcoming batch shipments, and an iOS app for the hardware is in beta, shared on GitHub. Order cancellations were directed to help@openinterpreter.com.

Dev Discussions on Model Swapping: The 01 dev preview prompted exchanges on switching to local models using poetry run 01 --local, offering insights into model selection commands.

tinygrad (George Hotz) Discord

- Tensor Talk Tackles Variable Shapes: Engineers debated how to represent tensors with variable shapes in tinygrad, a topic especially relevant in transformers due to changing token numbers. They referred to Tinygrad's handling of variable shapes and code snippets from Whisper (snippet 1, snippet 2) for insights.

- Dim Versus Axis: Different Terms, Same Concept?: There was a clarification sought on the terminology difference between "dim" and "axis" in tensor operations, concluding that the terms are mostly interchangeable and any differences might be rooted in historical conventions.

- Debugging

AssertionErrorDuring Training: A user faced anAssertionErrorrelated to missing gradients during a bigram model training which led to a discussion on proper settings (Tensor.training = True). The conversation included a reference to a GitHub pull request to prevent such issues.

- Feature Aggregation in Neural Turing Machines: An NTM implementation prompted discussions on feature aggregation via tensor operations and optimization, for which code examples were exchanged and ideas on efficiency improvements were discussed (aggregate feature code).

- Navigating

wherein Backprop Challenges: Participants worked through a backpropagation issue with a 'where' call in tinygrad that was causingRuntimeError. The workaround involved adetach().where()method, highlighting a PyTorch-to-tinygrad gradient challenge.

Cohere Discord

- Token Troubles and Model Mechanics: A query on the unexpected surge in input tokens was clarified; web searches using command 'r' result in context passing and higher token count, leading to billing charges. Meanwhile, the challenge of 'glitch tokens' in language models like SolidGoldMagikarp was acknowledged with a linked arXiv paper, which discusses detection methods for these potentially problematic tokens.

- Open-Source Embeddings and Billing Brain Teasers: No consensus was reached about the open-source nature of embedding models due to a lack of responses. In a separate issue, billing confusion over a $0.63 charge was resolved, attributed to the amount due since the last invoice.

- Aya vs. Cohere Command Plus - Clash of the Models: In a comparison between Aya and Cohere Command Plus models, Aya was reported less accurate, even with a 0 temperature setting, with one user suggesting its best use case in translation tasks.

- Specializing LLMs Seek New Horizons in Telecom: A challenge to tailor large language models (LLMs) for the telecom sector, focusing on areas such as 5G, was shared, with more details found on the Zindi Africa competition page.

- In Search of a Chat-with-PDF Solution: A call was made for references to a "chat with PDF" application utilizing Cohere, with the incentive being collaboration and knowledge-sharing among members.

Datasette - LLM (@SimonW) Discord

- GPT-4o Still Falling Short: Members shared frustration about GPT-4o's inaccuracies, experiencing a 50% success rate when asking the model to list book titles it "saw" in a library scenario.

- Voice Assistant Marketing Missteps: Recent voice assistant promotion mishaps, including unwanted giggling from the devices, drew criticism from users who called it "embarrassing".

- Custom Instructions Could Improve Voice Assistants: Hopes are pinned on custom instructions to improve the interactions with voice assistants, aiming to eliminate awkward behavior.

- AGI Believers Club Lacks Members: Skepticism prevails about the near-term development of AGI, with engineers expressing a lack of belief in its imminent advent.

- Law of Diminishing Returns in LLMs: Discussions indicate a consensus that there are diminishing improvements in new versions of large language models, and current models have untapped capabilities.

Mozilla AI Discord

- Beware Fake Repositories: An announcement warned about a fake OpenELM repository; there is no GGUF (GitHub User File) for OpenELM currently available, cautioning the community against potential scams.

- llamafile Archives Receive a Boost: A new pull request (PR) was mentioned for an upgrade script for llamafile Archives, based on a script from Brian Khuu's blog, offering improvement and maintenance for file handling processes.

- Containers Get a Green Light: Confusion around using containerization tools like podman or kubernetes was resolved, affirming that utilizing containers for operations is approved and encouraged for deployment consistency and scalability.

- Performance Check for Hermes-2-Pro: Experiences with the Hermes-2-Pro-Llama-3-8B-Q5_K_M.gguf running on an AMD 5600U were shared, noting response times of approximately 10 seconds and RAM usage spikes of 11GB.

- Model Troubleshooting: Batch Size Errors: Reports surfaced of an error affecting both Llama 8B and Mistral models involving update_slots and n_batch size issues. High RAM allocation appears to mitigate the issue, which is less prevalent in other models like LLaVa 1.5 and Llama 70B.

DiscoResearch Discord

Searching for German Content: A pursuit for diverse German YouTube channels to train a Text-to-Speech model led to suggestions such as using Mediathekview to download content. The Mediathekview's JSON API was also highlighted as a resourceful tool, as seen in the GitHub repository.

Keep It English: A reminder was issued within the discussions to ensure that English remains the primary language for communication, possibly to maintain the accessibility of discussions.

Demo Status Check: An inquiry about the status of a unidentified demo received no response, indicating either a lack of information or attention to the query.

Thumbs Up for... Something: Positive feedback was expressed with a brief "It's really nice," comment, though the context of this satisfaction wasn't expanded upon.

Curiosity for RT Audio Interface: There's evident curiosity and excitement about the "RT Audio interface" in applications beyond chat, but experiences or results have not yet been shared in the discussions.

LLM Perf Enthusiasts AI Discord

- Claude Beats Llama at Haiku: In a showdown of linguistic prowess, engineers compared the submodel accuracy of Claude 3 Haiku with Llama 3b Instruct's entity extraction capabilities. Initial experiments with fuzzy matching proved fruitless, sparking interest in more sophisticated submodel matching techniques.

- Teasers and Voices Stir Excitement: Anticipation is building in the community as OpenAI's Spring Update has been teased, promising the introduction of GPT-4o. A notable highlight is none other than Scarlett Johansson voice-featured in the update, sparking both surprise and amusement among members.

- Audio Futures Discussed: Technical discussions speculated on OpenAI's potential integration of audio functionalities, envisioning direct audio input-output support for an AI assistant.

- OpenAI Update Available: Engineers eager for the latest advancements took note of the OpenAI Spring Update, which includes information on GPT-4o, ChatGPT enhancements, and possibly more, streamed live on May 13, 2024.

Alignment Lab AI Discord

AlphaFold Goes Social: The AlphaFold3 Federation has sprung into action, inviting participants to a meet on May 12th at 9pm EST focusing on updates and pipeline development, with an open invitation link here.

Fasteval on the Brink: The fasteval project seems to be ending, but hope remains for someone to assume the helm; the current maintainers are open to transferring the project found on GitHub, or else they suggest archiving it.

AI Stack Devs (Yoko Li) Discord

- Need for Speed Customization?: There's interest in personalizing the AI Town experience; specifically, adjusting the character moving speed and number of NPCs. This feedback indicates user desire for more control over gameplay mechanics.

- Balancing NPC Interactions: A user suggested optimizing AI Town by reducing NPC interaction frequency to improve player-NPC interaction quality. They emphasized the performance challenges when running AI Town locally with the llama3 model.

Skunkworks AI Discord

- A Casual Share for Tech Enthusiasts: User pradeep1148 shared a YouTube video in the #off-topic channel, which may be of interest to fellow AI engineers. The content of the video has not been described, so its relevance to the technical discussions is unknown.

YAIG (a16z Infra) Discord

- Consensus in AI Discussions Achieved: The notorious brevity of pranay01's response with a simple "Agree!" reflects either alignment or the conclusion of a discussion on a potentially complex AI infrastructure topic. No further context was provided to detail the nature of the agreement.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (833 messages🔥🔥🔥):

- Community criticizes OpenAI's regulatory moves: Members discussed OpenAI's GPU signing and collaboration with the White House as moves to monopolize and control the AI space. One noted that OpenAI wants to make authorization mandatory, restricting competition ("god i hate regulations on anything tech when it benefits only the top companies").

- Support expands for 'WizardLM' despite controversy: Members shared links to resources on the contentious WizardLM-2-8x22B model. Participants highlighted that it was initially released by Microsoft and later censored due to its similarity to GPT-4 (WizardLM GitHub).

- Discord members discuss efficient fine-tuning and new tools: Various tools and kernels like ThunderKittens were discussed for improving model training and inference. A new kernel, ThunderKittens, was noted for its promise to outperform Flash Attention 2 (ThunderKittens GitHub).

- Unsloth receives praise and updates: Users expressed appreciation for Unsloth's library for fine-tuning models efficiently. Unsloth announced upcoming multi-GPU support and the integration of models such as Qwen's recent versions without a specific additional branch requirement (Unsloth GitHub).

- Fine-tuning challenges with Llama models discussed: Members shared experiences and troubleshooting tips around fine-tuning processes, specifically with providing dataset sizes and padding issues. Converting and handling different model formats like FP16 to GGUF was also a notable topic.

- ThunderKittens: A Simple Embedded DSL for AI kernels: no description found

- Rethinking Machine Unlearning for Large Language Models: We explore machine unlearning (MU) in the domain of large language models (LLMs), referred to as LLM unlearning. This initiative aims to eliminate undesirable data influence (e.g., sensitive or illega...

- eramax/nxcode-cq-7b-orpo: https://huggingface.co/NTQAI/Nxcode-CQ-7B-orpo

- Hugging Face – The AI community building the future.: no description found

- alpindale/WizardLM-2-8x22B · Hugging Face: no description found

- NTQAI/Nxcode-CQ-7B-orpo · Hugging Face: no description found

- tiiuae/falcon-11B · Hugging Face: no description found

- Tweet from Daniel Han (@danielhanchen): Was fixing LLM fine-tuning bugs and found 4 issues: 1. Mistral: HF's batch_decode output is wrong 2. Llama-3: Be careful of double BOS 3. Gemma: 2nd token has an extra space - GGUF(_Below) = 3064...

- Joy Dadum GIF - Joy Dadum Wow - Discover & Share GIFs: Click to view the GIF

- Anima/air_llm at main · lyogavin/Anima: 33B Chinese LLM, DPO QLORA, 100K context, AirLLM 70B inference with single 4GB GPU - lyogavin/Anima

- Gojo Satoru Gojo GIF - Gojo Satoru Gojo Ohio - Discover & Share GIFs: Click to view the GIF

- GitHub - HazyResearch/ThunderKittens: Tile primitives for speedy kernels: Tile primitives for speedy kernels. Contribute to HazyResearch/ThunderKittens development by creating an account on GitHub.

- GitHub - lilacai/lilac: Curate better data for LLMs: Curate better data for LLMs. Contribute to lilacai/lilac development by creating an account on GitHub.

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- remove convert-lora-to-ggml.py by slaren · Pull Request #7204 · ggerganov/llama.cpp: Changes such as permutations to the tensors during model conversion makes converting loras from HF PEFT unreliable, so to avoid confusion I think it is better to remove this entirely until this fea...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- LLaMA-Factory/scripts/llamafy_qwen.py at main · hiyouga/LLaMA-Factory: Unify Efficient Fine-Tuning of 100+ LLMs. Contribute to hiyouga/LLaMA-Factory development by creating an account on GitHub.

- Lorem Function – Typst Documentation: Documentation for the `lorem` function.

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #random (15 messages🔥):

- OpenAI hosts Q&A for community engagement: OpenAI’s CEO Sam Altman held a Q&A on Reddit to discuss the newly released Model Spec, encouraging community interaction and questions. The document outlines desired model behavior in OpenAI's API and ChatGPT.

- Mixed feelings on AI updates: Members expressed a range of emotions about potential OpenAI updates. While there were hopes for revitalization, others felt cautious optimism or skepticism given past experiences and current market dynamics.

- Skepticism about OpenAI releasing open-source models: Discussion highlighted doubts about OpenAI releasing models open-source due to potential impacts on their business model and reputation. Comparisons were made to other companies like Meta, where open-source releases were either forced or strategic responses to competition.

- Debate on the future of AI development publicity: The channel featured a debate on whether the reporting of an "AI winter" impacts OpenAI, with consensus leaning towards minimal impact due to OpenAI's current industry status. Discussion also covered the incentives and risks associated with releasing AI models open-source.

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #help (312 messages🔥🔥):

- Challenges with Quantized Models and TGI: A member highlighted that quantized models often result in sharding errors on HF dedicated inference when used with TGI. They noted that the models need to be saved in 16-bit format via

model.save_pretrained_merged(...)to avoid issues (TGI requires 16-bit models).

- Issues with GGUF Tokenizers: There were discussions about tokenization issues, particularly with Gemma's GGUF models. Members noted problems like incorrect tokenization and an extra space being added to the first token.

- Finetuning Llama3 Models on Colab: Multiple users faced and resolved issues fine-tuning Llama3 models. One user mentioned a solution was found by saving to GGUF manually and ensuring models are saved and loaded correctly, with relevant documents and example notebooks being effective guides.

- Multi-GPU and Multi-Cloud Discussions: There were suggestions and debates on multi-GPU and cloud-based training options. Some members voiced concerns about high prices and proposed potential partnerships with cloud providers to offer cost-effective solutions for commercial users.

- Issues with Unsloat Installation on Colab: Problems related to installing and importing Unsloth on Colab were addressed. Solutions included ensuring the correct runtime settings, particularly GPU settings, and following instructions precisely.

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Google Colab: no description found

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Load: no description found

- unsloth/unsloth/__init__.py at d3a33a0dc3cabd3b3c0dba0255fb4919db44e3b5 · unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - unslothai/hyperlearn: 2-2000x faster ML algos, 50% less memory usage, works on all hardware - new and old.: 2-2000x faster ML algos, 50% less memory usage, works on all hardware - new and old. - unslothai/hyperlearn

- I got unsloth running in native windows. · Issue #210 · unslothai/unsloth: I got unsloth running in native windows, (no wsl). You need visual studio 2022 c++ compiler, triton, and deepspeed. I have a full tutorial on installing it, I would write it all here but I’m on mob...

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Sou Cidadão - Colab: no description found

Unsloth AI (Daniel Han) ▷ #showcase (1 messages):

- SauravMaheshkar shares Llama finetuned variants: A member has been working on finetuning Llama variants for Token Classification and has uploaded some of the model weights to the 🤗 hub. The fine-tuned variants include

unsloth/llama-2-7b-bnb-4bittrained on the conll2003 dataset using LoRA adapters, and they shared a collection link.

Link mentioned: LlamaForTokenClassification - a SauravMaheshkar Collection: no description found

Stability.ai (Stable Diffusion) ▷ #general-chat (976 messages🔥🔥🔥):

- Discord user wonders about SD3's existence: Users speculated about the Stability AI's upcoming SD3, questioning if it would ever be released. Sentiments varied with some expressing disappointment over missed release dates and others humorously comparing the situation to "Half-Life 3."

- Expertise needed for Fine-Tuning in SDXL: A plea for assistance with fine-tuning Stable Diffusion XL for generating product ads drew responses. One experienced user offered to help, showcasing their past work on the ML backend of creativio.ai.

- Locating and using models for AI tasks proves challenging: Users discussed downloading and running large language models, like CohereForAI's C4AI Command R+, and the complicated process of configuring software like KoboldAI and OogaBooga. Frustrations were expressed over the difficulty and large file sizes involved.

- Recognizing and animating art styles: Users suggested studying art history or using tools like gpt-4 to identify art styles. For slight animations close to the original image, it was recommended to use methods like animatediff with controlnet tile.

- Challenges with image upscaling: A user faced difficulties in finding an effective method for upscaling images using Automatic1111's forge with controlnet. They sought advice on achieving high-quality, detailed upscales.

- dranger003/c4ai-command-r-v01-iMat.GGUF · Hugging Face: no description found

- Lewdiculous/Average_Normie_l3_v1_8B-GGUF-IQ-Imatrix · Hugging Face: no description found

- CohereForAI/c4ai-command-r-plus · Hugging Face: no description found

- CohereForAI/c4ai-command-r-v01 · Hugging Face: no description found

- jonathan frakes telling you you're wrong for 47 seconds: it never happened

- Nikolas Cruz's Depraved Google Search History: A glimpse of the Parkland shooter's descent into the dark bowels of the Internet. This is why parents should monitor what their children do online. Warning: ...

- GitHub - nullquant/ComfyUI-BrushNet: ComfyUI BrushNet nodes: ComfyUI BrushNet nodes. Contribute to nullquant/ComfyUI-BrushNet development by creating an account on GitHub.

- PORK NIGHTMARE: ATTENTION !!! Âmes sensibles s'abstenir car vous regardez en face certaines des choses les plus sombres que votre humanité à engendré.ARRÊTEZ-TOUT !!!▼▼ RÉSE...

- no title found: no description found

- GitHub - KoboldAI/KoboldAI-Client: Contribute to KoboldAI/KoboldAI-Client development by creating an account on GitHub.

- GitHub - Zuellni/ComfyUI-ExLlama-Nodes: ExLlamaV2 nodes for ComfyUI.: ExLlamaV2 nodes for ComfyUI. Contribute to Zuellni/ComfyUI-ExLlama-Nodes development by creating an account on GitHub.

- GitHub - LostRuins/koboldcpp: A simple one-file way to run various GGML and GGUF models with KoboldAI's UI: A simple one-file way to run various GGML and GGUF models with KoboldAI's UI - LostRuins/koboldcpp

- Reddit - Dive into anything: no description found

- Character Consistency in Stable Diffusion - Cobalt Explorer: UPDATED: 07/01– Changed templates so it’s easier to scale to 512 or 768– Changed ImageSplitter script to make it more user friendly and added a GitHub link to it– Added section...

OpenAI ▷ #annnouncements (2 messages):

- GPT-4o offers free public access with limitations: OpenAI announced the launch of GPT-4o and features like browse, data analysis, and memory available to everyone for free, but with usage limits. Plus users will enjoy up to 5x higher limits and early access to features such as the macOS desktop app and next-gen voice and video capabilities. More info.

- Introducing GPT-4o with multi-modal capabilities: OpenAI's new flagship model, GPT-4o, can reason in real-time across audio, vision, and text. Text and image input capabilities are available in the API and ChatGPT now, with voice and video features to follow in the coming weeks. Details here.

OpenAI ▷ #ai-discussions (684 messages🔥🔥🔥):

- GPT-4o debuts with mixed reviews: Members discussed the new GPT-4o's performance, noting it is faster and cheaper but with inconsistencies in reasoning and shorter memory compared to GPT-4. Some users appreciated its abilities, while others found GPT-4 to have better reasoning capabilities for custom instructions.

- Rollout confusion and feature anticipation: Members experienced varied rollout times for access to GPT-4o, both through API and ChatGPT. There was noticeable enthusiasm for upcoming features like real-time camera use and new voice capabilities, though these have not been fully rolled out yet.

- Classic vs. New Model debate: Users debated the practicality of maintaining GPT-4 when GPT-4o is available, considering the latter's lower cost and fast performance. Some pointed out specific cases where GPT-4 still performed better, leading to mixed decisions on which model to use.

- Feature accessibility queries: Queries about the availability of specific features like the new macOS app, visual capabilities, and voice cloning in the GPT-4o API were prominent. It was clarified that many of these features would be gradually available in the coming weeks.

- General excitement and skepticism: The community expressed a blend of excitement and skepticism regarding the new updates, with many looking forward to broader access and testing the new features in real-world applications.

- Twerk Dance GIF - Twerk Dance Dog - Discover & Share GIFs: Click to view the GIF

- Introducing GPT-4o: OpenAI Spring Update – streamed live on Monday, May 13, 2024. Introducing GPT-4o, updates to ChatGPT, and more.

- Sync codebase · openai/tiktoken@9d01e56: no description found

- Models - Hugging Face: no description found

OpenAI ▷ #gpt-4-discussions (126 messages🔥🔥):

- **Issues Passing Files to GPT Actions**: A member asked if anyone figured out how to pass uploaded files to a GPT action. There wasn't a clear resolution provided in the discussion.

- **GPT-4T API Provides Higher Context**: Discussion highlighted that the API for GPT-4T is less restrained and currently allows a 128k context. Members discussed the nuances of this capability.

- **Random Output with High Temperature Settings**: A member experienced random outputs when setting the temperature above 1.5. Another advised keeping the temperature below 1 for stable and coherent responses.

- **Fetching OpenAI Model Pricing**: Members shared that OpenAI pricing is static and can be reviewed on the [OpenAI pricing page](https://openai.com/api/pricing/). There are no alerts for pricing changes, so users need to monitor the page manually.

- **Custom GPTs and Cross-Session Memory**: There was confusion about custom GPTs' cross-session memory capabilities, clarified by a member noting that per-GPT memories have not rolled out yet. More details about this can be found in the [OpenAI Memory FAQ](https://help.openai.com/en/articles/8590148-memory-faq).

OpenAI ▷ #prompt-engineering (32 messages🔥):

- Moderation Filter Issue with Gemini 1.5: A user reported that their application consistently fails to respond to queries related to "romance package" due to an unspecified moderation filter. Despite setting all blocks to none and trying different settings, the issue persists, making it difficult to implement their integrations at a major resort.

- Discussion on Safety Settings: Members discussed whether the problem with the moderation filter could be due to safety settings not being explicitly disabled. One member suggested testing in the AI Lab to ensure no syntax errors are affecting the results.

- API Keys and Temperature Settings Experimentation: The user tried generating new API keys and adjusting temperature settings to resolve the issue but had no success. This has led them to conclude that the problem might be on Google's end.

- Help Offered and Syntax Check Recommended: Another member offered help and suggested checking the syntax in the AI Lab to confirm that the issue is not due to improper syntax or safety filter settings. The user appreciated this assistance but remained convinced that the problem is external.

- Python Script for File Operations: A user shared a Python script snippet that outlines creating a directory, writing Python files in separate sessions, and zipping the directory. This script demonstrates a method for displaying a link to download the resulting zip file.

OpenAI ▷ #api-discussions (32 messages🔥):

- Moderation filter issue in Gemini 1.5: A user reported an issue with their application experiencing consistent failures when users inquire about "romance package" or similar topics. Despite changing defaults and generating new API keys, the problem persists, suggesting potential model training restrictions.

- Troubleshooting AI Safety Settings: Another user suggested explicitly disabling safety settings to potentially resolve the issue. They stressed the importance of ensuring that safety filters are correctly turned off and offered a screenshot method for further verification.

- Google AI Lab potential solution: The conversation shifted to testing in Google AI Lab to determine if syntax errors are the cause. Suggestions included checking the safety filters and possibly testing for syntax errors in the lab.

- File directory creation in Python: A user requested guidance on creating a full file tree, writing files in Python sessions, and zipping a directory, asking for a downloadable link upon completion. The task involves programmatically setting up a directory structure and managing files through Python scripts.

OpenAI ▷ #api-projects (2 messages):

- Creating a ChatGPT clone with tracking: A user inquired about the feasibility of creating a ChatGPT clone utilizing the 3.5 model but with the capability for user messages to be monitored by the organization. This implies replicating the ChatGPT interface while adding a message tracking feature.

Nous Research AI ▷ #ctx-length-research (1 messages):

king.of.kings_: i am struggling to get llama 3 70b to be coherent over 8k tokens lol

Nous Research AI ▷ #off-topic (15 messages🔥):

- Aurora in France: A member mentioned seeing the aurora borealis over the central volcano of Arvenia in Auvergne, France. This surreal natural phenomenon caught their attention and seemed worth sharing.

- YouTube Links Shared: Two YouTube links were shared: one titled "Udio Testing: You never knew your own name : whispers in the void" and another by another member, without additional descriptions.

- Introducing MAP-Neo: A user announced the release of MAP-Neo, a fully transparent bilingual LLM trained on 4.5T tokens, and shared links to Hugging Face, a dataset, and the GitHub repository.

- Kingdom Come: Deliverance Cooking Mechanic: A user discussed the perpetual stew mechanic in the game Kingdom Come: Deliverance, noting its historical accuracy. They shared a personal recipe involving slow-cooking vegetables and meat, highlighting a shift in cooking methods based on hunger.

- RPA and Software Automation: A member inquired about a library for interacting directly with software windows via RDP, like RPA for automation. Another member suggested using Frida for runtime hooks and exposing functionality via an HTTP API, although concerns were raised about the complexity due to not having access to software binaries.

- Mother Day GIF - Mother day - Discover & Share GIFs: Click to view the GIF

- Udio Testing: You never knew your own name : whispers in the void: no description found

- Neo-Models - a m-a-p Collection: no description found

- m-a-p/Matrix · Datasets at Hugging Face: no description found

- GitHub - multimodal-art-projection/MAP-NEO: Contribute to multimodal-art-projection/MAP-NEO development by creating an account on GitHub.

Nous Research AI ▷ #interesting-links (6 messages):

- Hierarchical Correlation Reconstruction in Neural Networks: A member posted a link to an arXiv paper discussing optimization of artificial neural networks through hierarchical correlation reconstruction. The paper contrasts typical unidirectional value propagation with the multidirectional operation of biological neurons.

- Taskmaster Episode Roleplay App: Another member shared their creation of a React app for roleplaying as a Taskmaster contestant, using a state machine that encodes each stage of an episode. Users need to input their own OpenAI key and may encounter clunky outputs but can check out the code on GitHub.

- Yi-1.5-34B-Chat Model Update: One message highlighted the 01-ai/Yi-1.5-34B-Chat model on Hugging Face. It was updated recently and had over a thousand uses, as seen here.

- Detailed Industrial Military Complex Knowledge Graph: A member used Mistral 7B instruct v 0.2 and their framework, llama-cpp-agent, to create a 40-node knowledge graph of the Industrial Military Complex. They shared the framework on GitHub which supports various servers and APIs like llama.cpp and TGI.

- Detailed Thoughts on OpenAI's Technology and Strategy: A user linked to a Twitter thread offering a deep dive into OpenAI's advancements in audio-to-audio mapping and video streaming to transformers. It speculates on OpenAI's strategic moves and potential Apple integrations with GPT-4o as a precursor to GPT-5.

- Biology-inspired joint distribution neurons based on Hierarchical Correlation Reconstruction allowing for multidirectional neural networks: Popular artificial neural networks (ANN) optimize parameters for unidirectional value propagation, assuming some guessed parametrization type like Multi-Layer Perceptron (MLP) or Kolmogorov-Arnold Net...

- Yi-1.5 (2024/05) - a 01-ai Collection: no description found

- Taskmaster-LLM/src/App.js at main · LEXNY/Taskmaster-LLM: Contribute to LEXNY/Taskmaster-LLM development by creating an account on GitHub.

- GitHub - Maximilian-Winter/llama-cpp-agent: The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured output. Works also with models not fine-tuned to JSON output and function calls.: The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured...

Nous Research AI ▷ #general (741 messages🔥🔥🔥):

- OpenAI's GPT-4o divides opinions: Members discussed the launch of GPT-4o, noting its dual input-output capabilities and improved coding performance. There was significant debate about its lower token limit (2048) and potential impact on the open-source AI community.

- Speed improvements with mixed feelings: Users noted GPT-4o's increased speed and lower costs, attributing the efficiency to potential quantization and model size reductions. Despite these benefits, some were disappointed by the limited token output and pricing.