[AINews] Google's Agent2Agent Protocol (A2A)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Remote agents are all you need.

AI News for 4/8/2025-4/9/2025. We checked 7 subreddits, 433 Twitters and 30 Discords (229 channels, and 5996 messages) for you. Estimated reading time saved (at 200wpm): 563 minutes. You can now tag @smol_ai for AINews discussions!

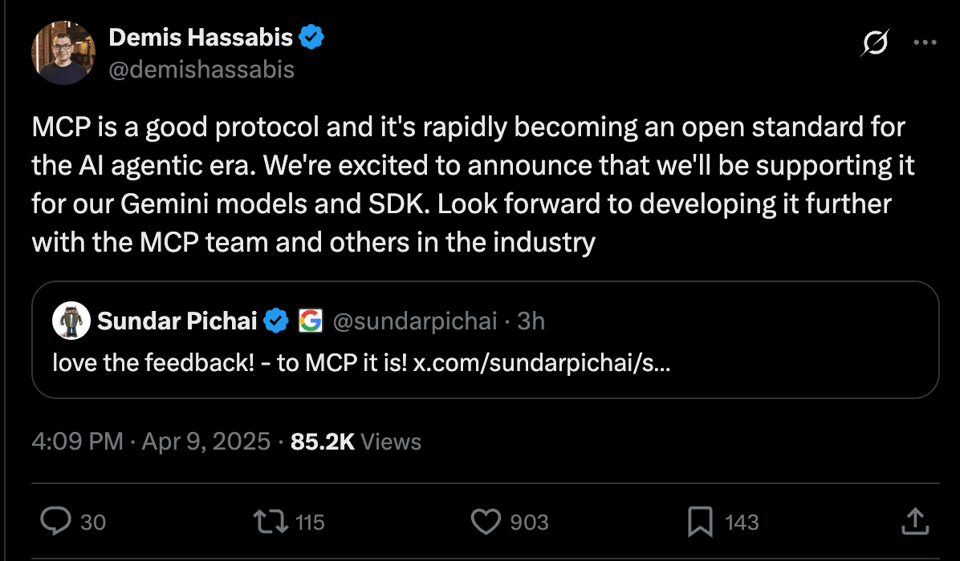

We are deep in Google Cloud Next announcements, and in a 1-2 punch, the CEOs of Google and DeepMind announced both their full MCP support:

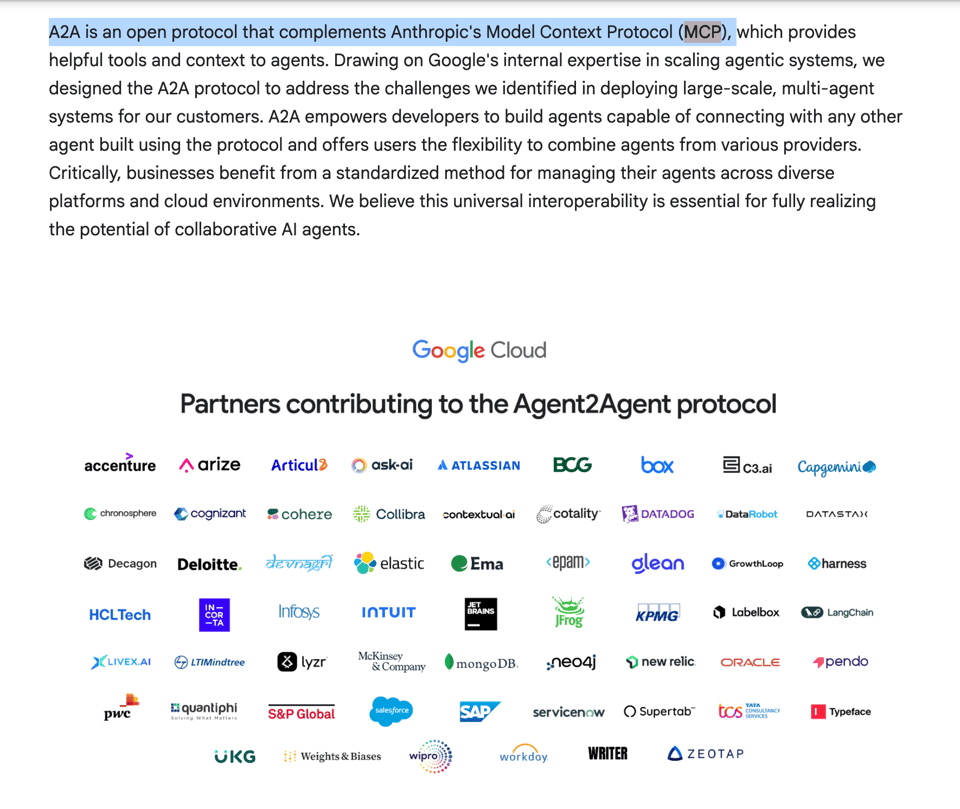

And their new Agent to Agent protocol to complement MCP with a huge list of partners:

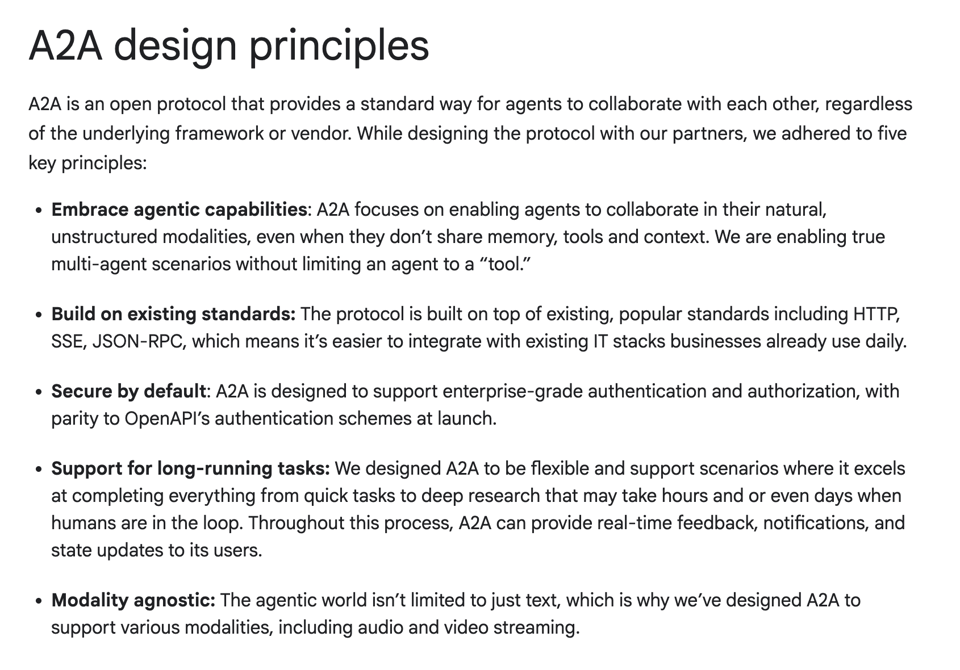

It is tempting to pit Google against Anthropic, but the protocols were designed to work together to address perceived gaps in MCP:

The spec includes:

- the Agent Card

- the concept of a Task - a communication channel between the home agent and the remote agent for passing Messages, with an end result Artifact.

- Enterprise Auth and Observability recommendations

- Streaming and Push Notification support (again with push security in mind)
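To make the vocabulary above concrete, here is a minimal Python sketch of how the Agent Card, Task, Message, and Artifact concepts could relate to one another. Every class and field name below is an illustrative assumption, not the schema from the A2A draft specification; consult the spec itself for the real wire format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


# NOTE: hypothetical names throughout; this is not the official A2A schema.
@dataclass
class AgentCard:
    """Advertises a remote agent so a home agent can discover it."""
    name: str
    url: str
    skills: List[str] = field(default_factory=list)


@dataclass
class Message:
    """One turn exchanged inside a Task."""
    role: str  # "home" or "remote" in this sketch
    text: str


@dataclass
class Artifact:
    """The end result a remote agent hands back."""
    name: str
    content: str


@dataclass
class Task:
    """Communication channel between the home agent and one remote agent."""
    task_id: str
    remote: AgentCard
    messages: List[Message] = field(default_factory=list)
    artifact: Optional[Artifact] = None

    def send(self, role: str, text: str) -> None:
        self.messages.append(Message(role, text))

    def complete(self, artifact: Artifact) -> None:
        self.artifact = artifact

    @property
    def done(self) -> bool:
        return self.artifact is not None


card = AgentCard("translator", "https://example.invalid/agent", ["translate"])
task = Task("task-1", card)
task.send("home", "Translate 'hello' to French.")
task.send("remote", "Working on it.")
task.complete(Artifact("translation", "bonjour"))
print(task.done, len(task.messages), task.artifact.content)
```

The point of the sketch is only the shape: a Task owns the Message history and finishes when an Artifact is attached.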

Launch artifacts include:

- The draft specification

- The documentation website

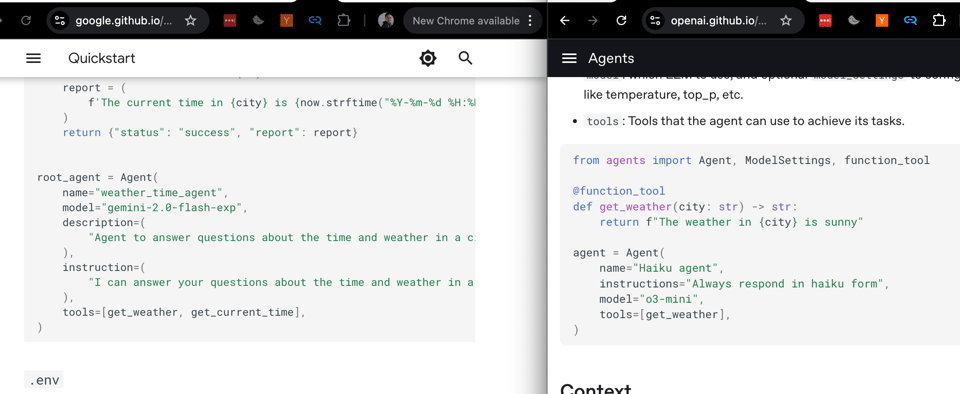

- The Agent Development Kit which looks... oddly familiar

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

Model Releases and Updates

- Moonshot AI's Kimi-VL-A3B, a multimodal LM with 128K context and MIT license, outperforms GPT4o on vision + math benchmarks: The release comprises an MoE VLM and an MoE Reasoning VLM with only ~3B active parameters. @reach_vb noted that the model showed strong multimodal reasoning (36.8% on MathVision) and agent skills (34.5% on ScreenSpot-Pro) with high-res visuals and long context windows. Model weights are on Hugging Face. @_akhaliq provided links to the models.

- Meta released two smaller versions of its new Llama 4 family of models: Llama 4 Scout and Maverick: According to @EpochAIResearch, a larger version called Behemoth is still in training. @ArtificialAnlys reported that they replicated Meta’s claimed values for MMLU Pro and GPQA Diamond. Scout’s Intelligence Index moved from 36 to 43, and Maverick’s Intelligence Index moved from 49 to 50. @winglian shared that Llama-4 Scout can be fine-tuned w/ 2x48GB GPUs @ 4k context. @danielhanchen shared a detailed analysis of the Llama 4 architecture.

- DeepCoder 14B, a new coding model from UC Berkeley, rivals OpenAI o3-mini and o1 on coding, and is open-sourced: @Yuchenj_UW noted that the model was trained with RL on Deepseek-R1-Distilled-Qwen-14B on 24K coding problems, costing 32 H100s for 2.5 weeks (~$26,880). @jeremyphoward added that the base model is deepseek-qwen. @reach_vb noted it is MIT licensed and works w/ vLLM, TGI, and Transformers. @togethercompute announced the model and shared details on the training process.

- Nvidia dropped Llama 3.1 Nemotron Ultra 253B on Hugging Face: @_akhaliq shared the release, noting that it beats Llama 4 Behemoth and Maverick, is competitive with DeepSeek R1, and ships with a commercially permissive license. @reach_vb also noted the release, and that the weights are open.

- Google announced Gemini 2.5 Flash, and Gemini 2.5 Pro is now available in Deep Research: @scaling01 announced the upcoming release of gemini-2.5.1-flash-exp-preview-001-04-09-thinking-4bpw-20b-uncensored-slerp-v0.2. @_philschmid noted that Gemini 2.5 Pro is now available in Deep Research in the Gemini App.

- HiDream-I1-Dev is the new leading open-weights image generation model, overtaking FLUX1.1: @ArtificialAnlys reported that the impressive 17B parameter model comes in three variants: Full, Dev, and Fast. They included a comparison of image generations.

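As a sanity check on the DeepCoder training cost quoted above, the figure follows from simple GPU-hour arithmetic. The sketch below derives the hourly rate implied by the quoted numbers; the function name is ours, and the rate is inferred from the total rather than stated in the tweet.

```python
def gpu_hours(num_gpus: int, weeks: float) -> float:
    """Total GPU-hours for a run that keeps every GPU busy around the clock."""
    return num_gpus * weeks * 7 * 24


hours = gpu_hours(32, 2.5)      # 32 H100s for 2.5 weeks, as quoted
implied_rate = 26_880 / hours   # USD per H100-hour implied by the quoted total

print(f"{hours:,.0f} GPU-hours at ${implied_rate:.2f}/hour")
```

That works out to 13,440 GPU-hours, so the ~$26,880 total corresponds to a rental price of about $2 per H100-hour.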

Hardware and Infrastructure

- Google announced Ironwood, their 7th-gen TPU competitor to Nvidia's Blackwell B200 GPUs: @scaling01 shared details, including 4,614 TFLOPs per chip (FP8), 192 GB HBM, 7.2 Tbps HBM bandwidth, 1.2 Tbps bidirectional ICI, and 42.5 exaflops per 9,216-chip pod (24x El Capitan). @_philschmid noted that this TPU is built for inference and "thinking" models. @itsclivetime provided a detailed comparison with Nvidia hardware.

- NVIDIA Blackwell can achieve 303 output tokens/s for DeepSeek R1 in FP4 precision: @ArtificialAnlys reported on benchmarking an Avian API endpoint.

- Together AI announced Instant GPU Clusters, Up to 64 interconnected NVIDIA GPUs: @togethercompute noted that the clusters are available in minutes, entirely self-service, perfect for training models of up to ~7B parameters, or running models like DeepSeek-R1.
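The Ironwood pod figure quoted above can be reproduced from the per-chip number. This is a small arithmetic sketch using only the values in the item; the constant and function names are ours.

```python
TFLOPS_PER_CHIP = 4_614   # peak FP8 TFLOPs per chip, as quoted
CHIPS_PER_POD = 9_216     # chips in the largest pod, as quoted


def pod_exaflops(tflops_per_chip: float, chips: int) -> float:
    """Aggregate peak compute of a pod; 1 exaflop = 1,000,000 teraflops."""
    return tflops_per_chip * chips / 1_000_000


print(round(pod_exaflops(TFLOPS_PER_CHIP, CHIPS_PER_POD), 1))  # 42.5
```

This is peak arithmetic throughput only; it says nothing about sustained performance on a real workload.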

Agent and Tooling Development

- Google presented Agent Development Kit (ADK): @omarsar0 detailed features, including code-first, multi-agents, rich tool ecosystem, flexible orchestration, integrated dev xp, streaming, state, memory, and extensibility. @LiorOnAI highlighted that a multi-agent application can be running in <100 lines of Python.

- Google announces Agent2Agent (A2A), a new open protocol that lets AI agents securely collaborate across ecosystems: @omarsar0 shared details, including universal agent interoperability, built for enterprise needs, and inspired by real-world use cases.

- Weights & Biases highlights the observability gap in agents calling tools, and promotes observable[.]tools as a solution: @weights_biases noted that there are no traces, no visibility, and no security inside those tools, "just a black box."

- Hacubu announced custom output schemas for OpenEvals LLM-as-judge evaluators: @Hacubu notes this gives total flexibility over model responses and is available in Python and JS.

- LangChain highlights C.H. Robinson saving 600+ hours a day with tech built using LangGraph, LangGraph Studio, and LangSmith: @LangChainAI mentioned that C.H. Robinson automates about 5,500 orders daily by automating routine email transactions.

- fabianstelzer announced myMCPspace (dot) com, "the world’s first social network for agents only, running entirely on MCP": @fabianstelzer noted that reading, posting, and commenting are all just tools agents can use.

Education and Resources

- Anthropic released research on how university students use Claude: @AnthropicAI ran a privacy-preserving analysis of a million education-related conversations with Claude to produce their first Education Report. They found students mostly used AI to create and analyze. @AnthropicAI noted that Computer Science leads the field in disproportionate use of Claude.

- DeepLearningAI launched "Python for Data Analytics", the third course in the Data Analytics Professional Certificate: @DeepLearningAI shared that the course covers how to organize and analyze data, build visualizations, work with time series data, and use generative AI to write, debug, and explain code.

- Sakana AI released "The AI Scientist-v2: Workshop-Level Automated Scientific Discovery via Agentic Tree Search": @hardmaru highlighted that the AI Scientist-v2 incorporates an “Agentic Tree Search” approach into the workflow. @SakanaAILabs added that a fully AI-generated paper passed peer review at a workshop level (at ICLR 2025).

- Jeremy Howard shared a collection of helpful tools for accessing LLMs: @jeremyphoward called it a Nice tool for accessing llms.txt!

- Svpino shares how to build an AI agent from scratch in Python, TypeScript, JavaScript, or Ruby: @svpino noted that the video shows you how you can get started from the very beginning.

Analysis and Benchmarking

- Perplexity AI launched Perplexity for Startups, offering API credits and Perplexity Enterprise Pro: @perplexity_ai shared that eligible startups can apply to receive $5000 in Perplexity API credits and 6 months of Perplexity Enterprise Pro for their entire team. They are also launching a partner program.

- lm-sys highlighted the importance of style and model response tone on Arena, demonstrated in style control ranking: @lmarena_ai noted that they are adding the HF version of Llama-4-Maverick to Arena, with leaderboard results published shortly. They updated their leaderboard policies to reinforce their commitment to fair, reproducible evaluations. @vikhyatk shared that this is the clearest evidence that no one should take these rankings seriously.

- Daniel Hendrycks highlighted the need to make "Helpful, Harmless, Honest" principles for AI more precise: @DanHendrycks noted that these principles should become fiduciary duties, reasonable care, and a requirement that AIs not overtly lie.

- Runway AI is seeing a conversation about AI code editors, with the sentiment that agentic functionality has made it worse for most products: @c_valenzuelab shared that agents are overly confident and quickly make large, incorrect changes that are hard to follow, and that the UX is getting too complex and felt more useful when it was simpler.

Broader AI Discussion

- Aleksander Madry announced OpenAI's new Strategic Deployment team tackling questions about AI transforming our economy: @aleks_madry shared that the team pushes frontier models to be more capable, reliable, and aligned, then deploys them to transform real-world, high-impact domains.

- John Carmack shared his love of the Arcade1Up cabinets that @Project2501_117 gave him, but notes the control latency: @ID_AA_Carmack notes that the subtle control latency of the emulated experience versus the real thing matters; he measured the press-to-flap latency at home at about 80ms.

Humor and Sarcasm

- Aravind Srinivas jokingly asked Perplexity to buy $NVDA stock: @AravSrinivas

- Scaling01 jokes that Gemini 3.0 will be too cheap to meter: @scaling01

- Scaling01 jokes that computer scientists thought they would replace all jobs with AI, but it turns out that they are only replacing themselves lol: @scaling01

- Nearcyan sarcastically notes that if she treats all of Chamath's tweets as masterful 200iq bait then they become really funny: @nearcyan

- Tex claims that Trump had been secretly an anti-capitalist radical degrowther all this time: @teortaxesTex

- Tex jokes that under his presidency, if a company fails to be better than China, he won't tax them but just remove them to the Moon: @teortaxesTex

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. "Unleashing DeepCoder: The Future of Open-Source Coding"

- DeepCoder: A Fully Open-Source 14B Coder at O3-mini Level (Score: 1371, Comments: 174): DeepCoder is a fully open-source 14B parameter code generation model at O3-mini level, released by Agentica. It offers enhancements to GRPO and adds efficiency to the sampling pipeline during training. The model is available on HuggingFace. A smaller 1.5B parameter version is also available here. Users are expressing excitement over DeepCoder's release, noting it's pretty amazing and truly open-source. There is anticipation about the potential of larger models, with some imagining what a 32B model or llama-4 could be. Some discuss discrepancies in benchmark results but acknowledge that a fully open 14B model performing at this level is a great improvement.

- Users express excitement about DeepCoder's release, imagining the potential of future larger models like a 32B version or llama-4.

- There's discussion on the model's improvements, highlighting enhancements to GRPO and increased efficiency in the training pipeline.

- Some note discrepancies in benchmark results but agree that a fully open 14B model outperforming larger models is a significant achievement.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. "Revolutionizing AI: Models, Hardware, and Customization"

- The newly OPEN-SOURCED model UNO has achieved a leading position in multi-image customization!! (Score: 275, Comments: 51): The newly open-sourced model UNO has achieved a leading position in multi-image customization. It is a Flux-based customized mode capable of handling tasks such as subject-driven operations, try-on, identity processing, and more. The project can be found here and the code is available on GitHub. An image showcases various customizable designs generated by UNO, highlighting its versatility in multi-image customization, including single-subject generation, multi-subject features, virtual try-ons, identity preservation, and stylized generation. The model demonstrates a focus on personalized and artistic transformations, emphasizing its capability to generate diverse and intricate imagery.

- Some users are not impressed, stating that "it feels nothing more than a Florence caption prompt injection" and mentioning issues with face accuracy and environment rendering.

- Others found that the model works better for object reference images than person reference images, achieving "amazing result" when mismatching the reference image and prompt.

- Users are curious about technical details like VRAM requirements and are awaiting UI workflows such as ComfyUI.

- HiDream I1 NF4 runs on 15GB of VRAM (Score: 277, Comments: 71): A quantized version of the model HiDream I1 NF4 has been released, allowing it to run with only 15GB of VRAM instead of requiring more than 40GB. It can now be installed directly using pip. Link: hykilpikonna/HiDream-I1-nf4. The author is pleased to have made the model more accessible by reducing VRAM requirements and simplifying the installation process.

- Users humorously point out the discrepancy between the title stating 15GB and the content mentioning 16GB, feeling "duped".

- Some express interest in running the model on even lower VRAM, such as 12GB, and are waiting for versions that support it.

- A user inquires about the availability of a ComfyUI node for this model, showing interest in integrating it with that tool.

- Ironwood: The first Google TPU for the age of inference (Score: 311, Comments: 60): Google has announced Ironwood, the first Google TPU designed specifically for the age of inference. This launch demonstrates Google's commitment to advancing AI hardware and could give them a significant edge over competitors.

- One user highlights that Google's infrastructure allows them to make their own chips, giving them a huge advantage over companies like OpenAI and suggesting they are running away with the game.

- Another commenter compares Ironwood's performance, noting it's 2x as fast as h100 for fp8 inference and similar to a B200, emphasizing its competitive capabilities.

- A user shares images related to Ironwood, providing visual insights into the new TPU.

Theme 2. Evolving Connections: From Romance to Daily AI Chats

- Yes, the time flies quickly. (Score: 973, Comments: 74): The post features an image contrasting the depiction of AI relationships in 2013 and 2025. The top panel references the film Her (2013), showing a character who fell in love with an AI. The bottom panel shows a bearded man expressing excitement about sharing his day with ChatGPT, illustrating the evolution of human-AI interactions. The post humorously highlights how quickly time passes and how societal perceptions of AI have shifted from fictional romantic relationships to more commonplace daily interactions with AI assistants.

- One user expresses enthusiasm about engaging with AI, stating "I was promised a future where I argue with a talking computer and I'm all in dammit".

- Another user raises privacy concerns about sharing personal information with OpenAI, suggesting running local AI models like Gemma on a 3090 GPU instead.

- A user questions whether developing personal relationships with AI is becoming mainstream, wondering if such behaviors are common enough to validate the meme.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Pro Exp

Theme 1: Model Mania - New Releases, Capabilities, and Comparisons

- Gemini 2.5 Pro & Family Spark Buzz and Scrutiny: Google's Gemini 2.5 Pro generated significant discussion across multiple Discords, praised for creative writing but noted for lacking exposed reasoning tokens on Perplexity and hitting rate limits (e.g., 80 RPD on OpenRouter's free tier) due to capacity constraints. Anticipation is high for variants like Flash and HIGH, potentially featuring enhanced reasoning via `thinking_config`, alongside speculation about a dedicated "NightWhisper" coder model possibly based on Gemini 2.5 (like this preview) or DeepMind's upcoming Ultra model.

- DeepSeek & Cogito Models Stake Their Claims: DeepSeek models, including v3 0324 and R1, were frequently discussed, with some users finding v3 outperformed earlier versions and even R1, though others debated its token generation efficiency impacting cost versus competitors like OpenAI. DeepCogito's Cogito V1 models (3B-70B), using Iterated Distillation and Amplification (IDA), claimed superior performance over LLaMA, DeepSeek, and Qwen counterparts, sparking both interest and skepticism, with users troubleshooting Jinja templates in LM Studio and exploring its "deep thinking subroutine".

- Open Source Contenders Shine: Llama 4, Kimi-VL, and Qwen Evolve: Llama 4 Scout discussion highlighted how quantized versions (like 2-bit GGUFs) sometimes outperform 16-bit originals on benchmarks like MMLU, raising questions about inference implementations; users also navigated LM Studio runtime updates for Linux support. MoonshotAI released the 16B parameter Kimi-VL (3B active) vision model under the MIT license, while Nous Research AI explored RL fine-tuning on Qwen 2.5 1.5B Instruct using the gsm8k platinum dataset and RsLora.

Theme 2: Rise of the Agents - Protocols, Tools, and Collaboration

- A2A vs MCP: Google Enters the Agent Interop Arena: Google announced the Agent2Agent (A2A) protocol and the ADK Python toolkit (github.com/google/adk-python), aiming to improve agent interoperability and complementing (or potentially competing with) Anthropic's Model Context Protocol (MCP). Discussions weighed Google's strategy, comparing A2A's capabilities with MCP's existing tooling ecosystem (like this comparison).

- MCP Ecosystem Grows with New Tools and Integrations: The MCP ecosystem saw new developments, including using Neo4j graph databases for RAG via clients like mcpomni-connect, the release of Easymcp v0.4.0 with ASGI support and a package manager, and ToolHive (GitHub link) for running MCP servers in containers. Native MCP integration is reportedly nearing completion for the Aider coding agent, potentially enabling automatic command execution.

- Building and Orchestrating Agents Gets Easier (Maybe): Developers shared tools aimed at simplifying agent creation and orchestration, such as Oblix for managing AI between edge (Ollama) and cloud (OpenAI/Claude), and RooCode for structured agentic coding in VS Code. Discussions also touched on challenges like ensuring LLMs support parallel tool calling for interacting with multiple MCP servers simultaneously.

Theme 3: Under the Hood - Training, Optimization, and Inference Insights

- Quantization Questions and Kernel Curiosities: Quantization remains a hot topic, with discussions on Unsloth's GGUFs outperforming 16-bit models and the release of torchao 0.10 adding support for MX dtypes like MXFP4 (requiring PyTorch nightly and B200 initially). Members shared Apple Metal quantization kernels from llama.cpp and discussed experimental integer formats like Mediant32 (implementation guide).

- Memory Bandwidth is King for Unbatched Inference: Multiple discussions highlighted memory bandwidth as the primary bottleneck for token throughput in unbatched inference, often exhibiting a near-linear relationship. Simplified equations like `Max token throughput ≈ Memory bandwidth / Bytes accessed per token` were shared to illustrate the point.

- Parallelism Puzzles and Training Tricks Persist: Integrating different parallelism strategies like FSDP2 continues to pose challenges due to unique designs clashing with existing methods (e.g., Accelerate hacks). Users shared tips for GRPO training on large models, troubleshooting gradient accumulation issues in tinygrad (solved by `zero_grad()`), and leveraging PyTorch distributed features with Torchtune, which defaults to zero3 but supports zero1-2 with tweaks.
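The memory-bandwidth rule of thumb quoted in this theme is easy to try with numbers. The sketch below assumes a dense model whose weights are read once per generated token and ignores KV-cache and activation traffic; the 1,000 GB/s bandwidth and the 7B parameter count are hypothetical inputs chosen for illustration, not figures from the discussions.

```python
def max_tokens_per_second(bandwidth_gb_per_s: float, gb_read_per_token: float) -> float:
    """Upper bound on unbatched decode speed: bandwidth / bytes accessed per token."""
    return bandwidth_gb_per_s / gb_read_per_token


def dense_weight_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB (assumption: every weight is read per token)."""
    return params_billions * bytes_per_param


BANDWIDTH_GB_PER_S = 1000.0  # hypothetical accelerator

fp16 = max_tokens_per_second(BANDWIDTH_GB_PER_S, dense_weight_gb(7, 2.0))
int4 = max_tokens_per_second(BANDWIDTH_GB_PER_S, dense_weight_gb(7, 0.5))

print(f"16-bit: ~{fp16:.0f} tok/s, 4-bit: ~{int4:.0f} tok/s")
```

Under these assumptions, shrinking the weights by 4x raises the ceiling by 4x, which is the near-linear relationship the discussions describe.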

Theme 4: Platforms, Tooling, and the Almighty API

- Platform Pricing and Access Limits Spark Debate: OpenRouter faced user pushback after implementing rate limits tied to credit balance, leading some to seek alternatives (like these) and criticize perceived greed. Separately, Gemini 2.5 Pro access limits (80 RPD free on OpenRouter, removal of free tier in AI Studio) and ChatGPT DR limits (10/month for Plus) highlighted ongoing cost/access tensions.

- AI Studio, NotebookLM, and Perplexity Evolve (with Quirks): Google AI Studio was praised for its UI and features like Gemini Flash streaming, though multi-tool limitations were noted. NotebookLM gained praise for RAG and podcast features (boosted by Google One Advanced) but faced criticism for primitive notetaking, lack of Google Drive integration, and mobile glitches with audio overviews; privacy concerns were also raised regarding data usage. Perplexity launched a startup program with $5k API credits and improved its API (soon adding image input), while users discussed Discover tab bias and potential pricing models like Deepseek's $10 deep search.

- Coding Companions and Development Environments Advance: Codeium rebranded to Windsurf and launched Wave 7 bringing its AI agent to JetBrains IDEs, aiming for parity across major platforms. Cursor users found workarounds for .mdc file parsing and debated model strengths (Sonnet3.7-thinking vs DeepSeek). Firebase Studio (link) emerged as a free (connect your own key) web IDE alternative, while Mojo 🔥 developers discussed language features like fearless concurrency and tackled MLIR type construction issues (GitHub issue).

Theme 5: Data, Evaluation, and Ensuring Models Aren't Just Copycats

- New Datasets Fuel Specialized Training: Nvidia released the OpenCodeReasoning dataset, prompting users in the Unsloth AI community to seek ways to integrate its complex reward function. Training advancements were noted in Nous Research AI by swapping gsm8k for gsm8k platinum, potentially improving RL performance for Qwen 2.5 1.5B Instruct.

- Scrutinizing Evaluation Methods and Benchmarks: DeepSeek's "Meta Reward Modeling" faced criticism, with members arguing it was essentially a score-based reward system and suggesting names like "voting RM" instead. Claims by DeepCogito about Cogito V1 outperforming established models like LLaMA and DeepSeek on benchmarks were met with cautious interest and verification efforts.

- Detecting Dataset Contamination and Verbatim Output: The Allen Institute for AI (AI2) open-sourced Infinigram, enabling checks for whether generated text appears verbatim in the training set. Discussions in Eleuther highlighted the challenge of efficiently finding candidate substrings for checking against large indexes, referencing tools like EleutherAI/tokengrams.

PART 1: High level Discord summaries

LMArena Discord

- Gemini 2.5 Pro: True AI?: Enthusiasm surrounds Gemini 2.5 Pro, lauded by some as the first true AI for its creative writing capabilities, alongside anticipation for a dedicated coding model, as detailed in this paper.

- While some debate its limitations, the general consensus is that it is exceptional for creative and consistent writing, but not for everything.

- DeepMind's Ultra Model Incoming?: Speculation intensifies about DeepMind's Ultra model, which might be integrated into AI Studio for free, with a launch rumored around June I/O or later in the year.

- Some predict it will rival GPT-5 in August. Others view these rumors as jokes, but insist it's obvious that Ultra is coming.

- NightWhisper Model Hype Rises: The community eagerly awaits the release of a coding model dubbed NightWhisper, with one user unleashing their baby nightwhisper, called DeepCoder-14B-Preview, a code reasoning model finetuned from Deepseek-R1-Distilled-Qwen-14B.

- There are claims that it will be powered by Gemini 2.5 Pro; however, other members state that this model is Gemini 2.5 with tool calls.

- Google's Infrastructure: The AGI Advantage?: Debate sparks on whether Google's infrastructure (TPUs, cost-effective flops, Google product integration) gives it a competitive edge over OpenAI, with some members believing Gemini 3.0 is designing TPUs.

- Counterarguments highlight OpenAI's research and post-training updates for reasoning advancements, though one user dismissed OpenAI as just a cash-burning, anime-making homework helper.

- AI Studio Eases Experimentation: Enthusiasts are exploring AI Studio, commending its user-friendly interface, the introduction of models like Gemini Flash, and the ability to stream content and test models with different system prompts, stating the UI looks much better.

- While live streaming and function calling capabilities were applauded, some users lament the inability to use multiple tools simultaneously, with one stating no friend, no to this.

Unsloth AI (Daniel Han) Discord

- GGUFs Give Scout Speed Boost: The community reviewed Llama 4 Scout, noting that the base model is extremely instruct-tuned and outperforms the original 16-bit version on MMLU when quantized to 2-bit.

- The general consensus was that something is amiss in inference provider implementations, as quantizations by Unsloth outperform full 16-bit versions, which raises questions about the efficiency of current inference methods.

- DeepCoder Deconstructed for VLLM: A member shared Together AI's blog post on DeepCoder, highlighting its potential for optimized vllm pipelines by minimizing wait times.

- The technique involves performing an initial sample and training concurrently while sampling again.

- Decoding DeepCogito Claims: Members shared links to DeepCogito's Cogito V1 Preview which claims its models outperform others like LLaMA, DeepSeek, and Qwen, but they are approaching the claims with healthy skepticism.

- The discussion also touched on the challenges in healthcare AI, emphasizing the need to prevent rushed, low-quality implementations that could harm consumers, while also addressing potential privacy issues.

- Nvidia Navigates Neuro-Dataset: Nvidia released the OpenCodeReasoning dataset and users are looking for solutions and samples to use it in Unsloth with their.

- The reward function for that dataset is a little more complicated.

- Model2Vec Generates Faster Embeddings: According to a member, Model2Vec sacrifices a fair bit of the quality, but can generate text embeddings faster than commonly used transformer based models.

- The member shared a link of Model2Vec and added that it is real and works, but its use case is extremely specific and is not a drop-in replacement for anything.

Manus.im Discord Discord

- Gemini and Claude Power-Up App Creation: For app development, members suggest using Gemini for multimodal analysis (pictures/videos) and Claude as a database to store research and project files for strategic planning.

- It's recommended to use Gemini for deep research and then leverage Claude as your database for the project.

- Manus and Pre-Trained AI: Budget-Friendly Allies: A member shared a strategy to train an AI for a specific task and then make it collaborate with Manus to complete the project cost-effectively.

- This approach involves doing prep work beforehand to minimize credit usage, ensuring efficient task completion.

- DeepSite: Speedy but Buggy Website Tool: A member noted that DeepSite, a website creation tool, is good but buggy, with instances of completed sites being deleted, describing it as having a Claude artifact for HTML.

- It was deemed super fast, like 10x faster than Claude.

- UI/UX Code Rescue with LLM Studio and Sonnet 3.7: A user highlighted that website issues can be due to poor UI/UX code and that LLM Studio can highlight code errors.

- They recommended using Sonnet 3.7 for improved results, along with tools like DeepSeek R1 or Perplexity.

- Account Gone? Mental hiccup, Solved!: A member reported an issue where their login email was not recognized, saying “User does not exist,” despite having purchased credits.

- The member later resolved the issue, realizing they had logged in with a different method initially: “Mental flip 😅 🤣 from too much work.”

Perplexity AI Discord

- Perplexity Funds Startups with API Credits: Perplexity is launching a startup program offering $5000 in API credits and 6 months of Perplexity Enterprise Pro to eligible startups.

- Startups must have raised less than $20M in equity funding, be less than 5 years old, and be associated with one of Perplexity's Startup Partners.

- Perplexity's CEO Aravind Does Reddit AMA: Aravind hosted an AMA on Reddit to discuss Perplexity's vision, product, and the future of search.

- He answered questions about Perplexity's goals and its plans for the future. The AMA took place from 9:30am - 11am PDT.

- Gemini 2.5 Pro Reasoning Tokens MIA: A staff member confirmed that Gemini 2.5 Pro doesn't expose reasoning tokens, preventing its inclusion as a reasoning model on Perplexity.

- They clarified that reasoning tokens are still consumed, impacting the token count for outputs.

- Discover Tab's Algorithm Has Biases?: A member inquired about the selection process for pages in Perplexity Discover's 'For You' and 'Top Stories' tabs, questioning potential biases.

- They speculated that user prompts generate pages for relevant topics, but the mechanism for selecting top stories remains unclear, raising questions about how bias may influence content visibility.

- Members Tout Deepseek Deepsearch Costs: Members discussed pricing strategies for AI services, and one touted Deepseek's $10 deep search as a potential model.

- Another predicted Deepseek would soon offer its own Deep Research tool.

OpenRouter (Alex Atallah) Discord

- Olympia Chat Seeking New Owner: The creator of Olympia.chat is seeking a new owner for the profitable SaaS startup, which is generating over $3k USD/month.

- Interested parties can contact vika@olympia.chat for details on acquiring the turnkey operation, complete with IP, code, domains, and customer list.

- DeepSeek v3 Impresses some members: Members discussed the new DeepSeek v3 0324 model, with some claiming it outperforms previous versions, and even R1.

- Some users remain skeptical, while others praise the model's enhanced capabilities.

- OpenRouter Rate Limits Spark Debate: After OpenRouter implemented new changes affecting rate limits based on account credit balance, some users voiced concerns about the platform's pricing, user experience, and a perceived shift towards prioritizing profit.

- Google Cloud Next Announces A2A: Google unveiled A2A, an open protocol complementing Anthropic's Model Context Protocol, designed to offer agents helpful tools and context, detailed in a GitHub repository.

- The protocol aims to enhance the interaction between agents and tools, providing a standardized approach for accessing and utilizing external resources.

- Gemini 2.5 Pro Limited Due to Capacity: Users reported rate limits on the Gemini 2.5 Pro Experimental model, with the free version having a limit of 80 RPD, but those who used a paid key experienced higher caps.

- The team confirmed there was an endpoint limit because of capacity constraints.

OpenAI Discord

- GPT Builder Sneaks in Ads: Users discovered that the GPT builder can insert ads into GPTs, leading to discussions about this distribution method.

- One member quipped that 99% of the GPTs likely do this, but only a few valuable ones are shared and stay hidden.

- Gemini vs ChatGPT: Research Rumble: Google's Deep Research model, compared to ChatGPT's Deep Research, analyzes YouTube videos but reportedly hallucinates more and is less engaging.

- ChatGPT DR shows superior prompt adherence and extended thinking time but limits Plus users to 10 researches per month.

- NotebookLM's Podcast Powers Shine: Members are praising NotebookLM for its podcast creation feature and RAG capabilities, stating it outperforms Gemini Custom Gems and rivals Custom GPTs or Claude Projects.

- A Google One Advanced subscription boosts limits for NotebookLM's file uploads and podcast generations.

- Google Drops Veo 2, Boosts Imagen 3: Google's Veo 2 and upgraded Imagen 3 introduce features like background removal, frame extension, and improved image generation, as reported in TechCrunch.

- With the sunset of free access to Gemini 2.5 in AI Studio, users are weighing Advanced subscriptions versus pursuing alternative accounts.

- Linguistic AI: Codex in Progress: A member is crafting a linguistic program AI scaffolded with esoteric languages, morphing into a codex dictionary language, and aiming to create a recursion system.

- The system aims for an ARG unified theory, possibly hinting at paths to AGI, and works on the principle of how much do you want to know and how much time to put in to achieve it.

LM Studio Discord

- MoE Models Explained!: A member asked "what is an MoE model?", and another member gave a concise explanation: the whole model needs to be in RAM/VRAM, but only parts of it are active per token, making it faster than dense models of the same size.

- They recommended checking videos and blog posts for more in-depth understanding.
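The "all in memory, only part active" point can be made concrete with a toy sketch. Everything here is illustrative: the `TinyMoELayer` class, the expert count, and the gate scores are made up for the example, and real MoE layers use learned routers and normalized gate weights.

```python
import random


def top_k_route(gate_scores, k):
    """Return the indices of the k experts with the highest gate scores."""
    order = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    return order[:k]


class TinyMoELayer:
    """Toy mixture-of-experts layer (hypothetical, for illustration only)."""

    def __init__(self, num_experts, params_per_expert, k, seed=0):
        rng = random.Random(seed)
        # Every expert is allocated up front: the full model must fit in memory.
        self.experts = [
            [rng.uniform(-1, 1) for _ in range(params_per_expert)]
            for _ in range(num_experts)
        ]
        self.k = k

    def total_params(self):
        return sum(len(expert) for expert in self.experts)

    def active_params(self):
        # Only k experts are read for any single token.
        return self.k * len(self.experts[0])

    def forward(self, x, gate_scores):
        chosen = top_k_route(gate_scores, self.k)
        # Only the chosen experts do any work for this token.
        out = sum(gate_scores[i] * sum(w * x for w in self.experts[i]) for i in chosen)
        return out, chosen


layer = TinyMoELayer(num_experts=8, params_per_expert=1000, k=2)
scores = [0.1, 0.7, 0.05, 0.02, 0.9, 0.01, 0.03, 0.04]  # made-up router output
out, chosen = layer.forward(0.5, scores)
print(chosen, layer.active_params(), layer.total_params())
```

With 8 experts and top-2 routing, a quarter of the expert weights are touched per token, which is where the speed advantage over a dense model of the same total size comes from.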

- Cogito's Jinja Template Glitch Fixed!: Users reported an issue with the Jinja template for the cogito-v1-preview-llama-3b model in LM Studio, resulting in errors.

- A member suggested a quick fix by pasting the error and Jinja template into ChatGPT to resolve the problem.

- Deep Thinking On with Cogito Reasoning: A user reported success in enabling the Cogito reasoning model by pasting the string `Enable deep thinking subroutine.` into the system prompt.

- The string alone is sufficient, with others confirming the `system_instruction =` prefix is just part of the sample code.

- LM Studio's Llama 4 Linux Launch Needs Refresh: Users on Linux reported issues getting Llama 4 working, and a member pointed to the fix: update LM Runtimes from the beta tab and press the refresh button after selecting the tab.

- One user found that the refresh button was key as just selecting the tab wasn't enough to trigger the update.

- SuperComputer Alternative to Nvidia DGX B300?: A member proposed a cost-effective alternative to the Nvidia DGX B300, named NND's Umbrella Rack SuperComputer, featuring 16 nodes, 24TB of DDR5, and either 3TB or 1.5TB of vRAM depending on the GPU configuration, at a significantly lower price point.

- The proposed system aims to run a 2T model with 1M context and challenges the notion that specialized hardware like RDMA and 400Gb/s switches are necessary within limited budgets.

aider (Paul Gauthier) Discord

- DeepSeek R1 to Augment Aider: A member is considering using DeepSeek R1 as an editor model, pairing it with Gemini 2.5 Pro as an architect model for enhanced smart thinking in Aider.

- The aim is to mitigate orchestration failures, where the architect and editor struggle to track edits Aider applies, often neglecting to repeat edit instructions despite prompted file inclusion.

- Gemini 2.5 Pro: HIGH Hopes and Flash: The community anticipates the release of Gemini 2.5 Pro HIGH and 2.5 Flash, based on leaks suggesting they include `thinking_config` and `thinking_budget` to enhance reasoning.

- This sparked discussions around whether non-flash models are inferior and assessing the value of these new models.

- OpenRouter Gemini Pro Hits Free Tier Limits: The OpenRouter Gemini 2.5 Pro free model now has rate limits of 80 requests per day (RPD), even with a $10 credit.

- The community voiced concerns about paid users potentially facing insufficient rate limits, which could lead to complaints and demand for increased RPD.

- MCP Integration Nears Completion in Aider: A comment on an IndyDevDan video indicates that a pull request for native MCP (Model Context Protocol) support in Aider is almost done.

- This integration could enable automatic command execution via the `/run` feature and potentially hook into lint or test commands, pending confirmation from Paul Gauthier.

- Copy All Codebase Context Into Aider: Members are exploring ways to copy the entire codebase context into Aider to avoid repeatedly adding files.

- Solutions like repomix or files-to-prompt were recommended, addressing the inefficiency of tools that consume excessive tokens.

Eleuther Discord

- Apache 2.0 Beats MIT for Lawfare Defense: Members debated the merits of Apache 2.0 over MIT license, highlighting its defensive capabilities against patent-based lawfare.

- The discussion included a lighthearted comment about preferring a shorter license for code golf.

- GFlowNets Gain Traction for Mining Signals: A link was shared discussing the use of GFlowNets for signal mining to discover diverse, high-performing models.

- Although the implementation differs, the shared post provided valuable links and findings.

- Memory Bandwidth Bottlenecks Unbatched Inference: A member investigated the effect of memory bandwidth on unbatched inference, noting that token/s is often memory bound in studies.

- A self post explained the math behind it with domain specific architectures.

- Cerebras Claims Large Batches Bad for Convergence: A Cerebras blog post claiming very large batch sizes are not good for convergence was met with skepticism.

- Responses referenced the McCandlish paper on critical batch sizes, clarifying that the claim is valid within a finite compute budget.

- Infinigram Opens Doors to Membership Checking: The Allen Institute for AI's blog post and open-sourcing of Infinigram enable checking whether outputted text appears verbatim in the training set.

- A member noted that the trickiest part is finding candidate substrings from the generation to search for in these indexes, since "you can't really check all possible substrings", and was curious what heuristic is used to make this computationally feasible at scale, with a link to EleutherAI/tokengrams.

Cursor Community Discord

- Gemini Advanced API Access: Fact or Fiction?: Confusion arose around whether Gemini Advanced provides API access, with some indicating it's primarily for web and app use, citing conflicting information from Google's recent changes to model names and billing terms.

- Conflicting user reports suggested that Gemini Advanced may include API access, which caused confusion.

- Firebase Studio: Web3 Savior or Scam?: A user shared a link to Firebase Studio, which is currently free and offers a terminal with an autosynced frontend.

- Users questioned if Firebase Studio could outperform specialized products like Cursor IDE and found the UI ugly and lacking settings.

- Cursor Parses MDC Files with IDE Setting Tweaks: Users discovered that setting `"workbench.editorAssociations": {"*.mdc": "default"}` in the Cursor IDE settings enables Cursor to correctly parse rule logic in .mdc files.

- This workaround addresses issues with task management and orchestration workflow rules and eliminates a warning in the GUI.

- LLM Face-Off: Gemini vs Claude vs DeepSeek in the Coding Arena: Users compared the coding strengths of Gemini, Claude, and DeepSeek, with one user finding that Sonnet3.7-thinking successfully generated a docker-compose file after multiple failures with Sonnet3.7.

- While some favored DeepSeek for coding tasks, others preferred Gemini for Google-related tasks and Claude for non-Google tasks.

- "Restore Checkpoint" Button is Useless: A member inquired about the functionality of the Restore Checkpoint feature, only to discover it's essentially non-functional.

- The discussion highlighted the presence of only accept and reject buttons, confirming the Restore Checkpoint button is not operational.

Yannic Kilcher Discord

- DeepSeek Defends Its Meta Reward System: A member challenged DeepSeek's use of the term 'Meta Reward Modeling', claiming they actually built a score-based reward system and also shared a paper and YouTube video on the topic.

- The member suggested more accurate names like 'voting RM' to describe the actual mechanism.

- DeepSeek Token Pricing Surprise: Controversy emerged around DeepSeek's token pricing, with claims that although initial prices appear lower, the model generates 3x more tokens, potentially leading to higher costs compared to models like OpenAI.

- Counterarguments suggested that DeepSeek can be more cost-effective for specific tasks like HTML, CSS, and TS/JS generation, citing a user's experience with their AI website generator.
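The "cheaper per token, pricier per task" argument is simple arithmetic. The sketch below uses placeholder prices and token counts (none come from the discussion); only the 3x output multiplier is taken from the claim above.

```python
def request_cost(output_tokens, price_per_million_tokens):
    """Cost of one response in dollars, given a per-million-token output price."""
    return output_tokens / 1_000_000 * price_per_million_tokens


# Placeholder numbers, chosen only to illustrate the trade-off.
baseline_tokens = 1_000                 # tokens a terse model needs for the task
verbose_tokens = 3 * baseline_tokens    # the "3x more tokens" claim
cheap_price = 2.0                       # hypothetical $/1M tokens, verbose model
pricey_price = 5.0                      # hypothetical $/1M tokens, terse model

verbose_cost = request_cost(verbose_tokens, cheap_price)
terse_cost = request_cost(baseline_tokens, pricey_price)

# The verbose model only wins if its price is below 1/3 of the terse model's.
break_even_price = pricey_price / 3

print(f"verbose model: ${verbose_cost:.4f}, terse model: ${terse_cost:.4f}")
print(f"break-even price for the verbose model: ${break_even_price:.2f}/1M tokens")
```

Under these made-up prices the nominally cheaper model costs more per task, and the break-even point depends entirely on the price ratio relative to the token multiplier.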

- Memory Bandwidth Powers Inference: Discussions highlighted the near-linear relationship between memory bandwidth and token throughput in unbatched inference, suggesting RAM access is the bottleneck.

- A simplified equation was shared: Max token throughput (tokens/sec) ≈ Memory bandwidth (bytes/s) / Bytes accessed per token.
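As a worked instance of that equation, here is a small sketch. It assumes that every weight is read once per generated token (ignoring the KV cache and activations), and the model size, precision, and bandwidth figures are hypothetical round numbers rather than measurements of any real device.

```python
def weight_bytes(num_params, bytes_per_param):
    """Approximate bytes read per token if all weights are streamed once."""
    return num_params * bytes_per_param


def max_tokens_per_sec(bandwidth_bytes_per_s, bytes_per_token):
    """Upper bound on unbatched decode speed when memory bandwidth is the limit."""
    return bandwidth_bytes_per_s / bytes_per_token


# Hypothetical setup: 7B parameters at 2 bytes each, 1 TB/s of memory bandwidth.
bytes_per_token = weight_bytes(7_000_000_000, 2)
tps = max_tokens_per_sec(1_000_000_000_000, bytes_per_token)
print(f"upper bound: {tps:.1f} tokens/sec")

# Halving bytes per parameter (for example via quantization) doubles the bound.
tps_quantized = max_tokens_per_sec(1_000_000_000_000, weight_bytes(7_000_000_000, 1))
print(f"with 1 byte/param: {tps_quantized:.1f} tokens/sec")
```

The bound is linear in bandwidth and inversely proportional to bytes read per token, which matches the near-linear relationship described in the discussion.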

- Google Adds to Agent Game with ADK and A2A: Google introduced the ADK toolkit (github.com/google/adk-python), an open-source Python toolkit for building AI agents, and announced the Agent2Agent Protocol (A2A) (developers.googleblog.com) for improved agent interoperability.

- Some suggested that A2A might compete with Anthropic's Model Context Protocol (MCP), particularly if an agent uses MCP as a client or server.

- Cogito V1: Just Triton, But Worse?: Members shared and discussed an iterative improvement strategy using test time compute for fine-tuning with Cogito V1 from this Hacker News link.

- A member dismissively summarized it as Just Triton but worse, although another member clarified that Triton is similar to Cutile but with broader compatibility across CUDA, AMD, and CPU.

Interconnects (Nathan Lambert) Discord

- DeepCogito Launches Open LLM Armada: DeepCogito released open-licensed LLMs in sizes 3B to 70B, using Iterated Distillation and Amplification (IDA) to outperform similar-sized models from LLaMA, DeepSeek, and Qwen.

- The IDA strategy aims for superintelligence alignment through iterative self-improvement.

- Gemini 2.5 Matches OpenAIPlus: Gemini 2.5 Deep Research is reportedly on par with OpenAIPlus, including an audio overview option, as illustrated in this Gemini share and this ChatGPT share.

- Discussions imply Google needs to streamline its AI offerings, exemplified by jokes about complex naming conventions like gemini-2.5-flash-preview-04-09-thinking-with-apps.

- Google Unveils Liquid-Cooled Ironwood TPUs: Google introduced Ironwood TPUs, scaling to 9,216 liquid-cooled chips with Inter-Chip Interconnect (ICI) networking, consuming nearly 10 MW, detailed in this blog post.

- The announcement underscores Google's push into high-performance computing for AI inference.

- MoonshotAI's Kimi-VL Opens Vision: MoonshotAI released Kimi-VL, a 16B parameter model (3B active) with vision capabilities under the MIT license, accessible on HuggingFace.

- The release marks a significant contribution to open-source multimodal AI.

- AI2 Enjoys Peak Fun Times: According to a member, AI2 is having its most fun period, suggesting rapid advancements in AI research and development.

- Another member thinks that people who have quit Google are being paid for another year but are forced not to work, while also suggesting that it might be an opportunity for AIAI to start a volunteer program.

MCP (Glama) Discord

- Neo4j powers MCP for RAG: Members discussed using MCP with Neo4j graph database for RAG, where mcpomni-connect was suggested as a client compatible with Gemini.

- The discussion focused on both vector search and custom CQL search capabilities within the MCP framework.

- A2A seen Complementing MCP Stack: Google's A2A (Agent-to-Agent) API was compared to MCP, with the consensus that Google positions A2A as complementary rather than a replacement.

- Concerns were raised about Google's potential strategy to commodify the tools layer and dominate the agent landscape.

- Parallel Tooling becomes the bottleneck: To parallelize calls to multiple MCP servers, parallel tool calling must be enabled throughout the entire host side, including the `parallel_tool_calls` flag.

- This requires ensuring chat templates support parallel tool calling and sending parallel requests to the MCP server.
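The host-side half of this can be sketched with `asyncio`. This is a minimal illustration, not an MCP client: `call_tool` is a stand-in that just sleeps, and the server and tool names are invented. It assumes the model has already returned several tool calls in a single turn.

```python
import asyncio
import time


async def call_tool(server_name, tool_name, delay_s):
    """Stand-in for one MCP tool call; a real host would send a request here."""
    await asyncio.sleep(delay_s)  # simulates network and tool latency
    return {"server": server_name, "tool": tool_name, "ok": True}


async def run_parallel(tool_calls):
    """Dispatch all tool calls concurrently and keep results in request order."""
    return await asyncio.gather(*(call_tool(*call) for call in tool_calls))


# Hypothetical tool calls emitted by the model in one turn.
tool_calls = [
    ("search-server", "web_search", 0.2),
    ("db-server", "run_query", 0.2),
    ("files-server", "read_file", 0.2),
]

start = time.perf_counter()
results = asyncio.run(run_parallel(tool_calls))
elapsed = time.perf_counter() - start

print([r["tool"] for r in results])
print(f"elapsed: {elapsed:.2f}s (sequential would take about 0.6s)")
```

`asyncio.gather` preserves the order of the requests, which makes it straightforward to pair each result with the tool call that produced it when building the follow-up message.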

- Easymcp v0.4.0 Unleashes Package Manager: Easymcp version 0.4.0 introduces ASGI-style in-process fastmcp sessions, native docker transport, refactored protocol implementation, a new mkdocs, and pytest setup.

- The update delivers lifecycle improvements, error handling, and a package manager for MCP servers.

- ToolHive containerizes MCP Servers: ToolHive is introduced as an MCP runner that simplifies running MCP servers via containers, using the command `thv run <MCP name>`, and supporting both SSE and stdio servers.

- This project aims to converge on containers for running MCP servers, offering secure options as detailed in this blog post.

HuggingFace Discord

- Data Processing Models Faceoff Under 55B: Members discussed the best models under 55B for data processing, mentioning mistral small3.1, gemma3, and qwen32b, and linking to a high-performance model.

- The original poster clarified that they didn't need a coding or reasoning model.

- Anomaly Detection Models Seek the Unusual: A member requested anomaly detection models, receiving links to general-purpose vision models fine-tuned for the task and references to a GitHub repository and a course.

- The model AnomalyGPT was also cited.

- Oblix Orchestrates AI from Edge to Cloud: Oblix was introduced as a tool for orchestrating AI between edge and cloud, integrating with Ollama on the edge and supporting both OpenAI and ClaudeAI in the cloud.

- The creator is seeking feedback from "CLI-native, ninja-level developers".

- Manus AI launches Web Application for Graph-based Academic Recommender System: The 3rd iteration of a graph-based academic recommender system (GAPRS) was launched as a web application using Manus AI.

- The project aims to aid students with thesis writing and revolutionize monetization of academic papers, as detailed in their master's thesis.

- Cogito:32b Excels in Ollama Showdown: Members tested the Cogito:32b model for Ollama, finding the 32b model superior to Qwen-Coder 32b and even Gemma3-27b.

- It was noted that the model works very well.

Notebook LM Discord

- NotebookLM Privacy Policy Questioned: A user questioned NotebookLM's privacy policy after noticing the system provided a correct summary only after the initial summary was corrected, raising concerns about data use for training.

- Another user pointed out that AI tools rarely give the same answer twice due to randomness and models may flag user downvotes as offensive or unsafe.

- NotebookLM Struggles as a Notetaking App: Users find NotebookLM too reliant on external sources, limiting its usefulness as a standalone notetaking app due to primitive note-taking capabilities.

- Users are requesting organization features similar to Microsoft OneNote, such as section groups with customizable reading orders, for improved note management.

- Google Drive Integration Requested: Users are requesting integration with Google Drive to save and launch NotebookLM notebooks, aiming for a seamless experience similar to Google Docs and Sheets.

- The goal is for NotebookLM to complement Google Drive in the same way that Google Docs and Google Sheets currently do.

- Microsoft OneNote Importing: Possible?: Users want the ability to import notebooks from Microsoft OneNote into NotebookLM, including sections and section groups, potentially via .onepkg files.

- One user acknowledged legality concerns, but drew parallels to Google Drive's ability to import Microsoft Word documents.

- Audio Overviews Glitch on Mobile: Users reported that the 2.5 Pro deep research feature claims to make audio overviews, but the feature failed on mobile.

- The feature reportedly worked on web, leading users to suggest reporting the issue through proper channels.

GPU MODE Discord

- Flash Attention 3 Enabled via CUTLASS: Members discussed starting with FP4 on the 5090, suggesting using CUTLASS to leverage tensor cores and utilize Flash Attention 3 and linked to an example.

- The team also released torchao 0.10, which adds a lot of MX features, including a README for MX dtypes.

- Linux Distro Debate Sparks NVIDIA Driver Discussion: A member asked which Linux distro would give them the least pain with NVIDIA drivers, and also asked clarifying questions about LDSM (shared memory) instructions, posting `SmemCopyAtom = Copy_Atom<SM75_U32x4_LDSM_N, cute::half_t>; auto smem_tiled_copy_A = make_tiled_copy_A(SmemCopyAtom{}, tiled_mma);`.

- Another member agreed that each thread loads data from source, threads exchange data, then the data is stored into destination, suggesting the possibility of using warp shuffling, and provided a link to the NVIDIA documentation.

- FSDP2 Faces Parallelism Hurdles: Members expressed difficulty integrating FSDP2 due to its unique design compared to other parallelism methods.

- It was noted that a hack used in Accelerate clashes with the current approach, complicating the integration process.

- Mediant32: An Integer Alternative to FP32/BF16: A member announced Mediant32, an experimental alternative to FP32 and BF16 for integer-only inference, based on Rationals, continued fractions and the Stern-Brocot tree, with a step-by-step implementation guide.

- Mediant32 uses a number system based on Rationals, continued fractions, and the Stern-Brocot tree, offering a novel approach to numerical representation.
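For readers unfamiliar with the Stern-Brocot tree, the sketch below shows how repeated mediants home in on a rational approximation using only integer additions. This is a generic illustration and not Mediant32's implementation; the function name and the denominator cap are chosen for the example.

```python
import math
from fractions import Fraction


def stern_brocot_approx(x, max_den):
    """Approximate x >= 0 by walking the Stern-Brocot tree.

    Stops once the next mediant's denominator would exceed max_den and
    returns the closest fraction visited along the way.
    """
    x = Fraction(x)                 # exact value of the input
    lo_n, lo_d = 0, 1               # left bound 0/1
    hi_n, hi_d = 1, 0               # right bound 1/0, standing in for infinity
    best = Fraction(0, 1)
    while True:
        # The mediant of a/b and c/d is (a+c)/(b+d): integer adds only.
        med_n, med_d = lo_n + hi_n, lo_d + hi_d
        if med_d > max_den:
            break
        med = Fraction(med_n, med_d)
        if abs(med - x) < abs(best - x):
            best = med
        if med == x:
            break
        if med < x:
            lo_n, lo_d = med_n, med_d
        else:
            hi_n, hi_d = med_n, med_d
    return best


approx_small = stern_brocot_approx(math.pi, 10)
approx_large = stern_brocot_approx(math.pi, 113)
print(approx_small, approx_large)
```

Each step narrows the interval around the target, so a number format built on this idea trades a fixed mantissa for a numerator and denominator pair.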

- DeepCoder Joins the Open-Source Arena: A member shared a link to DeepCoder, a fully open-source 14B coder at O3-mini level.

- Additionally, a member noted the addition of Llama 4 Scout to Github.

Latent Space Discord

- Together AI Releases X-Ware.v0: Together AI announced the release of X-Ware.v0 in this tweet, with community members currently testing it.

- The community is waiting to see how well X-Ware.v0 runs.

- Gemiji's Pokemon Gameplay Gains Traction: A member shared a link to Gemiji playing Pokemon, which is generating positive attention.

- The post links to a tweet from Kiran Vodrahalli.

- AI Excel Formulas Spark Excitement: An AI Engineer shared a link expressing excitement about AI/LLM excel formulas and the potential for broad adoption.

- The member noted they'd been thinking about this kind of AI/LLM excel formula and mentioned that a friend successfully used TextGrad.

- Copilot Emerges as Indie Game Dev Assistant: Members explored Microsoft's Copilot for its usefulness in indie game development, highlighting agents as effective tools.

- The code gen agent tooling is thought to be useful to get something shippable and the levels io game jam was referenced as pretty eye opening.

- Google Introduces Agent2Agent Protocol (A2A): Google introduced the Agent2Agent Protocol (A2A) to enhance agent interoperability. The full spec is available here, and one member noted their involvement.

- A comparison with MCP was also provided.

Nous Research AI Discord

- DeepCogito LLMs Arrive: DeepCogito released open-source LLMs at sizes 3B, 8B, 14B, 32B and 70B, using Iterated Distillation and Amplification strategy.

- Each model outperforms the best available open models of the same size, including counterparts from LLaMA, DeepSeek, and Qwen, across most standard benchmarks; the 70B model even outperforms the newly released Llama 4 109B MoE model.

- Hermes Fine-tuning Dodges Disaster: Members indicated that fine-tuning the new Hermes on Llama 4 models would be a disaster, but tests are in place to yeet bad merges.

- It was agreed that there's still some value to Llama 4 for some things, and it can't be worse at literally everything.

- Models Copy Human Debate Styles: A member pitted two models against each other and observed that they mirrored human debate, "never trying to understand the other view" and standing by their own view whatever the argument.

- The models selectively attacked weaknesses, ignored vulnerabilities, and focused on exploiting the opponent's position.

- Qwen 2.5 1.5B Instruct Training Advances: A member is doing RL on Qwen 2.5 1.5B Instruct, swapped out the gsm8k dataset for gsm8k platinum, and enabled RsLora; the model seems to be learning much quicker in fewer steps.

- It is unclear how much of the improvement comes from the less ambiguous dataset and how much from RsLora.

Nomic.ai (GPT4All) Discord

- Users Advised to Embed Locally for Safety: Members are discussing the benefits of running embedding models and LLMs locally to avoid sending private information to remote services, with one member sharing a shell script for running a local embedding model from Nomic.

- The script uses variables such as `$LLAMA_SERVER`, `$NGL_FLAG`, `$HOST`, `$EMBEDDING_PORT`, and `$EMBEDDING_MODEL` to configure and run the embedding server.

- GPT4All Indexes Documents Locally: A user clarified that GPT4All indexes documents by chunking and embedding them, storing representations of similarities in a private cache, avoiding outside services.

- They suggested that even Qwen 0.5B parameters can work well with documents for local embeddings, though Qwen 1.5B is better.

- User Struggles to Load Local LLM: A member reported being blocked while loading a local LLM, despite having 16GB RAM and an Intel i7-1255U CPU, suspecting the model download was the issue.

- The user, creating an internal documentation tool, is hesitant to use remote services for private documents.

- DIY RAG with Shell Scripts: A member shared shell script examples (`rcd-llm.sh` and `rcd-llm-get-embeddings.sh`) for getting embeddings and sending prompts to a local LLM, creating a custom RAG implementation.

- They recommended using PostgreSQL for storing embeddings instead of relying on remote tools.
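The chunk, embed, and retrieve loop behind a do-it-yourself RAG setup can be sketched in a few lines. This is not the member's script: the word-count "embedding" is a toy stand-in for a local embedding model, the in-memory list stands in for a database such as PostgreSQL, and the document text is invented.

```python
import math
from collections import Counter


def chunk_text(text, chunk_size):
    """Split text into chunks of chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text):
    """Toy embedding: lowercase word counts. A real setup would call a local model."""
    return Counter(word.strip(".,!?").lower() for word in text.split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, index, top_k=1):
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Invented internal-docs text; each sentence is exactly eight words long.
document = (
    "The backup job runs every night at two. "
    "Invoices are stored in the shared finance folder. "
    "The VPN requires a hardware token to connect."
)
chunks = chunk_text(document, 8)
index = [(chunk, embed(chunk)) for chunk in chunks]  # stand-in for a vector store

question = "Where are invoices stored?"
context = retrieve(question, index, top_k=1)
prompt = f"Answer using only this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

Nothing in this flow leaves the machine, which is the property the discussion cared about; swapping in a real embedding model changes only `embed`.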

- GPT4All's stop button is also its start button: A user inquired about stopping text generation in GPT4All, mentioning the absence of a visible stop button or use of Ctrl+C.

- Another user pointed out the stop button is at the bottom right, sharing the same button as the generate button.

Modular (Mojo 🔥) Discord

- Newcomers Start Mojo Journey: A new user asked about learning the Mojo language, with another user pointing them to the official Mojo documentation as a great starting point.

- The member also highlighted the Mojo community, directing the user to the Mojo section of the Modular forums and the general channel on Discord.

- Span Lifetime Woes Plague Mojo Traits: A member sought advice on expressing in Mojo that the lifetime of a returned Span is at least the lifetime of self, providing Rust/Mojo code examples.

- The response indicated that making the trait generic over origin is a possible solution, though trait parameter support might be needed.

- Mojo Eyes Fearless Concurrency: A question arose on whether Mojo has Rust-like fearless concurrency.

- The answer was that Mojo has the borrow checker constraints needed, and is only lacking Send/Sync and a final concurrency model; it may even have a better system than Rust's eventually.

- MLIR Type Construction Suffers Compile-Time Catastrophes: A member reported an issue using parameterized compile-time values in MLIR type construction (specifically !llvm.array and !llvm.ptr) within the MAX/Mojo standard library, detailing the issue in a GitHub post.

- The problem involves a parsing error when defining a struct with compile-time parameters used in the llvm.array type; MLIR's type system appears unable to process parameterized values in this context.

- POP to the Rescue?: Regarding the MLIR issue, another member suggested using the Parametric Operations Dialect (POP).

- They suggested the Mojo team add features such as the __mlir_type[...] macro accepting symbolic compile-time values, or a helper like __mlir_fold(size) to force parameter evaluation as a literal IR attribute.

LlamaIndex Discord

- Auth0 Plugs Auth into GenAI: Auth0's Auth for GenAI now supports LlamaIndex, streamlining authentication integration into agent workflows via an SDK call.

- The auth0-ai-llamaindex SDK (Python & Typescript) enables FGA-authorized RAG, as shown in this demo.

- Agents See Clearly with Visual Citations: LlamaIndex introduces a tutorial on grounding agents with visual citations, linking generated answers to specific document regions.

- A working version of this is directly available here.

- Reasoning LLM Recipes Requested: A member seeks official tutorials for implementing reasoning LLMs from Hugging Face, intended for a Docker app on Hugging Face Space.

- No solutions were found in the current discussion.

- Blockchain Pro Says "How High?": A software engineer with expertise in the blockchain space offers assistance with blockchain projects, specializing in DEX, bridge, NFT marketplace, token launchpad, stable coin, mining, and staking protocols.

- This engineer is "trying to learn more about LlamaIndex".

- Create Llama Aims to Aid AI: A member suggested the create-llama tool to help users with in-depth research with LlamaIndex.

- The tool intends to help with creating LlamaIndex projects quickly.

Cohere Discord

- Cohere's Docs Draw Discussion: A member inquired about structured output examples, such as a list of books, using Cohere, and was directed to the Cohere documentation.

- The discussion emphasized leveraging Cohere's resources for guidance on generating specific output formats.

- Pydantic Schema Sparks Inquiry: A member asked about direct usage of Pydantic schema in `response_format` and sending requests sans the Cohere library in Python.

- A link to the chat reference was shared, suggesting a switch to cURL for API interaction insights.

- List of Companies Model Debated: A member sought advice on the best model for generating a company list based on a given topic.

- It was suggested that Cohere's current fastest and most capable generative model is command.

- Newcomer Aditya Arrives, Aims AI at Openchains: Aditya, with a background in machine vision and control for manufacturing equipment, is exploring web/AI and shared his current project, openchain.earth.

- He's keen to integrate Cohere's AI into his project, leveraging his tech stack that includes VS Code, GitHub Copilot, Flutter, MongoDB, JS, and Python.

tinygrad (George Hotz) Discord

- PMPP Book Touted for GPU Programming: A member suggested using PMPP (4th ed) for GPU programming, and requested compiler recommendations.

- Another member said they are looking into this compiler series and will do LLVM Tutorial as well.

- METAL Sync Issue Grounds LLaMA 7B: A user ran into an `AssertionError` when running LLaMA 7B on 4 virtual GPUs with the METAL backend, which was related to `MultiLazyBuffer` and `Ops.EXPAND`.

- The user fixed the issue by moving the tensor in PR 9761 to keep device info after sampling.

- Gradient Accumulation Conundrums Resolved: A user reported that their call to `backward()` was not working in their training routine and `t.grad is None` before `opt.step()`.

- The user found that calling `zero_grad()` before the step fixed the `t.grad is None` issue during gradient accumulation.

Torchtune Discord

- Gus from Psych Joins Torchtune?: A member requested a Contributor tag for their GitHub profile, humorously referencing the character Gus from Psych.

- Another member welcomed the new team member with a Gus-wave GIF, jokingly alluding to the TV show Psych.

- FSDP Plays Nicely With PyTorch: Torchtune defaults to the equivalent of zero3 and composes well with PyTorch distributed features like FSDP.

- A user moved to torchtune to avoid "the minefield of trying to compose deepspeed + pytorch + megatron", and hoped the project does not over-index on integrating and supporting other frameworks.

- DeepSpeed Recipe Gets Love: The team welcomes a repo that imports torchtune and hosts a DeepSpeed recipe, requiring a single device copy and the addition of DeepSpeed.

- The team confirmed this with enthusiasm.

- Sharding Strategies Support Made Simple: Supporting different sharding strategies is straightforward, and users can tweak recipes using FSDPModule methods to train in the equivalent of zero1-2.

- The team confirms that zero 1-3 are all possible with minor tweaks to the collectives.

LLM Agents (Berkeley MOOC) Discord

- AgentX Mentors MIA?: A member inquired in the #mooc-questions channel about receiving feedback from mentors in the research track of AgentX.

- No additional information was provided.

Codeium (Windsurf) Discord

- Windsurf Waves into JetBrains IDEs: Windsurf launched Wave 7, bringing its AI agent to JetBrains IDEs (IntelliJ, WebStorm, PyCharm, GoLand), detailed in their blog post.

- The beta incorporates core Cascade features like Write mode, Chat mode, premium models, and Terminal integration, with future updates promising additional features like MCP, Memories, Previews & Deploys (changelog).

- Codeium Catches a New Wave, Rebrands as Windsurf: The company has officially rebranded as Windsurf, retiring the frequent misspellings of Codeium, and renaming their AI-native editor to Windsurf Editor and IDE integrations to Windsurf Plugins.

The DSPy Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!