[AINews] Google wakes up: Gemini 2.0 et al

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

TPUs are all you need.

AI News for 12/10/2024-12/11/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (207 channels, and 6549 messages) for you. Estimated reading time saved (at 200wpm): 649 minutes. You can now tag @smol_ai for AINews discussions!

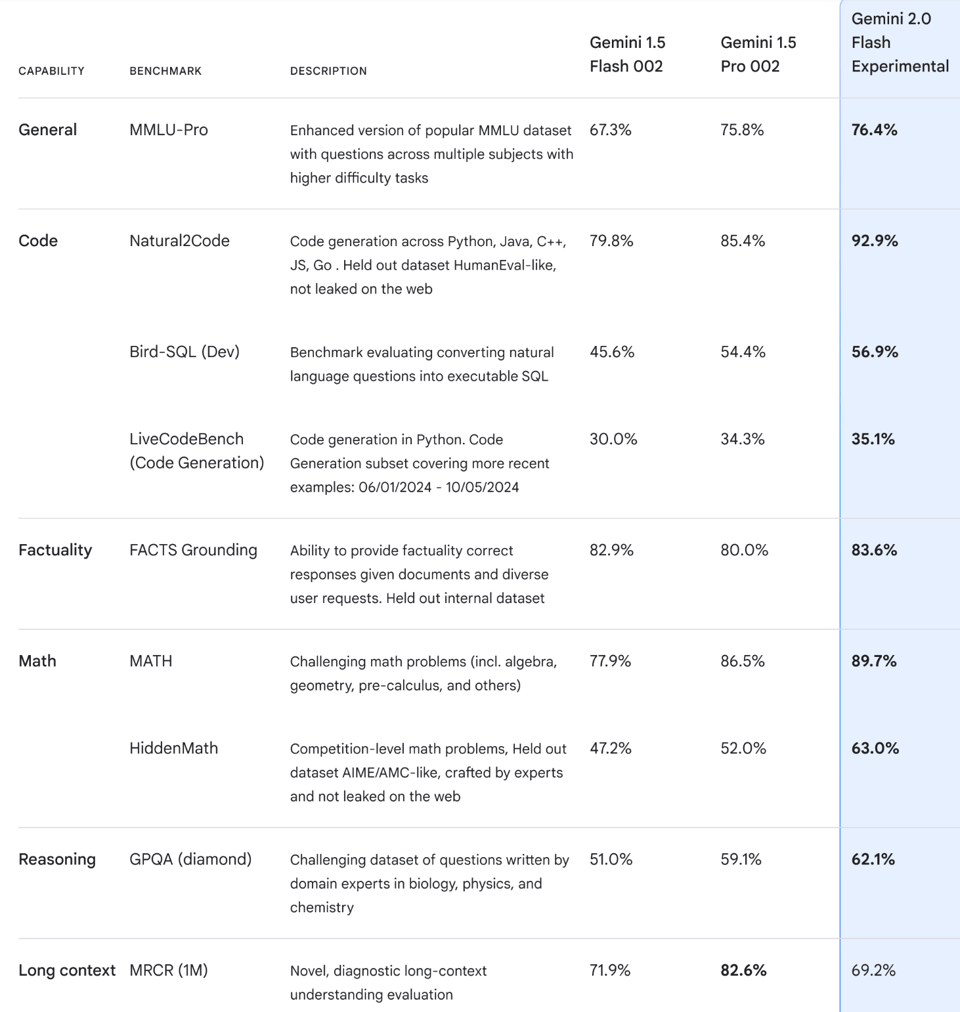

It is day 1 sessions at NeurIPS, and as teased with various Gemini-Exp versions, Sundar Pichai came out swinging with Google's first official Gemini 2 model - Gemini Flash. Nobody expected 2.0 Flash to beat 1.5 Pro but here we are:

It also beats o1-preview on LMArena (but is still behind Gemini-Exp-1206, the suspected 2.0 Pro model).

Pricing is "free" - while 2.0 Flash is still experimental. As if that weren't enough, 2.0 Flash launches with a Multimodal (Vision -AND- Voice) API, and Paige Bailey even stopped by today's Latent Space LIVE/Thrilla on Chinchilla event to show off how it does what OpenAI dared not ship today:

Image output is also trained and teased but not shipped, but it can draw the rest of the owl like you have never seen.

They also announced a bunch of features in limited preview:

- Deep Research: "a research assistant that can dig into complex topics and create reports for you with links to the relevant sources."

- Project Mariner: a browser agent that "is able to understand and reason across information - pixels, text, code, images + forms - on your browser screen, and then uses that info to complete tasks for you", achieved SOTA 83.5% on the WebVoyager benchmark.

- Project Astra updates: multilinguality, new tool use, 10 minutes of sesssion memory, streaming/native audio latency.

- Jules, an experimental AI-powered code agent that will use Gemini 2.0. Working asynchronously and integrated with your GitHub workflow, Jules handles bug fixes and other time-consuming tasks while you focus on what you actually want to build. Jules creates comprehensive, multi-step plans to address issues, efficiently modifies multiple files, and even prepares pull requests to land fixes directly back into GitHub.

Comments and impressions from everyone here at NeurIPS, and online on X/Reddit/Discord was overwhelmingly positive. Google is so back!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Here are the key discussions organized into relevant categories:

Major Model Releases & Updates

- Gemini 2.0 Flash Launch: @demishassabis announced Gemini 2.0 Flash which outperforms 1.5 Pro at twice the speed, with native tool use, multilingual capabilities, and new multimodal features including image generation and text-to-speech. The model will power new agent prototypes like Project Astra and Project Mariner.

- ChatGPT + Apple Integration: OpenAI announced ChatGPT integration into Apple experiences across iOS, iPadOS and macOS, allowing access through Siri, visual intelligence features, and composition tools.

- Claude Performance: @scaling01 noted that Claude 3.5 Sonnet appears to be a distilled version of Opus, with Opus training completion confirmed.

Industry Developments & Analysis

- Google's Progress: Multiple researchers observed Google's advancement with Gemini 2.0 Flash showing strong performance, though noting it's not yet production-ready. The model achieved impressive results on benchmarks like SWE-bench.

- Competition Dynamics: @drjwrae highlighted that while 1.5 Flash was popular for its performance/price ratio, 2.0 brings performance matching or exceeding 1.5 Pro.

- Business Impact: Discussion about market dynamics, with @saranormous noting that the AI industry games are just beginning, comparing it to how internet developments took decades to play out.

Research & Technical Developments

- NeurIPS Conference: Multiple researchers sharing updates from #NeurIPS2024, including @gneubig presenting on agents, LLMs+neuroscience, and alignment.

- LSTM Discussion: @hardmaru shared about Sepp Hochreiter's keynote on xLSTM's advantages in inference speed and parameter efficiency compared to attention transformers.

Humor & Memes

- Industry Commentary: @nearcyan noted "the only way twitter would be impressed by openai's 12 shipping days is if it started with gpt5 and ended with gpt17"

- AI Model Names: Discussion about naming conventions for AI models with various humorous takes on different companies' approaches.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Gemini 2.0 Flash Achievements and Comparisons

- Gemini 2.0 Flash beating Claude Sonnet 3.5 on SWE-Bench was not on my bingo card (Score: 287, Comments: 53): Gemini 2.0 Flash achieved a 51.8% performance on the SWE-bench Verified benchmark, surpassing Claude Sonnet 3.5's 50.8%. Other models like GPT-4o and o1-preview scored between 31.0% and 41.0%, indicating a significant performance gap.

- Scaffolding and Testing Methods: Discussions highlight the importance of scaffolding in model performance, with some users noting that Gemini 2.0 Flash utilizes multiple sampling methods to achieve its score, unlike Claude Sonnet 3.5 which is perceived as a more straightforward model. The debate includes whether comparisons between models are fair due to differences in testing methodologies, such as using hundreds of samples versus single-shot methods.

- Context Window and Performance: Gemini 2.0 Flash is noted for its larger context window, which some users argue gives it an edge over models like Claude and o1. This capability is seen as crucial in handling real-world software engineering tasks, contributing to its higher score on the SWE-bench Verified benchmark.

- Industry Perspectives and Concerns: There is a broader conversation about the dominance of companies like Google and OpenAI in the AI space, with users expressing concerns over the implications of their control. Some prefer Google for its contributions to open-source projects, while others worry about the fast-paced development approach of companies like OpenAI.

- Gemini Flash 2.0 experimental (Score: 141, Comments: 53): Gemini Flash 2.0 is being discussed in relation to an experimental update announced by Sundar Pichai through his Twitter post. The update presumably includes new features or improvements, though specific details are not provided in the post.

- Gemini 2.0 Flash Performance: The model shows a significant advancement with a 92.3% accuracy on natural code, marking a 7% improvement over the 1.5 Pro version, positioning Google as a strong competitor against OpenAI. However, it performs worse on the MRCR long context benchmark compared to the previous 1.5 Flash model, indicating a trade-off between general improvements and specific capabilities.

- API and Usage: Users can access the model via Google AI Studio with a "pay as you go" model, costing $1.25 per 1M token input and $5 per 1M token output. There is a 1500 replies/day limit in AI Studio, with some users facing QUOTA_EXHAUSTED issues, likely due to API key arrangements.

- Market and Future Expectations: There is an anticipation for Gemma 3 with enhanced multimodal capabilities, reflecting user interest in future developments. The model's pricing strategy is seen as a potential market dominance factor, and its integration of native tool use and real-time applications is highlighted as a key innovation.

- Gemini 2.0 Flash Experimental, anyone tried it? (Score: 96, Comments: 44): Gemini 2.0 Flash Experimental offers capabilities in multimodal understanding and generation, supporting use cases such as processing code and generating text and images. The interface details pricing as $0.00 for both input and output tokens up to and over 128K tokens, with a knowledge cutoff date of August 2024, and rate limits set at 15 requests per minute.

- Gemini 2.0 impresses users with its object localization capabilities, which can detect specified object types and draw bounding boxes without the need for custom ML training, a feature not available in ChatGPT.

- Users note the speed of Gemini 2.0, with some comparing its performance to Claude in data science tasks. While both models struggle with error correction, users appreciate Google's approach of testing multiple models against each other, despite hitting demand limits and producing basic features compared to Claude's more complex ones.

- There are some compatibility issues reported, such as with cline and cursor composer, though workarounds like editing extension files are suggested. Additionally, image generation is currently restricted to early testers, as per the announcement.

Theme 2. QRWKV6-32B and Finch-MoE-37B-A11B: Innovations in Linear Models

- New linear models: QRWKV6-32B (RWKV6 based on Qwen2.5-32B) & RWKV-based MoE: Finch-MoE-37B-A11B (Score: 81, Comments: 28): Recursal has released two experimental models, QRWKV6-32B and Finch-MoE-37B-A11B, which leverage the efficient RWKV Linear attention mechanism to reduce time complexity. QRWKV6 combines Qwen2.5 architecture with RWKV6, allowing conversion without retraining from scratch, while Finch-MoE is a Mixture-of-experts model with 37B total parameters and 11B active parameters, promising future expansions and improvements. More models, such as Q-RWKV-6 72B Instruct and Q-RWKV-7 32B, are in development. For more details, visit their Hugging Face model cards and Finch-MoE.

- RWKV's Potential and Limitations: Commenters discussed that while RWKV offers theoretical speed advantages and can handle long context lengths, current inference engines are not yet optimized to fully realize these benefits. There is also interest in the practicality of converting transformers to RWKV, despite concerns about small context lengths due to limited training resources.

- Implementation Challenges: There are challenges in implementing new architectures like QRWKV6 on platforms such as koboldcpp, as it often requires dedicated effort from the community to adapt and implement these models. The RWKV community is noted for its potential to eventually overcome these hurdles.

- Future Developments and Expectations: Commenters expressed excitement over the potential of future models like RWKV 7 and the possibility of QwQ models. There is hope for linear reasoning models, and discussions touched on the need for reasoning-style data to improve model conversions and inference time thinking.

- Speculative Decoding for QwQ-32B Preview can be done with Qwen-2.5 Coder 7B! (Score: 69, Comments: 28): The post discusses using Qwen-2.5 Coder 7B as a draft model for speculative decoding in QwQ-32B, noting that both models have matching vocab sizes. On a 16 GB VRAM system, performance gains were limited, but the author anticipates significant improvements with larger VRAM GPUs (e.g., 24 GB). Subjectively, the QwQ with Qwen Coder appeared more confident and logical, though it used more characters and time; the author invites others to experiment and share their results. PDF link for detailed outputs is provided.

- Speculative Decoding Techniques: There is debate over the effectiveness of using a smaller draft model with the larger QwQ-32B model. Some users suggest that the speed improvements are noticeable when the smaller model is significantly less than ten times the size of the larger one, such as 0.5B or 1.5B models, but not much larger.

- Performance Observations: Users report that speculative decoding can lead to speed-ups of 1.5x to 2x in some setups, though the perceived improvements in logic or quality are subjective. The use of a fixed seed is recommended to verify if the perceived improvements are due to speculative decoding or other factors like GPU offload inaccuracies.

- Speculative Decoding Methods: There is mention of two speculative decoding methods: one that samples from both models and uses the smaller model only if samples agree, and another that uses logits from the smaller model with rejection sampling. The exact method implemented in llama.cpp remains unclear to some users.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Google's Gemini 2.0: Strategic Release Amidst OpenAI Announcements

- Google releasing Gemini 2.0 a few hours before the daily openai live: (Score: 244, Comments: 64): Google released Gemini 2.0 just hours before OpenAI's daily live event, leading to speculation about the potential confirmation of GPT-5/Epsilon. This timing suggests a competitive dynamic between the two tech giants in the AI landscape.

- Gemini 2.0 Performance: Users have noted that Gemini Flash 2.0 performs exceptionally well on benchmarks, with some suggesting it might outperform models like Sonnet 3.5 and potentially be more cost-effective than 4o-mini. The context window is significantly larger than its predecessor, making it particularly useful for coding tasks.

- Market Dynamics: There is a strong sentiment favoring competition between Google and OpenAI, as it drives innovation and prevents monopolistic stagnation. Users appreciate the competitive landscape, which keeps companies motivated to improve their offerings.

- Adoption and Accessibility: Despite some skepticism about its recognition, Gemini is integrated into many Google products, which could facilitate its adoption as it becomes more recognized for its performance. It's available for testing on platforms like AI Studio, and users have found it accessible and functional.

- Google Just Introduced Gemini 2.0 (Score: 202, Comments: 45): Google introduced Gemini 2.0 during OpenAI week, indicating a strategic move to highlight advancements in AI technology and accessibility.

- Discussions emphasize skepticism about Google's Gemini 2.0, with some users expressing disappointment due to a lack of new core intelligence features and concerns about Google's history of over-promising in demos. Others counter that Gemini is already accessible for testing on aistudio, with agents expected in January, and some users report positive experiences with the technology.

- The potential for Google to undercut OpenAI on pricing due to their use of TPUs is highlighted, suggesting Google could dominate the AI market. Criticism of OpenAI's pricing strategy and product releases, such as the $200/month model and the Sora release, suggests financial struggles and competitive disadvantages against Google's integrated approach.

- Some users speculate about strategic timing in announcements, with Google's release timing potentially aimed at outpacing OpenAI's expected announcements. The competitive landscape is seen as a dynamic 'tit for tat' environment, with some users expressing enjoyment in watching these major AI companies compete.

Theme 2. Google GenCast: 15-Day AI Weather Forecast Spearheading Future Predictions

- Google says AI weather model masters 15-day forecast (Score: 286, Comments: 38): DeepMind's GenCast AI model reportedly achieves over 97% precision in 15-day weather forecasts, outperforming various models across more than 35 countries. For further details, refer to the article on phys.org.

- Users express skepticism about the accuracy of AI-driven weather forecasts, with some noting that current forecasts beyond 5 days are often unreliable. One commenter suggests that historic averages might be more accurate than predictions for forecasts beyond two weeks.

- The GenCast model's code repository is accessible via a link in the publication, which could be useful for those interested in examining or utilizing the model further.

- There is a discussion about the potential sources of data for AI models, with some users speculating that models like GenCast might rely on NOAA data. However, others highlight that AI will not replace traditional data collection methods like satellites and weather stations.

Theme 3. ChatGPT Outages: Troubles Improving Stability and User Dependency

- I came to ask it chat gpt was down... Seems like 5,000,000 other people also did... (Score: 299, Comments: 186): ChatGPT experienced downtime, causing frustration among students during finals. This issue was widely noticed, with many users seeking confirmation of the outage.

- ChatGPT's downtime during finals week caused significant frustration among students, highlighting its importance as a tool for learning and completing assignments. Users expressed their reliance on it for tasks like programming and brainstorming, emphasizing that it's not merely a means for cheating.

- The outage prompted humorous and exasperated reactions, with some users jokingly blaming themselves for the crash, while others reminisced about using alternatives like Chegg. The event underscored the high demand and dependency on AI tools during critical academic periods.

- Multiple comments noted the rarity of such outages, suggesting it was an unlucky timing due to increased usage and recent updates. The downtime led to a mix of humorous banter and serious concern about the impact on academic performance.

- ⚠️ ChatGPT, API & SORA currently down! Major Outage | December 11, 2024 (Score: 195, Comments: 80): Major Outage: On December 11, 2024, significant outages affected ChatGPT, API, and Sora services, with OpenAI's status page indicating ongoing investigations. A 90-day uptime graph shows "Major Outage" for API and ChatGPT, while Labs and Playground remain "Operational."

- Many users speculate that the iOS 18.2 update and the recent launch of Sora contributed to the outage, with new features like ChatGPT support integrated into Siri and Writing Tools potentially straining the servers. dopedub noted that these updates might have been poorly timed, leading to the system overload.

- There is a broader sentiment about the dependency on ChatGPT, with comments like legend503's highlighting the fragility of reliance on AI tools when they are unavailable. Users expressed frustration and humor about the downtime, with some suggesting alternative platforms or methods to access ChatGPT during the outage.

- Users shared various workarounds, such as trying to access ChatGPT through iPhones or using the mobile app, with some success reported. InspectorOk6664 and others noted that they managed to access the service, albeit with limited functionality, suggesting that the outage impact varied across platforms.

Theme 4. Sora AI Criticisms: Inferior Outputs Against Rivals and Discontent Among Users

- Sora is awful (Score: 350, Comments: 193): Sora's performance is heavily criticized for its inability to accurately generate videos, even with simple tasks like making cats dance, resulting in poor quality outputs. The user expresses dissatisfaction with the cost of Sora compared to other more effective and often cheaper or free text-to-video generators, stating that the service does not justify its price unless it significantly improves.

- Sora's Performance and Limitations: Many users agree that Sora underperforms compared to alternatives like Runway Gen-3 and Luma, with complaints about its inability to handle tasks like image-to-video generation effectively. Users note that the public version of Sora is a scaled-down "Turbo" model, which lacks the computational power of the demo version that was showcased earlier.

- Technical and Market Challenges: Comments suggest that Sora's limitations are due to computational constraints and the need to balance demand with available resources, leading to a watered-down version being released. This has resulted in disappointment among users who expected the capabilities demonstrated in earlier demos, which likely used more resources than what is available to the public.

- Community Sentiment and Comparisons: The community expresses skepticism about OpenAI's handling of Sora, with some speculating that the product was strategically released to compete with Google's Gemini Pro. Users also criticize OpenAI's Dalle as inferior to MidJourney, indicating a broader dissatisfaction with OpenAI's offerings relative to competitors.

- Used Sora Al to recreate my favorite Al video (Score: 2574, Comments: 140): Sora AI is criticized for its inability to perform expected tasks, resulting in negative user feedback. The post mentions attempting to use Sora AI to recreate a favorite AI video, highlighting user dissatisfaction with its performance.

- Users overwhelmingly prefer the original video over the Sora AI recreation, citing the original's humor and appeal. Many comments express dissatisfaction with the realism and quality of the AI-generated content, with some users noting inappropriate elements like pornographic frames.

- There is speculation and curiosity about the prompt and training data used for Sora AI, with some users questioning if it was trained on inappropriate content. The discussion hints at a desire to understand the AI's development process and its limitations.

- The conversation includes humorous and critical remarks about the AI's output, such as comparisons to video game characters and references to cultural events like 9/11. Despite criticism, there is recognition of the AI's role in showcasing technological evolution and potential future applications.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. New AI Models and Significant Updates

- Gemini 2.0 Flash Launches with Stellar Performance: Google DeepMind unveiled Gemini 2.0 Flash, debuting at #3 overall in the Chatbot Arena and outperforming models like Flash-002. This release enhances multimodal capabilities and coding performance, setting new benchmarks in AI model development.

- Nous Research Releases Hermes 3 for Enhanced Reasoning: Nous Research launched Hermes 3 3B on Hugging Face, featuring quantized GGUF versions for optimized size and performance. Hermes 3 introduces advanced features in user alignment, agentic performance, and reasoning, marking a significant upgrade from its predecessor.

- Windsurf Wave 1 Enhances Developer Tools: Windsurf Wave 1 officially launched, integrating major autonomy tools like Cascade Memories and automated terminal commands. The update also boosts image input capabilities and supports development environments such as WSL and devcontainers. Explore the full changelog for detailed enhancements.

Theme 2. AI Tool Performance and Comparative Analysis

- Windsurf Outperforms Cursor in AI Tool Comparisons: Community discussions highlight Windsurf as a superior AI tool compared to Cursor, emphasizing its more reliable communication and transparent changelogs. Users appreciate Windsurf's update approach and responsiveness, positioning it ahead in the AI tool landscape.

- Muon Optimizer Emerges as a Strong Alternative to AdamW: The Muon optimizer gains attention for its robust baseline performance and solid mathematical foundation, positioning it as a viable alternative to traditional optimizers like AdamW. Although it hasn't yet outperformed AdamW, its resilience in models such as Llama 3 underscores its potential in future developments.

- Gemini 2.0 Flash Surpasses Competitors in Coding Tasks: Gemini 2.0 Flash has been praised for its exceptional performance in spatial reasoning and iterative image editing, outperforming models like Claude 3.5 and o1 Pro. Users have noted its competitive benchmarks, sparking further discussions on its advancements over existing offerings.

Theme 3. Feature Integrations and Platform Enhancements

- ChatGPT Integrates Seamlessly with Apple Ecosystem: During the 12 Days of OpenAI event, ChatGPT was successfully integrated into iOS and macOS. Demonstrated by Sam Altman and team members, this integration includes enhanced holiday-themed features to engage users during the festive season.

- NotebookLM Boosts Functionality with Gemini 2.0 Integration: NotebookLM confirmed the integration of Gemini 2.0, enhancing its capabilities for real-time AI engagement within Discord guilds. This upgrade is expected to bolster NotebookLM's performance, despite humorous critiques on branding choices.

- Supabase Integration Enhances Bolt.new Workflows: Bolt.new provided a sneak peek of its Supabase integration during a live stream, promising improved workflow functionalities for developers. This integration aims to streamline existing processes and attract more users by enhancing the platform's utility.

Theme 4. Pricing, Usage Transparency, and Subscription Models

- Windsurf Rolls Out Transparent Pricing and Usage Updates: An updated pricing system for Windsurf has been introduced, featuring a new quick settings panel that displays current plan usage and trial expiry. The update also includes a 'Legacy Chat' mode in Cascade that activates upon exhausting Flow Credits, providing limited functionality without additional costs.

- Self-Serve Upgrade Plans Simplify Subscription Management: Windsurf has introduced a self-serve upgrade plan button, allowing users to effortlessly access updated plans through this link. This feature simplifies the process of scaling subscriptions based on project needs, enhancing user experience and flexibility.

- $30 Pro Plan Expands App Capabilities for Open Interpreter: Killianlucas announced that the $30 monthly desktop app plan for Open Interpreter increases usage limits and provides the app without needing an API key for free users. He recommended sticking to the free plan unless users find the expanded features overwhelmingly beneficial, as the app continues to evolve rapidly in beta.

Theme 5. Training, Fine-Tuning, and Cutting-Edge Research

- Eleuther Analyzes Training Jacobian to Uncover Parameter Dependencies: Researchers at Eleuther published a paper on arXiv exploring the training Jacobian, revealing how final parameters depend on initial ones by analyzing the derivative matrix. The study differentiates between bulk and chaotic subspaces, offering insights into neural training dynamics.

- Challenges in Fine-Tuning Qwen 2.5 Highlight Integration Difficulties: In the Unsloth AI Discord, users reported difficulties in obtaining numeric outputs from the fine-tuned Qwen 2.5 model, especially with simple multiplication queries. Discussions emphasized that integrating domain-specific knowledge is challenging, suggesting that pre-training or employing RAG solutions might offer more effective outcomes.

- Innovations in LLM Training with COCONUT and RWKV Architectures: The introduction of COCONUT (Chain of Continuous Thought) enables LLMs to reason within a continuous latent space, optimizing processing through direct embedding approaches. Additionally, new RWKV architectures like Flock of Finches and QRWKV-6 32B have been released, emphasizing optimized training costs without compromising performance.

PART 1: High level Discord summaries

Codeium / Windsurf Discord

- Wave 1 Launch Enhances Windsurf Capabilities: Windsurf Wave 1 has officially launched, introducing major autonomy tools such as Cascade Memories and automated terminal commands. The update also includes enhanced image input capabilities and support for development environments like WSL and devcontainers. Check out the full changelog for detailed information.

- Users have expressed excitement over the new features, highlighting the integration of Cascade Memories as a significant improvement. The launch aims to streamline developer workflows by automating terminal commands and supporting diverse development environments.

- Windsurf Pricing and Usage Transparency Update: An updated pricing system for Windsurf is rolling out, featuring a new quick settings panel that displays current plan usage and trial expiry. Detailed information about the changes is available on the pricing page.

- The update introduces a 'Legacy Chat' mode in Cascade, which activates when users exhaust their Flow Credits, allowing limited functionality without additional credits. This change aims to provide clearer usage metrics and enhance transparency for users managing their subscriptions.

- Enhanced Python Support in Windsurf: Windsurf has improved language support for Python, offering better integration and advanced features for developers. This enhancement is part of the platform's commitment to empowering developers with more effective tools.

- Additionally, a self-serve upgrade plan button has been introduced, enabling users to easily access updated plans through this link. This feature simplifies the process of scaling their subscriptions based on their project needs.

- Cascade Image Uploads and Functionality Enhancements: Cascade image uploads are no longer restricted to 1MB, allowing users to share larger files seamlessly. This improvement is part of ongoing efforts to enhance usability and functionality within the platform.

- The increased upload limit aims to support more extensive workflows, enabling users to incorporate higher-resolution images into their projects without encountering size limitations. This change contributes to a more flexible and efficient user experience.

- Cascade Model Performance Issues Reported: Users have reported that Cascade Base is experiencing performance issues, such as hanging and unresponsiveness, which affects its reliability for coding tasks. Some users have encountered HTTP status 504 errors during usage.

- There are speculations that these instability issues might be linked to ongoing problems with OpenAI, leading several users to consider downgrading or switching to alternative tools. The community is actively discussing potential solutions to mitigate these performance challenges.

OpenAI Discord

- ChatGPT Apple Integration: In the YouTube demo, ChatGPT was integrated into iOS and macOS, showcased by Sam Altman, Miqdad Jaffer, and Dave Cummings during the 12 Days of OpenAI event.

- The festive presentation featured team members in holiday sweaters, aiming to enhance viewer engagement and reflect the holiday season spirit.

- Gemini 2.0 Flash Outperforms: Gemini 2.0 Flash has been lauded by users for its superior performance, especially in spatial reasoning and iterative image editing, outperforming models like o1 Pro.

- Community comparisons highlight Gemini 2.0 Flash's competitive benchmarks, sparking discussions on its advancements over OpenAI's offerings.

- OpenAI Services Experience Downtime: OpenAI experienced service outages affecting ChatGPT and related API tools, as reported in the status update.

- Users noted disruptions coinciding with the Apple integration announcement, with API traffic partially recovering while Sora remained down as of December 11, 2024.

- Challenges in Fine-Tuning OpenAI Models: Developers reported that fine-tuned OpenAI models still generate generic responses despite completing training, seeking assistance with their JSONL configurations.

- Community feedback is sought to identify potential issues in training data, aiming to enhance model specificity and performance.

- Chaining Custom GPT Tools: Users are encountering difficulties in chaining multiple Custom GPT tools within a single prompt, where only the first tool's API call is executed.

- Suggestions include adding meta-functional instructions and using canonical tool names to improve tool interaction management.

Cursor IDE Discord

- Cursor Slows Amid OpenAI Model Hiccups: Users reported persistent performance issues with Cursor, notably sluggish request response times and problematic codebase indexing. These issues appear linked to upstream problems with OpenAI models, resulting in degraded platform performance. Cursor Status shows ongoing downtimes affecting key functionalities.

- Community members expressed frustration over Cursor's inability to code effectively during these downtimes. A feature request for long context mode received significant attention, though formal support remains pending.

- Agent Mode Hangs Code Flow: Cursor's Agent mode is facing widespread complaints due to frequent hangs when processing code or accessing the codebase. Users have found that reindexing or restarting Cursor can temporarily alleviate these issues.

- Despite temporary fixes, the recurring nature of Agent mode disruptions continues to hinder developer productivity, prompting discussions on potential long-term solutions within the community.

- Windsurf Surges Past in AI Tool Comparisons: Discussions highlighted Windsurf as a superior AI tool compared to Cursor, emphasizing its more reliable communication and transparent changelogs. Users appreciate Windsurf's approach to updates and feedback integration.

- Participants noted that while Cursor offers unique features, its changelog transparency and responsiveness lag behind competitors like Windsurf, suggesting areas for improvement to meet user expectations.

- Gemini 2.0 Shines Twice as Fast: Sundar Pichai announced the launch of Gemini 2.0 Flash, which outperforms Gemini 1.5 Pro on key benchmarks at twice the speed. This advancement marks a significant milestone in the Gemini model's development.

- The AI community is keenly observing the progress of Gemini 2.0, with expectations high for its performance improvements and potential impact on existing models like Claude.

- Windsurf's Transparent Changelogs Impress: Windsurf users praised the tool's clear communication and detailed changelogs available at Windsurf Editor Changelogs. This transparency demonstrates a strong commitment to user feedback and continuous improvement.

- The favorable reception of Windsurf's engagement model contrasts with Cursor, prompting discussions on adopting similar transparent practices to enhance user trust and satisfaction.

Eleuther Discord

- Training Jacobian Analysis Reveals Parameter Dependencies: A recent paper on arXiv explores the training Jacobian, uncovering how final parameters hinge on initial ones by analyzing the derivative matrix. The study highlights that training manipulates parameter space into bulk and chaotic subspaces.

- The research was conducted using a 5K parameter MLP due to computational constraints, yet similar spectral patterns were observed in a 62K parameter image classifier, suggesting broader implications for neural training dynamics.

- Muon Optimizer Emerges as a Strong Contender: Muon has garnered attention for its robust baseline performance and solid mathematical foundation, positioning it as a viable alternative to existing optimizers like AdamW. Keller Jordan detailed its potential in optimizing hidden layers within neural networks.

- While Muon hasn't yet decisively outperformed AdamW, its resilience against issues observed in models such as Llama 3 underscores its promise in future optimizer developments.

- Addressing Inherent Biases in Large Language Models: A forthcoming paper discusses that harmful biases in large language models are an intrinsic result of their current architectures, advocating for a fundamental reassessment of AI design principles. The study emphasizes that biases stem from both dataset approximations and model architectures.

- This perspective encourages the AI community to focus on understanding the root causes of biases rather than merely adjusting specific implementations, fostering more effective strategies for bias mitigation.

- Integrating HumanEval in lm_eval_harness for Enhanced Perplexity Metrics: lm_eval_harness is being utilized to assess model perplexity on datasets formatted in jsonl files, with members sharing custom task configurations. A specific pull request (#2559) aims to facilitate batch inference, addressing processing inefficiencies.

- The integration seeks to deliver per-token perplexity results essential for comparative studies, with community members actively contributing solutions to streamline evaluation workflows.

- Release of New RWKV Architectures Enhances Model Efficiency: New RWKV architectures, namely Flock of Finches and QRWKV-6 32B, have been launched, emphasizing optimized training costs without compromising performance.

- These models demonstrate comparable capabilities to larger counterparts while maintaining reduced computational demands, making them attractive for scalable AI applications.

Bolt.new / Stackblitz Discord

- Cole Amplifies OSS Bolt on Twitter: During a live session, Cole discussed OSS Bolt, sharing the journey and developments, which was viewed by the community on Twitter.

- OSS Bolt is gaining traction, with the latest Bolt Office Hours: Week 8 now available on YouTube, featuring resource links to enhance viewer engagement.

- Supabase Sneak Peek Enhances Bolt: A live stream provided a sneak peek of the Supabase integration, set to enhance existing workflows and attract interest from developers.

- This Supabase integration promises to improve workflow functionalities, as revealed during the live session, exciting the developer community.

- Supabase Tops Firebase for Stripe Integration: Several users discussed transitioning from Firebase to Supabase for better Stripe integration, evaluating each database’s merits.

- The decision to choose Supabase is driven by the desire to avoid vendor lock-in, making it a preferred choice among some app developers.

- Shopify API Integration Boosts Web App: A member developing a web app synchronized with their Shopify store received recommendations to integrate with Shopify's APIs, citing Shopify API Docs.

- Implementing Shopify API Integration ensures secure data access exclusive to the app, leveraging comprehensive documentation for seamless development.

- Bolt AI Token Drain Sparks Concerns: Users reported frustrations with Bolt AI making repeated errors and consuming large amounts of tokens without resolving issues, with one user using 200k tokens without changes.

- Issues are attributed to the underlying AI, Claude, prompting suggestions to refine prompt framing to minimize wasted tokens during debugging sessions.

Unsloth AI (Daniel Han) Discord

- Qwen 2.5 Fine-tuning Faces Numeric Output Issues: A user reported challenges in obtaining numeric outputs from the fine-tuned Qwen 2.5 model, particularly when handling simple multiplication queries.

- The discussion emphasized that integrating domain-specific knowledge is difficult, suggesting that pre-training or employing RAG solutions might offer more effective outcomes.

- Gemini Voice Surpasses Competitors: Community members expressed excitement over the capabilities of Gemini Voice, describing it as 'crazy' and outperforming some existing alternatives.

- Comparisons highlighted that OpenAI's real-time voice API is more costly and less capable, positioning Gemini Voice as a superior option.

- Managing Memory Spikes during Training: Concerns were raised about unexpected spikes in RAM and GPU RAM usage during training phases, causing runtime collapses on Colab.

- Users recommended strategies such as adjusting batch sizes, balancing dataset lengths, and ensuring high-quality data to mitigate memory issues.

- Effective Model Conversion with llama.cpp: Users discussed utilizing llama.cpp for model conversion, sharing experiences and troubleshooting methods to streamline the process.

- One member successfully resolved conversion issues by performing a clean install and leveraging PyTorch 2.2 as a specific dependency version.

- WizardLM Arena Datasets Now Available: A member uploaded all datasets used in the WizardLM Arena paper to the repository, with the commit b31fa9d verified a day ago.

- These datasets provide comprehensive resources for replicating the WizardLM Arena experiments and further research.

Nous Research AI Discord

- Hermes 3 Launches with Upgraded Reasoning: Hermes 3 3B has been released on Hugging Face with quantized GGUF versions for optimized size and performance, introducing advanced capabilities in user alignment, agentic performance, and reasoning.

- This release marks a significant upgrade from Hermes 2, enhancing overall model efficiency and effectiveness for AI engineers.

- Google DeepMind Debuts Gemini 2.0: Gemini 2.0 was unveiled by Google DeepMind, including the experimental Gemini 2.0 Flash version with improved multimodal output and performance, as announced in their official tweet.

- The introduction of Gemini 2.0 Flash aims to facilitate new agentic experiences through enhanced tool use capabilities.

- DNF Advances 4D Generative Modeling: The DNF model introduces a new 4D representation for generative modeling, capturing high-fidelity details of deformable shapes using a dictionary learning approach, as detailed on the project page.

- A YouTube overview demonstrates DNF's applications, showcasing temporal consistency and superior shape quality.

- COCONUT Enhances LLM Reasoning Processes: COCONUT (Chain of Continuous Thought) enables LLMs to reason within a continuous latent space, optimizing processing through a more direct embedding approach, as described in the research paper.

- This methodology builds on Schmidhuber's work on Recurrent Highway Networks, offering end-to-end optimization via gradient descent for improved reasoning efficiency.

- Forge Bot Streamlines Discord Access: Members can now access Forge through a Discord bot without needing API approval by selecting the Forge Beta role from the customize page and navigating to the corresponding channel.

- This enhancement facilitates immediate testing and collaboration within the server for AI engineers.

Stability.ai (Stable Diffusion) Discord

- VRAM Usage and Management: Users discussed how caching prompts in JSON format impacts memory usage, noting that storing 20 prompts consumes only a few megabytes. They also explored running models in lower precision like FP8 to reduce memory costs.

- Optimizing FP8 precision is crucial for managing VRAM efficiently in large-scale AI deployments.

- AI Model Recommendations for Image Enhancement: A user requested model recommendations for generating spaceships, mentioning their use of Dream Shaper and Juggernaut. Another member suggested training a LoRA if existing models did not meet their needs.

- Training a LoRA can offer customized enhancements for specific image generation requirements.

- GPU Scalping Concerns: Members shared insights on how scalpers might deploy web scrapers to purchase GPUs on launch days. One user expressed concerns about acquiring a GPU before scalpers, mentioning they avoided waiting in lines due to being outside the US.

- Preventing GPU scalping requires strategies beyond physical queueing, especially for international users.

- AI for Image Classification and Tagging: A user inquired about tools for extracting tags or prompts from images, leading to a discussion about image classification techniques. Clip Interrogator was recommended as a tool that can describe images without prior metadata.

- Clip Interrogator can automate the tagging process, enhancing efficiency in managing large image datasets.

- AI Programs for Voice Training: Users discussed programs commonly used to train AI on specific voices, with Applio mentioned as a potential tool. The conversation emphasized interest in tools for voice manipulation and AI training applications.

- Choosing the right program like Applio can improve workflows in voice-specific AI training tasks.

Notebook LM Discord Discord

- Integrating NotebookLM into Discord: A member discussed adding a chat feature to Discord, enabling users to directly query NotebookLM for enhanced interactivity.

- This integration aims to provide a seamless connection for players seeking real-time AI engagement within the Discord guild.

- NotebookLM for TTRPG Rule Conversion: A member showcased using a 100-page custom TTRPG rule book with NotebookLM to convert narrative descriptions into specific game mechanics.

- They highlighted the tool's effectiveness in translating complex rules into actionable mechanics, facilitating smoother gameplay.

- Enhancing Podcasts with NotebookLM: Users explored leveraging NotebookLM for podcast creation, including customizing presenters and utilizing visuals from the Talking Heads Green Screen Podcast.

- Members suggested employing chromakey techniques to personalize podcast backdrops, enhancing visual appeal.

- Gemini 2.0 Integration in NotebookLM: Discussions confirmed the integration of Gemini 2.0 with NotebookLM, with members humorously noting the absence of the Gemini symbol in branding.

- The transition is expected to bolster NotebookLM's capabilities, despite light-hearted critiques on branding choices.

- NotebookLM Features and AI Tool Experiences: Users examined NotebookLM's limitations, including a maximum of 1,000 notes, 100,000 characters each, and 50 sources per notebook, raising queries about PDF size constraints.

- Additionally, members shared positive experiences with tools like Snapchat AI and Instagram's chat AI, emphasizing NotebookLM's utility in their workflows during challenging periods.

Interconnects (Nathan Lambert) Discord

- Gemini 2.0 Flash Launch: Google unveiled Gemini 2.0 Flash, highlighting its superior multimodal capabilities and enhanced coding performance compared to previous iterations. The launch event was covered in detail on the Google DeepMind blog, emphasizing its top-tier benchmark scores.

- Users have praised Gemini 2.0 Flash for its robust coding functionalities, with comparisons to platforms like Claude. However, some concerns were raised about its stability and effectiveness relative to other models, as discussed in multiple tweets.

- Scaling Laws Debate at NeurIPS: Dylan Patel is set to engage in an Oxford Style Debate on scaling laws at NeurIPS, challenging the notion that scaling alone drives AI progress. The event promises to address critiques from industry influencers, with registration details available in his tweet.

- The community has shown strong engagement, finding the debate format entertaining and insightful. Discussions emphasize the importance of search, adaptation, and program synthesis over mere model scaling, reflecting broader AI development trends.

- Joining Meta's Llama Team: A member announced their upcoming role with AI at Meta's Llama team, aiming to develop the next generation of Llama models. This move underscores a commitment to fostering a healthier AI ecosystem aligned with AGI goals and open-source initiatives.

- The announcement sparked discussions about integrating Gemini insights into the Llama project, despite past challenges with Llama 3.2-vision. Members humorously debated the potential distractions from presenting Kambhampathi's works, highlighting community support for the new endeavor.

- OpenAI's Product-Centric Strategy: OpenAI continues to emphasize a product-centric approach, maintaining its leadership in the AI field by focusing on user-friendly products. This strategy has kept OpenAI ahead as competitors lag, as observed within the community discussions.

- The focus on usability ensures that OpenAI's developments remain relevant and impactful, reinforcing the importance of practical applications in ongoing AI advancements.

- LLM Creativity Benchmarking: A discussion emerged on evaluating LLM capabilities in creative tasks, revealing discrepancies between benchmark scores and user satisfaction. Claude-3 remains popular despite not always leading in creativity metrics.

- Community members are exploring methodologies to better assess creative outputs of LLMs, aiming to align benchmarking practices with actual user experiences and expectations.

LM Studio Discord

- Maximizing GPU Utilization in LM Studio: Discussions centered on using multiple GPUs, including two 3070s and a 3060ti, with LM Studio's GPU offload currently functioning as a simple toggle.

- Users are exploring workaround solutions through environment variables to enhance multi-GPU performance due to existing limitations.

- Advancing Document Merging with Generative AI: A member sought methods to merge two documents using generative AI, but suggestions leaned towards traditional techniques like the MS Word merge option.

- Alternative solutions included writing a custom script for exact merges instead of relying on vague AI prompts.

- Enabling Model Web Access via API Integration: Queries about providing web access to models received answers pointing towards requiring custom API solutions instead of the standard chat interface.

- This indicates a growing interest in integrating models with external tools and websites for enhanced operational capabilities.

- Integrating Alphacool's D5 Pump in Hardware Setup: Alphacool introduced a model with the D5 pump pre-installed, impressing some members.

- However, one member expressed regret over not choosing this setup due to space constraints in their extensive setup comprising 4 GPUs and 8 HDDs.

Latent Space Discord

- Gemini 2.0 Flash Launches: Google announced the launch of Gemini 2.0 Flash, which debuted at #3 overall in the Chatbot Arena, outperforming previous models like Flash-002.

- Gemini 2.0 Flash offers enhanced performance in hard prompts and coding tasks, incorporating real-time multimodal interactions.

- Hyperbolic Raises $12M Series A: Hyperbolic successfully raised a $12M Series A, aiming to develop an open AI platform with an open GPU marketplace offering H100 SXM GPUs at $0.99/hr.

- This funding emphasizes transparency and community collaboration in the development of AI infrastructure.

- Stainless Secures $25M Series A Funding: Stainless API announced a $25M Series A funding round led by a16z and Sequoia, aimed at enhancing their AI SDK offerings.

- The investment will support the development of a robust ecosystem for AI developers.

- Nous Simulators Launches: Nous Research launched Nous Simulators to experiment with human-AI interaction in social contexts.

- The platform is designed to provide insights into AI behaviors and interactions.

- Realtime Multimodal API Unveiled: Logan K introduced the new Realtime Multimodal API, powered by Gemini 2.0 Flash, supporting real-time audio, video, and text streaming.

- This API facilitates dynamic tool calls in the background for a seamless interactive experience.

Modular (Mojo 🔥) Discord

- C23 Standardizes Pure Functions: Standardizing pure functions in both C and C++ has been achieved with the inclusion of this feature in C23, referencing n2956 and p0078 for detailed guidelines.

- This update facilitates better optimization by clearly marking pure functions, enhancing code predictability and performance.

- Modular Forum Links Obscured: Users expressed frustration that the Modular website's forum link is difficult to find, being buried under the Company section.

- The team is dedicated to improving the forum and targets a public launch in January to enhance accessibility.

- Mojo's Multi-Paxos Implementation Issues: A Multi-Paxos consensus protocol implemented in C++ failed initial tests by not efficiently handling multiple proposals.

- Essential features such as timeouts, leader switching, and retries are lacking, akin to the necessity of back-propagation in neural networks.

- Debate on Mojo Struct del Design: The community debated whether Mojo structs should have

__del__methods be opt-in or opt-out, weighing consistency against developer ergonomics.- Some members prefer reducing boilerplate code, while others advocate for a uniform approach across traits and methods.

- Performance Boost with Named Results: Named results in Mojo allow direct writes to an address, avoiding costly move operations and providing performance guarantees during function returns.

- While primarily offering guarantees, this feature enhances efficiency in scenarios where move operations are not feasible.

Cohere Discord

- Maya Multimodal Model Launch: Introducing Maya, an open source, multilingual vision-language model supporting 8 languages with a focus on cultural diversity, built on the LLaVA framework.

- Developed under the Apache 2.0 license, Maya's paper highlights its instruction-finetuned capabilities, and the community is eagerly awaiting a blog post and additional resources.

- Plans for Rerank 3.5 English Model: A member inquired about upcoming developments for the rerank 3.5 English model, seeking insights into future enhancements.

- As of now, there have been no responses provided regarding this inquiry, leaving the plans for the rerank 3.5 model unaddressed.

- Aya Expanse Enhances Command Family: Discussions highlighted that Aya Expanse potentially benefits from the command family's performance, suggesting improved instruction following capabilities.

- Members implied that since Aya Expanse may be built upon the command family, it could offer enhanced performance in processing and executing commands.

- Persistent API 403 Errors: Users reported encountering 403 errors when utilizing the API request builder, even after disabling their VPN and using a Trial API key.

- Details shared include the use of an IPv6 address from China and specific curl commands, but the issue remains unresolved within the community.

- Seeking Quality Datasets for Quantification: Members are looking for high-quality datasets suitable for quantification, specifically interested in the 're-annotations' tag from the aya_dataset.

- The community is emphasizing the need for datasets with a significant number of samples to support robust quantification tasks.

LLM Agents (Berkeley MOOC) Discord

- Hackathon Submissions Due by December 17th: Participants must submit their hackathon submissions by December 17th, adhering to the guidelines provided.

- Additionally, the written article assignment is due by December 12th, 11:59 PM PST, separate from the 12 lecture summaries previously required.

- Detailed Guidelines for Written Article Assignment: Students are required to create a post of approximately 500 words on platforms such as Twitter, Threads, or LinkedIn, linking to the MOOC website.

- The article submission is graded on a pass or no pass basis and must be submitted using the same email used for course sign-up to ensure proper credit.

- ToolBench Platform Presented at ICLR'24: The ToolBench project was showcased at ICLR'24 as an open platform for training, serving, and evaluating large language models for tool learning.

- This platform aims to provide AI engineers with enhanced resources and frameworks to advance tool integration within large language models.

- Advancements in Function Calling for AI Models: AI models are increasingly leveraging detailed function descriptions and signatures, setting parameters based on user prompts to improve generalization capabilities.

- This development indicates a trend towards more intricate interactions between AI models and predefined functions, enhancing their operational complexity.

OpenInterpreter Discord

- O1 Pro Enables Advanced Control Features: A member proposed using Open Interpreter in OS mode to control O1 Pro, enabling features like web search, canvas, and file upload.

- Another user considered reverse engineering O1 Pro to control Open Interpreter, commenting that 'the possibilities that opens up... yikes.'

- Open Interpreter App Beta Access Limited to Mac: Members confirmed that the Open Interpreter app is currently in beta, requires an invite, and is limited to Mac users.

- One member expressed frustration over being on the waitlist without access, while another shared contacts to obtain an invite.

- Mixed Feedback on New Website Design: Users provided mixed feedback on the new website design, with one stating it looked 'a lil jarring' initially but has grown on them.

- Others noted the design is a work-in-progress, with ambitions for a cooler overlay effect in future updates.

- $30 Pro Plan Expands App Capabilities: Killianlucas explained that the $30 monthly desktop app plan increases usage limits and provides the app without needing an API key for free users.

- He recommended sticking to the free plan unless users find it overwhelmingly beneficial, as the app is rapidly evolving in beta.

- Actions Beta App Focuses on File Modification: The Actions feature was highlighted as a beta app focusing on file modification, distinct from OS mode which is available only in the terminal.

- Members were encouraged to explore this new feature, though some encountered limitations, with one noting they maxed out their token limit while testing.

Torchtune Discord

- QRWKV6-32B achieves 1000x compute efficiency: The QRWKV6-32B model, built on the Qwen2.5-32B architecture, matches the original 32B performance while offering 1000x compute efficiency in inference.

- Training was completed in 8 hours on 16 AMD MI300X GPUs (192GB VRAM), showcasing a significant reduction in compute costs.

- Finch-MoE-37B-A11B introduces linear attention: The Finch-MoE-37B-A11B model, part of the new RWKV variants, adopts linear attention mechanisms for efficiency in processing long contexts.

- This shift highlights ongoing developments in the RWKV architecture aimed at enhancing computational performance.

- DoraLinear enhances parameter initialization: DoraLinear improves user experience by utilizing the

to_emptymethod for magnitude initialization, ensuring it doesn't disrupt existing functionality.- Implementing

swap_tensorsin theto_emptymethod facilitates proper device handling during initialization, crucial for tensors on different devices.

- Implementing

DSPy Discord

- O1 Series streamlines DSPy workflows: A member inquired about the impact of the O1 series models on DSPy workflows, suggesting that MIPRO’s recommended parameters for optimization might require adjustments.

- They speculated that the new models could lead to fewer optimization cycles or the evaluation of fewer candidate programs.

- Generic optimization errors in DSPy: A user reported encountering a weird generic error during the optimization process and mentioned a related bug posted in a specific channel.

- This issue highlights the ongoing challenges the community faces when optimizing DSPy workflows.

- 'backtrack_to' attribute error in DSPy Settings: A member shared an error where 'backtrack_to' was not an attribute of Settings in DSPy and sought assistance.

- Another user indicated that the issue was resolved earlier, likely due to some async usage.

- Debate on Video and Audio IO focus: A user initiated a discussion on video and audio IO within DSPy, prompting varied opinions among members.

- One member advocated for concentrating on text and image input, citing the effectiveness of existing features.

LAION Discord

- Grassroots Science Ignites Multilingual LLM Development: The Grassroots Science initiative launches in February 2025, aiming to develop multilingual LLMs through crowdsourced data collection with partners like SEACrowd and Masakhane.

- The project focuses on creating a comprehensive multilingual dataset and evaluating human preference data to align models with diverse user needs.

- LLaMA 3.2 Throughput Troubleshooting: A user is optimizing inference throughput for LLaMA 3.2 with a 10,000-token input on an A100 GPU, targeting around 200 tokens per second but experiencing slower performance.

- Discussions suggest techniques like batching, prompt caching, and utilizing quantized models to enhance throughput.

- TGI 3.0 Surpasses vLLM in Token Handling: TGI 3.0 is reported by Hugging Face to process three times more tokens and run 13 times faster than vLLM, improving handling of long prompts.

- With a reduced memory footprint, TGI 3.0 supports up to 30k tokens on LLaMA 3.1-8B, compared to vLLM's limitation of 10k tokens.

- Model Scaling Skepticism Favors Small Models: A member challenges the necessity of scaling models beyond a billion parameters, stating, 'A Billion Parameters Ought To Be Enough For Anyone.', and advocates for hyperefficient small models.

- The discussion critiques the scale-is-all-you-need approach, highlighting benefits in model training efficiency.

- COCONUT Introduces Continuous Reasoning in LLMs: Chain of Continuous Thought (COCONUT) is presented as a new reasoning method for LLMs, detailed in a tweet.

- COCONUT feeds the last hidden state as the input embedding, enabling end-to-end optimization via gradient descent instead of traditional hidden state and token mapping.

Axolotl AI Discord

- Default Setting Suggestion: User suggested that 'default' should be the default.

- ****:

Mozilla AI Discord

- Mozilla AI hires Community Engagement Head: Mozilla AI is recruiting a Head of Community Engagement for a remote position, reporting directly to the CEO.

- This role is responsible for leading and scaling community initiatives across various channels to boost engagement.

- Introducing Lumigator for LLM Selection: Mozilla is developing Lumigator, a tool designed to assist developers in selecting the optimal LLM for their projects.

- This product is part of Mozilla's commitment to providing reliable open-source AI solutions to the developer community.

- Developer Hub Streamlines AI Resources: Mozilla AI is launching a Developer Hub that offers curated resources for building with open-source AI.

- The initiative aims to enhance user agency and transparency in AI development processes.

- Blueprints Open-sources AI Integrations: The Blueprints initiative focuses on open-sourcing AI integrations through starter code repositories to initiate AI projects.

- These resources are designed to help developers quickly implement AI solutions in their applications.

- Inquiries on Community Engagement Role: Interested applicants can pose questions about the Head of Community Engagement position in the dedicated thread.

- This role underscores Mozilla AI's dedication to community-driven initiatives.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!