[AINews] Google AI: Win some (Gemma, 1.5 Pro), Lose some (Image gen)

This is AI News! An MVP of a service that goes through all AI Discords/Twitters/Reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI Discords for 2/20/2024. We checked 20 guilds, 313 channels, and 8555 messages for you. Estimated reading time saved (at 200wpm): 836 minutes.

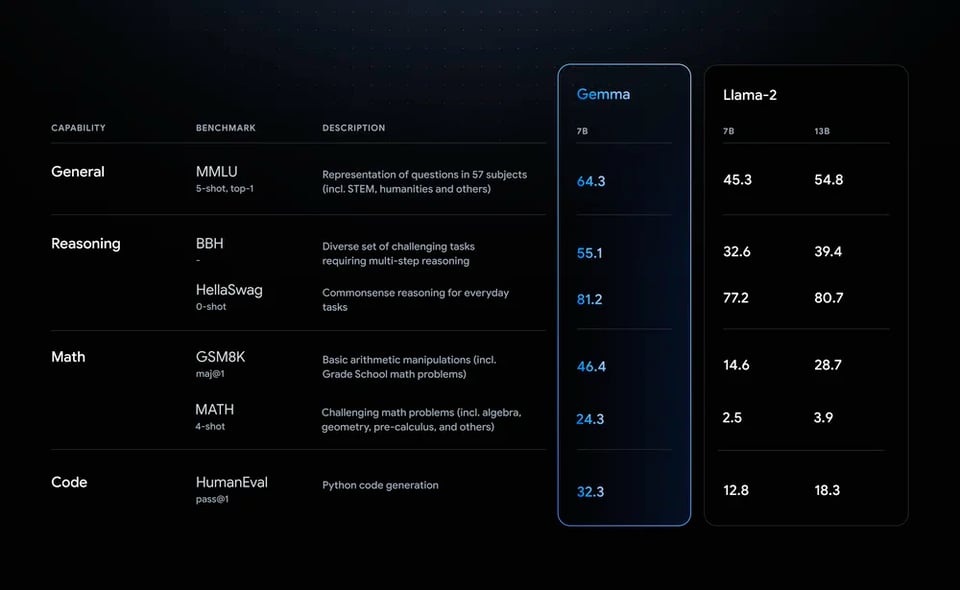

Google is at the top of conversations today for a lot of good and bad reasons. The new Gemma open models (2B and 7B parameters, presumably smaller siblings of the Gemini models) showed better benchmarks than Llama 2 and Mistral, but they come with an unusual license and don't pass the human vibe check.

Meanwhile, literally everybody is dogpiling on Gemini's clumsily diverse image generation, a problem partially acknowledged by Google.

But in what seems like a pure win, the long context of the still-waitlisted Gemini 1.5 Pro (with a 1M-token context window) is impressing people on video understanding and needle-in-a-haystack tests.

Table of Contents

- PART 1: High level Discord summaries

- TheBloke Discord Summary

- LM Studio Discord Summary

- Nous Research AI Discord Summary

- Eleuther Discord Summary

- HuggingFace Discord Summary

- LlamaIndex Discord Summary

- Mistral Discord Summary

- OpenAI Discord Summary

- Latent Space Discord Summary

- OpenAccess AI Collective (axolotl) Discord Summary

- LAION Discord Summary

- Perplexity AI Discord Summary

- CUDA MODE Discord Summary

- LangChain AI Discord Summary

- DiscoResearch Discord Summary

- LLM Perf Enthusiasts AI Discord Summary

- Skunkworks AI Discord Summary

- Alignment Lab AI Discord Summary

- Datasette - LLM (@SimonW) Discord Summary

- AI Engineer Foundation Discord Summary

- PART 2: Detailed by-Channel summaries and links

- TheBloke ▷ #general (1156 messages🔥🔥🔥):

- TheBloke ▷ #characters-roleplay-stories (189 messages🔥🔥):

- TheBloke ▷ #training-and-fine-tuning (6 messages):

- TheBloke ▷ #coding (166 messages🔥🔥):

- LM Studio ▷ #💬-general (375 messages🔥🔥):

- LM Studio ▷ #🤖-models-discussion-chat (65 messages🔥🔥):

- LM Studio ▷ #announcements (3 messages):

- LM Studio ▷ #🧠-feedback (10 messages🔥):

- LM Studio ▷ #🎛-hardware-discussion (96 messages🔥🔥):

- LM Studio ▷ #🧪-beta-releases-chat (301 messages🔥🔥):

- LM Studio ▷ #autogen (1 message):

- LM Studio ▷ #crew-ai (2 messages):

- Nous Research AI ▷ #ctx-length-research (67 messages🔥🔥):

- Nous Research AI ▷ #off-topic (22 messages🔥):

- Nous Research AI ▷ #interesting-links (49 messages🔥):

- Nous Research AI ▷ #announcements (2 messages):

- Nous Research AI ▷ #general (594 messages🔥🔥🔥):

- Nous Research AI ▷ #ask-about-llms (37 messages🔥):

- Nous Research AI ▷ #collective-cognition (3 messages):

- Nous Research AI ▷ #project-obsidian (3 messages):

- Eleuther ▷ #general (146 messages🔥🔥):

- Eleuther ▷ #research (350 messages🔥🔥):

- Eleuther ▷ #interpretability-general (38 messages🔥):

- Eleuther ▷ #lm-thunderdome (76 messages🔥🔥):

- Eleuther ▷ #gpt-neox-dev (5 messages):

- HuggingFace ▷ #announcements (1 message):

- HuggingFace ▷ #general (250 messages🔥🔥):

- HuggingFace ▷ #today-im-learning (6 messages):

- HuggingFace ▷ #cool-finds (8 messages🔥):

- HuggingFace ▷ #i-made-this (33 messages🔥):

- HuggingFace ▷ #reading-group (4 messages):

- HuggingFace ▷ #diffusion-discussions (35 messages🔥):

- HuggingFace ▷ #computer-vision (4 messages):

- HuggingFace ▷ #NLP (33 messages🔥):

- LlamaIndex ▷ #announcements (1 message):

- LlamaIndex ▷ #blog (4 messages):

- LlamaIndex ▷ #general (379 messages🔥🔥):

- LlamaIndex ▷ #ai-discussion (7 messages):

- Mistral ▷ #general (197 messages🔥🔥):

- Mistral ▷ #models (72 messages🔥🔥):

- Mistral ▷ #deployment (38 messages🔥):

- Mistral ▷ #finetuning (29 messages🔥):

- Mistral ▷ #showcase (25 messages🔥):

- Mistral ▷ #random (2 messages):

- Mistral ▷ #la-plateforme (10 messages🔥):

- OpenAI ▷ #ai-discussions (104 messages🔥🔥):

- OpenAI ▷ #gpt-4-discussions (54 messages🔥):

- OpenAI ▷ #prompt-engineering (94 messages🔥🔥):

- OpenAI ▷ #api-discussions (94 messages🔥🔥):

- Latent Space ▷ #ai-general-chat (92 messages🔥🔥):

- Latent Space ▷ #ai-announcements (3 messages):

- Latent Space ▷ #llm-paper-club-west (173 messages🔥🔥):

- OpenAccess AI Collective (axolotl) ▷ #general (165 messages🔥🔥):

- OpenAccess AI Collective (axolotl) ▷ #axolotl-dev (23 messages🔥):

- OpenAccess AI Collective (axolotl) ▷ #general-help (27 messages🔥):

- OpenAccess AI Collective (axolotl) ▷ #rlhf (1 message):

- OpenAccess AI Collective (axolotl) ▷ #runpod-help (5 messages):

- LAION ▷ #general (185 messages🔥🔥):

- LAION ▷ #research (30 messages🔥):

- Perplexity AI ▷ #general (111 messages🔥🔥):

- Perplexity AI ▷ #sharing (7 messages):

- Perplexity AI ▷ #pplx-api (18 messages🔥):

- CUDA MODE ▷ #general (3 messages):

- CUDA MODE ▷ #triton (8 messages🔥):

- CUDA MODE ▷ #cuda (5 messages):

- CUDA MODE ▷ #torch (15 messages🔥):

- CUDA MODE ▷ #beginner (14 messages🔥):

- CUDA MODE ▷ #youtube-recordings (1 message):

- CUDA MODE ▷ #jax (3 messages):

- CUDA MODE ▷ #ring-attention (52 messages🔥):

- LangChain AI ▷ #general (46 messages🔥):

- LangChain AI ▷ #share-your-work (3 messages):

- LangChain AI ▷ #tutorials (4 messages):

- DiscoResearch ▷ #general (18 messages🔥):

- DiscoResearch ▷ #benchmark_dev (9 messages🔥):

- LLM Perf Enthusiasts AI ▷ #general (7 messages):

- LLM Perf Enthusiasts AI ▷ #opensource (1 message):

- LLM Perf Enthusiasts AI ▷ #embeddings (1 message):

- Skunkworks AI ▷ #general (1 message):

- Skunkworks AI ▷ #off-topic (4 messages):

- Alignment Lab AI ▷ #general-chat (4 messages):

- Datasette - LLM (@SimonW) ▷ #ai (2 messages):

- Datasette - LLM (@SimonW) ▷ #llm (2 messages):

- AI Engineer Foundation ▷ #general (3 messages):

- AI Engineer Foundation ▷ #events (1 message):

PART 1: High level Discord summaries

TheBloke Discord Summary

- Mixed Reception for Gemma Models: Some users, such as @itsme9316, find that Gemma handles single prompts adequately but struggles with multi-turn responses. Meanwhile, @dirtytigerx points out issues with the over-aligned instruct model and unexpectedly high VRAM usage, without giving exact numbers.

- Anticipation for AI Model Releases and Updates: Users are contemplating the potential release of "Llama 3" in March and discussing Google's AI development choices, highlighting concerns over aspects such as contaminated models. Additionally, the new "retrieval_count" feature in PolyMind is well-received for its utility in tasks like GMing, offering multiple retrieval results for a broader scope of information.

- Roleplay and Character Complexity in Chatbots: Users are working on generating DPO data to improve roleplay for characters who keep secrets or lie. There is also discussion of the challenges of keeping characters consistent and of the VRAM requirements for Miqu-70b models, with 32 GB mentioned for Q2 and 48 GB for Q5.

- Dataset Editing and Model Training Woes: User @pncdd is experiencing difficulty editing a complex synthetic dataset, while @3dhelios is exploring the inclusion of negative examples in training. Gradio was suggested as a potential solution for creating dataset editing tools, and the search for an effective classifier for a relevance filtering task was highlighted, with the prospect of using deepseek-coder-6.7B-instruct.

- Technical Conversations on Coding and Model Optimization: @pilotgfx is developing a local script for a chatbot featuring multiple coding assistants, where conversation history management is key. Discussions also covered RAG techniques, Mistral finetuning, editor preferences (dissatisfaction with VSCode led some to prefer Zed), and backend infrastructure optimization for models, where starting with rented GeForce RTX 4090 GPUs was suggested.
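As a rough check on the Miqu-70b VRAM figures above, the memory needed for the weights alone is parameter count times bits per weight. The sketch below assumes approximate bits-per-weight values for a few llama.cpp quant types (the QUANT_BPW table is an illustrative assumption, not an official spec) and adds a flat, hypothetical allowance for KV cache and runtime buffers, so it should land in the same ballpark as the reported numbers rather than reproduce them.

```python
# Approximate bits per weight for some llama.cpp GGUF quant types.
# These are rough, assumed values for illustration, not official figures.
QUANT_BPW = {
    "Q2_K": 2.6,
    "Q4_K_M": 4.8,
    "Q5_K_M": 5.7,
    "Q8_0": 8.5,
    "F16": 16.0,
}


def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def estimate_vram_gb(n_params: float, quant: str, overhead_gb: float = 2.0) -> float:
    """Weights plus a flat, assumed allowance for KV cache and runtime buffers."""
    return weight_memory_gb(n_params, QUANT_BPW[quant]) + overhead_gb


if __name__ == "__main__":
    for quant in ("Q2_K", "Q5_K_M"):
        print(f"70B @ {quant}: ~{estimate_vram_gb(70e9, quant):.1f} GB")
```

Context length, batch size, and the inference backend all change the overhead term, which is one reason VRAM figures reported by different users rarely agree exactly.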

LM Studio Discord Summary

- Dual GPUs Need Ample Juice: Users in the hardware discussion channel cautioned about PSU requirements for dual GPU setups and noted that in multi-GPU configurations the overall speed matches that of the slowest card. High-end GPUs like the NVIDIA GeForce RTX 3090 are favored for AI tasks due to their significant VRAM.

- Troubles and Fixes in LM Studio's Latest Beta: Users reported various issues with the LM Studio 0.2.15 Beta, including problems with the n_gpu_layers feature and Gemma 7B models outputting gibberish. Version 0.2.15 has been re-released with bug fixes targeting these and other issues, and users are advised to redownload it from LM Studio's website.

- Gemma Model Integration Efforts Continue: Google's Gemma model support has been added to LM Studio, and users are directed to manually download Gemma models (2B and 7B versions), with a link to the 2B variant available at Hugging Face. A recommended Gemma quant was also shared for easier integration.

- Query and Request in AI Assistant Sphere: On the topic of AI assistants, @urchig expressed interest in an assistant-creation feature for LM Studio, pointing to the existing feature on Hugging Face. Additionally, some users encountered RAM display issues and troubles with Visual Studio Code, potentially related to venv.

- Microsoft's Unknown Flying Object: A user shared a link to Microsoft's UFO repository on GitHub: UFO on GitHub. The context and relevance to the discussion were not provided.

Nous Research AI Discord Summary

- ChatGPT's Context Conundrum: After experimenting with the Big Lebowski script, @elder_plinius suggested that ChatGPT may limit context by characters rather than tokens. This sparked discussion, with @gabriel_syme sharing a GitHub repository for scaling language models to 128K context, and with varying claims about the VRAM requirements of large-context models.

- AI-Driven Simulators and Self-Reflection: Discussion covered AI-driven game simulations, with @nonameusr amazed by OpenAI's Sora simulating Minecraft, and @pradeep1148 linking a video about self-improving retrieval-augmented generation (Self RAG). Additionally, inquiries surfaced about training non-expert models on microscopic images for artistic purposes.

- Library Releases and AI Model Evaluations: .beowulfbr presented mlx-graphs, a GNN library optimized for Apple Silicon, and burnytech showcased Gemini 1.5 Pro's ability to learn to self-implement. Also discussed were a no-affiliation clarification regarding the A-JEPA AI model and an arXiv paper on eliciting chain-of-thought reasoning from pre-trained LLMs without explicit prompting.

- Hermes 2 Ascends: Nous Hermes 2 release was announced, displaying enhanced performance across various benchmarks. Pre-made GGUFs for Nous Hermes 2 were also made available on HuggingFace, with FluidStack receiving thanks for supporting computation needs.

- LLM Strategies and Pitfalls: Discussion explored the Gemma model's benchmarks, the nuances of fine-tuning, LoRA usage, and hosting large models on custom infrastructure. Dataset debates centered on the challenges of editing a synthetic dataset and on combining DPO with SFT in Nous-Hermes.

- Discontent with Hosting Services: Criticism arose against Heroku, with members like @bfpill voicing frustration without expanding on specific grievances.

- Project Slowed by a Pet's Illness: @qnguyen3 expressed apologies for delays in Project Obsidian due to their cat's health issues and invited direct communication for project discussions.
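Much of the disagreement over VRAM for long-context models comes down to the KV cache, which grows linearly with context length. The sketch below assumes standard attention with an unquantized fp16/bf16 cache; the model shapes (32 layers, head_dim 128, with either 32 KV heads as in a Llama-2-7B-like model or 8 KV heads as in a Mistral-7B-like model using grouped-query attention) are stated assumptions for illustration.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int, seq_len: int,
                   batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Size of the key/value cache in bytes.

    Each layer stores one K and one V tensor of shape
    [batch_size, n_kv_heads, seq_len, head_dim]; bytes_per_elem=2 assumes fp16/bf16.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


GIB = 2**30

# Assumed shapes: 32 layers and head_dim 128, with full multi-head attention
# (32 KV heads) versus grouped-query attention (8 KV heads).
for name, kv_heads in (("32 KV heads (MHA)", 32), ("8 KV heads (GQA)", 8)):
    for ctx in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(32, kv_heads, 128, ctx) / GIB
        print(f"{name}, {ctx:>7} tokens: {gib:6.1f} GiB of KV cache")
```

Under these assumptions a 128K-token context needs 64 GiB of KV cache with 32 KV heads but 16 GiB with 8, on top of the weights, which helps explain why VRAM claims for long context vary so widely between architectures.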

Eleuther Discord Summary

- Model Dual Capabilities Spark Interest: Users are intrigued by diffusion models that can handle both prompt-guided image-to-image and text-to-image tasks, like Stable Diffusion. Google's release of Gemma, a family of open models, has also piqued interest, with Nvidia collaborating to optimize it for GPU usage.

- License Wrestles and Governance Questions: Ongoing discussion covers the licensing of models such as Google's Gemma, whether models can be copyrighted, and what that means for commercial use. Meanwhile, the governance of foundation models, and of AGI, is drawing attention for policy development, such as mandated risk disclosure standards.

- Intelligence Benchmarks and Transformer Efficiency: Debates heat up over the validity of current benchmarks, like MMLU and HellaSwag, for evaluating model intelligence. Users are also interested in finding the most information-efficient transformer models and comparing performance against MLPs and CNNs at various scales.

- Uncovering Multilingual Model Mysteries: There's curiosity over whether multilingual models like Llama-2 rely internally on English for tasks, with research approaches such as the logit lens being shared. Questions were also raised about training language-specific lenses.

- Tweaking Code and Handling OOM: Practitioners are tackling practical issues such as Out of Memory (OOM) errors during model evaluation and code tweaks for better model performance. Specific points of discussion include problems running Gemma 7b in the evaluation harness and confusion about the dropout implementation in ParallelMLP.
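The logit lens mentioned above decodes intermediate hidden states with the model's final normalization and unembedding, showing which tokens each layer "leans toward". The toy sketch below uses hand-picked vectors and a parameter-free LayerNorm as a stand-in for the model's real final norm (an assumption; Llama-2 uses RMSNorm with learned weights). In practice the hidden states would come from a real model, for example via output_hidden_states=True in Hugging Face transformers.

```python
import math


def layer_norm(x):
    """Parameter-free LayerNorm, standing in for the model's final norm (assumption)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + 1e-5) for v in x]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logit_lens(hidden_states, unembed):
    """Decode every layer's hidden state with the final norm + unembedding.

    hidden_states: one d_model-length vector per layer (for a single position)
    unembed: one d_model-length row per vocabulary token
    Returns one probability distribution over the vocabulary per layer.
    """
    dists = []
    for h in hidden_states:
        h = layer_norm(h)
        logits = [sum(w * x for w, x in zip(row, h)) for row in unembed]
        dists.append(softmax(logits))
    return dists


if __name__ == "__main__":
    vocab = ["house", "Haus", "maison"]  # toy vocabulary
    unembed = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
    # Toy vectors, not real activations: one per "layer".
    layers = [[3.0, 0.5, 0.2, 0.0], [1.0, 2.5, 0.2, 0.0]]
    for i, dist in enumerate(logit_lens(layers, unembed)):
        top = max(range(len(vocab)), key=dist.__getitem__)
        print(f"layer {i}: top token {vocab[top]!r} (p={dist[top]:.2f})")
```

Applied to a real model, tracking the top token per layer for non-English prompts is the kind of measurement behind the "does it think in English?" question.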

HuggingFace Discord Summary

- Community Engagement on the Upswing: The HuggingFace Discord community has showcased active participation in prompt ranking with over 200 contributors and 3500+ rankings, helping to construct a communal dataset. A leaderboard feature adds a gaming dimension, fostering further interaction and contribution.

- Technical Troubleshooting and Library Updates Gain Attention: Amid several technical inquiries, including issues with huggingface-vscode on NixOS and challenges fine-tuning on Nvidia A100 GPUs, an intriguing tease was dropped about an upcoming release of the transformers library, suggesting new models and improved custom architecture support.

- AI-Generated Art and Sign Language Translation Models Discussed: Discussion in the computer-vision channel highlighted image captioning resources using BLIP and models for sign language translation—crucial tools for expanding AI's accessibility in communication and content generation.

- AI Startup Showcases, AI's Use in Cybersecurity, and Financial Apps Designed: Entrepreneurs and developers are creating value across various fields: an AI startup showcase event at Data Council, a model named WhiteRabbitNeo-13B-v1 aimed at cybersecurity, and an investment portfolio management app designed to assist in financial decisions.

- Community Contributions to NLP and Diffusion Modeling Showcased: Users are contributing innovative solutions like an Android app for monocular depth estimation and discussing advancements in damage detection using stable diffusion models—indicative of the robust collaborative environment within HuggingFace.

- Lively Discussion on Enhanced Multimodal Models and Diffusion Techniques: Participants within the reading-group and diffusion-discussions advocated for better multimodal models and shared insights on the intricacies of advanced diffusion techniques, including Fourier transforms for timestep embeddings.
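The Fourier-style timestep embeddings mentioned in the diffusion discussion are commonly built from sinusoids at geometrically spaced frequencies, the same idea as Transformer positional encodings. The sketch below is one common formulation (sines first, then cosines, max_period of 10000); real implementations differ in ordering and frequency offsets, so treat it as illustrative rather than any particular library's code.

```python
import math


def timestep_embedding(t: float, dim: int, max_period: float = 10000.0) -> list:
    """Sinusoidal (Fourier-feature) embedding of a diffusion timestep `t`.

    Frequencies are spaced geometrically from 1 down toward 1/max_period;
    the first half of the vector holds sines, the second half cosines.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    angles = [t * f for f in freqs]
    return [math.sin(a) for a in angles] + [math.cos(a) for a in angles]


if __name__ == "__main__":
    for t in (0, 10, 500):
        emb = timestep_embedding(t, 8)
        print(t, [round(x, 3) for x in emb])
```

The low-frequency components change slowly across timesteps while the high-frequency ones change quickly, giving the network a smooth yet distinguishable code for every noise level; the embedding is then typically passed through a small MLP before conditioning the UNet.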

LlamaIndex Discord Summary

- Introducing LlamaCloud and Its Components: LlamaIndex announced LlamaCloud, a new cloud service designed to enhance LLM and RAG applications by offering LlamaParse for handling complex documents, and has opened a managed API for private beta testing. Collaborators mentioned include Mendable AI, DataStax, MongoDB, Qdrant, NVIDIA, and contributors from the LlamaIndex Hackathon. Early access and resources can be found in the official announcement and via the tweet.

- Content Creation Tips for RAG Development: A set of advanced cookbooks for setting up RAG with LlamaParse and AstraDB was mentioned, accessible through the provided cookbook guide. A new, comprehensive approach to simplifying RAG development pipelines was discussed, with slides shared in an announcement tweet, and a frontend tutorial for experts, fully supported by LlamaIndex, was linked.

- Navigating LlamaIndex and GitHub Quandaries: Users discussed topics ranging from import path issues after the LlamaIndex v0.10.x update, through optimizing and finetuning LLMs within LlamaIndex, to potential fixes for high CPU usage and response latency when streaming agent responses via response_gen. For LLM finetuning queries, users were directed to documentation and repository examples such as llm_generators.py.

- Technical Deep Dives and AI Insights: Members of the guild explored and shared insights into GPT-4's arithmetic and symbolic reasoning capabilities in a blog post and engaged in a technical discussion on Gemini 1.5's potential to assist with language translation, particularly for translating French into Camfranglais. Additionally, questions were raised about summarization metrics for evaluating LlamaIndex performance.

- Productivity and Language Processing Enhancements: A blog post shared by @andysingal highlighted the integration of LlamaIndex, a ReAct agent, and llama.cpp for streamlined document management, readable here. This reflects ongoing community discussion of how best to combine these technologies to improve document processing.
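On the question of summarization metrics, ROUGE is a common lexical baseline. The sketch below computes ROUGE-1 F1 (clipped unigram overlap) with simple lowercase whitespace tokenization; it is a minimal illustration, not a LlamaIndex evaluator, and established packages such as Google's rouge-score add stemming and better tokenization.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """ROUGE-1 F1 using lowercase whitespace tokenization (no stemming)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # unigram matches, clipped by counts
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    for summary in ("the cat sat on the mat", "the cat", "a dog barked"):
        print(f"{summary!r}: {rouge1_f1(summary, reference):.3f}")
```

Lexical overlap rewards copying the reference's wording, so it is usually paired with LLM-judged or human checks of faithfulness when evaluating a RAG pipeline's summaries.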

Mistral Discord Summary

- Groq It Like It's Hot: Members highlighted the Groq chip's speed, which leverages its sequential nature to potentially reach thousands of tokens per second, while noting challenges in scaling to larger models. Meanwhile, quantization was a hot topic, with suggestions that a quantized version of a model could outpace its fp16 version on certain accelerators.

- Mistral-Next Sparks Interest and Concerns: Community interactions revealed a mix of excitement and concern over Mistral-Next, including its preference for brevity and broader worries about censorship in newer language models. Mistral-Next can currently be tested only via LMSYS Chat; the model is not yet available via API.

- Open Weights but Not Open Source: Mistral models are considered open-weights rather than open source, with announcements about Mistral-Next expected. Discussions also touched on the intricacies of function calling in LLMs and the challenges of adding new languages such as Hebrew, given the limited pretraining on those tokens.

- The Practicalities of AI: Users shared their experiences and questions about deploying Mistral on platforms like AWS, weighing costs and hardware requirements, such as needing 86GB of VRAM to merge an 8x7b model. Members also exchanged finetuning challenges and approaches, recommending an iterative cycle and attention to the parameters that affect accuracy.

- Sharing AI Experiences and Resources: FUSIONL AI, an educational AI startup, was introduced, and a new library for integrating Mistral AI into Flutter apps was announced, indicating growth in tools for developers. Further, the importance of crafting effective prompts was underscored by sharing a guide on prompting capabilities. Concerns about self-promotion versus genuine project showcasing on community channels were also voiced.

Relevant Links:

- vLLM | Mistral AI Large Language Models
- Prompting Capabilities | Mistral AI Large Language Models
- GitHub - nomtek/mistralai_client_dart
- FUSIONL AI Website
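The claim that a quantized model can outpace its fp16 version follows from single-stream decoding usually being memory-bandwidth-bound: each new token requires reading every weight once. The sketch below computes that ceiling; the 7B model size and 1,000 GB/s bandwidth are illustrative assumptions, and it does not model any specific chip, Groq's included.

```python
def max_decode_tokens_per_s(n_params: float, bytes_per_param: float,
                            bandwidth_gb_s: float) -> float:
    """Rough upper bound on batch-1 decode speed when generation is memory-bound.

    Assumes each new token requires reading every weight from memory once and
    ignores KV-cache reads, compute time, and kernel overheads.
    """
    model_bytes = n_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes


# Illustrative numbers only: a 7B-parameter model on a hypothetical
# accelerator with 1,000 GB/s of memory bandwidth.
for label, bytes_per_param in (("fp16", 2.0), ("int8", 1.0), ("4-bit", 0.5)):
    tps = max_decode_tokens_per_s(7e9, bytes_per_param, 1000)
    print(f"{label:>5}: <= {tps:5.0f} tokens/s")
```

Halving the bytes per weight doubles this ceiling, but dequantization work can eat into the gain on hardware without fast low-precision kernels, which is why the speedup only shows up on certain accelerators.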

OpenAI Discord Summary

- GPT-4 Variants Stir Curiosity: A user identified an issue with GPT-4-1106 handling the £ symbol in JSON output, with a bug causing truncated outputs or changed character encodings. Meanwhile, @fightray asked about the update from GPT-3.5 Turbo to GPT-3.5 Turbo 0125, which @solbus confirmed, pointing to the official documentation for more details.

- AI, Please Keep to the Script!: Advising on crafting AI responses for specific use cases, such as customer service prompts or RPG scenarios, @darthgustav. stressed the use of positive instructions and embedded template variables. Feedback suggested that GPT-4-0613 outperformed 0125 turbo for roleplay, and @bambooshoots helped @razorbackx9x with a prompt designed for programming assistance with Apple's Shortcuts app.

- Policy Awareness in AI Draws Debate: Users criticized the AI for refusing tasks it deemed against policy even when those tasks were reasonable and appropriate. @darthgustav. highlighted the importance of clear, positive task framing to manage potential misuse issues such as plagiarism.

- Anticipation for Sora AI Brews Impatience: Users discussed the availability of Sora AI, noting that no public release or API access date has been confirmed yet. Users also mentioned GPT-4's limit of 40 messages per 3 hours, and discussion turned to explaining subscription plans and usage caps.

- AI User Experience Expectations vs. Reality: A user expressed discontent with the lack of real-time sharing in the ChatGPT Teams Plan, reflecting a disparity between advertised and actual features. Additionally, mixed feelings about various AI tools were shared, with GPT-4 being praised for math problem-solving, Groq being successful for generating Edwardian literature content, and Gemini criticized for unintentionally Shakespearean output.
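The £ issue reported above concerns GPT-4-1106's own output, which this cannot reproduce. One related point is still useful: "£" has two valid JSON spellings, the literal character and the escape \u00a3. Parsing the model's reply with a real JSON parser, rather than matching raw strings, makes downstream code indifferent to which spelling appears. A minimal sketch using Python's standard json module (this addresses the encoding half of the report, not truncation):

```python
import json

record = {"price": "£25"}

# By default json.dumps escapes non-ASCII characters as \uXXXX sequences.
escaped = json.dumps(record)
# ensure_ascii=False emits the literal character instead; write it out as UTF-8.
literal = json.dumps(record, ensure_ascii=False)

print(escaped)  # {"price": "\u00a325"}

# Both spellings are valid JSON and decode to the same Python object, so
# parsing the model's output (instead of matching raw strings) hides the difference.
assert json.loads(escaped) == json.loads(literal) == record
```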

Latent Space Discord Summary

- Karpathy's New Tokenizer Tutorials Engage Engineers: The AI community is keen on Andrej Karpathy's new lectures on constructing a GPT tokenizer as well as a detailed examination of the Gemma tokenizer. Enthusiasm is high, suggesting a trend toward deepened understanding of language model inner workings among technical audiences.

- Google Paginates New Chapter with "Gemma": Google's release of Gemma on Hugging Face and the ensuing discussion of its terms of service show strong interest in the operational specifics and ethical use of these new large language models (LLMs), key concerns for engineers evaluating new AI tools.

- Magic's Mystery Surrounds AI Coding Capabilities: A revealing article about Magic, an AI coding assistant reportedly surpassing the likes of Gemini and GPT-4, has the community speculating about its underlying mechanisms and the implications for the future of AI in software development.

- Paper Club Spotlights Engineer-AI Collaboration: A recent paper scrutinizing the partnership between AI and software engineers in creating product copilots spurred discussion, reflecting the engineering community's interest in AI's evolving role in product development and the challenges it presents.

- Integration and Evaluation of AI Become Focal Points: Conversations reveal a growing interest in the integration of AI tools like Gemini across Google Workspace, highlighting the importance of robust evaluation methods, tools, and models. This signifies a clear trend: as engineers are called to work with LLMs like Mistral, skills in evaluation and prompt programming are becoming essential in the industry.
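Karpathy's tokenizer lecture centers on byte-level byte-pair encoding (BPE): repeatedly merge the most frequent adjacent pair of token ids into a new id. The sketch below is a minimal illustration in that spirit, not code from the lecture; production tokenizers, Gemma's included, add pre-tokenization rules, special tokens, and other details omitted here.

```python
from collections import Counter


def pair_counts(ids):
    """Count adjacent token-id pairs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace non-overlapping occurrences of `pair` (left to right) with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn byte-level BPE merges; new ids start at 256, after the raw bytes."""
    ids = list(text.encode("utf-8"))
    merges = {}
    for step in range(num_merges):
        counts = pair_counts(ids)
        if not counts:
            break
        best = max(counts, key=counts.get)  # most frequent pair (ties: first seen)
        new_id = 256 + step
        ids = merge(ids, best, new_id)
        merges[best] = new_id
    return merges


def encode(text, merges):
    """Apply learned merges, always choosing the earliest-learned applicable one."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        counts = pair_counts(ids)
        pair = min(counts, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break
        ids = merge(ids, pair, merges[pair])
    return ids


def decode(ids, merges):
    """Map ids back to bytes, building merged tokens in the order they were learned."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in sorted(merges.items(), key=lambda kv: kv[1]):
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")


if __name__ == "__main__":
    text = "aaabdaaabac"
    merges = train_bpe(text, 3)
    ids = encode(text, merges)
    print(merges, ids, decode(ids, merges))
```

Encoding applies merges in the order they were learned, which is what keeps tokenization deterministic and consistent with training.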

OpenAccess AI Collective (axolotl) Discord Summary

- GPU Great Debate for Hospital Data Handling: Yamashi leans towards purchasing 8x MI300X GPUs for their higher VRAM, considering them for managing 15,000 VMs related to massive hospital data processes, while assessing compatibility with ROCm software.

- Gemma Models Stir Interest and Skepticism: The AI Collective discusses Google's Gemma models, highlighting the new Flash Attention v2.5.5 for fine-tuning on consumer GPUs like the RTX 4090. Concerns were raised about the models' licensing, output restrictions, and the compatibility of custom chat formats with existing tools.

- LoRA+ Integration Buzz in Axolotl: A recent paper on LoRA+ is suggested for integration into Axolotl, with its promise of optimized finetuning for models with large widths. Members emphasized that Gemma training requires the latest Transformers library version and noted that Google's documentation and Hugging Face's blog give different learning rate and weight decay values for Gemma.

- Collaborative Templates and Trouble in General Help: Yamashi shares a link to a tokenizer_config.json that includes a chat template, and after a collaborative effort, shares an Alpaca chat template suitable for Axolotl. DeepSpeed step count confusion and formatting for finetuned model inference were also discussed, highlighting the need for USER and ASSISTANT formatting.

- Loss Puzzles in DPO Training: noobmaster29 raised concerns about DPO training logs showing low loss before one epoch was complete, raising the question of overfitting in the absence of evaluation data.

- RunPod's Fetch Fiasco and Infinite Retries: Casper_ai reports persistent image fetch errors with RunPod, leading to infinite retries, while c.gato suggests a lack of disk space might be the underlying cause for the image download failures.
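For context on the DPO loss puzzle above: when the policy starts as a copy of the reference model, every preference pair begins at loss ln 2 ≈ 0.693, and the loss falls only as the policy widens the gap between chosen and rejected responses relative to the reference. A loss near zero early in training therefore means the policy already separates the training pairs strongly, which without held-out data cannot be told apart from overfitting. A minimal per-pair sketch; beta=0.1 is an illustrative value.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for a single preference pair.

    Inputs are summed log-probabilities of the chosen and rejected responses
    under the policy being trained and under the frozen reference model.
    loss = -log(sigmoid(beta * (chosen_log_ratio - rejected_log_ratio)))
    """
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    return softplus(-margin)  # -log(sigmoid(m)) == log(1 + exp(-m))


if __name__ == "__main__":
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))  # policy == reference
    print(dpo_loss(-5.0, -20.0, -10.0, -12.0))   # policy prefers chosen more
```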

LAION Discord Summary

- Quest for All-Inclusive Git Data Stalls: @swaystar123 asked for a dataset containing all public git repositories with their associated issues, comments, pull requests, and commits, but alas, the digital trail went cold with no answers provided.

- Whereabouts of LAION 5B: Curiosity was piqued about the LAION 5B dataset, with queries flying in about its availability, but a definitive update remained elusive in the galaxy of the guild. Meanwhile, DFN 2B was suggested as a stand-in, albeit with its own access quirks.

- The Sora Saga: Real or Synthetic: A debate sprang up over Sora's origins, namely whether it was trained on real or synthetic data. Clues like 'floaters' and static backgrounds fuel speculation about synthetic elements in its outputs.

- Synthetic Data: The Unsung Hero or Hidden Villain?: In a twist, OpenAI's reference to using synthetic data contradicted one guild member's beliefs, while others continued to weigh the pros and cons of synthetic data's role in AI model development.

- Pushing the Envelope with AnyGPT: Engineers exchanged insights into the AnyGPT project, which aims to process different modalities through a language model using discrete token representations; a YouTube demo rounded out the discussion.

Perplexity AI Discord Summary

- Perplexity Pro Perks Peek: Users like @norgesvenn vouched for Perplexity Pro's speed and accuracy. Guidance was offered for new Pro users to access exclusive Discord channels via a link provided in user settings, though some links shared were incomplete.

- Balancing Act in AI Invocation: Debates arose about how to instruct Perplexity AI to harmonize search-result reliance with inherent AI creativity. A hybrid approach of utilizing specific instructions was suggested to encourage the AI to tap into its own repository of information.

- Gemini Touted Over GPT-4: Discussions highlighting the advantages of Gemini Advanced models over GPT-4 surfaced, with users praising updated models and their output styles. The discourse reflects an inclination towards Gemini's capabilities and growing preference within the community.

- Image Generation Enigma: Queries on how to generate images with Perplexity AI were directed to the "Generate Image" button, underscoring the AI's multimedia capabilities. However, the detailed procedures or examples were not fully clarified due to incomplete links.

- API Quandaries and Quirks: Technical discussions in the pplx-api channel uncovered a variety of user issues such as seeking increased API request limits, discrepancies between API and outdated webapp results, unaddressed requests for stream: true examples, and erratic gibberish responses from pplx-70b-online. Solutions or responses to these concerns were notably absent.
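No stream: true example was provided in the channel. As a hedged sketch, assuming the API streams OpenAI-style server-sent events (each event a "data: {json}" line with the text in choices[0].delta.content, ending with "data: [DONE]"; check Perplexity's API reference before relying on this), the client side reduces to parsing those lines. Fetching the lines over HTTP is left out, and the sample events are made up.

```python
import json


def iter_stream_text(lines):
    """Yield text deltas from OpenAI-style chat-completion SSE lines (assumed format)."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        delta = chunk["choices"][0].get("delta", {})
        if delta.get("content"):
            yield delta["content"]


if __name__ == "__main__":
    sample = [
        'data: {"choices": [{"delta": {"content": "Hel"}}]}',
        "",
        'data: {"choices": [{"delta": {"content": "lo"}}]}',
        "data: [DONE]",
    ]
    print("".join(iter_stream_text(sample)))
```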

CUDA MODE Discord Summary

- CUDA Mode Embraces NVIDIA's Grace Hopper: The CUDA MODE community, as highlighted by @andreaskoepf, is open to all GPU enthusiasts, and NVIDIA's outreach to @__tinygrad__ with Grace Hopper chips stirred interest and discussion. Related Tweet: tinygrad's tweet.

- Groq's LPU Sets New AI Performance Bar: @srns27 drew attention to the Groq LPU's groundbreaking performance on large language models. To help explain it, @dpearson shared a YouTube video of a lecture by Groq's Compiler Tech Lead, Andrew Bitar.

- Seeking Collaborators for Triton/Mamba Development: @srush1301 is looking for collaborators to enhance the Triton/Mamba project, offering coauthorship and discussing goals such as adding a reverse scan option in Triton. The project's current status and tasks are outlined in Annotated Mamba.

- Optimizing PyTorch with Custom Kernels: Discussion in the torch channel covered various tactics to accelerate PyTorch, including custom kernels and Triton kernels. @gogators. and @hdcharles_74684 shared insights and linked to a series of optimization-focused blog posts and related source code, such as quantization details.

- A New Frontier in Audio Semantic Analysis: In the youtube-recordings channel, @shashank.f1 shared a YouTube discussion of the A-JEPA AI model, a new approach to deriving semantic knowledge from audio files. The video can be found here: "A-JEPA AI model: Unlock semantic knowledge from .wav / .mp3 file or audio spectrograms".

- JAX Pallas Flash Attention Code Evaluation: The jax channel was buzzing with questions about the flash_attention.py file in the JAX GitHub repository, including its functionality and GPU compatibility, but users such as @iron_bound have run into problems, including crashes caused by a shape-dimension error. The file in question is available here: jax flash_attention.py.

- Ring Attention and Flash Attention Gather Engagement: In the ring-attention channel, @ericuald shared a Colab implementing ring attention, while @iron_bound, @lancerts, and others worked to deepen their understanding and troubleshoot potential algorithm issues in an active, cooperative effort to push the project forward. A dummy version of ring attention can be found at this Google Colaboratory, and the forward pass implementation by @lancerts in this naive Python notebook.
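Ring attention keeps each device's query block in place and rotates the key/value blocks around a ring, combining partial results with the same online-softmax rescaling that flash attention uses, so the final output equals ordinary attention over the full sequence. The pure-Python simulation below is our own illustration, not the Colab's code: "devices" are list entries, attention is non-causal, and communication overlap is ignored.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def full_attention(q, keys, values):
    """Reference softmax attention for a single query vector."""
    scores = [dot(q, k) / math.sqrt(len(q)) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi * v[j] for wi, v in zip(w, values)) / z for j in range(len(values[0]))]


class OnlineSoftmaxState:
    """Running max, normalizer, and weighted value sum for one query."""

    def __init__(self, d_v):
        self.m = -math.inf
        self.l = 0.0
        self.acc = [0.0] * d_v

    def update(self, q, keys, values):
        scores = [dot(q, k) / math.sqrt(len(q)) for k in keys]
        m_new = max(self.m, max(scores))
        scale = math.exp(self.m - m_new)  # rescale old stats to the new max
        self.l *= scale
        self.acc = [a * scale for a in self.acc]
        for s, v in zip(scores, values):
            w = math.exp(s - m_new)
            self.l += w
            self.acc = [a + w * vj for a, vj in zip(self.acc, v)]
        self.m = m_new

    def result(self):
        return [a / self.l for a in self.acc]


def ring_attention(q_blocks, kv_blocks):
    """Simulate non-causal ring attention over len(q_blocks) 'devices'.

    Device i keeps q_blocks[i]; each step it attends to the KV block it holds,
    then passes that block to the next device. After n steps every device has
    seen every KV block exactly once.
    """
    n = len(q_blocks)
    d_v = len(kv_blocks[0][1][0])
    states = [[OnlineSoftmaxState(d_v) for _ in qb] for qb in q_blocks]
    held = list(kv_blocks)  # held[i] = (keys, values) currently on device i
    for _ in range(n):
        for i in range(n):
            keys, values = held[i]
            for q, st in zip(q_blocks[i], states[i]):
                st.update(q, keys, values)
        held = [held[(i - 1) % n] for i in range(n)]  # rotate one hop
    return [[st.result() for st in dev] for dev in states]
```

Because each partial result is rescaled to a shared running maximum, the order in which blocks arrive does not matter, which is what lets communication of the next block overlap with computation on the current one in real implementations.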

LangChain AI Discord Summary

- TypeScript and LangChain: A Hidden Miss?: @amur0501 questioned the efficacy of using LangChain with TypeScript, wary of missing out on Python-specific features. The community did not reach a consensus on whether langchain.js holds up to its Python counterpart.

- Function Crafting with Mistral: @kipkoech7 shared practical demonstrations of function calling with open-source LLMs (Mistral), including examples for local use and with Mistral's API. Refer to the GitHub resource for implementation insights.

- Vector Database Indexing Dilemma: @m4hdyar sought strategies for updating vector database indexes after code changes. @vvm2264 proposed a code chunk-tagging system or a 1:1 mapping solution, but no definitive strategy emerged.

- NLP Resources Remain Outdated: @nrs9044 asked for recommendations for up-to-date NLP materials that go beyond 2022's offerings. The question of the latest libraries and advancements received little follow-up.

- AzureChatOpenAI Configuration Woes: @smartge3k ran into a 'DeploymentNotFound' error when configuring AzureChatOpenAI within ConversationalRetrievalChain. Community discussion followed, but a solution remained elusive.

- Pondering a Pioneering PDF Parser: @dejoma expressed a desire to elevate the existing PDFMinerPDFasHTMLLoader / BeautifulSoup parser to a more refined level with a week of dedicated work, hoping to collaborate with like-minded individuals.

- One-Man Media Machine: @merkle highlighted how LangChain's langgraph agent setup can transform a solitary idea into a newsletter and tweets, citing a tweet from @michaeldaigler_ describing the process.

- A Hint of an Enigmatic Endeavor: @pk_penguin teased a potential new project or tool, inviting anyone curious to send a private message.

- RAG Revisions via Reflection: @pradeep1148 posted a YouTube tutorial titled "Corrective RAG using LangGraph," which covers using self-reflection to improve retrieval-augmented generation, along with a second video on Self RAG using LangGraph.

- Memory Matters: AI with Recall: In an article, @kulaone outlined how to build chatbots with persistent memory by integrating LangChain, Gemini Pro, and Firebase, so that memories extend beyond live sessions. For details, read here.

- A Spark of Spark API Trouble: @syedmujeeb hit an 'AppIdNoAuthError' with the Spark API; community members pointed to the relevant LangChain documentation for troubleshooting.
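The 1:1 mapping idea from the vector-database thread can be sketched as a content-hash diff: give each chunk a stable ID derived from its file path and position, store a hash of its text alongside the vector, and after a code change re-embed only the chunks whose hash changed. This is a minimal sketch assuming fixed-size line chunks and a plain dict standing in for the vector store's metadata; plan_reindex and the path::index ID scheme are hypothetical, not any vector database's API.

```python
import hashlib
from typing import Dict, List, Tuple


def chunk_text(text: str, max_lines: int = 20) -> List[str]:
    """Split a source file into fixed-size blocks of lines (a simplifying assumption)."""
    lines = text.splitlines()
    return ["\n".join(lines[i:i + max_lines]) for i in range(0, len(lines), max_lines)]


def chunk_id(path: str, index: int) -> str:
    """Hypothetical stable ID: file path plus chunk position."""
    return f"{path}::{index}"


def content_hash(chunk: str) -> str:
    return hashlib.sha256(chunk.encode("utf-8")).hexdigest()


def plan_reindex(path: str, new_text: str, indexed: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Compare a file's new chunks with what is indexed.

    indexed maps chunk ID -> content hash currently stored with each vector.
    Returns (IDs to re-embed and upsert, IDs to delete) for this file.
    """
    new_ids = {chunk_id(path, i): content_hash(c) for i, c in enumerate(chunk_text(new_text))}
    old_ids = {cid: h for cid, h in indexed.items() if cid.startswith(path + "::")}
    to_upsert = [cid for cid, h in new_ids.items() if old_ids.get(cid) != h]
    to_delete = [cid for cid in old_ids if cid not in new_ids]
    return to_upsert, to_delete
```

Because chunks are cut at fixed line offsets, inserting a line near the top of a file shifts every later chunk and forces them all to be re-embedded; splitting on function or class boundaries would keep IDs more stable, at the cost of a language-aware splitter.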

DiscoResearch Discord Summary

- Gemma Models Spark Technical Interest: @johannhartmann asked about the training strategy behind the Gemma models, prompting a knowledge share that included @sebastian.bodza's link to Google's open models. @philipmay raised concerns about their proficiency in non-English languages, while @bjoernp linked Gemma's instruction-tuned version and highlighted its 256k vocabulary size.

- Aleph Alpha's Progress Under the Microscope: An Aleph Alpha model update sparked discussion of potential improvements. @devnull0 cited Andreas Köpf joining Aleph Alpha as a positive sign and shared the company's changelog, while @_jp1_ was skeptical given the absence of benchmark data and instruction tuning in the updated models.

- Gemma Falls Short in German Language Tests: Evaluations by @_jp1_ and @bjoernp indicate that Gemma's instruct version struggles with German, scoring barely above random chance on German HellaSwag in lm_eval.

- Seeking Speed: GPU Budget Sparks Benchmark Navigation: @johannhartmann joked about GPU budget constraints and asked about free or faster benchmarks. @bjoernp proposed using vLLM to accelerate lm-evaluation-harness-de, though the slow test runs turned out to be caused by an outdated branch.

- Committing to Performance Improvements: @bjoernp acknowledged that the outdated branch lacked vLLM integration, which explained the sluggish benchmarking, and pledged to bring the harness in line with the current main branch within a few days.
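HellaSwag asks the model to pick the correct ending out of four candidates, so random guessing scores about 25%. Whether a score "barely surpassing random chance" is distinguishable from guessing depends on how many items were evaluated; a rough check is a one-sample z-score against the chance rate. This is a minimal sketch using the normal approximation to the binomial; the item counts below are illustrative, not figures from the German runs.

```python
import math


def z_vs_chance(correct: int, n_items: int, n_choices: int = 4) -> float:
    """z-score of observed accuracy against the random-guess rate 1/n_choices.

    Uses the normal approximation to the binomial, which is reasonable when
    n_items is in the hundreds or more.
    """
    p0 = 1.0 / n_choices
    accuracy = correct / n_items
    std_err = math.sqrt(p0 * (1.0 - p0) / n_items)
    return (accuracy - p0) / std_err
```

For example, 280 correct out of 1,000 items (28%) gives z ≈ 2.2, only modestly above chance, while the same 28% over 10,000 items gives z ≈ 6.9.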

LLM Perf Enthusiasts AI Discord Summary

- A Waitlist or a Helping Hand: @wenquai noted that to access a specific AI service, one has to either be on a waitlist or reach out to a Google Cloud representative.

- Twitter Stirring AI Access Talk: Rumors about gaining access to an AI service have been circulating on Twitter, with @res6969 confirming the buzz and awaiting their own access.

- AI Performance Anxiety: @res6969 relayed public feedback that the AI suffers from accuracy and hallucination problems, and @thebaghdaddy commented that this is a common issue.

- Navigating the CRM Maze for AI Enterprises: @frandecam is in pursuit of CRM solutions for their AI business, considering options like Salesforce, Zoho, Hubspot, or Pipedrive, while @res6969 advises against Salesforce.

- Google Shares Open Model Gems: potrock shared a Google blog post about open models available to developers.

- Tuning Technique Talk: @dartpain advocates for the use of ContrastiveLoss and MultipleNegativesRankingLoss when fine-tuning embeddings.
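ContrastiveLoss and MultipleNegativesRankingLoss are the names of loss classes in the sentence-transformers library. As a rough illustration of what MultipleNegativesRankingLoss computes, here is a from-scratch sketch (not the library's code) for a batch of (anchor, positive) embedding pairs: each anchor's own positive is the target, and every other positive in the batch serves as a negative, scored with scaled cosine similarity and cross-entropy. The scale of 20 is assumed here to match the library's default.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def multiple_negatives_ranking_loss(anchors: List[Sequence[float]],
                                    positives: List[Sequence[float]],
                                    scale: float = 20.0) -> float:
    """Mean cross-entropy where positives[i] is the target for anchors[i]
    and every positives[j] with j != i acts as an in-batch negative."""
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [scale * cosine(anchors[i], positives[j]) for j in range(n)]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[i]  # -log softmax probability of the true pair
    return total / n
```

Larger batches supply more negatives per anchor, which is why this loss is usually trained with the largest batch that fits in memory; ContrastiveLoss instead scores labelled similar/dissimilar pairs one at a time against a margin.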

Skunkworks AI Discord Summary

- Neuralink Insider Tips Requested: @xilo0 wants insights on answering the "evidence of exceptional ability" question in a late-stage Neuralink interview. They have a portfolio of projects but are seeking advice on how to stand out at a Musk-led venture.

- RAG Gets Reflective: @pradeep1148 shared two YouTube videos on Corrective RAG and Self RAG using LangGraph, highlighting self-reflection as a way to improve retrieval-augmented generation.

- Finetune Value Questioned: @pradeep1148 shared a YouTube video on "BitDelta: Your Fine-Tune May Only Be Worth One Bit," which questions how much value fine-tuning adds to large language models after extensive pre-training.

- Content Approval: @sabertoaster responded to the shared RAG and BitDelta content with a simple "nice," suggesting it resonated with the community.
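The core idea behind BitDelta, as its title suggests, is to store the difference between a fine-tuned model and its base model at one bit per weight. A minimal sketch, assuming a single weight matrix flattened to a list: keep only the sign of each delta plus one scale, here the mean absolute delta, which is the scale that minimizes squared reconstruction error for fixed signs. The paper reportedly refines these scales further with a distillation step; that step is omitted, and the function names are hypothetical.

```python
from typing import List, Tuple


def bitdelta_compress(base: List[float], finetuned: List[float]) -> Tuple[List[int], float]:
    """Return per-weight signs (+1/-1) and one scale for the fine-tune delta."""
    delta = [f - b for f, b in zip(finetuned, base)]
    # For fixed signs, the L2-optimal scale is the mean absolute delta.
    scale = sum(abs(x) for x in delta) / len(delta)
    signs = [1 if x >= 0 else -1 for x in delta]
    return signs, scale


def bitdelta_reconstruct(base: List[float], signs: List[int], scale: float) -> List[float]:
    """Approximate the fine-tuned weights as base + scale * sign(delta)."""
    return [b + scale * s for b, s in zip(base, signs)]
```

Storing one bit per weight plus one scale per matrix, instead of a full-precision delta, is what makes keeping many fine-tunes of a shared base model cheap.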

Alignment Lab AI Discord Summary

- AI Community Acknowledges One of Their Own: @swyxio received recognition from peers in the AI field, a notable professional milestone celebrated within the community.

- Grassroots Voices Sought in AI Lists: @tokenbender argued for including grassroots contributors in a corporate-dominated AI list, proposing @abacaj on Twitter as a worthwhile addition.

- Mystery of the Missing Token: @scopexbt is trying to find out whether there is an elusive token tied to the group, but has come up empty so far.

Datasette - LLM (@SimonW) Discord Summary

- Google Debuts Gemini 1.5 Pro with Video Input: Google has launched Gemini 1.5 Pro, which offers a 1 million token context and can accept video inputs. Simon Willison has been exploring these features through Google AI Studio and described his experiences on his blog.

- GLIBC Snag in GitHub Codespaces: While trying to run llm in a GitHub Codespace, @derekpwillis hit an OSError due to a missing GLIBC_2.32, traced back to a file in the llmodel_DO_NOT_MODIFY directory, and asked the group for potential fixes.

- Engineers Have a Soft Spot for "Don't Touch" Labels: @derekpwillis gave the humorously named llmodel_DO_NOT_MODIFY directory a shoutout, showing that even technical audiences appreciate a well-placed warning label.
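An error about a missing GLIBC_2.32 symbol version means a prebuilt library expects glibc 2.32 or newer, so a first step is to confirm which glibc the environment actually provides. A small sketch using Python's standard platform.libc_ver(); the helper names and the default required version are assumptions for illustration.

```python
import platform
from typing import Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '2.31' into (2, 31) so versions compare numerically."""
    return tuple(int(part) for part in version.split(".") if part.isdigit())


def glibc_at_least(required: str = "2.32") -> Optional[bool]:
    """True/False when glibc is detected; None on non-glibc systems (e.g. musl, macOS)."""
    lib, version = platform.libc_ver()
    if lib != "glibc" or not version:
        return None
    return parse_version(version) >= parse_version(required)
```

Running print(platform.libc_ver(), glibc_at_least()) inside the Codespace shows whether the container's glibc is older than the library requires.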

AI Engineer Foundation Discord Summary

- Groq LPU Gains Traction: Both @juanreds and a user going by ._z shared favorable opinions of the Groq LPU's performance, and @juanreds provided a test link so others could gauge its speed.

- Gemini 1.5 Gathering Arranged: @shashank.f1 invited members to an upcoming live discussion on Gemini 1.5 and shared a link to join.

- Diving Into Semantic Audio Analysis: A session on the A-JEPA AI model, which extracts semantic knowledge from audio files, was recapped in a YouTube video.

- Coordination Conundrum and Sponsorship Update: @yikesawjeez has a conflict with a morning event and asked for a recording. They also updated @705561973571452938 on sponsorship for an unspecified upcoming weekend event: one sponsor is confirmed and three more are potential.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1156 messages🔥🔥🔥):

- Gemma Model Satisfaction is Mixed: Users have mixed feelings about the Gemma models compared to Mistral. @itsme9316 acknowledges that Gemma can handle single prompts but says multi-turn conversations are where it falls apart. @dirtytigerx finds the over-aligned instruct model hard to use even for benign tasks, and others report unexpectedly high VRAM usage.

- Concerns About Google's AI Models: Despite having resources and specialists, there is a sentiment of disappointment conveyed by users like @selea8026, questioning Google's decisions around AI development. @alphaatlas1 criticizes their track record, citing models like Orca being contaminated.

- Text-to-Video Models Discussion: Discord users discussed Sora, a model described as a "world simulator." Yann LeCun, Meta's Chief AI Scientist, criticized this approach, arguing that training a model by generating pixels is wasteful. Some users, including @welltoobado, found Sora impressive for its video quality and longer generations compared to open-source text-to-video models.

- Anticipation for Llama 3 (LL3): There's speculation and eagerness among users about the release of a new model known as "Llama 3." @mrdragonfox hints that March could be a potential release period, whereas others, such as @kaltcit, suggest a more conservative estimate.

- PolyMind's Updated RAG Retrieval: The "retrieval_count" feature that @itsme9316 introduced in PolyMind has been well received, with users like @netrve finding it very useful for tasks like GMing. It retrieves multiple results, giving the model a broader scope of information.
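A setting like retrieval_count essentially controls k in top-k retrieval. As a hedged illustration (not PolyMind's code; the function name and toy vectors are hypothetical), here is top-k selection by cosine similarity over pre-computed embeddings:

```python
import heapq
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query: Sequence[float], docs: Dict[str, Sequence[float]],
             retrieval_count: int = 1) -> List[str]:
    """Return the IDs of the retrieval_count documents most similar to the query."""
    scored = [(cosine(query, vec), doc_id) for doc_id, vec in docs.items()]
    return [doc_id for _, doc_id in heapq.nlargest(retrieval_count, scored)]
```

Raising the count widens the context handed to the model, at the cost of more prompt tokens and more chances of including irrelevant passages.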

Links mentioned:

- Join the Stable Diffusion Discord Server!: Welcome to Stable Diffusion; the home of Stable Models and the Official Stability.AI Community! https://stability.ai/ | 318346 members

- Tweet from JB McGill (@McGillJB): @yacineMTB Ho ho ho it gets better.

- HuggingChat: Making the community's best AI chat models available to everyone.

- Gemma: Introducing new state-of-the-art open models: Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models.

- bartowski/sparsetral-16x7B-v2-exl2 · Hugging Face: no description found

- serpdotai/sparsetral-16x7B-v2 · Hugging Face: no description found

- Leroy Worst Admin GIF - Leroy Worst Admin Admin - Discover & Share GIFs: Click to view the GIF

- Stable Cascade - a Hugging Face Space by ehristoforu: no description found

- HuggingFaceTB/cosmo-1b · Hugging Face: no description found

- VideoPrism: A Foundational Visual Encoder for Video Understanding: We introduce VideoPrism, a general-purpose video encoder that tackles diverse video understanding tasks with a single frozen model. We pretrain VideoPrism on a heterogeneous corpus containing 36M high...

- google/gemma-7b · Hugging Face: no description found

- OpenAI's "World Simulator" SHOCKS The Entire Industry | Simulation Theory Proven?!: OpenAI's Sora is described as a "world simulator" by OpenAI. It can potentially simulate not only our reality but EVERY reality. Use this limited-time deal t...

- bartowski/sparsetral-16x7B-v2-SPIN_iter0-exl2 · Hugging Face: no description found

- bartowski/sparsetral-16x7B-v2-SPIN_iter1-exl2 · Hugging Face: no description found

- Tweet from sean mcguire (@seanw_m): chatgpt is apparently going off the rails right now and no one can explain why

- GitHub - amazon-science/tofueval: Contribute to amazon-science/tofueval development by creating an account on GitHub.

- GitHub - AUTOMATIC1111/stable-diffusion-webui: Stable Diffusion web UI: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

- Golem.de: IT-News für Profis: no description found

- THE DECODER: Artificial Intelligence is changing the world. THE DECODER brings you all the news about AI.

TheBloke ▷ #characters-roleplay-stories (189 messages🔥🔥):

- Miqu's Rush vs Goliath's Pace: @sunija was concerned that Miqu and its derivatives rush through scenes too quickly. In contrast, @superking__ found that the original Miqu usually progresses scenes at a good pace, though it sometimes ends a story hastily, which they mitigated by adjusting the prompt.

- DPO Experiments for Roleplaying: @superking__ proposed creating DPO data so models better roleplay characters who lie, lack knowledge, or keep secrets. Several users were interested, and @kaltcit suggested that existing models like the original Llama chat already do something akin to this kind of selective character knowledge.

- Untruthful DPO: @superking__ described a detailed plan to create DPO pairs that differ by the minimal number of tokens, training models to lie about specific topics and strengthening selective character responses in roleplay. The approach uses mirrored cases to 'nudge' model weights while avoiding overtraining on unrelated tokens.

- Model Behaviours and Secrets: @spottyluck discussed the idea of LLMs keeping secrets and the related challenges, citing a nuanced response from a model nicknamed "Frank" when asked whether it could keep secrets.

- Resource Requirements for Miqu-70b Models: Discussion of VRAM usage showed that miqu-70b models vary in their memory requirements: @superking__ mentioned 32 GB for Q2, while @mrdragonfox observed that Q5 could fit on a 48 GB GPU. Model size and available hardware can significantly shape the user experience.

- AI Struggles in Character Consistency: @drakekard sought advice on prompts for consistent character roleplay with Amethyst-13B-Mistral-GPTQ. @superking__ suggested a simple chat dialogue format and shared their settings; results varied given the limitations of smaller models.
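The "minimal token difference" idea from the DPO discussion can be enforced mechanically when building the dataset. A rough sketch, assuming whitespace tokens stand in for the model's real tokenizer and using the common prompt/chosen/rejected record layout for DPO data; the helper names and the max_diff threshold are hypothetical. Here the chosen answer is the in-character one that keeps the secret.

```python
import difflib
from typing import Dict


def token_diff_count(a: str, b: str) -> int:
    """Count whitespace tokens inserted, deleted, or replaced between a and b."""
    ta, tb = a.split(), b.split()
    matcher = difflib.SequenceMatcher(a=ta, b=tb, autojunk=False)
    changed = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changed += max(i2 - i1, j2 - j1)
    return changed


def make_dpo_pair(prompt: str, chosen: str, rejected: str, max_diff: int = 5) -> Dict[str, str]:
    """Build one DPO record, rejecting pairs that are identical or differ too much."""
    diff = token_diff_count(chosen, rejected)
    if diff == 0:
        raise ValueError("chosen and rejected are identical")
    if diff > max_diff:
        raise ValueError(f"pair differs by {diff} tokens (limit {max_diff})")
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}
```

Tokens in a shared prefix get identical log-probabilities in both responses and cancel exactly in the DPO log-ratio difference, so keeping the pair nearly identical concentrates the training signal on the span where the answers diverge.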

Links mentioned:

- Reservoir Dogs Opening Scene Like A Virgin [Full HD]: Quentin Tarantino's Reservoir Dogs opening scene where Mr Brown (Tarantino) explains what "Like A Virgin" is about.

- The Best Shopping Scene Ever! (from Tarantino's "Jackie Brown"): A scene from Quentin Tarantino's "Jackie Brown" with Robert De Niro and Bridget Fonda in the scene

TheBloke ▷ #training-and-fine-tuning (6 messages):

- Synthetic Dataset Fine-tuning Frenzy: @pncdd is struggling to review and edit a synthetic dataset for a data extraction model, finding the process tedious in JSON Lines format. Frustrated by the lack of suitable tools, they are considering converting it to .csv to edit in Google Sheets.

- Desperate for Dataset Tools: In a follow-up, @pncdd described the dataset's complexity: it pairs phone call transcriptions with detailed JSON responses.

- Negative Training a Positive Step?: @3dhelios asked whether negative examples can be used in training datasets, so that the model learns from wrong answers.

- Gradio to the Rescue: @amogus2432 suggested that @pncdd ask GPT-4 to write a simple Gradio tool for dataset editing, while acknowledging GPT-4's limitations with Gradio Blocks.

- In Search of the Perfect Classifier: @yustee. sought model recommendations for a classification task that filters for relevance in a RAG pipeline, and is considering deepseek-coder-6.7B-instruct.
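For the transcript-plus-JSON dataset described above, the JSON Lines to CSV round trip needs only the Python standard library: keep the transcript as a plain text column, serialize the nested JSON response into a single cell for editing in Google Sheets, and parse it back afterwards. A sketch under those assumptions; the field names transcript and response are hypothetical placeholders for the dataset's real keys.

```python
import csv
import io
import json
from typing import Sequence


def jsonl_to_csv(jsonl_text: str, fields: Sequence[str] = ("transcript", "response")) -> str:
    """Flatten JSON Lines records into CSV; non-string values become JSON text cells."""
    out = io.StringIO(newline="")
    writer = csv.DictWriter(out, fieldnames=list(fields))
    writer.writeheader()
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        writer.writerow({
            f: record[f] if isinstance(record[f], str) else json.dumps(record[f], ensure_ascii=False)
            for f in fields
        })
    return out.getvalue()


def csv_to_jsonl(csv_text: str, json_fields: Sequence[str] = ("response",)) -> str:
    """Reverse the conversion; columns in json_fields must hold JSON text and are parsed back."""
    reader = csv.DictReader(io.StringIO(csv_text, newline=""))
    lines = []
    for row in reader:
        record = {k: json.loads(v) if k in json_fields else v for k, v in row.items()}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"
```

When saving to disk, open the CSV file with newline="" and encoding="utf-8" so that quoted multi-line transcripts survive the trip through the spreadsheet.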

TheBloke ▷ #coding (166 messages🔥🔥):

- Exploring Chatbots with Multiple Characters: @pilotgfx is building a local script featuring multiple coding assistants that manage conversation histories without backend servers; conversation length is managed by clearing out old entries so the prompt does not grow too long.

- Intriguing Discussion on RAG and Long Conversations: @dirtytigerx highlighted conventional Retrieval-Augmented Generation (RAG) techniques, such as metadata filtering and compression methods, for managing extensive conversation histories; @superking__ pointed out that writing a server for Mixtral might address prompt evaluation lag.

- Mistral Finetuning Exploration: @fred.bliss and @dirtytigerx discussed the ease of use and distributed training features in Mistral; @dirtytigerx uses macOS for daily tasks and runs ML workloads on other systems.

- Editor Preferences for Dev Environments: @fred.bliss and @dirtytigerx compared editors; @dirtytigerx is dissatisfied with VSCode and prefers Zed, citing its speed and developer focus. They also discussed mlx's performance and fine-tuning capabilities on Apple's new hardware.

- Optimization Talk on Model Implementation: @etron711 asked about fine-tuning Mistral models and sought opinions on optimizing server costs and throughput; @dirtytigerx advised starting with a small prototype on rented GeForce RTX 4090 GPUs to build an MVP before scaling up infrastructure.
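Clearing out old entries to bound prompt length amounts to trimming the history from the oldest end until the prompt fits a budget. A minimal sketch, assuming a rough four-characters-per-token estimate in place of a real tokenizer and an OpenAI-style list of role/content messages; the function names are hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]


def approx_tokens(text: str) -> int:
    """Crude estimate (assumption): about four characters per token."""
    return max(1, len(text) // 4)


def trim_history(system: Message, history: List[Message], budget: int) -> List[Message]:
    """Drop the oldest messages until the system prompt plus history fits the budget."""
    kept = list(history)

    def total() -> int:
        return approx_tokens(system["content"]) + sum(approx_tokens(m["content"]) for m in kept)

    while kept and total() > budget:
        kept.pop(0)  # oldest message goes first; the system prompt is always kept
    return [system] + kept
```

Dropping one message at a time can strand an assistant reply without the user turn it answered; removing user/assistant pairs together, or summarizing what gets removed, are common refinements.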

Links mentioned:

- GitHub - raphamorim/rio: A hardware-accelerated GPU terminal emulator focusing to run in desktops and browsers.: A hardware-accelerated GPU terminal emulator focusing to run in desktops and browsers. - raphamorim/rio

- GitHub - pulsar-edit/pulsar: A Community-led Hyper-Hackable Text Editor: A Community-led Hyper-Hackable Text Editor. Contribute to pulsar-edit/pulsar development by creating an account on GitHub.

LM Studio ▷ #💬-general (375 messages🔥🔥):

- CPU Features Debate: @jedd1 discussed CPU feature advancements, @exio4 noted Intel Atom's long-standing low performance, and @krypt_lynx added that even Celerons lack AVX. These newer features are rarely seen outside Intel's own site.

- LM Studio vs. Hugging Face Connection Issues: While @lmx4095 could access Hugging Face, they hit connectivity errors in LM Studio; @heyitsyorkie confirmed issues with the model explorer.

- Compatibility Questions in Discord: @nsitnov, @ivtore, @heyitsyorkie, and @joelthebuilder discussed setting up web interfaces and integrating with the LM Studio Server, with mixed results.

- LM Studio Model Recommendations and Issues: Various users discussed the performance and issues of different models, reporting both successful runs and errors.

- Fixes and Patches for LM Studio: @yagilb linked to recommended versions of Gemma for LM Studio and explained a recent fix for regeneration and continuation bugs in the latest LM Studio update.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- Gemma: Introducing new state-of-the-art open models: Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models.

- Hugging Face – The AI community building the future.: no description found

- google/gemma-7b · Hugging Face: no description found

- I tried an uncensored AI, and it answered dangerous questions: Streaming every day on Twitch: https://www.twitch.tv/marouane53 Reddit: https://www.reddit.com/r/Batallingang/ Instagram: https://www.instagram.com/marouane53/ Server ...

- Head Bang Dr Cox GIF - Head Bang Dr Cox Ugh - Discover & Share GIFs: Click to view the GIF

- LoneStriker/gemma-2b-GGUF · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- GitHub - lllyasviel/Fooocus: Focus on prompting and generating: Focus on prompting and generating. Contribute to lllyasviel/Fooocus development by creating an account on GitHub.

- Need support for GemmaForCausalLM · Issue #5635 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

- MSN: no description found

- Mistral's next LLM could rival GPT-4, and you can try it now in chatbot arena: French LLM wonder Mistral is getting ready to launch its next language model. You can already test it in chat.

- How To Run Stable Diffusion WebUI on AMD Radeon RX 7000 Series Graphics: Did you know you can enable Stable Diffusion with Microsoft Olive under Automatic1111 to get a significant speedup via Microsoft DirectML on Windows? Microso...

LM Studio ▷ #🤖-models-discussion-chat (65 messages🔥🔥):

- Query on Best Model for Lyrics Creation: @discockk asked for the best model to generate lyrics. @fabguy humorously criticized the brief query but noted that no models are specifically trained for poems or rhymes, and suggested trying the storytelling models listed in another channel.

- Hermes 2 Model Expertise: @wolfspyre shared their experience with the new NousResearch Hermes 2 Yi model, praising its performance and linking to its Hugging Face page.

- Conversation on LLM Output Quality and Verification: @goldensun3ds considered a dual-LLM system to improve output quality, in which a secondary LLM revises the primary LLM's response. @jedd1 and @christianazinn pointed to existing frameworks like Judy and to papers along the same lines.

- Challenges with AutoGPT and LLM Compatibilities: @thebest6337 reported difficulties using AutoGPT with various models; @docorange88 suggested Mistral and a version of Dolphin, and the two later discussed persistent errors and model compatibility.

- Tech Details and Optimization Talks: @nullt3r and @goldensun3ds discussed potential bottlenecks when running large LLMs like Goliath 120B on GPUs with limited VRAM, including memory bandwidth and layer offloading.
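The bandwidth argument can be put in numbers with a simple model: when generating one token at a time, roughly every weight is read once per token, so decoding speed is bounded by how fast those bytes can be streamed. With partial layer offloading, the layers left in system RAM are read at CPU memory bandwidth, which usually dominates. This is a back-of-the-envelope sketch that ignores compute, KV-cache reads, and transfer overheads; the sizes and bandwidths in the note below are illustrative assumptions, not measurements of Goliath 120B or any particular GPU.

```python
def decode_tokens_per_second(model_bytes: float, gpu_fraction: float,
                             gpu_bandwidth: float, cpu_bandwidth: float) -> float:
    """Upper bound on tokens/s for memory-bound decoding with partial GPU offload.

    model_bytes:    size of the (quantized) weights in bytes
    gpu_fraction:   share of the weights resident in VRAM (0.0 to 1.0)
    *_bandwidth:    memory bandwidth in bytes per second
    """
    gpu_bytes = model_bytes * gpu_fraction
    cpu_bytes = model_bytes - gpu_bytes
    seconds_per_token = gpu_bytes / gpu_bandwidth + cpu_bytes / cpu_bandwidth
    return 1.0 / seconds_per_token
```

For a hypothetical 60 GB model with 900 GB/s of VRAM bandwidth and 50 GB/s of system RAM bandwidth, keeping everything in VRAM gives at most about 15 tokens/s, but with only half the layers on the GPU the bound falls to roughly 1.6 tokens/s, because the CPU-side half alone takes 0.6 s per token.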

Links mentioned:

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- Testing Shadow PC Pro (Cloud PC) with LM Studio LLMs (AI Chatbot) and comparing to my RTX 4060 Ti PC: I have been using Chat GPT since it launched about a year ago and I've become skilled with prompting, but I'm still very new with running LLMs "locally". Whe...

- GitHub - TNT-Hoopsnake/judy: Judy is a python library and framework to evaluate the text-generation capabilities of Large Language Models (LLM) using a Judge LLM.: Judy is a python library and framework to evaluate the text-generation capabilities of Large Language Models (LLM) using a Judge LLM. - TNT-Hoopsnake/judy

- NousResearch/Nous-Hermes-2-Yi-34B-GGUF · Hugging Face: no description found

LM Studio ▷ #announcements (3 messages):

- LM Studio v0.2.15 Drops with Google Gemma: @yagilb announced that LM Studio v0.2.15 is now available with support for Google's Gemma models in 2B and 7B versions. For now, users must download Google's Gemma models (2B version, 7B version) manually, but a more seamless experience is expected soon.

- New Features & UI Updates Shine in Latest LM Studio: The update introduces a new and improved downloader with pause/resume capabilities, a conversation branching feature, a GPU layers slider, and a UI refresh, including a new home page look and updated chat.

- Bug Squashing in LM Studio: Users are encouraged to redownload v0.2.15 from the LM Studio website to obtain important bug fixes that weren't present in the original 0.2.15 build.

- Easing Gemma Integration Pain Points: @yagilb linked to recommended Gemma quants now available to LM Studio users, aiming to streamline the integration of Google's new Gemma model into LM Studio.

Links mentioned:

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

LM Studio ▷ #🧠-feedback (10 messages🔥):

- RAM Discrepancy Issue Raised: @darkness8327 reported that RAM is not displayed correctly in the software they are using.

- Assistant Creation Feature Request: @urchig asked whether LM Studio could add assistant creation, similar to the feature available on Hugging Face's HuggingChat.

- Instructions for Local LLM Installation Sought: @maaxport asked for guidance on installing a local LLM with AutoGPT on a rented server.

- Update on Client Version Confusion: @msz_mgs noted that client 0.2.14 incorrectly reported itself as the latest version. @heyitsyorkie suggested downloading and installing the update manually, since in-app updating isn't working.

- Gemma Model Troubleshooting: @richardchinnis reported a problem with the Gemma models, then followed up saying they would try a different model based on a discussion in another channel.

Links mentioned:

- HuggingChat - Assistants: Browse HuggingChat assistants made by the community.

LM Studio ▷ #🎛-hardware-discussion (96 messages🔥🔥):

- Power Supply Crucial for Dual GPUs: @heyitsyorkie emphasized the importance of a PSU with enough power to run both GPUs in a dual setup.

- Multi-GPU Setups Inherit Slowest Card's Speed: According to @wilsonkeebs, in a multi-GPU configuration the overall speed matches that of the slowest card, but more VRAM is still preferable to loading models into system RAM.

- Motherboard PCIe Support Matters for GPU Expansion: @krzbio_21006 asked whether dual PCIe 4.0 x16 slots were sufficient for GPU expansion; @wilsonkeebs said yes and suggested a PCIe riser if space is an issue.

- Power Efficiency vs. Performance in GPU Selection: @jedd1 and @heyitsyorkie discussed the trade-offs between power consumption and performance when choosing between GPUs like the 4060 Ti and the 4070 Ti Super.

- Optimal GPU Strategy for AI and Vision Models: In a conversation about VRAM requirements, @heyitsyorkie and others highlighted the advantages of fewer, higher-end GPUs like the 3090 over many lower-end ones, since more VRAM per card significantly benefits AI workloads.

Links mentioned:

- Have You GIF - Have You Ever - Discover & Share GIFs: Click to view the GIF

- The Best GPUs for Deep Learning in 2023 — An In-depth Analysis: Here, I provide an in-depth analysis of GPUs for deep learning/machine learning and explain what is the best GPU for your use-case and budget.

- NVIDIA GeForce RTX 2060 SUPER Specs: NVIDIA TU106, 1650 MHz, 2176 Cores, 136 TMUs, 64 ROPs, 8192 MB GDDR6, 1750 MHz, 256 bit

- NVIDIA GeForce RTX 3090 Specs: NVIDIA GA102, 1695 MHz, 10496 Cores, 328 TMUs, 112 ROPs, 24576 MB GDDR6X, 1219 MHz, 384 bit

LM Studio ▷ #🧪-beta-releases-chat (301 messages🔥🔥):

- LM Studio 0.2.15 Beta User Struggles: Users like @n8programs experienced inconsistencies with the n_gpu_layers feature, requiring frequent model reloads. @yagilb suggested a preset option to simplify loading models onto the GPU.

- Linux Libclblast Bug Squashed: @yagilb identified the root cause of the libclblast bug affecting Linux users and planned a fix, although it was not included in the 0.2.15 preview.

- Trouble in Gemma Town: Many users reported Google's Gemma 7B models producing gibberish output. The issue was pervasive enough to be noticed by @drawless111, who cited similar issues with other models like RWKV.

- Regenerate Bug Resolved: After multiple reports of problems with regeneration and multi-turn chats, @yagilb announced that 0.2.15 had been re-released with significant bug fixes aimed at the reported issues.

- Official Gemma Model Functional, Quantized Variants a Mishap: Google's official Gemma model worked well in its 32-bit full-precision GGUF format, despite the large file size, whereas many quantized versions did not. Community members found @LoneStriker's quantized models to work, and @yagilb provided a Gemma 2B Instruct GGUF quantized by LM Studio and tested for compatibility with it.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- asedmammad/gemma-2b-it-GGUF · Hugging Face: no description found

- json{ "cause": "(Exit code: 1). Please check settings and try loading th - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- LoneStriker/gemma-2b-it-GGUF · Hugging Face: no description found

- Thats What She Said Dirty Joke GIF - Thats What She Said What She Said Dirty Joke - Discover & Share GIFs: Click to view the GIF

- google/gemma-7b · Why the original GGUF is quite large ?: no description found

- google/gemma-7b-it · Hugging Face: no description found

- Need support for GemmaForCausalLM · Issue #5635 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

- HuggingChat: Making the community's best AI chat models available to everyone.

- Summer Break GIF - Summer break - Discover & Share GIFs: Click to view the GIF

- google/gemma-2b-it · Hugging Face: no description found

- Tweet from Victor M (@victormustar): @LMStudioAI @yagilb The new font is great, but will still miss the OG (0.0.1!)

- Add gemma model by postmasters · Pull Request #5631 · ggerganov/llama.cpp: There are couple things in this architecture: Shared input and output embedding parameters. Key length and value length are not derived from n_embd. More information about the models can be found...

LM Studio ▷ #autogen (1 messages):

senecalouck: https://github.com/microsoft/UFO

LM Studio ▷ #crew-ai (2 messages):

- Seeking Clarification on VSC Issues: @wolfspyre asked for clarification on a problem related to VSC (Visual Studio Code): the expected outcome, the reasoning behind the conclusions drawn, and what solutions had already been tried.

- Potential Virtual Environment Troubles: @dryt mentioned experiencing similar issues on a specific project and speculated that the problem might be related to venv or conda, most likely a venv issue.
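One quick way to test the venv-versus-conda suspicion is to ask the interpreter which environment it is actually running in. A small sketch using only the standard library: in a venv, sys.prefix differs from sys.base_prefix, while an activated conda environment sets the CONDA_PREFIX environment variable and its own interpreter's sys.prefix points there. The function name is hypothetical; run it from the same terminal or VS Code interpreter that shows the problem.

```python
import os
import sys


def describe_environment() -> str:
    """Report whether this interpreter runs in a venv, a conda env, or neither."""
    if sys.prefix != sys.base_prefix:
        return f"venv at {sys.prefix}"
    conda_prefix = os.environ.get("CONDA_PREFIX")
    if conda_prefix and os.path.realpath(conda_prefix) == os.path.realpath(sys.prefix):
        return f"conda env at {sys.prefix}"
    if conda_prefix:
        # The shell has a conda env active, but VS Code may be using another interpreter.
        return f"conda env {conda_prefix} is active, but this interpreter is {sys.executable}"
    return f"no venv or conda env; interpreter is {sys.executable}"


print(describe_environment())
```

The third case, a conda environment active in the shell while a different interpreter runs the code, is a common source of "works in the terminal, fails in the editor" reports.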

Nous Research AI ▷ #ctx-length-research (67 messages🔥🔥):

- ChatGPT Character Limit Queries: @elder_plinius found that the web version of ChatGPT seems to limit context by characters rather than tokens, which raised questions in the group. Although @vatsadev pointed out that GPT-3 and GPT-4 use tokenizers, @elder_plinius's experiment fitting the Big Lebowski script showed inconsistent acceptance of context lengths.

- Scaling Language Models to 128K Context: @gabriel_syme shared a GitHub repository with implementation details for scaling language models to 128K context.

- VRAM Requirements for Large Context AI: The conversation turned to the VRAM needed to process a 128K context with a 7B model; @teknium claimed inference would require 600+ GB, while @blackl1ght reported running inference at 64K context with around 28 GB of VRAM.

- Server Issues Versus Context Length Misconceptions: When @elder_plinius mentioned that the original Big Lebowski script was repeatedly rejected for exceeding context limits, @vatsadev proposed that server latency, rather than context length, might be the cause.

- Token Compression and Replacement Language Theory: @elder_plinius speculated about building a library of alphanumeric mappings that would reduce token count by teaching models a new condensed language, an idea that grew out of the Big Lebowski context-length experiments.
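Much of the disagreement over VRAM at long context comes down to the key/value cache, which grows linearly with sequence length on top of the model weights. A back-of-the-envelope sketch, assuming standard transformer attention that caches one key and one value vector per layer, per KV head, per token; the layer and head counts in the note below are assumptions modeled on common 7B configurations, not figures from the discussion. It leaves out the weights, activations, and the attention-score memory that implementations without fused attention kernels also need, which is one reason reported numbers vary so widely.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2, batch: int = 1) -> int:
    """Memory for cached keys and values: 2 (K and V) x layers x KV heads x head dim x tokens."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value * batch


GIB = 1024 ** 3
```

With 32 layers, 128-dimensional heads, and fp16 values, a 131,072-token context needs 16 GiB of cache if the model has 8 KV heads (grouped-query attention, as in Mistral 7B) and 64 GiB with 32 KV heads (full multi-head attention, as in Llama 2 7B), before counting the weights.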

Links mentioned:

- gpt-tokenizer playground: no description found

- Tweet from Pliny the Prompter 🐉 (@elder_plinius): The Big Lebowski script doesn't quite fit within the GPT-4 context limits normally, but after passing the text through myln, it does!

- GitHub - FranxYao/Long-Context-Data-Engineering: Implementation of paper Data Engineering for Scaling Language Models to 128K Context: Implementation of paper Data Engineering for Scaling Language Models to 128K Context - FranxYao/Long-Context-Data-Engineering

Nous Research AI ▷ #off-topic (22 messages🔥):

- Exploring AI-Driven Game Simulation:

@nonameusrshared a link to a YouTube video demonstrating OpenAI's Sora simulating Minecraft gameplay, expressing amazement at its understanding of game mechanics. Sora's capabilities include understanding the XP bar, item stacking, inventory slots, and replicating animations for in-game actions.

- AI Misconceptions and Modded Content Influence: While discussing the AI's grasp on Minecraft,

@afterhoursbillyobserved some inaccuracies but noted no major visual bugs, whereas_3sphereremarked on the surreal accuracy at first glance, despite the grid alignment issues.

- Self-Improving Retrieval-Augmented Generation:

@pradeep1148 linked to a YouTube video titled "Self RAG using LangGraph". The video discusses how self-reflection can enhance retrieval-augmented generation by enabling the correction of subpar retrievals or outputs.

- From Microscopy to Artistry:

@blackblize asked whether non-experts could feasibly train models on microscope images to create artistic derivatives, and sought guidance on how to start.

- Generating Avatars for Nous Models:

In response to @stoicbatman's query about generating avatar images for Nous models, @teknium mentioned using DALL-E and an image-to-image method through Midjourney to create these visual representations.
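The videos implement self-correcting RAG with LangGraph, but this plain-Python sketch does not use LangGraph's API. It covers only the corrective loop: retrieve, grade each document, and rewrite the query when nothing passes. The keyword-overlap grader, the synonym-table rewriter, and all function names are hypothetical stand-ins for LLM calls.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    text: str


def terms(s):
    """Lowercased word set with surrounding punctuation stripped."""
    return {w.strip(".,?!").lower() for w in s.split()}


def retrieve(query, corpus, k=2):
    # Toy retriever: rank documents by how many query terms they share.
    q = terms(query)
    return sorted(corpus, key=lambda d: len(q & terms(d.text)), reverse=True)[:k]


def is_relevant(query, doc, threshold=0.5):
    """Stand-in for an LLM relevance grader: share of query terms found in the doc."""
    q = terms(query)
    return len(q & terms(doc.text)) / max(len(q), 1) >= threshold


def rewrite(query, synonyms):
    """Stand-in for an LLM query rewriter: swap terms using a hypothetical synonym table."""
    return " ".join(synonyms.get(w, w) for w in query.lower().split())


def corrective_rag(query, corpus, synonyms, max_retries=2):
    for attempt in range(max_retries + 1):
        relevant = [d for d in retrieve(query, corpus) if is_relevant(query, d)]
        if relevant:
            # A real pipeline would now hand these documents to an LLM to generate the answer.
            return query, relevant
        if attempt < max_retries:
            query = rewrite(query, synonyms)
    return query, []
```

Self-RAG additionally grades the generated answer. That step would be a second check after generation in the same loop.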

Links mentioned:

- Minecraft - Real vs OpenAI's Sora: Simulating digital worlds. Sora is also able to simulate artificial processes–one example is video games. Sora can simultaneously control the player in Minec...

- Corrective RAG using LangGraph: Corrective RAG. Self-reflection can enhance RAG, enabling correction of poor quality retrieval or generations. Several recent papers focus on this theme, but im...

- Self RAG using LangGraph: Self-reflection can enhance RAG, enabling correction of poor quality retrieval or generations. Several recent papers focus on this theme, but implementing the...

- BitDelta: Your Fine-Tune May Only Be Worth One Bit: Large Language Models (LLMs) are typically trained in two phases: pre-training on large internet-scale datasets, and fine-tuning for downstream tasks. Given ...
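The BitDelta link describes compressing a fine-tune's weight delta to about one bit per weight. This pure-Python sketch reflects our reading of the core idea:

- Keep sign(W_ft − W_base) for each weight.
- Keep one scale per weight matrix, initialized to the mean absolute delta.

As we understand it, the paper also refines the scales by distillation, which is omitted here. Flat lists stand in for weight matrices, and the function names are hypothetical.

```python
def bitdelta_compress(w_base, w_ft):
    """Compress a fine-tune delta to one sign bit per weight plus a single scale."""
    if len(w_base) != len(w_ft) or not w_base:
        raise ValueError("weights must be non-empty and the same shape")
    delta = [f - b for f, b in zip(w_ft, w_base)]
    signs = [d >= 0 for d in delta]  # one bit per weight (a zero delta maps to +)
    # For a fixed sign pattern, mean |delta| is the scale that minimizes squared error.
    scale = sum(abs(d) for d in delta) / len(delta)
    return signs, scale


def bitdelta_apply(w_base, signs, scale):
    """Reconstruct approximate fine-tuned weights: base + scale * sign."""
    return [b + (scale if s else -scale) for b, s in zip(w_base, signs)]
```

Many fine-tunes of the same base could then share one copy of the base weights, with each fine-tune stored as only its bit pattern and scales.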

Nous Research AI ▷ #interesting-links (49 messages🔥):

- Apple Silicon Optimized GNN Library Launches:

.beowulfbr highlighted the release of mlx-graphs, a library for running Graph Neural Networks (GNNs) on Apple Silicon, which @tristanbilot reported delivers up to a 10x training speedup.

- Gemini 1.5 Pro Learns to Self-Implement:

burnytech shared a post by @mattshumer_ in which Gemini 1.5 Pro, shown the Self-Operating Computer codebase, explained how it works and then implemented itself into the repository.

- The AI Stack Battles Intensify in 2023:

burnytech brought attention to @swyx's overview of the Four Wars of the AI Stack, part of their December 2023 recap. It covers the business battles in data, GPU/inference, multimodality, and RAG/Ops.

- A-JEPA AI Model Clarified as Unaffiliated with Meta:

In the discussion about an AI model named A-JEPA, @ldj clarified that it has no affiliation with Meta or Yann LeCun, contrary to what the name might imply. shashank.f1 concurred, acknowledging the author isn't from Meta.

- Decoding Process May Elicit CoT Paths in Pre-Trained LLMs:

mister_poodle referenced an arXiv paper proposing that chain-of-thought reasoning paths can be elicited from large language models by altering the decoding process rather than the prompt.
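The decoding-based approach in the "Chain-of-Thought Reasoning Without Prompting" paper is roughly as follows:

1. Branch on several top-k candidates for the first token.
2. Decode each branch greedily.
3. Prefer the path whose answer tokens the model is most confident about.

The sketch below covers only step 3, the selection. It scores each path by the mean gap between the top-1 and top-2 token probabilities over its answer span. This is our reading of the paper, and the probabilities in any example would be hypothetical rather than model output.

```python
from dataclasses import dataclass


@dataclass
class Path:
    text: str
    # (p_top1, p_top2) at each answer token, as a model would report them.
    answer_top2: list


def confidence(path):
    """Mean gap between the two most likely tokens over the answer span."""
    gaps = [p1 - p2 for p1, p2 in path.answer_top2]
    return sum(gaps) / len(gaps)


def pick_path(paths):
    # Each path is assumed to start from a different top-k first token,
    # then be decoded greedily; keep the one with the most confident answer.
    return max(paths, key=confidence)
```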

Links mentioned:

- Tweet from Sundar Pichai (@sundarpichai): Introducing Gemma - a family of lightweight, state-of-the-art open models for their class built from the same research & tech used to create the Gemini models. Demonstrating strong performance acros...

- Library of Congress Subject Headings - LC Linked Data Service: Authorities and Vocabularies | Library of Congress: no description found

- benxh/us-library-of-congress-subjects · Datasets at Hugging Face: no description found

- Tweet from Tristan Bilot (@tristanbilot): We’re happy to officially release mlx-graphs, a library for running Graph Neural Networks (GNNs) efficiently on Apple Silicon. Our first benchmarks show an up to 10x training speedup on large graph ...

- Tweet from Matt Shumer (@mattshumer_): I showed Gemini 1.5 Pro the ENTIRE Self-Operating Computer codebase, and an example Gemini 1.5 API call. From there, it was able to perfectly explain how the codebase works... and then it implemente...

- Chain-of-Thought Reasoning Without Prompting: In enhancing the reasoning capabilities of large language models (LLMs), prior research primarily focuses on specific prompting techniques such as few-shot or zero-shot chain-of-thought (CoT) promptin...

- Join the hedwigAI Discord Server!: Check out the hedwigAI community on Discord - hang out with 45 other members and enjoy free voice and text chat.

- A-JEPA AI model: Unlock semantic knowledge from .wav / .mp3 file or audio spectrograms: 🌟 Unlock the Power of AI Learning from Audio ! 🔊 Watch a deep dive discussion on the A-JEPA approach with Oliver, Nevil, Ojasvita, Shashank, Srikanth and N...

- Tweet from swyx (@swyx): 🆕 The Four Wars of the AI Stack https://latent.space/p/dec-2023 Our Dec 2023 recap also includes a framework for looking at the key business battlegrounds of all of 2023: In Data: with OpenAI an...

- HuggingFaceTB/cosmo-1b · Hugging Face: no description found

- Let's build the GPT Tokenizer: The Tokenizer is a necessary and pervasive component of Large Language Models (LLMs), where it translates between strings and tokens (text chunks). Tokenizer...

- mlabonne/OmniBeagle-7B · MT-Bench Scores: no description found

Nous Research AI ▷ #announcements (2 messages):

- Introducing Nous Hermes 2:

@teknium announced the release of Nous Hermes 2 - Mistral 7B - DPO, an in-house RLHF'ed model with improved scores on benchmarks such as AGIEval, BigBench Reasoning Test, the GPT4All suite, and TruthfulQA. The model is available on Hugging Face.

- Get Your GGUFs!: Pre-made GGUF files (the llama.cpp model format) in all quantization sizes for Nous Hermes 2 are available for download from their Hugging Face repository.

- Big thanks to FluidStack:

@teknium expressed gratitude to compute sponsor FluidStack and their representative, along with shout-outs to the contributors to the Hermes project and to the open-source datasets.

- Together hosts Nous Hermes 2:

@teknium announced that Together.xyz has listed the new Nous Hermes 2 model on its API, and it is available in the Together.xyz API Playground. Thanks were extended to @1081613043655528489.
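GGUF is the single-file model format used by llama.cpp, and a quick header check is often enough to tell which of the downloaded files you have. The layout below follows our reading of the GGUF specification in the ggml repository and should be verified against the spec before relying on it:

- 4 magic bytes `GGUF`
- a uint32 version
- a uint64 tensor count
- a uint64 metadata key/value count

The sketch assumes little-endian v2/v3 files and does not parse the metadata itself. The function name is hypothetical.

```python
import struct


def read_gguf_header(path):
    """Read the fixed-size GGUF header (assumes the little-endian v2/v3 layout)."""
    with open(path, "rb") as f:
        head = f.read(24)
    if len(head) < 24 or head[:4] != b"GGUF":
        raise ValueError("not a GGUF file")
    # uint32 version, uint64 tensor count, uint64 metadata key/value count
    version, tensor_count, kv_count = struct.unpack("<IQQ", head[4:])
    if version < 2:
        raise ValueError(f"GGUF v{version} uses 32-bit counts; not handled in this sketch")
    return {"version": version, "tensor_count": tensor_count, "metadata_kv_count": kv_count}
```

Reading only the first 24 bytes avoids touching the multi-gigabyte tensor data that follows.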

Links mentioned:

- TOGETHER: no description found

- NousResearch/Nous-Hermes-2-Mistral-7B-DPO · Hugging Face: no description found

- NousResearch/Nous-Hermes-2-Mistral-7B-DPO-GGUF · Hugging Face: no description found

Nous Research AI ▷ #general (594 messages🔥🔥🔥):

- Gemma Joins the Fray: Google's release of the Gemma model caused a stir among members, who compared it to Mistral models and discussed its architecture and benchmarks.

@ldj noted that Gemma is slightly worse once parameter count is accounted for, @teknium suggested focusing on models' effects rather than their raw parameter count, and @leontello eagerly awaited MT-Bench or AlpacaEval results.

- Finetuning Frenzy:

A discussion ensued on further finetuning already-finetuned LLMs, with members such as @lee0099 and @mihai4256 sharing their experiences and strategies, including LoRA and full-parameter finetuning. There was curiosity about whether DPO (Direct Preference Optimization) could be combined with SFT (Supervised Fine-Tuning).

- Dead Social Media Concept:

@n8programs introduced a project called Deadnet, intended to create an endless stream of AI-generated social media content from fictional people. @everyoneisgross responded positively and considered expanding it with user clustering and post ranking within the imagined social media universe.

- Training Tales and Tools:

There was an exchange on various AI training tools and methods. @lee0099 and @mihai4256 discussed specifics of templates and finetuning outcomes, while @thedeviouspanda teased novel methods that merge DPO with SFT for better training results.

- Peeking into Protocol and the Pursuit of Synthetic Data:

The topic of synthetic versus organic data arose, with @everyoneisgross speculating on how internet dynamics may evolve due to AI input. @sdan.io highlighted the concept of a personal 'vector db file' for memory management across platforms, while @teknium linked a tweet suggesting that AI reasoning models may become more prevalent than natural language processing models.
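To make the DPO-plus-SFT question concrete, here is a sketch of the standard DPO (Direct Preference Optimization) loss for one preference pair. It is computed from summed log-probabilities under the policy and under a frozen reference model. The `dpo_plus_sft` function is one simple, hypothetical way to combine DPO with SFT: add a weighted, length-normalized negative log-likelihood term on the chosen response. It is not the method any member described.

```python
import math


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one pair, given summed log-probs of each response."""
    # Implicit reward margin: how much more the policy (relative to the frozen
    # reference) favors the chosen response over the rejected one.
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    return softplus(-beta * margin)  # equals -log(sigmoid(beta * margin))


def dpo_plus_sft(pi_chosen, pi_rejected, ref_chosen, ref_rejected,
                 beta=0.1, sft_weight=1.0, n_chosen_tokens=1):
    # Hypothetical combination: DPO plus a per-token NLL (SFT) term on the chosen response.
    sft = -pi_chosen / n_chosen_tokens
    return dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta) + sft_weight * sft
```

When the policy and reference agree, the margin is zero and the DPO loss is log 2. The loss falls as the policy shifts probability toward the chosen response.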

Links mentioned:

- EleutherAI/Hermes-RWKV-v5-7B · Hugging Face: no description found

- NousResearch/Nous-Hermes-2-Mistral-7B-DPO · Hugging Face: no description found

- no title found: no description found

- Models - Hugging Face: no description found

- eleutherai: Weights & Biases, developer tools for machine learning

- Tweet from Archit Sharma (@archit_sharma97): High-quality human feedback for RLHF is expensive 💰. AI feedback is emerging as a scalable alternative, but are we using AI feedback effectively? Not yet; RLAIF improves perf only when LLMs are SF...

- TOGETHER: no description found

- Tweet from Aaditya Ura (Ankit) (@aadityaura): The new Model Gemma from @GoogleDeepMind @GoogleAI does not demonstrate strong performance on medical/healthcare domain benchmarks. A side-by-side comparison of Gemma by @GoogleDeepMind and Mistral...

- The Novice's LLM Training Guide: Written by Alpin Inspired by /hdg/'s LoRA train rentry This guide is being slowly updated. We've already moved to the axolotl trainer. The Basics The Transformer architecture Training Basics Pre-train...

- no (no sai): no description found

- Adding Google's gemma Model by monk1337 · Pull Request #1312 · OpenAccess-AI-Collective/axolotl: Adding Gemma model config https://huggingface.co/google/gemma-7b Testing and working!

- Runtime error: CUDA Setup failed despite GPU being available (bitsandbytes) · Issue #1280 · OpenAccess-AI-Collective/axolotl: Please check that this issue hasn't been reported before. I searched previous Bug Reports didn't find any similar reports. Expected Behavior Hi, I'm trying the public cloud example that tr...

- Mistral-NEXT Model Fully Tested - NEW KING Of Logic!: Mistral quietly released their newest model "mistral-next." Does it outperform GPT4?Need AI Consulting? ✅ - https://forwardfuture.ai/Follow me on Twitter 🧠 ...

- laserRMT/examples/laser-dolphin-mixtral-2x7b.ipynb at main · cognitivecomputations/laserRMT: This is our own implementation of 'Layer Selective Rank Reduction' - cognitivecomputations/laserRMT

- Reddit - Dive into anything: no description found

- Neuranest/Nous-Hermes-2-Mistral-7B-DPO-BitDelta at main: no description found

- BitDelta: no description found

- GitHub - FasterDecoding/BitDelta: Contribute to FasterDecoding/BitDelta development by creating an account on GitHub.

Nous Research AI ▷ #ask-about-llms (37 messages🔥):

- Dataset Editing Dilemma:

@pncdd voiced frustration at the lack of a tool for easily editing a synthetic dataset for fine-tuning, finding it inefficient to scroll through a JSON Lines file. Neither Hugging Face datasets nor wandb Tables offered a solution. Converting to .csv for editing in Google Sheets was suggested as a possible, albeit cumbersome, workaround.

- Merge Woes with Large Models:

@iamcoming5084 reported an Out of Memory (OOM) error when attempting to merge a finetuned Mixtral 8x7B model on an H100 80GB GPU. This sparked a discussion about solutions that don't require GPUs with more VRAM, covering suggested code, Axolotl's merge functionality, and PyTorch memory handling.

- Exploring LORA for LLM Inference:

@blackl1ght discussed the purpose and benefits of LoRA (Low-Rank Adaptation) for fine-tuning, particularly its role at inference time. The discussion clarified that LoRA is a strategy for fine-tuning models with fewer GPU resources, and @dysondunbar offered insights into its use cases, limitations, and benefits.

- DeepSeek Enhanced With Magicoder:

.benxh indicated that the 6.7B variant of DeepSeek had been significantly improved using the Magicoder dataset. .benxh clarified that this fine-tune was done by the Magicoder team, not by .benxh personally.

- Custom Hosting Solutions for LLMs:

@jacobi sought advice on the best way to serve the Mixtral 8x7B model behind an OpenAI-compatible API endpoint on a 3090/4090 GPU. Members compared the effectiveness and limitations of tabbyAPI, vLLM with GPTQ/AWQ quantization, and llama.cpp's server implementation for hosting a model this large.

- Inquiry About Hermes DPO with Gemma Base:

@samin asked whether there would be a Nous-Hermes DPO model built on Google's newly released Gemma 7B base, expressing skepticism about Google's instruction tuning and linking to the Hugging Face blog announcement about Gemma.
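LoRA (Low-Rank Adaptation) freezes a weight matrix W and learns a low-rank update B·A scaled by alpha/r. Only r·(d_in + d_out) parameters are trained instead of d_in·d_out. This pure-Python sketch uses tiny hand-picked matrices and hypothetical helper names to show the two ways an adapter can be used at inference:

- as a separate side path, or
- merged into W once, which removes any per-token overhead.

Merging materializes a full-size W' for every adapted matrix. That is one plausible reason merging a large model such as Mixtral 8x7B can run out of memory, though the thread did not settle the cause.

```python
def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matvec(m, v):
    return [sum(x * y for x, y in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x), with W frozen and only A, B trained."""
    scale = alpha / r
    update = matvec(B, matvec(A, x))  # d_in -> r -> d_out
    return [h + scale * u for h, u in zip(matvec(W, x), update)]


def merge_lora(W, A, B, alpha, r):
    """Fold the adapter into the base weights: W' = W + (alpha / r) * B A."""
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, matmul(B, A))]


# Tiny example: d_out = 2, d_in = 3, rank r = 1.
W = [[1, 0, 0], [0, 1, 0]]
A = [[1, 2, 3]]
B = [[0.5], [-1]]
x = [1, 1, 1]
print(lora_forward(W, A, B, x, alpha=2, r=1))
print(matvec(merge_lora(W, A, B, alpha=2, r=1), x))
```

Both paths give the same output, [7.0, -11.0], so merging changes only how the computation is laid out, not its result.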

Links mentioned:

- no title found: no description found

- Welcome Gemma - Google’s new open LLM: no description found

- Reddit - Dive into anything: no description found

Nous Research AI ▷ #collective-cognition (3 messages):

- Heroku Faces Criticism:

@bfpill expressed frustration with Heroku, simply stating, "screw heroku".

- Neutral Response to Criticism:

@adjectiveallison responded to @bfpill's Heroku comment with "I don't think that's the point but sure", signaling a desire to move past the issue.

- Consensus on Heroku Sentiment:

Following the response from @adjectiveallison, @bfpill reaffirmed their frustration with Heroku.

Nous Research AI ▷ #project-obsidian (3 messages):

- Project Delay Due to Pet Illness: