[AINews] Genesis: Generative Physics Engine for Robotics (o1-2024-12-17)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

A universal physics engine is all you need.

AI News for 12/17/2024-12/18/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (215 channels, and 4542 messages) for you. Estimated reading time saved (at 200wpm): 497 minutes. You can now tag @smol_ai for AINews discussions!

You are reading AINews generated by o1-2024-12-17. As is tradition on new frontier model days, we try to publish multiple issues for A/B testing/self evaluation. Check our archives for the o1-mini version. We are sorry for the repeat sends yesterday (platform bug) but today's is on purpose.

December has been the month of Generative Video World Simulators apparently, with Sora Turbo going GA, both Genie 2 and Veo 2 getting teased by Google. Now, a group of academics led by CMU PhD student Zhou Xian have announced Genesis: A Generative and Universal Physics Engine for Robotics and Beyond, a 2 year large scale research collaboration involving over 20 labs, debuting with a drop of water rolling down a Heineken bottle:

Because it is a physics engine, it can render the same engine from different camera angles:

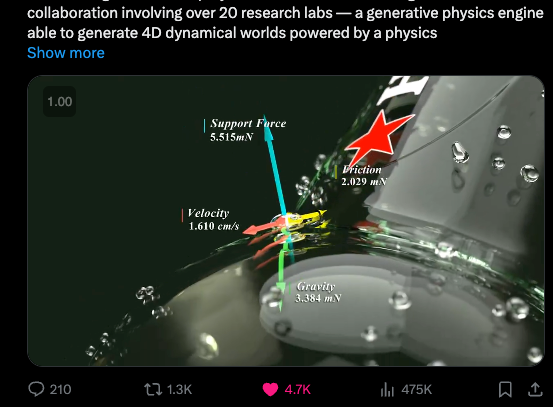

as well as expose the driving vectors:

The "unified physics engine" integrates various SOTA physics solvers (MPM, SPH, FEM, Rigid Body, PBD, etc.), supporting simulation of a wide range of materials: rigid body, articulated body, Cloth, Liquid, Smoke, Deformables, Thin-shell materials, Elastic/Plastic Body, Robot Muscles, etc.

Rendering consistent objects is immediately useful today, but does not sound like the "purist" bitter pilled approach taken by the big labs - being a pile of physics solvers manually put together rather than machine learned through data - but it does have the advantage of being open source and usable today (no paper yet).

If the purpose were video generation, this would already be impressive, but the real goal is robotics. Genesis is really a platform for 4 things:

- A universal physics engine re-built from the ground up, capable of simulating a wide range of materials and physical phenomena.

- A lightweight, ultra-fast, pythonic, and user-friendly robotics simulation platform.

- A powerful and fast photo-realistic rendering system.

- A generative data engine that transforms user-prompted natural language description into various modalities of data.

and it should be the robotics applications that should really shine.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Here are the key discussions organized by topic:

OpenAI o1 API Launch and Features

- o1 model released to API with function calling, structured outputs, vision support, and developer messages. Model uses 60% fewer reasoning tokens than o1-preview and includes a new "reasoning_effort" parameter.

- Performance Benchmarks: @aidan_mclau noted o1 is "insanely good at math/code" but "mid at everything else". Benchmark results show o1 scoring 0.76 on LiveBench Coding, compared to Sonnet 3.5's 0.67.

- New SDKs: Released beta SDKs for Go and Java. Also added WebRTC support for realtime API with 60% lower prices.

Google Gemini Updates

- @sundarpichai confirmed that Gemini Exp 1206 is Gemini 2.0 Pro, showing improved performance on coding, math and reasoning tasks.

- Gemini 2.0 deployment accelerated for Advanced users in response to feedback.

Model Development & Architecture

- Discussion around model sizes and training - debate about whether o1-preview's size matches o1 and relationship to GPT-4o.

- Meta's new research on training transformers directly on raw bytes using dynamic patching based on entropy.

Industry & Business

- @adcock_brett reported successful deployment of commercial humanoid robots at client site with rapid transfer from HQ.

- New LlamaReport tool announced for converting document databases into human-readable reports using LLMs.

Memes & Humor

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Hugging Face's 3B Llama Model: Outperforming the 70B with Search

- Hugging Face researchers got 3b Llama to outperform 70b using search (Score: 668, Comments: 123): Hugging Face researchers achieved a breakthrough by making the 3B Llama model outperform the 70B Llama model in MATH-500 accuracy using search techniques. The graph demonstrates that the 3B model surpasses the 70B model under certain conditions, with accuracy measured across generations per problem, highlighting the model's potential efficiency and effectiveness compared to larger models.

- Inference Time and Model Size Optimization: Users discuss the potential of finding an optimal balance between inference time and model size, suggesting that smaller models can be more efficient if they perform adequately on specific tasks, especially when the knowledge is embedded in prompts or fine-tuned for particular domains.

- Reproducibility and Dataset References: Concerns are raised about the reproducibility of the results due to the non-publication of the Diverse Verifier Tree Search (DVTS) model, with a link provided to the dataset used (Hugging Face Dataset) and the DVTS implementation (GitHub).

- Domain-Specific Limitations: There is skepticism about the applicability of the method outside math and code domains due to the lack of PRMs trained on other domains and datasets with step-by-step labeling, questioning the generalizability of the approach.

Theme 2. Moonshine Web: Faster, More Accurate than Whisper

- Moonshine Web: Real-time in-browser speech recognition that's faster and more accurate than Whisper (Score: 193, Comments: 25): Moonshine Web claims to provide real-time in-browser speech recognition that is both faster and more accurate than Whisper.

- Moonshine Web is open source under the MIT license, with ongoing efforts to integrate it into transformers as seen in this PR. The ONNX models are available on the Hugging Face Hub, although there are concerns about the opacity of the ONNX web runtime.

- Discussion highlights include skepticism about the real-time capabilities and accuracy claims of Moonshine compared to Whisper models, specifically v3 large. Users are curious about the model's ability to perform speaker diarization and its current limitation to English only.

- Moonshine is optimized for real-time, on-device applications, with support added in Transformers.js v3.2. The demo source code and online demo are available for testing and exploration.

Theme 3. Granite 3.1 Language Models: 128k Context & Open License

- Granite 3.1 Language Models: 128k context length & Apache 2.0 (Score: 144, Comments: 22): Granite 3.1 Language Models now feature a 128k context length and are available under the Apache 2.0 license, indicating significant advancements in processing larger datasets and accessibility for developers.

- Granite Model Performance: The Granite 3.1 3B MoE model is reported to have a higher average score on the Open LLM Leaderboard than the Falcon 3 1B, contradicting claims that MoE models perform similarly to dense models with equivalent active parameters. This is despite having 20% fewer active parameters than its competitors.

- Model Specifications and Licensing: The Granite dense models (2B and 8B) and MoE models (1B and 3B) are trained on over 12 trillion and 10 trillion tokens, respectively, with the dense models supporting tool-based use cases and the MoE models designed for low latency applications. The models are released under the Apache 2.0 license, with the 8B model noted for its performance in code generation and translation tasks.

- Community Insights and Comparisons: The Granite Code models are praised for their underrated performance, particularly the Granite 8BCode model, which competes with the Qwen2.5 Coder 7B. Discussions also highlight the potential for MoE models to facilitate various retrieval strategies and the importance of familiar enterprise solutions like Red Hat's integration of Granite models.

Theme 4. Moxin LLM 7B: A Fully Open-Source AI Model

- Moxin LLM 7B: A fully open-source LLM - Base and Chat + GGUF (Score: 131, Comments: 5): Moxin LLM 7B is a fully open-source large language model trained on text and coding data from SlimPajama, DCLM-BASELINE, and the-stack-dedup, achieving superior zero-shot performance compared to other 7B models. It features a 32k context size, supports long-context processing with grouped-query attention, sliding window attention, and a Rolling Buffer Cache, with comprehensive access to all development resources available on GitHub and Hugging Face.

- Moxin LLM 7B is praised for being an excellent resource for model training, with its clean and accessible code and dataset, as noted by Stepfunction. The model's comprehensive development resources are highlighted as a significant advantage.

- TheActualStudy commends the model for integrating Qwen-level context, Gemma-level tech, and Mistral-7B-v0.1 performance. This combination of advanced methods and data is regarded as impressive.

- Many_SuchCases mentions exploring the GitHub repository and notes the absence of some components like intermediate checkpoints, suggesting that these might be uploaded later.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Imagen v2 Quality Elevates Image Generation Benchmark

- New Imagen v2 is insane (Score: 680, Comments: 119): Imagen 3 is establishing new benchmarks in image quality with its release, referred to as Imagen v2. The post highlights the impressive advancements in the technology without providing additional context or details.

- Access and Usage: Users discuss accessing Imagen 3 through the Google Labs website, suggesting the use of VPNs for regions with restrictions. There is a mention of free access with some daily usage quotas on labs.google/fx/tools/image-fx.

- Artistic Concerns: There is significant concern among artists about Imagen 3's impact on the art industry, with fears of reduced need for human artists and the overshadowing of traditional art by AI-generated images. Some users express the belief that this shift may lead to the privatization of creative domains and the erosion of artistic labor.

- Model Confusion and Improvements: Some confusion exists regarding the naming and versioning of Imagen 3, with users clarifying it as Imagen3 v2. Users note significant improvements in image quality, with early testers expressing satisfaction with the results compared to previous versions.

Theme 2. NotebookLM's Conversational Podcast Revolution

- OpenAI should make their own NotebookLM application, it's mindblowing! (Score: 299, Comments: 75): NotebookLM produces highly natural-sounding AI-generated podcasts, surpassing even Huberman's podcast in conversational quality. The post suggests that OpenAI should develop a similar application, as it could significantly impact the field.

- NotebookLM's voice quality is praised but still considered less natural compared to human hosts, with Gemini 2.0 offering live chat capabilities with podcast hosts, enhancing its appeal. Users note issues with feature integration across different platforms, highlighting limitations in using advanced voice modes and custom projects.

- The value of conversational AI for tasks like summarizing PDFs is debated, with some seeing it as revolutionary in terms of time savings and adult learning theory, while others find the content shallow and lacking depth. The Gemini model is noted for its large context window, making it well-suited for handling extensive information.

- Google's hardware advantage is emphasized, with their investment in infrastructure and energy solutions allowing them to offer more cost-effective AI models compared to OpenAI. This positions Google to potentially outperform OpenAI in the podcast AI space, leveraging their hardware capabilities to reduce costs significantly.

Theme 3. Gemini 2.0 Surpass Others in Academic Writing

- Gemini 2.0 Advanced is insanely good for academic writing. (Score: 166, Comments: 39): Gemini 2.0 Advanced excels in academic writing, offering superior understanding, structure, and style compared to other models, including ChatGPT. The author considers switching to Gemini 2.0 until OpenAI releases an improved version.

- Gemini 2.0 Advanced is identified as Gemini Experimental 1206 on AI Studio and is currently available without a paid version, though users exchange data for access. The naming conventions and lack of a central AI service from Google cause some confusion among users.

- Gemini 2.0 Advanced demonstrates significant improvements in academic writing quality, outperforming GPT-4o and Claude in evaluations. It provides detailed feedback, often critiquing responses with humor, which users find both effective and entertaining.

- Users discuss the availability of Gemini 2.0 Advanced through subscriptions, with some confusion over its listing as "2.0 Experimental Advanced, Preview gemini-exp-1206" in the Gemini web app. The model's performance in academic contexts is praised, with users expressing hope that it will push OpenAI to address issues in ChatGPT.

Theme 4. Veo 2 Challenges Sora with Realistic Video Generation

- Google is challenging OpenAl's Sora with the newest version of its video generation model, Veo 2, which it says makes more realistic-looking videos. (Score: 124, Comments: 34): Google is competing with OpenAI's Sora by releasing Veo 2, a new version of its video generation model that claims to produce more realistic videos.

- Veo 2's Availability and Performance: Several commenters highlight that Veo 2 is still in early testing and not widely available, which contrasts with claims of its release. Despite this, some testers on platforms like Twitter report impressive results, particularly in areas like physics and consistency, outperforming Sora.

- Market Strategy and Accessibility: There is skepticism about the release being a marketing strategy to counter OpenAI. Concerns about the lack of public access and API availability for both Veo 2 and Sora are prevalent, with a noted confirmation of a January release on aistudio.

- Trust in Video Authenticity: The discussion touches on the potential erosion of trust in video authenticity due to advanced generation models like Veo 2. Some propose solutions like personal AIs for verifying media authenticity through blockchain registers to address this issue.

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. Challenges in AI Extensions and Projects

- Codeium Extension Breaks Briefly in VSCode: The extension only displays autocomplete suggestions for a split second, making it unusable. Reverting to version 1.24.8 restores proper functionality, according to multiple user reports.

- Windsurf Performance Crumbles Under Heavy Load: Some users experience over 10-minute load times and sporadic “disappearing code” or broken Cascade functionality. Filing support tickets is the top recommendation until a stable fix arrives.

- Bolt Users Cry Foul Over Wasted Tokens: They jokingly proposed a “punch the AI” button after receiving irrelevant responses that deplete credits. Many called for improved memory controls in upcoming releases.

Theme 2. New and Upgraded Models

- OpenAI o1 Dazzles With Function Calling: This successor to o1-preview introduces a new “reasoning_effort” parameter to control how long it thinks before replying. It also features noticeably lower latency through OpenRouter.

- EVA Llama Emerges as a Storytelling Specialist: Targeted at roleplay and narrative tasks, it reportedly excels at multi-step storytelling. Early adopters praise its creative outputs and user-friendly design.

- Major Price Cuts on Fan-Favorite Models: MythoMax 13B dropped by 12.5% and the QwQ reasoning model plunged 55%. These discounts aim to widen community access for experimentation.

Theme 3. GPU & Inference Pitfalls

- AMD Driver Updates Slash Performance: Users saw tokens-per-second plummet from 90+ to around 20 when upgrading from driver 24.10.1 to 24.12.1. Rolling back fixes the slowdown, reinforcing caution with fresh GPU driver releases.

- Stable Diffusion on Ubuntu Hits Snags: Tools like ComfyUI or Forge UI often demand in-depth Linux know-how to fix compatibility issues. Many still recommend an NVIDIA 3060 with 16GB VRAM as a smoother baseline.

- TinyGrad, Torch, and CUDA Memory Confusion: Removing checks like IsDense(y) && IsSame(x, y) solved unexpected inference failures, but introduced new complexities. This led developers to reference official CUDA Graphs discussions for potential solutions.

Theme 4. Advanced Fine-Tuning & RAG Techniques

- Fine-Tuning Llama 3.2 With 4-bit Conversions: Many rely on load_in_4bit=true to balance VRAM usage and model accuracy. Checkpoints can be reused, and resource constraints are minimized through partial-precision settings.

- Depth AI Indexes Codebases at Scale: It attains 99% accuracy answering technical queries, though indexing 180k tokens may take 40 minutes. Rival solutions like LightRAG exist, but Depth AI is praised for simpler setup.

- Gemini 2.0 Adds Google Search Grounding: A new configuration allows real-time web lookups to refine answers. Early reviews highlight improved factual precision in coding and Q&A scenarios.

Theme 5. NotebookLM and Agentic Workflows

- NotebookLM Revamps Its 3-Panel UI: The update removed “suggested actions” due to low usage, but developers promise to reintroduce similar features with better design. Plans include boosted “citations” and “response accuracy” based on user feedback.

- Multilingual Prompts Spark Wide Engagement: Users tried Brazilian Portuguese and Bangla queries, discovering that explicitly telling NotebookLM the language context makes interactions more fluid. This showcases its capability for inclusive global communication.

- Controlling Podcast Length Remains Elusive: Even with time specifications in prompts, final outputs often exceed or ignore constraints. Most rely on flexible length ranges to strike a balance between deep coverage and listener engagement.

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

-

Codeium Conundrums Continue: Users flagged trouble with Codeium extensions, like disappearing autocomplete and unwanted charges after cancellations, directing folks to codeium.com/support.

- Fans of Elementor wondered if Codeium could produce JSON or CSS code, and flex credits drew lingering questions about usage and rollover.

- Windsurf Woes Worsen: Many reported Windsurf bogging down their laptops for ten minutes or more, with recurring errors shaking confidence in the latest release.

- Some considered downgrading while referencing feature requests like Windsurf Focus Follows Mouse to address performance hiccups.

- Codeium vs Copilot Clash: Debates focused on whether Codeium still has an edge as Copilot opens a free tier, with speculation about GPT-based services hitting capacity issues.

- Advocates insisted Codeium’s autocomplete is still strong, hinting that Copilot’s free tier could spur widespread usage concerns for Claude and GPT.

- Cascade’s Auto-Approval Annoyance: Some devs criticized Cascade for automatically approving code changes, blocking thorough reviews for critical merges.

- Discussion centered on improving review workflows, as people pushed for better output checks to avoid unvetted merges.

- Llama Model & Free AI Tools Talk: Benchmarks of Llama 3.3 and 4o-mini stirred interest, with claims that smaller variants can stand toe-to-toe with bigger models.

Cursor IDE Discord

-

Cursor 0.44.2 Quiets Bugs: The updated Cursor v0.44.2 emerged after a rollback from 0.44, addressing composer quirks and terminal text issues as seen in the changelog.

- Members described better stability with devcontainers, referencing a cautionary forum post, which originally flagged disruptions in version 0.44.

- Kepler Browser Emphasizes Privacy: A Python-built browser named Kepler promises minimal server reliance and user control, spotlighted in the community repo.

- It implements randomized user agents and invites open-source contributions to bolster security.

- UV Tool Streamlines Python: Community discussions revealed the UV tool for Python environment management, with robust version handling as shown in its docs.

- It simplifies project dependencies and integrates with other resources like Poetry and the Python Environment Manager extension.

- O1 Pro Steals the Show: The O1 Pro feature resolved a user’s persistent bug within 20 attempts, illustrating improved performance in tricky scenarios.

- However, consistency issues emerged in chat output formatting, suggesting more refinements to come.

- Galileo API Access Stalls: Inquiries about the Galileo API and models like Gemini 2.0 within Cursor revealed limited availability, prompting curiosity from the developer crowd.

- They seek official integration timelines, especially within Cursor’s platform.

aider (Paul Gauthier) Discord

-

O1 Onslaught & Ties with Sonnet: OpenAI rolled out the O1 API to Tier 5 key holders, but some reported lacking access, causing excitement and frustration over its reasoning abilities.

- Several users showed O1 scoring 84.2 in tests, tying with Sonnet, and debated pricing and performance differences between O1 Pro and standard versions.

- Competition Among AI Models: Members compared Google's Veo 2 with existing contenders like Sonnet, evaluating output quality and utility for coding tasks.

- They also raised concerns about rising subscription fees and sustainability of multiple model plans.

- Support and Refunds Roll the Dice: Community members reported inconsistent refund timelines, with some waiting 4 months while others got a refund in hours.

- This led to skepticism about the overall responsiveness of customer support.

- Gemini Gains Credibility as an Editor: Enthusiasts highlighted gemini/gemini-exp-1206 for minimal coding errors and strong synergy with Aider in practical scenarios.

- Still, they acknowledged Gemini’s limits compared to O1, suggesting further testing for advanced use cases.

- Depth AI and LightRAG for Codebase Insights: Participants praised Depth AI for deeper code indexing accuracy nearing 99%, with moderate resource usage.

- Though LightRAG was mentioned as an alternative, some observed 'no output' glitches from Depth AI and questioned its consistency.

OpenAI Discord

-

OpenAI’s 12 Days & the Surprising Dial-a-Bot: OpenAI is celebrating the 12 Days of OpenAI with a Day 10 highlight reel and a bold new phone feature at 1-800-chatgpt.

- Some find the call-in service questionable for advanced tasks, yet it might help older folks or complete beginners.

- Gemini Gains Ground in AI Rivalry: Gemini is rumored to outpace OpenAI, fueling talk of an intensifying AI competition.

- Skeptics imply OpenAI may be holding back features to deploy them only when absolutely needed, stirring more buzz.

- AI Model Safety Sparks Heated Debate: Participants wondered if humans alone can tackle AI safety without advanced AI support, citing the AlignAGI repo.

- They wrestled with balancing censorship and creative expression, warning of possible unintended extremes on both sides.

- DALL-E Takes a Hit vs Midjourney & Imagen: Some claim DALL-E struggles in realism against Midjourney and Imagen, blaming restrictive design choices.

- Critics note that 'Midjourney brags too much' while others insist 'DALL-E needs more freedom' to shine.

- GPT Manager Training & Editing Headaches: Enthusiasts tried GPT as a manager, hoping for streamlined tasks, but found it only moderately effective.

- Others vented frustration about editing limitations on custom GPTs, pushing for more user control.

Nous Research AI Discord

-

Prompt Chaining for Snappier Prototypes: Contributors noted how prompt chaining connects outputs from one model to the prompt of another, improving multi-stage workflows and advanced agentic designs via Langflow. They cited speedier iteration and more structured AI responses as key benefits, showcasing better synergy across small-scale models.

- Several members considered this chaining approach vital for test-driving ideas quickly, praising how it eliminates cumbersome manual orchestration as they refine new AI prototypes.

- Falcon 3 Gains Momentum: Members highlighted Falcon3-7B on Hugging Face as a fresh release with improved tool-call handling and scalable performance across 7B to 40B parameters. Eager testers discussed potential for simulation and inference tasks, citing interest in how well it handles real-world usage on local hardware.

- They also noted upcoming improvements across larger Falcon variants, referencing user feedback on HPC deployments for more robust model experiments.

- Local Function Calls for Nimble Models: Participants weighed function calling techniques for smaller local models, comparing libraries that parse structured outputs effectively. They sought flexible setups for personalized tasks without reliance on massive cloud solutions.

- Some pointed to Gemini as an example of offloading searches to real data sources, proposing a hybrid approach instead of solely depending on chatbots for recollection.

- Hermes 3 405B Repetitive Quirks: A user reported Hermes 3 405B echoing prompts verbatim despite instructions to avoid it, complicating conversation flow. They compared it against gpt-4o, noting more concise compliance and fewer repetition issues in the latter’s behavior.

- Community members tried specialized prompting strategies to curb repetition, emphasizing the importance of iterative fine-tuning for reliable high-parameter models.

- Signal vs Noise & Consistent LLM Outputs: One discussion underscored the ratio of signal vs noise in AI inference, proposing it as a cornerstone for coherent thinking. Community comparisons drew parallels to how the human brain filters irrelevant input, linking clarity to better model outputs.

- A member also requested the best papers on maintaining output consistency in extended AI responses, hinting at ongoing interest in sustained reliability for lengthy text generation.

Notebook LM Discord Discord

-

Three-Panel Tweak Tones Up NotebookLM: The new 3-panel UI no longer includes 'suggested actions' such as 'Explain' and 'Critique,' addressing the feature's limited usage.

- For now, users rely on copying text from sources into chat, while devs plan to restore missing functionalities in a more intuitive way.

- Citations & Notes Panel Shuffle: NotebookLM's recent build removed embedded citations from notes, prompting requests for their return.

- Users also discovered they can't combine selected notes easily, pushing them to pick either all or one at a time.

- Podcast Length Control Gets Testy: Attempts to set shorter audio segments in NotebookLM often fail, as the AI apparently ignores length instructions.

- One idea is splitting content into smaller files, while the blog announcement hints future improvements.

- Multilingual Mux for Gaming & Chat: Gamers praised NotebookLM for simplifying complex rules through retrieval-augmented queries, while some tested multilingual chat for interactive podcasts.

- They shared links like Starlings One on YouTube for broader usage, also warning about superficial AI podcasts called 'AI slop.'

- Sharing Notebooks and Space Oddities: Users want to share NotebookLM projects outside their organizations, raising the question of external access and family-friendly features.

- Meanwhile, an AI video about isolation in space asked 'Would you survive?', illustrating NotebookLM's potential for broader audio-visual usage.

Unsloth AI (Daniel Han) Discord

-

Llama 3.2 Loss Mystery: Trainers encountered a puzzling higher loss (5.1→1.5) with the Llama template vs (1.9→0.1) using Alpaca for the 1bn instruct model.

- One user wondered if incorrect prompt style caused the discrepancy, with further questions on merging new datasets for repeated fine-tuning.

- QwQ Reasoning Model Quarrel: Members tested open-source model QwQ but noted it defaulted to instruct behavior when math prompts were omitted.

- Some claimed RLHF was essential to sharpen reasoning, while others argued SFT alone could build advanced logic capabilities.

- Multi-GPU & M4 MAX Mac Matters: Users verified that Unsloth supports multi-GPU usage across platforms, though some struggled with installing it on M4 MAX GPUs.

- Developers plan to add official support around Q2 2025, suggesting Google Colab and community contributions as interim solutions.

- LoRA Gains & Merged Minimization: Participants clarified that LoRA adapters tweak fewer parameters and merge with base models for smaller final files.

- They noted that typical merges produce compact LoRA outputs, curbing VRAM usage without hurting training performance.

- DiLoCo’s Distributed Dev: Community members showcased their DiLoCo research presentations for low-communication training of large language models.

- They highlighted continuing work on open-source frameworks and linked the DiLoCo arXiv paper, encouraging collaboration.

OpenRouter (Alex Atallah) Discord

-

OpenAI's O1 Powers Up: OpenRouter rolled out the new O1 model with function calling, structured outputs, and a revamped

reasoning_effortparameter, detailed at openrouter.ai/openai/o1.- Users can find a tutorial on structured outputs at this link, and explore challenges in the Chatroom to test the model's thinking prowess.

- EVA Llama Arrives: OpenRouter added EVA Llama 3.33 70b, a storytelling and roleplay specialist, expanding its line-up of advanced models at this link.

- This model focuses on narrative generation, boosting the platform’s range of creative interactions.

- Price Slashes Spark Joy: The gryphe/mythomax-l2-13b model now boasts a 12.5% lower price, easing experimentation for enthusiasts.

- Meanwhile, the QwQ reasoning model's cost plunged by 55%, encouraging more users to push its boundaries.

- Keys Exposed, Support Alert: A user discovered exposed OpenRouter keys on GitHub and was advised to contact support for immediate assistance, avoiding direct email submission of the compromised tokens.

- Others noted that only metadata is retained after calls, prompting solutions like proxy-based logging if more detailed tracking is needed.

- Google Key Fees & Reasoning Hiccups: Community members confirmed a 5% service fee when linking personal Google AI keys to OpenRouter, applying to credit usage as well.

- Meanwhile, QwQ struggles with strict instruction formats, though OpenAI's 'developer' role may eventually bolster compliance in reasoning-focused models.

Interconnects (Nathan Lambert) Discord

-

Gemini Gains Ground: During Google Shipmas, Gemini 2.0 drew attention with demos like Astra and Mariner, as Jeff Dean confirmed progress on Gemini-exp-1206.

- Community feedback admired its multimodal feats but flagged rate-limit issues in Gemini Exp 1206.

- Copilot’s Complimentary Code: GitHub announced a free tier for Copilot with 2,000 code completions and 50 chat messages monthly, referencing this tweet.

- Members praised the addition of Claude 3.5 Sonnet and GPT-4o models while forecasting a developer surge to GitHub’s now 150M+ user base.

- Microsoft Mulls Anthropic Move: Microsoft is rumored to invest in Anthropic at a $59B valuation, as indicated by Dylan Patel.

- They aim to incorporate Claude while juggling ties with OpenAI, prompting community chatter on this delicate partnership.

- Qwen 2.5 Tulu Teaser: Qwen 2.5 7B Tulu 3 is expected to surpass Olmo, featuring improved licensing and teased with "more crazy RL stuff" in the pipeline.

- Team members compare repeated RL runs to "souping," highlighting surprising positive results that stoke hype for the upcoming release.

- RL Emergent Surprises: Experiments with LLM agents in the 'Donor Game' pointed to cooperation differences that hinge on the base model, as noted by Edward Hughes.

- Subsequent updates on RLVR training drew attention to self-correction behavior in outcome-based rewards, prompting renewed interest in repeated RL runs.

Eleuther Discord

-

Retail Roundup: E-commerce Tools Eye Runway: Members discussed using Runway, OpenAI Sora, and Veo 2 to produce ad content for retail in both video and copy formats, seeking next-level solutions to stand out.

- They invited more suggestions to refine marketing approaches without rehashing old tactics.

- Koopman Kommotion: Are We Spinning Our Wheels?: A paper on Koopman operator theory in neural networks stirred debate about whether it truly adds new insights or repackages residual connections.

- Some argued it lacks practical value, while others insisted the approach might complement network analysis with advanced linear operator techniques.

- The Emergent Label: Real or Hype?: Community members pored over Are Emergent Abilities of Large Language Models a Mirage? to question if these abilities reflect major leaps or mere evaluation artifacts.

- This skepticism extended to assumptions that simply scaling large models solves core limitations, pointing to deeper unresolved theoretical gaps.

- Iterate to Compress: Cheaper Surrogates & OATS: Engineers discussed iterated function approaches for model compression and pointed to the OATS pruning technique as a path to reduce size without sacrificing advanced behaviors.

- They also floated strategies for cheap surrogate layers, though some worried about error buildup when chaining approximations.

- WANDB Logging & Non-Param Norm: What's Next?: Developers requested direct logging of MFU and throughput metrics to WANDB, hinting at soon-to-arrive features in GPT-NeoX's logging code.

- They also anticipate a non-parametric layernorm pull request in coming days, broadening experimentation options for GPT-NeoX.

Stability.ai (Stable Diffusion) Discord

-

LoRA Lore: Training Triumphs: Members emphasized collecting a strong dataset before training a LoRA, along with thorough testing and iteration for quality assurance.

- They highlighted the importance of dataset curation strategies, suggesting research into specialized resources to refine training success.

- Stable Diffusion Smorgasbord: Beginners were advised to try InvokeAI for its straightforward workflow, while ComfyUI and Forge UI were touted for modular functionality.

- Links to models on Civitai and a GitHub script for stable-diffusion-webui-forge were shared, along with tips for leveraging them effectively.

- Quantum Quibbles vs Classical Crunch: Certain members mentioned breakthroughs in quantum computing, while noting that practical deployment is still distant.

- Concerns arose around future warfare scenarios and major leaps in computational capabilities driven by quantum advancements.

- GPU Gains & FP8 Tuning: Optimizing VRAM usage was a hot tip, especially employing FP8 mode on a 3060 GPU for speed and memory efficiency.

- Monitoring GPU memory usage during image generation was advised to avoid unexpected slowdowns or crashes.

- AI Video Visions & Limitations: Participants agreed that while AI-generated images have progressed significantly, video output still has room to improve.

- Practical timelines for seamless AI video were acknowledged, with references to tools like static FFmpeg binaries for macOS to refine post-generation processing.

Perplexity AI Discord

-

Spaces Shakeup: Custom Web Sources: Perplexity introduced Custom Web Sources in Spaces, allowing users to pick favored sites for more specialized results. A short launch video showcases streamlined setup for these sources.

- Community members highlighted the potential for advanced tasks, mentioning how customizing Perplexity suits intense engineering demands.

- Gift a Pro: Subscriptions & Rate Limits: Users praised the Perplexity Pro gift subscriptions for added sources and AI models, with a tweet from Perplexity Supply advertising the offer. They also expressed concern about hitting request caps, suspecting higher tiers might solve those constraints.

- Some advocated a fun snowfall effect in the UI, while others dismissed it as too distracting for hardcore usage.

- Meta Locks Horns with OpenAI: Meta wants to halt OpenAI's for-profit ventures, sparking debate over the ethics of monetized AI. Community chatter questioned if corporate priorities might overshadow open research ideals.

- Others cited earlier showdowns, framing this as a defining moment for unbarred development versus revenue-driven models.

- Cells That Refuse to Die: Research implies cells can be brought back to function after death, unsettling the idea of a final cellular shutdown. A video explanation fueled talk about possible breakthroughs in acute medical treatments.

- Forum discussions also touched on a microbial threat warning, urging close scrutiny of health implications and future prevention.

- Botanical Tears and Dopamine Gears: New findings claim plants might exhibit stress cues resembling crying, challenging older views on plant communication. Talk of dopamine precursor exploration surfaced, hinting at refined strategies for mental health interventions.

- Participants wondered about broader impacts, referencing how these biological insights could shape research trajectories.

GPU MODE Discord

-

MatX Skips Right to Silicon: MatX announced an LLM accelerator ASIC designed to boost AI performance, and they are actively hiring specialists in low level compute kernels, compiler development, and ML performance engineering as shown at MatX Jobs.

- They highlighted the potential to reshape next-gen inference and training, drawing interest from engineers curious about on-chip performance gains.

- Pi 5 Pushes a 1.5B Parameter Punch: A Raspberry Pi 5 overclocked to 2.8GHz with 256GB NVMe is running 1.5B-parameter models via Ollama and OpenBLAS, showcasing local LLM deployment on an edge device.

- Community members appreciated the practical approach, noting the Pi 5 could host smaller specialized models without large-scale GPU resources.

- CoT Gains Vision & Depth: A team explored custom vision encoder integration for small-scale images and discussed expanding Chain of Thought to refine inference with deeper iterative steps.

- They planned a Proof of Concept to embed inner reasoning into LLMs, aiming for better context handling and solution accuracy.

- Beefy int4group with Tinygemm: Engineers described using int4 weights and fp16 activations in an int4group scheme, letting the matmul kernel handle on-the-fly dequantization.

- They confirmed no activation quantization is done during training, leveraging bf16 computations to ensure consistent performance.

- A100 vs H100 Face Off: Users noticed a 0.3% difference in training loss when comparing A100 and H100, raising questions about potential hardware-specific variability.

- They debated whether Automatic Mixed Precision (AMP) or GPU architecture subtleties caused the gap, underscoring the need for deeper analysis.

LM Studio Discord

-

LM Studio Beta Surprises: Users tested LM Studio with Llama 3.2-11B-Vision-Instruct-4bit and ran into architecture errors like unknown model architecture mllama.

- Some overcame hassles by checking LM Studio Beta Releases, noting corrupt downloads and heavy models working for certain users.

- Roleplay LLM Gains Momentum: A user requested pointers on configuring a roleplay LLM, prompting the community to share advanced usage tips.

- They advertised separate channels for deep-dive discussions, emphasizing memory constraints for extended sessions.

- GPU Confusion and Driver Dilemmas: Members questioned if a 3060 Ti 11GB really existed or if it was a 12GB 3060, igniting a debate on GPU details.

- Meanwhile, the Radeon VII suffered from driver 24.12.1 issues causing 100% GPU usage without power draw, forcing a return to 24.10.1.

- Inference Overheads and Mac Aspirations: Enthusiasts realized a 70B Llama model might need 70GB total memory at q8 quantization, spanning VRAM and system RAM.

- One user joked that owning an M2 MacBook Air fueled a craving for a future MBP M4, pointing to the high cost of powerful setups.

Stackblitz (Bolt.new) Discord

-

Supabase Switch from Firebase: Members discussed migrating an entire site from Firebase to Supabase, encountering style conflicts with create-mf-app and Bootstrap usage.

- One user noted that the frameworks overlapped in configuration, and they hinted at refining the migration steps soon.

- Bolt Pilot GPT Blares for Beta: A member introduced the Bolt Pilot GPT for ChatGPT and suggested exploring stackblitz-labs/bolt.diy for relevant code samples.

- They showcased optimism for multi-tenant capabilities and invited community feedback to guide future updates.

- Token Tangle & Bolt Blues: Multiple members complained about Bolt consuming tokens on placeholder prompts, urging a reset feature and possible holiday discounts during Office Hours.

- Some users spent significant sums on tokens with lackluster results, provoking interest in collaboration and pooling resources.

Cohere Discord

-

Massive Rate-Limits on Multimodal Embeds: Cohere soared from 40 images/min to 400 images/min on production keys for the Multimodal Image Embed endpoint, with trial keys fixed at 5 images/min.

- They encouraged usage with the updated API Keys and spelled out more details in their docs.

- Maya Commands for Local Model Gains: Developers explored bridging a local model with Maya by issuing command-r-plus-08-2024, enabling the model to handle image paths alongside queries.

- They also added tool use in base models for better image analysis, prompting talk on advanced pipeline setups.

- Cohere Toolkit Battles AWS Stream Errors: A successful Cohere Toolkit deployment on AWS faced an intermittent stream ended unexpectedly warning, breaking chat compatibility.

- Users examined docker logs to diagnose the random glitch, hoping to pinpoint root causes.

- Structured Outputs Showcase & Reranker Riddles: People tested Cohere's Structured Outputs with

strict_tools, refining prompt parameters for precise JSON responses.

- Meanwhile, a RAG-based PDF system stumbled with the Cohere Reranker ignoring relevant chunks, but occasionally nailing the right content.

- Findr Debuts as Infinite Brain: Findr went live on Product Hunt, offering an endless memory vault to store and access notes.

- Enthusiasts cheered the launch, praising the concept of a searchable digital brain for better retention.

Modular (Mojo 🔥) Discord

-

Mojo & Archcraft: Missing Linker Mystery: One user faced issues launching the Mojo REPL on Archcraft due to a missing mojo-ldd library and an unmanaged environment blocking Python requirements. They also noted a stalled attempt to install Max, sparking a suggestion to create a dedicated thread for problem-solving.

- A Stable Diffusion example was mentioned as a helpful GitHub resource for new features. The conversation highlighted environment setup adjustments to avert abrupt installation failures.

- Docs & 'var' Spat: When Syntax Meets Confusion: Discussions arose over the var keyword requirement explicitly mentioned in Mojo docs, leaving some users unsettled. An upcoming documentation update was teased, though no definitive timeline was provided.

- Community members voiced differing opinions on the necessity of a dedicated keyword for variables. They encouraged feedback to refine future documentation releases.

- Kernel or Just a Function? Mojo's Terminology Tangle: Members clarified that a 'kernel' in Mojo often refers to a function optimized for GPU execution, distinguishing it from operating system kernels. The term varies in meaning, sometimes describing core computational logic or accelerator-friendly code.

- Participants exchanged interpretations of the concept, debating whether it should remain specialized to GPU tasks. Some noted its usage in mathematics to denote a fundamental operation within broader calculations.

- argmax & argmin Missing: Reduction Ruminations: A user lamented the absence of argmax and argmin in algorithm.reduction, questioning the need to rebuild them from scratch. They noted the frustration of reimplementing optimized functions that might be standard in other libraries.

- Members called for better documentation or official support for these operations. The discussion underscored continuity issues in Mojo’s evolving standard library.

- MAX & Mojo: Custom Ops Conundrum: Users wrestled with custom ops integration, citing the

session.load(graph, custom_ops_paths=Path("kernels.mojopkg"))fix for a missing mandelbrot kernel. They also referenced Issue #269 requesting improved error messages and single compilation unit kernels.

- MOToMGP Pass Manager errors further complicated custom op loading, prompting calls for better clarity in failure reports. Contributors emphasized the importance of guiding users with more descriptive diagnostics.

Latent Space Discord

-

Nvidia’s Nimble Nano Nudges AI: Nvidia introduced Jetson Orin Nano Super Developer Kit at $249, boasting 67 TOPS and showing a 70% performance bump over its predecessor.

- It includes 102GB/s bandwidth and aims to help hobbyists execute heavier AI, though some participants questioned if it can handle advanced robotics.

- GitHub Copilot Goes Gratis: GitHub Copilot is now free for VS Code, as Satya Nadella confirmed, with a 50 chats per month limit.

- Community members debated if that cap diminishes Copilot’s usefulness in contrast to rivals like Cursor.

- 1-800-CHATGPT Hits the Hotline: Announced by Kevin Weil, 1-800-CHATGPT provides free phone and WhatsApp access to GPT across the globe.

- Discussions highlighted its accessibility for broader audiences, removing the need for extra apps or accounts.

- AI Video Tools Turn a Corner: OpenAI’s Sora sparked talks about evolving video models, referencing Will Smith test clips to measure progress.

- Enthusiasts compared these breakthroughs to earlier image generation waves, citing a Replicate blogpost on the expanding appetite for high-res AI video.

- EvoMerge & DynoSaur Double Feature: The LLM Paper Club showcased Sakana AI’s EvoMerge and DynoSaur in a double header session, inviting live audience queries.

- Attendees were urged to add the Latent.Space RSS feed to calendars to keep up with future events.

OpenInterpreter Discord

-

Interpreter Interruption Intensifies: Multiple users faced repeated exceptions while using Open Interpreter, losing crucial conversation logs in the process. They reported issues with loading chat histories and dealing with API key confusion, stirring frustration in various threads.

- One user specifically lamented losing good convos, while others mentioned similar incidents across multiple setups. Unresolved technical hiccups continue to hinder extended usage.

- 1.x Mystery Mingles with 0.34: Community members debated the existence of Open Interpreter 1.x while they're currently limited to 0.34. They questioned changes in functionality, pointing out that OS mode doesn't seem present in 1.0.

- This mismatch sparked confusion about updating procedures and support. Some asked for official steps to switch versions, hoping to confirm new features.

- Cloudflare Plays Gateway Gambit: A user proposed Cloudflare AI Gateway to tackle some configuration snags with Open Interpreter. This approach triggered a short debate on external solutions and advanced deployment.

- Members considered new toolchains, examining how Cloudflare’s platform might improve reliability. They also mentioned synergy with other AI applications, but withheld a final verdict.

- Truffle's Tasty On-Device Teaser: A user introduced the Truffle-1: a device with 64GB of memory at a $500 deposit plus $115/month. They posted the official site, showcasing an orb that supports indefinite on-device inference.

- A tweet from simp 4 satoshi gave more financial details, referencing the deposit and monthly plan. This stirred interest in building and sharing custom apps through the orb’s local stack.

- Long-Term Memory Mindset: Enthusiasts explored ways to integrate extended memory with Open Interpreter, focusing on codebase management. Some proposed local setups, including Raspberry Pi, to store conversation data for future use.

- They saw potential for streamlined collaboration tools with persistent logs over time. The idea gained traction among participants seeking to keep larger context windows readily available.

tinygrad (George Hotz) Discord

-

Scramble for LLaMA Benchmarks: A user asked if anyone had benchmarks comparing LLaMA models using tinygrad OpenCL against PyTorch CUDA, but no data surfaced.

- The discussion concluded there are no known head-to-head performance stats, leaving AI engineers in the dark for now.

- ShapeTracker Merge Mayhem: A bounty regarding merging two arbitrary ShapeTrackers in Lean sparked questions about strides and shapes.

- Contributors noted a universal approach seems unworkable since variables complicate the merging beyond straightforward fixes.

- Counterexamples Cause Crashes: Members encountered counterexamples that break the current merging algorithm for unusual view pairs.

- They hinted more examples could be auto-generated from a single irregular case, highlighting dimension overflow issues.

- CuTe Layout Algebra Compared: Pairs of TinyGrad merges were likened to composition in CuTe’s layout algebra, referencing layout docs.

- This parallel calls attention to the intricate process of verifying certain algebraic properties before concluding shape compatibility.

- Injectivity Proof Deemed NP Hard: Skepticism arose over proving injectivity in layout algebra, with some suggesting it might be NP hard.

- Checking both necessity and sufficiency appears too complex for a swift resolution, hinting at a deeper theoretical challenge.

Torchtune Discord

-

FSDP Fracas & TRL Tangles: Team realized that if the FSDP reduce operation averages, scaling by

world_sizemust be applied in this snippet. They also discovered a possible scaling glitch in trl, pointing to this fix PR.- Members recommended an Unsloth-style bug report, suggesting direct code tweaks in

scale_gradsfor clarity. They expect this correction to simplify gradient behavior across distributed setups. - Loss Topping Tactics & Gradient Gains: Contributors agreed that scaling the loss explicitly in the training recipe improves clarity, referencing updates in this memory code. They emphasized that code comments help highlight the purpose of each scaling step.

- An optimizer_in_bwd scenario fix was added to handle normalization properly in the PR. This adjustment aims to keep the training loop transparent and maintain consistent gradient scaling.

- Sakana's Evolutionary Spin: Workers raised interest in Sakana for scaling up evolutionary algorithms to rival gradient-based techniques. They found it noteworthy that evolution-driven methods may inject a different angle into AI development.

- Some saw promise in merging evolutionary ideas with standard gradient recipes. Others plan to watch Sakana’s progress to see if it holds up in rigorous benchmarks.

- Members recommended an Unsloth-style bug report, suggesting direct code tweaks in

DSPy Discord

-

Collabin Clip Sparks Curiosity: A brief video called collabin surfaced at this link, hinting at some collaborative project or demo.

- Participants shared minimal details, but the video teased possibilities for future group efforts or demonstrations.

- Autonomous Agents Boost Knowledge Elite: A new paper titled Artificial Intelligence in the Knowledge Economy (link) highlights how autonomous AI agents elevate the most skilled individuals by automating routine work.

- Community comments cautioned that as these agents proliferate, those with deeper expertise gain an added edge in productivity.

- Coconut Steers LLM Reasoning into Latent Space: A paper on Coconut (Chain of Continuous Thought) (link) challenges text-based coherence, suggesting a latent space approach instead.

- Token-heavy strategies can miss subtleties, so community remarks championed rewriting LLM thought processes to manage complex planning.

- RouteLLM Falls Behind but Questions Arise for DSPy: Folks noticed that RouteLLM (repo) is no longer maintained, raising concerns for future synergy with DSPy.

- No concrete plans emerged, but it signaled a desire for robust routing tools within the DSPy ecosystem.

- DSPy Charts a Reasoning-Centric Path: Discussions pointed to TypedReAct’s partial deprecation, urging a shift toward simpler naming and patterns without 'TypedChainOfThought.'

- Others see fine-tuning pivoting to reward-level branching within DSPy, with references pointing to DSPy’s agent tutorial as a resource on those next steps.

Nomic.ai (GPT4All) Discord

-

Localdocs Support Lights Up GPT4All: Members discussed referencing local docs with GPT4All but found the older CLI lacking official support, pushing them toward the server API or GUI methods.

- Several participants confirmed the CLI’s limitations, highlighting that local document features still require specific configurations in the official toolset.

- Docker Dream Stalls for GPT4All: A user asked about running GPT4All in a Docker container with a web UI, but no one provided a ready-to-use solution.

- The question remains open, leaving container enthusiasts hoping someone releases an official or community image soon.

LlamaIndex Discord

-

Agentic AI SDR Sparks Leads: Check out this agentic AI SDR that uses LlamaIndex to automate revenue tasks while generating leads, as discussed in the blog channel.

- It showcases new possibilities for blending function calling with sales workflows, highlighting LlamaIndex's capacity for direct business impact.

- Composio Quickstarters Jumpstart LLM Agents: The Quickstarters folder points to Composio, linking LLM agents with GitHub and Gmail.

- Through function calling, it enables a streamlined approach for tasks like code commits or inbox scanning, all triggered by natural language input.

- Async Tools Boost OpenAIAgent Concurrency: Members discussed OpenAIAgent concurrency, referencing the OpenAI docs for parallel function calls in API v1.1.0+.

- They shared code snippets suggesting async function usage, clarifying concurrency does not imply true parallel CPU execution.

- RAG Evaluation Collaboration Surfaces: A member invited the community to team up on RAG evaluation, urging others to share insights.

- They welcomed direct discussions for deeper technical explorations, reflecting a growing interest in retrieval-augmented generation techniques.

Gorilla LLM (Berkeley Function Calling) Discord

-

BFCL Leaderboard Burps: One user observed that the BFCL Leaderboard got stuck on "Loading Model Response..." due to a certificate issue that caused a temporary outage.

- They highlighted that the model endpoint was inaccessible, sparking concern among testers eager to try new function calling features.

- Gorilla Benchmark Eyes JSON Adherence: A participant proposed using the Gorilla benchmark to verify the model’s alignment with a JSON schema or Pydantic model, emphasizing structured output testing.

- They asked if there are specialized subtasks for measuring structured generation accuracy, though no official mention of such tasks emerged.

LAION Discord

-

GPT-O1 Reverse Engineering Excites Researchers: Enthusiasts requested any known efforts or materials detailing GPT-O1 reverse engineering, including technical reports and papers, and welcomed additional insights from social media posts. This sparked a push for collective sharing and resource gathering to demystify the intricacies of GPT-O1.

- They proposed forming a collaboration initiative to compile references on GPT-O1, particularly from Twitter discussions and published materials, aiming to pool knowledge in the community.

- Meta Opens Generative AI Internship: Meta announced a 3–6 month research internship on text-to-image models and vision-language models, offering hands-on experimentation at scale, available to apply here. The role focuses on driving core algorithmic advances and building new capabilities in generative AI.

- The Monetization Generative AI team seeks researchers with backgrounds in deep learning, computer vision, and NLP, highlighting a global impact on how users interact online.

LLM Agents (Berkeley MOOC) Discord

-

No Major Discussion: We only saw a friendly expression of thanks with no additional info or references posted.

- No further chat or data were shared, so there's nothing else to highlight.

- No Technical Updates: No new models, code releases, or relevant technical developments surfaced in this discussion.

- We remain without further data for AI specialists from this single message.

Axolotl AI Discord

-

January Reinforcement Rendezvous: A new engineer is set to join the team in January to assist with Reinforcement Learning (RL) initiatives, bringing an extra set of hands for training expansions.

- They will also provide direct support for the KTO project, ensuring timely integration of RL components and improved functionality.

- KTO Gains Extra Pair of Hands: The new engineer will help refine the KTO system after they onboard, focusing on real-time performance improvements.

- Project leads expect their contribution to yield a notable boost in productivity for RL tasks.

Mozilla AI Discord

-

Developer Hub Gains Momentum: A big announcement rolled out brand-new features for the Developer Hub, emphasizing community feedback for continuous refinement, as detailed here.

- Participants stressed the significance of clarifying usage guidelines and gathering input on future expansions.

- Blueprints Initiative Simplifies AI Builds: The Blueprints initiative aims to help developers assemble open-source AI solutions with carefully provided resources, as discussed here.

- They noted planned enhancements could enable broader project collaboration and flexible templates for specialized use cases.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!