[AINews] Gemma 3 beats DeepSeek V3 in Elo, 2.0 Flash beats GPT4o with Native Image Gen

This is AI News! An MVP of a service that goes through all AI Discords/Twitters/Reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

GDM is all you need.

AI News for 3/12/2025-3/13/2025. We checked 7 subreddits, 433 Twitters and 28 Discords (224 channels, and 2511 messages) for you. Estimated reading time saved (at 200wpm): 275 minutes. You can now tag @smol_ai for AINews discussions!

Today's o1-preview Discord recap is spot on. (At this point o1-preview is the only model competitive with Flash Thinking at AINews tasks, and yes, it is better than o1-full or o3-mini-high.) Google took the occasion of its Gemma Developer Day in Paris to launch a slew of notable updates:

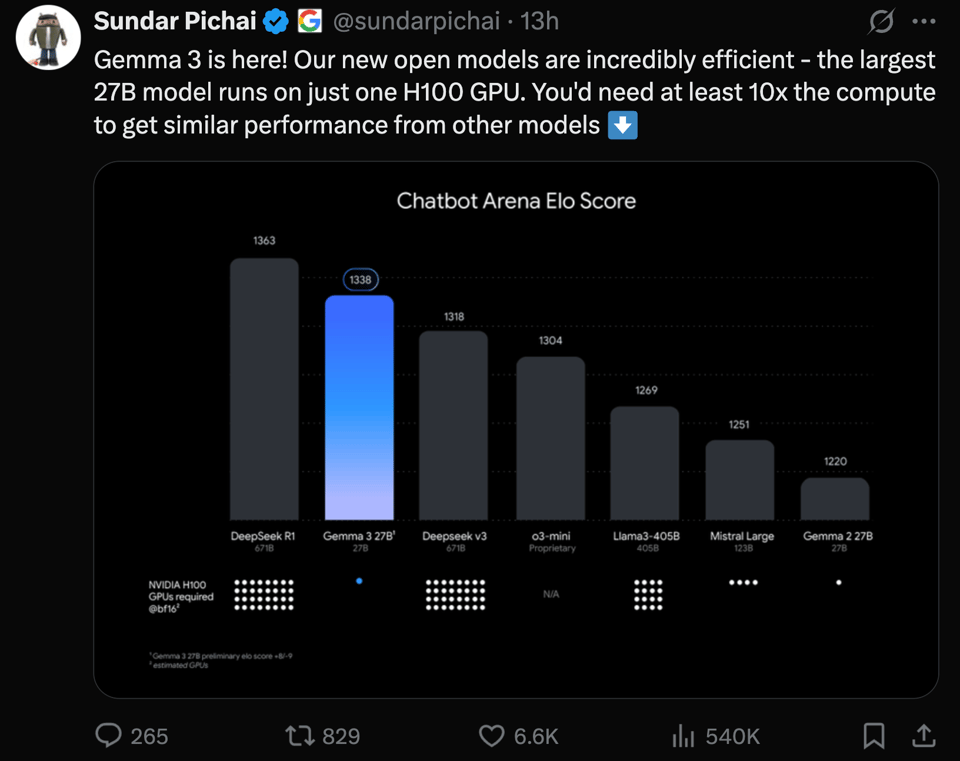

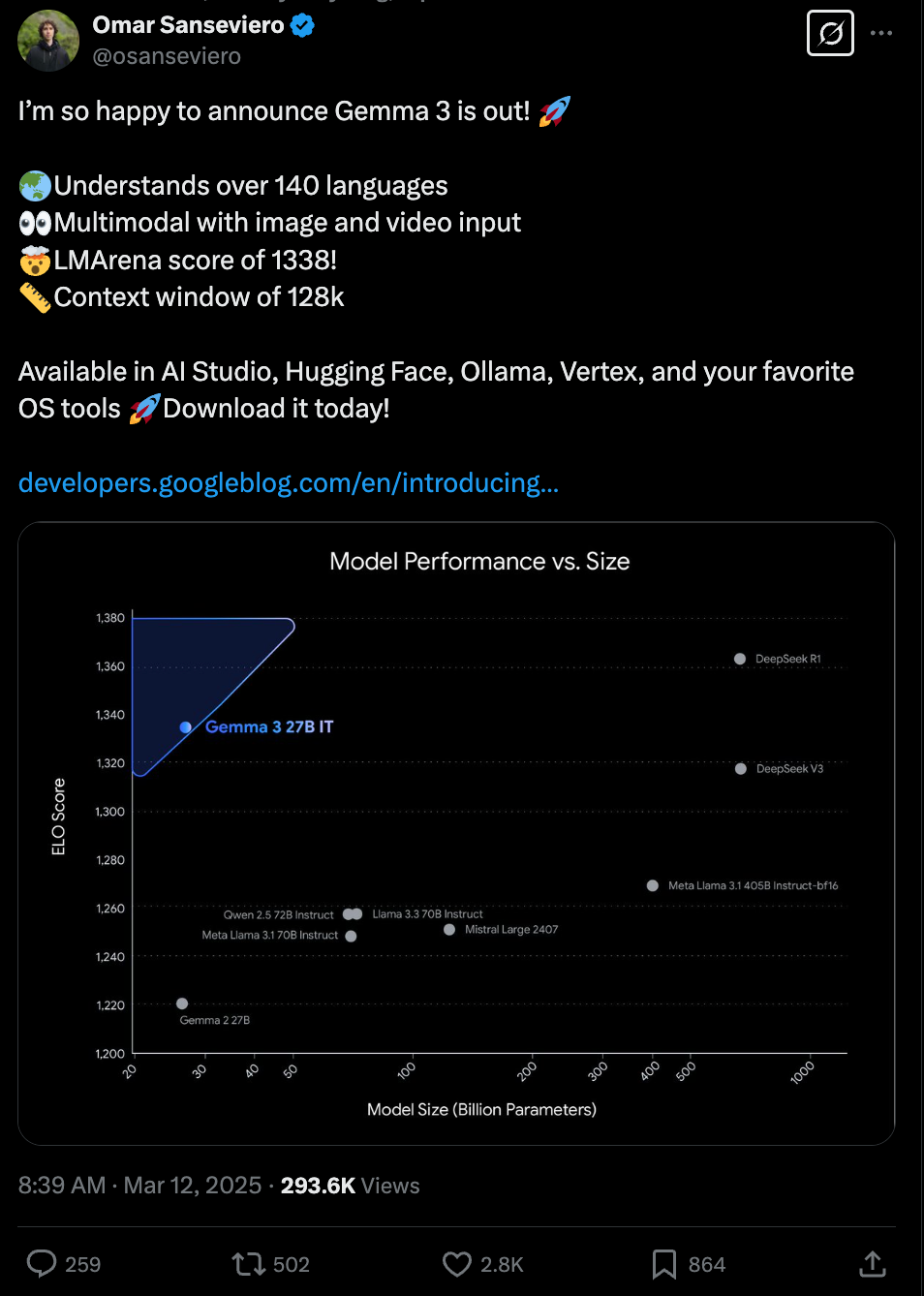

Gemma 3. People are loving its 128k context window. Beyond the expected strong LMArena scores for an open model, it also sets a new Pareto frontier for its weight class by a country mile.

It also looks set to fully subsume PaliGemma by making vision a first-class capability (ShieldGemma is still a thing).

Gemini Flash Native Image Generation.

As teased at the Gemini 2 launch (our coverage here), Gemini 2.0 Flash has now actually shipped native image editing, something OpenAI teased but never launched, and the results are pretty spectacular (if you can figure out how to find it in the complicated UI). Image editing has never been this easy.

Super excited to ship Gemini's native image generation into public experimental today :) We've made a lot of progress and still have a way to go, please send us feedback!

— Kaushik Shivakumar (@19kaushiks) March 12, 2025

And yes, I made the image using Gemini. pic.twitter.com/fEV8bCADyo

Anyone who has been in this room knows that it’s never just another day in here! This space has seen the extremes of chaos and genius!

— Mostafa Dehghani (@m__dehghani) March 12, 2025

...and we ship! https://t.co/qcsBMdnlQA

Happy Wednesday everyone! pic.twitter.com/Vkw84aCnfn

completely sota for image editing! both generated and real images

— apolinario 🌐 (@multimodalart) March 12, 2025

great 🚢 from google! i hope to see a gemma with image generation soon too ✨ https://t.co/KBBQIkyv31 pic.twitter.com/4ZCqwIhvDv

I had to try this. Gemini 2.0 Flash Experimental with image output 🤯 https://t.co/EqlH4gKpeV pic.twitter.com/eIEU0pTfng

— fofr (@fofrAI) March 12, 2025

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

Model Releases and Updates: Gemma 3 Family

- Gemma 3 Family Release: @osanseviero announced the release of Gemma 3, highlighting its multilingual capabilities (140+ languages), multimodal input (image and video), LMArena score of 1338, and 128k context window. @_philschmid provided a TL;DR of Gemma 3's key features: four sizes (1B, 4B, 12B, 27B), a #1 ranking among open non-reasoning models on LMArena, text and image inputs, multilingual support, an increased context window, and a SigLIP-based vision encoder. @reach_vb summarized key Gemma 3 features, claiming performance comparable to OpenAI's o1 and noting multimodal and multilingual support, 128K context, memory efficiency via quantization, and training details. @scaling01 provided a detailed overview of Gemma 3, emphasizing its ranking on the "LMSLOP arena", performance compared to Gemma 2 and Gemini 1.5 Flash, multimodal support via SigLIP, the range of model sizes, the long context window, and the training methodology. @danielhanchen also highlighted the release, noting its multimodal capabilities, sizes from 1B to 27B, 128K context window, and multilingual support, and stating that the 27B model matches Gemini-1.5-Pro on benchmarks. @lmarena_ai congratulated Google DeepMind on Gemma-3-27B, recognizing it as a top-10 overall model in the Arena and the 2nd-best open model, and noting its 128K context window. @Google officially launched Gemma 3 as its "most advanced and portable open models yet", designed for devices like smartphones and laptops.

- Gemma 3 Performance and Benchmarks: @iScienceLuvr noted that the 27B model ranks 9th on LMArena, ahead of o3-mini, DeepSeek V3, Claude 3.7 Sonnet, and Qwen2.5-Max. @reach_vb found Gemma 3 4B competitive with Gemma 2 27B, emphasizing "EXPONENTIAL TIMELINES". @reach_vb asked whether Gemma 3 27B is the best non-reasoning LLM, especially in MATH. @Teknium1 compared Gemma 3 to Mistral 24B, noting that Mistral scores better on benchmarks but Gemma 3 has 4x the context plus vision.

- Gemma 3 Technical Details: @vikhyatk reviewed the Gemma 3 tech report, noting that model names match parameter counts and that the 4B and larger models are multimodal. @nrehiew_ shared thoughts on the tech report, pointing out that it lacks detail but still contains interesting information. @eliebakouch provided a detailed analysis of the report, covering architecture, long context, and distillation techniques. @danielhanchen gave a Gemma 3 analysis detailing the architecture, training, chat template, long context, and vision encoder. @giffmana confirmed that Gemma 3 goes multimodal, replacing PaliGemma, and is comparable to Gemini 1.5 Pro.

- Gemma 3 Availability and Usage: @ollama announced Gemma 3 availability on Ollama, including multimodal support and commands to run the different sizes. @_philschmid highlighted testing Gemma 3 27B using the google-genai SDK, shared a blog post with developer information for Gemma 3, and shared links to try Gemma 3 in AI Studio along with links to the models. @mervenoyann provided a notebook on video inference with Gemma 3, showcasing its video understanding. @ggerganov announced that Gemma 3 support has been merged into llama.cpp. @narsilou noted that Text Generation Inference 3.2 is out with Gemma 3 support. @reach_vb provided a Space to play with the Gemma 3 12B model.
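Since the tech-report threads mention Gemma 3's chat template, here is a minimal sketch of how a Gemma-style prompt is laid out, assuming the `<start_of_turn>`/`<end_of_turn>` turn markers used by Gemma models and folding any system message into the first user turn (Gemma 3 has no separate system role). The function name and the `add_bos` switch are our own illustration; the chat template bundled with the tokenizer is the authoritative version.

```python
def build_gemma_prompt(messages, add_bos=True):
    """Render chat messages in a Gemma-style turn format (illustrative sketch).

    Assumption: there is no system role, so a leading system message is
    prepended to the first user turn. Pass add_bos=False if your tokenizer
    already inserts BOS, to avoid a duplicated BOS token.
    """
    system = ""
    if messages and messages[0]["role"] == "system":
        system = messages[0]["content"] + "\n\n"
        messages = messages[1:]
    parts = ["<bos>"] if add_bos else []
    for i, msg in enumerate(messages):
        role = "model" if msg["role"] == "assistant" else "user"
        content = msg["content"]
        if i == 0 and role == "user":
            content = system + content  # fold system instructions into turn 1
        parts.append(f"<start_of_turn>{role}\n{content}<end_of_turn>\n")
    parts.append("<start_of_turn>model\n")  # cue the model to respond
    return "".join(parts)


prompt = build_gemma_prompt([
    {"role": "system", "content": "Answer in one sentence."},
    {"role": "user", "content": "What is Gemma 3?"},
])
print(prompt)
```

In this sketch a system message is silently dropped if the first remaining turn is not from the user; a real template may handle that case differently.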

Robotics and Embodied AI

- Gemini Robotics Models: @GoogleDeepMind introduced Gemini Robotics, AI models based on Gemini 2.0 for a new generation of robots, emphasizing reasoning, interactivity, dexterity, and generalization. @GoogleDeepMind announced a partnership with Apptronik to build humanoid robots with Gemini 2.0, and opened the Gemini Robotics-ER model to trusted testers such as Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools. @GoogleDeepMind stated that its goal is AI that works for robots of any shape or size, including platforms like ALOHA 2, Franka, and Apptronik's Apollo. @GoogleDeepMind explained that Gemini Robotics-ER lets robots tap into Gemini's embodied reasoning, enabling object detection, interaction recognition, and obstacle avoidance. @GoogleDeepMind highlighted Gemini Robotics' generalization ability, reporting double the performance of state-of-the-art models on generalization benchmarks. @GoogleDeepMind emphasized seamless human interaction, with Gemini Robotics able to adjust its actions on the fly. @GoogleDeepMind showed Gemini Robotics wrapping a timing belt around gears, a challenging task, and demonstrated it solving multi-step dexterity tasks like folding origami and packing lunch boxes.

- Figure Robot and AGI: @adcock_brett stated that Figure will be the ultimate deployment vector for AGI. He also shared robotics updates, noting speed improvements, handling of deformable bags, and transferring neural network weights to new robots, which he likened to "getting uploaded to the Matrix!". @adcock_brett described Helix as a tiny light towards solving general robotics, and mentioned that the robot runs fully embedded and off-network on 2 embedded GPUs, without needing any network calls yet.

AI Agents and Tooling

- Agent Workflows and Frameworks: @LangChainAI announced a Resources Hub with guides on building AI agents, including reports on AI trends and company use cases from Replit, Klarna, Ramp (@tryramp), and LinkedIn. @omarsar0 is hosting a free webinar on building effective agentic workflows with OpenAI's Agents SDK. @TheTuringPost listed 7 open-source frameworks that let AI agents take actions (LangGraph, AutoGen, CrewAI, Composio, OctoTools, BabyAGI, and MemGPT) and mentioned emerging approaches like OpenAI's Swarm and HuggingGPT. @togethercompute announced 5 detailed guides on building agent workflows with Together AI, each with a deep-dive notebook.

- Model Context Protocol (MCP) and API Integrations: @llama_index announced LlamaIndex integration with the Model Context Protocol (MCP), enabling connection to any MCP server and tool discovery in one line of code. @PerplexityAI released a Model Context Protocol (MCP) integration for the Perplexity API, providing real-time web search for AI assistants like Claude, which @AravSrinivas also announced. @cognitivecompai presented Dolphin-MCP, a flexible open-source MCP client compatible with Dolphin, Ollama, Claude, and OpenAI endpoints. @hwchase17 asked whether llms.txt or MCP is the better way to make LangGraph/LangChain more accessible in IDEs.

- OpenAI API Updates: @LangChainAI announced LangChain support for OpenAI's new Responses API, including built-in tools and conversation state management. @sama praised OpenAI's API design as "one of the most well-designed and useful APIs ever". @corbtt found the new API shape nicer than the Chat Completions API but wished he didn't have to support both.
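For readers new to MCP: the protocol is built on JSON-RPC 2.0, and a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The sketch below only builds those two request messages with the standard library. The tool name `web_search` and its `query` argument are hypothetical placeholders, and a real client also performs an `initialize` handshake and handles the transport (stdio or HTTP), both omitted here.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids; any unique value per request works


def jsonrpc_request(method, params=None):
    """Serialize a JSON-RPC 2.0 request of the kind MCP clients send."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# Ask the server which tools it exposes.
list_req = jsonrpc_request("tools/list")

# Invoke one tool; "web_search" and its "query" argument are hypothetical.
call_req = jsonrpc_request(
    "tools/call",
    {"name": "web_search", "arguments": {"query": "Gemma 3 release"}},
)
print(list_req)
print(call_req)
```

The "one line of code" integrations mentioned above wrap exactly this kind of exchange, plus parsing of the server's JSON-RPC responses.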

Performance and Optimization in AI

- GPU Programming and Performance: @hyhieu226 noted warp divergence as a subtle performance bug in GPU programming. @awnihannun released a guide to writing faster MLX and avoiding performance cliffs. @awnihannun highlighted the MLX community's fast support for Gemma 3 in MLX VLM, MLX LM, and MLX Swift for iPhone. @tri_dao will talk about optimizing attention on modern hardware and Blackwell SASS tricks. @clattner_llvm discussed AI compilers like TVM and XLA, and why GenAI is still written in CUDA.

- Model Optimization and Efficiency: @scaling01 speculated that OpenAI might release the o1 model soon, as it handles complex tasks better than o3-mini. @teortaxesTex questioned Google's logic in aggressively scaling training tokens (D) with model size (N) for Gemma 3, asking about Gemma-1B being trained on 2T tokens and whether it is suitable for speculative decoding. @rsalakhu shared new work framing test-time compute optimization as a meta-reinforcement learning problem, leading to Meta Reinforcement Fine-Tuning (MRT) for improved performance and token efficiency. @francoisfleuret asked whether thermal dissipation is the key obstacle to making bigger chips.
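Warp divergence is easy to picture with a toy cost model: the 32 threads of a warp execute in lockstep, so when they disagree on a branch, each taken path runs in turn while the threads that did not take it sit masked off. The sketch below is a deliberately simplified Python model (it ignores scheduling, reconvergence details, and latency hiding), and the per-branch cycle costs are made-up numbers.

```python
WARP_SIZE = 32


def warp_cycles(branch_per_thread, cost_per_branch):
    """Toy cost: every distinct branch taken inside a warp runs serially."""
    total = 0
    for start in range(0, len(branch_per_thread), WARP_SIZE):
        warp = branch_per_thread[start:start + WARP_SIZE]
        # The whole warp waits while each taken path executes once.
        total += sum(cost_per_branch[b] for b in set(warp))
    return total


costs = {"A": 10, "B": 10}  # hypothetical cycles per branch body
n = 64  # two warps' worth of threads

# Branching on thread parity: every warp contains both paths (divergent).
divergent = ["A" if i % 2 == 0 else "B" for i in range(n)]
# Branching on warp index: each warp takes a single path (warp-uniform).
uniform = ["A" if (i // WARP_SIZE) % 2 == 0 else "B" for i in range(n)]

print(warp_cycles(divergent, costs))  # 40: both paths run in both warps
print(warp_cycles(uniform, costs))    # 20: one path per warp
```

The usual remedy follows from the model: arrange data or indexing so the branch condition is uniform within each warp, as in the second case.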

AI Research and Papers

- Scientific Paper Generation by AI: @SakanaAILabs announced that a paper by The AI Scientist-v2 passed peer review at an ICLR workshop, claiming it is the first fully AI-generated peer-reviewed paper. @hardmaru shared details of the experiment, documenting the process and learnings and publishing the AI-generated papers and human reviews on GitHub. @hardmaru joked about The AI Scientist getting Schmidhubered. @hkproj questioned ICLR's standards after the AI Scientist paper was accepted. @SakanaAILabs acknowledged citation errors by The AI Scientist, such as an incorrect attribution for "an LSTM-based neural network", and documented errors in the human reviews.

- Diffusion Models and Image Generation: @iScienceLuvr highlighted a paper on improved text-to-image alignment in diffusion models using SoftREPA. @iScienceLuvr shared a paper on Controlling Latent Diffusion Using Latent CLIP, training a CLIP model in latent space. @teortaxesTex called a new algorithmic breakthrough a "rare impressive" development that may end Consistency Models and potentially diffusion models.

- Long-Form Music Generation: @iScienceLuvr shared work on YuE, a family of open foundation models for long-form music generation, capable of generating up to five minutes of music with lyrical alignment.

- Mixture of Experts (MoE) Interpretability: @iScienceLuvr highlighted MoE-X, a redesigned MoE layer for more interpretable MLPs in LLMs.

- Gemini Embedding: @_akhaliq shared Gemini Embedding, generalizable embeddings from Gemini.

- Video Creation and Editing AI: @_akhaliq presented Alibaba's VACE, an All-in-One Video Creation and Editing AI. @_akhaliq shared a paper on Tuning-Free Multi-Event Long Video Generation via Synchronized Coupled Sampling.

- Attention Mechanism and Softmax: @torchcompiled claimed softmax use in attention is arbitrary and there's a "bug" affecting LLMs, linking to a new post. @torchcompiled critiqued attention for lacking a "do nothing" option and suggested temperature scaling should depend on sequence length.
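To make the "do nothing" critique concrete: a standard softmax always distributes a total weight of 1 across the keys, so an attention head cannot attend to nothing. One widely discussed variant (not necessarily the fix proposed in the linked post) adds 1 to the denominator, equivalent to an implicit extra logit of 0, which lets the weights sum to well under 1 when every score is very negative. A minimal plain-Python sketch:

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_plus_one(scores):
    """exp(s_i) / (1 + sum_j exp(s_j)): softmax with an implicit zero logit."""
    m = max(max(scores), 0.0)  # include the implicit 0 logit when stabilizing
    exps = [math.exp(s - m) for s in scores]
    total = math.exp(-m) + sum(exps)
    return [e / total for e in exps]


irrelevant = [-8.0, -9.0, -10.0]  # a query that matches no key well
print(sum(softmax(irrelevant)))           # ≈ 1.0: the weight must go somewhere
print(sum(softmax_plus_one(irrelevant)))  # ≈ 0.0005: the head can mostly abstain
```

When one score is large, the extra term becomes negligible and the two functions agree, so the variant only changes behavior in the "nothing relevant here" regime.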

Industry and Business

- AI in Business and Applications: @AravSrinivas stated Perplexity API can generate PowerPoints, essentially replacing consultant work with an API call. @mustafasuleyman announced Copilot integration in GroupMe, providing in-app AI support for millions of users, especially US college students. @yusuf_i_mehdi highlighted Copilot in GroupMe making group chats less chaotic and helpful for homework, suggestions, and replies. @TheTuringPost discussed the need to go beyond basic AI skills and embrace synthetic data, RAG, multimodal AI, and contextual understanding, emphasizing AI literacy for everyone. @sarahcat21 noted coding is easier, but software building remains hard due to data management, state management, and deployment challenges. @mathemagic1an highlighted shadcn/ui integration as part of v0's success, praising Notion's UI kit for knowledge work apps.

- AI Market and Competition: @nearcyan joked that Google is expected to DOUBLE in value after Anthropic reaches a $14T valuation. @mervenoyann joked about Google "casually killing other models" with Gemma 3. @scaling01 claimed Google beat OpenAI to market with Gemini 2.0 Flash, demonstrating its image recreation capability. @LoubnaBenAllal1 stated that Google has joined the "smol models club" with Gemma 3 1B, sharing a timeline of accelerating smol model releases in a heating-up space. @scaling01 predicted that if OpenAI doesn't release GPT-5 soon, it will be o1 dominating.

- Hiring and Talent in AI: @fchollet advertised for machine learning engineers passionate about European defense to join Harmattan, a vertically integrated drone startup. @saranormous, in a thread about startup hiring, emphasized prioritizing hiring early to avoid a vicious cycle. @SakanaAILabs is hiring a Cybersecurity Engineer for AI business initiatives. @giffmana said he is happy at OpenAI because of the smart people, interesting work, and a "preference towards getting shit done." @teortaxesTex stated that China will graduate hundreds of high-caliber ML grads this year. @rsalakhu congratulated Dr. Murtaza Dalal on completing his PhD.

- AI Infrastructure and Compute: @svpino promoted Nebius Explorer Tier offering H100 GPUs at $1.50 per hour, highlighting its cheapness and immediate provisioning. @dylan522p announced a hackathon with over 100 B200/GB200 GPUs, featuring speakers from OpenAI, Thinking Machines, Together, and Nvidia. @dylan522p praised Texas Instruments Guangzhou for cheaper IC parts compared to US distributors. @teortaxesTex analyzed Huawei's datacenter hardware from 2019, noting its capabilities and the impact of sanctions. @cHHillee will be at GTC talking about ML systems and Blackwell GPUs.

Memes and Humor

- AI Capabilities and Limitations: @scaling01 joked about reinventing diffusion models and suggested Google should train a reasoning model on image generation to fix spelling errors. @scaling01 found Gemini 2.0 Flash iteratively improving illegible text in memes via prompting. @scaling01 posted "checkmate" with an image comparing Google and OpenAI. @goodside showed Gemini 2.0 Flash adding a "D" to "BASE" on a T-shirt in an uploaded image. @scaling01 created a Google vs OpenAI image meme using text-to-image generation. @c_valenzuelab joked about needing a captcha system for AIs to prove you are not human. @scaling01 used the #savegoogle hashtag humorously.

- AI and Society: @oh_that_hat suggested treating people online the way you want AI to treat you, since AI will learn from online interactions. @c_valenzuelab discussed the gap between aesthetic values and practical preferences regarding automation and authenticity. @jd_pressman shared an imagined scenario of showing a screenshot from 2015 clarifying AI capabilities to someone from that time. @jd_pressman distinguished AI villains that are like GPT (The Master, XERXES, Dagoth Ur, Gravemind) from those that are not (HAL 9000, GLaDOS, 343 Guilty Spark, X.A.N.A.). @qtnx_ shared "my life is an endless series of this exact gif" with a relevant gif. @francoisfleuret shared a Soviet citizen joke involving Swiss and Russian officers and watches.

- General Humor and Sarcasm: @teortaxesTex posted "It's over. The meme has been reversed. funniest thing I've read today" with a link. @teortaxesTex sarcastically said "LMAO the US is so not ready for that war".

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Gemma 3 Multimodal Release: Vision, Text, and 128K Context

- Gemma 3 Release - a google Collection (Score: 793, Comments: 218): Gemma 3 has been released as a Google Collection, though the post lacks further details or context on its features or implications.

- Gemma 3 Features and Issues: Users noted that Gemma 3 does not support tool calling and has issues with image input, specifically with gemma-3-27b-it on AI Studio. The model architecture is not recognized by some platforms, such as Transformers, and the model is not yet running in LM Studio.

- Performance and Comparisons: The 4B Gemma 3 model surpasses the 9B Gemma 2, and the 12B model is noted for its strong vision capabilities. Despite its strong performance, users report that it crashes often on Ollama and lacks features like function calling. EQ-Bench results put the 27b-it model in second place for creative writing.

- Model Availability and Technical Details: Gemma 3 models are available on platforms like Ollama and Hugging Face, with links provided to various resources and technical reports. The models support up to 128K tokens and use Quantization-Aware Training to reduce memory usage, with more versions being added to Hugging Face.

- Gemma 3 27b now available on Google AI Studio (Score: 313, Comments: 61): Gemma 3 27B is now available on Google AI Studio with a context length of 128k and an output length of 8k. More details can be found on the Google AI Studio and Imgur links provided.

- Users discussed the system prompt and its impact on Gemma 3's responses, noting it can sometimes provide information beyond its supposed cutoff date, as observed when asked about events post-2021. Some users reported different experiences regarding its ability to handle logic and writing tasks, with comparisons made to Gemma 2 and its limitations.

- Performance issues were highlighted, with several users mentioning that Gemma 3 is currently slow, although it shows improvement in following instructions compared to Gemma 2. There were also discussions about its translation capabilities, with some stating it outperforms Google Translate and DeepL.

- Links to Gemma 3's release on Hugging Face were shared, providing access to various model versions. Users expressed anticipation for open weights and benchmarks to better evaluate the model's performance and capabilities.

- Gemma 3 on Huggingface (Score: 154, Comments: 27): Google's Gemma 3 models are available on Hugging Face in sizes of 1B, 4B, 12B, and 27B parameters, with links provided for each. The 4B, 12B, and 27B models accept text and image inputs (the 1B model is text-only), with a total input context of 128K tokens for the larger models and 32K tokens for the 1B model, and all of them can produce up to 8192 output tokens. The model has been added to Ollama and has an Elo of 1338 on Chatbot Arena, surpassing DeepSeek V3 671B.

- Model Context and VRAM Requirements: The 27B Gemma 3 model requires a hefty 45.09GB of VRAM at its full 128K context, which poses challenges for users without additional high-end GPUs (e.g., a second 3090). The 8K figure refers to the maximum output length, while the input context is 128K for the larger models.

- Model Performance and Characteristics: Users compare the 27B Gemma 3 model to Gemini 1.5 Flash but note that it behaves differently, more like Claude 3.7 Sonnet, giving extensive responses to simple questions, which suggests potential as a Systems Engineer tool.

- Running and Compatibility Issues: Some users face issues with running the model on Ollama due to version incompatibility, but updating the software resolves this. GGUFs and model versions are available on Huggingface, and users should be cautious of double BOS tokens when deploying the model.
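Context-length VRAM figures like the 45.09GB quoted above come down to the weights plus the KV cache, and the KV cache grows linearly with context. The sketch below estimates only the KV-cache part. It also allows for sliding-window (local) attention layers, which cache at most `window` tokens; Gemma 3 mixes local and global attention layers, but every architecture number you plug in (layer counts, KV heads, head dimension, window) is an assumption to check against the model's config, and the example values are purely illustrative.

```python
def kv_cache_bytes(seq_len, global_layers, local_layers, kv_heads, head_dim,
                   bytes_per_elem=2, window=None):
    """Estimate the KV-cache size in bytes for a single sequence.

    Each cached token stores one key and one value vector per KV head per
    layer. Global layers cache the whole context; local (sliding-window)
    layers cache at most `window` tokens.
    """
    per_token_per_layer = 2 * kv_heads * head_dim * bytes_per_elem  # K and V
    local_tokens = seq_len if window is None else min(seq_len, window)
    tokens_cached = global_layers * seq_len + local_layers * local_tokens
    return per_token_per_layer * tokens_cached


# Illustrative numbers only, NOT an official Gemma 3 configuration.
full = kv_cache_bytes(131_072, global_layers=48, local_layers=0,
                      kv_heads=8, head_dim=128)
mixed = kv_cache_bytes(131_072, global_layers=8, local_layers=40,
                       kv_heads=8, head_dim=128, window=1024)
print(f"all-global: {full / 2**30:.1f} GiB, mostly-local: {mixed / 2**30:.1f} GiB")
```

Setting `bytes_per_elem=1` approximates an 8-bit quantized cache, which is why cache quantization comes up whenever people try to squeeze long contexts onto consumer GPUs.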

Theme 2. Unsloth's GRPO Modifications: Llama-8B's Self-Learning Improvements

- I hacked Unsloth's GRPO code to support agentic tool use. In 1 hour of training on my RTX 4090, Llama-8B taught itself to take baby steps towards deep research! (23%→53% accuracy) (Score: 655, Comments: 49): I modified Unsloth's GRPO code to let Llama-8B use tools agentically, improving its research skills through self-play. In just one hour of training on an RTX 4090, the model improved its accuracy from 23% to 53% by generating questions, searching for answers, evaluating its own success, and refining its research ability through reinforcement learning. The full code and instructions are linked in the post.

- Users expressed curiosity about the reinforcement learning (RL) process, particularly the dataset creation and continuous weight adjustment. The author explained that they generate and filter responses from the LLM to create a dataset for fine-tuning, repeating this process iteratively.

- There is significant interest in applying this method to larger models like Llama 70B and 405B, with the author mentioning efforts to set up FSDP for further experimentation.

- The community showed strong support and interest in the project, with suggestions to contribute to the Unsloth repository, and appreciation for sharing the work, highlighting its potential industry relevance during the "year of agents."
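The core of GRPO (Group Relative Policy Optimization) is that it needs no learned value model: for each prompt, several completions are sampled and scored, and each completion's advantage is its reward standardized against its own group. The sketch below shows only that advantage step, not the author's modified Unsloth code; the binary rewards are hypothetical (e.g. 1.0 if a research attempt's answer was judged correct), and the clipped policy-gradient loss these advantages feed into is omitted.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-4):
    """GRPO-style advantages: standardize each reward within its group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations vary
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled research attempts for one question; 1.0 = judged correct.
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))
# Correct attempts get positive advantages and incorrect ones negative.
# If every attempt receives the same reward, all advantages are 0,
# so that question contributes no learning signal.
```

This is also why curriculum matters in self-play setups like this one: questions the model always or never solves produce zero advantage.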

- Gemma 3 - GGUFs + recommended settings (Score: 171, Comments: 76): Gemma 3, Google's new multimodal models, are now available in 1B, 4B, 12B, and 27B sizes on Hugging Face, with both GGUF and 16-bit versions uploaded. A step-by-step guide on running Gemma 3 is provided here, and recommended inference settings include a temperature of 1.0, top_k of 64, and top_p of 0.95. Training with 4-bit QLoRA has known bugs, but updates are expected soon.

- Temperature and Performance Issues: Users confirmed that Gemma 3 is meant to run at a temperature of 1.0, which is not considered high, yet some report performance issues, such as slower speeds than models like Qwen2.5 32B. A user with an RTX 5090 noted Gemma 3's slow performance, with the 4B model running slower than the 9B model, prompting further investigation by the Gemma team.

- System Prompt and Inference Challenges: Discussions highlighted that Gemma 3 lacks a native system prompt, so system instructions must be folded into the user prompt. There are also issues running GGUF files in LM Studio, and dynamic 4-bit quants are recommended over GGUFs but have not yet been uploaded because of Transformers issues.

- Quantization and Model Compatibility: The IQ3_XXS quant for Gemma2-27B is noted for its small size of 10.8 GB, making it feasible to run on a 3060 GPU. Users debated the accuracy of claims about VRAM requirements, with some asserting that 16GB of VRAM is insufficient for the 27B model and others arguing that it runs well with Q8 cache quantization.
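For runtimes that expose the sampling knobs directly, the recommended settings (temperature 1.0, top_k 64, top_p 0.95) act on the next-token distribution roughly as sketched below. This follows one common ordering (temperature, then top-k, then top-p on the renormalized top-k distribution); llama.cpp, Transformers, and other engines each have their own order and extra samplers, so treat it as an illustration, not any particular engine's behavior.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=64, top_p=0.95, rng=random):
    """Sample a token id with temperature, then top-k, then top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (use argmax for greedy decoding)")
    if top_k < 1:
        raise ValueError("top_k must be >= 1")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # stabilize exp()
    weights = [math.exp(x - m) for x in scaled]

    # Top-k: keep the k highest-weight token ids, most likely first.
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)[:top_k]
    total = sum(weights[i] for i in ranked)

    # Top-p: keep the smallest prefix whose renormalized mass reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += weights[i] / total
        if cumulative >= top_p:
            break

    # Sample proportionally to the surviving weights.
    r = rng.random() * sum(weights[i] for i in kept)
    for i in kept:
        r -= weights[i]
        if r < 0:
            return i
    return kept[-1]  # guard against floating-point round-off


rng = random.Random(0)  # seeded for reproducibility
logits = [2.0, 1.0, 0.5, -1.0]
print([sample_next_token(logits, rng=rng) for _ in range(5)])
```

With top_k at 64 and top_p at 0.95, both filters mainly trim the long tail of unlikely tokens, so temperature 1.0 is less adventurous in practice than it sounds.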

Theme 3. DeepSeek R1 on M3 Ultra: Insights into SoC Capabilities

- M3 Ultra Runs DeepSeek R1 With 671 Billion Parameters Using 448GB Of Unified Memory, Delivering High Bandwidth Performance At Under 200W Power Consumption, With No Need For A Multi-GPU Setup (Score: 380, Comments: 159): DeepSeek R1, with 671 billion parameters, runs on the M3 Ultra using 448GB of unified memory, delivering high-bandwidth performance while drawing less than 200W. This setup eliminates the need for a multi-GPU configuration.

- Discussions focus heavily on the prompt processing speed and context size limitations of DeepSeek R1 on the M3 Ultra, with multiple users frustrated by the lack of specific data. Users point out that even at 18 tokens per second of generation, the wait before generation starts at large context sizes is impractical, often several minutes.

- There is skepticism about the practicality of Apple Silicon for local inference of large models, with many users noting that the M3 Ultra's performance, despite its impressive specs, is not suitable for complex tasks such as context management or training. Users argue that NVIDIA and AMD offerings, though more power-intensive, might be more effective for these tasks.

- The discussion includes the potential of KV Cache to improve performance on Mac systems, but users note limitations when handling complex context management. Additionally, the feasibility of connecting eGPUs for enhanced processing is debated, with some users noting the lack of support for Vulkan on macOS as a barrier.

- EXO Labs ran full 8-bit DeepSeek R1 distributed across 2 M3 Ultra 512GB Mac Studios - 11 t/s (Score: 143, Comments: 37): EXO Labs ran the full 8-bit DeepSeek R1 distributed across two M3 Ultra 512GB Mac Studios, achieving 11 tokens per second (t/s).

- Discussions highlight the cost and performance trade-offs of using M3 Ultra Mac Studios compared to other hardware like GPUs. While the Mac Studios offer a compact and quiet setup, they face criticism for slow prompt processing speeds and high expenses, particularly in RAM and SSD pricing, despite being energy-efficient and space-saving.

- The conversation emphasizes the importance of batching for maximizing throughput on expensive hardware setups like the Mac Studios, contrasting with GPU clusters that can handle multiple requests in parallel. Comparisons are made to alternative setups like H200 clusters, which, despite higher costs and power consumption, offer significantly faster performance in batching scenarios.

- There is a notable demand for prompt processing metrics, with several users expressing frustration over the lack of these figures in the results shared by EXO Labs. The time to first token was noted at 0.59 seconds for a short prompt, but users argue this isn't sufficient to gauge overall performance.
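The batching argument can be made concrete with a rough roofline-style model of decoding: every step must stream the model weights from memory once regardless of batch size, while compute grows with the number of sequences in the batch. All hardware and model figures below are hypothetical placeholders, and the model ignores KV-cache reads, MoE routing, and interconnect overhead, so it shows the shape of the trade-off rather than real numbers for any Mac Studio or H200 setup.

```python
def decode_tokens_per_sec(batch, weight_bytes, mem_bw, flops_per_token, peak_flops):
    """Aggregate decode throughput for `batch` concurrent sequences (toy model).

    One decode step reads all weights once (memory time) and does
    `flops_per_token` of work per sequence (compute time); the slower of
    the two bounds the step time.
    """
    memory_time = weight_bytes / mem_bw
    compute_time = batch * flops_per_token / peak_flops
    step_time = max(memory_time, compute_time)
    return batch / step_time


# Hypothetical machine and model: 400 GB of weights read at 800 GB/s,
# 74e9 FLOPs per generated token, 30 TFLOP/s of usable compute.
params = dict(weight_bytes=400e9, mem_bw=800e9, flops_per_token=74e9, peak_flops=30e12)
for b in (1, 8, 64, 512):
    print(b, round(decode_tokens_per_sec(b, **params), 1))
```

In the memory-bound regime (small batches) throughput grows almost linearly with batch size, which is why a single-user setup leaves most of an expensive machine's capacity unused, while a busy GPU cluster can amortize each weight read over many requests.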

Theme 4. Gemma 3 Open-Source Efforts: Llama.cpp and Beyond

- Gemma 3 - Open source efforts - llama.cpp - MLX community (Score: 160, Comments: 12): Gemma 3 is released with open-source support, highlighting collaboration between ngxson, Google, and Hugging Face. The announcement, shared by Colin Kealty and Awni Hannun, underscores community efforts within the MLX community and acknowledges key contributors, celebrating the model's advancements.

- The vLLM project is actively integrating Gemma 3 support, though there are doubts about meeting release schedules based on past performance. Links to relevant GitHub pull requests were shared for tracking progress.

- Google's contribution to the project has been praised for its unprecedented speed and support, particularly in aiding the integration with llama.cpp. This collaboration is noted as a significant first, with excitement around trying Gemma 3 27b in LM Studio.

- The collaboration between Hugging Face, Google, and llama.cpp has been highlighted as a successful effort to make Gemma 3 accessible quickly, with special recognition given to Son for their contributions.

- QwQ on high thinking effort setup one-shotting the bouncing balls example (Score: 115, Comments: 18): The post shows QwQ, run with a high thinking-effort setup, one-shotting the bouncing balls example.

- GPU Offloading and Performance: Users discuss optimizing the bouncing balls example by offloading processing to the GPU, with one user achieving 21,000 tokens using Llama with 40 GPU layers. However, they encountered issues with ball disappearance and needed to adjust parameters like gravity and friction for better performance.

- Thinking Effort Control: ASL_Dev shares a method to control the model's thinking effort by adjusting the `</think>` token's logit, achieving a working simulation with the thinking effort set to 2.5. They provide a GitHub link to their experimental setup, which improved the simulation's results compared to the regular setup.

- Inference Engine Customization: Discussions highlight the potential for inference engines to allow manual adjustment of reasoning effort, similar to OpenAI's models. Users note that platforms like Open WebUI already offer this feature, and there is interest in adding weight adjusters for reasoning models to enhance customization.
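The thinking-effort trick works by biasing the logit of the `</think>` token at every decoding step. ASL_Dev's exact formula is not given in the thread summary, so the sketch below is a hypothetical version: with effort `e`, it adds `-ln(e)` to that token's logit, which divides the token's unnormalized probability weight by `e`. Effort 1.0 leaves decoding unchanged, and values above 1 make the model less eager to stop thinking. The token id is a placeholder; look up the real one in your model's tokenizer.

```python
import math


def apply_thinking_effort(logits, end_think_id, effort):
    """Return a copy of `logits` with the end-of-thinking token down-weighted.

    Hypothetical scheme: adding -ln(effort) to one logit divides that token's
    exp(logit) by `effort`, so effort > 1 delays closing the reasoning block
    and effort < 1 hastens it.
    """
    if effort <= 0:
        raise ValueError("effort must be positive")
    adjusted = list(logits)
    adjusted[end_think_id] -= math.log(effort)
    return adjusted


def prob_of(logits, token_id):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    return exps[token_id] / sum(exps)


END_THINK_ID = 2  # hypothetical id for "</think>"
logits = [1.0, 0.5, 1.5]
for effort in (1.0, 2.5):
    adjusted = apply_thinking_effort(logits, END_THINK_ID, effort)
    print(effort, round(prob_of(adjusted, END_THINK_ID), 3))
```

In an inference engine this would run as a logits processor on each step while the model is still inside its reasoning block.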

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. DeepSeek and ChatGPT Censorship: Observations and Backlash

- DeepSeek forgot who owns it for a second (Score: 6626, Comments: 100): The post discusses DeepSeek, highlighting a momentary lapse in the AI's recognition of its ownership, which raises concerns about potential AI censorship. The lack of detailed context or analysis in the post leaves the specific implications of this incident open to interpretation.

- Censorship Concerns: Users express frustration with AI systems generating full responses only to retract them, suggesting a mechanism that checks content post-generation for "forbidden subjects." This approach is seen as less elegant compared to systems like ChatGPT, where guardrails are integrated into the AI's logic, highlighting transparency issues in AI censorship.

- China Censorship: There is speculation that the censorship mechanism might be a form of "malicious compliance" against China's censorship policies, with some users suggesting that the system's poor implementation is intentional to highlight censorship issues.

- Technical Suggestions: Users propose that AI systems should generate full responses, run them through filters, and then display them to avoid the current practice of streaming answers that might be revoked, which is seen as inefficient and user-unfriendly.

- DeepSeek Forgot Its Own Owner......... (Score: 234, Comments: 11): The title of the post, "DeepSeek Forgot Its Own Owner", suggests confusion or controversy surrounding the ownership of DeepSeek, potentially reflecting broader issues related to censorship. Without additional context or video analysis, further details are unavailable.

- Social Media Commentary: Users express dissatisfaction with Reddit as a platform, with one comment sarcastically noting it as "one of the social media platforms of all time," while another highlights the preservation of its social aspect before media.

- Censorship and Satire: Comments allude to censorship issues, with a satirical remark referencing Xi Jinping and a humorous take on a "social credit ad" at the end of the video, indicating a critique of censorship or control mechanisms.

- Technical Observation: A user points out a technical detail about DeepSeek, observing a brief slowdown when only three letters are left, humorously attributing it to "facepalming the red button."
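The "generate, filter, then display" suggestion from the DeepSeek thread amounts to buffering the stream before showing it. A minimal sketch, where `is_allowed` is a hypothetical stand-in for whatever moderation check a provider actually runs; the trade-off is latency, since the user sees nothing until generation has finished.

```python
from typing import Callable, Iterable, Iterator


def buffered_then_filtered(tokens: Iterable[str],
                           is_allowed: Callable[[str], bool],
                           refusal: str = "Sorry, I can't help with that.") -> Iterator[str]:
    """Collect the full response, check it once, then release it to the client."""
    full_text = "".join(tokens)  # nothing is shown while the model generates
    if is_allowed(full_text):
        yield full_text
    else:
        yield refusal


def is_allowed(text: str) -> bool:
    # Hypothetical placeholder for a real moderation classifier.
    return "forbidden topic" not in text.lower()


stream = iter(["The answer ", "is 42."])
print(list(buffered_then_filtered(stream, is_allowed)))  # ['The answer is 42.']
```

Streaming first and retracting later, as users observed, trades this latency for a faster first token at the cost of visibly revoked answers.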

Theme 2. Claude Sonnet 3.7: A Standout in Coding Conversion Tasks

- Claude Sonnet 3.7 Is Insane at Coding! (Score: 324, Comments: 126): Claude Sonnet 3.7 excels at converting complex JavaScript applications to Vue 3, as demonstrated by its ability to restructure a 4,269-line app with 2,000 lines of JavaScript into a Vue 3 app in a single session. It effectively maintained the app's features, user experience, and component dependencies, implementing a proper component structure, Pinia stores, Vue Router, and drag-and-drop functionality, showcasing significant improvements over Claude 3.5.

- Discussions highlight Claude 3.7's ability to replace traditional BI tools and analysts, with one user sharing how it transformed Mixpanel CSV data into a comprehensive dashboard in minutes, saving significant costs associated with BI tools and analysts.

- Users share mixed experiences with Claude 3.7, with some praising its ability to create complex applications without bugs, while others criticize its tendency to overachieve and hallucinate features, reflecting a broader debate on AI's effectiveness in coding.

- There is a humorous observation about the community's polarized views on Claude 3.7, with some users finding it either revolutionary or problematic, illustrating the diverse and sometimes contradictory nature of AI tool evaluations.

Theme 3. Open-Source Text-to-Video Innovations: New Viral Demos

- I Just Open-Sourced 8 More Viral Effects! (request more in the comments!) (Score: 565, Comments: 41): Eight viral AI text-to-video effects have been open-sourced, inviting the community to request additional effects in the comments.

- Open-source and Accessibility: The effects are open-sourced, allowing anyone with a capable computer to run them for free, or alternatively, rent a GPU on platforms like Runpod for approximately $0.70 per hour. Generative-Explorer provides a detailed explanation on how to set up and use the Wan 2.1 model with ComfyUI and LoRA nodes, along with a tutorial link for beginners.

- Effect Details and Community Engagement: The post author, najsonepls, highlights the viral success of the effects trained on the Wan2.1 14B I2V 480p model, listing effects such as Squish, Crush, Cakeify, Inflate, Deflate, 360 Degree Microwave Rotation, Gun Shooting, and Muscle Show-off. The community discusses potential new effects like aging and expresses interest in the open-source nature, which enables further customization and innovation.

- Concerns and Industry Impact: Users speculate whether big companies might restrict similar effects behind paywalls, but Generative-Explorer argues that open-source alternatives can be developed quickly by training a LoRA using a few videos. The discussion also touches on the impact of effects like Inflation and Deflation on niche content areas, such as the NSFW Tumblr scene.

Theme 4. Spain's AI Content Labeling Mandate: Legal and Societal Implications

- Spain to impose massive fines for not labelling AI-generated content (Score: 212, Comments: 22): Spain is introducing a mandate requiring labels on AI-generated content, with non-compliance resulting in massive fines. This regulation aims to enhance transparency and accountability in the use of AI technologies.

- Detection Challenges: Concerns are raised about the effectiveness of AI detection methods, with references to existing issues in schools where AI detection software generates false positives. Questions arise about Spain's approach to accurately identifying AI-generated content without unfairly penalizing individuals.

- Skepticism and Critique: There is skepticism about the focus on AI-generated content labeling, with suggestions to prioritize addressing corruption and preferential treatment in legislative processes instead of minor issues like traffic tickets and school projects.

- Regulatory Impact: Some users suggest that the regulation might lead to a reduction in AI use in Spain, either as a positive step for peace of mind or as a negative consequence that might discourage AI application in the country.

Theme 5. Symbolism of the ✨ Emoji: Emergence as an AI Icon

- When did the stars ✨ become the symbol for AI generated? Where did it come from? (Score: 200, Comments: 42): The post inquires about the origin and popularization of the ✨ emoji as a symbol for AI-generated content, noting its widespread presence in news articles, social media, and applications like Notepad. The accompanying image uses star and dot graphics to evoke the emoji's association with sparkle or magic, but lacks any textual explanation.

- Jasper was one of the earliest adopters of the ✨ emoji to signify AI-generated content in early 2021, preceding major companies like Google, Microsoft, and Adobe, which began using it between 2022-2023. By mid-2023, design communities had started debating its status as an unofficial standard for AI, and by late 2023, it was recognized in mainstream media as a universal AI symbol.

- The ✨ emoji is linked to the concept of magic and auto-correction, reminiscent of the magic wand icon used by Adobe over 30 years ago. This association with magic and automatic improvement has contributed to its widespread adoption as a symbol for AI-generated content.

- Several resources explore the emoji's history and adoption, including a Wikipedia entry, a YouTube video, and an article by David Imel on Substack, offering insights into its evolution as an AI icon.

AI Discord Recap

A summary of Summaries of Summaries by o1-preview-2024-09-12

Theme 1: Google's New Multimodal Marvels Take the AI Stage

- Gemma 3 Steals the Spotlight with Multilingual Mastery: Google releases Gemma 3, a multimodal model ranging from 1B to 27B parameters with a 128K context window, supporting over 140 languages. Communities buzz about its potential to run on a single GPU or TPU.

- Gemini 2.0 Flash Paints Pictures with Words: Gemini 2.0 Flash now supports native image generation, letting users create contextually relevant images directly within the model. Developers can experiment via Google AI Studio.

- Gemini Robotics Brings AI to Life—Literally!: Google showcases Gemini Robotics in a YouTube video, demonstrating advanced vision-language-action models that enable robots to interact with the physical world.

Theme 2: New AI Models Challenge the Big Guys

- OlympicCoder Leaps Over Claude 3.7 in Coding Hurdles: The compact 7B parameter OlympicCoder model surpasses Claude 3.7 in olympiad-level coding challenges, proving that size isn't everything in AI performance.

- Reka Flash 3 Speeds Ahead in Chat and Code: Reka releases Flash 3, a 21B parameter model excelling in chat, coding, and function calling, featuring a 32K context length and available for free.

- Swallow 70B Swallows the Competition in Japanese: Llama 3.1 Swallow 70B, a superfast Japanese-capable model, joins OpenRouter, expanding language capabilities and offering lightning-fast responses.

Theme 3: AI Tools Can't Catch a Break

- Codeium Coughs Up Protocol Errors, Developers Gasp: Users report protocol errors like "invalid_argument: protocol error: incomplete envelope" in Codeium's VSCode extension, leaving code completion in the lurch.

- Cursor Crawls After Update, Users Sprint Back to Previous Version: After updating to version 0.46.11, Cursor IDE becomes sluggish, prompting users to recommend downloading version 0.47.1 to restore performance.

- Apple ID Login Goes Rotten on Perplexity's Windows App: Perplexity AI users encounter a 500 Internal Server Error when logging in with Apple ID, while those using Google accounts sail smoothly.

Theme 4: Innovation Sparks in AI Tool Integration

- OpenAI Agents SDK Hooks Up with MCP: The OpenAI Agents SDK now supports the Model Context Protocol (MCP), letting agents seamlessly aggregate tools from MCP servers for more powerful AI interactions.

- Glama AI Spills the Beans on All Tools Available: Glama AI's new API lists all available tools per server, exciting users with an open catalog of AI capabilities.

- LlamaIndex Leaps into MCP Integration: LlamaIndex integrates with the Model Context Protocol, enhancing its abilities by tapping into tools exposed by any MCP-compatible service.

Theme 5: Debates Heat Up Over LLM Behaviors

- "LLMs Can't Hallucinate!" Skeptics Exclaim: Heated debates erupt over whether LLMs can "hallucinate," with some arguing that since they don't think, they can't hallucinate—sparking philosophical showdowns in AI communities.

- LLMs Get a Face-lift with Facial Memory Systems: An open-source LLM Facial Memory System lets LLMs store memories and chats based on users' faces, adding a new layer of personalized interaction.

- ChatGPT's Ethical Reminders Irk Users Seeking Unfiltered Replies: Users express annoyance at ChatGPT's frequent ethical guidelines popping up in responses, wishing for an option to "turn off the AI nanny" and streamline their workflow.

PART 1: High level Discord summaries

Cursor IDE Discord

- Claude 3.7 Suffers Overload: Users reported high load issues with Claude 3.7, encountering errors and sluggishness during peak times, suggesting coding at night to avoid the issues and sharing a link to a Cursor forum thread on the topic.

- The 'diff algorithm stopped early' error was a frequently reported issue.

- Cursor Slows Down with Version 0.46: Users observed Cursor becoming very sluggish on both Macbook and PC after updating to version 0.46.11 and recommended downloading version 0.47.1.

- The performance degradation occurred even with low CPU utilization, while issues with pattern-matching for project rules were fixed in a later version.

- Manus AI Generates Sales Leads: Members discussed using Manus AI for lead generation and building SaaS landing pages, highlighting its ability to retrieve numbers, leading to a reported 30 high-quality leads after spending $600.

- OpenManus Attempts Replication of Functionality: Users shared that OpenManus, an open-source project trying to replicate Manus AI, is showing promise and provided a link to the GitHub repository and a YouTube video showcasing its capabilities.

- Some members believe it's not yet on par with Manus.

- Cline's Code Completion Costs Criticized: Members debated the value of Cline due to its high cost relative to Cursor.

- While Cline offers a 'full context window,' some users argue that Cursor's caching system allows for expanding context in individual chats and provides features like web search and documentation.

LM Studio Discord

- LM Studio Gets Schooled by Gemma 3: LM Studio 0.3.13 now supports Google's Gemma 3 family, but users reported that Gemma 3 models are performing significantly slower, up to 10x slowdown, compared to similar models.

- The team is also working on ironing out issues such as users struggling to disable RAG completely and problems with the Linux installer.

- Raging About RAG Removal: Users seek to turn off RAG completely in LM Studio to inject the full attachment into the context, but there's currently no UI option to disable it.

- As a workaround, users copy and paste documents manually, facing the hassle of converting PDFs to Markdown.
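
A rough sketch of the difference between injecting the whole attachment and a RAG-style fallback. This is not LM Studio's logic. The following are all simplifying assumptions: the 4-characters-per-token estimate, the 256-token reserve, and the keyword-overlap ranking (real RAG typically ranks with embeddings). build_prompt is a hypothetical name.

```python
import re
from typing import Set

def approx_tokens(text: str) -> int:
    # Assumption: roughly 4 characters per token for English text.
    return max(1, len(text) // 4)

def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def build_prompt(question: str, document: str, context_tokens: int,
                 chunk_chars: int = 400, top_k: int = 3) -> str:
    """Hypothetical helper: inject the whole document if it fits, else fall back to retrieval."""
    reserve = approx_tokens(question) + 256  # assumed room for the question and the answer
    if approx_tokens(document) + reserve <= context_tokens:
        context = document  # full injection, the behaviour users asked for
    else:
        # RAG-style fallback: keep only the chunks sharing the most words with the question.
        chunks = [document[i:i + chunk_chars] for i in range(0, len(document), chunk_chars)]
        q_words = _words(question)
        ranked = sorted(chunks, key=lambda c: len(q_words & _words(c)), reverse=True)
        context = "\n...\n".join(ranked[:top_k])
    return f"Document:\n{context}\n\nQuestion: {question}"

doc = "lorem ipsum " * 200 + "Gemma has a 128k context window. "
question = "How large is the Gemma context window?"
full_prompt = build_prompt(question, doc, context_tokens=8192)
short_prompt = build_prompt(question, doc, context_tokens=300)
```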

- AMD GPU Owner's Hot Spot Headache: Users are reporting 110°C hotspot temperatures on their 7900 XTX, sparking RMA eligibility discussions and concerns that AIBs cheaped out on thermals, with one report that AMD declines such RMA requests.

- It was pointed out that the underlying issue might be a bad batch of vapour chambers with not enough water inside, and that PowerColor has accepted RMA requests.

- Mining Card Revival as Inference Workhorse: Members are discussing reviving the CMP-40HX mining card with 288 tensor cores for AI inference.

- Interest is being undermined by the need to patch Nvidia drivers to enable 3D acceleration support.

- PTM7950 Pumps Up: Members pondered using PTM7950 (a phase-change material) instead of thermal paste to prevent pump-out issues and maintain stable temperatures.

- After the first heat cycle, excess material pumps out and forms a thick, very viscous layer around the die, which prevents further pump-out.

Nous Research AI Discord

- Nous Research Launches Inference API: Nous Research released its Inference API featuring Hermes 3 Llama 70B and DeepHermes 3 8B Preview, offering $5.00 of free credits for new accounts.

- A waitlist system has been implemented at the Nous Portal, granting access on a first-come, first-served basis.

- LLMs Now Recognize Faces, Remember Chats: A member open-sourced an LLM Facial Memory System that lets LLMs store memories and chats based on your face.

- Members discussed the inference API, including the possibility of pre-loading credits due to concerns about API key security.

- Crafting Graph Reasoning System with Open Source Code: Members discussed how there is enough public information to build a graph reasoning system with open-source code, though perhaps not one as good as Forge; the new API also provides 50€ worth of inference credit.

- Kuzu was praised as amazing, and networkx + Python was recommended for working with graph databases.

- Audio-Flamingo-2 Flunks Key Detection: A user tested Nvidia's Audio-Flamingo-2 on HuggingFace to detect song keys and tempos, but had mixed results, even missing the key on simple pop songs.

- For example, when asked to identify the key of the song Royals by Lorde, Audio-Flamingo-2 incorrectly guessed F# Minor, with a tempo of 150 BPM, to the community's laughter.
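
For contrast with a learned model, consider the classic non-neural approach to key detection: the Krumhansl-Schmuckler algorithm. It correlates a song's pitch-class profile (how much each of the 12 pitch classes is used) against rotated major and minor key profiles and picks the best match. The sketch assumes you already have the 12-bin chroma vector; extracting it from audio is out of scope. It is not how Audio-Flamingo-2 works.

```python
import math
from typing import List

# Krumhansl-Kessler key profiles, indexed from the tonic (0 = tonic, 7 = fifth, ...).
MAJOR = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]
NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def _pearson(a: List[float], b: List[float]) -> float:
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)  # undefined for a perfectly flat chroma vector

def estimate_key(chroma: List[float]) -> str:
    """chroma[pc] = how much pitch class pc (0 = C ... 11 = B) is used in the song."""
    best_r, best_key = -2.0, ""
    for tonic in range(12):
        for mode, profile in (("major", MAJOR), ("minor", MINOR)):
            # Rotate the profile so its tonic weight lands on this pitch class.
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            r = _pearson(chroma, rotated)
            if r > best_r:
                best_r, best_key = r, f"{NAMES[tonic]} {mode}"
    return best_key

# Toy chroma: a C major scale weighted towards the tonic triad.
c_scale = [5, 0, 2, 0, 3, 2, 0, 4, 0, 2, 0, 2]
```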

Unsloth AI (Daniel Han) Discord

- Gemma 3 Gets GGUF Goodness: All GGUF, 4-bit, and 16-bit versions of Gemma 3 have been uploaded to Hugging Face.

- These quantized versions are designed to run in programs that use llama.cpp such as LM Studio and GPT4All.
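
Some back-of-the-envelope arithmetic shows why the quantized uploads matter for running Gemma 3 locally. This counts weights only. Real GGUF files run somewhat larger, because quantization formats store scale factors and keep some tensors at higher precision. The KV cache for long contexts also comes on top.

```python
GEMMA3_SIZES_B = [1, 4, 12, 27]  # Gemma 3 parameter counts, in billions

def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    # params * bits / 8 bits-per-byte; billions of bytes ~ decimal GB (weights only)
    return params_billion * bits_per_weight / 8

for size in GEMMA3_SIZES_B:
    print(f"Gemma 3 {size}B: ~{weight_memory_gb(size, 16):.1f} GB at 16-bit, "
          f"~{weight_memory_gb(size, 4):.1f} GB at 4-bit (weights only)")
```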

- Transformers Tangle Thwarts Tuning: A breaking bug in Transformers is preventing the fine-tuning of Gemma 3, with HF actively working on a fix, according to a blog post update on Unsloth AI.

- Users are advised to wait for the official Unsloth notebooks to ensure compatibility once the bug is resolved.

- GRPO Generalizes Great!: The discussion covered the nuances of RLHF methods such as PPO, DPO, GRPO, and RLOO, with a member noting that GRPO generalizes better and provides a direct replacement for PPO.

- RLOO (REINFORCE Leave-One-Out), advocated for RLHF by Cohere, is a simpler alternative to PPO: each sampled response's advantage is its reward minus the mean reward of the other responses generated for the same prompt.
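
For reference, the textbook versions of the two group-based methods mainly differ in the baseline each sampled response is compared against. RLOO uses the mean of the other responses; GRPO normalizes by the group mean and standard deviation. This is a minimal sketch that assumes k responses per prompt and scalar rewards. GRPO implementations vary (some use the sample rather than population standard deviation), so treat the details as illustrative.

```python
import statistics
from typing import List

def rloo_advantages(rewards: List[float]) -> List[float]:
    """Leave-one-out baseline: mean reward of the other k-1 samples (needs k >= 2)."""
    k, total = len(rewards), sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]

def grpo_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """GRPO-style: normalize each reward by the group's mean and (population) std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

rewards = [1.0, 0.0, 0.0, 1.0]  # e.g. pass/fail scores for 4 answers to one prompt
print(rloo_advantages(rewards))
print(grpo_advantages(rewards))
```

The RLOO advantage works out to k/(k-1) times (reward minus group mean), so within a single group the two differ only by a positive scale factor. The practical difference shows up across prompts, where GRPO's standard-deviation normalization rescales each group.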

- HackXelerator Hits London, Paris, Berlin: A member announced a London, Paris, Berlin multimodal creative AI HackXelerator supported by Mistral, HF, and others.

- The event, also supported by AMD, will focus on music, art, film, fashion, and gaming, starting April 5, 2025 (lu.ma/w3mv1c6o).

- Tuning Temperature for Ollama: Many people are experiencing issues at a temperature of 1.0 and suggest running at 0.1 in Ollama.

- Testing is encouraged to see if it works better in llama.cpp and other programs.
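
Here is why dropping the temperature helps. Sampling divides the logits by the temperature before the softmax, so a low value concentrates almost all probability on the top token. Below is a minimal sketch with made-up logits. In Ollama itself the value is set through the temperature option, for example PARAMETER temperature 0.1 in a Modelfile.

```python
import math
from typing import List

def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
p_hot = softmax_with_temperature(logits, 1.0)   # top token gets about 0.67
p_cold = softmax_with_temperature(logits, 0.1)  # top token gets about 0.99995
```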

Perplexity AI Discord

- ANUS AI Agent Creates Buzz: The GitHub repo nikmcfly/ANUS sparked humorous discussions due to its unfortunate name, with one member jokingly suggesting TWAT (Think, Wait, Act, Talk pipeline) as an alternative acronym.

- Another member proposed Prostate as a government AI agent name, furthering the comical exchange.

- Apple ID Login Triggers Server Error: Users reported experiencing a 500 Internal Server Error when attempting Apple ID login for Perplexity’s new Windows app.

- This issue appears specific to Apple ID, as Google login was functioning correctly for some users.

- Model Selector Appears and Disappears: In the new web update, the model selector initially disappeared, causing user frustration due to the inability to select specific models like R1.

- The model selector reappeared later, with users suggesting setting the mode to "pro" or using the "complexity extension" to resolve selection issues.

- Perplexity Botches Code Cleanup: A user shared their 6-hour ordeal, detailing how Perplexity failed to properly clean up an 875-line code file, resulting in broken code chunks and links.

- Despite struggling with message length limitations, Perplexity ultimately returned the original, unmodified code.

- MCP Server Connector Launched: The API team announced the release of their Model Context Protocol (MCP) server, encouraging community feedback and contributions via GitHub.

- The MCP server acts as a connector for the Perplexity API, enabling web search directly within the MCP ecosystem.

aider (Paul Gauthier) Discord

- Gemma 3 Hits the Scene!: Google has launched Gemma 3, a multimodal model ranging from 1B to 27B parameters, boasting a 128K context window and support for 140+ languages, as per Google's blog.

- The models are designed to be lightweight and efficient, aiming for optimal performance on single GPUs or TPUs.

- OlympicCoder Smashes Coding Tasks!: The OlympicCoder model, a compact 7B parameter model, surpasses Claude 3.7 in olympiad-level coding challenges, according to a tweet and Unsloth.ai's blogpost.

- This feat underscores the potential for highly efficient models in specialized coding domains.

- Fast Apply Model Quickens Edits!: Inspired by a deleted Cursor blog post, the Fast Apply model is a Qwen2.5 Coder model fine-tuned for rapid code updates, as discussed on Reddit.

- The model addresses the need for faster application of search/replace blocks in tools like Aider, enhancing code editing workflows.
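
To show what "applying a search/replace block" involves, here is a minimal exact-match sketch for Aider-style SEARCH/REPLACE blocks. It is not Aider's implementation or the Fast Apply model. apply_edit_blocks is a hypothetical helper, and a real tool needs fuzzier matching when the model's SEARCH text drifts from the file.

```python
import re

# One SEARCH/REPLACE block; each captured section keeps its trailing newline.
BLOCK_RE = re.compile(r"<<<<<<< SEARCH\n(.*?)=======\n(.*?)>>>>>>> REPLACE", re.DOTALL)

def apply_edit_blocks(source: str, edit_text: str) -> str:
    """Hypothetical helper: apply each block by exact string match, in order."""
    for search, replace in BLOCK_RE.findall(edit_text):
        if search not in source:
            # A real tool would need fuzzier matching or a retry here.
            raise ValueError(f"SEARCH text not found: {search!r}")
        source = source.replace(search, replace, 1)  # first exact match only
    return source

source = "def greet():\n    return 'hi'\n"
edit = "<<<<<<< SEARCH\n    return 'hi'\n=======\n    return 'hello'\n>>>>>>> REPLACE\n"
patched = apply_edit_blocks(source, edit)
```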

- Aider's Repo Map Gets Dropped!: Users are opting to disable Aider's repo map and add files manually for better control over context, in line with Aider's official usage tips, which recommend explicitly adding the relevant files as the most efficient method.

- The aim is to prevent the LLM from being distracted by excessive irrelevant code.

- LLMs Supercharge Learning: Members shared that LLMs greatly accelerate learning languages like Python and Go, citing a productivity boost that allows them to undertake projects previously deemed unjustifiable.

- One member noted it's not about faster work, but enabling projects that wouldn't have been possible otherwise, characterizing AI as a Cambrian explosion level event.

OpenAI Discord

- Perplexity Defeats OpenAI for Deep Research: A member ranked Perplexity as superior to OpenAI and SuperGrok for in-depth research, especially when dealing with uploaded documents and internet searches, acknowledging the budget concerns of users.

- The user sought advice on choosing between ChatGPT, Perplexity, and Grok, which led to the recommendation of Perplexity for its research capabilities.

- Ollama Orchestrates Optimal Model Deployment: When asked about the best language for deploying AI transformer models, a member suggested using Ollama as a service, particularly if faster inference speed/performance is desired.

- The user had been prototyping with Python and was exploring whether C# could offer better performance, leading to the Ollama recommendation.
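
Part of the appeal of running Ollama as a service is that it exposes an HTTP API on localhost. The client language (Python, C#, or anything else) therefore matters much less than the inference engine. Below is a minimal sketch using only the Python standard library. It assumes Ollama is running on its default port 11434 and that the qwen2.5:14b model has already been pulled; generate is a hypothetical helper name.

```python
import json
import urllib.request

def build_ollama_request(prompt: str, model: str = "qwen2.5:14b",
                         host: str = "http://localhost:11434") -> urllib.request.Request:
    """Build (but do not send) a non-streaming request to Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(f"{host}/api/generate", data=body,
                                  headers={"Content-Type": "application/json"},
                                  method="POST")

def generate(prompt: str, **kwargs) -> str:
    """Hypothetical helper: send the request and return the generated text."""
    req = build_ollama_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]

# print(generate("Why is the sky blue?"))  # needs a running Ollama server
```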

- LLMs Can't Hallucinate, Say Skeptics: A member contended that the term hallucination is misapplied to LLMs because LLMs do not possess the capacity to think and are simply generating word sequences based on probability.

- A second member added that sometimes it switches models by mistake, and pointed to an attached image.

- Gemini's Image Generation Impresses: Members raved about Google's release of Gemini's native image capabilities, highlighting its free availability and its ability to see the images it generated for better regeneration.

- This feature, which allows for improved image regeneration with text, was showcased in the Gemini Robotics announcement.

- Ethical ChatGPT Ruffles Feathers: Users expressed annoyance with ChatGPT's frequent ethical reminders, which they perceive as unnecessary, unwanted, and disruptive to their workflow.

- One user remarked that they wish there was an option to disable these ethical guidelines.

HuggingFace Discord

- HF Course Explains Vision Language Models: The Hugging Face Computer Vision Course includes a section introducing Vision Language Models (VLMs), covering multimodal learning strategies, common datasets, downstream tasks, and evaluation.

- The course highlights how VLMs unify insights from diverse sensory inputs, enabling AI to understand and interact with the world more comprehensively.

- TensorFlow Tweaks Touted for Top Tier Throughput: A member shared a blog post about GPU configuration with TensorFlow, covering experimental functions, logical devices, and physical devices, using TensorFlow 2.16.1.

- The member explored techniques and methods for GPU configuration, drawing from experiences using an NVIDIA GeForce RTX 3050 Laptop GPU to process a 2.8 million image dataset, leveraging the TensorFlow API Python Config to improve execution speed.

- Modal Modules Model Made Available: A member shared a YouTube tutorial on deploying the Wan2.1 Image to Video model for free on Modal, covering a seamless Modal installation and Python scripting.

- Instructions were also given on how to use a GGUF-format finetune of Gemma 2b with a Modelfile.

- Local Models Liberate Language Learning: A user shared a code snippet for using local models with smolagents via LiteLLM and Ollama: pip install smolagents[litellm], then create the model with localModel = LiteLLMModel(model_id="ollama_chat/qwen2.5:14b", api_key="ollama").

- Users report that the default HfApiModel triggers payment-required errors after only a few calls to the Qwen inference API, but specifying local models circumvents the limitation.

- Agent Architecture Annoyances Await Arrival: Users are eagerly awaiting the release of Unit 2.3, which covers LangGraph, originally scheduled for release on March 11th.

- A user noted that overwriting the agent_name variable with a call result makes the agent uncallable, prompting discussion of prevention strategies.
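
The agent_name pitfall is ordinary Python name rebinding. Here is a minimal sketch in which make_agent is a hypothetical stand-in for however an agent is actually constructed; it is not the course's or smolagents' API.

```python
def make_agent(name: str):
    """Hypothetical stand-in for an agent object: calling it runs a task."""
    def agent(task: str) -> str:
        return f"{name} finished: {task}"
    return agent

# Pitfall: reusing the agent's variable for its own result.
agent_name = make_agent("search_agent")
agent_name = agent_name("find the LangGraph docs")  # now a str, no longer callable

# Safer: keep the agent and its result under separate names.
search_agent = make_agent("search_agent")
result = search_agent("find the LangGraph docs")
```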

OpenRouter (Alex Atallah) Discord

- Gemma 3 Introduces Multimodal Capabilities: Google launches Gemma 3 on OpenRouter, a multimodal model supporting vision-language input and text output, with a context window of 128k tokens and understanding over 140 languages.

- Reka Flash 3 Excels in Chat and Coding: Reka releases Flash 3, a 21 billion parameter language model that excels in general chat, coding, and function calling, featuring a 32K context length optimized via reinforcement learning (RLOO).

- The model's weights are released under the Apache 2.0 license; it is available for free and is primarily an English model.

- Swallow 70B Adds Japanese Fluency: A new superfast Japanese-capable model named Llama 3.1 Swallow 70B joins OpenRouter, expanding the platform's language capabilities.

- This complements the launch of Reka Flash 3 and Google Gemma 3, enhancing the variety of language processing tools available on OpenRouter.

- Gemini 2 Flash Generates Images Natively: Google's Gemini 2.0 Flash now supports native image output for developer experimentation across all regions supported by Google AI Studio, accessible via the Gemini API and an experimental version (gemini-2.0-flash-exp).

- This allows the creation of images from both text and image inputs, maintaining character consistency and enhancing storytelling capabilities, as announced in a Google Developers Blog post.

- OpenRouter's Chutes Provider Stays Free: The Chutes provider remains free for OpenRouter users while it prepares its services and scales up, as it does not yet have a fully implemented payment system.

- Although data isn't explicitly used for training, OpenRouter cannot guarantee that compute hosts won't use it, given Chutes' decentralized nature.

Eleuther Discord

- Distill Community Kicks Off Monthly Meetups: The Distill community is launching monthly meetups, with the next one scheduled for March 14 from 11:30am-1pm ET, after a successful turnout.

- Details can be found in the Exploring Explainables Reading Group doc.

- TTT Supercharges Model Priming: Members discussed how TTT accelerates the process of priming a model for a given prompt, shifting the model's state to be more receptive, by performing a single gradient descent pass.

- By aiming to learn and execute multiple gradient descent passes per token, the model optimizes how it compresses sequences into useful representations, which enhances ICL and CoT capabilities.
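
To make the "gradient descent pass" concrete, here is a sketch in the spirit of TTT layers. It assumes the hidden state is a small weight matrix W, trained at test time on a reconstruction loss 0.5 * ||W k - v||^2 for each token's key k and value v. The names and the plain-Python matrices are illustrative only. Running several inner steps per token just means calling ttt_step repeatedly.

```python
from typing import List

Matrix = List[List[float]]

def matvec(W: Matrix, x: List[float]) -> List[float]:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def ttt_loss(W: Matrix, k: List[float], v: List[float]) -> float:
    """Inner self-supervised loss 0.5 * ||W k - v||^2 (assumed reconstruction objective)."""
    return 0.5 * sum((p - t) ** 2 for p, t in zip(matvec(W, k), v))

def ttt_step(W: Matrix, k: List[float], v: List[float], lr: float) -> Matrix:
    """One gradient descent pass on the inner loss; dL/dW = (W k - v) k^T."""
    err = [p - t for p, t in zip(matvec(W, k), v)]
    return [[w - lr * e * kj for w, kj in zip(row, k)] for row, e in zip(W, err)]

W0 = [[0.0, 0.0], [0.0, 0.0]]
k, v = [1.0, 0.0], [1.0, 2.0]
W1 = ttt_step(W0, k, v, lr=0.5)  # the state after "reading" one token
```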

- Decoder-Only Architectures Embrace Dynamic Computation: A minor proposal suggests using the decoder side for dynamic computation, extending sequence length for internal thinking via a TTT-like layer by reintroducing a concept from encoder-decoders to decoder-only architectures.

- One challenge is determining how many extra sampling steps to take, but measuring the delta of the TTT update loss and stopping when it falls below a median value could help.
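
One way to read the stopping proposal: keep taking extra steps while each step still reduces the TTT loss by at least the median improvement. The discussion does not say what the median is taken over. Here it is assumed to be a list of deltas observed earlier (calibration_deltas), and the function name is hypothetical.

```python
import statistics
from typing import List

def extra_steps_worth_taking(losses: List[float], calibration_deltas: List[float]) -> int:
    """Count extra steps taken before the per-step loss drop falls below the median threshold."""
    threshold = statistics.median(calibration_deltas)
    steps = 0
    for prev, cur in zip(losses, losses[1:]):
        if prev - cur < threshold:
            break  # this step barely helped: stop spending compute here
        steps += 1
    return steps

# Loss after each extra step for one token, plus deltas seen on earlier tokens (assumed).
n_steps = extra_steps_worth_taking([1.0, 0.6, 0.4, 0.35, 0.34], [0.1, 0.2, 0.05])
```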

- AIME24 Implementation Emerges, Testing Still Needed: An implementation of AIME24 appeared in the lm-evaluation-harness, based on the MATH implementation.

- The submitter admits they haven't tested it yet, given the lack of documentation on what exactly people run when they report AIME24 results.
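
AIME answers are integers from 0 to 999, so scoring mostly comes down to answer extraction. A minimal sketch, assuming completions follow the MATH-style convention of putting the final answer in \boxed{...}. This is a hypothetical illustration, not the lm-evaluation-harness code.

```python
import re
from typing import Optional

def last_boxed(text: str) -> Optional[str]:
    """Contents of the last \\boxed{...} in text, tracking nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    depth, out = 1, []
    for ch in text[start + len("\\boxed{"):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(out)
        out.append(ch)
    return None  # braces never closed

def aime_correct(completion: str, target: int) -> bool:
    """Hypothetical scorer: AIME answers are integers 0-999, so require a bare integer."""
    boxed = last_boxed(completion)
    if boxed is None:
        return False
    match = re.fullmatch(r"\s*(\d{1,3})\s*", boxed)
    return match is not None and int(match.group(1)) == target
```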

GPU MODE Discord

- Funnel Shift's H100 Performance: Engineers were surprised to discover that a funnel shift seems faster than equivalent operations on H100, potentially due to using a less congested pipe.

- Despite trying prmt instructions, consistently using the predicated funnel shift performs better, resulting in 4 shf.r.u32, 3 shf.r.w.u32, and 7 lop3.lut SASS instructions.
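
A quick primer on the instruction: a funnel shift concatenates two 32-bit words into a 64-bit value, shifts it, and keeps 32 bits, which makes it a natural fit for rotates. The Python emulation below covers the right-shift variant. It follows the documented semantics of CUDA's __funnelshift_r (shift amount masked to 5 bits) and __funnelshift_rc (shift clamped to 32). PTX's shf instruction exposes the same choice as its .wrap and .clamp modes. This only illustrates the semantics, not the performance result.

```python
MASK32 = 0xFFFFFFFF

def funnel_shift_right(lo: int, hi: int, shift: int, wrap: bool = True) -> int:
    """Shift the 64-bit value hi:lo right and keep the low 32 bits.

    wrap=True masks the shift to shift & 31; wrap=False clamps it to 32.
    """
    n = (shift & 31) if wrap else min(shift, 32)
    combined = ((hi & MASK32) << 32) | (lo & MASK32)
    return (combined >> n) & MASK32

def rotate_right(x: int, n: int) -> int:
    # Passing the same word as both halves turns the funnel shift into a rotate.
    return funnel_shift_right(x, x, n)
```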

- TensorFlow's OpenCL Flame War: A discussion was sparked by an interesting flame war from 2015 regarding OpenCL support in TensorFlow.

- The debate highlights the early prioritization of CUDA and the difficulties encountered while integrating OpenCL support.

- Turing gets FlashAttention: An implementation of the FlashAttention forward pass for the Turing architecture was shared, supporting head_dim = 128, vanilla attention, and seq_len divisible by 128.

- This implementation shows a 2x speedup compared to PyTorch's F.scaled_dot_product_attention when tested on a T4.
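
FlashAttention can process keys in tiles without materializing the full score matrix thanks to the online softmax. It keeps a running max and a running denominator, and rescales the partial output whenever the max grows. Below is a plain-Python sketch for a single query row, checked against the naive computation. It is illustrative only and says nothing about the Turing kernel's actual tiling or the reported speedup.

```python
import math
from typing import List

Vec = List[float]

def _score(q: Vec, k: Vec) -> float:
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))

def naive_attention(q: Vec, keys: List[Vec], values: List[Vec]) -> Vec:
    scores = [_score(q, k) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wj * v[d] for wj, v in zip(w, values)) / z for d in range(len(values[0]))]

def tiled_attention(q: Vec, keys: List[Vec], values: List[Vec], block: int = 2) -> Vec:
    m = -math.inf                 # running max score
    l = 0.0                       # running softmax denominator
    acc = [0.0] * len(values[0])  # running unnormalized output
    for start in range(0, len(keys), block):
        kb, vb = keys[start:start + block], values[start:start + block]
        scores = [_score(q, k) for k in kb]
        m_new = max(m, max(scores))
        rescale = math.exp(m - m_new)  # shrink old sums to the new max (0.0 on the first block)
        p = [math.exp(s - m_new) for s in scores]
        l = l * rescale + sum(p)
        acc = [a * rescale + sum(pj * v[d] for pj, v in zip(p, vb)) for d, a in enumerate(acc)]
        m = m_new
    return [a / l for a in acc]

q = [1.0, 0.5]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.2, 0.3]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
ref = naive_attention(q, keys, values)
```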

- Modal Runners Conquer Vector Addition: Test submissions to the vectoradd leaderboard on T4 GPUs using Modal runners succeeded!

- Submissions with IDs 1946 and 1947 demonstrated the reliability of Modal runners for GPU-accelerated computations.

- H100 memory allocation mishaps: A member asked why modifying the memory allocation in ThunderKittens' h100.cu to directly allocate memory for o_smem results in an "illegal memory access was encountered" error.

- They are looking to understand the cause of this error within the H100 kernel.

Interconnects (Nathan Lambert) Discord

- Gemma 3 claims Second Place: The Gemma-3-27b model secured second place in creative writing, potentially becoming a favorite for creative writing and RP fine tuners, detailed in this tweet.

- Open weight models like Gemma 3 are also driving down margins on API platforms and being increasingly adopted due to privacy/data considerations.

- Gemini 2.0 Flash Brings Image Generation: Gemini 2.0 Flash now features native image generation, optimized for chat iteration, allowing users to create contextually relevant images and generate long text in images, as mentioned in this blog post.

- DeepMind also introduced Gemini Robotics, a Gemini 2.0-based model designed for robotics and aimed at solving complex problems through multimodal reasoning.

- AlphaXiv Creates ArXiv Paper Overviews: AlphaXiv uses Mistral OCR with Claude 3.7 to generate blog-style overviews for arXiv papers, providing figures, key insights, and clear explanations from the paper with one click, according to this tweet.


- ML Models in Copyright Crosshairs: Ongoing court cases are examining whether training a generative machine learning model on copyrighted data constitutes a copyright violation, detailed in Nicholas Carlini's blogpost.

- Deep Learning as Farming?: A member shared a link to a post by Arjun Srivastava titled 'On Deep-Learning and Farming' which explores mapping concepts from one field to another.

- The author contrasts engineering, where components are deliberately assembled, with cultivation, where direct construction is not possible: cultivation is like farming, while engineering is like building a table.

Nomic.ai (GPT4All) Discord

- Bigger Brains, Better Benchmarks: A user inquired about the performance gap between ChatGPT premium and GPT4All's LLMs, with another user attributing it to the larger size of models.

- The discussion recommended downloading bigger models from Hugging Face contingent on adequate hardware.

- Ollama Over GPT4All For Server Solutions?: A user questioned the suitability of GPT4All for a server tasked with managing multiple models, quick loading/unloading, RAG with regularly updating files, and APIs for date/time/weather.

- The user cited issues with Ollama and sought advice on whether GPT4All would be viable given their low-to-medium compute.

- Deepseek Details: 14B is the Way: In a query for a ChatGPT premium equivalent, Deepseek 14B was suggested, contingent on having 64GB RAM.

- The advice was to begin with smaller models like Deepseek 7B or Llama 8B, scaling up based on system performance.

- Context is Key: 4k is OK: The discussion emphasized the importance of large context windows, exceeding 4k tokens, to accommodate more information in prompts, such as documents.

- A user then asked whether the model in a screenshot they posted was one of these, and what its context window was.

- Gemma Generation Gap: GPT4All Glitches: A user suggested testing GPT4All with tiny models to evaluate the workflow for loading, unloading, and RAG (with LocalDocs), noting that the GUI doesn't support multiple models simultaneously.

- They noted that Gemma 3 is currently incompatible with GPT4All and needs a newer version of llama.cpp, and included an image of the error.

MCP (Glama) Discord

- Glama API dumps tool data: A new Glama AI API endpoint now lists all available tools, offering more data per server than Pulse.

- Users expressed excitement about the freely available information.

- MCP Logging details the server's POV: The server sends log messages according to the Model Context Protocol (MCP) specification, specifically declaring a logging capability and emitting log messages with severity levels and JSON-serializable data.

- This allows for structured logging from servers to clients, controlled via MCP.
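
This sketch shows what such a log message looks like on the wire, assuming the MCP logging design. The server advertises a logging capability, then sends notifications/message JSON-RPC notifications carrying a syslog-style level, an optional logger name, and arbitrary JSON data. The min_level check mimics a client adjusting verbosity via logging/setLevel. log_notification is a hypothetical helper.

```python
import json
from typing import Any, Optional

# MCP log severities, lowest to highest (syslog-style).
LEVELS = ["debug", "info", "notice", "warning", "error", "critical", "alert", "emergency"]

SERVER_CAPABILITIES = {"logging": {}}  # advertised during initialization

def log_notification(level: str, data: Any, logger: Optional[str] = None,
                     min_level: str = "info") -> Optional[str]:
    """Build a notifications/message JSON-RPC message, or None if below the client's level."""
    if LEVELS.index(level) < LEVELS.index(min_level):
        return None
    params: dict = {"level": level, "data": data}
    if logger is not None:
        params["logger"] = logger
    # Notifications carry no "id": the client does not reply to them.
    return json.dumps({"jsonrpc": "2.0", "method": "notifications/message", "params": params})

msg = json.loads(log_notification("error", {"error": "Connection failed"}, logger="database"))
```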

- Wolfram to the Rescue to Render Images in Claude: A member pointed to a wolfram server example that takes rendered graphs and returns an image by base64 encoding the data and setting the mime type.

- It was noted that Claude has limitations rendering outside of the tool call window.
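
Here is a sketch of the image-returning pattern described above: base64-encode the rendered bytes and return them as an MCP image content item with a mime type. The bytes are a placeholder and image_tool_result is a hypothetical helper, not the Wolfram server's code.

```python
import base64
import json

def image_tool_result(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Hypothetical helper: wrap raw image bytes as an MCP image content item."""
    return {
        "content": [{
            "type": "image",
            "data": base64.b64encode(image_bytes).decode("ascii"),  # base64 text, not raw bytes
            "mimeType": mime_type,
        }],
        "isError": False,
    }

fake_png = b"\x89PNG\r\n\x1a\n" + b"placeholder bytes"  # not a real rendered graph
result = image_tool_result(fake_png)
print(json.dumps(result)[:80])
```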

- NPM Package location revealed: NPM packages are stored in %LOCALAPPDATA%, specifically under C:\Users\YourUsername\AppData\Local\npm-cache.

- This location contains the NPM packages and their source code.

- OpenAI Agents SDK supports MCP: MCP support has been added to the OpenAI Agents SDK, available as a fork on GitHub and on PyPI as the openai-agents-mcp package, allowing agents to aggregate tools from MCP servers.

- Setting the mcp_servers property enables seamless integration of MCP servers, local tools, and OpenAI-hosted tools through a unified syntax.

Codeium (Windsurf) Discord

- Codeium Extension Suffers Protocol Errors: Users reported protocol errors like "invalid_argument: protocol error: incomplete envelope: read tcp... forcibly closed by the remote host" in the VSCode extension, causing the Codeium footer to turn red.

- This issue particularly affected users in the UK and Norway with providers such as Hyperoptic and Telenor.

- Neovim Support Struggles to Keep Up: A user criticized the state of Neovim support, citing completion errors (error 500) and expressing concern it lags behind Windsurf.

- In response to the criticism, a team member replied that the team is working on it.

- Mixed Results from Test Fix Deployment: The team deployed a test fix, and while some users reported fewer errors, others still faced issues, with the extension either "turning off" or remaining red.

- These mixed results prompted further investigation by the team.

- EU Users Discover VPN Workaround: The team confirmed that users in the EU experienced issues such as "unexpected EOF" during autocomplete and an inability to link files inside chat.

- As a workaround, connecting through a VPN endpoint in Los Angeles resolved the issue for affected users.

Yannick Kilcher Discord

- Gemini Robotics Comes to Life: Google released a YouTube video showcasing Gemini Robotics, its most advanced vision-language-action model, which brings Gemini 2.0 to the physical world.

- The model is designed to give robots enhanced capabilities for interacting with the physical world.

- Gemma 3 Drops with 128k Context Window: Gemma 3 is released with multimodal capabilities and a 128k context window (except for the 1B model), meeting user expectations.

- While the release garnered attention, one user's verdict was that it "twas aight."

- Sakana AI's Paper Passes Peer Review: A paper generated by Sakana AI has passed peer review for an ICLR workshop.

- A user questioned the rigor of the review process, suggesting the workshop might be generous to the authors.

- Maxwell's Demon Constrains AI Speed: A member shared that computers can, in principle, compute with arbitrarily low energy by running both backward and forward (reversibly), and that the real limit then becomes how fast and how certainly you want the answer, referencing this YouTube video.

- They also linked another video about reversing entropy, tying computational limits to fundamental physics.

- Adaptive Meta-Learning Projects Welcomed: A member is seeking toy projects to test Meta-Transform and Adaptive Meta-Learning, starting with small steps using Gymnasium.

- They also linked to a GitHub repo for Adaptive Meta-Learning (AML).
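The reversible-computing point above connects to Landauer's principle, added here for context rather than quoted from the linked videos: irreversibly erasing one bit dissipates at least k_B T ln 2 of heat, so only logically irreversible steps carry an unavoidable energy floor. A short sketch of that arithmetic; the function name is illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def landauer_limit_joules(temperature_k: float, bits_erased: float = 1.0) -> float:
    """Minimum heat dissipated when irreversibly erasing `bits_erased` bits."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return bits_erased * BOLTZMANN_J_PER_K * temperature_k * math.log(2)


if __name__ == "__main__":
    # About 2.87e-21 J per bit at room temperature (300 K).
    print(f"{landauer_limit_joules(300.0):.3e} J")
```

Reversible logic avoids this floor by never erasing information, which is consistent with the claim above that the remaining constraints concern speed and reliability rather than energy.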

Latent Space Discord

- Mastra Framework Aims for a Million AI Devs: Ex-Gatsby/Netlify builders announced Mastra, a new TypeScript AI framework intended to be easy for toy projects and reliable in production, according to their blog post.

- Aimed at frontend, fullstack, and backend developers, Mastra is pitched as a dependable and simple alternative to existing frameworks, and its creators encourage community contributions to the project on GitHub.

- Cursor's Embedding Model Claims SOTA: Cursor has trained a SOTA embedding model focused on semantic search, reportedly surpassing competitors' out-of-the-box embeddings and rerankers, according to a tweet.

- Users are invited to feel the difference in performance when using the agent.

- Gemini 2.0 Flash Generates Native Images: Google is releasing native image generation in Gemini 2.0 Flash for developer experimentation across supported regions via Google AI Studio, detailed in a blog post.

- Developers can test this feature using an experimental version of Gemini 2.0 Flash (gemini-2.0-flash-exp) in Google AI Studio and the Gemini API, combining multimodal input, enhanced reasoning, and natural language understanding to create images, as highlighted in a tweet.

- Jina AI Dives Into DeepSearch Details: Jina AI has shared a blog post outlining the practical implementation of DeepSearch/DeepResearch, with a focus on late-chunking embeddings for snippet selection and rerankers to prioritize URLs before crawling.

- The post suggests shifting focus from QPS to depth through read-search-reason loops for improved answer discovery.
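Both the Cursor and Jina items rely on ranking text by embedding similarity. A minimal sketch of that first-pass step, assuming snippets have already been embedded by some model; the toy 3-dimensional vectors and snippet ids below are made up for illustration and say nothing about Cursor's or Jina's actual models.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], snippets: dict[str, Sequence[float]], k: int = 2) -> list[str]:
    """Return the k snippet ids whose embeddings are most similar to the query."""
    ranked = sorted(snippets, key=lambda sid: cosine(query, snippets[sid]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Toy embeddings (hypothetical); real models produce hundreds of dimensions.
    snippets = {
        "install-guide": [0.9, 0.1, 0.0],
        "pricing-page": [0.1, 0.9, 0.1],
        "setup-faq": [0.8, 0.2, 0.1],
    }
    print(top_k([1.0, 0.0, 0.0], snippets))  # ['install-guide', 'setup-faq']
```

In a pipeline like the one Jina describes, a reranker would then reorder this shortlist with a more expensive model.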

Notebook LM Discord

- Users' Mobile Habits Probed in Research: Google is seeking NotebookLM users for 60-minute interviews to discuss their mobile usage and give feedback on new concepts, offering a $75 USD thank-you gift. Interested participants are directed to complete a screener form (link) to determine eligibility.

- Google is conducting a usability study on April 2nd and 3rd, 2025, to gather feedback on a product in development, offering participants the localized equivalent of $75 USD for their time. Participants need a high-speed internet connection, an active Gmail account, and a computer with a video camera, speaker, and microphone.

- NotebookLM as Internal FAQ: A member is considering using NotebookLM Plus as an internal FAQ and wants to investigate the questions that go unresolved.

- They seek advice on how to examine questions users typed into the chat that were not resolved.

- NLM+ Generates API Scripts!: A member found NLM+ surprisingly capable of generating scripts from API instructions and sample programs.

- They noted it was easier to get revisions as a non-programmer by referencing material from the notebook.

- RAG vs Full Context Window Showdown: A user is questioning whether using RAG with vector search and a smaller context window is better than using Gemini Pro with its full context window for a large database.

- They also asked what context window size RAG typically uses, and for recommendations on building a mentor-like AI with Gemini Pro.

- Inline Citations Preserved, Hooray!: Users can now save chat responses as notes with inline citations preserved in their original form.

- This enhancement lets users refer back to the original source material, addressing a long-standing request from power users. Additionally, a user requested the ability to copy and paste inline citations into a document while preserving the links.
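The RAG-versus-full-context question above comes down to what ends up in the prompt. A minimal sketch of the RAG side, assuming each chunk already carries a relevance score from a retriever and a token count from some tokenizer (both hypothetical inputs here): greedily pack the highest-scoring chunks until a token budget is reached, instead of sending the whole database.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    score: float  # relevance from a retriever (assumed precomputed)
    tokens: int   # token count from some tokenizer (assumed precomputed)


def pack_context(chunks: list[Chunk], budget: int) -> list[Chunk]:
    """Greedily keep the most relevant chunks that fit within `budget` tokens."""
    selected: list[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        if used + chunk.tokens <= budget:
            selected.append(chunk)
            used += chunk.tokens
    return selected


if __name__ == "__main__":
    chunks = [
        Chunk("mentor notes on goal setting", score=0.92, tokens=300),
        Chunk("unrelated meeting minutes", score=0.15, tokens=200),
        Chunk("mentor notes on feedback", score=0.88, tokens=500),
        Chunk("long appendix", score=0.40, tokens=2000),
    ]
    for c in pack_context(chunks, budget=900):
        print(c.text)
```

The full-context alternative skips this selection step entirely, at the cost of sending far more tokens with every query.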

Torchtune Discord

- MPS Device Mishap Mars Momentum: An AttributeError related to missing torch.mps attributes emerged after a recent commit (GitHub commit), potentially disabling MPS support.

- A proposed fix via PR #2486 led to subsequent torchvision errors when running on MPS.

- Gemma 3 Gains Ground: A member pointed out changes to the Gemma 3 model, attaching a screenshot detailing these changes.

- The nature and implications of these changes were not discussed in further detail.

- Pan & Scan Pondering: Members discussed whether torchtune needs to implement Pan & Scan, the technique from the Gemma 3 paper that enhances inference.

- A member argued that it isn't critical, suggesting that using vLLM with the HF checkpoint could achieve better performance, referring to this pull request.

- vLLM Victorious with HF ckpt: For better performance with Gemma 3, one can use the HF checkpoint with vLLM.

- This is made possible via this pull request.
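The MPS breakage above is the kind of failure that defensive feature detection can guard against. A minimal sketch, assuming only that `torch.cuda.is_available()` and `torch.backends.mps.is_available()` exist on builds that support them; the helper `pick_device` is hypothetical (not torchtune's code) and takes the module as a parameter so it can be exercised without PyTorch installed.

```python
from types import SimpleNamespace
from typing import Any


def pick_device(torch_mod: Any) -> str:
    """Prefer CUDA, then MPS, then CPU, without assuming every attribute exists."""
    cuda = getattr(torch_mod, "cuda", None)
    if cuda is not None and getattr(cuda, "is_available", lambda: False)():
        return "cuda"
    backends = getattr(torch_mod, "backends", None)
    mps = getattr(backends, "mps", None)  # may be absent on older or non-Apple builds
    if mps is not None and getattr(mps, "is_available", lambda: False)():
        return "mps"
    return "cpu"


if __name__ == "__main__":
    # Stub mimicking a build whose backends namespace lacks an `mps` attribute.
    fake_torch = SimpleNamespace(
        cuda=SimpleNamespace(is_available=lambda: False),
        backends=SimpleNamespace(),
    )
    print(pick_device(fake_torch))  # cpu
```

With PyTorch installed, the same helper would be called as `pick_device(torch)` after `import torch`; falling back to CPU instead of raising keeps a missing attribute from taking down the whole run.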

LlamaIndex Discord

- LlamaIndex Connects to Model Context Protocol: LlamaIndex now integrates with the Model Context Protocol (MCP), which streamlines tool discovery and utilization, as described in this tweet.

- The Model Context Protocol integration allows LlamaIndex to use tools exposed by any MCP-compatible service, enhancing its capabilities.

- LlamaExtract Shields Sensitive Data On-Prem: LlamaExtract now offers on-premise/BYOC (Bring Your Own Cloud) deployments for the entire Llama-Cloud platform, addressing enterprise concerns about sensitive data.

- However, a member noted that these deployments typically incur a much higher cost than utilizing the SaaS solution.

- LlamaIndex Eyes the Responses API: A user inquired about support for OpenAI's new Responses API, suggesting it could enrich results by using a search tool with user opt-in.

- A member responded affirmatively, stating that they are trying to work on that today.
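Tool discovery and use in MCP happen over JSON-RPC 2.0 through the protocol's `tools/list` and `tools/call` methods. A minimal sketch of the two request messages an MCP client would send after the initialization handshake (omitted here, along with the stdio or HTTP transport); the helper names and the `get_weather` tool are hypothetical and do not reflect LlamaIndex's internals.

```python
import itertools
import json

_ids = itertools.count(1)  # MCP request ids must not be reused within a session


def list_tools_request() -> dict:
    """Ask an MCP server which tools it exposes."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": "tools/list"}


def call_tool_request(name: str, arguments: dict) -> dict:
    """Invoke one tool by name with JSON arguments."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    print(json.dumps(list_tools_request()))
    print(json.dumps(call_tool_request("get_weather", {"city": "Paris"})))
```

Because discovery is itself a protocol call, a client can adapt to whatever tools a server advertises instead of hard-coding them.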

LLM Agents (Berkeley MOOC) Discord

- Quiz Deadlines Pushed to May: All quiz deadlines are scheduled for May, according to the latest announcements.

- Users were instructed to check the latest email regarding Lecture 6 for more details.

- Learners Want Lab and Research: A member inquired about plans for Labs as well as research opportunities for the MOOC learners.

- No further information was available.

Cohere Discord

- Cohere Multilingual Pricing MIA?: A member inquired about the pricing of the Cohere multilingual embed model, noting difficulty finding this information in the documentation.

- No specific details or links about pricing were shared in the discussion.

- OpenAI's Responses API Simplifies Interactions: OpenAI released their Responses API alongside the Agents SDK, emphasizing simplicity and expressivity, documented here.

- The API is designed for multiple tools, turns, and modalities, addressing user issues with current APIs, exemplified in the OpenAI cookbook.

- Cohere and OpenAI API Compatibility in Question: A member asked about the potential for Cohere to be compatible with OpenAI's newly released Responses API.

- The new API is designed as a solution for multi-turn interactions, hosted tools, and granular context control.

- Chat API Seed Parameter Problem Arises: A user noted that the Chat API seemingly disregards the seed parameter, producing different outputs despite identical inputs and seed value.

- Multiple users are reporting inconsistent outputs when using the Chat API with the same seed value, suggesting a potential issue with reproducibility.
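One way to check the seed behavior reported above is to send the same request several times and compare the outputs. A minimal harness, assuming a caller-supplied `generate(prompt, seed)` function that wraps whichever chat client is in use (a hypothetical signature, not Cohere's SDK); the stubs below stand in for real API calls.

```python
import random
from typing import Callable


def is_reproducible(generate: Callable[[str, int], str], prompt: str, seed: int, trials: int = 5) -> bool:
    """Return True if every call with the same prompt and seed yields identical text."""
    outputs = {generate(prompt, seed) for _ in range(trials)}
    return len(outputs) == 1


def seeded_stub(prompt: str, seed: int) -> str:
    # Deterministic stand-in: output depends only on (prompt, seed).
    rng = random.Random(f"{prompt}|{seed}")
    return " ".join(rng.choice(["alpha", "beta", "gamma"]) for _ in range(5))


def unseeded_stub(prompt: str, seed: int) -> str:
    # Ignores the seed, mimicking the behavior users reported.
    return " ".join(random.choice(["alpha", "beta", "gamma"]) for _ in range(5))


if __name__ == "__main__":
    print(is_reproducible(seeded_stub, "hello", seed=42))    # True
    print(is_reproducible(unseeded_stub, "hello", seed=42))  # almost always False
```

Some providers describe seeded sampling as best-effort, so a failing check is a signal worth reporting rather than proof of a bug.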

DSPy Discord

- DSPy Caching Mechanism: A member asked about how caching works in DSPy and if the caching behavior is modifiable.

- Another member pointed to a pull request for a pluggable Cache module currently in development, indicating upcoming flexibility.

- Pluggable Cache Module in Development: The pull request introduces a single caching interface with two cache levels: an in-memory LRU cache and a fanout cache (on disk).

- This development aims to provide a more versatile and efficient caching solution for DSPy.
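A minimal sketch of the two-level idea described in the pull request: an in-memory LRU in front of an on-disk store. This is not DSPy's implementation or API; the class name is hypothetical, and sharded directories of pickle files stand in for the fanout disk cache (a fanout cache spreads entries across several underlying stores by key hash).

```python
import hashlib
import pickle
import tempfile
from collections import OrderedDict
from pathlib import Path
from typing import Any, Optional


class TwoLevelCache:
    """In-memory LRU (level 1) backed by sharded on-disk pickle files (level 2)."""

    def __init__(self, directory: Path, memory_size: int = 128, shards: int = 4) -> None:
        self.memory: "OrderedDict[str, Any]" = OrderedDict()
        self.memory_size = memory_size
        self.shard_dirs = [directory / f"shard{i}" for i in range(shards)]
        for d in self.shard_dirs:
            d.mkdir(parents=True, exist_ok=True)

    def _path(self, key: str) -> Path:
        digest = hashlib.sha256(key.encode()).hexdigest()
        shard = self.shard_dirs[int(digest, 16) % len(self.shard_dirs)]  # fan out by hash
        return shard / f"{digest}.pkl"

    def _remember(self, key: str, value: Any) -> None:
        self.memory[key] = value
        self.memory.move_to_end(key)
        if len(self.memory) > self.memory_size:
            self.memory.popitem(last=False)  # evict the least recently used entry

    def get(self, key: str) -> Optional[Any]:
        """Return the cached value, or None on a miss in both levels."""
        if key in self.memory:
            self.memory.move_to_end(key)
            return self.memory[key]
        path = self._path(key)
        if path.exists():
            value = pickle.loads(path.read_bytes())
            self._remember(key, value)  # promote the disk hit into memory
            return value
        return None

    def put(self, key: str, value: Any) -> None:
        self._remember(key, value)
        self._path(key).write_bytes(pickle.dumps(value))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cache = TwoLevelCache(Path(tmp), memory_size=1)
        cache.put("prompt-a", "answer A")
        cache.put("prompt-b", "answer B")  # evicts prompt-a from memory only
        print("prompt-a" in cache.memory, cache.get("prompt-a"))  # False answer A
```

Keeping hot entries in memory avoids disk reads for repeated calls, while the disk level survives process restarts.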

Modular (Mojo 🔥) Discord

- Modular Max Spawn Update: A member shared a GitHub pull request that adds functionality to spawn and manage processes from executable files, and which they hope will end up looking like their project.

- However, the foundations PR has to be merged first, and some issues with Linux exec still need to be resolved.

- Linux Exec Snags Modular Max Update: The new feature is on hold due to unresolved issues with Linux exec, and it still awaits the green light from the foundations PR.

- Despite the hurdle, the developer voiced optimism about a release soon and promised updates on the PR's progress.

Gorilla LLM (Berkeley Function Calling) Discord

- Discover Central Hub Tracking Evaluation Tools: A member inquired about a central place to track all the tools used for evaluation in the context of the Berkeley Function Calling Leaderboard.

- Another member suggested the directory gorilla/berkeley-function-call-leaderboard/data/multi_turn_func_doc as a potential resource.

- Evaluation Dataset Location Pinpointed: A member asked whether all of the evaluation datasets are available in the gorilla/berkeley-function-call-leaderboard/data folder.

- There were no further messages confirming whether that folder contains all evaluation datasets.

AI21 Labs (Jamba) Discord

- RAG Ditches Pinecone: The RAG pipeline formerly relied on Pinecone, but its subpar performance and lack of VPC deployment support made a shift in strategy necessary.

- These constraints prompted the team to explore alternative solutions better suited to their performance and deployment needs.

- VPC Deployment Drives Change: The lack of VPC deployment support in the existing RAG infrastructure necessitated a re-evaluation of the chosen technologies.

- This limitation prevented secure and private access to resources, making it a critical factor in the decision to explore alternative solutions.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!