[AINews] Gemma 2: The Open Model for Everyone

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Knowledge Distillation is all you need to solve the token crisis?

AI News for 6/26/2024-6/27/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (416 channels, and 2698 messages) for you. Estimated reading time saved (at 200wpm): 317 minutes. You can now tag @smol_ai for AINews discussions!

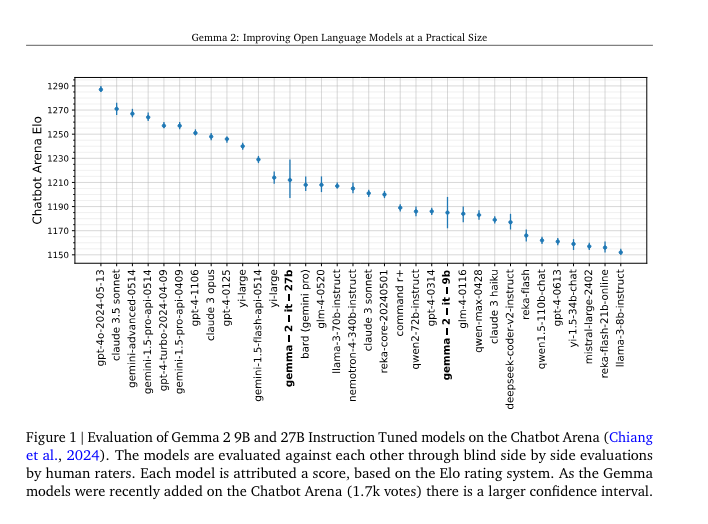

Gemma 2 is out! Previewed at I/O (our report), it's out now, with the 27B model they talked about, but curiously sans 2B model. Anyway, it's good, of course, for its size - does lower in evals than Phi-3, but better in ratings on LMSys, just behind yi-large (which also launched at the World's Fair Hackathon on Monday):

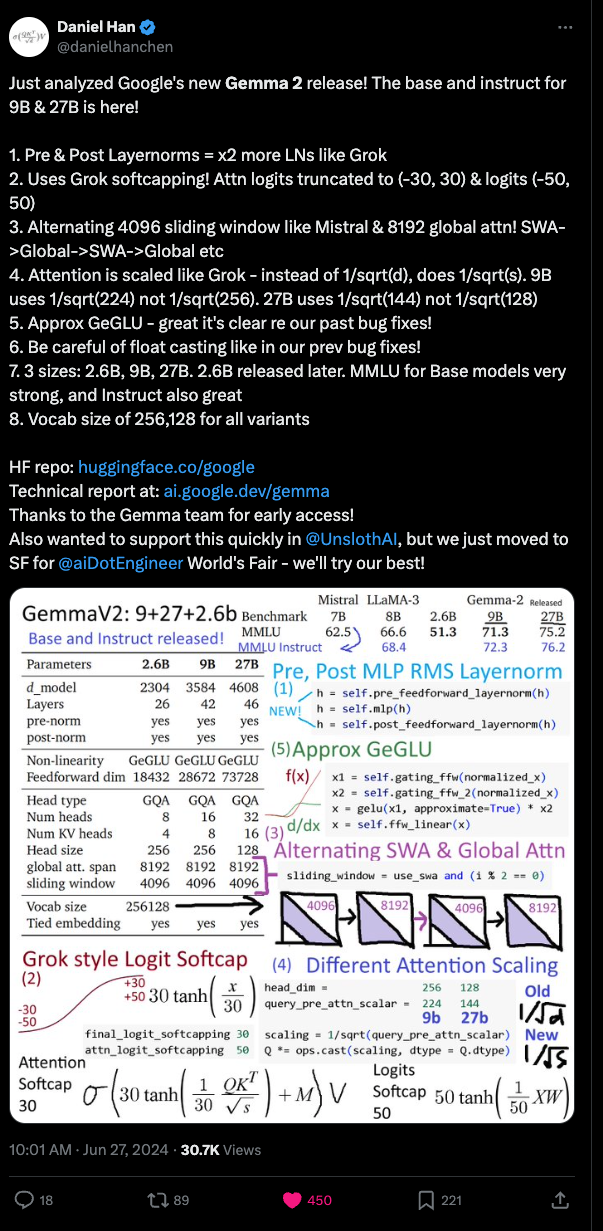

We have some small hints as to what the drivers might be:

- 1:1 alternation between local and global attention (similar to Shazeer et al 2024)

- Logit soft-capping per Gemini 1.5 and Grok

- GQA, Post/pre rmsnorm

But of course, data is the elephant in the room; and here the story has been KD:

In particular, we focus our efforts on knowledge distillation (Hinton et al., 2015), which replaces the one-hot vector seen at each token with the distribution of potential next tokens computed from a large model.

This approach is often used to reduce the training time of smaller models by giving them richer gradients. In this work, we instead train for large quantities of tokens with distillation in order to simulate training beyond the number of available tokens. Concretely, we use a large language model as a teacher to train small models, namely 9B and 2.6B models, on a quantity of tokens that is more than 50× the compute-optimal quantity predicted by the theory (Hoffmann et al., 2022). Along with the models trained with distillation, we also release a 27B model trained from scratch for this work.

At her World's Fair talk on Gemma 2, Gemma researcher Kathleen Kenealy also highlighted the Gemini/Gemma tokenizer:

"while Gemma is trained on primarily English data the Gemini models are multimodal they're multilingual so this means the Gemma models are super easily adaptable to different languages. One of my favorite projects we saw it was also highlighted in I/O was a team of researchers in India fine-tuned Gemma to achieve state-of-the-art performance on over 200 variants of indic languages which had never been achieved before."

Fellow World's Fair speaker Daniel Han also called out the attention-scaling that was only discoverable in the code:

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Models and Architectures

- New Open LLM Leaderboard released: @ClementDelangue noted the new Open LLM Leaderboard evaluates all major open LLMs, with Qwen 72B as the top model. Previous evaluations have become too easy for recent models, indicating AI builders may have focused too much on main evaluations at the expense of model performance on others.

- Alibaba's Qwen models dominate Open LLM Leaderboard: @clefourrier highlighted that Alibaba's Qwen models are taking 4 of the top 10 spots, with the best instruct and base models. Mistral AI's Mixtral-8x22B-Instruct is in 4th place.

- Anthropic releases Claude 3.5 Sonnet: @dl_weekly reported that Anthropic released Claude 3.5 Sonnet, raising the bar for intelligence at the speed and cost of their mid-tier model.

- Eliminating matrix multiplication in LLMs: @rohanpaul_ai shared a paper on 'Scalable MatMul-free Language Modeling' which eliminates expensive matrix multiplications while maintaining strong performance at billion-parameter scales. Memory consumption can be reduced by more than 10× compared to unoptimized models.

- NV-Embed: Improved techniques for training LLMs as generalist embedding models: @rohanpaul_ai highlighted NVIDIA's NV-Embed model, which introduces new designs like having the LLM attend to latent vectors for better pooled embedding output and a two-stage instruction tuning method to enhance accuracy on retrieval and non-retrieval tasks.

Tools, Frameworks and Platforms

- LangChain releases self-improving evaluators in LangSmith: @hwchase17 introduced a new LangSmith feature for self-improving LLM evaluators that learn from human feedback, inspired by @sh_reya's work. As users review and adjust AI judgments, the system stores these as few-shot examples to automatically improve future evaluations.

- Anthropic launches Build with Claude contest: @alexalbert__ announced a $30K contest for building apps with Claude through the Anthropic API. Submissions will be judged on creativity, impact, usefulness, and implementation.

- Mozilla releases new AI offerings: @swyx noted that Mozilla is making a strong comeback with new AI offerings, suggesting they could become an "AI OS" after the browser.

- Meta opens applications for Llama Impact Innovation Awards: @AIatMeta announced the opening of applications for the Meta Llama Impact Innovation Awards to recognize organizations using Llama for social impact in various regions.

- Hugging Face Tasksource-DPO-pairs dataset released: @rohanpaul_ai shared the release of the Tasksource-DPO-pairs dataset on Hugging Face, containing 6M human-labelled or human-validated DPO pairs across many datasets not in previous collections.

Memes and Humor

- @svpino joked about things they look forward to AI replacing, including Jira, Scrum, software estimates, the "Velocity" atrocity, non-technical software managers, Stack Overflow, and "10 insane AI demos you don't want to miss".

- @nearcyan made a humorous comment about McDonald's Japan's "potato novel" (ポテト小説。。。😋).

- @AravSrinivas shared a meme about "Perplexity at Figma config 2024 presented by Head of Design, @henrymodis".

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Progress and Capabilities

- Low-energy LLMs: Researchers have developed a high-performing large language model that can run on the energy needed to power a lightbulb. This was achieved by eliminating matrix multiplication in LLMs, upending the AI status quo.

- AI self-awareness debate: Claude 3.5 has passed the mirror test, a classic test for self-awareness in animals, sparking debate on whether this truly demonstrates self-awareness in AI. Another post on the same topic had commenters skeptical that it represents true self-awareness.

- AI outperforming humans: In a real-world "Turing test" case study, AI outperformed college students 83.4% of the time, with 94% of AI submissions going undetected as non-human. However, humans still outperform LLMs on the MuSR benchmark according to normalized Hugging Face scores.

- Rapid model progress: A timeline of the LLaMA model family over the past 16 months demonstrates the rapid progress being made. Testing of the Gemma V2 model in the Lmsys arena suggests an impending release based on past patterns. Continued improvements to llama.cpp bitnet are also being made.

- Skepticism of current LLM intelligence: Despite progress, Google AI researcher Francois Chollet argued current LLMs are barely intelligent and an "offramp" on the path to AGI in a recent podcast appearance. An image of "The Myth of Artificial Intelligence" book prompted discussion on the current state of AI.

Memes and Humor

- AI struggles and quirks: Memes poked fun at AI quirks, like an AI model struggling to generate a coherent image of a girl lying in grass, and verbose AI outputs. One meme joked about having the most meaningful conversations with AI.

- Poking fun at companies, people and trends: Memes took humorous jabs at Anthropic, a specific subreddit, and people's cautious optimism about AI. A poem humorously praised the "Machine God".

Other AI and Tech News

- AI copyright issues: Major music labels Sony, Universal and Warner are suing AI music startups Suno and Udio for copyright infringement.

- New AI capabilities: OpenAI has confirmed a voice mode for its models will start rolling out at the end of July. A Redditor briefly demonstrated access to a GPT-4o real-time voice mode.

- Advances in image generation: A new open-source super-resolution upscaler called AuraSR based on GigaGAN was introduced. The ResMaster method allows diffusion models to generate high-res images beyond their trained resolution limits.

- Biotechnology breakthroughs: Two Nature papers on "bridge editing", a new genome engineering technology, generated excitement. A new mechanism enabling programmable genome design was also announced.

- Hardware developments: A developer impressively designed their own tiny ASIC for BitNet LLMs as a solo effort.

AI Discord Recap

A summary of Summaries of Summaries

Claude 3.5 Sonnet

-

Google's Gemma 2 Makes Waves:

- Gemma 2 Debuts: Google released Gemma 2 on Kaggle in 9B and 27B sizes, featuring sliding window attention and soft-capping logits. The 27B version reportedly approaches Llama 3 70B performance.

- Mixed Reception: While the 9B model impressed in initial tests, the 27B version disappointed some users, highlighting the variability in model performance.

-

Meta's LLM Compiler Announcement:

- New Models for Code Tasks: Meta introduced LLM Compiler models built on Meta Code Llama, focusing on code optimization and compiler capabilities. These models are available under a permissive license for research and commercial use.

-

Benchmarking and Leaderboard Discussions:

- Unexpected Rankings: The Open LLM Leaderboard saw surprising high rankings for lesser-known models like Yi, sparking discussions about benchmark saturation and evaluation metrics across multiple Discord communities.

-

AI Development Frameworks and Tools:

- LlamaIndex's Multi-Agent Framework: LlamaIndex announced llama-agents, a new framework for deploying multi-agent AI systems in production with distributed architecture and HTTP API communication.

- Figma AI Free Trial: Figma AI is offering a free year, allowing users to explore AI-powered design tools without immediate cost.

-

Hardware Debates for AI Development:

- GPU Comparisons: Discussions across Discord servers compared the merits of NVIDIA A6000 GPUs with 48GB VRAM against setups using multiple RTX 3090s, considering factors like NVLink connectivity and price-performance ratios.

- Cooling Challenges: Users in multiple communities shared experiences with cooling high-powered GPU setups, reporting thermal issues even with extensive cooling solutions.

-

Ethical and Legal Considerations:

- AI-Generated Content Concerns: An article about Perplexity AI citing AI-generated sources sparked discussions about information reliability and attribution across different Discord servers.

- Data Exclusion Ethics: Multiple communities debated the ethics of excluding certain data types (e.g., child-related) from AI training to prevent misuse, balanced against the need for model diversity and capability.

Claude 3 Opus

1. Advancements in LLM Performance and Capabilities

- Google's Gemma 2 models (9B and 27B) have been released, showcasing strong performance compared to larger models like Meta's Llama 3 70B. The models feature sliding window attention and logit soft-capping.

- Meta's LLM Compiler models, built on Meta Code Llama, focus on code optimization and compiler tasks. These models are available under a permissive license for both research and commercial use.

- Stheno 8B, a creative writing and roleplay model from Sao10k, is now available on OpenRouter with a 32K context window.

2. Open-Source AI Frameworks and Community Efforts

- LlamaIndex introduces llama-agents, a new framework for deploying multi-agent AI systems in production, and opens a waitlist for LlamaCloud, its fully-managed ingestion service.

- The Axolotl project encounters issues with Transformers code affecting Gemma 2's sample packing, prompting a pull request and discussions about typical Hugging Face bugs.

- Rig, a Rust library for building LLM-powered applications, is released along with an incentivized feedback program for developers.

3. Optimizing LLM Training and Inference

- Engineers discuss the potential of infinigram ensemble techniques to improve LLM out-of-distribution (OOD) detection, referencing a paper on neural networks learning low-order moments.

- The SPARSEK Attention mechanism is introduced in a new paper, aiming to overcome computational and memory limitations in autoregressive Transformers using a sparse selection mechanism.

- Adam-mini, an optimizer claiming to perform as well as AdamW with significantly less memory usage, is compared to NovoGrad in a detailed discussion.

4. Multimodal AI and Generative Modeling Innovations

- Character.AI launches Character Calls, allowing users to have voice conversations with AI characters, although the feature receives mixed reviews on its performance and fluidity.

- Stable Artisan, a Discord bot by Stability AI, integrates models like Stable Diffusion 3, Stable Video Diffusion, and Stable Image Core for media generation and editing directly within Discord.

- The Phi 3 model, mentioned in a Reddit post, brings powerful AI chatbots to browsers via WebGPU.

GPT4O (gpt-4o-2024-05-13)

-

LLM Deployment and Training Optimization:

- Hurdles in AI Deployment Leave Engineers Frustrated: Engineers shared challenges in deploying custom models efficiently, with discussions focused on avoiding weights errors and optimizing parameters for hardware like the RTX 4090 using tools like Koboldcpp.

- Diving Into Flash Attention: Members requested tutorials on Flash Attention, an efficient technique for memory management in models, highlighting the need for better understanding of this optimization.

-

Benchmarking and Performance Evaluation:

- Yi Takes LLM Leaderboard by Storm: The Open LLM Leaderboard sparked interest as models like Yi surprisingly rose to top ranks, challenging engineers to reassess their models' performances.

- Gemma 2's Mixed Reactions: Excitement and skepticism surrounded Gemma 2—while some praised its innovations, others were unsure if it marked a significant leap. Comparisons with existing models were fueled by benchmark analyses.

-

Open-Source AI Frameworks and Tools:

- LlamaIndex Introduces llama-agents: LlamaIndex announced llama-agents, a multi-agent AI framework aiming to streamline production deployments; it includes distributed architecture and HTTP API communication.

- LangChain AI Discusses Endpoint Building: Engineers shared examples of building LangChain endpoints with documentation showing proper use of

load_qa_chain()and handling high-volume requests.

-

AI Licensing and Ethical Considerations:

- AI Training Ethics Stir Heated Debate: Engineers in LAION deliberated over ethical training practices, debating whether to exclude child-related data to prevent misuse, while balancing the impact on model diversity and normal scene generation.

- Skepticism Towards AI Licensing Models: Legal and practical concerns arose around the exclusive Command-R model via OpenRouter, examining potential licensing misuse and enforcing compliance.

-

Cutting-Edge AI Models and Innovations:

- Meta Unveils LLM Compiler Models: Meta introduced the Meta LLM Compiler focusing on code optimization, with models built on extensive token corpuses for advanced compiler tasks.

- Innovative SPARSEK Attention Mechanism: The SPARSEK Attention mechanism promises efficient long-sequence processing with linear complexity, as detailed in a new paper, aiming to overcome typical self-attention limitations.

-

Misc

- Mojo Compiles and Executes Models with Ease: Community members discussed Mojo language challenges, highlighting object identity and self-referential type issues and the need for thorough GitHub documentation.

- Storage Requirements for Large Models Revealed: Insights shared in Nous Research AI discussed the necessary hardware for running models like DeepCoder V2, indicating that substantial RAM and VRAM are required for efficient performance.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

Yi Tops LLM Leaderboard: New benchmarks have placed lesser-known models like Yi at surprising high ranks in the LLM leaderboard, intriguing the AI community.

Rollout of Gemma 2 Stirs Excitement and Skepticism: The release of Gemma 2 has sparked enthusiasm and curiosity, particularly around its similarities with Grok. Notably, a tweet dissecting Gemma 2's innovations became a focal point, despite some users questioning if the advancements mark a significant leap from previous models.

Hurdles in AI Deployment and Training: Discussions pointed to challenges and solutions in deploying custom models, with an emphasis on avoiding weights errors. AI engineers shared insights about saving and serving models using Ollama and suggested parameters adjustments for optimization on hardware like the RTX 4090, citing specific tools like Koboldcpp.

Bugs and Support Discussed Ahead of the AI World's Fair: The Unsloth AI team is gearing up for the AI World's Fair, planning to discuss open-source model issues and the new inclusion of @ollama support, as announced in this tweet.

The Heat on ChatGPT: ChatGPT became a contentious topic, with some community members calling it "literally fucking garbage" while others acknowledged its role in paving AI's path, despite ChatGPT 3.5's accuracy issues. Problems with AI hardware overheating were also humorously lamented.

HuggingFace Discord

Multimodal RAG on the Horizon: Excited chatter surrounded the development of a multimodal RAG article with anticipation for a groundbreaking outcome; however, the specifics such as models or results were not discussed.

Entity Extraction Tools Evaluated: Technical discussion identified shortcomings of BERT for NER, with members suggesting alternatives like GLiNER and NuExtract, which are touted for their flexibility in extracting non-predefined entities, pointing to community resources like ZeroGPU Spaces.

Skeptical Reception for Sohu AI Chip: The community shared cautious skepticism regarding the claimed performance of Sohu's new AI chip, with members considering experimentation on Sohu's advertised service, despite no direct experience shared.

Efficient Dynamic Diffusion Delivery: Strategies for enhancing the performance of stable diffusion models were enthusiastically exchanged, notably including "torch compile" and leveraging libraries such as Accelerate and stable-fast for improved inference times.

AI Leaderboard Reflections: The Open LLM Leaderboard blog spurred concerns about saturation in AI benchmarks, reflecting a sentiment for the community's drive for continuous improvement and new benchmarks.

OpenAI Discord

GPT Sibling Rivalry: CriticGPT emerges to squash bugs in GPT-4’s code, boasting integration into OpenAI's RLHF pipeline for enhanced AI supervision, official announcement details.

Claude vs. GPT-4o - The Context Window Showdown: Claude 3.5 Sonnet is lauded for its coding prowess and expansive context window, overshadowing GPT-4o, which some claim lacks real omnimodal capabilities and faces slow response times.

Beyond Traditional Text Chats: Innovators employ 3.5 Sonnet API and ElevenLabs API to drive real-time conversation, challenging the necessity of ChatGPT in certain contexts.

Prompt Engineering Puzzles and Pitfalls: Users exchange methods for few-shot prompting and prompt compression, with an eye on structuring prompts in YAML/XML for precision, and experimenting with "Unicode semiotics" for token-efficient prompts.

Navigating the API Labyrinth: Discussions focused on calculating prompt costs, seeking examples of knowledge bases for model training, gif creation challenges with GPT, deprecated plugin replacements, and the API's knack for struggling with certain word puzzles.

CUDA MODE Discord

- Tensor Cores Lean Towards Transformers: Engineers noted that while tensor cores on GPUs are generic, there is a tendency for them to be more "dedicated to transformers." Members concurred, discussing the wide applicability of tensor cores beyond specific architectures.

- Diving Into Flash Attention: A tutorial was sought on Flash Attention, a technique for fast and memory-efficient attention within models. An article was shared to help members better understand this optimization.

- Power Functions in Triton: Discussions on Triton language centered around implementing pow functions, eventually using

libdevice.pow()as a workaround. It was advised to check that Triton generates the optimal PTX code for pow implementations to ensure performance efficiency.

- PyTorch Optimizations Unpacked: The new TorchAO 0.3.0 release captured attention with its quantize API and FP6 dtype, intending to provide better optimization options for PyTorch users. Meanwhile, the

choose_qparams_affine()function's behavior was clarified, and community contributions were encouraged to strengthen the platform.

- Sparsity Delivers Training Speed: The integration of 2:4 sparsity in projects using xFormers has led to a 10% speedup in inference and a 1.3x speedup in training, demonstrated on NVIDIA A100 for models like DINOv2 ViT-L.

Eleuther Discord

- Infinigram and the Low-Order Momentum: Discussions highlighted the potential of using infinigram ensemble techniques to boost LLMs' out-of-distribution (OOD) detection, referencing the "Neural Networks Learn Statistics of Increasing Complexity" paper and considering the integration of n-gram or bag of words in neural LM training.

- Attention Efficiency Revolutionized: A new SPARSEK Attention mechanism was presented, promising leaner computational requirements with linear time complexity, detailed in this paper, while Adam-mini was touted as a memory-efficient alternative to AdamW, as per another recent study.

- Papers, Optimizers, and Transformers: Researchers debated the best layer ordering for Transformers, referencing various arxiv papers, and shared insights on manifold hypothesis testing, though no specific code resources were mentioned for the latter.

- Mozilla's Local AI Initiative: There was an update on Mozilla's call for grants in local AI, and the issue of an expired Discord invite was resolved through a quick online search.

- Reframing Neurons’ Dance: The potential efficiency gains from training directly on neuron permutation distributions, using Zipf's Law and Monte Carlo methods, was a point of interest, suggesting a fresh way to look at neuron weight ordering.

LAION Discord

- GPU Face-off: A6000 vs. 3090s: Engineers compared NVIDIA A6000 GPUs with 48GB VRAM against quad 3090 setups, citing NVLink for A6000 can facilitate 96GB of combined VRAM, while some preferred 3090s for their price and power in multi-GPU configurations.

- Cost-Effective GPU Picks: There was a discussion on budget GPUs with suggestions like certified refurbished P40s and K80s as viable options for handling large models, indicating significant cost savings over premium GPUs like the 3090s.

- Specialized AI Chip Limitations: The specialized Sohu chip by Etched was criticized for its narrow focus on transformers, leading to concerns about its adaptability, while Nvidia's forthcoming transformer cores were highlighted as potential competition.

- AI Training Ethics & Data Scope: There was a spirited debate regarding whether to exclude child-related data in AI training to prevent misuse, with some expressing concerns that such exclusions could diminish model diversity and impede the ability to generate non-NSFW content like family scenes.

- NSFW Data's Role in Foundational AI: The necessity of NSFW data for foundational AI models was questioned, leading to the conclusion that it's not crucial for pre-training, and post-training can adapt models to specific tasks, though there were varying opinions on how to ethically manage the data.

- AIW+ Problem's Complexity Unpacked: The challenges of solving the AIW+ problem were explored in comparison to the common sense AIW, with the complexities of calculating family relationships like cousins and the nuanced possibilities leading to the conclusion that ambiguity persists in this matter.

Nous Research AI Discord

- Predictive Memory Formula Sought for AI Models: Engineers are searching for a reliable method to predict memory usage of models based on the context window size, considering factors like gguf metadata and model-specific differences in attention mechanisms. Empirical testing was proposed for accurate measurement, while skepticism persists about the inclusivity of current formulas.

- Chat GPT API Frontends Showcased: The community shared new frontends for GPT APIs, including Teknium’s Prompt-Engineering-Toolkit and FreeAIChatbot.org, while expressing security concerns about using platforms like Big-AGI. The use of alternative solutions such as librechat and huggingchat was also debated.

- Meta and JinaAI Elevate LLM Capabilities: Meta's newly introduced models that optimize for code size in compiler optimizations and JinaAI's PE-Rank reducing reranking latency, indicate rapid advancements, with some models now available under permissive licenses for hands-on research and development.

- Boolean Mix-Up in AI Models: JSON formatting issues were highlighted where Hermes Pro returned

Trueinstead oftrue, stirring a debate on dataset integrity and the potential impact of training merges on boolean validity across different AI models.

- RAG Dataset Expansion: The release of Glaive-RAG-v1 dataset signals a move toward fine-tuning models on specific use cases, as users discuss format adaptability with Hermes RAG and consider new domains for data generation to enhance dataset diversity while aiming for an ideal size of 5-20k samples.

Stability.ai (Stable Diffusion) Discord

- MacBook Air: AI Workhorse or Not?: Members are debating the suitability of a MacBook Air with 6 or 8GB of RAM for AI tasks, noting a lack of consensus about Apple hardware's performance in such applications.

- LoRA Training Techniques Under Scrutiny: For better LoRA model performance, varying batch sizes and epochs is key; one member cites specifics like a combination of 16 batch size and 200 epochs to achieve good shape with less detail.

- Stable Diffusion Licensing Woes: Licensing dilemmas persist with SD3 and civitai models; members discuss the prohibition of such models under the current SD3 license, especially in commercial ventures like civit.

- Kaggle: A GPU Haven for Researchers: Kaggle is giving away two T4 GPUs with 32GB VRAM, beneficial for model training; a useful Stable Diffusion Web UI Notebook on GitHub has been shared.

- Save Our Channels: A Plea for the Past: AI community members express desire to restore archived channels filled with generative AI discussions, valuing the depth of specialized conversations and camaraderie they offered.

Modular (Mojo 🔥) Discord

- Navigating Charting Library Trade-offs: Engineers engaged in a lively debate over optimal charting libraries, considering static versus interactive charts, native versus browser rendering, and data input formats; the discourse centered on identifying the primary needs of a charting library.

- Creative Containerization with Docker: Docker containers for Mojo nightly builds sparked conversations, with community members exchanging tips and corrections, such as using

modular install nightly/mojofor installation. There was also a promotion for the upcoming Mojo Community meeting, including video conference links.

- Insights on Mojo Language Challenges: Topics in Mojo discussions highlighted the necessity of reporting issues on GitHub, addressed questions about object identity from a Mojo vs Rust blog comparison, and observed unexpected network activity during Mojo runs, prompting a suggestion to open a GitHub issue for further investigation.

- Tensor Turmoil and Changelog Clarifications: The Mojo compiler's nightly build

2024.6.2705introduced significant changes, like relocating thetensormodule, initiating discussions on the implications for code dependencies. Participants called for more explicit changelogs, leading to promises of improved documentation.

- Philosophical Musings on Mind and Machine: A solo message in the AI channel offered a duality concept of the human mind, categorizing it as "magic" for the creative part and "cognition" for the neural network aspect, proposing that intelligence drives behavior, which is routed through cognitive processes before real-world interaction.

Perplexity AI Discord

Perplexity API: Troubleshoot or Flustered?: Users are encountering 5xx and 401 errors when interfacing with the Perplexity AI's API, prompting discussions about the need for a status page and authentication troubleshooting.

Feature Wish List for Perplexity: Enthusiasts dissect Perplexity AI's current features such as image interpretation and suggest enhancements like artifact implementation for better management of files.

Comparing AI's Elite: The community analyzed and contrasted various AI models, notably GPT-4 Turbo, GPT-4o, and Claude 3.5 Sonnet; preferences were aired but no consensus emerged.

Perplexity's Search for Relevance: Shared Perplexity AI pages indicated interest in diverse topics ranging from mental health to the latest in operating systems, such as the performance boosts in Android 14.

AI in Journalism Ethics Crosshairs: An article criticized Perplexity for increasingly citing AI-generated content, sparking conversations about the reliability and privacy of AI-generated sources.

Latent Space Discord

Grab Figma AI while It's Hot: Figma AI is currently free for one year as shared by @AustinTByrd; details can be found in the Config2024 thread.

AI Engineer World Fair Woes: Members mentioned technical difficulties during an event at the AI Engineer World Fair, ranging from audio issues to screen sharing, and strategies such as leaving the stage and rejoining were suggested to resolve problems.

LangGraph Cloud Takes Off: LangChainAI announced LangGraph Cloud, a new service offering robust infrastructure for resilient agents, yet some engineers questioned the need for specialized infrastructure for such agents.

Conference Content Watch: AI Engineer YouTube channel is a go-to for livestreams and recaps of the AI Engineer World Fair, featuring key workshops and technical discussions for AI enthusiasts, while conference transcripts are available on the Compass transcript site.

Bee Buzzes with Wearables Update: Wearable tech discussions included innovative products like Bee.computer, which can perform tasks like recording and transcribing, and even offers an Apple Watch app, indicating the trend towards streamlined, multifunctional devices.

LM Studio Discord

LM Studio Lacks Critical Feature: LM Studio was noted to lack support for document-based training or RAG capabilities, emphasizing a common misunderstanding of the term 'train' within the community.

Code Models Gear Up: Claude 3.5 Sonnet received praise within the Poe and Anthropic frameworks for coding assistance, while there is anticipation for upcoming Gemma 2 support in LM Studio and llama.cpp.

Hardware Dependency Highlighted: Users discussed running DeepCoder V2 on high-RAM setups with good performance but noted crashes on an M2 Ultra Mac Studio due to memory constraints. Additionally, server cooling and AVX2 processor requirements for LM Studio were topics of hardware-related conversations.

Memory Bottlenecks and Fixes: Members shared their experiences with VRAM limitations when loading models in LM Studio, providing advice such as disabling GPU offload and upgrading to higher VRAM GPUs for better support.

Emerging AI Tooling and Techniques: There's buzz around Meta's new LLM Compiler models and integrating Mamba-2 into llama.cpp, showcasing advancement in AI tooling and techniques for efficiency and optimization.

LangChain AI Discord

Can't Print Streams Directly in Python: A user emphasized that you cannot print stream objects directly in Python and provided a code snippet showing the correct method: iterate over the stream and print each token's content.

Correctly Using LangChain for Relevant User Queries: There were discussions on improving vector relevance in user queries with LangChain, with potential solutions including keeping previous retrieval in chat history and using query_knowledge_base("Green light printer problem") functions.

Integrating LangChain with FastAPI and Retrieval Enhancements: Community members shared documentation and examples on building LangChain endpoints using add_routes in FastAPI, and optimizing the use of load_qa_chain() for server-side document provisioning.

Cutting-Edge Features of LangChain Expression Language: Insights into LangChain Expression Language (LCEL) were provided, highlighting async support, streaming, parallel execution, and retries, pointing to the need for comprehensive documentation for a full understanding.

New Tools and Case Studies for LangChain: Notable mentions include the introduction of Merlinn, an AI bot for troubleshooting production incidents, an Airtable of ML system design case studies, and the integration of security features into LangChain with ZenGuard AI. A YouTube tutorial was also highlighted, showing the creation of a no-code Chrome extension chatbot using Visual LangChain.

LlamaIndex Discord

- LlamaIndex's New AI Warriors: LlamaIndex announced llama-agents, a new multi-agent AI framework, touting a distributed architecture and HTTP API communication. The emerging LlamaCloud service commenced its waitlist sign-ups for users seeking a fully-managed ingestion service.

- JsonGate at LlamaIndex: Engineers engaged in a lively debate over the exclusion of JSONReader in LlamaIndex's default Readers map, concluding with a pull request to add it.

- When AIs Imagine Too Much: LlamaParse, noted for its superior handling of financial documents, is under scrutiny for hallucinating data, prompting requests for document submissions to debug and improve the model.

- BM25's Re-indexing Dilemma: User discussions pointed out the inefficiency of needing frequent re-indexing in the BM25 algorithm with new document integrations, leading to suggestions for alternative sparse embedding methods and a focus on optimization.

- Ingestion Pipeline Slowdown: Performance degradation was highlighted when large documents are processed in LlamaIndex's ingestion pipelines, with a promising proposal of batch node deletions to alleviate the load.

Interconnects (Nathan Lambert) Discord

- API Revenue Surpasses Azure Sales: OpenAI's API now generates more revenue than Microsoft's resales of it on Azure, as highlighted by Aaron P. Holmes in a significant market shift revelation. Details were shared in Aaron's tweet.

- Meta's New Compiler Tool: Unveiled was the Meta Large Language Model Compiler, aimed at improving compiler optimization through foundation models, which processes LLVM-IR and assembly code from a substantial 546 billion token corpus. The tool's introduction and research can be explored in Meta's publication.

- Character Calls - The AI Phone Feature: Character.AI rolled out Character Calls, a new feature enabling voice interactions with AI characters. While aiming to enhance user experience, the debut attracted mixed feedback, shared in Character.AI's blog post.

- The Coding Interview Dilemma: Engineers shared vexations regarding excessively challenging interview questions and unclear expectations, along with an interesting instance involving claims of access to advanced voice features with sound effects in ChatGPT, mentioned by AndrewCurran on Twitter.

- Patent Discourse - Innovation or Inhibition?: The community debated the implications of patented technologies, from a chain of thought prompting strategy to Google's non-enforced transformer architecture patent, fostering discussions on patentability and legal complexities in the tech sphere. References include Andrew White's tweet regarding prompting patents.

OpenRouter (Alex Atallah) Discord

- Stheno 8B Grabs the Spotlight on OpenRouter: OpenRouter has launched Stheno 8B 32K by Sao10k as its current feature, offering new capabilities for creative writing and role play with an extended 32K context window for the year 2023-2024.

- Technical Troubles with NVIDIA Nemotron Selection: Users experience a hit-or-miss scenario when selecting NVIDIA Nemotron across devices, with some reporting 'page not working' errors while others have a smooth experience.

- API Key Compatibility Query and Uncensored AI Models Discussed: Engineers probe the compatibility of OpenRouter API keys with applications expecting OpenAI keys and delve into alternatives for uncensored AI models, including Cmd-r, Euryale 2.1, and the upcoming Magnum.

- Google Gemini API Empowers with 2M Token Window: Developers welcome the news of Gemini 1.5 Pro now providing a massive 2 million token context window and code execution capabilities, aimed at optimizing input cost management.

- Seeking Anthropic's Artifacts Parallel in OpenRouter: A user's curiosity about Anthropic’s Artifacts prompts discussion on the potential for Sonnet-3.5 to offer a similar ability to generate code through typical prompt methods in OpenRouter.

Cohere Discord

Innovative API Strategies: Using the Cohere API, OpenRouter can engage in non-commercial usage without breaching license agreements; the community confirms that the API use circumvents the non-commercial restriction.

Command-R Model Sparks Exclusivity Buzz: The Command-R model, known for its advanced prompt-following capabilities, is available exclusively through OpenRouter for 'I'm All In' subscribers, sparking discussions around model accessibility and licensing.

Licensing Pitfalls Narrowly Avoided: Debate ensued regarding potential misuse of Command-R's licensing by SpicyChat, but members concluded that payments to Cohere should rectify any licensing issues.

Technical Troubleshooting Triumph: A troubleshooting success was shared after a member resolved a Cohere API script error on Colab and PyCharm by following the official Cohere multi-step tool documentation.

Rust Library Unveiled with Rewards Program: Rig, a new Rust library aimed at building LLM-powered applications, was introduced alongside a feedback program, rewarding developers for their contributions and ideas, with a nod to compatibility with Cohere's models.

OpenInterpreter Discord

- Decoding the Neural Networks: Engineers can join a 4-week, free study group at Block's Mission office in SF, focusing on neural networks based on Andrej Karpathy's series. Enrollment is through this Google Form; more details are available on the event page.

- Open-Source Models Attract Interpreter Enthusiasts: The Discord community discussed the best open-source models for local deployment, specifically GPT-4o. Conversations included potential usage with backing by Ollama or Groq hardware.

- GitHub Policy Compliance Dialogue: There's a concern among members about a project potentially conflicting with GitHub's policies, highlighting the importance of open conversations before taking formal actions like DMCA notices.

- Meta Charges Ahead with LLM Compiler: Meta's new LLM Compiler, built on Meta Code Llama, aims at optimizing and disassembling code. Details are available in the research paper and the corresponding HuggingFace repository.

- Changing Tides for O1: The latest release of O1 no longer includes the

--localoption, and the community seeks clarity on available models and the practicality of a subscription for usage in different languages, like Spanish.

OpenAccess AI Collective (axolotl) Discord

- Debugging Beware: NCCL Watchdog Meets CUDA Error: Engineers noted encountering a CUDA error involving NCCL watchdog thread termination and advised enabling

CUDA_LAUNCH_BLOCKING=1for debugging and compiling withTORCH_USE_CUDA_DSAto activate device-side assertions.

- Gemma2 Garners Goggles, Google Greatness: The community is evaluating Google's Gemma 2 with sizes of 9B & 27B, which implements features like sliding window attention and soft-capped logits, showing scores comparable to Meta's Llama 3 70B. While the Gemma2:9B model received positive feedback in one early test, the Gemma2:27B displayed disappointing results in its initial testing, as discussed in another video.

- Meta Declares LLM Compiler: Meta's announcement of their LLM Compiler models, based on Meta Code Llama and designed for code optimization and compiler tasks, sparked interest due to their permissive licensing and reported state-of-the-art results.

- Gemma2 vs Transformers: Round 1 Fight: Technical issues with the Transformers code affecting Gemma 2's sample packing came to light, with a suggested fix via a pull request and awaiting an upstream fix from the Hugging Face team.

- Repeat After Me, Mistral7B: An operational quirk was reported with Mistral7B looping sentences or paragraphs during full instruction-tuning; the issue was baffling given the absence of such patterns in the training dataset.

tinygrad (George Hotz) Discord

PyTorch's Rise Captured on Film: An Official PyTorch Documentary was shared, chronicling PyTorch’s development and the engineers behind its success, providing insight for AI enthusiasts and professionals.

Generic FPGA Design for Transformers: A guild member clarified their FPGA design is not brand-specific and can readily load any Transformer model from Huggingface's library, a notable development for those evaluating hardware options for model deployment.

Iterative Improvement on Tinygrad: Work on integrating SDXL with tinygrad is progressing, with a contributor planning to streamline the features and performance before opening a pull request, a point of interest for collaborators.

Hotz Hits the Presentation Circuit: George Hotz was scheduled for an eight-minute presentation, details of which were not disclosed, possibly of interest to followers of his work or potential collaborators.

Tinygrad Call for Code Optimizers: A $500 cash incentive was announced for enhancements to tinygrad's matching engine's speed, an open invitation for developers to contribute and collaborate on improving the project's efficiency.

Deep Dive into Tinygrad's Internals: Discussions included a request for examples of porting PyTorch's MultiheadAttention to tinygrad, a strategy to estimate VRAM requirements for model training by creating a NOOP backend, and an explanation of Shapetracker’s capacity for efficient data representation with reference to tinygrad-notes. These technical exchanges are essential for those seeking to understand or contribute to tinygrad's inner workings.

LLM Finetuning (Hamel + Dan) Discord

- Anthropic Announces Build-With-Claude Contest: A contest focusing on building applications with Claude was highlighted, with reference to the contest details.

- LLM Cover Letter Creation Queries: Members have discussed fine-tuning a language model for generating cover letters from resumes and job descriptions, seeking advice on using test data to measure the model’s performance effectively.

- Social Media Style Mimicry via LLM: An individual is creating a bot that responds to queries in their unique style, using Flask and Tweepy for Twitter API interactions, and looking for guidance on training the model with their tweets.

- Cursor Gains Ground Among Students: Debates and suggestions have surfaced regarding the use of OpenAI's Cursor versus Copilot, including the novel idea of integrating Copilot within Cursor, with directions provided in a guide to install VSCode extensions in Cursor.

- Credit Allocation and Collaborative Assistance: Users requested assistance and updates concerning credit allocation for accounts, implying ongoing community support dynamics without providing explicit details.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!