[AINews] Gemini Live

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Lots of little $20/month subscriptions for everything in your life are all you need.

AI News for 8/12/2024-8/13/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (253 channels, and 2423 messages) for you. Estimated reading time saved (at 200wpm): 244 minutes. You can now tag @smol_ai for AINews discussions!

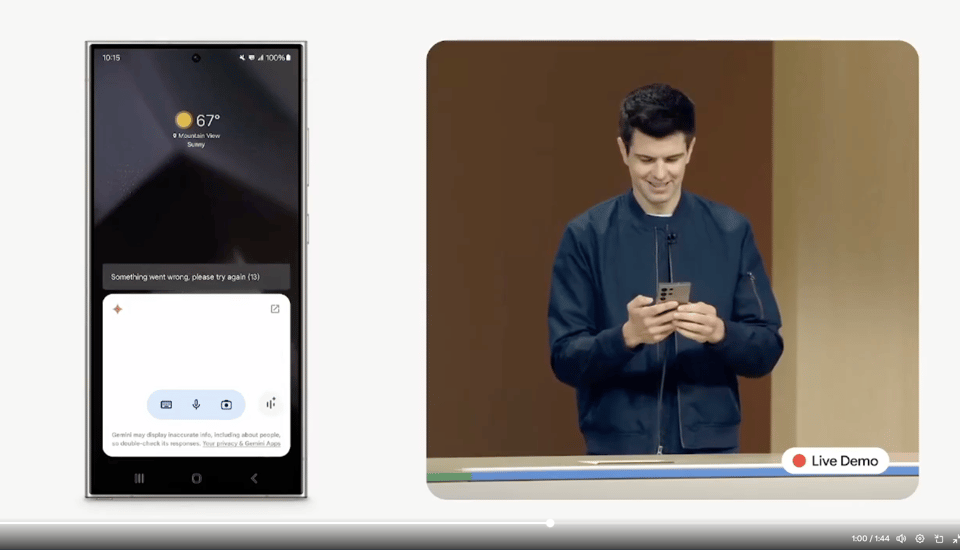

As promised at Google I/O, Gemini Live launched on Android today for Gemini Advanced subscribers, as part of the #MadeByGoogle Pixel 9 launch event. With sympathies to the poor presenter who had 2 demo failures onstage.

The embargoed media reviews of Gemini Live have been cautiously positive. It will have "extensions" that are integrations with your Google Workspace (Gmail, Docs, Drive), YouTube, Google Maps, and other Google properties.

The important thing is Google started the rollout of it today (though we still cannot locate anyone with a live recording of it as of 5pm PT) vs a still-indeterminate date for ChatGPT's Advanced Voice Mode. Gemini Live will also come to iOS subscribers at a future point.

The company also shared demos of Gemini Live with Pixel Buds Pro 2 to people in the audience and with the WSJ. For those that care about the Pixel 9, there are also notable image AI integrations with the Add Me photo feature and the Magic Editor.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Benchmarks

- Cosine released Genie, a new AI software engineering system achieving state-of-the-art performance on SWE-Bench with 30.08%, a 57% improvement over previous models. Key aspects include reasoning datasets, agentic systems with planning and execution abilities, and self-improvement capabilities. @omarsar0

- Falcon Mamba, a new 7B open-access model by TII, was released. It's an attention-free model that can scale to arbitrary sequence lengths and has strong metrics compared to similar-sized models. @osanseviero

- Researchers benchmarked 13 popular open-source and commercial models on context lengths from 2k to 125k, finding that long context doesn't always help with Retrieval-Augmented Generation (RAG). Performance of most generation models decreases above a certain context size. @DbrxMosaicAI

AI Tools and Applications

- Supabase launched an AI-based Postgres service, described as the "ChatGPT of databases". It allows users to build and launch databases, create charts, generate embeddings, and more. The tool is 100% open source. @AlphaSignalAI

- Perplexity AI announced a partnership with Polymarket, integrating real-time probability predictions for events like election outcomes and market trends into their search results. @perplexity_ai

- A tutorial on building a multimodal recipe recommender using Qdrant, LlamaIndex, and Gemini was shared, demonstrating how to ingest YouTube videos and index both text and image chunks. @llama_index

AI Engineering Insights

- An OpenAI engineer shared insights on success in the field, emphasizing the importance of thoroughly debugging and understanding code, and a willingness to work hard to complete tasks. @_jasonwei

- The connection between matrices and graphs in linear algebra was discussed, highlighting how this relationship provides insights into nonnegative matrices and strongly connected components. @svpino

- Keras 3.5.0 was released with first-class Hugging Face Hub integration, allowing direct saving and loading of models to/from the Hub. The update also includes distribution API improvements and new ops supporting TensorFlow, PyTorch, and JAX. @fchollet

AI Ethics and Regulation

- Discussions around AI regulation and its potential impact on innovation were highlighted, with some arguing that premature regulation could hinder progress towards beneficial AI applications. @bindureddy

- Concerns were raised about the effectiveness of AI "business strategy decision support" startups, with arguments that their value is not easily measurable or trustable by customers. @saranormous

AI Community and Events

- The Google DeepMind podcast announced its third season, exploring topics such as the differences between chatbots and agents, AI's role in creativity, and potential life scenarios after AGI is achieved. @GoogleDeepMind

- An AI Python for Beginners course taught by Andrew Ng was announced, designed to help both aspiring developers and professionals leverage AI to boost productivity and automate tasks. @DeepLearningAI

Memes and Humor

- Various humorous tweets and memes related to AI and technology were shared, including jokes about AI model names and capabilities. @swyx


AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Advanced Quantization and Model Optimization Techniques

- Llama-3.1 70B 4-bit HQQ/calibrated quantized model: 99%+ in all benchmarks in lm-eval relative performance to FP16 and similar inference speed to fp16 ( 10 toks/sec in A100 ). (Score: 91, Comments: 26): The Llama-3.1 70B model has been successfully quantized to 4-bit using HQQ/calibrated quantization, achieving over 99% relative performance compared to FP16 across all benchmarks in lm-eval. This quantized version maintains a similar inference speed to FP16, processing approximately 10 tokens per second on an A100 GPU. The achievement demonstrates significant progress in model compression while preserving performance, potentially enabling more efficient deployment of large language models.

- Why is unsloth so efficient? (Score: 94, Comments: 35): Unsloth demonstrates remarkable efficiency in handling 32k text length for summarization tasks on limited GPU memory. The user reports successfully training a model on an L40S 48GB GPU using Unsloth, while traditional methods like transformers llama2 with qlora, 4bit, and bf16 techniques fail to fit on the same hardware. The significant performance boost is attributed to Unsloth's use of Triton, though the exact mechanisms remain unclear to the user.

- Pre-training an LLM in 9 days 😱😱😱 (Score: 216, Comments: 53): Researchers at Hugging Face and Google have developed a method to pre-train a 1.3B parameter language model in just 9 days using 16 A100 GPUs. The technique, called Retro-GPT, combines retrieval-augmented language modeling with efficient pre-training strategies to achieve comparable performance to models trained for much longer, potentially revolutionizing the speed and cost-effectiveness of LLM development.
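For readers new to the 4-bit quantization item above, here is a minimal sketch of the basic idea: store each weight as one of 16 integer levels plus a per-group scale and offset. This is plain round-to-nearest quantization, not HQQ or the calibrated method from the post; the function names, group size, and weights are all made up for illustration.

```python
def quantize_4bit(weights, group_size=4):
    """Round-to-nearest 4-bit quantization with one scale and offset per group."""
    packed = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        # 4 bits give 16 levels (0..15); fall back to 1.0 for a constant group.
        scale = (hi - lo) / 15 or 1.0
        codes = [round((w - lo) / scale) for w in group]
        packed.append((codes, scale, lo))
    return packed


def dequantize_4bit(packed):
    """Map the 4-bit codes back to approximate float weights."""
    return [lo + code * scale for codes, scale, lo in packed for code in codes]


# Hypothetical weights, purely for illustration.
weights = [0.12, -0.40, 0.33, 0.05, 1.20, -0.75, 0.00, 0.48]
packed = quantize_4bit(weights)
restored = dequantize_4bit(packed)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(max_error)  # bounded by half of the largest group's scale
```

Smaller groups give tighter scales and lower error at the cost of storing more scale/offset pairs. Real methods also tune how those parameters are chosen.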

Theme 2. Open-source Contributions to LLM Development

- An extensive open source collection of RAG implementations with many different strategies (Score: 91, Comments: 20): The post introduces an open-source repository featuring a comprehensive collection of 17 different Retrieval-Augmented Generation (RAG) strategies, complete with tutorials and visualizations. The author encourages community engagement, inviting users to open issues, suggest additional strategies, and utilize the resource for learning and reference purposes.

- Falcon Mamba 7B from TII (Technology Innovation Institute TII - UAE) (Score: 87, Comments: 18): The Technology Innovation Institute (TII) in the UAE has released Falcon Mamba 7B, an open-source State Space Language Model (SSLM) combining the Falcon architecture with Mamba's state space sequence modeling. The model, available on Hugging Face, comes with a model card, collection, and playground, allowing users to explore and experiment with this new AI technology.

- Users tested Falcon Mamba 7B, reporting mixed results. One user found it "very very very poor" for a Product Requirements Document task, with responses becoming generic and disorganized.

- The model's performance was questioned, with some users finding it worse than Llama and Mistral models despite claims of superiority. Testing with various prompts yielded disappointing results.

- Some users expressed skepticism towards Falcon models based on past negative experiences, suggesting a potential pattern of underperformance in the Falcon series.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Releases and Capabilities

- Speculation about new GPT-4 model: A post on r/singularity claims ChatGPT mentioned a "new GPT-4o model out since last week", generating discussion about potential new OpenAI releases.

- Flux image generation model: Several posts discuss the capabilities of the new Flux image generation model:

- Impressive impressionist landscape generation using a custom LoRA trained on 5000 images

- Attempts at generating anatomically correct nude images using a custom LoRA

- Creative ad concept generation for fictional products

AI-Generated Media

- AI-generated video with synthetic voice: A demo video shows Flux-generated images animated and paired with AI-generated voice, though commenters note issues with lip sync and voice quality.

Autonomous Vehicles

- Waymo self-driving car issues: A video post shows Waymo autonomous vehicles having difficulties navigating from their starting point, sparking discussion on current limitations.

AI and Society

- AI companions and relationships: A controversial meme post sparked debate about the potential impact of AI companions on human relationships and societal dynamics.

AI Discord Recap

A summary of Summaries of Summaries by GPT4O (gpt-4o-2024-05-13)

1. Model Performance and Benchmarking

- Uncensored Model Outperforms Meta Instruct: An uncensored model tuned to retain the intelligence of the original Meta Instruct model has been released and has outperformed the original model on the LLM Leaderboard 2.

- The model's performance sparked discussions about the trade-offs between censorship and utility, with many users praising its ability to handle a wider range of inputs.

- Mistral Large: The Current Champion?: A member found Mistral Large 2 to be the best LLM right now, outcompeting Claude 3.5 Sonnet for difficult novel problems.

- However, Gemini Flash undercut OpenAI 4o mini severely in price, but OpenAI 4o was less expensive than Mistral Large.

- Google's Gemini Live: It's here, it's now, it's not free: Gemini Live is now available to Advanced Subscribers, offering conversational overlay on Android and more connected apps.

- Many users said that it is an improvement over the old voice mode, but is only available to paid users and lacks live video functionality.

2. GPU and Hardware Discussions

- GPU Wars - A100 vs A6000: Members discussed the pros and cons of A100 vs A6000 GPUs, with one member noting the A6000's great price/VRAM ratio and its lack of limitations compared to 24GB cards.

- The discussion highlighted the importance of VRAM and cost-efficiency for large model training and inference.

- Stable Diffusion Installation Woes: A user reported difficulties installing Stable Diffusion, encountering issues with CUDA installation and finding their token on Hugging Face.

- Another user provided guidance on generating a token through the profile settings menu and installing CUDA correctly.

- TorchAO presentation at Cohere for AI: Charles Hernandez from PyTorch Architecture Optimization will be presenting on TorchAO and quantization at the ml-efficiency group at Cohere For AI.

- The event is hosted by @Sree_Harsha_N and attendees can join Cohere For AI through the provided link.

3. Fine-tuning and Optimization Techniques

- Model Fine-Tuning Tips and Tricks: Discussion revolved around fine-tuning a Phi3 model and whether to use LoRA or full fine-tuning, with one member suggesting RAG as a potential solution.

- Users shared experiences and best practices, emphasizing the importance of choosing the right fine-tuning strategy for different models.

- TransformerDecoderLayer Refactor PR: A PR has been submitted to refactor the TransformerDecoderLayer, touching many files and making core changes in modules/attention.py and modules/transformer.py.

- This PR implements RFC #1211, aiming to improve the TransformerDecoderLayer architecture.

- PyTorch Full FP16: Is it possible?: A user asked if full FP16 with loss/grad scaling is possible with PyTorch core, specifically when fine-tuning a large-ish model from Fairseq.

- They tried using torch.GradScaler() and casting the model to FP16 without torch.autocast('cuda', torch.float16), but got an error 'ValueError: Attempting to unscale FP16 gradients.'
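As background to the FP16 question above, this small sketch shows why loss/grad scaling exists at all: tiny gradients round to zero in half precision unless they are scaled up first. It uses only the Python standard library, not PyTorch, and the gradient value and scale factor are made-up assumptions. As far as we understand it, the usual mixed-precision recipe keeps the parameters in FP32 and runs only the forward pass under autocast, which is likely why `GradScaler` refuses to unscale gradients on an all-FP16 model.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


grad = 1e-8        # hypothetical tiny gradient value
scale = 65536.0    # hypothetical loss scale (2**16)

unscaled = to_fp16(grad)          # too small for FP16, rounds to zero
scaled = to_fp16(grad * scale)    # representable once scaled up
recovered = scaled / scale        # unscale in higher precision

print(unscaled)   # 0.0
print(recovered)  # close to 1e-8
```

The unscale step happens in higher precision, which is the part a pure-FP16 setup cannot do without extra care.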

4. UI/UX Issues in AI Platforms

- Perplexity's UI/UX issues: Users reported several UI/UX issues including missing buttons and a disappearing prompt field, leading to difficulties in interacting with the platform.

- These bugs were reported across both the web and iOS versions of Perplexity, causing significant user frustration and hindering their ability to effectively utilize the platform.

- LM Studio's Model Explorer is Down: Several members reported that HuggingFace, which powers the LM Studio Model Explorer, is down.

- The site was confirmed to be inaccessible for several hours, with connectivity issues reported across various locations.

- Perplexity's Website Stability Concerns: Users reported a significant decline in website stability, citing issues with sporadic search behavior, forgetting context, and interface bugs on both web and iOS versions.

- These issues raised concerns about the reliability and user experience provided by Perplexity.

5. Open-Source AI Frameworks and Community Efforts

- Rust GPU Transitions to Community Ownership: The Rust GPU project, previously under Embark Studios, is now community-owned under the Rust GPU GitHub organization.

- This transition marks the beginning of a broader strategy aimed at revitalizing, unifying, and standardizing GPU programming in Rust.

- Open Interpreter for Anything to Anything: Use Open Interpreter to convert any type of data into any other format.

- This is possible by using the 'Convert Anything' tool, which harnesses the power of Open Interpreter.

- Cohere For AI research lab: Cohere For AI is a non-profit research lab that seeks to solve complex machine learning problems.

- They support fundamental research exploring the unknown, and are focused on creating more points of entry into machine learning research.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Unsloth Pro Early Access: Early access to the Unsloth Pro version is currently being given to trusted members of the Unsloth community.

- A100 vs A6000 GPU Showdown: Members discussed the pros and cons of A100 vs A6000 GPUs, with one member noting the A6000's great price/VRAM ratio and its lack of limitations compared to 24GB cards.

- Uncensored Model Tops the Charts: An uncensored model tuned to retain the intelligence of the original Meta Instruct model has been released and has outperformed the original model on the LLM Leaderboard 2.

- Dolphin Model Suffers From Censorship: One member reported that the Dolphin 3.1 model fails the most basic requests and refuses them, possibly due to its heavy censorship.

- Fine-tuning for AI Engineers: Discussion revolved around fine-tuning a Phi3 model and whether to use LoRA or full fine-tuning, with one member suggesting RAG as a potential solution.

CUDA MODE Discord

- TorchAO Presentation at Cohere For AI: Charles Hernandez from PyTorch Architecture Optimization will be presenting on TorchAO and quantization at the ml-efficiency group at Cohere For AI on August 16th at 20:00 CEST.

- This event is hosted by @Sree_Harsha_N and attendees can join Cohere For AI through the link https://tinyurl.com/C4AICommunityApp.

- CPU matmul Optimization Battle: A user is attempting to write a tiling-based matmul in Zig but is having difficulty achieving optimal performance.

- They received advice on exploring cache-aware loop reordering and the potential for using SIMD instructions, and also compared the performance to GGML and NumPy, which leverages optimized BLAS implementations for incredibly fast results.

- FP16 Weights and CPU Performance: A user asked about handling FP16 weights on the CPU, noting that recent models generally use BF16.

- They were advised to convert the FP16 weights to BF16 or FP32; FP32 loses no accuracy but may slow inference. Converting tensors from FP16 to FP32 at runtime was also suggested as a way to potentially improve performance.

- PyTorch Full FP16: Is it Really Possible?: A user asked if full FP16 with loss/grad scaling is possible with PyTorch core, specifically when fine-tuning a large-ish model from Fairseq.

- They attempted to use `torch.GradScaler()` and cast the model to FP16 without `torch.autocast('cuda', torch.float16)`, but got the error "ValueError: Attempting to unscale FP16 gradients."

- torch.compile: The Missing Manual: A new PyTorch document titled "torch.compile: The Missing Manual" was shared along with a YouTube video.

- The document and video are available at https://docs.google.com/document/d/1y5CRfMLdwEoF1nTk9q8qEu1mgMUuUtvhklPKJ2emLU8/edit#heading=h.ivdr7fmrbeab and https://www.youtube.com/live/rew5CSUaIXg?si=zwbubwKcaiVKqqpf, respectively, and provide detailed information on utilizing `torch.compile`.
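To illustrate the tiling and cache-aware loop reordering advice from the CPU matmul item above, here is a rough sketch of the loop structure. It is written in Python for readability, not in Zig as in the discussion, so it shows the access pattern only and will not be fast. The function name and tile size are assumptions.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply row-major matrices a (n x k) and b (k x m) tile by tile."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for p0 in range(0, k, tile):
            for j0 in range(0, m, tile):
                # i-p-j order: the innermost loop walks a row of b and a row
                # of c contiguously, which is the cache-friendly direction.
                for i in range(i0, min(i0 + tile, n)):
                    for p in range(p0, min(p0 + tile, k)):
                        a_ip = a[i][p]
                        for j in range(j0, min(j0 + tile, m)):
                            c[i][j] += a_ip * b[p][j]
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
result = matmul_tiled(a, b)
print(result)  # [[58.0, 64.0], [139.0, 154.0]]
```

In a compiled language the contiguous inner `j` loop is also the natural place to apply SIMD instructions.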

LM Studio Discord

- Vision Adapters: The Key to Vision Models: Only specific LLMs have vision adapters; most of them go by the name "LLaVa" or "obsidian".

- The "VISION ADAPTER" is a crucial component for vision models; without it, the error the user shared will pop up.

- Mistral Large: The Current Champion?: A member found Mistral Large 2 to be the best LLM right now, outcompeting Claude 3.5 Sonnet for difficult novel problems.

- However, the member also noted that Gemini Flash undercut OpenAI 4o mini severely in price, but OpenAI 4o was less expensive than Mistral Large.

- LM Studio's Model Explorer is Down: Several members reported that HuggingFace, which powers the LM Studio Model Explorer, is down.

- The site was confirmed to be inaccessible for several hours, with connectivity issues reported across various locations.

- Llama 3.1 Performance Issues: A user reported that their Llama 3 8B model is now running at only 3 tok/s, compared to 15 tok/s before a recent update.

- The user checked their GPU offload settings and reset them to default, but the problem persists; the issue appears to be related to a change in the recent update.

- LLM Output Length Control: A member is looking for ways to restrict the output length of responses, as some models tend to output whole paragraphs even when instructed to provide a single sentence.

- While system prompts can be modified, the member found that 8B models, specifically Meta-Llama-3.1-8B-Instruct-GGUF, are not the best at following precise instructions.

OpenAI Discord

- Google Rolls Out Gemini Live, But Not for Everyone: Gemini Live is now available to Advanced Subscribers, offering conversational overlay on Android and more connected apps.

- Many users said that it is an improvement over the old voice mode, but is only available to paid users and lacks live video functionality.

- Strawberry: Marketing Genius or OpenAI's New Face?: The discussion of a mysterious user named "Strawberry" with a string of emojis sparked speculation about a possible connection to OpenAI or Sam Altman.

- Users remarked on how the strawberry emojis, linked to Sam Altman's image of holding strawberries, were a clever marketing strategy, successfully engaging users in conversation.

- Project Astra's Long-Awaited Arrival: The announcement of Gemini Live hinted at Project Astra, but many users were disappointed by the lack of further development.

- One user even drew a comparison to Microsoft Recall, suggesting that people are skeptical about the product's release due to security concerns.

- LLMs: Not a One-Size-Fits-All Solution: Some users expressed skepticism about LLMs being the solution to every problem, especially when it comes to tasks like math, database, and even waifu roleplay.

- Other users emphasized that tokenization is still a fundamental weakness, and LLMs require a more strategic approach rather than relying on brute force tokenization to solve complex problems.

- ChatGPT's Website Restrictions: A Persistent Issue: A member asked about getting ChatGPT to access a website and retrieve an article, but another member noted that ChatGPT might be blocked from crawling the site or might be hallucinating its content.

- One user asked if anyone has attempted to use the term "web browser GPT" as a possible workaround.

Perplexity AI Discord

- Perplexity's UI/UX Bugs: Users encountered UI/UX issues including missing buttons and a disappearing prompt field, leading to difficulties in interacting with the platform.

- These bugs were reported across both the web and iOS versions of Perplexity, causing significant user frustration and hindering their ability to effectively utilize the platform.

- Sonar Huge: New Model, New Problems: The new model "Sonar Huge" replaced the Llama 3.1 405B model in Perplexity Pro.

- However, users observed that the new model was slow and failed to adhere to user profile prompts, prompting concerns about its effectiveness and performance.

- Perplexity's Website Stability Issues: Users reported a significant decline in the website's stability, with issues like sporadic search behavior, forgetting context, and various interface bugs.

- These issues were observed on both web and iOS versions, raising concerns about the reliability and user experience provided by Perplexity.

- Perplexity's Success Team Takes Note: Perplexity's Success Team acknowledged receiving user feedback on the recent bugs and glitches experienced in the platform.

- They indicated awareness of the reported issues and their impact on user experience, hinting at potential future solutions and improvements.

- Feature Implementation Delays at Perplexity: A user expressed frustration over the prolonged wait time for feature implementation.

- They highlighted the discrepancy between promised features and the actual rollout pace, emphasizing the importance of faster development and delivery to meet user expectations.

Stability.ai (Stable Diffusion) Discord

- Stability AI's SXSW Panel Proposal: Stability AI CEO Prem Akkaraju and tech influencer Kara Swisher will discuss the importance of open AI models and the role of government in regulating their impact at SXSW.

- The panel will explore the opportunities and risks of AI, including job displacement, disinformation, CSAM, and IP rights, and will be available to view on PanelPicker® at PanelPicker | SXSW Conference & Festivals.

- Google Colab Runtime Stops Working: A user encountered issues with their Google Colab runtime stopping prematurely.

- Another user suggested switching to Kaggle, which offers more resources and longer runtimes, providing a solution for longer AI experimentation.

- Stable Diffusion Installation and CUDA Challenges: A user faced difficulties installing Stable Diffusion due to issues with CUDA installation and locating their Hugging Face token.

- Another user provided guidance on generating a token through the Hugging Face profile settings menu and correctly installing CUDA, offering a solution to the user's challenges.

- Model Merging Discussion: A user suggested using the difference between UltraChat and base Mistral to improve Mistral-Yarn as a potential model merging tactic.

- While some users expressed skepticism, the original user remained optimistic, citing successful past attempts at model merging, showcasing potential advancements in AI model development.

- Flux Realism for Face Swaps: A user sought alternative solutions to achieve realistic face swaps after experimenting with fal.ai, which produced cartoonish results.

- Another user suggested using Flux, as it is capable of training on logos and accurately placing them onto images, providing a potential solution for the user's face swap goals.

OpenRouter (Alex Atallah) Discord

- Gemini Flash 1.5 Price Drop: The input token costs for Gemini Flash 1.5 have decreased by 78% and the output token costs have decreased by 71%.

- This makes the model more accessible and affordable for a wider range of users.

- GPT-4o Extended Early Access Launched: Early access for GPT-4o Extended has launched through OpenRouter.

- You can access it via this link: https://x.com/OpenRouterAI/status/1823409123360432393.

- OpenRouter's Update Hurdle: OpenRouter's update was blocked by the new 1:4 token:character ratio from Gemini, which doesn't map cleanly to the `max_tokens` parameter validation.

- A user expressed frustration about the constantly changing token:character ratio and suggested switching to a per-token pricing system.

- Euryale 70B Downtime: A user reported that Euryale 70B was down for some users but not for them, prompting questions about any issues or error rates.

- Further discussion revealed multiple instances of downtime, including a 10-minute outage due to an update and possible ongoing issues with location availability.

- Model Performance Comparison: Users compared the performance of Groq 70b and Hyperbolic, finding nearly identical results for the same prompt.

- This led to a discussion about the impact of FP8 quantization, with some users noting that it makes a minimal difference in practice, but others pointing to potential degraded quality with certain providers.
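The 1:4 token:character ratio mentioned above is easy to picture with a little arithmetic. This sketch shows one way a character count could be turned into an estimated token count, and a `max_tokens` limit into a character budget. The helper names are hypothetical and this is not OpenRouter's actual validation logic.

```python
import math

CHARS_PER_TOKEN = 4  # the 1:4 token:character ratio from the discussion


def chars_to_tokens(num_chars):
    """Estimate tokens from a character count, rounding up."""
    return math.ceil(num_chars / CHARS_PER_TOKEN)


def max_tokens_to_char_budget(max_tokens):
    """Translate a max_tokens limit into an approximate character budget."""
    return max_tokens * CHARS_PER_TOKEN


print(chars_to_tokens(1001))            # 251
print(max_tokens_to_char_budget(256))   # 1024
```

The rounding is where the mismatch shows up: a character count rarely divides evenly into tokens, so any limit expressed in one unit is only approximate in the other.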

Modular (Mojo 🔥) Discord

- Mojo License's Catchy Clause: The Mojo License prohibits the development of applications using the language for competitive activities.

- However, it states that this rule does not apply to applications that become competitive after their initial release, but it is unclear how this clause will be applied.

- Mojo Open-Sourcing Timeline Remains Unclear: Users inquired about the timeline for open-sourcing the Mojo compiler.

- The team confirmed that the compiler will be open-sourced eventually but did not provide a timeline, suggesting it may be a while before contributions can be made.

- Mojo Development: Standard Library Focus: The current focus of Mojo development is on building out the standard library.

- Users are encouraged to contribute to the standard library, while work on the compiler is ongoing, but not yet open to contributions.

- Stable Diffusion and Mojo: Memory Matters: A user encountered a memory pressure issue running the Stable Diffusion Mojo ONNX example in WSL2, leading to the process being killed.

- The user had 8GB allocated to WSL2, but the team advised doubling it as Stable Diffusion 1.5 is approximately 4GB, requiring more memory for both the model and its optimization processes.

- Java by Microsoft: A Blast from the Past: One member argued that 'Java by Microsoft' was unnecessary and could have been avoided, while another countered that it seemed crucial at the time.

- The discussion acknowledged the emergence of newer solutions and the decline of 'Java by Microsoft' over time, highlighting its 20-year run and its relevance in the Microsoft marketshare.

Cohere Discord

- Cohere For AI Research Lab Expands: Cohere For AI is a non-profit research lab focused on complex machine learning problems. They are creating more points of entry into machine learning research.

- They support fundamental research exploring the unknown.

- Price Changes on Cohere's Website: A user inquired about the classify feature's pricing, as it's no longer listed on the pricing page.

- No response was provided.

- JSONL Uploads Failing: Users reported issues uploading JSONL datasets for fine-tuning.

- Cohere support acknowledged the issue, stating it is under investigation and suggesting the API for dataset creation as a temporary solution.

- Azure JSON Formatting Not Supported: A member asked about structured output with `response_format` in Azure, but encountered an error.

- It was confirmed that JSON formatting is not yet available on Azure.

- Rerank Overview and Code Help: A user asked for help with the Rerank Overview document, encountering issues with the provided code.

- The issue was related to an outdated document, and a revised code snippet was provided. The user was also directed to the relevant documentation for further reference.

Torchtune Discord

- TransformerDecoderLayer Refactor Lands: A PR has been submitted to refactor the TransformerDecoderLayer, touching many files and making core changes in modules/attention.py and modules/transformer.py.

- This PR implements RFC #1211, aiming to improve the TransformerDecoderLayer architecture, and can be found here: TransformerDecoderLayer Refactor.

- DPO Preferred for RLHF: There is a discussion about testing the HH RLHF builder with DPO or PPO, with DPO being preferred for preference datasets while PPO is dataset-agnostic.

- The focus is on DPO, with the expectation of loss curves similar to normal SFT, and potential debugging needed for the HH RLHF builder, which may be addressed in a separate PR.

- Torchtune WandB Issues Resolved: A user encountered issues accessing WandB results for Torchtune, with access being granted after adding the user as a team member.

- The user reported poor results with the default DPO config and turning gradient accumulation off, but later discovered it started working again, potentially due to a delay or some other factor.

- Torchtune Performance with DPO: There is a discussion about potential issues with the default DPO config causing poor performance in Torchtune.

- The user suggested trying SimPO on the Stack Exchange Paired dataset and turning gradient accumulation back on, as having a balanced number of positive and negative examples in the batch can significantly improve loss.

- PyTorch Conference: A Gathering of Minds: There is a discussion about the upcoming PyTorch Conference, with links to the website and details on featured speakers.

- You can find more information about the conference here: PyTorch Conference. There was also a mention of sneaking in a participant as an 'academic' for the conference, but this is potentially a joke.
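For context on the DPO items above, here is a minimal sketch of the standard DPO objective for a single preference pair, following the published formulation rather than Torchtune's implementation. The function name, the `beta` value, and the log-probabilities are all made up for illustration.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities."""
    chosen_reward = beta * (policy_chosen - ref_chosen)
    rejected_reward = beta * (policy_rejected - ref_rejected)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)), written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))


# Policy identical to the reference model: the loss starts at log(2).
start = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# Policy now favors the chosen response more than the reference does.
better = dpo_loss(-10.0, -18.0, -12.0, -15.0)
print(start, better)
```

Because the loss starts at log(2) and falls as the policy separates chosen from rejected responses, a flat or noisy curve near that starting value is a quick sign that something in the config is off.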

OpenAccess AI Collective (axolotl) Discord

- Perplexity Pro's Reasoning Abilities: A user noted that Perplexity Pro has gotten "crazy good at reasoning" and is able to "literally count letters" like it "ditched the tokenizer".

- They shared a link to a GitHub repository that appears to be related to this topic.

- Llama 3 MoE?: A user asked if anyone has made a "MoE" version of Llama 3.

- Grad Clipping Demystified: A user asked about the functionality of grad clipping, specifically wondering what happens to gradients when they exceed the maximum value.

- Another user explained that grad clipping essentially clips the gradient to a maximum value, preventing it from exploding during training.

- OpenAI Benchmarks vs New Models: A user shared their surprise at OpenAI releasing a benchmark instead of a new model.

- They speculated that this might be a strategic move to steer the field towards better evaluation tools.

- Axolotl's Capabilities: A member noted that AutoGPTQ could do certain things, implying that Axolotl may be able to do so as well.

- They were excited about the possibility of Axolotl replicating this capability.
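On the grad clipping question above, "clipping to a maximum value" can mean two slightly different things, sketched here in plain Python. The function names are hypothetical and this is not Axolotl's or PyTorch's code: one variant clamps each component, the other rescales the whole gradient vector when its norm is too large.

```python
import math


def clip_by_value(grads, max_value):
    """Clamp each gradient component into [-max_value, max_value]."""
    return [max(-max_value, min(max_value, g)) for g in grads]


def clip_by_norm(grads, max_norm):
    """Rescale the whole gradient vector if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * max_norm / norm for g in grads]


grads = [3.0, -4.0]                 # L2 norm is 5.0
by_value = clip_by_value(grads, 1.0)
by_norm = clip_by_norm(grads, 1.0)
print(by_value)  # [1.0, -1.0]
print(by_norm)   # roughly [0.6, -0.8], same direction with norm 1.0
```

Norm clipping preserves the gradient's direction, while value clipping can change it. In both cases gradients below the threshold pass through unchanged.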

LAION Discord

- Grok 2.0 Early Leak: A member shared a link to a Tweet about Grok 2.0 features and abilities, including image generation using the FLUX.1 model.

- The tweet also noted that Grok 2.0 is better at coding, writing, and generating news.

- Flux.1 Makes an Inflection Point: A member mentioned that many Elon fan accounts had predicted X would use MJ (presumably Midjourney), suggesting that Flux.1 may mark an inflection point in model usage.

- The member questioned if Flux.1 is Schnellit's Pro model, given Elon's history.

- Open-Source Image Annotation Search: A member asked for recommendations for good open-source GUIs for annotating images quickly and efficiently.

- The member specifically mentioned single-point annotations, straight-line annotations, and drawing polygonal segmentation masks.

- Elon's Model Bluff: A member discussed the possibility that Elon is using a development version of Grok and calling the bluff on weight licenses.

- This member believes that Elon could potentially call this a "red-pill" version.

- 2D Pooling Success: A user expressed surprise at how well 2D pooling works.

- The user noted it was recommended by another user, and is currently verifying the efficacy of a new position encoding they believe they may have invented.

tinygrad (George Hotz) Discord

- Tensor Filtering Performance?: A user asked for the fastest way to filter a Tensor, such as t[t % 2 == 0], currently doing it by converting to list, filtering, and converting back to list.

- A suggestion was made to use masking if computing something on a subset of the Tensor, but it was noted that the exact functionality is not possible yet.

- Transcendental Folding Refactor Optimization: A user proposed a refactor to only apply transcendental rewrite rules if the backend does not have a code_for_op for the uop.

- The user implemented a transcendental_folding function and called it from UOpGraph.__init__ but wasn't sure how this could be net negative lines, and asked what could be removed.

- CUDA TIMEOUT ERROR - Resolved: A user ran a script using CLANG=1 and received a RuntimeError: wait_result: 10000 ms TIMEOUT! error.

- The error occurred with the default runtime and was resolved by using CUDA=1, and the issue was potentially related to #4562.

- Nvidia FP8 PR Suggestions: A user made suggestions on the Nvidia FP8 PR for Tinygrad.
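The masking suggestion from the Tensor filtering thread above can be illustrated without any tinygrad calls. This is a plain-Python sketch on lists, a stand-in for the idea rather than tinygrad's API; the helper names are hypothetical. Filtering produces an output whose length depends on the data, whereas masking keeps the shape fixed and zeroes out the unwanted elements, which is enough when the goal is a reduction over a subset.

```python
def filter_even(values):
    """Data-dependent filtering: the output length depends on the contents."""
    return [v for v in values if v % 2 == 0]

def masked_sum_even(values):
    """Masking: keep the shape, zero out elements that fail the predicate."""
    mask = [1 if v % 2 == 0 else 0 for v in values]
    masked = [v * m for v, m in zip(values, mask)]  # same length as input
    return sum(masked)

values = [1, 2, 3, 4, 5, 6]
print(filter_even(values))      # the even elements only
print(masked_sum_even(values))  # sum over the even elements, no reshaping
```

The masked version never needs to know how many elements pass the test, which is the property that makes it friendlier to fixed-shape tensor backends.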

MLOps @Chipro Discord

- Poe Partners with Agihouse for Hackathon: Poe (@poe_platform) announced a partnership with Agihouse (@agihouse_org) for a "Previews Hackathon" to celebrate their expanded release.

- The hackathon, hosted on AGI House, invites creators to build innovative "in-chat generative UI experiences".

- In-Chat UI is the Future: The Poe Previews Hackathon encourages developers to create innovative and useful "in-chat generative UI experiences", highlighting the importance of user experience in generative AI.

- The hackathon hopes to showcase the creativity and skill of its participants in a competitive environment.

- Virtual Try On Feature Speeds up Training: A member shared their experience building a virtual try-on feature, noting its effectiveness in speeding up training runs by storing extracted features.

- The feature uses online preprocessing and stores extracted features in a document store table, allowing for efficient retrieval during training.

- Flexible Virtual Try On Feature: A member inquired about the specific features being extracted for the virtual try-on feature.

- The member detailed the generic nature of the approach, successfully accommodating models of various sizes, demonstrating its flexibility in handling computational demands and model complexities.
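The feature-caching idea described above can be sketched as follows. This is a minimal illustration, assuming an in-memory SQLite table as a stand-in for the document store; extract_features, the FeatureStore class, the table layout, and the sample IDs are all hypothetical and not taken from the member's system.

```python
import json
import sqlite3

def extract_features(sample):
    """Hypothetical stand-in for an expensive feature extractor."""
    return [float(len(sample)), float(sum(map(ord, sample)) % 97)]

class FeatureStore:
    """Caches extracted features in a table keyed by sample ID."""

    def __init__(self):
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE features (sample_id TEXT PRIMARY KEY, payload TEXT)"
        )
        self.misses = 0  # counts how often extraction actually ran

    def get(self, sample_id, sample):
        row = self.db.execute(
            "SELECT payload FROM features WHERE sample_id = ?", (sample_id,)
        ).fetchone()
        if row is not None:
            return json.loads(row[0])  # cache hit: skip extraction
        self.misses += 1
        feats = extract_features(sample)
        self.db.execute(
            "INSERT INTO features VALUES (?, ?)", (sample_id, json.dumps(feats))
        )
        return feats

store = FeatureStore()
first = store.get("img-001", "garment photo bytes")   # extracts and stores
second = store.get("img-001", "garment photo bytes")  # served from the table
print(first == second, store.misses)
```

Because the store only keys on a sample ID and holds an opaque payload, the same pattern applies regardless of which model produced the features.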

LangChain AI Discord

- Llama 3.1 8b Supports Structured Output: A user confirmed that Llama 3.1 8b can produce structured output through tool use, having tested it directly with llama.cpp.

- RAG Struggles With Technical Images: A user is seeking advice on extracting information from images like electrical diagrams, maps, and voltage curves for RAG on technical documents.

- They mentioned encountering difficulties with traditional methods, highlighting the need for capturing information not present in text form but visually interpretable by experts.

- Next.js POST Request Misinterpreted as GET: A user encountered a 405 Method Not Allowed error when making a POST request from a Next.js web app running on EC2 to a FastAPI endpoint on the same EC2 instance.

- They observed the request being incorrectly interpreted as a GET request despite explicitly using the POST method in their Next.js code.

- AWS pip install Issue Resolved: A user resolved an issue with pip install on an AWS system by installing packages specifically for the Unix-based environment.

- The problem arose from the virtual environment mistakenly emulating Windows during the pip install process, causing the issue.

- Profundo Launches to Automate Research: Profundo automates data collection, analysis, and reporting, enabling everyone to do deep research on topics they care about.

- It minimizes errors and maximizes productivity, allowing users to focus on making informed decisions.

OpenInterpreter Discord

- Open Interpreter in Obsidian: A new YouTube series will demonstrate how to use Open Interpreter in the Obsidian note-taking app.

- The series will focus on how the Open Interpreter plugin allows you to control your Obsidian vault, which could have major implications for how people work with knowledge. Here's a link to Episode 0.

- AI Agents in the Enterprise: A user in the #general channel asked about the challenges of monitoring and governance of AI agents within large organizations.

- The user invited anyone working on AI agents within an enterprise to share their experiences.

- Screenless Personal Tutor for Kids: A member in the #O1 channel proposed using Open Interpreter to create a screenless personal tutor for kids.

- The member requested feedback and asked if anyone else was interested in collaborating on this project.

- Convert Anything Tool: The "Convert Anything" tool can be used to convert any type of data into any other format using Open Interpreter.

- This tool harnesses the power of Open Interpreter and has potential for significant applications across various fields.

Alignment Lab AI Discord

- SlimOrca Without Deduplication: A user asked about a version of SlimOrca that has soft prompting removed and no deduplication, ideally including the code.

- They also asked if anyone had experimented with fine-tuning (FT) on data with or without deduplication, and with or without soft prompting.

- Fine-tuning with Deduplication: The user inquired about the effects of fine-tuning (FT) with soft prompting versus without soft prompting.

- They also inquired about the effects of fine-tuning (FT) on deduplicated data versus non-deduplicated data.

LLM Finetuning (Hamel + Dan) Discord

- Building an Agentic Jupyter Notebook Automation System: A member proposed constructing an agentic system to automate Jupyter Notebooks, aiming to create a pipeline that takes an existing notebook as input, modifies cells, and generates multiple variations.

- They sought recommendations for libraries, cookbooks, or open-source projects that could serve as a starting point for this project, drawing inspiration from similar tools like Devin.

- Automated Notebook Modifications and Validation: The system should be able to intelligently replace specific cells within a Jupyter Notebook, generating diverse notebook versions based on these modifications.

- Crucially, the system should possess an agentic quality, enabling it to validate its outputs and iteratively refine the modifications until it achieves the desired results.
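The replace-then-validate loop described above can be sketched with the standard library alone. This is a rough illustration under stated assumptions: notebooks are handled as nbformat-4-style dicts, the candidate replacements are a fixed list standing in for whatever an LLM agent would propose, and every function name here is hypothetical.

```python
import copy

def make_notebook(sources):
    """Build a minimal nbformat-4-style notebook dict from code strings."""
    cells = [
        {"cell_type": "code", "source": s, "metadata": {},
         "outputs": [], "execution_count": None}
        for s in sources
    ]
    return {"nbformat": 4, "nbformat_minor": 5, "metadata": {}, "cells": cells}

def replace_cell(notebook, index, new_source):
    """Return a copy of the notebook with one cell's source swapped out."""
    variant = copy.deepcopy(notebook)
    variant["cells"][index]["source"] = new_source
    return variant

def validate(notebook, check):
    """Run all code cells in one namespace, then apply a check to it."""
    namespace = {}
    try:
        for cell in notebook["cells"]:
            if cell["cell_type"] == "code":
                exec(cell["source"], namespace)
    except Exception:
        return False  # a crashing variant is rejected
    return check(namespace)

def first_valid_variant(notebook, index, candidates, check):
    """Try candidate replacements in order; return the first that validates."""
    for source in candidates:
        variant = replace_cell(notebook, index, source)
        if validate(variant, check):
            return variant
    return None

base = make_notebook(["x = 2", "y = x + 1"])
candidates = ["y = x / 0", "y = x * 10", "y = x ** 2"]  # stand-in proposals
result = first_valid_variant(base, 1, candidates, lambda ns: ns.get("y") == 20)
print(result["cells"][1]["source"])
```

A real system would execute cells in an isolated kernel rather than with exec, and would feed validation failures back to the agent to generate the next candidate instead of walking a fixed list.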

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!