[AINews] Gemini (Experimental-1114) retakes #1 LLM rank with 1344 Elo

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Race dynamics is all you need.

AI News for 11/13/2024-11/14/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 2424 messages) for you. Estimated reading time saved (at 200wpm): 272 minutes. You can now tag @smol_ai for AINews discussions!

Special note from the team: Thanks Andrej! Hi to the >3k of you who joined us! As a brief intro, hi, we are AI News, a side project started over the 2023 holiday break to solve AI Discord overwhelm almost 1 year ago. We currently save ~15 human years of reading per day.

- The main thing to understand is this is a recursively summarized tool for AI Engineers. You are not meant to read the whole thing! Skim, then cmd+f or search archives for more on the thing you want.

- If you'd like a personalized version pointed at different data sources/with different priorities, you can now try Smol Talk for Twitter and Reddit which we just launched today!

- We are also experimenting with smol text ads to fund development, email us only if you have something relevant for AI Engineers!

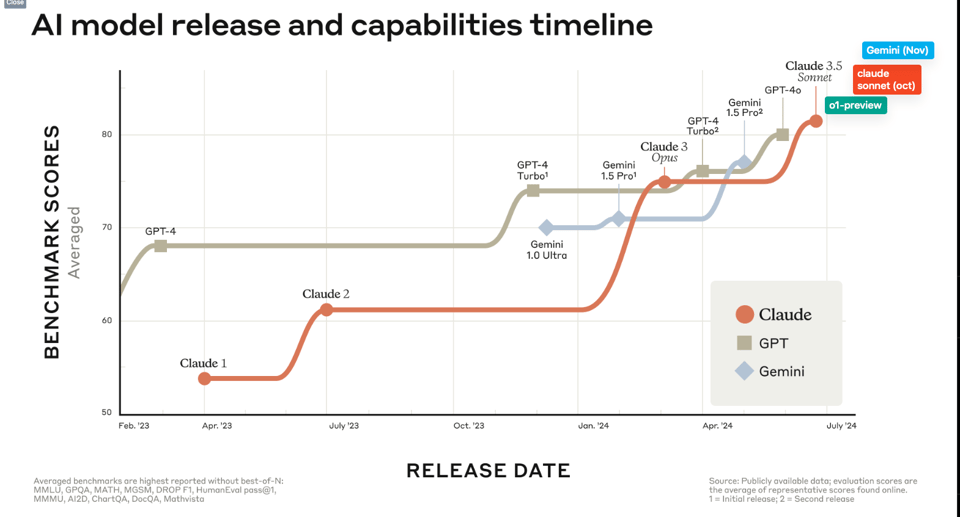

When Anthropic announced 3.5 Sonnet in June, they also published an oddly descriptive chart demonstrating what Dario terms a "race to the top" - the world's top 3 AI labs (ex Meta/X.ai/01.ai) running up benchmarks in tight lockstep. With the latest Nov 14 edition of Gemini, we can now update this chart with the fall editions of all 3 frontier models:

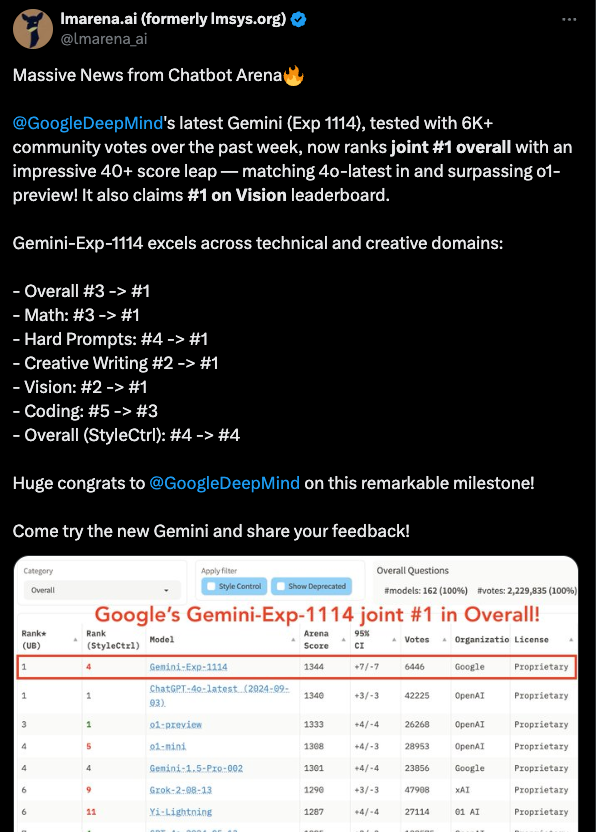

LMArena (formerly LMsys) explains the rank updates best:

There is no paper accompanying this update, nor is it yet available in the API, so there's unfortunately not much else to discuss here - normally a disqualifier for feature story, but when we have a new #1 LLM, we have to report on it.

This update comes at a convenient time for Gemini just as it deals with some very bizarre and alarming alignment issues.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Tools

- Model Releases and Enhancements: @jerryjliu0 introduced a new RAG technique for contiguous chunk retrieval, enhancing @OpenAI's GPT-4 capabilities. Additionally, @AnthropicAI announced the release of their benchmark for jailbreak robustness, emphasizing adaptive defenses against new attack classes. @LangChainAI launched Promptim, an experimental library for prompt optimization, aimed at systematically improving AI system prompts.

- Tool Integrations and Services: @Philschmid highlighted the decoupling of hf(.co)/playground into a standalone open-source project, fostering community collaboration. @AIatMeta unveiled NeuralFeels with neural fields, enhancing visuotactile perception for in-hand manipulation.

AI Governance and Ethics

- Resignations and Governance Insights: @RichardMCNgo announced his resignation from OpenAI, urging stakeholders to read his thoughtful message on AI governance and theoretical alignment. @teortaxesTex discussed the importance of truthful public information in AI governance to prevent misinformation and ensure ethical alignment.

- Ethical Deployment and Guardrails: @AndrewYNg and @ShreyaR promoted a new course on AI guardrails, focusing on reliable LLM applications. @AnthropicAI emphasized the significance of jailbreak rapid response in making LLMs safer through adaptive techniques.

Scaling AI and Evaluation Challenges

- Scaling Limits and Evaluation Saturation: @swxy addressed the notion that scaling has hit a wall, citing evaluation saturation as a primary factor. @synchroz echoed concerns about scaling limitations, highlighting the economic challenges in further scaling AI models.

- Compute and Optimization: @bindureddy argued that the perceived AI slowdown is misleading, attributing it to the saturation of benchmarks. @sarahookr discussed the diminishing returns of scaling pre-training and the need to explore architecture optimization beyond current paradigms.

Software Tools, Libraries, and Development Platforms

- Development Tools and Libraries: @tom_doerr shared multiple releases, including a zero-config tool for development certificates and the Spin framework for serverless applications powered by WebAssembly. @wightmanr enhanced timm.optim, making optimizer factories more accessible for developers.

- Integration and Workflow Automation: @LangChainAI demonstrated how AI Assistants can leverage custom knowledge sources for improved threat detection. @swyx emphasized the importance of focusing on AI product development rather than research for non-researchers.

AI Research and Papers

- Published Research and Papers: @SchmidhuberAI presented a new paper on narrative essence for story formation with potential military applications. @wsmerk shared insights from the paper titled "On the diminishing returns of scaling", discussing compute thresholds and the limitations of current scaling laws.

- Conference Highlights: @sarahookr showcased their main-track work at #EMNLP2024, highlighting Aya Expanse breakthroughs. @finbarrtimbers announced an upcoming event related to reinforcement learning and the exploration of exploitation/exploration boundaries.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Nvidia RTX 5090 enters production with 32GB VRAM

- Nvidia RTX 5090 with 32GB of RAM rumored to be entering production (Score: 271, Comments: 139): Nvidia is reportedly shifting its production focus to the RTX 50 series, with the RTX 5090 rumored to feature 32GB of RAM. Concerns are rising about potential scalper activity affecting the availability and pricing of these new GPUs, as highlighted in multiple sources including VideoCardz and PCGamesN.

- There is skepticism about the 32GB RAM rumor for the RTX 5090, with some users questioning the validity of the sources and suggesting that Nvidia might change specifications last minute, referencing past incidents like the 4080/4070 fiasco. The rumor of 32GB VRAM has been circulating widely, but it remains unconfirmed by official sources.

- Users express concerns over scalper activity and high pricing, with predictions of prices reaching $3000 or more due to scalpers and market demand. Some comments discuss the potential impact of Nvidia's production shifts and legal restrictions, like the inability to sell in China, on the availability and pricing in other regions such as the European Union.

- Discussions highlight the use cases of RTX 5090 beyond gaming, focusing on professional and hobbyist applications like running local models and AI tasks. Users compare the potential performance and VRAM requirements of the 5090 with current models like the RTX 3090 and emphasize the importance of VRAM in handling tasks like AI video generation and large language models.

Theme 2. MMLU-Pro scores: Qwen and Claude Sonnet models

- MMLU-Pro score vs inference costs (Score: 215, Comments: 31): MMLU-Pro score and inference costs are likely the focus of analysis, examining the relationship between model performance metrics and the financial implications of running inference tasks. This discussion is relevant for engineers optimizing AI models for cost-efficiency while maintaining high performance.

- Claude Sonnet 3.5 is praised for its versatility and accuracy in handling complex tasks, though it requires specific prompting for novel solutions. It is considered a highly efficient tool for programmers due to its ability to understand and solve errors quickly.

- The Tencent Hunyuan model is noted for its high MMLU score and its architecture as a mixture of experts with 52 billion active parameters. This model is suggested as potentially outperforming existing models like Sonnet 3.5.

- Discussions highlight the Qwen models as cost-effective, with Qwen 2.5 prominently defining the Pareto curve for performance and cost efficiency. The Haiku model is criticized for being overpriced, and the analysis of inference costs shows Claude 3.5 Sonnet has significantly higher costs compared to 70B models.

Theme 3. Qwen2.5 RPMax v1.3: Creative Writing Model

- Write-up on repetition and creativity of LLM models and New Qwen2.5 32B based ArliAI RPMax v1.3 Model! (Score: 103, Comments: 60): The post discusses the Qwen2.5 32B based ArliAI RPMax v1.3 Model, focusing on its repetition and creativity in the context of LLM (Large Language Model) performance. The absence of a detailed post body limits specific insights into the model's training methods or performance metrics.

- Model Versions and Training Improvements: The discussion highlights the evolution of the RPMax model from v1.0 to v1.3, with improvements in training parameters and dataset curation. Notably, v1.3 uses rsLoRA+ for better learning and lower loss, and the model is praised for its creativity and reduced repetition in writing tasks.

- Dataset and Fine-Tuning Strategy: The model's success is attributed to a curated dataset that avoids repetition and focuses on quality over quantity. The training involves only a single epoch with a higher learning rate, aiming for creative output rather than exact replication of training data, which differs from traditional fine-tuning methods.

- Community Feedback and Model Performance: Users report that the model achieves its goal of being a creative writing/RP model, with some describing interactions as feeling almost like engaging with a real person. The model's performance in creative writing is discussed, with comparisons to other models like EVA-Qwen2.5-32B for context handling and writing quality.

Theme 4. Qwen 32B vs 72B-Ins on Leetcode Comparison

- Qwen 32B Coder-Ins vs 72B-Ins on the latest Leetcode problems (Score: 79, Comments: 23): The post evaluates the performance of Qwen 32B Coder versus 72B non-coder variant and GPT-4o on recent Leetcode problems, highlighting the models' strengths in reasoning over pure coding. Tests were conducted using vLLM with models quantized to FP8 and a 32,768-token context length, running on H100 GPUs. The author notes that this benchmark is 70% reasoning and 30% coding, emphasizing that hard Leetcode problems were mostly excluded due to their complexity and the models' generally poor performance on them.

- The author confirms that all test results are based on pass@1, which is a common metric for evaluating model performance on coding tasks. A user suggests expanding the tests to include 14B and 7B coders for broader comparison, and the author expresses openness to this if there is enough interest, potentially leading to an open-source project.

- One commenter suggests that the skill required to solve Leetcode problems has become more accessible due to advancements in AI, equating the skillset to the size of a PS4 game. Another user counters that this raises the skill floor, implying that while AI can handle simpler tasks, more complex problem-solving skills are still necessary.

- There is interest in comparing different quantization methods, specifically FP8 versus Q4_K_M, to determine which is better for inference. This highlights ongoing curiosity about the efficiency and performance trade-offs in model quantization techniques.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Gemini 1.5 Pro Released - Claims Top Spot on LMSys Leaderboard

- Gemini-1.5-Pro, the BEST vision model ever, WITHOUT EXCEPTION, based on my personal testing (Score: 48, Comments: 28): Gemini-1.5-Pro appears to be a multimodal vision model, but without any post content or testing details provided, no substantive claims about its performance can be verified. The title makes subjective claims about the model's superiority but lacks supporting evidence or comparative analysis.

- Users noted varying performance across different tasks, with one reporting that for graph analysis, their testing showed Claude Sonnet 3.5 > GPT-4 > Gemini-1.5-Pro, though others cautioned against drawing conclusions from limited testing samples.

- Discussion of multimodal capabilities highlighted both strengths and limitations, with users noting that while Gemini and Imagen are underrated for multimodal input and image generation, the technology isn't yet advanced enough for real-time webcam interaction.

- Specific image analysis comparisons showed mixed accuracy, with Flash correctly identifying certain details (pigtails) while Pro provided more comprehensive descriptions, though both had some inaccuracies in their observations.

- New Gemini model #1 on lmsys leaderboard above o1 models ? Anthropic release 3.5 opus soon (Score: 163, Comments: 57): Google's Gemini has reached the #1 position on the LMSys leaderboard, surpassing OpenAI's models in performance rankings. Anthropic plans to release their new Claude 3.5 Opus model in the near future.

- LMSYS leaderboard is criticized for lacking quality control and being based solely on user votes about formatting rather than actual performance. Multiple users point to LiveBench as a more reliable benchmark for model evaluation.

- Users debate the performance of Claude 3.5 Sonnet (also referred to as 3.6), with some highlighting its 32k input context and slower but more thorough "thinking" approach. Several alternative benchmarking resources were shared, including Scale.com and LiveBench.ai.

- Anthropic's CEO Dario acknowledged in a Lex interview that naming both versions "3.5" was confusing and suggested they should have called the new version "3.6" instead. The company has recently removed the "new" label from their UI for the model.

Theme 2. Undetectable ML Model Backdoors Using Digital Signatures - New Research

- [R] Undetectable Backdoors in ML Models: Novel Techniques Using Digital Signatures and Random Features, with Implications for Adversarial Robustness (Score: 27, Comments: 5): The research demonstrates how to construct undetectable backdoors in ML models using two frameworks: digital signature scheme-based backdoors and Random Fourier Features/Random ReLU based backdoors, which remain undetectable even under white-box analysis and with full access to model architecture, parameters, and training data. The findings reveal critical implications for ML security and outsourced training, showing that backdoored models maintain identical generalization error as clean models while allowing arbitrary output manipulation through subtle input perturbations, as detailed in their paper "Planting Undetectable Backdoors in Machine Learning Models".

Theme 3. New CogVideoX-5B Open Source Text-to-Video Model Released

- CogvideoX + DimensionX (Comfy Lora Orbit Left) + Super Mario Bros. [NES] (Score: 52, Comments: 4): A post referencing CogVideoX 5B and DimensionX models used with Super Mario Bros NES content, though no specific details or examples were provided in the post body. The combination suggests video generation capabilities using these AI models with retro gaming content.

- CogVideoX-5b multiresolution finetuning on 4090 (Score: 21, Comments: 0): CogVideoX-5b model can be fine-tuned using LoRA on an NVIDIA RTX 4090 GPU using the cogvideox-factory repository. The post includes a video demonstration of the fine-tuning process.

Theme 4. StackOverflow Traffic Plummets as AI Tools Rise

- RIP Stackoverflow (Score: 703, Comments: 125): Stack Overflow experienced a significant traffic decline after the rise of AI coding tools, leading to discussions about the future viability of traditional programming Q&A platforms. The lack of post body content prevents a more detailed analysis of specific metrics or causes of this decline.

- Users overwhelmingly criticize Stack Overflow's toxic culture, with a 40-year software engineering veteran receiving 552 upvotes for condemning the platform's arrogant attitude, and multiple users citing frustration with the "duplicate question" responses and dismissive treatment of newcomers.

- Concerns about model collapse and AI training data were raised, as the decline in Stack Overflow traffic could lead to outdated information sources for future AI models, with users noting that AI tools still rely on human-annotated data for training.

- Multiple developers express preference for ChatGPT's friendlier approach to answering questions, with users highlighting that AI tools provide immediate responses without the gatekeeping and hostility experienced on Stack Overflow, particularly noting that GPT was released in late 2022.

- ChatGPT doesn’t have a shitty attitude when you ask a relevant question either. (Score: 221, Comments: 25): ChatGPT provides a more welcoming environment for asking technical questions compared to Stack Overflow's known hostile community responses. The post implies that ChatGPT delivers answers without the negative attitudes sometimes encountered on Stack Overflow when users ask legitimate questions.

- Users strongly criticize Stack Overflow's toxic culture, with multiple examples of questions being marked as duplicates linking to 14-year-old obsolete answers. The community's elitist behavior includes dismissive responses and hostile treatment of new users.

- ChatGPT learned from a broad range of internet content including public GitHub repositories and pastebin scripts, not just Stack Overflow. The AI provides a more approachable platform for asking repeated or basic questions without fear of negative feedback.

- The post references a traffic bump in July 2023 coinciding with the launch of OverflowAI. Users note that Stack Exchange forums beyond programming, such as physics and electrical engineering, suffer from similar toxicity issues.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. AI Models Take the Spotlight: Gemini Soars and New Releases Impress

- Gemini AI Takes the Throne in Chatbot Arena: Google's Gemini (Exp 1114) skyrockets to the top rank in Chatbot Arena, outperforming competitors with a 40+ point increase based on 6K+ community votes. Users praise its enhanced performance in creative writing and mathematics.

- UnslopNemo 12B and Friends Join the Adventure Club: UnslopNemo 12B v4 launches for adventure writing and role-play, joined by SorcererLM and Inferor 12B, models optimized for storytelling and role-play scenarios.

- Tinygrad Flexes Muscles at MLPerf Training 4.1: Tinygrad participates in MLPerf Training 4.1, successfully training BERT and aiming for a 3x performance boost in the next cycle, marking the first inclusion of AMD in their training process.

Theme 2. AI Gets Cozy with Developers: Tools Integrate into Coding Environments

- ChatGPT Moves into VS Code's Spare Room: ChatGPT for macOS now integrates with desktop applications like VS Code and Terminal, offering context-aware coding assistance for Plus and Team users in beta.

- Code Editors Break the Token Ceiling: Tools like Cursor and Aider defy limits by generating code edits exceeding 4096 tokens, prompting developers to wonder about their token management magic.

- LM Studio Users Sideload Llama.cpp for Extra Power: Frustrated LM Studio users discuss sideloading features from llama.cpp, eager to overcome current limitations and enhance their AI models' capabilities.

Theme 3. Data Privacy Panic: GPT-4 and LAION Face Scrutiny

- GPT-4 Spills the Beans with Data Leaks: Users report potential data leaks in GPT-4, noting unexpected Instagram usernames in outputs, sparking concerns over training data integrity.

- LAION Tangled in EU Copyright Web: Debates ignite over LAION's dataset allowing downloads of 5 billion images, with critics claiming violations of EU copyright laws due to circumventing licensing terms.

Theme 4. Robots Meet AI: Benchmarking Vision Language Action Models

- AI Models Put Through Their Paces on 20 Real-World Tasks: A collaborative paper titled "Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks" evaluates how VLA models control robots across 20 tasks, aiming to establish new benchmarks.

- Researchers Unite: Georgia Tech, MIT, and More Dive into Robotics: Institutions like Georgia Tech, MIT, and Metarch AI collaborate to assess VLA models, sharing resources and code on GitHub for community engagement.

Theme 5. Ads Crash the AI Party: Users Frown at Sponsored Questions

- Perplexity's Ads Perplex Users (Even the Paying Ones): Perplexity introduces ads as "sponsored follow-up questions", frustrating Pro subscribers who expected an ad-free experience.

- Ad Rage: Subscription Value Questioned: Users across platforms express dissatisfaction over ads appearing despite paid subscriptions, sparking debates on the viability of current subscription models.

PART 1: High level Discord summaries

HuggingFace Discord

-

GPT-4 Data Leak Raises Data Integrity Concerns: Users reported potential data leaks in the GPT-4 series, specifically noting the inclusion of Instagram usernames in the model's outputs.

- This issue raises questions about the integrity of training data and the completeness of leak assessments.

- Benchmarking Vision Language Action Models Released: A new paper titled Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks profiles VLA models and evaluates their performance on 20 real-world tasks.

- The study, a collaboration between Georgia Tech, MIT, and Manifold, aims to establish benchmarks for multimodal action models.

- Kokoro TTS Model Gains Community Feedback: The Kokoro TTS model with approximately 80M parameters was shared for feedback, with users noting improvements in English output quality.

- Despite its compact size, the model's speed and stability impressed users, accompanied by a roadmap for enhanced emotional speech capabilities.

- Open3D-ML Enhances 3D Machine Learning: Open3D-ML was highlighted as a promising extension of Open3D tailored for 3D Machine Learning tasks.

- Its integration is attracting interest for its potential to improve various 3D applications, expanding the utility of the framework.

- Stable Diffusion 1.5 Optimized for CPU Performance: A user opted for Stable Diffusion 1.5 as the lightest version available to ensure efficient CPU performance.

- This choice underscores the community's focus on optimizing model operations for more accessible hardware configurations.

LM Studio Discord

-

Boosting LM Studio with llama.cpp Sideloading: A user requested a method to seamlessly sideload features from llama.cpp into LM Studio, highlighting frustrations with the existing limitations.

- The discussion emphasized ongoing development efforts to incorporate this functionality in upcoming updates, with the community eagerly anticipating a more flexible integration.

- GPU Struggles Running Nemotron 70b Models: Users reported varying performance metrics when running Nemotron 70b on different GPU setups, achieving throughput rates between 1.97 to 14.0 tok/s.

- It was identified that memory availability and CPU bottlenecks are primary factors affecting model performance, prompting considerations for GPU upgrades.

- CPUs Lag Behind GPUs for LLM Workloads: The consensus among members is that CPUs are often unable to match the performance of GPUs for modern LLM tasks, as evidenced by lower tok/s rates.

- Insights were shared on how memory bandwidth and effective GPU offloading are critical for optimizing overall model performance.

- M4 Max's Potential with 128GB RAM: With the M4 Max equipped with 128GB of RAM, users are keen to test its capabilities against dedicated GPU configurations for LLM performance.

- There is a strong interest in conducting and sharing benchmarks to guide purchasing decisions, addressing the community's need for AI-specific performance evaluations.

- Integrating AI into SaaS Platforms: A member outlined plans to embed AI functionalities into a SaaS application, leveraging LM Studio's API to enhance development processes.

- The conversation explored various AI tools that could be utilized to improve software features, indicating a robust interest in practical AI integrations.

Unsloth AI (Daniel Han) Discord

-

Unsloth AI Training Efficiency: Members discussed the memory efficiency of the Unsloth platform, with theyruinedelise affirming that it is the most memory-efficient training service available.

- Unsloth is set to implement a CPO trainer, further enhancing its training efficiency.

- LoRA Parameters in Fine-Tuning: It was indicated that using smaller values for rank and adaptation can help improve training on datasets without distorting model quality.

- Users were advised to understand rank (r) and adaptation (a) factors, emphasizing that a quality dataset is crucial for effective training.

- Harmony Project Collaboration: A member introduced the Harmony project, an initiative developing an AI LLM-based tool for data harmonization, and provided a Discord server for contributions.

- Currently based at UCL, Harmony is seeking volunteers and is hosting a competition to enhance their LLM matching algorithms, with details available on their competition page.

- Editing Code with AI Tools: anubis7645 is building a utility for editing large React files, considering how tools like Cursor generate edits seamlessly despite model token limits.

- lee0099 explained the concept of speculative edits, allowing for fast application and relating it to coding practices.

- Using LoftQ without Loading Unquantized Models: A query was raised about using LoftQ directly without loading an unquantized model in VRAM-constrained environments like T4.

- It was suggested to adjust target modules for LoRA to include only linear and embedding layers to enhance patch efficacy during fine-tuning.

OpenRouter (Alex Atallah) Discord

-

Launch of UnslopNemo 12B v4 for Adventure Writing: The latest model, UnslopNemo 12B, is now available, optimized for adventure writing and role-play scenarios.

- A free variant can be accessed for 24 hours via UnslopNemo 12B Free.

- SorcererLM Enhances Storytelling: SorcererLM is fine-tuned on WizardLM-2-8x22B, offering improved storytelling capabilities.

- Users can request access or seek further information through the Discord channel.

- Inferor 12B: The Ultimate Roleplay Model: Inferor 12B integrates top roleplay models, though users are advised to set output limits to prevent excessive text.

- Access to this model is available upon request through Discord.

- AI Studio Introduces generateSpeech API: A new

generateSpeechAPI endpoint has been launched in AI Studio, enabling speech generation from input transcripts.

- This feature aims to enhance model capabilities in converting text to audio output.

- Companion Bot Enhances Discord Security: Companion is introduced as an AI-powered Discord bot that personalizes personas while automating moderation.

- Features include impersonation detection, age exploit detection, and dynamic message rate adjustments to boost server engagement.

Eleuther Discord

-

Benchmarking Vision Language Action Models: A collaboration between Manifold, Georgia Tech, MIT, and Metarch AI released the paper 'Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks', evaluating models like GPT4o across 20 real-world tasks.

- Related resources include Twitter highlights and the GitHub repository, providing detailed insights into the experimental setups and results.

- Transformer Architecture Evolves with Decoder-Only Models: Transformers continue to dominate with advancements like decoder-only architectures and mixtures of experts, though their compatibility with current hardware remains under scrutiny.

- Members debated the necessity for evolving hardware to support these architectures, acknowledging the ongoing trade-offs in performance and efficiency.

- Shampoo and Muon Optimize Learning: Discussions on Shampoo and Muon algorithms highlighted their roles in optimizing the Fisher Information Matrix for better Hessian estimation, referencing the paper 'Old Optimizer, New Norm: An Anthology'.

- Participants questioned the underlying assumptions of these algorithms, comparing them to methods like KFAC and debating their practical effectiveness in diverse training scenarios.

- Hardware Advances Boost AI Training Efficiency: Blackwell's latest hardware advancements have significantly improved transformer inference efficiency, surpassing previous benchmarks set by Hopper.

- Conversations emphasized the critical importance of memory bandwidth and VRAM in implementing large-scale AI models effectively.

- Enhancing Pythia with Mixture of Experts: A query about integrating a mixture-of-expert (MoE) version of the Pythia model suite sparked interest in modernizing hyperparameters using techniques like SwiGLU.

- The discussion focused on determining specific research questions that MoE could address within the Pythia framework, considering the existing training setup and potential benefits.

aider (Paul Gauthier) Discord

-

Aider v0.63.0 Now Available: The new release of Aider v0.63.0 integrates support for Qwen 2.5 Coder 32B and includes enhancements like Web Command improvements and Prompting Enhancements.

- Aider's contribution comprises 55% of the code in this update, boosting performance and reliability.

- Qwen 2.5 Coder Gains Ground in Aider v0.63.0: The Qwen 2.5 Coder 32B model is now supported in Aider v0.63.0, demonstrating improved performance in benchmarks compared to previous versions.

- Users are experimenting with the model through OpenRouter, though some report underwhelming results against established benchmarks.

- Gemini Experimental Models Introduced: New Gemini experimental models have been released, aiming to tackle complex prompts and enhance usability within the Aider ecosystem.

- However, accessing these models has been challenging due to permission restrictions on Google Cloud, limiting user experimentation.

- CLI Scripting Enhancements with Aider: Members are leveraging CLI scripting with Aider to automate repetitive tasks, indicating a growing demand for programmable interactions.

- The Aider scripting documentation highlights capabilities like applying edits to multiple files programmatically, showcasing the tool’s adaptability.

- Aider Ecosystem Documentation Improvements: Users are advocating for enhanced documentation within the Aider ecosystem, considering platforms like Ravel for improved searchability.

- These discussions underscore the necessity for clearer guides as Aider’s functionalities expand rapidly.

Nous Research AI Discord

-

Joining Forge API Beta Made Easier: Multiple members experienced issues joining the Forge API Beta, with teknium confirming additions based on requests.

- Some users were confused about email links directing them to the general channel instead.

- Insights into Hermes Programming: Members discussed their initial programming languages, with shunoia pivoting to Python thanks to Hermes, while oleegg offered sympathy for the decision.

- jkimergodic_72500 elaborated on Perl as a flexible language, providing context for the current dialogue on programming experiences.

- Concerns Over TEE Wallet Collation: mrpampa69 raised concerns regarding the inconsistency of wallets for TEE, arguing that it undermines the bot's perceived sovereignty.

- Responses indicated a need for robust decision-making before collation to maintain operational autonomy and prevent misuse.

- Advanced Translation Tool Launched: A new AI-driven translation tool focuses on cultural nuance and adaptability, making translations more human-like.

- It tailors the output by considering dialects, formality, tone, and gender, making it a flexible choice for diverse needs.

Modular (Mojo 🔥) Discord

-

Mojo's Low-Level Syntax Performance: Members discussed how Mojo's low-level syntax may not maintain the Pythonic essence while providing better performance compared to high-level syntax.

- One pointed out that high-level syntax lacks the performance of C, but tools like NumPy can still achieve close results under certain conditions.

- Struggles with Recursive Vectorization: The conversation shifted to Recursive Vectorization and its impact on performance in Mojo, highlighting concerns over the lack of optimizations in recursive code compared to Rust or C++.

- Participants agreed that missing features in the type system currently impede the development of the standard library, making it hard to write efficient code.

- Tail Call Optimization in MLIR: A sentiment emerged around implementing Tail Call Optimization (TCO) in MLIR to enable compiler optimizations for recursive code and better performance.

- Members expressed uncertainty over the need for preserving control flow graphs in LLVM IR, debating its importance for debugging.

- Lang Features Priority Discussion: There was a consensus on prioritizing basic type system features over more advanced optimizations to ensure language readiness as more users are onboarded.

- Participants warned against overwhelming the development with additional issues while the foundational features are still pending.

- LLVM Offload and Coroutine Implementation: Interest was shown in LLVM's offload capabilities and how coroutine implementations are being facilitated in Mojo.

- Discussion highlighted that coroutines are conceptually similar to tail-recursive functions, leading to considerations of whether transparent boxing is necessary.

Perplexity AI Discord

-

Perplexity Expands Campus Strategist Program to Canada: Responding to high demand, Perplexity is extending their Campus Strategist Program to Canada, allowing interested applicants to apply for the 2024 cohort.

- The program offers hands-on experience and mentorship for university students, enhancing their skills and providing valuable industry exposure.

- Google Gemini Dominates Chatbot Arena: Google's Gemini (Exp 1114) has achieved the top rank in the Chatbot Arena, outperforming competitors with a 40+ score increase based on 6K+ community votes over the past week, as highlighted by lmarena.ai.

- This advancement underscores Gemini's enhanced performance and solidifies its position as a leading model in AI chatbot competitions.

- Ads Challenge Pro Subscription Value: Users are expressing frustration over the introduction of ads for all users, including Pro subscribers, questioning the value of their subscriptions.

- Concerns center around the expectation of an ad-free experience for paying users, leading to discussions about the subscription model's viability.

- API Dashboard Reports Inaccurate Token Usage: Several users have reported that the API dashboard is not updating token usage accurately, causing confusion and potential billing issues.

- This malfunction affects multiple members, prompting suggestions to report the issue for a timely resolution.

- Reddit Citations Failing via API: Users are encountering issues with Reddit citations not functioning correctly through the API, despite previous reliability.

- Instances of random URL injections without valid sources are leading to inaccurate results, raising concerns about the API's citation integrity.

Interconnects (Nathan Lambert) Discord

-

Operator AI Agent Set to Automate Tasks: OpenAI's new AI agent tool, Operator, is scheduled for a January launch, aiming to automate browser-based tasks such as writing code and booking travel, as detailed in this tweet.

- This tool represents a significant advancement in AI utility, enhancing user efficiency in managing routine operations.

- Gemini-Exp-1114 Dominates Chatbot Arena: @GoogleDeepMind's Gemini-Exp-1114 achieved a top ranking in the Chatbot Arena, outperforming competing models with substantial score improvements across various categories.

- It now leads the Vision leaderboard and excels in creative writing and mathematical tasks, demonstrating its superior capabilities.

- Qwen Outperforms Llama in Division Tasks: In comparative tests, Qwen 2.5 outperformed Llama-3.1 405B when handling basic division problems with prompts like

A / B.

- Funnily enough, Qwen switches to CoT mode with large numbers using LaTeX or Python, whereas Llama's output remains unchanged.

- Open-source AI Urged Before Competitors Involve: Community members emphasized the urgent need to engage in open-source AI discussions with Dwarkesh to prevent another prominent firm from taking the lead.

- Collaboration was proposed to address current concerns over financial powers influencing technology dialogues.

GPU MODE Discord

-

Triton Performance Tuning: Discussions highlighted challenges in kernel design, particularly in determining if the first dimension is a vector with sizes varying between 1 and 16, considering padding to a minimum size of 16 as a potential solution.

- Members suggested utilizing

BLOCK_SIZE_Mastl.constexprfor conditional statements in kernels and employingearly_config_prunefor autotuning based on batch size, recommending a gemv implementation for batch size of 1 to enhance GPU performance. - torch.compile() Integration with Distributed Training: Concerns were raised about using torch.compile() in conjunction with Distributed Data Parallel (DDP), specifically whether to wrap torch.compile() around the DDP wrapper or place it inside.

- Similar inquiries were made regarding the integration of torch.compile() with Fully Sharded Data Parallel (FSDP), questioning if analogous considerations apply as with DDP.

- Shared Memory Constraints in CUDA Kernels: A user encountered kernel crashes when requesting 49,160 bytes of shared memory, which is below the

MAX_SHARED_MEMORYlimit, attributing the issue to static shared memory constraints on certain architectures.

- The discussion included the necessity of using dynamic shared memory for allocations exceeding 48KB, referencing the StackOverflow discussion for potential solutions involving

cudaFuncSetAttribute(). - GPU Profiling Tools Insights: A member sought recommendations on GPU profiling tools, expressing difficulties in interpreting reports generated by ncu.

- Another member advised acclimating to NCU, asserting it as the premier profiler that provides valuable optimization insights despite its learning curve.

- React Native LLM Library Launch: Software Mansion unveiled a new library for integrating LLMs within React Native, leveraging ExecuTorch to enhance performance.

- The library streamlines usage through installation commands that involve cloning the GitHub repository and running it on the iOS simulator, facilitating easier adoption and contribution.

- Members suggested utilizing

Notebook LM Discord Discord

-

Magic Book Podcast Experiment: A member created a magical PDF that reveals different interpretations based on who views it, shared in a podcast format.

- Listeners were encouraged to share their thoughts on this innovative podcast approach.

- NotebookLM Data Security Clarification: According to Google's support page, users' data is secure and not used to train NotebookLM models, regardless of account type.

- The privacy notice reiterated that human reviewers may only access information for troubleshooting.

- Feature Requests for Response Language: A user requested the ability to set response languages per notebook due to issues receiving answers in English instead of Greek.

- Implementing this feature could enhance user satisfaction in multilingual contexts.

- Pronunciation Challenges in NotebookLM: NotebookLM struggles with correctly pronouncing certain words, such as treating 'presents' as a gift rather than as an action.

- A suggested workaround involved using pasted text to instruct on pronunciation directly.

- Interest in API Updates: Members showed curiosity about potential updates regarding an API for NotebookLM, but were informed that no roadmap for features is currently published.

- The community relies on the announcement channel for any updates and new features.

Latent Space Discord

-

Perplexity's Ads Experimentation: Perplexity is initiating ads as 'sponsored follow-up questions' in the U.S., partnering with brands like Indeed and Whole Foods. TechCrunch Article details the launch.

- They stated that revenue from ads would help support publishers, as subscriptions alone aren’t enough for sustainable revenue generation.

- Gemini AI Ascends to #1: @GoogleDeepMind's Gemini (Exp 1114) has risen to joint #1 in the Chatbot Arena after a substantial performance boost in areas like math and creative writing. Google AI Studio is currently offering testing access.

- API access for Gemini is forthcoming, expanding its availability for developers and engineers.

- ChatGPT Desktop Gains Integrations: The ChatGPT desktop app for macOS now integrates with local applications such as VS Code and Terminal, available to Plus and Team users in a beta version.

- Some users have reported missing features and slow performance, raising questions about its current integration capabilities.

- AI Amplifies Tech Debt Costs: A blog post titled AI Makes Tech Debt More Expensive discussed how AI could increase the costs associated with tech debt, suggesting that companies with older codebases will struggle more than those with high-quality code.

- The post emphasized how generative AI widens the performance gap between these two groups.

- LLM Strategies for Excel Parsing: Users explored effective methods for handling Excel files with LLMs, particularly focusing on parsing financial data into JSON or markdown tables.

- Suggestions included exporting data as CSV for easier programming language integration.

OpenAI Discord

-

AI UI Control with ChatGPT: A member shared their system where ChatGPT can indirectly control a computer's UI using a tech stack that includes Computer Vision and Python's PyAutoGUI, and hinted at a video demonstration.

- Others raised questions about the code's availability and compared it to existing solutions like OpenInterpreter.

- GPT Lorebook Development: A user created a lorebook for GPT that loads entries based on keywords, featuring import/export capabilities and preventing spammed entries, set to be shared on GreasyFork after debugging.

- Discussions clarified that this lorebook is implemented as a script for Tampermonkey or Violentmonkey.

- Mac App Interface Optimizations: Members expressed gratitude for the optimization in the Mac App's model chooser interface, noting it enhances user experience significantly.

- One member remarked that the entire community is indebted to the team who implemented this change, echoing appreciation for usability improvements.

- LLM Mastery Techniques: Members discussed that while anyone can use LLMs, effectively prompting them requires skill and practice, much like carpentry tools.

- Knowing what to include to improve the chance of getting desired output can significantly enhance the interaction experience.

- 9 Pillars Solutions Exploration: A member encouraged pushing the limits of ChatGPT to discover the potential of the 9 Pillars Solutions, hinting at transformative outcomes.

- They claimed that significant insights could be achieved through this approach, sparking interest among other members.

OpenInterpreter Discord

-

Docker Open Interpreter: Streamlining Worker Management: A member proposed a fully supported Docker image for Open Interpreter, optimized for running as workers or warm spares, enhancing their current workaround-based workflow.

- They emphasized the necessity for additional configuration features, such as maximum iterations and settings for ephemeral instances, pointing to significant backend improvements.

- VividNode v1.7.1 Amplifies LiteLLM Integration: The new release of VividNode v1.7.1 introduces comprehensive support for LiteLLM API Keys, encompassing 60+ providers and 100+ models as detailed on GitHub.

- Enhancements feature improved usability with QLineEdit for model input and address bugs related to text input and LlamaIndex functionality, ensuring a smoother user experience.

- Voice Lab Unleashed: Open-Sourcing LLM Agent Evaluation: A member announced the open sourcing of Voice Lab, a framework designed for evaluating LLM-powered agents across various models and prompts, available on GitHub.

- Voice Lab aims to refine prompts and enhance agent performance, actively inviting community contributions and discussions to drive improvements.

- ChatGPT Desktop Dive: macOS Apps Integration: ChatGPT has been integrated with desktop applications on macOS, enabling enhanced responses in coding environments for Plus and Team users in its beta version.

- This update marks a significant shift in how ChatGPT interacts with coding tools on user desktops, offering a more cohesive development experience.

- Probabilistic Prowess: 100Mx GPU Efficiency Leap: A YouTube video highlighted a breakthrough in probabilistic computing that reportedly achieves 100 million times better energy efficiency compared to leading NVIDIA GPUs, available here.

- The video delves into advancements in probabilistic algorithms, suggesting potential revolutionary impacts on computational efficiency.

Cohere Discord

-

Cohere’s Token Tuning: Optimal Embedding Count: A member inquired about the optimal number of tokens for Cohere embedding models, especially for multi-modal inputs, clarifying based on current limits.

- Another member explained that the max context is currently 512 tokens, recommending experimentation within this boundary to achieve optimal performance.

- Beta Program Blitz: Research Prototype Sign-ups: Reminders were sent that the research prototype beta program sign-ups close before Tuesday, urging interested participants to register via the sign-up form.

- The program aims to explore the new Cohere tool for enhancing research and writing tasks, with participants providing valuable feedback.

- Podcast Purging: Scrubbing Content for LLMs: A member sought advice on how to scrub hours of podcast content, aiming to extract information for use with large language models.

- Another member queried if the goal was to transcribe the podcast content, emphasizing the importance of accurate transcriptions for effective LLM integration.

- VLA Models Unveiled: New Robotics Benchmarks: A new paper titled Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks was released, showcasing collaborations among Manifold, Georgia Tech, MIT, and Metarch AI.

- The research evaluates how Vision Language Action models control robots across 20 different real-world tasks, marking a significant advancement in benchmarking robotics.

- Azure AI V2 API Status: Coming Soon: Users inquired about the availability of the Azure AI V2 API, which is currently not operational as per the documentation.

- It was noted that existing offerings support the Cohere v1 API, with the V2 API expected to be available soon, according to the latest updates.

LlamaIndex Discord

-

RAGformation automates cloud setup: RAGformation allows users to automatically generate cloud configurations by describing their use case in natural language, producing a tailored cloud architecture.

- It also provides dynamically generated flow diagrams for visualizing the setup.

- Mem0 memory system integration: Mem0 was recently added to LlamaIndex, introducing an intelligent memory layer that personalizes AI assistant interactions over time. Detailed information is available in the Mem0 Memory documentation.

- Users can access this system via a managed platform or an open source solution.

- ChromaDB ingestion issues: A user reported unexpected vector counts when ingesting a PDF into ChromaDB, resulting in two vectors instead of the expected one. Members suggested this might be due to the default behavior of the PDF loader splitting documents by page.

- Additionally, the SentenceWindowNodeParser may increase vector counts because it generates a node for each sentence.

- Using SentenceSplitter with SentenceWindowNodeParser: A user inquired about combining SentenceSplitter and SentenceWindowNodeParser in an ingestion pipeline, noting concerns over the resulting vector count.

- Community feedback confirmed that improper combination can lead to excessive node creation, complicating outcomes.

tinygrad (George Hotz) Discord

-

Tinygrad Shines in MLPerf Training 4.1: Tinygrad showcased its capabilities by having both tinybox red and green participate in MLPerf Training 4.1, successfully training BERT.

- The team aims for a 3x performance improvement in the next MLPerf cycle and is the first to integrate AMD in their training process.

- New Buffer Transfer Function Introduced: A contributor submitted a pull request for a buffer transfer function in tinygrad, enabling seamless data movement between CLOUD devices.

- The implementation focuses on maintaining consistency with existing features, deeming size checks as non-essential.

- Evaluating PCIe Bandwidth Enhancements: Members discussed the potential of ConnectX-6 adapters to achieve up to 200Gb/s with InfiniBand, relating it to OCP3.0 bandwidth.

- Theoretical assessments suggest the possibility of 400 GbE bidirectional connectivity by bypassing the CPU.

- Optimizing Bitwise Operations in Tinygrad: A proposal was made to modify the minimum fix using bitwise_not, targeting improvements in the argmin and minimum functions.

- This enhancement is expected to significantly boost the efficiency of these operations.

- Investigating CLANG Backend Bug: A bug was identified in the CLANG backend affecting maximum value calculations in tensor operations, leading to inconsistent outputs from

.max().numpy()and.realize().max().numpy().

- The issue highlights potential flaws in handling tensor operations, especially with negative values.

OpenAccess AI Collective (axolotl) Discord

-

Nanobitz recommends alternative Docker images: Nanobitz advised using the axolotlai/axolotl images even if they lag a day behind the winglian versions.

- Hub.docker.com reflects that the latest tags are from 20241110.

- Discussion on Optimal Dataset Size for Fine-Tuning Llama: Arcadefira inquired about the ideal dataset size for fine-tuning a Llama 8B model, especially given its low-resourced language.

- Nanobitz responded with questions about tokenizer overlaps and suggested that if overlaps are sufficient, a dataset of 5k may be adequate.

- Llama Event at Meta HQ: Le_mess asked if anyone is attending the Llama event at Meta HQ on December 3-4.

- Neodymiumyag expressed interest, requesting a link to more information about the event.

- Liger kernel sees improvements: Xzuyn mentioned that the Liger project has an improved orpo kernel, detailing this through a GitHub pull request.

- They also noted it behaves like a flat line with an increase in batch size.

- Social Media Insight shared: Kearm shared a post from Nottlespike on X.com, indicating a humorous perspective on their day.

- The shared link leads to a post detailing Nottlespike's experiences.

LAION Discord

-

EPOCH 58 COCK model updates: The EPOCH 58 COCK model now has 60M parameters and utilizes f16, showing progress as its legs and cockscomb become more defined.

- This advancement indicates improvements in the model's structural detail and parameter efficiency.

- LAION copyright debate intensifies: A debate emerged around LAION's dataset, which allows downloading of 5 Billion images, with claims it may violate EU copyright laws.

- Critics argue this approach circumvents licensing terms and paywalls, unlike standard browser caching.

- New paper benchmarks VLA models on 20 robotics tasks: A collaborative paper titled Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks was released by Manifold, Georgia Tech, MIT, and Metarch AI, evaluating VLA models' performance on 20 real-world robotics tasks.

- Highlights are available in the Thread w/ Highlights, and the full analysis can be accessed via the Arxiv paper.

- Watermark Anything implementation launched on GitHub: The project Watermark Anything with Localized Messages is now available on GitHub, providing an official implementation of the research paper.

- This tool enables dynamic watermarking, potentially enhancing various AI workflows.

- 12M Public Domain Images dataset released: A 12M image set in the public domain has been released, offering valuable resources for machine learning projects.

- Interested developers can access the dataset here.

DSPy Discord

-

ChatGPT for macOS Integrates with Desktop Apps: ChatGPT for macOS now integrates with desktop applications such as VS Code, Xcode, Terminal, and iTerm2, enhancing coding assistance capabilities for users. This feature is currently in beta for Plus and Team users.

- The integration allows ChatGPT to interact directly with development environments, improving workflow productivity. Details were shared in a tweet from OpenAI Developers.

- Code Editing Tools Surpass 4096 Tokens: Tools like Cursor and Aider are successfully generating code edits that exceed 4096 tokens, showcasing advancements in handling large token outputs. Developers are seeking clarity on the token management strategies employed by these tools.

- The discussion emphasizes the need for effective token handling mechanisms to maintain performance in large-scale code generation tasks.

- Clarifying LM Assertions Deprecation: Members have raised concerns about the potential deprecation of LM assertions, noting the absence of

dspy.Suggestordspy.Assertin the latest documentation.

- It was clarified that while direct references are missing, these functions can still be accessed via the search bar, indicating ongoing updates to the documentation.

- Expanding Multi-Infraction LLM Applications: A member is developing an LLM application that currently generates defensive documents for specific infractions, such as alcohol ingestion. They aim to extend its capabilities to cover additional infractions without the need for separate optimized prompts.

- This initiative seeks to create a unified approach for handling various infractions, enhancing the application's versatility and efficiency.

LLM Agents (Berkeley MOOC) Discord

-

Quiz Eligibility and Deadlines: A new member inquired about completing quizzes to remain eligible for Trailblazer and above trails. Another member confirmed eligibility but stressed the importance of catching up quickly, with all quizzes and assignments due by December 12th.

- Members emphasized that quizzes are directly related to the course content, highlighting the necessity to stay up to date for full participation.

- Upcoming Event Announcement:

sheilabelannounced an event happening today: Event Link.

- No further details were provided about the event.

Gorilla LLM (Berkeley Function Calling) Discord

-

Writer Handler and Palmyra X 004 Model Added: A member announced the submission of a PR to incorporate a Writer handler and the Palmyra X 004 model into the leaderboard.

- This addition enhances the leaderboard's functionality, awaiting feedback and integration from the development team.

- Commitment to Review PR: Another member expressed intent to review the submitted PR, stating, 'Will take a look. Thank you!'

- This response underscores the collaborative effort and active participation within the project's review process.

AI21 Labs (Jamba) Discord

-

Legacy Models Cause Disruption: A member expressed frustration over the deprecation of legacy models, stating that the impact has been hugely disruptive due to the new models not being 1:1 in terms of output.

- We would like to continue using legacy models as the transition has not been smooth.

- Transition to Open Source Solutions: A member is working on converting to an open source solution but has been paying for the old models for almost 2 years.

- They raised concerns about future deprecations, asking, How can we be sure AI21 won't deprecate the new models in the future too?

Mozilla AI Discord

-

Local LLMs Workshop Kicks Off Tuesday: Join the Local LLMs Workshop on Tuesday, featuring Building your own local LLM's: Train, Tune, Eval, RAG all in your Local Env., to develop local language models.

- Participants will engage in hands-on training and gain insights on constructing effective local LLM systems.

- SQLite-Vec Enhances Metadata Filtering: Attend the SQLite-Vec Metadata Filtering event on Wednesday at SQLite-Vec now supports metadata filtering! to explore the new metadata filtering feature.

- This update allows users to efficiently filter metadata, improving data management capabilities.

- Autonomous AI Sessions with Refact.AI: Explore autonomous agents at the Explore Autonomous AI with Refact.AI session on Thursday, detailed in Autonomous AI Agents with Refact.AI.

- Learn about innovative strategies and applications for AI technologies through this engaging presentation.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Stability.ai (Stable Diffusion) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!