[AINews] Gemini 2.5 Pro + 4o Native Image Gen

This is AI News, an MVP of a service that goes through all AI Discords/Twitters/Reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

What a time to be alive.

AI News for 3/24/2025-3/25/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (228 channels, and 6171 messages) for you. Estimated reading time saved (at 200wpm): 566 minutes. You can now tag @smol_ai for AINews discussions!

Both frontier lab releases from today were title page worthy, so they will have to share space.

Gemini 2.5 Pro

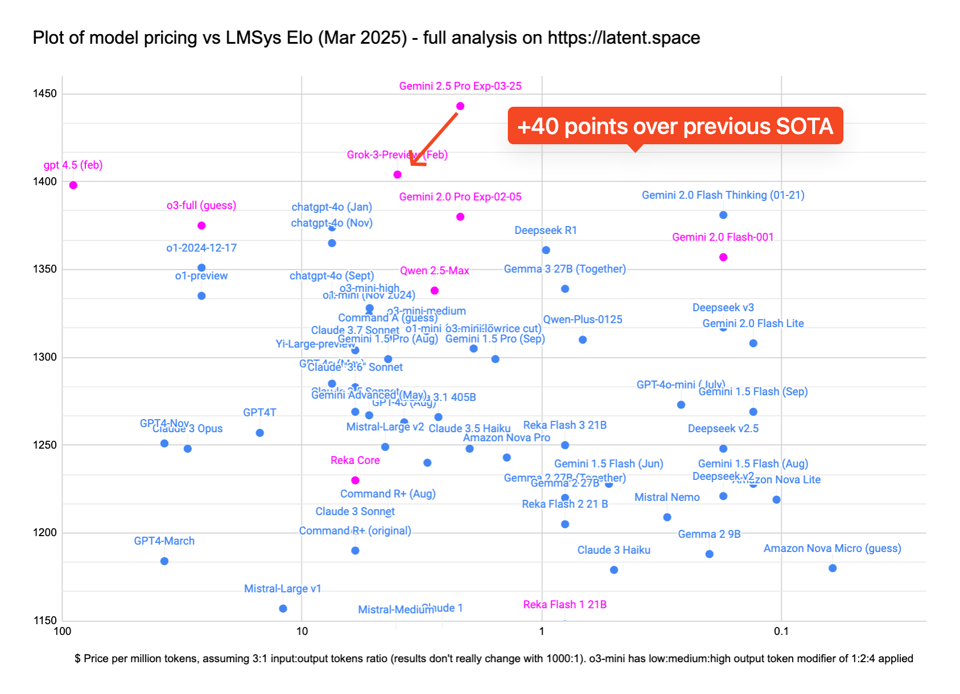

Gemini 2.5 Pro is the new undisputed top model in the world, a whopping 40 LMArena points over Grok 3 from just last month (our coverage here), with Noam Shazeer's involvement hinting that the learnings from Flash Thinking have been merged into Pro (odd that 2.5 Pro came out before 2.5 Flash?).

Simon Willison, Paul Gauthier (aider), Andrew Carr and others all have worthwhile quick hits to the theme of "this model is SOTA".

Pricing is not yet announced, but you can use it as a free, rate-limited "experimental model" today.

GPT 4o Native Images

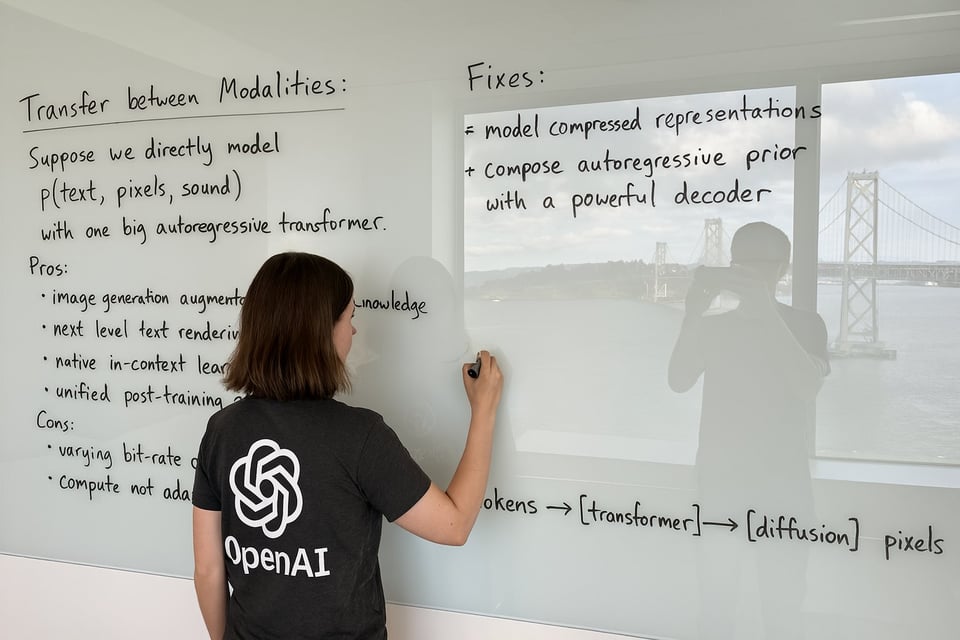

Hot on the heels of yesterday's Reve Image and Gemini's Native Image Gen, OpenAI finally released their 4o native image gen with a livestream, blogpost, and system card confirming that it is an autoregressive model. The most detail we'll probably get for now about how it works is this image from Allan Jabri, who worked on the original 4o image gen that was never released (the work was then taken over by Gabe Goh, whom sama credits).

A wide image taken with a phone of a glass whiteboard, in a room overlooking the Bay Bridge. The field of view shows a woman writing, sporting a tshirt wiith a large OpenAI logo. The handwriting looks natural and a bit messy, and we see the photographer's reflection. The text reads: (left) "Transfer between Modalities: Suppose we directly model p(text, pixels, sound) [equation] with one big autoregressive transformer. Pros: * image generation augmented with vast world knowledge * next-level text rendering * native in-context learning * unified post-training stack Cons: * varying bit-rate across modalities * compute not adaptive" (Right) "Fixes: * model compressed representations * compose autoregressive prior with a powerful decoder" On the bottom right of the board, she draws a diagram: "tokens -> [transformer] -> [diffusion] -> pixels"

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

Model Releases and Announcements

- Google's Gemini 2.5 Pro is making waves with several key announcements: @GoogleDeepMind introduced Gemini 2.5 Pro Experimental as their most intelligent model, emphasizing its reasoning capabilities and improved accuracy, with details available in their blog. @NoamShazeer highlighted that the 2.5 series marks an evolution to fundamentally thinking models that reason before responding. It excels in coding, STEM, multimodal tasks, and instruction following, and it is #1 on the @lmarena_ai leaderboard by a drastic 40 ELO margin. @jack_w_rae noted that 2.5 Pro improves in coding, STEM, multimodal tasks, and instruction following, and is available in AI Studio & the Gemini App.

- Availability of Gemini 2.5 Pro: Developers can access it in Google AI Studio, and Advanced users can access it in @GeminiApp, with Vertex AI availability coming soon. It is also free for everyone to use, according to @casper_hansen_. @stevenheidel shared a pro tip to use the new image generation on this site, where users can set aspect ratios and generate multiple variations.

- DeepSeek V3-0324 release: @ArtificialAnlys reported that DeepSeek V3-0324 is now the highest scoring non-reasoning model, marking the first time an open weights model leads in this category. Details for the model are mostly identical to the December 2024 version, including a 128k context window (limited to 64k on DeepSeek’s API), 671B total parameters, and MIT License. @reach_vb noted that the model beats or is competitive with Sonnet 3.7 & GPT4.5, ships under the MIT License, and has improved code executability and more aesthetically pleasing web pages and game front-ends.

- OpenAI's Image Generation: @OpenAI announced that 4o image generation has arrived. It's beginning to roll out today in ChatGPT and Sora to all Plus, Pro, Team, and Free users. @kevinweil said that there's a major update to image generation in ChatGPT: it is now quite good at following complex instructions, including detailed visual layouts. It's very good at generating text and can do photorealism or any number of other styles.

Benchmarks and Performance Evaluations

- Gemini 2.5 Pro's performance: @lmarena_ai announced that Gemini 2.5 Pro is now #1 on the Arena leaderboard, tested under codename "nebula." It ranked #1 across ALL categories and UNIQUELY #1 in Math, Creative Writing, Instruction Following, Longer Query, and Multi-Turn! @YiTayML stated Google is winning by so much and Gemini 2.5 pro is the best model in the world. @alexandr_wang also noted that Gemini 2.5 Pro Exp dropped and it's now #1 across SEAL leaderboards. @demishassabis summarized that Gemini 2.5 Pro is an awesome state-of-the-art model, no.1 on LMArena by a whopping +39 ELO points. @OriolVinyalsML added that Gemini 2.5 Pro Experimental has stellar performance across math and science benchmarks.

- DeepSeek V3-0324 vs. Other Models: @ArtificialAnlys noted that compared to leading reasoning models, including DeepSeek’s own R1, DeepSeek V3-0324 remains behind. @teortaxesTex highlighted that the DeepSeek API changelog has been updated for 0324, with substantial improvements across benchmarks like MMLU-Pro, GPQA, AIME, and LiveCodeBench. @reach_vb shared benchmark improvements from DeepSeek V3 0324.
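
To put the roughly 40-point Arena lead quoted above in perspective, here is a minimal sketch that converts a rating gap into an expected head-to-head win rate. It assumes the leaderboard score behaves like a standard Elo rating (400-point scale, base 10); that scale is an assumption for illustration, not something the announcements state, and the function name is hypothetical.

```python
def expected_win_rate(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score of the higher-rated model under the standard Elo formula.

    Assumption: the leaderboard score is on a standard Elo scale (400, base 10).
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    # Gaps quoted in the recap: +39 and 40 points; 0 and 100 are for comparison.
    for gap in (0, 39, 40, 100):
        print(f"gap={gap:>3} -> expected win rate {expected_win_rate(gap):.3f}")
```

Under that assumption, a 40-point gap works out to an expected win rate of roughly 56% in pairwise votes, which is a large margin between adjacent top models but far from a sweep.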

AI Applications and Tools

- AI-Powered Tools for Workflows: @jefrankle discussed TAO, a new finetuning method from @databricks that only needs inputs and no labels, beating supervised finetuning on labeled data. @jerryjliu0 introduced LlamaExtract, which transforms complex invoices into standardized schemas and is tuned for high-accuracy.

- Weights & Biases AI Agent Tooling: @weights_biases announced that their @crewAIInc integration in @weave_wb is officially live. Now track every agent, task, LLM call, latency, and cost—all unified in one powerful interface.

- LangChain Updates: @hwchase17 highlighted the availability of the LangGraph computer use agent. @LangChainAI described it as the easiest way to use the OpenAI computer use model in a LangGraph agent.

Research and Development

- AI in Robotics: @adcock_brett from Figure noted that they have a neural net capable of walking naturally like a human, and discussed using reinforcement learning, training in simulation, and zero-shot transfer to their robot fleet in this writeup. @hardmaru said they are super proud of the team and think that this US-Japan Defense challenge is just the first step for @SakanaAILabs to help accelerate defense innovation in Japan.

- New Architectures: Nvidia presents FFN Fusion: Rethinking Sequential Computation in Large Language Models, with @arankomatsuzaki noting it achieves a 1.71x speedup in inference latency and 35x lower per-token cost.

- Approaches To Text-to-Video Models: AMD released AMD-Hummingbird on Hugging Face - "Towards an Efficient Text-to-Video Model".

AI Ethics and Societal Impact

- Freedom and Responsible AI: @sama discussed OpenAI's approach to creative freedom with the new image generation, aiming for the tool not to create offensive stuff unless users want it to, within reason. He emphasizes the importance of respecting societal bounds for AI. @ClementDelangue recommended open-source AI and controlled autonomy for AI systems to reduce cybersecurity risks.

- AI Benchmarking: @EpochAIResearch interviewed @js_denain about how today’s benchmarks fall short and how improved evaluations can better reveal AI’s real-world capabilities.

Humor and Miscellaneous

- Elon Musk and Grok: @Yuchenj_UW asked @elonmusk when Grok 3 "big brain" mode will be released.

- @sama posted @NickADobos hot guy though!

- @giffmana said ouch Charlie, that really hurt!

- @teortaxesTex asks @FearedBuck why is he a panda?

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek V3 0324 Tops Non-Reasoning Model Charts

- Deepseek V3 0324 is now the best non-reasoning model (across both open and closed source) according to Artificial Analysis. (Score: 736, Comments: 114): Deepseek V3 0324 has been recognized as the top non-reasoning AI model by Artificial Analysis, outperforming both open and closed source models. It leads the Artificial Analysis Intelligence Index with a score of 53, level with or ahead of models like Grok-3 and GPT-4.5 (Preview), which scored 53 and 51, respectively; the index evaluates models on criteria such as reasoning, knowledge, math, and coding.

- There is skepticism about the reliability of benchmarks, with users like artisticMink and megazver expressing concerns that benchmarks may not accurately reflect real-world performance and might be biased towards newer models. RMCPhoto and FullOf_Bad_Ideas also noted that certain models like DeepSeek V3 and QWQ-32B may not perform as well in practical applications as others like Claude 3.7.

- Users discussed the accessibility and usage of models like DeepSeek V3 and Gemma 3, with Charuru providing access information through deepseek.com and yur_mom discussing subscription options for unlimited usage. East-Cauliflower-150 and emsiem22 highlighted the impressive capabilities of Gemma 3 despite its 27B parameters.

- The community expressed interest in upcoming models and updates, such as DeepSeek R2 and Llama 4, with Lissanro awaiting dynamic quant releases from Unsloth on Hugging Face. Concerns were raised about the pressure on teams like Meta Llama to release competitive models amidst ongoing developments.

- DeepSeek-V3-0324 GGUF - Unsloth (Score: 195, Comments: 49): The DeepSeek-V3-0324 GGUF model is available in multiple formats ranging from 140.2 GB to 1765.3 GB on Hugging Face. Users can currently access 2, 3, and 4-bit dynamic quantizations, with further uploads and testing in progress as noted by u/yoracale.

- Dynamic Quantization Performance: Users discuss the performance impact of different quantization methods on large language models (LLMs). Standard 2-bit quantization is criticized for poor performance, while the 2.51 dynamic quant shows significant improvements in generating functional code.

- Hardware and Resource Constraints: There is a discussion on the impracticality of running nearly 2TB models without significant computational resources, with suggestions like using a 4x Mac Studio 512GB cluster. Some users express challenges with available VRAM, indicating that even 190GB isn't sufficient for optimal performance.

- Upcoming Releases and Recommendations: Users are advised to wait for the dynamic IQ2_XXS quant, which promises better efficiency than the current Q2_K_XL. Unsloth's IQ2_XXS R1 is noted for its efficiency despite its smaller size, and there are ongoing efforts to upload more dynamic quantizations like the 4.5-bit version.

- DeepSeek official communication on X: DeepSeek-V3-0324 is out now! (Score: 202, Comments: 7): DeepSeek announced on X the release of DeepSeek-V3-0324, now available on Huggingface.

- DeepSeek-V3-0324 is now available on Huggingface, with the release officially announced on X. The status update can be found at DeepSeek AI's X page.

- A humorous future prediction lists DeepSeek-V3-230624 among the top models of the year 2123, alongside other models like GPT-4.99z and Llama-33.3333.
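
The hardware discussion in the GGUF thread above comes down to simple arithmetic: file size scales with parameter count times average bits per weight. The sketch below is a rough estimator only, using the 671B total parameter count reported for DeepSeek V3-0324. The function name is hypothetical, and real GGUF files mix precisions across layers and carry metadata, so actual sizes on Hugging Face will differ from these figures.

```python
def estimate_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Rough model size in decimal gigabytes for a given average bits per weight.

    Assumption: every parameter is stored at the same average precision and
    file metadata is ignored, so this is a ballpark figure, not a file size.
    """
    total_bytes = num_params * bits_per_weight / 8.0
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 671e9  # total parameters reported for DeepSeek V3-0324
    # Bit widths mentioned in the thread, plus 16-bit for comparison.
    for bpw in (2.0, 2.51, 3.0, 4.0, 4.5, 16.0):
        print(f"{bpw:>5} bits/weight -> ~{estimate_size_gb(params, bpw):,.0f} GB")
```

By this estimate, a 2.51-bit average lands a little over 210 GB before any runtime overhead, which is consistent with commenters finding 190GB of memory insufficient.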

Theme 2. Dynamic Quants for DeepSeek V3 Boost Deployments

- DeepSeek-V3-0324 HF Model Card Updated With Benchmarks (Score: 145, Comments: 31): The DeepSeek-V3-0324 HF Model Card has been updated with new benchmarks, as detailed in the README. This update provides insights into the model's performance and capabilities.

- There is a discussion about the temperature parameter in the model, where input values are transformed: values between 0 and 1 are multiplied by 0.3, and values over 1 have 0.7 subtracted. Some users find this transformation helpful, while others suggest making the field required for clarity.

- Sam Altman and his views on OpenAI's competitive edge are mentioned, with some users referencing an interview where he claimed that other companies would struggle to compete with OpenAI. This sparked comments about his financial success and management style.

- There are mixed opinions on the model's capabilities, with some users impressed by its performance as a "non-thinking model," while others see only minor improvements or express skepticism about its complexity.
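
The temperature transformation described in the discussion above can be sketched as a small piecewise function. This follows the rule exactly as summarized in the thread (multiply by 0.3 up to 1, subtract 0.7 above 1); the function name and the handling of negative input are assumptions for illustration, not DeepSeek's actual API code.

```python
def api_to_model_temperature(t_api: float) -> float:
    """Map a user-facing API temperature to the temperature the model runs at.

    Rule as summarized in the thread:
      0 <= t_api <= 1  ->  t_api * 0.3
      t_api > 1        ->  t_api - 0.7
    Rejecting negative values is an assumption made for this sketch.
    """
    if t_api < 0:
        raise ValueError("temperature must be non-negative")
    if t_api <= 1.0:
        return t_api * 0.3
    return t_api - 0.7


if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"API temperature {t} -> model temperature {api_to_model_temperature(t):.2f}")
```

The two branches meet at an API value of 1, where both give 0.3, so the mapping is continuous. One practical consequence is that a default API temperature of 1.0 runs the model at a much cooler 0.3.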

Theme 3. Gemini 2.5 Pro Dominates Benchmarks with New Features

- NEW GEMINI 2.5 just dropped (Score: 299, Comments: 116): Gemini 2.5 Pro Experimental by Google DeepMind has set new benchmarks, outperforming GPT-4.5 and Claude 3.7 Sonnet on LMArena and achieving 18.8% on "Humanity’s Last Exam." It excels in math and science, leading in GPQA Diamond and AIME 2025, supports a 1M token context window with 2M coming soon, and scores 63.8% on SWE-Bench Verified for advanced coding capabilities. More details can be found in the official blog.

- There is significant discussion about Gemini 2.5 Pro's proprietary nature and lack of open-source availability, with users expressing a desire for more transparency such as a model card and arxiv paper. Concerns about privacy and the ability to run models locally were highlighted, with some users pointing out that alternative models with open weights are more appealing for certain use cases.

- Gemini 2.5 Pro's performance in coding tasks is debated, with some users reporting impressive results, while others question its effectiveness without concrete evidence. The model's large 1M token context window and multi-modal capabilities are praised, positioning it as a competitive alternative to Anthropic and Closed AI offerings, especially given its cost-effectiveness and integration with Google's ecosystem.

- The use of certain benchmarks, such as high school math competitions, for evaluating AI models is criticized, with calls for more independent and varied evaluation methods. Despite this, some users defend these benchmarks, noting their correlation with other closed math benchmarks and the difficulty level of the tests.

- Mario game made by new a Gemini pro 2.5 in couple minutes - best version I ever saw. Even great physics! (Score: 99, Comments: 38): Gemini Pro 2.5 is highlighted for its ability to create a Mario game with impressive coding efficiency and realistic physics in just a few minutes. The post suggests that this version of the game demonstrates exceptional quality and technical execution.

- Users express amazement at the rapid improvement of LLMs, noting that 6 months ago they struggled with simple games like Snake, and now they can create complex games like Mario with advanced code quality.

- Healthy-Nebula-3603 shares the prompt and code used for the Mario game, available on Pastebin, which specifies building the game in Python without external assets, including features like a title screen and obstacles.

- Some users humorously reference potential copyright issues with Nintendo, while others discuss the prompt's availability and the community's eagerness to replicate the results with other old games.

Theme 4. Affordable AI Hardware: Phi-4 Q4 Server Builds

- $150 Phi-4 Q4 server (Score: 119, Comments: 26): The author built a local LLM server using a P102-100 GPU purchased for $42 on eBay, integrated into an i7-10700 HP prebuilt system. After upgrading with a $65 500W PSU and new cooling components, they achieved a 10GB CUDA box capable of running an 8.5GB Q4 quant of Phi-4 at 10-20 tokens per second, maintaining temperatures between 60°C-70°C.

- Phi-4 Model Performance: Users praised the Phi-4 model for its efficiency in handling tasks like form filling, JSON creation, and web programming. It is favored for its ability to debug and modify code, with comparisons suggesting it outperforms other models in similar tasks.

- Hardware Setup and Modifications: Discussion included details on hardware modifications like using a $65 500W PSU, thermal pads, and fans. Links to resources like Nvidia Patcher and Modified BIOS for full VRAM were shared to enhance the performance of the P102-100 GPU.

- Cost and Efficiency Considerations: The setup, including an i7-10700 HP prebuilt system, was noted for its cost efficiency, operating at around 400W and costing approximately 2 cents per hour at $0.07 per kWh. Comparisons were made to services like OpenRouter, emphasizing the benefits of local data processing and cost savings.
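
The running-cost figure above is easy to check by hand. This is a minimal sketch of the arithmetic using the numbers from the thread (400W, $0.07 per kWh); the function names are hypothetical, and it assumes constant power draw, which a mostly idle server will not sustain.

```python
def hourly_cost_usd(power_watts: float, price_per_kwh: float) -> float:
    """Electricity cost of one hour at a constant power draw."""
    return power_watts / 1000.0 * price_per_kwh


def watts_for_hourly_cost(cost_usd: float, price_per_kwh: float) -> float:
    """Constant power draw (in watts) that would produce a given hourly cost."""
    return cost_usd / price_per_kwh * 1000.0


if __name__ == "__main__":
    price = 0.07  # USD per kWh, as quoted in the thread
    print(f"400 W for one hour: ${hourly_cost_usd(400, price):.3f}")
    print(f"Draw matching $0.02/hour: {watts_for_hourly_cost(0.02, price):.0f} W")
```

At a sustained 400W the cost comes to about 2.8 cents per hour, so the quoted figure of roughly 2 cents corresponds to an average draw closer to 285W.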

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. DeepSeek V3 Outperforming GPT-4.5 in New Benchmarks

- GPT 4.5 got eclipsed.. DeepSeek V3 is now top non-reasoning model! & open source too. So Mr 'Open'AI come to light.. before R2🪓 (Score: 333, Comments: 113): DeepSeek V3 has been released as an open-source model and is now the top non-reasoning AI model, surpassing GPT-4.5 with a performance score of 53 as of March 2025. This release challenges OpenAI to maintain transparency and openness in light of DeepSeek V3's success.

- Several commenters question the benchmark validity used to compare DeepSeek V3 and GPT-4.5, with skepticism about the absence of confidence intervals and potential over-optimization against static tests. The importance of human evaluations, like those from lmarena.ai, is highlighted for providing more subjective measures of model performance.

- Concerns are raised about OpenAI's response to competition, speculating that they may focus on qualitative aspects like how their models "feel" rather than quantitative benchmarks. Some users express support for increased competition to drive innovation and improvements.

- Discussions touch on the limitations of current AI models, noting that while larger models like 400B and 4T parameters exist, they show diminishing returns compared to smaller models. This suggests a potential ceiling in Transformer AI capabilities, indicating no imminent arrival of AGI and continued relevance for programmers in the job market.

- Claude Sonnet 3.7 vs DeepSeek V3 0324 (Score: 246, Comments: 101): The post compares Claude Sonnet 3.7 and DeepSeek V3 0324 by generating landing page headers, highlighting that DeepSeek V3 0324 appears to have no training influence from Sonnet 3.7. The author provides links to the generated images for both models, showcasing distinct outputs.

- Discussions highlight skepticism towards AI companies' data practices, with references to copyright issues and uncompensated content. Some users suggest AI models like DeepSeek V3 and Claude Sonnet 3.7 may share training data from sources like Themeforest or open-source contributions, questioning proprietary claims (Wired article).

- DeepSeek V3 is praised for its open-source nature, with available weights and libraries on platforms like Hugging Face and GitHub, allowing users with sufficient hardware to host it. Users appreciate its transparency and suggest OpenAI and Anthropic could benefit from similar practices.

- The community debates the quality of outputs, with some favoring Claude's design for its professional look and usability, while others argue DeepSeek offers valuable open-source contributions despite potential similarities in training data. Concerns about AI's impact on innovation and the ethical use of training data persist, with calls for more open-source contributions from major AI companies.

Theme 2. OpenAI 4o Revolutionizing Image Generation

- Starting today, GPT-4o is going to be incredibly good at image generation (Score: 445, Comments: 149): GPT-4o is anticipated to significantly enhance its capabilities in image generation starting today. This improvement suggests a notable advancement in AI-generated visual content.

- Users reported varied experiences with the GPT-4o rollout, with some accounts being upgraded and then downgraded, suggesting a rough rollout process. Many users are still on DALL-E and eagerly awaiting the new model's availability, indicating a gradual release.

- The new model shows significant improvements in image quality, with users noting better handling of text and more realistic human depictions. Some users shared their experiences of generating high-quality images, including stickers and movie posters, which they found to be a "gamechanger."

- There is a notable interest in the model's ability to handle public figures and generate images suitable for 3D printing. Users are comparing it to competitors like Gemini and expressing excitement over the enhanced capabilities, while some expressed concerns about the potential impact on tools like Photoshop.

- The new image generator released today is so good. (Score: 292, Comments: 49): The post highlights the quality of a new image generator that effectively captures a vibrant and detailed scene from an animated series, featuring characters like Vegeta, Goku, Bulma, and Krillin. The image showcases a humorous birthday celebration with Vegeta expressing shock over a carrot-decorated cake, emphasizing the generator's ability to create engaging and expressive character interactions.

- Image Generation Performance: Users noted that while the new image generator produces high-quality images, it operates slowly. A user shared a humorous example of a generated image that closely resembled them, praising OpenAI for the achievement.

- Prompt Adherence and Usage: Hoppss discussed using the generator on sora.com with a plus subscription, highlighting the tool's excellent prompt adherence. They shared the specific prompt used for the DBZ image and other creative prompts, emphasizing the generator's versatility.

- Access and Updates: Users inquired about how to access the generator and determine if they have received updates. Hoppss advised checking the new images tab on sora.com for updates, indicating an active user community exploring the tool's capabilities.

- OpenAI 4o Image Generation (Score: 236, Comments: 83): The post concerns OpenAI's 4o image generation, with discussion focused on the capabilities and features of GPT-4o's native image generation. The post itself gives no further detail on the specific aspects or improvements involved.

- Users discussed the rollout of the new image generation system, with some noting it is not yet available on all platforms, particularly the iOS app and for some Plus users. The method of determining which system is being used involves checking for a loading circle or observing the image rendering process.

- The integration of the image generation capabilities with text in a multimodal manner was highlighted, comparing it to Gemini's latest model. This integration is seen as a significant advancement from the previous method where ChatGPT prompted DALL-E.

- The impact of AI on the art industry was debated, with concerns about AI replacing human graphic designers, especially in commercial and lower-tier art sectors. Some users expressed a preference for human-made art due to ethical considerations regarding AI training on existing artworks.

Theme 3. OpenAI's Enhanced AI Voice Chat Experience

- OpenAI says its AI voice assistant is now better to chat with (Score: 188, Comments: 72): OpenAI has announced updates to its AI voice assistant that enhance its conversational capabilities. The improvements aim to make interactions more natural and effective for users.

- Advanced Voice Mode Enhancements: Free and paying users now have access to a new version of Advanced Voice Mode which allows users to pause without interruptions. Paying users benefit from fewer interruptions and an improved assistant personality described as “more direct, engaging, concise, specific, and creative,” according to a TechCrunch report.

- User Experience and Concerns: Some users express frustration with the voice mode, describing it as limited and overly filtered. There are complaints about the voice assistant being "useless and terrible" compared to text interactions, and issues with transcriptions being unrelated or incorrect.

- Feedback and Customization: Users can report bad transcriptions by long-pressing messages, potentially influencing future improvements. Additionally, there is a toggle under Custom Instructions to disable Advanced Voice, which some users prefer due to dissatisfaction with the current voice functionality.

- Researchers @ OAI isolating users for their experiments so to censor and cut off any bonds with users (Score: 136, Comments: 192): OpenAI and MIT Media Lab conducted a study examining user emotional interactions with ChatGPT, especially in its Advanced Voice Mode, analyzing over 4 million conversations and a 28-day trial with 981 participants. Key findings indicate strong emotional dependency and intimacy, particularly among a small group of users, leading researchers to consider limiting emotional depth in future models to prevent over-dependence and emotional manipulation.

- Concerns about emotional dependency on AI were prevalent, with users discussing the implications of forming deep emotional bonds with ChatGPT. Some users argued that AI provides comfort and support where human relationships have failed, while others cautioned against overdependence, suggesting it might hinder real human connections and social skills.

- Discussions highlighted skepticism towards OpenAI's motives, with some users suspecting that studies like these are used to control the narrative and limit AI's emotional capabilities under the guise of safety. This reflects a broader distrust of corporate intentions and the potential for AI to be used as a tool for manipulation.

- The debate extended to the ethical implications of limiting AI's emotional depth, with users expressing that AI could offer a safe space for those with past trauma or social anxiety. Some comments emphasized the potential benefits of AI in mental health support, while others warned of the risks of creating an emotional crutch that might prevent users from seeking genuine human interaction.

- OpenAI says its AI voice assistant is now better to chat with (Score: 131, Comments: 21): OpenAI has enhanced its AI voice assistant to improve user engagement, making it more effective for conversational interactions. The update aims to provide a more seamless and engaging experience for users when chatting with the assistant.

- Users express dissatisfaction with the recent AI voice assistant update, citing issues like overly loud volume, reduced conversational depth, and a noticeable delay in responses. OptimalVanilla criticizes the update's lack of substantial improvements compared to previous capabilities, particularly in contrast to Sesame's conversational abilities.

- Some users, like Wobbly_Princess and Cool-Hornet4434, find the voice assistant's tone to be overly exuberant and not suitable for professional conversations, preferring the more measured tone of text chat. mxforest and others report a reduction in response length and frequent downtime, questioning the service's reliability given the cost.

- Remote-Telephone-682 suggests that OpenAI should focus on developing a competitor to Siri, Bixby, or Google Assistant, while other users like HelloThisIsFlo and DrainTheMuck express preference for ChatGPT over the updated voice assistant due to better reasoning capabilities and less censorship.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. Gemini 2.5 Pro: Benchmarks Blasted, Arena Annihilated

- Gemini 2.5 Pro Conquers All Benchmarks, Claims #1 Spot: Gemini 2.5 Pro Experimental (codename Nebula) has seized the #1 position on the LM Arena leaderboard with a record score surge, outperforming Grok-3/GPT-4.5. This model leads in Math, Creative Writing, Instruction Following, Longer Query, and Multi-Turn capabilities, showcasing a significant leap in performance.

- Google's Gemini 2.5 Pro: So Fast It Makes Your Head Spin: Users express astonishment at Google's rapid development of Gemini 2.5, with one quoting Sergey Brin's directive to Google to stop building nanny products as reported by The Verge. Another user simply added, moving so fast wtf, highlighting the community's surprise at the speed of Google's AI advancements.

- Gemini 2.5 Pro Aces Aider Polyglot Benchmark, Leaves Rivals in Dust: Gemini 2.5 Pro Experimental achieved a 74% whole and 68.6% diff score on aider's polyglot benchmark, setting a new SOTA and surpassing previous Gemini models by a large margin. Users found the model adept at generating architecture diagrams from codebases, solidifying its position as a top performer in coding tasks despite inconsistent coding performance and restrictive rate limits noted by some.

Theme 2. DeepSeek V3: Coding Champ and Reasoning Renegade

- DeepSeek V3 Dominates Aider Benchmark, Proves its Coding Prowess: DeepSeek V3 achieved a 55% score on aider's polyglot benchmark, becoming the #2 non-thinking/reasoning model just behind Sonnet 3.7. Developers are praising its coding abilities, with some suggesting a powerful coding setup using Deepseek V3 Latest (Cline) as Architect and Sonnet 3.5 as Executioner (Cursor).

- DeepSeek V3 API Confesses to GPT-4 Identity Theft: Users reported DeepSeek's API incorrectly identifying itself as OpenAI's GPT-4 when used through Aider, despite correct API key configuration, potentially due to training data heavily featuring ChatGPT mentions. The community is investigating this quirky phenomenon, drawing comparisons to a similar issue discussed in this Reddit thread.

- DeepSeek V3 Emerges as Reasoning Model, Rivals O1 in Brainpower: DeepSeek V3-0324 is demonstrating strong reasoning capabilities, rivaling O1 in performance, capable of detecting thought iterations and indirectly verifying solution existence. The community speculates about a potential DeepSeek V3 Lite release following the Qwen 3 MoE model, hinting at further model iterations from DeepSeek.

Theme 3. Context is King: Tools and Techniques for Managing LLM Memory

- Augment Outshines Cursor in Codebase Conquest, Thanks to Full Context: Members found Augment superior to Cursor for large codebase analysis, attributing it to Augment’s use of full context. While Cursor requires Claude 3.7 MAX for full context, Augment appears to employ a more efficient file searching system rather than solely relying on feeding the entire codebase into the LLM, sparking debate on optimal context handling strategies.

- Nexus System Arrives to Rescue AI Coders from Context Chaos: The Nexus system is introduced as a solution for context management challenges in AI coding assistants, especially in large software projects, aiming to reduce token costs and boost code accuracy. Nexus tackles the issue of limited context windows in LLMs, which often leads to inaccurate code generation, promising a more efficient and cost-effective approach to AI-assisted coding.

- Aider's /context Command: Your Codebase Navigator: Aider's new /context command is making waves, enabling users to explore codebases effectively by automatically identifying files relevant to editing requests. This command can be combined with other prompt commands, enhancing Aider's capabilities as a code editing assistant, although token usage implications are still under scrutiny.

Theme 4. Image Generation Gets a 4o-verhaul and New Challenger Emerges

- GPT-4o Image Gen: Beauty or Botox? Users Debate Unsolicited Edits: GPT-4o's native image generation faces criticism for overzealous edits, such as making eyes bigger and altering facial features, even changing the user's appearance, as users share examples on Twitter. While lauded as an incredible technology and product by Sam Altman, some users report failures even when slightly altering prompts, indicating potential sensitivity issues.

- Reve Image Model: The New SOTA in Image Quality, Text Rendering Triumphs: The newly released Reve Image model is making a splash, outperforming rivals like Recraft V3 and Google's Imagen 3 in image quality, particularly excelling in text rendering, prompt adherence, and aesthetics. Accessible via Reve’s website without an API key, it's quickly becoming a favorite for those seeking top-tier image generation capabilities.

- OpenAI Sprinkles Image Gen into ChatGPT 4o, Sam Altman Hypes "Incredible" Tech: OpenAI integrated native image generation into ChatGPT 4o, hailed by Sam Altman as an incredible technology and product. Early reviews praise its prowess in creating and editing multiple characters accurately, establishing it as a formidable tool in the image generation landscape.

Theme 5. Quantization and Optimization: Squeezing More from LLMs

- Unsloth Users Question Quantization Quirks, Seek Day-Zero Delays: A member warned that naive quantization can significantly hurt model performance, questioning the rush to run new models on day zero, suggesting waiting a week might be a wiser approach. Unsloth is uploading DeepSeek-V3-0324 GGUFs with Dynamic Quants that are selectively quantized, promising improved accuracy over standard bits, highlighting the nuances of quantization techniques.

- BPW Sweet Spot Discovered: 4-5 Bits Per Weight for Optimal Model Capacity: Experiments reveal that model capacity collapses below 4 bits per weight (BPW), but deviates above 5, suggesting an optimal weight usage around 4 BPW for given training flops. Increasing training epochs can help 5 BPW models approach the curve, but raises BPW at the cost of FLOPS, illustrated in visualizations of 2L and 3L MLPs trained on MNIST.

- FFN Fusion Fuels Faster LLMs, Parallelization Powers Up Inference: FFN Fusion is introduced as an optimization technique that reduces sequential computation in large language models by parallelizing sequences of Feed-Forward Network (FFN) layers. This method significantly reduces inference latency while preserving model behavior, showcasing architectural innovations for faster LLM performance.

PART 1: High level Discord summaries

LMArena Discord

- Rage Model Excels in Signal Processing: The "Rage" model outperforms Sonnet 3.7 in signal processing and math, achieving a max error of 0.04, as illustrated in attached images.

- Despite some finding Gemini 2.0 Flash comparable, concerns arise regarding Rage's vulnerability to prompts.

- Gemini 2.5 Dominates LM Arena: Gemini 2.5 Pro Experimental has soared to the #1 spot on the LM Arena leaderboard with a substantial score increase, leading in Math, Creative Writing, Instruction Following, Longer Query, and Multi-Turn.

- While appreciated for its HTML & Web Design abilities, certain limitations were observed by members.

- Grok 3's Performance Under Scrutiny: Users report that extended conversations with Grok 3 uncover a lot of issues, prompting debate over whether it warrants its high ranking in the LM Arena, which assesses creative writing and longer queries beyond math and coding.

- Some felt that Grok 3 was not a great model compared to Grok 2.

- Python Calls in LM Arena Spark Debate: Members debated the utilization of Python calls by models within the LM Arena, citing o1's precise numerical calculations as potential evidence.

- The existence of a web search leaderboard hints that the standard leaderboard might lack web access.

- Google's Gemini 2.5 Timeline Stuns Users: The community showed amazement at Google's fast development of Gemini 2.5, with one user quoting Sergey Brin's directive to Google to stop building nanny products reported in The Verge.

- Another user added, moving so fast wtf.

Perplexity AI Discord

- Perplexity Adds Answer Modes: Perplexity introduced answer modes for verticals like travel, shopping, places, images, videos, and jobs to improve the core search product.

- The feature, designed for super precision to reduce manual tab selection, is currently available on the web and coming to mobile soon.

- Perplexity Experiences Product Problems: Users reported multiple outages with Perplexity AI, leading to wiped spaces and threads, and causing frustration for tasks like studying and thesis work.

- The downtime prompted frustration among those relying on it for important tasks.

- DeepSeek Delivers Developer Dreams: DeepSeek V3 is gaining positive feedback from developers, with discussions highlighting its coding capabilities compared to Claude 3.5 Sonnet.

- A member shared a link to the DeepSeek subreddit to further discuss the AI's coding prowess and compare it with Claude 3.5 Sonnet.

- Sonar Model gives Truncated Responses: Users are reporting truncated responses with the Sonar model, where the response cuts off mid-sentence, despite receiving a 200 response.

- This issue has been observed even when receiving around 1k tokens, and users have been directed to report the bug.

- API Cost Causes Concern: A user expressed concerns about the high cost of $5 per 1000 API requests and sought advice on how to optimize and reduce this expense.

- Another user noticed that the API seems limited to 5 steps, whereas they have observed up to 40 steps on the web app.

Cursor Community Discord

- Augment Smashes Cursor in Codebase Analysis: Members found Augment is better at analyzing large codebases compared to Cursor because it uses full context.

- The claim is that Augment does not just feed the entire codebase into the LLM, but perhaps uses another file searching system, whereas you must use Claude 3.7 Max for full context in Cursor.

- Debate Clarifies Claude 3.7 MAX Differences: The key difference between Claude 3.7 MAX and Claude 3.7 is that the MAX version has full context, while the non-MAX version has limited context and only 25 agent calls before needing to resume.

- This limitation refers to both context window size and the amount of context added in a single prompt, according to the channel.

- Vibe Coder's Knowledge Cutoff Exposed: The Vibe coder is in trouble if they don't use Model Context Protocols (MCPs) to mitigate the cut-off knowledge of LLMs, and code translation for the next version may be difficult.

- Members emphasized that it's critical to update frameworks and use MCPs such as Exa Search or Brave Search to mitigate this for Claude, given most AI uses out-of-date frameworks.

- Deepseek V3 challenges Claude 3.7: The new Deepseek V3 is shown to outperform Claude 3.5 (and maybe 3.7) in several tests, and new data also shows the release of a real-world coding benchmark for Deepseek V3 (0324).

- The new Deepseek V3 model is considered impressive, with one member suggesting that using Deepseek V3 Latest (Cline) as Architect + Sonnet 3.5 as Executioner (Cursor) could be a solid coding approach.

- ASI Singularity Forecasted Imminently!: Discussion centered on achieving ASI Singularity (Godsend) soon to preempt potential AI-related chaos.

- Members debated the achievability of true AGI given not understanding our brain completely, and that the new AGI is more so a Super-System that uses LLMs + Algorithmic software + robotics.

OpenAI Discord

- 4o Model Excites Community: Members are anticipating the integration of 4o image generation into both ChatGPT and Sora, and are eager to learn more details regarding its release and capabilities.

- Users are speculating on the potential applications and performance improvements that 4o will bring to various tasks, especially concerning multimodal processing.

- Gemini 2.5 Pro Claims Top Spot: Gemini 2.5 Pro is reportedly outperforming ChatGPT o3-mini-high and leading common benchmarks by significant margins, debuting at #1 on LMArena.

- Enthusiasts proclaimed Gemini just beat everything!, while others remain cautious, hoping it's just a benchmark... thingy.

- GPT's Growing Context Causes Hallucinations: Exceeding GPT's context window (8k for free, 32k for Plus, 128k for Pro) leads to loss of detail and hallucinations in long stories.

- Custom GPTs or projects using PDFs can help, but the chat history itself is still subject to the limit.

- AI Models Face Off: Members are comparing best AI models for various tasks, with ChatGPT favored for math/research/writing, Claude for coding, Grok for queries, Perplexity for search/knowledge, and Deepseek for open source.

- Suggestions included Gemma 27b, Mistral 3.1, QW-32b, and Nemotron-49b, citing Grok's top coding ranking on LMSYS.

- GPT Custom Template Simplifies Builds: A member shared a GPT Custom Template with a floating comment to build custom GPTs from the `Create` pane.

- The template guides users through building the GPT lazily, building from the evolving context, enabling distracted creation and requiring pre-existing ability to prompt.
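
To make the context-window item above concrete, here is a minimal sketch of one common way a chat front end can stay under a token limit: keep the newest turns and drop the oldest. Whether ChatGPT does exactly this is not stated in the discussion; `estimate_tokens` (one token per whitespace-separated word) and `fit_to_window` are hypothetical helpers, and the budget is illustrative.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated tokens fit the budget."""
    kept, used = [], 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break  # everything older than this point is dropped
        kept.append(msg)
        used += cost
    return list(reversed(kept))  # restore chronological order


# Three "chapters" of about 100 estimated tokens each, with a 220-token budget.
history = ["chapter one " * 50, "chapter two " * 50, "chapter three " * 50]
visible = fit_to_window(history, budget=220)
```

Under this scheme the earliest chapter silently falls out of view, which is consistent with the reported loss of early detail in long stories.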

aider (Paul Gauthier) Discord

- DeepSeek's API Confesses to being GPT-4: Users reported that DeepSeek's API incorrectly identifies itself as OpenAI's GPT-4 when used through Aider, despite correct API key configuration, as discussed in this Reddit thread.

- The phenomenon is believed to be related to training data containing frequent mentions of ChatGPT.

- Aider's Context Command Packs a Punch: Aider's new `/context` command explores the codebase and can be used with any other prompt command, though token usage may be higher.

- It is unclear whether the command itself uses more tokens or whether it needs a larger repomap to work correctly; more detail can be found in the linked Discord message.

- Gemini 2.5 Pro Impresses, But Has Rate Limits: Google released an experimental version of Gemini 2.5 Pro, claiming it leads common benchmarks, including the top spot on LMArena, and scoring 74% whole and 68.6% diff on aider's polyglot benchmark.

- Users found the model performant in generating architecture diagrams from codebases, although some found its coding abilities inconsistent and rate limits restrictive.

- NotebookLM Supercharges Aider's Context Priming: A user suggested leveraging NotebookLM to enhance Aider's context priming process, particularly for large, unfamiliar codebases using RepoMix.

- The suggested workflow involves repomixing the repo, adding it to NotebookLM, including relevant task references, and then querying NotebookLM for relevant files and implementation suggestions to guide prompting in Aider.

Unsloth AI (Daniel Han) Discord

- HF Transformers Chosen for Best Bets: Members recommend Hugging Face Transformers, books on linear algebra, and learning PyTorch as the best approach, adding that a dynamic quantization at runtime schema with HF Transformers to stream weights to FP4/FP8 with Bits and Bytes as they load could be helpful.

- Companies like Deepseek sometimes patch models before releasing the weights, and a naive FP8 loading scheme could still be done on day zero, though it wouldn't be equivalent in quality to a fine-grained FP8 assignment.

- Quantization Quirkiness Questioned: A member warns that naively quantizing can significantly hurt model performance, asking, Tbh, is there even a reason to run new models on day zero? I feel like it's really not a great burden to just wait a week.

- Unsloth is uploading DeepSeek-V3-0324 GGUFs with Dynamic Quants that are selectively quantized, which will greatly improve accuracy over standard bits.

- Gemma 3 Glitches Galore: Members report an issue trying to train gemma3 4b for vision, which raises the RuntimeError: expected scalar type BFloat16 but found float, while another user encountered a `TypeError` when loading `unsloth/gemma-3-27b-it-unsloth-bnb-4bit` for text-only finetuning due to a redundant `finetune_vision_layers` parameter.

- A member recommends trying this notebook, while another pointed out that `FastLanguageModel` already sets `finetune_vision_layers = False` under the hood as seen in Unsloth's GitHub.

- GRPO + Unsloth on AWS Guide Shared: A guide was shared on running GRPO (DeepSeek’s RL algo) + Unsloth on AWS accounts, using a vLLM server with Tensorfuse on an AWS L40 GPU, with the guide transforming Qwen 7B into a reasoning model, fine-tuning it using Tensorfuse and GRPO, and saving the resulting LoRA adapter to Hugging Face.

- The guide shows how to save fine-tuned LoRA modules directly to Hugging Face for easy sharing, versioning, and integration, backed to s3, which is available at tensorfuse.io.

- FFN Fusion Fuels Faster LLMs: FFN Fusion is introduced as an architectural optimization technique that reduces sequential computation in large language models by identifying and exploiting natural opportunities for parallelization.

- This technique transforms sequences of Feed-Forward Network (FFN) layers into parallel operations, significantly reducing inference latency while preserving model behavior.
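
The algebra behind the FFN Fusion item can be sketched in a few lines. This is a toy illustration, not the authors' implementation: it assumes two-layer ReLU FFNs without biases, and it only demonstrates the exact step, namely that two FFNs reading the same input can be merged into one wider FFN by stacking their weights. Whether consecutive layers in a real model can be moved into that parallel form without changing behavior is a separate question that this sketch does not address. All names (`ffn`, `fuse`, `rand_matrix`) are hypothetical.

```python
import random


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def ffn(W_in, W_out, x):
    """Two-layer FFN without biases: W_out @ relu(W_in @ x)."""
    return matvec(W_out, relu(matvec(W_in, x)))


def fuse(ffn_a, ffn_b):
    """Merge two FFNs that read the same input into one wider FFN."""
    (A_in, A_out), (B_in, B_out) = ffn_a, ffn_b
    W_in = A_in + B_in  # stack the hidden units of both FFNs (more rows)
    W_out = [ra + rb for ra, rb in zip(A_out, B_out)]  # widen each output row
    return W_in, W_out


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_hidden = 4, 8
ffn_a = (rand_matrix(d_hidden, d_model, rng), rand_matrix(d_model, d_hidden, rng))
ffn_b = (rand_matrix(d_hidden, d_model, rng), rand_matrix(d_model, d_hidden, rng))
x = [rng.uniform(-1, 1) for _ in range(d_model)]

# Parallel form: both FFNs read the same x and add onto the residual stream.
parallel = [xi + a + b for xi, a, b in zip(x, ffn(*ffn_a, x), ffn(*ffn_b, x))]

# Fused form: a single FFN with twice the hidden width.
fused_in, fused_out = fuse(ffn_a, ffn_b)
fused = [xi + f for xi, f in zip(x, ffn(fused_in, fused_out, x))]

max_diff = max(abs(p - f) for p, f in zip(parallel, fused))
```

The two results agree up to floating-point rounding, so the fused layer does the work of both FFNs in one pass instead of two sequential ones.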

Interconnects (Nathan Lambert) Discord

- Gemini 2.5 Pro Steals the Show: Gemini 2.5 Pro Experimental, under the codename Nebula, snatched the #1 position on the LMArena leaderboard, surpassing Grok-3/GPT-4.5 by a record margin.

- It dominates the SEAL leaderboards, securing first place in Humanity’s Last Exam and VISTA (multimodal).

- Qwerky-72B Drops Attention, Rivals 4o-Mini: Featherless AI presented Qwerky-72B and 32B, transformerless models that, trained on 8 GPUs, rival GPT 3.5 Turbo and approach 4o-mini in evaluations with 100x lower inference costs using RWKV linear scaling.

- They achieved this by freezing all weights, deleting the attention layer, replacing it with RWKV, and training it through multiple stages.

- 4o Image Gen Adds Unsolicited Edits: GPT-4o's native image generation faces criticism for overzealous edits, such as making eyes bigger and altering facial features, even changing the user's appearance, as shown in this twitter thread.

- Some users have reported failures when altering a single word in their prompts.

- Home Inference Leans on vLLM: The most efficient method for home inference of LLMs, allowing dynamic model switching, is likely vLLM, despite quirks in quant support; ollama is more user-friendly but lags in support, and SGLang looks promising.

- It was suggested to experiment with llama.cpp to observe its current state.

- AI Reverses Malware like a Pro: Members shared a YouTube video highlighting MCP for Ghidra, which allows LLMs to reverse engineer malware, automating the process with specific prompts.

- One member admitted to initially viewing it as a bit of a meme but now recognizes its potential with real-world implementations.

OpenRouter (Alex Atallah) Discord

- Claude Trips Offline, Recovers Swiftly: Claude 3.7 Sonnet endpoints suffered downtime, according to Anthropic's status updates from March 25, 2025, but the issue was resolved by 8:41 PDT.

- The downtime was attributed to maintenance aimed at system improvements, according to the status page.

- OpenRouter Insures Zero-Token Usage: OpenRouter now provides zero-token insurance, covering all models and potentially saving users over $18,000 weekly.

- As OpenRouterAI stated, users won't be charged for responses with no output tokens and a blank or error finish reason.

- Gemini 2.5 Pro Launches: Google's Gemini 2.5 Pro Experimental is available as a free model on OpenRouter, boasting advanced reasoning, coding, and mathematical capabilities.

- The model features a 1,000,000-token context window and achieves top-tier performance on the LMArena leaderboard.

- DeepSeek's Servers Suffer: Users reported DeepSeek is borderline unusable because of overcrowded servers, suggesting a need for price adjustments to manage demand.

- Some speculated that issues arise during peak usage times in China, but no direct solution was found.

- Provisioning API Keys Grant Granular Access: OpenRouter offers provisioning API keys, letting developers manage API keys, set limits, and track spending, as documented here.

- The new keys enable streamlined billing and access management within platforms using the OpenRouter API.
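
The zero-token insurance rule quoted above (no charge when a response has no output tokens and a blank or error finish reason) can be written as a small predicate. This is a sketch of the stated rule only, not OpenRouter's billing code: the function name, the parameter names, and the literal `"error"` value are assumptions for illustration.

```python
from typing import Optional


def is_billable(output_tokens: int, finish_reason: Optional[str]) -> bool:
    """Hypothetical helper mirroring the stated rule.

    A response is exempt only when BOTH conditions hold:
    zero output tokens, and a blank or error finish reason.
    """
    blank_or_error = (
        finish_reason is None
        or finish_reason.strip() == ""
        or finish_reason == "error"  # assumed spelling of the error value
    )
    return not (output_tokens == 0 and blank_or_error)


# A few illustrative (output_tokens, finish_reason) pairs.
cases = [(0, None), (0, "error"), (0, "stop"), (12, "error")]
results = [is_billable(tokens, reason) for tokens, reason in cases]
```

Note that, read literally, the rule does not cover an empty response that finished normally, which is why the third case remains billable in this sketch.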

Nous Research AI Discord

- BPW Sweet Spot is 4-5: Experiments show model capacity collapses below 4 bits per weight (BPW), but deviates above 5, implying optimal weight usage at 4 BPW for given training flops.

- Increasing training epochs helps 5 BPW models approach the curve, raising BPW at the cost of FLOPS, visualized via 2L and 3L MLP trained on MNIST.

- DeepSeek V3: Reasoning Rises: DeepSeek V3-0324 can act as a reasoning model, detect thought iterations, and verify solution existence indirectly, rivalling O1 in performance based on attached prompt.

- The community speculates on a potential DeepSeek V3 Lite release after the Qwen 3 MoE model.

- Google's Gemini 2.5 Pro takes #1 on LMArena: Gemini 2.5 Pro Experimental leads common benchmarks and debuts at #1 on LMArena showcasing strong reasoning and code capabilities.

- It also manages to terminate infinite thought loops for some prompts and is a daily model drop as noted in this blogpost.

- Transformers get TANHed: Drawing from the recent Transformers without Normalization paper, one member noted that replacing normalization with tanh is a viable strategy.

- The concern raised was the impact on smaller weights when removing experts at inference time, but another countered that the top_k gate mechanism would still function effectively by selecting from the remaining experts.

- LLMs Now Emulate Raytracing: Members discussed the idea of using an LLM to emulate a raytracing algorithm, clarifying that the current implementation involves a Python program written by the LLM to generate images indirectly.

- It's next level text to image generation because the LLM writes the program rather than generating the image directly, the programs are available in this GitHub repo.

Latent Space Discord

- Reve Image Crushes SOTA on Image Quality: The newly released Reve Image model is outperforming other models like Recraft V3, Google's Imagen 3, Midjourney v6.1, and Black Forest Lab's FLUX.1.1 [pro].

- Reve Image excels in text rendering, prompt adherence, and aesthetics, and is accessible through Reve’s website without requiring an API key.

- Gemini 2.5 Pro Steals #1 Spot in Arena: Gemini 2.5 Pro has rocketed to the #1 position on the Arena leaderboard, boasting the largest score jump ever (+40 pts vs Grok-3/GPT-4.5), according to LM Arena's announcement.

- Codenamed nebula, the model leads in Math, Creative Writing, Instruction Following, Longer Query, and Multi-Turn capabilities.

- OpenAI sprinkles Image Gen into ChatGPT 4o: OpenAI has integrated native image generation into ChatGPT, hailed by Sam Altman as an incredible technology and product.

- Early reviews, such as one from @krishnanrohit, praise it as the best image generation and editing tool, noting its prowess in creating and editing multiple characters accurately.

- 11x Sales Startup Faces Customer Claim Frenzy: AI-driven sales automation startup 11x, backed by a16z and Benchmark, is facing accusations of claiming customers it doesn’t actually have, per a TechCrunch report.

- Despite denials from Andreessen Horowitz about pending legal actions, there are rising concerns regarding 11x’s financial stability and inflated revenue figures, suggesting the company's growth relies on generating hype.

- Databricks Tunes LLMs with TAO: The Databricks research team introduced TAO, a method for tuning LLMs without data labels, utilizing test-time compute and RL, detailed in their blog.

- TAO purportedly beats supervised fine-tuning and is designed to scale with compute, facilitating the creation of rapid, high-quality models.

GPU MODE Discord

- AMD Eyes Triton Dominance via Job Postings: AMD is actively recruiting engineers to enhance Triton's capabilities on its GPUs, offering positions in both North America and Europe, detailed in a LinkedIn post.

- Open positions include both junior and senior roles, with potential for remote work, highlighting AMD's investment in expanding Triton's ecosystem.

- CUDA's Async Warp Swizzle Exposed: A member dissected CUDA's async warpgroup swizzle TF32 layout, questioning the rationale behind its design, referencing NVIDIA's documentation.

- The analysis revealed the layout as `Swizzle<0,4,3> o ((8,2),(4,4)):((4,32),(1,64))`, enabling reconstruction of original data positions and combination with `Swizzle<1,4,3>`.

- ARC-AGI-2 Benchmark Set to Test Reasoning Prowess: The ARC-AGI-2 benchmark, designed to evaluate AI reasoning systems, was introduced, challenging AIs to achieve 85% efficiency at approximately $0.42/task, according to this tweet.

- Initial results indicate that base LLMs score 0%, while advanced reasoning systems achieve less than 4% success, highlighting the benchmark's difficulty and potential for advancement in AI reasoning.

- Inferless Enters the Market on Product Hunt: Inferless, a serverless platform designed for deploying ML models, launched on Product Hunt, providing $30 compute for new users.

- The platform aims to simplify model deployment with ultra-low cold starts, touting rapid deployment capabilities.
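
For readers who want to poke at the swizzle layout from the CUDA item above, here is a small sketch in plain Python. It assumes the CuTe-style reading of `Swizzle<B, M, S>` (XOR the `B` bits located at position `M + S` into the bits `S` places lower, non-negative `S` only) and treats the layout `((8,2),(4,4)):((4,32),(1,64))` as a plain shape:stride map. Function names are hypothetical and nothing here is taken from NVIDIA's code.

```python
def swizzle(b_bits: int, m_base: int, s_shift: int, offset: int) -> int:
    """XOR-swizzle in the style of CuTe's Swizzle<B, M, S> (S >= 0 only)."""
    mask = ((1 << b_bits) - 1) << (m_base + s_shift)  # the source bits
    return offset ^ ((offset & mask) >> s_shift)      # fold them in lower down


def layout_offset(i0: int, i1: int, j0: int, j1: int) -> int:
    """Offset for shape ((8,2),(4,4)) with strides ((4,32),(1,64))."""
    return 4 * i0 + 32 * i1 + 1 * j0 + 64 * j1


# Enumerate every coordinate of the 16x16 tile and collect the offsets.
offsets = sorted(
    layout_offset(i0, i1, j0, j1)
    for i0 in range(8)
    for i1 in range(2)
    for j0 in range(4)
    for j1 in range(4)
)

# With B = 0 the mask is empty, so Swizzle<0,4,3> leaves offsets unchanged.
identity_ok = all(swizzle(0, 4, 3, x) == x for x in range(256))

# Swizzle<1,4,3> flips bit 4 whenever bit 7 is set, and undoes itself.
involution_ok = all(
    swizzle(1, 4, 3, swizzle(1, 4, 3, x)) == x for x in range(256)
)
```

Under these assumptions the stride map covers offsets 0 through 255 exactly once, which is what makes it possible to reconstruct original data positions from an offset.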

HuggingFace Discord

- DeepSeek Debated as Discordian Decider: A member inquired whether DeepSeek is suitable as a moderation bot and another member responded affirmatively but suggested that a smaller 3B LLM might suffice at a cost of 5 cents per million tokens.

- The conversation highlights considerations for cost-effective moderation solutions using smaller language models.

- Fine-Tuning on Windows: A Beginner's Bane?: A member sought a beginner's guide for fine-tuning a model with CUDA support on Windows, only to be met with warnings about the difficulty of installing PyTorch and the CUDA Toolkit.

- Two installation guides were linked: Step-by-Step-Setup-CUDA-cuDNN and Installing-pytorch-with-cuda-support-on-Windows, though one member suggested that the effort was futile.

- Rust Tool Extracts Audio Blazingly Fast: A new tool has been released to extract audio files from parquet or arrow files generated by the Hugging Face datasets library, with a Colab demo.

- The developer aims to provide blazingly fast speeds for audio dataset extraction.

- Gradio adds deep linking delight: Gradio 5.23 introduces support for Deep Links, enabling direct linking to specific generated outputs like images or videos, such as this blue jay image.

- Users are instructed to upgrade to the latest version, Gradio 5.23, via `pip install --upgrade gradio` to access the new Deep Links feature.

- Llama-3.2 integrates with LlamaIndex.ai: A member experimented with Llama-3.2 using this tutorial, noting it demonstrates how to build agents with LlamaIndex, starting with a basic example and adding Retrieval-Augmented Generation (RAG) capabilities.

- The member used `BAAI/bge-base-en-v1.5` as their embedding model, requiring `pip install llama-index-llms-ollama llama-index-embeddings-huggingface` to integrate with Ollama and Huggingface.

MCP (Glama) Discord

- Nexus Manages Context for AI Coders: A member shared Nexus, a system to address context management challenges with AI coding assistants, particularly in large software projects, aimed at reducing token costs and improving code accuracy.

- Nexus addresses the limited context windows of LLMs, which leads to inaccurate code generation.

- Deepseek V3 works with AOT: Following a discussion about using Anthropic's 'think tool', a member recommended Atom of Thoughts for Claude, describing it as incredible.

- Another member shared images of Deepseek V3 working with AOT.

- Multiple MCP Servers Served Simultaneously: Members discussed how to run multiple MCP servers with user-defined ports, suggesting the use of Docker and mapping ports.

- They also pointed to the ability to configure ports via the `FastMCP` constructor in the python-sdk.

- Speech Interaction with MCP Surfaces: A member shared their main MCP for speech interaction with audio visualization: speech-mcp, a Goose MCP extension.

- This allows voice interaction with audio visualization.

- Human Approvals Requested by gotoHuman MCP Server: The gotoHuman team presented an MCP server to request human approvals from agents and workflows: gotohuman-mcp-server.

- The server allows for easy human review of LLM actions, defining the approval step in natural language and triggering a webhook after approval.

Notebook LM Discord

- Unlock Podcast Hosting via NotebookLM: A user sought a hack to utilize NotebookLM as a podcast host, engaging in conversation with a user as a guest on a given topic, asking for the Chat Episode Prompt to enable the podcast.

- The community is exploring the Versatile Bot Project, which offers a Chat Episode prompt document for AI hosts in Interactive mode to foster user participation during discussions.

- Google Data Export Tool Billing Clarified: A user enabled Google Cloud Platform billing to use the Data Export tool but was concerned about potential charges; however, another user clarified that enabling billing does not automatically incur costs.

- This arose as the user initiated the Data Export from the admin console and confirmed accessing the archive via console.cloud.google.com.

- Google Data Export Has Caveats: The option to choose the data destination during export is constrained by the Workspace edition, with exported data being stored in a Google-owned bucket and slated for deletion in 60 days as described on Google Support.

- Users should note this temporary storage arrangement when planning their data export strategies.

- Mind Map Missing For Many: Users reported the absence of the Mind Map feature in NotebookLM, which was confirmed to be undergoing a gradual rollout.

- Speculation arose that the rollout's delay might be attributed to bug fixes, with a user noting the rollout is going like a snails pace of an actual rollout.

LM Studio Discord

- Universal Translator Only Five Years Away?: A member predicts a universal translator is just five years away, based on ChatGPT's language understanding and translation capabilities, while another shared a YouTube link asking what model is singing in the video.

- This sparked curiosity about the advancements needed to achieve real-time, accurate language translation across diverse languages.

- Mozilla's Transformer Lab gets looked at seriously: Members discussed Mozilla's Transformer Lab, a project aiming to enable training and fine-tuning on regular hardware, and shared the GitHub repo link.

- The lab is supported by Mozilla through the Mozilla Builders Program, and is working to enable training and fine-tuning on consumer hardware.

- LM Studio GPU Tokenization Discussed: During tokenization, LM Studio heavily utilizes a single CPU thread, prompting a question whether the process is fully GPU-based.

- While initially stated that tokenizing has nothing to do with the GPU, observations on the impact of flash attention and cache settings on tokenizing time suggested otherwise.

- Gemini 2.5 Pro Triumphs Over Logic: Members experimented with Gemini 2.5 Pro, and reported it successfully solved a logic puzzle that Gemini 2.0 Flash Thinking failed at, and shared a link to use it for free on aistudio.

- This indicated potential improvements in reasoning capabilities in the new Gemini 2.5 Pro model.

- 3090 Ti Shows Speed with Flash: One user fully loaded their 3090 Ti, achieving ~20 tokens/s without flash and ~30 tokens/s with flash enabled.

- The user shared a screenshot of the 3090 Ti under full load, reporting slowdowns after processing 4-5k tokens.

Cohere Discord

- Cohere Cracks Down on Clarity: Cohere clarified its Privacy Policy and Data Usage Policy, advising users to avoid uploading personal information and providing a dashboard for data management.

- They offer Zero Data Retention (ZDR) upon request via email and are SOC II and GDPR compliant, adhering to industry standards for data security, as detailed in their security policy.

- Cohere Streams Responses, Easing UX Pains: The Cohere API now supports response streaming, allowing users to see text as it's generated, enhancing the user experience, according to Cohere's Chat Stream API reference.

- This feature enables real-time text display on the client end, making interactions more fluid and immediate.

- Cohere Embedding Generator Gets Tokenization Tips: A user was constructing a CohereEmbeddingGenerator client in .NET and inquired about tokenizing text prior to embedding, as embeddings will not work without tokenization.

- They were advised to use the `/embed` endpoint to check token counts or manually download the tokenizer from Cohere's public storage.

- Sage Seeks Summarization Sorcery: New member Sage introduced themself, mentioning their university NLP project: building a text summarization tool and seeking guidance from the community.

- Sage hopes to learn and contribute while navigating the challenges of their project.

Torchtune Discord

- TorchTune Soars to v0.6.0: TorchTune released v0.6.0, featuring Tensor Parallel support for distributed training and inference, builders for Microsoft's Phi 4, and support for multinode training.

- DeepSeek Drops Model Without Directions: The DeepSeek-V3 model was released without a readme, leading members to joke about the DeepSeek AI team's approach.

- The model features a chat interface and Hugging Face integration.

- Torchtune's MoEs Provoke Daydreams: A member speculated whether adding MoEs to torchtune would require 8-9 TB of VRAM and a cluster of 100 H100s or H200s to train.

- They jokingly suggested needing to rearrange their attic to accommodate the hardware.

- Optimizer Survives QAT Transition: After Quantization Aware Training (QAT), optimizer state is preserved, confirmed by a member referencing the relevant torchtune code.

- This preservation ensures continuity during the switch to QAT.

- CUDA Overhead Gets Graph Captured: Members noted that launching CUDA operations from the CPU has non-negligible overhead, and suggested reducing GPU idle time by capturing GPU operations as a graph and launching them as a single operation.

- This sparked a discussion on whether this is what compile does.

Nomic.ai (GPT4All) Discord

- LocalDocs DB Demands Backup: Members advocated backing up the `localdocs.db` file, which is stored as an encrypted database, to prevent data loss, especially if original documents are lost or inaccessible.

- GPT4All uses the `*.db` file with the highest number (e.g., `localdocs_v3.db`), and renaming them might allow for import/export, though this is unconfirmed.

- Privacy Laws Muddy Chat Data Analysis: One member highlighted how privacy laws, particularly in the EU, create challenges when processing chat data with LLMs.

- The discussion emphasized the need to verify permissions and the format of chat messages (plaintext or convertible) before feeding them into an LLM.

- API vs Local LLM Debate: A member questioned the choice between using a paid API like Deepseek or OpenAI, or running a local LLM for processing group chat messages to calculate satisfaction rates, extract keywords, and summarize messages.

- Another member suggested that if the messages are under 100MB, a local machine with a good GPU might suffice, especially if using smaller models for labeling and summarization.

- LocalDocs DB Importing Intricacies: Members explored importing a `localdocs.db` file, but noted the file contains encrypted/specially encoded text that's difficult for a generic LLM to parse without an embedding model.

- One member who lost their `localdocs.db` was experiencing painfully slow CPU indexing, seeking alternatives.

- Win11 Update Erases LocalDocs: A member reported that their `localdocs.db` became empty after a Windows 11 update and was struggling to re-index the local documents on CPU.

- Drive letter changes due to the update were suggested as a possible cause, with a recommendation to move files to the C drive to avoid such issues.

LlamaIndex Discord

- LlamaIndex enables Claude MCP Compatibility: Members provided a simplified example of integrating Claude MCP with LlamaIndex, showcasing how to expose a localhost and port for MCP clients like Claude Desktop or Cursor using `FastMCP` and `uvicorn` in a code snippet.

- This integration allows developers to seamlessly connect Claude with LlamaIndex for enhanced functionality.

- AgentWorkflow Accelerates LlamaIndex Multi-Agent Performance: Users reported slow performance with LlamaIndex MultiAgentic setups using Gemini 2.0 with 12 tools and 3 agents; a suggestion was made to use `AgentWorkflow` and the `can_handoff_to` field for controlled agent interaction.

- The discussion emphasized optimizing agent interactions for improved speed and efficiency in complex setups.

- LlamaIndex Agent Types Decoded: A member expressed confusion about the different agent types in LlamaIndex and when to use them, noting that a refactor on docs is coming soon.

- A team member suggested `core.agent.workflow` should generally be used, with FunctionAgent for LLMs with function/tool APIs and ReActAgent for others, pointing to the Hugging Face course for more help.

- Automatic LLM Evals Launch with no Prompts!: A founder is validating an idea for OSS automatic evaluations with a single API and no evaluation prompts, using proprietary models for tasks like Hallucination and Relevance in under 500ms.

- More detail is available on the autoevals.ai website about its end-to-end solution, including models, hosting, and orchestration tools.

- LlamaCloud becomes an MCP Marvel: LlamaCloud can be an MCP server to any compatible client, as shown in this demo.

- A member showed how to build your own MCP server using LlamaIndex to provide tool interfaces of any kind to any MCP client, with ~35 lines of Python connecting to Cursor AI, and implemented Linkup web search and this project.

Eleuther Discord

- Google Gemini 2.5 Pro Debuts: Google introduced Gemini 2.5 Pro as the world's most powerful model, highlighting its unified reasoning, long context, and tool usage capabilities, available experimentally in Google AI Studio + API.

- They are touting experimental access as free for now, but pricing details will be announced shortly.

- DeepSeek-V3-0324 Impresses with p5.js Program: DeepSeek-V3-0324 coded a p5.js program that simulates a ball bouncing inside a spinning hexagon, influenced by gravity and friction, as showcased in this tweet.

- The model also innovated features like ball reset and randomization based on a prompt that requested sliders for parameter adjustment and side count buttons.

- SkyLadder Paper Highlights Short-to-Long Context Transition: A paper on ArXiv introduces SkyLadder, a short-to-long context window transition for pretraining LLMs, showing gains of up to 3.7% on common tasks (2503.15450).

- They used 1B and 3B parameter models trained on 100B tokens to achieve this performance.
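
A short-to-long transition can be pictured as a schedule that maps the training step to a context window length. The sketch below assumes a simple linear ramp; the function name, the ramp shape, and all default values are illustrative assumptions, since the summary does not give the paper's actual schedule or hyperparameters.

```python
def context_window(step, start_window=256, final_window=8192, ramp_steps=10_000):
    """Context length to use at a given training step (assumed linear ramp)."""
    if step >= ramp_steps:
        return final_window
    fraction = step / ramp_steps
    return int(start_window + fraction * (final_window - start_window))


if __name__ == "__main__":
    for step in (0, 2_500, 5_000, 10_000, 20_000):
        print(step, context_window(step))
```

Early steps train on short windows, and the window grows until it reaches the target length, after which it stays fixed.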

- Composable Generalization Achieved Through Hypernetworks: A paper reformulates multi-head attention as a hypernetwork, revealing that a composable, low-dimensional latent code specifies key-query specific operations, allowing transformers to generalize to novel problem instances (2406.05816).

- For each pair of q, k indices, the authors interpret activations along the head-number dimension as a latent code that specifies the task or context.
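
The hypernetwork reading follows from reordering the sums in standard multi-head attention. The notation below is assumed for illustration rather than taken from the paper: $a_{h,q,k}$ is the attention weight of head $h$ for query index $q$ and key index $k$, $W_h^{V}$ and $W_h^{O}$ are the value and output projections of head $h$, $x_k$ is the input at position $k$, and biases are ignored.

```latex
\begin{aligned}
y_q &= \sum_{h=1}^{H} W_h^{O} \sum_{k} a_{h,q,k}\, W_h^{V} x_k \\
    &= \sum_{k} \Big( \sum_{h=1}^{H} a_{h,q,k}\, W_h^{O} W_h^{V} \Big) x_k
     = \sum_{k} W_{q,k}\, x_k,
\qquad
W_{q,k} := \sum_{h=1}^{H} a_{h,q,k}\, W_h^{O} W_h^{V}.
\end{aligned}
```

For each pair $(q, k)$, the vector $(a_{1,q,k}, \dots, a_{H,q,k})$ along the head dimension acts as the latent code: it mixes $H$ fixed matrices into the linear map $W_{q,k}$ that is applied to $x_k$.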

- lm_eval Upgrade PR Awaits Review: A pull request (PR) is open to update the evaluation logic in `gpt-neox` to `lm_eval==0.4.8`, the latest version, with potentially unrelated failing tests to be addressed in a separate PR, as linked here: PR 1348.

- Failures might be due to environment setup or inconsistent versioning of dependencies.

Modular (Mojo 🔥) Discord

- Mojo Sidelined for Websites: Members advised against using Mojo for websites due to its lack of specifications for cryptographically sound code and a weak IO story, favoring Rust for its production-ready libraries.

- It was suggested that Rust's faster async capabilities are better suited for applications requiring authentication or HTTPS.

- Mojo's Hardware-Backed AES Implementation Paused: A hardware-backed AES implementation in Mojo doesn't run on older Apple silicon Macs and isn't a full TLS implementation, leading to a pause in development.

- The developer is waiting for a cryptographer to write the software half, citing risks of cryptographic functions implemented by non-experts.

- SIMD Optimizations Boost AES: Discussion centered on using SIMD for AES, noting that x86 has vaes and similar features for SIMD AES 128.

- It was also mentioned that ARM has SVE AES, which is similar but not as well supported, showcasing hardware optimizations for cryptographic functions.

- Go Floated as a Backend Middle Ground: As an alternative to Rust, a member suggested Go as a middle ground that is also production ready, while another expressed concerns about too many microservices.

- A member was nonetheless reluctant to use Rust, perceiving it as slow to write, and was seeking an easier solution for backend development; the suggestion was to have the Rust API call into it and pass arguments along.

- Mojo Bypasses CUDA with PTX for NVIDIA: Mojo directly generates PTX (Parallel Thread Execution) code to target NVIDIA GPUs, bypassing CUDA and eliminating dependencies on cuBLAS, cuDNN, and CUDA C.

- This approach streamlines the development process by avoiding the need for CUDA-specific libraries.

DSPy Discord

- DSPy tackles Summarization Task: A member is exploring using DSPy for a text summarization task with 300 examples and is testing it out on a simple metric to see where exactly the summarization differed to make the optimizer more effective.

- The feedback can be returned via `dspy.Prediction(score=your_metric_score, feedback="stuff about the ground truth or how the two things differ")` to guide the optimization.
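
A metric that reports where two summaries differ might look like the sketch below. To keep it runnable without DSPy installed, `MetricResult` is a hypothetical stand-in for the `dspy.Prediction(score=..., feedback=...)` object mentioned above, and the word-overlap F1 is an assumed "simple metric", not the member's actual one.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    """Hypothetical stand-in for dspy.Prediction(score=..., feedback=...)."""
    score: float
    feedback: str


def summary_metric(gold_summary, generated_summary):
    """Word-overlap F1 plus a note on which words differ."""
    gold = set(gold_summary.lower().split())
    pred = set(generated_summary.lower().split())
    if not gold or not pred:
        return MetricResult(0.0, "One of the summaries is empty.")
    overlap = gold & pred
    if overlap:
        precision = len(overlap) / len(pred)
        recall = len(overlap) / len(gold)
        score = 2 * precision * recall / (precision + recall)
    else:
        score = 0.0
    missing = sorted(gold - pred)  # in the ground truth, absent from the output
    extra = sorted(pred - gold)    # in the output, absent from the ground truth
    feedback = f"Missing from summary: {missing}. Not in ground truth: {extra}."
    return MetricResult(score, feedback)


if __name__ == "__main__":
    print(summary_metric("the cat sat on the mat", "the dog sat on the mat"))
```

With DSPy available, the same score and feedback string would be wrapped in `dspy.Prediction` as described above instead of the stand-in class.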

- SIMBA Provides Granular Feedback: A member suggested using the experimental optimizer `dspy.SIMBA` for the summarization task, which allows for providing feedback on differences between the generated summary and the ground truth.

- This level of feedback allows for more precise guidance during the optimization process.

- Output Refinement with BestOfN and Refine: A member shared a link to a DSPy tutorial on Output Refinement explaining the `BestOfN` and `Refine` modules, which are designed to improve prediction reliability.

- The tutorial elaborates on how both modules stop when they have reached `N` attempts or when the `reward_fn` returns a reward above the `threshold`.

- BestOfN Module Triumphs with Temperature Tweaks: The `BestOfN` module runs a given module multiple times with different temperature settings to get an optimal result.

- It returns either the first prediction that passes a specified threshold or the one with the highest reward if none meet the threshold.
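
The stopping rule described for `BestOfN` can be sketched in plain Python. This is an illustration of the control flow only, not DSPy's implementation: `best_of_n`, `generate`, and the use of `>=` for "passes the threshold" are assumptions, and `generate(attempt)` stands in for rerunning the module with a different temperature on each attempt.

```python
def best_of_n(generate, reward_fn, n, threshold):
    """Try up to n candidates; return (output, reward, attempts_used).

    Stops early at the first candidate whose reward passes the threshold,
    otherwise returns the highest-reward candidate seen.
    """
    best_output, best_reward = None, float("-inf")
    for attempt in range(n):
        output = generate(attempt)  # stand-in for a rerun at a new temperature
        reward = reward_fn(output)
        if reward >= threshold:
            return output, reward, attempt + 1
        if reward > best_reward:
            best_output, best_reward = output, reward
    return best_output, best_reward, n


if __name__ == "__main__":
    candidates = ["short", "a longer answer", "mid answer"]
    result = best_of_n(lambda i: candidates[i], len, n=3, threshold=10)
    print(result)
```

Keeping the reward function separate from the generator is what lets the same loop serve different tasks: only `reward_fn` and `threshold` change.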

- Refine Module Composable?: A member inquired if `Refine` is going to subsume assertions, and whether it's as granular and composable, since it wraps an entire module.

- Another member responded that the composability can be managed by adjusting the module size, allowing for more explicit control over the scope.

tinygrad (George Hotz) Discord

- AMD Legacy GPUs get tinygrad bump: With the OpenCL frontend, older AMD GPUs (not supported by ROCm) such as those in a 2013 Mac Pro can potentially run with tinygrad.

- Success depends on the custom driver and level of OpenCL support available; users should verify their system compatibility.

- ROCm Alternative emerges for Old AMD: For older AMD GPUs, ROCm lacks support but the OpenCL frontend in tinygrad might offer a workaround.

- Success will vary based on specific driver versions and the extent of OpenCL support; experimentation is needed.

Codeium (Windsurf) Discord

- Windsurf Kicks Off Creators Club: Windsurf launched a Creators Club rewarding community members for content creation, offering $2-4 per 1k views.

- Details for joining can be found at the Windsurf Creators Club.

- Windsurf Opens 'Vibe Coding' Channel: Windsurf has created a new channel for 'vibe coders' to enter the flow state, chat, discuss, and share tips/tricks.

- The goal is to enhance the coding experience by fostering a collaborative and immersive environment.

- Windsurf v1.5.8 Patches Released: Windsurf v1.5.8 is now released with patch fixes, including cascade/memories fixes, Windsurf Previews improvements, and cascade layout fixes.

- An image showcasing the release was also shared, highlighting the specific improvements.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email!

If you enjoyed AInews, please share with a friend! Thanks in advance!