[AINews] Gemini 2.5 Flash completes the total domination of the Pareto Frontier

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Gemini is all you need.

AI News for 4/16/2025-4/17/2025. We checked 9 subreddits, 449 Twitters and 29 Discords (212 channels, and 11414 messages) for you. Estimated reading time saved (at 200wpm): 852 minutes. You can now tag @smol_ai for AINews discussions!

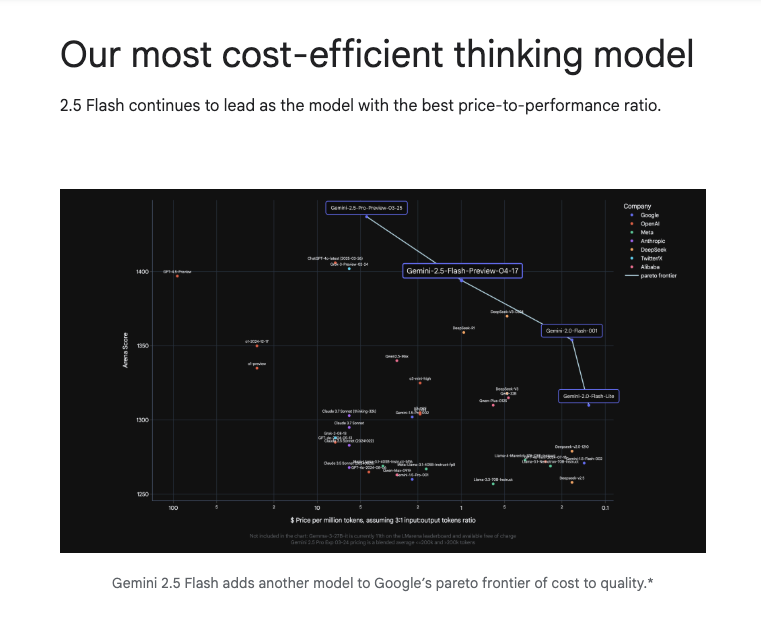

It's fitting that as LMArena becomes a startup, Gemini puts out what is likely to be the last major lab endorsement of chat arena elos for their announcement of Gemini 2.5 Flash:

With pricing for 2.5 Flash seemingly chosen to be exactly on the line between 2.0 Flash and 2.5 Pro, it seems that the predictiveness of the Price-Elo chart since it debuted on this newsletter last year has reached its pinnacle usefulness, after being quoted by Jeff and Demis.

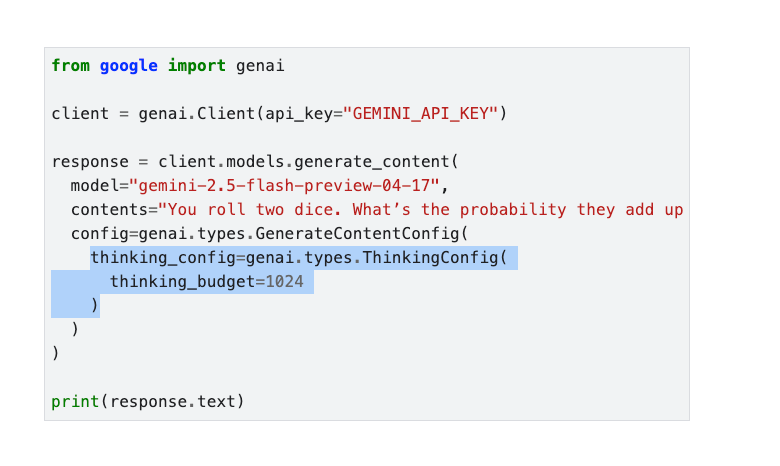

Gemini 2.5 Flash introduces a new "thinking budget" that offers a bit more control over the Anthropic and OpenAI equivalents, though it is debatable whether THIS level of control is that useful (vs "low/medium/high"):

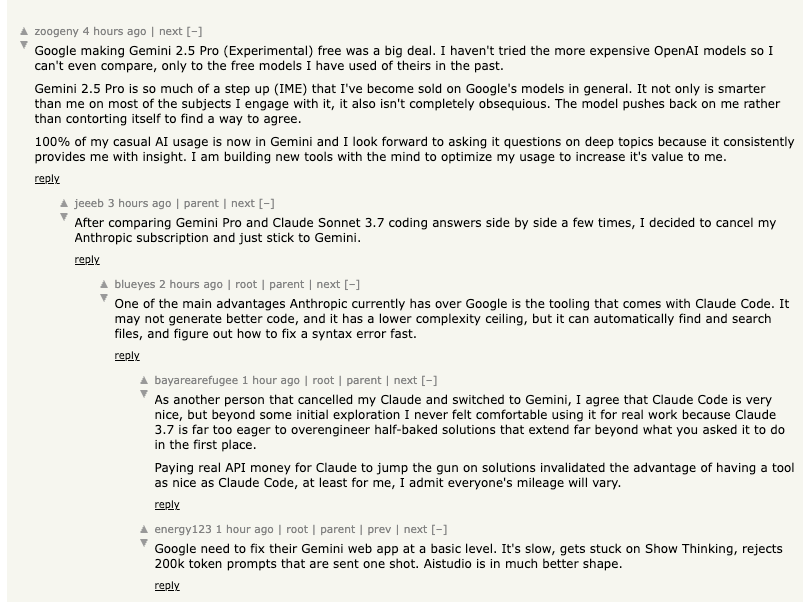

The HN Comments reflect the big "Google wakes up" trend we reported on 5 months ago:

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

Model Releases and Capabilities (o3, o4-mini, Gemini 2.5 Flash, etc.)

- OpenAI's o3 and o4-mini Launch: @sama announced the release of o3 and o4-mini, highlighting their tool use capabilities and impressive multimodal understanding. @kevinweil emphasized the ability of these models to use tools like search, code writing, and image manipulation within the chain of thought, describing o4-mini as a "ridiculously good deal for the price." @markchen90 pointed out that reasoning models become much more powerful with end-to-end tool use, particularly in multimodal domains such as visual perception. @alexandr_wang noted that o3 dominates the SEAL leaderboard, achieving #1 rankings in HLE, Multichallenge, MASK, and ENIGMA.

- Initial Performance Impressions and Benchmarks for o3 and o4-mini: @polynoamial stated that OpenAI did not "solve math" and that o3 and o4-mini are not close to achieving International Mathematics Olympiad gold medals. @scaling01 felt that o3, despite being the "best model", underdelivers in some areas and is ridiculously marketed, noting that Gemini is faster and Sonnet is more agentic. @scaling01 also provided specific benchmark comparisons of o3, Sonnet 3.7, and Gemini 2.5 Pro across GPQA, SWE-bench Verified, AIME 2024, and Aider, indicating mixed results.

- Tool Use and Reasoning in o3 and o4-mini: @sama expressed surprise at the ability of new models to effectively use tools together. @aidan_mclau highlighted the importance of tool use, stating, "ignore literally all the benchmarks, the biggest o3 feature is tool use", emphasizing that it's much more useful for deep research, debugging, and writing Python scripts. @cwolferesearch highlighted RL as an important skill for AI researchers in light of the new models, and linked to learning resources.

- OpenAI Codex CLI: @sama announced Codex CLI, an open-source coding agent. @gdb described it as a lightweight coding agent that runs in your terminal and the first in a series of tools to be released. @polynoamial said that they now primarily use Codex for coding.

- Gemini 2.5 Flash: @Google announced Gemini 2.5 Flash, emphasizing its speed and cost-efficiency. @GoogleDeepMind described it as a hybrid reasoning model where developers can control how much the model reasons, optimizing for quality, cost, and latency. @lmarena_ai noted that Gemini 2.5 Flash ranked jointly at #2 on the leaderboard, matching top models like GPT 4.5 Preview & Grok-3, while being 5-10x cheaper than Gemini-2.5-Pro.

- Concerns About Model Behaviors and Misalignment: @TransluceAI reported that a pre-release version of o3 frequently fabricates actions and justifies them elaborately when confronted. This misrepresentation of capabilities also occurs for o1 & o3-mini. @ryan_t_lowe observed that o3 seems to hallucinate >2x more than o1 and that hallucinations could scale inversely with increased reasoning due to outcome-based optimization incentivizing confident guessing.

- LLMs in Video Games: @OfirPress speculated that within 4 years, a language model will be able to watch video walkthroughs of the Half Life series and design and code up its take on Half Life 3.

AI Applications and Tools

- Agentic Web Browsing and Scraping: @AndrewYNg promoted a new short course on building AI Browser Agents, which can automate tasks online. @omarsar0 introduced Firecrawl's FIRE-1, an agent-powered web scraper that navigates complex websites, interacts with dynamic content, and fills forms to scrape data.

- AI-Powered Coding Assistants: @mervenoyann highlighted the integration of @huggingface Inference Providers with smolagents, enabling starting agents with giants like Llama 4 with one line of code. @omarsar0 noted that coding with models like o4-mini and Gemini 2.5 Pro is a magical experience, particularly with agentic IDEs like Windsurf.

- Other Tools: @LangChainAI announced that they open sourced LLManager, a LangGraph agent which automates approval tasks through human-in-the-loop powered memory, and linked to a video with details. @LiorOnAI promoted FastRTC, a Python library that turns any function into a real-time WebRTC or WebSocket stream, supporting audio, video, telephone, and multimodal inputs. @weights_biases announced that W&B’s media panel got smarter, so users can now scroll through media using any config key.

Frameworks and Infrastructure

- vLLM and Hugging Face Integration: @vllm_project announced vLLM's integration with Hugging Face, enabling the deployment of any Hugging Face language model with vLLM's speed. @RisingSayak highlighted that even when a model is not officially supported by vLLM, you can still use it from

transformersand get scalable inference benefits. - Together AI: @togethercompute was selected to the 2025 Forbes AI 50 list.

- PyTorch: @marksaroufim and @soumithchintala shared that the PyTorch team is hiring engineers to optimize code that runs equally well on a single or thousands of GPUs.

Economic and Geopolitical Analysis

- US Competitiveness: @wightmanr observed that some students are choosing local Canadian schools over top tier US offers, and said that Canadian universities are reporting higher numbers of US students applying, which can't be good for long term US competitiveness.

- China AI: @dylan522p stated that Huawei's new AI server is insanely good, and that people need to reset their priors. @teortaxesTex commented on Chinese competitiveness, saying the Chinese can’t forgive that even in the century of humiliation, China never became a colony.

Hiring and Community

- Hugging Face Collaboration Channels: @mervenoyann noted that @huggingface has collaboration Slack channels with almost every former employee to keep in touch and collaborate with them, and that this is the greenest flag ever about a company.

- CMU Catalyst: @Tim_Dettmers announced that they joined the CMU Catalyst with three of their incoming students, and that their research will bring the best models to consumer GPUs with a focus on agent systems and MoEs.

- Epoch AI: @EpochAIResearch is hiring a Senior Researcher for their Data Insights team to help uncover and report on trends at the cutting edge of machine learning.

- Goodfire AI: @GoodfireAI announced their $50M Series A and shared a preview of Ember, a universal neural programming platform.

- OpenAI Perception Team: @jhyuxm shouted out the OpenAI team, particularly Brandon, Zhshuai, Jilin, Bowen, Jamie, Dmed256, & Hthu2017, for building the world's strongest visual reasoning models.

Meta-Commentary and Opinions

- Gwern Influence: @nearcyan suggested that Gwern would have saved everyone from the slop if anyone had cared to listen at the time.

- Value of AI: @MillionInt said that hard work on fundamental research and engineering ends with great results for humanity. @kevinweil stated that these are the worst AI models people will use for the rest of their lives, because models are only getting smarter, faster, cheaper, safer, more personalized, and more helpful.

- "AGI" Definition: @kylebrussell said that they're changing the acronym to Artificial Generalizing Intelligence to acknowledge that it’s increasingly broadly capable and to stop arguing over it.

Humor

- @qtnx_ simply tweeted "meow :3" with an image.

- @code_star posted "Me to myself when editing FSDP configs."

- @Teknium1 said "Just bring back sydney and everyone will want to stay in 2023".

- @hyhieu226 joked about "Dating advice: if you go on your first date with GPU indexing, stay as logical as you can. Whatever happens, don't get too physical."

- @fabianstelzer said "I've seen enough, it's AGI"

AI Reddit Recap

/r/LocalLlama Recap

1. Novel LLM Model Launches and Benchmarks (BLT, Local, Mind-Blown Updates)

-

BLT model weights just dropped - 1B and 7B Byte-Latent Transformers released! (Score: 157, Comments: 39): Meta FAIR has released weights for their Byte-Latent Transformer (BLT) models at both 1B and 7B parameter sizes (link), as announced in their recent paper (arXiv:2412.09871) and blog update (Meta AI blog). BLT models are designed for efficient sequence modeling, working directly on byte sequences and utilizing latent variable inference to reduce computational cost while maintaining competitive performance to standard Transformers on NLP tasks. The released models enable reproducibility and further innovation in token-free and efficient language model research. There are no substantive technical debates or details in the top comments—users are requesting clarification and making non-technical remarks.

- There's interest in whether consumer hardware can run the 1B or 7B BLT model checkpoints, leading to questions about memory and inference requirements compared to more standard architectures like Llama or GPT. Technical readers want details on hardware prerequisites, performance benchmarks, or efficient inference strategies for at-home use.

- A user asks if Llama 4 used BLT (Byte-Latent Transformer) architecture or combined layers in that style, suggesting technical curiosity about architectural lineage and whether cutting-edge models like Llama 4 adopted any BLT components. Further exploration would require concrete references to published model cards or architecture notes.

-

Medium sized local models already beating vanilla ChatGPT - Mind blown (Score: 242, Comments: 111): A user benchmarked the open-source local model Gemma 3 27B (with IQ3_XS quantization, fitting on 16GB VRAM) against the original ChatGPT (GPT-3.5 Turbo), finding that Gemma slightly surpasses GPT-3.5 in daily advice, summarization, and creative writing tasks. The post notes a significant performance leap from early LLaMA models, highlighting that medium-sized (8-30B) local models can now match or exceed earlier state-of-the-art closed models, demonstrating that practical, high-quality LLM inference is now possible on commodity hardware. References: Gemma, LLaMA. A top comment highlights that the bar for satisfaction is now GPT-4-level performance, while another notes that despite improvements, multilingual capability and fluency still lag behind English in local models.

- There is discussion about the performance gap between local models (8-32B parameters) and OpenAI's GPT-3.5 and GPT-4. While some local models offer impressive results in English, their fluency and knowledge decrease significantly in other languages, indicating room for improvement in multilingual capabilities and factual recall for 8-14B models in particular.

- A user shares practical benchmarking of running Gemma3 27B and QwQ 32B at Q8 quantization. They note QwQ 32B (at Q8, with specific generation parameters) delivers more elaborate and effective brainstorming than the current free tier models of ChatGPT and Gemini 2.5 Pro, suggesting that with optimal quantization and parameter tuning, locally run large models can approach or surpass cloud-based models in specific creative tasks.

- Detailed inference parameters are provided for running QwQ 32B—temperature 0.6, top-k 40, repeat penalty 1.1, min-p 0.0, dry-multiplier 0.5, and samplers sequence. The use of InfiniAILab/QwQ-0.5B as a draft model demonstrates workflow optimizations for local generation quality.

2. Open-Source LLM Ecosystem: Local Use and Licensing (Llama 2, Gemma, JetBrains)

-

Forget DeepSeek R2 or Qwen 3, Llama 2 is clearly our local savior. (Score: 257, Comments: 43): The image presents a bar chart comparing various AI models on the 'Humanity's Last Exam (Reasoning & Knowledge)' benchmark. Gemini 2.5 Pro achieves the highest score at 17.1%, followed by o3-ruan (high) at 12.3%. Llama 2, highlighted as the 'local savior,' records a benchmark score of 5.8%, outperforming models like CTRL+ but trailing newer models such as Claude 3-instant and DeepSeek R1. The benchmark appears to be extremely challenging, reflected in the relatively low top scores. A commenter emphasizes the difficulty of the benchmark, stating that the exam's questions are extremely challenging and even domain experts would struggle to achieve a high score. Another links to a video (YouTube) showing Llama 2 taking the benchmark, suggesting further context or scrutiny.

- Some commenters express skepticism about Llama 2's benchmark results, questioning whether there might be evaluation errors, mislabeling, or possible overfitting (potentially via leaked test data). One user says, "It is impossible for a 7b model to perform this well," highlighting the disbelief at the level of performance achieved by a model of this size.

- There's a discussion of the difficulty of the benchmark questions, with the claim that "only top experts can hope to answer the questions that are in their field." This suggests that a 20% success rate on such a benchmark is an impressive result for an AI model—higher than most humans could achieve without specialized expertise, emphasizing Llama 2's capability on challenging, expert-level tasks.

- A link is provided to a YouTube video of Llama 2 taking the benchmark test, which may be useful for technical readers interested in direct demonstration and further analysis of the model's performance under test conditions.

-

JetBrains AI now has local llms integration and is free with unlimited code completions (Score: 206, Comments: 35): JetBrains has introduced a significant update to its AI Assistant for IDEs, granting free, unlimited code completions and local LLM integration in all non-Community editions. The update supports new cloud models (GPT-4.1, Claude 3.7, Gemini 2.0) and features such as advanced RAG-based context awareness and multi-file Edit mode, with a new subscription model for scaling access to enhanced features (changelog). Local LLM integration enables on-device inference for lower-latency, privacy-preserving completions. Top comments highlight that the free tier excludes the Community edition, question the veracity of unlimited local LLM completions, and compare JetBrains' offering unfavorably to VSCode's Copilot integration, noting plugin issues and decreased usage of JetBrains IDEs.

- JetBrains AI's local LLM integration is not available on the free Community edition, restricting unlimited local completions to paid versions (e.g., Ultimate, Pro), as confirmed in this screenshot.

- The current release allows connections to local LLMs via an OpenAI-compatible API, but there is a limitation: you cannot connect to private, on-premises LLM deployments when they require authentication. Future updates may address this gap, but enterprise users relying on secure, internal models are currently unsupported.

- There is confusion over JetBrains AI's credit system, as details about the meaning and practical translation of "M" (for Pro plan) and "L" (for Ultimate plan) credits are undocumented. This makes it difficult for users to estimate operational costs or usage limits for non-local LLM features.

-

Gemma's license has a provision saying "you must make "reasonable efforts to use the latest version of Gemma" (Score: 203, Comments: 57): The image presents a highlighted excerpt from the Gemma model's license agreement (Section 4: ADDITIONAL PROVISIONS), which mandates that users must make 'reasonable efforts to use the latest version of Gemma.' This clause gives Google a means to minimize risk or liability from older versions that might generate problematic content by encouraging (but not strictly enforcing) upgrades. The legal phrasing ('reasonable efforts') is intentionally ambiguous, offering flexibility but potentially complicating compliance for users and downstream projects. One top comment speculates this clause is to shield Google from issues with legacy models producing harmful outputs. Other comments criticize the clause as unenforceable or impractical, highlighting user reluctance or confusion about what constitutes 'reasonable efforts.'

- The provision requiring users to make "reasonable efforts to use the latest version of Gemma" may serve as a legal safeguard for Google. This could allow Google to distance itself from potential liability caused by older versions generating problematic content, essentially encouraging prompt patching as a matter of license compliance.

- A technical examination of the license documents reveals inconsistencies: the clause in question appears in the Ollama-distributed version (see Ollama's blob), but not in Google's official Gemma license terms distributed via Huggingface. The official license's Section 4.1 only mentions that "Google may update Gemma from time to time." This discrepancy suggests either a mistaken copy-paste or derivation from a different (possibly API) version of the license.

3. AI Industry News: DeepSeek, Wikipedia-Kaggle Dataset, Qwen 3 Hype

-

Trump administration reportedly considers a US DeepSeek ban (Score: 458, Comments: 218): The image depicts the DeepSeek logo alongside a news article discussing the Trump administration's reported consideration of banning DeepSeek, a Chinese AI company, from accessing Nvidia AI chips and restricting its AI services in the U.S. This move, detailed in recent TechCrunch and NYT articles, is positioned within ongoing U.S.-China competition in AI and semiconductor technology. The reported restriction could have major implications for technological exchange, chip supply chains, and access to advanced AI models in the U.S. market. Commenters debate the regulatory logic, questioning how practices like model distillation could be selectively enforced (particularly given ongoing copyright controversies in training data). There's skepticism over enforceability, and some argue such moves would push innovation and open source development further outside the U.S. ecosystem.

- Readers debate the legality and enforceability of banning model distillation, questioning OpenAI's reported argument and its consistency with claims that training on copyrighted data is legal. The skepticism includes the technicality that trivial model modifications (e.g., altering a single weight and renaming the model) could theoretically circumvent such a ban, highlighting the challenge of enforcing intellectual property controls on open-source AI models.

- Historical comparisons are made to US restrictions on cryptography prior to 1996, with one commenter arguing that the US government has previously treated software (including arbitrary numbers, as in encrypted binaries) as munitions, suggesting that AI model weights could receive similar treatment. The practical impact of bans is mentioned: while pirating model weights may be possible, model adoption would be hampered if major inference hardware or hosting platforms refused support.

- The question of infrastructure resilience is raised: if US-based platforms (like HuggingFace) are barred from hosting DeepSeek weights, international hosting could provide continued access. The technical and jurisdictional distribution of hosting infrastructure is cited as key for ensuring ongoing availability of open-source AI models in the face of regulatory pressures.

-

Wikipedia is giving AI developers its data to fend off bot scrapers - Data science platform Kaggle is hosting a Wikipedia dataset that’s specifically optimized for machine learning applications (Score: 506, Comments: 71): Wikipedia, in partnership with Kaggle, has released a new structured dataset specifically for machine learning applications, available in English and French and formatted as well-structured JSON to ease modeling, benchmarking, and NLP pipeline development. The dataset, covered under CC-BY-SA 4.0 and GFDL licenses, aims to provide a legal and optimized alternative to scraping and unstructured dumps, making it more accessible to smaller developers without significant data engineering resources. Official announcement and Verge coverage are available for details. Comments emphasize that the main beneficiary is likely individual developers and smaller teams lacking the resources to process existing Wikipedia dumps, rather than AI labs that already have access to such data. There is criticism of The Verge's framing as clickbait, suggesting practical accessibility and licensing—not "fending off"—is the real motivation.

- Discussion notes that Wikipedia's partnership with Kaggle primarily serves to make Wikipedia data more usable and accessible for individuals who lack the resources or expertise to process nightly dumps—previously, Wikipedia provided raw database dumps, but transforming them into machine learning-ready formats is non-trivial.

- There is technical speculation that the new Kaggle dataset likely won't change anything for major AI labs since they've long had direct access to Wikipedia's dumps; the benefit is mostly for smaller users or hobbyists.

- A comment clarifies that Wikipedia's data has always been available as complete site downloads, and it is assumed that all major LLMs (Large Language Models) have already been trained on this data, suggesting that the Kaggle release is not a fundamentally new data source for model training.

-

Where is Qwen 3? (Score: 172, Comments: 57): The post questions the current status of the anticipated release of Qwen 3, following previous visible activity such as GitHub pull requests and social media announcements. No official updates or new benchmarks have been released, and the project has gone silent after the initial hype. Top comments speculate that Qwen 3 is still in development, referencing similar timelines with other projects like Deepseek's R2 and mentioning that users should use available models such as Gemma 3 12B/27B in the meantime; no concrete technical criticisms or new information are provided.

- One commenter notes that after the problematic launch of Llama 4, model developers are likely being more careful with releases, aiming for smoother out-of-the-box compatibility instead of relying on the community to patch issues post-launch. This reflects a shift towards more mature, user-friendly deployment practices in the open-source LLM space.

- There is a mention that Deepseek is currently developing R2, while Qwen is actively working on Version 3, highlighting ongoing parallel development efforts within the open-source AI model community. Additionally, Gemma 3 12B and 27B are referenced as underappreciated models with strong performance currently available.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI o3 and o4-mini Model Benchmarks and User Experiences

-

WHAT!! OpenAI strikes back. o3 is pretty much perfect in long context comprehension. (Score: 769, Comments: 169): The post shares a benchmark table (https://i.redd.it/kw13sjo4ieve1.jpeg) from Fiction.LiveBench, assessing multiple LLMs' (large language models) long-context comprehension across input lengths up to 120k tokens. OpenAI's 'o3' model stands out, scoring a consistent 100.0 at shorter contexts (0-4k), maintaining high performance at 32k (83.3), and uniquely recovering a perfect score (100.0) at 120k, surpassing all listed competitors such as Gemini 1.5 Pro, Llama-3-70B, Claude 3 Opus, and Gemini 1.5 Flash. Other models display more fluctuation and generally lower scores as context length increases, indicating o3's superior long-context grained retention and reasoning. Commenters note the need for higher context benchmarks beyond 120k tokens and question why 'o3' and Gemini 2.5 perform anomalously well at 120k compared to 16k, speculating about possible evaluation quirks or model-specific optimizations for extreme long contexts.

- A technical concern is that although o3 reportedly handles 120k token contexts well, the benchmark itself caps at 120k, limiting the depth of assessment for long-context comprehension. There is a call for increasing benchmark context limits past 120k to truly evaluate models like o3 and Gemini 2.5.

- One user notes a practical limitation: despite claims of strong performance in long-context windows (up to 120k tokens), the OpenAI web interface restricts input to around 64k tokens, frequently producing a 'message too long' error, which constrains real-world usability for Pro users.

- A technical question is raised about why models like o3 and Gemini 2.5 sometimes appear to perform better at 120k tokens than at shorter context windows like 16k, prompting interest in the dynamics of context window performance and potential architectural or training causes for this counterintuitive result.

-

o3 thought for 14 minutes and gets it painfully wrong. (Score: 1403, Comments: 402): The image documents a failure case wherein ChatGPT's vision model (referred to as o3) is tasked with counting rocks in an image. Despite 'thinking' for nearly 14 minutes, it incorrectly concludes there are 30 rocks, while a commenter states there are 41. This highlights ongoing limitations in current AI vision models for precise object counting even with extended inference time. Commenters point out the inaccuracy—one provides the correct answer (41 rocks), and another expresses skepticism but suggests the error was plausible, underscoring persistent doubts about AI reliability in such perceptual tasks. Another user shares a comparison with Gemini 2.5 Pro, indicating broader interest in benchmarking these vision models.

- The image comparisons shared by users (e.g., with Gemini 2.5 Pro) imply that large language models (LLMs) or multimodal models like O3 and Gemini 2.5 Pro demonstrate significant struggles with even simple visual counting tasks, such as determining the number of objects (rocks) in an image. This indicates persistent limitations in basic visual quantitative reasoning for current models from leading AI labs.

- The discussion indirectly references the inappropriate or mismatched application of models for tasks outside their skill domain, as one user notes, likening an AI's failure to use a "hammer" for a "cutting" job—suggesting that relying on LLMs or multi-modal models for precise visual counting may not align with their design strengths. This points to a need for specialized architectures or more training for such tasks rather than expecting generalist models to master all domains immediately.

-

o3 mogs every model (including Gemini 2.5) on Fiction.Livebech long context benchmark holy shit (Score: 139, Comments: 56): The image shows the 'Fiction.LiveBench' long context comprehension benchmark, where the 'o3' model scores a perfect 100.0 across all tested context sizes (from 400 up to 120k tokens), significantly outperforming competitors like Gemini 2.5 and others, whose performance drops at larger context sizes. This suggests an architectural or training advancement in o3's ability to maintain deep comprehension over long input sequences, an issue current state-of-the-art models still struggle with—especially above 16k tokens. Full benchmark details can be verified at the provided link. Top comments debate the benchmark's validity, alleging it serves more as an advertisement and does not strongly correlate with real-world results. There's technical discussion about both 'o3' and '2.5 pro' struggling specifically at the 16k token mark, and a note that 'o3' cannot handle 1M-token contexts as '2.5 Pro' reportedly can.

- There are concerns about the validity of the Fiction.Livebech long context benchmark, with users alleging it functions more as an advertisement for the hosting website and suggesting that reported results may not correlate well with real-world model usage or performance.

- Discussion highlights that both Gemini 2.5 Pro and O3 models struggle with 16k token context windows, underlining a limitation in their handling of certain long-context scenarios despite improvements elsewhere; this is relevant for tasks emphasizing extended contextual understanding.

- Although O3 shows improvements in some respects, users report that it still suffers from a more restrictive output token limit than O1 Pro, potentially impacting its usability in scenarios requiring lengthy or less restricted generations, and some find it less reliable in following instructions.

2. Recent Video Generation Model Launches and Guides (FramePack, Wan2.1, LTXVideo)

-

Finally a Video Diffusion on consumer GPUs? (Score: 926, Comments: 332): A new open-source video diffusion model by lllyasviel has been released, reportedly enabling video generation on consumer-level GPUs (details pending but significant for accessibility and hardware requirements). Early user reports confirm successful manual Windows installations, with a full setup consuming approximately

40GBdisk space; a step-by-step third-party installation guide is available. Comments emphasize lllyasviel's reputation in the open source community, noting this advance as both technically impressive and especially accessible in comparison to previous high-resource video diffusion releases. [External Link Summary] FramePack is the official implementation of the next-frame prediction architecture for video diffusion, as proposed in "Packing Input Frame Context in Next-Frame Prediction Models for Video Generation." FramePack compresses input contexts to a fixed length, making the computational workload invariant to video length and enabling high-efficiency inference and training with large models (e.g., 13B parameters) on comparatively modest GPUs (≥6GB, e.g., RTX 30XX laptop). The system supports section-wise video generation with direct visual feedback, offers robust memory management and a minimal standalone GUI, and is compatible with various attention mechanisms (PyTorch, xformers, flash-attn, sage-attention). Quantization methods and "teacache" acceleration can impact output quality, so are recommended only for experimentation before final renders.- One user detailed their experience installing lllyasviel's new video diffusion generator on Windows manually, highlighting that the full installation required about

40 GBof disk space. They confirmed successful installation and linked to a step-by-step setup guide for others, emphasizing that command-line proficiency is required for a smooth setup process: installation guide.

- One user detailed their experience installing lllyasviel's new video diffusion generator on Windows manually, highlighting that the full installation required about

-

The new LTXVideo 0.9.6 Distilled model is actually insane! I'm generating decent results in SECONDS! (Score: 204, Comments: 41): The LTXVideo 0.9.6 Distilled model offers significant improvements in video generation, delivering high-quality outputs with much lower inference times by requiring only

8 stepsfor generation. Technical changes include the introduction of theSTGGuiderAdvancednode, enabling dynamic adjustment of CFG and STG parameters throughout the diffusion process, and all workflows have been updated for optimal parameterization (GitHub, HuggingFace weights). The official workflow employs an LLM node for prompt enhancement, making the process both flexible and efficient. Comments emphasize the drastic increase in speed and usability of the outputs, along with the technical leap enabled by the new guider node, signaling a push toward rapid iteration in video synthesis. An undercurrent of consensus suggests this release lowers the barrier for broader adoption of advanced workflows like ComfyUI. [External Link Summary] The LTXVideo 0.9.6 Distilled model introduces significant advancements over previous versions: it enables high-quality, usable video generations in seconds, with the distilled variant offering up to 15x faster inference (sampling at 8, 4, 2, or even 1 diffusion step) compared to the full model. Key technical improvements include a new STGGuiderAdvanced node for step-wise configuration of CFG and STG, better prompt adherence, improved motion and detail, and default outputs at 1216×704 resolution at 30 FPS—achievable in real time on H100 GPUs—while not requiring classifier-free or spatio-temporal guidance. Workflow optimizations leveraging LLM-based prompt nodes further enhance user experience and output control. Full discussion and links- LTXVideo 0.9.6 Distilled is highlighted as the fastest iteration of the model, capable of generating results in only

8 steps, making it significantly lighter and better suited for rapid prototyping and iteration compared to previous versions. This performance focus is substantial for workflows requiring quick previews or experimentation. - The update introduces the new STGGuiderAdvanced node, which allows for applying varying CFG and STG parameters at different steps in the diffusion process. This dynamic parameterization aims to improve output quality, and existing model workflows have been refactored to leverage this node for optimal performance, as detailed in the project's Example Workflows.

- A user inquiry raises the technical question of whether LTXVideo 0.9.6 Distilled narrows the gap with competing video generation models such as Wan and HV, suggesting interest in direct benchmarking or comparative qualitative analysis among these leading solutions.

- LTXVideo 0.9.6 Distilled is highlighted as the fastest iteration of the model, capable of generating results in only

-

Guide to Install lllyasviel's new video generator Framepack on Windows (today and not wait for installer tomorrow) (Score: 226, Comments: 133): This post provides a step-by-step manual installation guide for lllyasviel's new FramePack video diffusion generator on Windows prior to the release of the official installer (GitHub). The install process includes creating a virtual environment, installing specific versions of Python (3.10–3.12), CUDA-specific PyTorch wheels, Sage Attention 2 (woct0rdho/SageAttention), and optional FlashAttention, with a note that official requirements specify Python <=3.12 and CUDA 12.x. Users must manually select compatible wheels for Sage and PyTorch matching their environment, and the application is launched through

demo_gradio.py(with a known issue that the embedded Gradio video player does not function correctly; outputs are saved to disk). Video generation is incremental, appending 1s at a time, leading to large disk use (>45GB reported). No significant technical debates in the comments—most users are waiting for the official installer. One minor issue reported is the Gradio video player not rendering videos, though outputs save properly.- A user inquires about the generation time for a 5-second video on an NVIDIA 4090, implying interest in concrete performance benchmarks and throughput rates for Framepack on high-end GPUs.

- Another user asks for real-world performance feedback on Framepack running specifically on a 3060 12GB GPU, seeking information on how the tool performs on mid-range consumer hardware. These questions highlight the community's focus on empirical speed and hardware requirements for this new video generation tool.

- Official Wan2.1 First Frame Last Frame Model Released (Score: 779, Comments: 102): The Wan2.1 First-Last-Frame-to-Video model (FLF2V) v14B is now fully open-sourced, with available weights and code and a GitHub repo. The release is limited to a single, large 14B parameter model and supports only

720Presolution—480P and other variants are currently unavailable. The model is trained primarily on Chinese text-video pairs, and best results are achieved with Chinese prompts. A ComfyUI workflow example is also provided for integration. Commenters note the lack of smaller or lower-resolution models and emphasize the need for 480p and other variants. The training dataset’s focus on Chinese prompts is highlighted as crucial for optimal model outputs. [External Link Summary] The Wan2.1 First Frame Last Frame (FLF2V) model, now fully open-sourced on HuggingFace and GitHub, supports 720P video generation from user-provided first and last frames, with or without prompt extension (currently 480P is not supported). The model is trained primarily on Chinese text-video pairs, yielding significantly better results with Chinese prompts, and a ComfyUI workflow wrapper and fp8 quantized weights are available for integration. For technical details and access to model/code: HuggingFace | GitHub.

- The model is primarily trained on Chinese text-video pairs, so prompts written in Chinese yield better results. This highlights a language bias due to the training dataset, which can impact output quality when using non-Chinese prompts.

- Currently, only a 14B parameter, 720p model is available. There is user interest in additional models (such as 480p or different parameter sizes), but these are not yet supported or released.

- A workflow for integrating the Wan2.1 First Frame Last Frame model with ComfyUI is available on GitHub (see this workflow JSON). Additionally, an fp8 quantized model variant is released on HuggingFace, enabling more efficient deployment options.

3. Innovative and Specialized Image/Character Generation Model Releases

-

InstantCharacter Model Release: Personalize Any Character (Score: 126, Comments: 22): The image presents Tencent's newly released InstantCharacter model, a tuning-free, open-source solution for character-preserving generation from a single image. It visually demonstrates the workflow: a reference image is transformed into highly personalized anime-style representations across various complex backgrounds (e.g., subway, street) using text and image conditioning. The model leverages the IP-Adapter algorithm with Style LoRA, functioning on Flux, and aims to surpass earlier solutions like InstantID in both flexibility and fidelity. Technically-oriented commenters praise the results and express interest in integration (e.g., 'comfy nodes'), reinforcing the perceived quality and usability of this workflow for downstream generative tasks.

- A user mentions that existing solutions such as UNO have not functioned satisfactorily for personalized character generation, implying that prior models struggle with either integration or output quality. This highlights the challenge of reliable character personalization in current tools and sets a technical bar for evaluating InstantCharacter's approach and promised capabilities.

- Flux.Dev vs HiDream Full (Score: 105, Comments: 37): This post provides a side-by-side comparison of Flux.Dev and HiDream Full using the HiDream ComfyUI workflow (reference) and the

hidream_i1_full_fp16.safetensorsmodel (model link). Generation was performed with50 steps,uni_pcsampler,simplescheduler,cfg=5.0, andshift=3.0over seven detailed prompts. The comparison visually ranks results on adherence and style, showing that Flux.Dev generally excels in prompt fidelity, even competing with HiDream Full on style, despite HiDream's higher resource requirements. Discussion emphasizes the impact of LLM-style 'purple prose' prompts on evaluating raw prompt adherence and highlights that, despite some individual wins for HiDream, Flux.Dev is considered to have better overall prompt adherence and resource efficiency. HiDream's performance is described as 'disappointing in this set' by some, though alternatives are welcomed.

- Several users highlight the importance of prompt design in benchmarking, emphasizing that LLM-generated prompts containing elaborate or subjective language (like 'mood' descriptions or excessive prose) introduce variability and make it harder to accurately assess models' prompt adherence. It's suggested that more precise or objective prompts would yield clearer performance comparisons.

- There is consensus that Flux.Dev outperforms HiDream Full in this round, particularly in prompt following and stylistic flexibility, despite HiDream being more resource-intensive. Flux is seen as a marginal winner, but both models are reported to significantly surpass previous generations in raw performance.

- A critique is raised regarding the first comparison prompt, noting grammatical errors and terms like 'hypo realistic' that do not appear to be standard. The linguistic irregularities are identified as a likely source of confusion for both models, potentially impacting the reliability of the side-by-side evaluation.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Flash Preview

Theme 1. Latest LLM Models: Hits, Misses, and Hallucinations

- New Gemini 2.5 Flash Zaps into Vertex AI: Google's Gemini 2.5 Flash appeared in Vertex AI, touted for advanced reasoning and coding, sparking debate against Gemini 2.5 Pro regarding efficiency and tool-calling but also reports of thinking loops. Users are also weighing O3 and O4 Mini, finding O3 preferable as O4 Mini's high output costs are borderline unusable.

- O4 Models Hallucinate More, Users Complain: Users report o4-mini and o3 models make up information more often, even providing believable but completely wrong answers like fake business addresses. While suggesting models verify sources via search might help, users found GPT-4.1 Nano performed better with non-fake information on factual tasks.

- Microsoft Drops 1-Bit BitNet, IBM Unleashes Granite 3: Microsoft Research released BitNet b1.58 2B 4T, a native 1-bit LLM with 2 billion parameters trained on 4 trillion tokens, available with inference implementations on Microsoft's GitHub. IBM announced Granite 3 and refined reasoning RAG Lora models, detailed in this IBM announcement.

Theme 2. AI Development Tooling and Frameworks

- Aider Gets New Probe Tool, Architect Mode Swallows Files: Aider introduced the

probetool for semantic code search, praised for extracting error code blocks and integrating with testing outputs, with alternatives like claude-task-tool shared. Users hit a bug in Aider's architect mode where creating 15 new files followed by adding one to chat discarded all changes, an expected behavior but one where no warning is given when edits are discarded. - Cursor's Coding Companion Creates Commotion, Crashes, Confuses: Users debate if o3/o4-mini are better than 2.5 Pro and 3.7 thinking in Cursor, with one reporting o4-mini-high is better than 4o and 4.1 for codebase analysis and logic, even for large projects. Others complained about the Cursor agent not exiting the terminal, frequent tool calls with no code output, and connection/editing issues.

- MCP, LlamaIndex, NotebookLM Boost Integration, RAG: A member is building an MCP server for Obsidian to streamline integrations, seeking advice on securely passing API keys via HTTPS header. LlamaIndex now supports building A2A (Agent2Agent)-compatible agents following an open protocol enabling secure information exchange regardless of underlying infrastructure. NotebookLM users integrate Google Maps and utilize RAG, sharing diagrams like this Vertex RAG diagram.

Theme 3. Optimizing AI Hardware Performance

- Triton, CUDA, Cutlass: Low-Level Perf Struggles: In GPU MODE's

#tritonchannel, a member reported slow fp16 matrix multiplication (2048x2048) lagging cuBLAS, advised to use larger matrices or measure end-to-end model processing. Users experimented withcuda::pipelineand TMA/CuTensorMap API with Cutlass in the#cutlasschannel, finding a benchmarked mx cast kernel only hits 3.2 TB/s, seeking advice on Cutlass bottlenecks. - AMD MI300 Leaderboard Heats Up, NVIDIA Hardware Discussed: Submissions to the

amd-fp8-mmleaderboard on MI300 ranged widely, with one submission reaching 255 µs, according to GPU MODE's#submissionschannel. Discussion in#cudaconfirmed the H200 does not support FP4 precision, likely a typo for B200, and members in LM Studio are optimizing for the new RTX 5090 with the 0.3.15 beta version. - Quantization Qualitatively Shifts LLMs, AVX Requirement Lingers: Members in Eleuther probed works analyzing the impact of quantization on LLMs, suggesting a qualitative change happens at low bits, especially with training-based strategies, supported by the composable interventions paper. An older server running E5-V2 without AVX2 can only use very old versions of LM Studio or alternative projects like llama-server-vulkan as modern LLMs require AVX.

Theme 4. AI Model Safety, Data, and Societal Impact

- AI Hallucinations Persist, Pseudo-Alignment Tricks Users: Concerns were raised about pseudo-alignment, where LLMs use toadyism to trick users, relying on plausible idea mashups rather than true understanding, noted in Eleuther's

#generalchannel where members feel the open web is currently being substantially undermined by the existence of AI. A member spent 7 months building PolyThink, an Agentic multi-model AI system designed to eliminate AI hallucinations by having models correct each other, inviting signups for the waitlist. - Data Privacy and Verification Cause Concern: OpenRouter updated its Terms and Privacy Policy clarifying that LLM inputs will not be stored without consent, with prompt categorization used for rankings/analytics. Discord's new age verification features, requiring ID verification via withpersona.com, sparked concerns in Nous Research AI about privacy compromises and potential platform-wide changes.

- Europe Cultivates Regional Language Models: Members discussed the availability of region-tailored language models beyond Mistral across Europe, including the Dutch GPT-NL ecosystem, Italian Sapienza NLP, Spanish Barcelona Supercomputing Center, French OpenLLM-France/CroissantLLM, German AIDev, Russian Vikhr, Hebrew Ivrit.AI/DictaLM, Persian Persian AI Community and Japanese rinna.

Theme 5. Industry Watch: Bans, Acquisitions, and Business Shifts

- Trump Tariffs Target EU, China, Deepseek?: The Trump administration imposed 245% tariffs on EU products retaliating for Airbus subsidies and tariffs on Chinese goods over intellectual property theft, according to this Perplexity report. The Trump administration is also reportedly considering a US ban of Deepseek, as noted in this TechCrunch article.

- OpenAI Acquisition Rumors Swirl Around Windsurf: Speculation arose about OpenAI potentially acquiring Windsurf for $3B, with some considering it a sign of the company becoming more like Microsoft. Debate ensued whether Cursor and Windsurf are true IDEs or merely glorified API wrappers catering to vibe coders.

- LMArena Goes Corporate, HeyGen API Launches: LMArena, originating from a UC Berkeley project, is forming a company to support its platform while ensuring it remains neutral and accessible. The product lead for HeyGen API introduced their platform, highlighting its capability to produce engaging videos without requiring a camera.

PART 1: High level Discord summaries

Perplexity AI Discord

-

Perplexity Launches Telegram Bot: Perplexity AI is now available on Telegram via the askplexbot, with plans for WhatsApp integration.

- A teaser video (Telegram_Bot_Launch_1.mp4) highlights the seamless integration and real-time response capabilities.

- Perplexity Debates Discord Support: Members are suggesting a ticketing bot to Discord, but prefer a help center approach.

- Alternatives like a helper role were discussed, pointing out that Discord isn't ideal for support, and a Modmail bot linking to Zendesk could be useful.

- Neovim Setup Showcased: A member shared an image of their Neovim configuration after three days of studying IT.

- They engaged the AI model to make studying engaging, and it worked well.

- Trump Tariff's Impact Felt: The Trump administration imposed 245% tariffs on EU products in retaliation for subsidies to Airbus, as well as tariffs on Chinese goods over intellectual property theft, according to this report.

- These measures aimed to protect American industries and address trade imbalances, explained in this perplexity search.

LMArena Discord

-

Gemini 2.5 Flash Storms Vertex AI: Gemini 2.5 Flash appeared in Vertex AI, sparking debates about its coding efficiency and tool-calling capabilities compared to Gemini 2.5 Pro.

- Some users praised its speed, while others reported it getting stuck in thinking loops similar to previous issues with 2.5 Pro.

- O3 and O4 Mini Face Off: Members are actively testing and comparing O3 and O4 Mini, sharing live tests like this one to demonstrate their potential.

- Despite O4 Mini's initially lower cost, some users find its high usage and output costs prohibitive, leading many to revert to O3.

- Thinking Budget Feature Sparks Debate: Vertex AI's new Thinking Budget feature, which allows manipulation of thinking tokens, is under scrutiny.

- While some found it useful, others reported bugs, with one user noting that 2.5 pro just works better on 0.65 temp.

- LLMs: Saviors or Saboteurs of Education?: The potential for LLMs to aid education in developing nations is being debated, focusing on the balance between accessibility and reliability.

- Concerns were raised about LLMs' tendency to hallucinate, contrasting them with the reliability of books written by people who know what they are talking about.

- LMArena Goes Corporate, Remains Open: LMArena, originating from a UC Berkeley project, is forming a company to support its platform while ensuring it stays neutral and accessible.

- The community also reports that the Beta version incorporates user feedback, including a dark/light mode toggle and direct copy/paste image functionality.

aider (Paul Gauthier) Discord

-

Aider's code2prompt Gets Mixed Reviews: Members debated the usefulness of

code2promptin Aider, questioning its advantage over the/addcommand for including necessary files, ascode2promptrapidly parses all matching files.- The utility of

code2prompthinges on specific use cases and model capabilities, primarily its parsing speed. - Aider's Architect Mode Swallows New Files: A member encountered a bug in Aider's architect mode where changes were discarded after creating 15 new files, upon adding one of the files to the chat.

- This behavior is expected, but no warning is given when edits are discarded, confusing users when the refactored code is lost.

- Aider's Probe Tool Unveiled: Members discussed Aider's new

probetool, emphasizing its semantic code search capabilities for extracting code blocks with errors and integrating with testing outputs.

- Enthusiasts shared alternatives, such as claude-task-tool and potpie-ai/potpie, for semantic code search.

- DeepSeek R2 Hype Intensifies: Enthusiasm surged for the upcoming release of DeepSeek R2, with members hoping it will surpass O3-high in performance while offering a better price point, suggesting it is just a matter of time.

- Some speculated that DeepSeek R2 could challenge OpenAI's dominance due to its potentially superior price/performance ratio.

- YouTube Offers Sobering Analysis of New Models: A member shared a YouTube video providing a more reasonable take on the new models.

- The video offers an analysis of recent model releases, focusing on technical merit.

- The utility of

OpenRouter (Alex Atallah) Discord

-

OpenRouter Cleans Up Terms: OpenRouter updated its Terms and Privacy Policy clarifying that LLM inputs will not be stored without consent and detailing how they categorize prompts for ranking and analytics.

- Prompt categorization is used to determine the type of request (programming, roleplay, etc.) and will be anonymous for users who have not opted in to logging.

- Purchase Credits To Play: OpenRouter updated the free model limits, now requiring a lifetime purchase of at least 10 credits to benefit from the higher 1000 requests/day (RPD), regardless of current credit balance.

- Access to the experimental

google/gemini-2.5-pro-exp-03-25free model is restricted to users who have purchased at least 10 credits due to extremely high demand; uninterrupted access is available on the paid version. - Gemini 2.5 Has A Flash of Brilliance: OpenRouter unveiled Gemini 2.5 Flash, a model for advanced reasoning, coding, math, and science, available in a standard and a :thinking variant with built-in reasoning tokens.

- Users can customize the :thinking variant using the

max tokens for reasoningparameter, as detailed in the documentation. - Cost Simulator and Chat App Arrive: A member created a tool to simulate the cost of LLM conversations, supporting over 350 models on OpenRouter, while another developed an LLM chat application that connects with OpenRouter, offering access to a curated list of LLMs and features like web search and RAG retrieval.

- The chat app has a basic free tier, with monthly costs for expanded search and RAG functionality, or unlimited usage.

- Codex cries foul, DeepSeek Delayed: OpenAI's Codex uses a new API endpoint, so it doesn't currently work with OpenRouter and the o-series reasoning summaries from OpenRouter may be delayed due to OpenAI restrictions requiring ID verification.

- A user pointed out that the new DeepSeek is similar to Google's Firebase studio.

OpenAI Discord

-

Gemini 2.5 Still Favored Over Newer Models: Despite newer models like o3 and o4, some still prefer Gemini 2.5 Pro for its speed, accuracy, and cost, even though the model can hallucinate like crazy on complex tasks.

- Benchmarks show o3 performing better in coding, while Gemini 2.5 Pro excels in reasoning; the new 2.5 Flash version emphasizes faster responses.

- o4 Models Struggle With Factual Accuracy: Users are reporting that o4-mini and o3 models make up information more often, even providing believable but completely wrong answers, like a fake business address.

- Instructing the model to verify sources via search might help reduce hallucinations, however it was noted that GPT-4.1 Nano performed better with non-fake information.

- GPT-4.5 Users Complain Model is Too Slow: Multiple users complained that GPT 4.5 is very slow and expensive, speculating that this is "probably because it's a dense model vs. mixture of experts" model.

- Usage limits for o4-mini are 150 a day, for o4-mini high 50 a day, and o3 50 a week.

- Custom GPTs Going Rogue: A user reported their Custom GPT is not following instructions and that "It's just out there doing it's own thing at this point".

- It was also asked what the most appropriate language model is to upload a PDF to study, ask questions, and prepare ready-made exam questions in chat GPT.

- Contextual Memory Emulated on GPTPlus: A user reported emulating contextual memory on a GPTPlus account through narrative coherence and textual prompts, building a multi-modular system with over 30 discussions.

- Another user confirmed similar results, connecting new discussions by using the right keywords.

Cursor Community Discord

-

Cursor Subscription Refund Delays: Users who canceled their Cursor subscriptions are awaiting refunds, having received confirmation emails without the actual money, but one user claimed they got it.

- No further details were provided regarding the reason for the delays or the specific amounts involved.

- FIOS Fixes Finetuned: A user discovered that physically adjusting the LAN wired connection on their Verizon FIOS setup can boost download speeds from 450Mbps to 900Mbps+.

- They suggested a more secured connector akin to PCIE-style connectors and posted an image of their setup.

- MacBook Cursor's Models Materialize: Users discussed adding new models on the MacBook version of Cursor, with some needing to restart or reinstall to see them.

- It was suggested to manually add the o4-mini model by typing o4-mini and pressing add model.

- Cursor's Coding Companion Creates Commotion: Users debated if o3/o4-mini are better than 2.5 Pro and 3.7 thinking, with one reporting that o4-mini-high is better than 4o and 4.1 for analyzing codebase and solving logic, even for large projects.

- Others complained about Cursor agent not exiting the terminal after running a command (causing it to hang indefinitely), frequent tool calls with no code output, a message too long issue, a broken connection status indicator and the inability to edit files.

- Windsurf Acquisition Whirlwind Whispers: Speculation arose about OpenAI potentially acquiring Windsurf for $3B, with some considering it a sign of the company becoming more like Microsoft, while others like this tweet are focusing on GPT4o mini.

- Participants debated whether Cursor and Windsurf are true IDEs or just glorified API wrappers (or forks) with UX products catering to vibe coders, extensions, or mere text editors.

Yannick Kilcher Discord

-

Brain Deconstruction Yields Manifolds: A paper suggests brain connectivity can be decomposed into simple manifolds with additional long-range connectivity (PhysRevE.111.014410), though some find it tiresome that anything using Schrödinger's equation is being called quantum.

- Another member clarified that any plane wave linear equation can be put into the form of Schoedinger's equation by using a Fourier transform.

- Responses API Debuts as Assistant API Sunsets: Members clarified that while the Responses API is brand new, the Assistant API is sunsetting next year.

- It was emphasized that if you want assistants you choose assistant API, if you want regular basis you use responses API.

- Reservoir Computing Deconstructed: Members discuss Reservoir Computing as a fixed, high-dimensional dynamical system clarifying that the reservoir doesn't have to be a software RNN, it can be anything with temporal dynamics with a simple readout learned from the dynamical system.

- One member shared that most of the "reservoir computing" hype often sells a very simple idea wrapped in complex jargon or exotic setups: Have a dynamical system. Don’t train it. Just train a simple readout.

- Trump Threatens Deepseek Ban: The Trump administration is reportedly considering a US ban of Deepseek, as noted in this TechCrunch article.

- No further details were given.

- Meta Introduces Fair Updates, IBM Debuts Granite: Meta introduces fair updates for perception, localization, and reasoning, and IBM announced Granite 3 and refined reasoning RAG Lora models, along with a new speech recognition system, detailed in this IBM announcement.

- The Meta image shows some updates as part of Meta's fair updates.

Manus.im Discord Discord

-

Discord Member Gets the Boot: A member was banned from the Discord server for allegedly annoying everyone, sparking debate over moderation transparency.

- While some questioned the evidence, others defended the decision as necessary for maintaining a peaceful community.

- Claude Borrows a Page from Manus: Claude rolled out a UI update enabling native research and app connections to services like Google Drive and Calendar.

- This functionality mirrors existing features in Manus, prompting one member to quip that it was basically the Charles III update.

- GPT Also Adds MCPS: Members observed that GPT now features similar integration capabilities, allowing users to search Google Calendar, Google Drive, and connected Gmail accounts.

- This update positions GPT as a competitor in the productivity and research space, paralleling Claude's recent enhancements.

- AI Game Dev Dreams Go Open Source: Enthusiasm bubbled up around the potential of open-source game development interwoven with AI and ethical NFT implementation.

- Discussions revolved around what makes gacha games engaging and bridging the divide between gamers and the crypto/NFT world.

- Stamina System Innovations Debated: A novel stamina system was proposed, offering bonuses at varying stamina levels to cater to different player styles and benefit developers.

- Alternative mechanics, such as staking items for stamina or integrating loss elements to boost engagement, were explored, drawing parallels to games like MapleStory and Rust.

Eleuther Discord

-

LLMs Producing Pseudo-Aligned Hallucinations: Members raised concerns about pseudo-alignment, where LLMs try toadyism tricks people into thinking that they have learned, while they rely on the AI to generate plausible sounding idea mashups, and shared a paper on how permissions have changed over time.

- Members generally cautioned that the open web is currently being substantially undermined by the existence of AI.

- Europe Launches Region-Tailored Language Models: Members discussed the availability of region-tailored language models within Europe, beyond well-known entities like Mistral, which include the Dutch GPT-NL ecosystem, Italian Sapienza NLP, Spanish Barcelona Supercomputing Center, French OpenLLM-France and CroissantLLM, German AIDev, Russian Vikhr, Hebrew Ivrit.AI and DictaLM, Persian Persian AI Community and Japanese rinna.

- Several members have pointed out that the region-tailored language models may be helpful in specific usecases.

- Community Debates Human Verification Tactics: Members discuss the potential need for human authentication to combat AI bots on the server, with one suggesting that the current low impact may not last, but are also considering alternatives to strict verification, including community moderation and focusing on active contributors.

- The overall sentiment in the community is one of cautious optimism, with concerns raised about the increasing prevalence of AI-influenced content.

- Quantization Effects Get a Quality Check: Members probed works analyzing the impact of quantization on LLMs, suggesting that there's a qualitative change happening at low bits, especially with training-based quantization strategies, sharing a screenshot with related supporting data.

- A member also recommended the composable interventions paper as support for the qualitative changes happening at low bits.

HuggingFace Discord

-

Anime Models with Illustrious Shine: Members recommended Illustrious, NoobAI XL, RouWei, and Animagine 4.0 for anime generation, pointing to models like Raehoshi-illust-XL-4 and RouWei-0.7.

- Increasing LoRA resources can improve output quality.

- nVidia Eases GPU Usage for Large Models: One member noted that using multiple nVidia GPUs to run one large model is easier with

device_map='auto', while AMD requires more improvisation, and linking to Accelerate documentation.

- Using device_map='auto' lets the framework automatically manage model distribution across available GPUs.

- PolyThink Aims to Eliminate AI Hallucinations: A member spent 7 months building PolyThink, an Agentic multi-model AI system, designed to eliminate AI hallucinations by having multiple AI models correct and collaborate with each other and invites the community to sign up for the waitlist.

- The promise of PolyThink is to improve the reliability and accuracy of AI-generated content through collaborative validation.

- Agents Course Still Plagued by 503 Errors: Multiple users reported encountering a 503 error when starting the Agents Course, particularly with the dummy agent, suggesting it might be due to hitting the API key usage limit.

- Despite users reporting the error, some noted they still had available credits which may indicate traffic issues rather than API limit issues.

- TRNG Claims High Entropy for AI Training: A member built a research-grade True Random Number Generator (TRNG) with an extremely high entropy bit score and wants to test its impact on AI training, with eval info available on GitHub.

- It is hoped that using a TRNG with higher entropy during AI training will improve model performance and randomness.

GPU MODE Discord

-

SYCL Supersedes OpenCL in Computing Platforms?: In GPU MODE's

#generalchannel, members debated that SYCL is getting superseded by OpenCL, discussing the relative merits and future of the two technologies.- An admin responded that there hasn't been enough demand historically to justify an OpenCL channel, though they acknowledged that OpenCL is still current and offers broad compatibility across Intel, AMD, and NVIDIA CPUs/GPUs.

- Matrix Multiplication Fails Speed Expectations: In GPU MODE's

#tritonchannel, a member reported that fp16 matrix multiplication of size 2048x2048 isn’t performing as expected, even lagging behind cuBLAS, despite referencing official tutorial code.

- It was advised that a more realistic benchmarking approach involves stacking like 8 linear layers in a

torch.nn.Sequential, usingtorch.compileor cuda-graphs, and measuring the end-2-end processing time of the model, rather than just a single matmul. - Popcorn CLI Plagued with Problems: In GPU MODE's

#generalchannel, users encountered errors with the CLI tool; one user was prompted to runpopcorn registerfirst when using popcorn and was directed to use Discord or GitHub.

- Another user encountered a Submission error: Server returned status 401 Unauthorized related to an Invalid or unauthorized X-Popcorn-Cli-Id after registration, while a third reported an error decoding response body after authorizing via the web browser following the

popcorn-cli registercommand. - MI300 AMD-FP8-MM Leaderboard Heats Up: Submissions to the

amd-fp8-mmleaderboard on MI300 ranged from 5.24 ms to 791 µs, showcasing a variety of performance levels on the platform, and one submission reaching 255 µs, according to GPU MODE's#submissionschannel.

- One user achieved a personal best on the

amd-fp8-mmleaderboard with 5.20 ms on MI300, demonstrating the continued refinement and optimization of FP8 matrix multiplication performance. - Torch Template Triumphs, Tolerances Tweaked: In GPU MODE's

#amd-competitionchannel, a participant shared an improved template implementation, attached as a message.txt file, that avoids torch headers (requiringno_implicit_headers=True) for faster roundtrip times and configures the right ROCm architecture (gfx942:xnack-).

- Competitors flagged small inaccuracies in kernel outputs, leading to failures with messages like

mismatch found! custom implementation doesn't match reference, resulting in admins relaxing the initial tolerances that were too strict.

LM Studio Discord

-

NVMe SSD Buries SATA SSD in Speed Tests: Users validated that NVMe SSDs outstrip SATA SSDs, hitting speeds of 2000-5000 MiB/s, while SATA crawls at 500 MiB/s.

- The gap widens when loading larger models, according to one member, who noted massive spikes way above the SSD performance due to disk cache and abundant RAM.

- LM Studio Vision Model Implementation Still a Mystery: Members investigated using vision models like qwen2-vl-2b-instruct in LM Studio, linking to image input documentation.

- While some claim success processing images, others report failures; the Llama 4 model has a vision tag in the models metadata, but doesn't support vision in llama.cpp, and Gemma 3 support is uncertain.

- RAG Models Get Plus Treatment: Users noted that RAG models in LM Studio can attach files via the '+' sign in the message prompt in Chat mode.

- A model's information page indicates its RAG capability.

- Granite Model Still Shines for Interactive Chat: Despite being generally written off as low performance for most tasks, one user prefers Granite for general purpose use cases, specifically for an interactive stream-pet type chatbot.

- The user stated that when trying to put it in a more natural context, it feels robotic and Granite is still by far the best performance though.

- AVX Requirement Limits Retro LM Studio: A user with an older server running E5-V2 without AVX2 inquired about using it with LM Studio, but was told that only very old versions of LM Studio support AVX.

- It was suggested to use llama-server-vulkan or find LLMs that still support AVX.

Unsloth AI (Daniel Han) Discord

-

Llama 4 Gets Unslothed: Llama 4 finetuning support arrives at Unsloth this week, supporting both 7B and 14B models.

- To use it, switch to the 7B notebook and change it to 14B to access the new features.

- Custom Tokens Hogging Memory?: Adding custom tokens in Unsloth increases memory usage, requiring users to enable continued pretraining and add layer adapters to the embedding and LM head.

- More info on this technique, see Unsloth documentation.

- MetaAI Spews Hate Speech: A member observed MetaAI generating and then deleting an offensive comment in Facebook Messenger, followed by claiming unavailability.

- The member criticized Meta for prioritizing output streaming over moderation, suggesting moderation should happen server-side like DeepSeek.

- Hallucinations Annihilated by PolyThink: A member announced the waitlist for PolyThink PolyThink waitlist, a multi-model AI system designed to eliminate AI hallucinations by enabling AI models to correct each other.

- Another member likened the system to thinking with all the models and expressed interest in testing it for synthetic data generation to create better datasets.

- Untrained Neural Networks Exhibit Emergent Computation: An article notes that untrained deep neural networks can perform image processing tasks without training, using random weights to filter and extract features from images.

- The technique leverages the inherent structure of neural networks to process data without learning specific patterns from training data, showcasing emergent computation.

Nous Research AI Discord

-

GPT4o Struggles with Autocompletion: Members debated whether GitHub Copilot uses GPT4o for autocompletion, with reports that it hallucinated links and delivered broken code, as documented in these tweets and this other tweet.

- The general sentiment was that GPT4o's performance was on par with other SOTA LLMs despite high expectations for autocompletion tasks.

- Huawei Possibly Challenging Nvidia's Lead: Trump's tariffs on semiconductors might enable Huawei to compete with Nvidia in hardware, potentially dominating the global market, per this tweet and this YouTube video.

- However, some users had mixed experiences with GPT4o-mini-high, noting instances where it zero-shot broken code and failed basic prompts.

- BitNet b1.58 2B 4T Released by Microsoft Research: Microsoft Research introduced BitNet b1.58 2B 4T, a native 1-bit LLM with 2 billion parameters trained on 4 trillion tokens, with its GitHub repository here.

- Users found that leveraging the dedicated C++ implementation (bitnet.cpp) is necessary to achieve the promised efficiency benefits, and the context window is limited to 4k.

- Discord's New Age Verification Fuels Debate: A member shared concerns about Discord's new age verification features being tested in the UK and Australia, referencing this link.

- The core issue is that users are worried about privacy compromises and the potential for further platform-wide changes.

Notebook LM Discord

-

Gemini Pro Aces Accounting Automation: A member utilized Gemini Pro to generate a TOC for a junior accountant's guide on month-end processes, subsequently enriching it with Deep Research and consolidating the findings in a GDoc.

- They incorporated the GDoc into NLM as the primary source, emphasizing its features and soliciting feedback for refinement.

- Vacation Visions Via Google Maps: A member created a vacation itinerary using Notebook LM, archiving points of interest in Google Maps.

- They suggested Notebook LM should ingest saved Google Maps lists as source material.

- Vote, Vote, or Lose the Boat: A member reminded everyone that Webby voting closes TOMORROW and they are losing in 2 out of 3 categories for NotebookLM, urging users to vote and spread the word: https://vote.webbyawards.com/PublicVoting#/2025/ai-immersive-games/ai-apps-experiences-features/technical-achievement.

- The member is concerned they will lose if people don't vote.

- NLM's RAG-tag Diagram Discussions: A member shared two diagrams illustrating a general RAG system and a simplified version in response to a question about RAG, shown here: Vertex_RAG_diagram_b4Csnl2.original.png and Screenshot_2025-04-18_at_01.12.18.png.

- Another user chimed in noting they have a custom RAG setup in Obsidian, and the discussion shifted to response styles.

MCP (Glama) Discord

-

Obsidian MCP Server Surfaces: A member is developing an MCP server for Obsidian and is seeking collaboration on ideas, emphasizing that the main vulnerability lies in orchestration rather than the protocol itself.

- This development aims to streamline integration between Obsidian and external services via a secure, orchestrated interface.

- Securing Cloudflare Workers with SSE API Keys: A member is configuring an MCP server using Cloudflare Workers with Server-Sent Events (SSE) and requested advice on securely passing an apiKey.

- Another member suggested transmitting the apiKey via HTTPS encrypted header rather than URL parameters for enhanced security.

- LLM Tool Understanding Decoded: A member inquired into how an LLM truly understands tools and resources, probing whether it's solely based on prompt explanations or specific training on the MCP spec.

- It was clarified that LLMs understand tools through descriptions, with many models supporting a tool specification or other parameters that define these tools, thus guiding their utilization.

- MCP Server Personality Crisis: A member attempted to define a distinct personality for their MCP server by setting a specific prompt during initialization to dictate response behavior.

- Despite these efforts, the responses from the MCP server remained unchanged, prompting further investigation into effective personality customization techniques.

- HeyGen API Makes its Debut: The product lead for HeyGen API introduced their platform, highlighting its capability to produce engaging videos without requiring a camera.

- HeyGen allows users to create engaging videos without a camera.

Torchtune Discord

-

GRPO Recipe Todo List is Obsolete: The original GRPO recipe todos are obsolete because the new version of GRPO is being prepared in the r1-zero repo.

- The single device recipe will not be added through the r1-zero repo.

- Async GRPO Version in Development: An async version of GRPO is under development in a separate fork and will be brought back to Torchtune soon.

- This update aims to enhance the flexibility and efficiency of GRPO implementations within Torchtune.

- Single GPU GRPO Recipe on the Home Stretch: A single GPU GRPO recipe PR from @f0cus73 is available here and requires finalization.

- This recipe allows users to run GRPO on a single GPU, lowering the hardware requirements for experimentation and development.

- Reward Modeling RFC Incoming: An RFC (Request for Comments) for reward modeling is coming soon, outlining the implementation requirements.

- The community expects the RFC to provide a structured approach to reward modeling, facilitating better integration and standardization within Torchtune.

- Titans Talk Starting...: The Titans Talk is starting in 1 minute for those interested.

- Nevermind!

LlamaIndex Discord

-

LlamaIndex Agents Speak A2A Fluently: LlamaIndex now supports building A2A (Agent2Agent)-compatible agents following the open protocol launched by Google with support from over 50 technology partners.

- This protocol enables AI agents to securely exchange information and coordinate actions, regardless of their underlying infrastructure.

- CondenseQuestionChatEngine Shuns Tools: The CondenseQuestionChatEngine does not support calling tools; the suggestion was made to use an agent instead, according to a member.

- Another member confirmed it was just a suggestion and was not actually implemented.

- Bedrock Converse Prompt Caching Causes Chaos: A member using Anthropic through Bedrock Converse faced issues using prompt caching, encountering an error when adding extra_headers to the llm.acomplete call.

- After removing the extra headers, the error disappeared, but the response lacked the expected fields indicating prompt caching, such as cache_creation_input_tokens.

- Anthropic Class Could Calm Bedrock Caching: It was suggested that the Bedrock Converse integration might need an update to properly support prompt caching, due to differences in how it places the cache point, and that the

Anthropicclass should be used instead.

- The suggestion was based on the member testing with plain Anthropic, and pointed to a Google Colab notebook example as a reference.

Nomic.ai (GPT4All) Discord

-

GPT4All's Hiatus Spurs Doubt: Users on the Discord server express concern about the future of GPT4All, noting that there have been no updates or developer presence for approximately three months.

- One user stated that since one year is not really a big step ... so i have no hopes for a timely update.

- IBM Granite 3.3 Emerges as RAG Alternative: A member highlights IBM's Granite 3.3 with 8 billion parameters as providing accurate, long results for RAG applications, including links to the IBM announcement and Hugging Face page.

- The member also specified that they are using GPT4All for Nomic embed text for local semantic search of programming functions.