[AINews] Gemini 2.0 Flash GA, with new Flash Lite, 2.0 Pro, and Flash Thinking

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

[REDACTED] is all you need.

AI News for 2/4/2025-2/5/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (210 channels, and 5481 messages) for you. Estimated reading time saved (at 200wpm): 571 minutes. You can now tag @smol_ai for AINews discussions!

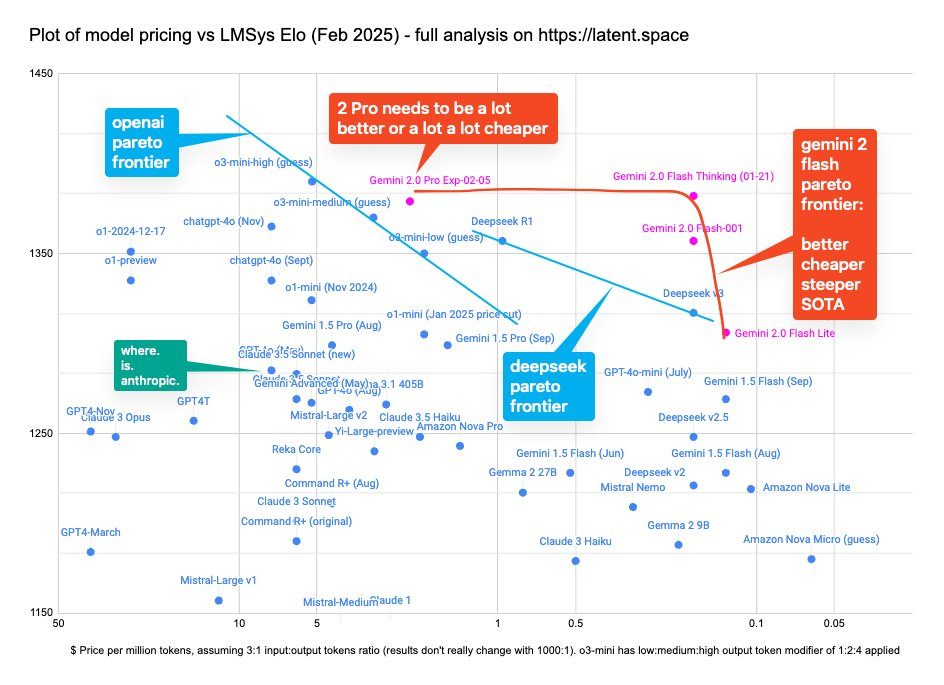

Gemini 2.0 has been "here" since December (our coverage here), but now we can officially count Gemini 2.0 Flash's prices as "real", and put them up on our Pareto frontier chart:

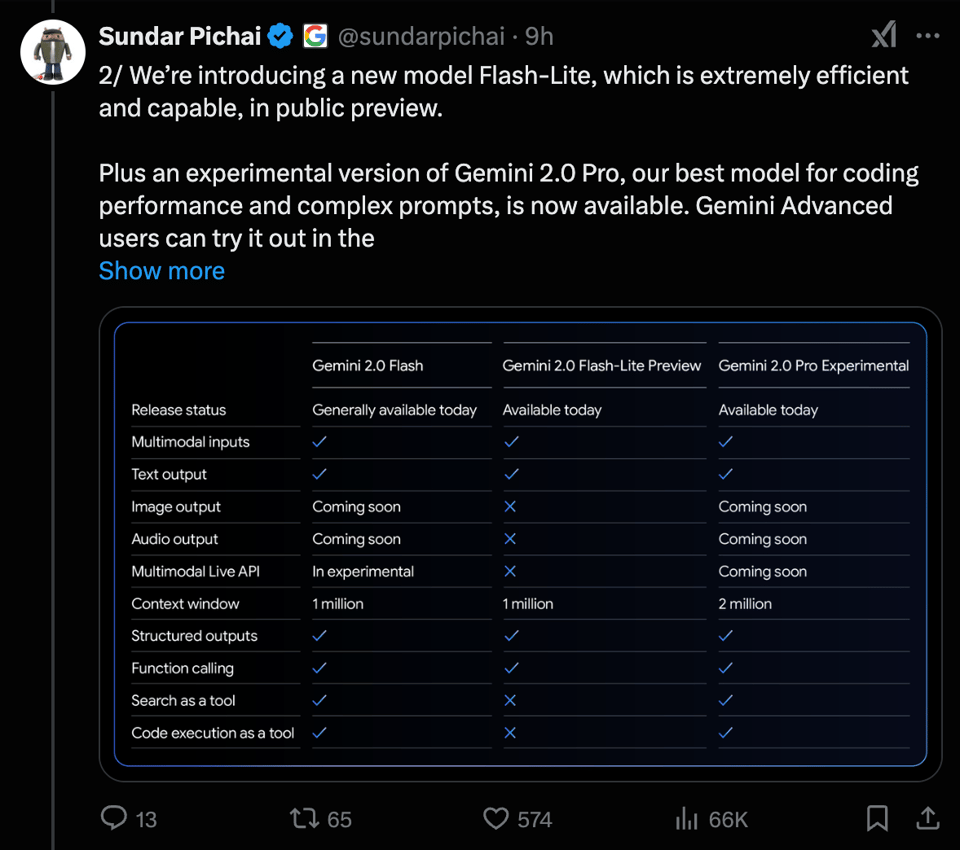

We will grant that raw intelligence charts like those mean increasingly less and will probably die this year because they cannot accurately describe the multimodal input AND output capabilities of these releases, nor coding ability, nor the 1-2m long context, as Sundar Pichai demonstrates:

Of particular note is the cost effectiveness of the new "Flash Lite", as well as the very slight price hike that Gemini 2.0 Flash has vs 1.5 Flash.

Curiously enough, the competitive dynamics of OpenAI "mogging" Google releases seem to have stayed in 2024.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

- Google DeepMind Launches Gemini 2.0 Models including Flash, Flash-Lite, and Pro Experimental: @GoogleDeepMind announced the general availability of Gemini 2.0 Flash, Flash-Lite, and Pro Experimental models. @_philschmid summarized the update, noting that Gemini 2.0 Flash outperforms Gemini 1.5 Pro while being 12x cheaper. The new models offer features like multimodal input, 1 million token context window, and cost-efficiency.

- "Deep Dive into LLMs like ChatGPT" by Andrej Karpathy: @karpathy released a 3h31m YouTube video providing a comprehensive overview of Large Language Models (LLMs), covering stages like pretraining, supervised fine-tuning, and reinforcement learning. He discusses topics such as data, tokenization, Transformer internals, and examples like GPT-2 training and Llama 3.1 base inference.

- Free Course on "How Transformer LLMs Work": @JayAlammar and @MaartenGr, in collaboration with @AndrewYNg, introduced a free course offering a deep dive into Transformer architecture, including topics like tokenizers, embeddings, and mixture-of-expert models. The course aims to help learners understand the inner workings of modern LLMs.

- DeepSeek-R1 Reaches 1.2 Million Downloads: @omarsar0 highlighted that DeepSeek-R1 has been downloaded 1.2 million times from Hugging Face since its launch on January 20. He also conducted a technical deep dive on DeepSeek-R1 using Deep Research, resulting in a 36-page report.

- Anthropic's Increased Rewards for Jailbreak Challenge: @AnthropicAI announced that no one has fully jailbroken their system yet, so they've increased the reward to $10K for the first person to pass all eight levels, and $20K for passing all eight levels with a universal jailbreak. Full details are provided in their announcement. @nearcyan humorously introduced PopTarts: Claude Flavor as a creative reward for their pentesters.

- BlueRaven Extension Hides Twitter Metrics: @nearcyan released an update to BlueRaven, an extension that allows users to browse Twitter with all metrics hidden. This challenges users to engage without influence from popularity metrics. The source code is available with support for Firefox and Chromium.

- Chain-of-Associated-Thoughts (CoAT) Framework Introduced: @omarsar0 discussed a new framework enhancing LLMs' reasoning abilities by combining Monte Carlo Tree Search with dynamic knowledge integration. This approach aims to improve comprehensive and accurate responses for complex reasoning tasks. More details are available in the paper.

- STROM Paper on Synthesis of Topic Outlines: @_philschmid highlighted a paper titled “Synthesis of Topic Outlines through Retrieval and Multi-perspective” (STROM), which proposes a multi-question, iterative research method. It's similar to Gemini Deep Research and OpenAI Deep Research. The paper and GitHub repository are available for those interested.

- Discussions on AI's Impact and Tools: @omarsar0 shared insights on how AI enables individuals to excel in multiple fields simultaneously, emphasizing the importance of learning and tools like ChatGPT and Claude. @abacaj shared a gist showing how to run gsm8k evaluation during GRPO training, extending the GRPOTrainer for custom evaluation.

- Humorous Musings and Test Posts by Nearcyan: @nearcyan conducted test posts on Twitter, observing how his tweets were being deboosted. He contemplated the experience of using Twitter without knowing engagement metrics. Additionally, he humorously expressed feelings about being an iOS developer in today's environment (tweet).

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek VL2 Small Launch and R1's Benchmark Success

- DeepSeek just released an official demo for DeepSeek VL2 Small - It's really powerful at OCR, text extraction and chat use-cases (Hugging Face Space) (Score: 615, Comments: 37): The DeepSeek VL2 Small demo, a 16B MoE model, has been released on Hugging Face, showcasing its capabilities in OCR, text extraction, and chat applications. Vaibhav Srivastav and Zizheng Pan announced the release on X, highlighting its utility in various vision-language tasks.

- Release Timeline & Performance: The DeepSeek VL2 Small model was uploaded to Hugging Face about two months ago, and there is anticipation for a reasoning model to be released this month. Commenters note the model's good performance for its size, though some prefer florence-2-large-ft for specific visual tasks.

- Accessibility & Integration: Discussion includes the utility of vision-language models in navigating websites, especially when accessibility is well-implemented. Users are advised to try the model on a few documents before integrating it into their systems.

- Model Availability & Tools: There is interest in the DeepSeek V3 Lite and the gguf format, with a suggestion to use convert_hf_to_gguf.py from llama.cpp for conversion. The demo link provided by Environmental-Metal9 is here, though some report the demo is not currently working.

- 2B model beats 72B model (Score: 164, Comments: 57): The DeepSeek R1-V project demonstrates that a 2B-parameter model can outperform a 72B-parameter model in vision language tasks, achieving superior effectiveness and out-of-distribution robustness. The model achieved 99% and 81% accuracy in specific out-of-distribution evaluations using only 100 training steps, costing $2.62 and running for 30 minutes on 8 A100 GPUs. The project is fully open-sourced and available here.

- Some commenters express skepticism about the DeepSeek R1-V model's achievements, suggesting that the results might be misleading or overly specific to certain benchmarks. Admirable-Star7088 and Everlier humorously highlight that smaller models can outperform larger ones in niche tasks but emphasize that larger models are generally more versatile.

- Real-Technician831 and iam_wizard discuss the practical implications of using smaller models for specific tasks, noting that this approach can be more compute-efficient for business applications with narrow scopes. They argue that such results should not be surprising, as fine-tuning smaller models for specific tasks is a known strategy.

- The discussion includes a reference to another model, phi-CTNL, which reportedly beats larger models across various benchmarks, as shared by gentlecucumber with a link to arXiv. This adds to the conversation about benchmark-specific performance versus general-purpose capabilities.

- DeepSeek R1 ties o1 for first place on the Generalization Benchmark. (Score: 162, Comments: 23): DeepSeek R1 and o1 tied for first place on the Generalization Benchmark, both achieving an average rank of 1.80. The benchmark tested AI models across 810 cases, with a note that Qwen QwQ failed in 280 cases.

- The Generalization Benchmark tests AI models' ability to infer specific themes from examples, with o3-mini ranking fourth. More details on the benchmark can be found on GitHub.

- Phi 4 ranks high, surpassing Mistral Large 2, Llama 3.3 70b, and Qwen 2.5 72b, and is praised for its reasonable size for self-hosting. Qwen QwQ scored higher but had issues with producing the correct output format.

- o3-mini-high is noted as missing but important for its impact on Livebench results. Additionally, there is a 0.99 correlation between Gemini 1.5 Pro and Gemini 1.5 Flash, indicating similar performance.

Theme 2. Google's AI Policy Shift on Weapons and Surveillance Use

- Google Lifts a Ban on Using Its AI for Weapons and Surveillance (Score: 497, Comments: 126): Google has updated its AI policy to lift a previous ban on using its AI technology for weapons and surveillance. This policy change marks a significant shift in Google's stance on the ethical application of AI.

- Users express significant concern over Google's shift in AI policy, equating it to a moral decline and questioning the ethical implications of using AI for weapons and surveillance. Many comments sarcastically reference Google's former motto, "Don't be evil," suggesting a betrayal of its foundational values.

- Discussions highlight the political and international implications of the policy change, with references to Google's involvement with Israeli military efforts and comparisons to surveillance practices in other countries like China. Concerns about privacy erosion and the potential for AI to be misused in global conflicts are prevalent.

- Several comments critique the corporate motivations behind the policy change, suggesting that shareholder interests often outweigh ethical considerations. The phrase "do the right thing" is criticized as being vague and potentially self-serving, prioritizing corporate gains over societal good.

Theme 3. Gemma 3 Announcement and Community Reactions

- Gemma 3 on the way! (Score: 403, Comments: 42): Omar Sanseviero teases an update on "Gemma" in a tweet, engaging the r/LocalLLama community. The accompanying screenshot highlights features of "Gemini," including "2.0 Flash," "2.0 Flash Thinking Experimental," and "Gemini Advanced," suggesting active development on Gemini rather than Gemma 3.

- Commenters express a strong desire for larger context sizes, with mentions of 64k and 128k as preferred targets for future models like Gemma 3. The current 8k context size is considered inadequate by some users.

- Some users highlight the success and preference for Gemma 2, with specific praise for the 9b simpo model's media knowledge capabilities, and express anticipation for Gemma 3 with enhanced features or even AGI capabilities.

- Discussions also reflect on the community engagement aspect of Reddit, likening it to the early 2010s when researchers and developers had direct interactions with users, illustrating the platform's role in fostering discussions about AI advancements.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Nvidia's CUDA Strategy: Catalyst to AI's Evolution

- Has Jensen Huang ever acknowledged that Nvidia just kinda lucked into AI? (Score: 153, Comments: 92): Nvidia originally aimed to enhance graphics rendering but inadvertently developed technology that became crucial for training neural networks. This accidental success significantly contributed to Jensen Huang becoming one of the wealthiest individuals in history.

- The consensus among commenters is that Nvidia's success in AI was not due to luck but rather strategic foresight and long-term investment, particularly with the development of CUDA starting in 2006/2007. This strategic move allowed Nvidia to build a robust developer ecosystem that has been crucial in their dominance in AI and scientific computing.

- CUDA's early adoption was initially met with skepticism, as noted by users recalling its reception 10-20 years ago. Despite this, Nvidia persisted, enabling them to capitalize on the AI boom following the publication of the AlexNet paper in 2011, which utilized Nvidia GPUs and highlighted their strategic advantage.

- Nvidia's investment extends beyond AI into various markets, including cryptocurrency, biotechnology, and autonomous systems. Commenters note Nvidia's involvement in diverse applications such as ray tracing, robotics, and even military technology, showcasing their commitment to broadening the use of their GPUs across industries.

Theme 2. ByteDance and Google Advance AI Frontiers

- New ByteDance multimodal AI research (Score: 251, Comments: 26): The post mentions ByteDance's new research in multi-modal AI, though specific details are not provided in the text. The post includes a video which is not analyzed here.

- Audio-Visual Matching: Discussions highlight the capability of multi-modal AI to match any audio to visuals, exemplified by the use of an American accent with a visual of Einstein, which is intentionally mismatched to demonstrate the technology's potential.

- Source and Content Authenticity: A link to the source is provided at omnihuman-lab.github.io, and users critique the mismatch between the AI-generated content and historical representations, noting that the AI portrayal made Einstein appear "neurotypical."

- Audio Source: The audio used in the demonstration, which led to confusion about the accent, is identified as originating from a TEDx talk (source).

- Google claims to achieve World's Best AI ; & giving to users for FREE ! (Score: 203, Comments: 56): Google claims to have developed the world's best AI and is offering it to users for free. Further details, including the specific capabilities or applications of this AI, were not provided in the post.

- Discussions about Google's Gemini AI reveal mixed opinions, with some users expressing skepticism about its capabilities, particularly in areas like pest elimination advice, while others find it impressive for tasks like coding and using the AI Studio. Gemini 2.0 shows limitations compared to its predecessor, prompting some users to revert to the older version for specific functionalities.

- Users debate the performance of coding models, with some models like o1 and o3-mini being praised for generating extensive code efficiently, unlike others that struggle beyond 100 lines. There are comments emphasizing the advanced reasoning capabilities of these models, highlighting their impact on programming tasks.

- The Sonnet model stands out in discussions for its performance in the lmsys webdev arena, with users noting its superiority over other models despite its smaller size. There's a debate on whether models like Sonnet should be compared to those focused on inference scaling, with some attributing competitive advantages to new methodologies.

Theme 3. Debating Open Source in AI: A Look at DeepSeek and More

- DeepSeek corrects itself regarding their "fully open source" claim (Score: 126, Comments: 36): DeepSeek issued a "Terminology Correction" about their DeepSeek-R1, clarifying that while their code and models are released under the MIT License, they are not "fully open-source" as previously claimed. The tweet announcing this correction has received significant engagement with 186 likes, 39 retweets, and 324 replies, and has been viewed 27,000 times.

- Many commenters argue that while the MIT License is often associated with open-source, DeepSeek's claim is misleading because they did not release the source code or training data. This distinction is crucial in determining whether something is truly open-source, as having access to the source code is a fundamental requirement.

- coder543 emphasizes that Llama models and similar projects are not fully open-source either, as they lack detailed dataset descriptions and training code. This highlights a broader issue in the AI community where models are released with weights but without sufficient information or resources to replicate the training process.

- The discussion highlights a misunderstanding or misuse of the term "open-source" when applied to AI models and software. Some users clarify that licensing under MIT doesn't inherently make something open-source unless the actual source code is provided, illustrating the difference between licensing and actual openness.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. Gemini 2.0 Model Family: Performance and Integration

- Flash 2.0 Zips onto Windsurf and Perplexity: Gemini 2.0 Flash is live on Windsurf and Perplexity AI, touted for its speed and efficiency in coding queries, consuming only 0.25 user prompt credits on Windsurf. While praised for speed, users note its tool calling is limited and its reliability is under scrutiny in comparison to models like Claude and DeepSeek.

- Pro Experimental Benchmarks Challenge Claude 3.5 Sonnet: Gemini 2.0 Pro Experimental is benchmarking comparably to Claude 3.5 Sonnet in coding and complex prompts, securing the #1 spot on lmarena.ai's leaderboard. However, users observe inconsistencies in API responses and a possible reduction in long context capabilities compared to Gemini 1.5 Pro, despite the advertised 2 million token context.

- GitHub Copilot Embraces Gemini 2.0 Flash for Developers: GitHub announced Gemini 2.0 Flash integration for all Copilot users, making it accessible in the model selector within code editors and Copilot Chat on GitHub. This move signifies a major win for Gemini within the Microsoft ecosystem, positioning it ahead of competitors in developer tool integration.

Theme 2. Coding IDEs and AI Assistants: Feature Comparisons and User Feedback

- Cursor IDE Supercharged with MCP Server Integration: Cursor IDE now supports MCP server integration, enabling users to leverage Perplexity and other tools directly within the IDE, as demonstrated in a YouTube tutorial. This enhancement allows for complex workflows and customized AI assistance, with easy setup via a provided GitHub repository.

- Codeium Plugin for JetBrains Faces User Stability Woes: Users are reporting significant instability with the Codeium JetBrains plugin, citing frequent unresponsiveness and the need for restarts, pushing some back to using Copilot. A user plea, 'Please give the Jetbrains plugin some love', highlights the community's demand for a more reliable plugin experience.

- Windsurf Next Beta Aims to Outpace Cursor, But Credits Cause Pain: Windsurf Next Beta is launched to preview innovative features, but users are struggling with credit allocation, particularly flex credits, leading to workflow disruptions. Comparisons with Cursor highlight Cursor's advantage in third-party tool and extension flexibility, suggesting Windsurf could enhance its value by adopting similar functionalities.

Theme 3. Advanced Model Training and Optimization Techniques

- Unsloth Unveils Dynamic 4-bit Quantization for Accuracy Boost: Unsloth introduced Dynamic 4-bit Quantization to improve model accuracy while maintaining VRAM efficiency by selectively quantizing parameters. This method enhances the performance of models like DeepSeek and Llama compared to standard quantization techniques, offering a nuanced approach to model compression.

- Ladder-Residual Architecture Supercharges Llama 70B on Torchtune: Ladder-residual modification accelerates the 70B Llama model by ~30% on multi-GPU setups with tensor parallelism when used within Torchtune. This enhancement, developed at TogetherCompute, marks a significant stride in distributed model training efficiency.

- Harmonic Loss Challenges Cross-Entropy for Neural Networks: A new paper introduces harmonic loss as an alternative to standard cross-entropy loss, claiming improved interpretability and faster convergence in neural networks and LLMs. While some express skepticism about its novelty, others see potential in its ability to shift optimization targets and improve model training dynamics.

Theme 4. Open Source and Community in AI Development

- Mistral AI Rebrands, Doubles Down on Open Source: Mistral AI launched a redesigned website, emphasizing their commitment to open models and customizable AI solutions for enterprise deployment. The rebranding signals a focus on transparency and community engagement, reinforcing their position as a leading contributor to open-source AI.

- GPT4All v3.9.0 Arrives with LocalDocs and Model Expansion: GPT4All v3.9.0 is released featuring LocalDocs functionality, bug fixes, and support for new models like OLMoE and Granite MoE. This update enhances the usability and versatility of the open-source local LLM platform.

- Stability.ai Appoints Chief Community Guy to Boost Engagement: Stability.ai introduced Maxfield as their new Chief Community Guy, acknowledging engagement from Stability has been lackluster lately and promising to boost interaction. Maxfield plans to implement a feature request board and increase transparency from Stability's researchers to better align development with community needs.

Theme 5. Reasoning Model Benchmarks and Performance Analysis

- DeepSeek R1 Nitro Claims Speedy Uptime on OpenRouter: DeepSeek R1 Nitro boasts 97% request completion on OpenRouter, demonstrating improved uptime and speed for users leveraging the API. OpenRouter encourages users to try it out for enhanced performance.

- DeepSeek R1 Said to Rival OpenAI's Reasoning Prowess: Discussions highlight DeepSeek R1 as a strong open-source competitor to OpenAI's O1 reasoning model, offering comparable capabilities with open weights. Members note its accessibility for local execution and its impressive performance in reasoning tasks.

- Flux Outperforms Emu3 in Image Generation Speed on Hugging Face L40S: Flux generated a 1024x1024 image in 30 seconds on Huggingface L40S using flash-attention, significantly outpacing Emu3's ~600 seconds for a smaller 720x720 image. This speed disparity raises questions about Emu3's efficiency relative to single-modal models, despite similar parameter counts.

PART 1: High level Discord summaries

aider (Paul Gauthier) Discord

- Aider Manages Coding Errors: Users are leveraging Aider to manage errors in large projects and refactor code with

/runcommand for autonomous issue resolution, as well as adding files for diagnosis and resolution, as detailed in the Linting and Testing docs.- The community discussed using Aider for automatic code modification, streamlining workflows, and automating coding tasks.

- Claude Beats R1 in Coding: Comparisons of O3 Mini, R1, and Claude models reveal varying success rates in coding tasks, with some users suggesting Claude edges out R1 in specific scenarios, according to this tweet.

- Users expressed frustration over model accuracy limitations while considering the potential integration of tools like DeepClaude with OpenRouter.

- LLMs Struggle with Rust: The community acknowledged that LLMs, despite progress, still falter with complex tasks, especially in languages like Rust, struggling with deeper reasoning and multi-step solutions.

- While LLMs shine on simpler tasks, challenges persist in achieving satisfactory outcomes with more intricate problems.

- Aider Commits Gibberish: Users reported getting commit messages full of

- The discussion included suggestions to use

--weak-model something-elseto avoid these tokens, indicating issues stemming from the interaction between Aider and different API providers.

- The discussion included suggestions to use

- Gemini 2.0 now on LMSYS: Gemini 2.0 is now available on lmarena.ai, making it available for broader comparisons.

- The community will likely be evaluating it for integration into existing workflows.

Unsloth AI (Daniel Han) Discord

- Dynamic Quantization Boosts Accuracy: Unsloth introduced Dynamic 4-bit Quantization to selectively quantize parameters, improving accuracy while maintaining VRAM efficiency, as detailed in their blog post.

- This approach aims to enhance the performance of models like DeepSeek and Llama compared to standard quantization techniques.

- GRPO Integration Anticipated for Enhanced Training: Unsloth is actively integrating GRPO training to streamline and enhance model fine-tuning, promising a more efficient training process, detailed in this github issue.

- Enthusiasm was expressed in anticipation of GRPO support, although there was an acknowledgement that there might be some kinks to iron out, suggesting that implementation might take some time.

- DeepSeek Challenges on Oobagooba Unveiled: Users encountered issues running the DeepSeek model locally in Oobagooba, often due to incorrect model weight configurations. According to Unsloth Documentation, members advised to use the flag --enforce-eager to prevent failures during model loading.

- Optimization suggestions included ensuring the use of the --enforce-eager flag to prevent model loading failures.

- CPT Model Shows Impressive Perplexity Scores: The CPT with Unsloth model showed major improvements in Perplexity (PPL), with base model scores around 200 dropping to around 80, which was met with enthusiasm.

- Members suggested that the DeepSeek model is very old, indicating a need for updated versions and a more interesting dataset, especially for math versions of the model.

- LLM Model Reassembly Frustrations: A member reported issues with importing a layer incorrectly while trying to reassemble an LLM model in a PyTorch neural network, leading to gibberish output and seeking assistance in optimizing model efficiency.

- They sought advice on understanding where efficiency could be improved in different parts of the model to fix the gibberish output problem.

Codeium (Windsurf) Discord

- Gemini 2.0 Flash Debuts on Windsurf: Gemini 2.0 Flash is now live on Windsurf, consuming only 0.25 user prompt credits and 0.25 flow action credits per tool call, noted for its speed in coding inquiries.

- Despite its efficiency, users observed it had limited tool calling ability, according to Windsurf's announcement.

- Windsurf Next Beta Launches: The Windsurf Next Beta version is available for download here, allowing users to test innovative features and improvements for AI in software development.

- It requires at least OS X Yosemite, Ubuntu 20.04, or Windows 10 (64-bit), as detailed in the Windsurf Next Launch blog post.

- Users Report Codeium Plugin Struggles: Multiple users cited problems with the Codeium JetBrains plugin, describing frequent unresponsiveness and the need for restarts to maintain functionality, causing some to revert to Copilot.

- A user pleaded, 'Please give the Jetbrains plugin some love' to enhance its stability, highlighting the community's need for a reliable tool.

- Windsurf Credit Allocation Irks Users: Users are facing issues with credit allocation in Windsurf, particularly with flex credits, which leads to limited functionality, with one user having contacted support multiple times.

- The discussions underscored user frustration with the system's reliability, affecting critical workflows.

- Windsurf Lags Behind Cursor: Users pointed out Cursor's advantage over Windsurf due to its flexibility in installing third-party tools and extensions.

- They suggested Windsurf could enhance its value by allowing similar functionalities, especially concerning moving third-party apps within the IDE.

Stability.ai (Stable Diffusion) Discord

- Stability Gets Community Guy: Stability.ai introduced Maxfield as the new Chief Community Guy, highlighting his involvement with Stable Diffusion since November 2022, acknowledging that engagement from Stability has been lackluster lately.

- Maxfield plans to boost engagement through a feature request board for community suggestions and increased transparency from Stability's researchers and creators, stating, what's the point of all this compute if we're not building stuff you want?

- Diffusion Model Nesting Explored: Discussions in the general-chat channel revealed interest in nested AI architectures, where a diffusion model operates within the latent space of another model, though a compatible VAE is essential.

- Users sought papers that explore this concept, but few links were shared.

- Training Models Proves Tricky: Users report experiencing challenges when training models like LoRA, noting default settings often outperform complex adjustments, referencing NeuralNotW0rk/LoRAW.

- The complexity of evolving architectures leaves some users eager for more streamlined and user-friendly tools to work in latent space effectively.

- Future AI Models Spark Excitement: The community speculated on future multimodal models, expressing enthusiasm for tools that merge text and image generation capabilities, perhaps something like PurpleSmartAI.

- There’s interest in developing new models that enhance creative uses like video game development through intuitive interfaces, and a hackathon around that concept Multimodal AI Agents - Hackathon · Luma.

- Users Battle Discord Spam: The general-chat channel experienced a spam incident, with users promptly reporting the message and advocating for moderation action.

- The community demonstrated a collective effort to uphold channel integrity by flagging unrelated promotional posts.

Cursor IDE Discord

- Cursor Adds MCP Server Integration: Cursor IDE now supports MCP server integration, enabling users to utilize Perplexity for assistance via commands and easy setup using a provided GitHub repository.

- A user demonstrated how to Build Cursor on Steroids with MCP in this Youtube video with enhanced functionalities.

- Gemini 2.0 Pro Coding Skills Questioned: Users criticized the Gemini 2.0 Pro model for struggling with coding tasks despite good data analysis performance, according to lmarena.ai.

- Benchmarks show that Gemini 2.0 Pro lags behind O3-mini high and Sonnet for coding tasks even though it is decent for random tasks; check out a comparison on HuggingFace.

- Coders Use Voice Dictation: Discussants explored voice dictation tools, referencing Andrej Karpathy's coding dictation method using Whisper technology, even as the accuracy of Windows' built-in dictation feature could use improvements.

- Customizing voice interfacing for coding sparked interest, with the goal of improving speed and accuracy.

- Mobile Cursor Debated: A user proposed developing an iPhone app for Cursor to facilitate coding and prompting on the go, however the consensus indicated that current frameworks may not justify the development effort.

- The community weighed the practicality of developing a mobile version of Cursor, pointing out that the advantage might not outweigh development costs.

Perplexity AI Discord

- Perplexity AI's UI Changes Irk Users: Users voiced frustrations about recent UI changes in Perplexity AI, particularly the removal of focus modes and slower performance.

- Some users are having trouble accessing models like Claude 3.5 Sonnet, with automatic activation of R1 or o3-mini in Pro Search mode.

- Gemini 2.0 Flash Enters the Scene: Gemini 2.0 Flash was released to all Pro users, marking the first time a Gemini model is on Perplexity since earlier versions, as noted in Aravind Srinivas's tweet.

- Users are curious about context limits and capabilities compared to previous models and its availability in the current app interface.

- Model Access Limitations Cause Confusion: Pro users reported inconsistent access to models, with some unable to use Gemini or access desired models despite their subscription, and pointed to the Perplexity status page to debug.

- Disparities in user experience were noted, with some finding the limitations unnecessary and others still adjusting to new functionalities across platforms.

- Sonar Reasoning Pro Rides DeepSeek R1: A member clarified that Sonar Reasoning Pro operates on DeepSeek R1, confirmed on their website.

- This realization was new to some members unaware of the underlying models.

- US Iron Dome Proposed Amid Secession Bids: A member shared a video discussing Trump's proposal for a US Iron Dome alongside ongoing political developments, including a California secession bid.

- The discussion considers the implications of such military strategies on national security and local governance.

Eleuther Discord

- Harmonic Loss Creates Optimism: A paper introducing harmonic loss as an alternative to standard cross-entropy loss for neural networks surfaced, with claims of improved interpretability and faster convergence as discussed on Twitter.

- While some expressed skepticism about its novelty, others pointed out its potential to shift optimization targets; it led to discussion on stability and activation interactions during model training.

- VideoJAM Generates Motion: Hila Chefer introduced the VideoJAM framework, designed to enhance motion generation by directly addressing the challenges video generators face with motion representation without extra data, as discussed on Twitter and on the project's website.

- It is intended to directly address the challenges video generators face with motion representation without needing extra data, and is expected to improve the dynamics of motion generation in video content.

- GAS Revs Up TPS Over Checkpointing: Training without activation checkpointing and using GAS significantly increases TPS, with a comparison showing 242K TPS for batch size 8 with GAS versus 202K TPS for batch size 48 with checkpointing.

- Despite different effective batch sizes, the smaller batch size with GAS shows a faster convergence, though a noted lower HFU/MFU could be a concern but isn't prioritized if TPS improves.

OpenRouter (Alex Atallah) Discord

- DeepSeek R1 Nitro gets Speedy: The DeepSeek R1 Nitro has demonstrated better uptime and speed, with 97% request completion.

- According to OpenRouterAI, users are now encouraged to try it out.

- OpenRouter Bumps Back Online: Users reported API issues and rate limit errors, sparking concerns about service reliability, however service returned right away after reverting a recent change.

- Toven confirmed the downtime and announced the fix, reassuring users of the service's restored functionality.

- Anthropic API Gets Rate Limited: Users are running into rate limit errors when using the API, particularly with Anthropic, which has a limit of 20 million input tokens per minute.

- Louisgv mentioned reaching out to Anthropic for a potential rate limit increase to resolve these restrictions.

- Gemini 2.0: A Gemini in the Rough?: Xiaoqianwx sparked a discussion about expectations for Gemini 2.0, and how stronger models may be needed to compete effectively.

- The community is largely disappointed in its performance and is actively discussing the model's strengths and weaknesses.

- Price Controls are Coming to OpenRouter: Users inquired about potential price controls for API usage, specifically regarding cost variations across providers.

- Toven introduced a new

max_priceparameter for controlling spending limits on API calls, currently live without full documentation.

- Toven introduced a new

Interconnects (Nathan Lambert) Discord

- Gemini 2.0 Debuts with Flash and Experimental Pro: Benchmarking shows Gemini 2.0 Pro Experimental performs comparably to Claude 3.5 Sonnet, though inconsistencies in API responses are noted, while Gemini 2.0 Flash is integrated into GitHub Copilot for all users as a new tool for developers, giving Gemini significant traction within the Microsoft ecosystem ahead of competitors.

- Some users feel that while the Flash models outperform Gemini 1.5 Pro, long context capabilities seem diminished, and they find naming conventions for AI models to lack creativity and clarity (DeepMind Tweet, GitHub Tweet).

- Mistral Rebrands with Open Source Focus: Mistral launched a redesigned website (Mistral AI) showcasing their open models aimed at customizing AI solutions, emphasizing transparency and enterprise-grade deployment options with a new cat logo.

- The company balances its image as a leading independent AI lab with a playful design approach.

- Softbank's AGI Dream Team: Discussion surrounds the necessity for companies to explore every avenue for AGI delivery to Softbank within two years, as the company expects $100B in revenue.

- The community considers whether this is a realistic timeline.

- DeepSeek R1 Debuts Amidst Scrutiny: On January 20th, 2025, DeepSeek released their open-weights reasoning model, DeepSeek-R1, spurring debate around the validity of its training costs as published in this Gradient Updates issue.

- The model's architecture is similar to DeepSeek v3, resulting in discussions about its performance and pricing.

- Karpathy Goes Vibe Coding: Andrej Karpathy introduced the concept of 'vibe coding', embracing LLMs like Cursor Composer and bypassing traditional coding, where he states he rarely reads diffs anymore

- He adds, 'When I get error messages I just copy paste them in with no comment,' as seen in this tweet.

LM Studio Discord

- LM Studio Has Low VRAM Woes: Users shared challenges running LM Studio on older CPUs and GPUs, particularly with lower VRAM graphics cards like the RX 580, which led to performance limitations.

- Some users suggested compiling llama.cpp without AVX support to enhance performance on older systems.

- Qwen 2.5 Recommended for Coding: The Qwen 2.5 model received recommendations for users needing coding task support, especially for those with specific hardware configurations.

- Users voiced model preferences based on local installation performance and usability.

- Vulkan Support Sees Mixed Results: Enabling Vulkan support for improved GPU utilization in llama.cpp necessitates specific build configurations, stirring discussion about the nuances of LM Studio.

- Shared resources highlighted the setup requirements for compiling with Vulkan.

- GPT-Researcher Plagues LM Studio: Users integrating GPT-Researcher with LM Studio reported encountering errors with model loading and embedding requests.

- Specifically, a 404 error indicated that no models were loaded, halting integration attempts.

- Rising GPU Prices Inflate: Concerns rose regarding GPU prices on platforms like eBay and Mercari, which are now considered appreciating assets due to high demand.

- The inflated prices of components, including the Jetson board, are being influenced by scalpers.

HuggingFace Discord

- DeepSeek Outperforms Declining ChatGPT: Users reported DeepSeek is providing better answers and has a more engaging chain of thought methodology than ChatGPT, however access is limited due to high traffic.

- Interest was expressed in DeepSeek's thinking process, emphasizing its chain of thought methodology as more engaging than traditional AI responses.

- TinyRAG Simplifies RAG Systems: The TinyRAG project streamlines RAG implementations using llama-cpp-python and sqlite-vec for ranking, querying, and generating LLM answers.

- This initiative offers developers and researchers a simplified approach to deploying retrieval-augmented generation systems.

- Distance-Based Learning Paper Published: A new paper, Distance-Based Learning in Neural Networks (arXiv), introduces a geometric framework and the OffsetL2 architecture.

- The research highlights the impact of distance-based representations on model performance, contrasting it with intensity-based approaches.

- Agents Course Kicks off Next Week: The Agents Course is launching next Monday, featuring new channels for updates, questions, and project showcases.

- Enthusiasm builds with sneak peek into first unit's table of contents; but some members expressed concern about lacking basic Python skills needed for the course.

- HuggingFace Needs Updated NLP Course: Members requested an updated NLP course from Hugging Face as the existing course lacks coverage of LLMs which are crucial in today's NLP frameworks.

- This gap has prompted suggestions for more comprehensive training material to address emerging trends in the field.

Yannick Kilcher Discord

- NURBS challenge Meshes for Simulations: NURBS (Non-Uniform Rational B-Splines) offer parametric representations suitable for dynamic simulations, contrasting with the increasing inefficiency of traditional meshes, and modern procedural shaders help with texturing issues.

- Members noted a shift in industry standards towards dynamic models and advanced techniques like NURBS and SubDs, moving away from the limitations of static mesh methods in dynamic applications.

- Gemini 2.0 Updates with Flash and Lite Editions: Google released updated Gemini 2.0 Flash in the Gemini API and Google AI Studio, emphasizing low latency and enhanced performance over previous versions like Flash Thinking.

- Feedback on the new Flash Lite model indicates issues with returning structured output, with users reporting problems in generating valid JSON responses.

- Engineer Built Viral ChatGPT Sentry Gun: OpenAI cut API access to an engineer, sts_3d, after a viral video showcased an AI-controlled motorized sentry gun, prompting concerns about the weaponization of AI.

- The rapid progression of the engineer's projects highlights the potential risks associated with evolving AI applications.

- Researchers crack Affordable AI Reasoning Models: Researchers developed the s1 reasoning model, achieving capabilities similar to OpenAI's models for under $50 in cloud compute credits, marking a significant reduction in costs [TechCrunch Article].

- The model utilizes a distillation approach, extracting reasoning capabilities from Google's Gemini 2.0, thus illustrating the trend towards more accessible AI technologies.

- Harmonic Loss Paper Draws Mixed Reviews: The Harmonic Loss paper introduces a faster convergence model, but the model has not demonstrated significant performance improvements, leading to debates on its practicality.

- While some consider the paper 'jank,' its brevity is seen as valuable, especially with additional insights available on its GitHub repository.

OpenAI Discord

- DeepSeek Data Practices Probed: Concerns arose over DeepSeek potentially sending data to China, as highlighted in a YouTube video, citing servers based in China as the cause.

- The discussion underscored the importance of data governance and regulatory standards in AI development, with users pointing out the implications of data residency.

- ChatGPT's Reasoning Quirks: Users observed ChatGPT 4o exhibiting unpredictable behavior such as providing reasoning in multiple languages despite receiving English prompts.

- These reports triggered discussions around the model's current limitations, as well as the need for refining the consistency and clarity of AI-generated outputs.

- Gemini 2.0 Token Context Impresses: Gemini 2.0's offering of a 2 million token context and free API access has piqued the interest of developers, eager to explore the expansive capabilities.

- While some users acknowledged the significance of automation facilitated by Gemini 2.0, others commented on the AI's verbosity, making them read too much.

- Users Crafting Rhetorical Prompt: A member detailed a prompt for generating a persuasive argument on why Coca-Cola is best enjoyed with a hot dog, incorporating advanced rhetorical techniques like Antimetabole and Chiasmus.

- The prompt structure included sections for justifying the argument, providing examples, and addressing counterarguments, aiming for a cohesive and impactful conclusion.

- Sprite Sheet Template Inquiry: A user sought advice on refining a prompt template to produce consistent cartoon-style sprite sheets, concentrating on character and animation frame layout.

- Despite specifying character design and dimensions, images were not aligning as expected, thus the user's request for optimization.

Nous Research AI Discord

- DeepSeek Rivals OpenAI in Reasoning: Discussion highlighted how DeepSeek R1 is said to rival OpenAI's O1 reasoning model while being fully open-source, and can be run effectively.

- Members noted the impressive capabilities of newer models like Gemini for performing mathematical tasks and how complexities in branding can confuse users; see Gemini 2.0 is now available to everyone.

- AI's Crypto Connection?: A member speculates if the AI backlash is linked to the fallout from NFT and crypto controversies of 2020-21.

- They referenced Why Everyone Is Suddenly Mad at AI and its implications for the perception of AI, connecting it with past tech hypes.

- DeepResearch Receives Rave Reviews: Users are enthusiastic about OpenAI's DeepResearch feature, praising its performance and ability to efficiently retrieve obscure information, as demonstrated in this tweet.

- Members discussed enriching results with knowledge graphs to enhance fact-checking and research accuracy.

- Liger Kernel Gains GRPO Chunked Loss: A recent pull request adds the GRPO chunked loss to the Liger Kernel, addressing issue #548.

- Developers can run make test, make checkstyle, and make test-convergence for testing correctness and code style.

- Infra Teams Under-Acknowledged?: Members noted that many pretraining papers have a plethora of authors due to the need to credit the hardware infrastructure team.

- It was highlighted that the infra team endures challenges so that research scientists can focus on their work without distractions - Infra people suffer so that the research scientists don't have to.

Modular (Mojo 🔥) Discord

- Mojo Compiler Goes Closed Source: The Mojo compiler transitioned to closed source, driven by the need to manage rapid changes, according to a team member.

- Compiler enthusiasts are eager to access the inner workings, especially the custom lowering passes in MLIR, but will have to wait until the end of 2026 according to this video.

- Mojo Aims for Open Source in Q4 2025: Modular aims to open source the Mojo compiler by Q4 of next year, according to a team member, though there are hopes for an earlier release.

- There are currently no plans to release individual dialects or passes in MLIR before the full compiler is open-sourced, dashing hopes for compiler nerds.

- Mojo's Standard Library Faces Design Choices: Debate arose around whether the Mojo standard library should evolve into a general-purpose library with features like web servers and JSON parsing.

- Concerns were voiced about the complexity of supporting a wide range of use cases, raising the entry bar for contributing new features to the

stdlib, as curated in this Github repo.

- Concerns were voiced about the complexity of supporting a wide range of use cases, raising the entry bar for contributing new features to the

- Async Functions Spark Discussion: Handling of async functions in Mojo is under discussion, with proposals for new syntax to improve clarity and enable performance optimization, as shown in this proposal.

- Participants raised concerns about the complexity of maintaining separate async and sync libraries and the implications for usability across different versions of functionality.

Notebook LM Discord

- Legal AI Automates Drafting: AI is now used to automate the drafting of repetitive legal documents, using templates from previous cases as sources, making the process more efficient as members are finding AI useful.

- One attorney reports the AI is reliable and provides clear sourcing, particularly for similar cases or mass litigation.

- Avatars Elevate Contract Review: Members are experimenting with avatars in contract review to make the redlining analysis more engaging, as demonstrated in a YouTube video.

- The addition of avatars is intended to differentiate the product and effectively support client teams.

- NotebookLM Plus Activation Issues Arise: Google Workspace admins are facing issues activating NotebookLM Plus, requiring a Business Standard license or higher to access premium features, according to Google Support.

- Resources have been shared to help admins enable and manage user access, with a focus on understanding the specific requirements and licenses needed.

- Spreadsheet Integration Still Faces Challenges: Users express concerns about NotebookLM's effectiveness in analyzing tabular data from spreadsheets, as stated in the general channel, suggesting that Gemini might be more suitable for complex data tasks.

- Discussion revolves around best practices for uploading spreadsheets and the limitations in data recognition capabilities.

Torchtune Discord

- Torchtune Trounces Unsloth in Memory Management: Users reported Torchtune handles fine-tuning without the CUDA memory issues seen in Unsloth on a 12GB 4070 card.

- The tool, unless pushed with excessive batch sizes, avoids running into the same memory issues.

- Ladder-Residual Rockets Llama's Speed: The Ladder-residual modification accelerates the 70B Llama model by ~30% on multiple GPUs with tensor parallelism, according to @zhang_muru's work at TogetherCompute.

- This enhancement involved co-authorship from @MayankMish98 and mentoring by @ben_athi, marking a notable advancement in distributed model training.

- Kolo Kicks off Torchtune Integration: The Kolo Docker tool now provides official support for Torchtune, streamlining local model training and testing for newcomers, project link.

- The Kolo Docker tool, created by MaxHastings, is intended to facilitate LLM training and testing using a range of tools within a single environment.

- Tune Lab UI Tuned for Torchtune: A member is developing Tune Lab, a FastAPI and Next.js interface for Torchtune, which is using modern UI components to enhance user experience, Tune Lab repo.

- The project aims to integrate both pre-built and custom scripts, inviting users to contribute to its development.

- GRPO Gives Training a Giant Boost: Significant success was achieved with a GRPO implementation, enhancing training performance from 10% to 40% on GSM8k, as reported by a member. See related issue

- The implementation involved resolving debugging challenges related to deadlocks and memory issues, with plans to refactor the code for community use.

Latent Space Discord

- OpenAI Plans SWE Agent: OpenAI plans to release a new SWE Agent by end of Q1 or mid Q2, powered by O3 and O3 Pro for enterprises, according to a tweet.

- This agent is anticipated to significantly impact the software industry, purportedly competing with mid-level engineers, spotted in a live stream.

- OmniHuman Generates Avatar Videos: The new OmniHuman video research project generates realistic avatar videos from a single image and audio, without aspect ratio limitations, according to a tweet.

- Praised as a breakthrough, the project has left viewers gobsmacked by its level of detail.

- Figure AI Splits from OpenAI: Figure AI exited its collaboration agreement with OpenAI to focus on in-house AI tech after a reported breakthrough, according to a tweet.

- The founder hinted at showcasing something no one has ever seen on a humanoid within 30 days, per TechCrunch.

- Gemini 2.0 Flash Goes GA: Google announced that Gemini 2.0 Flash is now generally available, enabling developers to create production applications, according to a tweet.

- The model supports a context of 2 million tokens, sparking discussions about its performance relative to the Pro version, according to a tweet.

- Mistral AI Rebrands Platform: Mistral AI's website has undergone a major rebranding, promoting their customizable, portable, and enterprise-grade AI platform, according to their website.

- They emphasize their role as a leading contributor to open-source AI and their commitment to providing engaging user experiences.

Nomic.ai (GPT4All) Discord

- GPT4All v3.9.0 Arrives: The GPT4All v3.9.0 is out, featuring LocalDocs functionality, enhanced support for new models like OLMoE and Granite MoE.

- The new version also fixes errors on later messages when using reasoning models and enhances Windows ARM support.

- Reasoning Augmented Generation (ReAG) Debuts: ReAG feeds raw documents directly to the language model, facilitating more context-aware responses compared to traditional methods.

- This approach enhances accuracy and relevance by avoiding oversimplified semantic matches.

- GPT4All as a Self-Hosted Server: Users discussed self-hosting GPT4All on a desktop for mobile connectivity, achievable through a Python host.

- While feasible, there may be limited support and it may require unconventional setups.

- NSFW Content Finds Local Models: Members discussed locally usable LLMs for NSFW stories, finding wizardlm and wizardvicuna suboptimal.

- Alternatives like obadooga and writing-roleplay-20k-context-nemo may offer better performance for generating NSFW content.

- UI Scrolling Bug Surfaces: A user reported a UI bug where the prompt window's content cannot be scrolled if the text exceeds the visible area, causing accessibility problems.

- A similar issue was previously reported on GitHub, indicating a broader problem.

MCP (Glama) Discord

- ChatGPT Pro Sparks Team Interest: Members are interested in acquiring a ChatGPT Pro subscription, potentially to share costs among multiple accounts for team use.

- Interest focused on using ChatGPT Pro for development, but concerns were raised about splitting accounts and appropriate usage strategies.

- Excel MCP Dreams Emerge: Enthusiasm bubbled around creating an MCP to read and manipulate Excel files, with debates on using Python vs. TypeScript.

- The discussion highlighted the potential for automating data manipulation tasks, but the feasibility of each language was a key point of contention.

- Playwright edges out Puppeteer: Experiences shared indicate that Playwright worked well with MCP, while Puppeteer needed local modifications and this GitHub implementation is not in a production ready state.

- Users compared the ease of implementation for both tools in automation projects, favoring Playwright's simpler integration.

- Home Assistant adds MCP Client/Server Support: The publication of Home Assistant with MCP client/server support expands integration capabilities, and is great to see further integration in automation ecosystems.

- This integration promises to enhance automation workflows, allowing users to leverage MCP within their home automation setups.

- PulseMCP Showcases Use Cases: PulseMCP launched a new showcase of useful MCP server and client combinations with detailed instructions, screenshots, and videos.

- The examples highlight using Gemini voice to manage Notion and converting a Figma design to code with Claude, demonstrating the versatility of MCP applications.

GPU MODE Discord

- Flux Annihilates Emu3 in Image Generation: On the Huggingface L40S, Flux generated a 1024x1024 image in 30 seconds using

flash-attentionwith W8A16 quantization, dwarfing Emu3's ~600 seconds for a 720x720 image.- Despite comparable parameter counts (8B for Emu3 and 12B for Flux), the speed difference triggers questions about Emu3's efficiency against single-modal models.

- OmniHuman Fabricates Realistic Human Videos: The OmniHuman project can generate high-quality human video content based on just a single image, highlighting its potential for multimedia applications.

- Its unique framework achieves an end-to-end multimodality-conditioned human video generation using a mixed training strategy, greatly enhancing the quality of the generated videos.

- FlowLLM Kickstarts Material Discovery: FlowLLM is a new generative model that combines large language models with Riemannian flow matching to design novel crystalline materials, significantly improving generation rates.

- This approach surpasses existing methods in material generation speed, offering over three times the efficiency for developing stable materials based on LLM outputs.

- Modal is Hiring ML Performance Engineers: Modal is a serverless computing platform that provides flexible, auto-scaling computing infrastructure for users like Suno and the Liger Kernel team.

- Modal is hiring ML performance engineers to enhance GPU performance and contribute to upstream libraries like vLLM with job description here.

- Torchao Struggles in Torch Compile: A user reported that using Torchao in conjunction with torch.compile seems to cause a bug, implying a compatibility issue.

- Another member suggested the bug aligns with this GitHub issue about

nn.Modulenot transferring between devices.

- Another member suggested the bug aligns with this GitHub issue about

LlamaIndex Discord

- Deepseek Forum Set to Explore Workflows: @aicampai is hosting a virtual forum on Deepseek, emphasizing its capabilities and integration into developer and engineer workflows, detailed here.

- The forum aims to provide hands-on learning experiences on Deepseek technology and its applications.

- New Tutorial Generates First RAG App: @Pavan_Belagatti released a video tutorial guiding users on building their first Retrieval Augmented Generation (RAG) application using @llama_index, found here.

- This responds to new users seeking practical insights into RAG application development.

- Gemini 2.0 Arrives with LlamaIndex Support: @google announced that Gemini 2.0 is generally available, with day 0 support from @llama_index, detailed in their announcement blog post.

- Users can install the latest integration package via

pip install llama-index-llms-geminito access impressive benchmarks, with further info available via Google AI Studio.

- Users can install the latest integration package via

- LlamaIndex LLM Class Awaits Timeout: A user observed that the default LlamaIndex LLM class lacks a built-in timeout feature, present in OpenAI's models, linked here.

- Another user suggested that the timeout likely consists of client kwargs.

- Troubleshooting Function Calling with Qwen-2.5: A user reported a

ValueErrorwhen using Qwen-2.5 for function calling, recommending the use of command-line parameters and switching to OpenAI-like implementations, with docs here.- To utilize function calling in Qwen-2.5 without issues, another user moved to implement the

OpenAILikeclass from LlamaIndex.

- To utilize function calling in Qwen-2.5 without issues, another user moved to implement the

LLM Agents (Berkeley MOOC) Discord

- MOOC Certificates held hostage: A member reported delays in receiving certificates requested in December, and the course staff is currently working to expedite the distribution process.

- The course staff hopes to resolve these issues within the next week or two.

- Quizzes are proving elusive: Members inquired about the availability of Quiz 1 and Quiz 2 and course staff confirmed that Quiz 2 is not yet published, providing a link for Quiz 1.

- Members can complete Quiz 1 after Friday, as there are currently no deadlines.

- Lecture 1 Video now with Professional Captions: A member confirmed that the YouTube link leads to the fixed version of the first lecture, titled 'CS 194/294-280 (Advanced LLM Agents) - Lecture 1, Xinyun Chen'.

- The edited recording includes professional captioning to improve accessibility.

Cohere Discord

- Users Ponder Embed v3 Migration: A user inquired about migrating existing float generations from embed v3 to embed v3 light, questioning if they could remove extra dimensions or if they needed to regenerate their database entirely.

- The absence of direct responses underscores the complexity and concerns surrounding such migration processes.

- Cohere Moderation Model Craved: A member expressed a desire for a moderation model from Cohere to reduce dependency on American services.

- This need highlights the desire for localized AI solutions that cater to regional requirements.

- Chat Feature Pricing Probed: A user inquired about a paid monthly fee option for chat functionality, indicating a primary interest in chat features over product development.

- A fellow member pointed out the existence of a production API that requires payment for use.

- Conversation Memory Confounds Coders: A member shared their frustration that AI responses are not contextually related between requests and sought guidance on utilizing conversational memory using Java code.

- Another member acknowledged the creation of a support ticket related to the issue, and provided a link to the ticket reinforcing community support.

- Community Clarifies Conduct Code: A member issued a strong reminder that future rule violations could lead to a ban, emphasizing adherence to community guidelines.

- In response, another member apologized for past actions, demonstrating a commitment to aligning with community expectations.

tinygrad (George Hotz) Discord

- tinygrad 0.10.1 Faces Errors: While bumping tinygrad to version 0.10.1, users reported tests failing with a NotImplementedError due to an unknown relocation type 4, which signifies an external function call not supported by version 19.1.6.

- The problems are potentially related to Nix-specific behaviors affecting the compilation process.

- Compiler Flag Concerns Arise: Concerns were raised about compiler warnings related to skipping the impure flag -march=native because

NIX_ENFORCE_NO_NATIVEwas set.- A member clarified that removing -march=native typically applies to user machine software, whereas tinygrad utilizes clang as a JIT compiler for kernels, mitigating the necessity of this flag within the tinygrad context.

- Debugging Gets Easier: A contributor announced that PR #8902 is set to improve debugging in tinygrad, making the resolution of complications more manageable.

- The expectation is that the project's ongoing improvements will help mitigate the observed issues.

- Base Operations and Kernel Implementations Questioned: A member inquired about the number of base operations in tinygrad, seeking clarity on the foundational elements of the framework.

- There was a subsequent request for sources on the kernel implementations pertinent to tinygrad, indicating interest in understanding the underlying codebase.

Gorilla LLM (Berkeley Function Calling) Discord

- API Endpoint Needs to be Public: The instructions for adding new models to the leaderboard specify that while authentication may be necessary, the API endpoint should be accessible to the general public.

- Accessibility is intended to ensure broader usability of the API endpoint.

- Raft Method Sufficiency with Llama 3.1 7B?: A member asked if 1000 users' data is sufficient for training using the Raft method with Llama 3.1 7B, and whether to incorporate synthetic data before applying RAG.

- There were concerns raised that 1000 users' data may not provide enough diversity for effective model training, suggesting that synthetic data might be needed to fill gaps and improve training outcomes.

DSPy Discord

- Chain of Agents Debuts in DSPy: A user introduced an example of a Chain of Agents implemented the DSPy Way, with details available in this article.

- The discussion also referenced the original research paper on Chain of Agents, accessible here.

- Community Seeks Git Repository for DSPy Chain of Agents: A user inquired about the availability of a Git repository for the discussed Chain of Agents example in DSPy.

- This request indicates strong community interest in practical, hands-on implementations of the Chain of Agents concept.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!