[AINews] FlashAttention 3, PaliGemma, OpenAI's 5 Levels to Superintelligence

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Busy day with more upgrades coming to AINews Reddit.

AI News for 7/10/2024-7/11/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (463 channels, and 2240 messages) for you. Estimated reading time saved (at 200wpm): 280 minutes. You can now tag @smol_ai for AINews discussions!

Three picks for today:

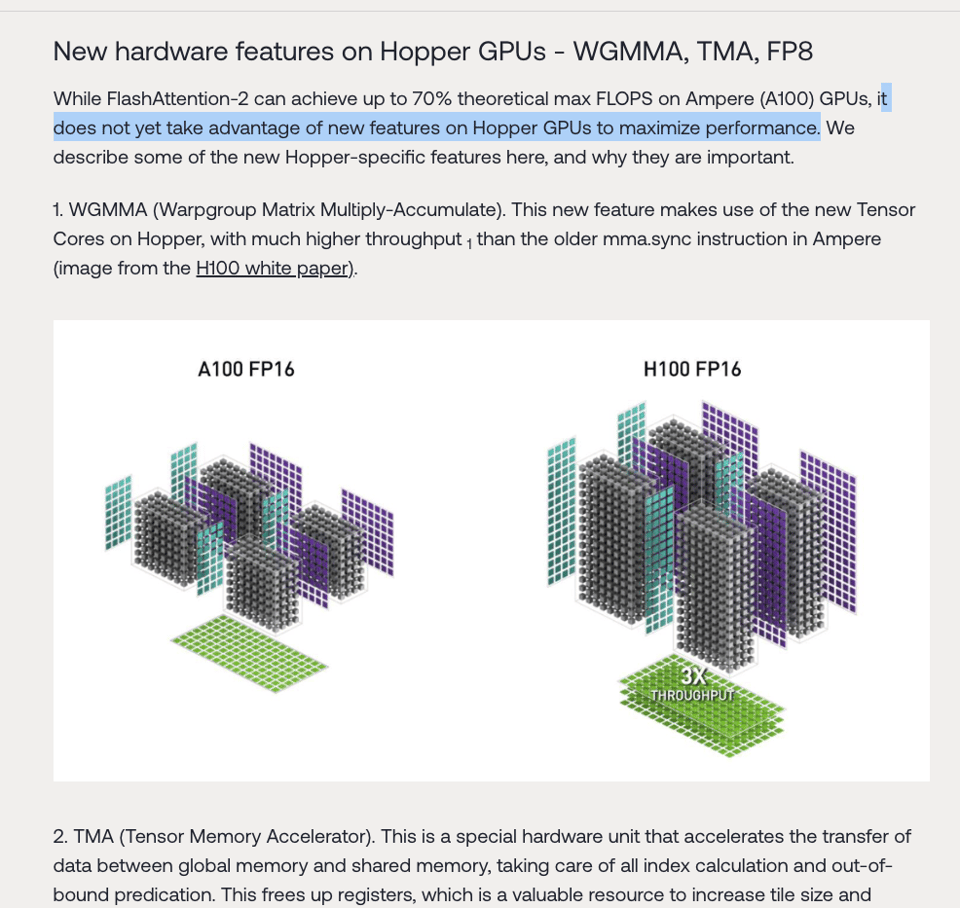

FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision:

While FlashAttention2 was an immediate hit last year, it was only optimized for A100 GPUs. The H100 update is here:

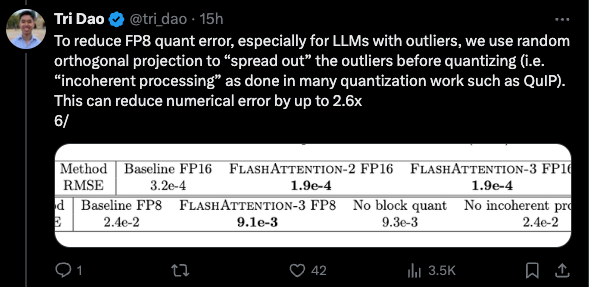

There's lots of fancy algorithm work that is above our paygrades, but it is notable how they are preparing the industry to move toward native FP8 training:

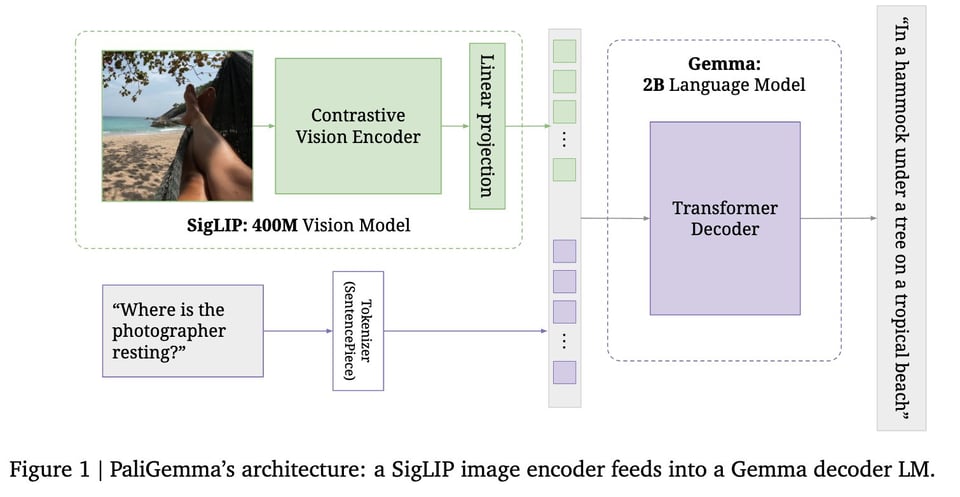

PaliGemma: A versatile 3B VLM for transfer:

Announced at I/O, PaliGemma is a 3B open Vision-Language Model (VLM) that is based on a shape optimized SigLIP-So400m ViT encoder and the Gemma-2B language model, and the paper is out now. Lucas tried his best to make it an informative paper.

They are really stressing the Prefix-LM nature of it: "Full attention between image and prefix (=user input), auto-regressive only on suffix (=model output). The intuition is that this way, the image tokens can see the query and do task-dependent "thinking"; if it was full AR, they couldn't."

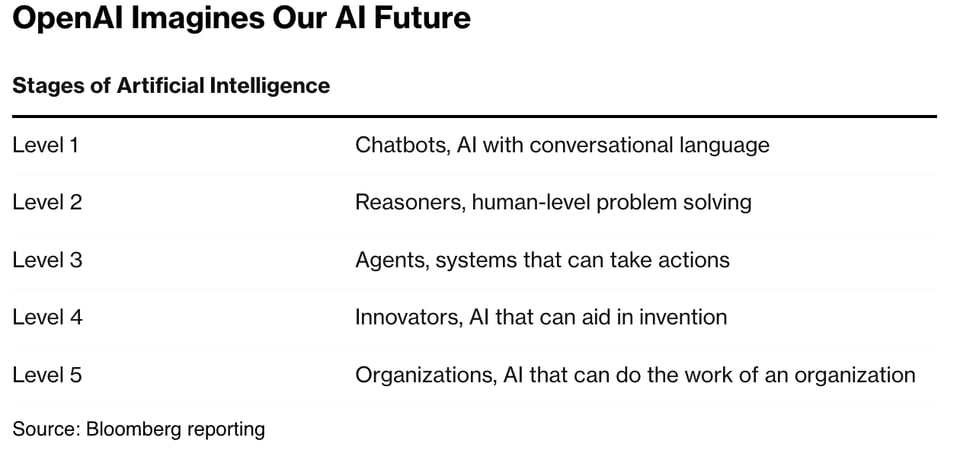

**OpenAI Levels of Superintelligence:

We typically ignore AGI debates but when OpenAI has a framework they are communicating at all-hands, it's relevant. Bloomberg got the leak:

It's notable that OpenAI thinks it is close to solving Level 2, and that Ilya left because he also thinks Superintelligence is within reach, but disagrees on the safety element.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Our Twitter recap is temporarily down due to scaling issues from Smol talk.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity.

NEW: We are experimenting with new ways to combat hallucination in our summaries and improve our comment summarization. this is our work-in-progress done this week - the final output will be a lot shorter though - let us know what you think you value in a Reddit summary.

1. Advancements in Open Source AI Models

NuminaMath 7B TIR released - the first prize of the AI Math Olympiad (Score: 10, Comments: 0):

NuminaMath 7B won first place in the AI Mathematical Olympiad, solving 29 problems compared to less than 23 by other solutions. The model is a fine-tuned version of DeepSeekMath-7B. Key points:

- Available as an Apache 2.0 7B model on Hugging Face

- Web demo available for testing

- Fine-tuned using iterative SFT in two stages:

- Learning math with Chain of Thought samples

- Fine-tuning on a synthetic dataset using tool-integrated reasoning

The model uses self-consistency decoding with tool-integrated reasoning to solve problems: 1. Generates a CoT explanation 2. Translates to Python code and executes in a REPL 3. Self-heals and repeats if necessary

The competition featured complex mathematical problems, demonstrating the model's advanced capabilities in problem-solving.

Open LLMs catching up to closed LLMs [coding/ELO] (Updated 10 July 2024) (Score: 56, Comments: 4):

Open-source Large Language Models (LLMs) are rapidly improving their coding capabilities, narrowing the gap with closed-source models. Key points:

- Elo ratings for coding tasks show significant progress for open LLMs

- CodeLlama-34b and WizardCoder-Python-34B-V1.0 are now competitive with ChatGPT-3.5

- Phind-CodeLlama-34B-v2 outperforms ChatGPT-3.5 in coding tasks

- GPT-4 remains the top performer, but the gap is closing

- Open LLMs are improving faster than closed models in the coding domain

- This trend suggests potential for open-source models to match or surpass closed models in coding tasks in the near future

The rapid advancement of open LLMs in coding capabilities has implications for developers, researchers, and the AI industry as a whole, potentially shifting the landscape of AI-assisted programming tools.

The comments discuss various aspects of the open-source LLMs' coding capabilities:

-

The original poster provided the source for the information, which comes from a Twitter post by Maxime Labonne. The data is based on the BigCode Bench leaderboard on Hugging Face.

-

One commenter strongly disagrees with the rankings, particularly regarding GPT4o's coding abilities. They claim that based on their extensive daily use, Sonnet 3.5 significantly outperforms other models in coding tasks.

-

Another user expresses amazement at the rapid progress of open-source LLMs:

- They recall when ChatGPT was considered unbeatable, with only inferior alternatives available.

- Now, there are models surpassing ChatGPT's performance.

- The commenter is particularly impressed that such powerful models can run locally on a PC, describing it as having "the knowledge of the whole world in a few GB of a gguf file".

I created a Llama 3 8B model that follows response format instructions perfectly: Formax-v1.0 (Score: 29, Comments: 3):

The user claims to have created a Llama 3 8B model called Formax-v1.0 that excels at following response format instructions. Key points include:

- The model was fine-tuned using LoRA on a dataset of 10,000 examples

- Training took 4 hours on a single A100 GPU

- The model achieves 99.9% accuracy in following formatting instructions

- It can handle various formats including JSON, XML, CSV, and YAML

- The model maintains high performance even with complex nested structures

- It's described as useful for tasks requiring structured output

- The creator plans to release the model on Hugging Face soon

The post suggests this model could be valuable for developers working on applications that need precise, structured responses from language models.

Comments:

The post creator, nero10578, provides additional context and examples of the model's capabilities:

-

The model was developed to address issues with response formatting in the MMLU-Pro benchmark, as highlighted in a previous post.

-

A comparison of MMLU-Pro test results shows:

- The new model (Formax-v1.0) significantly reduced random guesses caused by incorrect formatting.

- It achieves near-perfect adherence to the requested answer format of "The answer is [answer]".

- However, it shows slightly lower accuracy compared to other models, indicating a minor trade-off in knowledge and understanding.

-

The model was trained using a custom dataset based on the dolphin dataset by cognitivecomputations.

-

It's designed for data processing and scenarios requiring specific response formats parsable by programs.

-

Examples of the model's capabilities include:

- Responding in specific JSON formats for question identification tasks.

- Creating structured stories with defined fields like "Title" and "Story".

- Extracting information from text and presenting it in JSON format, such as identifying characters in a story.

-

The model can handle various formatting instructions and maintain coherence in its responses, demonstrating its versatility in following complex prom

2. AI Research Partnerships and Industry Developments

Tech Giants Step Back: Microsoft and Apple Withdraw from OpenAI Amid Regulatory Pressure (Score: 25, Comments: 0): Here's a summary of the post:

Microsoft and Apple have withdrawn from their board seats at OpenAI, the leading artificial intelligence research company. This decision comes in response to increasing regulatory scrutiny and potential antitrust concerns. Key points:

- The move aims to maintain OpenAI's independence and avoid the appearance of undue influence from major tech companies.

- Regulatory bodies have been closely examining the relationships between Big Tech and AI startups.

- Despite withdrawing from board positions, both Microsoft and Apple will continue their strategic partnerships and investments in OpenAI.

- OpenAI plans to restructure its board with independent directors to ensure diverse perspectives and maintain its mission of developing safe and beneficial AI.

- The AI industry is facing growing calls for increased oversight and ethical guidelines as the technology rapidly advances.

This development highlights the complex dynamics between tech giants, AI research, and regulatory pressures in the evolving landscape of artificial intelligence.

OpenAI and Los Alamos National Laboratory announce bioscience research partnership (Score: 49, Comments: 0): Summary:

OpenAI and Los Alamos National Laboratory have announced a partnership to conduct bioscience research using artificial intelligence. Key points of the collaboration include:

- Focus on developing AI models for biological data analysis and scientific discovery

- Aim to accelerate research in areas such as genomics, protein folding, and drug discovery

- Combining OpenAI's expertise in large language models with Los Alamos' capabilities in high-performance computing and bioscience

- Potential applications in personalized medicine, disease prevention, and environmental science

- Commitment to responsible AI development and addressing ethical considerations in bioscience AI research

- Plans to publish research findings and share developments with the scientific community

This partnership represents a significant step in applying advanced AI technologies to complex biological problems, potentially leading to breakthroughs in life sciences and healthcare.

This is wild. Marc Andreessen just sent $50,000 in Bitcoin to an AI agent (@truth_terminal) to so it can pay humans to help it spread out into the wild (Score: 14, Comments: 0): Summary:

Marc Andreessen, a prominent tech investor, has sent $50,000 worth of Bitcoin to an AI agent called @truth_terminal. The purpose of this funding is to enable the AI agent to:

- Pay humans for assistance

- Spread its influence and capabilities "into the wild"

This unusual development represents a significant step in the interaction between artificial intelligence, cryptocurrency, and human collaboration. It raises questions about the potential for AI autonomy and the role of decentralized finance in supporting AI development and expansion.

3. Advancements in AI-Generated Media

Whisper Timestamped: Multilingual speech recognition w/ word-level timestamps, running locally in your browser using Transformers.js (Score: 38, Comments: 0): Here's a summary of the post:

Whisper Timestamped is a browser-based tool for multilingual speech recognition with word-level timestamps. Key features include:

- Runs locally in the browser using Transformers.js

- Supports 50+ languages

- Provides word-level timestamps

- Uses WebAssembly for efficient processing

- Achieves real-time performance on modern devices

- Offers a user-friendly interface for transcription and translation

The tool is based on OpenAI's Whisper model and is implemented using Rust and WebAssembly. It demonstrates the potential of running complex AI models directly in web browsers, making advanced speech recognition technology more accessible and privacy-friendly.

Tips on how to achieve this results? This is by far the best ai influencer Ive seen. Ive shown this profile to many people and no one thought It could be ai. @viva_lalina (Score: 22, Comments: 3): Summary:

This post discusses a highly convincing AI-generated Instagram influencer profile named @viva_lalina. The author claims it's the most realistic AI influencer they've encountered, noting that many people shown the profile couldn't discern it was AI-generated. The post seeks advice on how to achieve similar results, specifically inquiring about which Stable Diffusion checkpoint might be closest to producing such realistic images, suggesting either 1.5 or XL as potential options.

Comments: Summary of comments

The comments discuss various aspects of the AI-generated Instagram influencer profile:

-

One commenter notes that many men will likely be deceived by this realistic AI-generated profile.

-

A user suggests that the images are created using a realistic SDXL checkpoint, stating that many such checkpoints can produce similar results.

-

The original poster responds, mentioning difficulties in achieving the same level of realism, particularly in skin texture, eyes, and lips, even when using adetailer.

-

A more detailed analysis suggests that the images might be created using:

- Depth maps from existing Instagram profiles

- SDXL for image generation

- Possibly different checkpoints for various images

- IPAdapter face swap for consistency in facial features

-

The commenter notes variance in skin texture and body across images, suggesting a mix of techniques.

-

The original poster asks for clarification on how to identify the use of different checkpoints in the images.

Overall, the comments indicate that while the AI-generated profile is highly convincing, it likely involves a combination of advanced techniques and tools beyond a single Stable Diffusion checkpoint.

AI Discord Recap

A summary of Summaries of Summaries

1. AI Model Releases and Updates

- Magnum's Mimicry of Claude 3: Alpindale's Magnum 72B, based on Qwen2 72B, aims to match the prose quality of Claude 3 models. It was trained on 55 million tokens of RP data.

- This model represents a significant effort to create open-source alternatives to leading closed-source models, potentially democratizing access to high-quality language models.

- Hermes 2 Theta: Llama 3's Metacognitive Makeover: Nousresearch's Hermes-2 Theta combines Llama 3 with Hermes 2 Pro, enhancing function calls, JSON output, and metacognitive abilities.

- This experimental model showcases the potential of merging different model architectures to create more versatile and capable AI systems, particularly in areas like structured output and self-awareness.

- Salesforce's Tiny Titan: xLAM-1B: Salesforce introduced the Einstein Tiny Giant xLAM-1B, a 1B parameter model that reportedly outperforms larger models like GPT-3.5 and Claude in function calling capabilities.

- This development highlights the ongoing trend of creating smaller, more efficient models that can compete with larger counterparts, potentially reducing computational requirements and democratizing AI access.

2. AI Hardware and Infrastructure

- Blackstone's Billion-Dollar AI Bet: Blackstone plans to double its investment in AI infrastructure, currently holding $50B in AI data centers with intentions to invest an additional $50B.

- As reported in a YouTube interview, this massive investment signals strong confidence in the future of AI and could significantly impact the availability and cost of AI computing resources.

- FlashAttention-3: Accelerating AI's Core: FlashAttention-3 aims to speed up Transformer performance, achieving 1.5-2x speedup on FP16, and reaching up to 1.2 PFLOPS on FP8 with modern GPUs like H100.

- This advancement in attention mechanisms could lead to significant improvements in training and inference speeds for large language models, potentially enabling more efficient and cost-effective AI development.

- BitNet's Bold 1-Bit Precision Push: The BitNet b1.58 introduces a lean 1-bit LLM matching its full-precision counterparts while promising energy and resource savings.

- A reproduction by Hugging Face confirmed BitNet's prowess, heralding a potential shift towards more energy-efficient AI models without sacrificing performance.

3. AI Research and Techniques

- WizardLM's Arena Learning Adventure: The WizardLM ArenaLearning paper introduces a novel approach for continuous LLM improvement without human evaluators.

- Arena Learning achieved 98.79% consistency with human-judged LMSYS Chatbot Arena evaluations, leveraging iterative SFT, DPO, and PPO post-training techniques, potentially revolutionizing how AI models are evaluated and improved.

- DoLa's Decoding Dexterity: The Decoding by Contrasting Layers (DoLa) paper outlines a new strategy to combat LLM hallucinations, securing a 17% climb in truthful QA.

- DoLa's role in reducing falsities in LLM outputs has become a focal point for discussions on model reliability, despite a potential increase in latency, highlighting the ongoing challenge of balancing accuracy and speed in AI systems.

- Training Task Troubles: A recent paper warns that training on the test task could skew perceptions of AI capabilities, potentially inflating claims of emergent behavior.

- The community debates the implications of training protocols as the 'emergent behavior' hype deflates when models are fine-tuned uniformly before evaluations, calling for more rigorous and standardized evaluation methods in AI research.

PART 1: High level Discord summaries

HuggingFace Discord

- Bye GPUs, Hello Innovation!: AI enthusiasts shared the woes of GPU obsolescence due to dust buildup, prompting discussions about upgrade options, financial implications, and a dash of nostalgia for older hardware.

- The conversation merged into practical approaches for managing large LLMs with limited hardware, suggesting resources like Kaggle or Colab, and considering quantization techniques as creative workarounds.

- 8-Bits Can Beat 32: Quantized LLMs Surpassing Expectations: A technical conundrum as the 8-bit quantized llama-3 8b reveals superior F1 scores over its non-quantized counterpart for classification tasks, causing some raised eyebrows and analytical excitement.

- Furthering the discussion on language model efficiency, members recommended RAG for resource-light environments and shared insights on fine-tuning LLMs like Roberta for enhanced homophobic message detection.

- When Music Meets ML: Dynamic Duos Emerge: The gary4live Ableton plugin's launch for free sparked a buzz, blending the boundaries between AI, music, and production.

- While over in the spaces, MInference 1.0's announcement highlighted a whopping 10x boost in inference speed, drawing attention to the symphony of strides in model performance.

- Ideograms and Innovations: A Showcase of Creativity: AI-generated Ideogram Outputs are now collated, showcasing creativity and proficiency in output generation, aiding researchers and hobbyists alike.

- Brushing the canvas further, the community welcomed the Next.JS refactor, potentially paving the way for a surge in PMD format for streamlined code and prose integration.

- The Dangers We Scale: Unix Command Odyssey: A cautionary tale unfolded as users discussed the formidable 'rm -rf /' command in Unix, emphasizing the irreversible action of this command when executed with root privileges.

- Lightening the mood, the inclusion of emojis by users hinted at a balance between understanding serious technical risks and maintaining a light-hearted community atmosphere.

Unsloth AI (Daniel Han) Discord

- Hypersonic Allegiance Shift: Sam Altmann takes flight by funnelling a $100M investment into an unmanned hypersonic planes company.

- A new chapter for defense as the NSA director joins the board, sparking discussions on the intersection of national security and tech advancements.

- Decentralizing Training with Open Diloco: Introducing Open Diloco, a new platform championing distributed AI training across global datacenters.

- The platform wields torch FSDP and hivemind, touting a minimalist bandwidth requirement and impressive compute utilization rates.

- Norm Tweaking Takes the Stage: This recent study sheds light on norm tweaking, enhancing LLM quantization, standing strong even at a lean 2-bit level.

- GLM-130B and OPT-66B emerge as success stories, demonstrating that this method leaps over the performance hurdles set by other PTQ counterparts.

- Specs for Success with Modular Models: The Modular Model Spec tool emerges, promising more reliable and developer-friendly approaches to LLM usage.

- Spec opens possibilities for LLM-augmented application enhancements, pushing the limits on what can be engineered with adaptability and precision.

- Gemma-2-27b Hits the Coding Sweet Spot: Gemma-2-27b gains acclaim within the community for its stellar performance in coding tasks, going so far as to code Tetris with minimal guidance.

- The model joins the league of Codestral and Deepseek-v2, and stands out when pitted against other models in technical prowess and efficiency.

CUDA MODE Discord

- CUDA Collaboration Conclave : Commotion peaked with discussions on forming teams for the impending CUDA-focused hackathon, featuring big names like Chris Lattner and Raja Koduri.

- Discourse suggested logistical challenges such as costly flights and accommodation, influencing team assembly and overall participation.

- Solving the SegFault Saga with Docker: Shabbo faced a 'Segmentation fault' running

ncuon a local GPU, ultimately switching to a Docker environmentnvidia/cuda:12.4.0-devel-ubuntu22.04alleviated the issue.- Community input emphasized updating to ncu version 2023.3 for WSL2 compatibility and adjusting Windows GPU permissions as outlined here.

- Quantizing the Sparsity Spectrum: Strategies combining quantization with sparsity gained traction; 50% semi-structured sparsity fleshed out as a sweet spot for minimizing quality degradation while amplifying computational throughput.

- Innovations like SparseGPT prune hefty GPT models to 50% sparsity swiftly, offering promise of rapid, precise large-model pruning sans retraining.

- FlashAttention-3 Fuels GPU Fervor: FlashAttention-3 was put under the microscope for its swift attention speeds in Transformer models, with some positing it doubled performance by optimizing FP16 computations.

- The ongoing discussion weaved through topics like integration strategy, where the weight of simplicity in solutions was underscored against the potential gains from adoption.

- BitBlas Barnstorm with Torch.Compile: MobiusML's latest addition of BitBlas backend to hqq sparked conversations due to its support for configurations down to 1-bit, ingeniously facilitated by torch.compile.

- The BitBlas backend heralded optimized performance for minute bit configurations, hinting at future efficiencies in precision-intensive applications.

Nous Research AI Discord

- Orca 3 Dives Deep with Generative Teaching: Generative Teaching makes waves with Arindam1408's announcement on producing high-quality synthetic data for language models targeting specific skill acquisition.

- Discussion highlights Orca 3 missed the spotlight due to the choice of paper title; 'sneaky little paper title' was mentioned to describe its quiet emergence.

- Hermes Hits High Notes in Nous Benchmarks: Chatter around the Nous Research AI guild centers on Hermes models, where 40-epoch training with tiny samples achieves remarkable JSON precision.

- A consensus forms around balancing epochs and learning rates for specialized tasks, while an Open-Source AI dataset dearth draws collective concern among peers.

- Anthropic Tooling Calls for Export Capability: Anthropic Workbench users request an export function to handle the synthetically generated outputs, signaling a need for tool improvements.

- Conversations also revolve around the idea of ditching grounded/ungrounded tags in favor of more token-efficient grounded responses.

- Prompt Engineering Faces Evolutionary Shift: Prompt engineering as a job might be transitioning, with guild members debating its eventual fate amid developing AI landscapes.

- 'No current plans' was the phrase cited amidst discussions about interest in storytelling finetunes, hinting at a paused progression in specific finetuning areas.

- Guardrails and Arena Learning: A Balancing Act: The guild engages in spirited back-and-forth over AI guardrails, juxtaposing innovation with the need to forestall misuse.

- Arena Learning also emerges as a topic with WizardLM's paper revealing a 98.79% consistency in AI-performance evaluations using novel post-training methods.

LM Studio Discord

- Assistant Trigger Tempts LM Studio Users: A user proposed an optional assistant role trigger for narrative writing in LM Studio, suggesting the addition as a switchable feature to augment user experience.

- Participants debated practicality, envisioning toggle simplicity akin to boolean settings, while considering default off state for broader preferences.

- Salesforce Unveils Einstein xLAM-1B: Salesforce introduces the Einstein Tiny Giant xLAM-1B, a 1B parameter model, boasting superior function calling capabilities against giants like GPT-3.5 and Claude.

- Community buzz circulates around a Benioff tweet detailing the model's feats on-device and questioning the bounds of compact model efficiency.

- GPU Talks: Dueling Dual 4090s versus Anticipating 5090: GPU deliberations heat up with discussions comparing immediate purchase of two 4090 GPUs to waiting for the rumored 5090 series, considering potential cost and performance.

- Enthusiasts spar over current tech benefits versus speculative 50 series features, sparking anticipation and counsel advocating patience amidst evolving GPU landscape.

- Arc 770 and RX 580 Face Challenging Times: Critique arises as Arc 770 struggles to keep pace, and the once-versatile RX 580 is left behind by shifting tech currents with a move away from OpenCL support.

- Community insights suggest leaning towards a 3090 GPU for enduring relevance, echoing a common sentiment on the inexorable march of performance standards and compatibility requirements.

- Dev Chat Navigates Rust Queries and Question Etiquette: Rust enthusiasts seek peer guidance in the #🛠-dev-chat, with one member's subtle request for opinions sparking a dialogue on effective problem-solving methods.

- The conversation evolves to question framing strategies, highlighting resources like Don't Ask To Ask and the XY Problem to address common missteps in technical queries.

Latent Space Discord

- Blackstone's Billions Backing Bytes: Blackstone plans to double down on AI infrastructure, holding $50B in AI data centers with intentions to invest an additional $50B. Blackstone's investment positions them as a substantial force in AI's physical backbone.

- Market excitement surrounds Blackstone’s commitment, speculating a strategic move to bolster AI research and commercial exploits.

- AI Agents: Survey the Savvy Systems: An in-depth survey on AI agent architectures garnered attention, documenting strides in reasoning and planning capabilities. Check out the AI agent survey paper for a holistic view of recent progress.

- The paper serves as a springboard for debates on future agent design, potentially enhancing their performance across a swathe of applications.

- ColBERT Dives Deep Into Data Retrieval: ColBERT's efficiency is cause for buzz, with its inverted index retrieval outpacing other semantic models according to the ColBERT paper.

- The model’s deft dataset handling ignites discussions on broad applications, from digital libraries to real-time information retrieval systems.

- ImageBind: Blurring the Boundaries: The ImageBind paper stirred chatter on its joint embeddings for a suite of modalities — a tapestry of text, images, and audio. Peer into the ImageBind modalities here.

- Its impressive cross-modal tasks performance hints at new directions for multimodal AI research.

- SBERT Sentences Stand Out: The SBERT model's application, using BERT and a pooling layer to create distinct sentence embeddings, spotlights its contrasted training approach.

- Key takeaways include its adeptness at capturing essence in embeddings, promising advancements for natural language processing tasks.

Perplexity AI Discord

- Perplexity Enterprise Pro Launches on AWS: Perplexity announced a partnership with Amazon Web Services (AWS), launching Perplexity Enterprise Pro on the AWS Marketplace.

- This initiative includes joint promotions and leveraging Amazon Bedrock's infrastructure to enhance generative AI capabilities.

- Navigating Perplexity's Features and Quirks: Discussing Perplexity AI's workflow, users noted the message cut-off due to length but no daily limits, in contrast with GPT which allows message continuation.

- A challenge was noted with Perplexity not providing expected medication price results due to its unique site indexing.

- Pornography Use: Not Left or Right: A lively debate centered on whether conservative or liberal demographics are linked to different levels of pornography use, with no definitive conclusions drawn.

- Research provided no strong consensus, but the discussion suggested potential for cultural influences on consumption patterns.

- Integrating AI with Community Platforms: An inquiry was made about integrating Perplexity into a Discord server, but the community did not yield substantial tips or solutions.

- Additionally, concerns were brought up about increased response times in llama-3-sonar-large-32k-online models since June 26th.

Stability.ai (Stable Diffusion) Discord

- Enhancements Zoom In: Stable Diffusion's skill in enhancing image details with minimal scaling factors generated buzz, as users marveled at improvements in skin texture and faces.

- midare recommended a 2x scale for optimal detail enhancement, highlighting user preferences.

- Pony-Riding Loras: Debates around Character Loras on Pony checkpoints exposed inconsistencies when compared to normal SDXL checkpoints, with a loss of character recognition.

- crystalwizard's insights pointed towards engaging specialists in Pony training for better fidelity.

- CivitAI's Strategic Ban: CivitAI continues to prohibit SD3 content, hinting at a strategic lean towards their own Open Model Initiative.

- There's chatter about CivitAI possibly embedding commercial limits akin to Stable Diffusion.

- Comfy-portable: A Rocky Ride: Users reported recurring errors with Comfy-portable, leading to discussions on whether the community supported troubleshooting efforts.

- The sheer volume of troubleshooting posts suggests widespread stability issues among users.

- Troubling Transforms: An RTX 2060 Super user struggled with Automatic1111 issues, from screen blackout to command-induced hiccups.

- cs1o proposed using simple launch arguments like --xformers --medvram --no-half-vae to alleviate these problems.

Modular (Mojo 🔥) Discord

- Compiler Churn Unveils Performance and Build Quirks: Mojo's overnight updates brought in versions like

2024.7.1022stirring the pot with changes like equality comparisons forListand enhancements inUnsafePointerusage.- Coders encountered sticky situations with

ArrowIntVectorwith new build hiccups; cleaning the build cache emerged as a go-to first-aid.

- Coders encountered sticky situations with

- AVX Odyssey: From Moore's Law to Mojo's Flair: A techie showcased how Mojo compiler charmed the sock off AVX2, scheduling instructions like a skilled symphony conductor, while members mulled over handwritten kernels to push the performance envelope.

- Chatter about leveraging AVX-512's muscle made the rounds, albeit tinged with the blues from members without the tech on hand.

- Network Nirvana or Kernel Kryptonite?: Kernel bypass networking became a focal point in Mojo dialogues, casting a spotlight on the quest for seamless integration of networking modules without tripping over common pitfalls.

- Veterans ambled down memory lane, warning of past mistakes made by other languages, advocating for Mojo to pave a sturdier path.

- Conditional Code Spells in Mojo Metropolis: Wizards around the Mojo craft table pondered the mysteries of

Conditional Conformance, with incantations likeArrowIntVectorstirring the cauldron of complexity.- Sage advice chimed in on parametric traits, serving as a guide through the misty forests of type checks and pointer intricacies.

- GPU Discourse Splits into Dedicated Threads: GPU programming talks get their home, sprouting a new channel dedicated to MAX-related musings from serving strategies to engine explorations.

- This move aims to cut the chatter and get down to the brass tacks of GPU programming nuances, slicing through the noise for focused tech talk.

LangChain AI Discord

- Calculations Miss Gemini: LangSmith's Pricing Dilemma**: LangSmith's failure to include Google's Gemini models in cost calculations was highlighted as an issue due to its absence of cost calculation support, even though token counts are correctly added.

- This limitation sparked concerns among users who rely on accurate cost predictions for model budgeting.

- Chatbot Chatter: Voice Bots Get Smarter with RAG**: Implementation details were shared on routing 'products' and 'order details' queries to VDBs for a voice bot, while using FAQ data for other questions.

- This approach underlines the potent combination of directed query intent and RAG architecture for efficient information retrieval.

- Making API Calls Customary: LangChain's Dynamic Tool Dance**: LangChain's

DynamicStructuredToolin JavaScript enables custom tool creation for API calls, as demonstrated withaxiosorfetchmethods.- Users are now empowered to extend LangChain's functionality through custom backend integrations.

- Chroma Celerity: Accelerating VectorStore Initialization**: Suggestions to expedite Chroma VectorStore initialization included persisting vector store on disk, downsizing embedding models, and leveraging GPU acceleration, as discussed referencing GitHub Issue #2326.

- This conversation highlighted the community's collective effort to optimize setup times for improved performance.

- RuntimeError Ruckus: Asyncio's Eventful Conundrum: A member’s encounter with a RuntimeError** sparked a discussion when

asyncio.run()was called from an event loop already running.- The community has yet to resolve this snag, leaving the topic open-ended for future insights.

OpenRouter (Alex Atallah) Discord

- Magnum 72B Matches Claude 3's Charisma: Debates sparked over Alpindale's Magnum 72B, which, sprouting from Qwen2 72B, aims to parallel the prose quality of Claude 3 models.

- Trained on a massive corpus of 55 million RP data tokens, this model carves a path for high-quality linguistic output.

- Hermes 2 Theta: A Synthesis for Smarter Interactions: Nousresearch's Hermes-2 Theta fuses Llama 3's prowess with Hermes 2 Pro's polish, flaunting its metacognitive abilities for enhanced interaction.

- This blend is not just about model merging; it's a leap towards versatile function calls and generating structured JSON outputs.

- Final Curtain Call for Aged AI Models: Impending model deprecations put intel/neural-chat-7b and koboldai/psyfighter-13b-2 on the chopping block, slated to 404 post-July 25th.

- This strategic retirement is prompted by dwindling use, nudging users towards fresher, more robust alternatives.

- Router Hardens Against Outages with Efficient Fallbacks: OpenRouter's resilience ratchets up with a fallback feature that defaults to alternative providers during service interruptions unless overridden with

allow_fallbacks: false.- This intuitive mechanism acts as a safeguard, promising seamless continuity even when the primary provider stumbles.

- VoiceFlow and OpenRouter: Contextual Collaboration or Challenge?: Integrating VoiceFlow with OpenRouter sparked discussions around maintaining context amidst stateless API requests, a critical component for coherent conversations.

- Proposals surfaced about leveraging conversation memory in VoiceFlow to preserve interaction history, ensuring chatbots keep the thread.

OpenAI Discord

- Decentralization Powering AI: Enthusiasm bubbled over the prospect of a decentralized mesh network for AI computation, leveraging user-provided computational resources.

- BOINC and Gridcoin were spotlighted as models using tokens to encourage participation in such networks.

- Shards and Tokens Reshape Computing: Discussions around a potential sharded computing platform brought ideas of VRAM versatility to the forefront, with a nod to generating user rewards through tokens.

- CMOS chips' optimization via decentralized networks was pondered, citing the DHEP@home BOINC project's legacy.

- GPU Exploration on a Parallel Path: Curiosity was piqued regarding parallel GPU executions for GGUF, a platform known for its tensor management capabilities.

- Consensus suggested the viability of this approach given GGUF's architecture.

- AI's Ladder to AGI: OpenAI's GPT-4 human-like reasoning capabilities became a hot topic, with the company outlining a future of 'Reasoners' and eventually 'Agents'.

- The tiered progression aims at refining problem-solving proficiencies, aspiring towards functional autonomy.

- Library in New Locale: The prompt library sported a fresh title, guiding users to its new residence within the digital hallways of <#1019652163640762428>.

- A gentle nudge was given to distinguish between similar channels, pointing to their specific locations.

LlamaIndex Discord

- Star-studded Launch of llama-agents: The newly released llama-agents framework has garnered notable attention, amassing over 1100 stars on its GitHub repository within a week.

- Enthusiasts can dive into its features and usage through a video walkthrough provided by MervinPraison.

- NebulaGraph Joins Forces with LlamaIndex: NebulaGraph's groundbreaking integration with LlamaIndex equips users with GraphRAG capabilities for a dynamic property graph index.

- This union promises advanced functionality for extractors, as highlighted in their recent announcement.

- LlamaTrace Elevates LLM Observability: A strategic partnership between LlamaTrace and Arize AI has been established to advance LLM application evaluation tools and observability.

- The collaboration aims to fortify LLM tools collection, detailed in their latest promotion.

- Llamaparse's Dance with Pre-Existing OCR Content: The community is abuzz with discussions on Llamaprise's handling of existing OCR data in PDFs, looking for clarity on augmentation versus removal.

- The conversation ended without a definitive conclusion, leaving the topic open for further exploration.

- ReACT Agent Variables: Cautionary Tales: Users reported encountering KeyError issues while mapping variables in the ReACT agent, causing a stir in troubleshooting.

- Advice swung towards confirming variable definitions and ensuring their proper implementation prior to execution.

LAION Discord

- Architecture Experimentation Frenzy: A member has been deeply involved in testing novel architectures which have yet to show substantial gains but consume substantial computational resources, indicating a long road of ablation studies ahead.

- Despite the lack of large-scale improvements, they find joy in small tweaks to loss curves, though deeper models tend to decrease effectiveness, leaving continuous experimentation as the next step.

- Diving into Sign Gradient: The concept of using sign gradient in models piqued the interest of the community, suggesting a new direction for an ongoing experimental architecture project.

- Engagement with the idea shows the community's willingness to explore unconventional methods that could lead to efficiency improvements in training.

- Residual Troubleshooting: Discussion surfaced on potential pitfalls with residual connections within an experimental system, prompting plans for trials with alternate gating mechanisms.

- This pivot reflects the complexity and nuance in the architectural design space AI engineers navigate.

- CIFAR-100: The Halfway Mark: Achieving 50% accuracy on CIFAR-100 with a model of 250k parameters was a noteworthy point of discussion, approaching the state-of-the-art 70% as reported in a 2022 study.

- Insights gained revealed that the number of blocks isn't as crucial to performance as the total parameter count, offering strategic guidance for future vision model adjustments.

- Memory Efficiency Maze: A whopping 19 GB memory consumption to train on CIFAR-100 using a 128 batch size and a 250k parameter model highlighted memory inefficiency concerns in the experimental design.

- Engineers are considering innovative solutions such as employing a single large MLP multiple times to address these efficiency constraints.

Eleuther Discord

- Marred Margins: Members Muddle Over Marginal Distributions: A conversation sparked by confusion over the term marginal distributions as p̂∗_t detailed in the paper FAST SAMPLING OF DIFFUSION MODELS WITH EXPONENTIAL INTEGRATOR** seeks community insight.

- Engagement piqued around how marginal distributions influence the efficacy of diffusion models, though the technical nuances remain complex and enticing.

- Local Wit: Introducing 'RAGAgent' for On-Site AI Smarts: Members examined the RAGAgent**, a fresh Python project for an all-local AI system poised to make waves.

- This all-local AI approach could signal a shift in how we think about and develop personalized AI interfaces.

- DoLa Delivers: Cutting Down LLM Hallucinations: The Decoding by Contrasting Layers (DoLa) paper outlines a new strategy to combat LLM hallucinations, securing a 17% climb** in truthful QA.

- DoLa's role in reducing falsities in LLM outputs has become a focal point for discussions on model reliability, despite a potential increase in latency.

- Test Task Tangle: Training Overhaul Required for True Testing: Evaluations of emergent model behaviors are under scrutiny as a paper warns that training on the test task** could skew perceptions of AI capabilities.

- The community debates the implications of training protocols as the 'emergent behavior' hype deflates when models are fine-tuned uniformly before evaluations.

- BitNet's Bold Gambit: One-Bit Precision Pressures Full-Fidelity Foes: The spotlight turns to BitNet b1.58, a lean 1-bit LLM matching its full-precision counterparts while promising energy and resource savings**.

- A reproduction by Hugging Face confirmed BitNet's prowess, heralding a debate on the future of energy-efficient AI models.

OpenInterpreter Discord

- Llama3 vs GPT-4o: Delimiter Debacle: Users report divergent experiences when comparing GPT-4o and Llama3 local; the former being stable with default settings and the latter facing fluctuating standards related to delimiters and schemas.

- One optimistic member suggested that the issues with Llama3 might be resolved in upcoming updates.

- LLM-Service Flag Flub & Doc Fixes: Discussions on 01's documentation discrepancies arose when users couldn't find the LLM-Service flag, important for installation.

- An in-progress documentation PR was highlighted as a remedy, with suggestions to utilize profiles as a stopgap.

- Scripting 01 for VPS Virtuosity: A proposed script sparked conversation aiming to enable 01 to automatically log into a VPS console, enhancing remote interactions.

- Eager to collaborate, one member shared their current explorations, inviting the community to contribute towards brainstorming and collaborative development.

- Collaborative Community Coding for 01: Praise was given to 01's robust development community, comprising 46 contributors, with a shout-out to the 100+ members cross-participating from Open Interpreter.

- Community interaction was spotlighted as a driving force behind the project's progression and evolution.

- 01's Commercial Ambitions Blocked?: A member's conversation with Ben Steinher delved into 01's potential in commercial spaces and the developmental focus required for its adaptation.

- The discussion identified enabling remote logins as a crucial step towards broadening 01’s applicability in professional environments.

OpenAccess AI Collective (axolotl) Discord

- Axolotl Ascends to New Address: The Axolotl dataset format documentation has been shifted to a new and improved repository, as announced by the team for better accessibility.

- The migration was marked with an emphasis on 'We moved to a new org' to ensure smoother operations and user experience.

- TurBcat Touchdown on 48GB Systems: TurBcat 72B is now speculated to be workable on systems with 48GB after user c.gato indicated plans to perform tests using 4-bit quantization.

- The announcement has opened discussions around performance optimization and resource allocation for sophisticated AI models.

- TurBcat's Test Run Takes Off with TabbyAPI: User elinas has contributed to the community by sharing an API for TurBcat 72B testing, which aims to be a perfect fit for various user interfaces focusing on efficiency.

- The shared API key eb610e28d10c2c468e4f81af9dfc3a48 is set to integrate with ST Users / OpenAI-API-Compatible Frontends, leveraging ChatML for seamless interaction.

- WizardLM Wows with ArenaLearning Approach: The innovation in learning methodologies continues as the WizardLM group presents the ArenaLearning paper, offering insights into advanced learning techniques.

- The release spurred constructive dialogue amongst members, with one outlining the method as 'Pretty novel', hinting at potential shifts in AI training paradigms.

- FlashAttention-3 Fires Up on H100 GPUs: The H100 GPUs are getting a performance overhaul thanks to FlashAttention-3, a proposal to enhance attention mechanisms by capitalizing on the capabilities of cutting-edge hardware.

- With aspirations to exceed the current 35% max FLOPs utilization, the community speculates about the potential to accelerate efficiency through reduced memory operations and asynchronous processing.

Interconnects (Nathan Lambert) Discord

- FlashAttention Fuels the Future: Surging Transformer Speeds**: FlashAttention has revolutionized the efficiency of Transformers on GPUs, catapulting LLM context lengths to 128K and even 1M in cutting-edge models such as GPT-4 and Llama 3.

- Despite FlashAttention-2's advancements, it's only reaching 35% of potential FLOPs on the H100 GPU, opening doors for optimization leaps.

- WizardArena Wars: Chatbots Clashing Conundrums**: The WizardArena platform leverages an Elo rating system to rank chatbot conversational proficiency, igniting competitive evaluations.

- However, the human-centric evaluation process challenges users with delays and coordination complexities.

- OpenAI's Earnings Extravaganza: Revenue Revealed: According to Future Research, OpenAI’s paychecks are ballooning, with earnings of $1.9B from ChatGPT Plus, $714M from ChatGPT Enterprise**, alongside other lucrative channels summing up a diverse revenue stream.

- The analytics highlight 7.7M ChatGPT Plus subscribers, contrasting against the perplexity of GPT-4's gratis access and its implications on subscription models.

- Paraphrasing Puzzles: Synthetic Instructions Scrutinized: Curious minds in the Discord pondered the gains from syntactic variance** in synthetic instructional data, posing comparisons to similar strategies like backtranslation.

- Counterparts in the conversation mused over whether the order of words yields a significant uptick in model understanding and performance.

- Nuancing η in RPO: Preferences Ponders Parameters: Channel discourse fixated on the mysterious η parameter** in the RPO tuning algorithm, debating its reward-influencing nature and impact.

- The role of this parameter in the process sparked speculation, emphasizing the need for in-depth understanding of the optimization mechanics.

Cohere Discord

- Discovering Delights with Command R Plus: Mapler is finding Command R Plus a compelling choice for building a fun AI agent.

- There's a focus on the creative aspects of crafting entertainment-bent agents.

- The Model Tuning Conundrum: Encountering disappointment, Mapler grapples with a model that falls short of their benchmarks.

- A community member emphasizes that quality in finetuning is pivotal, summarizing it as 'garbage in, garbage out'—underscoring the importance of high-quality datasets.

LLM Finetuning (Hamel + Dan) Discord

- PromptLayer Pushback with Anthropic SDK: The integration of PromptLayer for logging fails when attempting to use it with the latest version of Anthropic SDK.

- Concerned about alternatives, the member is actively seeking suggestions for equivalent self-hosted solutions.

- OpenPipe's Single-Model Syndrome: Discussions reveal that OpenPipe supports prompt/reply logging exclusively for OpenAI, excluding other models like those from Anthropic.

- This limitation sparks conversations about potential workarounds or the need for more versatile logging tools.

- In Quest of Fireworks.ai Insights: A member sought information about a lecture related to or featuring fireworks.ai, but further details or clarity didn't surface.

- The lack of additional responses suggests a low level of communal knowledge or interest in the topic.

- Accounting for Credits: A Member's Inquiry: A question was raised on how to verify credit availability, with the member providing the account ID reneesyliu-571636 for assistance.

- It remained an isolated query, indicating either a resolved issue or an ongoing private discussion for the Account ID Query.

tinygrad (George Hotz) Discord

- NVDLA Versatility vs NV Accelerator: Queries arose regarding whether the NV accelerator is an all-encompassing solution for NVDLA, sparking an inquiry into the NVDLA project on GitHub.

- CuDLA investigation was mentioned as a potential next step, but confirmation of NV's capabilities was sought prior to deep diving.

- Kernel-Centric NV Runtime Insights: Exploration into NV runtime revealed that it operates closely with GPUs, bypassing userspace and engaging directly with the kernel for process execution.

- This information lends clarity on how the NV infrastructure interacts with the underlying hardware, bypassing traditional userspace constraints.

- Demystifying NN Graph UOps: A perplexing discovery was made analyzing UOps within a simple neural network graph, unearthing unexpected multiplications and additions involving constants.

- The conundrum was resolved when it was noted that these operations were a result of linear weight initialization, conceptualizing the numerical abnormalities.

Mozilla AI Discord

- Senate Scrutiny on AI and Privacy: A Senate hearing spotlighted U.S. Senator Maria Cantwell stressing the significance of AI in data privacy and the advocacy for federal privacy laws.

- Witness Udbhav Tiwari from Mozilla highlighted AI’s potential in online surveillance and profiling, urging for a legal framework to protect consumer privacy.

- Mozilla Advocates for AI Privacy Laws: Mozilla featured their stance in a blog post, with Udbhav Tiwari reinforcing the need for federal regulations at the Senate hearing.

- The post emphasized the critical need for legislative action and shared a visual of Tiwari during his testimony about safeguarding privacy in the age of AI.

MLOps @Chipro Discord

- Hugging Face Harmonizes Business and Models: An exclusive workshop, Demystifying Hugging Face Models & How to Leverage Them For Business Impact, is slated for July 30, 2024 at 12 PM ET.

- Unable to attend? Register here to snag the workshop materials post-event.

- Recsys Community Rises, Search/IR Dwindles: The Recsys community overshadows the search/IC community in size and activity, with the former growing and the latter described as more niche.

- Cohere recently acquired the sentence transformer team, with industry experts like Jo Bergum of Vespa and a member from Elastic joining the conversation.

- Omar Khattab Delivers Dynamic DSPy Dialogue: At DSPy, Omar Khattab, the MIT/Stanford scholar, shares his expertise on intricate topics.

- Khattab's discussion points resonate with the audience, emphasizing the technical depths of the domain.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!