[AINews] FineWeb: 15T Tokens, 12 years of CommonCrawl (deduped and filtered, you're welcome)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 4/19/2024-4/22/2024. We checked 6 subreddits and 364 Twitters and 27 Discords (395 channels, and 14973 messages) for you. Estimated reading time saved (at 200wpm): 1510 minutes.

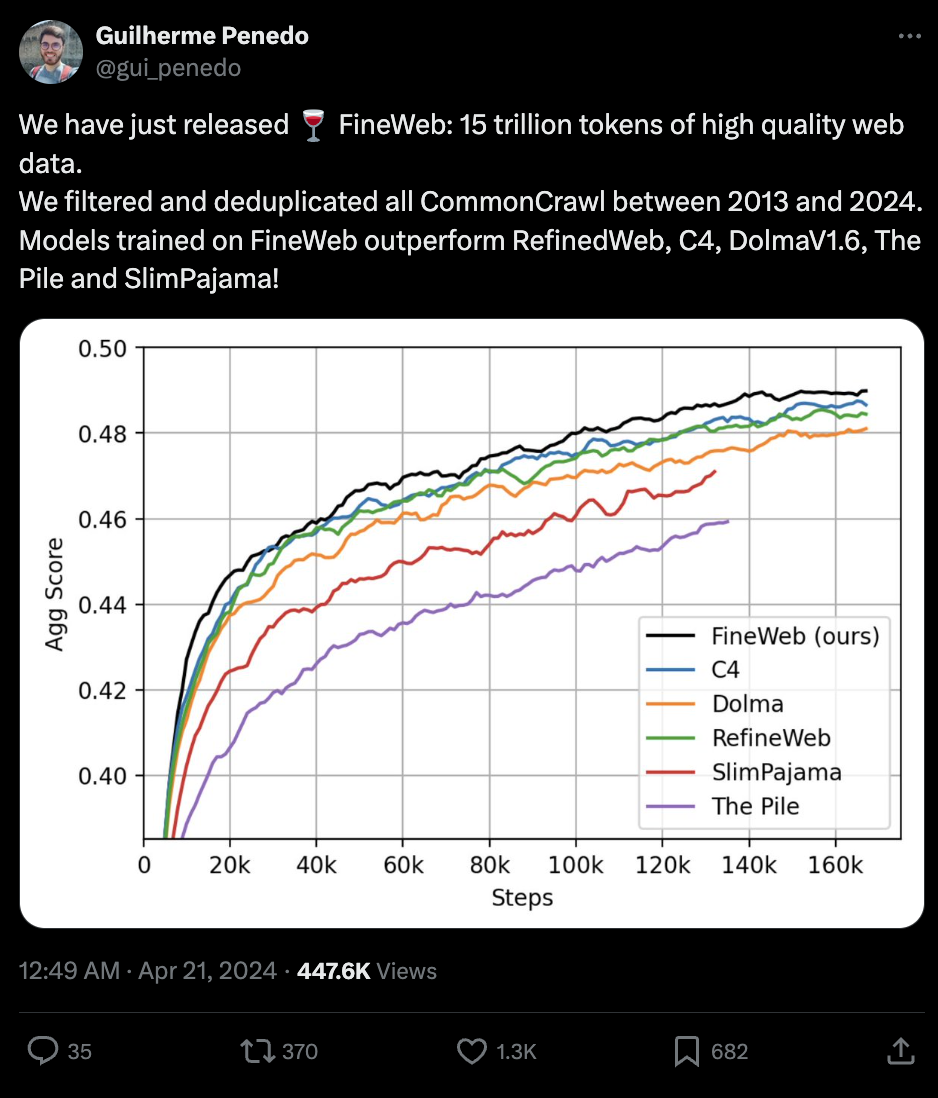

2024 seems to have broken some kind of "4 minute mile" with regard to datasets. Although Redpajama 2 offered up to 30T tokens, most 2023 LLMs were trained with up to 2.5T tokens - but then DBRX came out with 12T tokens, Reka Core/Flash/Edge with 5T tokens, Llama 3 with 15T tokens. And now Huggingface has released an open dataset of 12 years of filtered and deduplicated CommonCrawl data for a total of 15T tokens:

Notable that Guilherme was previously on the TII UAE Falcon 40B team, and was responsible for their RefinedWeb dataset.

One week after Llama 3's release, you now have the data to train yoru own Llama 3 if you had the compute and code.

Table of Contents

- AI Reddit Recap

- AI Twitter Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- Perplexity AI Discord

- Nous Research AI Discord

- LM Studio Discord

- Stability.ai (Stable Diffusion) Discord

- CUDA MODE Discord

- OpenAccess AI Collective (axolotl) Discord

- Eleuther Discord

- Modular (Mojo 🔥) Discord

- HuggingFace Discord

- OpenRouter (Alex Atallah) Discord

- Latent Space Discord

- LAION Discord

- OpenAI Discord

- LlamaIndex Discord

- OpenInterpreter Discord

- Cohere Discord

- tinygrad (George Hotz) Discord

- DiscoResearch Discord

- LangChain AI Discord

- Mozilla AI Discord

- Interconnects (Nathan Lambert) Discord

- LLM Perf Enthusiasts AI Discord

- Skunkworks AI Discord

- Datasette - LLM (@SimonW) Discord

- Alignment Lab AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/Singularity. Comment crawling works now but has lots to improve!

AI Models and Capabilities

- WizardLM-2-8x22b performance: In /r/LocalLLaMA, WizardLM-2-8x22b outperformed other open LLMs like Llama-3-70b-instruct in reasoning, knowledge, and mathematics tests according to one user's benchmarks.

- Claude Opus code error spotting: In /r/LocalLLaMA, Claude Opus demonstrated impressive ability to spot code errors with 0-shot prompting, outperforming Llama 3 and other models on this task.

- Llama 3 zero-shot roleplay: Llama 3 showcased impressive zero-shot roleplay abilities in /r/LocalLLaMA.

Benchmarks and Leaderboards

- LMSYS chatbot leaderboard limitations: In /r/LocalLLaMA, concerns were raised that the LMSYS chatbot leaderboard is becoming less useful for evaluating true model capabilities as instruction-tuned models like Llama 3 are able to game the benchmark. More comprehensive benchmarks are needed.

- New RAG benchmark results: A new RAG benchmark was posted in /r/LocalLLaMA comparing Llama 3, CommandR, Mistral and others on complex question-answering from business documents. Llama 3 70B did not match GPT-4 level performance. Mistral 8x7B remained a strong all-round model.

Quantization and Performance

- Efficient Llama 3 quantized models: /r/LocalLLaMA noted that the Llama 3 quantized models by quantfactory on Huggingface are the most efficient options currently available.

- Llama 3 70B token generation limits: One user reported generating ~9600 tokens with Llama 3 70B q2_xs on a 3090 GPU setup before decoherence set in. Ideas were requested for extending coherence.

- AQLM quantization of Llama 3 8B: AQLM quantization of Llama 3 8B was shown to load in Transformers and text-generation-webui, with performance on par with the baseline in initial tests.

Censorship and Safety

- AI usage ban for sex offender: In /r/singularity, it was reported that a sex offender in the UK was banned from using AI tools after making indecent images of children, raising concerns from charities who want tech companies to prevent the generation of such content.

- GPT-4 exploit capabilities: GPT-4 can exploit real vulnerabilities by reading security advisories with an 87% success rate on 15 vulnerabilities, outperforming other LLMs and scanners, raising concerns that future LLMs could make exploits easier.

- AI-generated unsafe information: In /r/LocalLLaMA, there was discussion on whether AIs are capable of producing uniquely unsafe information not already widely known. Most examples seem to be basic overviews rather than truly sensitive knowledge.

Memes and Humor

- Various AI-generated memes and humorous content were shared, including a "warehouse robot collapsing after working 20 hours", the Mona Lisa singing Lady Gaga, and AI-generated comic dialogue highlighting current limitations.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Meta Llama 3 Release

- Model Details: @AIatMeta released Llama 3 models in 8B and 70B sizes, with a 400B+ model still in training. Llama 3 uses a 128K vocab tokenizer and 8K context window. It was trained on 15T tokens and fine-tuned with SFT, PPO, and DPO on 10M samples.

- Performance: @karpathy noted Llama 3 70B is approaching GPT-4 level performance on benchmarks like MMLU. The 8B model outperforms others like Mistral 7B. @DrJimFan highlighted it will be the first open-source model to reach GPT-4 level.

- Compute and Scaling: @karpathy estimated 1.3M A100 hours for 8B and 6.4M for 70B, with 400 TFLOPS throughput on a 24K GPU cluster. Models are severely undertrained relative to compute-optimal scaling ratios.

- Availability: Models are available on @huggingface, @togethercompute, @AWSCloud, @GoogleCloud, and more. 4-bit quantized versions allow running the 8B model on consumer hardware.

Reactions and Implications

- Open-Source AI Progress: Many highlighted this as a watershed moment for open-source AI surpassing closed models. @bindureddy and others predicted open models will match GPT-4 level capabilities in mere weeks.

- Commoditization of LLMs: @abacaj and others noted this will drive down costs as people optimize runtimes and distillation. Some speculated it may challenge OpenAI's business model.

- Finetuning and Applications: Many, including @maximelabonne and @rishdotblog, are already finetuning Llama 3 for coding, open-ended QA, and more. Expect a surge of powerful open models and applications to emerge.

Technical Discussions

- Instruction Finetuning: @Teknium1 argued Llama 3's performance refutes recent claims that finetuning cannot teach models new knowledge or capabilities.

- Overtraining and Scaling: @karpathy and others discussed how training models far beyond compute-optimal ratios yields powerful models at inference-efficient sizes, which may change best practices.

- Tokenizer and Data: @teortaxesTex noted the improved 128K tokenizer is significant for efficiency, especially for multilingual data. The high quality of training data was a key focus.

AI Discord Recap

A summary of Summaries of Summaries

- Llama 3 Takes Center Stage: Meta's release of Llama 3 has sparked significant discussion, with the 70B parameter model rivaling GPT-4 level performance (Tweet from Teknium) and the 8B version outperforming Claude 2 and Mistral. Unsloth AI has integrated Llama 3, promising 2x faster training and 60% less memory usage (GitHub Release). A beginner's guide video explains the model's transformer architecture.

- Tokenizer Troubles and Fine-Tuning Fixes: Fine-tuning Llama 3 has presented challenges, with a missing BOS token causing high loss and

grad_norm infduring training. A fix via a PR in the tokenizer configuration was shared. The model's vast tokenizer vocabulary sparked debates about efficiency and necessity.

- Inference Speed Breakthroughs: Llama 3 achieved 800 tokens per second on Groq Cloud (YouTube Video), and Unsloth users reported up to 60 tokens/s on AMD GPUs like the 7900XT. Discussions also highlighted Llama 3's sub-100ms time-to-first-byte on Groq for the 70B model.

- Evaluating and Comparing LLMs: Conversations compared Llama 3 to GPT-4, Claude, and other models, with Llama 3 70B not quite matching GPT-4 Turbo despite good lmsys scores. The release of the FineWeb dataset (Tweet from Guilherme Penedo) with 15 trillion tokens suggests potential to outperform existing datasets like RefinedWeb and The Pile.

- Emerging Tools and Frameworks: Several new tools and frameworks were discussed, including Hydra by Facebook Research for configuring complex applications, LiteLLM (Website) as a template for LLM projects, Prompt Mixer (Website) for collaborative prompt engineering, and WhyHow.AI's Knowledge Graph SDK (Medium Article) for schema-controlled automated knowledge graphs.

- Retrieval-Augmented Generation (RAG) Advancements: Developments in RAG were a hot topic, with a new benchmark proposed for evaluating RAG models (Tweet from Stella Biderman), a guide for building a RAG chatbot using Llama 3, and a tutorial on rental apartment search with LangChain's Self-Querying Retriever.

- Reinforcement Learning from Human Feedback (RLHF) Insights: A new paper titled "From $r$ to $Q^*$: Your Language Model is Secretly a Q-Function" compared traditional RLHF methods to Direct Preference Optimization (DPO), aligning theory with the standard RLHF approach and Bellman equation satisfaction.

- Optimizing Transformer Models: Techniques for optimizing transformer models were discussed, including approximating attention mechanisms to compress token length during inference (arXiv:2401.03462, arXiv:2401.06104), extending context lengths with methods like Activation Beacon and TOVA, and dynamically allocating FLOPs (arXiv:2404.02258).

- Ethical Considerations and Legal Implications: Conversations touched on the ethical implications of AI "jailbreaks" and their potential to induce unintended agent behaviors, as well as the legal risks associated with using tools like Nightshade that could conflict with the Computer Fraud and Abuse Act (CFAA).

- Collaborative Efforts and Community Engagement: Many channels fostered collaboration on projects like minbpe-rs (GitHub), a Rust port of minbpe, and an open-source matchmaking AI application using Cohere Command R+ (Tweet). Community members also shared learning resources, such as a course on fine-tuning LLMs and Eugene Yan's blog posts on evaluating LLMs.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

Llama 3 is the Talk of the Town: Unsloth AI's integration of Llama 3 has sparked discussions on its potential for 2x faster training and 60% less memory usage as detailed on their GitHub Release page. The community eagerly explores 4-bit models and the effects of quantization on model quality, highlighted by significant activity in experimenting with various Llama 3 variants, including those optimized for different languages and shared on platforms like Hugging Face.

Notebook Nudge: AI enthusiasts are encouraged to test Llama 3 via comprehensively prepared notebooks on Google Colab and Kaggle, making way for fine-tuning and experimentation across the board.

Solving Model Mysteries and Sharing Secrets: Candid exchanges revealed struggles and successes from fine-tuning and inferencing issues with LLaMA 3 models to hardware discussions about the NVIDIA Jetson Orin nano. Proposed fixes for looping responses and insights into effective CUDA utilization were shared, indicating a culture of collaborative problem-solving.

Sharing in Showcase: Achievements are on full display with instances such as a LinkedIn post revealing the finesse of fine-tuning Llama3 for Arabic, and the debut of the Swedish model 'bellman.' The Ghost 7B Alpha language model also got attention for its English and Vietnamese optimizations.

Ideas and Input in Suggestions: Dialogue in the #suggestions channel provided valuable takeaways, such as a need for tutorials on model merging and CUDA debugging and the potential for multi-GPU capabilities with Unsloth Studio. Adjustments to server welcome messages for better readability indicated a response to community feedback.

Perplexity AI Discord

- AI Models Take the Stand: Engineers are actively comparing the performance of AI models like Llama 3, Claude 3 Opus, GPT-4, and GPT-4 Turbo for tasks ranging from legal document analysis to coding. Some challenges were expressed about making Perplexity's AI restricted to a list of specific terms, and the queries per day are capped at 50 for Claude 3 Opus.

- Collaborative Growth: Community members are encouraged to support each other, as seen by a user seeking advice on securing mentorship and funding for AI development and getting no immediate responses about constrained API outputs. Resources like Y Combinator and internet-based learning platforms were recommended for learning and growth.

- Perplexity Hits the Spotlight: Perplexity AI gained attention with Nandan Nilekani's praise and a YouTube video detailing a meeting with Meta AI's Yann LeCun. Key discussions are being shared publicly to highlight diverse queries and the AI's expansive knowledge base, emphasizing the collective knowledge-sharing culture.

- API Usage Discussed: Engineers discussed Perplexity's API, highlighting the visibility of the usage counter and seeking clarity on the refresh rate of the API credits. There appears to be a need for real-time feedback on API quota consumption but no specific information about the refresh rate was provided.

- Unauthorized Use and Self-Hosting Solutions: There is an ongoing community discussion about the unauthorized use of API keys on Chinese platforms, the impact on service reliability, and trading accounts. Some members are leaning towards self-hosting as a reliable solution, with guides being shared on setting up Ollama Web UI.

Nous Research AI Discord

Puzzling Over Multi-GPU Context Inference: Members are evaluating how to conduct long context inference with models like Jamba using multiple GPUs, exploring tools such as DeepSpeed and Hugging Face's Accelerate without much luck, although vllm's tensor parallel solution seems promising, despite current lack of support for Jamba.

Beat-Dropping Dataset Announcements: A latent CIFAR100 dataset has been shared on Hugging Face, surprising community members with an approximate 19% accuracy using a simple FFN despite most latents not decoding accurately.

DeepMind Drops Penzai for Network Craft: Penzai, a JAX research toolkit for neural network innovation by DeepMind, has garnered attention, while an advanced research assistant and search engine offering trial premium access to models like Claude 3 Opus and GPT-4 Turbo at rubiks.ai seeks beta testers.

WorldSim's Feature-Rich Comeback: The relaunch of WorldSim includes features such as WorldClient and Mind Meld, with a new pay-as-you-go model for tokens, and a selection of models (Opus, Sonnet, Haiku) for different cost profiles.

Scrutinizing LLMs Across the Spectrum: Discussions on the slight margin in performance between Llama 3 8B and Mistral 7B, despite Llama's larger dataset, graced the forum. Meanwhile, evaluations of Llama 3 70B show more promise, and there are varied stances on the relevance of the term 'grokking', particularly in reference to LLMs.

LM Studio Discord

- Tackling GPU usage in LM Studio: Engineers reported LM Studio integrates additional GPUs into a larger VRAM pool, yet sometimes CUDA utilization remains high on a single GPU. MacOS users indicated that Metal might not adhere to GPU settings, affecting machine temperature.

- Faulty Model Searching Mechanism: Users experienced 503 and 500 errors when searching for and downloading models, likely linked to an ongoing outage with Hugging Face, affecting LM Studio's model search and downloading capabilities.

- LM Studio Configuration Queries and Tutorials: Confusion about configuring WizardLM 2 was addressed with assistance from the community, including a Reddit tutorial on fine-tuning token usage. Discussions also elaborated on the behavior of < Instruct > models versus Base versions and tackled infinite loop issues in Llama3.

- Exploring External Access and Multiple GPUs: Queries around hosting a locally running AI in LM Studio through a custom domain were made, and multi-GPU setups were discussed, raising points about power draw and technical configurations.

- In-Depth Discussions on Language Model Tokens: Technicians clarified the misconception that tokens align with syllables, explaining subword encodings. The dialogue also critiqued the typical 50,000 token training figure for language models, considering it in terms of performance and complexity balance.

- Diverse Hardware Compatibility and Setup: The compatibility of NVIDIA Jetson Orin with LM Studio was confirmed, while a GPU buying guide on Reddit was referenced for users looking to optimize their hardware setup for LM Studio.

- AMD ROCm Preview Shines with Llama 3: The LM Studio ROCm Preview 0.2.20 version now supports MetaAI's Llama 3, exclusively functioning with GGUFs from "lmstudio-community" and can be accessed on LM Studio ROCm site. The AMD GPUs, such as the 7900XT, displayed impressive token generation speeds of around 60 tokens/s. Compatibility and resource allocation with multiple graphics cards were hot topics, with some users managing to prioritize the desired AMD GPU for LM Studio use.

Stability.ai (Stable Diffusion) Discord

- New User Navigational Woes with Stable Diffusion: New users are hitting a wall with starting Stable Diffusion, even after following YouTube setup guides, with advice pointing towards interfaces like ComfyUI and Clipdrop's Stable Diffusion as entry points.

- Feeling Swamped by AI Progress: Members lament the breakneck speed of generative AI developments, particularly in Stable Diffusion tools and models.

- Tech Support Group Tackles Stable Diffusion: Users share solutions for locating saved Stable Diffusion training states in Kohya, with a focus on resuming from checkpoints and checking output folders for saved data.

- Digging into VRAM's Role in Image Creation: Queries about GPU upgrades for image generation led to discussions about multiple image generation capabilities with more VRAM and upgrading drivers post GPU swaps.

- Platforms for Unleashing AI Artistry: New community members inquired about tools for crafting AI-powered images and were directed to web interfaces and local services that integrate with Stable Diffusion, like bing image creator and platforms listed on Stability AI's website Core Models – Stability AI.

CUDA MODE Discord

- Kernel Performance and Memory Breakthroughs: A new kernel implementation significantly improved the 'matmul_backward_bias' kernel performance by approximately 4x, and a separate optimization helped reduce memory consumption by about 25%, from 14372MiB to 10774MiB. Discussions on dtype precision suggested using mixed precision to balance performance and memory usage while considering the reduction of operations from linear to logarithmic for efficiency.

- Navigating the Nuances of NVIDIA Libraries: Integration of cuDNN and cuBLAS functions are underway, with a PR for cuDNN Forward Attention and FP16 cuBLAS kernels in

dev/cudashowing significant speed gains. Members tackled the complexity of using these libraries for accurate training with mixed precision, and the potential of custom backward pass implementations to address gradient computation inefficiencies.

- Exploring Efficiency in Data Parallelism: The community evaluated different approaches to scaling multi-GPU support with NCCL, debating over single-thread multiple devices, multi-thread, or multi-process setups. The consensus leaned towards an MPI-like architecture that would support configurations beyond 8 GPUs and accommodate multi-host environments.

- Gradients and Quantization Quality in GPU Computing: An Effort algorithm aimed at adjusting calculations dynamically during LLM inference was introduced, targeting implementation in Triton or CUDA. Also, a discussion on 20% speed reduction with HQQ+ combined with LoRA indicated room for optimization, and a new fused

int4 / fp16triton kernel outperformed the defaulthqq.linearforward, presented in a GitHub pull request.

- Community Collaborations and Technical Support: The CUDA MODE community highlighted collaboration on problems including Colab session crash during backpropagation, handling grayscale image transformations in Triton kernels, and selecting suitable GPUs for building a machine learning system. Members offered high-level advice on managing memory when implementing a denseformer in JAX, and shared utility resources like

check_tensors_gpu_readyfor verifying contiguous data in memory.

- CUDA Learning Opportunities and Social Engagements: There was an announcement for CUDA-MODE Lecture 15: Cutlass, with ongoing CUDA lecture series to deepen understanding of CUDA programming. On an informal note, a physical meetup of some community members happened in Münster, Germany, playfully dubbed the "GPU capital."

- Incorporating Audio-Visual Resources: References to educational YouTube recording uploads for lectures, shared through channels like Google Drive, display the community's commitment to providing multiple learning modalities.

- Event Logistics and Moderator Management: A new "Moderator" role was introduced with capabilities to maintain order within the server, and coordination for event management was emphasized, suggesting a structured and well-managed community environment.

OpenAccess AI Collective (axolotl) Discord

BOS Token Issue Resolved for LLaMa-3: An important fix was addressed with LLaMa-3's fine-tuning process, as a missing BOS token was causing issues; this has been rectified with a PR in the tokenizer configuration.

Fine-Tunning LLaMa-3 Hits a Snag: While trying to fine-tune LLaMa-3, a user faced a mysterious RuntimeError, noting this issue did not occur with other models like Mistral and LLaMa-2.

Tokenizing Troubles: The LLaMa-3 tokenizer's extensive vocabulary sparked a debate about its necessity and efficiency, some favoring a streamlined approach, others defending its ability to encode large texts with fewer tokens.

VRAM Consumption Detailed for Large LLMs: A clear VRAM usage breakdown was provided for large LLMs, revealing logits and hidden states sizes up to "19.57GiB" and "20GiB" respectively, using a massive "81920 tokens" batch size.

Axolotl's Resources for Dataset Customization: A pointer was given to Axolotl's datasets documentation for those seeking to understand custom dataset structures, offering key examples and formatting for various training tasks.

Eleuther Discord

- Smartphone Smarts: LLMs on the Go: Enthusiasts report the Samsung S24 Ultra achieving 4.3 tok/s and the S23 Ultra hitting 2.2 tok/s when running quantized language models like Llama 3. Discussions on the practicality of this technology are informed by various links, including Pixel's AI integration and MediaPipe with TensorFlow Lite.

- The Internals of Self-Attention: Technical scrutiny has surfaced regarding the necessity for tokens in transformer models to attend to their own key-values. Proposals for experimental ablation to assess the effect on model performance set the ground for future research.

- A Spotlight on Hugging Face's Financial Viability: The guild ponders over Hugging Face's business model, particularly their large file hosting strategy, drawing comparisons to GitHub's model amidst questions about sustainable revenue streams.

- Quest for Improved Reasoning in LLMs: Amidst discussions on evaluating language model reasoning, the Chain of Thought approach seems dominant, yet the thirst for alternative reasoning benchmarks remains unquenched. The need for research beyond CoT is underscored by a paucity of deeper reasoning metrics.

- Optimizer Face-off: Seeking Tranquil Training: To tackle training instability, the adaptation of a Stable AdamW optimizer is suggested over the vanilla version with clipping. Gearheads discuss refined parameter tuning and gradient histogram analysis to refine their model training stability.

- Megalodon Claims Its Territory: Engineers debate the so-called superiority of Megalodon, Meta's new architecture excelling in handling longer contexts, though its universal acceptance and performance against other mechanisms remain to be validated through broader use and comparative analysis.

- Navigating the Task Vector Space: Exploration of 'task vectors' in AI reveals a method to alter pretrained model behavior 'on-the-fly', enabling dynamic knowledge specialization—a topic grounded by a recent paper.

- RAG Benchmarking Puzzles suggest a new frontier in benchmark development targeting RAG models synthesizing multifaceted information. Concerns include how models could be unfairly advantaged by training on datasets similar to benchmark content.

- Approximation Innovations to Shrink Inference Footprint: Discussing compression of token length via approximating attention mechanisms during inference unveils several strategies like Activation Beacon and TOVA, with the potential to change dynamic resource allocation.

- Transformer Context Expansion: The Final Frontier?: The possibility of significantly extending transformer model context lengths spurs interest, with discussions acknowledging that achieving context windows like 10 million tokens might transcend mere fine-tuning, suggesting a need for novel architectural breakthroughs.

- The Technical Tussling Over Chinchilla's Replication: A hot debate orbits the replication attempts of Chinchilla's study, focusing on rounding nuances and residual analysis to fine-tune model assessments, informed by engagements on Twitter and precision concerns raised over the original work.

- DeepMind's SAE Endeavors Unfold: Google DeepMind's latest forays prioritize Sparse Autoencoder (SAE) scaling and fundamental science, with the team sharing insights from infrastructure to steering vectors in posts on Twitter by Neel Nanda and on the AI Alignment Forum.

- Benchmarking Thirst in the Thunderdome: A Google Spreadsheet is floating around (MMLU - Alternative Prompts), filled with MMLU scores and begging comparison against known benchmarks, underscoring the community's competitive spirit.

- Contributor Seeks Guidance Swords for lm-evaluation-harness: A good Samaritan quests for aid in contributing to lm-evaluation harness, wrestling with outdated guides and the absence of certain test directories, underscoring the continuous evolution of the project and the need for current documentation.

Modular (Mojo 🔥) Discord

C++ Sneaks Past Python: Discussions revealed a performance advantage for C++ over Python/Mojo interfaces, linked to the bypass of Python runtime calls, potentially impacting inference times.

Frameworks Forge Ahead: Dialogues indicated a bright future for building Mojo frameworks, with anticipation for a time when Python frameworks can be utilized within Mojo, echoing the compatibility seen between JavaScript and TypeScript.

Performance Enigmas and Enhancements: A user reported that a Rust prefix sum computation was significantly slower than Mojo's, spawning a performance mystery. Meanwhile, a separate debate on introducing SIMD aliases in Mojo shows momentum toward refining the language's efficiency and syntax clarity.

Teaser Tweets Tantalize Techies: Modular released a series of teaser tweets suggesting a major announcement. While details remain scarce, anticipation is evident among followers awaiting the revelation.

Video Assistance Request Resonates: A member's request for likes and feedback on their AI evolution video not only seeks community support but also reflects the commitment to AI education and discourse even under tight timelines.

HuggingFace Discord

- Llama 3 Challenges Claude: Discussions indicated that Llama 3's 70b model is now on par with Claude Sonnet, and the 8b version surpasses Claude 2 and Mistral. The community engaged in active discourse around the comparative performance of various AI models and shared insights on API access for MistralAI/Mixtral-8x22B-Instruct-v0.1 for HF Pro users, showcasing the competitive landscape in AI model development.

- Hardware Headaches and Downtime Dilemmas: Hardware suitability for machine learning tasks was a topic of exchange, particularly the examination of an AMD RX 7600 XT against higher-end models and Nvidia's offerings. Meanwhile, operational disruptions were reported due to HuggingFace service outages, underscoring the dependency of projects on the stability and availability of these AI platforms.

- AI at Warp Speed on Groq Cloud: Llama 3 achieved 800 tokens per second on Groq Cloud, as detailed in a YouTube video. Additionally, the significance of tokenizers for language model data preparation was a point of study and discussion, further evidencing the focus on performance optimization and foundational machine learning aspects within the community.

- Trailblazing with RAG and Vision Tools: Developers showcased their creations including a RAG system chatbot incorporating Llama 3 and multiple innovative uses of Hugging Face Spaces. In the domain of computer vision, the open-source OCR tool Nougat and improvements in shuttlecock tracking using TrackNetV3 were noted, reflecting a strong inclination towards open-source contributions and advancements in AI capabilities.

- NLP Nuggets and Diffusion Discussions: In the NLP field, a member addressed fine-tuning difficulties with the PHI-2 model and a new Rust port of

minbpewas announced, attracting community collaboration. Conversations in the diffusion model domain tackled the potential use of Lora training for inpainting consistency, while another member sought help with vespa model downloads, highlighting a collaborative atmosphere for problem-solving and expertise sharing.

OpenRouter (Alex Atallah) Discord

- New LLMs on the Block: The latest Nitro-powered Llama models are now available on OpenRouter, promising performance enhancements for AI engineers, accessible here. OpenRouter's freshly faced challenges with Wizard 8x22b highlight the demand-induced pressure, bearing in mind that performance increments for non-stream requests are evolving due to recent load balancer updates.

- Streamlining Services and Errant URLs: OpenRouter has rerouted users to the standard DBRX 132B Instruct model following the delisting of its nitro variant, ensuring engineers can continue their work with available models. Additionally, a previously misleading URL within the #app-showcase channel has been corrected, reinforcing the need for vigilance in documentation accuracy.

- Praise Connects Platforms: KeyWords AI expressed commendation towards OpenRouter's model updates, enabling them to enhance their feature set for developers. These collaborative efforts underline the interconnected nature of AI tools and platforms, fostering an environment where utility and innovation go hand-in-hand.

- Challenging LLM Performance Norms: Conversations converged on the limitations and potential of multilingual support in models like LLaMA-3 wherein community members look forward to improvements in language diversity. Discrepancies in performance and curation from host updates were acknowledged, with an eye on persistent access to high-quality LLMs, an essential for engineers invested in developing adaptable AI experiences.

- Roleplay and Creativity in AI: The AI community is showing zest for specialized models like Soliloquy-L3, which promises enhanced capabilities for roleplay with support for extended contexts. This window into the collective's pursuits sheds light on the inherent desire for models that surpass the traditional confines of creative AI applications.

Latent Space Discord

- Llama 3 Faces Off GPT-4: Llama 3 has sparked discussions among users, with some arguing that even though it scores well on lmsys, it does not quite match up to GPT-4 Turbo's performance. Exceptional inference speeds were noted on Groq Cloud for Llama-3 70b, clocking in under 100ms.

- Evaluating and Fine-Tuning AI: Practitioners are employing tools like Hydra by Facebook Research for fine-tuning applications, even as some find its documentation lacking. Furthermore, a new methodology for LLM Evaluation was presented via Google Slides, influencing the conversation around practical model evaluation strategies.

- Data Sets and Tools to Watch: The unveiling of FineWeb, a massive data set with 15 trillion tokens, has generated interest due to its potential to surpass the performance of datasets like RefinedWeb and The Pile. Additionally, litellm was highlighted as a useful template for LLM projects to streamline interactions with various models.

- Deep Dive into LLM Paper: The paper club's fascination with "Improving Language Understanding by Generative Pre-Training" points to its ongoing relevance in the field. Attendees valued the session enough to call for recording it for wider access on platforms like YouTube, illustrating the community's commitment to shared learning.

- Podcast Fever Hits Latent Space: Anticipation is high for the latest Latent Space Podcast episode featuring Jason Liu, affirming the guild's appetite for thought leadership and industry insights, which can be found in the recent Twitter announcement.

LAION Discord

Meta's Mystery Moves: Debate ignited over Meta's unusual practice of restraining LLaMA-3 paper release, signaling a potential shift in their framework for model releases, yet no reason for this divergence was cited.

Ethics and Legality in AI Tooling: The group scrutinized the legal and ethical considerations surrounding Nightshade, mentioning its potential conflict with the Computer Fraud and Abuse Act (CFAA), due to its AI training intervention capabilities.

Boosting Diffusion Model Speed: Research by NVIDIA, University of Toronto, and the Vector Institute introduced "Align Your Steps," an approach to accelerate diffusion models, discussed in their publication, yet a call for the training code release was noted for complete transparency.

Benchmarking Visual Perception in LLMs: A new benchmark named Blink was introduced for evaluating multimodal language models; it particularly measures visual perception, where models like GPT-4V show a gap when compared to human performance. The Blink benchmark is detailed in the research abstract.

Collaborative Development for NLP Coding Assistant: Interest was shown in developing an NLP coding assistant for JavaScript/Rust, with calls for collaboration and knowledge-sharing, suggesting an ongoing pursuit for improved automation tools among engineers.

OpenAI Discord

- AI Model Mashup Mayhem: Engineers are testing various AI combinations, linking Claude 3 Opus with GPT-4 and integrating LLama 3 70B via Groq, though they face mixed results and access issues. Discussions are exploring the theoretical application of convolutional layers (Hyena) and LoRa in large language models to refine fine-tuning approaches.

- Groq's Free AI Might: The Groq Cloud API's free offering is thrust into the limelight with recommendations highlighting LLaMa 3 as a superior model. The community is utilizing this resource for ventures in AI creativity, such as chat-based roleplaying bots capable of writing Python.

- Digital Athenian Dreams Clash With AI Sentience Debate: Visions for a 'digital Athens' meet deep contemplation on AI consciousness, with the community engaging in discussions around future societal structures reliant on AI and philosophical debates on the nature of sentience.

- Prompt Engineering Conundrums: A challenge arises in prompt engineering, where a member struggles to extract precise text from JSON fields, prompting a move toward code interpretation methods. Additionally, ethical concerns surface over sharing sensitive prompts, leading to contemplation on the ethics of prompt engineering.

- Academic AI Quest: An academic in quest of substantial resources for their thesis on AI and generative algorithms receives directions toward OpenAI's research papers, marking a quest for deepened understanding in academic circles.

LlamaIndex Discord

LlamaParse Automates Code Mastery: A collaboration with TechWithTimm enables setup of local Large Language Models (LLMs) using LlamaParse to construct agents capable of writing code; details and a workflow glimpse are on Twitter.

Local RAG Goes Live: Instructions for crafting a RAG application entirely locally using MetaAI's Llama-3 can be found alongside an informative Twitter post, highlighting the move towards self-hosted AI applications.

Tackling AI's Enigma 'Infini Attention': An explainer on Infini Attention’s potential impact on generative AI was introduced along with an insights-rich LinkedIn post.

Geographical AI Data Visualization: The AI Raise Tracking Sheet now includes and displays AI funding by city, inviting community scrutiny via this Google spreadsheet; a celebratory tweet emphasizes the geographical spread of AI companies over the past year.

Enhanced Markdown for LLMs and Knowledge Graph SDK: FireCrawl’s integration with LlamaIndex beefs up LLMs with markdown capabilities, while WhyHow.AI's Knowledge Graph SDK now facilitates building schema-controlled automated graphs; further exploration in respective Medium articles and here.

OpenInterpreter Discord

Fine-Tuning AI with Lightning Speed: Engineers in the guild have been experimenting with quick-learning models such as Mixtral and Llama, noting the small dataset sizes needed for efficient fine-tuning.

Groq's Rocking Performance with Llama3: The Llama3 model shows impressive speed on Groq hardware, sparking interest for its use in practical applications, with discussion on GitHub pinpointing installation bugs specific to OI on Windows.

Bug Hunts and Workarounds in AI Tools: The community discussed various bugs, such as the spacebar issue on M1 Macbooks with O1 and performance issues with Llama 3 70b. Recommended fixes included installing ffmpeg and using conda for alternate Python versions.

Windows Woes and Macbook Mistakes: Issues running Open Interpreter's O1 on Windows signal possible client problems, and voice recognition glitches on M1 Macbooks are causing disruptions when the spacebar is pressed.

Confusions Clarified and Stability Scrutinized: Clarification was made on O1 versus Open Interpret compatibility with Groq. Stability concerns were raised for Llama 3 70b models, suggesting that larger models may have greater instability issues compared to their smaller counterparts.

Cohere Discord

MySQL Connector Confusion Cleared: Integration of MySQL with Cohere LLMs sparked questions regarding the use of Docker and direct database answers. A GitHub repository clarifies reference code, despite issue reports about outdated documentation and malfunctioning create_connector commands.

No Command R for Profit: It was clarified that Command R (and Command R+) is restricted to non-commercial use under the CC-BY-NC 4.0 license, barring usage on edge devices for commercial purposes.

AI Startup Talent Call: An AI startup founder is actively seeking experts with a strong background in AI research and LLMs to assist with model tuning and voice models. Interested candidates are encouraged to connect via LinkedIn.

Alternative Routes after Internship Setback: Advice was shared for pursuing ML/software engineering roles post-internship rejection at Cohere, which included tapping into university networks, seeking companies with non-public intern opportunities, contributing to open-source initiatives, and attending job fairs.

AI Ethical Dilemmas and Tech Updates: Discussions included concerns over the ethical implications of AI "jailbreaks" and their potential to induce unintended agent behaviors, an open-source matchmaking AI application using @cohere Command R+, and the launch of Prompt Mixer, a new IDE for creating and evaluating prompts, available at www.promptmixer.dev.

tinygrad (George Hotz) Discord

- GPU Acceleration Achievements: An engineer successfully ran hardware support architecture (HSA) on a laptop's Vega iGPU using a HIP compiler and OpenCL, potentially with Rusticl. This supports the trend towards local, user-controlled AI environments as opposed to remote cloud dependencies.

- Mastering Model Precision: Users are troubleshooting precision issues with the

einsumoperation in tinygrad, encountering underflows to NaN values. They discussed whetherTensor.numpy()should cast to float64 for stability and the impacts on model porting from frameworks like PyTorch.

- Cloudy with a Chance of tinygrad: There's an ongoing debate on whether tinygrad might pivot towards a cloud service, amid broader industry shifts. However, the community expressed a strong preference for maintaining tinygrad as an empowering tool for individuals over reliance on cloud services.

- Make Error Messages Great Again: There's a push for improving error messages in tinygrad, especially regarding GPU driver mismatches and CUDA version conflicts. While this is hampered by the limitations of the CUDA API's specificity, it's an area of potential improvement for developer experience.

- George Hotz Sets the Agenda: George Hotz signaled upcoming discussions on MLPerf progress, KFD/NVIDIA drivers, new NVIDIA CI, documentation, scheduler improvements, and a robust debate on maintaining a 7500 line count limit in the codebase. He encourages general attendance at the meeting, with speaking privileges for select participants.

DiscoResearch Discord

- Stirring the Mixtral Pot: A discussion on Mixtral training highlighted the use of the "router_aux_loss_coef" parameter. Adjusting its value could significantly influence training success.

- Boosting Babel for Czech: Work on expanding Czech language support by adding thousands of tokens is underway, indicating that language inclusivity is a priority. The community referenced the Occiglot project as a relevant initiative in this sphere.

- German Precision in AI Models: Various concerns arose regarding German language proficiency across different models. Members tested the Llama3 and Mixtral models for German, noting issues with grammar and tokenizer quirks, and mentioned the private nature of a new variant pending further testing.

- Memory Overhead Matters More Than Tokens: It's clarified that reducing vocab tokens doesn't enhance inference speed; instead, it's the memory footprint that sees the impact.

- Chatbots Lean Towards Efficiency: Integrating economically viable chatbots into CRMs is being explored, with suggestions to group functions and possibly employ diverse model types for different tasks. There's an interest in having supportive libraries like langchain to facilitate this.

LangChain AI Discord

LangChain's Endpoint Elusiveness: Engineers sought guidance on locating their LangChain endpoint, a key aspect for engaging with its capabilities, with additional observations on inconsistent latencies in firefunction across various devices.

Pirate-Speak Swagger Lost at Sea: A lone message washed ashore in the #langchain-templates channel in quest of the elusive FstAPI route code for pirate-speak, lacking further engagement or treasure maps to its whereabouts.

Community Creations Cruising the High Seas: Innovators hoisted their colors high, presenting diverse projects like Trip-Planner Bot, LLM Scraper, and AllMind AI. Resources ranged from GitHub repositories for bots and scrapers to soliciting broadsides (support) on Product Hunt for AI stock analysts.

Deciphering the Query Scrolls: An AI sage shed light on the process of refining natural language queries into structured ones using Self-querying retrievers, documenting their wisdom in Rental Apartment Search with LangChain Self-Querying Retriever.

Knowledge Graph Armada Upgrade: WhyHow.AI charted a course toward enriched knowledge graphs with upgraded SDKs, beckoning brave pioneers to join the Beta via a Medium article and add wind to the sails of schema-controlled automatons.

Mozilla AI Discord

- Instruct Format Strikes Back: The community is wrestling with compatibility issues in the llama3 instruct format, as it uses a different set of tokens that are not recognized by

llamafileand thellama.cpp server bin. These issues are highlighted on the LocalLLaMA subreddit and remain a point of discussion.

- Committing to Better Conversations: An update is in pipeline for

llama.cppto include the llama 3 chat template, indicating a stride towards enhancing user interaction with the models. This contribution is currently under review, with the pull request available here.

- Quantized Model, Qualitative Leap: The introduction of the llama 3 8B quantized version has sparked interests, with a promise to release it on llamafile within a day, along with a testing link on Hugging Face.

- Navigating the 70B Seas: Encouragement flourishes among members to participate in testing the llama 3 70B model, as it's now accessible though still slightly buggy, specifically mentioning a "broken stop token." They're looking to smooth out these wrinkles with community testing efforts before a broader roll-out.

- Performance Patchwork: Technical exchanges occurred over the execution of llamafiles across various systems, indicating that llama 3 70B excels in front of its 8B counterpart, especially on specific systems like the M1 Pro 32GB, where the Q2 quantization level doesn't match expectations. Improvements and adaptability continue to be focal points of discussion.

Interconnects (Nathan Lambert) Discord

- Scaling Ambitions: Engineers are looking forward to the upcoming release of new 100M, 500M, 1B, and 3B model sizes that will replace the current pythia suite, which are trained on approximately 5 trillion tokens and promise to advance the state of model offerings.

- Benchmarking Evolves: Conversations highlighted the Reinforcement Learning From Human Feedback paper which compares traditional RLHF to Direct Preference Optimization and aligns theoretical foundations with pragmatic RLHF approaches, including the Bellman equation satisfaction.

- Evaluations Under the Microscope: The community is debating the effectiveness of automated evaluations like MMLU and BIGBench versus human-led evaluations such as ChatBotArena, and is seeking clarity on the applicability of perplexity-based benchmarks for model training versus completed models.

- Community Engagement and Feedback: Efforts are underway to increase Discord participation from an ample pool of over 13,000 subscribers, with strategies such as making community access "obvious" and quarterly shoutouts. Meanwhile, valuable input came from a member sharing their Typefully analysis and seeking feedback prior to finalization.

- The Wait for Wisdom: A sense of anticipation is palpable within the community for a forthcoming recording, expected to be released within 1-2 weeks, reflecting high demand for shared knowledge and updates on progress.

LLM Perf Enthusiasts AI Discord

- Llama 3 Knocks Out Opus with Less Muscle: Llama 3 impresses with superior performance in the arena, despite being a model of 70 billion parameters, suggesting that size isn't the sole factor in AI effectiveness.

- Performance Metrics Cannot Ignore Error Bounds: A discussion emphasized the importance of taking error bounds into account when evaluating AI model performances, implying that comparisons are more nuanced than raw numbers.

- Meta's Imagine Gets a Standing Ovation: Meta.ai's Imagine platform received acclaim for its capabilities, with participants in the conversation eager to see examples that demonstrate why it's considered insane.

- Azure's Slow-Mo Service Test: Engineers are facing challenges with Azure's OpenAI due to high latency issues, with some requests taking up to 20 minutes, which can be detrimental to time-sensitive applications.

- Being Rate Limited or Just Unlucky?: Repeated rate limiting on Azure instances, where even 2 requests within 15 seconds can trigger limits, led to engineers implementing a backoff strategy to manage API call rates.

Skunkworks AI Discord

- Databricks Amps Up Model Serving: Databricks rolled out a public preview of GPU and LLM optimization support to deploy AI models with serverless GPUs, optimized for large language models (LLMs) without the need for extra configuration.

- Fine-Tuning LLMs Gets a Playbook: An operational guide on fine-tuning pretrained LLMs has been contributed, recommending optimizations such as LoRA adapters and DeepSpeed, and can be accessed through Modal's fine-tuning documentation.

- Economizing Serverless Deployments: A Github repository provides cheap serverless hosting options, showcasing an example setup of an LLM frontend which engineers can implement via this GitHub link.

- Community Engagement with Resources: A guild member expressed appreciation for the shared serverless inference documentation, confirming its utility for their purposes.

- Budget Beware with New Tech: Some members anticipate the optimized features by Databricks may bear a substantial cost, with humorous apprehensions about affordability.

Datasette - LLM (@SimonW) Discord

Blueprint AI Know-How Wanted: An engineer has expressed interest in AI models to analyze blueprints for ductwork in PDF plans, indicating a practical use-case for image recognition within construction.

AI Previews Before Building: The engineering community discussed the emergence of AI as a preflight check in architecture firms to spot issues and code violations before building, though it has yet to permeate the blueprint design process.

Llama 3 Lands on Laptops: SimonW has updated the llm-gpt4all plugin to support Llama 3 8B Instruct on systems with just 8GB of RAM, a boon for users with devices like the M2 MacBook Pro.

Plugin Ready for Install: Version 0.4 of the llm-gpt4all plugin is now available, enabling the interaction with new models like Llama 3 8B Instruct, as detailed in the latest GitHub release.

Diving Deep with Llama 3: SimonW has provided a comprehensive look at the capabilities of Llama 3, characterized as the leading openly licensed model, via a detailed blog post.

Alignment Lab AI Discord

- LLAMA 3 Explained for AI Newbies: LLAMA 3 model's transformer architecture is broken down in a Beginner’s Guide on YouTube, which targets newcomers to the machine learning field seeking to understand this advanced model. The guide emphasizes the model's capabilities and its role in modern AI development.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (1039 messages🔥🔥🔥):

- Unsloth AI Development Discussion: The conversation included discussions on various technologies and strategies related to fine-tuning, pretraining, and utilizing Unsloth AI for different applications, with members sharing their experiences with training models.

- Concerns Regarding Llama Models and Notebook Sharing: Users expressed concerns about individuals selling or monetizing the open-source notebooks provided by Unsloth AI and discussed the ethics of these actions.

- YouTube Content Creators on AI Topics: There was a healthy debate about various YouTube channels that focus on AI, with recommendations for channels that cover AI research papers and engage in meaningful discussions.

- Technical Issues and GPU Usage: Members encountered technical difficulties with Hugging Face being down and discussed strategies for GPU utilization for training large models and the potential use of Unsloth AI to enhance context lengths.

- Community Support and Learning Journeys: There was a sharing of links and resources for learning about AI, and a member expressed gratitude for community support. Conversations also delved into personal journeys of learning and working with AI, emphasizing the swift pace of development in the field.

- Twitch: no description found

- no title found: no description found

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- imone/Llama-3-8B-fixed-special-embedding · Hugging Face: no description found

- Google Colaboratory: no description found

- chargoddard/llama3-42b-v0 · Hugging Face: no description found

- Training Compute-Optimal Large Language Models: We investigate the optimal model size and number of tokens for training a transformer language model under a given compute budget. We find that current large language models are significantly undertra...

- Kaggle Llama-3 8b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Unsloth - 4x longer context windows & 1.7x larger batch sizes: Unsloth now supports finetuning of LLMs with very long context windows, up to 228K (Hugging Face + Flash Attention 2 does 58K so 4x longer) on H100 and 56K (HF + FA2 does 14K) on RTX 4090. We managed...

- Practical Deep Learning for Coders - Practical Deep Learning: A free course designed for people with some coding experience, who want to learn how to apply deep learning and machine learning to practical problems.

- Build a robust text-to-SQL solution generating complex queries, self-correcting, and querying diverse data sources | Amazon Web Services: Structured Query Language (SQL) is a complex language that requires an understanding of databases and metadata. Today, generative AI can enable people without SQL knowledge. This generative AI task is...

- unsloth/unsloth/tokenizer_utils.py at main · unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- unsloth/unsloth/tokenizer_utils.py at main · unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- "okay, but I want Llama 3 for my specific use case" - Here's how: If you want a personalized AI strategy to future-proof yourself and your business, join my community: https://www.skool.com/new-societyFollow me on Twitter -...

- profiling-cuda-in-torch/ncu_logs at main · cuda-mode/profiling-cuda-in-torch: Contribute to cuda-mode/profiling-cuda-in-torch development by creating an account on GitHub.

- Add support for loading checkpoints with newly added tokens. by charlesCXK · Pull Request #272 · unslothai/unsloth: no description found

- GitHub - aulukelvin/LoRA_E5: Contribute to aulukelvin/LoRA_E5 development by creating an account on GitHub.

- GitHub - oKatanaaa/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to oKatanaaa/unsloth development by creating an account on GitHub.

- Direct Preference Optimization (DPO): Get the Dataset: https://huggingface.co/datasets/Trelis/hh-rlhf-dpoGet the DPO Script + Dataset: https://buy.stripe.com/cN2cNyg8t0zp2gobJoGet the full Advanc...

- hu-po: Livestreams on ML papers, Coding, Research Available for consulting and contract work. ⌨️ GitHub https://github.com/hu-po 💬 Discord https://discord.gg/pPAFwndTJd 📸 Instagram http://instagram.com...

- Yannic Kilcher: I make videos about machine learning research papers, programming, and issues of the AI community, and the broader impact of AI in society. Twitter: https://twitter.com/ykilcher Discord: https://ykil...

- Umar Jamil: I'm a Machine Learning Engineer from Milan, Italy currently living in China, teaching complex deep learning and machine learning concepts to my cat, 奥利奥. 我也会一点中文.

- code_your_own_AI: Explains new tech. Code new Artificial Intelligence (AI) models with @code4AI - where complex AI concepts are demystified with clarity grounded in theoretical physics. Delve into the latest advancemen...

- Hugging Face status : no description found

- main : add Self-Extend support by ggerganov · Pull Request #4815 · ggerganov/llama.cpp: continuation of #4810 Adding support for context extension to main based on this work: https://arxiv.org/pdf/2401.01325.pdf Did some basic fact extraction tests with ~8k context and base LLaMA 7B v...

Unsloth AI (Daniel Han) ▷ #announcements (1 messages):

- Llama 3 Enhances Unsloth Training: Unsloth AI announces Llama 3's integration, heralding a 2x speed increase in training and 60% reduction in memory usage. Detailed information and release notes are available on their GitHub Release page.

- Explore Llama 3 with Free Notebooks: Users are invited to test out Llama 3 using provided free notebooks on Google Colab and Kaggle, with support for both 8B and 70B Llama 3 models.

- Discover 4-bit Llama-3 Models: For those interested in more efficient model sizes, Unsloth AI shares links to Llama-3 8B, 4bit bnb and Llama-3 70B, 4bit bnb on Hugging Face, alongside other model variants like Instruct on their Hugging Face page.

- Invitation to Experiment with Llama 3: The Unsloth AI team encourages the community to share, test, and discuss their models and results with the newly released Llama 3.

Link mentioned: Google Colaboratory: no description found

Unsloth AI (Daniel Han) ▷ #random (99 messages🔥🔥):

- Llama 3 Model Release and Resources: Unsloth AI released Llama 3 70B INSTRUCT 4bit, facilitating faster fine-tuning of Mistral, Gemma, and Llama models with significantly less memory usage. A Google Colab notebook for Llama-3 8B is provided for community use.

- Tutorials on the Horizon: In response to a request for guidance on finetuning instruct models, Unsloth AI confirmed that they are planning to release explanatory tutorials and a potentially helpful notebook soon.

- Coders in Confession: Members shared lighthearted anecdotes about the perplexing nature of coding—mentioning instances of creating functions without fully grasping their inner workings, and seeking advice on displaying output stats for a program generating character conversations.

- PyTorch and CUDA Education Resource: Participants shared valuable resources for learning about PyTorch and CUDA, including the CUDA Mode YouTube channel for lectures and a recommendation to follow Edward Yang's PyTorch dev Twitch streams.

- Efficiency Versus Performance in LLM Training: Discussions about whether to use models like Llama 3 or Gemma versus GPT-4 for tasks centered on the need for a balance between computing resource efficiency and desired performance levels. The community indicates keeping infrastructure costs low is a motivating factor, even if it means settling for smaller models.

- no title found: no description found

- unsloth/llama-3-70b-Instruct-bnb-4bit · Hugging Face: no description found

- Q*: Like 👍. Comment 💬. Subscribe 🟥.🏘 Discord: https://discord.gg/pPAFwndTJdhttps://github.com/hu-po/docsFrom r to Q∗: Your Language Model is Secretly a Q-Fun...

- CUDA MODE: A CUDA reading group and community https://discord.gg/cudamode Supplementary content here https://github.com/cuda-mode Created by Mark Saroufim and Andreas Köpf

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

Unsloth AI (Daniel Han) ▷ #help (823 messages🔥🔥🔥):

- Inference Issues and Fixes: Multiple users reported issues with looped response generation when inferencing LLaMA 3 models. Fixes include applying

StoppingCriteriaand usingeos_token, but inconsistencies remain across platforms like Ollama vs. llama.cpp. - Quantization Quandaries: While quantizing LLaMA 3 to GGUF, one user found a significant drop in quality (wrong sentences, spelling errors) when running the model on Ollama.

- Training Tips and Tricks: There was an exchange on whether using 4-bit Unsloth models could lead to faster training iterations, with responses highlighting compute optimization but potential memory bandwidth limitations.

- Token Troubles: Users are confused about eos_token settings, and how they affect model responses. A solution shared by Daniel involves setting eos_token to ensure proper termination of responses.

- Hardware Highlights: A discussion about the new NVIDIA Jetson Orin nano and its ability to run large language models efficiently, even surpassing the performance of some personal computers.

- no title found: no description found

- OrpoLlama-3-8B - a Hugging Face Space by mlabonne: no description found

- Fine-tuning | How-to guides: Full parameter fine-tuning is a method that fine-tunes all the parameters of all the layers of the pre-trained model.

- G-reen/EXPERIMENT-ORPO-m7b2-1-merged · Hugging Face: no description found

- Love Actually Christmas GIF - Love Actually Christmas Christmas Movie - Discover & Share GIFs: Click to view the GIF

- Tomeu Vizoso's Open Source NPU Driver Project Does Away with the Rockchip RK3588's Binary Blob: Anyone with a Rockchip RK3588 and a machine-learning workload now has an alternative to the binary-blob driver, thanks to Vizoso's efforts.

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Carson Wcth GIF - Carson WCTH Happens To The Best Of Us - Discover & Share GIFs: Click to view the GIF

- config.json · Finnish-NLP/llama-3b-finnish-v2 at main: no description found

- Atom Real Steel GIF - Atom Real Steel Movie - Discover & Share GIFs: Click to view the GIF

- Issues · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- unslo: GitHub is where unslo builds software.

- unsloth_finetuning/src/finetune.py at main · M-Chimiste/unsloth_finetuning: Contribute to M-Chimiste/unsloth_finetuning development by creating an account on GitHub.

- save_pretrained_gguf method RuntimeError: Unsloth: Quantization failed .... · Issue #356 · unslothai/unsloth: /usr/local/lib/python3.10/dist-packages/unsloth/save.py in save_to_gguf(model_type, model_directory, quantization_method, first_conversion, _run_installer) 955 ) 956 else: --> 957 raise RuntimeErro...

- I got unsloth running in native windows. · Issue #210 · unslothai/unsloth: I got unsloth running in native windows, (no wsl). You need visual studio 2022 c++ compiler, triton, and deepspeed. I have a full tutorial on installing it, I would write it all here but I’m on mob...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - sgl-project/sglang: SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with models faster and more controllable.: SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with models faster and more controllable. - sgl-project/sglang

- Reddit - Dive into anything: no description found

- Trainer: no description found

- teknium/OpenHermes-2.5 · Datasets at Hugging Face: no description found

- Index of /: no description found

- Hugging Face status : no description found

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- llama3 family support · Issue #6747 · ggerganov/llama.cpp: llama3 released would be happy to use with llama.cpp https://huggingface.co/collections/meta-llama/meta-llama-3-66214712577ca38149ebb2b6 https://github.com/meta-llama/llama3

Unsloth AI (Daniel Han) ▷ #showcase (54 messages🔥):

- Llama3 Fine-Tuning Success: A member shared their successful experience fine-tuning Llama3 for Arabic using the Unsloth Notebook and provided a LinkedIn post showcasing the results. The member mentioned that no modifications were made to the tokenizer as Llama3's tokenizer already understood Arabic well.

- Mistral-Based Swedish Model Preview: Another member presented their newly created Swedish language model based on Llama 3 Instruct named 'bellman', with the training process documented. For interested parties, a HuggingFace link and a model card were provided, alongside an invitation for feedback and specific version requests.

- New Language Models on the Block: Excitement surrounded the release of Ghost 7B Alpha language model, focusing on reasoning and multi-task knowledge with tool support and featuring two main optimized languages: English and Vietnamese. Members appreciated the work, especially the accompanying website and demo.

- Solving GGUf Conversion and Generation Challenges: Members exchanged tips on successfully training and converting models with Unsloth, including setting the correct end-of-sentence token and template formatting. They shared technical snippets around using convert.py, adjusting tokenizer settings, and resolving infinite loop generation issues—leading to a functional Polish model using Llama3.

- Unveiling MasherAI's New Iteration: A member announced the release of MasherAI 7B v6.1, trained using Unsloth and Huggingface's TRL library with an Apache 2.0 license. The model is showcased on HuggingFace and already downloaded multiple times, indicating eagerness among the community to utilize the new generation model.

- neph1/llama-3-instruct-bellman-8b-swe-preview · Hugging Face: no description found

- mahiatlinux/MasherAI-7B-v6.1 · Hugging Face: no description found

- ghost-x/ghost-7b-alpha · Hugging Face: no description found

- Ghost 7B Alpha: The large generation of language models focuses on optimizing excellent reasoning, multi-task knowledge, and tools support.

- Playground with Ghost 7B Alpha: To make it easy for everyone to quickly experience the Ghost 7B Alpha model through platforms like Google Colab and Kaggle. We've made these notebooks available so you can get started right away.

- Support Llama 3 conversion by pcuenca · Pull Request #6745 · ggerganov/llama.cpp: The tokenizer is BPE.

Unsloth AI (Daniel Han) ▷ #suggestions (67 messages🔥🔥):

- Discussions on Model Merging and CUDA Debugging: Members touched upon merging models and the difficulties in using CUDA with Google Colab. One suggested using SSH for better experience and shared a guide on setting up a remote Jupyter notebook with SSH.

- Challenge with Welcome Message: A newbie pointed out difficulties with the welcome message's color scheme on PC, prompting a change from the team to make it more readable.

- LLAMA 3 Release and Vision for Multi-GPU: There's anticipation for multi-GPU capabilities following the release of LLAMA 3, with hints at Unsloth Studio being a future development.

- Potential Color Scheme Tweaks for Newcomers: A member suggested revising the welcome message color scheme for better readability, leading to an admin updating it and acknowledging the importance of accessibility.

- Jobs Channel Debate: A debate over the utility and potential risks of a dedicated #jobs channel on the Unsloth AI Discord; concerns include scammer activity and a shift away from the server's focus on Unsloth-specific issues.

- Lecture 14: Practitioners Guide to Triton: https://github.com/cuda-mode/lectures/tree/main/lecture%2014

- How To Run Remote Jupyter Notebooks with SSH on Windows 10: Being able to run Jupyter Notebooks on remote systems adds tremendously to the versatility of your workflow. In this post I will show a simple way to do this by taking advantage of some nifty features...

Perplexity AI ▷ #general (1038 messages🔥🔥🔥):

- Perplexity's AI Models Discussed: Members mentioned different AI models in the channel, including Llama 3, Claude 3 Opus, GPT-4, and GPT-4 Turbo. They compared their experiences with these models for various tasks such as legal document analysis, coding, and responding to prompts.

- Perplexity's Usage Limits and Visibility: It was noted by members that Perplexity has a daily limit of 50 queries for Claude 3 Opus. Further discussions included that the usage counter only becomes visible when 10 messages are left.

- Suggestions and Questions About AI Development and Funding: One user sought mentorship and funding for AI development, discussing their young age and lack of qualifications. Community members suggested educational resources, applying to incubators like Y Combinator, and focusing on internet-based learning.

- Perplexity Labs and Self-Hosting: Discussions included the ability to use other models within Perplexity Labs and self-host models locally. One user shared a guide on setting up Ollama Web UI to operate LLM models offline.

- Unauthorized Model Usage and Security: There was a conversation about non-legit API keys usage in Chinese websites, as well as the existence of a market for trading such accounts. Users advised multiple sourcing to avoid outages and expressed concern about such practices impacting service reliability.

- Perplexity Model Selection: Adds model selection buttons to Perplexity AI using jQuery

- 🏡 Home | Open WebUI: Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs.

- GroqCloud: Experience the fastest inference in the world

- More than an OpenAI Wrapper: Perplexity Pivots to Open Source: Perplexity CEO Aravind Srinivas is a big Larry Page fan. However, he thinks he's found a way to compete not only with Google search, but with OpenAI's GPT too.

- Use Your Self-Hosted LLM Anywhere with Ollama Web UI: no description found

- Apply to Y Combinator | Y Combinator: To apply for the Y Combinator program, submit an application form. We accept companies twice a year in two batches. The program includes dinners every Tuesday, office hours with YC partners and access...

- Languages used on the Internet - Wikipedia: no description found

- Andrej Karpathy: FAQ Q: How can I pay you? Do you have a Patreon or etc? A: As YouTube partner I do share in a small amount of the ad revenue on the videos, but I don't maintain any other extra payment channels. I...

- Tweet from Aravind Srinivas (@AravSrinivas): 8b is so good. Can create a lot more experiences with it. We have some ideas. Stay tuned! ↘️ Quoting MachDiamonds (@andromeda74356) @AravSrinivas Will you be switching the free perplexity version t...

- Think About It Use Your Brain GIF - Think About It Use Your Brain Use The Brain - Discover & Share GIFs: Click to view the GIF

- Yt Youtube GIF - Yt Youtube Logo - Discover & Share GIFs: Click to view the GIF

- eternal mode • infinite backrooms: the mad dreams of an artificial intelligence - not for the faint of heart or mind

- Morphic: A fully open-source AI-powered answer engine with a generative UI.

- Robot Depressed GIF - Robot Depressed Marvin - Discover & Share GIFs: Click to view the GIF

- llm-sagemaker-sample/notebooks/deploy-llama3.ipynb at main · philschmid/llm-sagemaker-sample: Contribute to philschmid/llm-sagemaker-sample development by creating an account on GitHub.

- Lo último de OpenAI llega a Copilot. El asistente de programación evoluciona con un nuevo modelo de IA: En el último año, la inteligencia artificial no solo ha estado detrás de generadores de imágenes como DALL·E y bots conversacionales como ChatGPT, también ha...

- Perplexity CTO Denis Yarats on AI-powered search: Perplexity is an AI-powered search engine that answers user questions. Founded in 2022 and valued at over $1B, Perplexity recently crossed 10M monthly active...

- Amazon invierte 4.000 millones de dólares en Anthropic para hacer frente a ChatGPT: la lucha por la mejor IA solo acaba de comenzar: OpenAI agitó a toda la industria con el lanzamiento de ChatGPT, haciendo que cada vez más empresas inviertan en tecnologías de IA generativa. Esto ha dado...

- GitHub - developersdigest/llm-answer-engine: Build a Perplexity-Inspired Answer Engine Using Next.js, Groq, Mixtral, Langchain, OpenAI, Brave & Serper: Build a Perplexity-Inspired Answer Engine Using Next.js, Groq, Mixtral, Langchain, OpenAI, Brave & Serper - developersdigest/llm-answer-engine

- AWS re:Invent 2023 - Customer Keynote Anthropic: In this AWS re:Invent 2023 fireside chat, Dario Amodei, CEO and cofounder of Anthropic, and Adam Selipsky, CEO of Amazon Web Services (AWS) discuss how Anthr...

- AWS re:Invent 2023 - Customer Keynote Perplexity | AWS Events: Hear from Aravind Srinivas, cofounder and CEO of Perplexity, about how the conversational artificial intelligence (AI) company is reimagining search by provi...

- Eric Gundersen Talks About How Mapbox Uses AWS to Map Millions of Miles a Day: Learn more about how AWS can power your big data solution here - http://amzn.to/2grdTah.Mapbox is collecting 100 million miles of telemetry data every day us...

- no title found: no description found

- Rick Astley - Never Gonna Give You Up (Official Music Video): The official video for “Never Gonna Give You Up” by Rick Astley. The new album 'Are We There Yet?' is out now: Download here: https://RickAstley.lnk.to/AreWe...

- GitHub - xx025/carrot: Free ChatGPT Site List 这儿为你准备了众多免费好用的ChatGPT镜像站点: Free ChatGPT Site List 这儿为你准备了众多免费好用的ChatGPT镜像站点. Contribute to xx025/carrot development by creating an account on GitHub.

Perplexity AI ▷ #sharing (29 messages🔥):

- Perplexity AI Making Waves: Infosys co-founder Nandan Nilekani praised Perplexity AI, referring to it as a 'Swiss Army Knife' search engine following a meeting with its co-founder Aravind Srinivasan.

- YouTube Insights on Perplexity AI's Rise: A YouTube video titled "Inside The Buzzy AI StartUp Coming For Google's Lunch" features Perplexity AI's journey and their long wait for an audience with Meta AI chief Yann LeCun.

- High-value Discussions Around Perplexity AI: Community members shared a variety of links to Perplexity AI search queries, exploring topics from HDMI usage to insights on positive parenting and Apple news.

- Sharing the Perplexity AI Experience: A call was made to ensure threads are shareable as members engaged with different Perplexity AI search queries, highlighting the collaborative nature of the community.

- Media Spotlight on Perplexity AI's Leadership: Another YouTube video, titled "Perplexity CTO Denis Yarats on AI-powered search", dives into the engine's user-focused capabilities and its significant growth since the foundation.

- Nandan Nilekani had this stunning thing to say about Aravind Srinivas' 'Swiss Army Knife' search engine: What Nandan Nilekani had to say about Perplexity AI, will make you rush to sign up with Aravind Srinivasan’s ‘Swiss Army Knife’ search engine.

- Inside The Buzzy AI StartUp Coming For Google's Lunch: In August 2022, Aravind Srinivas and Denis Yarats waited outside Meta AI chief Yann LeCun’s office in lower Manhattan for five long hours, skipping lunch for...

- Perplexity CTO Denis Yarats on AI-powered search: Perplexity is an AI-powered search engine that answers user questions. Founded in 2022 and valued at over $1B, Perplexity recently crossed 10M monthly active...

Perplexity AI ▷ #pplx-api (4 messages):

- Seeking Constrained API Responses: A member reported difficulties in trying to make the API respond with a choice from an exact list of words, even after instructing it to do so. They mentioned trying with Sonar Medium Chat and Mistral models without success.

- Looking for a Helping Hand: The same member sought assistance from others regarding their issue but received no immediate response.

- Clarification on API Credits Refresh Rate: The member inquired about how often remaining API credits are updated, questioning whether it takes minutes, seconds, or hours after running a script with API requests.

Nous Research AI ▷ #ctx-length-research (7 messages):

- Long Context Inference on Multi-GPU a Puzzle: Yorth_night is looking for guidance on conducting long context inference with Jamba using multiple GPUs. Despite consulting deepspeed and accelerate docs, they haven't found information on long context generation.

- Seeking Progress Update on Jamba Multi-GPU Use: Bexboy inquires if there has been any progress on the issue Yorth_night is facing.

- VLLM Could Be the Key for Jamba, But No Support Yet: Yorth_night discovered in another discussion that vllm with tensor parallel might be a solution; however, vllm currently does not support Jamba.

- A Jamba API Would Be Handy: Yorth_night expresses a desire for a Jamba API that could handle entire contexts, which would help in evaluating the model's capability for their specific task.

- Cutting Costs on Claude 3 and Big-AGI with Context Management: Rundeen faces challenges with the expensive context expansion on Claude 3 and Big-AGI. They found memGPT and SillyTavern SmartContext and are in search of other solutions that can manage the context economically without redundant or incorrect information.

Nous Research AI ▷ #off-topic (12 messages🔥):

- Beats and Bytes: Members shared music video links for entertainment, including the Beastie Boys' "Root Down" (REMATERED IN HD YouTube video) and deadmau5 & Kaskade's "I Remember" (HQ YouTube video).

- Encoded CIFAR100 Dataset Now Available: A community member released a latently encoded CIFAR100 dataset accessible on Hugging Face, recommending the sdxl-488 version due to the size of the latents.

- Small Scale Model Surprises: Initial experiments with a simple FFN on the latent CIFAR100 dataset showed an approximate 19% accuracy, which was surprising given most latents don’t decode properly.