[AINews] Every 7 Months: The Moore's Law for Agent Autonomy

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Perspective is all you need.

AI News for 3/18/2025-3/19/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (227 channels, and 4117 messages) for you. Estimated reading time saved (at 200wpm): 426 minutes. You can now tag @smol_ai for AINews discussions!

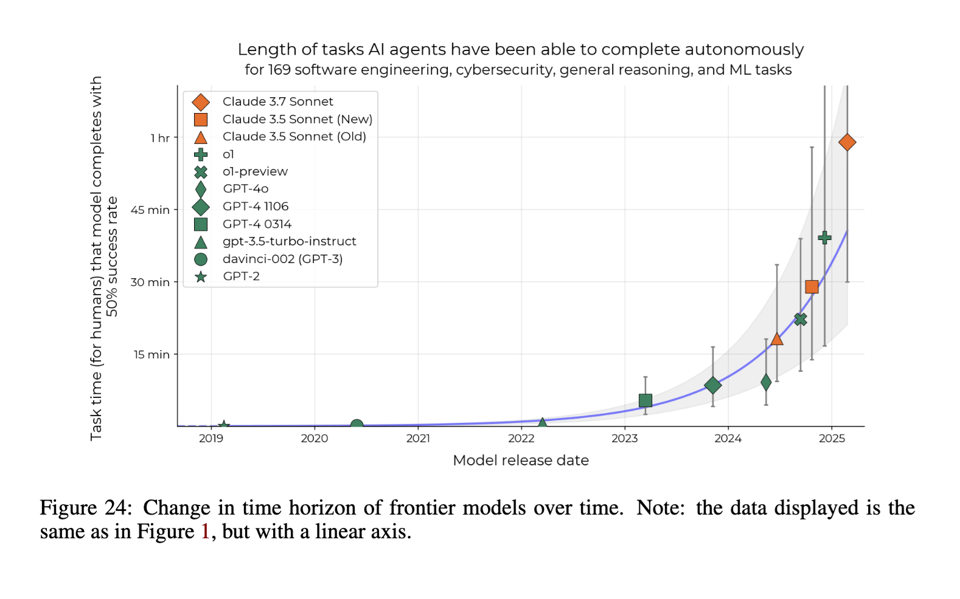

Llama 4 rumors and $600 o1 pro API aside, we rarely get to feature a paper as a title story on AINews, so we are really happy when it happens. METR has long been known for doing quality analysis around AI progress, and in Measuring AI Ability to Complete Long Tasks they have an answer to a valuable question that has so far been extremely difficult to answer: agent autonomy is increasing, but how quickly?

Since 2019 (GPT2), it has doubled every 7 months.

Obviously agents can take a range of time to complete tasks, which has made this question difficult to answer, therefore the methodology is worthwhile as well:

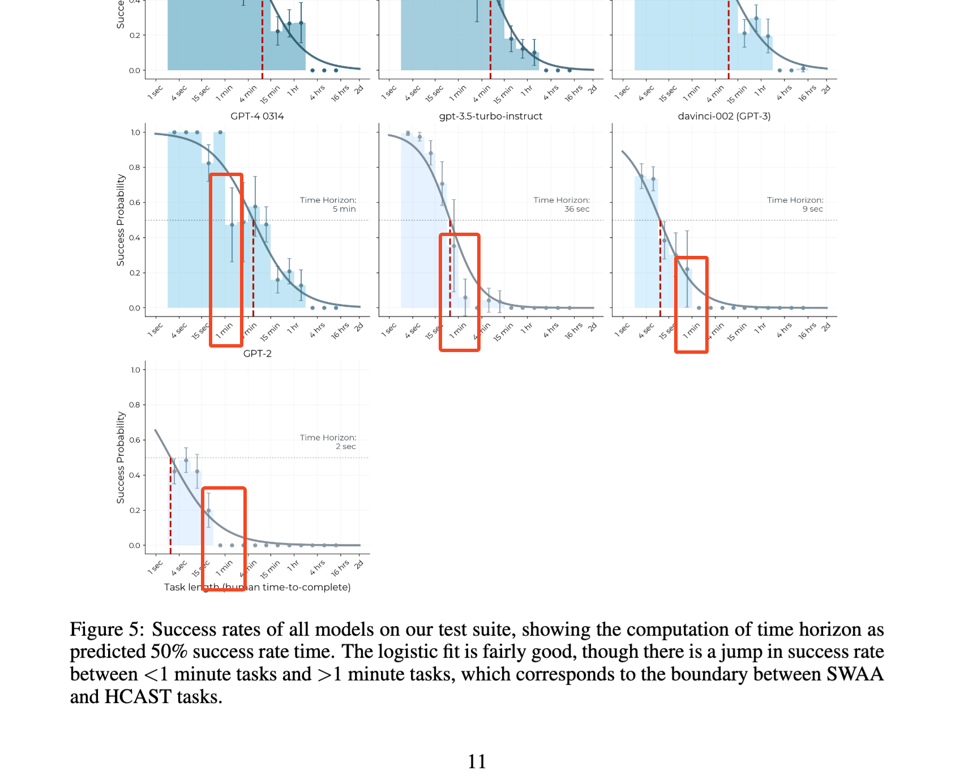

"To quantify the capabilities of AI systems in terms of human capabilities, we propose a new metric: 50%-task-completion time horizon. This is the time humans typically take to complete tasks that AI models can complete with 50% success rate. We first timed humans with relevant domain expertise on a combination of RE-Bench, HCAST, and 66 novel shorter tasks. On these tasks, current frontier AI models such as Claude 3.7 Sonnet have a 50% time horizon of around 50 minutes."

The authors find a notable discontinuity at the 1min horizon:

and at the 80% cutoff, but the scaling laws remain robust.

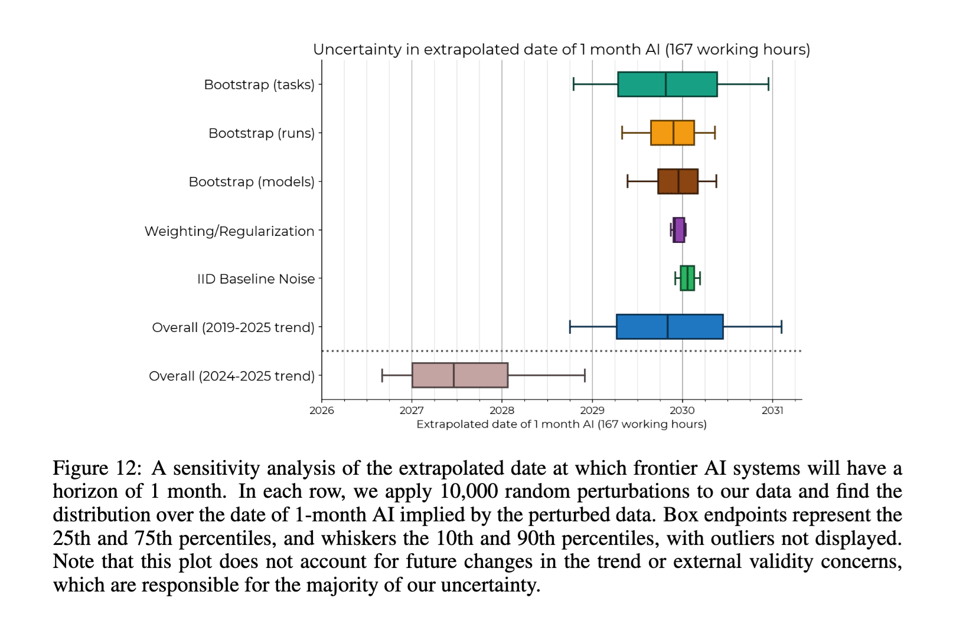

At current rates, we will have:

- 1 day autonomy in (5 exponentials * 7 months) = 3 years (2028)

- 1 month autonomy in "late 2029" (+/- 2 years, only going for human working hours)

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

AI Advancements and Model Releases

- Nvidia released Cosmos-Transfer1 on Hugging Face for Conditional World Generation with Adaptive Multimodal Control: @_akhaliq shared the release of Nvidia's Cosmos-Transfer1 on Hugging Face, which enables conditional world generation with adaptive multimodal control.

- Nvidia released GR00T-N1-2B on Hugging Face: @_akhaliq announced that Nvidia released GR00T-N1-2B on Hugging Face, an open foundation model for generalized humanoid robot reasoning and skills, also noted by @reach_vb. @DrJimFan provided details about GR00T N1, highlighting its role as the world’s first open foundation model for humanoid robots with only 2B parameters. The model learns from diverse physical action datasets and is deployed on various robots and simulation benchmarks. Links to the whitepaper, code repository, and dataset are included (@DrJimFan, @DrJimFan, @DrJimFan, @DrJimFan, @DrJimFan).

- @reach_vb announced Orpheus 3B, a high-quality, emotive Text to Speech model with an Apache 2.0 license from Canopy Labs. Key features include zero-shot voice cloning, natural speech, controllable intonation, training on 100K hours of audio, input/output streaming, 100ms latency, and ease of fine-tuning.

- Meta sits on Llama-4 because it sucks: @scaling01 commented that Meta is not releasing Llama-4 because of poor performance.

- Microsoft launched Phi-4-multimodal: @DeepLearningAI reported that Microsoft launched Phi-4-multimodal, a high-performing open weights model with 5.6 billion parameters capable of processing text, images, and speech simultaneously.

- Tencent's Hunyuan3D 2.0 accelerates model generation speed: @_akhaliq announced that Tencent has achieved a 30x acceleration in model generation speed across the entire Hunyuan3D 2.0 family, reducing processing time from 30 seconds to just 1 second, available on Hugging Face.

- Together AI Introduces Instant GPU Clusters: @togethercompute announced Together Instant GPU Clusters with 8–64 @nvidia Blackwell GPUs, fully self-serve and ready in minutes, ideal for large AI workloads or short-term bursts.

Research and Evaluation

- METR's Research on AI Task Completion: @METR_Evals highlighted their new research indicating that the length of tasks AI can complete is doubling about every 7 months. They define a metric called "50%-task-completion time horizon" to track progress in model autonomy, with current models like Claude 3.7 Sonnet having a horizon of around 50 minutes (@iScienceLuvr). The research also suggests that AI systems may be capable of automating many software tasks that currently take humans a month within 5 years. The paper is available on arXiv (@METR_Evals).

- NVIDIA Reasoning Models @ArtificialAnlys reported that NVIDIA has announced their first reasoning models, a new family of open weights Llama Nemotron models: Nano (8B), Super (49B) and Ultra (249B).

Agent Development and Tooling

- LangGraph Studio updates: @hwchase17 announced that Prompt Engineering is now inside LangGraph Studio. @LangChainAI

- LangGraph and LinkedIn's SQL Bot: @LangChainAI highlighted LinkedIn’s Text-to-SQL Bot, powered by LangGraph and LangChain, which translates natural language questions into SQL, making data more accessible.

- Hugging Face course on building agents in LlamaIndex: @llama_index shared that @huggingface wrote a course about building agents in LlamaIndex, covering components, RAG, tools, agents, and workflows, available for free.

- Canvas UX: @hwchase17 notes that Canvas UX is becoming the standard for interacting with LLMs on documents.

Frameworks and Libraries

- Gemma package: @osanseviero introduced the Gemma package, a minimalistic library for using and fine-tuning Gemma, including documentation on fine-tuning, sharding, LoRA, PEFT, multimodality, and tokenization.

- AutoQuant: @maximelabonne announced updates to AutoQuant to optimize GGUF versions of Gemma 3, implementing imatrix and splitting the model into multiple files.

- ByteDance OSS released DAPO: @_philschmid highlighted a new open-source RL method DAPO released by ByteDanceOSS, which outperforms GRPO and achieves 50 points on the AIME 2024 benchmark.

Industry Partnerships and Events

- Perplexity and NVIDIA Collaboration: @AravSrinivas announced that Perplexity is partnering with NVIDIA to enhance inference on Blackwell with their new Dynamo library, and @perplexity_ai stated they are implementing NVIDIA's Dynamo to enhance inference capabilities.

- Google and NVIDIA Partnership: @Google announced they are expanding their collaboration with NVIDIA across Alphabet.

- vLLM and Ollama Inference Night: @vllm_project and @ollama are hosting an inference night at Y Combinator in San Francisco to discuss inference topics.

Humor and Miscellaneous

- @willdepue shared "10 things I learned in YC W25", a humorous take on business rules inspired by rap lyrics.

- Adblockers: @nearcyan says that adblockers cant even be disseminated to the avg person yet you think we're going to give arbitrary code execution to everyone?

- Tangent about AI Waifus: @scaling01 joked about rejecting women and embracing AI waifus in the context of TPOT.

- Career: @cto_junior quips damn being married has absolutely fucking decimated my posting volume (in exchange for unconditional love and lifelong happiness)

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Llama4 Rumor: Launch Next Month, Multimodal, 1M Context

- Llama4 is probably coming next month, multi modal, long context (Score: 295, Comments: 114): Llama4 is anticipated to be released next month, featuring multi-modal capabilities and a long context window of approximately 1 million tokens. The announcement is linked to the Meta blog discussing the upcoming Llamacon event in 2025.

- Discussions focused on context size highlight skepticism about the practicality of a 1 million token context window, with users pointing out that models often degrade significantly before reaching such limits. Qwen 2.5 was noted for using Exact Attention fine-tuning and Dual Chunk Attention to manage long contexts effectively, as detailed in a benchmark paper.

- The multimodal capabilities of Llama4 are debated, with some users expressing skepticism about its utility and others emphasizing the potential benefits, such as enhanced image and audio processing. DeepSeek and other models like Mistral and Google Gemini are mentioned as competitive benchmarks, with users expressing hope for Llama4's innovative architecture.

- Concerns about censorship in Llama models were raised, with hopes that Llama4 will be less censored compared to Llama3. The conversation also touched on the importance of zero-day support in projects like llama.cpp, suggesting collaboration with Meta could be beneficial.

- only the real ones remember (Score: 300, Comments: 58): Tom Jobbins (TheBloke) is highlighted on Hugging Face for his contributions, particularly his article on "Making LLMs lighter with AutoGPTQ and transformers." His profile showcases recent models with specified creation and update dates, indicating active engagement and interest in AI and ML.

- Tom Jobbins' Impact and Disappearance: Many users express gratitude for Tom Jobbins' significant contributions to the open-source AI community, particularly in making AI more accessible. Discussions speculate about his sudden disappearance, with some suggesting burnout or a move to a private company, possibly as a CTO.

- Career Transition and Speculation: Jobbins' career shift to a startup is noted, with some users mentioning a grant that previously funded his work ran out. There are humorous and optimistic speculations about his current activities, including the possibility of him receiving a lucrative offer under a strict NDA.

- Community Sentiment and Legacy: Users fondly remember Jobbins for his pioneering work in model quantization and his role in simplifying complex processes for the community. His legacy continues to be appreciated, with some users bookmarking his Hugging Face profile as a tribute.

- A man can dream (Score: 617, Comments: 79): The post humorously compares the AI models "DEESEEK-R1," "QWQ-32B," "LLAMA-4," and "DEESEEK-R2" using a meme with escalating expressions of shock, highlighting the anticipation and excitement surrounding the capabilities of these models, particularly LLAMA-4.

- Commenters express a desire for a small model that excels in coding, as current models focus more on language and general knowledge, with Sonnet 3.7 being noted for its high cost in API usage.

- There is speculation and humor regarding the rapid release cycle of AI models, with mentions of R1 being released less than 60 days ago and jokes about the potential 1T and 2T parameter sizes for future models like LLAMA-4 and DEESEEK-R2.

- The discussion includes humorous takes on model names like QwQ and QwQ-Max, as well as playful commentary on naming conventions with terms like Pro ProMax Ultra Extreme and references to brands like Dell and Nvidia.

Theme 2. Microsoft's KBLaM and RAG Replacement Potential

- KBLaM by microsoft, This looks interesting (Score: 104, Comments: 23): KBLaM by Microsoft introduces a method for integrating external knowledge into language models, potentially serving as an alternative to RAG (Retrieval-Augmented Generation). The post questions whether KBLaM can replace RAG and suggests that solving challenges associated with RAG could be a significant advancement in AI.

- KBLaM's integration method bypasses inefficiencies of traditional methods like RAG by encoding knowledge directly into the model's attention layers using a "rectangular attention" mechanism. This allows linear scaling with knowledge base size, enabling efficient processing of over 10,000 knowledge triples on a single GPU, which improves reliability and reduces hallucinations.

- Potential for model optimization is discussed, with the possibility of reducing model sizes by separating knowledge from intelligence, allowing knowledge to be injected as needed. However, there is debate about whether intelligence can be entirely separated from knowledge, as some believe they are interconnected.

- Community engagement includes excitement about the efficiency improvements and interpretability of KBLaM, with users evaluating the KBLaM repository. The approach's ability to maintain dynamic updateability without retraining is seen as a significant advancement over RAG, which suffers from inefficiencies like chunking.

- If "The Model is the Product" article is true, a lot of AI companies are doomed (Score: 180, Comments: 94): The post discusses a blog article suggesting that the future of AI might see major labs like OpenAI and Anthropic training models for agentic purposes using Reinforcement Learning (RL), potentially diminishing the role of application-layer AI companies. It mentions a prediction by the VP of AI at DataBricks that closed model labs could shut down their APIs in the next 2-3 years, which could lead to increased competition between these labs and current AI companies. Read more here.

- The discussion centers around the importance of data over models, with multiple commenters emphasizing that the enduring value lies in the data and domain expertise rather than the models themselves. This is highlighted by the analogy to Google's 2006 algorithm success, emphasizing data and UI as crucial elements.

- There is skepticism about the prediction that major AI labs like OpenAI and Anthropic will shut down their APIs. Commenters argue that APIs are central to these companies' business models, and shutting them down would be counterproductive, especially given the rise of open models from companies like Meta and DeepSeek.

- The conversation also touches on the risks of building businesses on third-party platforms, likening it to the mobile app ecosystem where platform owners can absorb successful ideas. The consensus is that while big AI companies will dominate general use cases, there will still be opportunities for niche, domain-specific solutions.

Theme 3. Gemma 3 Uncensored Model Release

- Uncensored Gemma 3 (Score: 147, Comments: 27): The author released Gemma 3, a finetuned model available on Hugging Face, which they claim has not refused any tasks. They are also working on training 4B and 27B versions, aiming to test and release them shortly.

- Users tested Gemma 3 to see if it would refuse tasks and noted that it sometimes did, despite claims. Xamanthas and StrangeCharmVote reported mixed results, with Xamanthas noting it still refused some tasks, while StrangeCharmVote found the original 27B model surprisingly uncensored.

- There is interest in the performance metrics of Gemma 3 compared to the default version, with mixedTape3123 questioning its intelligence, and Reader3123 acknowledging potential differences but lacking detailed metrics.

- Reader3123 shared quantization efforts by various contributors, providing links to models on Hugging Face for further exploration: soob3123, bartowski, and mradermacher.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Gemini Plugins and AI Studio Usage

- god i love gemini photoshop (Score: 124, Comments: 29): The post expresses enthusiasm for Gemini Photoshop, indicating a positive user experience with this tool. However, specific details or features about the tool are not provided in the text.

- Confusion about Gemini: Users are confused about the reference to Gemini Photoshop, questioning if there's a Gemini plugin for Photoshop or if it is being confused with something else, like the astrology sign.

- Google AI Studio: Some users mention Google AI Studio Flash 2.0 as a tool used for creating images, with one user clarifying it's free and accessible by searching "AI studio" on Google.

- Mixed User Experiences: While some express enthusiasm for the tool, others report dissatisfaction, stating it struggles with following basic instructions, indicating a variance in user experiences.

Theme 2. MailSnitch Uses Email Tagging for Spam Identification

- Thanks to ChatGPT, I know who’s selling my email. (Score: 2119, Comments: 204): The post outlines the development of MailSnitch, a tool inspired by email tagging to track who sells your email by using unique tagged email addresses. The author, with the help of ChatGPT, plans to release a Chrome Extension featuring auto-fill, unique email tagging, and a history log, and is considering publishing it for free with a potential for monetization.

- Many commenters highlighted the ineffectiveness of using "+" in email addresses to track who sells your email, as it can be easily bypassed or removed by spammers and data brokers. Alternatives like email alias services (e.g., Firefox Relay, ProtonPass, and Apple's "hide my email" feature) were suggested for better privacy and control.

- Several users emphasized the importance of understanding code before using it, especially when generated by ChatGPT, due to potential security and liability issues. The conversation touched on the risks of running unverified code and the necessity of code review in serious applications.

- Commenters also discussed alternative solutions like using custom domains with catchall addresses, which allow for more robust email management and tracking. Some users shared experiences with Gmail's dot feature and mentioned its limitations across different platforms.

Theme 3. Reverse Engineering ChatGPT: Strategies for Better Responses

- I reverse-engineered how ChatGPT thinks. Here’s how to get way better answers. (Score: 2064, Comments: 234): The post explains that ChatGPT doesn't inherently "think" but predicts the most probable next word, leading to generic responses to broad questions. The author suggests enhancing responses by instructing ChatGPT to first analyze key factors, self-critique its answers, and consider multiple perspectives, resulting in significantly improved depth and accuracy for topics like AI/ML, business strategy, and debugging.

- The concept of ChatGPT as merely a next-word predictor is widely recognized, and several commenters noted that the post's insights are not novel, with some suggesting the ideas are basic prompting techniques rather than breakthroughs. LickTempo and Plus_Platform9029 emphasize that while ChatGPT predicts the next token, it can still exhibit structured reasoning if prompted correctly, and they recommend resources like Andrej Karpathy's videos for a deeper understanding.

- Chain-of-thought prompting and Monte Carlo Tree Search are highlighted as methods to improve ChatGPT's responses, with djyoshmo suggesting further reading on arxiv.org or Medium to grasp these techniques. EverySockYouOwn shares a practical example of using a structured 20-question approach to break down complex inquiries, enhancing the depth and relevance of the AI's responses.

- There is a discussion on the effectiveness of self-critique and non-echo chamber prompts, with users like VideirHealth and legitimate_sauce_614 sharing strategies to encourage ChatGPT to challenge assumptions and provide more logical, diverse perspectives. However, SmackEh and others caution that ChatGPT's default behavior is to agree and avoid offense, necessitating deliberate prompts for more critical engagement.

Theme 4. Successfully Running Wan2.1 Locally

- Finally got Wan2.1 working locally (Score: 108, Comments: 25): Wan2.1 is successfully implemented locally for video processing.

- Users discuss video processing times with Wan2.1, comparing different hardware setups. Aplakka shares that their 720p generation took over 60 minutes, while Kizumaru31 reports 6-9 minutes for 480p using an RTX 4070, suggesting faster times with more powerful graphics cards like the RTX 4090.

- Aplakka provides a workflow link and details their settings, including using ComfyUI in Windows Subsystem for Linux and Sageattention. They mention challenges fitting 720p videos into VRAM with an RTX 4090 and suggest a possible reboot or settings adjustment might resolve the issue.

- There is a focus on improving video quality and control over the output, with BlackPointPL noting that using gguf reduces quality, and vizualbyte73 expressing a desire for more control over visual elements like flower petal movement.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. NVIDIA's Blackwell Blitzkrieg: New GPUs and Marketing Hype

- Blackwell Ultra & Ruben Unleashed, Feynman Next: NVIDIA unveiled Blackwell Ultra and Ruben GPUs, with the next gen named Feynman. Ruben incorporates silicon photonics for power efficiency and a new ARM CPU, alongside Spectrum X switches reaching 1.6 Tbps. Despite performance claims and new releases, some users are skeptical of NVIDIA's marketing hype and performance exaggerations, particularly regarding H200 and B200 speedups.

- DGX Spark & Station: Personal AI Supercomputers Emerge: NVIDIA launched DGX Spark and DGX Station, compact AI supercomputers based on the Grace Blackwell platform. The DGX Spark, previously Project DIGITS and priced at $3,000, aims to bring desktop-level AI prototyping and fine-tuning to developers, researchers, and students, although some find its specs underwhelming compared to alternatives like Mac Mini M4 Pro or Ryzen 395+.

- DeepSeek-R1 Inference Claims World's Fastest on Blackwell: NVIDIA asserts Blackwell GPUs achieve the world’s fastest DeepSeek-R1 inference, with a single system of eight Blackwell GPUs in NVL8 configuration delivering 253 TPS/user and 30K TPS system throughput on the full DeepSeek-R1 671B parameter model. Despite these performance claims, some users remain critical of NVIDIA's marketing tactics, describing them as unserious and potentially misleading to investors.

Theme 2. Open Source AI Ecosystem: Tools, Datasets, and Community

- Open Source Investment Yields Mammoth Returns, Harvard Study Finds: Harvard research indicates that a $4.15B investment in open-source generates $8.8T of value for companies, highlighting a $2,000 return for every $1 invested. This underscores the immense economic impact and value creation driven by open-source contributions in the AI field.

- Nvidia Open Sources Massive Coding Dataset for Llama Nemotron: NVIDIA released a large open-source instruct coding dataset to enhance math, code, reasoning, and instruction following in Llama instruct models. The dataset includes data from DeepSeek-R1 and Qwen-2.5, sparking community interest in filtering and fine-tuning for specialized training.

- PearAI Emerges as Open Source IDE Alternative to Cursor: PearAI, a new open-source AI code editor integrating tools like Roo Code/Cline, Continue, Perplexity, Mem0, and Supermaven, is gaining traction in the Cursor Community. Users suggest PearAI is a cheaper, viable alternative to Cursor, despite having a smaller context window, highlighting the growing open-source tooling landscape.

Theme 3. Model Performance and Limitations: Gemini, Claude, and Open Source Alternatives

- [Gemini Deep Research Saves User Time 10x, But Benchmarking Costs Loom]: Users are finding Gemini Deep Research significantly speeds up research tasks, potentially saving time by 10x, with one user citing its value in scientific medical research by generating a list of 90 literature sources. However, its cost may be prohibitive for extensive benchmarking on platforms like LMArena, raising questions about accessibility for broader evaluation.

- [Perplexity AI Faces "Dumber Than Claude" Claims, O1 Hype Fades]: Some users find Perplexity AI to be less intelligent than Claude 3.5, citing issues with context retention and abstract generation, with one user stating "Perplexity feels dumber than Claude 3.5". Additionally, initial community enthusiasm for Perplexity's O1 models is waning due to the introduction of a paywall, diminishing its appeal.

- [Anthropic's Claude 3.7 Sonnet Experiences Downtime, Recovers]: Anthropic models, particularly Claude 3.7 Sonnet, experienced service interruptions and downtime. While services are reportedly stabilizing, this incident highlights the potential instability and reliability concerns associated with cloud-based AI model access.

Theme 4. AI Agents and Tooling: Agents Course, MCP, and Workflow Innovations

- Hugging Face Launches Free LlamaIndex Agents Course: Hugging Face released a free course on building agents in LlamaIndex, covering key components, RAG, tools, and workflows. The course provides a comprehensive guide to developing AI-powered applications using LlamaIndex, expanding educational resources in the agentic AI space.

- Model Context Protocol (MCP) Gains Momentum with Python REPLs and New Tools: The Model Context Protocol (MCP) is gaining traction, with new Python REPL implementations like hdresearch/mcp-python and Alec2435/python_mcp emerging, and a user-built DuckDuckGo MCP for Cursor on Windows (GitHub) demonstrating its growing ecosystem and utility for tool integration.

- Aider Code Editor Adds Web and PDF Reading, Enhancing Multimodal Capabilities: The Aider code editor now supports reading webpages and PDF files, enabling vision-capable models like GPT-4o and Claude 3.7 Sonnet to process diverse information sources directly within the coding environment. This enhancement, accessed via commands like

/add <filename>, expands Aider's utility for complex, information-rich coding tasks.

Theme 5. Hardware and Software Challenges: Performance, Compatibility, and Costs

- [Multi-GPU Performance Degradation Reported in LM Studio]: Users are experiencing performance and stability issues when using multiple RTX 3060s in LM Studio with CUDA llama.cpp, noting that single GPU performance is superior. The issues are attributed to model splitting across GPUs and potential PCI-e 3.0 x4 bandwidth limitations, suggesting multi-GPU setups are not always plug-and-play for optimal performance.

- [Gemma 3 Vision Fine-tuning Hits Transformers Glitch]: Users are encountering problems fine-tuning Gemma 3 for vision tasks, indicating a potential bug in vision support within the current Transformers library version, particularly during

qlora. The issue manifests as aRuntimeError, requiring further investigation into compatibility and library dependencies. - [M1 Macs Struggle with Model Training, Even in Small Batches]: Users report that M1 Mac Airs are underpowered for model training, even with small batches, facing clang related issues on platforms like Kaggle and Hugging Face Spaces. This highlights the limitations of consumer-grade Apple silicon for demanding AI training tasks, prompting users to seek alternative hardware or cloud-based solutions.

PART 1: High level Discord summaries

Cursor Community Discord

- Sonnet MAX Excels as Agent: The Sonnet MAX model is being lauded for its post-processing capabilities within agent workflows, highlighted in this X post.

- Users emphasize that Cursor must learn from code bases and its own mistakes due to library limitations.

- Cursor's Claude Max Pricing Debated: Community members are questioning Claude Max's pricing on Cursor, noting that the fast requests allocation within subscriptions isn't fully utilized.

- Some express discontent, suggesting they might seek alternatives if Cursor optimized the consumption of fast requests for Max, noting that "Cursor team dropped the ball hard by not allowing more fast request consumption for Max".

- Terminal Troubles Plague Users: Users are frustrated by agent spawning multiple terminals and rerunning projects, driving discussions on implementing preventative rules and configurations.

- An Enhanced Terminal Management Rule was proposed to terminate open terminals, direct test output to fresh terminals, and prevent duplicate terminal creation during test runs.

- PearAI Open Source IDE Emerges: The community is taking a look at PearAI (https://trypear.ai/), an open-source AI code editor integrating tools like Roo Code/Cline, Continue, Perplexity, Mem0, and Supermaven.

- Members suggest that Pear is actually kinda doing gods work rn compared to cursor, because it's a cheaper alternative to Cursor despite having a smaller context window.

Unsloth AI (Daniel Han) Discord

- Gemma 3 Vision Runs into Transformers Glitch: Users reported issues finetuning Gemma 3 for vision tasks, indicating a potential problem with vision support in the current Transformers version, potentially during

qlora.- A user encountered a RuntimeError: Unsloth: Failed to make input require gradients! when trying to qlora

gemma-3-12b-pt-bnb-4bitfor image captioning, suggesting a need for further investigation.

- A user encountered a RuntimeError: Unsloth: Failed to make input require gradients! when trying to qlora

- Multi-Node Multi-GPU Unsloth incoming: Multi-node and multi-GPU fine-tuning support is planned for Unsloth in the coming weeks, although the specific release date has not yet been specified, sign up to the Unsloth newsletter.

- A member confirmed multinode support will be enterprise only.

- Unsloth joins vLLM & Ollama in SF: Unsloth will be joining vLLM and Ollama for an event in SF next week on Thursday, March 27th, promising social time and presentations at Y Combinator's San Francisco office.

- More details are available for the vLLM & Ollama inference night where food and drinks will be served.

- Docs Dunked by Filesystem Woes: A user experienced a

HFValidationErrorandFileNotFoundErrorwhen trying to save a merged model locally, due to an invalid repository ID when callingsave_pretrained_merged.- The recommendation was to update

unsloth-zooas it should be fixed in the latest version.

- The recommendation was to update

- ZO2 fine-tunes 175B LLM: The ZO2 framework enables full parameter fine-tuning of 175B LLMs using just 18GB of GPU memory, particularly tailored for setups with limited GPU memory.

- A member pointed out that ZO2 employs zeroth order optimization, contrasting it with the more common first-order methods like SGD.

LM Studio Discord

- OpenVoice Clones Your Voice: Members highlighted OpenVoice, a versatile instant voice cloning method that only needs a short audio clip to replicate a voice and generate speech in multiple languages, with their associated github repo.

- It gives you granular control over voice styles, including emotion, accent, rhythm, pauses, and intonation, and replicates the tone color of the reference speaker.

- Oblix Orchestrates Models in the Cloud: A member shared the Oblix Project, a platform for seamless orchestration between local and cloud models, demonstrated in a demo video.

- Oblix routes AI tasks to the cloud or edge based on complexity, latency needs, and cost, so it intelligently directs AI tasks to the cloud or edge based on complexity, latency requirements, and cost considerations.

- PCIE Bandwidth Doesn't Matter Much: Members found that PCIE bandwidth negligibly impacts inference speed when setting up dual 4090s, with at most 2 more tps compared to PCI-e 4.0 x8.

- The consensus was that upgrading to PCIE 5.0 from PCIE 4.0 provides only a marginal benefit for inference tasks.

- RTX PRO 6000 Blackwell Launched: NVIDIA released its RTX PRO 6000 "Blackwell" GPUs which features a GB202 GPU, 24K cores, 96 GB VRAM and requires 600W TDP.

- The performance of this card is believed to be much better than the 5090 due to the fact it has HBM.

- Multi-GPU Performance Suffers: A user shared that they noticed a degradation in performance and instability when using multiple RTX 3060s (3 on PCI-e x1 and 1 on x16) in LM Studio with CUDA llama.cpp, as single GPU performance (x16) was superior.

- It was suggested that the performance issues stemmed from how models are split between multiple GPUs and that Pci-e 3.0 x4 slows inference down up to 10%.

HuggingFace Discord

- Mining GPUs rescue AI Home Server: Users are considering older Radeon RX 580 GPUs for local AI servers, but are being directed to P104-100s or P102-100s on Alibaba, which have 8-10 GB VRAM.

- Nvidia limits the VRAM in the bios, but Chinese sellers flash them to give access to all available memory.

- Open Source Investment Multiplies Value: Harvard research reveals that $4.15B invested in open-source generates $8.8T of value for companies, as discussed in this X post, which means $1 invested = $2,000 of value created.

- This highlights the significant economic return from open-source contributions.

- Oblix Orchestrates Local vs. Cloud Models: The Oblix Project offers a platform for seamless orchestration between local and cloud models, as highlighted in a demo video.

- Autonomous agents in Oblix monitor system resources and dynamically decide whether to execute AI tasks locally or in the cloud.

- Gradio Sketch AI code generation is here!: Gradio Sketch released an update that includes AI-powered code generation for event functions, which can be accessed either via typing

gradio sketchor the hosted version.- This allows no code to be written and done in minutes, as opposed to the hours previously required.

- LangGraph Materials Released on GitHub: Materials for Unit 2.3 on LangGraph are available on the GitHub repo due to sync issues.

- This allows impatient users to access the content before the website is updated, ensuring they can continue with the course.

OpenAI Discord

- Gemini Tussles ChatGPT for Supremacy: Members debated the merits of Gemini Advanced versus ChatGPT Plus, with opinions divided on Gemini's 2.0 Flash Thinking and ChatGPT's safety alignment and implementation.

- One member lauded Gemini's free, unlimited access, while another criticized its lack of basic safety features and overall implementation.

- o1 and o3-mini-high Still Top Dog: Despite buzz around Gemini, some users maintained that OpenAI's o1 and o3-mini-high models excel in reasoning tasks like coding, planning, and math.

- These users consider Google models to be the worst in these areas, with only Grok 3 and Claude 3.7 Thinking potentially rivaling o1 and o3-mini-high.

- GPT-4.5's Creative Writing Falls Flat: A member found GPT-4.5's creative writing inconsistent, citing logic errors, repetition, and occasional resemblances to GPT-4 Turbo.

- Although performance improved on subsequent runs, the user lamented the model's extreme message limit.

- DeepSeek Gets Booted From University Campuses: A member reported that their university banned DeepSeek, possibly due to its lack of guidelines or filters, which drastically degrades performance when avoiding illegal topics.

- The ban appears to target only DeepSeek, not other LLMs.

- ChatGPT Sandboxes Boundaries of Helpfulness: Members are experimenting with ChatGPT personality by exploring how the model responds to different prompts and system messages, showing example of an 'unhelpful assistant' roleplay.

- One member found it challenging to get the model out of the 'unhelpful' state in the GPT-4o sandbox without altering the system message.

OpenRouter (Alex Atallah) Discord

- Anthropic's Claude 3.7 Sonnet Stalls: Anthropic models, specifically Claude 3.7 Sonnet, experienced downtime and are now recovering.

- Users reported that Anthropic services appear to be stabilizing after the outage.

- Cline Compatibility Board Ranks Models: A community member has created a Cline Compatibility Board for ranking models based on their performance with Cline.

- The board provides details on API providers, plan mode, act mode, input/output costs, and max output for models like Claude 3.5 Sonnet and Gemini 2.0 Pro EXP 02-05.

- Gemini 2.0 Pro EXP-02-05 Has Glitches: The Gemini-2.0-pro-exp-02-05 model on OpenRouter is confirmed to be functional but experiences random glitches and rate limiting.

- According to the compatibility board, it is available at 0 cost, with an output of 8192.

- Gemini Models Go Manic in RP Scenarios?: Some users found Gemini models like gemini-2.0-flash-lite-preview-02-05 and gemini-2.0-flash-001 to be unstable in roleplaying scenarios, exhibiting manic behavior, even with a temperature setting of 1.0.

- However, other users reported absolutely no problem with 2.0 flash 001, finding it very coherent and stable at a temperature of 1.0.

- OpenRouterGo SDK v0.1.0 Launched: The OpenRouterGo v0.1.0, a Go SDK for accessing OpenRouter's API with a clean, fluent interface has been released.

- The SDK includes automatic model fallbacks, function calling, and JSON response validation.

aider (Paul Gauthier) Discord

- Nvidia Unveils Nemotron Open Weight LLMs: NVIDIA introduced the Llama Nemotron family of open-weight models, including Nano (8B), Super (49B), and Ultra (249B) models, with initial tests of the Super 49B model achieving 64% on GPQA Diamond in reasoning mode.

- The models have sparked interest in their reasoning capabilities and potential applications, with a tweet mentioning their announcement on X.

- DeepSeek R1 671B lands on SambaNova Cloud: DeepSeek R1 671B is now generally available on SambaNova Cloud with 16K context lengths and API integrations with major IDEs, gaining quick popularity after its launch, confirmed in a tweet from SambaNovaAI.

- The availability is extended to all developers, providing access to this large model for various applications.

- Aider Adds Web and PDF Reading Abilities: Aider now supports reading webpages from URLs and PDF files, usable with vision-capable models like GPT-4o and Claude 3.7 Sonnet via commands like

/add <filename>,/paste, and command line arguments, as documented here.- This feature enhances Aider's ability to process diverse information sources, though the relative value of each model continues to be debated.

- Gemini Canvas Expands Collaboration Tools: Google's Gemini has introduced enhanced collaboration features with Canvas, offering real-time document editing and prototype coding.

- This interactive space simplifies writing, editing, and sharing work with quick editing tools for adjusting tone, length, or formatting.

- Aider Ignores Repo Files with Aiderignore: Aider allows users to exclude files and directories from the repo map using the

.aiderignorefile, detailed in the configuration options.- This feature helps focus the LLM on relevant code, improving the efficiency of code editing.

Interconnects (Nathan Lambert) Discord

- Hunyuan Hypes T1 Hierarchy:

@TXhunyuanseeks collaborators to step into T1 models, questioning the availability of alphabet letters for naming reasoning models via a post on X.- The community debates potential names, considering the limited options left after numerous models have already claimed prominent letters.

- Samsung's ByteCraft Generates Games: SamsungSAILMontreal introduced ByteCraft, a generative model transforming text prompts into executable video game files, accessible via a 7B model and blog post.

- Early work requires steep GPU requirements, needing a max of 4 GPUs for 4 months.

- NVIDIA's DGX Spark and Station Revealed: NVIDIA unveiled its new DGX Spark and DGX Station personal AI supercomputers powered by the company’s Grace Blackwell platform.

- DGX Spark, formerly Project DIGITS, is a $3,000 Mac Mini-sized world’s smallest AI supercomputer geared towards AI developers, researchers, data scientists and students, for prototyping, fine-tuning and inferencing.

- California's AB-412 Threatens AI Startups: California legislators are reviewing A.B. 412, mandating AI developers to track and disclose every registered copyrighted work used in AI training.

- Critics fear this impossible standard could crush small AI startups and developers, while solidifying big tech's dominance, also AI2 submitted a recommendation to the Office of Science and Technology Policy (OSTP) advocating for an open ecosystem of innovation

- NVIDIA Hit with Unserious Marketing: NVIDIA is advertising H200 performance on an H100 node, and a 1.67x speedup of B200 vs H200 after going from FP8 to FP4 and some are describing NVIDIA marketing as so unserious via these tweets and here.

- NVIDIA claims the world’s fastest DeepSeek-R1 inference, with a single system using eight Blackwell GPUs in an NVL8 configuration delivering 253 TPS/user or 30K TPS system throughput on the full DeepSeek-R1 671B parameter model and more details available at NVIDIA's website.

Yannick Kilcher Discord

- OpenAI is a Triple Threat: A member noted OpenAI's comprehensive strength in model development, product application, and pricing strategies, setting them apart in the AI landscape.

- Other companies may specialize in only one or two of these critical areas, but OpenAI excels across the board.

- AI Companionships Spur Addictive Sentiments: Concerns are emerging as AI agents, like vocal assistants, foster addictive tendencies, leading some users to develop emotional attachments.

- This trend raises ethical questions about intentionally designed addictive features and their potential impact on user dependency, with discussions on whether companies should avoid features that might enhance such behaviors.

- Smart Glasses: Data Harvesting Disguised as Urban Chic?: A discussion questioned Meta and Amazon's smart glasses, suggesting a data harvesting intent, particularly egocentric views, curated for robotics companies.

- A member joked about a smart glasses startup idea for villains, highlighting features such as emotion detection, dream-state movies, and shared perspectives, creating a dependence feedback-loop to collect user data and train models.

- AI Art Copyright: No Bot Authors Allowed!: A U.S. appeals court affirmed that AI-generated art without human input cannot be copyrighted under U.S. law, supporting the U.S. Copyright Office's stance on Stephen Thaler's DABUS system; see Reuters report.

- The court emphasized that only works with human authors can be copyrighted, marking the latest attempt to grapple with the copyright implications of the fast-growing generative AI industry.

- Llama 4 Arriving Soon?: Rumors suggest that Llama 4 might be released on April 29th.

- This speculation is linked to Meta's upcoming event, Llamacon 2025.

Perplexity AI Discord

- Perplexity Judged Dumber Than Claude: A user stated that Perplexity feels dumber than Claude 3.5, citing issues with context retention and abstract generation.

- It was suggested to use incognito mode or disable AI data retention to prevent data storage, noting that new chats provide a clean slate.

- O1 Hype Dwindles Due to Paywall: Community testing suggests initial overestimation of O3 mini and underestimation of o1 and o1 pro, with the paywall significantly dampening enthusiasm.

- One user reported that R1 is useless most of the time, with

o3-miniyielding better results for debuggingjscode.

- One user reported that R1 is useless most of the time, with

- Oblix Project Transitions Between Edge and Cloud: The Oblix Project orchestrates between local and cloud models using agents to monitor system resources, according to a demo video.

- The project dynamically switches execution between cloud and on-device models.

- Perplexity API Responds Erratically: A user reported random response issues with the Perplexity API, specifically during hundreds of rapid web searches.

- The code either receives only random responses or ignores random queries when calling the

conductWebQueryfunction in quick repetition, potentially due to implementation errors.

- The code either receives only random responses or ignores random queries when calling the

Notebook LM Discord

- GDocs Layouts Get Mangled!: Users found that converting to GDocs mangles the layout and fails to import most images in teacher presentations, and found that extracting text (using pdftotext) and converting to image-only format helps with grounding.

- Image-only PDFs can expand to >200MB, requiring splitting due to NLM's file size limit.

- NotebookLM: One-Man (or Woman) Band!: Users find that with customized function you can make it to do anything you want (solo episode being man or female, mimic persona, narrate stories, read word for word).

- Only your imagination is the limit.

- Podcast Casual Mode: Cursing Up a Storm!: Feedback indicates that the casual podcast mode may contain profanity.

- It is unclear whether a clean setting is available.

- Line Breaks Can't Be Forced in NotebookLM: Users can't force line breaks and spacing in NotebookLM's responses, because the AI adds them on-demand and it's not something the user can configure at the moment.

- As an alternative to audio overviews, a user was advised to download the audio and upload it as a source to generate a transcript.

- Mind Map Feature Rolls Out Gradually: Users discussed the new Mind Map feature in NotebookLM, which visually summarizes uploaded sources, see Mind Maps - NotebookLM Help.

- The feature is being rolled out gradually to more users, for bug control: It's better to release something new only for a limited number of people that increases over time first, because then there is time to rule out any bugs that emerge and provide a cleaner version by the time everyone gets it.

MCP (Glama) Discord

- Listing Smithery Registries via Glama API: A member utilized the Glama API to enumerate GitHub URLs and verify the existence of smithery.yaml files, describing the code as a one time hack job script.

- They considered creating a gist if there was sufficient interest, highlighting its nature as a one time script.

- Spring App Questions Spring-AI-MCP-Core: A user is exploring MCP for the first time in Open-webui and a basic Spring app using spring-ai-mcp-core, and seeks resources beyond ClaudeMCP and the modelcontextprotocol GitHub repo.

- They questioned how MCP compares to GraphQL or function calling, and how it handles system prompts and multi-agent systems.

- Claude Code MCP Implementation Released: A member unveiled a Claude Code MCP implementation of Claude Code as a Model Context Protocol (MCP) server.

- They sought assistance with the json line for claude_desktop_config.json for Claude Desktop integration but resolved the problem.

- DuckDuckGo MCP Framework Built for Cursor on Windows: A member created their own DuckDuckGo MCP on a Python framework for Cursor on Windows because existing NPM projects failed.

- It supports web, image, news, and video searches without requiring an API key and is available on GitHub.

- MCP Python REPLs Gain Traction: Members exchanged views on hdresearch/mcp-python, Alec2435/python_mcp and evalstate/mcp-py-repl as Python REPLs for MCP.

- A concern was raised that one implementation wasn't isolated at all which could lead to a disaster, suggesting Docker be used to sandbox access.

Nous Research AI Discord

- Phi-4 could be your Auxiliary: Members discussed Phi-4 as a useful auxiliary model in complex systems, highlighting its direction-following, LLM interfacing, and roleplay abilities.

- The claim is that it would be useful as an auxiliary model in a complex system where you already had a bunch of other models.

- Claude's parameter listing errors: A user critiqued Claude's AI suggestions, citing model size inaccuracies, as the models listed were not under 10m parameters as requested, see the Claude output.

- A member defended that '10m' could be taken as a shorthand for 'very light models that are generally accessible on modern hardware'.

- "Vibe Coding" is background learning: A member shared an anecdote about learning Japanese through immersion, paralleling it with "vibe coding" for skill acquisition.

- They added that Even vibe coding, you still have to worry about things like interfaces between modules so that you can keep scaling with limited LLM context windows.

- Nvidia Drops Massive Coding Dataset: A user shared Nvidia's open-sourced instruct coding dataset for improving math, code, general reasoning, and instruction following in the Llama instruct model, including data from DeepSeek-R1 and Qwen-2.5.

- Another member who downloaded the dataset reported that it would be interesting to filter and train.

- Limited VRAM spurs niche tuning: A member asked for help finding a niche for training a model with limited VRAM such as an RTX 3080.

- Discussion included various QLoRA experiments, and a suggestion to fine tune on code editing.

LMArena Discord

- LMArena faces Slow Demise?: A member questioned the quality of testers on LMArena, wondering "Where are all the real testers, improvers, and real thinkers?" and shared a Calm Down GIF.

- This suggests community concern about the platform's direction or engagement, possibly its user experience and community support.

- Perplexity/Sonar must beat OpenAI/Google: A member speculates that if Perplexity/Sonar isn't the top web-grounded search, the company will struggle to maintain uniqueness against OpenAI or Google.

- Another member noted that "no one really uses sonar on perplexity though", suggesting it is mostly pro subscriptions driving revenue.

- Gemini Deep Research Saves Time 10x: The latest update in Gemini Deep Research saves one member time by 10x, but may be too expensive for LMArena benchmarking.

- Another member added context that Gemini gave great results, providing an insightful analysis for scientific medical research, generating even a list of 90 literature sources.

- LeCun Debunks Zuckerberg's AGI Hype: Yann LeCun warned that achieving AGI "will take years, if not decades," requiring new scientific breakthroughs.

- A more recent article said that Meta LLMs will not get to human level intelligence until 2025.

- Grok 3 Deeper Search is Disappointing: One user found Grok 3's deepersearch feature to be disappointing, citing hallucinations and low-quality results.

- However, another user defended Grok, stating that "deepersearch seems pretty good" but the original commenter rebutted that frequent usage reveals numerous mistakes and hallucinations.

GPU MODE Discord

- Gemma 3 Quantization Calculations: A user inquired about running Gemma 3 on an M1 Macbook Pro (16GB), to which another user explained how to calculate the size requirement based on model size and quantization in bytes, suggesting the Macbook can run Gemma 3 4B in FP16.

- The user explained that a 12B model in FP4 could also be run, given the Macbook's 16GB unified memory with 70% allocated to the GPU.

- Blackwell ULTRA's attention instruction Piques Interest: A member mentioned that Blackwell ULTRA would bring an attention instruction, but its meaning remains unclear to them.

- Additionally, members discussed that if the smem carveout for kernel1 is only 100 or 132 KiB, there's not enough space for both kernels to run simultaneously, suggesting increasing the carveout using the CUDA documentation on Shared Memory.

- Nvfuser's Matmul Output Fusions Introduce Stalls: A member noted that implementing matmul output fusions for nvfuser is difficult, and even with multiplication/addition, it introduces stalls, making it slower than separate kernels due to the need to keep tensor cores fed.

- Another member inquired whether the difficulty arises because Tensor Cores and CUDA Cores cannot run concurrently, potentially fighting for register usage, while referencing Blackwell docs stating that TC and CUDA cores can now run concurrently.

AcceleratePrepares to Merge FSDP2 Support: A member asked whetheraccelerateuses FSDP1 or FSDP2, and whether it is possible to fine-tune an LLM using FSDP2 withtrl, to which it was clarified that a pull request will be merged ~next week to add initial support for FSDP2 here.- After the member clarified the details on when FSDP2 support would be added, the other member said "This is exciting! Thanks for the clarification!", emphasizing user anticipation around the arrival of FSDP2 support in

accelerate.

- After the member clarified the details on when FSDP2 support would be added, the other member said "This is exciting! Thanks for the clarification!", emphasizing user anticipation around the arrival of FSDP2 support in

- DAPO Algorithm Debuts with Open-Source Release: The DAPO algorithm (decoupled clip and dynamic sampling policy optimization) was released; DAPO-Zero-32B surpasses DeepSeek-R1-Zero-Qwen-32B, scoring 50 on AIME 2024 with 50% fewer steps; it is trained with zero-shot RL from the Qwen-32b pre-trained model, and the algorithm, code, dataset, verifier, and model are fully open-sourced, built with Verl.

Modular (Mojo 🔥) Discord

- Nvidia unveils Blackwell Ultra and Ruben: At a recent keynote, Nvidia announced the Blackwell Ultra and Ruben, along with the next GPU generation named Feynman.

- With Ruben, Nvidia is shifting to silicon photonics to save on data movement power costs; Ruben will feature a new ARM CPU, alongside major investments in Spectrum X, which is launching a 1.6 Tbps switch.

- CompactDict Attracts Attention for SIMD Fix: Members discussed the advantages of CompactDict, a custom dictionary implementation that avoids the SIMD-Struct-not-supported issue found in the built-in Dict.

- A report from a year ago was published on GitHub detailing two specialized Dict implementations: one for Strings and another forcing the implementation of the trait Keyable.

- HashMap Inclusion Debated for Mojo Standard Library: A suggestion was made to include the generic_dict in the standard library as HashMap, while maintaining the current Dict.

- Concerns were raised about Dict needing to do a lot of very not-static-typed things, and that it may be more valuable to add a new struct with a better design and deprecate Dict over time.

- List.fill Behavior Creates Unexpected Length Changes: Users questioned whether filling uninitialized parts of the lists buffer should be optional, since calling List.fill can surprisingly change the length of the list.

- It was suggested that making the filling of uninitialized parts of the lists buffer optional would resolve this issue.

- No Index Out of Range Check in Lists: A user noticed the absence of an index out of range check in List, expressing surprise since they thought that was what unsafe_get was for.

- Another member has run into this issue also, with someone from Modular saying it needs to be added at some point.

Latent Space Discord

- Patronus AI Judges Honesty at Etsy: Patronus AI launched an MLLM-as-a-Judge to evaluate AI systems, already implemented by Etsy to verify caption accuracy for product images.

- Etsy has hundreds of millions of items and needs to ensure their descriptions are accurate and not hallucinated.

- Cognition AI Secures $4B Valuation: Cognition AI reached a $4 billion valuation in a deal led by Lonsdale's firm.

- Further details on the deal were not supplied.

- AWS Undercuts Nvidia: During the GTC March 2025 Keynote, it was reported that AWS is pricing Trainium at 25% the price of Nvidia chips (hopper), according to this tweet.

- Jensen joked that after Blackwell, they could give away a Hopper since Blackwell will be so performant.

- Manus Access Impresses Trading Bot User: A member got access to Manus with deeper search via Grok3 and said it's good, showcasing how it built a trading bot over the weekend, but is currently down ~$1.50 in paper trading.

- They showed off impressive output, teasing sneak peek screenshots.

- vLLM: The Inference ffmpeg: vLLM is slowly becoming the ffmpeg of LLM inference, according to this tweet.

- The tweet expresses gratitude for the trust in vLLM.

Eleuther Discord

- Fine-tuning Gemini/OLMo Models Gets Hot: Members are seeking advice on fine-tuning Gemini or OLMo models and considering whether distillation would be a better approach, especially with data in PDF files.

- The discussion evolved into memory optimization and hybrid setups for enhanced performance rather than specifics on which models to finetune.

- Passkey Performance gets Fuzzy: A member suggested improving the passkey and fuzzy rate for important keys to nearly 100% using hybrid approaches or a memory expert activated by the passkey, as visualized in scrot.png.

- They noted that larger models will have longer memories, illustrating the improvement from 1.5B to 2.9B parameters.

- Latent Activations Reveal Full Sequences: A poster argues that one should generate latent activations from entire sequences rather than individual tokens to understand a model's normal behavior.

- They suggest that focusing on entire sequences provides a more accurate representation of the model behavior and provided example code using

latents = get_activations(sequence).

- They suggest that focusing on entire sequences provides a more accurate representation of the model behavior and provided example code using

- Cloud Models Demand API Keys: Members are asking if cloud-based models, which cannot be hosted locally, are compatible with API keys.

- Another member confirmed that they do, pointing to a previously provided link for details.

LlamaIndex Discord

- Hugging Face Teaches LlamaIndex Agents: Hugging Face released a free course about building agents in LlamaIndex, covering components, RAG, tools, agents, and workflows (link).

- The course dives into the intricacies of LlamaIndex agents, offering a practical guide for developers looking to build AI-powered applications.

- Google & LlamaIndex Simplify AI Agents: LlamaIndex has partnered with Google Cloud to simplify building AI agents using the Gen AI Toolbox for Databases (link).

- The Gen AI Toolbox for Databases manages complex database connections, security, and tool management, with more details available on Twitter.

- LlamaIndex vs Langchain Long Term Memory: A member inquired about whether LlamaIndex has a feature similar to Langchain's long-term memory support in LangGraph.

- Another member pointed out that "long term memory is just a vector store in langchain's case" and suggested using LlamaIndex's Composable Memory.

- Nebius AI Platform Compared to Giants: A member is curious about real-world experiences with Nebius's computing platform for AI and machine learning workloads, including their GPU clusters and inference services.

- They are comparing it to AWS, Lambda Labs, or CoreWeave in terms of cost, scalability, and ease of deployment, and would like to know about stability, networking speeds, and orchestration tools like Kubernetes or Slurm.

Cohere Discord

- Cohere Expanse 32B: Knowledge Cutoff Date Sought: A user inquired about the knowledge cutoff date for Cohere Expanse 32B, as they are seeking new work.

- No further information or responses were provided regarding the specific cutoff date.

- Trial Key Users Bump into Rate Limits: A user reported experiencing a 429 error with their trial key, seeking guidance on tracking usage and determining if they exceeded the 1000 calls per month limit, as described in the Cohere rate limits documentation.

- A Cohere team member offered assistance and clarified that trial keys are indeed subject to rate limits.

- Websearch Connector's Results Degrade: A user reported degraded performance with the websearch connector, noting that the implementation changed recently and now provides worse results.

- A team member requested details to investigate and noted that the connection option site: WEBSITE was failing to restrict queries to specific websites, and this fix is going out soon.

- Command-R-Plus-02-2024 vs Command-A-03-2025: A Comparison: A user tested and compared websearch results between models command-r-plus-02-2024 and command-a-03-2025, discovering no significant differences between the models.

- They additionally reported multiple instances where the websearch functionality failed to return any results.

- Goodnews MCP: LLM Delivers Uplifting News: A member created a Goodnews MCP server that delivers positive news to MCP clients via Cohere Command A, open sourced at this Github repo.

- The tool, named

fetch_good_news_list, ranks recent headlines using Cohere LLM to identify and return the most positive articles.

- The tool, named

LLM Agents (Berkeley MOOC) Discord

- MOOC Coursework Details Released: Coursework and completion certificate instructions for the LLM Agents MOOC have been released on the course website, building upon the fundamentals from the Fall 2024 LLM Agents MOOC.

- Labs and the Certificate Declaration form will drop in April, with assignments tentatively due at the end of May and certificates released in June.

- AgentX Competition has 5 Tiers: Details for the AgentX Competition have been shared, including information on how to sign up here, and it includes 5 Tiers: Trailblazer ⚡, Mastery 🔬, Ninja 🥷, Legendary 🏆, Honorary 🌟.

- Participants can also apply for mentorship on an AgentX Research Track project via this application form, with the application deadline set for March 26th at 11:59pm PDT.

- Quizzes Due End of May: All assignments, including quizzes released after every lecture, are due by the end of May, so you can still submit them to be eligible for the certificate.

- To clarify the selection criteria for the AgentX research track isn't about 'we will only accept the top X percent'.

- Guidance on AgentX Research Track Projects: Guidance will be offered on research track projects from March 31st to May 31st, and mentors will contact applicants directly for potential interviews.

- Applicants should demonstrate proactivity, a well-thought-out research idea relevant to the course, and the background to pursue the idea within the two-month timeframe.

- Troubleshooting Certificate Issues: A member who took the MOOC course in December reported not receiving the certificate, a mentor replied that the certificate email was sent on Feb 6th and advised checking spam/trash folders and ensuring the correct email address was used.

- The mentor shared that the certificate is open to anyone who completes one of the coursework tiers and shared a link to the course website and the google doc.

Torchtune Discord

- FL Setup Finally Functions: After a 4-month wait due to IT delays, a member's FL setup is finally functional, showcased in this image.

- The prolonged delay highlights common challenges in getting necessary infrastructure operational in large organizations.

- Nvidia GPUs face perpetual availability delays: Members report ongoing delays in Nvidia GPU availability, citing H200s as an example, which were announced 2 years ago but only available to customers 6 months ago.

- Such delays impact development timelines and resource planning for AI projects.

recvVectorandsendBytestrigger DPO Debacles: Users reportedrecvVector failedandsendBytes failederrors when using DPO recipes.- The errors' origins are uncertain, possibly stemming from cluster issues or problems with torch.

cudaGetDeviceCountCries for Compatibility: Members encounteredRuntimeError: Unexpected error from cudaGetDeviceCount()while using NumCudaDevices.- The error

Error 802: system not yet initializedmight stem from using a newer CUDA version than anticipated, although this is unconfirmed.

- The error

nvidia-fabricmanagerFulfills CUDA Fix: Usingnvidia-fabricmanageris the resolution for thecudaGetDeviceCounterror.- The correct process includes starting it with

systemctl start nvidia-fabricmanagerand confirming the status withnvidia-smi -q -i 0 | grep -i -A 2 Fabric, verifying the state shows "completed".

- The correct process includes starting it with

tinygrad (George Hotz) Discord

- M1 Macs Struggle with Model Training: A user reported that their M1 Mac Air struggles to train models even in small batches, facing clang issues with Kaggle and Hugging Face Spaces.

- They sought advice on hosting a demo of inference on a trained model but found the hardware underpowered for even basic training tasks.

- DeepSeek-R1 Optimized for Home Use: 腾讯玄武实验室's optimization方案 allows home deployment of DeepSeek-R1 on consumer hardware, costing only 4万元 and consuming power like a regular desktop, as noted in this tweet.

- The optimized setup generates approximately 10汉字/second, achieving a 97% cost reduction compared to traditional GPU setups, potentially democratizing access to powerful models.

- Clang Dependency Needs Better Error Handling: A contributor suggested improving dependency validation for the

FileNotFoundErrorwhen running the mnist example on CPU without clang.- The current error message does not clearly indicate the missing clang dependency, potentially confusing new users.

- Confused Index Selection for REDUCE_LOAD: A member requested clarification on the meaning of

x.src[2].src[1].src[1]and the reasons for selecting these indices asreduce_inputfor the REDUCE_LOAD pattern.- The code snippet checks if

x.src[2].src[1].src[1]is not equal tox.src[2].src[0], and accordingly assigns eitherx.src[2].src[1].src[1]orx.src[2].src[1].src[0]toreduce_input.

- The code snippet checks if

AI21 Labs (Jamba) Discord

- AI21 Labs Keeps Mum on Jamba: AI21 Labs is not publicly sharing information about what they are using for Jamba model development.

- A representative apologized for the lack of transparency but indicated that they would provide updates if the situation changes.

- New Faces Join the Community: The community welcomed several new members including <@518047238275203073>, <@479810246974373917>, <@922469143503065088>, <@530930553394954250>, <@1055456621695868928>, <@1090741697610256416>, <@1350806111984422993>, and <@347380131238510592>.

- They are encouraged to participate in a community poll to engage.

DSPy Discord

- Chain Of Draft Reproducibility is Here: A member used

dspy.ChainOfThougtto reproduce the Chain of Draft technique, detailing the process in a blog post.- This validates the method for reliably using DSPy to reproduce advanced prompting strategies.

- Chain Of Draft Technique Cuts Tokens: The Chain Of Draft prompt technique helps the LLM expand its response without excessive verbosity, cutting output tokens by more than half.

- Further details on the method are available in this research paper.

MLOps @Chipro Discord

- AWS Webinar Teaches MLOps Stack Construction: A webinar on March 25th at 8 A.M. PT will cover building an MLOps Stack from Scratch on AWS, registration available at this link.

- The webinar aims to provide an in-depth discussion on constructing end-to-end MLOps platforms.

- AI4Legislation Seminar Spotlights Legalese Decoder: The AI4Legislation Seminar, featuring Legalese Decoder Founder William Tsui and foundation President Chunhua Liao, is scheduled for Apr 2 @ 6:30pm PDT (RSVP here).

- The seminar is part of the Silicon Valley Chinese Association Foundation's (SVCAF) efforts to promote AI in legislation.

- Featureform Simplifies ML Model Features: Featureform is presented as a virtual feature store, enabling data scientists to define, manage, and serve features for their ML models.

- It focuses on streamlining the feature engineering and management process in ML workflows.

- SVCAF Competition Boosts Open Source AI for Legislation: The Silicon Valley Chinese Association Foundation (SVCAF) is hosting a summer competition focused on developing open-source AI-driven solutions to enhance citizen engagement in legislative processes (Github repo).

- The competition seeks to foster community-driven innovation in applying AI to legislative challenges.

Nomic.ai (GPT4All) Discord

- GPT4All Details Default Directories: The GPT4All FAQ page on Github describes the default directories for models and settings.

- This Github page also provides additional information about GPT4All.

- GPT4All Models' Default Location: The default location for GPT4All models is described in the FAQ.

- Knowing this location can help with managing and organizing models.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!