[AINews] Evals-based AI Engineering

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/27/2024-3/29/2024. We checked 5 subreddits and 364 Twitters and 25 Discords (377 channels, and 5415 messages) for you. Estimated reading time saved (at 200wpm): 615 minutes.

Evals are the "eat your vegetables" of AI engineering - everyone knows they should just do more of it:

Hamel Husain has yet another banger in his blog series: Your AI Product Needs Evals:

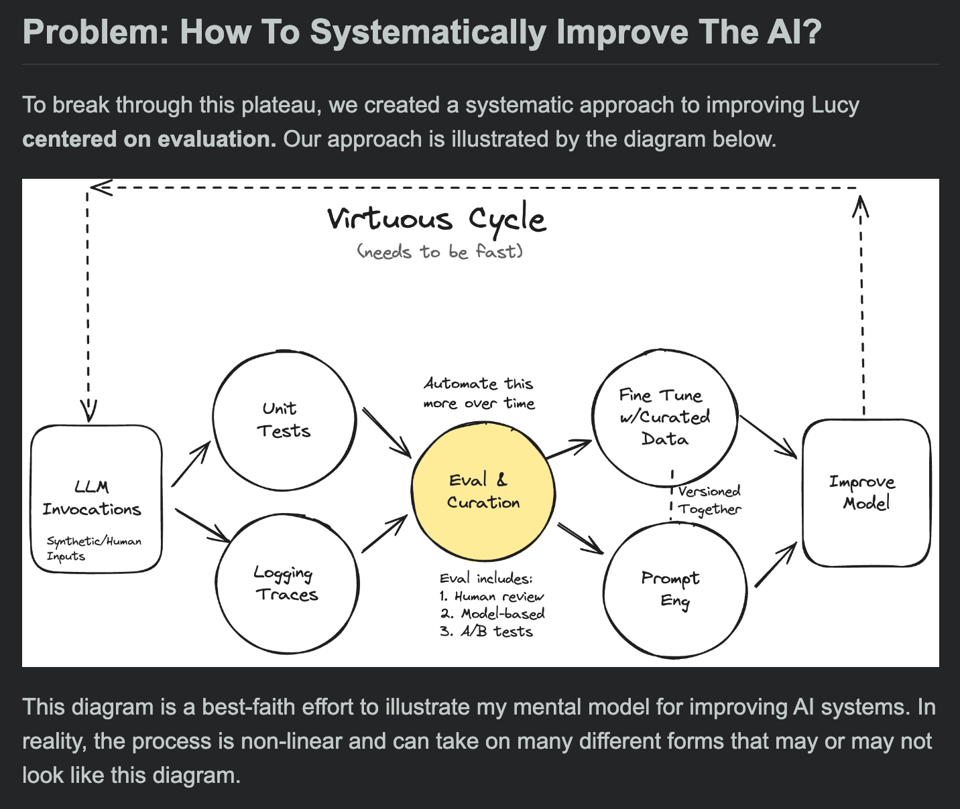

Like software engineering, success with AI hinges on how fast you can iterate. You must have processes and tools for:

- Evaluating quality (ex: tests).

- Debugging issues (ex: logging & inspecting data).

- Changing the behavior or the system (prompt eng, fine-tuning, writing code)

Many people focus exclusively on #3 above, which prevents them from improving their LLM products beyond a demo. Doing all three activities well creates a virtuous cycle differentiating great from mediocre AI products (see the diagram below for a visualization of this cycle).

We are guilty of this at AINews - our loop is slow and hence the product improvement pace has also been much slower than we would want to see. Hamel proposes a mental model to center on evals:

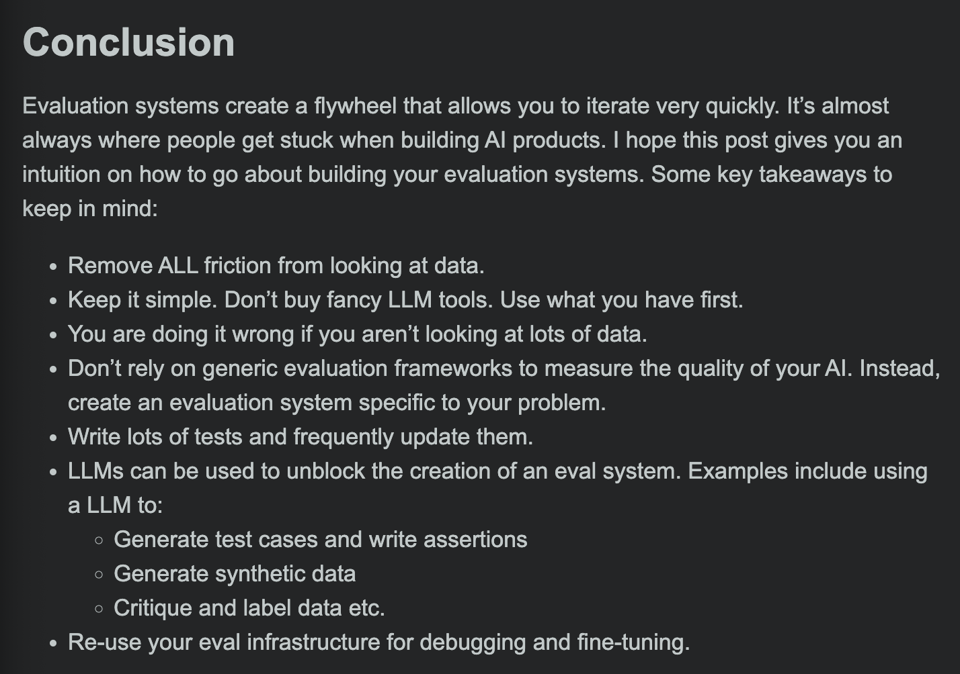

Excerpts we liked:

- You must remove all friction from the process of looking at data.

- Many vendors want to sell you tools that claim to eliminate the need for a human to look at the data. but You should track the correlation between model-based and human evaluation to decide how much you can rely on automatic evaluation.

- Eval Systems Unlock Superpowers For Free. In addition to iterating fast, having good evals unlock the ability to finetune and synthesize data.

The post has a lot of practical advice on how to make these "sensible things" easy, like using spreadsheets for hand labeling or hooking up LangSmith (which doesn't require LangChain).

Obligatory AI Safety PSA: OpenAI today released some samples of their rumored Voice Engine taking a 15s voice samples and successfully translating to different domains and languages. It's a nice demo and is great marketing for HeyGen, but more importantly they are trying to warn us that very very good voice cloning from small samples is here. Take Noam's word for it (who is at OpenAI but not on the voice team):

Alec Radford does not miss. We also enjoyed Dwarkesh's pod with Sholto and Trenton.

Table of Contents

- AI Reddit Recap

- AI Twitter Recap

- PART 0: Summary of Summaries of Summaries

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- LM Studio Discord

- OpenAI Discord

- Eleuther Discord

- Nous Research AI Discord

- Modular (Mojo 🔥) Discord

- HuggingFace Discord

- LAION Discord

- LlamaIndex Discord

- OpenInterpreter Discord

- CUDA MODE Discord

- OpenRouter (Alex Atallah) Discord

- AI21 Labs (Jamba) Discord

- tinygrad (George Hotz) Discord

- Latent Space Discord

- OpenAccess AI Collective (axolotl) Discord

- LangChain AI Discord

- Interconnects (Nathan Lambert) Discord

- DiscoResearch Discord

- Skunkworks AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

New Models and Architectures:

- Jamba: The first production-grade Mamba-based model using a hybrid Transformer-SSM architecture, outperforming models of similar size. (Introducing Jamba - Hybrid Transformer Mamba with MoE)

- Bamboo: A new 7B LLM with 85% activation sparsity based on Mistral's weights, achieving up to 4.38x speedup using hybrid CPU+GPU. (Introducing Bamboo: A New 7B Mistral-level Open LLM with High Sparsity)

- Qwen1.5-MoE: Matches 7B model performance with 1/3 activated parameters. (Qwen1.5-MoE: Matching 7B Model Performance with 1/3 Activated Parameters)

- Grok 1.5: Beats GPT-4 (2023) in HumanEval code generation and has 128k context length. (Grok 1.5 now beats GPT-4 (2023) in HumanEval (code generation capabilities), but it's behind Claude 3 Opus, X.ai announces grok 1.5 with 128k context length)

Quantization and Optimization:

- 1-bit Llama2-7B: Heavily quantized models outperforming smaller full-precision models, with a 2-bit model outperforming fp16 on specific tasks. (1-bit Llama2-7B Model)

- QLLM: A general 2-8 bit quantization toolbox with GPTQ/AWQ/HQQ, easily converting to ONNX. (Share a LLM quantization REPO , (GPTQ/AWQ/HQQ ONNX ONNX-RUNTIME))

- Adaptive RAG: A retrieval technique dynamically adapting the number of documents based on LLM feedback, reducing token cost by 4x. (Tuning RAG retriever to reduce LLM token cost (4x in benchmarks), Adaptive RAG: A retrieval technique to reduce LLM token cost for top-k Vector Index retrieval [R])

Stable Diffusion Enhancements:

- Hybrid Upscaler Workflow: Combining SUPIR for highest quality with 4x/16x quick upscaler for speed. (Hybrid Upscaler Workflow)

- IPAdapter V2: Update to adapt old workflows to the new version. (IPAdapter V2 update old workflows)

- Krita AI Diffusion Plugin: Fun to use for image generation. (Krita AI Diffusion Plugin is so much fun (link in thread))

Humor and Memes:

- AI Lion Meme: Humorous image of a lion. (AI Lion Meme)

- "No, captain! What you don't get is that these plants are Alive!!": Humorous text-to-video generation. ("No, captain! What you don't get is that these plants are Alive!! & they are overwhelming us... what's that? Yes! Marijuana Leaves! Hello Captain? Hello!!" (TEXT TO VIDEO- SDCN for A1111, no upscale))

- "Filming animals at the zoo": Humorous image of a person filming animals. ("Filming animals at the zoo")

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Models and Architectures

- New open-source models released: @AI21Labs introduced Jamba, an open SSM-Transformer model based on Mamba architecture, achieving 3X throughput and fitting 140K context on a single GPU. @databricks released DBRX, setting new SOTA on benchmarks like MMLU, HumanEval, and GSM 8k. @AlibabaQwen released Qwen1.5-MoE-A2.7B, a MoE model with 2.7B activated parameters that can achieve 7B model performance.

- Quantization advancements: @maximelabonne shared a Colab notebook comparing FP16 vs. 1-bit LLama 2-7B models quantized using HQQ + LoRA, with SFT greatly improving the quantized models, enabling fitting larger models into smaller memory footprints.

- Mixture of Experts (MoE) architectures: @osanseviero provided an overview of different types of MoE models, including pre-trained MoEs, upcycled MoEs, and FrankenMoEs, which are gaining popularity for their efficiency and performance benefits.

AI Alignment and Factuality

- Evaluating long-form factuality: @quocleix introduced a new dataset, evaluation method, and aggregation metric for assessing the factuality of long-form LLM responses using LLM agents as automated evaluators through Search-Augmented Factuality Evaluator (SAFE).

- Stepwise Direct Preference Optimization (sDPO): @_akhaliq shared a paper proposing sDPO, an extension of Direct Preference Optimization (DPO) for alignment tuning that divides available preference datasets and utilizes them in a stepwise manner, outperforming other popular LLMs with more parameters.

AI Applications and Demos

- AI-powered GTM platform: @perplexity_ai partnered with @copy_ai to create an AI-powered Go-To-Market platform offering real-time market insights, with Copy AI users receiving 6 months of Perplexity Pro for free.

- Journaling app with long-term memory: @LangChainAI introduced LangFriend, a journaling app leveraging memory capabilities for a personalized experience, available to try with a developer-facing memory API in the works.

- AI avatars and video generation: @BrivaelLp showcased an experiment with Argil AI, creating entirely AI-generated content featuring an AI Barack Obama teaching quantum mechanics, demonstrating AI's potential to revolutionize user-generated and social content creation.

AI Community and Events

- Knighthood for services to AI: @demishassabis, CEO and co-founder of @GoogleDeepMind, was awarded a Knighthood by His Majesty for services to Artificial Intelligence over the past 15 years.

- AI Film Festival: @c_valenzuelab announced the second annual AI Film Festival in Los Angeles on May 1 to showcase the best in AI cinema.

PART 0: Summary of Summaries of Summaries

1) New AI Model Releases and Architectures:

- AI21 Labs unveils Jamba, a hybrid SSM-Transformer model with 256K context window, 12B active parameters, and 3X throughput for long contexts. Open weights under Apache 2.0 license.

- Qwen1.5-MoE-A2.7B, a Transformer-based MoE model matching 7B performance with only 2.7B activated parameters.

- LISA algorithm enables 7B parameter fine-tuning on 24GB GPU, outperforming LoRA and full training for instruction tasks.

- SambaNova unveils Samba-1, a Composition of Experts (CoE) model claiming reduced compute needs and higher performance, though transparency concerns exist.

2) Open Source Collaboration and Community Projects:

- Modular open-sources Mojo standard library under Apache 2 license, with nightly builds and a community livestream covering updates.

- LlamaIndex blog shares optimizations like Int8 and Binary Embeddings for RAG pipelines by Cohere to reduce memory and costs.

- Community showcases include Tinyllama and Mischat models leveraging Unsloth notebooks.

- OpenGPTs project gets a dedicated <#1222928565117517985> channel for collaboration on the open-source platform.

3) Model Evaluation, Benchmarking and Datasets:

- Discussions on reliability of existing AI benchmarks like HuggingFace Chatbot Arena which includes human evaluation.

- Sharing of long context datasets like MLDR on HuggingFace for training models requiring extensive sequences.

- Plans for translation model battle between DiscoLM, Occiglot, Mixtral, GPT-4, DeepL, Azure Translate on Capybara dataset.

- Shure-dev mission to curate high-quality LLM research papers as a resource for the rapidly evolving field.

4) Local LLM Deployment and Hardware Optimization:

- Guidance on running LLMs locally with LM Studio, Open Interpreter, and hardware like RTX 3090 GPUs.

- Recommendations for power supply sizing (1200-1500W) and efficient GPU power limits (350-375W for RTX 4090).

- Discussions on VRAM requirements for training large models like LLaMa 7B with QLoRA and LoRA.

- Windows Subsystem for Linux (WSL2) suggested for running CUDA/Torch on Windows gaming machines.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Supercharging SD3 with Turbo Techniques: Enthusiasm buzzes around SD3 Turbo to provide a high-detail model similar to SDXL Lightning, with discussions hinting at promising performance on 12GB VRAM systems.

- Balancing Power & Accessibility in AI Art: Debate centers on potential SD3 feature downscaling for broader accessibility; suggestions include providing control nets and fine-tuning tools upon launch to empower users.

- Training LoRA with SD3 Under the Microscope: The likelihood of training LoRA models using SD3 with 24GB of VRAM is mulled over, with questions about the transition of these tools to SD3 after its release remaining unanswered.

- Smaller GPUs Tackling Big Training Ambitions: Success stories of training smaller LoRA models on 16GB VRAM GPUs emerge, highlighting optimisations such as Aggressive train rates and network dropouts.

- Arc2Face Steals the Spotlight: Arc2Face entices members with its face manipulation prowess amid jests about alien technology and dataset censorship controversies.

Perplexity AI Discord

DBRX Takes the Limelight: DBRX, a newfound heavyweight in language models by Databricks, steals the show at Perplexity Labs, outshining GPT-3.5 and neck-to-neck with Gemini 1.0 Pro on tasks demystifying math and coding challenges. A green light is given to test-run DBRX for free at Perplexity Labs, throwing the gauntlet down for AI connoisseurs.

Mark Your Calendars for Copy AI and Perplexity Alliance: The fusion of Copy AI's platform with Perplexity's state-of-the-art APIs points to an uprising in go-to-market strategies, lighting the path for real-time market acumen. For users leveraging Copy AI, a half-year access to Perplexity Pro cuts the ribbon, and it's all spelled out in their co-authored blog post.

In Search of Perfection with Perplexity: Users are scratching their heads over the hit-or-miss performance of academic focus mode in Perplexity's search capabilities, puzzled by intermittent outages. Improvement in the Pro Search spaces and conflicting tales of file sources dominated discussions, with a spotlight on possibly employing RAG or GPT-4-32k technology for diverse file processing.

Tuning into Enhanced Scratchpad Tactics: The community exchanges notes on drawing out the best from Perplexity; one user gives a hands-on demo using <scratchpad> XML tags, and space enthusiasts fling questions at the AI about Starship and astronautics. Users also threw in finance-flavored queries, probing into Amazon's monetary moves and the FTX conundrum.

API Adventures and Misadventures: Queries abound regarding the Perplexity AI API's unpredictable behavior, where search results are sometimes lost in the web/API rift while hunting for answers, veering off from the steadiness promised on the web interface. For those thirsting for beta feature participation, including coveted URL citations, a beeline can be made to Apply for beta features, keeping API fanatics at the edge of their seats.

Unsloth AI (Daniel Han) Discord

- Jamba Juices Up AI Debate: The discussion was sparked by the announcement of Jamba by AI21 Labs, a model that integrates Mamba SSM with Transformers. Engineers debated Jamba's capacity, tokenization, and lack of transparency in token counts.

- Quantifying Model Behaviors: Conversations arose around evaluating open-ended generation in models. To assist, various resources, including the paper Are Emergent Abilities of Large Language Models a Mirage? available here, were shared to provide clarity on perplexity and pairwise ranking metrics.

- Fine-Tuning Face-Off: There was robust sharing of fine-tuning strategies on models like Mistral, involving SFTTrainer difficulties and optimal epochs and batch sizes discussion. Technical difficulties in multi-GPU support and dependencies like bitsandbytes were flagged for resolution.

- Karpathy's Kernel of Wisdom: Andrej Karpathy's YouTube deep-dive likened LLMs to operating systems, stirring talks on finetuning practices and the importance of using original training data. He voiced criticism of current RLHF techniques, contrasting them with AlphaGo, and suggested a fundamental shift in model training approaches.

- Training Trials and Successes Shared: Insights were exchanged regarding tokenizer errors, finetuning pitfalls, checkpointing methods, and best practices to avoid overfitting. Practical guidance was given on pushing local checkpoints to Hugging Face and the usage of

hub_tokenandsave_strategy.

- Innovative Model Instances Introduced: The community showcased new models, such as Tinyllama and Mischat, illustrating how Unsloth's notebooks and a Huggingface dataset can lead to novel LLMs. A member promoted 'AI Unplugged 5,' a blogpost covering diverse AI topics found at AI Unplugged.

LM Studio Discord

- AI's Pricey Playground: AI hobbyists are voicing concerns over the high cost of entry, with investments closing in on $10k for necessary hardware like cutting-edge GPUs or MacBooks to ensure optimal performance. Discussions reveal a "hard awakening" to the complex, jargon-heavy, and resource-intensive nature of the field.

- GPU Talk – Power, Performance, and Particulars: Engagements in hardware chat center on optimal power supply sizing with recommendations such as 1200-1500W for high-performance builds and efficient power limit settings. There's also interest in the practical performance benefits of legacy hardware like Nvidia K80, alongside advanced cooling hacks using old iMac fans.

- Model Performance and Compatibility Challenges: Conversations pinpoint VRAM choke points and compatibility, noting the inadequacy of 32GB VRAM for upcoming models and discussing model performance, with one mention of Zephyr's superiority in creative writing tasks over Hermes2 Pro. Users are also troubleshooting issues with GPUs proper recognition and utilization with LM Studio's ROCm beta version and grappling with the selection of coder models for constrained VRAM.

- LM Studio Talk – Updates, Issues, and Integrations: The latest LM Studio version 0.2.18 rollout, which introduces new features and bug fixes, is being dissected, with users highlighting discrepancies in VRAM display and version typings and requesting features like GPU and NPU monitoring tools. A member shared their successful integration of LM Studio into Nix, available as a PR. Plus, a repository for a chatbot OllamaPaperBot designed to work with PDFs was shared on GitHub.

- Rethinking Abstraction with AI: There is exploration into using AI to distill abstract concepts, with a reference to the paper on Abstract Concept Inflection. A request was made for guidance on choosing the right Agent program to plug into LM Studio, aiming for a seamless integration.

OpenAI Discord

Voice Cloning Sparks Heated Debate: Discussions emerged around OpenAI's Voice Engine, with some excited by its potential to generate natural-sounding speech from a 15-second audio sample, while others raised ethical concerns about the tech's misuse.

Confused Consumers and Missing Models: Confusion reigns among users regarding different versions of GPT-4 implemented in various applications, with contradictory reports about model stability and cutoff dates. Meanwhile, anticipation for GPT-5 is rife, yet no concrete information is available.

Encounters with Errant Equations: Users across multiple channels grappled with transferring LaTeX equations into Microsoft Word, proposing MathML as a potential solution. The intricacies of proper prompt structuring for specific AI tasks, like translations maintaining HTML tags, also took center stage.

Meta-Prompting Under the Microscope: AI enthusiasts debated the merits of metaprompting over direct instructions, with experiences suggesting inconsistent results. Precise prompts were underscored as pivotal for optimized AI performance.

Roleplay Resistance in GPT: A peculiar behavior was noted with the gpt-4-0125-preview model regarding roleplay prompts, with the AI refusing to role-play when an example format was given, yet complying when the example was omitted. Users shared workarounds and tactics to guide the AI's responses.

Eleuther Discord

New Fine-Tuning Frontiers: LISA, a new fine-tuning technique, has outshined LoRA and full-parameter training in instruction following tasks, capable of tuning 7B parameter models on a 24GB GPU. LISA's details and applications can be explored through the published paper and its code.

Chip Chat heats up: AI21 Labs revealed Jamba, a model fusing the Mamba architecture and Transformers, with a claim of 12B active parameters from a 52B total. Meanwhile, SambaNova introduced Samba-1, a Composition of Experts (CoE) model alleging reduced compute needs and higher performance, though transparency concerns persist. Details about Jamba can be found on their official release page, and scrutiny over Samba-1's performance is encouraged via SambaNova's blog.

Sensitive Data Safety Solutions Discussed: Techniques for safeguarding sensitive data in training, including SILO and differential privacy methods, formed a topic of serious discussion. Researchers interested in these topics can examine the SILO paper and differential privacy papers for more insights.

Discrepancy Detective Work in Model Weights: Discordants untangled differences in model weight parameters between Transformer Lens (tl) and Hugging Face (hf). The debugging process involved leveraging from_pretrained_no_processing to avoid preset weight modifications by Transformer Lens, as elucidated in this GitHub issue.

MMLU Optimization Achieved: Efficiency in MMLU tasks has been boosted, enabling extraction of multiple logprobs within a single forward call. A user reported memory allocation issues when attempting to load the DBRX base model on incorrect GPU configurations, corrected upon realizing the node configuration error. Further, a pull request aimed at improving context-based task handling in the lm-evaluation-harness awaits review and feedback after the CoLM deadline.

Nous Research AI Discord

A Peek at AI21's Transformer Hybrid: AI21 Labs has launched Jamba, a transformative SSM-Transformer model with a 256K context window and performance that challenges existing models, openly accessible under the Apache 2.0 license.

LLMs Gearing Up with MoE: The engineering community is charged up about microqwen, speculated to be a more compact version of Qwen, and the debut of Qwen1.5-MoE-A2.7B, a transformer-based MoE model that promises high performance with fewer active parameters.

LLM Training Woes and Wins: Engineers are troubleshooting issues with the Deepseek-coder-33B's full-parameter fine-tuning, exploring structured approaches for a large book dataset, and peeking at Hermes 2 Pro's multi-turn agentic loops. Meanwhile, they're diving into the significance of 'hyperstition' in expanding AI capacities and clarifying heuristic versus inference engines in LLMs.

RAG Pipelines and Data Structuring Strategies: To boost performance and efficiency in retrieval tasks, AI engineers are exploring structured XML with metadata and discussing RAG models. A mention of a ragas GitHub repository indicates ongoing enhancements to RAG systems.

Worldsim, LaTeX, and AI's Cognitive Boundaries: Tips and resources, like the gist for LaTeX papers, are being exchanged on the Worldsim project. Engineers are considering the potential of AI to delve into alternate history scenarios, while carefully differentiating between large language model use-cases.

With these elements converged, engineers are evidently navigating the challenges and embracing the evolving landscape of AI with a focus on efficiency, structure, and the constant sharing of knowledge and resources.

Modular (Mojo 🔥) Discord

Mojo Gets Juiced with Open Source and Performance Tweaks: Modular has cracked open the Mojo standard library to the open-source community under the Apache 2 license, showcasing this in the MAX 24.2 release. Enhancements include implementations for generalized complex types, workshop sessions on NVIDIA GPU support, and a focus on stabilizing support for MLIR with the syntax set to evolve.

Hype Train Gathers Steam for Modular's Upcoming Reveal: Modular is stoking excitement through a series of cryptic tweets, signaling a new announcement with emojis and a ticking clock. Community members are keeping a keen eye on the official Twitter handle for details on the enigmatic event.

MAX Engine's Leaps and Bounds: With the MAX Engine 24.2 update, Modular introduces support for TorchScript models with dynamic input shapes and other upgrades, as detailed in their changelog. A vivid discussion unfolded around performance benchmarks using the BERT model and GLUE dataset, showcasing the advancements over static shapes.

Ecosystem Flourishing with Community Contributions and Learning: Community projects are syncing up with the latest Mojo version 24.2, with an expressed interest in creating deeper contributions through understanding MLIR dialects. Modular acknowledges this enthusiasm and plans to divulge more on internal dialects over time, adapting a progressive disclosure approach towards the complex MLIR syntax.

Teasers and Livestreams Galore: Modular is shedding light on their recent developments with a livestream on YouTube covering the open sourcing of Mojo's stdlib and MAX Engine support, whereas tantalizing teasers in the form of tweets here sustain high anticipation for impending announcements.

HuggingFace Discord

Quantum Leaps in Hugging Face Contributions: New advancements have been made in AI research and applications: HyperGraph Representation Learning provides novel insights into data structures, Perturbed-Attention Guidance (PAG) boosts diffusion model performance, and the Vision Transformer model is adapted for medical imaging applications. The HyperGraph paper is discussed on Hugging Face, while PAG's project details are on its project page and the Vision Transformer details on Hugging Face space.

Colab and Coding Mettle: Engineers have been sharing tools and tips ranging from the use of Colab Pro to run large language models to the HF professional coder assistant for improving coding. Another shared their experience with AutoTrain, posting a link to their model.

Model Generation Woes and Image Classifier Queries: Some are facing challenges with models generating infinite text, prompting suggestions to use repetition penalty and StopCriterion. Others are seeking advice on fine-tuning a zero-shot image classifier, sharing issues and soliciting expertise in channels like #NLP and #computer-vision.

Community Learning Announcements: The reading-group channel's next meeting has a confirmed date, strengthening community collaboration. Interested parties can find the Discord invite link to participate in the group discussion.

Real-Time Diffusion Innovations: Marigold's depth estimation pipeline for diffusion models now includes a LCM function, and an improvement allows real-time image transitions at 30fps for 800x800 resolution. Questions on the labmlai diffusion repository indicate ongoing interest in optimizing these models.

LAION Discord

- Voices for AI Training Debated: Some participants expressed concern that professional voice actors may shy away from contributing to AI projects like 11labs due to negative sentiments towards AI, suggesting that amateur voices might suffice for training purposes where emotional depth isn't crucial.

- Benchmarks Under Scrutiny: There is criticism regarding the reliability of AI benchmarks, with the HuggingFace Chatbot Arena being recommended for its human evaluation element. This conversational note calls into question the effectiveness of prevalent benchmarking methods in the AI field.

- Jamba Ignites Hype: AI21 Labs has introduced Jamba, a cutting-edge model fusing SSM-Transformer architecture, which has shown promise in excelling at benchmarks. The discussion revolved around this innovation and its implications on model performance (announcement link).

- Confusion and Clarification on Diffusion Models and Transformers: A member pointed toward a YouTube video that they believe misinterprets how diffusion transformers work, particularly concerning the use of attention mechanisms (YouTube video). Calls were made for better explanations of transformers within diffusion models, highlighting the need for simpler "science speak" breakdowns.

LlamaIndex Discord

- Intelligent RAG Optimizations Emerge: Cohere is enhancing Retrieval-Augmented Generation (RAG) pipelines by introducing Int8 and Binary Embeddings, offering a significant reduction in memory usage and operational costs.

- PDF Parsing Puzzles Addressed by Tech: While parsing PDFs poses more complexity than Word documents, the community spotlighted LlamaParse and Unstructured as useful tools to tackle challenges, particularly with tables and images.

- GenAI Gets a Data Boost from Fivetran: Fivetran's integration for GenAI apps simplifies data management and streamlines engineering workflows, as detailed in their blog post.

- Beyond Fine-Tuning: Breakthroughs in Model Alignment: RLHF, DPO, and KTO alignment techniques are proving to be advanced methodologies for improved language generation in Mistral and Zephyr 7B models, per insights from a blog post on the topic.

- LLM Repository Ripe for Research: The mission of Shure-dev is to compile a robust repository of LLM-related papers, providing a significant resource for researchers to access a breadth of high-quality information in a rapidly evolving field.

OpenInterpreter Discord

International Shipping Hacks for O1 Light: Engineers explored workarounds for international delivery of the O1 light, including buying through US contacts. It was noted that O1 devices built by users are functional globally.

Local LLMs Cut API Expenses: There's active engagement around using Open Interpreter in offline mode to eliminate API costs. Contributions for running it with local models such as LM Studio were detailed, including running commands like interpreter --model local --api_base http://localhost:1234/v1 --api_key dummykey, and can be referenced in the official documentation.

Calls for Collaboration on Semantic Search: A call to action was issued for improving local semantic search within the OpenInterpreter/aifs GitHub repository. This highlights a community-driven approach to enhancing the project.

Integrating O1 Light with Arduino's Extended Family: Technical discussions looked at merging O1 Light with Arduino hardware for greater utility. While ESP32 is standard, there's eagerness to experiment with alternatives like Elegoo boards.

O1 Dev Environment Installation Windows Woes: Members reported and discussed issues with installing the 01 OS on Windows systems. A GitHub pull request aims to provide solutions and streamline the setup process for Windows-based developers.

CUDA MODE Discord

Compilers Confront CUDA: While the debate rages on the merits of using compiler technology like PyTorch/Triton versus manual CUDA code creation, members also sought guidance on CUDA courses, including recommendations for the CUDA mode on GitHub and Udacity's Intro to Parallel Programming available on YouTube. A community-led CUDA course by Cohere titled Beginners in Research-Driven Studies (BIRDS) was announced, starting April 5th, advertised on Twitter.

Windows Walks with WSL: Several members provided ease-of-use solutions for running CUDA on Windows, emphasizing Windows Subsystem for Linux (WSL), particularly WSL2, supported by a helpful Microsoft guide.

Circling the Ring-Attention Revolution: In the #[ring-attention] channel, a misalignment of fine-tuning experiments with ring-attention goals halted progress, but insights on resolving modeling_llama.py loss issues spearheaded advancements. The successful training of tinyllama models with extended context lengths up to 100k on substantial A40 VRAM was a hot topic, alongside a Reddit discussion on the hefty VRAM needs for Llama 7B models with QLoRA and LoRA.

Triton Tangle Untangled: The #[triton-puzzles] channel was abuzz with a sync issue in triton-viz linked to a specific pull request, and an official fix was provided, though some still faced installation woes. The use of Triton on Windows was also clarified, pointing to alternative environments like Google Colab for running Triton-based computations.

Zhihu Zeal Over Triton: A member successfully pierced the language barrier on the Chinese platform Zhihu to unearth a trove of Triton materials, stimulating a wish for a glossary of technical terms to aid in navigating non-English content.

OpenRouter (Alex Atallah) Discord

Model Mania: OpenRouter Introduces App Rankings: OpenRouter launched an App Rankings feature for models, exemplified by Claude 3 Opus, to showcase utilization and popularity based on public app usage and tokens processed.

Databricks and Gemini Pro Stir Excitement, But Bugs Buzz: Engineers shared enthusiasm for Databricks' DBRX and Gemini Pro 1.5, although issues like error 429 suggested rate-limit challenges, and downtime coupled with error 502 and error 524 signaled areas for reliability improvements in model availability.

Claude's Capabilities and API Discussed: The community clarified that Claude in OpenRouter doesn't support prefill features and explored error fixing for Claude 2.1. A side conversation praised ClaudeAI via OpenRouter for better handling roleplay and sensitive content with fewer false positives, noting standardized access and cost parity with official ClaudeAI API.

APIs and Clients Get a Tune-Up: OpenRouter has simplified their API to /api/v1/completions and shunned Groq for Nitro models due to rate limitations, alongside improvements in OpenAI's API client support.

Easing Crypto Payments for OpenRouter Users: OpenRouter is slashing gas costs of cryptocurrency transactions by harnessing Base chain, an Ethereum L2 solution, aiming for more economical user experiences.

AI21 Labs (Jamba) Discord

- Jamba Joins the AI Fray: AI21 Labs has launched Jamba, an advanced language model integrating Mamba with Transformer elements, marked by a 256K context window and single GPU hosting for 140K contexts. It's available under the Apache 2.0 license on Hugging Face and received coverage on TechCrunch.

- Groundbreaking Throughput Announced: Jamba achieves a 3X throughput improvement in long-context tasks such as Q&A and summarization, setting a new performance benchmark in the generative AI space.

- AI21 Labs Platform Ready for Jamba: The SaaS platform of AI21 Labs will soon feature Jamba, complementing its current availability through Hugging Face, and staff have indicated a forthcoming deep-dive white paper detailing Jamba's training specifics.

- Jamba, the Polyglot: Trained on multiple languages including German, Spanish, and Portuguese, Jamba's multilingual prowess is confirmed, although its efficacy in Korean language tasks seems to be in question with no Hugging Face Space demonstration planned.

- Pricing and Performance Tweaks: AI21 Labs has removed the $29 monthly minimum charge for model use, and discussions regarding Jamba's high efficiency reveal that its mix of transformer and Mixture-of-Experts (MoE) layers enables sub-quadratic scaling. Community debates touched on quantization for memory reduction and clarified that Jamba's MoE consists of Mamba blocks, not transformers.

tinygrad (George Hotz) Discord

- Debugging Delight: An engineer shared their meme-inducing madness while dealing with a Conda metal compiler bug, indicating high levels of frustration with debugging the issue.

- Tinygrad's Memory Management: The topic of implementing virtual memory in the tinygrad software stack was brought up with interest in adapting it for cache/MMU projects.

- Comparing CPU Giants: Users debated the responsiveness of AMD's versus Intel's customer service, highlighting Intel's better GPU price-performance and pointing out software issues with AMD.

- Optimization Opportunities for Intel Arc: Suggestions arose regarding potential performance enhancements for the Intel Arc by optimizing transformers/dot product attention within the IPEX library.

- FPGA-Driven Tinygrad: An inquiry about extending tinygrad to leverage FPGA was made, engaging users in potential hardware acceleration benefits.

Latent Space Discord

- OpenAI's Prepayment Explainer: OpenAI's adoption of a prepayment model is to combat fraud and offer clearer pathways to increased rate limits, with LoganK's tweet providing insider perspective. Despite its advantages, comparisons are drawn to issues faced by consumers with Starbucks' gift card model, where unspent balances contribute significantly to revenue.

- AI21's Jamba Roars: AI21's new "Jamba" model merges Structured State Space and Transformer architecture, boasting an MoE structure and currently evaluated on Hugging Face. However, the community noted the lack of straightforward mechanisms for testing the newly announced model.

- xAI's Grok-1.5 Making Strides in AI Reasoning: The newly launched Grok-1.5 model by xAI is recognized for outperforming its predecessor, especially in coding and mathematical tasks, with the community anticipating its release on the xAI platform. Benchmarks and performance details were shared via the official blog post.

- Ternary LLMs Stir BitNet Debate: The community engaged in a technical debate over the correct terminology for LLMs using three-valued logic, discussing the impact of BitNet and emphasizing that these are natively trained models rather than quantized versions.

- Stability AI's Shakeup: Emad Mostaque's exit from Stability AI sparked conversations about leadership changes in the generative AI space, fueled by insights from his interview on Peter Diamandis's YouTube channel and a detailed backstory found on archive.is. These discussions reflect ongoing shifts and potential volatility in the industry.

OpenAccess AI Collective (axolotl) Discord

Jamba Sets the Bar High: AI21 Labs has introduced a new model, Jamba, featuring a 256k token context window, optimized for performance with 12 billion parameters active. Their accelerated progress is underlined by the quick training time, with knowledge cutoff on March 5, 2024, and details can be found in their blog post.

Pushing the Boundaries of Optimization: Discussions have highlighted the effectiveness of bf16 precision in torchTune leading to substantial memory savings over fp32, with these optimizations being applied to SGD and soon to the Adam optimizer. Skepticism remains over whether Axolotl provides the same level of training control as torchTune, particularly in the context of memory optimization.

The Cost of Cutting-Edge: Conversations around the GB200-based server prices revealed a steep cost of US$2-$3 million each, prompting a consideration of alternative hardware solutions by the community due to the high expenses.

Size Matters for Datasets: The hunt for long-context datasets prompted sharing of resources including one from Hugging Face's collections and the MLDR dataset on Hugging Face, which cater to models requiring extensive sequence training.

Fine-Tuning Finesse and Repetition Debate: The community has been engaging in detailed discussions about model training, with a focus on strategy sharing like ▀ and ▄ usage in prompts and debates over dataset repetition's utility, referencing a paper on data ordering to support repetition. New fine-tuning approaches for larger models like Galore are also being experimented with, despite some memory challenges.

LangChain AI Discord

OpenGPTs: DIY Food Ordering System: A resourceful engineer integrated a custom food ordering API with OpenGPTs, capturing the adaptability and potential of LangChain's open-source platform, showcased in a demonstration video. They encouraged peer reviews to refine the innovation.

A Smarter SQL AI Chatbot: Members explored methods to enable an SQL AI Chatbot to remember previous interactions, enhancing the bot’s context-retaining abilities for more effective and coherent dialogues.

Gearing Up for Product Recommendations: Engineers discussed the development of a bot that would suggest products using natural language queries, considering the use of vector databases for semantic search or employing an SQL agent to parse user intents like "planning to own a pet."

Upgrade Your Code Reviews With AI: A new AI pipeline builder designed to automate code review tasks including validation and security checks was introduced, coupled with a demo and a product link, poised to streamline the code review process.

GalaxyAI Throws Down the Gauntlet: GalaxyAI is providing free access to elite AI models such as GPT-4 and Gemini-PRO, presented as an easy-to-adopt option for projects via their OpenAI-compatible API service.

Nurturing Engineer Dialogues: The creation of the <#1222928565117517985> channel fosters concentrated discussion on OpenGPTs and its growth, as evidenced by its GitHub repository.

Interconnects (Nathan Lambert) Discord

- Jamba Jumps Ahead: AI21 Studios unveils Jamba, a new model merging Mamba's Structured State Space with Transformers, delivering a whopping 256K context window. The model is not only touted for its performance but is also accessible, with open weights under Apache 2.0 license.

- Jamba's Specs Spark Interest: Jamba's hybrid design has prompted discussions over its 12B parameters focused on inference and its total 52B parameter size, leveraging a MoE framework with 16 experts and only 2 active per token, as found on Hugging Face.

- Dissecting Jamba's DNA: Conversations surfaced around Jamba's true architectural definition, debating whether it should be dubbed a "striped hyena" due to its hybrid nature and specific incorporation of attention mechanisms.

- Qwen Redefines Efficiency: The hybrid model

Qwen1.5-MoE-A2.7Bis recognized for matching the prowess of 7B models, with a much leaner 2.7B parameters, a feat of efficiency highlighted with resources including its GitHub repo and Hugging Face space.

- Microsoft Gains Genius, Databricks on Megablocks: Liliang Ren hops to Microsoft GenAI as a Senior Researcher to construct scalable neural architectures evident from his announcement, while Megablocks gets a new berth at Databricks, enhancing its long-term developmental prospects mentioned on Twitter.

DiscoResearch Discord

AI21 Labs Cooks Up Jamba: AI21 Labs has launched Jamba, a model blending Structured State Space models with Transformer architecture, promising high performance. Check out Jamba through its Hugging Face deployment, and read about its groundbreaking approach on the AI21 website.

Translation Titans Tussle: Members are gearing up for a translation battle among DiscoLM, Occiglot, Mixtral, GPT-4, DeepL, and Azure Translate, using the first 100 lines from a dataset like Capybara to compare performance.

Course to Conquer LLMs: A GitHub repository offering a course for Large Language Models with roadmaps and Colab notebooks was shared, aiming to educate on LLMs.

Token Insertion Tangle Untangled: A debugging success was shared regarding unexpected token insertions believed to be caused by either quantization or the engine; providing a added_tokens.json resolved the anomaly.

Training Data Transparency Tremors: The community has asked for more information on the training data used for a certain model, with specific interest in the definition and range of "English data" as stated in the model card or affiliated blog post.

Skunkworks AI Discord

- Call for Python Prodigies: A member inquired about the upcoming onboarding schedule for Python developers keen on joining the project, indicating that sessions tailored for experienced Python talent were previously in the pipeline.

- YouTube Educational Content Shared: Pradeep1148 shared a YouTube video link, but no context was provided regarding the content or relevance to the ongoing discussions.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (936 messages🔥🔥🔥):

- Turbo Boost for SD3: The idea of finetuning SD3 lingers, with a hypothesis that the SD3 Turbo might serve as a powerful, detailed model akin to SDXL Lightning. Members speculate on the performance benefits of running the upcoming SD3 on hardware with 12GB VRAM.

- AI Art and Politics: The discussion covers the balance between model power and public access, with concerns over potential downsizing of SD3 features for public use. There's hope for providing fine-tuning tools and control nets at launch, which could keep the user base productive.

- LoRA's Future with SD3: Conversations revolve around training LoRA (Local Rank Aware) models on SD3, with the likelihood that 24GB of VRAM should be sufficient. There are uncertainties about how quickly and effectively these tools will be adapted to SD3 post-launch.

- Training on Hardware: Users discuss the feasibility of training smaller sized LoRA models on GPUs that have 16GB VRAM, with some managing to achieve this on resolutions as high as 896 with success. Techniques and optimisations for training, such as Aggressive train rates, network dropouts, and ranking are shared.

- Leveraging New Models for Growth: Members showcase and recommend using Arc2Face for face manipulation, admiring its demo and capabilities. Discussions are sprinkled with irony as users mock the potential of technology being perceived as alien intervention and trivialize the controversy over dataset censorship.

- SuperPrompt - Better SDXL prompts in 77M Parameters | Brian Fitzgerald: Left SDXL output with SuperPrompt applied to the same input prompt.

- Arc2Face: A Foundation Model of Human Faces: no description found

- Talking to Myself or How I Trained GPT2-1.5b for Rubber Ducking using My Facebook Chat Data: Previously in this series - finetuning 117M, finetuning 345M

- Arc2Face - a Hugging Face Space by FoivosPar: no description found

- So Sayweall Battlestart Galactica GIF - So Sayweall Battlestart Galactica William Adama - Discover & Share GIFs: Click to view the GIF

- Character bones that look like Openpose for blender _ Ver_96 Depth+Canny+Landmark+MediaPipeFace+finger: -Blender version 3.5 or higher is required.- Download — blender.orgGuide : https://youtu.be/f1Oc5JaeZiwCharacter bones that look like Openpose for blender Ver96 Depth+Canny+Landmark+MediaPipeFace+fing...

- Home v2: Transform your projects with our AI image generator. Generate high-quality, AI generated images with unparalleled speed and style to elevate your creative vision

- Stable Diffusion explained (in less than 10 minutes): Curious about how Generative AI models like Stable Diffusion work? Join me for a short whiteboard animation where we will explore the basics. In just under 1...

- Beast Wars: Transformers - Theme Song | Transformers Official: Subscribe to the Transformers Channel: https://bit.ly/37mNTUzAutobots, roll out! Welcome to Transformers Official: The only place to see all your favorite Au...

- GitHub - foivospar/Arc2Face: Arc2Face: A Foundation Model of Human Faces: Arc2Face: A Foundation Model of Human Faces. Contribute to foivospar/Arc2Face development by creating an account on GitHub.

- GitHub - lllyasviel/Fooocus: Focus on prompting and generating: Focus on prompting and generating. Contribute to lllyasviel/Fooocus development by creating an account on GitHub.

- GitHub - Nick088Official/SuperPrompt-v1: SuperPrompt-v1 AI Model (Makes your prompts better) both Locally & Online: SuperPrompt-v1 AI Model (Makes your prompts better) both Locally & Online - Nick088Official/SuperPrompt-v1

- FNAF - Multi-Character LoRA - v1.11 | Stable Diffusion LoRA | Civitai: Multi-character FNAF LoRA. Earlier versions were for Yiffy-e18, current version is for Pony Diffusion V3. Latest version contains Classic Freddy, C...

- GitHub - Vargol/StableDiffusionColabs: Diffusers Stable Diffusion script that run on the Google Colabs' free tier: Diffusers Stable Diffusion script that run on the Google Colabs' free tier - Vargol/StableDiffusionColabs

- GitHub - Vargol/8GB_M1_Diffusers_Scripts: Scripts demonstrating how to run Stable Diffusion on a 8Gb M1 Mac: Scripts demonstrating how to run Stable Diffusion on a 8Gb M1 Mac - Vargol/8GB_M1_Diffusers_Scripts

Perplexity AI ▷ #announcements (2 messages):

- DBRX Debuts on Perplexity Labs: The state-of-the-art language model DBRX from Databricks is now available on Perplexity Labs, outperforming GPT-3.5 and rivaling Gemini 1.0 Pro especially in math and coding tasks. Users are invited to try DBRX for free at Perplexity Labs.

- Copy AI and Perplexity Forge a Powerful Partnership: Copy AI integrates Perplexity's APIs to create an AI-powered go-to-market (GTM) platform, providing real-time market insights for improved decision-making. As an added perk, Copy AI users get 6 months of Perplexity Pro for free - details on the collaboration can be found in their blog post.

Link mentioned: Copy.ai + Perplexity: Purpose-Built Partners for GTM Teams | Copy.ai: Learn more about how Perplexity and Copy.ai's recent partnership will fuel your GTM efforts!

Perplexity AI ▷ #general (728 messages🔥🔥🔥):

- Perplexity Search & Focus Modes: Discussions reveal confusion among users whether academic focus mode is functioning properly on Perplexity, noting potential outages with semantic search. Users recommend turning off focus mode or trying focus all as potential workarounds.

- Exploring Pro Search and "Pro" Features: Conversation around Pro Search suggests some users find the follow-up questions redundant, preferring immediate answers. Pro users receive $5 credit for the API and access to multiple AI models, including the ability to attach sources like PDFs persistently in threads for referencing.

- Understanding Perplexity's File Context and Sources: Through testing, users found the context for files on Perplexity differs from direct uploads vs. text input, with speculation that Retrieval Augmented Generation (RAG) is utilized—Claude may rely on other models like GPT-4-32k for file processing and referencing.

- Generative Capabilities & API Utilization: Users discuss the costs and benefits of using the Perplexity API vs. subscriptions to services like GPT Plus and Claude Pro. Models like Opus and other subscription-based models are mentioned for specific tasks, such as generating content ideas, with users seeking guidance on when to use which service or feature.

- Clarifying Claude Opus and GPT-4.5: User inquire about the accuracy and authenticity of Claude Opus responses, noting it has incorrectly identified itself as an OpenAI development in outputs. Participants suggest caution when interpreting such AI self-descriptions and to refrain from querying AI about their origins.

- Sora AI: no description found

- Tweet from Perplexity (@perplexity_ai): DBRX, the state-of-the-art open LLM from @databricks is now available on Perplexity Labs. Outperforming GPT-3.5 and competitive with Gemini 1.0 Pro, DBRX excels at math and coding tasks and sets new b...

- Announcing Grok-1.5: no description found

- Pricing: no description found

- Supported Models: no description found

- Introducing Jamba: AI21's Groundbreaking SSM-Transformer Model: Debuting the first production-grade Mamba-based model delivering best-in-class quality and performance.

- Rickroll Meme GIF - Rickroll Meme Internet - Discover & Share GIFs: Click to view the GIF

- Lost Go Back GIF - Lost Go Back Jack - Discover & Share GIFs: Click to view the GIF

- Older Meme Checks Out GIF - Older Meme Checks Out - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- Anthropic Status: no description found

- Introducing DBRX: A New State-of-the-Art Open LLM | Databricks: no description found

- Answer Engine Tutorial: The Open-Source Perplexity Search Replacement: Answer Engine install tutorial, which aims to be an open-source version of Perplexity, a new way to get answers to your questions, replacing traditional sear...

- Perplexity CEO: Disrupting Google Search with AI: One of the most preeminent AI founders, Aravind Srinivas (CEO, Perplexity), believes we could see 100+ AI startups valued over $10B in our future. In the epi...

- Reddit - Dive into anything: no description found

- GitHub - shure-dev/Awesome-LLM-related-Papers-Comprehensive-Topics: Towards World's Most Comprehensive Curated List of LLM Related Papers & Repositories: Towards World's Most Comprehensive Curated List of LLM Related Papers & Repositories - shure-dev/Awesome-LLM-related-Papers-Comprehensive-Topics

- Let Claude think: no description found

Perplexity AI ▷ #sharing (22 messages🔥):

- Exploration of Perplexity's Capabilities: Various members are exploring and sharing Perplexity AI search results on topics such as Truth Social, Anthropic tag models, Amazon's investment strategies, Exchange administration intricacies, and global warming research implications.

- Delving into Detailed Analyses: One member has been experimenting with

<scratchpad>XML tags in Perplexity AI to demonstrate differences in search results, specifically illustrating the effectiveness in structuring detailed outputs. - Space Enthusiasm on Display: Members shared their curiosity about space technologies and phenomena through Perplexity searches, inquiring into details about SpaceX's Starship chunks and how space impacts the human body.

- Tech and Finance Merge in User Queries: The topics of investment by Amazon and the story of FTX's co-founder, Sam Bankman-Fried, were brought into focus, reflecting users' interests in the intersection of technology and finance.

- Scratchpad Strategy Sharpening: By employing improved scratchpad strategies, a member claims to have significantly enhanced the content quality of final outputs, highlighting the potential advancements in extracting more explorative and useful content from AI-generated text.

Perplexity AI ▷ #pplx-api (8 messages🔥):

- API Results Inconsistency: A member reports that they often receive no results when using the Perplexity AI API, contrasting with the "plenty of results" on the web interface when searching for information, such as "Olivia Schough spouse."

- Seeking API Parameter Guidance: The same member inquires if there are more parameters to guide the API to improve results.

- Comparison of API and Web Interface Strengths: The member expresses an opinion that the web app seems "way better" than the API in terms of performance.

- Sources Lacking in API Responses: Another user highlights a discrepancy: the API's returned response lacks the variety of sources that are present when using Perplexity AI on the web.

- Beta Features and Application Process: In response, a user redirects members to a previous message about URL citations still being in beta and provides a link to apply for it: Apply for beta features.

Link mentioned: pplx-api form: Turn data collection into an experience with Typeform. Create beautiful online forms, surveys, quizzes, and so much more. Try it for FREE.

Unsloth AI (Daniel Han) ▷ #general (351 messages🔥🔥):

- Jamba's Potential and Limitations Discussed: Members discussed the newly announced Jamba model by AI21 Labs, noting its claim of blending Mamba SSM technology with traditional Transformers. Some were impressed with its performance and context window, while others were skeptical about its tokenization and absence of detailed token counts.

- Concerns Over Various Models: Members shared mixed experiences with AI tools like Copilot and claude, noting a drastic drop in Copilot quality, while some found claude low-key helpful. Concerns were raised regarding Model Merging tactics, with a mention of potentially discussing Argilla in the future.

- Evaluation of Generative Models: Discussion centered on how to evaluate open-ended generation quality, with links shared to resources that delve into perplexity, pairwise ranking, and metrics linked to apparent emergent abilities, such as this emergence as a myth paper.

- Model Training Challenges: Users shared experiences and sought advice on training AI models, particularly issues when fine-tuning Mistral and using SFTTrainer. They exchanged configurations and debated the optimal number of training epochs and batch sizes.

- multi-GPU Support and Other Technical Difficulties Addressed: Concerns were raised about the lack of multi-GPU support with Unsloth AI, along with issues related to bitsandbytes dependency and error messages during fine-tuning. Advice and troubleshooting tips were shared among members.

- 1-bit Quantization: A support blog for the release of 1-bit Aana model.

- Are Emergent Abilities of Large Language Models a Mirage?: Recent work claims that large language models display emergent abilities, abilities not present in smaller-scale models that are present in larger-scale models. What makes emergent abilities intriguin...

- unsloth/mistral-7b-v0.2-bnb-4bit · Hugging Face: no description found

- Introducing Jamba: AI21's Groundbreaking SSM-Transformer Model: Debuting the first production-grade Mamba-based model delivering best-in-class quality and performance.

- yanolja/EEVE-Korean-10.8B-v1.0 · Hugging Face: no description found

- ai21labs/Jamba-v0.1 · Hugging Face: no description found

- SambaNova Delivers Accurate Models At Blazing Speed: Samba-CoE v0.2 is climbing on the AlpacaEval leaderboard, outperforming all of the latest open-source models.

- Come Look At This GIF - Come Look At This - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

- Preparing for the era of 32K context: Early learnings and explorations: no description found

- togethercomputer/Long-Data-Collections · Datasets at Hugging Face: no description found

- trainer-v1.py: GitHub Gist: instantly share code, notes, and snippets.

- Benchmarking Samba-1: Benchmarking Samba-1 with the EGAI benchmark - a comprehensive collection of widely adapted benchmarks sourced from the open source community.

- no title found: no description found

- A little guide to building Large Language Models in 2024: A little guide through all you need to know to train a good performance large language model in 2024.This is an introduction talk with link to references for...

- MOE-LLM-GPU-POOR_LEADERBOARD - a Hugging Face Space by PingAndPasquale: no description found

- GitHub - potsawee/selfcheckgpt: SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models: SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models - potsawee/selfcheckgpt

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Trainer: no description found

Unsloth AI (Daniel Han) ▷ #random (34 messages🔥):

- Andrej Karpathy's AI Analogy: A YouTube video featuring Andrej Karpathy discusses LLMs in analogy to operating systems, citing important aspects of finetuning and the misconception of labeling Mistral and Gemma as open source.

- Finetuning's Fine Balance: It's highlighted that for effective finetuning, a blend of the model’s original training data and new data is essential; otherwise, a model's proficiency might regress.

- Missed Opportunity at AI Panel: Dialogues revealed disappointment as a non-technical question about Elon Musk's management style took precedence over deeper AI topics during a public panel featuring AI expert Andrej Karpathy.

- Reinforcement Learning Critique: Andrej Karpathy criticizes current RLHF techniques, comparing them unfavorably to AlphaGo's training, suggesting that we need more fundamental methods for training models, akin to textbook exercises.

- Snapdragon's New Chip in the Spotlight: Discussion of the new Snapdragon X Elite chip's benchmarks and capabilities surfaced, with comparisons to existing technology and hopes for future advancements in processor performance shared alongside a benchmark review video.

- Making AI accessible with Andrej Karpathy and Stephanie Zhan: Andrej Karpathy, founding member of OpenAI and former Sr. Director of AI at Tesla, speaks with Stephanie Zhan at Sequoia Capital's AI Ascent about the import...

- Now we know the SCORE | X Elite: Qualcomm's new Snapdragon X Elite benchmarks are out! Dive into the evolving ARM-based processor landscape, the promising performance of the Snapdragon X Eli...

Unsloth AI (Daniel Han) ▷ #help (201 messages🔥🔥):

- Tokenizer Troubles: User encountered a

list index out of rangeerror using theget_chat_templatefunction from unsloth with the Starling 7B tokenizer. This suggests potential tokenization issues with Starling's implementation. - Finetuning Frustrations: One member discussed difficulties with the finetuning process, describing various parameters tried without desired results. Suggestions from others included fixing the rank and experimenting with different alpha rates and learning rates.

- Checkpoint Challenges: Concerns about unsloth's need for internet access during training were raised, especially when internet instability occurs, potentially interfering with checkpoint saving. Unsloth doesn't inherently need internet connection to train, and issues may relate to Hugging Face model hosting or WandB reporting.

- Pushing Checkpoints to Huggingface: A member asked how to push local checkpoints to Hugging Face, with the response pointing towards using Hugging Face's

hub_tokenfor cloud checkpoints andsave_strategyfor local storage. - Overfitting Overhead: A user sought advice for a model that overfits, with recommendations provided to reduce the learning rate, lower the LoRA rank and alpha, shorten the number of epochs, and include an evaluation loop to stop training if loss increases.

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Support Unsloth AI on Ko-fi! ❤️. ko-fi.com/unsloth: Support Unsloth AI On Ko-fi. Ko-fi lets you support the people and causes you love with small donations

- ollama/docs/modelfile.md at main · ollama/ollama: Get up and running with Llama 2, Mistral, Gemma, and other large language models. - ollama/ollama

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub - toranb/sloth: python sftune, qmerge and dpo scripts with unsloth: python sftune, qmerge and dpo scripts with unsloth - toranb/sloth

- Trainer: no description found

- unsloth/unsloth/models/llama.py at main · unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- transformers/src/transformers/models/llama/modeling_llama.py at main · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

- Mistral Fine Tuning for Dummies (with 16k, 32k, 128k+ Context): Discover the secrets to effortlessly fine-tuning Language Models (LLMs) with your own data in our latest tutorial video. We dive into a cost-effective and su...

- Efficient Fine-Tuning for Llama-v2-7b on a Single GPU: The first problem you’re likely to encounter when fine-tuning an LLM is the “host out of memory” error. It’s more difficult for fine-tuning the 7B parameter ...

Unsloth AI (Daniel Han) ▷ #showcase (6 messages):

- Tinyllama Leverages Unsloth Notebook: The Lora Adapter from Unsloth's notebook got converted to a ggml adapter to be used in the Ollama model, resulting in the Tinyllama model with training emerged from the Unsloth Notebook and a dataset from Huggingface.

- Mischat Model Unveiled: Another model named Mischat, which leverages gguf, is now updated on Ollama. It involved fine-tuning with the Mistral-ChatML Unsloth notebook and can be found at Ollama link.

- Showcasing Template Reflection in Models: A user showcased how templates defined in notebooks can reflect in Ollama modelfiles, using the recent models as examples of this process.

- Blogpost for AI Enthusiasts: A member introduced a new blog post titled 'AI Unplugged 5' covering a variety of AI topics ranging from Apple MM1 to DBRX to Yi 9B which offers insight into recent AI developments and available at AI Unplugged.

- Positive Reception for AI Summary Content: The blog summarizing weekly AI activities received positive feedback from another user who appreciated the effort to provide insights on AI advancements.

- pacozaa/mischat: Model from fine-tuning session of Unsloth notebook ChatML with Mistral Link to Note book: https://colab.research.google.com/drive/1Aau3lgPzeZKQ-98h69CCu1UJcvIBLmy2?usp=sharing

- pacozaa/tinyllama-alpaca-lora: Tinyllama Train with Unsloth Notebook, Dataset https://huggingface.co/datasets/yahma/alpaca-cleaned

- AI Unplugged 5: DataBricks DBRX, Apple MM1, Yi 9B, DenseFormer, Open SORA, LlamaFactory paper, Model Merges.: Previous Edition Table of Contents Databricks DBRX Apple MM1 DenseFormer Open SORA 1.0 LlaMaFactory finetuning ananlysis Yi 9B Evolutionary Model Merges Thanks for reading Datta’s Substack! Subscribe ...

- Notion – The all-in-one workspace for your notes, tasks, wikis, and databases.: A new tool that blends your everyday work apps into one. It's the all-in-one workspace for you and your team

Unsloth AI (Daniel Han) ▷ #suggestions (1 messages):

starsupernova: ooo very cool!

LM Studio ▷ #💬-general (237 messages🔥🔥):

- AI Hobbies Aren't Cheap: Members discuss the costs associated with AI as a hobby, with expenditures reaching around $10k due to the need for high-end GPUs or MacBooks for good performance.

- Challenges for Newcomers in AI: Newcomers to the world of AI face a steep learning curve, with discussions highlighting the "hard awakening" to the computationally demanding and jargon-laden field.

- Local LLM Guidance and Troubleshooting: Several inquiries and tips were exchanged on running local LLMs, from issues with LM Studio and GPU compatibility to recommendations on model selection based on VRAM and system specifications.

- LM Studio Enhancements and Feature Requests: Users express excitement for new features like the Branching system, and highlight the usefulness of having folders for branched chats; some are requesting a 'Delete All' feature for smoother user experience.

- Community Support and Shared Resources: Helpful conversations and shared resources like YouTube guides and GitHub links provide support among users, who collaboratively troubleshoot technical challenges and explore the capabilities of different models.

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Qwen/Qwen1.5-MoE-A2.7B-Chat · Hugging Face: no description found

- AnythingLLM | The ultimate AI business intelligence tool: AnythingLLM is the ultimate enterprise-ready business intelligence tool made for your organization. With unlimited control for your LLM, multi-user support, internal and external facing tooling, and 1...

- LM Studio Realtime STT/TTS Integration Please: A message to the LM Studio dev team. Please give us Realtime Speech to Text and Text to Speech capabilities. Thank you!

- Build a Next.JS Answer Engine with Vercel AI SDK, Groq, Mistral, Langchain, OpenAI, Brave & Serper: Building a Perplexity Style LLM Answer Engine: Frontend to Backend TutorialThis tutorial guides viewers through the process of building a Perplexity style La...

- LM Studio: Easiest Way To Run ANY Opensource LLMs Locally!: Are you ready to dive into the incredible world of local Large Language Models (LLMs)? In this video, we're taking you on a journey to explore the amazing ca...

- LM Studio: Easiest Way To Run ANY Opensource LLMs Locally!: Are you ready to dive into the incredible world of local Large Language Models (LLMs)? In this video, we're taking you on a journey to explore the amazing ca...

- Add qwen2moe by simonJJJ · Pull Request #6074 · ggerganov/llama.cpp: This PR adds the support of codes for the coming Qwen2 MoE models hf. I changed several macro values to support the 60 experts setting. @ggerganov

LM Studio ▷ #🤖-models-discussion-chat (39 messages🔥):

- VRAM Requirements for Future Models: An issue has been raised about current GPU configurations lacking the VRAM for upcoming models, specifically pointing out that 32GB VRAM might fall short for Q2 or Q3 versions of models. A GitHub issue mentions that llama.cpp doesn't yet support newer, VRAM-intensive model formats like DBRX.

- LM Studio Pairing with Open Interpreter: A member asked if anyone has gotten Open Interpreter to work with LM Studio, and another member linked both the official documentation and a YouTube tutorial to assist with setup.

- Leveraging Existing Server Setups for Large LLMs: Some members discussed using older servers with Nvidia P40 GPUs for running large language models, noting that while they are not ideal for tasks like stable diffusion, they can handle large models with decent speed.

- Assessing Creative Writing Capabilities: A few members compared different language models, noting that Zephyr may outperform Hermes2 Pro in creative writing tasks, highlighting the nuances in how various language models handle specific functions.

- Choosing the Right Coder LLM for Limited VRAM: A query about the best coder language model to use on a 24GB RTX 3090 led to a mention that DeepSeek Coder might be the top choice, and that instruct models might function better when used as agents.

- DBRX: My First Performance TEST - Causal Reasoning: On day one of the new release of DBRX by @Databricks I did performance tests on causal reasoning and light logic tasks. Here are some of my results after the...

- no title found: no description found

- LM Studio + Open Interpreter to run an AI that can control your computer!: This is a crappy video (idk how to get better resolution) that shows how easy it is to use ai these days! I run mistral instruct 7b in the client and as a se...

- Add support for DBRX models: dbrx-base and dbrx-instruct · Issue #6344 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

LM Studio ▷ #announcements (2 messages):

- LM Studio 0.2.18 Update Rolls Out: LM Studio has released version 0.2.18, which introduces stability improvements, a new 'Empty Preset' for Base/Completion models, default presets for various LMs, a 'monospace' chat style, along with a number of bug fixes. Download the new version from LM Studio or use the 'Check for Updates' feature.

- Comprehensive Bug Fixes Detailed: The update addresses issues such as duplicated chat images, ambiguous API error messages without models, GPU offload bugs, and incorrect model names in the UI. Mac users get a Metal-related load bug fixed, and Windows users see a correction for app opening issues post-installation.

- Documentation Site Launched: A dedicated documentation site for LM Studio is now live at lmstudio.ai/docs, with plans to expand the content available in the near future.

- Config Presets Accessible on GitHub: Users who can't find the new configs in their setup can access them at openchat.preset.json and lm_studio_blank_preset.preset.json on GitHub, although the latest downloads and updates should already include these presets.

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- configs/openchat.preset.json at main · lmstudio-ai/configs: LM Studio JSON configuration file format and a collection of example config files. - lmstudio-ai/configs

- configs/lm_studio_blank_preset.preset.json at main · lmstudio-ai/configs: LM Studio JSON configuration file format and a collection of example config files. - lmstudio-ai/configs

LM Studio ▷ #🧠-feedback (13 messages🔥):

- Praise for AI Ease-of-Use: A member expressed their appreciation for an AI tool, calling it the most user-friendly AI project they have ever used.

- VRAM Display Inaccuracies Reported: A member noted that the VRAM displayed is incorrect, showing only 68.79GB instead of the expected 70644 GB.

- Seeking Stable Models Amidst Volume: Another member disclosed that they are sifting through almost 2TB of models, encountering issues with many models producing garbled or repetitive text.

- Prompt Formatting Woes: Members discussed the importance of using the correct prompt format for models to function properly and mentioned checking the model's Hugging Face page for clues.

- VRAM Readings Fluctuate Across Versions: It was observed that the displayed VRAM changed across different versions of the software, with one member speculating that version 2.17 possibly showed the most accurate value.

LM Studio ▷ #🎛-hardware-discussion (111 messages🔥🔥):

- Power Supply Sizing Recommendations: Discussions suggest a 1200-1500W power supply for an Intel 14900KS and an RTX 3090 build, whereas 1000W might suffice for AMD setups like a 7950x/3d, considering no GPU power limits. Gold/platinum rated PSUs were endorsed for better efficiency.

- GPU Power Limit Tips: It was shared that locking RTX 4090 GPUs to a 350-375W range only results in a minimal performance loss, emphasizing that such a limit is more practical than full power overclocking's minuscule gains.

- Performance Enthusiasm for Legacy Hardware: Some members discuss leveraging older GPU hardware like the K80, while noting limitations like high heat output. It was suggested that old iMac fans can be modded to help with cooling on such antique tech.

- Exploring the Utility of NVLink: Members exchanged ideas on whether NVLink can improve performance for model inference, with some arguing for a noticeable speed increase in token generation with the link, while others questioned its utility for inference tasks.

- LM Studio Compatibility Queries: As LM Studio updates arrive, users typically inquire about support for various GPUs, highlighting limitations and workarounds, like using OpenCL for GPU offloading or ZLUDA for older AMD cards. Moreover, issues are sometimes encountered and solved, such as the UI problem fixed by running the app in fullscreen.

- Grammar Police Drift GIF - Grammar Police Drift Police Car - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

LM Studio ▷ #🧪-beta-releases-chat (80 messages🔥🔥):

- User Proposes GPU/NPU Monitoring: A suggestion was made to add a monitoring feature for GPU and NPU in the usage sections along with utilization statistics for better system parameter optimization.

- GPU Offloading Troubleshooting: Users discussed issues with ROCm beta on Windows not loading models into GPU memory. The conversation led to identifying a possible issue with partial offloading when not all layers are offloaded to the GPU. Users shared logs and error messages to diagnose the problem.

- LM Studio Windows Version Confusion: Some users reported the LM Studio version appears as 0.2.17 in logs, even when 0.2.18 is in use. There's discussion about an issue with adjusting the GPU offload layers slider in the UI.

- ROCm Beta Stability Questions: One user encountered crashes while using the 0.2.18 ROCm Beta; it works initially but fails upon asking questions. There is a call for users to test with a debug build and send verbose logs for further investigation.

- LM Studio on Nix via GitHub PR: A user successfully integrated LM Studio into Nix, shared the Pull Request link (https://github.com/NixOS/nixpkgs/pull/290399), and indicated plans to merge the update soon.

- no title found: no description found

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- no title found: no description found

- Windows 11 - release information: Learn release information for Windows 11 releases

- lmstudio: init at 0.2.18 by drupol · Pull Request #290399 · NixOS/nixpkgs: New app: https://lmstudio.ai/ Description of changes Things done Built on platform(s) x86_64-linux aarch64-linux x86_64-darwin aarch64-darwin For non-Linux: Is sandboxing enabled in nix....

LM Studio ▷ #langchain (2 messages):

- Check Out OllamaPaperBot's Code: A member shared a GitHub repository for OllamaPaperBot, a chatbot designed to interact with PDF documents using open-source LLM models, inviting others to review their code.

- Inquiring about LMStudio's JSON Output: A member inquired if anyone has tried using the new JSON output format from LMStudio in conjunction with the shared OllamaPaperBot repository.

Link mentioned: OllamaPaperBot/simplechat.py at main · eltechno/OllamaPaperBot: chatbot designed to interact with PDF documents based on OpenSource LLM Models - eltechno/OllamaPaperBot

LM Studio ▷ #amd-rocm-tech-preview (54 messages🔥):

- Understanding GPU Utilization with LM Studio: Users reported issues with LM Studio where in version 0.2.17, GPUs were properly utilized, but after updating to 0.2.18, there was a noticeable drop in GPU usage under 10%. Instructions were provided for exporting app logs using a debug version to investigate the problem.

- Geared Up for Debugging: In response to low GPU usage, a user attempted troubleshooting with a verbose debug build and noted that GPU usage improved when LM Studio was used as a chatbot rather than a server.

- Ejecting Models causes errors: A user discovered a potential bug where after ejecting a model in LM Studio, they were unable to load any new models without restarting the application. Other users were unable to reproduce this failure.

- Driver Issues May Affect Performance: A conversation about the proper application of ROCm instead of AMD OpenCL suggested that driver updates or installing the correct build could resolve some issues. A user confirmed improvement after updating AMD drivers and installing the correct build of LM Studio.

- Select GPUs Unrecognized by New Update: Users observed that while LM Studio version 2.16 recognized secondary GPUs, version 2.18 no longer did. A user clarified that models started working properly after ensuring they were using the ROCm build, not the regular LM Studio build.

- no title found: no description found

- How to run a Large Language Model (LLM) on your AMD Ryzen™ AI PC or Radeon Graphics Card: Did you know that you can run your very own instance of a GPT based LLM-powered AI chatbot on your Ryzen™ AI PC or Radeon™ 7000 series graphics card? AI assistants are quickly becoming essential resou...

LM Studio ▷ #crew-ai (2 messages):