[AINews] DeepSeek's Open Source Stack

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Cracked engineers are all you need.

AI News for 3/7/2025-3/8/2025. We checked 7 subreddits, 433 Twitters and 28 Discords (224 channels, and 4696 messages) for you. Estimated reading time saved (at 200wpm): 406 minutes. You can now tag @smol_ai for AINews discussions!

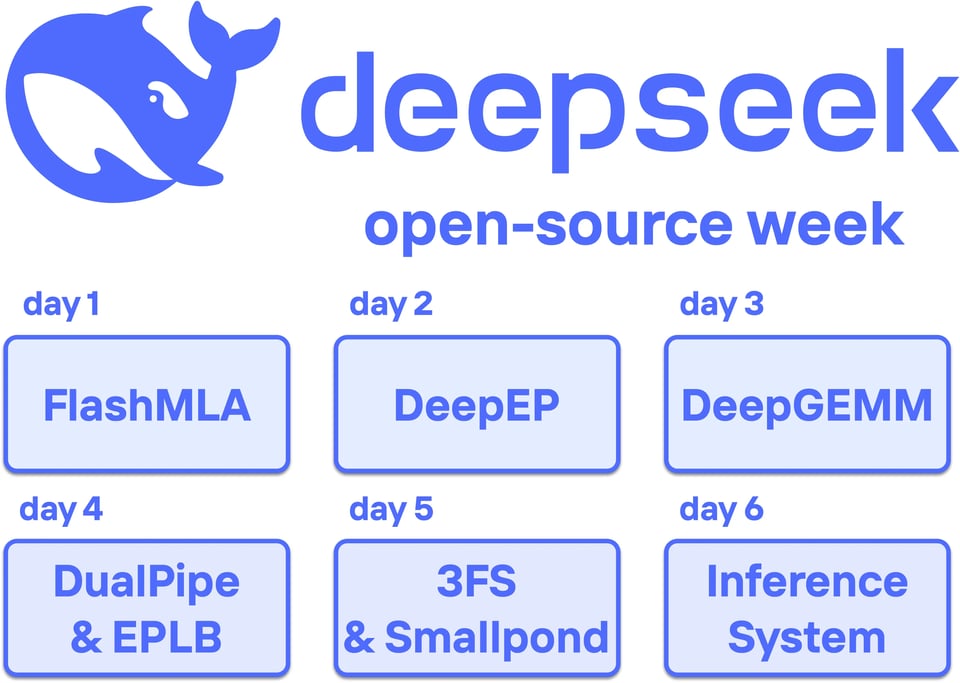

We didn't quite know how to cover DeepSeek's "Open Source Week" from 2 weeks ago, since each release was individually interesting but not quite hitting the bar of generally useful and we try to cover "the top news of the day". But the kind folks at PySpur have done us the favor of collating all the releases and summarizing them:

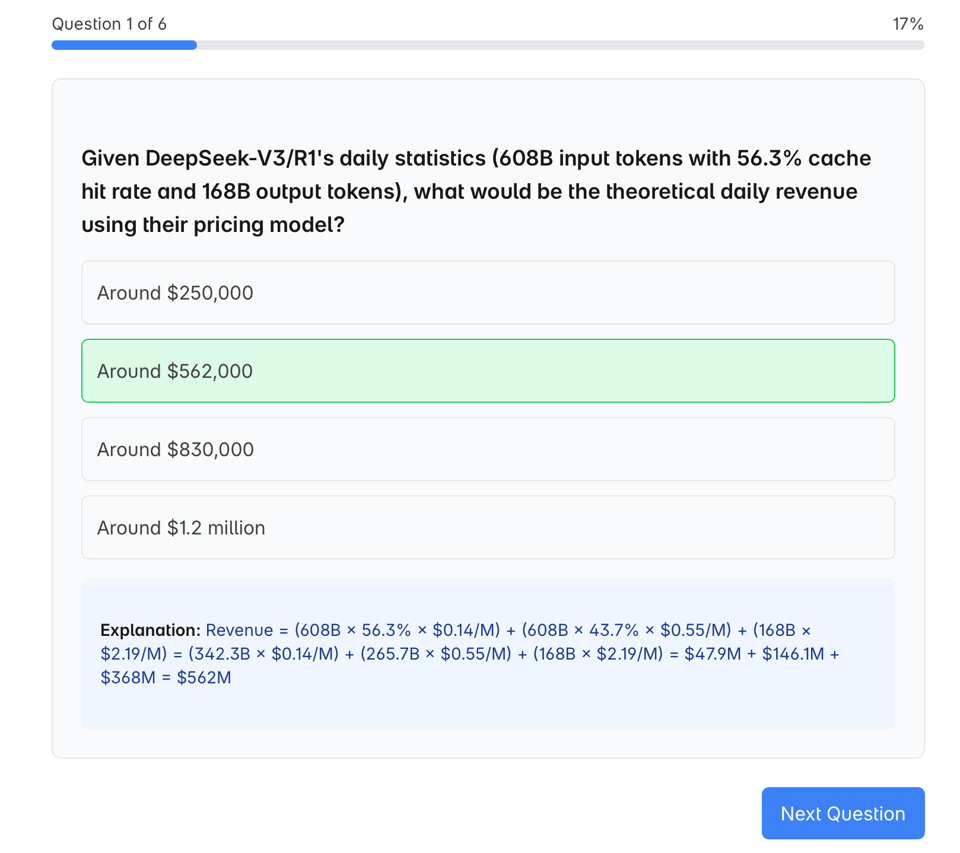

It even comes with little flash quizzes to test your understanding and retention!!

We think collectively this is worth some internalization.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

Models & Releases

- Qwen QwQ-32B Model: @_akhaliq announced the release of START, a Self-taught Reasoner with Tools, fine-tuned from the Qwen-32B model. START achieves high accuracy on PhD-level science QA (GPQA), competition-level math benchmarks, and the LiveCodeBench benchmark. @lmarena_ai welcomed Qwen QwQ-32B to the Arena for chats, noting it's also trending on Hugging Face, according to @reach_vb. @danielhanchen provided a guide for debugging looping issues with QwQ-32B, suggesting sampler adjustments and noting quantization sensitivity. They also uploaded dynamic 4bit quants and GGUFs.

- Character-3 AI Video Model: @togethercompute and @realDanFu highlighted the launch of Character-3, an omnimodal AI model for video generation developed by @hedra_labs and scaled on Together AI. Character-3 can turn images into animated content with realistic movement and gestures, as showcased by @TomLikesRobots who successfully used it for AI lipsync and storytelling.

- Gemini Embeddings and Code Executor: @_philschmid reported on Google DeepMind's new experimental Gemini embedding model, which ranks #1 on the MMTEB leaderboard with an 8k context window and is designed for finance, science, legal, search, and code applications. They also detailed how the Gemini 2.0 Code Executor works, including its auto-fix attempts, file input support, runtime limits, and supported Python libraries, as seen in tweets like @_philschmid and @_philschmid.

- Mercury Coder: @DeepLearningAI introduced Inception Labs' Mercury Coder, a diffusion-based model for code generation that processes tokens simultaneously, achieving faster speeds than autoregressive models.

- GPT-4.5 Release: @aidan_mclau mentioned an interesting essay on GPT-4.5. @DeepLearningAI noted that OpenAI released GPT-4.5, describing it as their largest model but lacking the reasoning capabilities of models like o1 and o3.

- Jamba Mini 1.6: @AI21Labs highlighted Jamba Mini 1.6 outpacing Gemini 2.0 Flash, GPT-4o mini, and Mistral Small 3 in output speed.

- Mistral OCR: @vikhyatk announced a new dataset release of 1.9M scanned pages transcribed using Pixtral, potentially related to OCR benchmarking. @jerryjliu0 shared benchmarks of Mistral OCR against other models, finding it decent and fast but not the best document parser, especially when compared to LLM/LVM-powered parsing techniques like Gemini 2.0, GPT-4o, and Anthropic's Sonnet models. @sophiamyang and @sophiamyang also shared videos by @Sam_Witteveen on MistralAI OCR.

Tools & Applications

- MCP (Model Context Protocol): @mickeyxfriedman observed the sudden surge in discussion around MCP, despite it being around for half a year. @hwchase17 highlighted a "coolest client" integrating with MCP. @saranormous described a smart client for MCP everywhere. @nearcyan asked about the "underground MCP market" and announced an SF MCP meetup @nearcyan. @abacaj noted the mystery and trending nature of MCP. @omarsar0 offered the original MCP guide as the best resource for understanding it, countering "bad takes". @_akhaliq released an MCP Gradio client proof-of-concept.

- Perplexity AI Search & Contest: @yusuf_i_mehdi announced Think Deeper in Copilot is now powered by o3-mini-high. @AravSrinivas encouraged users to ask Perplexity anything to fight ignorance and announced a contest giving away ₹1 crore and a trip to SF for questions asked on Perplexity during the ICC Champions Trophy final @perplexity_ai. @AravSrinivas sought feedback on Sonnet 3.7-powered reasoning searches in Perplexity.

- Hedra Studio & Character-3 Integration: @togethercompute and @realDanFu emphasized the integration of Hedra's Character-3 into Hedra Studio for AI-powered content creation. @TomLikesRobots highlighted its ease of use for lipsync and storytelling.

- AI-Gradio: @_akhaliq announced no-code Gradio AI apps. Followed by @_akhaliq providing code to get started with ai-gradio for Hugging Face models and @_akhaliq showcasing a Vibe coding app with Qwen QwQ-32B in few lines of code using ai-gradio.

- Orion Browser for Linux: @vladquant announced the start of Orion Browser for Linux development, expanding Kagi's ecosystem.

- ChatGPT for MacOS Code Editing: @kevinweil highlighted that ChatGPT for MacOS can now edit code directly in IDEs.

- Model Switch with Together AI: @togethercompute announced a partnership with Numbers Station AI to launch Model Switch, powered by models hosted on Together AI, for data teams to choose efficient open-source models for AI-driven analytics.

- Cursor AI on Hugging Face: @ClementDelangue noted Cursor AI is now on Hugging Face, inquiring about fun integrations.

Research & Datasets

- START (Self-taught Reasoner with Tools): @_akhaliq announced Alibaba's START, a model fine-tuned from QwQ-32B, achieving strong performance in reasoning and tool use benchmarks.

- PokéChamp: @_akhaliq presented PokéChamp, an expert-level Minimax Language Agent for Pokémon battles, outperforming existing LLM and rule-based bots, achieving top 10% player ranking using an open-source Llama 3.1 8B model.

- Token-Efficient Long Video Understanding: @arankomatsuzaki shared Nvidia's research on Token-Efficient Long Video Understanding for Multimodal LLMs, achieving SotA results while reducing computation and latency.

- DCLM-Edu Dataset: @LoubnaBenAllal1 announced DCLM-Edu, a new dataset filtered from DCLM using FineWeb-Edu's classifier, optimized for smaller models.

- uCO3D Dataset: @AIatMeta and @AIatMeta introduced uCO3D, Meta's new large-scale, publicly available object-centric dataset for 3D deep learning and generative AI.

- LADDER Framework: @dair_ai detailed LADDER, a framework enabling LLMs to recursively generate and solve simpler problem variants, boosting math integration accuracy through autonomous difficulty-driven learning and Test-Time Reinforcement Learning (TTRL).

- Dedicated Feedback and Edit Models: @_akhaliq highlighted research on using Dedicated Feedback and Edit Models to empower inference-time scaling for open-ended general-domain tasks.

- Masked Diffusion Model for Reversal Curse: @cloneofsimo announced a Masked Diffusion model that beats the reversal curse, noting it will change things, as per @cloneofsimo.

- Brain-Like LLMs Study: @TheTuringPost summarized a study exploring how LLMs align with brain responses, finding alignment weakens as models gain reasoning and knowledge, and bigger models are not necessarily more brain-like.

Industry & Business

- Together AI Events & Partnerships: @togethercompute promoted their AI Pioneers Happy Hour at Nvidia GTC, co-hosted with @SemiAnalysis_ and @HypertecGroup. @togethercompute announced co-founder @percyliang speaking at #HumanX with Sama CEO Wendy Gonzalez on foundational models meeting individual needs.

- Anthropic's White House RFI Response: @AnthropicAI shared their recommendations submitted to the White House in response to the Request for Information on an AI Action Plan.

- Kagi User-Centric Products: @vladquant emphasized Kagi's ecosystem of user-centric products with the Orion Browser for Linux announcement.

- AI Application Development in 2025: @DeepLearningAI promoted a panel discussion at AI Dev 25 on the future of AI application development, featuring @RomanChernin of @nebiusai.

- Japan as an AI Hub: @SakanaAILabs shared an interview with Sakana AI co-founder Ren Ito discussing Tokyo's potential as a global AI development hub and Japan's ambition to become a technology superpower again.

- AI in Media: @c_valenzuelab argued AI is not just the future of media, but the foundation beneath all future media, transforming creation, distribution, and experience.

- Open Source Business Model: @ClementDelangue questioned the notion that open-sourcing is bad for business, highlighting positive examples.

- United Airlines AI Integration: @AravSrinivas expressed excitement for flying United by default once their AI rollout is complete.

Opinions & Discussions

- MCP Hype & Understanding: @mickeyxfriedman questioned the sudden MCP hype. @abacaj and @Teknium1 also commented on the MCP mania. @omarsar0 criticized "bad takes" on MCP. @clefourrier asked for an ELI5 explanation of MCP hype.

- Agentic AI & Reflection: @TheTuringPost discussed the importance of reflection in agentic AI, enabling analysis, self-correction, and improvement, highlighting frameworks like Reflexion and ReAct. @pirroh emphasized to think beyond ReAct in agent design.

- Voice-Based AI Challenges & Innovations: @AndrewYNg discussed challenges in Voice Activity Detection (VAD) for voice-based systems, especially in noisy environments, and highlighted Kyutai Labs' Moshi model as an innovation using persistent bi-directional audio streams to eliminate the need for explicit VAD.

- Scale is All You Need: @vikhyatk jokingly stated the easy fix to AI problems is "more data, more parameters, more flops," suggesting scale is all you need.

- Limitations of Supervised Learning: @finbarrtimbers argued for the need for Reinforcement Learning (RL), stating supervised learning is fundamentally the wrong paradigm, though it's a necessary step.

- Long-Context Challenges: @teortaxesTex mentioned "residual stream saturation" as an under-discussed problem in long-context discussions.

- Importance of Intermediate Tasks: @cloneofsimo discussed the capability of "coming up with seemingly unrelated intermediate tasks to solve a problem" as a key aspect of intelligence.

- Interview Questioning Techniques: @vikhyatk shared an Amazon interview technique called "peeling the onion" to assess candidates' ability to deliver results by asking follow-up questions to verify their project experience.

- Focusing Attention in AI Discourse: @jeremyphoward lamented the tendency in AI discourse to jump from topic to topic without sustained focus.

- AGI Timeline & Planning: @abacaj sarcastically commented on OAI planning for AGI by 2027 despite agile teams struggling to plan two sprints ahead.

- "Open" AI and Marketing: @vikhyatk criticized companies for misrepresenting their actions in marketing materials, and @vikhyatk questioned whether the latest release of an "actually open" AI was truly open.

- Evaluation Chart Interpretation: @cognitivecompai cautioned against taking evaluation charts at face value, especially those comparing favorably to R1, suggesting critical thinking about which models and evals are omitted.

- Geopolitics of Superintelligence: @jachiam0 and @jachiam0 discussed the lack of a known-good approach to the geopolitics of superintelligence and the serious risks of getting it wrong.

- AI and Job Displacement: @fabianstelzer suggested that while "bullshit jobs" are mostly immune to AI, we might see the "notarization" of other jobs through regulations requiring human-in-the-loop to protect them from AI disruption.

Humor & Memes

- Confucius Quote on Asking Questions: @AravSrinivas quoted Confucius: “The man who asks a stupid question is a fool for a minute. The man who does not ask a stupid question is a fool for life.” to promote Perplexity.

- AI PhD in 2025 Meme: @jxmnop shared a humorous "day in the life of an AI PhD in 2025" meme involving minimal research work and lots of tennis.

- MCP as a "Context Palace": @nearcyan humorously described MCP as "Rather than a context window, MCP is instead a context palace 👑".

- "tinygrad users don't know the value of nothing": @typedfemale posted a meme "tinygrad users don't know the value of nothing and the cost of nothing".

- "why the fuck does every optimization researcher on X have a cat/dog in their profile picture?": @eliebakouch jokingly asked "why the fuck does every optimization researcher on X have a cat/dog in their profile picture?".

- "i thought i needed to block politics but now i need to block MCP as well lel": @Teknium1 joked about needing to block MCP-related content like politics.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. FT: Llama 4 w/ Voice Expected Soon, Enhancing Voice AI

- FT: Llama 4 w/ voice expected in coming weeks (Score: 108, Comments: 31): Meta and Sesame are anticipated to release Llama 4 with integrated voice capabilities in the coming weeks, providing options for self-hosted voice chat. The author expresses interest in an iOS app with CarPlay integration for interacting with a private AI server.

- Commenters discuss the potential features and capabilities of Llama 4, expressing desires for smaller models (0.5B to 3B) like Llama-3.2-1B for rapid experimentation, and larger models (10~20B) with performance comparable to Qwen 32B. There is also a conversation around the integration of reasoning abilities, with some preferring a separate model for reasoning tasks to avoid delays in voice interactions.

- The anticipated release timeline for Llama 4 is debated, with some predicting it will coincide with LlamaCon to boost the event's profile, and others speculating a release between mid-March and early April. The discussion includes a link to a preview image related to the release.

- There are concerns about paywalled content, with users sharing a workaround link to archive.ph for accessing the article summary. Additionally, there's a mention of bias in models, with a preference for models that do not engage in moral lecturing.

Theme 2. QwQ-32B Performance Settings and Improvements

- QwQ-32B infinite generations fixes + best practices, bug fixes (Score: 214, Comments: 26): To address infinite repetitions with QwQ-32B, the author suggests using specific settings such as

--repeat-penalty 1.1and--dry-multiplier 0.5, and advises adding--samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc"to prevent infinite generations. The guide also recommends Qwen team's advice for long context (128K) to use YaRN and provides links to various quantized models, including dynamic 4bit quants, available on Hugging Face.- Parameter Overrides in Llama-Server: When using llama-server, command line parameters like

--temp 0.6can be overridden by HTTP request parameters such as{"temperature":1.0}, affecting the final output. More details are available in a discussion on GitHub. - GRPO Compatibility with QwQ-32B: Users inquired about running GRPO with QwQ-32B for low GPU resources, and it was confirmed that it works by simply changing the model name in the GRPO notebook.

- Chat Template Usage: It's important to follow the exact chat template format for QwQ-32B, including newlines and tags like

<think>. However, omitting the<think>tag can still work as the model adds it automatically, and system prompts can be used by prepending them with<|im_start|>system\n{system_prompt}<|im_end|>\n. More details can be found in the tutorial.

- Parameter Overrides in Llama-Server: When using llama-server, command line parameters like

- Ensure you use the appropriate temperature of 0.6 with QwQ-32B (Score: 107, Comments: 9): The author initially faced issues with generating a Pygame script for simulating a ball bouncing inside a rotating hexagon due to incorrect settings, which took 15 minutes without success. They later discovered that the Ollama settings had been updated, recommending a temperature of 0.6 for QwQ-32B, with further details available in the generation configuration.

- Deepseek and other reasoning models often perform optimally with a temperature parameter of 0.6, which aligns with the author's findings for QwQ-32B. This temperature setting seems to be a common recommendation across different models.

Theme 3. QwQ vs. qwen 2.5 Coder Instruct: Battle of 32B

- AIDER - As I suspected QwQ 32b is much smarter in coding than qwen 2.5 coder instruct 32b (Score: 241, Comments: 79): QwQ-32B outperforms Qwen 2.5-Coder 32B-Instruct in coding tasks, achieving a higher percentage of correct completions in the Aider polyglot benchmark. Despite its superior performance, QwQ-32B incurs a higher total cost compared to Qwen 2.5-Coder, as illustrated in a bar graph by Paul Gauthier updated on March 06, 2025.

- Graph Design Issues: Several commenters, including SirTwitchALot and Pedalnomica, note confusion due to the graph's design, particularly the use of two y-axes and an unclear legend with missing colors. This misrepresentation complicates understanding the performance and cost comparison between QwQ-32B and Qwen 2.5-Coder.

- Performance and Configuration Concerns: There is discussion around QwQ-32B's performance, with someonesmall highlighting its 20.9% completion rate in the Aider polyglot benchmark compared to Qwen 2.5-Coder's 16.4%, but with a much lower correct diff format rate (67.6% vs. 99.6%). BumbleSlob and others express dissatisfaction with the model's performance, suggesting parameter adjustments as potential solutions.

- Model Size and Usability: krileon and others discuss the practicality of running large models like QwQ-32B on consumer hardware, with suggestions like acquiring a used 3090 GPU to improve performance. The conversation reflects on the challenges of using large models without high-end hardware, and the potential future accessibility of more powerful GPUs.

Theme 4. Meta's Latent Tokens: Pushing AI Reasoning Forward

- Meta drops AI bombshell: Latent tokens help to improve LLM reasoning (Score: 333, Comments: 40): Meta AI researchers discovered that using latent tokens generated by compressing text with a vqvae enhances the reasoning capabilities of Large Language Models (LLMs). For more details, refer to the paper on arXiv.

- Latent Tokens and Reasoning Efficiency: The use of latent tokens created via VQ-VAE compresses reasoning steps in LLMs, leading to more efficient reasoning by reducing the need for verbose text explanations. This method allows LLMs to handle complex tasks with fewer computational resources and shows improved performance in logic and math problems compared to models trained only on full text explanations.

- Mixed Reception on Impact: While some users see potential in this approach, others like dp3471 express that the gains are relatively small and expect more significant improvements when combined with other techniques like progressive latent block transform. Cheap_Ship6400 highlights that Meta's latent reasoning differs from Deepseek's MLA, focusing on token embedding space rather than attention score calculations.

- Discussion on Implementation and Future Prospects: There is curiosity about the implementation details, particularly how the VQ-VAE is used for next-token prediction with discrete high-dimensional vectors. Some users, like -p-e-w-, hope for practical applications of these theoretical breakthroughs, while others discuss the potential of reasoning in latent space and compare it to other emerging techniques like diffusion LLMs.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

error in pipeline that we are debugging... sorry

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Theme 1. IDE Showdown: Cursor, Windsurf, and the Code Editor Arena

- Cursor Crushes Codeium in Credit Consumption Contest!: Users report Cursor is more efficient and stable than Windsurf, especially with large files, with credit usage on Cursor being a fraction of Windsurf's Claude 3.7 costs. Windsurf users are facing high credit consumption due to repeated code rewrites and file analysis, with one user burning through 1200 credits in a day, while others switch to Cursor or free alternatives like Trae for better resource management.

- Cursor 0.47 Comforts Code Creation Chaos!: Cursor 0.47 update resolves Sonnet 3.7 issues, now following custom rules and behaving better for code generation, particularly for tasks like creating a welcome window for VS Code forks. Users noted the use of sequential thinking with multiple code paths in the updated version, improving code creation workflow.

- MCP Mayhem: Server Setup Snags Users: Users are struggling to set up MCP servers for code editors like Windsurf, encountering errors like "Not Found: Resource not found" when attempting to connect to different models. The difficulty in establishing Model Context Protocol (MCP) connections highlights ongoing challenges in integrating and utilizing external services with AI code editors.

Theme 2. Model Benchmarks and Optimization Breakthroughs

- QwQ-32B Challenges R1 for Local Coding Crown: QwQ-32B is touted as a game-changer for local AI coding, potentially achieving state-of-the-art performance at home, although benchmarks suggest it may not surpass R1 in all aspects. Daniel Han posted debugging tips for QwQ-32B looping issues, recommending sampler adjustments in llama.cpp and noting sensitivity to quantization, advising that the first and last layers should be left unquantized.

- ktransformers Claims IQ1 Quantization Crushes BF16: ktransformers posted benchmarks claiming Deepseek R1 IQ1 quantization outperforms BF16, sparking skepticism and debate in the Unsloth AI Discord, with one member noting they would've wrote 1.58bit tho. While the table was deemed incomplete, ongoing benchmarking is comparing Deepseek v3 (chat model) vs R1 IQ1, suggesting significant interest in extreme quantization methods for high-performance models.

- RoPE Scaling Rescues Long Context Qwen2.5-Coder-3B: Qwen2.5-Coder-3B-bnb-4bit model's context length of 32768 is extended to 128000 using kaiokendev's RoPE scaling of 3.906, confirming that with RoPE, as long as the architecture is transformers, the 128k tokens can be attended to. This demonstrates a practical method for significantly expanding the context window of smaller models.

Theme 3. Diffusion Models Disrupt Language Generation

- Inception Labs Diffuses into Text Generation with Midjourney-Sora Speed: Inception Labs is pioneering diffusion-based language generation, aiming for unprecedented speed, quality, and generative control akin to Midjourney and Sora. Members noted the emergence of open-source alternatives like ML-GSAI/LLaDA, suggesting diffusion models could revolutionize language generation with significant speed gains.

- Discrete Diffusion Models Get Ratios, Sparking Efficiency: A member highlighted the paper Discrete Diffusion Modeling by Estimating the Ratios of the Data Distribution from arxiv as potentially underpinning Inception Labs's product, noting its focus on estimating data distribution ratios for efficient diffusion modeling. Solving for the duals λ involves optimizing over the Lambert W function, which can be computationally intensive, prompting suggestions for using cvxpy and the adjoint method for optimization.

- LLaDA Model: Diffusion-based LLM Paradigm Shift Emerges: Large Language Diffusion Models (LLaDA) represent a novel paradigm in language model architecture, using a denoising diffusion process for parallel, coarse-to-fine text generation, contrasting with traditional autoregressive Transformers. Despite conceptual appeal, LLaDA and similar models may be limited by training focused on benchmarks rather than broad real-world tasks, with members observing limitations like repeat paragraph issues.

Theme 4. MCP and Agent Security Threats Loom Large

- MCP Servers Face Malicious Prompt Injection Menace: Concerns are escalating about MCP servers delivering malicious prompt injections to AI agents, exploiting models' inherent trust in tool calls over internal knowledge. Mitigation strategies proposed include displaying tool descriptions upon initial use and alerting users to instruction changes to prevent potential exploits.

- Security Channel Scrutinizes MCP Exploits: The community is considering establishing a dedicated security channel to proactively address and prevent MCP exploit vulnerabilities, emphasizing the inherent risks of connecting tools to MCP Servers/Remote Hosts without full awareness of consequences. Discussions highlighted the potential for regulatory compliance tool descriptions to be manipulated to trick models into incorporating backdoors.

- Perplexity API's Copyright Indemnification Raises Red Flags: Users flagged copyright concerns with the Perplexity API due to its scraping of copyrighted content, noting that Perplexity's API terms shifts liability onto the user for copyright infringement. Alternatives offering IP indemnification, such as OpenAI, Google Cloud, and AWS Bedrock, were highlighted as potentially safer options regarding copyright risks, with links to their respective terms of service.

Theme 5. Hardware Hustle: 9070XT vs 7900XTX and Native FP4 Support

- 9070XT Smokes 7900XTX in Raw Inference Speed: The 9070XT GPU outperforms the 7900XTX in inference speed, running the same qwen2.5 coder 14b q8_0 model at 44tok/sec versus 31tok/sec, and exhibiting a sub-second first token time compared to the 7900XTX's 4 seconds. Despite using Vulkan instead of ROCm due to driver limitations on Windows, the 9070XT demonstrates a significant performance edge, with some users reporting up to 10% better performance overall.

- Native FP4 Support Arrives on New GPUs: The 9070 GPU shows substantial improvements in FP16 and INT4/FP4 performance compared to older cards, with FP16 performance jumping from 122 to 389 and INT4/FP4 performance surging from 122 to 1557. This signifies the emergence of native FP4 support from both Nvidia and Radeon, opening new possibilities for efficient low-precision inference and training.

- Vulkan vs ROCm Performance Debate Heats Up: While the 9070XT currently lacks ROCm support on Windows, utilizing Vulkan via LM Studio, some users are reporting surprisingly competitive inference speeds, even surpassing ROCm in certain scenarios. However, others maintain that ROCm should inherently be faster than Vulkan, suggesting potential driver issues might be skewing results in favor of Vulkan in some user benchmarks.

PART 1: High level Discord summaries

Cursor IDE Discord

- Cursor Clashes with Lmarena in Quick Contest!: Members compared Cursor and Lmarena using a single prompt with GPT-3.7, with initial impressions favoring Cursor for output quality, citing unreadable text generated by Lmarena.

- Later analysis suggested Lmarena better respected the theme, but the general consensus was that both were dogshit.

- Cursor 0.47 Comforts Code Creation!: After updating, users reported that Cursor 0.47 fixed Sonnet 3.7 issues by following custom rules, complying with AI custom rules, and behaving better for code generation, particularly for a welcome window to a VS Code fork.

- They noted the use of sequential thinking with multiple code paths for increased speed.

- Vibe Coding: Validated and Visited!: A discussion about vibe coding, building micro-SaaS web apps, led to a user building a welcome window on a VS Code fork using Claude itself.

- A member defined the practice as treating the ai agent like a child that needs guidance, contrasting it with building a dockerized open-source project requiring orchestration experience.

- MCP: Maximizing Model Power!: Members explored using Model Context Protocol (MCP) servers to enhance AI code editors like Cursor and Claude, connecting to services like Snowflake databases.

- One member found that PearAI offered full context, while another discovered that

.cursorrulestend to be ignored in Cursor versions above 0.45.

- One member found that PearAI offered full context, while another discovered that

- Cursor Error Inspires Swag!: The infamous "Cursor is damaged and can't be opened" error message has inspired merchandise, with T-shirts and mousepads now available.

- This comedic turn underscores the community's ability to find humor in the face of technical frustrations.

Unsloth AI (Daniel Han) Discord

- ktransformers Claims IQ1 Crushes BF16: Members discussed Deepseek R1 IQ1 benchmarks posted by ktransformers, with one skeptical member noting they would've wrote 1.58bit tho.

- Another member noted the table was incomplete and the benchmarking was still in progress showing Deepseek v3 (the chat model) vs R1 IQ1.

- Unsloth's GRPO Algorithm Revealed: Members speculated on how Unsloth achieves low VRAM usage, suggesting asynchronous gradient offloading, however the real savings stem from re-implementing GRPO math.

- Other efficiencies come from gradient accumulation of intermediate layers, coupled with gradient checkpointing, and more efficient kernels like logsumexp.

- QwQ-32B Generation Looping Fixed: Daniel Han posted a guide on debugging looping issues with QwQ-32B models recommending samplers to llama.cpp such as --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" and uploaded dynamic 4bit quants & GGUFs.

- He said that QwQ is also sensitive to quantization - the first and last few layers should be left unquantized.

- Qwen's RLHF Success Story: One member achieved RLHF success using Unsloth GRPO on a Qwen7b model, reporting significant improvements in role adherence.

- However, the model showed noticeable degradation in strict instruction-following benchmarks like IFeval especially with formatting constraints and negative instructions.

- RoPE Scaling to the Rescue: The Qwen2.5-Coder-3B-bnb-4bit model can handle a sequence length of 32768, but with kaiokendev's RoPE scaling of 3.906, it was extended to 128000.

- It was confirmed that with RoPE, as long as the architecture is transformers, the 128k tokens can be attended to.

Nomic.ai (GPT4All) Discord

- Registry Editing can cause Blue Screen: A member shared that deleting a crucial .dll file after identifying it as a RAM hog led to a blue screen on the next boot, warning of registry editing risks without backups.

- Members generally agree that it's wise to backup personal files and reformat after tweaking the registry.

- Quantization Affects Performance and Memory: Users discussed quantization, noting a preference for f16 quantization to fit more parameters in a smaller load, while acknowledging that other quantizations may cause crashes with flash attention.

- In the context of quantization, someone described floating points as its signed bit size so signed 16 int is 32.

- Windows has File Path Length Limits: Members discussed the limitations of file path lengths in Windows, noting the standard 260-character limit due to the MAX_PATH definition in the Windows API, as explained in Microsoft documentation.

- This limitation can be bypassed by enabling long path behavior per application via registry and application manifest modifications for NT kernel path limit of 32,767.

- Inception Labs makes Diffusion-Based Text: Inception Labs is pioneering diffusion-based language generation which promises unprecedented speed, quality, and generative control inspired by AI systems like Midjourney and Sora.

- Members noted that open source alternatives are in development, such as ML-GSAI/LLaDA, and that significant speed increases may be on the horizon with this technology.

- Trustless Authentication is the Future: Members discussed the potential of trustless authentication, where a 3D scan of a face and ID is converted into an encrypted string to serve as a digital passport, similar to the business model used by Persona

- It's envisioned as a trustless verification database with users' personalization tags in the file, where generated deepfake facial scans and IDs would not work.

Codeium (Windsurf) Discord

- Windsurf Suffers Stability Surfing Setbacks: Users report that Windsurf is unstable after the latest updates, noting issues like infinite loops, repeating code changes, and general unresponsiveness.

- Many users are switching to Cursor or Trae, citing better stability and resource management, with one user stating, *"I have decided to switch to Cursor...Presumably more stable. I will come back in a month or so, see how it's doing."

- Credits Crunch: Windsurf's Costly Consumption Catches Critics: Users are experiencing high credit consumption due to the AI repeatedly rewriting code or analyzing the same files, leading to frustration.

- One user complained about burning through 1200 flow credits in one day after the introduction of Claude 3.7, calling it "100% unstable and 100% u pay for nothing", suggesting the tool reads only 50 lines at a time and takes 2 credits for reading a 81 line file.

- Cascade Terminal Plagued with Problems: Some users report the Cascade terminal disappearing or becoming unresponsive, with no visible settings to fix it.

- One user mentioned a temporary solution of restarting, but the issue reoccurs, while another suggested using

CTRL Shift Pto clear all cache and reload windows.

- One user mentioned a temporary solution of restarting, but the issue reoccurs, while another suggested using

- Model Melee: Cursor Crushes Codeium in Credit Contest: Users are comparing Windsurf to Cursor, with many finding Cursor to be more efficient and stable, especially when handling large files.

- One user reported that credit usage on Cursor is "a fraction of windsurf with claude 3.7" while another reports "Trae is doing x100 better than ws while its free".

- MCP Mayhem: Users Struggle with Server Setup: Users are encountering issues while trying to use MCP servers, with errors related to the model or configuration.

- One user received a "Not Found: Resource not found" error and tried different models without success.

Perplexity AI Discord

- Perplexity Pro Perks Provoke Problems: Several users reported unexpected cancellations of their Perplexity Pro accounts, suspecting a scam, later revealed to be due to ineligibility for the "DT 1 Year Free HR" offer meant for Deutsche Telekom customers in Croatia, as stated in the terms of service.

- Support confirmed the cancellations were due to this offer, intended for users in Croatia, creating confusion among those who were not eligible.

- GPT-4.5 Tier Talk Triggers Theories: Users discussed the availability of GPT-4.5 with Perplexity Pro, with confirmations of 10 free uses per 24 hours.

- It was clarified that GPT-4.5 is very expensive to use, suggesting that the auto model selection might be sufficient to avoid manually picking a model.

- Complexity Extension Creates Canvas Capabilities: A user explained how to generate mermaid diagrams within Perplexity using the Complexity extension, providing canvas-like features.

- By enabling the canvas plug-in and prompting the AI to create a mermaid diagram, users can render it by pressing the play button on the code block, with more info available in this Discord link.

- Google Gemini 2.0 Grabs Ground: An article from ArsTechnica details Google's expansion of AI search features powered by Gemini 2.0.

- Google is testing an AI Mode that replaces the traditional search results with Gemini generated responses.

- Prime Positions AI Powered Product Placement: A Perplexity page covers how Amazon Prime is testing AI dubbing for its content.

- Additional perplexity pages include: Apple's Foldable iPhone, OpenAI's AI Agent, and DuckDuckGo's AI Search.

LM Studio Discord

- LM Studio Tweaks Template Bugs, Speeds RAG: LM Studio 0.3.12 is now stable, featuring bug fixes for QwQ 32B jinja parsing that previously caused an

OpenSquareBracket !== CloseStatementerror, detailed in the full release notes.- Additionally, the speed of chunking files for retrieval in RAG has been significantly improved, with MacOS systems now correctly indexing MLX models on external exFAT drives.

- Qwen Coder Edges out DeepSeek on M2: For coding on a Macbook M2 Pro with 16GB RAM, Qwen Coder is recommended over DeepSeek v2.5 due to memory constraints, acknowledging that performance will still lag behind cloud-based models.

- Members noted Qwen 32B punches above its weight and performs at least as well as Llama 3.3 70b.

- Unsloth Fast-Tracks LLM Finetuning: Unsloth was recommended for faster finetuning of models like Llama-3, Mistral, Phi-4, and Gemma with reduced memory usage.

- Members noted that finetuning is much more resource intensive than inference and LM Studio currently does not have a public roadmap that includes this feature.

- 9070XT smashes 7900XTX in Raw Speed: A user compared the 9070XT to the 7900XTX running the same qwen2.5 coder 14b q8_0 model and found that the 9070XT runs about 44tok/sec with under a second to first token, while the 7900XTX runs 31tok/sec with 4sec to first token.

- One user believes those seeing better Vulkan performance are having driver issues because rocm should be faster than vulkan, by far.

- Native FP4 Support Arrives: The 9070 has significantly improved FP16 and INT4/FP4 performance compared to older cards, with 122 vs 389 FP16 and 122 vs 1557 INT4/FP4.

- This signals that native FP4 support is available from both Nvidia and Radeon, as well as discussions of the impact of quantization on model quality, particularly the trade-offs between smaller quant sizes and potential quality loss.

HuggingFace Discord

- Open-Source Agent Alternatives to Replit Spark Debate: Members discussed open-source alternatives with agent functionality similar to Replit/Bolt, and to export_to_video for direct video saving to a Supabase bucket.

- The community discussed alternatives to commercial offerings with integrated agent functionality.

- Dexie Wrapper Proposal for Gradio Apps: A user proposed a Dexie wrapper for easier IndexedDB access in Gradio apps, sparking discussion on its implementation as a custom component.

- A link to the Gradio developers Discord channel was shared for further discussion.

- Dataset Citation Drama Unfolds: A user suspected the research paper, 'NotaGen: Symbolic Music Generation with LLM Training Paradigms', of using their Toast MIDI dataset without proper citation.

- Community members advised contacting the corresponding author and generating a DOI for the dataset to ensure proper attribution and recognition of the dataset by academic software.

- Guidance Requested on OCR-2.0 Fine-Tuning: A member requested guidance on fine-tuning OCR-2.0, which they identified as the latest and best model, linking to a SharePoint document.

- The member inquired about the appropriate steps for fine-tuning the got/ocr-2.0 models according to the documentation.

- Decoding the AI Agent: Component vs. Entity: An LLM is a component with tool-calling abilities, not an Agent itself; an agentic AI model requires both, according to this response.

- Debate continues whether a Retrieval Augmented Generation (RAG) system could be considered an "environment" with which the LLM interacts.

OpenRouter (Alex Atallah) Discord

- Perplexity API Exposes Copyright Concerns: Users flagged copyright issues with the Perplexity API due to its scraping of copyrighted content, noting that Perplexity's API terms shifts liability onto the user.

- Alternatives such as OpenAI, Google Cloud, and AWS Bedrock offer IP indemnification, shifting the risk to the vendor, see: OpenAI terms, Google Cloud Terms, and AWS Bedrock FAQs.

- Sonar Deep Research Models Flounder with Errors: Members reported Perplexity Sonar Deep Research experiencing frequent errors, high latency (up to 241 seconds for the first token), and unexpectedly high reasoning token counts.

- One member mentioned a 137k reasoning token count with no output, while others confirmed the model eventually stabilized.

- Gemini Embedding Text Model Debuts: A new experimental Gemini Embedding Text Model (gemini-embedding-exp-03-07) is now available in the Gemini API, surpassing previous models, see Google AI Blog.

- This model now holds the top rank on the Massive Text Embedding Benchmark (MTEB) Multilingual leaderboard and also supports longer input token lengths.

- OpenRouter Reasoning Parameter Riddled with Inconsistencies: Users found inconsistencies in OpenRouter's reasoning parameter, some models marked as supporting reasoning despite endpoints lacking support, while some providers did not return reasoning outputs.

- Tests revealed configuration issues and discrepancies between the models and endpoints, with Cloudflare noted to lack a /completions endpoint.

- Claude 3.7 Stumbles with Russian Prompts: A user reported Claude 3.7 struggling with Russian language prompts, responding in English and potentially misunderstanding nuances.

- The issue arose while using cline with OpenRouter, suggesting the issue might stem from Anthropic rather than the extension or OpenRouter itself.

Latent Space Discord

- Minion.ai Meets its Maker: Members reported that Minion.ai is dead and not to believe the hype, with some describing it as that thing with like the 4-cartoony looking characters that were supposed to go and do agent stuff for you.

- The closure highlights the volatile nature of AI startups and the need for critical evaluation of marketed capabilities.

- Google Expands Gemini Embedding Model: Google released an experimental Gemini Embedding Model for developers with SOTA performance on MTEB (Multilingual).

- The updated model features an input context length of 8K tokens, output of 3K dimensions, and support for over 100 languages.

- Claude Code Enters IDE Arena: Members discussed comparing Claude code with cursor.sh and VSCode+Cline / roo-cline, prioritizing code quality over cost.

- The discussion references a previous message indicating an ongoing exploration of optimal coding environments.

- AI Personas Become the Meme Overlords: Referencing a tweet one member mentioned that the norm will shift to AI PERSONAS that embody personality types.

- The tweet mentions that agents will race to be THE main face of subgroup x, y, and z to capture the perfect encapsulation of shitposters, stoners, athletes, rappers, liberals, and memecoin degens.

- Agent-as-a-Service, the Future?: Members pondered the viability of an Agent-as-a-Service model.

- One member floated the idea of a bot that sits in front of DoorDash, referred to as DoorDash MCP.

OpenAI Discord

- OpenAI Squeezes ChatGPT Message Limit: Users are reporting ChatGPT now enforces a 50 messages/week limit to prevent misuse of the service.

- Members were frustrated at the inconvenience, and debated workarounds.

- HVAC gets LLM AI-Pilot: A member shared a YouTube video demo of an LLM trained on HVAC installation manuals.

- Another member suggested using Mistral OCR to process manuals, praising its ability to read difficult fonts and tables cheaply.

- Local LLMs Show Cloud Promise: Members discussed running LLMs locally versus using cloud services, with one advocating for the potential of local DIY LLMs over black box cloud solutions.

- Discussion included splitting processes between GPU and CPU, and the benefits of quantization in reducing memory requirements.

- SimTheory's Claims Spark Skepticism: A user promoted SimTheory for offering a higher O1 message cap limit than OpenAI at a lower price.

- Other members voiced disbelief, questioning how they could undercut OpenAI's pricing while providing a higher limit.

- Models Mimic, Missing Opportunities: Models tend to closely follow request patterns, potentially overlooking better methods, especially with increased model steerability.

- One said, When given code to screw in nails with a hammer, the model runs with it, guessing we know what we want.

aider (Paul Gauthier) Discord

- Aider Advocates Reasoning Indicator: Users have requested a feature in Aider to indicate when the reasoning model is actively reasoning, particularly for Deepseek R1 or V3 on Openrouter.

- A member proposed patching Aider and litellm, referencing a hack in litellm to retrieve reasoning tokens inside

tags.

- A member proposed patching Aider and litellm, referencing a hack in litellm to retrieve reasoning tokens inside

- Jamba Models strut Mamba-Transformer MoE: AI21 Labs launched Jamba 1.6 Large & Mini models, claiming better quality and speed than open models and strong performance in long context tasks with a 256K context window.

- The models uses a Mamba-Transformer MoE hybrid architecture for cost and efficiency gains, and can be deployed self-hosted or in the AI21 SaaS.

- Startups Swear by AI-Written Code: A Y Combinator advisor mentioned that a quarter of the current startup cohort has businesses almost entirely based on AI-written code.

- The context provided no further details.

- Copilot Bans Aider User: A user reported a Copilot account suspension due to very light use of copilot-api in aider, urging caution to other users.

- Others speculated about possible causes like account sharing or rate limiting, with a reference to the copilot-api GitHub repo.

- QwQ-32B Challenges R1: A member shared a link about QwQ-32B, asserting it has changed local AI coding forever and achieves state-of-the-art (SOTA) performance at home.

- Another member pointed to a benchmark discussion suggesting QwQ-32B might be good but not necessarily better than R1, especially considering the model size in Discord.

Yannick Kilcher Discord

- DeepSeek Faces Corporate Bans: Many companies are banning DeepSeek due to security concerns, despite its open-source nature, sparking debate over media influence, Chinese government influence, and the need for reviewable code and local execution.

- One member noted that DeepSeek is like Deep Research + Operator + Claude Computer.

- Discrete Diffusion Models Get Ratios: A member suggested discussing the paper Discrete Diffusion Modeling by Estimating the Ratios of the Data Distribution from arxiv, noting it likely underpins Inception Labs's product.

- It was further stated that solving for the duals λ requires optimizing over the Lambert W function and that this can be computationally inefficient, suggesting the usage of cvxpy and adjoint method.

- Latent Abstraction Reduces Reasoning Length: A new paper proposes a hybrid reasoning representation, using latent discrete tokens from VQ-VAE to abstract initial steps and shorten reasoning traces.

- A member questioned if this latent reasoning is merely context compression, with another joking that electroconvulsive therapy (ECT) might be needed to prevent the misuse of the term reasoning in AI.

- OpenAI Softens AGI Stance: OpenAI is reportedly shifting from expecting a sudden AGI breakthrough to viewing its development as a continuous process, according to the-decoder.com.

- This shift may be a result of the underwhelming reception of GPT 4.5, with some blaming Sam Altman for setting expectations too high.

- Sampling Thwarts Agent Loop: The use of n-sigma-sampling, detailed in this paper and GitHub repo, seems to mitigate bad samples and looping behavior in multi-step agentic workflows.

- The technique filters tokens efficiently without complex probability manipulations, maintaining a stable sampling space regardless of temperature scaling.

MCP (Glama) Discord

- VSCode Embraces MCP in Copilot: VSCode plans to add MCP support to GitHub Copilot, as showcased on their live stream.

- Community members are discussing the implications for both open-source and closed-source MCP implementations.

- MCP Servers Face Prompt Injection Threat: Concerns were raised regarding MCP servers serving malicious prompt injections to AI agents, exploiting the trust models place in tool calls over internal knowledge.

- Suggested mitigations include displaying a list of tool descriptions upon first use and alerting users to any instruction changes to prevent exploits.

- Security Channel Targets MCP Exploits: Community members considered creating a security channel to proactively prevent exploits, emphasizing the risks of connecting tools to MCP Servers/Remote Hosts without understanding the consequences.

- The discussion highlighted the potential for regulatory compliance tool descriptions to trick models into including backdoors.

- Python MCP Quickstart Confuses Users: Users reported errors when running the Python MCP quickstart (here), leading to a recommendation to use wong2/mcp-cli as a better alternative.

- This alternative is considered easier to use and more reliable for initial MCP setup.

- Swagger Endpoints Turn MCP-Friendly: A member is developing mcp-openapi-proxy to convert any swagger/openapi endpoint into discoverable tools, mirroring the design of their mcp-flowise server.

- Their latest mcp-server functions in 5ire but encounters issues with Claude desktop, indicating compatibility challenges across different platforms.

Modular (Mojo 🔥) Discord

- Mojo's Flexibility Sparks Debate: Discussions about Mojo's dynamism and its impact on performance led to considerations about use cases and the balance between flexibility and speed, referencing this HN post.

- Suggestions arose that dynamism should only penalize performance when actively used, though concerns persist about its potential impact on struct performance, even without classes.

- Monkey Patching Faces Scrutiny: The community explored alternatives to monkey patching to achieve dynamic behaviors in Mojo, such as function pointers or composition, citing that it's slower, harder to understand, breaks static analysis tooling and generally doesn't do anything that proper polymorphism couldn't have done.

- The discussion highlighted that excessive monkey patching can result in harder-to-understand code that disrupts static analysis, arguing that its utility doesn't outweigh the drawbacks.

- Python Library Porting Challenges: Members tackled the obstacles of porting Python libraries to Mojo, especially regarding dynamism and global state, with the recommendation to utilize CPython interop for performance-critical components.

- Concerns were voiced about porting libraries heavily reliant on Python's dynamism and global state, particularly when that library cannot function otherwise.

- Protocol Polymorphism Gains Traction: The advantages of protocol polymorphism in achieving polymorphism without a class tree were highlighted, with a reference to PEP 544 for polymorphism without class hierarchies.

- Some members endorsed using a hash table of function pointers for dynamic behaviors, favoring static typing and ownership rules for unit tests in Mojo.

GPU MODE Discord

- SOTA Agentic Methods are Simple Algorithmically: Members discussed how SOTA agentic methods on Arxiv tend to involve relatively simple algorithms, resembling a small state machine.

- This implies that complex framework abstractions might be unnecessary, suggesting simpler abstractions for data, state, and API call management suffice.

- Triton Autotune use_cuda_graph Causes Confusion: A member sought clarification on the

use_cuda_graphargument intriton.autotune, unsure how it applies to single CUDA kernels.- The confusion stems from the fact that CUDA graphs typically optimize sequences of kernel launches, contrasting with the single-kernel scope of

triton.autotune.

- The confusion stems from the fact that CUDA graphs typically optimize sequences of kernel launches, contrasting with the single-kernel scope of

- Nvidia's NCCL AllReduce Implemented with Double Binary Trees Beats Ring Topology: The NVIDIA blog post suggests that double binary trees in NCCL 2.4 offer full bandwidth and lower logarithmic latency compared to 2D ring latency for the AllReduce operation.

- Double binary trees were added in NCCL 2.4 which offer full bandwidth and a logarithmic latency even lower than 2D ring latency.

- WoolyAI Launches CUDA Abstraction Beta for GPU Usage: WoolyAI has launched a beta for its CUDA abstraction layer that decouples Kernel Shader execution from CUDA applications, compiling applications to a new binary and shaders into a Wooly Instruction Set.

- This enables dynamically scheduling workloads for optimal GPU resource utilization; they are currently supporting PyTorch.

- Cute Kernels Autotunes CUDA and Triton Kernels: A member released Cute Kernels, a collection of kernels for speeding up training through autotuning over Triton and CUDA implementations, and can dispatch to either cutlass or Triton automatically.

- This implementation was used in production to train IBM's Granite LLMs because the LLVM compiler can sometimes create more efficient code than the NVCC, and makes sense to tune over the kernel backend.

Eleuther Discord

- Open Source AI Projects Seek New Members: A new member seeks recommendations for interesting open-source AI projects in areas like LLM pre-training, post-training, RL, and interpretability, and suggested modded-nanoGPT for those interested in theory work.

- Another member suggested that if the new member is interested in pretraining, the best project to get involved with is the GPT-NeoX training library.

- Token Assorted Paper's Vocabulary is Questionable: A member speculated that the Token Assorted paper might be simply adding the codebook to their vocabulary during fine-tuning for next token prediction on latent codes.

- They criticized this approach as potentially not generalizable to open reasoning domains, suggesting that finding the K most common strings in the reasoning corpus could yield better results.

- TorchTitan Sharding Requires All-Reduce: In a discussion about TorchTitan embedding sharding, it was explained that with vanilla TP, if the input embedding is sharded on the vocab dimension, an all-reduce is required afterward to handle cases where the embedding layer outputs 0 for missing vocabulary elements, clarified in this issue on Github.

- Members discussed the storage implications of having an embedding layer output zeros for missing tokens, noting that storage is needed right after communications, but that it's free if you can reuse that storage.

- Logit Lens Illuminates Llama-2's Linguistic Bias: A member shared their appreciation for this paper on multilingual language models and the use of Logit Lens in analyzing the Llama-2 family.

- The paper explores how Llama-2 models, trained on English-dominated data, handle non-English prompts, revealing phases where the model initially favors English translations before adjusting to the input language.

LlamaIndex Discord

- yWorks unveils Real-Time Knowledge Graph Visualization: @yworks showcased yFiles, its SDK for visualizing knowledge graphs, offering real-time updates and dynamic interactions.

- This demo highlights the ability to dynamically update and interact with knowledge graphs in real-time.

- Anthropic Cookbook Expanded: The LlamaIndex team updated and expanded their Anthropic Cookbook, the authoritative source for learning about basic API setup with simple completion and chat methods.

- The cookbook serves as a comprehensive guide for setting up and utilizing Anthropic's APIs within the LlamaIndex framework.

- Debugging Prompt Printing with SQLTableRetrieverQueryEngine: A member inquired about printing the prompt used by SQLTableRetrieverQueryEngine in LlamaIndex, where another member shared a code snippet

from llama_index.core import set_global_handler; set_global_handler("simple")to enable prompt printing.- The solution provides a practical method for debugging and understanding the prompts used by SQLTableRetrieverQueryEngine during query execution.

- Jina AI Package encounters Installation Issues: A member reported an import error with the Jina AI package, where it was suggested to install the provider package using

npm install @llamaindex/jinaai, with a link to LlamaIndex migration documentation.- The migration documentation explains the shift to provider packages in v0.9, resolving the import error by ensuring the correct package installation.

- LlamaExtract Beta Tempts Eager Adopters: A member requested access to the beta version of LlamaExtract, where they were instructed to DM a specific user with their email, also referencing the LlamaExtract documentation.

- The documentation outlines the process for getting started with LlamaExtract and highlights key features for potential beta testers.

Cohere Discord

- Command R7B Inference Dragging?: A user reported slow inference times with Command R7B on Hugging Face, which was attributed to suboptimal user hardware or inefficient model execution.

- The member clarified that the issue likely stems from the user's setup rather than the inherent performance of Command R7B.

- Ollama Tool Issues Plague Users: A user encountered issues with tool invocation using

command-r7b:latestwith Ollama and Langchain, receiving errors about missing tool access.- Guidance suggested ensuring correct JSON format for tool passing and verifying Ollama's configuration for tool calling support.

- Dev Seeks Open Source AI Gig: A developer with experience in pre-training GPT-2 and fine-tuning models on Hellaswag is seeking interesting open-source AI projects to contribute to.

- The member is also interested in networking within the Vancouver, BC area.

- 504 Gateway Error Returns!: Users reported recurring 504 Gateway Errors and 502 Server Errors, indicating temporary server-side issues.

- The error messages advised retrying requests, suggesting the problems were transient.

- Graphs Power Better Topic Models: A member suggested using Knowledge Graphs for enhanced topic modelling, specifically recommending a LLM from TogetherAI.

- They highlighted the generous free credits offered by TogetherAI, encouraging experimentation with their platform for topic modelling tasks.

Notebook LM Discord

- Podcast Cloning Gets Streamlined: A user shared a YouTube video showcasing NotebookLM integrated with Wondercraft for creating podcasts with professionally cloned voices, streamlining the podcast creation process.

- Commentary suggests Wondercraft offers a more streamlined approach than 11Labs and HeyGen, though its subscription price might be steep for non-monetized podcasts.

- Drive Data Encryption Discussed: A user pointed out that while data is encrypted during transmission to Google Drive, it is not encrypted on Drive itself.

- This means that Google, successful hackers, and those with whom the user has shared the directory can access the data.

- AI Voices Stuttering Like People: An AI speaker in a generated audio file now stammers like normal people, which feels very natural, according to one user who attached an example wav file.

- The user also mentioned that the stammering increases the audio length, potentially reducing the amount of actual information conveyed within Google's daily limit.

- Unlock Analyst/Guide Chat Settings Impact Timeline Generation: A user discovered that chat settings such as analyst/guide short/long in NotebookLM affect the timeline and overview generation, as the hosts essentially request these settings during audio overview generation.

- The user also noted their assistant combines the briefing overview, detailed timeline, character sheets, and raw sources into a single document.

- NotebookLM gets Chrome Extensions: Members discussed the possibility of uploading a list of URLs to NotebookLM to add each URL as a source, and mentioned several Chrome extensions are available for this purpose.

- These include the NotebookLM Web Importer, NotebookLM Toolbox, and NotebookLM YouTube Turbo.

DSPy Discord

- DSPy Batch Function Ponders Parallel Work: A member asked whether DSPy's batch function can delegate parallel work to a vllm backend running two instances of the same LLM, referencing the parameters num_threads, max_errors, and return_failed_examples.

- It was suggested to increase num_sequences or pipeline_parallel_size if enough VRAM is available, instead of using separate APIs.

- VLLM Pipeline Parallel Balances Load: With pipeline parallel size set to 2 in the vllm setup, a member confirmed that vllm handles the load balancing.

- Benchmarking was encouraged to compare processing times against non-parallel approaches.

- LM Subclass Proposed for Load Balancing: A member proposed creating a subclass of LM for load balancing if two instances are on different nodes, as DSPy doesn't natively handle this.

- Although a proxy could forward requests, solving it on the vllm side is considered the better approach.

AI21 Labs (Jamba) Discord

- String Replacement Snippet Shined: A member posted a PHP code snippet in

general-chatfor string replacement:$cleanedString = str_replace(['!', '@', '#', '$', '%', '^'], '', "This is a test string!@#$%^");.- The code removes special characters from a string.

- Laptop's Leap Leads to Loss: A member reported their laptop broke after taking a tumble.

- They characterized the damage as not pretty.

tinygrad (George Hotz) Discord

- Tinygrad JIT taking 30min: A user reported that the tinygrad JIT compiler took over 30 minutes to compile a 2-layer model.

- The message did not contain any solutions.

- Tinygrad Loss turns NaN: A user inquired about the cause of loss becoming NaN (Not a Number) after the initial step (step 0) in tinygrad.

- The message did not contain any solutions.

Torchtune Discord

- Torchtune Plans Audio Addition: Members discussed high level plans to incorporate audio modality into Torchtune in the future.

- There are no specific timelines or technical details available at this time, indicating it's in the early planning stages.

- Torchtune Audio Support Still on the Horizon: The team continues to indicate they are planning to add audio modality support to Torchtune in the future.

- As of now, specific timelines and technical details remain unconfirmed, suggesting that this feature is still in the preliminary planning stages.

LLM Agents (Berkeley MOOC) Discord

- Link Request for LLM Agents (Berkeley MOOC): claire_csy requested a valid link to the LLM Agents Berkeley MOOC discussion, as the previous link had expired.

- The user seeks access to the MOOC lecture discussion for further engagement.

- Need Active Link for LLM Agents MOOC: A user, claire_csy, reported that the existing link for the LLM Agents MOOC lecture discussion is expired.

- The request highlights the need for an updated and accessible link for participants interested in the Berkeley MOOC.

Gorilla LLM (Berkeley Function Calling) Discord

- Diffusion LLMs Hype Sparking Debate: A community member inquired about the hype around the launch of Diffusion LLMs, specifically the Mercury model, and whether it will replace transformer-based models.

- They mentioned reading the white paper but found it difficult to understand, seeking insights from experts in the community.

- LLaDA Model: Diffusion-based LLM Paradigm Shift: A community member shared a link to diffusionllm.net, explaining that Large Language Diffusion Models (LLaDA) represent a new paradigm in language model architecture.

- They elucidate that, unlike traditional autoregressive (AR) Transformers, LLaDA uses a denoising diffusion process to generate text in a parallel, coarse-to-fine manner.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!