[AINews] DeepSeek v3: 671B finegrained MoE trained for $5.5m USD of compute on 15T tokens

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Full co-design of algorithms, frameworks, and hardware is all you need.

AI News for 12/25/2024-12/26/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (215 channels, and 5486 messages) for you. Estimated reading time saved (at 200wpm): 548 minutes. You can now tag @smol_ai for AINews discussions!

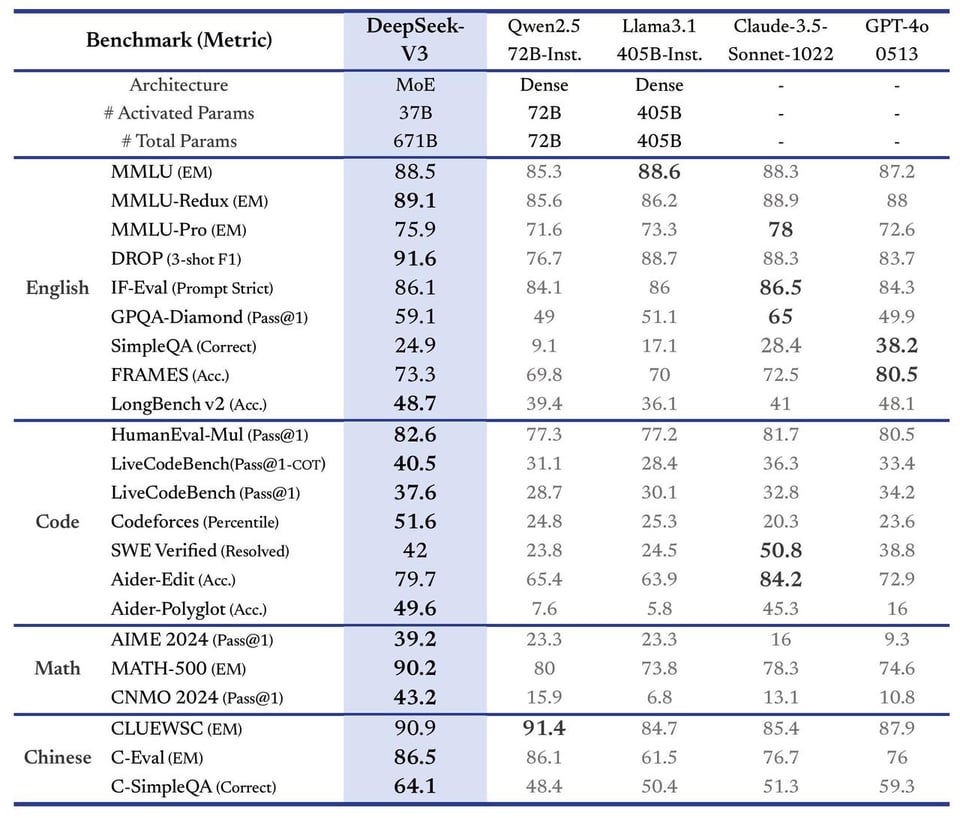

As teased over the Christmas break, DeepSeek v3 is here (our previous coverage of DeepSeek v2 here). The benchmarks are as good as you've come to expect from China's frontier open model lab:

(more details on aider and bigcodebench)

But the training details are even better:

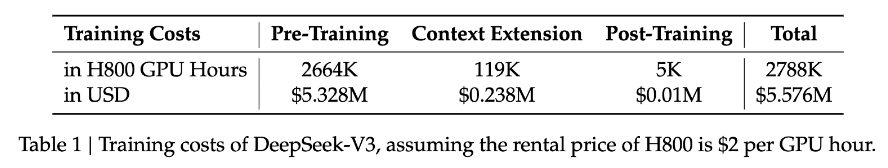

- trained on 8-11x less than the normal budget for models of this kind: specifically 2048 H800s (aka "nerfed H100s") for about 2 months. Llama 3 405B was, per its paper, trained on 16k H100s. They estimate the cost at $5.5m USD.

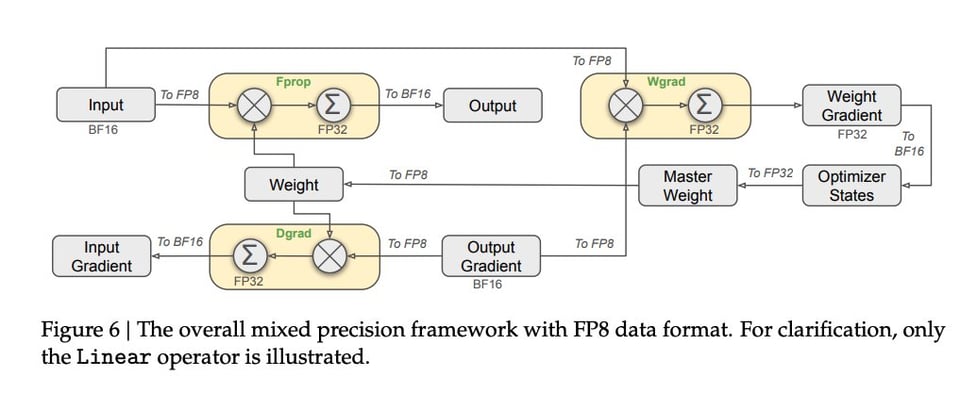

- homegrown native FP8 mixed precision training (without having access to Blackwell GPUs - as Shazeer intended?)

- Scaling up Multi-Head Latent Attention from DeepSeek v2

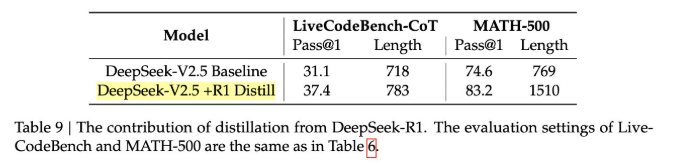

- distilling from R1-generated synthetic reasoning data and using other kinds of reward models

- no need for tensor parallelism - recently named by Ilya as a mistake

- pruning + healing for DeepSeekMoE style MoEs, scaled up to 256 experts (8 active + 1 shared)

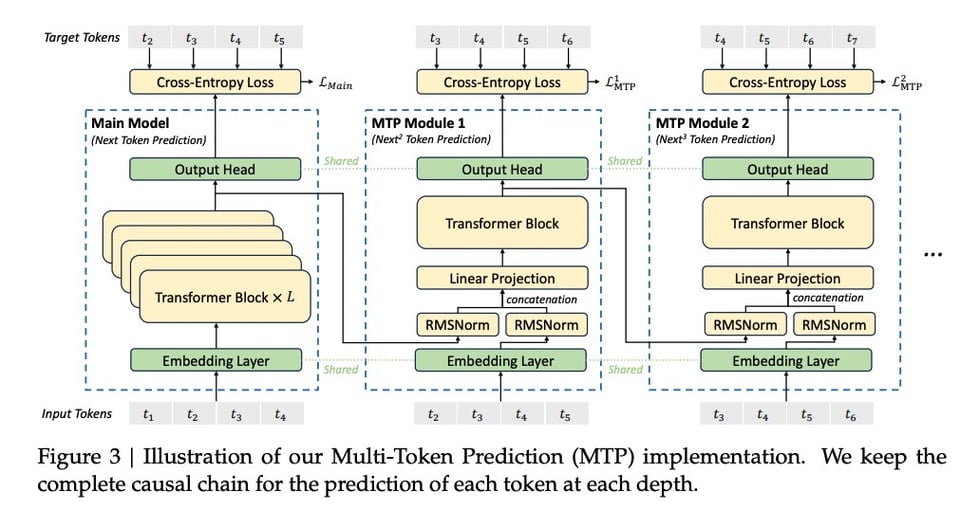

- a new "multi token prediction" objective (from Better & Faster Large Language Models via Multi-token Prediction) that allows the model to look ahead and preplan future tokens (in this case just 2 at a time)
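The "256 experts (8 active + 1 shared)" layout amounts to top-k routing plus an always-on shared expert. Below is a minimal pure-Python sketch, loosely following the V3 paper's description (sigmoid token-to-expert affinities, top-k selection, gate weights normalized over the selected experts). The centroid vectors and toy experts are hypothetical stand-ins for learned weights, and the sketch ignores load balancing, expert parallelism and batching.

```python
import math

def route_token(hidden, centroids, top_k):
    """Select top_k routed experts for one token; return {expert_index: gate_weight}.

    centroids holds one (hypothetical) learned vector per routed expert.
    """
    # Token-to-expert affinity: sigmoid of a dot product.
    scores = [1.0 / (1.0 + math.exp(-sum(h * c for h, c in zip(hidden, cen))))
              for cen in centroids]
    # Keep the top_k experts with the highest affinity.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Normalize the selected affinities so the gate weights sum to 1.
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}

def moe_layer(hidden, shared_expert, routed_experts, centroids, top_k):
    """Residual MoE layer: the shared expert always runs, routed experts only if selected."""
    out = shared_expert(hidden)
    for i, gate in route_token(hidden, centroids, top_k).items():
        expert_out = routed_experts[i](hidden)
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return [h + o for h, o in zip(hidden, out)]

def shared(x):
    # Toy shared expert that contributes nothing, to keep the arithmetic readable.
    return [0.0 for _ in x]

# Toy setup: 4 routed experts (expert i scales its input by i) and top-2 routing.
# DeepSeek-V3 itself routes each token to 8 of 256 experts.
routed = [lambda x, s=i: [s * v for v in x] for i in range(4)]
centroids = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [2.0, 0.0]]
print(route_token([1.0, 0.0], centroids, top_k=2))
print(moe_layer([1.0, 0.0], shared, routed, centroids, top_k=2))
```

Only the selected experts do any work for a given token, which is why a 671B-parameter model activates roughly 37B parameters per token.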

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Releases

- DeepSeek-V3 Launch and Performance: @deepseek_ai and @reach_vb announced the release of DeepSeek-V3, featuring 671B MoE parameters and trained on 14.8T tokens. This model outperforms GPT-4o and Claude Sonnet-3.5 in various benchmarks.

- Compute Efficiency and Cost-Effectiveness: @scaling01 highlighted that DeepSeek-V3 was trained using only 2.788M H800 GPU hours, far fewer than the 30.8M GPU hours used for Llama 3 405B.

- Deployment and Accessibility: @DeepLearningAI and @reach_vb shared updates on deploying DeepSeek-V3 through platforms like Hugging Face, emphasizing its open-source availability and API compatibility.
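The headline cost ($5.5m here, $5.6M in the Reddit threads) can be reproduced from the GPU-hour count. A minimal sketch, assuming the $2 per H800 GPU hour rental price that DeepSeek's own estimate uses; it is a rental-equivalent figure, not a measured bill, and per the paper it covers only the final training run, not prior research or ablations.

```python
# Figures quoted above. The $2/GPU-hour H800 rental rate is an assumption
# taken from DeepSeek's estimate, not an actual invoice.
DEEPSEEK_V3_GPU_HOURS = 2.788e6
LLAMA3_405B_GPU_HOURS = 30.8e6
RENTAL_USD_PER_GPU_HOUR = 2.0
CLUSTER_GPUS = 2048

def training_cost_usd(gpu_hours, usd_per_hour=RENTAL_USD_PER_GPU_HOUR):
    return gpu_hours * usd_per_hour

def wall_clock_days(gpu_hours, num_gpus=CLUSTER_GPUS):
    # Assumes every GPU in the cluster is busy for the whole run.
    return gpu_hours / num_gpus / 24

cost = training_cost_usd(DEEPSEEK_V3_GPU_HOURS)
ratio = LLAMA3_405B_GPU_HOURS / DEEPSEEK_V3_GPU_HOURS
days = wall_clock_days(DEEPSEEK_V3_GPU_HOURS)
print(f"cost ~ ${cost / 1e6:.2f}M, {ratio:.1f}x fewer GPU hours, ~{days:.0f} days")
# prints "cost ~ $5.58M, 11.0x fewer GPU hours, ~57 days"
```

About 57 days on 2048 GPUs matches the "2 months" above, and the roughly 11x GPU-hour gap is the upper end of the "8-11x" range.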

AI Research Techniques and Benchmarks

- OREO and NLRL Innovations: @TheTuringPost discussed the OREO method and Natural Language Reinforcement Learning (NLRL), showcasing their effectiveness in multi-step reasoning and agent control tasks.

- Chain-of-Thought Reasoning Without Prompting: @denny_zhou highlighted work showing that pretrained models can reason with Chain-of-Thought (CoT) without task-specific prompts: changing the decoding process (exploring top-k alternative tokens instead of decoding greedily) surfaces CoT reasoning paths that are already latent in the model.

- Benchmark Performance: @francoisfleuret and @TheTuringPost reported that newer techniques such as Multi-Token Prediction (MTP) and Chain-of-Knowledge consistently improve benchmark results in areas such as math problem-solving and agent control.
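To make multi-token prediction concrete: with prediction depth D, each position i is trained to predict tokens i+1 through i+1+D (D = 1 in DeepSeek-V3, i.e. 2 tokens per position). This is a minimal sketch, assuming per-depth log-probabilities have already been computed by the model's heads or MTP modules; the `log_probs` layout is hypothetical, every depth is scored on the same truncated set of positions for simplicity, and the λ/D weighting of the extra losses follows the V3 paper's description.

```python
import math

def mtp_targets(tokens, depth):
    """For each position i, the targets [tokens[i+1], ..., tokens[i+1+depth]].

    Positions too close to the end to have all targets are dropped (a simplification).
    """
    return [tokens[i + 1:i + 2 + depth] for i in range(len(tokens) - 1 - depth)]

def mtp_loss(log_probs, tokens, depth, lam):
    """Main next-token loss plus lam-weighted MTP loss averaged over depths.

    log_probs[k][i] is a hypothetical dict {token: log-probability} produced at
    position i by prediction depth k (k = 0 is the ordinary next-token head).
    """
    targets = mtp_targets(tokens, depth)
    per_depth = []
    for k in range(depth + 1):
        nll = [-log_probs[k][i][tgt[k]] for i, tgt in enumerate(targets)]
        per_depth.append(sum(nll) / len(nll))
    main_loss = per_depth[0]
    if depth == 0:
        return main_loss
    return main_loss + lam / depth * sum(per_depth[1:])

# Depth 1: each position predicts the next 2 tokens.
tokens = [5, 6, 7, 8]
print(mtp_targets(tokens, depth=1))  # [[6, 7], [7, 8]]
```

At inference time the extra depths can simply be dropped, or reused to draft tokens for speculative decoding.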

Open Source AI vs Proprietary AI

- Competitive Edge of Open-Source Models: @scaling01 emphasized that DeepSeek-V3 now matches or exceeds proprietary models like GPT-4o and Claude Sonnet-3.5, advocating for the sustainability and innovation driven by open-source AI.

- Licensing and Accessibility: @deepseek_ai highlighted that DeepSeek-V3 is open-source and licensed for commercial use, making it a liberal alternative to closed models and promoting wider accessibility for developers and enterprises.

- Economic Implications: @reach_vb and @DeepLearningAI discussed how open-source AI democratizes access, reduces dependency on high-margin proprietary models, and fosters a more inclusive AI ecosystem.

AI Infrastructure and Compute Resources

- Optimizing GPU Usage: @francoisfleuret and @scaling01 explored how DeepSeek-V3 leverages H800 GPUs efficiently through techniques like Multi-Token Prediction (MTP) and Load Balancing, enhancing compute utilization and training efficiency.

- Hardware Design Improvements: @francoisfleuret suggested hardware enhancements, such as improved FP8 GEMM and better quantization support, to make MoE training more efficient by addressing communication bottlenecks and compute inefficiencies.

- Cost-Effective Scaling Strategies: @reach_vb detailed how DeepSeek-V3 achieved state-of-the-art performance with a fraction of the typical compute resources, emphasizing algorithm-framework-hardware co-design to maintain cost-effectiveness while scaling.
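The load balancing credited above is, per the DeepSeek-V3 paper, an auxiliary-loss-free scheme. Each expert carries a bias that is added to its affinity only when choosing the top-k experts, and after each step that bias is nudged down for overloaded experts and up for underloaded ones. A minimal sketch; comparing each expert's load against the mean and the value of `gamma` are illustrative assumptions.

```python
def select_experts(scores, bias, top_k):
    """Choose experts with bias-adjusted scores, but gate with the unbiased scores."""
    biased = [s + b for s, b in zip(scores, bias)]
    chosen = sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)[:top_k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}

def update_bias(bias, load, gamma):
    """After a step, lower the bias of overloaded experts and raise underloaded ones.

    load[i] is the number of tokens routed to expert i during that step;
    "overloaded" here means above the mean load (an illustrative choice).
    """
    mean = sum(load) / len(load)
    new_bias = []
    for b, tokens_routed in zip(bias, load):
        if tokens_routed > mean:
            new_bias.append(b - gamma)
        elif tokens_routed < mean:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

bias = update_bias([0.0, 0.0, 0.0], load=[10, 2, 6], gamma=0.1)
print(bias)  # [-0.1, 0.1, 0.0]
# A strongly negative bias on expert 0 steers tokens to experts 1 and 2 instead.
print(select_experts([0.9, 0.8, 0.1], bias=[-1.0, 0.0, 0.0], top_k=2))
```

Because the bias never enters the gate weights, balancing does not add an extra loss term that would compete with the language-modeling objective.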

Immigration and AI Talent Policies

- Advocacy for Skilled Immigration: @AndrewYNg and @HamelHusain stressed the importance of high-skill immigration programs like H-1B and O-1 visas for fostering innovation and economic growth within the AI sector.

- Policy Critiques and Recommendations: @bindureddy and @HamelHusain critiqued restrictive visa policies, advocating for easier visa transitions, eliminating job-specific restrictions, and expanding legal immigration to enhance US AI competitiveness and innovation.

- Economic and Moral Arguments: @AndrewYNg highlighted that immigrants create more jobs than they take, framing visa reforms as both an economic imperative and a moral issue to support the American economy.

Memes and Humor

- Fun Interactions and Memes: @HamelHusain humorously remarked on the misunderstandings in AI model performances, bringing a casual and entertaining tone to the technical discourse.

- Playful AI Conversations: @teortaxesTex posted a meme-like comment, injecting humor into the conversation about AI capabilities.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek V3 Release: Technical Innovations and Benchmarks

- DeepSeek-V3 Officially Released (Score: 101, Comments: 22): DeepSeek has released DeepSeek-V3, featuring a Mixture of Experts (MoE) architecture with 671B total parameters and 37B activated parameters, outperforming other open-source models and matching proprietary models like GPT-4o and Claude-3.5-Sonnet. The model shows significant improvements in knowledge-based tasks, long text evaluations, coding, mathematics, and Chinese language capabilities, with a 3x increase in token generation speed. The open-source model supports FP8 weights, with community tools like SGLang and LMDeploy offering native FP8 inference support, and a promotional API pricing period until February 8, 2025.

- DeepSeek-V3's FP8 Training: The model is trained using an FP8 mixed precision training framework, marking a first in validating FP8 training's feasibility on a large-scale model. This approach yielded a stable training process without any irrecoverable loss spikes or rollbacks, prompting curiosity about whether DeepSeek has effectively "cracked" FP8 training.

- Economic and Technical Considerations: Training DeepSeek-V3 cost $5.5 million, highlighting the economic efficiency valued by the quantitative firm behind it. Discussions also touched on potential GPU sanctions influencing the model's design, suggesting it may be optimized for CPU and RAM use, with mentions of running it on Epyc boards.

- Community and Open Source Dynamics: Commenters distinguished between open-source and free software, noting that DeepSeek-V3's release on r/localllama targets the local community rather than broader open-source promotion. Some users humorously noted the model's release on Christmas, likening it to a surprise announcement from a Chinese "Santa."

- Deepseek V3 Chat version weights has been uploaded to Huggingface (Score: 143, Comments: 67): DeepSeek V3 chat version weights are now available on Huggingface, providing access to the latest iteration of this AI model.

- Hardware Requirements and Performance: Discussions highlight the significant hardware requirements for running DeepSeek V3, with mentions of needing 384GB RAM and four RTX 3090s for one-bit quantization. Users discuss various quantization levels and their VRAM requirements, with a humorous tone about needing to sell assets to afford the necessary GPUs.

- Open Source and Competition: There's a lively debate about open-source models outperforming proprietary ones, with references to Elon Musk's xAI and the irony of open-source models potentially surpassing its proprietary Grok 2 and Grok 3 models. The conversation underscores the value of open-source competition in driving technological advancement.

- Model Size and Complexity: The model's size, at 685B parameters (per the model card, 671B for the main model plus 14B for the Multi-Token Prediction module) spread over 163 shards, is a focal point of discussion, with users joking about the impracticality of needing 163 GPUs. This highlights the challenges of handling such a large and complex model in terms of both hardware and software implementation.

- Sonnet3.5 vs v3 (Score: 83, Comments: 19): DeepSeek V3 significantly outperforms Sonnet 3.5 in benchmarks, as illustrated by an animated image showing a dramatic confrontation between characters labeled "Claude" and "deepseek." The scene conveys a dynamic and competitive environment, emphasizing the notable performance gap between the two.

- DeepSeek V3 is significantly more cost-effective, being 57 times cheaper than Sonnet 3.6, and offers nearly unlimited availability on its website, compared to Claude's limited access even for paid users.

- There is some concern about DeepSeek V3's small context window, though its price-to-performance ratio is highly praised, rated as 10/10 by users.

- Users express interest in real-world testing of DeepSeek V3 to verify benchmark results, with a suggestion to include it in lmarena's webdev arena for a more comprehensive comparison against Sonnet.
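The FP8 mixed precision training discussed above relies on fine-grained scaling: the V3 paper describes per-tile (1x128) scales for activations and per-block (128x128) scales for weights, so a single outlier only degrades its own block. The sketch below is a rough pure-Python simulation of that idea, not DeepSeek's kernels: it uses flat 1-D blocks, models only the E4M3 number format (largest finite value 448, 3 mantissa bits, subnormal step 2^-9) and ignores FP8 GEMM accumulation entirely.

```python
import math

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format

def round_to_e4m3(x):
    """Round a float to the nearest E4M3 value (simulated in float64), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    _, exp = math.frexp(x)  # |x| = m * 2**exp with 0.5 <= m < 1
    # 3 explicit mantissa bits -> spacing 2**(exp - 4); below the smallest normal
    # value (2**-6) the spacing stays fixed at 2**-9 (subnormals).
    step = 2.0 ** (max(exp, -5) - 4)
    q = round(x / step) * step
    return max(-E4M3_MAX, min(E4M3_MAX, q))

def quantize_blockwise(values, block_size=128):
    """Scale each block so its max |value| maps to E4M3_MAX, then round to E4M3."""
    quantized, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / E4M3_MAX if amax > 0 else 1.0
        scales.append(scale)
        quantized.extend(round_to_e4m3(v / scale) for v in block)
    return quantized, scales

def dequantize_blockwise(quantized, scales, block_size=128):
    return [q * scales[i // block_size] for i, q in enumerate(quantized)]

# 256 small activations plus one large outlier in the first block.
values = [0.001 * (i % 7 + 1) for i in range(256)]
values[3] = 1000.0
per_block = dequantize_blockwise(*quantize_blockwise(values, 128), 128)
per_tensor = dequantize_blockwise(*quantize_blockwise(values, 256), 256)
# Second block: per-block scaling keeps the small values, while one per-tensor
# scale driven by the outlier flushes some of them to zero.
print(min(per_block[128:]), min(per_tensor[128:]))
```

The per-block scales have to be stored and applied during the matmul, which is part of why the hardware wishlist mentions better quantization support.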

Theme 2. Cost Efficiency of DeepSeek V3 vs Competition

- PSA - Deepseek v3 outperforms Sonnet at 53x cheaper pricing (API rates) (Score: 291, Comments: 113): Deepseek V3 outperforms Sonnet while being 53x cheaper in API rates, a far larger gap than even a 3x price difference, which would already be significant. The author expresses interest in Anthropic and suggests that they might still pay more for superior performance in coding tasks if a model offers a substantial improvement.

- The training cost for Deepseek V3 was $5.6M, utilizing 2,000 H800s over less than two months, highlighting potential efficiencies in LLM training. The model's API pricing is significantly cheaper than Claude Sonnet, with costs of $0.14/1M in and $0.28/1M out compared to $3/1M in and $15/1M out for Sonnet, making it ~5x cheaper than some local builds' electricity costs.

- Deepseek V3's context window is only 64k, which might contribute to its cost-effectiveness, though it still underperforms Claude in some benchmarks. Commenters also discussed the model's parameter size (37B active parameters) and the use of MoE (Mixture of Experts) to reduce inference costs.

- Concerns about data usage and training on API requests were raised, with some skepticism about the model's performance and data practices. There is anticipation for Deepseek V3's availability on platforms like OpenRouter, with mentions of promotions running until February to further reduce costs.

- Deepseek V3 benchmarks are a reminder that Qwen 2.5 72B is the real king and everyone else is joking! (Score: 86, Comments: 46): DeepSeek V3 benchmarks demonstrate Qwen 2.5 72B as a leading model, outperforming others such as Llama-3.1-405B, GPT-4o-0513, and Claude 3.5 across several benchmarks. Notably, DeepSeek-V3 excels in the MATH 500 benchmark with a score of 90.2%, highlighting its superior accuracy.

- Discussion highlights the cost-efficiency of running models like DeepSeek V3 on servers for multiple users instead of local setups with GPUs like 2x3090, emphasizing savings on electricity and hardware. OfficialHashPanda notes the advantages of MoE (Mixture of Experts), which allows for reduced active parameters while increasing capabilities, making it suitable for serving many users.

- Comments explore the hardware requirements and costs, with mentions of using cheap RAM and server CPUs with high memory bandwidth for running large models efficiently. The conversation contrasts the cost of APIs versus local hardware setups, suggesting server-based solutions are more economical for large-scale usage.

- The potential for smaller, efficient models is discussed, with interest in what a DeepSeek V3 Lite could offer. Calcidiol suggests that future "lite" models might match the capabilities of today's larger models by leveraging better training data and techniques, indicating the ongoing evolution and optimization of AI models.
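The "53x" headline depends on which side of the price sheet you look at. A minimal sketch using the per-million-token prices quoted in the thread (presumably DeepSeek's promotional rates at the time, so treat them as a snapshot); the model keys and the 10M-in / 2M-out workload are hypothetical.

```python
# Per-million-token prices quoted in the thread (USD); a snapshot, not current rates.
PRICES = {
    "deepseek-v3": {"input": 0.14, "output": 0.28},
    "claude-3.5-sonnet": {"input": 3.00, "output": 15.00},
}

def request_cost(model, input_tokens, output_tokens):
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

def price_ratio(kind):
    """How many times more Sonnet costs than DeepSeek V3 for 'input' or 'output' tokens."""
    return PRICES["claude-3.5-sonnet"][kind] / PRICES["deepseek-v3"][kind]

# Hypothetical workload: 10M input tokens and 2M output tokens.
ds = request_cost("deepseek-v3", 10_000_000, 2_000_000)
cl = request_cost("claude-3.5-sonnet", 10_000_000, 2_000_000)
print(f"input {price_ratio('input'):.1f}x, output {price_ratio('output'):.1f}x, "
      f"workload {cl / ds:.1f}x")
# prints "input 21.4x, output 53.6x, workload 30.6x"
```

The 53x figure is the output-token ratio; input-heavy workloads such as long-context coding land closer to 21x, which is still a large gap.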

Theme 3. FP8 Training Breakthrough in DeepSeek V3

- Deepseek V3 is officially released (code, paper, benchmark results) (Score: 372, Comments: 96): DeepSeek V3 has been officially released, featuring FP8 training capabilities. The release includes access to the code, a research paper, and benchmark results, marking a significant development in the field of AI training methodologies.

- DeepSeek V3's Performance and Capabilities: Despite its impressive architecture and FP8 training, DeepSeek V3 still trails behind models like Claude Sonnet 3.5 in some benchmarks. However, it is praised for being the strongest open-weight model currently available, with potential for easier self-hosting if the model size is reduced.

- Technical Requirements and Costs: Running DeepSeek V3 requires substantial resources, such as 384GB RAM for a 600B model, and could cost around $10K for a basic setup. Users discuss various hardware configurations, including EPYC servers and the feasibility of CPU-only inference, highlighting the need for extensive RAM and VRAM.

- Innovative Features and Licensing Concerns: The model introduces innovative features like Multi-Token Prediction (MTP) and efficient FP8 mixed precision training, keeping pre-training to 2.664M H800 GPU hours. However, licensing is a concern, as the Deepseek license is seen as highly restrictive for commercial use.

- Wow this maybe probably best open source model ? (Score: 284, Comments: 99): DeepSeek-V3 demonstrates exceptional performance as an open-source model, surpassing its predecessors and competitors like DeepSeek-V2.5, Qwen2.5-72B-Inst, Llama-3.1-405B-Inst, GPT-4o-0513, and Claude-3.5-Sonnet-1022. Notably, it achieves 90.2% accuracy on the MATH 500 benchmark; the post also points to its stable and efficient FP8 training.

- Inference Challenges and Capabilities: Users discussed the difficulty of running DeepSeek-V3 locally due to its 671B parameters, with 4-bit quantization needing at least 336GB of RAM. Despite this, it can achieve around 10 tokens/second in CPU inference on a 512GB dual Epyc system due to its 37B active parameters and mixture of experts architecture with 256 experts.

- Model Comparison and Performance: The model's performance is celebrated as comparable to closed-source models like GPT-4o and Claude-3.5-Sonnet, with some users noting its potential to outperform in goal-oriented tasks despite possibly lagging in instruction adherence compared to Llama.

- Open Weights vs. Open Source: There is confusion and clarification around the model being open weights rather than fully open source, with discussions about the implications and potential for future distillation to a smaller, more manageable size like 72B parameters.
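The RAM figures in these threads follow from simple arithmetic: weight memory is parameters times bits per weight, and decode speed is roughly capped by how fast the active weights can be streamed from memory for each token. A minimal sketch; it counts weights only (no KV cache, activations or runtime overhead), real quantized checkpoints are often somewhat larger because some tensors stay at higher precision, and the 400 GB/s bandwidth is a hypothetical figure, not a measured Epyc number.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    # Weights only: ignores KV cache, activations and runtime overhead.
    return params_billion * bits_per_weight / 8

def decode_tokens_per_sec_upper_bound(active_params_billion, bits_per_weight,
                                      mem_bandwidth_gb_s):
    # Decoding is usually memory-bandwidth bound: each generated token reads the
    # active weights at least once, so bandwidth / bytes-per-token is a ceiling.
    bytes_per_token_gb = weight_memory_gb(active_params_billion, bits_per_weight)
    return mem_bandwidth_gb_s / bytes_per_token_gb

print(weight_memory_gb(671, 4))  # 335.5 GB for 4-bit weights alone
print(weight_memory_gb(671, 8))  # 671.0 GB at 8 bits (e.g. native FP8 weights)
# Hypothetical 400 GB/s effective bandwidth; 37B active params at 4 bits.
print(decode_tokens_per_sec_upper_bound(37, 4, 400))
```

Only the 37B active parameters enter the per-token bandwidth budget, which is why a MoE this large can reach usable CPU decode speeds while still needing hundreds of GB of RAM to hold all the experts.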

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. OpenAI O1 Model Impacting Financial Markets

- A REAL use-case of OpenAI o1 in trading and investing (Score: 232, Comments: 207): The OpenAI O1 model demonstrates significant advancements in financial research and trading strategies, outperforming traditional models by offering precise, data-driven solutions and supporting function-calling for generating JSON objects. Notably, the model's ability to perform accurate financial analysis, such as identifying SPY's 5% drops over 7-day periods since 2000, enables the creation of sophisticated trading strategies that can be deployed without coding. The model's enhanced capabilities, including vision API and improved reasoning, make it accessible for complex financial tasks, potentially transforming finance and Wall Street by democratizing algorithmic trading and research.

- Commenters highlighted the challenges and skepticism around using AI for financial markets, emphasizing issues like data leakage and the efficient market hypothesis. Many pointed out that historical backtesting doesn't guarantee future success due to market adaptability and randomness, suggesting that these models may not perform well in real-time scenarios.

- Behavioral aspects of investing were discussed, with some users noting that emotional responses to market fluctuations, such as selling during a drawdown, can undermine strategies. The importance of understanding risk-reward dynamics and avoiding overconfidence in AI-generated strategies was also stressed.

- A few users shared personal experiences and projects, like a Vector Stock Market bot using various LLMs, but acknowledged the limitations and need for further testing. The general consensus was that AI might democratize access to tools but won't necessarily lead to consistent outperformance due to inherent market complexities.
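The SPY example ("5% drops over 7-day periods since 2000") is the kind of scan worth checking by hand before trusting a model's answer. A minimal sketch over a plain list of daily closing prices (loading the data is out of scope); treating the window as 7 rows of trading days, and grouping overlapping hits into episodes, are assumptions.

```python
def drop_windows(closes, window=7, threshold=-0.05):
    """Return every start index i where closes[i + window] is at least -threshold
    (5% by default) below closes[i].

    window counts rows, e.g. trading days (an assumption: the post may have meant
    calendar days, or the worst drop anywhere inside the window).
    """
    hits = []
    for i in range(len(closes) - window):
        change = closes[i + window] / closes[i] - 1.0
        if change <= threshold:
            hits.append(i)
    return hits

def count_episodes(hits, window=7):
    # Overlapping windows describe the same sell-off; count a new episode only
    # when a hit starts at least `window` rows after the previous episode began.
    episodes, last_start = 0, None
    for i in hits:
        if last_start is None or i - last_start >= window:
            episodes += 1
            last_start = i
    return episodes

closes = [100] * 7 + [94, 96, 101]
print(drop_windows(closes))  # [0]
```

Counting overlapping windows as separate events inflates the sample, one of several ways such a backtest can look better than it is.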

Theme 2. Debates Surrounding O1 Pro Mode's Usefulness

- o1 pro mode is pathetic. (Score: 177, Comments: 133): The post criticizes OpenAI's O1 Pro mode, describing it as overpriced and inefficient for programming tasks compared to 4o, due to its slow output generation. The author, self-identified as an AI amateur, argues that the models are overfit for benchmarks and not practical for real-world applications, suggesting that the "reasoning models" are primarily a marketing tactic. The only practical use noted is in alignment tasks, where the model assesses user intent.

- The o1 Pro model receives mixed reviews; some users find it invaluable for complex programming tasks, citing its ability to handle large codebases and produce accurate results on the first try, while others criticize its slow response time and dated knowledge cutoff. Users like ChronoPsyche and JohnnyTheBoneless praise its capability in handling complex tasks, whereas others, like epistemole, argue that unlimited rate limits are the real advantage, not the model's performance.

- Several users emphasize the importance of detailed prompts for maximizing o1 Pro's potential, suggesting that providing a comprehensive document or using iterative methods with large context windows can yield better results compared to feeding small code snippets. Pillars-In-The-Trees compares effective prompting to instructing a graduate student, highlighting the model's proficiency in logical tasks.

- Discussions reveal that o1 Pro excels in certain programming languages, with users like NootropicDiary mentioning its superiority in Rust over other models like Claude, while others find Claude more effective for different languages such as TypeScript. This reinforces the notion that the model's effectiveness can vary significantly depending on the task and language used.

Theme 3. OpenAI's Latest Developments and Tools Overview

- 12 Days of OpenAi - a comprehensive summary. (Score: 227, Comments: 25): The "12 Days of OpenAI" grid captures daily highlights between December 5th and December 20th, featuring updates like the ChatGPT Pro Plan on December 5th and Reinforcement Fine-Tuning on December 6th. The series culminates with o3 and o3-mini advancements on December 20th, indicating progress towards AGI.

- Reinforcement Fine-Tuning on Day 2 is highlighted as a significant development, with potential to substantially improve systems based on minimal examples. While the absence of a formal paper leaves some uncertainty, its implications for agent development are considered promising, especially looking towards 2025.

- Discussion around Canvas UX indicates that its recent update was minor, with some users expressing dissatisfaction over its limitation to approximately 200 lines. Despite being a past introduction, it remains a point of contention among users.

- There is curiosity about the availability of a Windows app following the MacOS app update, with a humorous suggestion that it may coincide with Microsoft building OpenAI a nuclear reactor.

Theme 4. ChatGPT Downtime and User Impact

- CHAT GPT IS DOWN. (Score: 366, Comments: 206): ChatGPT experienced a significant service disruption, with a peak of 5,315 outage reports at 6:00 PM. The graph highlights a sharp increase in outage reports after a period of minimal activity, indicating widespread user impact.

- Users express frustration and humor over the ChatGPT outage, with some joking about the reliance on AI for tasks like homework and productivity. Street-Inspectors humorously notes the irony of asking ChatGPT why it isn't working.

- There is a reference to OpenAI's status page and Downdetector as sources for checking outage status, with a link provided by bashbang showing the major outage affecting ChatGPT, API, and Sora.

- Kenshiken and BuckyBoy3855 mention an "upstream provider" as the cause of the issue, highlighting the technical aspect of the outage, while HappinessKitty speculates about server capacity issues.

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. DeepSeek V3 Takes Center Stage

- Massive Mixed-Precision Boost: DeepSeek unveiled DeepSeek V3, a 685B-parameter model trained with FP8, claiming 2 orders of magnitude cost savings. It runs at about 60 tokens/second, was trained on 14.8T tokens, and many regard it as a strong open competitor to GPT-4o.

- API Spread and Triple Usage: OpenRouter reported DeepSeek V3 usage tripled post-release, rivaling pricier incumbents. Community members praised its robust coding performance but flagged slow responses and large VRAM demands.

- MoE Structure Stirs Hype: DeepSeek’s Mixture-of-Experts architecture offers clearer scaling pathways and drastically lower training costs. Engineers speculate about future open-source expansions and 320-GPU HPC clusters for stable inference.

Theme 2. Code Editors & IDE Woes

- Windsurf & Cascade Blues: Windsurf’s Cascade Base model earned criticism for mishandling coding prompts and devouring credits without results. Engineers proposed global_rules workarounds, yet many remain frustrated by UI lags and unresponsive queries.

- Cursor IDE's Token Trials: Cursor IDE struggles with limited context handling and performance dips for large code tasks. Users contrasted it with DeepSeek and Cline, praising their extended context windows for more robust code generation.

- Bolt Token Tensions: Stackblitz (Bolt.new) users burned up to 1.5 million tokens in repetitive code requests. Many demanded direct code edits over example snippets and turned to GitHub feedback for subscription-tier improvements.

Theme 3. AI Powers Creative & Collaborative Work

- Podcasting Meets Real-Time Summaries: NotebookLM users integrated Google News into AI-driven podcasts, generating comedic bits alongside current events. Some shared short 15-minute TTRPG recaps, highlighting AI’s ability to keep hobbyists swiftly informed.

- ERP and Roleplay: Enthusiasts wrote advanced prompts for immersive tabletop campaigns, ensuring continuity in complex narratives. They cited chunking and retrieval-augmented generation (RAG) as vital for stable long-form storytelling.

- Voice to Voice & Music Generation: AI engineers showcased voice-to-voice chat apps and music creation from text prompts. They invited collaborators to refine DNN-VAD pipelines, bridging audio conversion with generative text models in fun new workflows.

Theme 4. Retrieval, Fine-Tuning, and HPC Upscaling

- GitIngest & GitDiagram: Devs mapped massive codebases to text and diagrams for RAG experiments. This approach streamlined LLM training and code ingestion, letting HPC clusters process big repos more effectively.

- LlamaIndex & DocumentContextExtractor: Users plugged in batch processing to slice costs by 50% and handle tasks off-hours. Combining chunk splitting, local embeddings, and optional open-source RLHF tools improved accuracy on real-world data.

- Fine-Tuning VLMs & HPC MLOps: Researchers tackled LLaVA, Qwen-VL, and HPC frameworks like Guild AI to manage large-scale model training. They noted HPC’s overhead and debated rolling their own minimal ops solutions to avoid SaaS pitfalls.

Theme 5. Key Tech & Performance Fixes

- TMA Beats cp.async: HPC folks explained how TMA outperforms cp.async on H100 for GEMM, allowing bulk scheduling and lower register usage. They praised structured sparse kernels in CUTLASS for further gains, especially with FP8.

- Mojo & Modular Gains: Users debugged StringRef crashes and uncovered missing length checks in memcpy calls. They praised new merchandise and debated MAX vs XLA compile times, eyeing improvements for HPC code.

- Tinygrad vs. PyTorch Speed Race: Tinygrad trailed PyTorch on CUDA with 800ms vs. 17ms forward passes, but devs pinned hopes on beam search caching and jitting. They merged PR fixes for input-creation loops and hammered out matching-engine bounties to reduce overhead.

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

- Windsurf's Bold Industry Breakthrough: A new video shows engineers detailing how Windsurf deliberately defies typical development approaches, sharing insights on workflow changes and design choices (Windsurf's Twitter).

- They also sent out a holiday greeting, emphasizing community spirit and sparking conversation about the fresh perspective behind these bold moves.

- Cascade Base Model Blues: Users criticized the Cascade Base model for lacking accuracy in complex coding tasks and often failing to follow simple commands, especially when compared to Claude 3.5 Sonnet.

- Though some shared partial successes with global rules, others found minimal improvement and posted their frustrations alongside links like awesome-windsurfrules.

- Remote-Host Hiccups & Lag: People connecting via SSH Remote Hosts noticed that Windsurf displayed significant delays, making real-time edits confusing and untracked until Cascade updated.

- They reported that commands would still execute properly, but the delayed interface created a disjointed workflow that many found disruptive.

- Credit Drains & Unresponsive Queries: Users felt shortchanged when unresponsive requests devoured tokens without delivering functional outputs, leading to repeated support contact via Windsurf Editor Support.

- Many voiced concern over these credit-consuming failures, suggesting they undermined confidence in Windsurf's reliability for extensive codebases.

Cursor IDE Discord

- DeepSeek V3 Dominates Discourse: Developers praised DeepSeek V3 for code generation and analysis, claiming it rivals Sonnet 3.5 and offers faster outputs, as seen in this tweet from DeepSeek.

- Community members discussed integration with Cursor IDE, referencing the DeepSeek Platform for an API-compatible approach, and shared interest in lower-cost usage.

- Cursor IDE's Token Trials: Many reported that Cursor IDE's limited context window reduces performance on large code-generation tasks; the Cursor site offers downloads for various platforms.

- Users compared how DeepSeek and Cline handle extended context windows more efficiently, referencing the Cursor Forum for continuing feedback about better token utilization.

- Next.js UI Woes Trip Up Designers: Creators battled UI issues in Next.js, complaining that code generation from Claude sometimes misaligned elements and complicated styling, even after using libraries like shadcn.

- They recommended embedding relevant docs into context for better design outcomes and pointed to Uiverse for quick UI components.

- OpenAI's Reliability Rollercoaster: Some complained about OpenAI's recent performance issues, citing slower response times and reduced availability, while alternative models offered steadier results at lower cost.

- They advised testing multiple AI systems, referencing the DeepSeek API Docs for compatibility, while others simply toggled between providers to keep tasks moving forward.
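The "API-compatible approach" works because DeepSeek exposes an OpenAI-style chat-completions endpoint, so switching providers is mostly a matter of changing the base URL, key and model name. A minimal stdlib sketch that builds (but does not send) such a request; the base URL `https://api.deepseek.com` and model name `deepseek-chat` are assumptions to verify against the DeepSeek API docs, and the key is a placeholder.

```python
import json
import urllib.request

# Assumed endpoint and model name for DeepSeek's OpenAI-compatible API;
# check the DeepSeek API docs before relying on them.
DEEPSEEK_BASE_URL = "https://api.deepseek.com"
DEEPSEEK_MODEL = "deepseek-chat"

def build_chat_request(api_key, prompt, base_url=DEEPSEEK_BASE_URL, model=DEEPSEEK_MODEL):
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_chat_request("sk-placeholder", "Explain this stack trace")
print(req.full_url, req.get_method())
# urllib.request.urlopen(req) would actually send it (requires a real key).
```

Keeping `base_url` and `model` as parameters is what makes "toggling between providers" cheap: any other OpenAI-compatible service can reuse the same payload shape.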

aider (Paul Gauthier) Discord

- Aider v0.70.0 Revs Up Self-Code Stats: Aider v0.70.0 introduced analytics opt-in, new error handling for interactive commands, and expanded model support, described in detail within Aider Release History.

- Community members highlighted 74% self-contributed code from Aider, praising the tool's improved installation, watch files functionality, and Git name handling as major upgrades.

- DeepSeek V3 Delivers 3x Speed Gains: DeepSeek V3 now processes 60 tokens/second (3x faster than V2), showing stronger coding performance than Sonnet 3.5 and featuring a 64k token context limit, as seen in this tweet.

- Community voiced excitement about DeepSeek V3 outpacing Claude in some tasks, although slow responses and context management remain consistent points of discussion.

- BigCodeBench Spots LLM Strengths & Shortfalls: The BigCodeBench Leaderboard evaluates LLMs on real-world programming tasks, and the accompanying arXiv paper describes the methodology in depth.

- Contributors compared DeepSeek and O1 scores, noting how these metrics helped clarify each model’s code-generation capabilities under practical conditions.

- GitDiagram & GitIngest Make Repos Transparent: GitDiagram converts GitHub repositories into interactive diagrams, while GitIngest renders any Git repo as plain text for hassle-free code ingestion.

- Users simply replace 'hub' with 'diagram' or 'ingest' in a GitHub URL to instantly visualize a repository's structure or prepare it for any LLM.
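The URL trick turns `github.com/owner/repo` into `gitingest.com/owner/repo` or `gitdiagram.com/owner/repo`. A minimal sketch that rewrites only the hostname, so a repository whose name happens to contain "hub" is left intact; the function name is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

def rewrite_github_url(url, service):
    """Turn https://github.com/owner/repo into https://git<service>.com/owner/repo.

    service is "ingest" (GitIngest) or "diagram" (GitDiagram).
    """
    parts = urlsplit(url)
    if parts.hostname not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {url}")
    # Swap only the host; path, query and fragment are preserved as-is.
    return urlunsplit(parts._replace(netloc=f"git{service}.com"))

print(rewrite_github_url("https://github.com/owner/hubble", "ingest"))
# prints https://gitingest.com/owner/hubble
```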

Nous Research AI Discord

- DeepSeek V3’s GPU Gobbler Gains: DeepSeek V3 launched with 685 billion parameters and demands about 320 GPUs such as H100s for optimal performance, as shown in the official code repository.

- Discussions emphasized its large-scale VRAM requirements for stable inference, with members calling it one of the largest open-weight models available.

- Differentiable Cache Speeds Reasoning: Research on Differentiable Cache Augmentation reveals a method for pairing a frozen LLM with an offline coprocessor that manipulates the key-value (kv) cache, as described in this paper.

- The approach cuts perplexity on reasoning tasks, with members observing it maintains LLM functionality even if the coprocessor goes offline.

- Text-to-Video Tussle: Hunyuan vs LTX: Users compared Hunyuan and LTX text-to-video models for performance, emphasizing VRAM requirements for smoother rendering.

- They showed interest in T2V developments, suggesting resource-intensive tasks might benefit from pipeline adjustments.

- URL Moderation API Puzzle: An AI engineer struggled to build a URL moderation API that classifies unsafe sites accurately, highlighting issues with Llama’s structured output and OpenAI’s frequent denials.

- Community feedback noted the importance of specialized domain handling, as repeated attempts produced inconsistent or partial results.

- Inference Cost Conundrum: Participants debated the cost structure for deploying large AI models, questioning whether promotional pricing could endure high-usage demand.

- They suggested continuous load might balance operational expenses, keeping high-performance AI services feasible despite cost concerns.

OpenRouter (Alex Atallah) Discord

- Web Searching LLMs & Price Plunge: OpenRouter introduced Web Search for any LLM, currently free to use, offering timely references for user queries, as shown in this demonstration. They also slashed prices for multiple models, including a 12% cut on qwen-2.5 and a 31% cut on hermes-3-llama-3.1-70b.

- Community members labeled the cuts significant and welcomed these changes, particularly for high-tier models. Some anticipate an even broader shift in cost structures across the board.

- DeepSeek v3 Triples Usage: DeepSeek v3 soared in popularity on OpenRouter, reportedly tripling usage since release and matching bigger models in some metrics, as per this post. It competes with Sonnet and GPT-4o at a lower price, fueling discussions that China has caught up in AI.

- Users in the general channel also shared mixed reviews about its performance for coding tasks and poetry. Some praised its creative outputs, while others flagged the results as inconsistent.

- Endpoints & Chat Woes: OpenRouter launched a beta Endpoints API to let devs pull model details, referencing an example usage here. Some users faced OpenRouter Chat lag with large conversation histories, calling for more responsive handling of big data sets.

- The community noted no direct support for batching requests, emphasizing timely GPU usage. Meanwhile, certain 'no endpoints found' errors stemmed from misconfigured API settings, highlighting the importance of correct setup.

- 3D Game Wizardry with Words: A newly shown tool promises 3D game creation from simple text prompts, improving on earlier attempts with o1 and o1-preview. The approach hints at future voxel engine integration for more complex shapes, as teased in this project link.

- Enthusiasts see it as a leap from prior GPT-based attempts, with features that appear refined for building entire interactive experiences. Some in the channel believe it could transform indie game development pipelines if scaled.

- AI Chat Terminal: Agents in Action: The AI Chat Terminal (ACT) merges agent features with codebase interactions, letting users toggle between providers like OpenAI and Anthropic. It introduces an Agent Mode to automate tasks and aims to streamline coding sessions, as shown in this repo.

- Developers in the app-showcase channel highlighted the potential for flexible multi-model usage within a single terminal. Many praised the convenience for building scripts that transcend typical chat constraints.

LM Studio Discord

- LM Studio Calms Large Models: Build 0.3.5 eased prior bugs in GGUF model loading and tackled session handling for MLX, as referenced in Issue #63.

- Users noted that QVQ 72B and Qwentile2.5-32B run better now, though some memory leaks remain under investigation.

- RPG Fans Retain Narrative Flow: Enthusiasts used models like Mistral and Qwen to manage long-running tabletop storylines, with prompts inspired by ChatGPT TTRPG guides.

- They explored fine-tuning and RAG techniques for better continuity, citing separate chunking as a strategy to keep the lore consistent.
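The chunking idea can be sketched as an overlapping word window, so that details near a boundary appear in two adjacent chunks and retrieval is less likely to cut a lore thread in half. The window and overlap sizes are illustrative assumptions, not settings reported in the discussion.

```python
from typing import List


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    chunks: List[str] = []
    step = size - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```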

- X99 Systems Keep Pace: Users running Xeon E5 v4 on X99 motherboards reported solid performance for model inference, even with older gear.

- A dual RTX 2060 setup showcased stable handling of bigger models, debunking the urgent need for new hardware.

- Multi-GPU Gains and LoRAs Hype: Participants observed low GPU usage (around 30%) and highlighted that extra VRAM doesn’t always provide a speed boost unless paired with enhancements like NVLink.

- They also speculated on soon-to-arrive video-generation LoRAs, though some doubted results when training on very few still images.

Stability.ai (Stable Diffusion) Discord

- Prompt Precision Powers SD: Many participants found that more descriptive prompts yield superior Stable Diffusion outputs, underscoring the advantage of thorough instructions. They tested various models, highlighting differences in style and resource usage.

- They emphasized the need for robust prompting to command better image control and suggested experimentation with models to refine the results.

- ComfyUI’s Conquerable Complexity: Contributors operated ComfyUI by symlinking models and referencing the Stability Matrix for simpler management, though many found the learning curve steep. They also shared that SwarmUI provided a more accessible interface for novices.

- Users compared less-intimidating frontends like SwarmUI to standard ComfyUI, reflecting on how these tools streamline generative art without sacrificing advanced features.

- Video Generation Gains Steam: Enthusiasts experimented with img2video models in ComfyUI, contrasting them with Veo2 and Flux for efficiency. They discovered that LTXVideo Q8 works well for setups with 8GB of VRAM.

- They remain eager to test new video generation approaches that extend resource-friendly possibilities, continuing to push boundaries on lower hardware specs.

- NSFW LoRA, Some Laughs Ensued: A playful exchange emerged over NSFW filters in LoRA, factoring in how censorship toggles may be managed. Participants wanted open discussions on each setting’s role in controlling adult content.

- They emphasized that standard LoRA constraints occasionally hamper legitimate creative tasks, prompting calls for clearer documentation on censorship toggles.

OpenAI Discord

- OpenAI Outage Incites New Options: Members encountered ChatGPT downtime, referencing the OpenAI status page and weighing alternatives like DeepSeek and Claude.

- The outage felt less severe than previous incidents, but it drove renewed interest in exploring different models.

- DeepSeek V3 Dashes Ahead: Members noted DeepSeek V3 boasts a 64k context limit, outperforming GPT-4 in speed and coding consistency.

- Enthusiasts celebrated its reliable coding support, while some pointed out missing features like direct file handling and reliance on OCR.

- o3 Looms on the Horizon: A late-January release for o3-mini was mentioned, raising hopes for the full o3 model soon after.

- Concrete details remain scarce, fueling speculation over its performance and possible new features.

- Acronyms Annoy LLMs: Acronym recognition sparked debate, revealing how certain models struggle to expand domain-specific abbreviations properly.

- Techniques like custom dictionaries or refined prompts were proposed to keep expansions consistent.
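The custom-dictionary approach can be sketched as a preprocessing step that annotates known acronyms before the text reaches the model, so the expansion no longer depends on the model guessing it. The glossary contents are hypothetical, and annotating only the first occurrence is one design choice among several.

```python
import re
from typing import Dict

# Hypothetical domain glossary; in practice this would be curated per domain.
GLOSSARY: Dict[str, str] = {
    "MoE": "Mixture of Experts",
    "RAG": "retrieval-augmented generation",
}


def expand_acronyms(text: str, glossary: Dict[str, str]) -> str:
    """Annotate the first occurrence of each known acronym as 'ACRONYM (expansion)'."""
    if not glossary:
        return text
    # Longest keys first so overlapping acronyms prefer the most specific match.
    keys = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, keys)) + r")\b")
    seen = set()

    def replace(match: re.Match) -> str:
        word = match.group(1)
        if word in seen:
            return word  # later occurrences are left as-is
        seen.add(word)
        return f"{word} ({glossary[word]})"

    return pattern.sub(replace, text)
```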

- Canvas & ESLint Clashes: Users encountered a canvas window that vanishes seconds after opening, halting their editing workflow.

- Others wrestled with ESLint settings under O1 Pro, aiming for a tidy config that suits advanced development needs.

Stackblitz (Bolt.new) Discord

- ProductPAPI in the Pipeline: A member teased ProductPAPI, an app developed by Gabe to simplify tasks, but withheld details like launch date, core functionality, and API structure.

- Another user said 'we need more insights to gauge its potential' and suggested referencing community feedback threads for any planned expansions.

- Anthropic's Concise Conundrum: Members reported a drop in quality when using Anthropic's concise mode on Bolt, highlighting scalability concerns at peak usage times.

- One user theorized a 'universal scaling strain' across providers, referencing the correlation when a Claude demand warning also triggered slower performance on Bolt.

- Direct Code Tweaks or Bust: Frustrated devs noted they keep receiving 'example code' instead of direct edits, urging others to specify 'please make the changes directly' in their prompts.

- They tested adding clarifying instructions in a single prompt, linking to best practices for prompt phrasing and confirming better code modifications.

- Token Tensions in Bolt: Users flagged high token consumption—some claiming to burn through 1.5 million tokens while rewriting the same code requests, citing ignored prompts and unexpected changes.

- They posted feedback on GitHub and Bolters.io to propose subscription-tier updates, with many exploring free coding on StackBlitz once token caps are hit.

- Rideshare Ambitions with Bolt: A newcomer asked if they could build a nationwide Rideshare App with Bolt, referencing their existing airport rides portal, seeking scalability and multi-region support.

- Community members cheered the idea, calling it 'a bold extension' and citing Bolters.io community guides for step-by-step checklists on expansions.

Unsloth AI (Daniel Han) Discord

- QVQ-72B Debuts Big Scores: QVQ-72B arrived in both 4-bit and 16-bit variants, hitting 70.3% on the MMMU benchmark and positioning itself as a solid visual reasoning contender (Qwen/QVQ-72B-Preview).

- Community members emphasized data formatting and careful training steps, pointing to Unsloth Documentation for model best practices.

- DeepSeek V3 Fuels MoE Buzz: The DeepSeek V3 model, featuring a Mixture of Experts configuration, drew attention for being 50x cheaper than Sonnet (deepseek-ai/DeepSeek-V3-Base).

- Some speculated about OpenAI and Anthropic employing similar techniques, sparking technical discussions on scaling and cost efficiency.

- Llama 3.2 Hits Snags with Data Mismatch: Several users struggled to fine-tune Llama 3.2 on text-only JSONL datasets, encountering unexpected checks for image data despite disabling vision layers.

- Others reported patchy performance, attributing failures to input quality over quantity, while referencing potential solutions in Unsloth's peft_utils.py.

- GGUF and CPU Load Hurdles for Trained Models: A few community members wrestled with deteriorating performance after converting Llama 3.2 models to GGUF via llama.cpp, citing prompt format mismatches.

- Others complained of strange outputs on local hardware, highlighting the need to quantize carefully and consult Unsloth Documentation for proper CPU-only setups.

- Stella Overlooked as Mixed Bread Gains Fans: A user questioned why Stella seldom gets recommended, and Mrdragonfox acknowledged not using it, suggesting it lacks broad community traction.

- Meanwhile, mixed bread models see daily use and strong support, with folks insisting benchmarking and finetuning are vital for real-world outcomes.

Perplexity AI Discord

- Gemini Gains Ground & 'o1' Rumors: Some users claimed that Gemini's Deep Research Mode outperforms Claude 3.5 Sonnet and GPT-4o in context handling and overall utility.

- Speculation arose about a new model named 'o1', prompting questions about whether Perplexity might integrate it for wider AI functionality.

- OpenRouter Embraces Perplexity's Models: After purchasing credits, a user discovered OpenRouter provides direct access to Perplexity for question answering and inference.

- Despite finding this option, the user chose to stick with another provider, highlighting a vibrant discussion about OpenRouter expansions.

- DeepSeek-V3 Stirs Strong Impressions: A mention of DeepSeek-V3 indicated it's available via a web interface and an API, prompting interest in its capabilities.

- Testers described its performance as 'too strong' and hoped pricing would remain stable, comparing it positively to other installations.

- India's LeCun-Inspired Leap: A newly introduced AI model from India references Yann LeCun's ideas to enhance human-like reasoning and ethics, stirring conversation.

- Members expressed optimism about its implications, suggesting it could reshape model training and demonstrate the power of applied AI.

Latent Space Discord

- DeepSeek V3 Storms the Stage: Chinese group DeepSeek introduced a 685B-parameter model, claiming a total training cost of $5.5M with 2.6 million H800 hours and roughly 60 tokens/second throughput.

- Tweets like this one showcase benchmark results that beat models trained on far larger budgets, with some calling it a new bar for cost efficiency.
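The headline figures can be sanity-checked with simple arithmetic. The $2 per H800 GPU-hour rental rate is an assumption (to our understanding, it is the rate the DeepSeek-V3 report itself uses), and the 2,048-GPU cluster size is taken from DeepSeek's reported setup. At that rate, 2.6 million GPU-hours comes to about $5.2M, and spread over 2,048 GPUs it is roughly 53 days; the remaining gap to the $5.5M headline presumably comes from GPU-hours outside the 2.6 million figure or a different rate.

```python
def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Compute cost as GPU-hours times an assumed rental rate."""
    return gpu_hours * usd_per_gpu_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days of training if the GPU-hours are spread evenly over `num_gpus`."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    hours = 2.6e6  # H800 GPU-hours quoted in the summary
    print(f"cost: ${training_cost_usd(hours, 2.0) / 1e6:.1f}M")  # assumed $2/GPU-hour
    print(f"days on 2,048 GPUs: {wall_clock_days(hours, 2048):.0f}")
```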

- ChatGPT Eyes 'Infinite Memory': Rumors state ChatGPT may soon access all past chats, potentially changing how users rely on extensive conversational context.

- A tweet from Mark Kretschmann suggests this feature is imminent, prompting debates on deeper and more continuous interaction.

- Reinforcement Training Ramps LLM Reasoning: A shared YouTube video showed advanced RL approaches for refining large language models’ logic without extra overhead.

- Contributors cited verifier rewards and model-based RMs (e.g., @nrehiew_), suggesting a more structured training method.

- Anduril Partners with OpenAI: A tweet from Anduril Industries revealed a collaboration merging OpenAI models with Anduril’s defense systems.

- They aim to elevate AI-driven national security tech, causing fresh debates on ethical and practical boundaries in the military domain.

- 2024 & 2025: Synthetic Data, Agents, and Summits: Graham Neubig offered a keynote on agents in 2024, while Loubna Ben Allal reviewed papers on Synthetic Data and Smol Models.

- Meanwhile, the AI Engineer Summit is slated for 2025 in NYC, with an events calendar available for those following industry gatherings.

Interconnects (Nathan Lambert) Discord

- DeepSeek’s Daring V3 Debut: DeepSeek unveiled V3 trained on 14.8 trillion tokens, boasting 60 tokens/second (3x faster than V2) and fully open-source on Hugging Face.

- Discussion highlighted Multi-Token Prediction, new reward modeling, and questions on critique efficiency, with members noting it outperforms many open-source models.
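Multi-token prediction adds extra training targets: at each position the model is also asked to predict tokens beyond the next one. The sketch only builds the target windows and combines the losses; the window depth of 2 (the next token plus one more) and the weight `lam` are illustrative, and adding a weighted average of the extra-depth losses is how we understand DeepSeek-V3 combines them, not a verified reproduction of its implementation.

```python
from typing import List, Sequence, Tuple


def mtp_targets(tokens: Sequence[int], depth: int = 2) -> List[Tuple[int, ...]]:
    """For each position i, return the `depth` tokens that follow it (t+1 .. t+depth).

    Position i sees the prefix tokens[: i + 1]; positions near the end that lack a
    full window of future tokens are dropped.
    """
    return [tuple(tokens[i + 1 : i + 1 + depth]) for i in range(len(tokens) - depth)]


def combined_mtp_loss(main_loss: float, extra_losses: Sequence[float], lam: float) -> float:
    """Main next-token loss plus a weighted average of the extra-depth losses."""
    if not extra_losses:
        return main_loss
    return main_loss + lam * sum(extra_losses) / len(extra_losses)
```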

- Magnitude 685B: DeepSeek’s Next Big Bet: Rumors swirl about a 685B LLM from DeepSeek possibly dropping on Christmas Day, supposedly over 700GB with no listed license yet, as hinted by a tweet.

- Community members joked about overshadowing existing solutions and expressed curiosity about open-source viability without any clear license noted in the repo.

- MCTS Magic for Better Reasoning: A recent paper (arXiv:2405.00451) showcases Monte Carlo Tree Search (MCTS) plus iterative preference learning to boost reasoning in LLMs.

- It integrates outcome validation and Direct Preference Optimization for on-policy refinements, tested on arithmetic and commonsense tasks.
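Direct Preference Optimization trains directly on preference pairs, such as the preferred and rejected reasoning paths a tree search can produce. Below is the standard per-pair DPO loss, computed from summed log-probabilities under the policy being trained and a frozen reference model; `beta` is an illustrative value and the test inputs are made-up numbers, not values from the paper.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """-log sigmoid(beta * margin), where margin compares policy/reference log-ratios."""
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(z)) == softplus(-z)
    return softplus(-beta * margin)
```

When the policy matches the reference, the margin is zero and the loss is log 2; raising the chosen response's log-probability relative to the reference lowers it.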

- DPO vs PPO: The Rivalry Rages: A CMU RL seminar explored DPO vs PPO optimizations for LLMs, hinting at robust ways to handle clip/delta constraints and PRM biases in practice.

- Attendees debated if DPO outperforms PPO, with a paper heading to ICML 2024 and a YouTube session fueling further curiosity.
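For comparison, PPO's clip constraint limits how far one update can move the policy by clipping the probability ratio between the new and old policy. A per-sample sketch of the standard clipped surrogate objective (to be maximized); `eps = 0.2` is a common illustrative choice, not a value from the seminar.

```python
def ppo_clipped_objective(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """min(r * A, clip(r, 1 - eps, 1 + eps) * A) for one sample."""
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped_ratio * advantage)
```

With a positive advantage, pushing the ratio above 1 + eps earns nothing extra; with a negative advantage, pushing it below 1 - eps likewise earns nothing, while the minimum keeps the full penalty when the ratio moves the wrong way.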

GPU MODE Discord

- DeepSeek-V3 Deals a Double Punch: The DeepSeek-V3 documentation highlights large-scale FP8 mixed precision training, claiming a cost reduction of 2 orders of magnitude.

- Community members debated the project's funding and quality tradeoffs, but recognized the potential for big savings in HPC workloads.

- Triton Trips on FP8 to BF16: A casting issue from fp8 to bf16 on SM89 leads to ptx errors, covered in Triton’s GitHub Issue #5491.

- Developers proposed using `.to(tl.float32).to(tl.bfloat16)` plus a dummy op to prevent fusion while addressing the ptx error.

- TMA Triumph Over cp.async: Users explained that TMA outperforms cp.async for GEMM on Hopper (H100), since the H100's higher FLOPs put more pressure on moving data into shared memory efficiently.

- They highlighted async support, bulk scheduling, and bounds checks as crucial features that reduce register usage in HPC kernels.

- No-Backprop Approach Sparks 128 Forward Passes: A new training method claims it can avoid backprop or momentum by taking 128 forward passes to estimate the gradient, with low cosine similarity to the true gradient.

- Though it promises 97% energy savings, many engineers worry about its practicality beyond small demonstration setups.
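The method's exact details were not given, so the sketch below uses a generic zeroth-order (SPSA-style) estimator instead: it spends a budget of 128 forward passes on random-direction central differences and never calls backprop. It is meant only to show why such an estimate can point in the right general direction yet have low cosine similarity to the true gradient in high dimensions; the test function and sizes are illustrative.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def zeroth_order_grad(f: Callable[[Vector], float], x: Vector,
                      num_forward_passes: int = 128, eps: float = 1e-3,
                      seed: int = 0) -> Vector:
    """Estimate grad f(x) from forward passes only (no backprop).

    Each random Gaussian direction u costs two passes, f(x + eps*u) and f(x - eps*u);
    their central difference approximates the directional derivative g . u, and
    averaging (g . u) * u over directions gives an estimate of g.
    """
    rng = random.Random(seed)
    num_dirs = num_forward_passes // 2
    grad = [0.0] * len(x)
    for _ in range(num_dirs):
        u = [rng.gauss(0.0, 1.0) for _ in x]
        plus = f([xi + eps * ui for xi, ui in zip(x, u)])
        minus = f([xi - eps * ui for xi, ui in zip(x, u)])
        slope = (plus - minus) / (2 * eps)
        for i, ui in enumerate(u):
            grad[i] += slope * ui / num_dirs
    return grad


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(p * q for p, q in zip(a, b))
    return dot / (math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b)))
```

On f(x) = 0.5 * ||x||^2 in 1,000 dimensions, whose true gradient is x itself, the estimate has positive but low cosine similarity with the truth, because 64 random directions span only a small slice of the space.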

- ARC-AGI-2 & 1D Task Generators: Researchers gathered resources for ARC-AGI-2 experiments in a shared GitHub repository, inviting community-driven exploration.

- They also showcased 1D task generators that may extend into 2D symbolic reasoning, stimulating broader interest in puzzle-based AI tasks.
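A 1D generator can be as small as a function that samples random inputs and applies a hidden rule. The "shift one cell right" rule, the grid encoding (0 for background, 1-9 for colors), and the sizes below are hypothetical illustrations, not tasks from the shared repository.

```python
import random
from typing import List, Tuple

Row = List[int]  # one 1D grid: 0 = background, 1-9 = colors


def make_shift_task(length: int = 10, num_examples: int = 3,
                    seed: int = 0) -> List[Tuple[Row, Row]]:
    """Hypothetical 1D task: move a single colored block one cell to the right."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_examples):
        color = rng.randint(1, 9)
        size = rng.randint(1, 3)
        start = rng.randint(0, length - size - 1)  # leave room to shift right by one
        grid = [0] * length
        for i in range(start, start + size):
            grid[i] = color
        shifted = [0] + grid[:-1]  # the rule the solver must infer
        pairs.append((grid, shifted))
    return pairs
```

Swapping the rule is what turns this into a family of tasks, and the same pattern extends toward 2D by generating lists of rows.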

Notebook LM Discord

- Podcast Partnerships with Google News: Members proposed integrating Google News with AI-generated podcast content to summarize the top 10 stories in short or long segments, sparking interest in interactive Q&A. They reported rising engagement from listeners intrigued by a dynamic blend of news delivery and on-demand discussions.

- Several participants shared examples of comedic bits woven into real-time updates, reflecting how AI-driven podcasts might keep audiences entertained while staying informed.

- AI Chatters About Life’s Biggest Questions: A user showcased an AI-generated podcast that playfully addressed philosophy, describing it as 'smurf-tastic banter' in a refreshing twist. This format combined humor with reflective conversation, hinting at a broader appeal for audiences who relish intellectual fun.

- Others called it a lively alternative to standard talk radio, highlighting how natural-sounding AI voices can both amuse and prompt deeper thought.

- Pathfinder in 15 Minutes: A participant generated a concise 15-minute podcast summarizing a 6-book Pathfinder 2 campaign, giving game masters a rapid-fire plot overview. They balanced storyline highlights with relevant tips, enabling swift immersion in the tabletop content.

- This approach stirred excitement around short-form tabletop recaps, signaling potential synergy between AI-led storytelling and roleplaying communities.

- Akas Bridges AI Podcasters and Their Audiences: An enthusiast introduced Akas, a website for sharing AI-generated podcasts and publishing personalized RSS feeds, as seen on Akas: share AI generated podcasts. They positioned it as a smooth connection between AI-driven shows and each host’s individual voice, bridging creative ideas to larger audiences.

- Some predicted expansions that unify tools like NotebookLM, encouraging user-driven AI episodes to reach broader platforms and spark further collaboration.

LlamaIndex Discord

- Report-Ready: LlamaParse Powers Agentic Workflows: Someone posted a new Report Generation Agent that uses LlamaParse and LlamaCloud to build formatted reports from PDF research papers, referencing a demonstration video.

- They highlighted the method's potential to automate multi-paper analysis, offering robust integration with input templates.

- DocumentContextExtractor Slices Costs: A conversation centered on using DocumentContextExtractor for batch processing to cut expenses by 50%, allowing users to process tasks off-hours.

- This approach spares the need to keep Python scripts running, letting individuals review results whenever they choose.

- Tokenization Tangle in LlamaIndex: Participants criticized the LlamaIndex tokenizer for lacking decoding support, prompting disappointment over the partial feature set.

- Though chunk splitting and size management were recommended, some teased the idea of dropping truncation entirely to blame users for massive file submissions.

- Unstructured RAG Steps Up: A blog detailed how Unstructured RAG, built with LangChain and Unstructured IO, handles data like images and tables more effectively than older retrieval systems, referencing this guide.

- It also described using FAISS for PDF embeddings and suggested an Athina AI evaluation strategy to ensure RAG accuracy in real-world settings.

- LlamaIndex Docs and Payroll PDFs: Some look for ways to get LlamaIndex documentation in PDF and markdown, while others struggle to parse payroll PDFs using LlamaParse premium mode.

- Discussions concluded that generating these docs is feasible, and LlamaParse can handle payroll tasks if fully configured.

Eleuther Discord

- Optimizers on the Loose: Some discovered missing optimizer states in Hugging Face checkpoints, stirring questions about checkpoint completeness.

- Others confirmed that the checkpointing code ordinarily saves these states, leaving the real cause uncertain.

- VLM Fine-Tuning Frenzy: Engineers grappled with model-specific details for LLaVA, Qwen-VL, and InternVL finetuning scripts, noting that each approach differs.

- They shared LLaVA as a popular reference, emphasizing that following the correct methodology for each model matters for results.

- Chasing Lower Latency: Participants compiled a range of methods targeting CUDA- or Triton-level optimizations to trim LLM inference times.

- They also pointed to progress in open-source solutions that sometimes beat GPT-4 in tasks like function calling.

- GPT-2’s Shocking First Token: In GPT-2, the initial token’s activations soared to around 3000, unlike the typical 100 for subsequent tokens.

- Debate continued over whether a BOS token even exists in GPT-2, with some asserting it’s simply omitted by default.

- EVE Sparks Encoder-Free Curiosity: Researchers explored EVE, a video-focused encoder-free vision-language model that sidesteps CLIP-style architectures.

- Meanwhile, the Fuyu model series faced doubts about practical performance gains, prompting calls for additional insights on encoder efficiency.

tinygrad (George Hotz) Discord

- Lean Bounty and the BITCAST Quest: Members tackled the Lean bounty proof challenges, referencing tinygrad notes for guidance. They also debated implementing BITCAST const folding to optimize compile time.

- A user asked which directory held the relevant code for BITCAST const folding, and another suggested referencing older PRs for examples on how to proceed.

- Tinygrad vs. PyTorch Face-Off: Some reported that Tinygrad took 800ms versus PyTorch's 17ms for a forward pass on CUDA, prompting improvement attempts with jitting. Community members anticipated concurrency gains from beam search and repeated that a stable approach could match or exceed PyTorch speeds.

- They acknowledged speed disparities likely stemmed from different CUDA setups and system configurations. A few participants suggested intensifying jitting efforts to shrink the performance gap.
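Comparisons like 800 ms versus 17 ms are easy to skew if the first call, which may include JIT compilation or kernel search, is timed along with steady-state runs. A small harness that warms up first and reports the median; `fn` is assumed to block until the computation has actually finished (for example by copying the result back to the host), since GPU work is usually launched asynchronously.

```python
import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], object], warmup: int = 3, iters: int = 10) -> float:
    """Median wall-clock seconds per call of `fn`, measured after `warmup` untimed calls."""
    for _ in range(warmup):
        fn()  # absorbs one-time costs such as JIT compilation or kernel search
    timings = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)
```

The median is used rather than the mean so that a single slow outlier run does not dominate the comparison.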

- Rewrite Rumble in Matching Engines: Participants explored matching engine performance bounties, with links to open issues at tinygrad/tinygrad#4878.

- One user clarified their focus on the rewrite portion, referencing outdated PRs that still guided the direction of proposed solutions.

- Input Handling Hiccups: One user flagged input tensor recreation in loops that severely slowed Tinygrad while producing correct outputs, also hitting attribute errors with the CUDA allocator. In response, changes from PR #8309 were merged to fix these issues, underscoring the importance of regression tests for stable performance.

- A deeper dive revealed that `tiny_input.clone()` triggered errors in the CUDA memory allocator. Contributors agreed more testing was needed to prevent regression in loop-based input creation.

- GPU Gains with Kernel Caching: Chat highlighted an RTX 4070 GPU with driver version 535.183.01, using CUDA 12.2, raising open-source driver concerns. Discussions on beam search caching confirmed kernels are reused for speed, with the prospect of sharing those caches across similar systems.

- Attendees surmised potential driver mismatches might limit performance, urging debug logs to confirm. Some suggested distributing compiled beam search kernels to expedite setups on matching hardware.

Cohere Discord

- Riding the CMD-R & R7B Rollercoaster: Members debated upcoming changes to CMD-R and showed curiosity about the 'two ans' quirk in R7B, referencing a shared image that hints at unexpected updates.

- They joked about how rarely such weird outcomes appear, with some calling it 'a comedic glitch worth investigating' in the community discussion.

- Pinching Pennies on Re-ranker Pricing: The Re-ranker cost structure drew attention, especially the $2.50 for input and $10.00 for output per 1M tokens, as shown on Cohere's pricing page.

- Questions spurred interest in how teams might budget for heavy usage, with some folks comparing this to alternative solutions.
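Budgeting at these rates is straightforward arithmetic. The per-million prices below are the ones quoted in this summary and should be checked against Cohere's current pricing page; the workload in the example is hypothetical.

```python
def token_cost_usd(input_tokens: int, output_tokens: int,
                   input_price_per_m: float = 2.50,
                   output_price_per_m: float = 10.00) -> float:
    """Cost in USD given per-million-token prices for input and output."""
    return ((input_tokens / 1_000_000) * input_price_per_m
            + (output_tokens / 1_000_000) * output_price_per_m)
```

For example, 2 million input tokens and 500,000 output tokens come to $5.00 + $5.00 = $10.00, so output-heavy workloads are where the 4x output price dominates.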

- LLM University Gains Ground: Cohere introduced LLM University, offering specialized courses for NLP and LLMs, aiming to bolster enterprise AI expertise.

- Attendees gave enthusiastic feedback, praising the well-structured resources and noting that users can adopt these materials for quick skill expansion.

- Command R & R+ Reign Supreme in Multi-step Tasks: Command R provides 128,000-token context capacity and efficient RAG performance, while Command R+ showcases top-tier multi-step tool usage.

- Participants credited its multilingual coverage (10 languages) and advanced training details, especially for challenging production demands in the cmd-r-bot channel.

- Voice, VAD & Music Merge AI Magic: An AI Engineer showcased a Voice to Voice chat app leveraging DNN-VAD and also shared music generation from text prompts using a stereo-melody-large model.

- They invited collaborators, stating 'I would like to work with you,' and extended a Merry Christmas greeting to keep the atmosphere upbeat.

Modular (Mojo 🔥) Discord

- io_uring Intrigue & Network Nudges: Members explored how io_uring can enhance networking performance, referencing man pages as a starting point and acknowledging limited familiarity.

- Community speculation suggests io_uring might streamline asynchronous I/O, with calls for real-world benchmarks to confirm its synergy.

- StringRef Shenanigans & Negative Length Crash: A crash occurred when StringRef() received a negative length, pointing to a missing length check in memcpy.

- One user recommended StringSlice instead, emphasizing StringRef’s risk when dealing with length validation.

- EOF Testing & Copyable Critique: Users confirmed read_until_delimiter triggers EOF correctly, referencing GitHub commits.

- Conversations highlighted Copyable and ExplicitlyCopyable traits, with potential design adjustments surfacing on the Modular forum.

- Mojo Swag & Modular Merch Frenzy: Members flaunted their Mojo swag, expressing gratitude for overseas shipping and sharing photos of brand-new gear.

- Others praised Modular’s merchandise for T-shirt quality and 'hardcore' sticker designs, fueling further brand excitement.

- Modular Kernel Queries & MAX vs XLA: A user inquired about a dedicated kernel for the modular stack, hinting at possible performance refinements.

- MAX was compared to XLA, citing 'bad compile times with JAX' as a reason to consider alternative compiler strategies.

DSPy Discord

- PyN8N Gains Node Wiz: Enthusiasts noted that PyN8N integrates AI to build custom workflows, though some users reported loading issues linked to ad blockers.

- They highlighted the README’s aspirational tone and recommended switching browsers or disabling extensions to resolve these site-blocking errors.

- DSPy Dances with DSLModel: Community members found that DSPy extends functionality via DSLModel, allowing advanced features for better performance.

- They suggested this approach reduces code overhead while keeping complex data workflows streamlined.

- NotebookLM Inline Sourcing Sparks Curiosity: A user asked how NotebookLM accomplishes inline sourcing, noting a lack of detailed responses.

- They sought more insight into the underlying implementation but the conversation provided limited follow-up.

- Jekyll Glossary Gets a DSPy Boost: A Jekyll script was shared to generate a glossary of key terms, using DSPy for LLM interactions.

- They refined entries like Artificial General Intelligence and noted potential improvements for the long description parameter.

- Typing.TypedDict & pydantic Tangle: Members discovered `typing.TypedDict` for typed fields, acknowledging its complexity for Python use cases.

- They also discussed pydantic for multi-instance output arrays, aiming for a more refined layout.
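A TypedDict describes the shape of each item in a multi-instance output array, but it is only a static type hint: nothing is checked at runtime. The stdlib-only sketch below validates a parsed JSON array by hand; a pydantic model would perform comparable checks automatically at the cost of a dependency. The `GlossaryEntry` schema and its field names are hypothetical.

```python
from typing import List, TypedDict


class GlossaryEntry(TypedDict):
    # Hypothetical schema for one glossary item returned by an LLM.
    term: str
    short_description: str
    long_description: str


def validate_entries(raw: object) -> List[GlossaryEntry]:
    """Check a parsed JSON array against GlossaryEntry, since TypedDict itself does not."""
    if not isinstance(raw, list):
        raise TypeError("expected a list of entries")
    entries: List[GlossaryEntry] = []
    for item in raw:
        if not isinstance(item, dict):
            raise TypeError("each entry must be an object")
        for key in GlossaryEntry.__annotations__:
            if not isinstance(item.get(key), str):
                raise ValueError(f"missing or non-string field: {key}")
        entries.append(GlossaryEntry(
            term=item["term"],
            short_description=item["short_description"],
            long_description=item["long_description"],
        ))
    return entries
```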

LLM Agents (Berkeley MOOC) Discord

- Certificate Confusion & Strict Forms: Members confirmed that certificates will be distributed by the end of January, as noted here.

- One participant asked if they'd still receive certification without the Certificate Declaration Form, and was told it's mandatory with no exceptions.

- Spring Session Hype for LLM Agents MOOC: Community chatter revealed a spring start for the next LLM Agents course, aligning with completion of the current session.

- Attendees showed excitement, referencing the certificate process and hoping for an on-time rollout of course updates.

OpenInterpreter Discord

- Open Interpreter's Pioneering Pixel-Precision: The Open Interpreter API offers near pixel-perfect control for UI automation, includes OCR for text recognition, and provides usage examples in Python scripts.

- A community member mentioned OCR appears broken, while others inquired about the desktop version release timeline, showing broad interest in further development.

- Voice to Voice Chat & QvQ synergy: One AI engineer introduced a Voice to Voice chat app featuring Music Generation from text prompts, seeking collaboration with other Generative AI enthusiasts.

- Another user questioned how QvQ would function in OS mode for Open Interpreter, hinting at bridging speech and system-level tasks.

Nomic.ai (GPT4All) Discord

- Copy Button Conundrum: A member noticed the absence of a dedicated 'copy' button for AI-generated code in the GPT4All chat screen UI, prompting questions about possible UI improvements.

- They expressed gratitude for any workaround suggestions, emphasizing that code-copy convenience ranks high among developer requests.

- Keyboard Shortcut Shenanigans: Community members confirmed that mouse-based cut and paste do not work across chat UI or configuration pages, frustrating those relying on right-click behavior.

- They clarified that Control-C and Control-V remain functional, offering a fallback for copying code snippets.

- New Template Curiosity: A member asked in French if anyone had tried writing with the new template, indicating multilingual adoption outside of English contexts.

- They hoped for feedback on post-install steps, though no specific outcomes or shared examples emerged from the exchange.

LAION Discord

- Mega Audio Chunks for TTS: A member sought tips for building a TTS dataset from massive, hour-long audio files and asked about tools to split them properly.

- They aimed for a method that maintains quality while reducing manual labor, focusing on audio segmentation approaches that handle large file sizes.

- Whisper Splits the Script: Another participant proposed Whisper for sentence-level splitting, seeing it as a practical way to prepare audio for TTS tasks.

- They highlighted how Whisper streamlines segmentation, reducing production time while preserving consistent sentence boundaries.
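Whisper's transcripts come back as timestamped segments, which do not always line up with sentence boundaries. The sketch assumes segments shaped like `{"start": float, "end": float, "text": str}` (the shape the openai-whisper package returns, to our understanding) and merges them into sentence-level clips with a length cap; the 15-second cap is an arbitrary illustrative value, and cutting the audio itself is left to whatever audio tool is already in use.

```python
from typing import Dict, List, Optional

Segment = Dict[str, object]  # assumed keys: "start" (s), "end" (s), "text"
SENTENCE_END = (".", "!", "?")


def sentence_clips(segments: List[Segment], max_len_s: float = 15.0) -> List[Segment]:
    """Merge consecutive segments until a sentence ends or a clip would exceed max_len_s."""
    clips: List[Segment] = []
    current: Optional[Segment] = None
    for seg in segments:
        text = str(seg["text"]).strip()
        if current is None:
            current = {"start": seg["start"], "end": seg["end"], "text": text}
        elif float(seg["end"]) - float(current["start"]) > max_len_s:
            clips.append(current)  # cap reached: close the clip mid-sentence
            current = {"start": seg["start"], "end": seg["end"], "text": text}
        else:
            current["end"] = seg["end"]
            current["text"] = f"{current['text']} {text}".strip()
        if str(current["text"]).endswith(SENTENCE_END):
            clips.append(current)
            current = None
    if current is not None:
        clips.append(current)  # trailing words without final punctuation
    return clips
```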

MLOps @Chipro Discord

- HPC MLOps frameworks in the spotlight: A member requested a stable and cost-effective ML ops framework for HPC requiring minimal overhead and singled out Guild AI as a possibility.

- They questioned Guild AI's reliability and leaned toward a self-hosted approach, citing distaste for SaaS solutions.

- Server chores spark talk of a DIY ops tool: Mounting setup and maintenance burdens made them wary of running a dedicated server for MLOps tasks.

- They voiced willingness to code a simple ops framework themselves if it avoids heavy server administration.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AINews, please share with a friend! Thanks in advance!