[AINews] DeepSeek-V2 beats Mixtral 8x22B with >160 experts at HALF the cost

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 5/3/2024-5/6/2024. We checked 7 subreddits and 373 Twitters and 28 Discords (419 channels, and 10335 messages) for you. Estimated reading time saved (at 200wpm): 1112 minutes.

More experts are all you need?

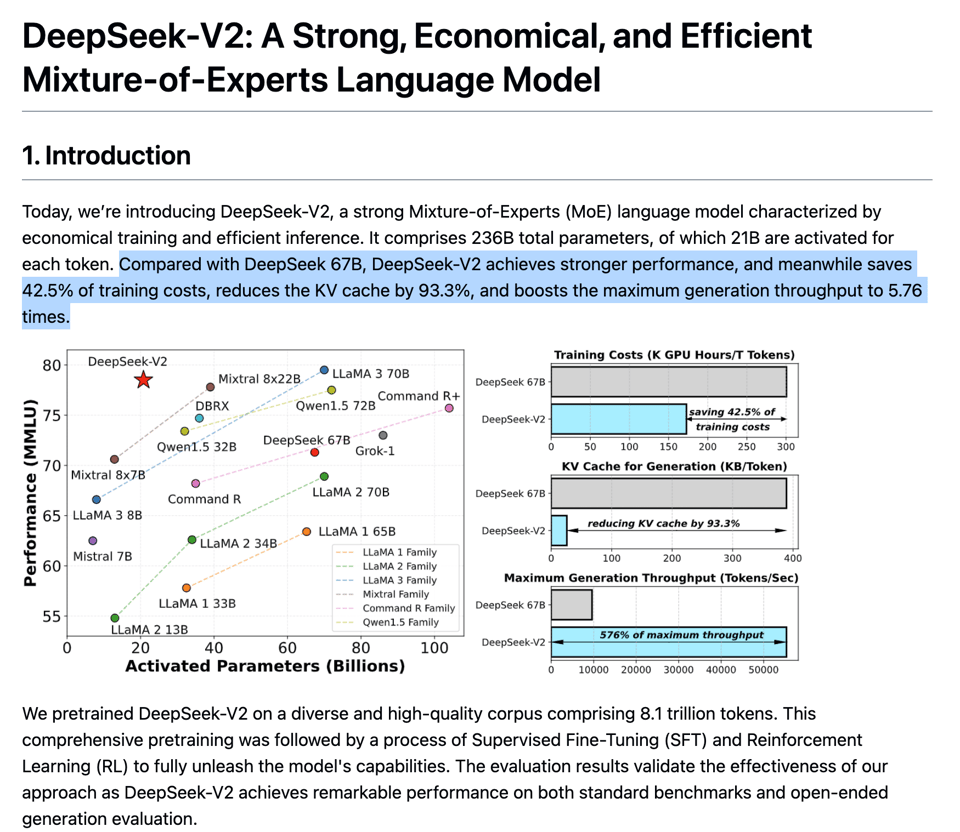

DeepSeek V2 punches a hole in the Mistral Convex Hull from last month:

Information on dataset is extremely light; all they say is it's 8B tokens (4x more than DeepSeek v1) with about 12% more Chinese than English.

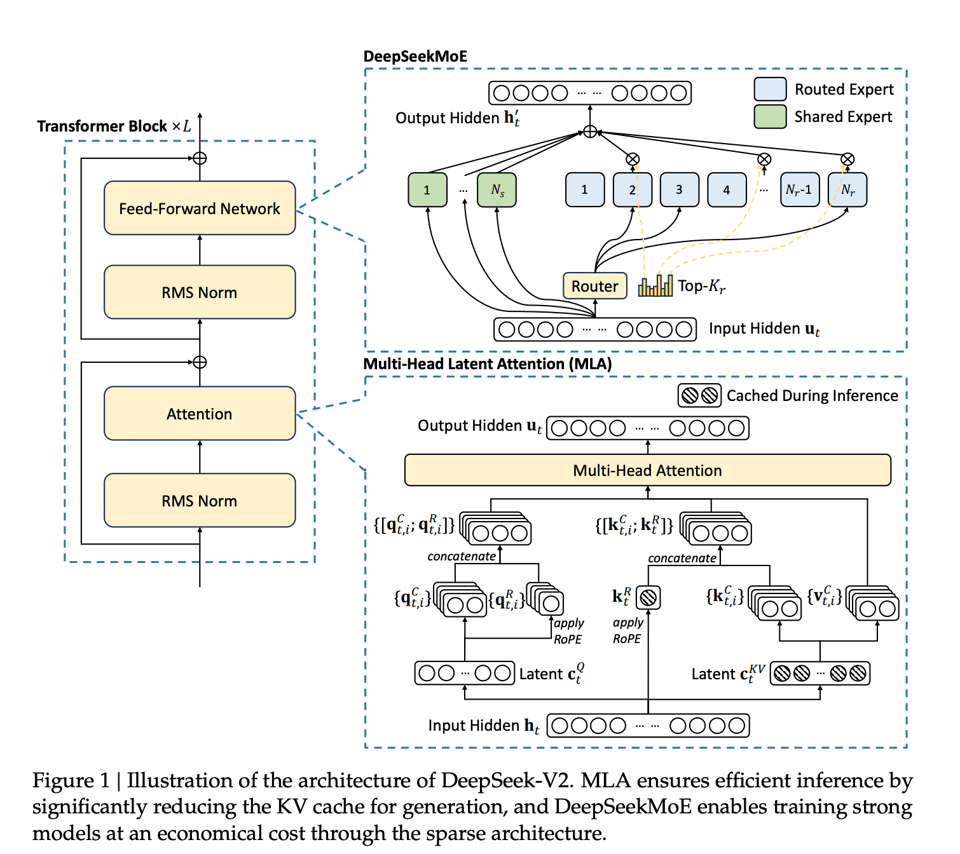

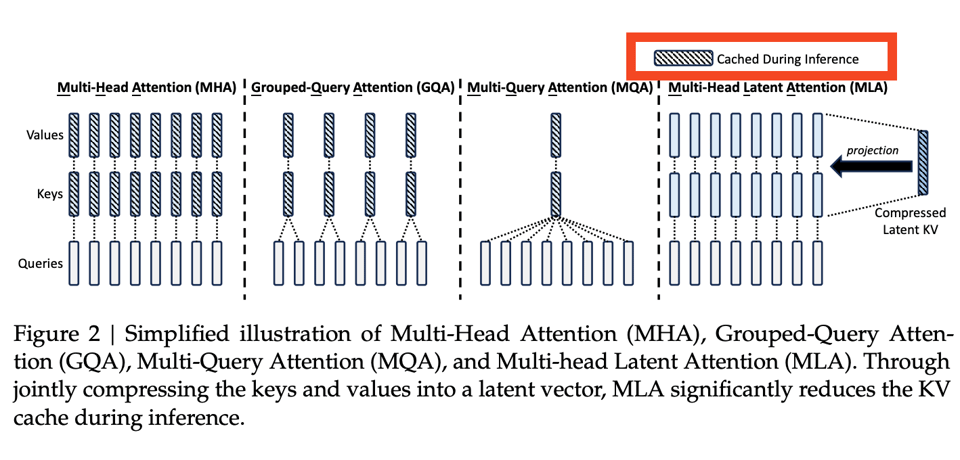

Snowflake Arctic was the last very large MoE model with the highest number of experts (128) we'd seen in the wild; DeepSeek v2 now sets a new high water mark scaling up what was already successful with DeepSeekMOE, but also introducing a new attention variant called Multi-Head Latent Attention.

These result in much faster inference by caching compressed KVs ("reducing KV cache by 93.3%").

The paper details other minor tricks they find useful.

DeepSeek is putting their money where their mouth is - they are offering token inference on their platform for $0.28 per million tokens about half of the lowest prices seen in the Mixtral Price War of Dec 2023.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- OpenAI Discord

- Stability.ai (Stable Diffusion) Discord

- Nous Research AI Discord

- Perplexity AI Discord

- LM Studio Discord

- CUDA MODE Discord

- Modular (Mojo 🔥) Discord

- HuggingFace Discord

- OpenInterpreter Discord

- Eleuther Discord

- OpenRouter (Alex Atallah) Discord

- LlamaIndex Discord

- OpenAccess AI Collective (axolotl) Discord

- Latent Space Discord

- AI Stack Devs (Yoko Li) Discord

- LAION Discord

- LangChain AI Discord

- tinygrad (George Hotz) Discord

- Mozilla AI Discord

- DiscoResearch Discord

- Interconnects (Nathan Lambert) Discord

- LLM Perf Enthusiasts AI Discord

- Skunkworks AI Discord

- Datasette - LLM (@SimonW) Discord

- Cohere Discord

- AI21 Labs (Jamba) Discord

- Alignment Lab AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

LLM Developments and Releases

- Llama 3 Release: @erhartford noted that Llama 3 120B is smarter than Opus, and is very excited about llama3-400b. @maximelabonne shared that Llama 3 120B > GPT-4 in creative writing but worse than L3 70B in reasoning.

- DeepSeek-V2 Release: @deepseek_ai launched DeepSeek-V2, an open-source MoE model that places top 3 in AlignBench, surpassing GPT-4. It has 236B parameters with 21B activated during generation.

- MAI-1 500B from Microsoft: @bindureddy predicted Microsoft is training its own 500B param LLM called MAI-1, which may be previewed at their Build conference. As it becomes available, it will compete with OpenAI's GPT line.

- Mistral and Open LLMs Overfitting Benchmarks: @adcock_brett shared that Scale AI released research uncovering 'overfitting' of certain LLMs like Mistral and Phi on popular AI benchmarks, while GPT-4, Claude, Gemini, and Llama stood their ground.

Robotics and Embodied AI

- Tesla Optimus Update: @DrJimFan congratulated the Tesla Optimus team on their update, noting their human data collection farm is Optimus' biggest lead with best-in-class hands, teleoperation software, sizeable fleet, and carefully designed tasks & environments.

- Open-Source Robotics with LeRobot: @ClementDelangue welcomed LeRobot by @remicadene and team, signaling a shift towards open-source robotics AI.

- DrEureka from Nvidia: @adcock_brett shared Nvidia's 'DrEureka', an LLM agent that automates writing code to train robot skills, used to train a robot dog's skills in simulation and transfer them to real-world zero-shot.

Multimodal AI and Hallucinations

- Multimodal LLM Hallucinations Overview: @omarsar0 shared a paper that presents an overview of hallucination in multimodal LLMs, discussing recent advances in detection, evaluation, mitigation strategies, causes, benchmarks, metrics, and challenges.

- Med-Gemini from Google: @adcock_brett reported Google's introduction of Med-Gemini, a family of AI models fine-tuned for medical tasks, achieving SOTA on 10 of 14 benchmarks from text, multimodal, and long-context applications.

Emerging Architectures and Training Techniques

- Kolmogorov-Arnold Networks (KANs): @rohanpaul_ai highlighted a paper proposing KANs as alternatives to MLPs for approximating nonlinear functions, outperforming MLPs and possessing faster neural scaling laws without using linear weights.

- LoRA for Parameter-Efficient Finetuning: @rasbt implemented LoRA from scratch to train a GPT model for 98% accuracy in SPAM classification, noting LoRA as a favorite technique for parameter-efficient finetuning of LLMs.

- Hybrid LLM Approach with Expert Router: @rohanpaul_ai shared a paper on a cost-efficient hybrid LLM approach that uses an expert router to direct "easy" queries to a smaller model for cost reduction while maintaining quality.

Benchmarks, Frameworks, and Tools

- TorchScript Model Export from PyTorch Lightning: @rohanpaul_ai noted that exporting and compiling models to TorchScript from PyTorch Lightning is smooth with the

to_torchscript()method, enabling model serialization for non-Python environments. - Hugging Face Inference Endpoints with Whisper and Diarization: @_philschmid created an optimized Whisper with speaker diarization for Hugging Face Inference Endpoints, leveraging flash attention, speculative decoding, and a custom handler for 4.15s transcription of 60s audio on 1x A10G GPU.

- LangChain for Complex AI Agents: @omarsar0 shared a free 2-hour workshop on building complex AI agents using LangChain for automating tasks in customer support, marketing, technical support, sales, and content creation.

Trends, Opinions, and Discussions

- LLMs as a Commodity: @bindureddy argued that LLMs have become a commodity, and even if GPT-5 is fantastic, other major players will catch up within months. Inference prices will trend down, and the winning LLM changes every few weeks. The best strategy is to use an LLM-agnostic service and move on from foundation models to building AI agents.

- Literacy and Technology: @ylecun shared an observation on shifting attitudes towards reading and technology over time, from "why don't you plow the field instead of reading books?" in 1900 to "why don't you watch TV instead of being on your tablet?" in 2020.

- Funding Fundamental Research: @ylecun argued that almost all federal funding to universities goes to STEM and biomedical research, with very little to social science and essentially zero to humanities. Cutting these funds would "kill the golden goose" and potentially cost lives.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Development and Capabilities

- Tesla Optimus advancements: In /r/singularity, a new video showcases the latest capabilities of Tesla's Optimus robot, including fine tactile and force sensing in the hands. Discussions revolve around the robot's current speed limitations and potential for 24/7 operation in factories once it reaches "20x rate" of human workers.

- Sora AI video rendering: In /r/singularity, Sora, an AI system, demonstrates the ability to render a video while changing a single element, although this feature is still in the research phase and not yet publicly available.

- GPT-4 trained robo-dog: In /r/singularity, a robo-dog, trained using GPT-4, showcases its ability to maintain balance on a rolling and deflating yoga ball, demonstrating advancements in AI-powered robotics and balance control.

- Compute power and AI milestones: In /r/singularity, Microsoft CTO Kevin Scott suggests that the common factor in AI milestone achievements is the use of more compute power. Discussions on the potential for Llama 3 400b to outperform GPT-4 due to training on 25,000 H100s compared to GPT-4's reported 10,000 A100s.

- LLaMA 70B performance: In /r/singularity, a user reports running Llama 3 70B on a 7-year-old PC with a 4-year-old 3090 GPU, achieving better responses than GPT-4 and Claude 3 in some cases. The post highlights the implications of having a highly intelligent AI that doesn't require an internet connection and can provide high-quality outputs.

Societal Impact and Concerns

- Public awareness of AI-generated images: In /r/singularity, a survey reveals that half of Americans are unaware that AI can generate realistic images of people, raising questions about how many AI-generated images people have encountered without realizing it. Comments discuss the general lack of knowledge among the American public.

- AI and abundance for all: In /r/singularity, a post questions the belief that AI will lead to abundance for all, arguing that AI will likely entrench existing power structures and increase inequality. The author suggests that the transition will be gradual, with unemployment and cost of living increasing slowly over time until a catastrophe occurs.

- Warren Buffett's concerns about AI: In /r/StableDiffusion, Warren Buffett compares AI to the atomic bomb, highlighting its potential for scamming people and expressing concerns about the power of AI. Comments discuss the dual nature of AI, comparing it to the advent of electricity, with both positive and negative implications.

AI Applications and Developments

- AI in medical notetaking: In /r/singularity, an Ontario family doctor reports that AI-powered notetaking has significantly improved her work and saved her job, highlighting the potential for AI to assist in medical documentation.

- Optimus hand advancements: In /r/singularity, Elon Musk announces that the new Optimus hand, set to be released later this year, will have 22 degrees of freedom (DoF), an increase from the previous 11 DoF.

- Future of AI training and inference: In /r/singularity, Nvidia CEO predicts that in the future, AI training and inference will be a single process, allowing AI to learn while interacting with users. The video is recommended as interesting content for those following AI developments.

Memes and Humor

- AI training and weird results: In /r/StableDiffusion, a meme image suggests that training AI on unusual or unconventional images leads to weird results.

- Delicate banana: In /r/StableDiffusion, a humorous image post features a delicate banana.

- Safety team suggestions: In /r/StableDiffusion, a video meme depicts a prank where a person's chair is pulled out from under them, causing them to fall. Comments discuss the dangerous nature of the prank and the potential for serious injury.

AI Discord Recap

A summary of Summaries of Summaries

- Llama3 GGUF Conversion Challenges: Users encountered issues converting Llama3 models to GGUF format using llama.cpp, with training data loss unrelated to precision. Regex mismatches for new lines were identified as a potential cause, impacting platforms like ollama and lm studio. Community members are collaborating on fixes like regex modifications.

- GPT-4 Turbo Performance Concerns: OpenAI users reported significant latency increases and confusion over message cap thresholds for GPT-4 Turbo, with some experiencing 5-10x slower response times and caps between 25-50 messages. Theories include dynamic adjustments during peak usage.

- Stable Diffusion Installation Woes: Stability.ai community members sought help with Stable Diffusion setups failing to access GPU resources, encountering errors like "RuntimeError: Torch is not able to use GPU". Discussions also covered the lack of comprehensive, up-to-date LoRA/DreamBooth/fine-tuning tutorials.

- Hermes 2 Pro Llama 3 Impresses with Context: Hermes 2 Pro Llama 3 showcased ~32k context on a 32GB Nvidia v100 Tesla using vLLM and RoPE scaling, with perfect 16k token recall and no degradation. Editing

config.jsonand the rope scaling factor enables extended context.

- Perplexity AI's Pages Feature Garners Attention: Perplexity AI's new Pages feature for comprehensive report creation generated buzz, while users expressed frustration over the 50 message per day limit on Claude 3 Opus compared to GPT-4 Turbo and Sonnet. Discussions also covered Perplexity's shift from unlimited to limited messages.

- LM Studio Enables Headless Mode: LM Studio users leveraged the

lmsCLI tool for headless operation alongside the GUI, troubleshooting memory anomalies and strategizing for smooth server-side deployments without VRAM consumption via RDP. Fine-tuning bottlenecks were also discussed, with a member reporting success fine-tuning for 8 hours on a 128GB M3 Max MacBook Pro.

- CUDA Compiling and Multi-GPU Training Challenges: CUDA developers encountered issues with

nvcc 11.5throwing errors for bfloat16 operations on older GPUs, with a fix proposed to manually handle arithmetic for backward compatibility. Recent commits also caused multi-GPU training hangs, as reported in Issue #369, with a separate branch maintaining functionality.

- Mojo Compiler and Type System Evolution: Mojo's nightly compiler update brought changes to align with current practices, moving away from 80-column width and transitioning to register passable types. Discussions touched on phasing out

OptionalRegin favor of traits indicating register passability, as detailed in the changelog.

- HuggingFace Community Highlights: Notable projects in the HuggingFace community include Moondream 2 batch processing, FLUENT's newest iteration, a Portuguese translation of HF Audio course chapters, and a BLIP fine-tune for long captions. A comprehensive list is available in the community highlights.

- Eleuther Ponders Transformer Chess Prowess: An arXiv paper showcasing a 270M parameter transformer model outperforming AlphaZero's policy and value networks in chess without domain-specific algorithms sparked discussions on the impact of scale on strategy games within the Eleuther community.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

GGUF Conversion Hiccups for Llama3: The Unsloth community encountered conversion issues with Llama3 models when using llama.cpp, notably affecting training data when transitioning to GGUF format. Issues weren't limited to FP16 conversions, implying deeper underlying problems than just precision loss.

New Lines, Big Problems: A recurrent theme in the glitches was linked to new line tokenization, with different behaviors across regex libraries leading to erratic tokenizer.json patterns. Potential solutions involving regex modifications were explored to fix the GGUF conversion challenges.

Llama Variant Takes on Genomic Data: The introduction of the LLaMA-3-8B-RDF-Experiment model by M.chimiste marks a push towards integrating LLMs with genomic data and knowledge graph construction.

Demand for Vision-Language Model Tuning Tools: Community request surfaced for a generalized method to fine-tune Language-Vision Models (LVLM), demonstrated by a member's interest in supporting Moondream, as detailed in their GitHub notebook.

Showcasing and Sharing Platform Growth: Proposals for a separate discussion channel on deploying large language models (LLMs) highlight a demand for shared learning. This aligns with showcases like Oncord's integration of Unsloth AI for web development AI tools and the release of models that enhance Llama-3 capabilities.

OpenAI Discord

Perplexity AI Pulls Ahead with Pages: Perplexity AI's new Pages feature garners attention for its ability to create comprehensive reports. Meanwhile, a healthy skepticism surrounds the potential of GPT-5 as engineers discuss the diminishing returns on investment.

AGI Concept Sparks Debate: The AI community on Discord is locked in a debate over the definition of AGI and whether AI models like ChatGPT are pioneering versions of AGI. Interest in AI-generated music indicates a growing appetite for creative AI applications, with reference to services like Udio.

Performance Frustration Hits GPT-4 Turbo: Significant increases in response latency are reported for GPT-4 Turbo, and users are seeking clarity about inconsistent message cap thresholds, suggesting possible dynamic adjustments during peak times.

Prompt Engineering Challenges and Strategies: Engineers share experiences and resources, recommend "Wordplay" by Teddy Dicus Murphy for prompt-crafting insights, and delve into the intricacies of using logit bias to manipulate token probabilities in the OpenAI API.

Fine-Tuning AI for Queries: A lively discussion revolves around fine-tuning models to generate questions rather than answers, including strategies for improving GPT-4-TURBO prompts for product information extraction, backed by a logit bias tutorial.

Stability.ai (Stable Diffusion) Discord

- GPU Troubles Take Center Stage: Members report difficulties with Stable Diffusion installations failing to access GPU resources, highlighted by errors like "RuntimeError: Torch is not able to use GPU."

- Stable Diffusion 3 Rumors Stir the Pot: Anticipation bubbles around the release of Stable Diffusion 3, sparking debates on the implications of its potential delay, while skeptics question its arrival altogether.

- The Finetuning Tutorial Void: The community voices frustration over a shortage of up-to-date, comprehensive tutorials for techniques like LoRA/DreamBooth/fine-tuning, which many find are either antiquated or skimpy on details.

- Quest for Unique Faces: A member inquires about strategies to train AI for generating unique, realistic-looking faces, wondering whether to use LoRa on multiple faces or to train on a generated random face as the foundation.

- Open-Source Obstacles Discussed: Conversations turn to the authenticity of Stable Diffusion's open-source commitments, with concerns about potential future gatekeeping of high-quality models, checkpoints, and training intricacies.

Nous Research AI Discord

- SVMs Still Kick in AI Circles: Discord members clarified that SVM stands for Support Vector Machine amidst technical chitchat.

- Anticipation for Meta-Llama-3-120B-Instruct: The Meta-Llama-3-120B-Instruct Hugging Face model sparked discussions on its potential, with calls for comprehensive benchmarking rather than relying on mere hype.

- Deployment Dilemmas: Users debated serverless Llama limitations, whilst discussing better GPU options with sufficient VRAM, like Azure's NC80adis_H100_v5, for handling large-context task demands.

- Hermes 2's Memorable Performance: The Hermes 2 Pro Llama 8B demonstrated an impressive ~32k extended context capacity with no noticeable degradation, showing perfect recall at 16k on a 32GB Nvidia v100 Tesla.

- Cynde Contributes to Data Farming: An update on Cynde was shared, marking the completion of its core implementation. Enthusiasm for this framework for intelligence farming is evident, with Cynde's repository welcoming contributors.

Perplexity AI Discord

- Pages Beta No More Open Applications: The beta tester application phase for Pages has concluded due to sufficient participant enrollment. Future updates on Pages will be communicated accordingly.

- Prominent Discussions on Perplexity AI's Performance and Limitations: Members experienced slow response times with the Claude 3 model and have expressed frustration over the 50 messages per day limitation on the Claude 3 Opus model. While comparing Opus with GPT-4 Turbo and Sonnet, users also expressed concerns over Perplexity's shift from unlimited to limited message capabilities.

- Exploring AI for Creative and Novelty Uses: The Perplexity AI community is actively exploring the platform's abilities in image generation, emulating writing styles from novels, and diverse searches such as uncovering the history of BASIC programming language or delving into Perplexity's own history.

- API Adventures and Agile Adjustments: Users discussed model transitions, specifically from sonar-medium-online to llama-3-sonar-large-32k-online, and queried about potential billing inconsistencies. The conversation also included successes and troubles with AI result optimization and suggestions for creating a minimum-code Telegram bot using Perplexity API.

- Multi-Channel Search Query Sharing: The community shared multiple search queries and outcomes, engendering discussions about Perplexity’s effective use and the depth of insights it can provide. Such explorations were wrapped in a variety of contexts, ranging from programming history to proprietary technological insights.

LM Studio Discord

- Headless Cauldron Brews Progress: Engineers are utilizing LM Studio's CLI tool,

lms, for headless operation alongside GUI versions, working through memory consumption anomalies and discussing tactics for smooth server-side deployments without consuming VRAM through RDP.

- Fine-tuning Finesse & Model Mishaps: Members troubleshoot fine-tuning bottlenecks, sharing success stories of long fine-tuning sessions on hardware like the 128GB M3 Max MacBook Pro, and discuss the inconsistent output issues plaguing models like Llama 3.

- Interactive Intents & AI Memory Quirks: Users express a confounding observation that language models might hold onto the context of deleted prompt elements, suggesting potential bugs or a misunderstanding of model behavior. They explore interactive techniques to personalize writing styles and enable "scoped access" to document parts for LLMs.

- Role-Play Without Limits? Not So Fast: A vivid conversation sparkles around the intersection of AI and RPGs, with users aiming to train AIs as Dungeon Masters for D&D, indicating that existing systems tangle with content moderation, which can impact the story's darkness and depth.

- ROCm Raves & Linux Enthusiasm: Updates to ROCm prove resilient, but discussions also broach the challenges converting models and sending longer sequences for embeddings. The dialog shifts toward community interest in contributing to a Linux ROCm build, hinting at further engagement if the project sought more open-source collaboration.

- AI on the Hardware Frontier: Members plunge into heated hardware exchanges, contrasting the appropriateness of older GPUs like the Tesla P40 over the GRID K1 and geeking out on multi-GPU setup nuances for AI-centric home labs. The nitty-gritty spreads from server hardware acquisitions to cooling, power, and driver compatibility issues.

- LM Studio's Latest Line-up: The

lmstudio-communityrepo has been updated with CodeGemma 1.1 and Nvidia's ChatQA 1.5 models, with the former eliciting keen anticipation and the latter offering specialized models tailored for context-based Q/A applications.

CUDA MODE Discord

Backpack Packs a Punch: BackPACK, a PyTorch extender for extracting additional information from backward passes, has been discussed, highlighting its potential for PyTorch developers. Details are in the publication "BackPACK: Packing more into Backprop" by Dangel et al., 2020.

DoRA Delivers on Fusion: A new fused DoRA layer implementation decreases the number of individual kernels and has been optimized for GEMM and reduction operations, detailed in a GitHub pull request. Enthusiasm was noted for upcoming benchmarks focused on these enhancements.

Custom CUDA Extensions Customization: Members discussed best practices for installing custom PyTorch/CUDA extensions, sharing multiple GitHub pull requests like PR#135 and a sample setup.py for reference, aiming for cleaner installation processes.

Streaming Ahead with CUTLASS Interest has bubbled around stream-K scheduling techniques used in CUTLASS, with suggestions of diving deeper into its workings in a future talk.

GPU Communication Goes to School: Upcoming sessions on GPU Collective Communications with NCCL have been announced, with a focus on distributed ML concepts.

Must-Read ML Systems Papers: For newcomers to machine learning systems, an ML Systems Onboarding list on GitHub provides a curated selection of informative papers.

Overcoming CUDA Compiling Conundrums: Issues with CUDA compilers like nvcc 11.5 throwing errors for operations in bfloat16 have been addressed in a fix proposal, aiming to support older GPUs and toolkits. Multi-GPU training hangs have also been discussed, linked to Issue #369, with a separate branch maintaining functionality.

LLaMa's Lean Learning: Discussions around memory efficiencies during LLaMa 2 70B model training highlighted configurations that allow for reduced memory usage. A tool named HTA was mentioned for pinpointing performance bottlenecks in PyTorch.

Post-training Peaks with Quantization: A YouTube video was shared, detailing the process and benefits of quantization in PyTorch.

GreenBitAI Goes Global: A toolkit called green-bit-llm was introduced for fine-tuning and inferencing GreenBitAI's language models. Attention was drawn to BitBlas for rapid 2-bit operation gemv kernels, along with a unique approach to calculating gradients captured in the GreenBitAI's toolkit.

Modular (Mojo 🔥) Discord

Tune in to Mojo Livestream for MAX 24.3 Updates: Modular's new livestream video titled "Modular Community Livestream - New in MAX 24.3" invites the community to explore the latest features of MAX Engine and Mojo, along with an introduction to the MAX Engine Extensibility API.

Community Projects Zoom Ahead: Noteworthy updates include NuMojo's improved performance and the introduction of Mimage for image parsing. The Basalt project also reached a milestone of 200 stars and released new documentation.

Mojo Compiler Evolves: Mojo compiler sees nightly updates with changes to better fit current practices, such as the move away from 80-column width and transitioning to types more suited for register passability.

AI Engineers Seek Don Hoffman's Consciousness Exploration: Interest in Donald Hoffman's work at UCI linked to consciousness research correlates with AI, as parallels are drawn between sensory data limitations seen in split-brain patients and AI hallucinations.

Mojo's Growing Ecosystem & Developer Guidance: Discussion on contribution processes to Mojo, inline with GitHub's pull request guidelines, and insights into the development workflow with tutorials on parameters demonstrate the active support for contributors to the rapidly expanding Mojo ecosystem.

HuggingFace Discord

Moondream and BLOOM Make Waves: The HuggingFace community has spotlighted new advancements including Moondream 2 batch processing and FLUENT's newest iteration, as well as tools for multilingual support. Particularly noteworthy is the BLOOM multilingual chat and AutoTrain's support for YAML configs, simplifying the training process for machine learning newcomers. Check out the community highlights.

When Audio Models Sing: There's interest in audio diffusion models for generative music with Whisper being fine-tuned for Filipino ASR, prompting discussions on optimization. However, a user faced challenges converting PyTorch models into TensorFlow Lite due to size limits.

AI's Frontline: Cybersecurity took center stage as the Hugging Face Twitter account was compromised, underlining the need for robust AI-related security. Members also exchanged GPU utilization tips for variance in training times between setups.

Visions of Quantum and AI Unions: In computer vision, the emphasis was on improving traditional methods like YOLO for gap detection in vehicle parts and adapting models like CLIP for image recognition with rotated objects. GhostNet's pre-trained weights were sought after, and CV members pondered the contemporary relevance of methods like SURF and SIFT.

Graph Gurus Gather: Recent papers on using LLMs with graph machine learning propose novel ways to integrate the two, with a paper](https://arxiv.org/abs/2404.19705) specifically teaching LLMs to retrieve information only when needed via the <RET> token. The reading group provided additional resources for those eager to learn more.

Showcasing Synthesis and Applied AI: From the #i-made-this section, there's the launch of tools like Podcastify and OpenGPTs-platform, along with models like shadow-clown-BioMistral-7B-DARE using mergekit.

NLPer's Quandaries and Queries: In NLP, a user offered compensation for custom training on Mistral-7B-instruct and concerns were raised about LLMs evaluating other LLMs. The GEMBA metric for translation quality using GPT 3.5+ was introduced, with a link provided to learn more.

OpenInterpreter Discord

Integrating OpenInterpreter with Groq LLM: Engineers discussed challenges with integrating Groq LLM onto Open Interpreter, highlighting issues such as uncontrollable output and erroneous file creation. The connection command shared was interpreter --api_base "https://api.groq.com/openai/v1" --api_key "YOUR_API_KEY_HERE" --model "llama3-70b-8192" -y --max_tokens 8192.

Microsoft Hackathon Seeks Open Interpreter Enthusiasts: A team is forming to participate in the Microsoft Open Source AI Hackathon utilizing Open Interpreter; the event promises to offer hands-on tutorials and the sign-up details are available here.

Open Interpreter Gets an iOS Reimagining: Discussions revolved around reimplementation of TMC protocol for iOS on Open Interpreter and troubleshooting issues with setting up with Azure Open AI models, with one member sharing a GitHub repository link for the iOS app in development here.

Local LLMs Challenge Developers: Personal testings on local LLMs like Phi-3-mini-128k-instruct were shared, indicating significant performance variances and calling out for better optimization methods in future implementations.

AI Vtuber's STT Conundrum: Implementing Speech-to-Text for AI powered virtual streamers brought up practical challenges, with engineers considering using trigger words and working towards AI-driven Twitch chat interactions through a separate LLM instance, aiming for comprehensive responses. For those tackling similar integrations, a member pointed to a main.py file on their GitHub as a resource.

Eleuther Discord

- Chess Grandmasters Beware, Transformers Are Coming: A new study reveals a 270M parameter transformer model surpassing AlphaZero's policy and value networks in chess without domain-specific algorithms, raising questions on scale's effectiveness in strategy games.

- LLM Research Flourishes with Multilingualism and Prompting Techniques: Research highlights include a study on LLMs handling multilingual inputs and the potential of "Maieutic Prompting" for working with inconsistent data despite skepticism about its practicality. Contributions in this area provided insights and links to papers such as How Mixture Models Handle Multilingualism and methods to counteract LLM vulnerabilities, including The Instruction Hierarchy paper.

- Model Performance Under the Microscope: The scaling laws for transfer learning indicate that pre-trained models improve on fixed-sized datasets via effective transferred data, resonating with the community's efforts to determine accurate measures of LLM in-context learning and performance evaluation methods.

- Interpreting Transformers and Improving Deployability: A primer and survey on interpreting transformer-based LLMs have been shared, alongside discussions on cross-model generalization. There's active interest in resolving weight tying issues in models like Phi-2 and Mistral-7B and clarifying misunderstandings regarding weight tying in notable open models.

- Community Engagement with ICLR and Job Searches: Preparations for an in-person meet-up at ICLR are unfolding despite travel challenges, and community support is evident with members sharing employment resources and experiences from engaging with projects such as OSLO and the Polyglot team.

OpenRouter (Alex Atallah) Discord

- New Kids on the Llama Block: The Llama 3 Lumimaid 8B model has been released with an extended version also available, while the Llama 3 8B Instruct Extended sees a price reduction. A brief downtime was announced for the Lynn models due to server updates.

- Beta Testers Wanted for High-Stakes AI: Rubik's AI Pro, an advanced research assistant and search engine, is seeking beta testers with 2 months of premium access including models like GPT-4 Turbo and Mistral Large. The project can be accessed here with the promo code

RUBIX.

- Mix and Match Models: Community members reported that Gemini Pro is now error-free and discussed potential hosts for Lumimaid 70B. Models like Phi-3 are sought after, but availability is scarce. Model precision varies across providers, with most using fp16 and some using quantized int8.

- Mergers and Acquisitions: A conversation highlighted a newly created self-merged version of Meta-Llama 3 70B on Hugging Face, spurring debates about the effectiveness of self-merges versus traditional layer-mapped merges.

LlamaIndex Discord

Boosting Agent Smarts: LlamaIndex 0.10.34 ushers in introspective agents capable of self-improvement through reflection mechanisms, detailed in a notebook which comes with a content warning for sensitive material.

Agentic RAG Gets an Upgrade: An informative video demonstrates the integration of LlamaParse + Firecrawl for crafting agentic RAG systems, and the release can be found through this link.

Trust-Scored RAG Responses: "Trustworthy Language Model" by @CleanlabAI introduces a scoring system for the trustworthiness of RAG responses, aiming to assure accuracy in generated content. For more insights, refer to their announcement here.

Local RAG Pipeline Handbook Hits Shelves: For developers seeking independence from cloud services, a manual for setting up a fully local RAG pipeline with LlamaIndex is unveiled, promising a deeper dive than quickstart guides and accessible here.

Hugging Face, Now Hugging LlamaIndex Tightly: LlamaIndex declares support for Hugging Face TGI, enabling optimal deployment of language models on Huggingface with enhanced features like function calling and improved latency. Shed light on TGI's new capabilities here.

Creating Conversant SQL Agents: AI engineers are contemplating the use of HyDE to craft NL-SQL bots for databases brimming with tables, eyeing ways to elevate the precision of SQL queries by the LLM; meanwhile, introspective agent methodologies are making waves, with further reading at Introspective Agents with LlamaIndex.

OpenAccess AI Collective (axolotl) Discord

Hermes 2 Pro Llama 3 Speed Test Results: Hermes 2 Pro Llama 3 has showcased impressive inference speed on an Android device with 8GB RAM, boosted by enhancements in llama.cpp.

Anime’s Role in AI Conversations: Members humorously discussed the rise of anime as it relates to increasing capabilities in AI question-answering and image generation tasks.

Gradio Customization Achievements: Adjustments in Gradio now allow dynamic configuration set through a YAML file, enabling the setting of privacy levels and server parameters programmatically.

Datasets for AI Training Spotlighted: A new dataset containing 143,327 verified Python examples (Python Dataset) and difficulties in improving mathematical performance of Llama3, even with math-centric datasets, were discussed, highlighting dataset challenges in AI training.

AI Training Platform Enhancements and Needs: There was a call to refine Axolotl's documentation, particularly regarding merging model weights and model inference, accessible at Axolotl Community Docs. Additionally, issues with gradient clipping configurations were addressed, and Phorm offered insights into customizing TrainingArguments for gradient clipping and the chatbot prompt.

Latent Space Discord

- Gary Rocks the Ableton: A new work-in-progress Python project, gary4live, integrates Python continuations with Ableton for live music performance, inviting contributors and peer review from the community.

- Suno Scales Up Music Production: Discussion about using Suno for music generation included comparisons with other setups like Musicgen, with an emphasis on Suno's tokenization process for audio and exploration on whether these models can automatically produce sheet music.

- Token Talk: Engaging deeply with music model token structures, participants navigated the token length and composition in audio synthesis, referencing but not detailing specific architectural designs from academic papers.

- Breaking Barriers in Audio Synthesis: The potential of direct audio integration into multimodal models was discussed, focusing on real-time replacement of audio channels and the importance of direct audio for enabling omnimodal functionality.

- The Business Beat of Stable Audio: Commercial use and licensing questions surfaced regarding stable audio model outputs, with a specific eye towards their real-time application in live performances and the possible implications for industries.

AI Stack Devs (Yoko Li) Discord

- Local Hardware Tackles AI: Users can now use llama-farm to run Ollama locally on old laptops for processing LLM tasks without exposing them to the public internet. This was also linked to a GitHub repository with more details on its implementation (llama-farm chat on GitHub).

- AI Cloud Independence Achieved: Discussions indicated that using Faraday allows users to keep downloaded characters and models indefinitely, and running tools locally can circumvent cloud subscription fees, given a 6 GB VRAM setup. Local execution requires no subscription, acting as a potential budget-friendly option for tool usage.

- Ubuntu Users Regain Control: Installation problems with

convex-local-backendon Ubuntu 18 were solved by downgrading to Node version 18.17.0 and updating Ubuntu as per a GitHub issue. Dockerization was proposed as a potential solution to simplify future setups.

- Simulated Realities Attract Spotlight: An AI Simulated Party was featured at Mission Control in San Francisco, blending real and digital experiences. Additionally, the AI-Westworld simulation entered public beta, and a web app called AI Town Player was launched for replaying AI Town scenarios by importing sqlite files.

- Clipboards and Beats Converge: There was a call for collaboration to create a simulation involving hip-hop artists Kendrick and Drake. It demonstrates an interest in combining AI development with cultural commentary.

LAION Discord

CLIP vs. T5: The Model Smackdown: There's a spirited discussion about integrating CLIP and T5 encoders for training AI models; while the use of both encoders shows promise, some argue using T5 alone due to prompt adherence issues with CLIP.

Are Smaller Models the Big Deal?: In the realm of model size, enhancement of smaller models is being prioritized, as evidenced by the focus on the 400M DeepFloyd, with technical conversations touching upon the challenges in scaling up to 8B models.

Releasing SD3: Keep 'Em Waiting or Drop 'Em All?: The community's reaction to Stability AI's hinted gradual rollout of SD3 models—from small to large—was a mix of skepticism and eagerness, reflecting on whether this release strategy meets the community's anticipation.

LLama Embeds Strut into the Spotlight: Debates over the efficacy of using LLama embeds in model training emerged, with some members advocating for their use over T5 embeds, and sharing resources like the LaVi-Bridge to illustrate modern applications.

From Concept to Application: A Data Debate: The conversation dove into why synthetic datasets are favored in certain research over real-world datasets such as MNIST and ImageNet, alluding to the value of interpretability in AI methods and sharing resources like the StoryDiffusion website for insights.

LangChain AI Discord

Code Execution Finds an AI Buddy: Enthusiastic dialogues emerged around using AI to execute generated code, highlighting methods like Open Interpreter and developing custom tools such as CLITOOL. These discussions are pivotal for those crafting more interactive and automated systems.

Langchain Learns a New Language: The Langchain library's expansion into the Java ecosystem via langchain4j marks a crucial step for Java developers keen to harness AI assistant capabilities.

Langchain Gets a High-Performance Polish: The coupling of LangChain and Dragonfly has yielded impressive enhancements in chatbot context management, as depicted in a blog post detailing these advancements.

Decentralized Search Innovations: The community is buzzing with the development of a decentralized search feature for LangChain, promising to boost search functionalities with a user-owned index network. The work is showcased in a recent tweet.

Singularity Spaces with Llama & LangGraph: A contributor shared a video on Retrieval-Augmented Generation techniques without a vectorstore using Llama 3, while another enriches the dialogue with a comparison between LangGraph and LangChain Core in the execution realm.

tinygrad (George Hotz) Discord

Clojure Captures Engineer's Interest in Symbolic Programming: Engineers are discussing the ease of using Clojure for symbolic programming compared to Python, suggesting the use of bounties to ramp up on tinygrad, and debating the merits of Julia over Clojure in the ML/AI space.

tinygrad's UOps Puzzle Engineers: A call for proposals was made to reformat tinygrad's textual UOps representation to be more understandable, potentially resembling llvm IR, alongside an explanation that these UOps are indeed a form of Static Single Assignment (SSA).

Optimizing tinygrad for Qualcomm's GPU Playground: It was highlighted that tinygrad runs efficiently on Qualcomm GPUs by utilizing textures and pixel shaders, with the caveat that activating DSP support might complicate the process.

Single-threaded CPU Story in tinygrad: Confirmation from George Hotz himself that tinygrad operates single-threaded on the CPU side, with no threads bumping into each other.

Understanding tinygrad's Tensor Tango: A user's curiosity about the matmul function and transposing tensors spurred explanations, and another user shared their written breakdown on computing symbolic mean within tinygrad.

Mozilla AI Discord

- Json\Schema Skips a Beat with llamafile: A clash between

json_schemaand llamafile 0.8.1 prompted discussions, with a workaround using--unsecuresuggested and hints of a permanent fix in upcoming versions.

- In Search of Leaner Machine Learning Models: The community exchanged ideas on lightweight AI models, where phi 3 mini was deemed too heavy and Rocket-3B was suggested for its agility on low-resource systems.

- Clubbing Caches for Llamafile: It was confirmed that llamafile can indeed utilize models from the ollama cache, potentially streamlining operations by avoiding repeated downloads, providing that GGUF file compatibility is maintained.

- AutoGPT Goes Hand-in-Hand with Llamafile: An integration initiative was shared, highlighting a draft pull request to meld llamafile with AutoGPT; setup instructions were posted at AutoGPT/llamafile-integration, pending maintainer feedback.

- Choosing the Right Local Models for Llamafile: Real-time problem-solving was spotlighted as a user managed to get llamafile up and running with locally cached .gguf files after distinguishing between actual model files and metadata.

DiscoResearch Discord

Mixtral Woes Spiral: The mixtral transformers hit a snag due to bugs impacting finetune performance; references include Twitter, Gist, and a closed GitHub PR. There's ambiguity whether the bug affects only training or generation as well, necessitating further scrutiny.

Quantized LLaMA-3 Takes a Hit: A Reddit post reveals quantization deteriorates LLaMA-3's performance notably compared to LLaMA-2, with a potentially enlightening arXiv study available. Meta's scaling strategy may account for LLaMA-3's precision reduction woes, while GitHub PR #6936 and Issue #7088 discuss potential fixes.

Meet the New Model on the Block: Conversations indicate 8x22b Mistral is being leveraged for current engineering tasks, though no performance metrics or usage specifics were disclosed.

Interconnects (Nathan Lambert) Discord

- AI Voices: So Real It's Unreal: The Atlantic published an article discussing how ElevenLabs has created advanced AI voice cloning technology. Users expressed both fascinated and wary reactions to ElevenLabs' capabilities, with one showing disdain towards paywalls that limit full access to such content.

- Prometheus 2: Judging the Judges: A recent arXiv publication introduced Prometheus 2, a language model evaluator aligned with human and GPT-4 judgments, targeting transparency and affordability issues in proprietary language models. Although the paper notably omitted RewardBench scores where the model underperformed, there is keen interest in the community to test Prometheus 2's evaluation prowess.

- Enigma of Classical RL: Conversations in the rl channel featured curiosity about unexplored areas in classical reinforcement learning. Discussion put a spotlight on the importance of the value function in approaches like PPO and DPO, and emphasized its critical role in planning within RL systems.

- The Mystery of John's Ambiguity: In the random channel, members shared cryptic concerns about repeated success and joked about a certain "john's" ambiguous response to a proposal. The relevance and context behind these statements remained unclear.

LLM Perf Enthusiasts AI Discord

- Anthropic's Prompt Generator Makes Waves: Engineers discussed a new prompt generator tool available in the Anthropic console, which may be useful for those seeking efficient ways to generate prompts.

- Politeness Mode Test Run: The tool's capability to rephrase sentences politely was tested, producing results that were well-received by members.

- Deciphering the System's Mechanics: Efforts are underway to understand how the tool's system prompt operates, with a focus on unraveling the secrets of the k-shot examples embedded within.

- Extracting the Long Game: There have been challenges in extracting complete data from the tool, with reports of system prompts being truncated, particularly during the extended Socratic math tutor example.

- Leak the Secrets: A commitment was made to share the full system prompt with the community once it has been successfully extracted in its entirety, which could be a resource for those interested in prompt engineering.

Skunkworks AI Discord

- Fake It 'til You Make It: A member is on the lookout for a dataset of fabricated data aimed at testing fine-tuning on Llama 3 and Phi3 models, implying that authenticity is not a requirement for their experiments.

- Accelerating AI with Fast Compute: Fast compute grants are up for grabs for Skunkworks AI projects that show promise, with further details available in a recent tweet.

- Educational AI Content on YouTube: An AI-related educational YouTube video was shared, potentially adding value to the community’s ongoing technical discussions.

Datasette - LLM (@SimonW) Discord

- LLM Turns Error Logs into Enlightenment: An approach that utilizes LLM to swiftly summarize errors after running a

conda activatecommand has proven effective, with suggestions to integrate the method into the LLM README documentation. - Bash Magic Meets LLM Insights: A newly crafted

llm-errbash function is on the table, designed to feed command outputs directly into LLM for quick error diagnosis, further streamlining error troubleshooting for engineers.

Cohere Discord

- Calling AI Experts in Austin: A friendly hello was extended to AI professionals located in Austin, TX.

- Finexov's Funding Frontier: Vivien introduced Finexov, an AI platform aimed at simplifying the identification of R&D funding opportunities, already active with initial partnerships and support from the Founder Institute (fi.co).

- Tech Leadership Hunt for Finexov: Seeking a CTO co-founder with a strong ML background to pilot Finexov and gear up for the challenges of team-building and fundraising; preference for candidates based in Europe or Middle East, French speakers are a bonus.

- Dubai Meetup on the Horizon: Vivien signals a potential meetup in Dubai this June, inviting potential collaborators to discuss opportunities with Finexov.

AI21 Labs (Jamba) Discord

- AI21 Labs Pushes the Envelope: AI21 Labs indicated their ambition to expand their technology further. The staff encouraged community members to share their use cases and insights through direct messages.

Alignment Lab AI Discord

- Get Your Compute Loading: Interested parties have a chance to gain fast compute grants; a tweet shared by a member calls for applications or nominations to award compute resources, beneficial for AI research and projects. Check out the tweet for details.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (791 messages🔥🔥🔥):

- Discussions on Llama3 finetuning and GGUF conversion: Users have been experimenting with finetuning Llama3 using Unsloth, and converting the finetuned models to GGUF with various outcomes. Some reported issues with infinite generation post-conversion and were directed to keep tabs on a GitHub issue highlighting problems with models converted to GGUF.

- Inquiries on full finetuning with Unsloth: A user was curious about the possibility of full finetuning (not just LoRA) using Unsloth, leading to discussions about possible VRAM savings and performance. Unsloth community members provided insights into how to potentially achieve this, referencing a GitHub feature request.

- Investigation into performance of heavily quantized models: A user questioned the effectiveness of heavy quantization like 4 Bit Q2_K for a 7B model, with the recommendation to possibly use Phi-3 instead for low resource applications, underscoring the importance of choosing the right quant level for model performance.

- Sharing of resources and troubleshooting Unsloth: Users shared their experiences and offered advice on cloud providers like Tensordock for running Unsloth models, the usage of Unsloth Studio, as well as general tips on dealing with finetune datasets, quantization effects, and the use of different inference engines.

- Uncertainties about fine-tuning low-resource languages with LLMS: A user considering fine-tuning with LLMs for low-resource languages sought advice on the efficacy of LLMs versus models like T5. Community discussion highlighted the potential of models like Phi-3 for such tasks, with contributions addressing how to handle different aspects of the fine-tuning process.

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Paper page - A Closer Look at the Limitations of Instruction Tuning: no description found

- GGUF My Repo - a Hugging Face Space by ggml-org: no description found

- unsloth/Phi-3-mini-4k-instruct · Hugging Face: no description found

- LLM Model VRAM Calculator - a Hugging Face Space by NyxKrage: no description found

- Google Colab: no description found

- unsloth/Phi-3-mini-4k-instruct-bnb-4bit · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- gradientai/Llama-3-8B-Instruct-Gradient-1048k · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

- unsloth (Unsloth AI): no description found

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- unsloth (Unsloth AI): no description found

- GitHub - IBM/unitxt: 🦄 Unitxt: a python library for getting data fired up and set for training and evaluation: 🦄 Unitxt: a python library for getting data fired up and set for training and evaluation - IBM/unitxt

- Grizzly: Grizzly has 9 repositories available. Follow their code on GitHub.

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Google Colab: no description found

- Cerebras Systems Unveils World’s Fastest AI Chip with Whopping 4 Trillion Transistors - Cerebras: Third Generation 5nm Wafer Scale Engine (WSE-3) Powers Industry’s Most Scalable AI Supercomputers, Up To 256 exaFLOPs via 2048 Nodes

- Padding and truncation: no description found

- gradientai/Llama-3-8B-Instruct-262k · Hugging Face: no description found

- How to Fine Tune Llama 3 for Better Instruction Following?: 🚀 In today's video, I'm thrilled to guide you through the intricate process of fine-tuning the LLaMA 3 model for optimal instruction following! From setting...

- Reddit - Dive into anything: no description found

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- no title found: no description found

Unsloth AI (Daniel Han) ▷ #random (107 messages🔥🔥):

- Graphic Content Alert with LLaMA3: A user reported inappropriate and graphic content generated by LLaMa3 when prompted with an obscene query, questioning the level of censorship in the model. Another user found similar results, even when using system prompts to prevent such responses.

- Fancy New Roles for Supporters: In a brief confusion about support roles, a user learned that there is a new "regulars" role, and private supporter channels are available for those who become members or donate at least $10.

- RTX 4090 Gets a Suprim(ary) Deal: A new graphics card deals discussion highlighted the MSi GeForce RTX 4090 SUPRIM LIQUID X on sale for $1549, with a user urging others to take advantage of the offer. The card's compact size compared to other models sparked further debate.

- Kendrick vs. Drake Dynamic: Users discussed the recent developments in the Kendrick Lamar and Drake beef, indicating that Kendrick's track "Meet the Grahams" was released shortly after Drake's "Family Ties" causing a significant stir in the rap world.

- Unsloth.ai on YouTube: A conversation thread involved a user congratulating another on presenting to the PyTorch team, directing them to a YouTube video from Unsloth.ai, hinting at further updates to be posted soon.

- Papers with Code - X-LoRA: Mixture of Low-Rank Adapter Experts, a Flexible Framework for Large Language Models with Applications in Protein Mechanics and Molecular Design: Implemented in 3 code libraries.

- Reddit - Dive into anything: no description found

- Unsloth.ai: Easily finetune & train LLMs: no description found

- Saving Memory Using Padding-Free Transformer Layers during Finetuning: no description found

Unsloth AI (Daniel Han) ▷ #help (1215 messages🔥🔥🔥):

- Llama3 GGUF Conversion Issues Pinned Down: Users found that GGUF conversion for Llama3 models using llama.cpp fails, resulting in altered or lost training data with no clear pattern of loss, regardless of using FP16 or FP32 conversion methods. These abnormalities occur even in F32, proving the issue is not tied to precision loss.

- Possible Regex Mismatch for New Lines: The problem may link to a regex library issue where

\nsequences are improperly tokenized, potentially due to different regex library behaviors. The suggested fix modifies the tokenizer.json regex pattern for more compatibility across regex libraries, but concerns remain about the impact on different '\n' lengths. - Issues Exist Beyond GGUF: Similar inference issues were found with AWQ in applications like ooba, pointing towards tokenizer or tokenization issues beyond just GGUF formatting. Unsloth's inference function seems to perform well, hinting at problems possibly specific to llama.cpp.

- Multiple Platforms Impacted: Platforms dependent on llama.cpp like ollama, and lm studio also face related bugs, with tokenization problems reported across different interfaces and potentially affecting a wide range of users and applications.

- Community Cooperation Towards Solutions: User contributions, including regex modifications, are being discussed and tested to provide temporary fixes for the gguf conversion troubles, with a focus on narrowing down whether issues are specific to the Unsloth fine-tuning process or the llama.cpp tokenization method.

- Orenguteng/Llama-3-8B-LexiFun-Uncensored-V1-GGUF · Hugging Face: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- microsoft/Phi-3-mini-128k-instruct · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Load: no description found

- llama.cpp/examples/export-lora at finetune-lora · xaedes/llama.cpp: Port of Facebook's LLaMA model in C/C++. Contribute to xaedes/llama.cpp development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- AutoAWQ/examples/generate.py at main · casper-hansen/AutoAWQ: AutoAWQ implements the AWQ algorithm for 4-bit quantization with a 2x speedup during inference. Documentation: - casper-hansen/AutoAWQ

- creating gguf model from lora adapter · ggerganov/llama.cpp · Discussion #5360: I have a ggml adapter model created by convert-lora-to-ggml.py (ggml-adapter-model.bin). Now my doubt is how to create the complete gguf model out of these? I have seen using ./main -m models/llama...

- ScottMcNaught - Overview: ScottMcNaught has one repository available. Follow their code on GitHub.

- llama.cpp failing by bet0x · Pull Request #371 · unslothai/unsloth: llama.cpp is failing to generate quantize versions for the trained models. Error: You might have to compile llama.cpp yourself, then run this again. You do not need to close this Python program. Ru...

- Tweet from bartowski (@bartowski1182): After days of compute (since I had to start over) it's finally up! Llama 3 70B GGUF with tokenizer fix :) https://huggingface.co/bartowski/Meta-Llama-3-70B-Instruct-GGUF In other news, just orde...

- llama : fix BPE pre-tokenization (#6920) · ggerganov/llama.cpp@f4ab2a4: * merged the changes from deepseeker models to main branch * Moved regex patterns to unicode.cpp and updated unicode.h * Moved header files * Resolved issues * added and refactored unic...

- GGUF breaks - llama-3 · Issue #430 · unslothai/unsloth: Findings from ggerganov/llama.cpp#7062 and Discord chats: Notebook for repro: https://colab.research.google.com/drive/1djwQGbEJtUEZo_OuqzN_JF6xSOUKhm4q?usp=sharing Unsloth + float16 + QLoRA = WORKS...

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- readme : add note that LLaMA 3 is not supported with convert.py (#7065) · ggerganov/llama.cpp@ca36326: no description found

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- I got unsloth running in native windows. · Issue #210 · unslothai/unsloth: I got unsloth running in native windows, (no wsl). You need visual studio 2022 c++ compiler, triton, and deepspeed. I have a full tutorial on installing it, I would write it all here but I’m on mob...

- Cannot convert llama3 8b model to gguf · Issue #7021 · ggerganov/llama.cpp: Please include information about your system, the steps to reproduce the bug, and the version of llama.cpp that you are using. If possible, please provide a minimal code example that reproduces the...

- GitHub - ggerganov/llama.cpp at gg/bpe-preprocess: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- llama3 custom regex split by jaime-m-p · Pull Request #6965 · ggerganov/llama.cpp: Implementation of unicode_regex_split_custom_llama3().

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- llama.cpp/convert.py at master · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

Unsloth AI (Daniel Han) ▷ #showcase (80 messages🔥🔥):

- Proposal for Model Size Discussion Channel: A user suggested creating a separate channel on Unsloth Discord for discussing the successes and strategies in deploying large language models (LLMs). The conversation emphasized the value of sharing experiences to enhance collective learning.

- Push for Llama-3-8B-Based Projects: RomboDawg announced the release of a new coding model that enhances Llama-3-8B-Instruct and competes with Llama-3-70B-Instruct performance. The model can be accessed here, and excitement for a version 2, promised to be available in about three days, was expressed.

- Knowledge Graph LLM Variant Released: M.chimiste has developed a Llama-3 variant to assist in knowledge graph construction, named LLaMA-3-8B-RDF-Experiment, emphasizing its utility in generating knowledge graph triples and potential for genomic data training. The model can be found at Hugging Face's model repository.

- On the Horizon of Creptographic Collaborations: In an extended discussion, one user is seeking advice and collaborative discussion about building a system that could potentially integrate cryptographic elements into blockchain technologies, expressing interest in learning from the community.

- AI-Enhanced Web Development Tools Theme: Oncord is showcased as providing a modern web development platform with built-in marketing and commerce tools, and its developer is integrating Unsloth AI for LLM fine-tuning to provide code completions and potentially power an AI-driven redesign feature. More about Oncord can be found here.

- no title found: no description found

- M-Chimiste/Llama-3-8B-RDF-Experiment · Hugging Face: no description found

- Dog Awkward GIF - Dog Awkward Awkward dog - Discover & Share GIFs: Click to view the GIF

- The miR-200 family is increased in dysplastic lesions in ulcerative colitis patients - PubMed: UC-Dysplasia is linked to altered miRNA expression in the mucosa and elevated miR-200b-3p levels.

- Tweet from RomboDawg (@dudeman6790): Announcing Codellama-3-8B A Qalore Finetune of llama-3-8b-instruct on the full OpenCodeInterpreter dataset. It codes far better than the base instruct model, and iterated on code extremely well. Forgi...

- Llama-3-8B-Instruct-Coder: no description found

- Oncord - Digital Marketing Software: Website, email marketing, and ecommerce in one intuitive software platform. Oncord hosted CMS makes it simple.

- no title found: no description found

Unsloth AI (Daniel Han) ▷ #suggestions (3 messages):

- Fine-tuning LVLM Desired: A member expressed a wish for a generalised way of fine-tuning LVLM, indicating ongoing interest in customization and optimization of language-vision models.

- MoonDream Fine-tuning Interest: Another member recommended support for Moondream, a tiny vision-language model which currently only finetunes the phi 1.5 text model. They provided a GitHub notebook as a resource: moondream/notebooks/Finetuning.ipynb on GitHub.

Link mentioned: moondream/notebooks/Finetuning.ipynb at main · vikhyat/moondream: tiny vision language model. Contribute to vikhyat/moondream development by creating an account on GitHub.

OpenAI ▷ #ai-discussions (854 messages🔥🔥🔥):

- A New Challenger Approaches Perplexity: Users are discussing the benefits of Perplexity AI, particularly its new Pages feature which allows for creation of comprehensive reports.

- AI and Self-Learning: Some discuss the possibility of AI engines like OpenAI's GPT to teach programming basics to users and help in creating code, espousing the idea of self-sufficient AIs with the capacity for self-improvement.

- The Evolving Definition of AGI: The community is engaging in a debate about the current state of AI and its proximity to true AGI (Artificial General Intelligence), with varying opinions on whether modern AI like ChatGPT qualifies as early AGI.

- Appetite for AI-Generated Music: Users express interest in AI-generated music, referencing services like Udio and discussing whether OpenAI should release its own AI music service.

- AI as a Tool for Expansion: The conversation explores how AI currently augments human productivity and the potential future where AI might take over mundane and complex tasks, also reflecting on how this might disrupt our socio-economic models.

- Google Scholar Citations: no description found

- Dirty Docks Shawty GIF - Dirty Docks Shawty Triflin - Discover & Share GIFs: Click to view the GIF

- ChatGPT listed as author on research papers: many scientists disapprove: At least four articles credit the AI tool as a co-author, as publishers scramble to regulate its use.

- GitHub - catppuccin/catppuccin: 😸 Soothing pastel theme for the high-spirited!: 😸 Soothing pastel theme for the high-spirited! Contribute to catppuccin/catppuccin development by creating an account on GitHub.

OpenAI ▷ #gpt-4-discussions (40 messages🔥):

- Slow and Steady Doesn't Win the Race: Members are reporting significant increases in latency with GPT-4 Turbo, with some experiencing response times 5-10x slower than usual.

- The Cap on Conversation: There's confusion around the message cap for GPT-4, as users report different timeout thresholds. Some state a cap between 25 and 50 messages, while others suspect dynamic adjustments during high usage periods.

- OpenAI Platform's UX Blues: Complaints have emerged about the user experience on OpenAI's new projects feature, with issues in project management, deletion, and navigability; also noting an absence of activity tracking per project.

- Will There Be a GPT-5? Users are skeptical about the release of GPT-5, discussing diminishing returns and the likelihood that it would be "2x the cost for 1.5x better GPT-4".

- The Hunt for Knowledge Prioritization: Users debate strategies to make ChatGPT search its knowledge base first before responding, touching on concepts like RAG (Retrieval-Augmented Generation) and the vectorization of knowledge to assist in providing contextually relevant answers.

OpenAI ▷ #prompt-engineering (30 messages🔥):

- Fine-tuning GPT for Questioning: A member is seeking advice on how to fine-tune a model to ask questions instead of giving answers, mentioning previous struggles with a similar project. They note difficulty finding appropriate user query and assistant query pairs and are considering using single tuple chats as samples for fine-tuning.

- The Resilient Onboarding Bot: Member leveloper mentions a successfully functioning bot designed to ask questions during an onboarding process, which remains untricked by user attempts despite being on a large server.

- Avoiding Negative Prompts: majestic_axolotl_19289 suggests that using negative prompts can backfire, as they tend to influence the outcome in unintended ways. Other members discuss whether negative prompts can be effective, citing the "Contrastive Chain of Thoughts" paper and personal experiences.

- Book Recommendation for Prompt Engineering: Member sephyfox_ recommends "Wordplay: Your Guide to Using Artificial Intelligence for Writing Software" by Teddy Dicus Murphy, finding it helpful for prompt engineering.

- Request for Improving GPT-4-TURBO Prompt for Product Info Extraction: Member stevenli_36050 seeks assistance in refining a prompt to extract product information, names, and prices from PDF supermarket brochures and categorize them accordingly.

- Discussing Logit Bias in Token Suppression: The user bambooshoots shares a link (https://help.openai.com/en/articles/5247780-using-logit-bias-to-alter-token-probability-with-the-openai-api) about manipulating probabilities using logit bias to suppress certain tokens in the OpenAI API.

OpenAI ▷ #api-discussions (30 messages🔥):

- In Search of the Questioning Bot: A member discussed the challenge of fine-tuning GPT for generating questions in conversations rather than providing answers, highlighting the difficulty in defining the structure of user queries and bot responses in such scenarios.

- Contrastive Chain of Thought (CCoT) Discourse: There was a debate on the use of negative prompts in prompting strategies. The conversation mentioned a paper on "Contrastive Chain of Thoughts" and questioned the effectiveness of using CCoT in longer dialogs, prompting an invitation to read further on the AIEmpower blog.

- Prompt Engineering Resources and Techniques Shared: Users shared resources about prompt engineering, including a recommendation for the book "Wordplay: Your Guide to Using Artificial Intelligence for Writing Software" by Teddy Dicus Murry and a LinkedIn learning course by Ronnie Sheer.

- Extracting Supermarket Product Data: A user sought advice on improving GPT-4-TURBO prompts for identifying product names and prices from PDF supermarket brochures, seeking to output the results in CSV format.

- Logit Bias for Token Probability Manipulation: A member referenced the logit bias as a method for manipulating token probabilities in prompts with a link to OpenAI's official documentation: Using logit bias to alter token probability with the OpenAI API.

Stability.ai (Stable Diffusion) ▷ #general-chat (919 messages🔥🔥🔥):

- GPU Compatibility Queries: Users are asking for assistance with Stable Diffusion installations that can't access GPU resources, mentioning errors like "RuntimeError: Torch is not able to use GPU".

- Stability.ai and SD3 Speculation: Conversations revolve around the anticipated release of Stable Diffusion 3, with many expressing doubt it will come out, while others discuss the impact if it doesn't.

- Finetuning Tutorials Seekers: Members express frustration over the lack of detailed tutorials for LoRA/DreamBooth/fine-tuning models, stating that available resources are outdated or not comprehensive.

- Request for Help on Generating Unique Faces: A query was made on how to train a unique realistic-looking person using AI, either through training LoRa on multiple faces or generating random ones and then training the LoRa on that result.

- Discussion on the 'Open Source' Nature of Stable Diffusion: Some users discuss the barriers to truly "open-source" AI art generation, sharing concerns about future paywalled access to high-quality model checkpoints and training details.

- EMO: EMO: Emote Portrait Alive - Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions

- Highlight: Generate photos with friends: Highlight is an app to daydream with friends by genering images with them.

- Stable Diffusion 3: Research Paper — Stability AI: Following our announcement of the early preview of Stable Diffusion 3, today we are publishing the research paper which outlines the technical details of our upcoming model release, and invite you to ...

- Fireworks - Generative AI For Product Innovation!: Use state-of-the-art, open-source LLMs and image models at blazing fast speed, or fine-tune and deploy your own at no additional cost with Fireworks.ai!

- Login • Instagram: no description found

- How to Install Stable Diffusion - automatic1111: Part 2: How to Use Stable Diffusion https://youtu.be/nJlHJZo66UAAutomatic1111 https://github.com/AUTOMATIC1111/stable-diffusion-webuiInstall Python https://w...

- High-Similarity Face Swapping: ControlNet IP-Adapter + Instant-ID Combo: Discover the art of high-similarity face swapping using WebUI Forge, IP-Adapter, and Instant-ID for seamless, realistic results.🖹 Article Tutorial:- https:/...

- GitHub - philz1337x/clarity-upscaler: Clarity AI | AI Image Upscaler & Enhancer - free and open-source Magnific Alternative: Clarity AI | AI Image Upscaler & Enhancer - free and open-source Magnific Alternative - philz1337x/clarity-upscaler

- Aether Light - LoRA for SDXL - v1.0 | Stable Diffusion LoRA | Civitai: For business inquiries, commercial licensing, custom models, and consultation, contact me at joachim@rundiffusion.com . Introducing Aether Light, o...

- GitHub - crystian/ComfyUI-Crystools: A powerful set of tools for ComfyUI: A powerful set of tools for ComfyUI. Contribute to crystian/ComfyUI-Crystools development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

- LORA training EXPLAINED for beginners: LORA training guide/tutorial so you can understand how to use the important parameters on KohyaSS.Train in minutes with Dreamlook.AI: https://dreamlook.ai/?...

- THE OTHER LoRA TRAINING RENTRY: Stable Diffusion LoRA training science and notes By yours truly, The Other LoRA Rentry Guy. This is not a how to install guide, it is a guide about how to improve your results, describe what options d...

Nous Research AI ▷ #ctx-length-research (1 messages):

I apologize for the confusion, but as an AI, I do not have direct access to Discord servers, channels, or messages. Thus, I am unable to summarize the content from the Nous Research AI Discord channel named ctx-length-research. If you can provide the text from specific Discord messages that you'd like to be summarized, I'd be happy to assist you.

Nous Research AI ▷ #off-topic (20 messages🔥):

- Color Evolution on Camera: A member humorously noted that comparing images of Saint Petersburg, Ligovsky Avenue at Vosstaniya Square from 2002 and 2024 shows that cameras have gotten more color accurate.

- Culinary Flavor Fusion: A simple mention was made of Okroshka on kvas with mayonnaise accompanied by rye bread, possibly suggesting a discussion or reference to traditional Russian cuisine.

- Inquiry About SVM: A member asked, "What is SVM?" to which another member quickly clarified that SVM stands for Support Vector Machine.

- Improve the UX for FreeGPT.today: A member requested feedback on the user experience for their site FreeGPT.today, inviting others to sign up, chat, and test a PDF upload feature that generates graphs. Several suggestions for improvement were offered, including adding Google authentication, changing the default login landing page to "chat now," improving UI elements, and implementing a progress bar for file uploads.

- Beware of Spam Links: A mention was made that a Discord invite link shared in the chat was actually spam and led to the sharer getting banned.

- Recipic Demo: Ever felt confused about what to make for dinner or lunch? What if there was a website where you could just upload what ingredients you have and get recipes ...

- FreeGPT.today - The Most Powerful AI Language Model for Free!: Access the most powerful AI language model for free. No credit card required.

Nous Research AI ▷ #interesting-links (47 messages🔥):

- Exploring Taskmaster with LLM: A code implementation of the show Taskmaster using structured data management, a state machine, and the OpenAI API was shared. The code is available on GitHub.

- Evaluating LLM Responses: Another GitHub repository was introduced featuring Prometheus, a tool for evaluating LLM responses, available at prometheus-eval.