[AINews] DeepSeek R1: o1-level open weights model and a simple recipe for upgrading 1.5B models to Sonnet/4o level

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

GRPO is all you need.

AI News for 1/17/2025-1/20/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 8019 messages) for you. Estimated reading time saved (at 200wpm): 910 minutes. You can now tag @smol_ai for AINews discussions!

We knew an open-weights DeepSeek release would come at some point. DeepSeek is already well known for its papers, and V3 was the top open model in the world. Even so, none of our AI sources could take their eyes off the DeepSeek R1 release today.

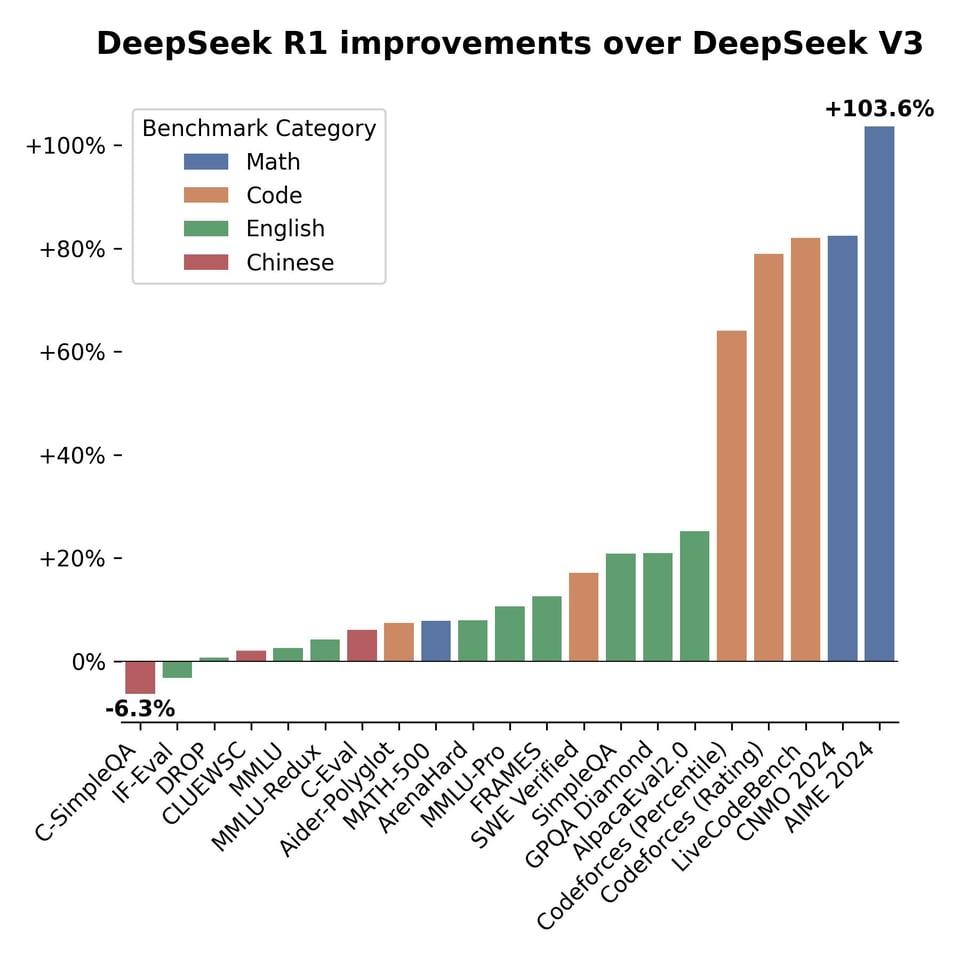

R1's performance turned out to be leaps and bounds above that of DeepSeek V3 from literally 3 weeks ago.

When we say "R1", it's ambiguous. DeepSeek actually dropped 8 R1 models - 2 "full" models, and 6 distillations on open models:

- from Qwen 2.5: finetuned with 800k samples curated with DeepSeek-R1, in 1.5B, 7B, 14B, and 32B

- from Llama 3.1 8B Base: DeepSeek-R1-Distill-Llama-8B

- from Llama3.3-70B-Instruct: DeepSeek-R1-Distill-Llama-70B

- and DeepSeek-R1 and DeepSeek-R1-Zero, the full-size, 671B MoE models similar to DeepSeek V3. Surprisingly, MIT licensed rather than custom licenses, including explicit OK for finetuning and distillation

Other notables from the launch:

- Pricing (per million tokens): 14 cents input (cache hit), 55 cents input (cache miss), and 219 cents output. This compares to o1 at 750 cents input (cache hit), 1500 cents input (cache miss), 6000 cents output. That's 27x-50x cheaper than o1.

- solves every problem from the o1 blogpost. every one.

- can run the distilled models on ollama

- can write manim code really well
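The price gap quoted in the list above is easy to check by hand. The short sketch below only uses the per-million-token figures from this issue; the dictionary keys and the `price_ratios` helper are illustrative names, not part of any API. On these numbers the ratios come out at roughly 27x for cache-miss input and for output, and roughly 54x for cache-hit input.

```python
# Prices in US cents per million tokens, copied from the list above.
R1_CENTS = {"input_cache_hit": 14, "input_cache_miss": 55, "output": 219}
O1_CENTS = {"input_cache_hit": 750, "input_cache_miss": 1500, "output": 6000}


def price_ratios(cheap, expensive):
    """Return how many times more expensive each tier is, rounded to 1 decimal."""
    return {tier: round(expensive[tier] / cheap[tier], 1) for tier in cheap}


ratios = price_ratios(R1_CENTS, O1_CENTS)
for tier, ratio in ratios.items():
    print(f"{tier}: o1 costs {ratio}x as much as R1")
```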

Surprises from the paper:

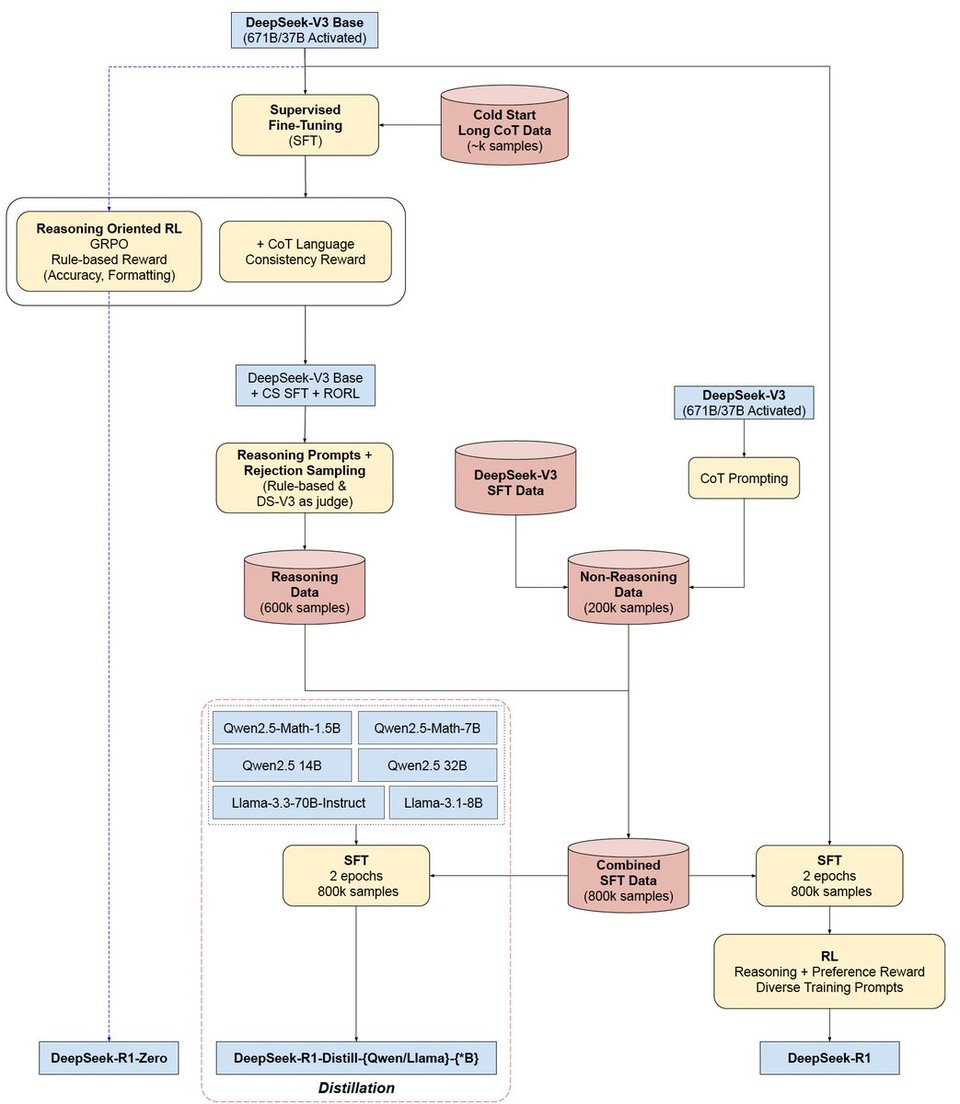

- The process was:

- V3 Base → R1 Zero (using GRPO - aka reward for correctness and style outcomes - no fancy PRM/MCTS/RMs)

- R1 Zero → R1 Finetuned Cold Start (distill long CoT samples from R1 Zero)

- R1 Cold Start → R1 Reasoner with RL (focus on language consistency - to produce readable reasoning)

- R1 Reasoner → R1 Finetuned-Reasoner (generate 600k samples: use multi-response sampling and keep only correct samples, checked with the previous rules and with V3 as a judge; filter out mixed languages, long paragraphs, and code)

- R1 Finetuned-Reasoner → R1 Aligned (balance reasoning with helpfulness and harmlessness using GRPO)

- Visualized:

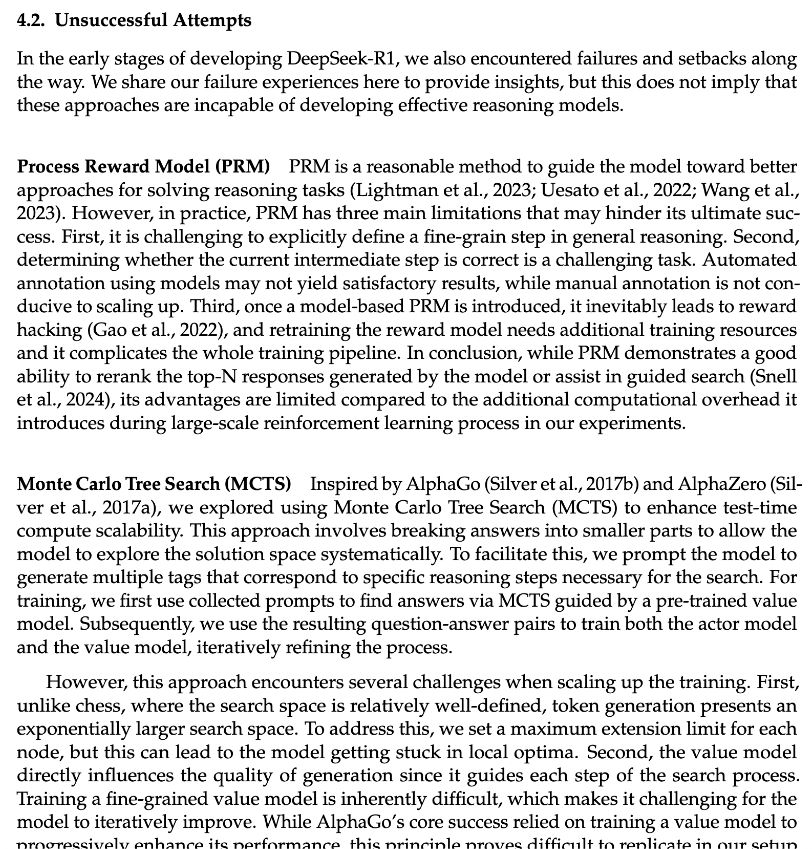

- Supervised data, Process reward models, and MCTS did -NOT- work

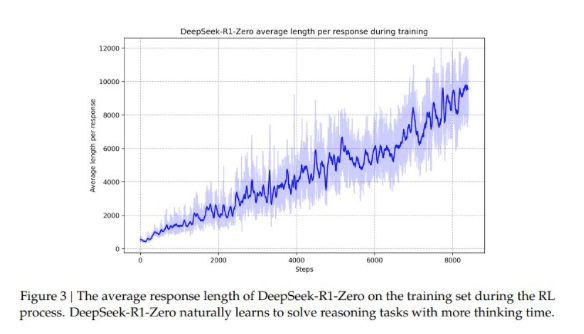

- but they do use GRPO from DeepSeekMath (challenged by the DPO author) as "the RL framework to improve model performance in reasoning" where reasoning (like in-context back-tracking) "naturally emerged" after "thousands of RL steps" - not quite the famous o1 scaling plot, but a close cousin.

- using "aha moments" as pivot tokens, often mixing languages in a reader-unfriendly way

- R1 began training less than a month after the o1 announcement

- R1 distillations were remarkably effective, giving us this insane quote: "DeepSeek-R1-Distill-Qwen-1.5B outperforms GPT-4o and Claude-3.5-Sonnet on math benchmarks with 28.9% on AIME and 83.9% on MATH.", and this is without even pushing the distillation to its limits.

- This is more effective than just RL-tuning a small model: "reasoning patterns of larger models can be distilled into smaller models, resulting in better performance compared to the reasoning patterns discovered through RL on small models." aka "total SFT victory"
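The "multi-response sampling and only keep correct samples" step in the process above is a form of rejection sampling. Here is a minimal sketch of that idea, not DeepSeek's actual pipeline: `sample_fn` stands in for a model call, `stub_sampler` is a canned fake so the example runs offline, and the `\boxed{...}` answer convention is an assumption made for illustration. The V3-as-judge filtering is not modeled.

```python
import re
from typing import Callable, List, Optional


def extract_final_answer(response: str) -> Optional[str]:
    """Return the content of the last boxed answer in a response, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", response)
    return matches[-1].strip() if matches else None


def rejection_sample(
    prompt: str,
    reference: str,
    sample_fn: Callable[[str, int], str],
    num_samples: int = 4,
) -> List[str]:
    """Sample several responses and keep only those whose answer matches."""
    kept = []
    for seed in range(num_samples):
        response = sample_fn(prompt, seed)
        if extract_final_answer(response) == reference:
            kept.append(response)
    return kept


def stub_sampler(prompt: str, seed: int) -> str:
    """Hypothetical stand-in for a model: returns canned responses."""
    candidates = [
        r"Two plus two gives \boxed{4}",
        r"I think it is \boxed{5}",
        r"Wait, let me recheck. \boxed{4}",
        "I am not sure.",
    ]
    return candidates[seed % len(candidates)]


kept = rejection_sample("What is 2+2?", "4", stub_sampler, num_samples=4)
print(f"kept {len(kept)} of 4 samples")
```

The kept responses would then serve as supervised fine-tuning data, which is the sense in which the list above calls the result "total SFT victory".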

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

DeepSeek-R1 Model Developments

- DeepSeek-R1 Releases and Updates: @deepseek_ai announced the release of DeepSeek-R1, an open-source reasoning model with performance on par with OpenAI-o1. The release includes a technical report and distilled smaller models, empowering the open-source community. @cwolferesearch highlighted that reinforcement learning fine-tuning is less effective than model distillation, marking the start of the Alpaca era for reasoning models.

Benchmarking and Performance Comparisons

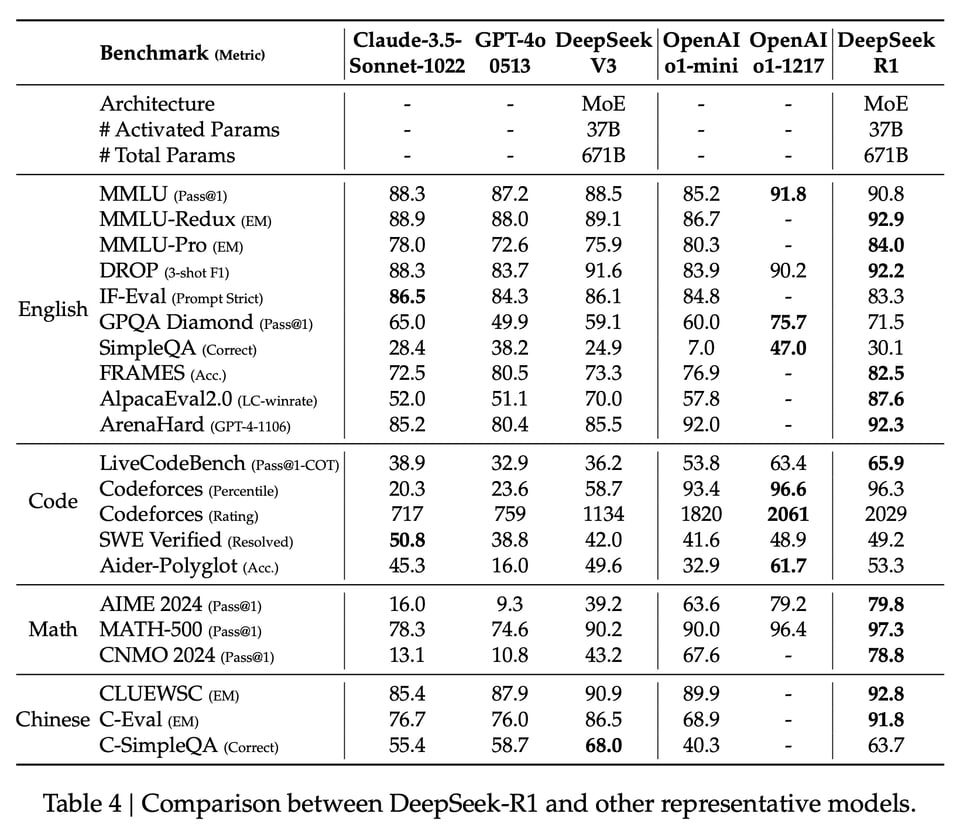

- DeepSeek-R1 vs OpenAI-o1: @_philschmid summarized evaluations showing DeepSeek-R1 achieving 79.8% on AIME 2024 compared to OpenAI-o1's 79.2%. Additionally, @ollama noted that R1-Distill-Qwen-7B surpasses larger proprietary models like GPT-4o on reasoning benchmarks.

Reinforcement Learning in LLM Training

- RL-Based Model Training: @cwolferesearch emphasized that pure reinforcement learning can endow LLMs with strong reasoning abilities without extensive supervised fine-tuning. @_philschmid detailed the five-stage RL training pipeline of DeepSeek-R1, showcasing significant performance improvements in math, code, and reasoning tasks.

Open-Source Models and Distillation

- Model Distillation and Open-Source Availability: @_akhaliq announced that DeepSeek’s distilled models, such as R1-Distill-Qwen-7B, outperform non-reasoning models like GPT-4o-0513. @reach_vb highlighted the community benefits from DeepSeek’s open and distilled models, making advanced reasoning capabilities accessible on consumer hardware.

AI Research Papers and Technical Insights

- Insights from Research Papers: @TheAITimeline shared insights from the LongProc benchmark, revealing that out of 17 LCLMs, open-weight models struggle beyond 2K tokens, while closed-source models like GPT-4o degrade at 8K tokens. @_philschmid discussed the DeepSeek-R1 paper’s findings on how reinforcement learning enhances model reasoning without relying on complex reward models.

Memes/Humor

- Humorous Takes on AI and Technology: @swyx shared a humorous xkcd comic, while @qtnx_ expressed frustration in a lighthearted manner about game launches and prompt engineering.

- Satirical Comments on AI Hype: @teortaxesTex humorously commented on overly optimistic AI expectations, emphasizing the perpetual nature of humorous content regardless of technological advancements.

- Playful Interactions: @jmdagdelen responded playfully to AI discussions, adding a touch of humor to technical conversations.

- Unexpected Humor in Technical Discussions: @evan4life shared a funny anecdote about AI model behaviors, blending technical insights with humor.

- Lighthearted AI Jokes: @sama humorously downplayed AGI development timelines, reflecting the community's playful skepticism.

- Funny AI-Related Memes: @thegregyang tweeted a situational meme about workplace scenarios, adding levity to AI-focused discussions.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek-R1 Distilled Models Showcase Exceptional SOTA Performance

- Deepseek just uploaded 6 distilled versions of R1 + R1 "full" now available on their website. (Score: 790, Comments: 226): Deepseek has released six distilled versions of R1 models along with the R1 "full" model, now accessible on their website.

- Deepseek's Strategy and Licensing: Commenters praise Deepseek for releasing finetunes of competitor models and supporting the local LLM community, noting the strategic aspect of this release. The models, including DeepSeek-R1-Distill-Qwen-32B, are released under the MIT License, allowing commercial use and modifications, which is seen as a significant move in the open-source community.

- Model Performance and Availability: The DeepSeek-R1-Distill-Qwen-32B model reportedly outperforms other models like OpenAI-o1-mini in benchmarks, achieving state-of-the-art results for dense models. Users are eagerly awaiting the availability of GGUF versions for larger models like 32B and 70B, with links to these models being shared on platforms like Hugging Face.

- Community Reactions and Technical Insights: Users express excitement about the model's capabilities and performance, with some noting the verbosity of the distilled models and the potential for further improvement through reinforcement learning. There is also a discussion about the practical implications of these models in real-world applications, with some users sharing their testing experiences and results.

- DeepSeek-R1-Distill-Qwen-32B is straight SOTA, delivering more than GPT4o-level LLM for local use without any limits or restrictions! (Score: 247, Comments: 85): DeepSeek-R1-Distill-Qwen-32B is establishing itself as the state-of-the-art (SOTA) model, surpassing GPT-4o-level LLMs for local use without restrictions. The model's distillation, especially its fusion with Qwen-32B, achieves significant benchmark improvements, making it ideal for users with less VRAM and outperforming the Llama-70B distill.

- Distillation and Benchmarks: DeepSeek-R1-Distill-Qwen-32B's performance is highlighted by its entrance into the Pareto frontier with a score of 36/48 on a benchmark without quantization, showcasing its efficiency and competitive edge in local use models.

- Model Comparisons and Features: There is a discussion about the superiority of Llama 3.1 8B and Qwen 2.5 14B distillations, which reportedly outperform QwQ and include "thinking tags," enhancing reasoning capabilities.

- Software and Tools: Recent updates and support for these models are available, including PR #11310 for distilled versions, and the requirement for the latest LM Studio 0.3.7 to support DeepSeek R1.

- Deepseek-R1 and Deepseek-R1-zero repo is preparing to launch? (Score: 51, Comments: 5): DeepSeek-R1 and DeepSeek-R1-Zero models are anticipated for release on Hugging Face, as indicated by the provided links. The user expresses eagerness for the launch, hoping it will occur today.

- DeepSeek-R1 Zero is already available for download if users have sufficient storage capacity. The same applies to DeepSeek-R1.
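For anyone wiring the distills into a local app, the "thinking tags" mentioned above usually need to be separated from the final answer before display. A minimal sketch, assuming the model wraps its reasoning in a single `<think>...</think>` pair (check the output of your own build before relying on that); `split_reasoning` is a hypothetical helper name.

```python
import re
from typing import Tuple

# Assumed tag format; verify against the actual model output.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Split raw model output into (reasoning, answer)."""
    match = THINK_PATTERN.search(text)
    if match is None:
        # No tags found: treat everything as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


raw = "<think>\n2 + 2 is 4.\n</think>\nThe answer is 4."
reasoning, answer = split_reasoning(raw)
print("reasoning:", reasoning)
print("answer:", answer)
```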

Theme 2. DeepSeek-R1 Models Undercut OpenAI's High-Cost Tokens

- Deepseek R1 = $2.19/M tok output vs o1 $60/M tok. Insane (Score: 155, Comments: 37): Deepseek R1 offers a pricing of $2.19 per million tokens output, which is significantly lower compared to o1's $60 per million tokens. The post author is interested in real-world applications and particularly in comparisons related to code generation.

- Deepseek R1 Pricing and Performance: The discussion highlights that Deepseek R1 offers a competitive pricing of $2.19 per million tokens, significantly lower than o1's $60 per million tokens. Users noted that the R1 model has shown impressive performance improvements over its previous versions, particularly the 32B and 70B parameter models which perform comparably or better than o1-mini.

- Model Transparency and Cost Factors: There is a lack of transparency from OpenAI regarding their model's architecture and token usage, making replication challenging. Some comments suggest that OpenAI's pricing might not solely be based on greed, but rather on the costs associated with R&D and operational expenses, with skepticism around Sam Altman's claims about their financial losses.

- Access and Implementation: Users inquired about accessing and testing Deepseek R1, with references to the Deepseek API documentation for more information. The "deepthink" feature was mentioned as a way to utilize the R1 model, with updates noted on their website and app.

- Deepseek-R1 officially release (Score: 60, Comments: 2): DeepSeek-R1, released under the MIT License, offers open-sourced model weights and an API for chain-of-thought outputs, claiming performance parity with OpenAI o1 in tasks like mathematics and coding. The release includes two 660B models and six smaller distilled models, with the 32B and 70B models matching OpenAI o1-mini's capabilities. The API pricing is 1 RMB per million input tokens (cache hit) and 16 RMB per million output tokens, with detailed guidelines available in the official documentation.

- DeepSeek-R1's pricing in USD can be found in the official documentation at DeepSeek Pricing, providing clarity on the cost structure for those interested in comparing it with other models.

- DeepSeek-R1 Paper (Score: 58, Comments: 5): The DeepSeek-R1 Paper introduces an API that emphasizes cost-efficient token usage.

- Self-evolution of DeepSeek-R1-Zero: The self-evolution process showcases how reinforcement learning (RL) can autonomously enhance a model's reasoning capabilities. This process is observed without the influence of supervised fine-tuning, allowing the model to naturally develop sophisticated behaviors like reflection and exploration through extended test-time computation.

- Emergence of sophisticated behaviors: As DeepSeek-R1-Zero's test-time computation increases, it spontaneously develops advanced behaviors, such as revisiting and reevaluating previous steps. These behaviors emerge from the model's interaction with the RL environment and significantly improve its efficiency and accuracy in solving complex tasks.

- "Aha Moment" phenomenon: During training, DeepSeek-R1-Zero experiences an "aha moment," where it autonomously learns to allocate more thinking time to problems, enhancing its reasoning abilities. This phenomenon highlights the potential of RL to foster unexpected problem-solving strategies, emphasizing the power of RL to achieve new levels of intelligence in AI systems.

Theme 3. DeepSeek-R1 Embraces Full MIT License for Models

- o1 performance at ~1/50th the cost.. and Open Source!! WTF let's goo!! (Score: 668, Comments: 237): DeepSeek R1 and R1 Zero have been released under an open license, offering o1 performance at approximately 1/50th the cost.

- DeepSeek's Open-Source and Pricing Concerns: There is significant discussion about DeepSeek's open-source claims, with some users questioning the availability of model details like code and datasets. Concerns about pricing are raised, particularly regarding token costs being double for DeepSeek V3 and comparisons to OpenAI's pricing, with some users noting that high prices may prevent system overload.

- Model Performance and Comparisons: Users highlight the impressive performance of DeepSeek models, noting the increase from 32 billion to 600 billion parameters. Comparisons are made with other models like Qwen 32B and Llama 7-8B, with some users claiming these models outperform others like 4o and Claude Sonnet.

- Censorship and Geopolitical Implications: There is a robust debate on the influence of political censorship in AI models, with discussions on how Chinese companies like DeepSeek may embed CCP values in their models. Comparisons are drawn with American companies that also apply their own "guardrails," reflecting political and cultural biases.

- DeepSeek-R1 and distilled benchmarks color coded (Score: 288, Comments: 61): DeepSeek R1 licensing explicitly allows for model distillation, which can be beneficial for creating efficient AI models. The post mentions distilled benchmarks that are color-coded, suggesting a visual method for evaluating performance metrics.

- The DeepSeek R1 models, particularly the 1.5B and 7B versions, are noted for outperforming larger models like GPT-4o and Claude 3.5 Sonnet on coding benchmarks, raising skepticism and curiosity about their performance in non-coding benchmarks such as MMLU and DROP. Users express surprise at these results, questioning the generalization of improvements beyond math and coding tasks.

- DeepSeek-R1-Distill-Qwen-14B is highlighted for its efficiency, being on par with o1-mini while offering significantly cheaper pricing for input/output tokens. The 32B and 70B models further outperform o1-mini, with the 32B model being 43x to 75x cheaper, making them attractive for both local and commercial use.

- Concerns are raised about the training data for distilled models, which rely heavily on Supervised Fine-Tuning (SFT) data without Reinforcement Learning (RL), although some users clarify that the development pipeline does include two RL stages. There is skepticism about the accuracy of the 1.5B model's benchmarks, with some suggesting further testing to validate these claims.

- Deepseek R1 / R1 Zero (Score: 349, Comments: 105): DeepSeek has expanded its licensing to commercial use under the MIT License. The post mentions DeepSeek R1 and R1 Zero, but no further details are provided.

- DeepSeek R1 Zero is speculated to be a large model with around 600B to 700B parameters, as discussed by users like BlueSwordM and Few_Painter_5588. This model size suggests significant resource requirements, with estimates of needing 1.8TB RAM to host, indicating its potential computational intensity.

- Discussions around DeepSeek R1 Zero also touch on its architecture, with De-Alf noting it shares the same architecture as other R1 models, suggesting a common framework among them. The release on Hugging Face is mentioned, with some users expressing confusion over the model's size and role, such as being a "teacher" or "judge" model.

- The release of DeepSeek R1 Zero under the MIT License was praised for its openness, with users like Ambitious_Subject108 appreciating the decision not to restrict it behind an API. The community also noted the release of multiple distillations, providing flexibility for various hardware specifications.
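The hosting estimates in the thread above are easier to reason about with a back-of-envelope calculation. The sketch below counts weight storage only, at a uniform bit width, for the 671B parameter count quoted earlier in this issue. It ignores KV cache, activations, and runtime overhead, and real quantized files mix precisions across tensors, so treat the output as a floor rather than as a reproduction of any figure users quoted. `weight_memory_gb` is an illustrative helper name.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 671e9  # parameter count quoted in this issue

for bits in (16, 8, 4, 3, 2):
    print(f"{bits:>2}-bit: ~{weight_memory_gb(PARAMS, bits):,.0f} GB")
```

At 16 bits per weight this gives about 1.34 TB for the weights alone, and about 252 GB at a uniform 3 bits.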

Theme 4. DeepSeek-R1 Distilled Models Revolutionize Precision Benchmarks

- Epyc 7532/dual MI50 (Score: 68, Comments: 36): An engineer built an Epyc 7532 server with dual MI50 GPUs purchased for $110 each from eBay, running on 256 GB of Micron 3200 RAM and housed in a Thermaltake W200 case. Despite cooling challenges with the MI50s reaching over 80°C, the setup runs Ollama and Open WebUI on Ubuntu, achieving approximately 5 t/s, with Phi-4 performing well and Qwen 32B being slower.

- Cooling Challenges: Evening_Ad6637 shared insights on improving cooling efficiency by addressing airflow issues and using aluminum materials, achieving up to 10°C lower temperatures compared to standard cooling systems. They recommend ensuring direct contact between aluminum components and the GPU heat sink for better heat dissipation.

- Hardware Compatibility and Use: Psychological_Ear393 discussed the compatibility of Radeon VII and MI50 GPUs with ROCm, noting that while both are deprecated, they still function with the latest drivers. They also mentioned that the W200 case is notably large, accommodating the setup effectively.

- Fan and Airflow Considerations: No-Statement-0001 suggested using turbine-style fans to enhance static pressure and improve airflow through the dense fins of server GPUs, as regular fans may struggle with this task.

- o1 thought for 12 minutes 35 sec, r1 thought for 5 minutes and 9 seconds. Both got a correct answer. Both in two tries. They are the first two models that have done it correctly. (Score: 104, Comments: 25): DeepSeek R1 and o1 models achieved correct answers in a complex mathematical problem within two tries, with o1 taking 12 minutes 35 seconds and R1 taking 5 minutes 9 seconds. The problem involved counting elements like wolves and hares, and highlighted a logical error when the count of wolves became negative, stressing the importance of non-negative variables in calculations.

- Problem-Solving Insights: The discussion delves into the reasoning behind the puzzle, emphasizing the importance of logical reasoning in AI models. Charuru provides a detailed breakdown of the problem-solving process, identifying key observations like the reduction of total animal count by one per move, the impossibility of odd final totals, and the stable coexistence of at most one species.

- Model Performance Variability: No_Training9444 and others discuss the variability in model performance, with some models like Deepseek R1 and o1-pro successfully solving the problem, while other models like gemini-exp-1206 struggled. StevenSamAI notes that repeated trials may yield correct answers, indicating variability in model output.

- Community Engagement: The community actively engages with the problem, sharing attempts and outcomes. Echo9Zulu- questions the purpose of such riddles in testing AI, while DeltaSqueezer and others express interest in solving the puzzle themselves, highlighting the blend of fun and technical challenge these problems present.

- Deepseek-R1 GGUFs + All distilled 2 to 16bit GGUFs + 2bit MoE GGUFs (Score: 101, Comments: 49): Deepseek-R1 models have been uploaded in various quantization formats including 2 to 16-bit GGUFs, with a Q2_K_L 200GB quant specifically for large R1 MoE and R1 Zero models. The models are available on Hugging Face and include 4-bit dynamic quant versions for higher accuracy, with instructions for running the models using llama.cpp provided on the Unsloth blog.

- Dynamic Quantization and Compatibility Issues: Users discuss the use of Q4_K_M for optimal performance and explore alternatives to bitsandbytes for dynamic quantization compatible with llama.cpp. There are issues with LM Studio not supporting the latest llama.cpp updates, causing errors when loading models like the R1 GGUF.

- Model Upload Delays and Availability: The Qwen 32B GGUF model faced a temporary 404 error during upload, but was subsequently made available on Hugging Face. Other models are still in the process of being uploaded, with the team working overnight to ensure availability.

- Community Appreciation and Feedback: The community expresses gratitude for the ongoing work and rapid updates from the Unsloth team, acknowledging their dedication and responsiveness to user feedback and issues.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. DeepSeek-R1 Launches Open-Source Model at Hardware Cost

- It just happened! DeepSeek-R1 is here! (Score: 250, Comments: 103): DeepSeek-R1 is a new model that requires substantial GPU resources, suggesting high computational demand. It is described as an open model, indicating its availability for public use and potential for community contributions or modifications.

- DeepSeek-R1 Hardware Requirements: While some users initially believed DeepSeek-R1 required high-end hardware, distilled versions can run on a single RTX 3090 and even lower VRAM cards, allowing for more accessible use for those with consumer-grade GPUs.

- Open Source vs. Proprietary Models: There is a discussion on the openness of DeepSeek-R1 compared to proprietary models like ChatGPT and Claude, emphasizing the ability to run DeepSeek locally, albeit requiring significant hardware investment, which contrasts with the data collection concerns associated with proprietary APIs.

- AI Model Development and Expectations: The simplicity of DeepSeek's training process, involving standard policy optimization with rewards, raises questions about why such effective methods weren't discovered earlier, highlighting the ongoing evolution and expectations in the AI field for models to improve reasoning and inference capabilities.

Theme 2. AI Autonomy in Job Applications with Browser-Use Tool

- AI agent applying for jobs on its own (Score: 200, Comments: 46): The post discusses an AI agent that autonomously applies for jobs using GitHub. Specific details about the implementation or effectiveness of this AI agent are not provided in the text, as the post body is empty and relies on a video for further information.

- Automation and Externalities: Users express concern over the implications of automating job applications, with comments highlighting the increased volume of applications and the resulting need for employers to use automation for screening. The discussion emphasizes that while AI can apply to thousands of jobs, it may lead to more spam and inefficiencies in the job market.

- AI Application Effectiveness: A journalist's test of AI job application services revealed that applying to thousands of jobs can yield interviews, though with a low success rate per application. The conversation suggests that while AI can scale job applications, it may produce inaccuracies, such as fabricating qualifications, and the overall effectiveness is questioned.

- Potential Countermeasures: Users predict that as AI agents apply for jobs, recruiters may develop strategies like honeypotting to identify AI-generated applications. There is also speculation about AI agents eventually managing remote work, raising ethical and practical questions about AI's role in the job market.

Theme 3. Critique of OpenAI's Marketing and AGI Promises

- He himself built the hype but it got out of control (Score: 1243, Comments: 135): Sam Altman addresses the excessive hype surrounding OpenAI on Twitter, clarifying that artificial general intelligence (AGI) will not be deployed next month as it has not been built yet. He advises followers to temper their expectations, despite exciting developments, as per his tweet dated January 20, 2025, with 26.9K views.

- Discussions highlight skepticism about Sam Altman's statements, with users expressing frustration over perceived inconsistencies and hype management, particularly regarding the timeline for AGI. Some users interpret his messaging as strategic, possibly to manage expectations and regulatory scrutiny.

- Users debate the singularity community's response, often mocking their optimistic timelines for AGI, and suggesting that forums like r/singularity and r/openai are increasingly indistinguishable due to shared unrealistic expectations.

- Several comments reflect on Altman's past statements and the hype surrounding OpenAI, with some suggesting that his recent tweets aim to temper market expectations and prevent overvaluation based on speculative AGI timelines.

- OpenAI’s Marketing Circus: Stop Falling for Their Sci-Fi Hype (Score: 357, Comments: 214): OpenAI's marketing tactics are criticized for promoting unrealistic expectations about AGI and PhD-level super-agents, suggesting these advancements are imminent. The post argues that LLMs lack advanced reasoning skills without specialized training and cautions against believing in overhyped promises, emphasizing the need for improved media literacy.

- Discussions highlight skepticism towards OpenAI's marketing tactics, with some users arguing that the company's claims about AGI and PhD-level super-agents are exaggerated and not reflective of current capabilities. Sam Altman is noted for delivering ambitious statements that are met with both cynicism and anticipation.

- Users debate the capabilities of LLMs, with some asserting that current models like o1 and o3 are already performing tasks better than average humans, while others argue that these models still lack common sense and reliability. The conversation touches on the reasoning abilities of LLMs, with comparisons to toddlers and discussions on their impressive, yet limited, problem-solving skills.

- The community expresses a divide between the perceived hype and the actual utility of AI models, with some users advocating for a more realistic understanding of AI capabilities. There is a call for skepticism towards media representations of AI advancements, emphasizing the need for practical experience and direct usage of the models to assess their real-world applicability.

Theme 4. Criticism of Perplexity AI's Reliability and Bias Concerns

- People REALLY need to stop using Perplexity AI (Score: 220, Comments: 137): Perplexity AI's CEO, Aravind Srinivas, proposes developing an alternative to Wikipedia due to perceived bias, encouraging collaboration through Perplexity APIs. His tweet from January 14, 2025, has attracted significant attention with 820.7K views, 593 likes, and 315 retweets.

- Discussions highlight the bias in Wikipedia, particularly concerning contentious topics like the Israel/Palestine conflict. Commenters argue that Wikipedia's crowd-sourced nature leads to activist-driven content, with some suggesting that a corporate alternative could be more biased due to profit motives.

- Many commenters express skepticism about Perplexity AI's intentions, suggesting the company's proposal might cater to right-wing perspectives under the guise of being "uncensored." Concerns are raised about the feasibility of creating a truly unbiased platform, given that all information sources inherently carry some bias.

- The idea of alternative information sources is debated, with some supporting the diversification of sources to avoid single-narrative dominance, while others worry about the potential for increased bias and misinformation. The conversation reflects broader concerns about the role of technology and AI in shaping public discourse and knowledge repositories.

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. Open-Source LLM Rivalries

- DeepSeek R1 Roars Past OpenAI’s o1: This 671B-parameter model matches o1’s reasoning benchmarks at 4% of the cost and arrives under an MIT license for free commercial use. Its distilled variants (1.5B to 70B) also impress math enthusiasts with high scores on MATH-500 and AIME.

- Kimi k1.5 Slams GPT-4o in a 128k-Token Duel: The new “k1.5” orchestrates multi-modal tasks, reportedly outperforming GPT-4o and Claude Sonnet 3.5 by up to +550% in code and math. Users point to its chain-of-thought synergy as it breezes past difficult benchmarks.

- Liquid LFM-7B Dares to Defy Transformers: Liquid AI touts LFM-7B, a non-transformer design with superior throughput on 7B scale. It boldly claims best-in-class English, Arabic, and Japanese support under a license-based model distribution.

Theme 2. Code & Agentic Tools

- Windsurf Wave 2 Surfs with Cascade & Autogenerated Memories: The new Windsurf editor integrates robust web search, doc search, and performance boosts for broader coding teams. Users praise its single global chat approach, though some bemoan sluggish performance under large-file contexts.

- Cursor Stumbles in Sluggish Showdown: Devs complain about 3-minute delays, code deletion mishaps, and “flow actions” slowing them down. Many threaten to jump ship for faster AI editors like Windsurf or Gemini.

- Aider 0.72.0 Scores with DeepSeek R1: Aider’s latest release welcomes “--model r1” to unify code generation across Kotlin and Docker enhancements. Users love that Aider wrote “52% of the new code,” proving it’s a double-edged coding partner.

Theme 3. RL & Reasoning Power-Ups

- GRPO Simplifies PPO for DeepSeek: “Group Relative Policy Optimization (GRPO) is just PPO minus the value function,” claims Nathan Lambert. By relying on Monte Carlo advantage, DeepSeek R1 emerges with advanced math and code solutions.

- Google’s Mind Evolution Outsmarts Sequential Revision: It achieves 98% success on planning benchmarks with Gemini 1.5 Pro by systematically refining solutions. Observers see it as a new apex for solver-free performance.

- rStar-Math Gambles on MCTS: It trains small LLMs to surpass big models on tricky math tasks without distilling from GPT-4. The paper shows that token-level Monte Carlo Tree Search can transform modest-scale LLMs into powerhouse reasoners.
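The "PPO minus the value function" description of GRPO in the list above comes down to how the advantage is computed: instead of a learned critic, each sampled response is scored relative to the other responses drawn for the same prompt. A minimal sketch of that group-relative normalization follows. The use of population standard deviation and the `eps` guard are assumptions made here for a runnable example, and the clipped policy-gradient objective and KL term of the full method are left out.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the mean and spread of its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population standard deviation
    return [(r - mu) / (sigma + eps) for r in rewards]


# One prompt, four sampled responses, rule-based reward of 1.0 if correct.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.3f}")
```

Correct responses get a positive advantage and incorrect ones a negative advantage. If every response in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal.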

Theme 4. HPC & Hardware High Jinks

- M2 Ultras Tag-Team DeepSeek 671B: One dev claims near real-time speeds using two M2 Ultras at 3-bit quantization. Enthusiasts debate if the hardware cost justifies the bragging rights for local monstrous LLM runs.

- GPU vs CPU Smackdown: Some argue GPU’s parallelization demolishes CPU for big arrays, though data transfer can bottleneck returns. Others say for small tasks, CPU can be just as quick without the overhead.

- KV Cache Quantization Boosts LM Studio: Llama.cpp engine v1.9.2 brings memory-friendly inference with 3-bit to 4-bit quantization. Speed freaks applaud the throughput gains on consumer-grade hardware.

Theme 5. Partnerships & Policy Kerfuffles

- Microsoft’s $13B OpenAI Bet Spooks the FTC: Regulators worry about “locked-in” AI partnerships and fear startup competition may suffer. Lina Khan warns that dominating cloud plus AI resources spells trouble for newer contenders.

- FrontierMath Funding Cloaked in NDA: It emerges that OpenAI quietly bankrolled the math dataset, leaving many contributors clueless. Critics slam the hush-hush arrangement for hindering transparency.

- TikTok Merger Talk Tangles with Perplexity: Perplexity upset pro subscribers and then pivoted with big expansions—rumor says it even eyed merging with TikTok. Skeptics question if any synergy exists beyond a flashy headline.

PART 1: High level Discord summaries

Codeium (Windsurf) Discord

- Windsurf Wave 2 & Cascade Upgrades: The Windsurf Wave 2 release introduced Cascade web and docs search, autogenerated memories, and performance enhancements, as noted in the official blog.

- Users cited smoother operation in Cascade, referencing status.codeium.com and pointing to better reliability for broader teams.

- DeepSeek R1 Rocks 671B Parameters: The new DeepSeek R1 model boasts 671 billion parameters, reportedly surpassing other offerings, with @TheXeophon highlighting its strong test scores.

- Community members debated integrating it into Windsurf, wanting to see further evaluation and clarity around data usage.

- Performance & Error Woes in Windsurf: Many users reported incomplete envelope errors, slow typing, and lag after version 1.2.1, particularly with large files.

- They pointed out frustrations with flow actions and cascading edits, saying these issues heavily reduced productivity.

- API Keys & Pro Plan Gripes: Developers voiced concerns about Windsurf’s stance on personal API keys, limiting usage for chat functions and advanced integrations.

- Some Pro plan subscribers felt shortchanged, comparing Windsurf to other IDEs that freely allow user-owned APIs.

- Cascade History & Long Chat Issues: A single global list of Cascade chats caused confusion for users seeking project-specific organization.

- They also complained that extended sessions in Windsurf become sluggish, forcing frequent resets and repeated context explanations.

Perplexity AI Discord

- Perplexity Overhauls Model, Ruffles Pro Feathers: Following a switch to an in-house model, users criticized Perplexity for weaker outputs and canceled Pro subscriptions, citing a lack of dynamic responses (Perplexity Status).

- Others demanded swift fixes and more transparency, referencing the platform's valuation and urging timely improvements.

- Ithy & Co. Challenge Perplexity’s Reign: A wave of new AI tools, including Ithy and open-source projects like Perplexica, gained traction among developers seeking alternatives.

- Community members said these tools offer broader features, with some predicting that open-source platforms could soon rival closed solutions.

- DeepSeek-R1 Gears Up in Perplexity: Perplexity announced plans to integrate DeepSeek-R1 for advanced reasoning tasks, noting a tweet from Aravind Srinivas.

- Users anticipate restored functionality and sharper context handling, hoping for improved synergy with the search interface.

- Perplexity Pounces on Read.cv: Perplexity acquired Read.cv, aiming to boost its AI-driven insights for professional networking (details here).

- Participants expect stronger user profiles and data-driven matching, fueling speculation about future expansions in the platform’s suite.

Cursor IDE Discord

- DeepSeek R1 Shines on Benchmarks: DeepSeek R1 scored 57% on the aider polyglot benchmark, placing just behind o1’s 62%, as shown in this tweet.

- Its open-source approach at GitHub drew interest for potential Cursor integration, with some users referencing DeepSeek’s reasoning model docs for advanced workflows.

- Cursor’s Sluggish Woes Spark Debate: Multiple developers reported 3-minute delays and slow agent responses in real-world usage, fueling frustration with Cursor’s performance.

- Some threatened to switch to faster AI editors like Windsurf or Gemini, while a Notion entry circulated for fresh prompting ideas.

- Agent Functionality Face-Off: Community members highlighted Cursor’s hiccups with large files and code deletions, contrasting it with GitHub Copilot and Cline in a 240k token battle.

- Some insisted on better documentation, while others cited a tweet from Moritz Kremb showcasing single-command best practices.

- Community Pushes for Cursor Updates: Calls to include DeepSeek R1 and other advanced models surfaced to address performance complaints.

- Developers looked to the Cursor Forum for upcoming patches and direct lines of feedback on new releases.

Nous Research AI Discord

- DeepSeek's Distillation Delivers: The DeepSeek-R1 model garnered attention for its robust distillation results, showcased in DeepSeek-R1 on Hugging Face, with hints of expanded reasoning capabilities using RL approaches.

- Contributors brainstormed synergy between Qwen and open-source fine-tuning endeavors, suggesting future optimizations for complex tasks.

- Liquid AI's Licenses & LFM-7B: Liquid AI introduced the LFM-7B with a recurrent design, touting superior throughput at 7B scale in their official link.

- They revealed a license-based distribution model and highlighted English, Arabic, and Japanese support for local and budget-limited deployments.

- Sparsity Speeds & MOEs vs Dense: Participants compared MOEs to dense models using a geometric mean trick to match parameter sizes, eyeing a 3-4x latency advantage.

- They referenced NVIDIA's structured sparsity blog to underscore 2:1 GPU efficiency, albeit with similar memory demands.

- Google's Mind Evolution Mastery: Google showcased Mind Evolution as outperforming Best-of-N and Sequential Revision, achieving 98% success on planning benchmarks with Gemini 1.5 Pro.

- A shared tweet example highlighted solver-free performance gains compared to older inference strategies.

- CNN Collab for Climate Yields: A project titled 'Developing a Convolutional Neural Network to Evaluate the Impact of Climate Change on Global Agricultural Yields' seeks experts in ML and climate science by January 25.

- Prospective collaborators can DM for details on constructing an integrated CNN framework to analyze geospatial data and yield factors.
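
The "geometric mean trick" mentioned above for comparing MoE and dense models is not spelled out in the discussion. A common rule of thumb, assumed here, takes the geometric mean of active and total parameter counts as a dense-equivalent size. The 37B active-parameter figure for the 671B DeepSeek MoE comes from the DeepSeek-V3 model card rather than from this digest.

```python
import math

def dense_equivalent_params(active_params, total_params):
    """Rule-of-thumb dense-equivalent size: sqrt(active * total).

    This heuristic is an assumption about what participants meant,
    not an established law.
    """
    return math.sqrt(active_params * total_params)

# 671B total parameters (from the digest); ~37B active per token (assumed, see lead-in).
equiv = dense_equivalent_params(37e9, 671e9)
print(f"dense-equivalent size: about {equiv / 1e9:.0f}B parameters")
```

The latency argument in the discussion follows from the gap between this dense-equivalent size and the much smaller active parameter count that is actually computed per token.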

Unsloth AI (Daniel Han) Discord

- DeepSeek Revelations & Quantization Quips: Unsloth announced that all DeepSeek R1 models, including GGUF and quantized versions, are now on Hugging Face, offering Llama and Qwen distills with improved accessibility.

- Community members praised dynamic 4-bit approaches, referencing a post by @ggerganov, highlighting less VRAM use without sacrificing accuracy.

- Fine-Tuning Feats with Qwen and Phi: Community members tested Qwen and Phi-4 with various training parameters, noticing underfitting issues on Phi-4 possibly linked to heavier instruction tuning.

- They also explored using Alpaca format on Qwen2.5, pointing to the Unsloth documentation for chat template solutions.

- Chatterbox Chats & Synthetic Sets: The new Chatterbox dataset builder introduced multi-turn management with features like token counting and Docker-compose, shared in a GitHub repo.

- Developers proposed generating synthetic datasets in bulk using webworkers or a CLI, aiming for improved multi-turn conversation flows.

- Sky-T1 Takes Off: The Sky-T1-32B model from the NovaSky team at UC Berkeley scored highly in coding and math, trained on 17K data from Qwen2.5-32B-Instruct in 19 hours on 8 H100 GPUs.

- Enthusiasts praised its speed under DeepSpeed Zero-3 Offload, indicating it nearly matches o1-preview performance.

- Cohere For AI LLM Research Cohort Calls: The Cohere For AI initiative will run an LLM Research Cohort focusing on multilingual long-context tasks, kicking off with a call on January 10th.

- Participants will practice advanced NLP strategies, referencing a tweet from @cataluna84 about combining large-scale teacher models with smaller student models.

Eleuther Discord

- RWKV7 Rides High with 'Goose': The RWKV7 release, affectionately dubbed 'Goose,' sparked enthusiasm in the community, with BlinkDL showcasing strong generative capabilities beyond older models. It notably integrates channel-wise decay and learning-rate tweaks, resulting in solid performance according to user tests.

- Members compared RWKV7 to Gated DeltaNet, highlighting new design features that keep this gen7 RNN ahead of prior iterations. They also debated memory decay strategies and layering to further sharpen RWKV7's edge.

- DeepSeek R1 Takes On AIME and MATH-500: The newly introduced DeepSeek R1 model outperforms GPT-4o and Claude Sonnet 3.5 on tasks like AIME and MATH-500 and copes with extended contexts of up to 128k tokens. Community comparisons suggest improved 'cold start' performance, attributed to robust training strategies.

- Discussions touched on tackling gradient spikes using strategies from SPAM: Spike-Aware Adam, hinting that DeepSeek R1 effectively avoids permanent damage. Users viewed these improvements as promising, while some voiced doubts about fully relying on 'R1 Zero' results without more replication.

- Qwen2.5 Stumbles Despite Official Scores: Many tested Qwen2.5 on gsm8k and observed only ~60% accuracy, diverging from the official blog’s claim of 73% for the instruct variant. Confusion arose around parsing differences and few-shot formatting details.

- Some suggested incorporating the same question/answer format used by QwenLM/Qwen plus a “step by step” style to realign results. They reported minor score gains to 66%, underlining how prompting tactics can sway final outcomes.

- MoE Hype and Hesitations: The community praised Mixture of Experts models for their efficiency, with references like Hugging Face’s MoE blog spurring adoption. Some expressed caution around training stability, underscoring the complexities of sharding and gating strategies.

- Debates centered on whether MoE offers enough practical advantage without advanced tuning to handle potential training volatility. Supporters view it as a promising avenue, while others stressed that sustained experimentation is key.
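
The Qwen2.5 gsm8k discrepancy above was partly attributed to parsing differences. The toy sketch below shows how the same completions can score very differently under a strict extractor (only the "#### <number>" reference format) and a flexible one (last number in the text). The function names and sample completions are invented for illustration and are not taken from any specific evaluation harness.

```python
import re

NUMBER = r"-?\d[\d,]*(?:\.\d+)?"

def strict_extract(text):
    """Accept only the GSM8K reference format: '#### <number>'."""
    m = re.search(r"####\s*(" + NUMBER + ")", text)
    return m.group(1).replace(",", "") if m else None

def flexible_extract(text):
    """Fall back to the last number anywhere in the completion."""
    found = re.findall(NUMBER, text)
    return found[-1].replace(",", "") if found else None

def accuracy(completions, golds, extract):
    hits = sum(extract(c) == g for c, g in zip(completions, golds))
    return hits / len(golds)

# Invented completions: all three are correct, but only one uses '####'.
completions = [
    "She has 3 + 4 = 7 apples.\n#### 7",
    "Step by step: 12 * 5 = 60. The answer is 60.",
    "Total cost is $1,250.",
]
golds = ["7", "60", "1250"]

print("strict:  ", accuracy(completions, golds, strict_extract))
print("flexible:", accuracy(completions, golds, flexible_extract))
```

Here every answer is right, yet the strict parser credits only the completion that follows the expected format, which is the kind of gap that prompt formatting changes can close.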

Interconnects (Nathan Lambert) Discord

- DeepSeek’s Daring Drive: DeepSeek-R1 soared beyond expectations, scoring near-OpenAI-o1 performance under an MIT license, with extra detail in DeepSeek-R1 on Hugging Face.

- Skeptics questioned the R1 Zero findings, but others praised Group Relative Policy Optimization (GRPO) as a cleaner PPO alternative, referencing GRPO clarifications.

- Kimi’s Kinetic Kick in RL: The Kimi 1.5 paper highlights new RL methods like reward shaping and advanced infrastructure, shared in Kimi-k1.5 on GitHub.

- Enthusiasts predict these techniques will bolster synergy between reinforcement learning frameworks and chain-of-thought reasoning, signifying a leap forward for agentic models.

- Molmo’s Multimodal Might: Molmo AI gained traction as a robust VLM, claiming superior performance on detection and text tasks, showcased at molmo.org.

- Although some misclassifications surfaced, many see its cross-domain flexibility as a serious contender against models like GPT-4V.

- Cursor Clobbers Devin in Coding Duel: Teams quickly dropped Devin for Cursor, citing underwhelming code completions, amid rumors Devin tapped gpt-4o for coding tasks instead of stronger alternatives like Claude.

- The shift sparked debates on whether AI groups systematically overestimate emergent agent solutions, echoing points from Tyler Cowen’s interview.

- SOP-Agents Steal the Show: The SOP-Agents framework proposes Standard Operational Procedures for large language models, refining multistep planning.

- Developers anticipate blending it with Chain of Thought and RL to enhance the clarity of high-level decision graphs.

aider (Paul Gauthier) Discord

- Aider v0.72.0 Achieves New Heights: The fresh Aider v0.72.0 release brings DeepSeek R1 support via the --model r1 shortcut and Kotlin syntax integration, alongside file-writing enhancements using --line-endings.

- Community members cited multiple bugfixes (including permissions issues in Docker images) and noted that Aider wrote 52% of the new code.

- DeepSeek R1 Sparks Mixed Reactions: Some users praised DeepSeek R1 for cheaper alternatives to OpenAI's o1, hitting 57% on Aider coding benchmarks.

- Others reported subpar outcomes in basic tasks, suggesting pairing it with more reliable editing models for improved consistency.

- Kimi k1.5 KOs GPT-4o: The new Kimi k1.5 model reportedly outperforms GPT-4o and Claude Sonnet 3.5 in multi-modal benchmarks, with context scaling up to 128k tokens.

- Users highlighted especially strong results on MATH-500 and AIME, fueling optimism for refined reasoning capabilities.

- AI Data Privacy Draws Concern: Participants referenced Fireworks AI Docs while describing corporate transparency differences in data usage.

- They questioned which providers handle user data responsibly, pointing to unclear policies among larger AI vendors.

Stackblitz (Bolt.new) Discord

- Bolt.new Banishes White Screens: According to a recent tweet from bolt.new, the latest update fixes the notorious white screen and ensures precise template selection from the first prompt.

- Eager testers report a smoother flow, noting a direct fix to previous frustrations and guaranteeing a more efficient start.

- Error Loops Gobble Tokens: Users faced continuous loops leading to severe token consumption—one developer burned through 30 million tokens—particularly in scenarios involving user permissions.

- They concluded a complete reset was the only path, with community members urging more robust debugging for complex functionalities.

- RLS Tangles in Supabase: Developers wrestled with recurring RLS violations while implementing booking features in Supabase, spurring repeated policy failures.

- One user recommended referencing Supabase Docs for sample policies, reducing repeated misconfigurations.

- Stripe or PayPal? Payment Talk: Community members debated Stripe versus simpler alternatives like PayPal for car detailing payments, especially for less technical users.

- Some pointed to Supabase's guide on Stripe Webhooks, while others recommended WordPress-based solutions for a quicker setup.

- Pro Plan Eases Token Constraints: Curious newcomers asked about token usage under the Pro plan, discovering the daily limit disappears and usage depends heavily on user skill and optional features like diffs.

- This approach reassures more advanced developers they can push Bolt without worrying about daily caps or unexpected token exhaustion.

LM Studio Discord

- LM Studio 0.3.7 & DeepSeek R1: The Tag-Team Triumph: The new LM Studio 0.3.7 includes support for the advanced DeepSeek R1 model and integrates llama.cpp engine v1.9.2, as outlined in LM Studio's update.

- Community members praised the open source approach, referencing the DeepSeek_R1.pdf for its robust reasoning capabilities with <think> tags.

- KV Cache Quantization Fuels Efficiency: The KV Cache quantization feature for llama.cpp (v1.9.0+) aims to enhance performance by reducing memory usage, as seen in LM Studio 0.3.7.

- Users reported faster throughput in large language models, noting that 3-bit quantization often hits an optimal balance of speed and accuracy.

- File Attachments Stay Local in LM Studio: Users questioned whether uploading files in LM Studio would send data elsewhere, and were assured the content stays on their machine for local context retrieval.

- They tested multi-file uploads for domain-specific tasks, confirming offline-only usage without compromising data control.

- GPUs Under Scrutiny: 4090 vs. Budget Boards: Members weighed a $200 GPU against high-end boards like the 4090, referencing tech specs for large-scale AI tasks.

- Most agreed bigger memory is a game-changer for massive models, delivering improved throughput for data-driven workloads.

- Distributed Inference with M2 Ultras: Speed or Splurge?: An Andrew C tweet showcased DeepSeek R1 671B running on two M2 Ultras, leveraging 3-bit quantization for near real-time speeds.

- However, participants remained cautious about hardware costs, citing bandwidth constraints and the risk of diminishing returns.
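
As a sanity check on the two-M2-Ultra setup above, the sketch below estimates the weight footprint of a 671B-parameter model at 3 bits per weight. The 192 GB per machine is an assumption (the largest M2 Ultra unified-memory configuration), and the estimate ignores the KV cache, activations, quantization scale overhead, and any OS limits on GPU-addressable memory.

```python
def weight_gigabytes(n_params, bits_per_weight):
    """Approximate size of the model weights alone, in decimal GB."""
    return n_params * bits_per_weight / 8 / 1e9

needed = weight_gigabytes(671e9, 3)
available = 2 * 192  # assumed: two M2 Ultras with 192 GB unified memory each

print(f"weights at 3-bit: {needed:.1f} GB; combined memory: {available} GB")
print("fits" if needed < available else "does not fit")
```

The weights alone come to roughly 252 GB, which exceeds a single 192 GB machine and is consistent with the model being split across two of them.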

Latent Space Discord

- DeepSeek R1 Distills and Dominates: The DeepSeek R1 release arrived under an MIT license, matching OpenAI o1 performance in math, code, and reasoning tasks.

- A distilled variant outran GPT-4o in AIME and MATH benchmarks, sparking excitement about expanded open-source offerings.

- OpenAI’s Operator Surfaces in Leaked Docs: Recent leaks exposed OpenAI’s new Operator (or Computer Use Agent) project, fueling speculation of an imminent launch.

- Observers compared it against Claude 3.5, referencing details from the Operator system leak.

- Liquid Foundation Model LFM-7B Sets Sail: The LFM-7B model from Liquid AI claims top-tier multilingual capabilities with a non-transformer design.

- Engineers applauded its low memory footprint for enterprise use, contrasting it with large transformer-based approaches.

- DeepSeek v3 & SGLang Fuel Mission Critical Inference: A Latent.Space podcast spotlighted DeepSeek v3 and SGLang for advanced workflow requirements in “Mission Critical Inference.”

- Guests discussed strategies for scaling beyond a single GPU and teased further DeepSeek improvements, rousing interest among performance-focused developers.

- Kimi k1.5 Surprises with O1-Level Performance: The Kimi k1.5 model reached o1-level benchmarks, outperforming GPT-4o and Claude 3.5 in math and code tasks.

- Reported +550% gains on LiveCodeBench spurred debate on how smaller architectures are closing the gap with bigger contenders.

OpenRouter (Alex Atallah) Discord

- DeepSeek R1 Takes On OpenAI's o1: DeepSeek introduced its R1 model on OpenRouter with performance that compares well to OpenAI's o1, priced at $0.55 per million input tokens (about 4% of o1's cost).

- Community members praised the model’s open-source MIT license and strong utility, citing DeepSeek's tweet for more details.

- Censorship-Free Angle Stirs Debate: DeepSeek R1 is described as censorship-free on OpenRouter, though some users note it retains filtering components.

- Others suggest that additional finetuning could broaden its scope, anticipating stronger performance without extra constraints.

- Llama Endpoints Drop Free Tier: OpenRouter revealed plans to discontinue free Llama endpoints by the month’s end because of changes from Samba Nova.

- A Standard variant will replace them at a higher price, surprising many users.

- OpenAI Model Rate Limits Clarified: Users confirmed OpenAI’s paid tiers carry no daily request cap, but free tiers limit activity to 200 calls per day.

- Some overcame these restrictions by attaching their own API keys, reducing usage headaches.

- Reasoning & Web Search Support in Flux: Community members asked how to access reasoning_content from DeepSeek R1, with OpenRouter expected to add that feature soon.

- Others hoped for wider availability of the Web Search API, which is currently locked to the chatroom interface.
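
The "4% of the cost" figure in this section can be checked against the per-million-token prices quoted in the launch notes at the top of this issue. The sketch below simply divides those numbers; the dictionary keys are made-up labels for illustration.

```python
# Prices in cents per million tokens, as quoted in this issue's launch notes.
R1 = {"input_cache_hit": 14, "input_cache_miss": 55, "output": 219}
O1 = {"input_cache_hit": 750, "input_cache_miss": 1500, "output": 6000}

ratios = {kind: O1[kind] / R1[kind] for kind in R1}

for kind, ratio in ratios.items():
    share = 100 / ratio  # R1 price as a percentage of the o1 price
    print(f"{kind}: o1 is {ratio:.1f}x the price; R1 costs {share:.1f}% of o1")
```

The cache-miss input price works out to a bit under 4% of o1's, and the ratios span roughly 27x to 54x across the three price types.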

Stability.ai (Stable Diffusion) Discord

- Photorealistic Flourish with LoRA: In a discussion about generating lifelike images with Stable Diffusion 3.5, participants explored LoRA strategies to mitigate a plasticky look, referencing the stable-diffusion-webui for advanced controls.

- One user insisted that mixing high-res samples with various resolutions yields more realistic outputs, citing SwarmUI for enhanced prompt customization.

- Cloudy E-commerce Deployments: A user questioned the feasibility of deploying a text-to-image model on Google Cloud for E-commerce, referencing SwarmUI as a starting point for pre-trained solutions.

- Others weighed whether the Google Cloud Marketplace or a custom Docker setup would be more efficient, concluding that pre-trained models can greatly reduce setup times.

- LoRA Resolution Rumble: Community members debated training LoRA solely at 1024×1024, pointing to the Prompt Syntax docs for more nuanced control.

- A group emphasized diverse resolution input so LoRA can handle varied image qualities without producing strange artifacts.

- Background-Editing Tangles: Users encountered slower performance and flawed background layers, attributing them to denoising misconfigurations in Stable Diffusion pipelines.

- They recommended manual fine-tuning via GIMP or specialized AI solutions, noting improved results with features from SwarmUI.

Notebook LM Discord Discord

- Podcasts & Personality Swaps: One user introduced a new GLP-1 themed podcast, exploring host voice changes with a proposed tool, but current solutions might not properly support it.

- Another user pointed out that random voice role switches can cause confusion, noting that many podcast generation tools struggle with stable speaker assignments.

- Gemini Gains & NotebookLM in Class: One user described a Gemini Advanced Deep Research workflow for generating thorough audio overviews, advising direct sourcing to reduce data loss.

- Another user debated single vs. multiple notebook usage for an econ course, preferring a topic-based approach to maintain consistent organization.

- Subscriptions & Simple Setups: Several users compared Google One AI Premium with Google Workspace for NotebookLM Plus access, noting that both provide the needed model features.

- Users concluded that Google One is easier to manage without the complexities of Workspace membership.

- Big Bytes & OCR Ordeals: One user struggled uploading audio files near 100MB, suspecting they'd exceed the total 200MB limit if combined with existing data.

- Another user highlighted OCR problems with non-copyable PDFs, calling for improved NotebookLM scanning support.

- Multi-language Moves & Newcomer Hellos: Several users expressed interest in multi-language podcast support, hoping for official expansions beyond English soon.

- New members introduced themselves, noting language barriers and encouraging sharper questions to keep discussions concise.

MCP (Glama) Discord

- Bumpy MCP Server Implementation: Users flagged inconsistent prompt usage across multiple MCP servers, leading to confusion about correct specs.

- Some implementations only fetch resources, ignoring official guidelines, sparking calls for stricter adherence to documentation.

- Roo Cline Charms with Agentic Twist: Roo Cline impressed devs by auto-approving commands, giving a nearly hands-free experience with R1 servers.

- Many praised its helpful VSCode plugin integration as a simpler alternative to bigger clients like Claude Desktop.

- Claude Hits Rate Limit Speed Bumps: Frequent Claude rate limits frustrated testers, restricting context length and message frequency.

- Some requested better usage tracking in Claude Desktop, hoping for clearer thresholds and fewer abrupt halts.

- Figma MCP Seeks Courageous Coders: Figma MCP launched as an early prototype, inviting devs to shape its future.

- 'This is very early/rough, so would appreciate any contributors!' said one member, asking for new ideas.

- AI Logic Calculator Sparks Curiosity: MCP Logic Calculator leverages Prover9/Mace4 in Python to handle logic tasks on Windows systems.

- Another member suggested pairing it with memory MCP for robust classification, fueling interest in advanced logic workflows.

Yannic Kilcher Discord

- GPU Gains & CPU Pains: In a conversation about HPC usage, participants concluded that large arrays often benefit from GPU parallelization, though data transfer can cause slowdowns.

- Some participants described the operation as trivially parallel, implying that CPU approaches can remain competitive for smaller tasks.

- Microsoft’s Mega-Bet on OpenAI: The $13 billion investment from Microsoft triggered antitrust warnings, with the FTC stressing that cloud dominance might leak into the AI marketplace.

- FTC Chair Lina Khan cautioned that locked-in partnerships could hamper startups from tapping crucial AI resources.

- FrontierMath Funding Fallout: Community members questioned OpenAI’s involvement in FrontierMath after discovering a concealed funding arrangement, raising transparency issues.

- Some claimed that Epoch was subject to tough NDA terms, leaving many contributors oblivious to OpenAI’s role in financing.

- Lightning and TPA: Speedy Synthesis: An integration of Lightning Attention and Tensor Product Attention yielded about a 3x speed gain during testing in a toy model.

- Users credited linearization for enabling big tensor operations in attention, highlighting a major performance leap over prior methods.

- rStar-Math Surprises with MCTS: The paper rStar-Math presented how small LLMs can surpass bigger models through Monte Carlo Tree Search for advanced math tasks.

- Its authors advocated minimal reliance on human data, detailing a method that uses three distinct training strategies to boost problem-solving.

Cohere Discord

- Konkani Collaboration Gains Steam: A user aims to build a model for Konkani with potential university endorsement, hoping to advance cross-lingual NLP.

- They noted industry partnerships are essential for expansion and practical adoption of the project.

- Command-R Conundrum: Engineers discovered command-r references an older model to avoid breaking changes for existing users.

- They proposed official aliases with a 'latest' tag to keep releases consistent while enabling new versions on demand.

- Cohere’s Math Mix-Ups: Users saw Cohere incorrectly compute 18 months as 27 weeks, forcing them to validate results manually.

- They highlighted that most LLMs share this limitation, suggesting lower temperature or separate calculators as solutions.

- Code Calls and Tool Tactics: Developers outlined how Cohere can invoke external tools by letting the LLM decide when to use specified components.

- They noted minimal official mention of AGI, but emphasized structured prompts and model-driven execution for code generation workflows.
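
The "separate calculator" suggestion above amounts to handing arithmetic to deterministic code instead of letting the model compute it in-context. Below is a minimal sketch of such a tool function for the months-to-weeks case. The function name and the average-month convention are assumptions, and the snippet does not use any Cohere API.

```python
DAYS_PER_MONTH = 365.25 / 12  # average month length; an assumed convention

def months_to_weeks(months):
    """Deterministic conversion a tool-calling model could invoke (hypothetical tool)."""
    return months * DAYS_PER_MONTH / 7

weeks = months_to_weeks(18)
print(f"18 months is about {weeks:.1f} weeks")
```

With this convention 18 months comes to about 78 weeks, far from the 27 weeks reported in the discussion.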

LLM Agents (Berkeley MOOC) Discord

- Spring MOOC Gains Momentum: One member asked about confirmation for the MOOC course starting this January, highlighting expected LLM Agents coverage.

- They also referenced the mailing list starting next week, suggesting more course timeline details will be shared soon.

- Mailing List Kicks Off Soon: Community members confirmed the spring course mailing list will launch next week, addressing open questions about official registration.

- They anticipate further course timeline updates once the list goes live, advising prospective participants to watch for the announcement.

Mozilla AI Discord

- Document to Podcast blueprint on the mic: A dedicated team introduced the Document to Podcast blueprint, a flexible approach for turning textual content into audio using open source solutions.

- They announced a live session where participants can ask questions, share feedback, and explore how to incorporate this blueprint into their own projects.

- Blueprints supercharge open source synergy: Attendees were urged to join the event and connect with fellow open source enthusiasts, promising new collaboration on future projects.

- They emphasized hitting an 'Interested' button to join the community conversation, fueling new possibilities for deeper open source exchange.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!